Abstract

How to assign confidence mass appropriately is still an open issue in information fusion. The most popular combination rule, Dempster's rule of combination, has been widely used in various fields. In this paper, a belief interval negation is proposed based on the belief interval, which has a stronger ability to express the uncertainty of a basic probability assignment (BPA). By establishing belief intervals in an exhaustive frame of discernment (FOD), the negation is obtained in the form of a new interval. As an essential tool for measuring uncertainty, belief interval negation builds the relationship among BPA, belief interval and entropy. Furthermore, the new negation is applicable to various belief entropies, and entropy increment is verified over negation iterations. Two novel uncertainty measures proposed in this paper are also applicable to the newly proposed belief interval negation. Finally, the convergent mass distribution is discussed. Several numerical examples and an application in medical pattern recognition are presented.


1 Introduction

Information fusion is now an important research direction [1]. By integrating data from multiple different sensors, information fusion can reach more credible conclusions in a more reasonable way, drawing on fuzzy set theory [2], evidence theory [3, 4], D-number theory, evidential reasoning [5, 6], Z-number theory [7], and so on. Because of its effectiveness in real applications, it has been widely used in fields such as decision-making [8], supplier selection [9] and preventive maintenance planning [10].

When confidence mass is assigned to multi-element subsets, the resulting mass function is a basic probability assignment (BPA). When belief mass is assigned only to singletons, it is consistent with the Bayesian method [11], and the model degenerates into a Bayesian probability model. As illustrated in Fig. 1 (where the cardinality of hypothesis Ω is N), the negation of a proposition measures the ambiguity degree of information more intuitively [12]. Yager proposed a negation operation for the Bayesian probability model [13]. For an exhaustive frame of discernment (closed-world assumption), Gao and Deng proposed a confidence mass allocation scheme based on the power set [14]. Lefevre et al. proposed a method that assigns the mass of conflict to the power set using weights in conflicting information fusion [15]. Similarly, Luo and Deng combined negation with weights in the frame of discernment (FOD) [16], and they also proposed a more intuitive matrix negation method. Meanwhile, it has been verified that matrix negation, evaluated with the total uncertainty measure, satisfies the property that entropy increases after each negation operation [17]. After multiple negation iterations, the BPA tends towards an average distribution determined by cardinality.

Fig. 1 Ambiguity degree. For a Completely trustful message (Mass = 0), its negation is Completely distrustful (Mass = 1), where the cardinality of hypothesis Ω is N. Because of the huge gap between Completely trustful and Completely distrustful, the hypothesis Ω is said to carry Definite information. Conversely, as the confidence mass tends to be more evenly distributed (Uniformly trustful), the negation shows that the ambiguity of the information becomes increasingly large (Definite → Ambiguous → Most ambiguous).

The mass represents the belief degree of a proposition, that is, how much confidence mass is assigned to it [18]. However, the mass cannot represent the belief in each element of a multi-element proposition. Therefore, the negation of a probability distribution is not suitable for the BPA model [19]. Moreover, most previous negations were designed around the maximum of Shannon entropy for probability distributions or the maximum of Nguyen entropy for BPAs, so a given negation, as a tool for measuring uncertainty, is usually suitable for only one specific entropy. There are currently many uncertainty measures applicable to BPAs obtained in the open world, yet there is no negation method that can serve as a common tool for these measures to quantify the ambiguity degree. In particular, for some entropies based on the belief interval (belief function and plausibility function) [20, 21], it is difficult to find a negation directly in the BPA framework. Therefore, it is an important research direction to establish a belief interval framework and find the negation indirectly under the BPA framework.

Section 2.1 reviews D-S evidence theory and the Bayesian framework, Section 2.2 the negation of the Bayesian probability model, and Section 2.3 a series of BPA negation approaches; Section 2.4 compares distinct uncertainty measures. Section 3.1 proposes two novel uncertainty measures, Section 3.2 defines the new belief interval negation, Section 3.3 gives its calculation method and verifies the entropy increment property, and Section 3.4 discusses a counter-intuitive aspect of the negated belief interval. Section 4.1 compares the newly proposed belief interval negation with previous negation methods, Section 4.2 presents further numerical examples, Section 4.3 describes the application in medical pattern recognition, and Section 4.4 discusses the convergent mass distribution. Section 5 concludes the paper.

2 Preliminaries

2.1 Dempster Shafer theory

Processing uncertain information is inevitable in real applications [22]. Many methods have been proposed to deal with uncertain information, such as probabilistic linguistic approaches [23, 24], fuzzy sets [25], intuitionistic fuzzy sets [26, 27], belief rule bases [28, 29], and others [30]. Evidence theory, one of the most useful methods for handling uncertainty, was first proposed by Dempster and then developed by Shafer [31, 32]. Whereas the subjective Bayesian method must first specify prior probabilities, evidence theory can directly express uncertainty when dealing with conflicts [33]. When the probability of every singleton is known, evidence theory degenerates into probability theory.

Suppose that there is a hypothesis Ω whose elements are mutually exclusive; we call Ω a frame of discernment (FOD). The cardinality of Ω is N, and the cardinality of its power set \(2^{\varOmega}\) is \(2^{N}\), with

\[ 2^{\varOmega} = \left\{\emptyset, \{x_{1}\}, \ldots, \{x_{N}\}, \{x_{1}, x_{2}\}, \ldots, \varOmega\right\}, \]

where ∅ is the empty set. The proposition Θ consisting of all elements is defined as the support set of Ω. To simplify the notation, the power set is written as

\[ 2^{\varOmega} = \left\{A_{1}, A_{2}, \ldots, A_{2^{N}}\right\}, \]

where \(A_{1} = \emptyset\) and \(A_{2^{N}}\) has the same meaning as Θ. For the proposition Θ, m(Θ) represents confidence mass whose allocation among the elements is unknown; if m(Θ) = 1, there is total ignorance about how to allocate the confidence mass [15]. Each subset, regarded as a proposition, corresponds to a mass whose magnitude represents the precise belief in that proposition, satisfying:

\[ m: 2^{\varOmega} \to [0, 1], \qquad m(\emptyset) = 0, \qquad \sum_{A \subseteq \varOmega} m(A) = 1. \]

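The masses \(m_{1}\) and \(m_{2}\) provided by two information sources are fused by Dempster's rule of combination:

\[ m(A) = \frac{1}{1 - K} \sum_{B \cap C = A} m_{1}(B)\, m_{2}(C), \quad A \neq \emptyset, \qquad K = \sum_{B \cap C = \emptyset} m_{1}(B)\, m_{2}(C), \]

with \(m(\emptyset) = 0\), where K measures the conflict between the two sources.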
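As a minimal sketch of Dempster's rule (our own illustration, not code from the paper), a BPA can be stored as a Python dict that maps `frozenset` focal elements to masses. The function name `dempster_combine` and the two example BPAs are hypothetical.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two BPAs (dict: frozenset -> mass) with Dempster's rule."""
    fused = {}
    conflict = 0.0  # K: total product mass falling on the empty set
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        a = b & c
        if a:
            fused[a] = fused.get(a, 0.0) + mb * mc
        else:
            conflict += mb * mc
    if conflict >= 1.0:
        raise ValueError("total conflict (K = 1): Dempster's rule is undefined")
    # Normalise by 1 - K so that the fused masses sum to one.
    return {a: v / (1.0 - conflict) for a, v in fused.items()}


# Hypothetical BPAs on the frame {a, b}.
m1 = {frozenset("a"): 0.6, frozenset("ab"): 0.4}
m2 = {frozenset("b"): 0.5, frozenset("ab"): 0.5}
fused = dempster_combine(m1, m2)
```

Here K = 0.6 × 0.5 = 0.3, so the three surviving products 0.3, 0.2 and 0.2 are each divided by 0.7.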

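The belief function (Bel) can be interpreted as the confidence that a proposition is correct, and the plausibility function (Pl) as the belief that a proposition may be correct [34]:

\[ Bel(A) = \sum_{B \subseteq A} m(B), \qquad Pl(A) = \sum_{B \cap A \neq \emptyset} m(B) = 1 - Bel(\overline{A}). \]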
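Both functions can be computed directly from a BPA stored as a dict of `frozenset` focal elements. The sketch below is illustrative; the helper names `bel` and `pl` and the example BPA are hypothetical.

```python
def bel(m, a):
    """Bel(A): total mass of the focal elements contained in A."""
    return sum(v for b, v in m.items() if b <= a)


def pl(m, a):
    """Pl(A): total mass of the focal elements that intersect A."""
    return sum(v for b, v in m.items() if b & a)


frame = frozenset("abc")
m = {frozenset("a"): 0.5, frozenset("ab"): 0.3, frame: 0.2}  # hypothetical BPA
A = frozenset("ab")
interval = (bel(m, A), pl(m, A))  # belief interval [Bel(A), Pl(A)]
```

For a normalized BPA, `pl(m, A)` always equals `1 - bel(m, frame - A)`, which is the identity Pl(A) = 1 − Bel(Ā).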

2.2 Negation of probability distribution

Uncertainty management has been widely used in fault diagnosis [35], pattern classification [36], data fusion [37], and other areas [38, 39]. Negation is an essential tool for measuring uncertainty.

Example 1

In the medical field, hormones act on specific target cells to regulate their metabolism. Let the event of tracking the target cell be hypothesis X, whose mutually exclusive elements \(X= \left \{x_{1}, x_{2}, \cdots , x_{n}\right \}\) are the cells that may be traced.

In the Bayesian probability model, hypothesis X corresponds to the sample space and the elements of X correspond to samples. If the target cell is x1, this is recorded as “On trace x1” and its probability is P(x1). Conversely, if the target cell is not x1, this is recorded as “Not on trace x1” and its probability is \(P(\overline {x_{1}})\).

When the probability of each element xi is known, the FOD degenerates into a Bayesian model with probability distribution \(P = \left \{p_{1}, p_{2}, \cdots , p_{n}\right \}\), satisfying

\[ \sum_{i=1}^{n} p_{i} = 1, \]

where pi ∈ [0,1].

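\(\bar{p}_{i}^{(j)}\) denotes the probability of xi after j negation iterations, with \(\bar{p}_{i}^{(0)} = p_{i}\). Following Yager [13], each iteration applies

\[ \bar{p}_{i}^{(j+1)} = \frac{1 - \bar{p}_{i}^{(j)}}{n - 1}. \]

Then,

\[ \bar{p}_{i}^{(j+1)} - \frac{1}{n} = \frac{1 - \bar{p}_{i}^{(j)}}{n - 1} - \frac{1}{n} = \frac{1}{1 - n}\left(\bar{p}_{i}^{(j)} - \frac{1}{n}\right). \]

Thus, \(\bar{p}_{i}^{(j)} - \frac{1}{n}\) is a geometric sequence with common ratio \(\frac{1}{1-n}\), that is, \(\bar{p}_{i}^{(j)} - \frac{1}{n} = \left(\frac{1}{1-n}\right)^{j}\left(p_{i} - \frac{1}{n}\right)\). Since \(\left|\frac{1}{1-n}\right| < 1\) for n ≥ 3, we conclude

\[ \lim_{j \to \infty} \bar{p}_{i}^{(j)} = \frac{1}{n}. \]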
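The geometric-sequence argument can be checked numerically. The sketch below is our own illustration with hypothetical helper names: `yager_negation` applies \(\bar p_i^{(j+1)} = (1 - \bar p_i^{(j)})/(n-1)\) once, and `closed_form` evaluates \(\frac{1}{n} + \left(\frac{1}{1-n}\right)^{j}\left(p_i - \frac{1}{n}\right)\).

```python
def yager_negation(p):
    """One negation step: each p_i is replaced by (1 - p_i) / (n - 1)."""
    n = len(p)
    return [(1.0 - pi) / (n - 1) for pi in p]


def closed_form(p, j):
    """Distribution after j negations, from the geometric-sequence argument."""
    n = len(p)
    ratio = 1.0 / (1 - n)
    return [1.0 / n + ratio ** j * (pi - 1.0 / n) for pi in p]


p0 = [0.1, 0.3, 0.6]
p = p0
for j in range(1, 11):
    p = yager_negation(p)
    # The iterated and closed-form distributions agree up to rounding.
    assert all(abs(a - b) < 1e-12 for a, b in zip(p, closed_form(p0, j)))
```

For n = 2 the common ratio is −1, so two negations return the original distribution and the sequence oscillates instead of converging; this is why the limit 1/n requires n ≥ 3.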

Yager suggests that the maximal uncertainty measure corresponds to a unique distribution, namely the uniform distribution.

Example 2

Continuing Example 1, suppose that hormones act on three kinds of specific target cells to regulate their metabolism, \(X=\left \{x_{1}, x_{2}, x_{3} \right \}\). The prior probability distribution is \(P = \left \{0.1, 0.3, 0.6 \right \}\).

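Since the prior probability is known and the cardinality is n = 3, the first probability negation is

\[ \bar{P} = \left\{\frac{1-0.1}{2}, \frac{1-0.3}{2}, \frac{1-0.6}{2}\right\} = \{0.45, 0.35, 0.2\}. \]

After multiple negation iterations, the distribution converges to the uniform distribution {1/3, 1/3, 1/3}, which corresponds to the maximal uncertainty.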
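Example 2 can be reproduced numerically. The following sketch is illustrative (helper names are hypothetical, and entropy is measured in nats): it iterates Yager's negation from P = {0.1, 0.3, 0.6} and records the Shannon entropy after each step.

```python
import math


def yager_negation(p):
    """One negation step: (1 - p_i) / (n - 1) for every element."""
    n = len(p)
    return [(1.0 - pi) / (n - 1) for pi in p]


def shannon_entropy(p):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


p = [0.1, 0.3, 0.6]  # prior distribution of Example 2
history = [shannon_entropy(p)]
for _ in range(30):
    p = yager_negation(p)
    history.append(shannon_entropy(p))
```

The entropy rises from about 0.90 nats at the start towards ln 3 ≈ 1.10 nats, the entropy of the uniform distribution on three elements.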

2.3 Negation of basic probability assignment method

A large number of approaches to belief mass allocation have been proposed for information fusion under the D-S framework. Entropy is able to describe the uncertainty or reliability of a system [40], and the application of entropy in artificial intelligence and neural networks has received increasing attention [41, 42]. The negation of a probability distribution cannot solve the BPA negation problem for multi-element subsets under an exhaustive FOD, whose power set is

\[ 2^{\varOmega} = \left\{A_{1}, A_{2}, \ldots, A_{2^{N}}\right\}, \]

where the empty set ∅ = A1 and the support set \({{\varTheta }} = A_{2^{N}}\). All subsets satisfy

\[ \sum_{i=1}^{2^{N}} m(A_{i}) = 1, \qquad m(A_{i}) \in [0, 1]. \]

Example 3

Continuing Example 2, suppose that the three kinds of target cells form an exhaustive FOD \({{\varOmega }}=\left \{x_{1}, x_{2}, x_{3} \right \}\). Hypothesis Y contains four propositions as focal elements,

\[ Y = \left\{y_{1}, y_{2}, y_{3}, y_{4}\right\}, \]

with yi ∈ Y and BPAs m(Y) = {0.5, 0.3, 0.15, 0.05}.

Yin et al. proposed assigning the residual mass 1 − m(yi) of each focal element yi to the focal elements other than itself [43]:

\[ \bar{m}(y_{i}) = \frac{1 - m(y_{i})}{n - 1}, \]

where n = 4 is the number of focal elements. After multiple negation iterations, the belief mass converges to the uniform distribution \(m(y_{i})=\frac {1}{4}\).

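Gao and Deng proposed assigning the residual mass 1 − m(yi) to all elements of the power set except the empty set ∅ and yi itself [14]:

\[ \bar{m}(A_{i}) = \frac{1 - m(A_{i})}{2^{N} - 2}, \qquad A_{i} \neq \emptyset. \]

After multiple negation iterations, the belief mass converges to the uniform distribution \(m(A_{i})=\frac {1}{2^{3}-1}=\frac {1}{7}\) over the non-empty subsets.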
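Both schemes above can be written as \(\bar m = (1 - m)/(k - 1)\), where k is the number of propositions that take part: the k = n focal elements for Yin et al., and the k = 2^N − 1 non-empty subsets for Gao and Deng. The sketch below assumes this uniform form, which reproduces the stated limits 1/4 and 1/7. Function names are hypothetical, and for the second case the placement of the Example 3 masses on subsets of Ω is a hypothetical choice, because the subsets y_1 to y_4 are not specified in the text.

```python
from itertools import combinations


def nonempty_subsets(frame):
    """All non-empty subsets of the frame, as frozensets."""
    items = sorted(frame)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]


def uniform_negation(m):
    """(1 - m(A)) / (k - 1) for each of the k propositions in m."""
    k = len(m)
    return {a: (1.0 - v) / (k - 1) for a, v in m.items()}


# Yin et al.: only the four focal elements of Example 3 take part (k = 4).
yin = {"y1": 0.5, "y2": 0.3, "y3": 0.15, "y4": 0.05}

# Gao and Deng: all 2^N - 1 non-empty subsets take part (k = 7 for N = 3).
# Hypothetical placement of the four masses on subsets of the frame.
frame = frozenset({"x1", "x2", "x3"})
gao = {a: 0.0 for a in nonempty_subsets(frame)}
gao[frozenset({"x1"})] = 0.5
gao[frozenset({"x2"})] = 0.3
gao[frozenset({"x1", "x2"})] = 0.15
gao[frame] = 0.05

for _ in range(60):
    yin = uniform_negation(yin)
    gao = uniform_negation(gao)
```

Each step multiplies the deviation from the limit by −1/(k − 1), that is by −1/3 and −1/6 here, so the individual masses alternate around their limits while converging.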

Luo and Deng then proposed a negation matrix (\([G]_{2^{N}\times 2^{N}}\)) method in the space of basic belief assignment vectors (BBAVs) to simplify this problem; under this method, almost all belief mass is assigned to the support set Θ [16].

where g(i,j) is an element of the negation matrix G. Under this algorithm, the conflict (m(∅), given by (9)) increases during the negation iterations.

Furthermore, Xie and Xiao [44] made further improvements to the weight assignment problem. Their method, based on a negation matrix E, preserves the mass of the support set during the negation iterations.

When j ≠ 2^N and j ≠ 1, the elements of E satisfy:

This method re-allocates the mass m(yi) according to the cardinality of the intersections of proposition \(\overline {y_{i}}\) with the other focal elements, which gives it a clearer and more intuitive physical meaning. The masses also converge according to the cardinality of the subsets after multiple negation iterations. Applying it to the case of Example 3, the cardinality of hypothesis Y satisfies

After multiple negation iterations, m(A1) = m(∅) = 0, and \(m(A_{2^{N}})=m({{\varTheta }})=0.05\) is preserved rather than being reassigned to any other subset. The remaining masses converge to m(A2) : m(A3) : m(A4) : m(A5) : m(A6) : m(A7) = 1 : 1 : 1 : 2 : 2 : 2. Entropy also increases during the negation iterations.

These methods allocate belief mass in quite different ways during the negation iterations; they are compared in Section 4.

2.4 Uncertainty measure methods

Uncertainty measures play an important role in measuring the ambiguity degree of a system. They have been applied to medical fields [45], prediction [46, 47], recognition [48], classification [49, 50], awareness [51] and decision making [52, 53].

Entropy originated in thermodynamics. In 1948, Claude Elwood Shannon introduced the concept of entropy into information theory, and it has since been widely known as Shannon entropy or information entropy [54, 55]. Given a random variable \(X = \left \{x_{1}, x_{2}, \cdots , x_{n}\right \}\) with probability distribution P(X = xi) = pi, where i = 1,2,⋯ ,n, Shannon entropy is

\[ H(X) = -\sum_{i=1}^{n} p_{i} \log p_{i}. \]

Information refers to the content transmitted and processed by messages and communication systems, and more broadly to everything that human society disseminates [56]. A “message” represents an event, sample, or feature from a distribution or data stream. Entropy is a measure of uncertainty: the more information a hypothesis contains, the greater its entropy [57]. The concept of entropy allows information to be quantified, and the probability distribution of the samples is another feature of the information.

Nguyen defined an entropy of a BPA based on the mass in the FOD [58] as follows:

\[ H_{N}(m) = -\sum_{A \subseteq \varOmega} m(A) \log m(A). \]

Nguyen entropy differs from Shannon entropy in that it is defined on the masses of subsets rather than on the probabilities of singletons. It is based on a framework weaker than the Bayesian model, which lays a foundation for measuring the uncertainty of a BPA, and it satisfies the probabilistic consistency property. When the BPA is uniformly distributed over the propositions, the entropy reaches its maximum. One of the earliest entropies, based on the belief function, was defined by Höhle [59] as

\[ H_{O}(m) = -\sum_{A \subseteq \varOmega} m(A) \log Bel(A). \]

Another entropy, based on the plausibility function, was defined by Yager [60] as

\[ H_{Y}(m) = -\sum_{A \subseteq \varOmega} m(A) \log Pl(A). \]
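The three BPA entropies can be sketched as follows. This is illustrative only: the function names are hypothetical, natural logarithms are assumed (another base only rescales the values), and only focal elements with positive mass contribute, since 0 log 0 is taken as 0. For such a focal element A, Bel(A) ≥ m(A) > 0 and Pl(A) ≥ m(A) > 0, so every logarithm is defined.

```python
import math


def bel(m, a):
    """Bel(A): total mass of the focal elements contained in A."""
    return sum(v for b, v in m.items() if b <= a)


def pl(m, a):
    """Pl(A): total mass of the focal elements that intersect A."""
    return sum(v for b, v in m.items() if b & a)


def nguyen_entropy(m):
    """H_N = -sum m(A) ln m(A)."""
    return -sum(v * math.log(v) for v in m.values() if v > 0)


def hohle_entropy(m):
    """H_O = -sum m(A) ln Bel(A)."""
    return -sum(v * math.log(bel(m, a)) for a, v in m.items() if v > 0)


def yager_entropy(m):
    """H_Y = -sum m(A) ln Pl(A)."""
    return -sum(v * math.log(pl(m, a)) for a, v in m.items() if v > 0)


m = {frozenset("a"): 0.5, frozenset("ab"): 0.3, frozenset("abc"): 0.2}  # hypothetical
values = (nguyen_entropy(m), hohle_entropy(m), yager_entropy(m))
```

For a Bayesian BPA, Bel(A) = Pl(A) = m(A) on singletons, so all three reduce to Shannon entropy; for the vacuous BPA m(Ω) = 1 all three are zero. Because Pl(A) ≥ Bel(A), Yager entropy never exceeds Höhle entropy.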

3 New belief interval negation of BPA

3.1 Newly proposed uncertainty measure approaches under D-S structure

This paper proposes two new uncertainty measures, based on the belief function (HB) and on the plausibility function (HP), respectively. They are defined as:

In the belief interval [Bel, Pl], Bel represents the belief that the proposition is completely correct, and Pl represents the belief that the proposition may be correct. The two new entropies are suitable for the belief interval negation method; this paper defines them to demonstrate the wide applicability of the belief interval negation method.

3.2 Definition of belief interval negation

The belief interval of D-S evidence theory is widely used in various fields. The D-S structure differs from the Bayesian probability model: a BPA is a weaker model than probability theory [61] and expresses more uncertainty through the mass, belief function and plausibility function in the FOD.

This paper proposes a belief interval negation based on the belief interval [Bel, Pl]. The method first establishes the belief interval of every set in the power set (\(\forall A \in 2^{{{\varOmega }}}\)), then finds the negation of each belief interval, and finally converts the negated belief intervals back into a BPA, which completes the negation process. The whole process is thus mass → belief interval → belief interval negation → mass. The negation of the belief interval is defined as follows, and its rationality is shown.

Thus,

Similarly, replace A with \(\overline {A}\), then we obtain

which satisfies

Therefore, belief interval negation \([\overline {Bel} ,\ \overline {Pl}]\) is defined as

In addition, we define \(\overline {m}_{\oplus }\) as the orthogonal mass function and \(\overline {Pl}_{\oplus }\) as the orthogonal plausibility function.

The newly proposed belief interval negation applies not only to the belief entropy and plausibility entropy proposed above, but also to Nguyen entropy, Höhle entropy and Yager entropy, so five uncertainty measures in total can be used with belief interval negation. Overall, the belief interval negation satisfies the entropy increment property during the negation iterations.

3.3 Calculation and properties of Belief interval negation

In an exhaustive FOD, the process of belief interval negation is mass → belief interval → belief interval negation → mass. The following simple numerical example illustrates the derivation.

Example 4

Consider a hypothesis \({{\varOmega }} = \left \{A, B, C\right \}\) with the initial BPA:

3.3.1 Step 1: belief interval establishment

Establish belief intervals for all subsets in the power set (except for the empty set ∅) by (10) and (11).

3.3.2 Step 2: belief interval negation

Compute the negated belief interval of every subset in the power set by (34), (35), and (38).

3.3.3 Step 3: transformation from belief interval negation to BPA

We regard every negated belief function value (\(\overline {Bel}\)) obtained from the belief interval negation directly as the new BPA (\(\overline {m}\)). Although this departs from the definition of the belief function, it is how we deal with an irrationality of the negated belief interval, which is discussed in detail in Section 3.4.

3.3.4 Step 4: BPA orthogonalization

The BPA obtained in step 3 is then orthogonalized. The orthogonal BPA (\(\overline {m}_{\oplus }\)) and the orthogonal plausibility function (\(\overline {Pl}_{\oplus }\)) are obtained from (39)-(42).
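The four steps can be sketched in Python, but the exact forms of (34)-(42) are not shown in this text, so the sketch rests on two assumptions of ours rather than on the paper's formulas. First, the negated interval of A is taken as [1 − Pl(A), 1 − Bel(A)], which is consistent with the behaviour described in Section 3.4 (a larger Pl gives a smaller negated Bel). Second, "orthogonalization" is taken to be plain normalization so that the masses sum to one. The helper names and the initial BPA are hypothetical; the BPA of Example 4 is not used.

```python
from itertools import combinations


def nonempty_subsets(frame):
    items = sorted(frame)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]


def bel(m, a):
    return sum(v for b, v in m.items() if b <= a)


def pl(m, a):
    return sum(v for b, v in m.items() if b & a)


def belief_interval_negation(m, frame):
    """One negation iteration under the assumptions stated above (hypothetical)."""
    subsets = nonempty_subsets(frame)
    # Step 1: belief interval [Bel(A), Pl(A)] of every non-empty subset.
    intervals = {a: (bel(m, a), pl(m, a)) for a in subsets}
    # Step 2 (assumed form): negated interval [1 - Pl(A), 1 - Bel(A)].
    negated = {a: (1.0 - p, 1.0 - b) for a, (b, p) in intervals.items()}
    # Step 3: use the negated lower bound directly as an unnormalised mass;
    # max() only removes tiny negative values caused by rounding.
    raw = {a: max(0.0, lo) for a, (lo, _hi) in negated.items()}
    # Step 4 (assumed): normalise so that the masses sum to one.
    total = sum(raw.values())
    if total == 0.0:
        raise ValueError("all negated lower bounds are zero (e.g. vacuous BPA)")
    return {a: v / total for a, v in raw.items()}


frame = frozenset("ABC")
m0 = {frozenset("A"): 0.5, frozenset("AB"): 0.3, frame: 0.2}  # hypothetical BPA
m1 = belief_interval_negation(m0, frame)
```

Under these assumptions, 1 − Pl(A) = Bel(Ā) for a normalized BPA, so the support set Θ always receives zero mass after one negation; this agrees with the statement in Section 4.4 that Θ loses its mass during the iterations.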

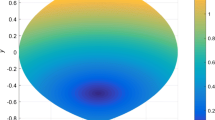

This completes one iteration of the newly proposed belief interval negation. The masses over the belief interval negation iterations are shown in Fig. 2 and Table 1.

The new belief interval negation works for Bel entropy. Bel (obtained from step 1) can be used to calculate belief entropy.

The new belief interval negation also works for Pl entropy. Pl (obtained from step 1) can be used to calculate plausibility entropy.

The new belief interval negation works for Höhle entropy. BPA (before negation process) and Bel (obtained from step 1) can be used to calculate Höhle entropy as (29).

The new belief interval negation works for Yager entropy. The orthogonal BPA and \(\overline {Pl}_{\oplus }\) (both obtained in step 4) can be used to calculate Yager entropy as (30). Note, however, that Yager entropy must be modified as follows for the belief interval negation to apply to it:

The new belief interval negation applies to Nguyen entropy. The orthogonal BPA (obtained after step 4) can be used to calculate Nguyen entropy as (28), which could be rewritten as

The uncertainty measures over the belief interval negation iterations are shown in Fig. 3 and Table 2. All of them increase during the negation iterations, so the entropy increment property is satisfied.

3.4 View from belief interval negation

The negated belief interval can give a counter-intuitive result. Continuing Example 4, consider the plausibility function (Pl): the subset \(A_{2} = \left \{A\right \}\) is a singleton, and the subset \(A_{5} = \left \{A, B\right \}\) has two elements. Since A2 ⊆ A5, Pl(A5) ≥ Pl(A2). However, after belief interval negation, \(\overline {Bel} (A_{5}) \leq \overline {Bel} (A_{2})\). This is counter-intuitive, because a belief function should be monotone with respect to set inclusion, that is, A2 ⊆ A5 should imply Bel(A2) ≤ Bel(A5).

The belief function represents the mass of trust assigned to a proposition being completely correct, so a larger proposition should not receive less belief. To avoid this counter-intuitive case where |A5| > |A2|, we directly regard \(\overline {Bel}(A)\) (\(\forall A \in 2^{{{\varOmega }}}\)) obtained after the negation as a new BPA. Finally, the obtained BPA is orthogonalized, which completes the belief interval negation.

4 Numerical examples and discussion

4.1 Numerical example 1

Continuing Example 4, consider the same exhaustive FOD and the given BPA.

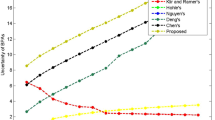

In this section, we compare four previous BPA negation methods with the newly proposed belief interval negation method to demonstrate its effectiveness. This simple case shows that neither Yin's method [43] nor Gao's method [14] is suitable for the five uncertainty measures mentioned above, because negation iterations based on Yin's method (Figs. 4 and 5) or Gao's method (Figs. 6 and 7) cause the uncertainty measure values to oscillate. The negation method proposed by Luo [16] does not apply to these five uncertainty measures either, since the entropy decreases sharply during the iterations (Figs. 8 and 9). The negation matrix method proposed by Xie [44] may be applicable to Shannon, Höhle and belief entropy, but it is not applicable to plausibility and Yager entropy (Figs. 10 and 11).

By contrast, the new belief interval negation method is applicable to all five uncertainty measures above, because each of them increases during the negation iterations.

4.2 Numerical example 2

Consider an exhaustive FOD (\({{\varOmega }} = \left \{A, B, C \right \}\)) as in Example 4, but with BPAs that are not fixed in advance. The ten hypotheses considered are listed in Table 3.

The experiments show that the newly proposed belief interval negation method applies to a wide range of hypotheses and is suitable for all five uncertainty measures mentioned above. During the negation iterations, the entropy increases, as shown in Figs. 12-31. These examples indicate that the belief interval negation method is an important tool for uncertainty measurement.

4.3 Application in medical pattern recognition

Suppose that there are three medical patterns: the diagnosis of a patient might be Malaria, Typhoid or Enteritis, marked respectively as the three elements of hypothesis \({{\varOmega }}=\left \{A, B, C\right \}\). Based on different expert experience and evidence, the medical patterns given by three experts are as follows:

The belief masses before and after one belief interval negation are displayed in Table 4, and the five uncertainty measures are compared in Table 5. Four of the measures (HB, HP, HO, HY) indicate that the third expert (m3) has the lowest uncertainty, and hence the highest reliability. Nguyen entropy (HN), by contrast, is not informative in this comparison. After one belief interval negation, all five uncertainty measures show that the ambiguity of the medical patterns given by the experts increases. Although HP and HY then indicate that expert 1 (\(\bar {m}_{1}\)) is more reliable, HB, HN and HO still indicate that expert 3 (\(\bar {m}_{3}\)) is more reliable. This application shows that the two newly proposed uncertainty measures and the belief interval negation are consistent with Nguyen, Höhle and Yager entropy.

4.4 View from convergent mass

In this section, we discuss the convergence of the mass. After multiple belief interval negation iterations, subsets with the same cardinality converge to the same mass. We denote every singleton by \({e_{1}^{c}}\), where the subscript 1 indicates cardinality 1 and the superscript c indicates convergence. Similarly, every subset of cardinality 2 is denoted by \({e_{2}^{c}}\). The support set Θ loses its mass during the negation iterations, so \(m ({e_{3}^{c}}) = m({{\varTheta }}) = 0\). Once the mass has converged, the BPA is unchanged by a further belief interval negation and depends only on the cardinality of each subset. Taking N = 3 as an example, we obtain

Thus,

Generalizing the calculation of the convergent mass to a frame of cardinality N = n, we obtain

Then repeat the above method to obtain the convergent mass as

When i ≤ n − i, \(C_{n-i}^{i}\geq 1\); when i > n − i, we set \(C_{n-i}^{i} = 0\).
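The convergence can also be explored numerically. The sketch below is not the paper's derivation: it assumes that one belief interval negation assigns to each non-empty subset A a mass proportional to 1 − Pl(A) = Bel(Ā), followed by normalization, and it starts from a hypothetical BPA on Ω = {A, B, C}. Under this assumption, subsets with the same cardinality do converge to a common mass and m(Θ) = 0. Solving the fixed point \(e_1^c = (2e_1^c + e_2^c)/S\), \(e_2^c = e_1^c/S\) with \(3e_1^c + 3e_2^c = 1\) gives \(S = 1 + \sqrt{2}\), \(e_1^c = \sqrt{2}/6\) and \(e_2^c = (2 - \sqrt{2})/6\); these values follow from our assumed definition, not from the paper's own formulas.

```python
from itertools import combinations
import math


def nonempty_subsets(frame):
    items = sorted(frame)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]


def negate(m, frame):
    """Assumed negation: mass of A proportional to Bel(complement of A) = 1 - Pl(A)."""
    raw = {a: sum(v for b, v in m.items() if b <= frame - a)
           for a in nonempty_subsets(frame)}
    total = sum(raw.values())
    return {a: v / total for a, v in raw.items()}


frame = frozenset("ABC")
m = {frozenset("A"): 0.5, frozenset("AB"): 0.3, frame: 0.2}  # hypothetical start
for _ in range(200):
    m = negate(m, frame)

singletons = [v for a, v in m.items() if len(a) == 1]
pairs = [v for a, v in m.items() if len(a) == 2]
expected_ratio = 1 + math.sqrt(2)  # e_1^c / e_2^c under the assumed definition
```

Because the unnormalized step is linear in m, the iteration behaves like a power iteration, which is why it settles on a single distribution that depends only on subset cardinality.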

5 Conclusion

This paper proposes a belief interval negation method, which converts a BPA into belief intervals and negates them. After the belief interval negation step, the result is converted back into a BPA and applied to various entropies; in particular, the negated belief function is directly regarded as the BPA of each subset. Many previous BPA negation methods are not applicable to Höhle entropy and Yager entropy, and the newly proposed negation method solves this problem. This paper also proposes two new uncertainty measures, and verifies that the newly proposed negation method is applicable to all five uncertainty measures above, which satisfy the entropy increment property during negation iterations. Moreover, the newly proposed uncertainty measures and belief interval negation are applied to medical pattern recognition, and the experimental results show that the novel approaches are reasonable and consistent with previous methods. The most important contribution of this paper is that the belief interval negation, as a tool for uncertainty measurement, builds the relationship among the belief interval (belief function and plausibility function), the BPA and the uncertainty measure. It will play an essential role in describing system ambiguity in the future.

References

Liu Z, Pan Q, Dezert J, Han J-W, He Y (2018) Classifier fusion with contextual reliability evaluation. IEEE Trans Cybern 48(5):1605–1618

Fan C-, Song Y, Fu Q, Lei L, Wang X (2018) New operators for aggregating intuitionistic fuzzy information with their application in decision making. IEEE Access 6:27214–27238

Seiti H, Hafezalkotob A (2018) Developing pessimistic–optimistic risk-based methods for multi-sensor fusion: An interval-valued evidence theory approach. Appl Soft Comput 72:609–623

Xu X, Li S, Song X, Wen C, Xu D (2016) The optimal design of industrial alarm systems based on evidence theory. Control Eng Pract 46:142–156

Zhou M, Liu X-B, Chen Y-W, Yang J-B (2018) Evidential reasoning rule for MADM with both weights and reliabilities in group decision making. Knowl-Based Syst 143:142–161

Liu Z-G, Pan Q, Dezert J, Martin A (2018) Combination of classifiers with optimal weight based on evidential reasoning. IEEE Trans Fuzzy Syst 26(3):1217–1230

Liu Q, Tian Y, Kang B (2019) Derive knowledge of z-number from the perspective of dempster-shafer evidence theory. Eng Appl Artif Intell 85:754–764

Fei L (2019) On interval-valued fuzzy decision-making using soft likelihood functions. Int J Intell Syst 34:1631–1652

Fei L, Xia J, Feng Y, Liu L (2019) An ELECTRE-based multiple criteria decision making method for supplier selection using Dempster-Shafer theory. IEEE Access 7:84701–84716

Seiti H, Hafezalkotob A, Najaf S E (2019) Developing a novel risk-based MCDM approach based on D numbers and fuzzy information axiom and its applications in preventive maintenance planning. Appl Soft Comput J 82. https://doi.org/10.1016/j.asoc.2019.105559

Jiang W, Cao Y, Deng X (2019) A novel z-network model based on bayesian network and z-number. IEEE Trans Fuzzy Syst 28:1585–1599

Srivastava A, Maheshwari S (2018) Some New Properties of Negation of a Probability Distribution. Int J Intell Syst 33(6):1133–1145

Yager R R (2015) On the maximum entropy negation of a probability distribution. IEEE Trans Fuzzy Syst 23(5):1899–1902

Gao X, Deng Y (2019) The Negation of Basic Probability Assignment. IEEE Access 7:107006–107014

Lefevre E, Colot O, Vannoorenberghe P (2002) Belief function combination and conflict management. Inf Fusion 3(2):149–162

Luo Z, Deng Y (2020) A matrix method of basic belief assignment’s negation in dempster-shafer theory. IEEE Trans Fuzzy Syst 28:2270–2276

Yager R R (2012) Entailment principle for measure-based uncertainty. IEEE Trans Fuzzy Syst 20(3):526–535

Zhou M, Liu X-B, Chen Y-W, Qian X-F, Yang J-B, Wu J (2019) Assignment of attribute weights with belief distributions for MADM under uncertainties. Knowl-Based Syst 189 https://doi.org/10.1016/j.knosys.2019.105110

Deng Y (2020) Uncertainty measure in evidence theory. Sci China Inf Sci 63(11):210201

Deng X, Jiang W (2019) A total uncertainty measure for D numbers based on belief intervals. Int J Intell Syst 34(12):3302–3316

Yager R R (2018) Interval valued entropies for Dempster-Shafer structures. Knowl-Based Syst 161:390–397

Yager R R (2018) On using the Shapley value to approximate the Choquet integral in cases of uncertain arguments. IEEE Trans Fuzzy Syst 26(3):1303–1310

Liao H, Mi X, Xu Z (2019) A survey of decision-making methods with probabilistic linguistic information: Bibliometrics, preliminaries, methodologies, applications and future directions. Fuzzy Optim Decis Making 19:81–134

Fang R, Liao H, Yang J-B, Xu D-L (2019) Generalised probabilistic linguistic evidential reasoning approach for multi-criteria decision-making under uncertainty. J Oper Res Soc 72:130–144

Zhou M, Liu X, Yang J (2017) Evidential reasoning approach for MADM based on incomplete interval value. J Intell Fuzzy Syst 33(6):3707–3721

Feng F, Liang M, Fujita H, Yager R R, Liu X (2019) Lexicographic orders of intuitionistic fuzzy values and their relationships. Mathematics 7(2):1–26

Cheng C, Xiao F, Pedrycz W (2020) A majority rule-based measure for atanassov type intuitionistic membership grades in mcdm. IEEE Trans Fuzzy Syst PP

Xu X, Xu H, Wen C, Li J, Hou P, Zhang J (2018) A belief rule-based evidence updating method for industrial alarm system design. Control Eng Pract 81:73–84

Xu X-B, Ma X, Wen C-L, Huang D-R, Li J-N (2018) Self-tuning method of PID parameters based on belief rule base inference. Inf Technol Control 47(3):551–563

Song Y, Fu Q, Wang Y-F, Wang X (2019) Divergence-based cross entropy and uncertainty measures of Atanassov’s intuitionistic fuzzy sets with their application in decision making. Appl Soft Comput 84. https://doi.org/10.1016/j.asoc.2019.105703

Dempster A P (2008) Upper and lower probabilities induced by a multivalued mapping. Springer

Shafer G (1976) A mathematical theory of evidence. Princeton University Press

Yager R R (2019) Generalized Dempster–Shafer structures. IEEE Trans Fuzzy Syst 27(3):428–435

Yager R R (2019) Entailment for measure based belief structures. Inf Fusion 47:111–116

Xiao F, Cao Z, Jolfaei A (2020) A novel conflict measurement in decision making and its application in fault diagnosis. IEEE Trans Fuzzy Syst PP:1–1

Liu Z, Liu Y, Dezert J, Cuzzolin F (2019) Evidence combination based on credal belief redistribution for pattern classification. IEEE Trans Fuzzy Syst 28:618–631

Ma J, Yu W, Liang P, Li C, Jiang J (2019) Fusiongan: A generative adversarial network for infrared and visible image fusion. Inf Fusion 48:11–26

Wang Q, Li Y, Liu X (2018) The influence of photo elements on EEG signal recognition. EURASIP J Image Video Process 2018(1):134

Cao Z, Ding W, Wang Y-K, Hussain F K, Al-Jumaily A, Lin C-T (2019) Effects of repetitive SSVEPs on EEG complexity using multiscale inherent fuzzy entropy. Neurocomputing 389:198–206

Zhang H, Deng Y (2021) Entropy Measure for Orderable Sets. Inf Sci 561:141–151

Raghavendra U, Fujita H, Bhandary S V, Gudigar A, Hong Tan J, Acharya U R (2018) Deep convolution neural network for accurate diagnosis of glaucoma using digital fundus images. Inf Sci 441:41–49

Acharya U R, Fujita H, Oh S L, Hagiwara Y, Tan J H, Adam M, Tan R S (2019) Deep convolutional neural network for the automated diagnosis of congestive heart failure using ecg signals. Appl Intell 49:16–27

Yin L, Deng X, Deng Y (2019) The negation of a basic probability assignment. IEEE Trans Fuzzy Syst 27:135–143

Xie K, Xiao F (2019) Negation of Belief Function Based on the Total Uncertainty Measure. Entropy 21(1)

Cao Z, Lin C-T, Lai K-L, Ko L-W, King J-T, Liao K-K, Fuh J-L, Wang S-J (2019) Extraction of SSVEPs-based Inherent fuzzy entropy using a wearable headband EEG in migraine patients. IEEE Trans Fuzzy Syst 28:14–27

Zhou D, Al-Durra A, Zhang K, Ravey A, Gao F (2019) A robust prognostic indicator for renewable energy technologies: A novel error correction grey prediction model. IEEE Trans Ind Electron 66(12):9312–9325

Son L H, Fujita H (2019) Neural-fuzzy with representative sets for prediction of student performance. Appl Intell 49:172–187

Xiao F (2019) Distance measure of intuitionistic fuzzy sets and its application in pattern classification. IEEE Transactions on Systems, Man, and Cybernetics: System. In press

Xu X, Zheng J, Yang J-, Xu D-, Chen Y- (2017) Data classification using evidence reasoning rule. Knowl-Based Syst 116:144–151

Liu Z-G, Pan Q, Dezert J, Mercier G (2017) Hybrid classification system for uncertain data. IEEE Trans Syst Man Cybern Syst 47(10):2783–2790

Fujita H, Gaeta A, Loia V, Orciuoli F (2019) Improving awareness in early stages of security analysis: A zone partition method based on grc. Appl Intell 49:1063–1077

Liu Z, Xiao F, Lin C-T, Kang B, Cao Z (2019) A generalized golden rule representative value for multiple-criteria decision analysis. IEEE Trans Syst Man Cybern Syst PP:1–12

Xiao F (2020) EFMCDM: Evidential fuzzy multicriteria decision making based on belief entropy. IEEE Trans Fuzzy Syst 28:1477–1491

Shannon C E (1948) A mathematical theory of communication. Bell Syst Techn J 27(3):379–423

Srivastava A, Kaur L (2019) Uncertainty and negation-information theoretic applications. Int J Intell Syst 34(6):1248–1260

Deng J, Deng Y (2021) Information volume of fuzzy membership function. Int J Comput Commun Control 16(1):4106

Deng Y (2020) Information volume of mass function. Int J Comput Commun Control 15(6):3983

Maeda Y, Nguyen H T, Ichihashi H (1993) Maximum entropy algorithms for uncertainty measures. Int J Uncertain Fuzz Knowl-Based Syst 01(01):69–93

Höhle U (1982) Entropy with respect to plausibility measures. Proceedings of the 12th IEEE Symposium on Multiple-Valued Logic, pp 167–169

Yager RR (1983) Entropy and specificity in a mathematical theory of evidence. Int J Gen Syst 9(4):249–260

Pan L, Deng Y (2020) Probability transform based on the ordered weighted averaging and entropy difference. Int J Comput Commun Control 15(4):3743

Acknowledgements

The work is partially supported by National Natural Science Foundation of China (Grant No. 61973332), JSPS Invitational Fellowships for Research in Japan (Short-term).

Author information


Contributions

Writing-original draft, H.M.; Writing-review & editing, H.M. and Y.D.


Ethics declarations

Competing interests

The authors declare that there is no conflict of interests regarding the publication of this paper.



About this article

Cite this article

Mao, H., Deng, Y. Negation of BPA: a belief interval approach and its application in medical pattern recognition. Appl Intell 52, 4226–4243 (2022). https://doi.org/10.1007/s10489-021-02641-7


DOI: https://doi.org/10.1007/s10489-021-02641-7