Abstract

Quantile regression is a powerful complement to the usual mean regression and becomes increasingly popular due to its desirable properties. In longitudinal studies, it is necessary to consider the intra-subject correlation among repeated measures over time to improve the estimation efficiency. In this paper, we focus on longitudinal single-index models. Firstly, we apply the modified Cholesky decomposition to parameterize the intra-subject covariance matrix and develop a regression approach to estimate the parameters of the covariance matrix. Secondly, we propose efficient quantile estimating equations for the index coefficients and the link function based on the estimated covariance matrix. Since the proposed estimating equations include a discrete indicator function, we propose smoothed estimating equations for fast and accurate computation of the index coefficients, as well as their asymptotic covariances. Thirdly, we establish the asymptotic properties of the proposed estimators. Finally, simulation studies and a real data analysis have illustrated the efficiency of the proposed approach.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Single-index models are becoming increasingly popular due to its flexibility and interpretability. They also can effectively overcome the problem of “curse of dimensionality” through projecting multivariate covariates onto one-dimensional index variate \({\varvec{x}}^T {\beta }\). Longitudinal data frequently occur in the biomedical, epidemiological, social, and economical fields. For longitudinal data, subjects are often measured repeatedly over a given time period. Thus, observations from the same subject are correlated and those from different subjects are often independent. The technique of generalized estimating equation (GEE) proposed by Liang and Zeger (1986) is widely used for longitudinal data. The GEE method produces consistent estimators for the mean parameters through specifying a working structure. However, the main drawback of GEE is that it may lead to a great loss of efficiency when the working covariance structure is misspecified. Thus, it is an interesting topic to model the covariance structure. Recently, the modified Cholesky decomposition has been demonstrated to be effective for modeling the covariance structure. It not only permits more general forms of the correlation structures, but also leads automatically to positive definite covariance matrix. Ye and Pan (2006) utilized the modified Cholesky decomposition to decompose the inverse of covariance matrix and proposed a joint mean–covariance model for longitudinal data. Leng et al. (2010) constructed a semiparametric mean–covariance model through relaxing the parametric assumption, which is more flexible. Zhang and Leng (2012) used a new Cholesky factor to deal with the within-subject structure by decomposing the covariance matrix rather than its inverse. Other related references include Mao et al. (2011), Zheng et al. (2014), Liu and Zhang (2013), Yao and Li (2013), and Liu and Li (2015).

In recent years, some statistical inference methods have been proposed for longitudinal single-index models. Xu and Zhu (2012) proposed a kernel GEE method. Lai et al. (2012) presented the bias-corrected GEE estimation and variable selection procedure for the index coefficients. Zhao et al. (2017) constructed a robust estimation procedure based on quantile regression and a specific correlation structure (e.g., compound symmetry (CS) or the first-order autoregressive (AR(1)). All these articles used some specific correlation structures when taking into account the within-subject correlation. Thus, these methods may result in a loss of efficiency when the true correlation structure is not correctly specified. Recently, Lin et al. (2016) developed a new efficient estimation procedure for single-index models by combining the modified Cholesky decomposition and the local linear smoothing method. Motivated by Leng et al. (2010), Guo et al. (2016) proposed a two-step estimation procedure for single-index models based on the modified Cholesky decomposition and the GEE method. The above two papers are built on mean regression, which is very sensitive to outliers and heavy tail errors. In contrast with mean regression, quantile regression not only has the ability of describing the entire conditional distribution of the response variable, but also can accommodate non-normal errors. Thus, it has emerged as a powerful complement to the mean regression. Although the modified Cholesky decomposition has been well studied for the mean regression models, it is lack of analyzing longitudinal single-index quantile models. In this paper, we use the modified Cholesky decomposition to parameterize the within-subject covariance structure and construct more efficient estimation procedure for the index coefficients and the link function. Compared with existing research results, the new method has several advantages. Firstly, the proposed method does not need to specify the working correlation structure to improve the estimate efficiency. So our approach not only can take the within-subject correlation into consideration, but also permits more general forms of the covariance structures, which indicates that it is more flexible than most of the existing methods. Secondly, since the proposed estimating equations include the discrete quantile score function, we construct new smoothed estimating equations for fast and accurate computation of the parameter estimates. Thirdly, the estimators of the index coefficients and the link function are demonstrated to be asymptotically efficient.

The rest of this article is organized as follows: In Sect. 2, within the framework of independent working structure, we propose the quantile score estimating functions for the index coefficients based on “remove–one–component” method, and the corresponding theoretical properties are also given in this section. In Sect. 3, we apply the modified Cholesky decomposition to decompose the within-subject covariance matrix as moving average coefficients and innovation variances, which can be estimated through constructing two estimating equations. In Sect. 4, more efficient quantile estimating functions are derived based on the estimated covariance matrix. In Sect. 5, extensive simulation studies are carried out to evaluate the finite sample performance of the proposed method. In Sect. 6, we illustrate the proposed method through a real data analysis. Finally, all the conditions and the proofs of the main results are provided in “Appendix.”

2 Estimation procedure under the independent structure

A marginal quantile single-index model with longitudinal data has the following structure

where \({Y_{ij}} = Y\left( {{t_{ij}}} \right) \in \mathbb {R}\) is the jth measurement of the ith subject, \({{\varvec{X}}_{ij}} = {\varvec{X}}\left( {{t_{ij}}} \right) \in \mathbb {R} ^p\), \(g_{0\tau }(\cdot )\) is an unknown differentiable univariate link function, \({\varepsilon _{ij, \tau }}\) is the random error term with an unspecified density function \(f_{ij}(\cdot )\) and \(P\left( {{\varepsilon _{ij,\tau }} < 0} \right) = \tau \) for any i, j and \(\tau \in \left( {0,1} \right) \), and \({\beta }_{0\tau } \) is an unknown parameter vector which belongs to the parameter space

where \({\left\| \cdot \right\| }\) is the Euclidean norm. Without loss of generality, we assume that the true vector \({\beta }\) has a positive component \({\beta _r}\) (otherwise, consider \(-{\beta }\)). For simplicity, we omit \(\tau \) from \({\varepsilon _{ij, \tau }}, {\varvec{\beta }}_{0\tau }\) and \(g_{0\tau }\left( \cdot \right) \) in the rest of this article, but we should remember that they are \(\tau \)-specific. Let \({{\varvec{Y}}_i} = {\left( {{Y_{i1}},\ldots ,{Y_{i{m_i}}}} \right) ^T}\), \({{\varvec{X}}_i} = \left( {{{\varvec{X}}_{i1}},\ldots ,{{\varvec{X}}_{i{m_i}}}} \right) ^T\), and \({{\varvec{\varepsilon }}_i} = {\left( {{\varepsilon _{i1}},\ldots ,{\varepsilon _{i{m_i}}}} \right) ^T}\). In this paper, we assume the number of measurements \(m_i\) is uniformly bounded for each i, which means that n and N (\(N = \sum _{i = 1}^n {{m_i}}\)) have the same order.

2.1 Estimations of \(g_0(\cdot )\) and its first derivative \({g'_0}(\cdot )\)

B-spline is commonly used to approximate the nonparametric function for its efficient in function approximation and numerical computation, which can refer to Ma and He (2016), Guo et al. (2016), and Zhao et al. (2017). In this paper, we adopt B-spline basis functions to approximate the unknown link function \(g_0(\cdot )\). We assume \({\varvec{X}}_{ij}^T{\beta }\) is confined in a compact set [a, b]. Consider a knot sequence with \(N_n\) interior knots, denoted by \({\xi _1} = \cdots =a = {\xi _q}< {\xi _{q + 1}}< \cdots< {\xi _{q + {N_n}}} < b = {\xi _{q + {N_n} + 1}} = \cdots = {\xi _{2q + {N_n}}}\). We set the B-spline basis functions as \({{\varvec{B}}_q}(u) = {\left( {{B_{1,q}}(u),\ldots ,{B_{{J_n,q}}}(u)} \right) ^T}\) with the order q (\(q\ge 2\)) and \(J_n=N_n+q\). We approximate the link function \(g_0(u)\) by \(g_0\left( u \right) \approx {{\varvec{B}}_q{\left( u \right) ^T}}{\varvec{\theta }}\), where \({\varvec{\theta }} = {\left( {{\theta _1},\ldots ,{\theta _{{J_n}}}} \right) ^T}\) is the spline coefficient vector. For a given \({\beta }\), we can obtain the estimator \({\hat{{\varvec{\theta }}}}\left( {\beta } \right) \) of \({\varvec{\theta }}\) by minimizing the following objective function

where \({\rho _\tau }\left( u \right) = u\left\{ {\tau - I\left( {u < 0} \right) } \right\} \) is the quantile loss function. Then, the link function \(g_0(\cdot )\) is estimated by the spline functions \(\hat{g}\left( u;{\beta } \right) = {{\varvec{B}}_q{\left( u \right) ^T}}{\hat{{\varvec{\theta }}}} \left( {\beta } \right) \). Following Ma and Song (2015), the estimator of \({{ { g'_0(\cdot )}}}\) is defined by

where \({{\hat{\omega } }_s}\left( {\beta } \right) = {{\left( {q - 1} \right) \left\{ {{{ \hat{\theta } }_s}\left( {\beta } \right) - {{ \hat{\theta } }_{s - 1}}\left( {\beta } \right) } \right\} } \Big /{\left( {{\xi _{s + q - 1}} - {\xi _s}} \right) }}\) for \(2\le s\le J_n\). Thus, we have \({{ {\hat{g}'}}}({u};{\beta } )={\varvec{B}}_{q-1}(u)^T {\varvec{D}}_1 {{\hat{{\varvec{\theta }}}}} ({\beta })\), where \({\varvec{B}}_{q-1}(u)=\left( {{B_{s,q - 1}}(u):2\le s\le J_n} \right) ^T\) is the \((q-1)\)th-order B-spline basis and

2.2 The profile-type estimating equations for \({\beta }\)

The parameter space \({{{\varvec{\varTheta }}}}\) means that \({\beta }\) is on the boundary of a unit ball. Therefore, \(g_0\left( {{\varvec{X}}_{ij}^T{\beta } } \right) \) is non-differential at the point \({\beta }\). However, we must use the derivative of \(g_0\left( {{\varvec{X}}_{ij}^T{\beta } } \right) \) with respect to \({\beta }\) when constructing the profile-type estimating equations. To solve the above problem, we employ “remove–one–component” method (Cui et al. 2011) to transform the boundary of a unit ball in \(\mathbb {R} ^p\) to the interior in \(\mathbb {R} ^{p-1}\). Specifically, let \({{\beta } ^{(r)}} = {\left( {{\beta _1},\ldots ,{\beta _{r - 1}},{\beta _{r + 1}},\ldots ,{\beta _p}} \right) ^T}\) be a \(p-1\) dimensional vector by removing the rth component \(\beta _{r}\) in \({\beta }\). Then, \({\beta }\) can be rewritten as \({\beta } ={ {\beta }} ({\beta }^{(r)} ) = {\left( {{\beta _1},\ldots ,{\beta _{r - 1}},{{\left( {1 - {{\left\| {{{\beta } ^{(r)}}} \right\| }^2}} \right) }^{{1/2}}},{\beta _{r + 1}},\ldots ,{\beta _p}} \right) ^T}\) and \({\beta } ^{(r)}\) belongs to the parameter space

So \({\beta }\) is infinitely differentiable with respect to \({\beta }^{(r)}\) and the Jacobian matrix is

where \({\varsigma _r} = - {\left( {1 - {{\left\| {{{\beta } ^{(r)}}} \right\| }^2}} \right) ^{ - {1 / 2}}}{{\beta } ^{(r)}}\) and \({\varsigma _s}\left( {1 \le s \le p,s \ne r} \right) \) is a \(\left( p-1 \right) \times 1\) unit vector with sth component 1.

Motivated by the idea of GEE (Liang and Zeger 1986), together with the estimators of \(g_0(\cdot )\) and \({g'_0}(\cdot )\), we construct the profile-type estimating equations for \(p-1\) dimensional vector \({\beta }^{(r)}\)

where \({\hat{{\varvec{G'}}}}\left( {{\varvec{X}}_i}{\beta };{\beta }\right) = {\mathrm{diag}}\left\{ {{ \hat{g}'}\left( {{\varvec{X}}_{i1}^T{\beta } } ;{\beta }\right) ,\ldots , {\hat{g}'}\left( {{\varvec{X}}_{i{m_i}}^T{\beta } } ;{\beta }\right) } \right\} \), \({\psi _\tau }\left( u \right) = I\left( {u < 0} \right) -\tau \) is the quantile score function, \({\psi _{{\tau }}}\left( {{{\varvec{u}}_i}} \right) = {\left( {{\psi _{{\tau }}}\left( {{u_{i1}}} \right) ,\ldots ,{\psi _{{\tau }}}\left( {{u_{i{m_i}}}} \right) } \right) ^T}\), \(\hat{g}\left( {{\varvec{X_i}}{\beta } ;{\beta } } \right) = {\left( {\hat{g}\left( {{\varvec{X}}_{i1}^T{\beta } ;{\beta } } \right) ,\ldots ,\hat{g}\left( {{\varvec{X}}_{i{m_i}}^T{\beta } ;{\beta } } \right) } \right) ^T}\), \({{\hat{{\varvec{X}}}}_i} = {\left( {{{\hat{{\varvec{X}}}}_{i1}},\ldots ,{{\hat{{\varvec{X}}}}_{i{m_i}}}} \right) ^T}\), \({{\hat{{\varvec{X}}}}_{ij}} = {{\varvec{X}}_{ij}} - \hat{E}\left( {{{\varvec{X}}_{ij}}\left| {\varvec{X}}_{ij}^T {\beta }\right. } \right) \) and \(\hat{E}\left( {{{\varvec{X}}_{ij}}\left| {\varvec{X}}_{ij}^T {\beta } \right. } \right) \) is the spline estimator of \(E\left( {{{\varvec{X}}_{ij}}\left| {\varvec{X}}_{ij}^T {\beta }_0 \right. } \right) \). In estimating Eq. (2), the term \({\varvec{\varLambda }}_i = {\mathrm{diag}}\left\{ {{f_{i1}}\left( 0 \right) ,\ldots ,{f_{i{m_i}}}\left( 0 \right) } \right\} \) describes the dispersions in \(\varepsilon _{ij}\). In some cases when \(f_{ij}\) is difficult to estimate, \({\varvec{\varLambda }}_i\) can be simply treated as an identity matrix with a slight loss of efficiency (Jung 1996). We define the solution of (2) as \({\hat{{\beta }}}^{(r)}\) and then use the fact \({\beta _r} = \sqrt{1 - {{\left\| {{{\beta } ^{(r)}}} \right\| }^2}} \) to obtain \({{\hat{{\beta }}}}\). The asymptotic property of \({{\hat{{\beta }}}}\) is given in Lemma 3 of “Appendix.”

2.3 Computational algorithm

Solving estimating Eq. (2) faces some interesting challenges due to the discontinuous indicator function. To overcome the calculation difficulty, we approximate \({\psi _\tau }\left( \cdot \right) \) by a smooth function \({\psi _{h\tau } }\left( \cdot \right) \) based on the idea of Wang and Zhu (2011). Define \(G\left( x \right) = \int _{u < x} {K\left( u \right) } du\) and \({G_h}\left( x \right) = G\left( {{x \big / h}} \right) \), where \(K\left( \cdot \right) \) is a kernel function and h is a positive bandwidth parameter. Then, we approximate \({\psi _\tau }\left( {{Y_{ij}} - \hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) } \right) \) by \({\psi _{h\tau }}\left( {{Y_{ij}} - \hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) } \right) = 1-{G_h}\left( {Y_{ij}}-\hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) \right) - \tau \). Therefore, based on the approximation, estimating Eq. (2) can be replaced by the following smoothed estimating equations

We define the solution of (3) as \({\tilde{{\beta }}^{(r)}}\). Since (3) is nonlinear equation about \({\beta }^{(r)} \), the Fisher–Scoring iterative algorithm can be used to solve it. Specifically, the iterative algorithm is described as follows:

Step 0 Start with an initial value \({\varvec{ \beta }} ^{(0)}\), which is obtained by Ma and He (2016).

Step 1 Set \(k=0\). Use the current estimate \({\beta } ^{\left( k \right) }\) and minimize \(L_n({\varvec{\beta ^{(k)}}};{\varvec{\theta }})\) with respect to \({\varvec{\theta }}\) to obtain the estimator \({\hat{{\varvec{\theta }}}}^{(k)}\). Then, we can obtain \(\hat{g}^{(k)}\left( u;{\beta }^{(k)} \right) ={{\varvec{B_q}}{{\left( u \right) }^T}{\hat{{\varvec{\theta }}}}^{(k)} }\) and \({\hat{g}'^{(k)}} \left( u ;{\beta }^{(k)}\right) = {{{\varvec{ B}}}_{q - 1}}{\left( u \right) ^T}{{\varvec{D_1}}}{\hat{{\varvec{\theta }}}}^{(k)}\).

Step 2 Utilize the estimators \(\hat{g}^{(k)}\) and \({\hat{g}'^{(k)}}\) obtained by Step 1; \({\left( {{{\beta } ^{(r)}}} \right) ^{(k)}}\) can be updated by

where

and

Step 3 Set \(k=k+1\) and repeat Steps 1 and 2 until convergence.

Step 4 With the final estimators \({\tilde{{\beta }}^{(r)}}\) and \({\hat{{\varvec{\theta }}}}\) obtained from Step 3, we can get the final estimator of \( g_0\left( u \right) \) by \(\hat{g}\left( {u;{\tilde{{\beta }}} } \right) ={{\varvec{B}}_q}{({u})^T}{{{\hat{{\varvec{\theta }}}}}}({\tilde{{\beta }}} )\), where \({{\tilde{{\beta }}}}\) is obtained by the fact \({\beta _r} = \sqrt{1 - {{\left\| {{{\beta } ^{(r)}}} \right\| }^2}} \).

Remark 1

If the sum of \(\left| {\left( {{{\beta } ^{(r)}}} \right) ^{(k + 1)}} - {\left( {{{\beta } ^{(r)}}} \right) ^{(k)}}\right| \) is smaller than a cutoff value (such as \(10^{-6}\)), we stop the iteration. Our simulation studies indicate that the Fisher–Scoring algorithm can find the numerical solution of (3) quickly.

2.4 Asymptotic properties

Let \(g_0\left( {u } \right) \) and \({\beta }_0\) be the true values of \(g\left( {u } \right) \) and \({\beta }\), respectively. In the following theorems, we need to restrict \({{\beta } } \in {\varvec{\varTheta }}_n\), where \({{\varvec{\varTheta }}_{n}} = \left\{ {{{\beta } } \in {\varvec{\varTheta }}:\left\| {{\beta } }-{{\beta } _0} \right\| \le C {n^{{{ - 1} / 2}}}} \right\} \) for some positive constant C. Since we anticipate that the estimators of \({\beta }_0\) are root-n consistent, we should look for the solutions of (3) which involve \({\beta }\) distant from \({\beta }_0 \) by order \(n^{-1/2}\). Define

and

where \({{\varvec{\varSigma }}_{\tau i}} = Cov\left( {{\psi _\tau }\left( {{{\varvec{\varepsilon }}_i}} \right) } \right) \) , \({{\varvec{G'}}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) } = {\mathrm{diag}}\left\{ {{ g'_0}\left( {{\varvec{X}}_{i1}^T{{\beta } _0}} \right) ,\ldots ,{g'_0}\left( {{\varvec{X}}_{i{m_i}}^T{{\beta } _0}} \right) } \right\} \), \({{\tilde{{\varvec{X}}}}_i} = {\left( {{{\tilde{{\varvec{X}}}}_{i1}},\ldots ,{{\tilde{{\varvec{X}}}}_{i{m_i}}}} \right) ^T}\) and \({{\tilde{{\varvec{X}}}}_{ij}} = {{\varvec{X}}_{ij}} - E\left( {{{\varvec{X}}_{ij}}\left| {{\varvec{X}}_{ij}^T{{\beta } _0}} \right. } \right) \).

Theorem 1

Under conditions (C1)–(C7) in “Appendix,” and the number of knots satisfies \({n^{{1/{(2d + 2)}}}} \ll {N_n} \ll {n^{{1/4}}}\), where d is given in the condition (C2) of “Appendix,” we have

as \(n\rightarrow \infty \), where \(\mathop \rightarrow \limits ^d\) denotes convergence in distribution.

Theorem 2

Let \({\varvec{\theta }} ^0\) be the best approximation coefficient of \(g_0\left( {u } \right) \) in the B-spline space. When \({\beta }\) is a known constant \({\beta }_0\) or estimated to the order \({{O_p}\left( {{n^{{{ - 1}/2}}}} \right) }\), under conditions (C1)–(C7) in “Appendix,” and the number of knots satisfies \({n^{{1/{(2d + 2)}}}} \ll {N_n} \ll {n^{{1/ 4}}}\), then (i) \(\left| {{{\hat{g}}}({u};{{\beta } }) - {g_0}({u})} \right| = O_p\left( {\sqrt{{{{N_n}} / n}} + N_n^{ - d}} \right) \) uniformly in \(u \in \left[ {a,b} \right] \); and (ii) under \({n^{{1 / {(2d + 1)}}}} \ll {N_n} \ll {n^{{1 / 4}}}\),

where \(\sigma _n^2\left( u \right) = {\varvec{B}}_q^T\left( u \right) {{\varvec{V}}^{ - 1}}\left( {{{\beta } _0}} \right) \sum _{i = 1}^n {{\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{\varLambda }} _{ i}}{{\varvec{\varSigma }} _{\tau i}}{{\varvec{\varLambda }} _{ i}}{{\varvec{B}}_q}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) } {{\varvec{V}}^{ - 1}}\left( {{{\beta } _0}} \right) {{\varvec{B}}_q}\left( u \right) \), \(\check{g} \left( u;{\beta } \right) = {\varvec{B}}_q^T\left( u \right) {{\varvec{\theta }} ^0\left( {\beta }\right) }\), \({\varvec{B}}_q\left( {{{\varvec{X}}_i}{\beta }_0 } \right) = {\left( {{{\varvec{B}}_q}\left( {{\varvec{X}}_{i1}^T{\beta }_0 } \right) ,\ldots ,{{\varvec{B}}_q}\left( {{\varvec{X}}_{i{m_i}}^T{\beta }_0 } \right) } \right) ^T}\) and \( {\varvec{V}}\left( {{{\beta } _0}} \right) = \sum _{i = 1}^n {{\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{\varLambda }} _{ i}}{{\varvec{\varLambda }} _{ i}}{{\varvec{B}}_q}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) }. \)

3 Modeling the within-subject covariance matrix via the modified Cholesky decomposition

To incorporate the correlation within subjects, following the idea of GEE (Liang and Zeger 1986), we can use the estimating equations that take the form

Unfortunately, estimating Eq. (4) includes an unknown covariance matrix \({\varvec{\varSigma }} _{\tau i}\). So our primary task is to estimate it. To guarantee the positive definiteness of \( {\varvec{\varSigma }} _{\tau i}\), we firstly apply the modified Cholesky decomposition to decompose \( {\varvec{\varSigma }} _{\tau i}\) as

where \({\varvec{L}}_{\tau i}\) is a lower triangular matrix with 1’s on its diagonal and \({\varvec{D}}_{\tau i}\) is an \(m_i \times m_i\) diagonal matrix. Let \({{\varvec{e}}_{\tau i}} ={\varvec{L_{\tau i}}}^{-1} {\psi _\tau }\left( {{{\varvec{\varepsilon }} _{i}}} \right) = {\left( {{e_{\tau ,i1}},\ldots ,{e_{\tau ,i{m_i}}}} \right) ^T}\), so we have \(Cov\left( {{{\varvec{e}}_{\tau i}}} \right) ={\varvec{L}}_{\tau i}^{-1} {\varvec{\varSigma }} _{\tau i} \left( {\varvec{L}}_{\tau i}^{-1}\right) ^T= {{\varvec{D}}_{\tau i}} \buildrel \varDelta \over = diag\left( { d_{\tau , i1}^2,\ldots ,d _{\tau ,i{m_i}}^2} \right) \), where \(d _{\tau ,ij}^2\) is called as innovation variance. Furthermore, we assume that the below diagonal entries of \({\varvec{L}}_{\tau i}\) are \(l_{\tau , ijk} (k<j=2,\ldots ,m_i)\), and then \({{\varvec{e}}_{\tau i}} ={\varvec{L_{\tau i}}}^{-1} {\psi _\tau }\left( {{{\varvec{\varepsilon }} _{i}}} \right) \) can be rewritten as

where \(l_{\tau , ijk}\) are the so-called moving average coefficients. In this paper, we define the notation \(\sum _{k = 1}^{0} \) means zero when \(j=1\). The main advantage of the modified Cholesky decomposition is that \(l_{\tau , ijk}\) and \(d _{\tau , ij}^2\) are unconstrained. In order to estimate the moving average coefficients \({l_{\tau ,ijk}} \) and the innovation variances \(d _{\tau ,ij}^2\), we construct two generalized linear models as follows:

where \({{\gamma }_\tau } = {\left( {{\gamma _{\tau 1}},\ldots ,{\gamma _{\tau p_1}}} \right) ^T}\) and \({{\varvec{\lambda }}_\tau } = {\left( {{\lambda _{\tau 1}},\ldots ,{\lambda _{\tau p_2}}} \right) ^T}\). Based on the idea of Zhang and Leng (2012), the covariates \({\varvec{z}}_{ij}\) are those used in regression analysis, and \({\varvec{w}}_{ijk }\) is usually adopted as a polynomial of time difference \(t_{ij}-t_{ik}\). By adopting the idea of the GEE approach, we construct two estimating equations for \({{\gamma }_\tau }\) and \({\varvec{\lambda }} _\tau \) by

where \({{\partial {{\varvec{e}}_{\tau i}^T}} \big /{\partial {{\gamma }_\tau }}}\) is a \(p_1\times m_i\) matrix with the first column zero and the jth \(j\ge 2\) column \({{\partial {e_{\tau ,ij}}} \big / {\partial {{\gamma }_\tau }}} = - \sum _{k = 1}^{j - 1} {\left[ {{{\varvec{w}}_{ijk}}{e_{\tau ,ik }} + {l_{\tau ,ijk }}{{\partial {e_{\tau ,ik }}} \big / {\partial {{\gamma }_\tau }}}} \right] } \), \({{\varvec{z}}_i} = {\left( {{{\varvec{z}}_{i1}},\ldots ,{{\varvec{z}}_{i{m_i}}}} \right) ^T}\) and \({\varvec{d}}_{\tau i}^2 = {\left( {d _{\tau ,i1}^2,\ldots ,d _{\tau ,i{m_i}}^2} \right) ^T}\). Here, \({\varvec{W}}_{\tau i}\) is the covariance matrix of \({\varvec{e}}_{\tau i}^2\), namely \({{\varvec{W}}_{\tau i}} = Cov\left( {{\varvec{e}}_{\tau i}^2} \right) \). The true \({{\varvec{W}}_{\tau i}}\) is unknown and can be approximated by a sandwich “working” covariance structure \({{\varvec{W}}_{\tau i}} = {\varvec{A}}_{\tau i}^{1/2}{{\varvec{R}}_{\tau i}}\left( \rho \right) {\varvec{A}}_{\tau i}^{1/2}\) (Liu and Zhang 2013), where \({{\varvec{A}}_{\tau i}} = 2diag\left( {d _{\tau ,i1}^4,\ldots ,d _{\tau ,i{m_i}}^4} \right) \) and \({{\varvec{R}}_{\tau i}}\left( \rho \right) \) mimics the correlation between \(e_{\tau , ij}^2\) and \(e_{\tau ,ik}^2\)\((j\ne k)\) with a new parameter \(\rho \). The common structures of \({{\varvec{R}}_{\tau i}}\left( \rho \right) \) contain the compound symmetry and the first-order autoregressive. We assume that \({\hat{{\gamma }}}_\tau \) and \({\hat{{\varvec{\lambda }}}}_\tau \) are the solutions of estimating Eqs. (6) and (7). Liu and Zhang (2013) have pointed out that \({\hat{{\gamma }}}_\tau \) and \({\hat{{\varvec{\lambda }}}}_\tau \) are not sensitive to the parameter \(\rho \). So, we take \(\rho =0\) in our simulation studies and real data analysis.

Let \({\gamma }_{\tau 0}\) and \({\varvec{\lambda }}_{\tau 0}\) be the true values of \({\gamma }_\tau \) and \({\varvec{\lambda }}_\tau \), respectively. Meanwhile, we define the covariance matrix of the function \({{{{\left( {{{\varvec{U}}_1}{{\left( {\gamma }_{\tau 0} \right) }^T},{{\varvec{U}}_2}{{\left( {\varvec{\lambda }}_{\tau 0} \right) }^T}} \right) }^T}} \Bigg / {\sqrt{n} }}\) by \({{\varvec{V}}_{\tau n}} = {\left( {{\varvec{v}}_{\tau n}^{jl}} \right) _{j,l = 1,2}}\), where \({\varvec{v}}_{\tau n}^{jl} = {n^{ - 1}}Cov\left( {{{\varvec{U}}_j},{{\varvec{U}}_l}} \right) \) for \(j,l=1,2\). Furthermore, we assume that the covariance matrix \({\varvec{V}}_{\tau n}\) is positive definite at the true value \(({\gamma }_{\tau 0}^T,{\varvec{\lambda }}_{\tau 0}^T)^T\) and

where \(\mathop \rightarrow \limits ^p\) denotes convergence in probability. Then, the proposed estimators \(\left( {{\hat{{\gamma }}}_\tau ^T,{\hat{{\varvec{\lambda }}}}_\tau ^T} \right) ^T\) are \(\sqrt{n} \)-consistent and have the following asymptotic distribution

The proof is omitted since it is similar to the proof of Theorem 1 of Lv et al. (2017). Now, we show that the estimated covariance matrix \({\hat{{\varvec{\varSigma }}}}_{\tau i}\) is consistent. For a matrix \({\varvec{A}}\), \(\left\| {\varvec{A}} \right\| = {\left[ {tr\left( {{\varvec{A}}{{\varvec{A}}^T}} \right) } \right] ^{{1 / 2}}}\) denotes its Frobenius norm.

Theorem 3

Let \({\varvec{\varSigma }}_{\tau i}\) and \({\hat{{\varvec{\varSigma }}}}_{\tau i}\) be the true and estimated covariance matrix within the ith cluster, respectively. Suppose that the regularity conditions in “Appendix” hold. If the covariance matrix has the model structure (5) , we have \(\left\| {{\hat{{\varvec{\varSigma }}}}_{\tau i}-{\varvec{\varSigma }}_{\tau i}} \right\| = {O_p}\left( {{n^{{{ - 1} / 2}}}} \right) \).

4 Efficient estimating equations for the index coefficients \({\beta }\) and the link function \(g(\cdot )\)

Based on the discussions in Sect. 3, the covariance matrix \({\varvec{\varSigma }}_{\tau i}\) can be estimated by \({{\hat{{\varvec{\varSigma }}}} _{\tau i}} = {\hat{{\varvec{L}}}_{\tau i}}{\hat{{\varvec{D}}}_{\tau i}}\hat{{\varvec{L}}}_{\tau i}^T\), where \(\hat{{\varvec{D}}}_{\tau i}= diag\left( {\hat{d} _{\tau ,i1}^2,\ldots ,\hat{d} _{\tau ,i{m_i}}^2} \right) \) with \(\hat{d} _{\tau ,ij}^2= \exp \left( {\varvec{z}}_{ij}^T{\hat{{\varvec{\lambda }}}}_\tau \right) \) and the (j, k) element of \({\hat{{\varvec{L}}}}_{\tau i}\) is \(\hat{l}_{\tau ,ijk}= {\varvec{w}}_{ijk}^T{{\hat{{\gamma }}}_\tau }\) for \(k<j=2,\ldots ,m_i\). Firstly, for a given \({\beta }\), we construct efficient smoothed estimating equations of \({\varvec{\theta }}\) by

Then, the efficient smoothed estimating equations of \({\beta }^{(r)}\) are constructed similarly by

where \({\bar{{\varvec{\theta }}}}({\beta })\) is the solution of (8), \({\varvec{{\bar{G}'}}}\left( {{\varvec{X}}_i}{\beta };{\beta }\right) = {\mathrm{diag}}\Big \{ { \bar{g}'}\left( {{\varvec{X}}_{i1}^T{\beta } } ;{\beta }\right) ,\ldots , {\bar{g}'}\Big ( {{\varvec{X}}_{i{m_i}}^T{\beta } } ;{\beta }\Big ) \Big \}\) and \({\bar{g}'}(u;{\beta } ) = \sum _{s = 1}^{{J_n}} {{ B'_{s,q}}} (u){{ \bar{\theta } }_s}\left( {\beta } \right) \). Let \({\bar{{\beta }}^{(r)}}\) be the resulting estimator of (9). Therefore, the efficient estimator of \( g_0\left( u \right) \) can be achieved by \(\bar{g}\left( {u;{\bar{{\beta }}} } \right) = {{\varvec{B}}_q}{\left( u \right) ^T}{\bar{{\varvec{\theta }}}} \left( {\bar{{\varvec{{\beta }}}}} \right) \).

Remark 2

There are two main differences between the proposed estimating Eqs. (8) and (9) with Zhao et al. (2017)’s estimating Eq. (6). On the one hand, we apply a different method to smooth the discontinuous estimating equations. On the other hand, Zhao et al. (2017) applied a working correlation matrix \({\varvec{C}}_i\) which need to be specified to improve the estimation efficiency. Therefore, Zhao et al. (2017)’s approach cannot permit more general forms of the correlation structures and results in a loss of efficiency when the \({\varvec{C}}_i\) is far from the true correlation structure.

Theorem 4

Under conditions (C1)–(C7) in “Appendix,” and the number of knots satisfies \({n^{{1 / {(2d + 2)}}}} \ll {N_n} \ll {n^{{1/4}}}\), we have

as \(n\rightarrow \infty \), where

and the other symbols are the same as that in Theorem 1.

Let \({{\varvec{\varUpsilon }} _i} = {{\varvec{\varPhi }} ^{ - 1}}{\varvec{J}}_{{\beta } _0^{(r)}}^T{\tilde{{\varvec{X}}}}_i^T{\varvec{G}}'\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{\varLambda }} _i}{\varvec{\varSigma }} _{\tau i}^{{{ 1} / 2}} - {{\varvec{\varGamma }} ^{ - 1}}{\varvec{J}}_{{\beta } _0^{(r)}}^T{\tilde{{\varvec{X}}}}_i^T{\varvec{G}}'\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{\varLambda }} _i}{\varvec{\varSigma }} _{\tau i}^{{{ - 1}/2}}\). Then,

Since \({{\varvec{\varUpsilon }} _i}{\varvec{\varUpsilon }} _i^T\) is nonnegative definite, we have

Thus, \({{\varvec{\varGamma }} ^{ - 1}} \le {{\varvec{\varPhi }} ^{ - 1}}{\varvec{\varPsi }} {{\varvec{\varPhi }} ^{ - 1}}\). This implies that \({\bar{{\beta }}}\) has smaller asymptotic covariance matrix than that of \({\tilde{{\beta }}}\). So the proposed estimator \({\bar{{\beta }}}\) is asymptotically more efficient than \({\tilde{{\beta }}}\).

Motivated by (9), a sandwich formula for estimating the covariance of \({\bar{{\beta }}}\) is

where

and

Theorem 5

When \({\beta }\) is a known constant \({\beta }_0\) or estimated to the order \({{O_p}\left( {{n^{{{ - 1}/2}}}} \right) }\), under conditions (C1)–(C7) in “Appendix,” and the number of knots satisfies \({n^{{1 / {(2d + 2)}}}} \ll {N_n} \ll {n^{{1 /4}}}\), then (i) \(\left| {{\bar{g}}({u};{{\beta } }) - {g_0}({u})} \right| = O_p\left( {\sqrt{{{{N_n}} \big / n}} + N_n^{ - d}} \right) \) uniformly in \(u \in \left[ {a,b} \right] \); and (ii) under \({n^{{1 /{(2d + 1)}}}} \ll {N_n} \ll {n^{{1/4}}}\),

where \(\sigma _n^{*2}\left( u \right) = {\varvec{B}}_q^T\left( u \right) {{\varvec{V}}^{ *- 1}}\left( {{{\beta } _0}} \right) {{\varvec{B}}_q}\left( u \right) , {\varvec{V}}^*\left( {{{\beta } _0}} \right) = \sum _{i = 1}^n {\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{\varLambda }} _{ i}}{\varvec{\varSigma }} _{\tau i}^{ - 1}{{\varvec{\varLambda }} _{ i}}{{\varvec{B}}_q}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) .\)

Remark 3

We can adopt a similar iterative algorithm given in Sect. 2.3 to find the solutions of estimating Eqs. (8) and (9). Here, we omit it for saving space. Furthermore, we also can prove that \({\bar{g}}({u};{{\beta } })\) is asymptotically more efficient than \({\hat{g}}({u};{{\beta } })\) by using the above similar method.

5 Simulation studies

In this section, we conduct simulation studies to compare our approach with some existing methods. The major aim is to show that the proposed method not only can deal with complex correlation structures, but also produces more efficient estimates for the index coefficients and the link function. Specifically, we compare the proposed estimators \({\bar{{\beta }}}\) and \(\bar{g}\) (denoted as \({\hat{{\beta }}}_{pr}\) and \(\hat{g}_{pr}\)) with the other three types of estimators: (i) the estimator proposed by Ma and He (2016) without using “remove–one–component” method (denoted as \({\hat{{\beta }}}_{ma}\) and \(\hat{g}_{ma}\)); (ii) the estimators \({\tilde{{\beta }}}\) and \(\hat{g}\) (denoted as \({\hat{{\beta }}}_{in}\) and \(\hat{g}_{in}\)) without considering the within-subject correlation, which are given in Sect. 2.3; (iii) the estimators proposed by Zhao et al. (2017) with the AR(1) working correlation structure (denoted as \({\hat{{\beta }}}_{ar}\) and \(\hat{g}_{ar}\)) and the compound symmetry structure (denoted as \({\hat{{\beta }}}_{cs}\) and \(\hat{g}_{cs}\)), which involve a tuning parameter \(h_1\). We set \(h_1=n^{-1/2}\) in our simulations and real data analysis according to their suggestions. Similar to Wang and Zhu (2011), we smooth the quantile score function by the following second-order (\(\nu =2\)) Bartlett kernel

In order to achieve good numerical performances, we need to select several parameters appropriately. Firstly, we fix the spline order to be \(q=4\), namely we use cubic B-splines to approximate the nonparametric link function in our numerical examples. Meanwhile, we use equally spaced knots with the number of interior knots \(N_n=[n^{1/(2q+1)}]\) which satisfies theoretical requirement. The similar strategy had been adopted by Ma and Song (2015). Secondly, Wang and Zhu (2011) had proved that the smoothed approach is robust to the bandwidth h. Thus, we fix \(h=n^{-0.3}\) which satisfies the theoretical requirement \( n{h^{2\nu }} \rightarrow 0\) with \(\nu =2\).

Example 1

Similar to Ma and He (2016), we generate the data from the following longitudinal single-index regression model

where \(\delta =0.5\), \(g_{0}(u)=\sin \left( {\frac{{0.2 \pi \left( {u - A} \right) }}{{B - A}}} \right) \) with \(A = \sqrt{3} /2 - 1.645/\sqrt{12} ,B = \sqrt{3} /2 + 1.645/\sqrt{12}\), \({\beta }_0=\left( \beta _{01},\beta _{02},\beta _{03} \right) ^T=\left( 3,2,0.4\right) ^T/\sqrt{3^2+2^2+0.4^2}\), and the covariate \({{\varvec{X}}_{ij}} = {\left( {{X_{ij1}},{X_{ij2}},{X_{ij3}}} \right) ^T}\) follows a multivariate normal distribution \(N(0,{\varvec{\varSigma }})\) with \(({\varvec{\varSigma }})_{k,l}=0.5^{|k-l|}\) for \(1\le k,l\le 3\). Here, we define \({\varepsilon _{ij }} ={\xi _{ij}}- {c_\tau } \), and \(c_\tau \) is the \(\tau \)th quantile of the random error \(\xi _{ij}\), which implies the corresponding \(\tau \)th quantile of \(\varepsilon _{ij}\) is zero. Meanwhile, we consider two error distributions of \({\varvec{\xi }} _{i}=\left( \xi _{i1},\ldots ,\xi _{im_i} \right) ^T\) for assessing the robustness and effectiveness of the proposed method.

Case 1 Correlated normal error, \({{\varvec{\xi }}}_i\) is generated from a multivariate normal distribution \(N({\varvec{0}},{\varvec{\varXi }}_i)\), where \({\varvec{\varXi }}_i\) will be listed later.

Case 2\({{\varvec{\xi }}}_i\) is generated from a multivariate t-distribution with the degree 3 and the covariance matrix \({\varvec{\varXi }}_i\).

By using a similar strategy of Liu and Zhang (2013), the covariance matrix \({\varvec{\varXi }}_i\) is constructed by \({\varvec{\varXi }}_i= {\varvec{L}}_i {\varvec{D}} _i {{\varvec{L}}_i^T}\), where \({\varvec{D}}_i=diag\left( \exp ({\varvec{h}}_{i1}^T{\varvec{\alpha }}),\ldots ,\exp ({\varvec{h}}_{im_i}^T{\varvec{\alpha }}) \right) \) and \( {\varvec{L}}_i \) is a unit lower triangular matrix with (j, k) element \({\varvec{\omega }}_{ijk}^T{{\phi }} \)\((k<j=2,\ldots ,m_i)\), where \({\phi }=\left( -0.3,0.2,0,0.5\right) ^T\), \({\varvec{\alpha }}=\left( -0.3,0.5,0.4,0\right) ^T\), \({\varvec{h}}_{ij}=\left( 1, h_{ij1},h_{ij2},h_{ij3} \right) ^T\), \({\varvec{\omega }}_{ijk}=\left( 1, (t_{ij}-t_{ik}),(t_{ij}-t_{ik})^2,(t_{ij}-t_{ik})^3 \right) ^T\), \(h_{ijl}\) follows a standard normal distribution for \(l=1,2,3\), and \(t_{ij}\) is generated from the standard uniform distribution. In addition, we adopt a similar strategy of Liu and Zhang (2013) to generate unbalanced longitudinal data. Specifically, each subject is measured \(m_i\) times with \(m_i-1 \sim Binomial(11,0.8)\), which results in different numbers of repeated measurements for each subject. For the covariates \({\varvec{z_{ij}}}\) and \({\varvec{w}}_{ijk}\) of the covariance model (5), we set \({\varvec{z}}_{ij}={\varvec{h}}_{ij}\) and \({\varvec{w}}_{ijk}={\varvec{\omega }}_{ijk}\).

Example 2

The model setup is similar to that of Example 1. Firstly, we take \(\delta =1\) and \({\beta }_0=\left( \beta _{01},\beta _{02},\beta _{03} \right) ^T=\left( 3,2,1\right) ^T/\sqrt{14}\). Secondly, we define the covariance matrix \({\varvec{\varXi }}_i\) by \({{\varvec{\varXi }} _i} = {\varvec{\varDelta }} _i^{ - 1}{{\varvec{B}}_i}{\left( {{\varvec{\varDelta }} _i^T} \right) ^{ - 1}}\), where \({\varvec{B}}_i\) is an \(m_i \times m_i\) diagonal matrix with the jth element \(\sin \left( {\pi {\varsigma _{ij}}} \right) /3 + 0.5\) and \(\varsigma _{ij} \sim U(0,2)\), and \({\varvec{\varDelta }} _i \) is a unit lower triangular matrix with (j, k) element \(-\delta _{j,k}^{(i)}\)\((k<j=2,\ldots ,m_i)\), \(\delta _{j,k}^{(i)} = 0.2 + 0.5\left( {{t_{ij}} - {t_{ik}}} \right) \) and \(t_{ij} \sim U(0,1)\). For the covariates \({\varvec{z}}_{ij}\) and \({\varvec{w}}_{ijk}\) of the regression model (5), we set \({\varvec{z}}_{ij}=\left( 1, t_{ij},t_{ij}^2,t_{ij}^3 \right) ^T\), and \({\varvec{w}}_{ijk}\) is the same as that in Example 1. Meanwhile, we set \(m_i=12\), but each element has \(20\%\) probability of being missing at random, which leads to unbalanced longitudinal data. Other settings are the same as that in Example 1.

Example 3

For a clear comparison, we adopt a similar strategy of Zhao et al. (2017) to construct the covariance matrix \({\varvec{\varXi }}_i\). We define \({\varvec{\varXi }}_i={\varvec{B}}_i^{1/2}{{\varvec{H}}_i}{\varvec{B}}_i^{1/2}\), where \({\varvec{B}}_i\) is given in Example 2 and \({\varvec{H}}_i\) follows either the compound symmetry structure (cs) or the AR(1) structure (ar1) with the correlation coefficient \(\rho =0.85\). In addition, we take \(\delta =1\) and \({\beta }_0=\left( \beta _{01},\beta _{02},\beta _{03} \right) ^T=\left( 3,2,0\right) ^T/\sqrt{13}\). The scheme of generating unbalanced longitudinal data, and \({\varvec{z}}_{ij}\) and \({\varvec{w}}_{ijk}\) are the same as that in Example 2. Other settings are the same as that in Example 1.

Tables 1, 2, 3, 4, and 5 give the biases and the standard deviations (SD) of \({\hat{{\beta }}}_{ma}\), \({\hat{{\beta }}}_{cs}\), \({\hat{{\beta }}}_{ar}\), \({\hat{{\beta }}}_{in}\), and \({\hat{{\beta }}}_{pr}\) at \(\tau =0.5, 0.75\) for \(n=100\) and 400. We can derive the following several observations from these tables. Firstly, it is easy to find that all methods yield unbiased estimators for the index coefficients \({\beta }\), since the corresponding biases are small. Furthermore, \({\hat{{\beta }}}_{pr}\) has smaller bias in most cases. Secondly, the estimator \({\hat{{\beta }}}_{in}\) performs better than \({\hat{{\beta }}}_{ma}\), which indicates “remove–one–component” method leads to more efficient estimators. Thirdly, the proposed smoothed estimator \({\hat{{\beta }}}_{pr}\) performs best (with smallest SD) among all methods. It is not surprised that the SDs of \({\hat{{\beta }}}_{cs}\) and \({\hat{{\beta }}}_{ar}\) are bigger than that of \({\hat{{\beta }}}_{pr}\) for Examples 1 and 2, because \({\hat{{\beta }}}_{cs}\) and \({\hat{{\beta }}}_{ar}\) use misspecified correlation structures for Examples 1 and 2, which results in a loss of efficiency. Fourthly, as far as we know, the correlation structure of \({\psi _\tau }\left( {{{\varvec{\varepsilon }} _i}} \right) \) also has the exchangeable structure if the correlation structure of \({\varvec{\varepsilon }} _i\) is exchangeable. Thus, \({\hat{{\beta }}}_{cs}\) use a correct working correlation structure under the compound symmetry (cs) structure in Table 5. However, \({\hat{{\beta }}}_{pr}\) also has slighter advantage than \({\hat{{\beta }}}_{cs}\). The main reasons are as follows. On the one hand, we adopt another smoothed approach that is different from that of Zhao et al. (2017) to construct the smoothed estimating equations. On the other hand, the moment approach does not work well and yields inaccuracy estimator of the correlation coefficient for unbalanced longitudinal data with large \(m_i\). This leads to a bad estimator of \({\varvec{C}}_i\) (given in Sect. 2.3 of Zhao et al. 2017). Finally, the correlation structure of \({\psi _\tau }\left( {{{\varvec{\varepsilon }} _i}} \right) \) does not possess the AR(1) correlation structure when \({\varvec{\varepsilon }} _i\) has the AR(1) correlation structure. Therefore, \({\hat{{\beta }}}_{cs}\) and \({\hat{{\beta }}}_{ar}\) use the misspecification of correlation structure under the AR(1) correlation structure in Example 3. Therefore, \({\hat{{\beta }}}_{cs}\) and \({\hat{{\beta }}}_{ar}\) perform worse than \({\hat{{\beta }}}_{pr}\). In addition, for the nonparametric link function \(g_0(\cdot )\), we apply the mean squared error (MSE) to evaluate the accuracy of the estimator, which is defined as

where \({{ g}^{(k)}}\left( {{u_{ij}}} \right) \) is the kth estimated value of \(g_0(u_{ij})\). From Table 6, the proposed \(\hat{g}_{pr}\) achieves the smallest MSE among all methods in general, which indicates that \(\hat{g}_{pr}\) outperforms the existing approaches. Overall, the proposed estimators \({\hat{{\beta }}}_{pr}\) and \(\hat{g}_{pr}\) can achieve better efficiency than the existing methods.

In order to evaluate the accuracy of the sandwich formula (10), we give the ratio of sample standard deviation (SD) and the estimated standard error (SE). For brevity, we only list the results of Example 2. In Table 7, “SD” represents the sample standard deviation of 500 estimators of the parameters. It can be taken as the true standard deviation of the resulting estimators. “SE” represents the sample average of 500 estimated standard errors by utilizing the sandwich formula (10). Table 7 indicates that the sandwich formula (10) works well for different situations, especially for large simple size (\(n=400\)), since the value of SD/SE is very close to one. Compared with the method of Zhao et al. (2017), our method provides more accurate variance estimation. In addition, we use \(P_{0.95}\) to stand for the coverage probabilities of 95\(\%\) confidence intervals over 500 repetitions. From Table 7, the proposed estimator \({\hat{{\beta }}}_{pr}\) consistently achieves higher coverage probabilities and it is closer to its nominal level.

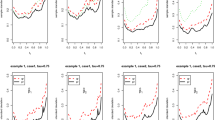

Estimators of \({\beta }=(\beta _{1},\beta _{2},\beta _{3})^T\) and \(g(\cdot )\) at \(\tau =0.5\) (black) and \(\tau =0.75\) (blue) for Case 1 in Example 2 (color figure online)

Finally, it is an interesting question to test whether the proposed estimates \({\bar{{\beta }}}\) and \(\bar{g}(\cdot )\) are sensitive to the dimensions of the covariates \({\varvec{z}}_{ij}\) and \({\varvec{w}}_{ijk}\). For brevity, we only present the results of \({\bar{{\beta }}}\) and \(\bar{g}(\cdot )\) for Case 1 in Example 2. We set \({{\varvec{w}}_{ijk }} = {\left\{ {1,{t_{ij}} - {t_{ik }},\ldots ,{{\left( {{t_{ij}} - {t_{ik }}} \right) }^{p_1-1}}} \right\} ^T}\) and \({\varvec{z}}_{ij}={\left( {1,{t_{ij}},\ldots ,t_{ij}^{p_2-1}} \right) ^T}\), where \((p_1,p_2)\) are given in Fig. 1. From Fig. 1, we can see that \({\bar{{\beta }}}\) and \(\bar{g}(\cdot )\) are not very sensitive to the dimensions \((p_1,p_2)\).

6 Real data analysis

In this section, we illustrate the proposed estimation method through an empirical example which has been studied by Zhang et al. (1998). These data include 34 women whose urine samples were collected in one menstrual cycle and whose urinary progesterone was assayed on alternate days. These women were measured 11–28 times, and it involves a total of 492 observations. Our goal is to explore the relationship between progesterone level and the following covariates: patient’s age and body mass index. Therefore, we define the log-transformed progesterone level as the response (Y), age, and body mass index are taken as the covariates. We use the longitudinal single-index quantile regression model to analyze this data set

where \((Y_{ij},X_{ij1},X_{ij2})\) is the jth observed value at the time \(t_{ij}\) for the ith woman, \(X_{ij1}\) and \(X_{ij2}\) are the standardized variables of age and body mass index, respectively. Meanwhile, repeated measurement time \(t_{ij}\) is rescaled into interval [0, 1]. For the covariance model (5), we take the corresponding covariates as

We consider different \((p_1,p_2)\) for this data set. Six estimators are considered: \(\hat{{\beta }}_{cs}\), \(\hat{{\beta }}_{ar}\), and \(\hat{{\beta }}_{in}\) are given in Sect. 5, and \(\hat{{\beta }}_{32}\), \(\hat{{\beta }}_{23}\), and \(\hat{{\beta }}_{44}\) represent the proposed estimators with \(( p_1=3,p_2=2)\), \((p_1=2,p_2=3)\), and \((p_1=4, p_2=4)\), respectively. The leave–one–out cross-validation procedure is used to evaluate the forecasting accuracy of the estimators. Specifically, we investigate the forecasting accuracy of different methods by using the prediction mean squared error (PMSE), which is defined as

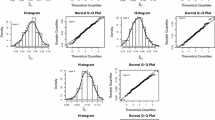

where \({{\varvec{Y}}_i} = {({Y_{i1}},\ldots ,{ Y_{i{m_i}}})^T}, {{\varvec{X}}_i} = {({{\varvec{X}}_{i1}},\ldots ,{{\varvec{X}}_{i{m_i}}})^T},{{\varvec{X}}_{ij}} = {({X_{ij1}},{X_{ij2}})^T}\), and \({\beta } _{( - i)}\) is the estimator which is obtained based on the data of the other 33 subjects except the ith subject. In Table 8, we report the PMSE, the estimated regression coefficients, and the corresponding standard errors which are obtained by the sandwich formula (10). Compared with the method of Zhao et al. (2017), our proposed method possesses smaller standard errors in general. Meanwhile, we see that our method has smaller PMSE, which indicates that the forecasting accuracy of our method is better. In addition, scatter plots of the response and the estimated link functions with 95\(\%\) confidence intervals for \(\tau =0.5\) and 0.75 are displayed in Fig. 2. It is clear that there is a nonlinear trend. Thus, using a nonlinear term in the regression is perhaps more appropriate than using a linear term.

References

Cui, X., Härdle, W. K., Zhu, L. (2011). The EFM approach for single-index models. The Annals of Statistics, 39, 1658–688.

de Boor, C. (2001). A practical guide to splines. New York: Springer.

Guo, C., Yang, H., Lv, J., Wu, J. (2016). Joint estimation for single index mean-covariance models with longitudinal data. Journal of the Korean Statistical Society, 45, 526–543.

Horowitz, J. L. (1998). Bootstrap methods for median regression models. Econometrica, 66, 1327–1351.

Jung, S. (1996). Quasi-likelihood for median regression models. Journal of the American Statistical Association, 91, 251–257.

Lai, P., Wang, Q., Lian, H. (2012). Bias-corrected GEE estimation and smooth-threshold GEE variable selection for single-index models with clustered data. Journal of Multivariate Analysis, 105, 422–432.

Leng, C., Zhang, W., Pan, J. (2010). Semiparametric mean-covariance regression analysis for longitudinal data. Journal of the American Statistical Association, 105, 181–193.

Li, Y. (2011). Efficient semiparametric regression for longitudinal data with nonparametric covariance estimation. Biometrika, 98(2), 355–370.

Liang, K. Y., Zeger, S. L. (1986). Longitudinal data analysis using generalized linear models. Biometrika, 73, 13–22.

Lin, H., Zhang, R., Shi, J., Liu, J., Liu, Y. (2016). A new local estimation method for single index models for longitudinal data. Journal of Nonparametric Statistics, 28, 644–658.

Liu, S., Li, G. (2015). Varying-coefficient mean-covariance regression analysis for longitudinal data. Journal of Statistical Planning and Inference, 160, 89–106.

Liu, X., Zhang, W. (2013). A moving average Cholesky factor model in joint mean-covariance modeling for longitudinal data. Science China Mathematics, 56, 2367–2379.

Lv, J., Guo, C., Yang, H., Li, Y. (2017). A moving average Cholesky factor model in covariance modeling for composite quantile regression with longitudinal data. Computational Statistics and Data Analysis, 112, 129–144.

Ma, S., He, X. (2016). Inference for single-index quantile regression models with profile optimization. The Annals of Statistics, 44, 1234–1268.

Ma, S., Song, P. X.-K. (2015). Varying index coefficient models. Journal of the American Statistical Association, 110, 341–356.

Mao, J., Zhu, Z., Fung, W. K. (2011). Joint estimation of mean-covariance model for longitudinal data with basis function approximations. Computational Statistics and Data Analysis, 55, 983–992.

Pollard, D. (1990). Empirical processes: Theories and applications. Hayward, CA: Institute of Mathematical Statistics.

Wang, H., Zhu, Z. (2011). Empirical likelihood for quantile regression models with longitudinal data. Journal of Statistical Planning and Inference, 141, 1603–1615.

Xu, P., Zhu, L. (2012). Estimation for a marginal generalized single-index longitudinal model. Journal of Multivariate Analysis, 105, 285–299.

Yao, W., Li, R. (2013). New local estimation procedure for a non-parametric regression function for longitudinal data. Journal of the Royal Statistical Society: Series B, 75, 123–138.

Ye, H., Pan, J. (2006). Modelling of covariance structures in generalised estimating equations for longitudinal data. Biometrika, 93, 927–941.

Zhang, D., Lin, X., Raz, J., Sowers, M. (1998). Semiparametric stochastic mixed models for longitudinal data. Journal of the American Statistical Association, 93, 710–719.

Zhang, W., Leng, C. (2012). A moving average Cholesky factor model in covariance modeling for longitudinal data. Biometrika, 99, 141–150.

Zhao, W., Lian, H., Liang, H. (2017). GEE analysis for longitudinal single-index quantile regression. Journal of Statistical Planning and Inference, 187, 78–102.

Zheng, X., Fung, W. K., Zhu, Z. (2014). Variable selection in robust joint mean and covariance model for longitudinal data analysis. Statistica Sinica, 24, 515–531.

Acknowledgements

The authors are very grateful to the editor and anonymous referees for their detailed comments on the earlier version of the manuscript, which led to a much improved paper. This work is supported by the National Social Science Fund of China (Grant No. 17CTJ015).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

In the proofs, C denotes a positive constant that might assume different values at different places. For any matrix \({\varvec{A}} = \left( {{A_{ij}}} \right) _{i = 1,j = 1}^{s,t}\), denote \({\left\| {\varvec{A}} \right\| _\infty } = {\max _{1 \le i \le s}}\sum _{j = 1}^t {\left| {{A_{ij}}} \right| } \). To establish the asymptotic properties of the proposed estimators, the following regularity conditions are needed in this paper.

(C1) Let \(\mathscr {U}=\left\{ {u:u = {\varvec{X}}_{ij}^T{\beta } ,{{\varvec{X}}_{ij}} \in A,i = 1,\ldots ,n,j = 1,\ldots ,{m_i}} \right\} \) and A be the support of \({\varvec{X}}_{ij}\) which is assumed to be compact. Suppose that the density function \(f_{{\varvec{X}}_{ij}^T{\beta }}(u)\) of \({\varvec{X}} _{ij}^T{\beta }\) is bounded away from zero and infinity on \(\mathscr {U}\) and satisfies the Lipschitz condition of order 1 on \(\mathscr {U}\) for \({\beta }\) in a neighborhood of \({\beta }_0\).

(C2) The function \(g_0\left( \cdot \right) \) has the dth bounded and continuous derivatives for some \(d\ge 2\) and \(g_{1s}(\cdot )\) satisfies the Lipschitz condition of order 1, where \(g_{1s}\left( u\right) \) is the sth component of \({\varvec{g}}_1\left( u\right) =E\left( {{{\varvec{X}}_{ij}}\left| {{\varvec{X}}_{ij}^T{{\beta } _0} = u} \right. } \right) \), \(s=1,\ldots ,p\).

(C3) Let the distance between neighboring knots be \({H_{i}} = {\xi _{i}} - {\xi _{i - 1}}\) and \(H = {\max _{1 \le i \le {N_n} + 1}}\left\{ {{H_{i}}} \right\} \). Then, there exists a constant \(C_{0}\) such that \(\frac{{{H}}}{{{{\min }_{1 \le i \le {N_n} + 1}}\left\{ {{H_{i}}} \right\} }} < {C_{0}}, {\max _{1 \le i \le {N_n}}}\left\{ {{H_{i + 1}} - {H_{i}}} \right\} = o({N_n^{ - 1}})\).

(C4) The distribution function \(F_{ij}(t)=p\left( {{Y_{ij}} - g_0\left( {\varvec{X}}_{ij}^T{{\beta } _0} \right) \le t} \right) \) is absolutely continuous, with continuous densities \(f_{ij}\left( \cdot \right) \) uniformly bounded, and its first derivative \({f'_{ij}}\left( \cdot \right) \) uniformly bounded away from 0 and \(\infty \) at the point \(0, i=1,\ldots ,n, j=1,\ldots ,m_i\).

(C5) The eigenvalues of \({\varvec{\varSigma }}_{\tau i}\) are uniformly bounded and bounded away from zero.

(C6) \(K\left( \cdot \right) \) is bounded and compactly supported on \([-1,1]\). For some constant \(C_K\ne 0\), \(K\left( \cdot \right) \) is a \(\nu \)th-order kernel, i.e., \(\int {{u^j}} K\left( u \right) du = 1\) if \(j=0\); 0 if \(1 \le j \le \nu - 1\); \(C_K\) if \(j=\nu \), where \(\nu \ge 2\).

(C7) The positive bandwidth parameter h satisfies \(n{h^{2 \nu }} \rightarrow 0\).

Lemma 1

Under conditions (C1)–(C7), and \(N_n\rightarrow \infty \) and \(n N_n^{-1} \rightarrow \infty \), as \(n\rightarrow \infty \), we have (i) \(\left| {{{\hat{g}}}({u};{{\beta } _0}) - {g_0}({u})} \right| = O_p\left( {\sqrt{{{{N_n}} \big / n}} + N_n^{ - d}} \right) \) uniformly for any \(u\in [a,b]\); and (ii) under \(N_n\rightarrow \infty \) and \(n N_n^{-3} \rightarrow \infty \), as \(n\rightarrow \infty \), \(\left| {{{\hat{g}'}}({u};{{\beta } _0}) - {g'_0}({u})} \right| = O_p\left( {\sqrt{{{N_n^3} \big / n}} + N_n^{ - d + 1}} \right) \) uniformly for any \(u\in [a,b]\).

Proof

Suppose \(g^0(u)={\varvec{B}}_q(u)^T {\varvec{\theta }}^0\) is the best approximating spline function for \({g}_0(u)\). According to the result on page 149 of de Boor (2001) for \({g}_0(u)\) satisfying condition (C2), we have

Let \({\alpha _n} =N_n n^{-1/2} + N_n^{ - d+1/2}\) and set \(\left\| {{{\varvec{u}}_n}} \right\| = C\), where C is a large enough constant. Our aim is to show that for any given \(\delta > 0,\) there is a large constant C such that, for large n, we have

This implies that there is local minimum \({{\hat{{\varvec{\theta }}}} }\) in the ball \(\left\{ {{{\varvec{\theta }} ^0} + {\alpha _n}{\varvec{u}}_n:\left\| {\varvec{u}}_n \right\| \le C} \right\} \) with probability tending to one, such that \(\left\| {{\hat{{\varvec{\theta }}}} - {{\varvec{\theta }} ^0}} \right\| = {O_p}\left( {{\alpha _n}} \right) \). Define \({\varDelta _{ij}} = {{\varvec{B}}_q}{\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) ^T}{{\varvec{\theta }} ^0} - {g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \). Applying the identity

we have

The observed covariates vector is written as \(\mathscr {D}=\Big \{ {\varvec{X}}_{11}^T,\ldots ,{\varvec{X}}_{1{m_1}}^T,\ldots ,{\varvec{X}}_{n1}^T,\ldots ,{\varvec{X}}_{n{m_n}}^T \Big \}^T\). Moreover, we have

and

In addition,

Moreover, the condition that \(\varepsilon _{ij}\) has the \(\tau \)th quantile zero implies \(E\left( {{\psi _\tau }\left( {{\varepsilon _{ij}}} \right) } \right) = 0\). By (11) and condition (C4), we have \(E\left( I\right) = o\left( 1\right) \) and

implies that \(I= {O_p}\left( {\sqrt{{{n\alpha _n^2} \big / {{N_n}}}} } \right) \left\| {{{\varvec{u}}_n}} \right\| \). Based on all the above, \({L_n}\left( {{{\beta } _0};{{\varvec{\theta }} ^0} + {\alpha _n}{{\varvec{u}}_n}} \right) - {L_n}\left( {{{\beta } _0};{{\varvec{\theta }} ^0}} \right) \) is dominated by \(\frac{1}{2}\alpha _n^2{\varvec{u}}_n^T\left( {\sum _{i = 1}^n {\sum _{j = 1}^{{m_i}} {{f_{ij}}\left( 0 \right) {{\varvec{B}}_q}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) {{\varvec{B}}_q}{{\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) }^T}} } } \right) {{\varvec{u}}_n}\) by choosing a sufficiently large \(\left\| {{{\varvec{u}}_n}} \right\| =C\). Therefore, (12) holds and there exists a local minimizer \({\hat{{\varvec{\theta }}}}\) such that

Since \(\left\| {{{\varvec{B}}_q}\left( u \right) {{\varvec{B}}_q}{{\left( u \right) }^T}} \right\| = O\left( 1/N_n \right) \), together with (13), we have

By the triangle inequality, \(\left| {\hat{g}\left( {u;{{\beta } _0}} \right) - {g_0}\left( u \right) } \right| \le \left| {\hat{g}\left( {u;{{\beta } _0}} \right) - {g^0}\left( u \right) } \right| + \big | {g^0}\left( u \right) - {g_0}\left( u \right) \big |\). Therefore, by (11) and (14), we have \(\left| {\hat{g}\left( {u;{{\beta } _0}} \right) - g_0\left( u \right) } \right| = {O_p}\left( {N_n^{ - d} + \sqrt{{{{N_n}} \big / n}} } \right) \) uniformly for every \(u\in [a,b]\).

Since \({{\hat{g}'}}({u};{\beta } _0)={\varvec{B}}_{q-1}(u)^T {\varvec{D}}_1 {\hat{{\varvec{\theta }}}} ({\beta }_0)\), where \({\varvec{B}}_{q-1}(u)=\{B_{s,q}(u): 2\le s \le J_n\}^T\) is the \((q-1)\)th-order B-spline basis and \({\varvec{D}}_1\) is defined in Sect. 2.1. It is easy to prove that \({\left\| {{{\varvec{D}}_1}} \right\| _\infty } = O({N_n})\). Then, employing similar techniques to that used in the proof of \({{\hat{g}}}({u};{\beta } _0)\), we obtain that

uniformly for any \(u\in [a,b]\). This completes the proof. \(\square \)

Lemma 2

Under conditions (C1)–(C7), and the number of knots satisfies \({n^{{1 / {(2d + 1)}}}} \ll {N_n} \ll {n^{{1 / 4}}}\), then for any \(J_n \times 1\)-vector \({\varvec{c}}_n\) whose components are not all 0, we have

where \(\bar{\sigma } _n^2\left( u \right) = {\varvec{c}}_n^T{{\varvec{V}}^{ - 1}}\left( {{{\beta } _0}} \right) \sum _{i = 1}^n {{\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {{\varvec{{\varLambda } }}_i}{{\varvec{\varSigma }} _{\tau i}}{{{\varvec{\varLambda }} }_i}{{\varvec{B}}_q}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) } {{\varvec{V}}^{ - 1}}\left( {{{\beta } _0}} \right) {{\varvec{c}}_n}\) and the definition of \({\varvec{V}}\left( {{{\beta } _0}} \right) \) is given in subsection 2.4.

Proof

When \({\beta }={\beta }_0\), the minimizer \({\hat{{\varvec{\theta }}} }\) of (1) satisfies the score equations

Then, the left-hand side of Eq. (15) becomes

where \({\zeta _{ij}} = {{\varvec{B}}_q}{\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) ^T}{\varvec{\theta }} - {g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \). By (11), taking Taylor’s explanation for \({{F_{ij}}\left( {{\zeta _{ij}}} \right) }\) at 0 gives

By direct calculation of the mean and variance, we can show that \( III = {o_p}\left( {\sqrt{n/{N_n}} } \right) \). This combined with (15)–(17) leads to

It is easy to derive that \(I = \sum _{i = 1}^n {{\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {\varvec{\varLambda }}_i{\psi _\tau }\left( {{{\varvec{\varepsilon }}_i}} \right) }\) is a sum of independent vector, \( E\left( I\right) =0\) and \(Cov\left( I \right) = \sum _{i = 1}^n {{\varvec{B}}_q^T\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) {\varvec{\varLambda }}_i {{\varvec{\varSigma }} _{\tau i}} {\varvec{\varLambda }}_i {{\varvec{B}}_q}\left( {{{\varvec{X}}_i}{{\beta } _0}} \right) } .\) By the multivariate central limit theorem and the Slutsky’s theorem, we can complete the proof. \(\square \)

Lemma 3

Under conditions (C1)–(C7) and the number of knots satisfies \({n^{{1 /{(2d + 2)}}}} \ll {N_n} \ll {n^{{1 / 4}}}\), we have

Proof

Define \({\varvec{H}}_i^T = {{\varvec{J}}_{{{\beta } ^{(r)}}}^T{\hat{{\varvec{X}}}}_i^T {\hat{{\varvec{G}}}'} \left( {{{\varvec{X}}_i}{\beta } ;{\beta } } \right) }\), \({\varvec{S_{i}}}=(S_{i1},\ldots ,S_{im_i})^T\) with \({S_{ij}} ={S_{ij}}\left( {\beta } \right) ={\psi _\tau }\left( {{Y_{ij}} - \hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) } \right) =I\left( {Y_{ij}}-{\hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) }\le 0 \right) - \tau \) being a discontinuous function, then \(R\left( {\beta } ^{(r)} \right) = \sum _{i = 1}^n { {{\varvec{H}}_i^T {\varvec{\varLambda }} _i{{\varvec{S}}_{i}}\left( {\beta } \right) } } \). Let \(\bar{R}\left( {\beta } ^{(r)} \right) = \sum _{i = 1}^n { {{\varvec{H}}_i^T{\varvec{\varLambda }} _i{{\varvec{P}}_{i}}\left( {\beta } \right) } } \), where \({\varvec{P_{i}}}=(P_{i1},\ldots ,P_{im_i})^T\) with \(P_{ij}={P_{ij}}\left( {\beta } \right) =p\left( {{Y_{ij}} - \hat{g}\left( {{\varvec{X}}_{ij}^T{\beta } ;{\beta } } \right) \le 0} \right) - \tau .\) For any \({\beta }^{(r)}\) satisfying \(\left\| {\beta }^{(r)}-{\beta }_0^{(r)}\right\| \le C{n^{{{ - 1} / 2}}}\), we have

At first, the first term can be written as

where \({\varvec{H}}_i^T = \left( {{{\varvec{h}}_{i1}},\ldots ,{{\varvec{h}}_{i{m_i}}}} \right) \) and \({\varvec{h}}_{ij}\) is a \((p-1)\times 1\) vector. According to Lemma 3 in Jung (1996) and Lemma 1, we have \(\sup \left| \varUpsilon \right| = {o_p}\left( {\sqrt{n} } \right) \). Then, the first term becomes

By the law of large numbers (Pollard 1990), together with Lemma 1, the second term becomes

Therefore, \( { R\left( {\beta }^{(r)} \right) - R\left( {\beta }_0^{(r)} \right) } =\bar{R}\left( {\beta } ^{(r)} \right) + {o_p}\left( {\sqrt{n} } \right) \). By Taylor’s expansion of \(\bar{R}\left( {\beta } ^{(r)} \right) \), we can obtain

Because \(R\left( {\hat{{\beta }}}^{(r)} \right) = 0\) and \({\hat{{\beta }}}^{(r)}\) is in the \(n^{-1/2}\) neighborhood of \({\beta }_0^{(r)}\), we have

where \(R\left( {{\beta }_0 ^{(r)}} \right) =\sum _{i = 1}^n {{\varvec{J}}_{{{\beta }^{(r)}}}^T{\hat{{\varvec{X}}}}_i^T{\hat{{\varvec{G'}}}}\left( {{{\varvec{X}}_i}{{\beta } };{{\beta } }} \right) {\varvec{\varLambda }} _i{{\varvec{S}}_{i}}\left( {\beta } \right) } |_{{\beta } ^{(r)}={\beta }_0^{(r)}} \), \({{\varvec{S}}_{i}}\left( {\beta }_0 \right) = {\left( {{S_{i1}}\left( {\beta }_0 \right) ,\ldots ,{S_{i{m_i}}}\left( {\beta }_0 \right) } \right) ^T}\) with \({S_{ij}}\left( {\beta } _0 \right) = {I\left( {{Y_{ij}} - \hat{g}\left( {{\varvec{X}}_{ij}^T{{\beta } _0};{{\beta } _0}} \right) \le 0} \right) }-\tau \) and

Thus, we have

where \(S_{ij}^*\left( {{{\beta } _0}} \right) =I\left( {{Y_{ij}} - g_0\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \le 0} \right) - \tau \). Moreover, \(\Big | \hat{g}\left( {{\varvec{X}}_{ij}^T{{\beta } _0};{{\beta } _0}} \right) - g_0\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \Big | = {O_p}\left( {\sqrt{{{{N_n}} \big / n}} + N_n^{ - d}} \right) \) and \(E\left\{ {I\left( {{Y_{ij}} - {g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \le 0} \right) } \right\} = p\left( {{Y_{ij}} - {g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \le 0} \right) = \tau \), we have \(E\left( {S_{ij}}\left( {{{\beta } _0}} \right) \right) =o\left( 1\right) \). Therefore, we have \(E (\varvec{S}_i (\beta _0)) = o (1)\) and

Based on Lemma 1, together with \({\varvec{S}}_{i}\left( {{{\varvec{\beta }}_0}} \right) \) are the independent random variables, the multivariate central limit theorem implies that

By the law of large numbers and Lemma 1, we have

Then, combine (18)–(20) and use the Slutsky’s theorem; it follows that

According to the result of (21) and the multivariate delta method, we have

\(\square \)

Proof of Theorem 1

Using conditions (C4), (C6), and (C7), similar to Lemma 3 (k) of Horowitz (1998), we obtain \({n^{{{ - 1} / 2}}}\tilde{R}\left( {{\beta }_0 ^{(r)}} \right) = {n^{{{ - 1} / 2}}}R\left( {{\beta }_0 ^{(r)}} \right) + {o_p}\left( 1 \right) \). In order to prove the asymptotic normality of \(\tilde{{\beta }}^{(r)}\), we need to prove \({n^{ - 1}}\left\{ {\tilde{D}\left( {{\beta } _0^{(r)}} \right) - D\left( {{\beta } _0^{(r)}} \right) } \right\} \mathop \rightarrow \limits ^p 0\), where

It is easy to get that

where \({\varvec{h}}_{ij}\) is given in the proof of Lemma 3. Because

where \(\varsigma _t\) is between \( \hat{g}\left( {{\varvec{X}}_{ij}^T{{\beta } _0};{{\beta } _0}} \right) -{g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \) and \(ht+ \hat{g}\left( {{\varvec{X}}_{ij}^T{{\beta } _0};{{\beta } _0}} \right) -{g_0}\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) \). By condition (C4), \({f'_{ij}}\left( \cdot \right) \) is uniformly bounded; hence, there exists a constant M satisfying \(\left| {{f'_{ij}}\left( {{\varsigma _t}} \right) } \right| \le M\). Combining \(\left| {\hat{g}\left( {{\varvec{X}}_{ij}^T{{\beta } _0};{{\beta } _0}} \right) - g_0\left( {{\varvec{X}}_{ij}^T{{\beta } _0}} \right) } \right| = {O_p}\left( {\sqrt{{{{N_n}} \big / n}} + N_n^{ - r}} \right) \) with conditions (C4), (C6), and (C7), we have

So we can obtain \(\left| {{n^{ - 1}}\left\{ E\left\{ {\tilde{D}\left( {{{\beta } _0^{(r)}}} \right) } \right\} - D\left( {{{\beta } _0^{(r)}}} \right) \right\} } \right| \rightarrow 0\). By the strong law of large number, we have \({{n^{ - 1}}\tilde{D}\left( {{{\beta } _0^{(r)}}} \right) \rightarrow E\left( {{n^{ - 1}}\tilde{D}\left( {{{\beta } _0^{(r)}}} \right) } \right) }\). Using the triangle inequality, we have

Furthermore, by the Taylor series expansion of \(\tilde{R}\left( {{{\beta } ^{(r)}}} \right) \) around \({\beta }_0^{(r)}\), we have

where \({\beta } ^*\) lies between \({\beta }^{(r)}\) and \({\beta }_0^{(r)}\). Let \({\beta }^{(r)}={\tilde{{\beta }}}^{(r)}\), we have

because of \(\tilde{R}\left( {\tilde{{\beta }} }^{(r)} \right) =0\). Since \({\tilde{{\beta }}}^{(r)}\rightarrow {\beta }_0^{(r)}\), we can obtain \( {\beta }^*\rightarrow {\beta }_0^{(r)}\) and \({{\tilde{D}}^{ - 1}}\left( {{{\beta } ^*}} \right) \rightarrow {{\tilde{D}}^{ - 1}}\left( {{{\beta } _0^{(r)}}} \right) \). Since \({n^{ - 1}}\left\{ {\tilde{D}\left( {{{\beta } _0^{(r)}}} \right) - D\left( {{{\beta } _0^{(r)}}} \right) } \right\} \mathop \rightarrow \limits ^p 0\) and \({n^{{{ - 1} / 2}}}\tilde{R}\left( {{\beta } _0 ^{(r)}} \right) = {n^{{{ - 1} / 2}}}R\left( {{\beta } _0 ^{(r)}} \right) + {o_p}\left( 1 \right) \), we have

Next, similar to the proof of Lemma 3, we can complete the proof of Theorem 1. \(\square \)

Proof of Theorem 2

Since \(\left\| {{\tilde{{\beta }}}- {{\beta } _0}} \right\| ={O_p}\left( {{n^{{{ - 1} / 2}}}} \right) \), Theorem 2 (i) follows from this result and Lemma 1. Based on Lemma 2, we have

By the definition of \({\hat{g}\left( {u;{\beta } } \right) }\) and \(\check{g} \left( u;{\beta } \right) \), choosing \({\varvec{c}}_n={\varvec{B}}_q\left( {u } \right) \) yields

Thus, when \({\beta }\) is a known constant \({\beta }_0\) or estimated to the order \({{O_p}\left( {{n^{{{ - 1} / 2}}}} \right) }\), we can complete the proof of Theorem 2 (ii). \(\square \)

Proof of Theorem 3

Let \({\varvec{\varSigma }}_{\tau i}=\left( {{\sigma _{ijj'}}} \right) _{j,j' = 1}^{{m_i}}\) and \({\hat{{\varvec{\varSigma }}}}_{\tau i}=\left( {{\hat{\sigma } _{ijj'}}} \right) _{j,j' = 1}^{{m_i}}\) for \(i=1\ldots ,n\). Based on the modified Cholesky decomposition, the diagonal elements of \({\hat{{\varvec{\varSigma }}}}_{\tau i}\) are \({\hat{\sigma } _{ijj}} = \hat{d}_{_{\tau ,ij}}^2 + \sum _{k = 1}^{j - 1} {\hat{l}_{\tau ,ijk}^2\hat{d}_{{\tau ,ik}}^2} \) for \(j=1,\ldots ,m_i\), and the elements under the diagonal are \({\hat{\sigma } _{ijk}} = {{\hat{l}}_{\tau ,ijk}}\hat{d}_{_{\tau ,ik}}^2 + \sum _{k' = 1}^{k - 1} {{{\hat{l}}_{\tau ,ijk'}}{{\hat{l}}_{\tau ,ikk'}}\hat{d}_{{\tau ,ik'}}^2} \) for \(j=2,\ldots ,m_i, k=1,\ldots ,j-1\). Similarly, the diagonal elements of \({\varvec{\varSigma }}_{\tau i}\) are \({\sigma _{ijj}} = d_{{\tau ,ij}}^2 + \sum _{k = 1}^{j - 1} { l_{\tau ,ijk}^2 d_{{\tau ,ik}}^2} \) for \(j=1,\ldots ,m_i\), and the elements under the diagonal are \({\sigma _{ijk}} = {{ l}_{\tau ,ijk}} d_{_{\tau ,ik}}^2 + \sum _{k' = 1}^{k - 1} {{{ l}_{\tau ,ijk'}}{{l}_{\tau ,ikk'}} d_{{\tau ,ik'}}^2} \) for \(j=2,\ldots ,m_i, k=1,\ldots ,j-1\). Since \(\left( {{\hat{{\gamma }}}_\tau ^T,{\hat{{\varvec{\lambda }}}}_\tau ^T} \right) ^T\) are \(\sqrt{n} \)-consistent estimators, together with \(\hat{d} _{\tau ,ij}^2= \exp \left( {\varvec{z}}_{ij}^T{\hat{{\varvec{\lambda }}}}_\tau \right) \) and \(\hat{l}_{\tau ,ijk}= {\varvec{w}}_{ijk}^T{{\hat{{\gamma }}}_\tau }\) for \(k<j=2,\ldots ,m_i\), we have

Therefore, for \(j=1,\ldots ,m_i\), we have

and

for \(j=2,\ldots ,m_i, k=1,\ldots ,j-1\). This completes the proof. \(\square \)

Proof of Theorem 4

Similar to the proof of Lemma 3, we have

where \({{\bar{{\varvec{S}}}}_{i}}\left( {\beta } \right) = {\left( {{\bar{S}_{i1}}\left( {\beta } \right) ,\ldots ,{\bar{S}_{i{m_i}}}\left( {\beta } \right) } \right) ^T}\) with \({\bar{S}_{ij}}\left( {\beta } \right) =\psi _{h\tau }\left( Y_{ij} - \bar{g}\left( {{\varvec{X}}_{ij}^T{{\beta }};{{\beta } }} \right) \right) \), \(\bar{R}\left( {{\beta } _0^{(r)}} \right) =\sum _{i = 1}^n {{\varvec{J}}_{{{\beta } ^{(r)}}}^T{\hat{{\varvec{X}}}}_i^T{\bar{{\varvec{G}}'}}\left( {{{\varvec{X}}_i}{{\beta } };{{\beta } }} \right) {\varvec{\varLambda }} _i{\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1}{{\bar{{\varvec{S}}}}_{i}}\left( {\beta } \right) } \left| {_{{{\beta } ^{(r)}} = {\beta } _0^{(r)}}} \right. \) and

Using conditions (C4), (C6), and (C7), similar to Lemma 3 (k) of Horowitz (1998), we obtain

where \({{\varvec{S}}_{i}^*}\left( {\beta } \right) = {\left( {{S_{i1}^*}\left( {\beta } \right) ,\ldots ,{S_{i{m_i}}^*}\left( {\beta } \right) } \right) ^T}\) with \({S_{ij}^*}\left( {\beta } \right) =\psi _{\tau }\left( Y_{ij} - \bar{g}\left( {{\varvec{X}}_{ij}^T{{\beta } };{{\beta } }} \right) \right) \) and \(R^*\left( {{\beta } _0^{(r)}} \right) =\sum _{i = 1}^n {{\varvec{J}}_{{{\beta } ^{(r)}}}^T{\hat{{\varvec{X}}}}_i^T{\bar{{\varvec{G}}'}}\left( {{{\varvec{X}}_i}{{\beta } };{{\beta } }} \right) {\varvec{\varLambda }} _i{\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1}{{\varvec{S}}_{i}^*}\left( {\beta } \right) } \left| {_{{{\beta } ^{(r)}} = {\beta } _0^{(r)}}} \right. \). Similar to the proof of Theorem 1, we have

where \( D^*\left( {{{\beta } _0^{(r)}}} \right) =\sum _{i = 1}^n {\varvec{J}}_{{{\beta } ^{(r)}}}^T {\hat{{\varvec{X}}}}_i^T{\bar{{\varvec{G}}'}}\left( {{{\varvec{X}}_i}{{\beta } };{{\beta } }} \right) {\varvec{\varLambda }} _i{\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1}{{\varvec{\varLambda }} _i} {\bar{{\varvec{G}}'}}\left( {{{\varvec{X}}_i}{{\beta } };{{\beta } }} \right) {{\hat{{\varvec{X}}}}_i}{{\varvec{J}}_{{{\beta } ^{(r)}}}}\left| {_{{{\beta } ^{(r)}} = {\beta } _0^{(r)}}} \right. .\) By (22)–(24), we have

Similar to the proof of Lemma 1, we have

uniformly for any \(u\in [a,b]\). Because \({\varvec{S}}_{i}^*\left( {{{\beta } _0}} \right) \) are the independent random variables, together with (26), we have \(E\left( {{\varvec{S}}_{i}^*}\left( {{{\beta } _0}} \right) \right) =o\left( 1\right) \) and

By the use of the following property (see Lemma 2 in Li 2011), let \({\varvec{A}}_n\) be a sequence of random matrices converging to an invertible matrix \({\varvec{A}}\), and then \({\varvec{A}}_n^{ - 1} = {{\varvec{A}}^{ - 1}} - {{\varvec{A}}^{ - 1}}\left( {{{\varvec{A}}_n} - {\varvec{A}}} \right) {{\varvec{A}}^{ - 1}} + {O_p}\left( {{{\left\| {{{\varvec{A}}_n} -{\varvec{A}}} \right\| }^2}} \right) \). This together with Theorem 3, we have \({\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1} - {\varvec{\varSigma }} _{\tau i}^{ - 1} = {O_p}\left( {{n^{{{ - 1} / 2}}}} \right) \) uniformly for all i. By the law of large numbers, (26), and the consistency of \({\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1}\), we have

By the multivariate central limit theorem and the Slutsky’s theorem, together with (25), we can complete the proof. \(\square \)

Proof of Theorem 5

Similar to the proof of Theorem 2, together with the consistency \({\hat{{\varvec{\varSigma }}}} _{\tau i}^{ - 1} - {\varvec{\varSigma }} _{\tau i}^{ - 1} = {O_p}\left( {{n^{{{ - 1} / 2}}}} \right) \) of \({\varvec{ \varSigma }} _{\tau i}^{ - 1}\), when \({\beta }\) is a known constant \({\beta }_0\) or estimated to the order \({{O_p}\left( {{n^{{{ - 1} / 2}}}} \right) }\), we can complete the proof of Theorem 5. \(\square \)

About this article

Cite this article

Lv, J., Guo, C. Quantile estimations via modified Cholesky decomposition for longitudinal single-index models. Ann Inst Stat Math 71, 1163–1199 (2019). https://doi.org/10.1007/s10463-018-0673-x

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10463-018-0673-x