Abstract

Longitudinal data are frequently analyzed using normal mixed effects models. Moreover, the traditional estimation methods are based on mean regression, which leads to non-robust parameter estimation under non-normal error distribution. However, at least in principle, quantile regression (QR) is more robust in the presence of outliers/influential observations and misspecification of the error distributions when compared to the conventional mean regression approach. In this context, this paper develops a likelihood-based approach for estimating QR models with correlated continuous longitudinal data using the asymmetric Laplace distribution. Our approach relies on the stochastic approximation of the EM algorithm (SAEM algorithm), obtaining maximum likelihood estimates of the fixed effects and variance components in the case of nonlinear mixed effects (NLME) models. We evaluate the finite sample performance of the SAEM algorithm and asymptotic properties of the ML estimates through simulation experiments. Moreover, two real life datasets are used to illustrate our proposed method using the \(\texttt {qrNLMM}\) package from \(\texttt {R}\).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Nonlinear mixed-effects (NLME) models are frequently used to analyze grouped, clustered, longitudinal and multilevel data, among other situations. This is because this type of model allows dealing with nonlinear relationships between the observed response and the covariates and/or random effects, and at the same time, takes into account within- and between-subject correlations in the statistical modeling of the observed data. In general, NLME models arise as a consequence of the mathematical modeling of biological, chemical and physical phenomena, using known families of nonlinear functions with attractive properties such as the presence of asymptote, uniqueness of maximum value, monotonicity and positive range (Pinheiro and Bates 2000; Davidian and Giltinan 2003; Wu 2010). Although most of the current NLME model research is focused on estimating of the conditional mean of the response given some covariates, sometimes the estimation of this quantity lacks meaning, especially when the conditional distribution of the response (given the covariates) is asymmetric, multimodal or simply is severely affected by atypical observations (outliers). In this case, the use of conditional quantile regression (QR) methods (Koenker 2004, 2005) becomes an appropriate strategy for describing the conditional distribution of the outcome variable given the covariates. One of the advantages of using QR methods is that they do not impose any distribution assumption on the error term, except that this term must have a conditional quantile equal to zero. Moreover, and from a practical viewpoint, standard QR methods are already implemented in statistical software such \(\texttt {R}\) in its package \(\texttt {quantreg}()\) (R Core Team 2017).

QR methods were initially developed under a univariate framework (see for example Galarza et al. 2017) but, nowadays, the abundance of correlated data in real-life applications has generated the study of several extensions of QR methods based on mixed models. Some of these extensions consider the distribution-free approach (Lipsitz et al. 1997; Galvao and Montes-Rojas 2010; Galvao 2011; Fu and Wang 2012; Andriyana et al. 2016), while others consider the traditional likelihood-based approach using the asymmetric Laplace (AL) distribution (see for example, Geraci and Bottai 2007; Yuan and Yin 2010; Geraci and Bottai 2014) and Aghamohammadi and Mohammadi (2017). In this context, Geraci and Bottai (2007) proposed a Monte Carlo EM (MCEM) algorithm for the QR model considering continuous responses with a subject-specific univariate random intercept. Recently, Geraci and Bottai (2014) extended their previous work by considering a general QR linear mixed effects (QR-LME) model with multiple random effects. In that work, the authors considered the estimation of the fixed effects and the covariance components through an efficient combination of Gaussian quadrature approximations and nonsmooth optimization algorithms. On the other hand, Yuan and Yin (2010) extend the QR model proposed by Geraci and Bottai (2007) to the case of linear mixed effects (LME) models for longitudinal measurements with missing data. In the nonlinear case, Wang (2012) considered a QR-NLME model from a Bayesian perspective, showing that this model may be a better alternative than the mean regression estimation in the presence of asymmetric and multimodal data.

Although some results based on QR-NLME models have recently appeared in the statistical literature, to the best of our knowledge, there are no studies and contributions considering an exact inference for QR-NLME models from a likelihood-based perspective. For that reason, the aim of this paper is to propose a QR-NLME model using the AL distribution and considering a full likelihood-based inference via the implementation of the stochastic version of the EM algorithm, a.k.a. SAEM algorithm, proposed by Delyon et al. (1999) for the maximum likelihood (ML) estimation. The SAEM algorithm has been proved to be more computationally efficient algorithm than the classic MCEM algorithm due to the recycling of simulations from one iteration to the next in the smoothing phase. Moreover, as pointed out by Meza et al. (2012), the SAEM algorithm, unlike the MCEM, converges even with a small simulation size. In this context, Kuhn and Lavielle (2005) showed that the SAEM algorithm is very efficient for computing the ML estimates in mixed effects models. It is important to stress that, the empirical results show that the ML estimates based on our proposed SAEM algorithm provide good asymptotic properties. Moreover, the application of our method is conducted by using our recent \(\texttt {R}\) package, called, \(\texttt {qrNLMM()}\).

The paper is organized as follows. Section 2 presents some preliminary results. Particularly, we present some properties of the AL distribution and an outline of the SAEM algorithm. Section 3 provides the MCEM and SAEM algorithms for a general NLME model, while Sect. 4 outlines the likelihood-based estimation and standard errors of the parameter estimates under the proposed model. Section 5 presents the results of simulation studies conducted to analyze the performance of the proposed method with respect to the asymptotic properties of the ML estimates and the consequences of the misspecification of the random effects distribution. The analysis of two longitudinal datasets is presented in Sect. 6. Finally, Sect. 7 closes the paper by presenting a brief plan of our future work.

2 Preliminaries

In this section, we provide some useful results related to the AL distribution. We also present some background on the SAEM algorithm for ML estimation.

2.1 AL distribution

A random variable Y has an AL distribution (see Kozubowski and Nadarajah 2010) with location parameter \(\mu \), scale parameter \(\sigma >0\) and skewness parameter \(p\in (0,1)\), if its probability density function (pdf) is given by

where \(\rho _p(\cdot )\) is the check (or loss) function defined by \(\rho _p(u)=u(p-\mathbb {I}\{u<0\})\), with \(\mathbb {I}\{\cdot \}\) the indicator function. This distribution is denoted by \(\text{ AL }(\mu ,\sigma ,p)\).

The random variable \(Y \sim \text{ AL }(\mu ,\sigma ,p)\) can be represented hierarchically as

where \(\text{ Exp }(\sigma )\) denotes the exponential distribution with mean \(\sigma , \vartheta _p\) and \(\tau ^2_p\) are constants such that \(\vartheta _p=\frac{1-2p}{p(1-p)}\) and \(\tau ^2_p=\frac{2}{p(1-p)}\). Note that \(U\mid Y\sim \text{ GIG } (1/2,\delta ,\gamma )\), where \(\text{ GIG }(\nu , a, b)\) denotes the Generalized Inverse Gaussian (GIG) distribution (Barndorff-Nielsen and Shephard 2001) with pdf given by

with \(u>0, \nu \in \mathbb {R}, a,b>0,\) where \(K_{\nu }(\cdot )\) is the modified Bessel function of the third kind. The moments of the GIG distribution are given by

2.2 The SAEM algorithm

The SAEM algorithm proposed by Delyon et al. (1999) replaces the E-step of the EM algorithm (Dempster et al. 1977) by a stochastic approximation procedure. Besides having good theoretical properties, the SAEM algorithm estimates the population parameters accurately, converging to the global maxima of the ML estimates under quite general conditions (Allassonnière et al. 2010; Delyon et al. 1999; Kuhn and Lavielle 2004).

Let \({\varvec{\theta }}\) be the vector of the parameter of interest, \(\mathbf {y}\) be the observed data and \(\mathbf {q}\) be the missing data and/or latent variables. At each iteration, the SAEM algorithm successively simulates missing data/latent variables using their conditional distributions, updating the model parameters. Thus, at iteration k, the SAEM algorithm proceeds as follows:

E-Step:

Simulation: draw a sample \((\mathbf {q}^{(\ell ,k)}),\)\(\ell =1,\ldots ,m\) from the conditional distribution \(f\left( \mathbf {q}\mid {\varvec{\theta }}^{(k-1)},\mathbf {y}\right) \).

Stochastic approximation: update \( Q \left( {\varvec{\theta }}\mid \widehat{{\varvec{\theta }}}^{(k)}\right) \) as

$$\begin{aligned} Q \left( {\varvec{\theta }}\mid \widehat{{\varvec{\theta }}}^{(k-1)}\right) +\delta _{k}\left[ \frac{1}{m}\sum _{\ell =1}^{m}\ell _{c}\left( {\varvec{\theta }};\mathbf {y},\mathbf {q}^{(\ell ,k)}\right) - Q \left( {\varvec{\theta }}\mid \widehat{{\varvec{\theta }}}^{(k-1)}\right) \right] , \end{aligned}$$where m is the number of simulations and \(\delta _{k}\) is a smoothness parameter (Kuhn and Lavielle 2004), i.e., a decreasing sequence of positive numbers such that \(\sum _{k=1}^{\infty }\delta _{k}=\infty \) and \(\sum _{k=1}^{\infty }\delta _{k}^{2}<\infty \).

M-Step:

Maximization: update \(\widehat{{\varvec{\theta }}}^{(k)}\) as \(\widehat{{\varvec{\theta }}}^{(k+1)}=argmax_{{\varvec{\theta }}} Q ({\varvec{\theta }}\mid \widehat{{\varvec{\theta }}}^{(k)}).\)

Note that, although the E-Step is similar in the SAEM and MCEM algorithms, a small number of simulations m (for practical situations, \(m\le 20\) is suggested) is necessary in the first one. This is possible because, unlike the traditional EM algorithm and its variants, the SAEM algorithm uses not only the current simulation of the missing data/latent variables at the iteration k but some or all the previous simulations. In fact, this ‘memory’ property is set by the smoothing parameter \(\delta _{k}\). In our case, we suggested the following choice of the smoothing parameter given as

where W is the maximum number of iterations, and c is a cutoff point (\(0\le c\le 1\)) which determines the percentage of initial iterations with no memory.

3 The QR nonlinear mixed model

3.1 The model

In this section, we propose the following general mixed-effects model. Let \(\mathbf {y}_{i}=\left( y_{i1},\ldots ,y_{in_{i}}\right) ^{\top }\) be the continuous response of subject i and \({\varvec{\eta }}=(\eta ({\varvec{\phi }}_{i},x_{i1})\)\(,\ldots ,\eta ({\varvec{\phi }}_{i},x_{in_{i}}))^{\top }\) a nonlinear differentiable function of vector-valued random parameters \(\phi _{i}\) of dimension r. Moreover, let \(\mathbf {x}_{i}\) be a matrix of covariates of dimension \(n_{i}\times r\). The NLME model is defined as

where \(\mathbf {A}_{i}\) and \(\mathbf {B}_{i}\) are design matrices (fixed) of dimensions \(r\times d\) and \(r\times q\), respectively, possibly depending on elements of \(\mathbf {x}_{i}\) and incorporating time varying covariates in fixed or random effects; \({\varvec{\beta }}_{p}\) is the regression coefficient corresponding to the p-th quantile, \(\mathbf {b}_{i}=(b_{i1},\ldots , b_{iq})^{{\scriptscriptstyle \top }}\) is a q-dimensional random effects vector associated with the i-th subject and \({\varvec{\epsilon }}_{i}\) is the independent and identically distributed vector of random errors. We define the p-th quantile function of the response \(y_{ij}\) as

where \(Q_{p}\) denotes the inverse of the unknown distribution function F. In this setting, the random effects \(\mathbf {b}_{i}\) are independent and identically distributed (i.i.d) as \(\text {N}_{q}(\mathbf {0},{\varvec{\varPsi }}),\) where the dispersion matrix \({\varvec{\varPsi }}={\varvec{\varPsi }}({\varvec{\alpha }})\) depends on unknown and reduced parameters \({\varvec{\alpha }}\). The error terms are distributed as \(\epsilon _{ij}{\mathop {\sim }\limits ^{\mathrm{\scriptscriptstyle {iid}}}}\text {AL}(0,\sigma ,p)\), being uncorrelated with the random effects. Then, conditionally on \(\mathbf {b}_i\), the observed responses for subject i, i.e., \(y_{ij}\) for \(j=1,\ldots ,n_i\), are independent following an AL distribution with location, scale and skewness parameters given by \(\eta (\mathbf {A}_{i}{\varvec{\beta }}_{p}+\mathbf {B}_{i}\mathbf {b}_{i},\mathbf {x}_{ij}), \sigma \) and p, respectively.

The main reason for considering the AL distribution in our approach is that this distribution proves useful as a unifying bridge between the likelihood inference and the inference about QR estimation and seems to be very promising within those models, since it clearly represents a suitable error law for the least absolute estimator (Geraci and Bottai 2007). In addition, and from the Bayesian standpoint, Yu and Moyeed (2001) have showed that irrespective of the original distribution of the data, the use of the AL distribution is a very natural and effective way to model QR. Recently, Sriram et al. (2013) studied the posterior consistency of model parameters for a QR model when the AL distribution is misspecified, concluding that the use of the AL distribution works for a variety of possibilities of the true likelihood, including location models, scale models and location-scale models.

3.2 The MCEM algorithm

In this section we develop a MCEM algorithm for ML estimation of the parameters in the QR-NLME model. This model has a flexible hierarchical representation, which is useful for deriving interesting theoretical properties. From (1), we have that the QR-NLME model defined in (3)–(4), can be represented as

for \(i=1,\ldots ,n\), where \(\vartheta _{p}\) and \(\tau _{p}^{2}\) are given as in (1) and \({\varvec{D}}_{i}\) represents a diagonal matrix that contains the vector of latent variables \(\mathbf {u}_{i}=\left( u_{i1},\ldots ,u_{in_{i}}\right) ^{{\scriptscriptstyle \top }}\). Let \(\mathbf {y}_{i_{{\scriptstyle c}}}=\left( \mathbf {y}_{i}^{{\scriptscriptstyle \top }},\mathbf {b}_{i}^{{\scriptscriptstyle \top }},\mathbf {u}_{i}^{{\scriptscriptstyle \top }}\right) ^{{\scriptscriptstyle \top }}\) and let \({\varvec{\theta }}^{ (k) }=({\varvec{\beta }}_{{\scriptscriptstyle p},}^{{\scriptscriptstyle (k)\top }},\sigma ^{{\scriptscriptstyle (k)}}, {\varvec{\alpha }}^{{\scriptscriptstyle (k) \top }})^{{\scriptscriptstyle \top }}\), the estimate of \({\varvec{\theta }}\) at the k-th iteration. For simplicity, we denote \({\varvec{\eta }}_i={\varvec{\eta }}(\mathbf {A}_{i}{\varvec{\beta }}_{p}+\mathbf {B}_{i}\mathbf {b}_{i},\mathbf {x}_{i})\). Since \(\mathbf {b}_{i}\) and \(\mathbf {u}_{i}\) are independent for all \(i=1,\ldots ,n\), it follows from (1) that the complete-data log-likelihood function is given by \(\ell _{c}({{\varvec{\theta }}};\mathbf {y}_{c})=\sum _{i=1}^{n}\ell _{c}({\varvec{\theta }};\mathbf {y}_{i_{{\scriptstyle c}}}),\) where

where K denotes a constant that does not depend on the parameter of interest and \(\mathbf 1 _{p}\) is a vector of ones of dimension p. Given the current estimate \({\varvec{\theta }}={\varvec{\theta }}^{(k)}\), the E-step calculates the function \( Q \left( {{\varvec{\theta }}}\mid \widehat{{{\varvec{\theta }}}}^{(k)}\right) =\sum _{i=1}^{n} Q_{i} \left( {{\varvec{\theta }}}\mid \widehat{{\varvec{\theta }}}^{(k)}\right) ,\) where

where \(\text {tr}(\mathbf {A})\) denotes the trace of matrix \(\mathbf {A}\). The calculation of this function requires the expressions \(\widehat{{\varvec{\eta }}_{i}}^{{\scriptscriptstyle (k)}} = \mathrm {E} \left\{ {\varvec{\eta }}_{i}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} ,\)\(\widehat{\mathbf {u}_{i}}^{{\scriptscriptstyle (k)}} = \mathrm {E} \left\{ \mathbf {u}_{i}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} , \widehat{(\mathbf {b}\mathbf {b}^{{\scriptscriptstyle \top }})_{i}}^{{\scriptscriptstyle (k)}} = \mathrm {E} \left\{ \mathbf {b}_{i}\mathbf {b}_{i}^{{\scriptscriptstyle \top }}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} ,\)\(\widehat{{\varvec{D}}_{i}^{{\scriptscriptstyle -1}}}^{(k)} = \mathrm {E} \left\{ {{\varvec{D}}_{i}^{{\scriptscriptstyle -1}}}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} ,\)\((\widehat{{\varvec{D}}^{{\scriptscriptstyle -1}}{\varvec{\eta }}})_{i}^{(k)} = \mathrm {E} \left\{ {{\varvec{D}}_{i}^{{\scriptscriptstyle -1}}{\varvec{\eta }}_{i}}^{(k)}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} ,\)\((\widehat{{\varvec{\eta }}^{{\scriptscriptstyle \top }}{\varvec{D}}^{{\scriptscriptstyle -1}}{\varvec{\eta }}})_{i}^{(k)} = \mathrm {E} \left\{ {{\varvec{\eta }}_{i}^{{\scriptscriptstyle \top }}{\varvec{D}}_{i}^{{\scriptscriptstyle -1}}{\varvec{\eta }}_{i}}\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}\right\} ,\) which do not have closed forms. Since the joint distribution of the latent variables \(\left( \mathbf {b}_{i}^{{\scriptscriptstyle (k)}},\mathbf {u}_{i}^{{\scriptscriptstyle (k)}}\right) \) is unknown and the conditional expectations cannot be computed analytically, for any function \(g(\cdot )\), the MCEM algorithm approximates these expectations using a Monte Carlo approximation given by

which depends of the simulations of the two latent variables \(\mathbf {b}_{i}^{{\scriptscriptstyle (k)}}\) and \(\mathbf {u}_{i}^{{\scriptscriptstyle (k)}}\) from the conditional density \(f(\mathbf {b}_{i},\mathbf {u}_{i}\mid {\varvec{\theta }}^{(k)},\mathbf {y}_{i})\). Since \( \mathrm {E} [g(\mathbf {b}_{i},\mathbf {u}_{i})\mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}] = \mathrm {E} [ \mathrm {E} [g(\mathbf {b}_{i},\mathbf {u}_{i})\mid {{\varvec{\theta }}}^{{\scriptscriptstyle (k)}},\mathbf {b}_{i},\mathbf {y}_{i}]\mid \mathbf {y}_{i}]\), the expected value given in (6) can be more accurately approximated by

where \(\mathbf {b}^{{\scriptscriptstyle (\ell ,k)}}_i\) is generated from \(f(\mathbf {b}_{i}\mid {\varvec{\theta }}^{(k)},\mathbf {y}_{i})\). Note that this approximation is more accurate since it only depends on one Monte Carlo approximation, instead of two (as is needed in (6)).

To generate random samples from the full conditional distribution \(f(\mathbf {u}_{i}\mid \mathbf {y}_{i},\mathbf {b}_{i})\), first note that the vector \(\mathbf {u}_{i}\mid \mathbf {y}_{i},\mathbf {b}_{i}\) can be written as \(\mathbf {u}_{i}\mid \mathbf {y}_{i},\mathbf {b}_{i}=[ u_{i1}\mid y_{i1},\mathbf {b}_{i},\)\( u_{i2}\mid y_{i2},\mathbf {b}_{i}, \cdots ,u_{in_{i}}\mid y_{in_{i}},\mathbf {b}_{i}]^{{\scriptscriptstyle {\top }}}\), since \( u_{ij} \mid y_{ij},\mathbf {b}_{i}\) is independent of \(u_{ik}\mid y_{ik},\mathbf {b}_{i}\), for all \(j,k=1,2,\ldots ,n_{i}\) and \(j\ne k\). Thus, the distribution of \(f(u_{ij}\mid y_{ij},\mathbf {b}_{i})\) is proportional to

which, from Sect. 2.1, leads to \(u_{ij}\mid y_{ij},\mathbf {b}_{i}\sim \text {GIG}(\frac{1}{2},\chi _{ij},\psi )\), where \(\chi _{ij}\) and \(\psi \) are given by \( \chi _{ij}=|y_{ij}-\eta _{ij}|/{\displaystyle \tau _{p}\sqrt{\sigma }}\) and \({\displaystyle \psi =\tau _{p}/2\sqrt{\sigma }},\) and \(\eta _{ij}\) is the component j of the vector \({\varvec{\eta }}_i.\) From (2), and after generating samples from \(f(\mathbf {b}_{i}\mid {\varvec{\theta }}^{(k)},\mathbf {y}_{i})\) (see Sect. 3.4), the conditional expectation \( \mathrm {E} [\cdot \mid {{\varvec{\theta }}},\mathbf {b}_{i},\mathbf {y}_{i}]\) in (7) can be computed analytically. Finally, the proposed MCEM algorithm to estimate the parameters of the QR-NLME model can be summarized as follows:

MC E-Step: Given \({\varvec{\theta }}={\varvec{\theta }}^{(k)}\), for \(i=1,\ldots ,n\);

Simulation step: For \(\ell =1,\ldots ,m,\) generate \(\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}\) from \(f(\mathbf {b}_{i}\mid {\varvec{\theta }}^{(k)},\mathbf {y}_{i})\), as described next in Sect. 3.4.

Monte Carlo approximation: Using (2) and \(\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}\), for \(\ell =1,\ldots ,m,\) evaluate \({\mathrm {E}}[g\left( \mathbf {b}_{i},\mathbf {u}_{i}\right) \mid {\varvec{\theta }}^{{\scriptscriptstyle (k)}},\mathbf {y}_{i}] \) using the approximation proposed in (7).

M-step: Update \(\widehat{{\varvec{\theta }}^{(k)}}\) by maximising \( Q ({\varvec{\theta }}\mid \widehat{{\varvec{\theta }}^{(k)}})\approx \frac{1}{m}\sum _{l=1}^{m}\sum _{i=1}^{n}\ell _{c}({\varvec{\theta }};\mathbf {y}_{i},\mathbf {b}_{i}^{{\scriptscriptstyle (l,k)}},\mathbf {u}_{i})\) over \({\varvec{\theta }}\), leading the following estimates:

where \(\mathbf J _{i}=\partial {\varvec{\eta }}({\varvec{\beta }}_{ p},\mathbf {b}_{i})/\partial {\varvec{\beta }}_{ p}^{{\scriptscriptstyle \top }}, N=\sum _{i=1}^{n}n_{i}\) and expressions \(\mathcal {E}(\mathbf {u}_{i})^{{\scriptscriptstyle (\ell ,k)}}\) and \(\mathcal {E}({\varvec{D}}_{i}^{{\scriptscriptstyle -1}})^{{\scriptscriptstyle (\ell ,k)}}\) are defined in Appendix A2.

Note that, for the MC E-step, we need to generate \(\mathbf {b}_{i}^{(\ell ,k)}, \ell =1,\ldots ,m\), from \(f(\mathbf {b}_{i}\mid {\varvec{\theta }}^{(k)},\mathbf {y}_{i})\) (see Sect. 3.4), where m is the number of Monte Carlo simulations to be used (a number suggested to be large enough).

3.3 The SAEM algorithm

As was mentioned in Sect. 2.2, the SAEM circumvents the problem of simulating a large number of latent values at each iteration, leading to a faster and efficient solution to the MCEM algorithm. In summary, the SAEM algorithm proceeds as follows:

E-step: Given \({\varvec{\theta }}={\varvec{\theta }}^{(k)}\) for \(i=1,\ldots ,n\);

Stochastic approximation: Update the Monte Carlo approximations using stochastic ones, given by

$$\begin{aligned} S_{1,i}^{(k)}= & {} S_{1,i}^{(k-1)}+\delta _{k}\left[ \frac{1}{m}\sum _{\ell =1}^{m}{\mathbf {J}_{i}^{{\scriptscriptstyle {(k)}^{\top }}}\mathcal {E}({\varvec{D}}_{i}^{{\scriptscriptstyle -1}})^{{\scriptscriptstyle (\ell ,k)}}\mathbf {J}_{i}^{(k)}}-S_{1,i}^{(k-1)}\right] ,\\ S_{2,i}^{(k)}= & {} S_{2,i}^{(k-1)}+\delta _{k}\left[ \frac{1}{m}\sum _{\ell =1}^{m}\left[ 2\mathbf {J}_{i}^{{\scriptscriptstyle {(k)}^{\top }}}\mathcal {E}({\varvec{D}}_{i}^{{\scriptscriptstyle -1}})^{{\scriptscriptstyle (\ell ,k)}}\left[ \mathbf {y}_{i}-{\varvec{\eta }}(\widehat{{\varvec{\beta }}_{ p}}^{{\scriptscriptstyle (k)}},\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}})\right. \right. \right. \\&\left. -\;\vartheta _{p}\mathcal {E}(\mathbf {u}_{i})^{{\scriptscriptstyle (\ell ,k)}}\Big ]\Big ] -S_{2,i}^{(k-1)}\right] ,\\ S_{3,i}^{(k)}= & {} S_{3,i}^{(k-1)}+\delta _{k}\left[ \frac{1}{m}\sum _{\ell =1}^{m}\left[ (\mathbf {y}_{i}-{\varvec{\eta }}(\widehat{{\varvec{\beta }}_{ p}}^{{\scriptscriptstyle (k+1)}},\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}))^{\top }\mathcal {E}({\varvec{D}}^{{\scriptscriptstyle -1}})^{{\scriptscriptstyle (\ell ,k)}}\right. \right. \\&\left. \left. (\mathbf {y}_{i}-{\varvec{\eta }}(\widehat{{\varvec{\beta }}_{ p}}^{{\scriptscriptstyle (k+1)}},\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}))-2\vartheta _{p}(\mathbf {y}_{i}-{\varvec{\eta }}(\widehat{{\varvec{\beta }}_{ p}}^{{\scriptscriptstyle (k+1)}},\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}))^{\top }\mathbf {1}_{n_{i}}\right. \right. \\&\left. \left. +\;\frac{\tau _{p}^{4}}{4}\mathcal {E}(\mathbf {u}_{i})^{{\scriptscriptstyle (\ell ,k)\top }}\mathbf {1}_{n_{i}}\right] -S_{3,i}^{(k-1)}\right] ,\\ S_{4,i}^{(k)}= & {} S_{4,i}^{(k-1)}+\delta _{k}\left[ \frac{1}{m}\sum _{\ell =1}^{m} \Big [\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)}}\mathbf {b}_{i}^{{\scriptscriptstyle (\ell ,k)^{\top }}}\Big ]-S_{4,i}^{(k-1)}\right] . \end{aligned}$$

M-step: Update \(\widehat{{\varvec{\theta }}^{(k)}}\) by maximizing \( Q \left( {\varvec{\theta }}\mid \widehat{{\varvec{\theta }}^{(k)}}\right) \) over \({\varvec{\theta }}\), which leads to the following expressions:

provided that the inverse of the matrix \(S_{1,i}^{(k)}\) exists. Given a set of suitable initial values \(\widehat{{\varvec{\theta }}}^{(0)}\) (a simple strategy for specifying \(\widehat{{\varvec{\theta }}}^{(0)}\) is described in Appendix A1), the SAEM iterates until convergence at iteration k, if \(\max _{i}\left\{ \dfrac{|\widehat{\theta }_{i}^{(k+1)}- \widehat{\theta }_{i}^{(k)}|}{|\widehat{\theta }_{i}^{(k)}|+\delta _{1}}\right\} <\delta _{2}\), where \(\delta _1\) and \(\delta _2\) are pre-established small values. As suggested on page 269 in Searle et al. (1992), we used \(\delta _1=0.001\) and \(\delta _2=0.0001\). In addition, we also used a second convergence criterion defined by \(\max _{i}\left\{ \frac{|\widehat{\theta }_{i}^{(k+1)}-\widehat{\theta }_{i}^{(k)}|}{\sqrt{\mathrm {{\widehat{var}}(\theta }_{i}^{(k)}})+\delta _{1}}\right\} <\delta _{2}\), as proposed by Booth and Hobert (1999).

3.4 Simulation of random effects

In order to generate samples from \(f(\mathbf {b}_{i}\mid \mathbf {y}_{i})\), we use the Metropolis-Hastings (MH) algorithm (Metropolis et al. 1953; Hastings 1970), noting that the conditional distribution \(f\left( \mathbf {b}_{i}\mid \mathbf {y}_{i}\right) \) can be represented as \( f\left( \mathbf {b}_{i}\mid \mathbf {y}_{i}\right) \propto f\left( \mathbf {y}_{i}\mid \mathbf {b}_{i}\right) f\left( \mathbf {b}_{i}\right) , \) where \(\mathbf {b}_{i}\sim \text {N}_{q}(\mathbf 0 ,{\varvec{\varPsi }})\) and \(f\left( \mathbf {y}_{i}\mid \mathbf {b}_{i}\right) =\prod _{j=1}^{n_{i}}f(y_{ij}\mid \mathbf {b}_{i})\), with \(y_{ij}\mid \mathbf {b}_{i}\sim AL\big (\eta (\mathbf {A}_{i}{\varvec{\beta }}_{p}+\mathbf {B}_{i}\mathbf {b}_{i},\mathbf {x}_{ij}),\)\(\sigma ,p\big )\). Since this function is a product of two distributions (with support in \(\mathbb {R}^q\)), a suitable choice for the proposed density is a multivariate normal distribution with mean and variance-covariance matrix given by \(\widehat{{\varvec{\mu }}}_{\mathbf {b}_{i}}= \mathrm {E} (\mathbf {b}_i^{\scriptscriptstyle {(k-1)}}\mid \mathbf {y}_i)\) and \(\widehat{{\varvec{\varSigma }}}_{\mathbf {b}_{i}}= \mathrm {Var} (\mathbf {b}_i^{\scriptscriptstyle {(k-1)}}\mid \mathbf {y}_i)\) respectively. These quantities are obtained from the last iteration of the SAEM algorithm. Note that this candidate generates a high acceptance rate, making the algorithm fast.

4 Estimation of the likelihood and standard errors

4.1 Likelihood estimation

Given an observed data, the likelihood function \(\ell _{o}({\varvec{\theta }}\mid \mathbf {y})\) of the model defined in (3)-(4) is given by

where the integral can be expressed as an expectation with respect to \(\mathbf {b}_{i}\), i.e., \(\text{ E }[f(\mathbf {y}_{i}\mid \mathbf {b}_{i};{\varvec{\theta }})]\). The evaluation of this integral is not possible analytically, so it is often replaced by its Monte Carlo approximation involving a large number of simulations. However, alternative importance sampling (IS) procedures might require a smaller number of simulations than the typical Monte Carlo procedure. Following Meza et al. (2012), we compute this integral using an IS scheme for any continuous distribution \(\widehat{f}(\mathbf {b}_{i};{\varvec{\theta }})\) of \(\mathbf{b _i}\), having the same support as \(f(\mathbf {b}_{i};{\theta })\). Re-writing (8) as

we can express it as an expectation with respect to \(\mathbf {b}_{i}^{*}\), where \(\mathbf {b}_{i}^{*}\sim \widehat{f}(\mathbf {b}_{i}^{*};{\varvec{\theta }})\). Thus, the likelihood function can be approximated as

where \(\{\mathbf {b}_{i}^{*(\ell )}\}, l=1,\ldots ,m\), is a Monte Carlo sample from \(\widehat{f}(\mathbf {b}_{i}^{*};{\varvec{\theta }})\), and \(f(\mathbf {y}_{i}\mid \mathbf {b}_{i}^{*(\ell )};{\varvec{\theta }})\) is expressed as \(\prod _{j=1}^{n_{i}}f(y_{ij}\mid \mathbf {b}_{i}^{*(\ell )};{{\varvec{\theta }}})\) due to the conditional independence assumption. An efficient choice for \(\widehat{f}(\mathbf {b}_{i}^{*\scriptscriptstyle (\ell )};\theta )\) is \(f(\mathbf {b}_i\mid \mathbf{y}_i)\). Therefore, we use the same proposed distribution discussed in Sect. 3.4, generating \(\mathbf {b}_{i}^{*(\ell )}\sim \text {N}_{q}(\widehat{{\varvec{\mu }}}_{\mathbf {b}_{i}},\widehat{{\varvec{\varSigma }}}_{\mathbf {b}_{i}})\).

4.2 Standard error approximation

At the kth iteration, the empirical score function \(\text {s}(\mathbf {y}_i\mid {{\varvec{\theta }}})^{(k)}\) for the i-th subject can be computed as

where \(\mathbf{q }^{(\ell ,k)}, \ell =1,\ldots ,m,\) are the simulated values of the conditional distribution \(f(\cdot \mid {\varvec{\theta }}^{(k-1)},\mathbf {y}_i)\). Using Louis’s method (Louis 1982), the observed information matrix at iteration k, can be approximated as \(\mathbf I _{e}({\varvec{\theta }}\mid \mathbf {y})^{(k)}= \sum ^n_{i=1}\mathbf s (\mathbf {y}_i\mid {{\varvec{\theta }}})^{(k)}{} \mathbf s ^{\scriptscriptstyle \top }(\mathbf {y}_i\mid {{\varvec{\theta }}})^{(k)}\). Expressions for the elements of the score vector are given in Appendix A3.

5 Simulated data

In order to examine the performance of the proposed method, we conduct some simulation studies. The first simulation study shows that the ML estimates based on the SAEM algorithm provide good asymptotic properties. The second study investigates the consequences on population inferences when the normality assumption of the random effects is not considered. To do that, we used a heavy tailed distribution for the random effect term, testing the robustness of the proposed method in terms of the parameter estimation.

5.1 Finite sample properties

As in Pinheiro and Bates (1995), we performed the first simulation study with the following three parameter nonlinear growth-curve logistic model:

where \(t_{ij}=\) 100, 267, 433, 600, 767, 933, 1100, 1267, 1433, 1600 for all i. The goal is to estimate the fixed effects parameters \(\beta \)’s for a grid of percentiles \(p=\{0.50,0.75,0.95\}\). A Gaussian random effect \(b_{1i},\) for \(i=1,\ldots ,n\) is added to the first growth parameter \(\beta _1\) and its effect on the growth-curve is shown in Fig. 1.

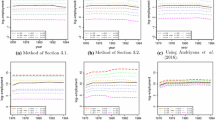

Parameter interpretation of this model is discussed in Sect. 6. The random effect \(b_{1i}\) and the error term \({\varvec{\epsilon }}_i=(\epsilon _{i1}\ldots ,\epsilon _{i10})^{\top }\) are non-correlated. In fact, \(b_{1i}{\mathop {\sim }\limits ^{\mathrm{\scriptscriptstyle {iid}}}}\text {N}(0,\sigma ^2_b)\) and \(\epsilon _{ij}{\mathop {\sim }\limits ^{\mathrm{\scriptscriptstyle {iid}}}}\text {AL}(0,\sigma _e,p)\). We set \({\varvec{\beta }}_{p}=(\beta _1,\beta _2,\beta _3)^{\top }\)\(=(200,700,350)^{\top }, \sigma _e=0.5\) and \(\sigma _b^2=10\). Using the notation in (3), the matrices \(\mathbf {A}_i\) and \(\mathbf {B}_i\) are given by \(\mathbf I _{3}\) and \((1,0,0)^{\top }\) respectively. For different sample sizes, say, \(n=25,\) 50, 100 and 200, we generate 100 datasets for each scenario. In addition, we choose \(m=20, c=0.25\) and \(W=500\) for the SAEM algorithm convergence parameters. For all scenarios, we compute the square root of the mean square error (RMSE), bias (Bias) and Monte Carlo standard deviation (Sd) for each parameter over the 100 replicates. These quantities are defined as \(\mathrm {RMSE}(\widehat{\theta _{i}}) = \sqrt{\text {Sd}^2(\widehat{\theta _{i}})+\mathrm {{Bias}}^2(\widehat{\theta _{i}})}, \mathrm {{Bias}}(\widehat{\theta _{i}}) = \overline{\widehat{\theta _{i}}} - \theta _i\) and \(\text {Sd}(\widehat{\theta _{i}}) = \sqrt{\frac{1}{99}\sum _{j=1}^{100}\big (\widehat{\theta _{i}}^{(j)} - \overline{\widehat{\theta _{i}}}\big )^2}\), where \(\overline{\widehat{\theta _i}} = \frac{1}{100}\sum _{j=1}^{100}\widehat{\theta }_i^{(j)}\) is the Monte Carlo mean (Mean) with \({\theta _{i}}^{(j)}\) the estimate of \(\theta _{i}\) from the j-th sample, \(j=1\ldots 100\). Based on Fig. 2, we conclude that the bias in the estimation of fixed effects converges to zero when n increases, i.e. the estimators are asymptotically unbiased for the parameters. The values of Sd and RMSE decrease monotonically when n increases, indicating that the estimators are consistent. Note that for quantile \(p=0.95\) (an extreme quantile), the standard deviation is much higher than quantiles \(p=0.50\) and \(p=0.75\). As an overall conclusion, we can say that, in general, the proposed SAEM algorithm provides good asymptotic properties for the ML estimates.

Table 1 shows the estimation of the model parameters. Note that the intercept (\(\beta _1\)) increases along the quantiles. This is a natural property of the intercept in QR models. As a consequence, the bias and RMSE increase significantly for extreme quantiles. In general, and for all the model parameters, good asymptotic properties in terms of bias and RMSE are observed.

As was suggested by a reviewer, Table 2 shows the coverage probability and the theoretical standard errors (obtained using the approximation proposed in Sect. 4.2) for the QR-NLME model (10) considering, for computational time reasons, a sample of size \(n=50\) and quantiles \(p=0.50\) and \(p=0.75\). From these results, one can see that the empirical (Sd) and theoretical (IM-SE) standard errors are similar. Moreover, the Monte Carlo coverage probability (CP %) is high, showing the good behavior of the obtained estimates. We have omitted the results for the intercept \(\beta _1\), because this parameter varies the quantile, capturing the location of the different quantiles of the conditional distribution. This evidence indicates that the AL assumption does not generate an important bias in the results.

5.2 Robustness study

The aim of this simulation scheme is to study the behavior of the parameter estimates when the distribution of random effects is misspecified. We consider a similar simulation scheme as in the previous subsection, but considering a set of quantiles \(p=\{0.50,0.75\}\) and a fixed sample size \(n=50\). We consider 100 Monte Carlo samples, generating the random effect term from (a) a Student’s-t distribution with \(\nu =4\) degrees of freedom (\(t_4\)) and from (b) a contaminated normal distribution with parameters \(\nu _1=0.1\) and \(\nu _2=\{0.1,0.2,0,3\}\)), i.e., three scenarios of contamination, say, 10% (C 10%), 20% (C 20%) and 30% (C 30%). We set the value of parameters as follows: \({\varvec{\beta }}_{p}=(200,700,350)^{\top }, \sigma _e=0.5\) and \(\sigma _b^2=10\).

Figure 3 shows the simulated curves from the growth-curve logistic model assuming different probability distributions for the random effect term, say, normal, Student’s-t with \(\nu =4\) and contaminated normal with \(\nu _1=0.1\) and \(\nu _2=0.1\).

50 simulated curves from the growth-curve logistic model using different distributions for the random effect term: normal (right), Student’s-t with \(\nu =4\) (center), contaminated normal with \(\nu _1=0.1\) and \(\nu _2=0.1\) (left). In all cases the location and scale parameters are \(\mu =0\) and \(\sigma _b^2=10\), respectively

From Table 3 we can see that the proposed model is robust even when the level of contamination is high. For quantile \(p=0.75\), naturally, the parameter \(\beta _1\) tends to increase for higher levels of contamination. As expected, the Sd and RMSE increase when the distribution of the random effects is heavy-tailed.

6 Illustrative examples

In this section, we illustrate the application of our method by analyzing two longitudinal datasets.

6.1 Growth curve: Soybean data

For the first application, we consider the soybean genotype data analyzed previously by Davidian and Giltinan (1995) and Pinheiro and Bates (2000). The experiment consists of measuring (along time) the leaf weight (in grams) as a measure of growth of two soybean genotypes, namely, a commercial variety called Forrest (F) and an experimental strain called Plan Introduction #416937 (P). The samples were taken approximately weekly during 8 to 10 weeks. Plants were planted for three consecutive years (1988, 1989 and 1990) in 16 plots (8 per genotype) and the mean leaf weight of six randomly selected plants was measured.

We use the three parameter logistic model in (10), introducing a random effect term for each parameter and a dichotomic covariate (gen) as

where, \(\varphi _{1i} = \beta _1 + \beta _4 gen_i + b_{1i}, \varphi _{2i} = \beta _2 + b_{2i}\) and \(\varphi _{3i} = \beta _3 + b_{3i}.\) The observed value \(y_{ij}\) represents the mean weight of leaves (in grams) from six randomly selected soybean plants in the i-th plot, \(t_{ij}\) days after planting; \(gen_i\) is a dichotomic variable indicating the genotype of plant i (0=Forrest, 1= Introduction) and \(\epsilon _{ij}\) is the measurement error term. Moreover, \({\varvec{\beta }}_{p}=(\beta _1,\beta _2,\beta _3,\beta _4)^{\scriptscriptstyle \top }\) and \(\mathbf {b}_i=(b_{1i},b_{2i},b_{3i})^{\scriptscriptstyle \top }\) are the fixed and random effects vectors respectively.

The matrices \(\mathbf {A}_i\) and \(\mathbf {B}_i\) are defined as

The interpretation of parameters is as follows: \(\varphi _1\) is the asymptotic leaf weight, \(\varphi _2\) is the time at which the leaf reaches half its asymptotic weight and \(\varphi _3\) is the time elapsed between the leaf reaching half and \(0.7311=1/(1 + e^{-1}\)) of its asymptotic weight. Since the aim of the study is to compare the final (asymptotic) growth of the two kinds of soybeans, the covariate \(gen_i\) was incorporated in the first component of the growth function. Therefore, the coefficient \(\beta _4\) represents the difference (in grams) of the asymptotic leaf weight between the introduction plan and Forrest type (control). Figure 4 shows the leaf weight profiles.

Figure 5 shows the fitted regression lines for quantiles 0.10, 0.25, 0.50, 0.75 and 0.90 by genotype. From this figure we can see how the extreme quantile estimation functions capture the full data variability, detecting some atypical observations (particularly for the Introduction genotype).

Figure 8 in Appendix A4 shows a summary of the obtained results. It can be seen that the effect of the genotype is significant for all the quantile profiles. Moreover, the difference varies with respect to the conditional quantile being more significant for lower quantiles. Using the information provided by the 95th percentile, we conclude that the soybean plants have a mean leaf weight around 19.35 grams for the Forrest genotype and 23.25 grams for the Introduction genotype. Therefore, the asymptotic difference for the two genotypes is about 4 grams. Finally, it is important to stress that the convergence of the fixed effect estimates and variance components was analyzed using graphical criteria as shown in Figure 10 (Appendix A4).

6.2 HIV viral load study

The dataset analyzed in this section comes from a clinical trial (ACTG 315), studied in previous works by Wu (2002) and Lachos et al. (2013). In this study, the HIV viral load of 46 HIV-1 infected patients under antiretroviral treatment (protease inhibitor and reverse transcriptase inhibitor drugs) is analyzed. The viral load and some other covariates were measured several times after the start of treatment. Wu (2002) found that the only significant covariate for modeling the virus load was CD4. Figure 6 shows the profile of viral load in log10 scale and CD4 cell count/100 per \(\text {mm}^3\) versus time (in days/100) for six randomly selected patients. As can be seen, an inverse relationship exists between the viral load and the CD4 cell count, i.e., high CD4 cell count leads to lower levels of viral load. This is because the CD4 cells (also called T-cells) alert the immune system in case of invasion by viruses and/or bacteria. Consequently, a lower CD4 count means a weaker immune system.

In order to fit the ACTG 315 data, we propose a bi-phasic nonlinear model considered by Wu (2002) and also used by Lachos et al. (2013). The proposed NLME model is given by,

for \(i=1,\ldots ,46, j=1,\ldots ,n_i\) with \(\varphi _{1i} = \beta _1 + b_{1i}, \varphi _{2i} = \beta _2 + b_{2i},\)\(\varphi _{3i} = \beta _3 + b_{3i}, \varphi _{4ij} = \beta _4 + \beta _5 CD4_{ij} + b_{4i},\) where the observed value \(y_{ij}\) represents the log-10 transformation of the viral load for the i-th patient at time \(j, CD4_{ij}\) is the CD4 cell count (in cells/100\(\text {mm}^3\)) for the i-th patient at time j and \(\epsilon _{ij}\) is the measurement error term. As in the previous case, \({\varvec{\beta }}_{p}=(\beta _1,\beta _2,\beta _3,\beta _4,\beta _5)^{\scriptscriptstyle \top }\) and \(\mathbf b _i=(b_{1i},b_{2i},b_{3i},b_{4i})^{\scriptscriptstyle \top }\) denote the fixed and random effects vectors respectively, and \(\mathbf CD4 _{i}=(CD4_{i1},\ldots ,\)\(CD4_{i{n_i}})^{\scriptscriptstyle \top }\). The matrices \(\mathbf {A}_i\) and \(\mathbf {B}_i\) are defined as

The parameters \(\varphi _{2i}\) and \(\varphi _{4i}\) are the two-phase viral decay rates, which represent the minimum turnover rates of productively infected cells and that of latent or long-lived infected cells if therapy was successful, respectively. For more details about the model in (11) see Grossman et al. (1999) and Perelson et al. (1997).

Figure 7 shows the fitted regression lines for quantiles 0.10, 0.25, 0.50, 0.75 and 0.90 for the ACTG 315 data. In order to plot this, first, we have fixed the CD4 covariate using the predicted sequence from a linear regression (including a quadratic term) for explaining the CD4 cell count over time. It can be seen how the quantile estimated functions follow the data behavior and make it easy to estimate a specific viral load quantile at any time of the experiment. Extreme quantile functions bound most of the observed profiles and evidence possible influential observations.

The results after fitting the QR-NLME model over the grid of quantiles \(p = \{0.05, 0.10, \ldots , 0.95\}\) are shown in Fig. 9 in Appendix A4. The first phase viral decay rate is positive and its effect tends to increase proportionally along quantiles. Moreover, the second phase viral decay rate is positively correlated with the CD4 count and therefore with the duration of therapy. Consequently, more days of treatment imply a higher CD4 cell count and therefore a higher second phase viral decay. The CD4 cell process for this model has different behavior than for the expansion phase (Huang and Dagne 2011). The significance of the CD4 covariate increases positively with respect to quantiles (until quantile \(p=0.60\) approximately) and its effect becomes constant for greater quantiles. As in the previous case, the convergence of estimates for all parameters was also assessed using the graphical criteria.

7 Conclusions

In this paper, we investigate quantile regression under nonlinear mixed effects models from a likelihood-based perspective. The AL distribution and SAEM algorithm are combined efficiently to propose an exact ML estimation method. We evaluate the robustness of estimates, as well as the finite sample performance of the algorithm and the asymptotic properties of the ML estimates through empirical experiments. To the best of our knowledge, this paper is the first attempt at exact ML estimation in the context of QR-NLME models. The methods developed can be readily implemented in \(\texttt {R}\) through the package \(\texttt {qrNLMM()}\), making our approach quite powerful and accessible to practitioners. We apply our method to two datasets from longitudinal studies, obtaining interesting results from the point of view of quantile estimation. Moreover, in the two considered applications, similar conclusions to the previous analyses of these datasets were obtained.

As was observed by a referee, Mu & He (2007) (see also Liu and Bottai 2009) noted that nonlinear QR models can be modeled using a monotone transformation over the QR linear models. Although this approach is very interesting and easy to understand, our problem considers not only a nonlinear behavior of the response but also on the random effects. In addition, we consider the assumption of the AL distribution to estimate the model parameters. In this context, an in-depth study of the properties of parameter estimation of fixed effects within the context of mixed effect models seems to be an interesting topic for future research.

Finnaly, there are a large number of possible extensions of the current work. For modeling both skewness and heavy tails in the random effects, the use of scale mixtures of skew-normal (SMSN) distributions (Lachos et al. 2010) is a feasible choice. Also, HIV viral loads studies include covariates (CD4 cell counts) that often come with substantial measurement errors (Wu 2002). How to incorporate measurement errors in covariates within our robust framework can also be part of future research. An in-depth investigation of such extensions is beyond the scope of the present paper, but certainly is an interesting topic for future research.

References

Aghamohammadi A, Mohammadi S (2017) Bayesian analysis of penalized quantile regression for longitudinal data. Stat Pap 58(4):1035–1053

Allassonnière S, Kuhn E, Trouvé A (2010) Construction of Bayesian deformable models via a stochastic approximation algorithm: a convergence study. Bernoulli 16(3):641–678

Andriyana Y, Gijbels I, Verhasselt A (2016) Quantile regression in varying-coefficients models: non-crossing quantile curves and heteroscedasticity. Stat Pap. https://doi.org/10.1007/s00362-016-0847-7

Barndorff-Nielsen OE, Shephard N (2001) Non-gaussian ornstein-uhlenbeck-based models and some of their uses in financial economics. J R Stat Soc Ser B 63(2):167–241

Booth JG, Hobert JP (1999) Maximizing generalized linear mixed model likelihoods with an automated Monte Carlo EM algorithm. J R Stat Soc Ser B 61(1):265–285

Davidian M, Giltinan D (1995) Nonlinear models for repeated measurement data. CRC Press, Boca Raton

Davidian M, Giltinan D (2003) Nonlinear models for repeated measurement data: an overview and update. J Agric Biol Environ Stat 8(4):387–419

Delyon B, Lavielle M, Moulines E (1999) Convergence of a stochastic approximation version of the EM algorithm. Ann Stat 8:94–128

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Fu L, Wang Y (2012) Quantile regression for longitudinal data with a working correlation model. Comput Stat Data Anal 56(8):2526–2538

Galarza C, Lachos VH, Cabral C, Castro L (2017) Robust quantile regression using a generalized classs of skewed distributions. Statistics 6:113–130

Galvao A (2011) Quantile regression for dynamic panel data with fixed effects. J Econ 164(1):142–157

Galvao A, Montes-Rojas GV (2010) Penalized quantile regression for dynamic panel data. J Stat Plan Inference 140(11):3476–3497

Geraci M, Bottai M (2007) Quantile regression for longitudinal data using the asymmetric Laplace distribution. Biostatistics 8(1):140–154

Geraci M, Bottai M (2014) Linear quantile mixed models. Stat Comput 24(3):461–479

Grossman Z, Polis M, Feinberg M, Grossman Z, Levi I, Jankelevich S, Yarchoan R, Boon J, de Wolf F, Lange J, Goudsmit J, Dimitrov D, Paul W (1999) Ongoing HIV dissemination during HAART. Nat Med 5(10):1099–1104

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57(1):97–109

Huang Y, Dagne G (2011) A Bayesian approach to joint mixed-effects models with a skew-normal distribution and measurement errors in covariates. Biometrics 67(1):260–269

Koenker R (2004) Quantile regression for longitudinal data. J Multivar Anal 91(1):74–89

Koenker R (2005) Quantile regression. Cambridge University Press, New York

Kozubowski T, Nadarajah S (2010) Multitude of Laplace distributions. Stat Pap 51:127–148

Kuhn E, Lavielle M (2004) Coupling a stochastic approximation version of EM with an MCMC procedure. ESAIM Probab Stat 8:115–131

Kuhn E, Lavielle M (2005) Maximum likelihood estimation in nonlinear mixed effects models. Comput Stat Data Anal 49(4):1020–1038

Lachos VH, Ghosh P, Arellano-Valle RB (2010) Likelihood based inference for skew-normal independent linear mixed models. Stat Sin 20(1):303–322

Lachos VH, Castro LM, Dey DK (2013) Bayesian inference in nonlinear mixed-effects models using normal independent distributions. Comput Stat Data Anal 64:237–252

Lavielle M (2014) Mixed effects models for the population approach. Chapman and Hall/CRC, Boca Raton

Lipsitz SR, Fitzmaurice GM, Molenberghs G, Zhao LP (1997) Quantile regression methods for longitudinal data with drop-outs: application to CD4 cell counts of patients infected with the human immunodeficiency virus. J R Stat Soc Ser C 46(4):463–476

Liu Y, Bottai M (2009) Mixed-effects models for conditional quantiles with longitudinal data. Int J Biostat. https://doi.org/10.2202/1557-4679.1186

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. J R Stat Soc Ser B 44(2):226–233

Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E (1953) Equation of state calculations by fast computing machines. J Chem Phys 21:1087–1092

Meza C, Osorio F, De la Cruz R (2012) Estimation in nonlinear mixed-effects models using heavy-tailed distributions. Stat Comput 22:121–139

Mu Y, He X (2007) Power transformation toward a linear regression quantile. J Am Stat Assoc 102:269–279

Perelson AS, Essunger P, Cao Y, Vesanen M, Hurley A, Saksela K, Markowitz M, Ho DD (1997) Decay characteristics of HIV-1-infected compartments during combination therapy. Nature 387(6629):188–191

Pinheiro J, Bates D (1995) Approximations to the log-likelihood function in the nonlinear mixed effects model. J Comput Gr Stat 4:12–35

Pinheiro JC, Bates DM (2000) Mixed-effects models in S and S-PLUS. Springer, New York

R Core Team (2017) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Searle SR, Casella G, McCulloch C (1992) Variance components. Wiley, New York

Sriram K, Ramamoorthi R, Ghosh P (2013) Posterior consitency of Bayesian quantile regression based on the misspecified asymmetric Laplace distribution. Bayesian Anal 8(2):479–504

Wang J (2012) Bayesian quantile regression for parametric nonlinear mixed effects models. Stat Methods Appl 21:279–295

Wu L (2002) A joint model for nonlinear mixed-effects models with censoring and covariates measured with error, with application to AIDS studies. J Am Stat Assoc 97(460):955–964

Wu L (2010) Mixed effects models for complex data. Chapman & Hall/CRC, Boca Raton

Yu K, Moyeed R (2001) Bayesian quantile regression. Stat Probab Lett 54(4):437–447

Yu K, Zhang J (2005) A three-parameter asymmetric Laplace distribution and its extension. Commun Stat Theory Methods 34(9–10):1867–1879

Yuan Y, Yin G (2010) Bayesian quantile regression for longitudinal studies with nonignorable missing data. Biometrics 66(1):105–114

Acknowledgements

We thank the Editor and two anonymous referees whose constructive comments and suggestions led to an improved presentation of the paper. The research of C. Galarza was supported by Grant 2015/17110-9 from Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP-Brazil). L. M. Castro acknowledges Grant Fondecyt 1170258 from the Chilean government. The research of F. Louzada was supported by Grant 305351/2013-3 from from Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq-Brazil) and by Grant 2013/07375-0 from Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP-Brazil).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 A1: Specification of initial values

It is well known that a smart choice of the initial values for the ML estimates can assure a fast convergence of the algorithm to the global maxima solution. Without considering the random effect term, i.e., \(\mathbf {b}_{i}=\mathbf {0}\), let \(\mathbf{y}_{i}\sim \text {AL}({\varvec{\eta }}({\varvec{\beta }}_{ p},\mathbf {0}),\sigma ,p)\). Next, considering the ML estimates for \({\varvec{\beta }}_{ p}\) and \(\sigma \) as defined in Yu and Zhang (2005) for this model, we follow the steps below for the QR-LME model implementation:

- 1.

Compute an initial value \(\widehat{{\varvec{\beta }}}_{ p}^{{\scriptscriptstyle (0)}}\) as

$$\begin{aligned} \widehat{{\varvec{\beta }}}_{ p}^{{\scriptscriptstyle (0)}}=\textit{argmin}{\scriptscriptstyle \beta _{ p}\in \mathbb {R}^{{k}}}\sum ^n_{i=1}\rho _{p}({\mathbf{y}_{i}-{\varvec{\eta }}({\varvec{\beta }}_{ p},\mathbf {0})}). \end{aligned}$$ - 2.

Using the initial value of \(\widehat{{\varvec{\beta }}}_{ p}^{{\scriptscriptstyle (0)}}\) obtained above, compute \(\widehat{\sigma }^{(0)}\) as

$$\begin{aligned} \widehat{\sigma }^{(0)}=\frac{1}{n}\sum ^n_{i=1}\rho _{p}({\mathbf{y}_{i}-{\varvec{\eta }}({\varvec{\beta }}_{ p},\mathbf {0})}). \end{aligned}$$ - 3.

Use a \(q\times q\) identity matrix \(\mathbf I _{{\scriptscriptstyle q\times q}}\) for the the initial value \({\varvec{\varPsi }}^{(0)}\).

1.2 A2: Computing the conditional expectations

Due to the independence between \(u_{ij}\!\mid \! y_{ij},\mathbf {b}_{i}\) and \(u_{ik}\!\mid \! y_{ik},\mathbf {b}_{i}\), for all \(j,k\!=\!1,2,\ldots ,n_{i}\) and \(j\ne k\), we can write \(\mathbf {u}_{i}\mid \mathbf {y}_{i},\mathbf {b}_{i}=[\begin{array}{cccc} u_{i1}\mid y_{i1},\mathbf {b}_{i}&u_{i2}\mid y_{i2},\mathbf {b}_{i}&\cdots&u_{in_{i}}\mid y_{in_{i}},\mathbf {b}_{i}\end{array}]^{{\scriptscriptstyle {\top }}}\). Using this fact, we are able to compute the conditional expectations \(\mathcal {E}(\mathbf {u}_{i})\) and \(\mathcal {E}({\varvec{D}}_{i}^{{\scriptscriptstyle -1}})\) in the following way:

We have \(u_{ij}\mid y_{ij},\mathbf {b}_{i}\sim \text {GIG}(\frac{1}{2},\chi _{ij},\psi )\), where \(\chi _{ij}\) and \(\psi \) are defined in Sect. 3.2. Then, using (2), we compute the moments involved in the equations above as \(\mathcal {E}({u}_{ij})=\frac{\chi _{ij}}{\psi }(1+\frac{1}{\chi _{ij}\psi })\) and \(\mathcal {E}({u}_{ij}^{-1})=\frac{\psi }{\chi _{ij}}\). Thus, for the k-th iteration of the algorithm and for the \(\ell \)-th Monte Carlo realization, we can compute \(\mathcal {E}(\mathbf {u}_{i})^{{\scriptscriptstyle (\ell ,k)}}\) and \(\mathcal {E}[{\varvec{D}}_{i}^{{\scriptscriptstyle -1}}]^{{\scriptscriptstyle (\ell ,k)}}\) using equations (12)-(13) where

1.3 A3: The empirical information matrix

Using (5), the complete log-likelihood function can be rewritten as

where \(\zeta _{i}=\mathbf {y}_{i}-{\varvec{\eta }}({\varvec{\beta }}_{ p},\mathbf {b}_{i})-\vartheta _{p}\mathbf {u}_{i}\) and \({\varvec{\theta }}=({\varvec{\beta }}_{{\scriptscriptstyle p}}^{{\scriptscriptstyle \top }},\sigma ,\mathbf {{\varvec{\alpha }}^{{\scriptscriptstyle \top }}})^{{\scriptscriptstyle \top }}\). Differentiating with respect to \({\varvec{\theta }}\), we have the following score functions:

with \(\mathbf {J_{i}}\) as defined in Sect. 3.2. and

Let \({\varvec{\alpha }}\) be the vector of reduced parameters from \({\varvec{\varPsi }}\), the dispersion matrix for \(\mathbf {b}_i\). Using the trace properties and differentiating the complete log-likelihood function, we have that

Next, taking derivatives with respect to a specific \(\alpha _{j}\) from \({\varvec{\alpha }}\) based on the chain rule, we have

where, using the fact that \(\text {tr}\{\mathbf{ABCD }\}=(\mathrm {vec}(\mathbf{A }^{\top }))^{\top }\)\(({\varvec{D}}^{\top }\otimes \mathbf{B })(\mathrm {vec}(\mathbf{C }))\), (14) can be rewritten as

Let \(\mathcal {D}_{q}\) be the elimination matrix (Lavielle 2014), which transforms the vectorized \({\varvec{\varPsi }}\) (written as \(\text{ vec }({\varvec{\varPsi }})\)) into its half-vectorized form vech(\({\varvec{\varPsi }}\)), so that \(\mathcal {D}_{q}\mathrm {vec}({\varvec{\varPsi }})\)\(=\mathrm {vech}({\varvec{\varPsi }}).\) Using the fact that for all \(j=1,\ldots ,\frac{1}{2}q(q+1)\), the vector \((\mathrm {vec}(\frac{\partial {\varvec{\varPsi }}}{\partial \alpha _{j}})^{\top })^{\top }\) corresponds to the j-th row of the elimination matrix \(\mathcal {D}_{q}\), we can generalize the derivative in (14) for the vector of parameters \({\varvec{\alpha }}\) as

Finally, at each iteration, we can compute the empirical information matrix by approximating the score for the observed log-likelihood by the stochastic approximation given in (9).

1.4 A4: Figures

Graphical summary for the convergence of the fixed effect estimates, variance components of the random effects, and nuisance parameters performing a median regression \((p=0.50)\) for the soybean data. The vertical dashed line delimits the beginning of the almost sure convergence as defined by the cut-point parameter \(c=0.25\)

1.5 A5: Sample output from R package qrNLMM()

Rights and permissions

About this article

Cite this article

Galarza, C.E., Castro, L.M., Louzada, F. et al. Quantile regression for nonlinear mixed effects models: a likelihood based perspective. Stat Papers 61, 1281–1307 (2020). https://doi.org/10.1007/s00362-018-0988-y

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-018-0988-y