Abstract

A particularly important substructure in modeling joint linear chance-constrained programs with random right-hand sides and finite sample space is the intersection of mixing sets with common binary variables (and possibly a knapsack constraint). In this paper, we first revisit basic mixing sets by establishing a strong and previously unrecognized connection to submodularity. In particular, we show that mixing inequalities with binary variables are nothing but the polymatroid inequalities associated with a specific submodular function. This submodularity viewpoint enables us to unify and extend existing results on valid inequalities and convex hulls of the intersection of multiple mixing sets with common binary variables. Then, we study such intersections under an additional linking constraint lower bounding a linear function of the continuous variables. This is motivated from the desire to exploit the information encoded in the knapsack constraint arising in joint linear CCPs via the quantile cuts. We propose a new class of valid inequalities and characterize when this new class along with the mixing inequalities are sufficient to describe the convex hull.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given integers \(n,k\ge 1\), a matrix \(\varvec{W}=\left\{ w_{i,j}\right\} \in {{\mathbb {R}}}^{n\times k}_+\), a vector \(\varvec{\ell }\in {{\mathbb {R}}}_+^k\) and a nonnegative number \(\varepsilon \ge 0\), we consider the mixed-integer set defined by

We denote this set by \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). When \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+ \), constraints (1a) are often called big-M constraints, and constraints (1b) impose lower bounds on the continuous variables \(\varvec{y}\). Notice that (1c) is a constraint linking all continuous variables, but it is non-redundant only if \(\varepsilon \) is strictly positive. We will refer to (1c) as the linking constraint. When \(k=1\), \(\varvec{\ell }=\varvec{0}\), and \(\varepsilon =0\), the set \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) is nothing but what is commonly referred to as the mixing set (with binary variables) in the literature [1, 16, 20, 24, 41]. Sets of the form \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\) for general \(k>1\) were first considered by Atamtürk et al. [5]; we will call the set \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\) a joint mixing set in order to emphasize that k can be taken to be strictly greater than 1. We will refer to a set of the form \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) for general \(\varvec{\ell },\varepsilon \) as a joint mixing set with lower bounds. Finally, we will refer to a set of the form \({{{\mathcal {M}}}}(\varvec{W},{\mathbf {0}}, \varepsilon )\) for general \(\varepsilon \) as a joint mixing set with a linking constraint.

Our motivation for studying the structure of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) comes from joint linear chance-constrained programs (CCPs) with right-hand side uncertainty: given a probability space \((\varvec{\Xi }, {\mathcal {F}},{{\mathbb {P}}})\), a joint linear CCP with right-hand side uncertainty is an optimization problem of the following form:

where \({{{\mathcal {X}}}}\subseteq {{\mathbb {R}}}^m\) is a domain for the decision variables \(\varvec{x}\), \(\epsilon \in (0,1)\) is a risk level, \(\varvec{b}(\varvec{\xi })\in {{\mathbb {R}}}^k\) is the random right-hand side vector that depends on the random variable \(\varvec{\xi }\in \varvec{\Xi }\), and \(\varvec{A},\varvec{h}\) are matrices of appropriate dimension. For \(k=1\) (resp., \(k>1\)), inequality (2b) is referred to as an individual (resp., joint) chance constraint. Here, we seek to find a solution \(\varvec{x}\in {{{\mathcal {X}}}}\) satisfying the chance constraint (2b), enforcing that \(\varvec{A}\varvec{x}\ge \varvec{b}(\varvec{\xi })\) holds with probability at least the given confidence level \(1-\epsilon \), while minimizing the objective (2a). Joint chance constraints are used to model risk-averse decision-making problems in various applications, such as supply chain logistics [17, 18, 26, 38], chemical processes [14, 15], water quality management [32], and energy [33] (see [28] for further background and an extensive list of applications).

Problems with joint chance constraints are known to be notoriously challenging because the resulting feasible region is nonconvex even if all other constraints \(\varvec{x}\in {{{\mathcal {X}}}}\) and the restrictions inside the chance constraints are convex. Moreover, the sample space \(\varvec{\Xi }\) is typically continuous in practice, and the probability distribution \({\mathbb {P}}\) quantifying the uncertainty is often unavailable to the decision-maker. Consequently, the classical solution method is to use the Sample Average Approximation (SAA). The basic idea of SAA is to approximate \(\varvec{\Xi }\) via a set of sample scenarios \(\varvec{\xi }^1,\ldots , \varvec{\xi }^n\) and reduce the problem to the case with a finite-sample distribution; we refer the interested reader to [7, 8, 23] for further details of SAA for CCPs.

Inspired by this, joint linear CCPs with the finite sample space assumption have been extensively studied in the literature [1, 16, 20, 24, 41]. That is to assume that \(\varvec{\Xi }=\left\{ \varvec{\xi }^1,\ldots ,\varvec{\xi }^n\right\} \) for some integer \(n\ge 1\) and that \({{\mathbb {P}}}\left[ \varvec{\xi }=\varvec{\xi }^i\right] =p_i\) for \(i\in [n]\) for some \(p_1,\ldots ,p_n\ge 0\) with \(\sum _{i\in [n]}p_i=1\), where for any positive integer n, we define [n] to be the set \(\{1,\ldots ,n\}\). Under this setting, Luedtke et al. [24], Ruszczyński [30] observed that the joint linear CCP, defined by (2), can be reformulated as a mixed-integer linear program as follows:

where \(\varvec{b}\in {{\mathbb {R}}}^k\) is some vector satisfying \(\varvec{b}(\varvec{\xi }^i)\ge \varvec{b}\) for all i and \(\varvec{w_i}=(w_{i,1},\ldots ,w_{i,k})^\top \) denotes \(\varvec{b}(\varvec{\xi }^i)-\varvec{b}\). Note that by definition of \(\varvec{b}\), it follows that the data vector \(\varvec{w_i}\) is nonnegative for all \(i\in [n]\). Observe that \(\varvec{A}\varvec{x}\ge \varvec{b}\) are implicit inequalities, due to the chance constraint (2b) with \(1-\epsilon >0\). Here, \(z_i\) is introduced as an indicator variable to model the event \(\varvec{A}\varvec{x}\ge \varvec{b}(\varvec{\xi }^i)\). More precisely, when \(z_i=0\), the constraints (3c) enforce that \(\varvec{y}\ge \varvec{w_i}\) holds and thus \(\varvec{A}\varvec{x}\ge \varvec{b}(\varvec{\xi }^i)\) is satisfied. On the other hand, when \(z_i=1\), it follows that \(\varvec{y}\ge \varvec{0}\) and \(\varvec{A}\varvec{x}\ge \varvec{b}\), which is satisfied by default. Therefore, constraints (3c) are referred to as big-M constraints. Finally, (3d) enforces that the probability of \(\varvec{A}\varvec{x}\ge \varvec{b}(\varvec{\xi }^i)\) being violated is at most \(\epsilon \).

The size of the deterministic equivalent formulation of the joint CCP given by (3) grows linearly with the number of scenarios. Unfortunately, such a reformulation based on big-M constraints comes with the disadvantage that the corresponding relaxations obtained by relaxing the binary variables into continuous are weak. Thus, in order to achieve effectiveness in practical implementation, these reformulations must be strengthened with additional valid inequalities.

A particularly important and widely applicable class of valid inequalities that strengthen the big-M reformulations of CCPs rely on a critical specific substructure in the formulation (3), called a mixing set with binary variables; see e.g., Luedtke et al. [24] and Küçükyavuz [16]. Formally, given nonnegative coefficients \(w_{1,j},\ldots ,w_{n,j}\), a mixing set with binary variables is defined as follows:

hence the set defined by (3c) and (3e), i.e.,

is nothing but a joint mixing set that shares common binary variables \(\varvec{z}\), but independent continuous variables \(y_j\), \(j\in [k]\). Here, note that the set defined by (3c) and (3e) is precisely \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) when \(\varvec{\ell }=\varvec{0}\) and \(\varepsilon =0\). Also, it is worthwhile to note that the constraint (3d) is a knapsack constraint. Therefore, the formulation (3) can be strengthened by the inclusion of valid inequalities originating from the set defined by (3c)–(3e).

The term mixing set is originally coined by Günlük and Pochet [13] for the sets of the form

where the parameters are \(u\in {{\mathbb {R}}}_+\) and \(\varvec{q}=(q_1,\ldots ,q_n)^\top \in {{\mathbb {R}}}^n\). Such sets \(\text {GMIX}\) with general integer variables have applications in lot sizing and capacitated facility location problems; see e.g., [10, 11, 13, 25, 40] (see also [34] for a survey of the area). For mixing sets with general integer variables such as \(\text {GMIX}\) defined above, Günlük and Pochet [13] introduced the so-called mixing inequalities—an exponential family of linear inequalities that admits an efficient separation oracle—and showed that this class of inequalities are sufficient to describe the associated convex hull of the sets \(\text {GMIX}\). In fact, prior to [13], in the context of lot-sizing problems, Pochet and Wolsey [27, Theorem 18] obtained the same result, albeit without using the naming convention of mixing sets/inequalities. Furthermore, the equivalence of \(\text {MIX}_j\) and \(\text {GMIX}\) under the additional domain restrictions \(\varvec{z}\in \{0,1\}^n\) and the assumption \(u\ge \max _i q_i\) is immediate. The appearance of mixing sets with binary variables dates back to the work of Atamtürk et al. [5] on vertex covering. Essentially, it was shown in [5] that the intersection of several sets of the form MIX\(_j\) with common binary variables \(\varvec{z}\) but separate continuous variables \(y_j\), \(j\in [k]\) can be characterized by the intersection of the corresponding star inequalities; see [5, Theorem 3]. Furthermore, it is well-known [24] that mixing inequalities for \(\text {MIX}_j\) are equivalent to the star inequalities introduced in [5]. We will give a formal definition of mixing (star) inequalities for mixing sets with binary variables in Sect. 3.

Due to the importance of their use in joint CCPs, the mixing (with knapsack) substructure (3c)–(3e) present in the reformulations of joint CCPs has received a lot of attention in the more recent literature.

-

For general k, i.e., when the number of linear constraints inside the chance constraint is more than one, Atamtürk et al. [5] proved that the convex hull of a joint mixing set of the form (3c) and (3e), which is equivalent to \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\), can be described by applying the mixing inequalities.

-

For \(k=1\), Luedtke et al. [24], Küçükyavuz [16], and Abdi and Fukasawa [1] suggested valid inequalities for a single mixing set subject to the knapsack constraint (3d).

-

For general k, Küçükyavuz [16] and Zhao et al. [41] proposed valid inequalities for a joint mixing set with a knapsack constraint.

Luedtke et al. [24] showed that the problem is NP-hard for \(k>1\) even when the restrictions inside the chance constraints are linear and each scenario has equal probability, in which case the knapsack constraint (3d) becomes a cardinality constraint. However, Küçükyavuz [16] argued that the problem for \(k=1\) under equiprobable scenarios is polynomial-time solvable and gave a compact and tight extended formulation based on disjunctive programming. Note that while not explicitly stated in Küçükyavuz [16], when \(k=1\) the polynomial-time solvability argument extends for the unequal probability case.

Many of these prior works aim to convexify a (joint) mixing set with a knapsack constraint directly. In contrast, in our paper we exploit the knapsack structure through an indirect approach based on quantile inequalities. Given \(\varvec{c}\in {{\mathbb {R}}}_+^k\) and \(\delta >0\), the \((1-\delta )\)-quantile for \(\varvec{c}^\top \varvec{y}\) is defined as

and the inequality \(\varvec{c}^\top \varvec{y}\ge q_{\varvec{c},\delta }\) is called a \((1-\delta )\)-quantile cut. By definition, a \((1-\epsilon )\)-quantile cut is valid for the solutions satisfying (3c)–(3e). The quantile cuts have been studied in [2, 19, 22, 29, 31, 36], and their computational effectiveness has been observed in practice. As opposed to mixing sets and associated mixing inequalities, the quantile cuts link many continuous variables together; it is plausible to conjecture that this linking of the continuous variables is the one of the main sources of their effectiveness in practice.

The structure of a joint mixing set with lower bounds \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\), defined in (1), is flexible enough to simultaneously work with quantile cuts. For \(j\in [k]\), let \(\ell _j\) denote the \((1-\epsilon )\)-quantile for \(\varvec{c}^\top \varvec{y}= y_j\). Then, for any \(j\in [k]\), we have

Note that \(\ell _j\) can be computed in \(O(n\log n)\) time, because without loss of generality we can assume \(w_{1,j}\ge \cdots \ge w_{n,j}\) after possible reordering of [n], and the optimum value of the above optimization problem is precisely \(w_{t,j}\) where t is the index such that \(\sum _{i\le t-1}p_i\le \epsilon \) and \(\sum _{i\le t}p_i>\epsilon \). Although the \((1-\epsilon )\)-quantile for \(\sum _{j\in [k]}y_j\) seems harder to compute, at least we know that the value is greater than or equal to \(\sum _{j\in [k]}\ell _j\). Therefore, we have quantile cuts \(y_j\ge \ell _j\) for \(j\in [k]\) and \(\sum _{j\in [k]}y_j\ge \varepsilon +\sum _{j\in [k]}\ell _j\) for some \(\varepsilon \ge 0\), and the set defined by these quantile cuts and the constraints (3c), (3e) is precisely a set of the form \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). Similarly, it is straightforward to capture the quantile cut \(\varvec{c}^\top \varvec{y}\ge \varepsilon +\sum _{j\in [k]}c_j\ell _j\) for general \(\varvec{c}\in {{\mathbb {R}}}_+^k\), because we can rewrite \(y_j\ge \ell _j\) for \(j\in [k]\), (3c) and (3e) in terms of \(c_1y_1,\ldots ,c_jy_j\), and thus the resulting system is equivalent to a joint mixing set with lower bounds.

Next, we summarize our contributions and provide an outline of the paper.

1.1 Contributions and outline

In this paper, we study the polyhedral structure of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\), i.e., joint mixing sets with lower bounds, mainly in the context of joint linear CCPs with random right-hand sides and a discrete probability distribution. Our approach is based on a connection between mixing sets and submodularity that has been overlooked in the literature. Therefore, in Sect. 2.1, we first discuss basics of submodular functions and polymatroid inequalities as they relate to our work. In addition, we devote Sect. 2.2 to establish new tools on a particular joint submodular structure; these new tools play a critical role in our analysis of the joint mixing sets.

Our contributions are as follows:

-

(i)

We first establish a strong and somewhat surprising connection between polymatroids and the basic mixing sets with binary variables (Sect. 3). It is well-known that submodularity imposes favorable characteristics in terms of explicit convex hull descriptions via known classes of inequalities and their efficient separation. In particular, the idea of utilizing polymatroid inequalities from submodular functions has appeared in various papers in other contexts for specific binary integer programs [3, 4, 6, 35, 37, 39]. Notably, mixing sets have been known to be examples of simple structured sets whose convex hull descriptions possess similar favorable characteristics. However, to the best of our knowledge, the connection between submodularity and mixing sets has not been recognized before. Establishing this connection enables us to unify and generalize various existing results on mixing sets with binary variables.

-

(ii)

In Sect. 4, we propose a new class of valid inequalities, referred to as the aggregated mixing inequalities, for the set \({\mathcal {M}}(\varvec{W},\varvec{\ell },\varepsilon )\). One important feature of the class of aggregated mixing inequalities as opposed to the standard mixing inequalities is that it is specifically designed to simultaneously exploit the information encoded in multiple mixing sets with common binary variables.

-

(iii)

In Sect. 5, we establish conditions under which the convex hull of the set \(\mathcal M(\varvec{W},\varvec{\ell },\varepsilon )\) can be characterized through a submodularity lens. We show that the new class of aggregated mixing inequalities, in addition to the classical mixing inequalities, are sufficient under appropriate conditions.

-

(iv)

In Sect. 6, we revisit the results from a recent paper by Liu et al. [20] on modeling two-sided CCPs. We show that mixing sets of the particular structure considered in Liu et al. [20] is nothing but a joint mixing set with lower bound structure with \(k=2\) and two additional constraints involving only the continuous variables \(\varvec{y}\). Thus, our results on aggregated mixing inequalities are immediately applicable to two-sided CCPs. In addition, we show that, due to the simplicity of the additional constraints on the variables \(\varvec{y}\) in two-sided CCPs, our general convex hull results on \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) can be extended easily to accommodate the additional constraints on \(\varvec{y}\) and recover the convex hull results from [20].

Finally, we would like to highlight that although our results are motivated by joint CCPs, they are broadly applicable to other settings where the intersection of mixing sets with common binary variables is present. In addition, applicability of our results from Sect. 2.2 extend to other cases where epigraphs of general submodular functions appear in a similar structure.

1.2 Notation

Given a positive integer n, we let \([n]:=\{1,\ldots ,n\}\). We let \(\varvec{0}\) denote the vector of all zeros whose dimension varies depending on the context, and similarly, \(\varvec{1}\) denotes the vector of all ones. \({{\varvec{e}}}^{{\varvec{j}}}\) denotes the unit vector whose \(j{\mathrm{th}}\) coordinate is 1, and its dimension depends on the context. For \(V\subseteq [n]\), \(\varvec{1}_V\in \{0,1\}^n\) denotes the characteristic vector, or the incidence vector, of V. For a set Q, we denote its convex hull and the extreme points of its convex hull by \({{\,\mathrm{conv}\,}}(Q)\) and \({\mathrm{ext}}(Q)\) respectively. For \(t\in {{\mathbb {R}}}\), \(\left( t\right) _+\) denotes \(\max \{0,t\}\). Given a vector \(\varvec{\pi }\in {{\mathbb {R}}}^n\), and a set \(V\subseteq [n]\), we define \(\varvec{\pi }(V)=\sum _{i\in V}\pi _i\). For notational purposes, when \(S=\emptyset \), we define \(\max _{i\in S} s_i=0\) and \(\sum _{i\in S} s_i=0\).

2 Submodular functions and polymatroid inequalities

In this section, we start with a brief review of submodular functions and polymatroid inequalities, and then in Sect. 2.2 we establish tools on joint submodular constraints that are useful for our analysis of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\).

2.1 Preliminaries

Consider an integer \(n\ge 1\) and a set function \(f:2^{[n]}\rightarrow {{\mathbb {R}}}\). Recall that f is submodular if

Given a submodular set function f, Edmonds [12] introduced the notion of extended polymatroid of f, which is a polyhedron associated with f defined as follows:

Observe that \(EP_f\) is nonempty if and only if \(f(\emptyset )\ge 0\). In general, a submodular function f need not satisfy \(f(\emptyset )\ge 0\). Nevertheless, it is straightforward to see that the function \(f-f(\emptyset )\) is submodular whenever f is submodular, and that \((f-f(\emptyset ))(\emptyset )=0\). Hence, \(EP_{f-f(\emptyset )}\) is always nonempty. Hereinafter, we use notation \({{\tilde{f}}}\) to denote \(f-f(\emptyset )\) for any set function f.

A function on \(\{0,1\}^n\) can be interpreted as a set function over the subsets of [n], and thus, the definitions of submodular functions and extended polymatroids extend to functions over \(\{0,1\}^n\). To see this, consider any integer \(n\ge 1\) and any function \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\). With a slight abuse of notation, define \(f(V):=f(\varvec{1}_V)\) for \(V\subseteq [n]\) where \(\varvec{1}_V\) denotes the characteristic vector of V. We say that \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) is a submodular function if the corresponding set function over [n] is submodular. We can also define the extended polymatroid of \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) as in (4). Throughout this paper, given a function \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\), we will switch between its set function interpretation and its original form, depending on the context.

Given a submodular function \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\), its epigraph is the mixed-integer set given by

It is well-known that when f is submodular, one can characterize the convex hull of \(Q_f\) through the extended polymatroid of \(\tilde{f}\).

Theorem 2.1

(Lovász [21], Atamtürk and Narayanan [4, Proposition 1]) Let \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be a submodular function. Then

The inequalities \(y\ge \varvec{\pi }^\top \varvec{z}+f(\emptyset )\) for \(\varvec{\pi }\in EP_{{{\tilde{f}}}}\) are called the polymatroid inequalities of f. Although there are infinitely many polymatroid inequalities of f, for the description of \({{\,\mathrm{conv}\,}}(Q_f)\), it is sufficient to consider only the ones corresponding to the extreme points of \(EP_{\tilde{f}}\). We refer to the polymatroid inequalities defined by the extreme points of \(EP_{{{\tilde{f}}}}\) as the extremal polymatroid inequalities of f. Moreover, Edmonds [12] provided the following explicit characterization of the extreme points of \(EP_{{{\tilde{f}}}}\).

Theorem 2.2

(Edmonds [12]) Let \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be a submodular function. Then \(\varvec{\pi }\in {{\mathbb {R}}}^n\) is an extreme point of \(EP_{{{\tilde{f}}}}\) if and only if there exists a permutation \(\sigma \) of [n] such that \(\pi _{\sigma (t)}=f(V_{t})-f(V_{t-1})\), where \(V_{t}=\{\sigma (1),\ldots ,\sigma (t)\}\) for \(t\in [n]\) and \(V_{0}=\emptyset \).

The algorithmic proof of Theorem 2.2 from Edmonds [12] is of interest. Suppose that we are given a linear objective \({\bar{\varvec{z}}}\in {{\mathbb {R}}}^n\); then \(\max _{\varvec{\pi }}\left\{ {\bar{\varvec{z}}}^\top \varvec{\pi }:~\varvec{\pi }\in EP_{\tilde{f}}\right\} \) can be solved by the following “greedy” algorithm: given \({\bar{\varvec{z}}}\in {{\mathbb {R}}}^n\), first find an ordering \(\sigma \) such that \({{\bar{z}}}_{\sigma (1)}\ge \cdots \ge {{\bar{z}}}_{\sigma (n)}\), and let \(V_{t}:=\{\sigma (1),\ldots ,\sigma (t)\}\) for \(t\in [n]\) and \(V_{0}=\emptyset \). Then, \(\varvec{\pi }\in {{\mathbb {R}}}^n\) where \(\pi _{\sigma (t)}=f(V_{t})-f(V_{t-1})\) for \(t\in [n]\) is an optimal solution to \(\max _{\varvec{\pi }}\left\{ {\bar{\varvec{z}}}^\top \varvec{\pi }:~\varvec{\pi }\in EP_{{{\tilde{f}}}}\right\} \). Note that the implementation of this algorithm basically requires a sorting algorithm to compute the desired ordering \(\sigma \), and this can be done in \(O(n\log n)\) time. Thus, the overall complexity of this algorithm is \(O(n\log n)\).

Consequently, given a point \(({{{\bar{y}}}},{\bar{\varvec{z}}})\in {{\mathbb {R}}}\times {{\mathbb {R}}}^n\), separating a violated polymatroid inequality amounts to solving the optimization problem \(\max _{\varvec{\pi }}\left\{ {\bar{\varvec{z}}}^\top \varvec{\pi }:~\varvec{\pi }\in EP_{{{\tilde{f}}}}\right\} \), and thus we arrive at the following result.

Corollary 1

(Atamtürk and Narayanan [4, Section 2]) Let \(f:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be a submodular function. Then the separation problem for polymatroid inequalities can be solved in \(O(n\log n)\) time.

2.2 Joint submodular constraints

In this section, we establish tools that will be useful throughout this paper. Recall that when f is submodular, the convex hull of its epigraph \(Q_f\) is described by the extremal polymatroid inequalities of f. Henceforth, we use the restriction \((y,\varvec{z})\in {{\,\mathrm{conv}\,}}(Q_f)\) as a constraint to indicate the inclusion of the corresponding extremal polymatroid inequalities of f in the constraint set.

Let \(f_1,\ldots ,f_k:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be k submodular functions. Let us examine the convex hull of the following mixed-integer set:

When \(k=1\), the set \(Q_{f_1}\) is just the epigraph of the submodular function \(f_1\) on \(\{0,1\}^n\). For general k, \(Q_{f_1,\ldots ,f_k}\) is described by k submodular functions that share the same set of binary variables. For \((\varvec{y},\varvec{z})\in Q_{f_1,\ldots ,f_k}\), constraint \(y_j\ge f_j(\varvec{z})\) can be replaced with \((y_j,\varvec{z})\in Q_{f_j}\) for \(j\in [k]\). Therefore, the polymatroid inequalities of \(f_j\) with left-hand side \(y_j\), of the form \(y_j\ge \varvec{\pi }^\top \varvec{z}+f_j(\emptyset )\) with \(\varvec{\pi }\in EP_{\tilde{f_j}}\), are valid for \(Q_{f_1,\ldots ,f_k}\). In fact, these inequalities are sufficient to describe \({{\,\mathrm{conv}\,}}(Q_{f_1,\ldots ,f_k})\) as well.

Proposition 1

(Baumann et al. [6, Theorem 2]) Let the functions \(f_1,\ldots ,f_k:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be submodular. Then,

By Proposition 1, when \(f_1,\ldots ,f_k\) are submodular, \({{\,\mathrm{conv}\,}}\left( Q_{f_1,\ldots ,f_k}\right) \) can be described by the polymatroid inequalities of \(f_j\) with left-hand side \(y_j\) for \(j\in [k]\). The submodularity requirement on all of the functions \(f_j\) in Proposition 1 is indeed critical. We demonstrate in the next example that even when \(k=2\), and only one of the functions \(f_i\) is not submodular, we can no longer describe the corresponding convex hull using the polymatroid inequalities for \(f_j\).

Example 1

Let \(f_1,f_2:\{0,1\}^2\rightarrow {{\mathbb {R}}}\) be defined by

While \(f_1\) is submodular, \(f_2\) is not. Since \(f_1(0,0)=f_1(1,1)=0\), we deduce that \((0,1/2,1/2)\in {{\,\mathrm{conv}\,}}(Q_{f_1})\). Similarly, as \(f_2(0,1)=f_2(1,0)=0\), it follows that \((0,1/2,1/2)\in {{\,\mathrm{conv}\,}}(Q_{f_2})\). This implies that

Notice that, by definition of \(f_1,f_2\), we have \(f_1(\varvec{z})+f_2(\varvec{z})=1\) for each \(\varvec{z}\in \{0,1\}^2\), implying in turn that \(y_1+y_2\ge 1\) is valid for \({{\,\mathrm{conv}\,}}\left( Q_{f_1,f_2}\right) \). Therefore, the point (0, 0, 1/2, 1/2) cannot be in \({{\,\mathrm{conv}\,}}\left( Q_{f_1,f_2}\right) \). So, it follows that \({{\,\mathrm{conv}\,}}\left( Q_{f_1,f_2}\right) \ne \left\{ (\varvec{y},\varvec{z})\in {{\mathbb {R}}}^2\times [0,1]^2:~(y_j,\varvec{z})\in {{\,\mathrm{conv}\,}}(Q_{f_j}),~\forall j\in [2]\right\} \).

In Sect. 3, we will discuss how Proposition 1 can be used to provide the convex hull description of a joint mixing set \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\).

We next highlight a slight generalization of Proposition 1 that is of interest for studying \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). Observe that \(Q_{f_1,\ldots ,f_k}\) is defined by multiple submodular constraints with independent continuous variables \(y_j\). We can replace this independence condition by a certain type of dependence. Consider the following mixed-integer set:

where \({{\varvec{a}}}_{\mathbf{1}},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}\in {{\mathbb {R}}}_+^k\setminus \{\varvec{0}\}\) and \(f_1,\ldots , f_m:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) are submodular functions. Here, m can be larger than k, so \({{\varvec{a}}}_{\mathbf{1}},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}\) need not be linearly independent. Now consider \(\varvec{\alpha }=\sum _{j\in [m]}c_j {{\varvec{a}}}_{{\varvec{j}}}\) for some \(\varvec{c}\in {{\mathbb {R}}}_+^m\). Notice that \(f_{\varvec{\alpha }}\ge \sum _{j\in [m]}c_jf_j\) where \(f_{\varvec{\alpha }}:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) is defined as

Definition 1

We say that \({{\varvec{a}}}_{\mathbf{1}}^\top \varvec{y},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}^\top \varvec{y}\) are weakly independent with respect to \(f_1,\ldots ,f_m\) if for any \(\varvec{\alpha }=\sum _{j\in [m]}c_j{{\varvec{a}}}_{{\varvec{j}}}\) with \(\varvec{c}\in {{\mathbb {R}}}_+^m\), we have \(f_{\varvec{\alpha }}=\sum _{j\in [m]}c_jf_j\).

It is straightforward to see that if \({{\varvec{a}}}_{\mathbf{1}},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}\) are distinct unit vectors, i.e., \(m=k\) and \({{\varvec{a}}}_{{\varvec{j}}}^\top \varvec{y}=y_j\) for \(j\in [k]\), then \({{\varvec{a}}}_{\mathbf{1}}^\top \varvec{y},\ldots , {{\varvec{a}}}_{{\varvec{m}}}^\top \varvec{y}\) are weakly independent. It is also easy to see that if \({{\varvec{a}}}_{\mathbf{1}},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}\) are linearly independent, then \({{\varvec{a}}}_{\mathbf{1}}^\top \varvec{y},\ldots ,{{\varvec{a}}}_{{\varvec{m}}}^\top \varvec{y}\) are weakly independent. Based on this definition, we have the following slight extension of Proposition 1.

Proposition 2

Let \({{{\mathcal {P}}}}\) be defined as in (5). If \({{\varvec{a}}}_{\mathbf{1}}^\top \varvec{y},\ldots , {{\varvec{a}}}_{{\varvec{m}}}^\top \varvec{y}\) are weakly independent with respect to \(f_1,\ldots ,f_m\), then

Proof

Define \({{{\mathcal {R}}}}:=\left\{ (\varvec{y},\varvec{z})\in {{\mathbb {R}}}^k\times [0,1]^n:~({{\varvec{a}}}_{{\varvec{j}}}^\top \varvec{y},\varvec{z})\in {{\,\mathrm{conv}\,}}(Q_{f_j}),~\forall j\in [m]\right\} \). It is clear that \({{\,\mathrm{conv}\,}}\left( {{{\mathcal {P}}}}\right) \subseteq {{{\mathcal {R}}}}\). For the direction \({{\,\mathrm{conv}\,}}\left( {{{\mathcal {P}}}}\right) \supseteq {{{\mathcal {R}}}}\), we need to show that any inequality \(\varvec{\alpha }^\top \varvec{y}+{\varvec{\beta }}^\top \varvec{z}\ge \gamma \) valid for \({{\,\mathrm{conv}\,}}\left( {{{\mathcal {P}}}}\right) \) is also valid for \({{{\mathcal {R}}}}\). To that end, take an inequality \(\varvec{\alpha }^\top \varvec{y}+{\varvec{\beta }}^\top \varvec{z}\ge \gamma \) valid for \({{\,\mathrm{conv}\,}}\left( {{{\mathcal {P}}}}\right) \). Note that every recessive direction of \({{\,\mathrm{conv}\,}}({{{\mathcal {P}}}})\) is of the form \((\varvec{r},\varvec{0})\) for some \(\varvec{r}\in {{\mathbb {R}}}^k\). Moreover, \((\varvec{r},\varvec{0})\) is a recessive direction of \({{\,\mathrm{conv}\,}}({{{\mathcal {P}}}})\) if and only if \(\varvec{r}\) satisfies \({{\varvec{a}}}_{{\varvec{j}}}^\top \varvec{r}\ge 0\) for all \(j\in [m]\). Since \(\varvec{\alpha }^\top \varvec{y}+{\varvec{\beta }}^\top \varvec{z}\ge \gamma \) is valid for \({{\,\mathrm{conv}\,}}\left( {{{\mathcal {P}}}}\right) \), \(\varvec{\alpha }^\top \varvec{r}\ge 0\) for every recessive direction \((\varvec{r},\varvec{0})\) of \({{\,\mathrm{conv}\,}}({{{\mathcal {P}}}})\), and therefore, \(\varvec{\alpha }^\top \varvec{r}\ge 0\) holds for all \(\varvec{r}\in \{\varvec{r}\in {{\mathbb {R}}}^k:{{\varvec{a}}}_{{\varvec{j}}}^\top \varvec{r}\ge 0,~\forall j\in [m]\}\). Then, by Farkas’ lemma, there exists some \(\varvec{c}\in {{\mathbb {R}}}_+^m\) such that \(\varvec{\alpha }=\sum _{j\in [m]}c_j{{\varvec{a}}}_{{\varvec{j}}}\). Moreover, \(\varvec{\alpha }^\top \varvec{y}+{\varvec{\beta }}^\top \varvec{z}\ge \gamma \) is valid for

where \(f_{\varvec{\alpha }}\) is defined as in (6). Since \({{\varvec{a}}}_{\mathbf{1}}^\top \varvec{y},\ldots , {{\varvec{a}}}_{{\varvec{m}}}^\top \varvec{y}\) are weakly independent with respect to \(f_1,\ldots ,f_m\), it follows that \(f_{\varvec{\alpha }}=\sum _{j\in [m]}c_jf_j\), and therefore, \(f_{\varvec{\alpha }}\) is submodular. Then it is not difficult to see that

Therefore, to show that \(\varvec{\alpha }^\top \varvec{y}+{\varvec{\beta }}^\top \varvec{z}\ge \gamma \) is valid for \({{{\mathcal {R}}}}\), it suffices to argue that \({{{\mathcal {R}}}}\subseteq {{\,\mathrm{conv}\,}}({{{\mathcal {Q}}}})\). Let \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\in {{{\mathcal {R}}}}\). Then, by Theorem 2.1, it suffices to show that \(\varvec{\alpha }^\top {\bar{\varvec{y}}}\ge \varvec{\pi }^\top {\bar{\varvec{z}}} +f_{\varvec{\alpha }}(\emptyset )\) holds for every extreme point \(\varvec{\pi }\) of \(EP_{\tilde{f_{\varvec{\alpha }}}}\). To this end, take an extreme point \(\varvec{\pi }\) of \(EP_{\tilde{f_{\varvec{\alpha }}}}\). By Theorem 2.2, there exists a permutation \(\sigma \) of [n] such that \(\pi _{\sigma (t)}=f_{\varvec{\alpha }}(V_{t})-f_{\varvec{\alpha }}(V_{t-1})\) where \(V_{t}=\{\sigma (1),\ldots ,\sigma (t)\}\) for \(t\in [n]\) and \(V_{0}=\emptyset \). Now, for \(j\in [m]\), let \(\varvec{\varvec{\pi }^j}\in {{\mathbb {R}}}^n\) be the vector such that \(\varvec{\varvec{\pi }^j}_{\sigma (t)}=f_j(V_t)-f_j(V_{t-1})\) for \(t\in [n]\). Then, we have \(\varvec{\pi }=\sum _{j\in [m]}c_j\varvec{\varvec{\pi }^j}\) because \(f_{\varvec{\alpha }}=\sum _{j\in [m]}c_jf_j\). Moreover, by Theorem 2.2, \(\varvec{\varvec{\pi }^j}\) is an extreme point of \(EP_{\tilde{f_j}}\). Hence, due to our assumption that \(({{\varvec{a}}}_{{\varvec{j}}}^\top {\bar{\varvec{y}}},{\bar{\varvec{z}}})\in {{\,\mathrm{conv}\,}}(Q_{f_j})\), Theorem 2.1 implies \({{\varvec{a}}}_{{\varvec{j}}}^\top {\bar{\varvec{y}}}\ge (\varvec{\varvec{\pi }^j})^\top {\bar{\varvec{z}}}+\pi _j(\emptyset )\) is valid for all \(j\in [m]\). Since \(\varvec{\alpha }^\top {\bar{\varvec{y}}}\ge \varvec{\pi }^\top {\bar{\varvec{z}}} +f_{\varvec{\alpha }}(\emptyset )\) is obtained by adding up \({{\varvec{a}}}_{{\varvec{j}}}^\top {\bar{\varvec{y}}}\ge (\varvec{\varvec{\pi }^j})^\top {\bar{\varvec{z}}}+\pi _j(\emptyset )\) for \(j\in [m]\), it follows that \(\varvec{\alpha }^\top {\bar{\varvec{y}}}\ge \varvec{\pi }^\top {\bar{\varvec{z}}} +f_{\varvec{\alpha }}(\emptyset )\) is valid, as required. We just have shown that \({{{\mathcal {R}}}}\subseteq {{\,\mathrm{conv}\,}}({{{\mathcal {Q}}}})\), thereby completing the proof. \(\square \)

In Sect. 5, we will use Proposition 2 to study the convex hull of \({{{\mathcal {M}}}}(\varvec{W},{\mathbf {0}},\varepsilon )\), i.e., a joint mixing set with a linking constraint. Again, the submodularity assumption on \(f_1,\ldots ,f_m\) is important in Proposition 2. Recall that Example 1 demonstrates that in Proposition 2 even when m is taken to be equal to k and the vectors \({{\varvec{a}}}_{{\varvec{j}}}\in {{\mathbb {R}}}_+^k\setminus \{\mathbf{0}\}\), \(j\in [m]=[k]\), are taken to be the unit vectors in \({{\mathbb {R}}}^k\), the statement does not hold if one of the functions \(f_j\) is not submodular.

3 Mixing inequalities and joint mixing sets

In this section, we establish that mixing sets with binary variables are indeed nothing but the epigraphs of certain submodular functions. In addition, through this submodularity lens, we prove that the well-known mixing (or star) inequalities for mixing sets are nothing but the extremal polymatroid inequalities.

Recall that a joint mixing set with lower bounds \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\), where \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\), \(\varvec{\ell }\in {{\mathbb {R}}}_+^k\) and \(\varepsilon \ge 0\), is defined by (1). In this section, we study the case when \(\varepsilon =0\), and characterize the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) for any \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\) and \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\). As corollaries, we prove that the famous star/mixing inequalities are in fact polymatroid inequalities, and we recover the result of Atamtürk et al. [5, Theorem 3] on joint mixing sets \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\).

Given a matrix \(\varvec{W}=\{w_{i,j}\}\in {{\mathbb {R}}}^{n\times k}_+\) and a vector \(\varvec{\ell }\in {{\mathbb {R}}}_+^k\), we define the following mixed-integer set:

where

Remark 1

By definition, \((\varvec{y},\varvec{z})\in {{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) if and only if \((\varvec{y},\varvec{1}-\varvec{z})\in {{{\mathcal {P}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). Thus, the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) can be obtained after taking the convex hull of \({{{\mathcal {P}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) and complementing the z variables.

For \(j\in [k]\), we define

Then, the set \({{{\mathcal {P}}}}(\varvec{W},\varvec{\ell },0)\) admits a representation as the intersection of epigraphs of the functions \(f_j(\varvec{z})\):

We next establish that the functions \(f_j(\varvec{z}), j\in [k]\) are indeed submodular.

Lemma 1

Let \(\varvec{\ell }\in {{\mathbb {R}}}_+^k\). For each \(j\in [k]\), the function \(f_j\) defined as in (11) satisfies \(f_j(\emptyset )=\ell _j\) and it is submodular.

Proof

Let \(j\in [k]\). Notice that \(f_j(\emptyset )=f_j(\varvec{0})=\max \left\{ \ell _j,0\right\} =\ell _j\). In order to establish the submodularity of \(f_j\), for ease of notation, we drop the index j and use f to denote \(f_j\). As before, for each \(V\subseteq [n]\), let f(V) be defined as \(f(\varvec{1}_V)\) where \(\varvec{1}_V\in \{0,1\}^n\) denotes the characteristic vector of V. Consider two sets \(U,V\subseteq [n]\). By definition of f, we have \(\max \{f(U),f(V)\}=f(U\cup V)\), and \(\min \{f(U),f(V)\}\ge f(U\cap V)\). Then we immediately get

thereby proving that \(f_j\) is submodular, as required. \(\square \)

Corollary 2

Let \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\) and \(f_j\) be as defined in (11). Then,

i.e., the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) is given by the extremal polymatroid inequalities of particular submodular functions.

Proof

We deduce from Proposition 1 that

which immediately implies the desired relation via Remark 1 and Theorem 2.1 since the constraint \((y_j,\varvec{1}-\varvec{z})\in {{\,\mathrm{conv}\,}}(Q_{f_j})\) is equivalent to the set of the corresponding extremal polymatroid inequalities. \(\square \)

Corollary 2 establishes a strong connection between the mixing sets with binary variables and the epigraphs of submodular functions, and implies that the convex hull of joint mixing sets are given by the extremal polymatroid inequalities. To the best of our knowledge this connection between mixing sets with binary variables and submodularity has not been identified in the literature before.

An explicit characterization of the convex hull of a mixing set with binary variables in the original space has been studied extensively in the literature. Specifically, Atamtürk et al. [5] gave the explicit characterization of \({{\,\mathrm{conv}\,}}({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0))\) in terms of the so called mixing (star) inequalities. Let us state the definition of these inequalities here.

Definition 2

We call a sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) of indices in [n] a j-mixing-sequence if \(w_{j_1,j}\ge w_{j_2,j}\ge \cdots \ge w_{j_\tau , j}\ge \ell _j\).

For \(\varvec{W}=\{w_{i,j}\}\in {{\mathbb {R}}}^{n\times k}_+\) and \(\varvec{\ell }\in {{\mathbb {R}}}_+^k\), the mixing inequality derived from a j-mixing-sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) is defined as the following (see [13, Section 2]):

where \(w_{j_{\tau +1},j}:=\ell _j\) for convention. Atamtürk et al. [5, Proposition 3] showed that the inequality (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) for any j-mixing-sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) is valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) when \(\varvec{\ell }=\varvec{0}\). Luedtke [22, Theorem 2] later observed that the inequality (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) for any j-mixing-sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) is valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) for any \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\).

Given these results from the literature on the convex hull characterizations of mixing sets and Corollary 2, it is plausible to think that there must be a strong connection between the extremal polymatroid inequalities and the mixing (star) inequalities (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)). We next argue that the extremal polymatroid inequalities given by the constraint \((y_j,\varvec{1}-\varvec{z})\in {{\,\mathrm{conv}\,}}(Q_{f_j})\) are precisely the mixing (star) inequalities.

Proposition 3

Let \(\varvec{W}=\{w_{i,j}\}\in {{\mathbb {R}}}^{n\times k}_+\) and \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\). Consider any \(j\in [k]\). Then, for every extreme point \(\varvec{\pi }\) of \(EP_{{\tilde{f}}_j}\), there exists a j-mixing-sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) in [n] that satisfies the following:

-

(1)

\(w_{j_1,j}=\max \left\{ w_{i,j}:i\in [n]\right\} \),

-

(2)

the corresponding polymatroid inequality \(y_j +\sum _{i\in [n]}\pi _i z_i \ge \ell _j +\sum _{i\in [n]}\pi _i\) is equivalent to the mixing inequality (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) derived from the sequence \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\).

In particular, for any \(j\in [k]\), the extremal polymatroid inequality is of the form

where \(w_{j_1,j}=\max \left\{ w_{i,j}:i\in [n]\right\} \) and \(w_{j_{\tau +1},j}:=\ell _j\).

Proof

By Theorem 2.2, there exists a permutation \(\sigma \) of [n] such that \(\pi _{\sigma (t)}=f_j(V_{t})-f_j(V_{t-1})\) where \(V_{t}=\{\sigma (1),\ldots ,\sigma (t)\}\) for \(t\in [n]\) and \(V_{0}=\emptyset \). By definition of \(f_j\) in (11), we have \(\ell _j=f_j(V_0)\le f_j(V_1)\le \cdots \le f_j(V_n)\), because \(\emptyset =V_0\subset V_1\subset \cdots \subset V_n\). Let \(\{t_1,\ldots ,t_\tau \}\) be the collection of all indices t satisfying \(f_j(V_{t-1})<f_j(V_t)\). Without loss of generality, we may assume that \(w_{\sigma (t_1),j}\ge \cdots \ge w_{\sigma (t_\tau ), j}\). Notice that \(w_{\sigma (t_\tau ), j}>\ell _j\), because \(f_j(V_{t_\tau })>f_j(V_{t_{\tau }-1})\ge \ell _j\). Then, after setting \(j_s=\sigma (t_s)\) for \(s\in [\tau ]\), it follows that \(\{j_1\rightarrow \cdots \rightarrow j_\tau \}\) is a j-mixing-sequence. Moreover, we have \(w_{j_1,j}=f_j(V_{t_1})=f_j([n])=\max \left\{ w_{i,j}:i\in [n]\right\} \). Therefore, we deduce that \(\pi _i=w_{j_s,j}-w_{j_{s+1},j}\) if \(i=\sigma (t_s)=j_s\) for some \(s\in [\tau ]\) and \(\pi _i=0\) otherwise. \(\square \)

As the name “mixing” inequalities is more commonly used in the literature than “star” inequalities, we will stick to the term “mixing” hereinafter to denote the inequalities of the form (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) or (\(\text {Mix}_{\varvec{W},\varvec{\ell }}^*\)).

Proposition 1 and consequently Corollary 2 imply that, for any facet defining inequality of the set \({{\,\mathrm{conv}\,}}({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0))\), there is a corresponding extremal polymatroid inequality. Proposition 3 implies that mixing inequalities are nothing but the extremal polymatroid inequalities. Therefore, an immediate consequence of Corollary 2 and Proposition 3 is the following result.

Theorem 3.1

Given \(\varvec{W}=\{w_{i,j}\}\in {{\mathbb {R}}}^{n\times k}_+\) and any \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\), the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) is described by the mixing inequalities of the form (\(\text {Mix}_{\varvec{W},\varvec{\ell }}^*\)) for \(j\in [k]\) and the bounds \(\varvec{0}\le \varvec{z}\le \varvec{1}\).

A few remarks are in order.

Remark 2

First, note that Luedtke et al. [24, Theorem 2] showed the validity of inequality (\(\text {Mix}_{\varvec{W},\varvec{\ell }}^*\)) and its facet condition for a particular choice of \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\) in the case of \(k=1\). Also, recall that \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0)\) is called a joint mixing set, and Atamtürk et al. [5, Theorem 3] proved that \({{\,\mathrm{conv}\,}}({{{\mathcal {M}}}}(\varvec{W},\varvec{0},0))\) is described by the mixing inequalities and the bound constraints \(\varvec{y}\ge \varvec{0}\) and \(\varvec{z}\in [0,1]^n\). Since Theorem 3.1 applies to \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) for arbitrary \(\varvec{\ell }\), it immediately extends [5, Theorem 3] and further extends the validity inequality component of Luedtke et al. [24, Theorem 2].

Remark 3

Our final remark is that, since the mixing inequalities (\(\text {Mix}_{\varvec{W},\varvec{\ell }}^*\)) for \(j\in [k]\) are polymatroid inequalities, they can be separated in \(O(k\,n\log n)\) time by a simple greedy algorithm, thanks to Corollary 1. This also matches the best known separation complexity of mixing inequalities [13].

4 Aggregated mixing inequalities

As discussed in Sect. 1, in order to make use of the knapsack constraint in the MIP formulation of joint CCPs via quantile cuts, we need to study the set \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) for general \(\varepsilon \ge 0\). Unfortunately, in contrast to our results in Sect. 3 for the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\), the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) for general \(\varepsilon \ge 0\) may be complicated; we will soon see this in Example 2. In this section, we introduce a new class of valid inequalities for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) for arbitrary \(\varepsilon \ge 0\). In Sects. 5.2 and 5.3, we identify conditions under which these new inequalities along with the original mixing inequalities are sufficient to give the complete convex hull characterization.

For general \(\varepsilon \ge 0\), \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\), given by (1), is a subset of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\), which means that any inequality valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },0)\) is also valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). In particular, Theorem 3.1 implies that the mixing inequalities of the form (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) are valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). However, unlike the \(\varepsilon =0\) case, we will see that the mixing inequalities are not sufficient to describe the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) if \(\varepsilon >0\).

We first present a simplification of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\). Although it is possible that \(w_{i,j}<\ell _j\) for some i, j when \(\varvec{W},\varvec{\ell }\) are arbitrary, we can reduce \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) to a set of the form \({{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon )\) for some \({\varvec{W}}^{\varvec{\ell }}=\left\{ w^{\varvec{\ell }}_{i,j}\right\} \in {{\mathbb {R}}}_+^{n\times k}\).

Lemma 2

Let \(\varvec{\ell }\in {{\mathbb {R}}}^k_+\). Then \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )=\left\{ (\varvec{y},\varvec{z})\in {{\mathbb {R}}}^k\times {{\mathbb {R}}}^n:~(\varvec{y}-\varvec{\ell },\varvec{z})\right. \left. \in {{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon ) \right\} \), where \({\varvec{W}}^{\varvec{\ell }}=\left\{ w_{i,j}^{\varvec{\ell }}\right\} \in {{\mathbb {R}}}_+^{n\times k}\) is the matrix whose entries are given by

Proof

By definition, \((\varvec{y}-\varvec{\ell },\varvec{z})\in {{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon )\) if and only if

and \((\varvec{y},\varvec{z})\) satisfies (1b)–(1d). Consider any \(j\in [k]\). If \(\ell _j> w_{i,j}\), then the constraint (12) becomes \(y_j\ge \ell _j\) and the inequality \(y_j+w_{i,j}z_i\ge w_{i,j}\) is a consequence of \(y_j\ge \ell _j\). On the other hand, if \(\ell _j\le w_{i,j}\), then (12) is equivalent to \(y_j+(w_{i,j}-\ell _j)z_i\ge w_{i,j}\), and therefore we have \(y_j\ge w_{i,j}\) when \(z_i=0\) and have \(y_j\ge \ell _j\) when \(z_i=1\). Then, in both cases, it is clear that

is equal to

because \(\ell _j\ge 0\). Hence, we have \((\varvec{y}-\varvec{\ell },\varvec{z})\in {{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon )\) if and only if \((\varvec{y},\varvec{z})\in {{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\), as required. \(\square \)

We deduce from Lemma 2 that

and thus the convex hull description of \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\) can be obtained by taking the convex hull of \({{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon )\). Moreover, any inequality \(\varvec{\alpha }^\top \varvec{y}+\varvec{\beta }^\top \varvec{z}\ge \gamma \) is valid for \({{{\mathcal {M}}}}({\varvec{W}}^{\varvec{\ell }},\varvec{0},\varepsilon )\) if and only if \(\varvec{\alpha }^\top (\varvec{y}-\varvec{\ell })+\varvec{\beta }^\top \varvec{z}\ge \gamma \) is valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{\ell },\varepsilon )\).

So, from now on, we assume that \(\varvec{\ell }=\varvec{0}\), and we work over \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) with \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\) and \(\varepsilon \ge 0\). Recall that \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\), which we call a joint mixing set with a linking constraint, is the mixed-integer set defined by

Let us begin with an example.

Example 2

Consider the following mixing set with a linking constraint, i.e., \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) with \(\varepsilon =7>0\).

Using PORTA [9], we derive the convex hull description of this set, which is given by

In this example, the inequalities \(y_1 +2z_1+2z_2+5z_3+z_4+3z_5\ge 13\) and \(y_2+2z_2+z_4+z_5\ge 4\) are examples of mixing inequalities from (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) that are facet-defining. Note that the five inequalities with \(y_1+y_2\) are not of the form (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)). Moreover, these non-mixing inequalities cannot be obtained by simply adding one mixing inequality involving \(y_1\) and another mixing inequality involving \(y_2\). The developments we present next on a new class of inequalities will demonstrate this point, and we will revisit this example again in Example 3.

The five inequalities with \(y_1+y_2\) in Example 2 admit a common interpretation. To explain them, take an integer \(\theta \in [n]\) and a sequence \(\Theta \) of \(\theta \) indices in [n] given by \(\{i_1\rightarrow i_2\rightarrow \cdots \rightarrow i_\theta \}\). Given two indices in the sequence \(i_p,i_q\), we say that \(i_p\) precedes \(i_q\) in \(\Theta \) if \(p< q\). Our description is based on the following definition.

Definition 3

Given a sequence \(\Theta \), a j-mixing-subsequence of \(\Theta \) is the subsequence \(\{j_1\rightarrow \cdots \rightarrow j_{\tau _j}\}\) of \(\Theta \) that satisfies the following property:

where we define \(\max \left\{ w_{i,j}:~i_\theta \text { precedes }i \text { in }\Theta \right\} =0\) for convention (\(i_\theta \) is the last element, so it precedes no element in \(\Theta \)).

Based on Definition 3, we deduce that the j-mixing-subsequence of \(\Theta \) is unique for each \(j\in [k]\) and admits a few nice structural properties as identified below.

Lemma 3

If \(\{j_1\rightarrow \cdots \rightarrow j_{\tau _j}\}\) is the j-mixing-subsequence of \(\Theta \), then \(j_{\tau _j}\) is always the last element \(i_\theta \) of \(\Theta \) and \(w_{j_1,j}\ge \cdots \ge w_{j_\tau , j}\ge 0\).

Proof

When \(p<q\), because \(j_p\) precedes \(j_q\) in \(\Theta \), it follows that \(w_{j_1,j}\ge \cdots \ge w_{j_{\tau _j},j}\ge 0\). The last element \(i_\theta \) always satisfies \(w_{i_\theta , j}\ge \max \left\{ w_{i,j}:~i_\theta \text { precedes } i\text { in } \Theta \right\} =0\). Therefore, \(i_\theta \) is part of the j-mixing-subsequence as its last element. \(\square \)

Given \(\Theta =\{i_1\rightarrow i_2\rightarrow \cdots \rightarrow i_\theta \}\), for any \(j\in [n]\), we denote by \(\Theta _j=\{j_1\rightarrow \cdots \rightarrow j_{\tau _j}\}\) the j-mixing-subsequence of \(\Theta \). By Definition 2 and Lemma 3, we deduce that \(\{j_1\rightarrow \cdots \rightarrow j_{\tau _j}\}\) is a j-mixing-sequence. Recall that for any j-mixing-sequence \(\{j_1\rightarrow \cdots \rightarrow j_{\tau _j}\}\), the corresponding mixing inequality (\(\text {Mix}_{\varvec{W},\varvec{\ell }}\)) is of the following form:

where \(w_{j_{\tau _j+1},j}:=0\), and it is valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\). In particular, when \(w_{j_1,j}=\max \{w_{i,j}:~i\in [n]\}\), (Mix) is

Also, for \(t\in [\theta ]\),

Then (Mix) can be rewritten as

In order to introduce our new class of inequalities, we define a constant \(L_{\varvec{W},\Theta }\) that depends on \(\varvec{W}\) and \(\Theta \) as follows:

Now we are ready to introduce our new class of inequalities.

Definition 4

Given a sequence \(\Theta =\{i_1\rightarrow i_2\rightarrow \cdots \rightarrow i_\theta \}\), let \(L_{\varvec{W},\Theta }\) be defined as in (16). Then, the aggregated mixing inequality derived from \(\Theta \) is defined as the following:

Remark 4

Since \(\min \left\{ \varepsilon ,~L_{\varvec{W},\Theta }\right\} \ge 0\), the aggregated mixing inequality (A-Mix) dominates what is obtained after adding up the mixing inequalities (Mix) for \(j\in [k]\).

Before proving validity of (A-Mix), we present an example illustrating how the aggregated mixing inequalities are obtained.

Example 3

We revisit the mixed-integer set in Example 2. Now take a sequence \(\Theta =\{2\rightarrow 1\rightarrow 3\}\). Then \(\{3\}\) and \(\{2\rightarrow 1\rightarrow 3\}\) are the 1-mixing-subsequence and 2-mixing-subsequence of \(\Theta \), respectively. Moreover,

In (14), we have \(\varepsilon =7\). Since \(\varepsilon \le L_{\varvec{W},\Theta }\), the corresponding (A-Mix) is

that is \(y_1+y_2+z_1+z_2+8z_3\ge 17\). In Example 2, the other four inequalities with \(y_1+y_2\) are also of the form (A-Mix), and they are derived from the sequences \(\left\{ 2\rightarrow 3\right\} \), \(\left\{ 3\rightarrow 2\right\} \), \(\left\{ 3\rightarrow 1\rightarrow 2\right\} \), and \(\left\{ 3\rightarrow 2\rightarrow 1\right\} \). So, in this example, the convex hull of (14) is obtained after applying the mixing inequalities (Mix) and the aggregated mixing inequalities (A-Mix).

We will next present the proof of validity of (A-Mix). To this end, the following lemma is useful. As the proof of this lemma is technical, we defer its proof to the appendix. Lemma 4 will be used again when proving Theorem 5.1.

Lemma 4

Let \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\in {{\mathbb {R}}}_+^k\times [0,1]^n\) be a point satisfying (13a)–(13c). If \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix) for all sequences contained in \(\left\{ i\in [n]:~{{\bar{z}}}_i<1\right\} \), then \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix) for all the other sequences as well.

Now we are ready to prove the following theorem:

Theorem 4.1

The aggregated mixing inequalities defined as in (A-Mix) are valid for \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) where \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\).

Proof

We will argue that every point in \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) with \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\) satisfies (A-Mix) for all sequences in [n]. To this end, take a point \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\in {{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\). Then, \({{\bar{\varvec{z}}}}\in \{0,1\}^n\) holds by definition of \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\). If \({\bar{\varvec{z}}}=\varvec{1}\), then \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix) if and only if \(\sum _{j\in [k]}{{\bar{y}}}_j\ge \min \left\{ \varepsilon ,~L_{\varvec{W},\Theta }\right\} \). Since \(\sum _{j\in [k]}{{\bar{y}}}_j\ge \varepsilon \), it follows that \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix). Thus, we may assume that \(\left\{ i\in [n]:~\bar{z}_i<1\right\} =\left\{ i\in [n]:~{{\bar{z}}}_i=0\right\} \) is nonempty. By Lemma 4, it is sufficient to show that \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix) for every sequence contained in the nonempty set \(\left\{ i\in [n]:~\bar{z}_i<1\right\} \). Take a nonempty sequence \(\Theta =\left\{ i_1\rightarrow \cdots \rightarrow i_\theta \right\} \) in \(\left\{ i\in [n]:~{{\bar{z}}}_i=0\right\} \). By our choice of \(\Theta \), we have \({{\bar{z}}}_{i_\theta }=0\), so \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies (A-Mix) if and only if

This inequality is precisely what is obtained by adding up the mixing inequalities (Mix) for \(j\in [k]\), and therefore, \(({\bar{\varvec{y}}},{\bar{\varvec{z}}})\) satisfies it, as required. \(\square \)

In Example 3, \(\varepsilon =7\) and \(L_{\varvec{W},\{2\rightarrow 1\rightarrow 3\}}=9\). It can also be readily checked that \(L_{\varvec{W},\{2\rightarrow 3\}}=L_{\varvec{W},\{3\rightarrow 2\}}=8\) and \(L_{\varvec{W},\{3\rightarrow 1\rightarrow 2\}}=L_{\varvec{W},\{3\rightarrow 2\rightarrow 1\}}=9\), which means \(\min \left\{ \varepsilon ,L_{\varvec{W},\Theta }\right\} =\varepsilon \) for the sequences corresponding to the five aggregated mixing inequalities in the convex hull description of (14). In general, the following holds:

Proposition 4

If \(\varepsilon \le L_{\varvec{W},\Theta }\), then the aggregated mixing inequality (A-Mix) obtained from \(\Theta \) dominates the linking constraint \(y_1+\cdots +y_k\ge \varepsilon \).

Proof

The inequality (A-Mix) is equivalent to

Since \(\sum _{s\in [\tau _j]} (w_{j_s,j}-w_{j_{s+1},j})=w_{j_1,j}\), we deduce by by Lemma 3 that for all \(j\in [k]\),

where the inequality follows from the facts that \(w_{j_s,j}-w_{j_{s+1},j}\ge 0\) for all \(j_s\in [\tau _j]\) and thus each summand is nonnegative, and the last equation follows from \(j_{\tau _j}=i_{\theta }\) and by our convention that \(w_{j_{\tau _j+1},j}=0\). Therefore, the following inequality is a consequence of (A-Mix):

Since \(0\le z_{i_\theta }\le 1\), its right-hand side is always greater than or equal to \(\min \left\{ \sum _{j\in [k]}w_{i_\theta , j},~\varepsilon \right\} \). Since \(\max \left\{ w_{i,j}:~i_\theta \text { precedes }i\text { in } \Theta \right\} =0\), it follows from the definition of \(L_{\varvec{W},\Theta }\) in (16) that \(\sum _{j\in [k]}w_{i_\theta , j}\ge L_{\varvec{W},\theta }\). Then, by our assumption that \(L_{\varvec{W},\Theta }\ge \varepsilon \), we have \(\min \left\{ \sum _{j\in [k]}w_{i_\theta , j},~\varepsilon \right\} =\varepsilon \), implying in turn that \(y_1+\cdots +y_k\ge \varepsilon \) is implied by (A-Mix), as required. \(\square \)

We next demonstrate that when \(\varepsilon \) is large, applying the aggregated mixing inequalities is not always enough to describe the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) via an example.

Example 4

The following set is the same as (14) in Examples 2 and 3 except that \(\varepsilon =9\).

Recall that \(L_{\varvec{W},\{2\rightarrow 3\}}=8\), so \(\varepsilon >L_{\varvec{W},\{2\rightarrow 3\}}\) in this case. As before, we obtain the convex hull description of (17) via PORTA [9]:

In this convex hull description, there are still two inequalities with \(y_1+y_2\), and it turns out that these are aggregated mixing inequalities. To illustrate, take a sequence \(\Theta =\{2\rightarrow 1\rightarrow 3\}\). We observed in Example 3 that \(\{3\}\) and \(\{2\rightarrow 1\rightarrow 3\}\) are the 1-mixing subsequence and the 2-mixing subsequence of \(\Theta \) and that \(L_{\varvec{W},\Theta }=9\). So, the corresponding aggregated mixing inequality (A-Mix) is \(y_1+y_2+z_1+z_2+6z_3\ge 17\). Similarly, we obtain \(y_1+y_2+2z_1+z_2+5z_3\ge 17\) from \(\{3\rightarrow 2\rightarrow 1\}\). However, unlike the system (14) in Example 2, there are facet-defining inequalities for the convex hull of this set other than the aggregated mixing inequalities, i.e., the first 6 inequalities in the above description of the convex hull have different coefficient structures on the y variables.

So, a natural question is: When are the mixing inequalities and the aggregated mixing inequalities sufficient to describe the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\)? Examples 2–4 suggest that whether or not the mixing and the aggregated mixing inequalities are sufficient depends on the value of \(\varepsilon \). In the next section, we find a necessary and sufficient condition for the sufficiency of the mixing and the aggregated mixing inequalities.

5 Joint mixing sets with a linking constraint

In this section, we study the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\), where \(\varvec{W}=\left\{ w_{i,j}\right\} \in {{\mathbb {R}}}_+^{n\times k}\) and \(\varepsilon \in {{\mathbb {R}}}_+\). More specifically, we focus on the question of when the convex hull of this set is obtained after applying the mixing inequalities and the aggregated mixing inequalities. By Remark 1, we have \((\varvec{y},\varvec{z})\in {{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) if and only if \((\varvec{y},\varvec{1}-\varvec{z})\in {{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\). In Sect. 3, we identified that \({{{\mathcal {P}}}}(\varvec{W},\varvec{\ell },0)\) defined as in (7) has an underlying submodularity structure (due to Lemma 1 and Proposition 1). In this section, we will first establish that \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\) has a similar submodularity structure for particular values of \(\varepsilon \). In fact, for those favorable values of \(\varepsilon \), we show that the mixing and the aggregated mixing inequalities are sufficient to describe the convex hull of \({{{\mathcal {M}}}}(\varvec{W},\varvec{0},\varepsilon )\) if and only if \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\) has the desired submodularity structure; this is the main result of this section.

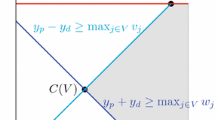

5.1 Submodularity in joint mixing sets with a linking constraint

In order to make a connection with submodularity, we first define the following functions \(f_1,\ldots ,f_k,g:\{0,1\}^n\rightarrow {{\mathbb {R}}}\): for \(\varvec{z}\in \{0,1\}^n\),

Then, we immediately arrive at the following representation of \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\).

Lemma 5

Let \(f_1,\ldots ,f_k,g:\{0,1\}^n\rightarrow {{\mathbb {R}}}\) be as defined in (18). Then,

Proof

We deduce the equivalence of the relations \(y_j\ge f_j(\varvec{z})\) for \(j\in [k]\) to the first set of constraints in \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\) from the corresponding definition of this set in (7). Also, we immediately have \(\sum _{j\in [k]}y_j\ge \max \left\{ \varepsilon ,\, \sum _{j\in [k]}f_j(\varvec{z}) \right\} \). The result then follows from the definition of the function g. \(\square \)

We would like to understand the convex hull of \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\) for \(\varvec{W}\in {{\mathbb {R}}}^{n\times k}_+\) and \(\varepsilon \in {{\mathbb {R}}}_+\) using Lemma 5. Observe that \(f_1,\ldots ,f_k\) defined in (18) coincide with the functions \(f_1,\ldots ,f_k\) defined in (11) for the \(\varvec{\ell }=\varvec{0}\) case. So, the following is a direct corollary of Lemma 1.

Corollary 3

For any \(j\in [k]\), the function \(f_j\) defined as in (18) is submodular and satisfies \(f_j(\emptyset )\ge 0\).

In contrast to the functions \(f_1,\ldots ,f_k\), the function g is not always submodular. However, we can characterize exactly when g is submodular in terms of \(\varepsilon \). For this characterization, we need to define several parameters based on \(\varvec{W}\) and \(\varepsilon \). For a given \(\varepsilon \), let \({{\bar{I}}}(\varepsilon )\) be the following subset of [n]:

Here, \({{\bar{I}}}(\varepsilon )\) is the collection of indices i with \(g(\{i\})=\varepsilon \). In Examples 2 and 4, we have \({{\bar{I}}}(\varepsilon )=\{4,5\}\).

Definition 5

We say that \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible if either

-

\({{\bar{I}}}(\varepsilon )=\emptyset \) or

-

\({{\bar{I}}}(\varepsilon )\ne \emptyset \) and \({{\bar{I}}}(\varepsilon )\) satisfies both of the following two conditions:

$$\begin{aligned}&\quad \max \limits _{i\in \bar{I}(\varepsilon )}\left\{ w_{i,j}\right\} \le w_{i,j}\text { for every } i\in [n]\setminus {{\bar{I}}}(\varepsilon )\text { and } j\in [k], \end{aligned}$$(C1)$$\begin{aligned}&\quad \sum _{j\in [k]}\max \limits _{i\in \bar{I}(\varepsilon )}\left\{ w_{i,j}\right\} \le \varepsilon . \end{aligned}$$(C2)

Example 5

In Example 2, it can be readily checked that \(\bar{I}(\varepsilon )\) satisfies (C1) and (C2), so \(I(\varepsilon )\) is \(\varepsilon \)-negligible. The matrix \(\varvec{W}\) of Example 4 is the same as that of Example 2, while the value of \(\varepsilon \) is higher in Example 4. Hence, \({{\bar{I}}}(\varepsilon )\) in Example 4 is also \(\varepsilon \)-negligible.

In Definition 5, (C2) imposes that \(g({{\bar{I}}}(\varepsilon ))=\varepsilon \), and (C1) requires that \(f_j(\{i\}\cup {{\bar{I}}}(\varepsilon ))=f_j(\{i\})\) for any \(i\in [n]\setminus {{\bar{I}}}(\varepsilon )\). In fact, we can argue that if \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible, \(\bar{I}(\varepsilon )\) does not affect the value of g; this is why we call this property \(\varepsilon \)-“negligibility." The following lemma formalizes this observation.

Lemma 6

Let g be as defined in (18). If \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible, then \(g(U)=g(U\setminus \bar{I}(\varepsilon ))\) for every \(U\subseteq [n]\).

Proof

Suppose \({{\bar{I}}}(\varepsilon )\) is nonempty and satisfies conditions (C1) and (C2). Take a subset U of [n]. If \(U\subseteq {{\bar{I}}}(\varepsilon )\), then \(g(U)\le g({{\bar{I}}}(\varepsilon ))\) because g is a monotone function. Since \(\sum _{j\in [k]}\max \nolimits _{i\in \bar{I}(\varepsilon )}\left\{ w_{i,j}\right\} \le \varepsilon \), we obtain \(g({{\bar{I}}}(\varepsilon ))=\varepsilon \) by definition of g in (18). So, \(g(U)=g(\emptyset )=\varepsilon \) in this case. If \(U\setminus {{\bar{I}}}(\varepsilon )\ne \emptyset \), then \(\sum _{j\in [k]}w_{p,j}>\varepsilon \) for some \(p\in U\), implying in turn that \(\sum _{j\in [k]}\max _{i\in U}\left\{ w_{i,j}\right\} >\varepsilon \). Moreover, as \(\bar{I}(\varepsilon )\) satisfies (C1), \(\sum _{j\in [k]}\max _{i\in U}\left\{ w_{i,j}\right\} =\sum _{j\in [k]}\max \nolimits _{i\in U\setminus {{\bar{I}}}(\varepsilon )}\left\{ w_{i,j}\right\} \), and therefore, \(g(U)=g(U\setminus {{\bar{I}}}(\varepsilon ))\), as required. \(\square \)

Next we show that \(\varepsilon \)-negligibility is necessary for g to be submodular.

Lemma 7

If the function g defined as in (18) is submodular, then \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible.

Proof

Assume that g is submodular. Suppose for a contradiction that \({{\bar{I}}}(\varepsilon )\) is not \(\varepsilon \)-negligible. Then \(\bar{I}(\varepsilon )\) is nonempty, and (C1) or (C2) is violated. Assume that \({{\bar{I}}}(\varepsilon )\) does not satisfy (C1). Then \(w_{q,j}>w_{p,j}\) for some \(j\in [k]\), \(p\in [n]\setminus {{\bar{I}}}(\varepsilon )\) and \(q\in \bar{I}(\varepsilon )\). By our choice of q, we have \(g(\left\{ q\right\} )=\varepsilon \). Moreover, \(w_{q,j}>w_{p,j}\) implies that \(g(\left\{ p,q\right\} )=\sum _{j\in [k]}\max \{w_{p,j},w_{q,j}\}>\sum _{j\in [k]}w_{p,j}=g(\left\{ p\right\} )\). Since \(g(\emptyset )=\varepsilon \), it follows that \(g(\{p\})+g(\{q\})<g(\emptyset )+g(\{p,q\})\), a contradiction to the submodularity of g. Thus, we may assume that \({{\bar{I}}}(\varepsilon )\) does not satisfy (C2). Then \(\sum _{j\in [k]}\max \nolimits _{i\in \bar{I}(\varepsilon )}\left\{ w_{i,j}\right\} >\varepsilon \), so \(g(\bar{I}(\varepsilon ))=\sum _{j\in [k]}\max \nolimits _{i\in \bar{I}(\varepsilon )}\left\{ w_{i,j}\right\} \). Now take a minimal subset I of \({{\bar{I}}}(\varepsilon )\) with \(g(I)>\varepsilon \). Since \(I\subseteq {{\bar{I}}}(\varepsilon )\) and \(g(I)>\varepsilon \), we know that \(|I|\ge 2\). That means that one can find two nonempty subsets U, V of I partitioning I. By our minimal choice of I, we have \(g(U)=g(V)=\varepsilon \), but this indicates that \(g(U)+g(V)<g(\emptyset )+g(I)=g(U\cap V)+g(U\cup V)\), a contradiction to the submodularity of g. Therefore, \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible. \(\square \)

On the other hand, it turns out that \(\varepsilon \)-negligibility alone does not always guarantee that g is submodular. If \(\bar{I}(\varepsilon )=[n]\), \({{\bar{I}}}(\varepsilon )\) being \(\varepsilon \)-negligible means that \(g(U)=g(\emptyset )=\varepsilon \) for every \(U\subseteq [n]\) and thus g is clearly submodular. However, when \({{\bar{I}}}(\varepsilon )\) is a strict subset of [n], g may not necessarily be submodular even though \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible.

Example 6

In Example 4, we have observed that \(\bar{I}(\varepsilon )=\{4,5\}\) and \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible. By definition, we have \(g(\emptyset )=\varepsilon =9\). Since \(2,3\notin {{\bar{I}}}(\varepsilon )\), we have that \(g(\{2\})=w_{2,1} +w_{2,2}=10\), \(g(\{3\})=w_{3,1}+w_{3,2}=15\), and \(g(\{2,3\})=\max \{w_{2,1},w_{3,1}\}+\max \{w_{2,2}+w_{3,2}\}=17\). Then \(g(\{2\})+g(\{3\})=25\) is less than \(g(\{2,3\})+g(\emptyset )=26\), so g is not submodular.

In order to understand when the function g is submodular, let us take a closer look at Example 6. In this example, \(g(\{2\})+g(\{3\})-g(\{2,3\})\) is equal to \(\min \{w_{2,1},w_{3,1}\}+\min \{w_{2,2}+w_{3,2}\}\), and this value is less than \(\varepsilon =g(\emptyset )\), implying that g is not submodular. In general, for any distinct indices \(p,q\in [n]\setminus {{\bar{I}}}(\varepsilon )\),

and this quantity needs to be greater than or equal to \(\varepsilon =g(\emptyset )\) for g to be submodular. To formalize this, we define another parameter \(L_{\varvec{W}}(\varepsilon )\in {{\mathbb {R}}}_+\) as follows:

Example 7

In Example 2, we have \({{\bar{I}}}(\varepsilon )=\{4,5\}\) and \(L_{\varvec{W}}(\varepsilon )=w_{2,1}+w_{3,2}=8\). Moreover, as \(\bar{I}(\varepsilon )=\{4,5\}\) in Example 4 as well, we still have \(L_{\varvec{W}}(\varepsilon )=8\) in Example 4.

Lemma 8

If the function g defined as in (18) is submodular, then \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\).

Proof

Suppose for a contradiction that \(\varepsilon >L_{\varvec{W}}(\varepsilon )\). Then, \(L_{\varvec{W}}(\varepsilon )\ne \infty \), implying \(\bar{I}(\varepsilon )\ne [n]\) and \(\varepsilon >\sum _{j\in [k]}\min \left\{ w_{p,j},~w_{q,j}\right\} \) for some \(p,q\in [n] \setminus {{\bar{I}}}(\varepsilon )\). Moreover, because both \(\sum _{j\in [k]} w_{p,j}\) and \(\sum _{j\in [k]}w_{q,j}\) are greater than \(\varepsilon \), we deduce that p and q are distinct. Then,

where the strict inequality follows from \(g(\emptyset )=\varepsilon \). This is a contradiction to the assumption that g is submodular. Hence, \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\), as required. \(\square \)

By Lemmas 7 and 8, both of the conditions that \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible and \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\) are necessary for the submodularity of g. In fact, we will next see that these two conditions are also sufficient to guarantee that g is submodular. So, whether the function g is submodular or not is determined entirely by \({{\bar{I}}}(\varepsilon )\) and \(L_{\varvec{W}}(\varepsilon )\).

Lemma 9

The function g defined as in (18) is submodular if and only if \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible and \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\).

Proof

(\(\Rightarrow \)): This direction is settled by Lemmas 7 and 8.

(\(\Leftarrow \)): Assume that \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible and \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\). We will show that \(g(U)+g(V)\ge g(U\cup V)+g(U\cap V)\) for every two sets \(U,V\subseteq [n]\). If \({{\bar{I}}}(\varepsilon )=[n]\), then we have \(g(U)=\varepsilon \) for every subset U of [n] due to (C2). Thus, we may assume that \(\bar{I}(\varepsilon )\ne [n]\). By Lemma 6, for every two subsets \(U,V\subseteq [n]\), \(g(U)+g(V)\ge g(U\cup V)+g(U\cap V)\) holds if and only if \(g\left( U^\prime \right) +g\left( V^\prime \right) \ge g\left( U^\prime \cup V^\prime \right) +g\left( U^\prime \cap V^\prime \right) \), where \(U^\prime :=U\setminus {{\bar{I}}}(\varepsilon )\) and \(V^\prime :=V\setminus {{\bar{I}}}(\varepsilon )\), holds. This means that it is sufficient to consider subsets of \([n]\setminus \bar{I}(\varepsilon )\). Consider two sets \(U,V\subseteq [n]\setminus \bar{I}(\varepsilon )\). If \(U=\emptyset \) or \(V=\emptyset \), the inequality trivially holds due to the monotonicity of g. So, we may assume that \(U,V\ne \emptyset \). First, suppose that \(U\cap V\ne \emptyset \). Because \(U,V\subseteq [n]\setminus {{\bar{I}}}(\varepsilon )\), we deduce that \(g(X)=\sum _{j\in [k]}f_j(X)\) for any \(X\in \{U,V,U\cup V,U\cap V\}\). Then, Corollary 3 implies that \(g(U)+g(V)\ge g(U\cup V)+g(U\cap V)\). Now, consider the case of \(U\cap V=\emptyset \). Note that for each \(j\in [k]\), the definition of \(f_j(V)=\max _{i\in V}\{w_{i,j} \}\) implies that

Hence, we have

So, it suffices to argue that \(\sum _{j\in [k]} \min \{f_j(U),f_j(V)\}\ge g(\emptyset )=\varepsilon \). Since \(U,V\ne \emptyset \) and \(U\cap V=\emptyset \), there exist distinct \(p,q\in [n]\setminus {{\bar{I}}}(\varepsilon )\) such that \(p\in U\) and \(q\in V\). Then \(f_j(U)\ge f_j(\{p\})=w_{p,j}\) and \(f_j(V)\ge f_j(\{q\})=w_{q,j}\), implying in turn that

where the last inequality follows from the definition of \(L_{\varvec{W}}(\varepsilon )\) in (22). Finally, our assumption that \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\) implies that \(\sum _{j\in [k]} \min \{f_j(U),f_j(V)\}\ge \varepsilon \) as desired. \(\square \)

Therefore, Lemma 9, along with Corollary 3, establish that \(f_1,\ldots ,f_k\) and g are submodular when \({{\bar{I}}}(\varepsilon )\) is \(\varepsilon \)-negligible and \(\varepsilon \le L_{\varvec{W}}(\varepsilon )\). Note that \({{\bar{I}}}(\varepsilon )\) can be found in O(kn) time and that \(L_{\varvec{W}}(\varepsilon )\) can be computed in \(O(kn^2)\) time, so testing whether g is submodular can be done in polynomial time.

In Sect. 4, we introduced the parameter \(L_{\varvec{W},\Theta }\) that depends on \(\varvec{W}\) and a sequence \(\Theta \) of indices in [n] to define the aggregated mixing inequality (A-Mix) derived from \(\Theta \). The following lemma illustrates a relationship between \(L_{\varvec{W}}(\varepsilon )\) and \(L_{\varvec{W},\Theta }\):

Lemma 10

If \({{\bar{I}}}(\varepsilon )\ne [n]\), then \(L_{\varvec{W}}(\varepsilon )=\min _{\Theta }\big \{L_{\varvec{W},\Theta }:~\Theta ~\text {is a nonempty sequence in} [n]\setminus {{\bar{I}}}(\varepsilon )\big \}\).

Proof

Take a nonempty sequence \(\Theta \) in \([n]\setminus \bar{I}(\varepsilon )\). When \(\Theta =\{r\}\) for some \(r\in [n]\setminus \bar{I}(\varepsilon )\), \(L_{\varvec{W},\Theta }=\sum _{j\in [k]}w_{r,j}\), so \(L_{\varvec{W}}(\varepsilon )\le L_{\varvec{W},\Theta }\) in this case. When \(\Theta =\{i_1\rightarrow \cdots \rightarrow i_\theta \}\) with \(\theta \ge 2\), for any \(s\in [\theta ]\) we have

where \(w_{i_{\theta +1},j}\) is set to 0 for convention. Then it follows from the definition of \(L_{\varvec{W},\Theta }\) in (16) that \(L_{\varvec{W},\Theta }\ge \min \left\{ \sum _{j\in [k]}\min \left\{ w_{i_s,j},~w_{i_{s+1},j}\right\} :~s\in [\theta ]\right\} \). Consequently, from the definition of \(L_{\varvec{W}}(\varepsilon )\), we deduce that \(L_{\varvec{W}}(\varepsilon )\le L_{\varvec{W},\Theta }\). In both cases, we get \(L_{\varvec{W}}(\varepsilon )\le L_{\varvec{W},\Theta }\).

Now it remains to show  . Since \({{\bar{I}}}(\varepsilon )\ne [n]\), either there exist distinct \(p,q\in [n]\setminus {{\bar{I}}}(\varepsilon )\) such that \(L_{\varvec{W}}(\varepsilon )=\sum _{j\in [k]}\min \left\{ w_{p,j},~w_{q,j}\right\} =L_{\varvec{W},\{p\rightarrow q\}}\) or there exists \(r\in [n]\setminus {{\bar{I}}}(\varepsilon )\) such that \(L_{\varvec{W}}(\varepsilon )=\sum _{j\in [k]}w_{r,j}=L_{\varvec{W},\{r\}}\), implying in turn that \(L_{\varvec{W}}(\varepsilon )\ge L_{\varvec{W},\Theta }\) for some nonempty sequence \(\Theta \) in \([n]\setminus \bar{I}(\varepsilon )\), as required. \(\square \)

. Since \({{\bar{I}}}(\varepsilon )\ne [n]\), either there exist distinct \(p,q\in [n]\setminus {{\bar{I}}}(\varepsilon )\) such that \(L_{\varvec{W}}(\varepsilon )=\sum _{j\in [k]}\min \left\{ w_{p,j},~w_{q,j}\right\} =L_{\varvec{W},\{p\rightarrow q\}}\) or there exists \(r\in [n]\setminus {{\bar{I}}}(\varepsilon )\) such that \(L_{\varvec{W}}(\varepsilon )=\sum _{j\in [k]}w_{r,j}=L_{\varvec{W},\{r\}}\), implying in turn that \(L_{\varvec{W}}(\varepsilon )\ge L_{\varvec{W},\Theta }\) for some nonempty sequence \(\Theta \) in \([n]\setminus \bar{I}(\varepsilon )\), as required. \(\square \)

We have shown in Sect. 3 that the polymatroid inequalities corresponding to the functions \(f_1,\ldots ,f_k\) are mixing inequalities. Although g is not always submodular, we now have a complete characterization of when g is submodular. In the next subsection, we show that when g is indeed submodular, the corresponding polymatroid inequalities are aggregated mixing inequalities.

5.2 Polymatroid inequalities and aggregated mixing inequalities

Consider \({{{\mathcal {P}}}}(\varvec{W},\varvec{0},\varepsilon )\) with \(\varvec{W}\in {{\mathbb {R}}}_+^{n\times k}\) and \(\varepsilon \in {{\mathbb {R}}}_+\). Then, from Lemma 5 we deduce that