Abstract

We study a substructure appearing in mixed-integer programming reformulations of chance-constrained programs with stochastic right-hand-sides over a finite discrete distribution, which we call the mixing set with a knapsack constraint. Recently, Luedtke et al. (Math. Program. 122(2):247–272, 2010) and Küçükyavuz (Math Program 132(1):31–56, 2012) studied valid inequalities for such sets. However, most of their results were focused on the equal probabilities case (when the knapsack constraint reduces to a cardinality constraint). In this paper, we focus on the general probabilities case (general knapsack constraint). We characterize the valid inequalities that do not come from the knapsack polytope and use this characterization to generalize the results previously derived for the equal probabilities case. Our results allow for a deep understanding of the relationship that the set under consideration has with the knapsack polytope. Moreover, they allow us to establish benchmarks that can be used to identify when a relaxation will be useful for the considered types of reformulations of chance-constrained programs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many optimization problems in real world applications allow to some extent a number of violated constraints, which results in a decrease in the quality of service, as well as a decrease in the cost of production. These optimization problems have been a main motive to study probabilistic (in particular, chance-constrained) programming. A difficulty when dealing with these optimization problems is that the feasible region is not necessarily convex. In this paper, we consider mixed-integer programming (MIP) reformulations of chance-constrained programs with joint probabilistic constraints in which the right-hand-side vector is random with a finite discrete distribution.Footnote 1 This model was first proposed in Sen [16], studied in Ruszczyński [15], and extended by Luedtke et. al [14] and Küçükyavuz [12]. This reformulation gives rise to a mixing-type set [10] subject to an additional knapsack constraint, which is the focus of this paper.Footnote 2

Formally, consider the following chance-constrained programming problem

where X is a polyhedron, B is a matrix with d rows, \(\xi \) is a random variable in \(\mathbb {R}^d\) with finite discrete distribution, \(\epsilon \in (0,1)\), and c is an arbitrary cost vector. Let \(\xi \) take values \(\xi ^1,\ldots ,\xi ^n\) with probabilities \(\pi _1,\ldots ,\pi _n\), respectively. We may assume without loss of generality that \(\xi ^j\ge 0\), for all \(j\in [n]:=\{1,\ldots ,n\}\) (by a mere simple linear transformation). Thus, since \(\epsilon <1\), \(Bx\ge 0\) for every feasible solution x to (PLP). By definition, for each \(j\in [n]\), \(\pi _j>0\) and \(\sum _{j=1}^{n}{\pi _j}=1\). We can reformulate the chance constraint in (PLP) using linear inequalities and auxiliary binary variables as follows: let \(z\in \{0,1\}^n\) where \(z_j=0\) guarantees that \(Bx\ge \xi ^j\). Then (PLP) is equivalent to

We may assume for all \(j\in [n]\) that \(\pi _j\le \epsilon \). Indeed, if \(\pi _j>\epsilon \) for some \(j\in [n]\), then \(z_j=0\) for all feasible solutions (x, y, z) to the above system, so we may as well drop the index j. Now let

Then (PLP) can be rewritten as

This motivates us to study the set \(\mathcal {D}\). For each \(\kappa \in [d]\), let

Then observe that

Therefore, instead of \(\mathcal {D}\), we study the lower dimensional sets \(\mathcal {D}_\kappa \).

Fix \(\kappa \in [d]\) and for notational convenience, let \(h_j:=\xi ^j_{\kappa }\) for each \(j\in [n]\). Let \(\sum _{j\in [n]}{a_j z_j}\le p\) be a valid inequality for \(\mathcal {D}_\kappa \) where \(a\in \mathbb {R}_{+}^{n}\), \(p\in \mathbb {R}_+\), \(a_j\le p\) for all \(j\in [n]\), and \(\sum _{j\in [n]}a_j>p\). Observe that this inequality may be the knapsack constraint \(\sum _{j=1}^n{\pi _jz_j}\le \epsilon \). In this paper, we focus on the set

Specifically, we try to understand the convex hull of Q. We assume without loss of generality that \(h_1 \ge h_2 \ge \cdots \ge h_n\ge 0\).

Note that the assumption that \(a_j\le p\) for all \(j\in [n]\) implies that Q is a full-dimensional set. (The points \((h_1+1,0), (h_1,e_1),\ldots ,(h_1,e_n)\) are in Q, where \(e_j\) is the \(j^\text {th}\) n-dimensional unit vector.) Also, the assumption that \(\sum _{j\in [n]}a_j>p\) implies that \(y\ge h_n\), for all \(y\in Q\). Observe that the set Q contains as a substructure the intersection of a mixing-type set, introduced by Günlük and Pochet [10], and a knapsack constraint (hence the title of our paper). Various structural properties of conv(Q) were studied in [12] and [14] when the knapsack constraint \(\sum _{j\in [n]}a_jz_j\le p\) is a cardinality constraint. In Luedtke et al. [14], a characterization of all valid inequalities for conv(Q) was givenFootnote 3, and in both [12] and [14], explicit classes of facet-defining inequalities were introduced.

1.1 Our contributions

The main contribution of this paper is to generalize the results in [12] and [14]. In particular, we show that the extended formulation and polynomial time separation that these two papers obtain for the cardinality constrained case follow from the results that we establish for LP relaxations of the knapsack set. We also introduce a class of explicit facet-defining inequalities for conv(Q), and give a necessary condition on the constant right-hand-side term for any inequality to be facet-defining. Additionally, we provide a compact extended formulation for (CMIP) similar to the one given in [12]. Finally, we present computational experiments to illustrate how our results can be used to set benchmarks to identify when a relaxation is useful and worth a deeper investigation.

1.2 Outline

In this paper, we do not make any assumptions on the knapsack constraint. In Sect. 2 we characterize the set of all valid inequalities for conv(Q). In Sect. 3 we study how different relaxations of the knapsack polytope can be used to obtain relaxations of conv(Q). We discuss in Sect. 4 some properties of facet-defining inequalities of conv(Q) and introduce an explicit class of facet-defining inequalities. In Sect. 5 we provide a compact integer extended formulation for the whole set (CMIP) that we are interested in. Computational experiments are provided in Sect. 6 and a conclusion in Sect. 7.

2 A characterization of valid inequalities for conv(Q)

To start, let \(\nu :=\max \big \{k\in \mathbb {Z}:\sum _{j=1}^k a_j\le p\big \}\). Note \(0<\nu <n\). Define for each \(k\in \{0,1,\ldots ,\nu \}\) the knapsack set

and define \(\phi :\{0,1,\ldots ,\nu \}\times \mathbb {R}^n\rightarrow \mathbb {R}\), as follows: for \(0\le k\le \nu \) and \(\alpha \in \mathbb {R}^n\), let

Note \(\phi (k,\alpha )\le 0\).

The following theorem characterizes the set of all valid inequalities for conv(Q).

Theorem 1

Take \((\gamma , \alpha ,\beta )\in \mathbb {R}\times \mathbb {R}^n\times \mathbb {R}\). Then

is a valid inequality for conv(Q) if, and only if, \(\gamma \ge 0\) and

Proof

Suppose that (2) is a valid inequality of conv(Q) for some \((\gamma , \alpha ,\beta )\in \mathbb {R}\times \mathbb {R}^n\times \mathbb {R}\). Since \((1, \mathbf{0})\) is in the recession cone of conv(Q), it follows that \(\gamma \ge 0\). Take \(0\le k\le \nu \). Choose \(z^*\in P_k\) so that \(\sum _{j=k+1}^{n} {\alpha _j}z_j^*= \phi (k, \alpha )\). Let \(y^*=h_{k+1}\). Observe that \((y^*,z^*)\in Q\) as \(z^*_1=\cdots =z^*_k=1\). Hence, since (2) is valid for Q, it follows that

Since this holds for all \(0\le k\le \nu \), it follows that (3) holds.

Conversely, suppose that \(\gamma \ge 0\) and (3) holds. Let \((y^*,z^*)\in Q\). Let \(h_0:=+\infty \). Since \(\sum _{j=1}^{n}a_jz^*_j\le p\) there exists \(0\le k\le \nu \) for which \(z^*_1=\ldots =z^*_k=1\) but \(z^*_{k+1}=0\). Observe that \(z^*\in P_k\). Since \(z^*_{k+1}=0\) we get that \(y^*\ge h_{k+1}\), and so as \(\gamma \ge 0\) it follows that

as (3) holds. Therefore, (2) is valid for \((y^*,z^*)\), and since this is true for all \((y^*,z^*)\in Q\), (2) is a valid inequality for conv(Q). \(\square \)

The following proposition states that conv(Q) has all the facets of the knapsack polytope as a subset of its facets.

Proposition 2

The inequality

defines a facet of conv(Q) if and only if it defines a facet of \(conv(P_0)\)

Proof

The proof of this proposition is straightforward from Theorem 1 and the following facts: (i) any valid inequality for \(conv(P_0)\) is also valid for conv(Q) and conversely (ii) any inequality of the form (4) valid for conv(Q) is also valid for \(conv(P_0)\). \(\square \)

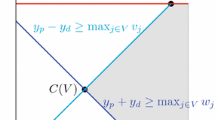

We end this section by collecting the coefficient vectors of all valid inequalities of conv(Q) as follows:

Following the terminology of [10], we refer to C as the coefficient polyhedron of Q. It is worth mentioning that the coefficient polyhedron C is reminiscent of the polar of a polyhedron.

3 A relaxation scheme for conv(Q)

The results in the previous section suggest that in order for us to describe conv(Q), we must be able to obtain the convex hull of the knapsack polytope. Since this is a far-stretched task in itself (see for instance [4, 5, 11, 17]), we consider in this section what happens if we have a polyhedral relaxation of the knapsack polytope.

For each \(0\le k\le \nu \), let \(R_k\) be a (strengthened) LP relaxation of \(P_k\) in the following sense: for some matrix \(A^k\) and column vector \(b^k\) we have \(R_k=\big \{z\in [0,1]^n: A^kz\le b^k\big \}\), \(R_k\cap \{0,1\}^n=P_k\), and

For each \(0\le k\le \nu \), define \(\varphi : \{0,1, \ldots , \nu \}\times \mathbb {R}^n\rightarrow \mathbb {R}\) as follows:

Observe that \(\varphi \) is a lower bound on \(\phi \) as \(P_k\subseteq R_k\). Let

and

Notice that \(C_{\varphi }\subseteq C\) and consequently, \(conv(Q)\subseteq Q_{\varphi }\). In words, what we are doing is picking polyhedral relaxations \(R_0,\ldots ,R_{\nu }\) of the knapsack sets \(P_0,\ldots ,P_{\nu }\) and defining a relaxation \(Q_\varphi \) of conv(Q) based on them, which is argued in the following proposition.

Proposition 3

\(Q_{\varphi }\) is an LP relaxation of Q, i.e. \(Q_{\varphi } \cap \big (\mathbb {R}_+\times \{0,1\}^n\big )=Q\) and

Proof

We first prove that \(\sum _{j=1}^n a_jz_j\le p\) is a valid inequality for \(Q_\varphi \). It suffices to show \((0,-a, -p)\in C_\varphi \). Take \(0\le k\le \nu \). Then, as (5) holds, it follows that

As this is true for all \(0\le k\le \nu \), it follows that \((0,-a,-p)\in C_\varphi \) and so \(\sum _{j=1}^n a_jz_j\le p\) is a valid inequality for \(Q_\varphi \).

Given \(i\in [n]\) we next prove \(y+h_iz_i\ge h_i\) is valid for \(Q_\varphi \). It suffices to show \((\gamma , \alpha , \beta ):=(1,h_i e_i, h_i)\in C_\varphi \), where \(e_i\) is the \(i^\text {th}\) unit vector in \(\mathbb {R}^n\). Choose \(0\le k\le \nu \). Observe that \(\varphi (k,h_ie_i)\ge 0\), and in fact, \(\varphi (k,h_ie_i)= 0\) since \(\sum _{j=1}^k e_j\in P_k\subseteq R_k\). If \(k< i\) then

Otherwise, \(k\ge i\) and so

Hence, \((1,h_ie_i, h_i)\in C_\varphi \) implying that \(y+h_iz_i\ge h_i\).

As a result,

It remains to show \(Q_{\varphi } \cap \big (\mathbb {R}_+\times \{0,1\}^n\big )=Q\). The inclusion relation above implies that \(Q_{\varphi } \cap \big (\mathbb {R}_+\times \{0,1\}^n\big )\subseteq Q\), and since \(Q\subseteq Q_\varphi \), it follows that \(Q_{\varphi } \cap \big (\mathbb {R}_+\times \{0,1\}^n\big )\supseteq Q\). Thus, \(Q_{\varphi } \cap \big (\mathbb {R}_+\times \{0,1\}^n\big )=Q\), finishing the proof. \(\square \)

3.1 Compact extended formulations for \(Q_\varphi \) and \(C_\varphi \)

Having shown that we can define a relaxation of conv(Q) based on LP relaxations of the knapsack polytope, we now start with a theorem that proposes an alternate formulation of \(Q_\varphi \). It provides insight into the structure of \(Q_\varphi \) in terms of disjunctive programming and allows the derivation of the extended formulations discussed in this subsection.

Theorem 4

For each \(0\le k\le \nu \), let \(S_k:=\big \{(h_{k+1},z): z\in R_k \big \}\). Then

Proof

Observe that

It is therefore clear that

Suppose, for a contradiction, there exists a point \((y^*,z^*)\in Q_\varphi -S\). Then there is a valid inequality \(\gamma ^* y+ \sum _{j=1}^n \alpha ^*_j z_j\ge \beta ^*\) for S that is violated by \((y^*,z^*)\). However, \((\gamma ^*,\alpha ^*,\beta ^*)\in C_\varphi \), implying that

as \((y^*,z^*)\in Q_\varphi \), a contradiction. Therefore, \(S=Q_\varphi \), as required. \(\square \)

Given the results in Theorem 4, we can now apply Balas’s theory of disjunctive programming [2, 3] to (6), enabling us to get the following extended formulation for \(Q_\varphi \). Recall that \(R_k=\big \{z\in [0,1]^n: A^kz\le b^k\big \}\) for \(0\le k\le \nu \).

Corollary 5

\(Q_\varphi = proj_{y,z} EQ_\varphi \) for

where

Moreover, we can actually get an extended formulation for \(C_\varphi \) as well, according to the following proposition.

Proposition 6

\(C_{\varphi }= proj_{\gamma ,\alpha ,\beta }EC_{\varphi }\) for

where

and where, for each \(0\le k\le \nu \), \(\alpha ^k=(0,0,\ldots ,0,\alpha _{k+1},\alpha _{k+2},\ldots ,\alpha _n)\in \mathbb {R}^n\).

Proof

For \(0\le k\le \nu \) and \(\alpha \in \mathbb {R}^n\), we know that

which, by strong LP duality, is equal to

Note now that weak LP duality implies \(C_{\varphi }\supseteq proj_{\gamma ,\alpha ,\beta } EC_{\varphi }\), and by strong LP duality, we have \(C_{\varphi }\subseteq proj_{\gamma ,\alpha ,\beta } EC_{\varphi }\). Therefore, \(C_{\varphi }= proj_{\gamma ,\alpha ,\beta }EC_{\varphi }\). \(\square \)

We note that these results generalize the previous results of [12, 14] where the extended formulations were considered by using the LP relaxation of the cardinality constrained case, which in that case coincides with the convex hull of the knapsack polytope.

3.2 On the strength of the relaxations

Here we consider what can be said about the strength of the proposed relaxations, given knowledge about the strength of the LP relaxations of the knapsack polytope. Our first theorem shows that if the relaxation is exact for a given subset of variables, then all the inequalities that are allowed to use negative coefficients for the same subset of variables are guaranteed to be considered in the relaxation.

To do so, let us define for any subset \(J\subseteq [n]\) the sets

that is, the sets \(R_k\) and \(P_k\) when we restrict ourselves only to variables in J. We now want to consider what happens when the relaxations \(R^J_k\) are exact.

Theorem 7

Suppose, for a given subset \(J\subseteq [n]\), we have that

Take \((y^*,z^*)\in Q_{\varphi }\). Then \(\gamma y^* + \sum _{j\in [n]}\alpha _jz^*_j\ge \beta \), for all \((\gamma ,\alpha ,\beta )\in C\) such that \(\{j\in [n]: \alpha _j<0\}\subseteq J\).

Proof

Choose \((\gamma ,\alpha ,\beta )\in C\) such that \(\{j\in [n]: \alpha _j<0\}\subseteq J\). Observe that, in this case, the argument of \(\phi (k,\alpha )\) will be in \(R^J_k\) since for all j with \(\alpha _j\ge 0\) we can just set \(z_j=0\) and either improve or not change the value of \(\phi (k,\alpha )\). Similarly, the argument of \(\varphi (k,\alpha )\) will be in \(P^J_k\). Therefore, we have that \(\phi (k,\alpha )= \varphi (k,\alpha )\) for all \(0\le k\le \nu \), and so \((\gamma , \alpha , \beta )\in C_\varphi \). As a consequence, since \((y^*,z^*)\in Q_\varphi \), we have that \(\gamma y^*+\sum _{j\in [n]} \alpha _jz^*_j\ge \beta \), as desired. \(\square \)

While this theorem may seem strange at first, what it is saying is that as long as we can describe completely the convex hull of the knapsack polytope restricted to a set J, then we are guaranteed that all the points in \(Q_\varphi \) satisfy all the inequalities where the only negative coefficients allowed are in J. For instance, when we are in the cardinality constrained case, we have that \(J=[n]\) and so this says that all inequalities of conv(Q) must be satisfied by \(Q_\varphi \). As another special case, for instance, one can derive the following corollary.

Corollary 8

Suppose that

Take \((y^*,z^*)\in Q_{\varphi }\). Then, for each \((\gamma ,\alpha ,\beta )\in C\) with the property that \(a_i=a_j\) for all \(\alpha _i,\alpha _j<0\), we have

In particular, if \(a_1=a_2=\cdots =a_n\) then \(Q_\varphi =conv(Q)\).

Next we study the case where \(\varphi \) is guaranteed to approximate \(\phi \). More precisely, suppose there exists a \(\delta \in (0,1)\) such that for every \(0\le k\le \nu \),

\((\diamond )~~~\max \big \{w^{\top }z:z\in P_k\big \}\ge (1-\delta )\max \big \{w^{\top }z: z\in R_k \big \}\) for all \(w\in \mathbb {R}^n\).

That is, we have that for each \(0\le k\le \nu \) and \(\alpha \in \mathbb {R}^n\),

(Recall \(\phi (k,\alpha )\le 0\).) We now show that such approximation factor can be translated into the inequalities in the following way. For any \(\alpha \in \mathbb {R}^n\), let \(S^-(\alpha ):= \sum \big ( \alpha _j: 1\le j\le n, \alpha _j<0\big )\). Then, the next lemma shows that we are guaranteed that all inequalities for conv(Q) are not violated by much if we consider \(Q_\varphi \).

Proposition 9

If \((\gamma ,\alpha ,\beta )\in C\) and \(R_0,R_1,\ldots ,R_{\nu }\) satisfy \((\diamond )\), then

\((\gamma , \alpha , \beta +\delta S^-(\alpha ))\in C_{\varphi }\).

Proof

Take \((\gamma , \alpha , \beta )\in C\). By \((\diamond ')\), for each \(0\le k\le \nu \),

On the other hand,

so

Since this is true for all \(0\le k\le \nu \), it follows that \((\gamma , \alpha , \beta + \delta S^-(\alpha ))\in C_{\varphi }\). \(\square \)

One application of Proposition 9, for instance, is to consider Bienstock’s approximate formulation for the knapsack polytope. As shown in Bienstock [8], for every \(0\le k\le \nu \) and \(\delta >0\), there exists a polyhedron \(R_k^{\delta }\) of dimension \(O\big (\delta ^{-1} n^{1+\lceil 1/\delta \rceil }\big )\) that is described by \(O\big (\delta ^{-1}n^{2+\lceil 1/\delta \rceil }\big )\) constraints whose projection onto z satisfies the desired property \((\diamond )\).

4 Facet-defining inequalities for conv(Q)

The results in the previous section allow us to study several different relaxations of conv(Q) by studying relaxations of the knapsack polytope.

In this section, we focus on developing new classes of facet-defining inequalities for conv(Q) that do not arise from facet-defining inequalities for the knapsack set \(P_0\). By Theorem 1 and Proposition 2, such inequalities must have the form \(y+\sum _{j\in [n]}\alpha _jz_j\ge \beta \).

We begin this section by giving an overview of the known classes of facet-defining inequalities for conv(Q). We then describe a property of facet-defining inequalities for conv(Q) that generalizes the results for the previously known facet-defining inequalities. Finally, we will introduce a new explicit class of facet-defining inequalities that subsumes all the previously known classes.

The first proposed class of facet-defining inequalities for conv(Q) were the so-called strengthened star inequalities.

Theorem 10

[14] The strengthened star inequalities

with \(t_1<\cdots <t_a\) and \(h_{t_{a+1}}:=h_{\nu +1}\) are valid for conv(Q). Moreover, (14) is facet-defining for conv(Q) if and only if \(h_{t_1}=h_1\). \(\square \)

As shown in [1, 9, 10], the strengthened star inequalities can be separated in polynomial time and are sufficient to describe the convex hull of

However, as one may expect, when a knapsack constraint is enforced (to obtain Q), the convex hull becomes much more complex, so the facet-defining inequalities become more difficult to find.

In 2010, Luedtke et al. [14] found a general class of facet-defining inequalities for conv(Q). Subsequently, Küçükyavuz [12] introduced a larger and subsuming class of facet-defining inequalities for conv(Q), called the \((T,{\varPi }_L)\) inequalities. Here, we only state the latter class.

Theorem 11

[12] Suppose that \(a_1=\dots =a_n=1\), p is a positive integer and \(m\in [p]\). Suppose further that

-

(i)

\(T:=\{t_1,\ldots ,t_a\}\subseteq [m]\) where \(t_1<\cdots <t_a\),

-

(i)

\(L\subseteq \{m+2,\ldots ,n\}\) is of size \(p-m\) and \({\varPi }_L:=(\ell _1,\ldots ,\ell _{p-m})\) is a permutation of the elements of L such that \(\ell _j> m+j\), for \(j\in [p-m]\).

Set \(t_{a+1}:=m+1\). Let \({\varDelta }_1:=h_{m+1}-h_{m+2}\), and for \(2\le j\le p-m\), define

Then the \((T,{\varPi }_L)\) inequality

is valid for conv(Q). Furthermore, (15) is facet-defining inequality for conv(Q) if and only if \(h_{t_1}=h_1\). \(\square \)

Observe that the \((T,\emptyset )\) inequalities are simply the strengthened star inequalities. A common aspect of Theorems 10 and 11 (and the other class of facet-defining inequalities in Luedtke et al. [14]) is the condition on the right-hand-side constant for the inequality to be facet-defining. The following result provides a generalization of those conditions to any facet-defining inequality of conv(Q).

Proposition 12

If

is valid for conv(Q) then \(\beta \le h_1+ \phi (0,\alpha )\). Moreover, if (16) is facet-defining for conv(Q), then \(\beta = h_1+ \phi (0,\alpha )\).

Proof

Take \(z^*\in P_0\) so that \(\sum _{j\in [n]} \alpha _jz^*_j=\phi (0,\alpha )\). As \((h_1, z^*)\in Q\) we have

Suppose now that (16) is facet-defining. Since conv(Q) is full-dimensional and (16) is a facet-defining inequality different from \(z_1\le 1\), it follows that there is a point \((y',z')\in Q\) on the facet defined by (16) such that \(z'_1=0\). Then \(y'= y'+h_1z'_1\ge h_1\), and since \(z'\in P_0\), it follows that

Hence, \(\beta =h_1+\phi (0,\alpha )\). \(\square \)

We note that for (14) we have \(\phi (0,\alpha )=0\) and for (15) we have \(\phi (0,\alpha )=-\sum \nolimits _{i=1}^{p-m}{\varDelta }_i\), so the above theorem implies the previously stated results on the right-hand-side constants of the inequalities.

We now introduce a class of valid inequalities for conv(Q) subsuming all the preceding classes of facet-defining inequalities. Let \(s_0:=0\) and for each \(m\in [n]\), let \(s_m:=\sum _{j=1}^m a_j\).

Theorem 13

Choose \(m\in \{0,1,\ldots ,\nu \}\) such that \(p-s_m\le n-m-1\). Let s be an integer such that \(p-s_m\le s\le n-m-1\). For \(1\le j\le s\), let \(\mathfrak {m}(j):=\max \{k: k\in [n], j\ge s_k-s_m\}\). Suppose that

-

(V1)

\(T:=\{t_1,\ldots ,t_a\}\subseteq \{1,\ldots ,m\}\) where \(t_1<\cdots <t_a\),

-

(V2)

\(L:=\{\ell _1,\ldots ,\ell _s\}\subseteq \{m+2,\ldots ,n\}\) where \(\ell _1,\ldots ,\ell _s\) are pairwise distinct,

-

(V3)

\(a_j\ge 1\) for all \(j\in L\).

Set \(t_{a+1}:=m+1\). Choose \({\varDelta }\in \mathbb {R}^L\) such that \(0\le {\varDelta }_{\ell _1}\le {\varDelta }_{\ell _2}\le \cdots \le {\varDelta }_{\ell _s}\) and

for all \(1\le j\le s\). Then

is a valid inequality for conv(Q).

A proof is included in Sect. 4.2. Under certain conditions described below, (17) becomes a facet-defining inequality for conv(Q).

Theorem 14

Choose \(m\in \{0,1,\ldots ,\nu \}\) such that \(p-s_m\le n-m-1\) and \(p-s_m\) is an integer. For \(1\le j\le p-s_m\), let \(\mathfrak {m}(j):=\max \{k: k\in [n], j\ge s_k-s_m\}\). Suppose that

-

(F1)

\(T:=\{t_1,\ldots ,t_a\}\subseteq \{1,\ldots ,m\}\) where \(t_1<\cdots <t_a\) and \(h_{t_1}=h_1\),

-

(F2)

\(L\subseteq \{m+2,\ldots ,n\}\) is of size \(p-s_m\) and \((\ell _1,\ldots , \ell _{p-s_m})\) is a permutation of the elements of L such that \(\ell _j>\mathfrak {m}(j)\), for all \(1\le j\le p-s_m\),

-

(F3)

\(a_j=1\) for all \(j\in L\), and \(a_i\le s_m\) for all \(i\in [n]-L\).

Set \(t_{a+1}:=m+1\). Let \({\varDelta }_{\ell _1}:=h_{m+1}-h_{\mathfrak {m}(1)+1}\), and for \(2\le j\le p-s_m\), define

Then (17) is a facet-defining inequality for conv(Q).

A proof can be found in Sect. 4.3. The class above coincides with the \((T,{\varPi }_L)\) inequalities in the case when \(a_1=\cdots =a_n=1\) and p is an integer.

Let us explain how this theorem implies that the \((T,{\varPi }_L)\) inequalities (15) are facet-defining for conv(Q). Observe that, in the context of Theorem 11, \(s_j=j\), for all \(0\le j\le n\). Let \(s:=p-m\) and note that \(\mathfrak {m}(j)=m+j\), for all \(1\le j\le s\). Furthermore, \(\ell _j>m+j=\mathfrak {m}(j)\) for all \(1\le j\le s\). Hence, by Theorem 14, the \((T,{\varPi }_L)\) inequalities (15) are facet-defining for conv(Q).

Observe that Theorems 13 and 14 can also be applied to any scalar multiple of the knapsack constraint, and this will potentially give us more facet-defining inequalities. That is, one can apply Theorem 14 to \(\sum _{j\in [n]}da_jz_j\le dp\), for any arbitrary positive real number d. This is explained in the following example.

Example 1

Let \(a=(2, 1.5, 2.5, 1, 1, 1, 2, 1, 0.5, 0.5)\) and \(h=(809, 405, 202, 100,60, 40, 30, 25, 23,\) 20) for \(n=10\) and \(p=9\). Note that \(\nu =6\). For \(m=6\), \(T=\{1, 3, 5\}\) and \(L=\emptyset \), the (strengthened star) inequality

is facet-defining for conv(Q).

Next set \(m=3\), \(T=\{1,2,3\}\) and \(L=\{\ell _1=5,\ell _2=6,\ell _3=8\}\). Then \(\mathfrak {m}(1)=4, \mathfrak {m}(2)=5, \mathfrak {m}(3)=6\) and the hypotheses of Theorem 14 are satisfied. Thus the inequality

is facet-defining for conv(Q).

Furthermore, one can replace a and p with the equivalent choice of 2a and 2p. In this case, set \(m=5\), \(T=\{1,2,5\}\) and \(L=\{\ell _1=9,\ell _2=10\}\). Then \(\mathfrak {m}(1)=5, \mathfrak {m}(2)=6\) and the hypotheses of Theorem 14 are once again satisfied. Thus the inequality

is facet-defining for conv(Q).

4.1 Separation of a subset of proposed facet-defining inequalities

Separating over all proposed facet-defining inequalities (17) seems to be hard, the bottleneck being minimizing for a fixed \(z^*\) the expression \(\sum _{i\in L}{\varDelta }_i(1-z^*_i)\) over all possible L’s, as the choice of L affects the values of \({\varDelta }\). To circumvent this difficulty, Küçükyavuz [12] retricted the choices for L so as to control the values of \({\varDelta }\). With not much more work, we can obtain a similar result for our general setting:

Proposition 15

There is an exact separation algorithm with running time \(O(p^4)\) that separates over the proposed facet-defining inequalities (17) for which there is a partition of L into parts F, G where the following hold:

-

(1)

\(\mathfrak {m}(1)<\mathfrak {m}(2)<\cdots <\mathfrak {m}(p-s_m)\),

-

(2)

\(F=\{\mathfrak {m}(1)+1,\ldots ,\mathfrak {m}(r)+1\}\) for some \(1\le r\le p-s_m\),

-

(3)

\(G\subseteq \{\mathfrak {m}(p-s_m)+1,\mathfrak {m}(p-s_m)+2,\ldots ,n\}\).

The proof of this result is almost identical to that in [12], so we refrain from including it.

4.2 Proof of Theorem 13

We prove Theorem 13 using the following two claims. Let \(R:=[n]- L\) and define \(\alpha \in \mathbb {R}^n\) as follows:

Claim 1

We have

Proof of Claim

Since \(\alpha _i<0\) only for indices \(i\in L\), it follows immediately that, for \(0\le k\le m\),

Next choose \(m+1\le k\le \nu \) and let \(z\in P_k\). Then

Therefore, as \(\alpha _i\ge 0\) for all \(i\in [n]- L\) and \(0\ge \alpha _{\ell _1}\ge \cdots \ge \alpha _{\ell _{p-s_m}}\), it follows that

Since this is true for all \(z\in P_k\), we must have

as claimed. \(\square \)

Claim 2

\(\left( 1,\alpha , h_{t_1}+\sum _{i\in L}\alpha _i\right) \in C\).

Proof of Claim

Let \(0\le k\le \nu \). If \(0\le k\le m\), choose \(j\in \{0,1,\ldots ,a\}\) so that \(t_j< k+1\le t_{j+1}\), where \(t_0:=0\). In this case, \( \phi (k,\alpha )\ge \sum _{i\in L}\alpha _i\) by Claim 1, so

Otherwise we have \(m+1\le k\le \nu \). Then

By Claim 1,

Let \(j:=s_k-s_m\). Note that \(\mathfrak {m}(j)=\max \{r: j\ge s_r-s_m\}=k\) as \(a_i>0\) for all \(i\in [n]\). Then

As a result, \(\left( 1,\alpha , h_{t_1}+\sum _{i\in L}\alpha _i\right) \in C\), proving Claim 2. \(\square \)

Observe that Claim 2 implies that (17) is a valid inequality for conv(Q), as (17) is equivalent to

\(\square \)

4.3 Proof of Theorem 14

As in the proof of Theorem 13, let \(R:=[n]- L\) and define \(\alpha \in \mathbb {R}^n\) as follows:

We first show that (17) is a valid inequality for conv(Q). Observe first that (V1)–(V3) are satisfied. It is clear by definition that \(0\le {\varDelta }_{\ell _1}\le \cdots \le {\varDelta }_{\ell _s}\). Moreover, for each \(1\le j\le p-s_m\),

Above, \((\dag )\) holds since \(\ell _j>\mathfrak {m}(j)\), and \((\ddagger )\) holds since \(\ell _i>\mathfrak {m}(i)\ge \mathfrak {m}(j)\) for all \(i>j\). As a result, Theorem 13 applies and implies that (17) is indeed a valid inequality for conv(Q).

We will next find \(n+1\) affinely independent points in Q that satisfy (17), or equivalently (18), at equality:

-

1.

For each \(k:=t_j\in T\), let \(y^k:=h_k\) and define \(z^k\in \{0,1\}^{n}\) as follows:

$$\begin{aligned}z^k_i:=\left\{ \begin{array}{ll} 1, ~~~ \text { if } i< k \text { or } i\in L; \\ 0,~~~ \text { otherwise.} \end{array} \right. \end{aligned}$$We have \((y^k,z^k)\in Q\), because \(z^k_i=1\) for \(i=1,\ldots ,k-1\), and

$$\begin{aligned}\sum _{i=1}^{n}a_iz^k_i= \sum _{i<k}a_iz^k_i+ \sum _{i\in L}z^k_i=s_{k-1}+|L|=s_{k-1}+p-s_m\le p.\end{aligned}$$Moreover, \((y^k,z^k)\) satisfies (18) at equality:

$$\begin{aligned} y^k+\sum _{i=1}^{a}(h_{t_i}-h_{t_{i+1}}) z^k_{t_i}+\sum _{i\in L}\alpha _iz^k_i&= h_{t_j}+\sum _{i=1}^{j-1} (h_{t_i}-h_{t_{i+1}})+\sum _{i\in L}\alpha _i\\&=h_{t_j}+h_{t_1}-h_{t_j}+\sum _{i\in L}\alpha _i\\&=h_{t_1} +\sum _{i\in L}\alpha _i.\end{aligned}$$ -

2.

For each \(k:=\ell _j\in L\), let \(\mathfrak {f}(j):=\min \{f\in [j]: {\varDelta }_{\ell _j}={\varDelta }_{\ell _f}\}\). By definition we must have, for each \(\ell _j\in L\),

$$\begin{aligned}{\varDelta }_{\ell _j}={\varDelta }_{\ell _{\mathfrak {f}(j)}}=h_{m+1}-h_{\mathfrak {m}(\mathfrak {f}(j))+1}-\sum {\big ({\varDelta }_{\ell _i}: \ell _i> \mathfrak {m}(\mathfrak {f}(j)),i<\mathfrak {f}(j)\big )},\end{aligned}$$and so

$$\begin{aligned}\alpha _{\ell _j}+ \sum {\big (\alpha _{\ell _i}: \ell _i> \mathfrak {m}(\mathfrak {f}(j)),i<\mathfrak {f}(j)\big )}= h_{\mathfrak {m}(\mathfrak {f}(j))+1}-h_{m+1}.~~(\star )\end{aligned}$$Now let \(y^k:=h_{\mathfrak {m}(\mathfrak {f}(j))+1}\) and define \(z^k\in \{0,1\}^{n}\) as follows:

$$\begin{aligned}z^k_t:= \left\{ \begin{array}{l@{\quad }l} 0,&{} \text { if } t=\ell _i> \mathfrak {m}(\mathfrak {f}(j)) \text { and } i<\mathfrak {f}(j), \\ &{} \text { or } t= \ell _j, \\ &{} \text { or } t\in R \text { and } t>\mathfrak {m}(\mathfrak {f}(j));\\ 1, &{} \text { otherwise.} \end{array} \right. \end{aligned}$$Observe that \(\ell _j>\mathfrak {m}(j)\ge \mathfrak {m}(\mathfrak {f}(j))\). We have \((y^k,z^k)\in Q\), because \(z^k_i=1\) for \(i=1,\ldots , \mathfrak {m}(\mathfrak {f}(j))\), and

$$\begin{aligned} \sum _{i=1}^{n}a_iz^k_i&= \sum _{i\le \mathfrak {m}(\mathfrak {f}(j))}a_i+ |\{\ell _i\in L: \ell _i>\mathfrak {m}(\mathfrak {f}(j)), i\ge \mathfrak {f}(j), i\ne j \}|\\&=s_{\mathfrak {m}(\mathfrak {f}(j))}+|\{\ell _i\in L: \ell _i>\mathfrak {m}(\mathfrak {f}(j)), i\ge \mathfrak {f}(j) \}|-1\\&=s_{\mathfrak {m}(\mathfrak {f}(j))}+|\{\ell _i\in L: i\ge \mathfrak {f}(j) \}|-1~~~(\star \star )\\&=s_{\mathfrak {m}(\mathfrak {f}(j))}+|L|-\mathfrak {f}(j)\\&=s_{\mathfrak {m}(\mathfrak {f}(j))}+p-s_m-\mathfrak {f}(j)\\&\le p ~~\text { by the definition of } \mathfrak {m}.\end{aligned}$$Above, \((\star \star )\) holds because \(i\ge \mathfrak {f}(j)\) implies that \(\ell _i>\mathfrak {m}(i)\ge \mathfrak {m}(\mathfrak {f}(j))\). Moreover, \((y^k,z^k)\) satisfies (18) at equality:

$$\begin{aligned}&y^k+\sum _{i=1}^{a}(h_{t_i}-h_{t_{i+1}}) z^k_{t_i}+\sum _{i\in L}\alpha _{i}z^k_{i}\\&=h_{\mathfrak {m}(\mathfrak {f}(j))+1}+ h_{t_1} -h_{m+1}+\sum _{i\in L}\alpha _i -\alpha _{\ell _j}-\sum {\big (\alpha _{\ell _i}: \ell _i> \mathfrak {m}(\mathfrak {f}(j)),i<\mathfrak {f}(j)\big )} \\&= h_{\mathfrak {m}(\mathfrak {f}(j))+1}+ h_{t_1} -h_{m+1}+\sum _{i\in L}\alpha _i+h_{m+1}-h_{\mathfrak {m}(\mathfrak {f}(j))+1}~~~\text { by } (\star )\\&=h_{t_1}+\sum _{i\in L}\alpha _i.\end{aligned}$$ -

3.

For all \(k\in R- T\), let \(y^k:=h_1\) and define \(z^k\in \{0,1\}^{n}\) as follows:

$$\begin{aligned}z^k_i:=\left\{ \begin{array}{ll} 0, ~~~ \text { if } i\in R- \{k\};\\ 1, ~~~ \text { otherwise.} \end{array} \right. \end{aligned}$$Notice \((y^k,z^k)\in Q\), because

$$\begin{aligned}\sum _{i=1}^{n}a_iz^k_i= a_k+|L|=a_k+p-s_m\le p\end{aligned}$$by (F3). Moreover,

$$\begin{aligned}y^k+\sum _{i=1}^{a}(h_{t_i}-h_{t_{i+1}}) z^k_{t_i}+\sum _{i\in L}\alpha _iz^k_i=h_1+\sum _{i\in L}\alpha _i= h_{t_1}+\sum _{i\in L}\alpha _i .\end{aligned}$$ -

4.

Lastly, let \(y^0:=h_{m+1}\) and define \(z^0\in \{0,1\}^{n}\) as follows:

$$\begin{aligned}z^0_i:=\left\{ \begin{array}{ll} 1, ~~~ \text { if } i< m+1 \text { or } i\in L; \\ 0, ~~~ \text { otherwise.} \end{array} \right. \end{aligned}$$Then \((y^0,z^0)\in Q\) because \(z^0_i=1\) for all \(i<m+1\), and

$$\begin{aligned}\sum _{i=1}^{n}a_iz^k_i= \sum _{i<m+1}a_iz^k_i+ \sum _{i\in L}z^k_i=s_{m}+|L|=s_m+p-s_m= p.\end{aligned}$$Moreover,

$$\begin{aligned} y^0+\sum _{i=1}^{a}(h_{t_i}-h_{t_{i+1}}) z^0_{t_i}+\sum _{i\in L}\alpha _iz^0_i&=h_{m+1}+\sum _{i=1}^{a}(h_{t_i}-h_{t_{i+1}})+\sum _{i\in L}\alpha _i\\&=h_{m+1}+h_{t_1}-h_{m+1}+\sum _{i\in L}\alpha _i\\&=h_{t_1}+\sum _{i\in L}\alpha _i.\end{aligned}$$

Hence, the face defined by (17) contains \((y^0, z^0), (y^1, z^1),\ldots ,(y^n, z^n)\), which are, by a routine argument, affinely independent points in Q. As a result, (17) is a facet-defining inequality for conv(Q). \(\square \)

5 A compact extended formulation for (CMIP)

So far we have only focused on the single-constraint chance-constrained problem. We now briefly turn to the general problem. In this section, we propose an extended formulation for the set given by

Let \(Q_\kappa :=\mathcal {D}_\kappa \) and \(h_{j,\kappa }:=\xi ^j_{\kappa }\) for all \(j\in [n]\) and \(\kappa \in [d]\). Let \((1_\kappa ,2_\kappa ,\ldots ,n_\kappa )\) be a permutation of [n] such that \(h_{1_\kappa ,\kappa }\ge h_{2_\kappa ,\kappa }\ge \ldots \ge h_{n_\kappa ,\kappa }\), for all \(\kappa \in [d]\). Let \(\nu _\kappa :=\max \{t:\sum _{j\le t}a_{j_\kappa }\le p\}\), for all \(\kappa \in [d]\).

Theorem 16

The formulation

is an extended formulation for the set given by (19 20 21)–(23). The continuous relaxation of the extended formulation defined by (24)–(33) is at least as strong as the continuous relaxation of the formulation defined by (19 20 21)–(22).

We would like to point out that the extended formulation given above is almost the same as the one given in [12], Theorem 8 except that our formulation replaces their (extended knapsack cover) constraint ([12]:32) by constraint (27). It is not difficult to see that neither of the constraints ([12]:32) and (27) dominate the other. However, the proof of Theorem 16 is precisely the same as the proof of that theorem, so we omit it.

6 Computational experiments

One of the advantages of our results is that they allow us to establish benchmarks to identify which are good and useful relaxations to use in the context of chance constrained problems. To illustrate this, we carried out computational experiments to establish how well does one relaxation perform compared with another.

The setup that we chose to set the benchmarks is the same one proposed in [12], that is, the probabilistic lot-sizing problem (originally described in [7]). In this setting, we have that the right-hand-sides \(\xi ^j_{\kappa }\) represent cumulative demands in time period \(\kappa = 1,\ldots , d\) under scenario \(j=1,\ldots ,n\) and the probabilistic constraint is used to ensure that the probability of shortage of products (or equivalently of not being able to fully satisfy demands) is relatively low.

We generated random instances by considering that all the data is integer and is generated randomly and independently of each other according to the following uniform distributions below:

-

the demands in any given time period and in any given scenario are Uniform(1,100),

-

the variable production costs are Uniform(1,10),

-

the setup costs are Uniform(1,1000)

-

the holding costs are Uniform(1,5)

-

the coefficients \(a_j\) of the knapsack constraint of Q are Uniform(1,100) (the interpretation of these coefficients \(a_j\) is that they are a rescaling of the probabilities \(\pi _j\), which we consider as \(\frac{a_j}{\sum \nolimits _{l=1}^n a_l}\)).

We generated 20 such random instances for each given combination of values of n (number of scenarios), d (number of time periods) and \(\epsilon \in [0,1]\) (value used to determine the right-hand-side p in the knapsack constraint of Q).

Value p is determined as follows: given a set of knapsack coefficients \(\{a_j\}_{j=1}^n\) we let \(p = \left\lfloor \epsilon \big (\sum \nolimits _{j=1}^n a_j \big ) \right\rfloor \). That way the knapsack constraint \(\sum \nolimits _{j=1}^n a_j z_j \le p\) is just a rescaling of the constraint \(\sum \nolimits _{j=1}^n \pi _j z_j \le \epsilon \), and we deal only with integer parameters.

The algorithm that was used to compare the relaxations is described in Algorithm 1 below. In it, some computational choices are implicitly described, such as a minimum violation tolerance in order to declare a cut as violated, the cut normalization and a maximum number of iterations that we allow for the cuts to not improve the bound (to avoid potential issues like cycling).

The separation LP (35) presented in Algorithm 1 is used to separate all inequalities that can be generated using Proposition 6, and that it is used to try to separate the current LP solution from \(\mathcal {D}_\kappa \) for a certain \(\kappa \in [d]\).

We also note that the algorithm depends on input parameter \(\mathcal {K}\) which defines from which of the sets \(\mathcal {D}_\kappa \) will the algorithm try to separate the current solution. Besides the choice of \(\mathcal {K}\), the algorithm implicitly depends on the choice of knapsack relaxation \(R_k\) chosen (in the definition of \(EC_{\varphi }\)). Therefore we use Algorithm 1 to benchmark different choices of \(R_k\) as follows: after completion of Algorithm 1 we compute the final lower bound obtained (and thus the gap) for a particular choice of \(R_k\) and use this value to evaluate how good this choice of relaxation is. We explicitly choose to ignore the time, because we do not claim that this is the approach that should be used if you are trying to solve such instances faster. Instead, what our approach serves is to try to identify what is the potential of each choice of relaxation in terms of lower bound improvement. With such results in hand, one can be guided as to where to look for better cuts, knowing that the reason certain classes of cuts are or are not successful is because of the relaxation they are derived from and not because of the success/failure of some separation heuristic.

We report the gap closed by the end of the algorithm, calculated as follows: given the optimal (or best known) integer solution value \(z_{IP}\) for a given problem, the value \(z_{LP}\) of the relaxation of (CMIP) without any cuts and the value \(z_{final}\) obtained at completion of Algorithm 1, the gap closed is defined as \(\frac{z_{final}-z_{LP}}{z_{IP} - z_{LP}}\).

We benchmarked different relaxations using the same separation procedures. The results are summarized in Table 1. All computations are done on an Intel Core 2 Duo with 2 CPUs of 3.06GHz with 16 GB RAM, using CPLEX 12.4. Below we describe what the columns of Table 1 represent.

6.1 Choice of \(\mathcal {K}\)

Typically, when trying to add cuts to strengthen an LP relaxation, the natural choice for \(\mathcal {K}\) is \(\mathcal {K}=[d]\). However, for our experiments, we also chose to generate cuts from a single relaxation \(\mathcal {D}_\kappa \), that is, for a set \(\mathcal {K}\) such that \(|\mathcal {K}|=1\). The reasoning for that is to try to isolate the effect of cuts for \(\mathcal {D}_\kappa \) for a single \(\kappa \) from the combined effect that several cuts can have in closing the gap (much like in the general MIP case where cuts generated from different constraints may have a combined effect that may mitigate or boost the advantages that each cut has individually).

We thus tried four different choices for \(\mathcal {K}\): \(\mathcal {K}=\{1\}\), \(\mathcal {K}=\{d/2\}\), \(\mathcal {K}=\{d\}\), and \(\mathcal {K}=[d]\). These are the values represented in the first column of Table 1 by \(\mathcal {K}=1, d/2, d\) and All, respectively.

6.2 Columns 2–4

The next three columns in Table 1 represent the data parameters of the instance as described above.

6.3 Choice of knapsack relaxation

The next six columns represent the average gaps closed over the 20 instances generated obtained by each choice of relaxation. Column R0 represents the results using only CPLEX generated cuts (i.e. not using Algorithm 1). Columns R1-R5 use only cuts generated using (35) (no CPLEX cuts) for different choices of polyhedral relaxations \(R_k\) of the knapsack.

Column R1 presents results defining \(R_k\) as the LP relaxation of the knapsack \(P_k\), R2 uses the relaxation proposed in [12] (obtained by using a single extended cover inequality) and R3 combines both relaxations. Note that, even though in principle the results in R3 should dominate R1 and R2, this does not always happen since we abort the cut generation process if there has not been a bound improvement for some prespecified number of iterations.

One can argue that these bounds will probably not be too good since the knapsack relaxations used are not too strong. Therefore, we used strengthened formulations for the knapsack relaxation \(R_k\) in bounds R4 and R5. The bound presented in column R4 uses the LP relaxation of the knapsack combined with the Chvátal-Gomory cuts of the form \(\sum \nolimits _{j=1}^n \lfloor \frac{a_j}{a_l} \rfloor z_j \le \lfloor \frac{p}{a_l} \rfloor \), for all \(l=1,\ldots ,n\).

Column R5 represents the bound obtained by using the LP relaxation of the knapsack strengthened with cover inequalities. The way we obtained cover inequalities for a relaxation \(R_k\) was by using CPLEX’s cut generator in the following manner. We set up a completely separate Integer Program whose feasible region is purely \(\left\{ z\in \{0,1\}^n: \sum \nolimits _{j=1}^n a_j z_j \le p\; ; z_1=\ldots =z_k=1\right\} \). We then repeat the following procedure 100 times:

-

1.

Generate random objective function coefficients for each \(z_j\) variable with the coefficients being drawn from Uniform(1,100).

-

2.

Turn off all CPLEX preprocessing, heuristics and also all cuts except covers and cliques, which are left on in aggressive mode.

-

3.

Let CPLEX solve only the root node of the Branch-and-cut tree and collect all cuts generated by CPLEX.

-

4.

Add all these cuts to a cut pool (discarding repeated cuts) keeping up to a maximum of 500 cuts in this pool.

The relaxation \(R_k\) will then consist of the original LP relaxation of the knapsack strengthened with any cut in the cut pool after this procedure.

We chose to generate cover cuts in this way to make use of CPLEX’s existing heuristics for separating such cuts, which are likely much better than anything we could implement for the purpose of this test. Once more we point out that this is a particularly very time consuming process and is not recommended for efficiency.

Finally, column SS represents the gap closed by the strengthened star inequalities [14] (note that the results in columns \(R_0,\ldots ,R_5\) did not include any strengthened star inequalities).

From the experiments, we can see that, when using a single choice of \(\mathcal {D}_\kappa \) (i.e. \(|\mathcal {K}|=1\)), the choice of relaxation \(R_k\) has very little impact in the final bound. Moreover, one can see that the choice of \(\kappa \) matters, as choosing \(\kappa =d\) has much more impact in the bound than choosing \(\kappa =1\). This makes sense, since the constraint for \(\kappa =d\) considers all cumulative demands from the first until the last period, so strengthening \(\mathcal {D}_\kappa \) potentially impacts previous periods implicitly as well. On the other extreme, for \(\kappa =1\), the only period involved in \(\mathcal {D}_\kappa \) is the first one and so strengthening it will not affect any other future periods.

Moreover, we can observe a significant gain in bound by use of any of the relaxations when \(\mathcal {K}=[d]\). However, no significant advantage comes from using any of the relaxations R1, R3 or R4 instead of R2 as proposed in [12]. Thus, at least for the problem and instances in consideration, the best approach would probably be to stick to the inequalities proposed in [12]. However, one can note that using relaxation R5 allows a significant additional gap to be closed (up to 6% extra). Though significant, it is not clear if such improvement is big enough to justify a big effort to implement any cut separation using relaxation R5 more efficiently for practical purposes. However, we note that in some problems, closing 6% extra gap may be the difference to allow the solution of a problem in reasonable time. In any case, if this extra gap closed is indeed crucial, then our results point to where to look for new cuts next.

The comparison with the strengthened star inequalities is also very interesting. When looking at the results for \(|\mathcal {K}|=1\), we see that if we just focus on \(\kappa =1\), compared to the strengthened stars, the inequalities that can be derived from the results in this paper close a lot more gap. However, when one considers \(\kappa =d/2\), or \(\kappa =d\), the impact of the extra effort is quite diminished. In fact, considering \(\mathcal {K}=[d]\), one sees that the impact of the extra inequalities is much smaller (sometimes even worse than using just strengthened stars).

These experiments show the usefulness of our results in that they allow us to set the appropriate benchmarks and point to a definitive answer in terms of which direction to pursue to find useful inequalities. In particular, the results show that, in this particular problem, generating inequalities based on extended covers as proposed in [12] does not seem computationally beneficial. One comment that is worth making is that it is not contradictory that the strengthened star inequalities close more gaps than the inequalities proposed using relaxations \(R_1,\ldots ,R_5\) since the strengthened star inequalities are derived using integrality of the variables directly, whereas the framework proposed uses linear relaxations of the knapsack (so no integrality is directly used).

7 Conclusion

In this paper, we studied several different properties of the mixing set with a knapsack constraint and identified the key difficulty in describing its convex hull, namely that one must be able to describe the convex hull of the knapsack polytope. Since that problem by itself is hard, we were able to devise a theory that allows one to use any polyhedral relaxation of the knapsack polytope and translate that knowledge into a similar polyhedral knowledge about the desired set. We derived extended formulations for both the set of points defined by this relaxation and the cuts obtained by them. Moreover, these results generalize the results obtained in two previous papers [12, 14] dealing with the same set and we also were able to generalize a particular class of facet-defining inequalities for such set.

Finally, our computational experiments show how these theoretical results can be used to set benchmarks for identifying useful relaxations for particular chance-constrained programs.

Notes

Sometimes the finite discrete distribution given is an approximation of an unknown continuous distribution, obtained via a Sample Average Approximation (SAA) technique.

Prior to our work, the main focus seems to have been on the equal probabilities case, justified by employing the SAA technique. Our more general framework of the general probabilities case allows for more sophisticated techniques such as Importance Sampling. See the recent work of Barrera et al. [6] for further details.

As shown in Luedtke [13], some valid inequalities for Q are also of value in the extremely general case where each mixing constraint is replaced by a set of arbitrary linear constraints.

References

Atamtürk, A., Nemhauser, G.L., Savelsbergh, M.W.P.: The mixed vertex packing problem. Math. Program. 89, 35–53 (2000)

Balas, E.: Disjunctive programming: properties of the convex hull of feasible points. Discrete Appl. Math. 89, 1–44 (1998)

Balas, E.: Disjunctive programming and a hierarchy of relaxations for discrete optimization problems. SIAM J. Algebr. Discrete Methods 6, 466–486 (1985)

Balas, E.: Facets of the knapsack polytope. Math. Program. 8, 146–164 (1975)

Balas, E., Zemel, E.: Facets of the knapsack polytope from minimal covers. SIAM J. Appl. Math. 34, 119–148 (1978)

Barrera, J., Homem-de-Mello, T., Moreno, E., Pagnoncelli, B.K., Canessa, G.: Chance-constrained problems and rare events: an importance sampling approach. http://www.optimization-online.org/DB_HTML/2014/02/4250.html

Beraldi, P., Ruszczyński, A.: A branch and bound method for stochastic integer programs under probabilistic constraints. Optim. Methods Softw. 17, 359–382 (2002)

Bienstock, D.: Approximate formulations for 0–1 knapsack sets. Oper. Res. Lett. 36, 317–320 (2008)

Guan, Y., Ahmed, S., Nemhauser, G.L.: Sequential pairing of mixed integer inequalities. Discrete Optim. 4, 21–39 (2007)

Günlük, O., Pochet, Y.: Mixing mixed-integer inequalities. Math. Program. 90, 429–457 (2001)

Hammer, P.L., Johnson, E.L., Peled, U.N.: Facets of regular 0–1 polytopes. Math. Program. 8, 179–206 (1975)

Küçükyavuz, S.: On mixing sets arising in chance-constrained programming. Math. Program. 132(1), 31–56 (2012)

Luedtke, J.: A branch-and-cut decomposition algorithm for solving chance-constrained mathematical programs with finite support. Math. Program. 146, 219–244 (2014)

Luedtke, J., Ahmed, S., Nemhauser, G.: An integer programming approach for linear programs with probabilistic constraints. Math. Program. 122(2), 247–272 (2010)

Ruszczyński, A.: Probabilistic programming with discrete distributions and precedence constrained knapsack polyhedra. Math. Program. 93, 195–215 (2002)

Sen, S.: Relaxations for probabilistically constrained programs with discrete random variables. Oper. Res. Lett. 11, 81–86 (1992)

Wolsey, L.A.: Faces for linear inequality in 0–1 variables. Math. Program. 8, 165–178 (1975)

Author information

Authors and Affiliations

Corresponding author

Additional information

Partially supported by NSERC Discovery Grant RGPIN-05623.

Rights and permissions

About this article

Cite this article

Abdi, A., Fukasawa, R. On the mixing set with a knapsack constraint. Math. Program. 157, 191–217 (2016). https://doi.org/10.1007/s10107-016-0979-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-016-0979-5

Keywords

- Chance-constrained integer program

- Mixing set

- Knapsack constraint

- Polyhedral combinatorics

- Integer programming