Abstract

Executive function (EF) allows for self-regulation of behavior, including maintaining focus in the face of distraction, inhibiting behavior that is suboptimal or inappropriate in a given context, and updating the contents of working memory. While EF has been studied extensively in humans, it has only recently become a topic of research in the domestic dog. In this paper, I argue for increased study of dog EF by explaining how it might influence the owner–dog bond, human safety, and dog welfare, and by reviewing the current literature on EF in dogs. In the “EF and its Application to ‘Man’s Best Friend’” section, I briefly describe EF and its relevance to dog behavior. In the “Previous investigations into EF in dogs” section, I review the literature on EF in dogs, focusing on tasks used to assess abilities such as inhibitory control, cognitive flexibility, and working memory capacity. In the “Insights and limitations of previous studies” section, I consider limitations of existing studies that must be addressed in future research. Finally, in the “Future directions” section, I propose directions for meaningful research on EF in dogs.

Introduction

In modern society, domestic dogs (Canis lupus familiaris) not only serve as companions but also work alongside humans in sectors including law enforcement, search and rescue, scent detection, service to people with disabilities, and therapy. Given their pervasive role in human society, it is critical that dogs behave in a context-appropriate manner. For example, dogs are generally expected to refrain from jumping up on visitors and destroying furniture at home, and to follow their owners’ commands to “stay,” “come,” or “leave it,” even in distracting environments such as dog parks or family gatherings. Meeting these and other expectations of their human caregivers relies in part on executive function.

Executive function (EF) is an umbrella term referring to a constellation of abilities that allow for context-appropriate responding and adaptation to changes in our environment. It encompasses abilities such as focusing on a goal in the face of distraction (i.e., selective attention), inhibiting behavior that is inappropriate for a given situation (i.e., inhibitory control), holding information in an active state (i.e., working memory capacity), and adapting behavior to accommodate changes in the environment (i.e., cognitive flexibility). In humans, EF deficits are associated with a host of negative outcomes, including difficulty interacting with peers, financial problems, risky behavior, and substance abuse (Moffitt et al. 2011, 2015; Nigg 2001). The consequences can be dire for dogs as well: dogs can be fulfilling companions and diligent workers, but they are also relatively large carnivores that can bite, maul, and cause destruction. Behaviors such as aggression, destructiveness, and disobedience often lead to relinquishment to an animal shelter and, in some cases, euthanasia (Salman et al. 2000).

The quality of dog welfare, human safety, and even dog-specific legislation depends on a body of rigorously conducted research on how dogs inhibit natural (but often suboptimal) urges and attend to humans amid distraction. Thus, the purpose of this paper is to illustrate EF’s relevance to dogs and encourage further, more rigorous inquiry into this topic. To achieve these ends, I have divided this paper into four sections. In the “EF and its Application to ‘Man’s Best Friend’” section, I briefly define EF and describe abilities that fall under this term, including selective attention, inhibitory control, working memory capacity (WMC), and cognitive flexibility. I also explain how EF applies to dogs, with an emphasis on how increased knowledge of canine EF can benefit both dogs and humans. In the “Previous investigations into EF in dogs” section, I review behavioral methods used by canine researchers to measure EF and how these researchers have conceptualized presumed causal factors such as genetics and ontogeny. In the “Insights and limitations of previous studies” section, I discuss limitations of the current body of research that must be addressed going forward. Although several studies have addressed EF in dogs, many have methodological issues that limit the interpretation and applicability of their findings. Such issues, addressed in greater detail later in this paper, include the pervasive use of small samples (Arden et al. 2016) and the use of task batteries without first assessing the reliability of each task (Bray et al. 2014; MacLean et al. 2014; Fagnani et al. 2016; Marshall-Pescini et al. 2015; Müller et al. 2016). These issues are not insurmountable, but they must be remedied if we are to have an accurate understanding of how dogs regulate (or fail to regulate) their behavior.
The paper concludes in the “Future directions” section with suggested directions for future research that could show how self-regulatory processes in dogs compare with ours and lay a theoretical foundation for better training and breeding practices, evidence-based legislation, and improved dog welfare and human safety.

EF and its application to “Man’s Best Friend”

To ensure their day-to-day survival, animals must attend to a variety of tasks, including food acquisition, avoidance of danger, and the forging and maintenance of social ties. Some of these tasks become well practiced and can be carried out automatically; others require one’s full attention and, sometimes, behavioral adaptation to accommodate changes in the environment. Success in these latter tasks relies on EF, a term referring to processes that allow an animal to coordinate behavior in a manner appropriate for a given context, especially in the face of distraction. Given the complexity of EF, a full review is beyond the scope of this paper. For the sake of brevity, I constrain this section to briefly explaining how EF guides behavior, naming the abilities researchers have proposed fall under EF, and considering some of the consequences of one’s level of EF. The remainder of this section is dedicated to how EF applies to dog behavior and how further study could benefit both dogs and humans.

Executive function: a brief primer on effortful self-regulation

A defining feature of EF is its reliance on the effortful allocation of attention, as opposed to mental processes that operate automatically. This distinction between “controlled” executive behavior and automatic behavior dates back at least to William James (1890), who distinguished between “ideomotor acts,” which follow more or less immediately from their conception in the mind, and “willed acts,” which require an added element of volition. Many researchers have since expanded on this automatic–executive distinction (for further reading, see Baddeley and Hitch 1974; Norman and Shallice 1986; Schneider and Shiffrin 1977).

Depending on the demands of the environment, either automatic actions or actions requiring EF may be adequate. In some cases, the ability to complete tasks automatically is beneficial: it enables an individual to behave in ways that have been effective in the past without devoting sustained attention that could be better used on other tasks (Norman and Shallice 1986). However, because many animals live in constantly changing environments, automatic responding can be erroneous and even dangerous. When a situation requires troubleshooting, navigating difficulty or danger, or overcoming an automatic response, engaging EF is preferable to relying on automaticity. In these cases, one must be consciously aware of environmental conditions and be able to respond appropriately, even if this means abandoning an automatic response that had hitherto been effective (Miller and Cohen 2001). Classic models have illustrated how automatic responding is overridden in favor of a new, more appropriate behavior. For example, Norman and Shallice (1986) proposed that an executive system (the Supervisory Attentional System, or SAS) biases the activation of the less practiced behavior, increasing the likelihood that it will be expressed in place of the automatic response elicited by the environment. Further, this new behavioral sequence must be internally maintained while it is being performed (Schneider and Shiffrin 1977), which requires attentional resources and generally prevents the individual from performing other behaviors at the same time.

Researchers have proposed that successfully overriding automatic responses and maintaining behavioral sequences relies on the prefrontal cortex (PFC), based on research showing that damage to this part of the brain causes deficits in behavioral flexibility and sustained attention while leaving well-learned, automatic actions intact (see Miller and Cohen 2001, for a review). Additionally, researchers have found that human and nonhuman subjects alike exhibit greater sustained PFC activation in tasks requiring the maintenance of information over a delay or in the face of distraction (De Pisapia and Braver 2006; Fuster and Alexander 1971; Kubota and Niki 1971; Miller et al. 1996). According to Miller and Cohen (2001), this activation reflects the PFC acting as a sort of “switchboard operator” that allows for effective behavior. Grounding EF in a specific brain structure has made it more tractable to scientific study in human and nonhuman animals.

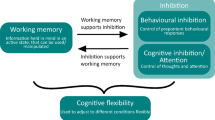

Rather than being a unitary construct, EF is a multifaceted phenomenon that draws on several distinct abilities to allow for successful responding. Across the literature, abilities proposed to be encompassed by EF include selective attention, cognitive flexibility, inhibitory control, and WMC, all of which rely heavily on the PFC (Jurado and Rosselli 2007; Miller and Cohen 2001). The reader should note that these abilities are not completely independent and, in many cases, interact.

Selective attention to stimuli

Selective attention refers to the ability to focus on one stimulus in the environment at the expense of others, achieved by an increased focus on the relevant stimulus and inhibition of signals from other, irrelevant stimuli (Miller and Cohen 2001). It is most strongly engaged when there are many salient stimuli in the environment, and reduces interference through attenuated sensitivity to distracting information (Conway et al. 2001; Hutchison 2011; Hutchison et al. 2016). Selective attention requires a certain degree of WMC; individuals with high WMC are better at focusing on relevant information and suppressing irrelevant information than individuals with low WMC (Conway and Engle 1994; Engle 2002).

Cognitive flexibility

The ability to flexibly attend and respond to the environment under changing conditions is critical in everyday life. Cognitive flexibility requires an individual to disengage attention from one task or set of rules in order to engage in another. Successful switching relies on preparing for the new task by mentally reconfiguring task rules and on inhibiting obsolete responses during the new task (Cepeda et al. 2001), both of which depend on the frontal cortex. Perseveration (the repetition of obsolete or incorrect responses) often accompanies frontal lobe damage (reviewed by Gazzaniga 2009) and age-related changes in the PFC, and it can be indicative of dementia risk (Berg 1948; Balota et al. 2010).

Working memory capacity

Although its name suggests the limits of memory, WMC is better described as how much information an individual can hold in an active, retrievable state in the face of distraction. Engle’s (2002) account of WMC consists of two distinct components: a short-term memory component that permits active maintenance of a small set of information, and an executive component that directs attention in a goal-directed manner. In fact, researchers have proposed that this executive component underlies the observed relationships between WMC and outcomes such as reading comprehension, SAT scores, and the ability to multitask (Baddeley and Hitch 1974; Engle 2002; Miyake et al. 2000; Shipstead et al. 2014; Unsworth and Engle 2007).

Inhibitory control of behavior

Inhibitory control refers to one’s ability to withhold a prepotent response (one that is well practiced and has been rewarded consistently in the past). It requires top-down control to withhold or cease a behavior in favor of one that is novel, less practiced, and/or appropriate for the current situation. In humans, inhibitory control failure is related to engagement in dangerous or unhealthy behaviors (Duckworth et al. 2013), suboptimal cognitive aging, and dementia (Balota et al. 2010).

Implications of EF

The importance of EF can best be appreciated by observing the consequences that follow from its presence or deficit. For example, individuals with attention-deficit hyperactivity disorder (ADHD) demonstrate marked deficits in inhibitory control, selective attention, and WMC. Compared with their typically functioning peers, they are at greater risk of academic difficulties, poor social relationships, aggression, drug experimentation and abuse, and unemployment (Barkley 1997; Duckworth et al. 2013; Gardner and Gerdes 2015). Beyond the difficulties experienced by the individual, these consequences carry a considerable cost for society in terms of public safety, productivity, and use of social programs such as welfare.

In the general population, researchers have observed individual differences in EF. For example, people who score higher on complex span tasks (referred to as “high spans” in the literature) are better able than low spans to respond appropriately to stimuli in a distracting environment, because they can suppress the distracting information (Engle 2002). High spans are also better able to think creatively (Lee and Therriault 2013) and to sustain attentional focus (Braver et al. 2007). Research with human participants has provided robust evidence that one’s capacity for EF affects problem-solving ability (Footnote 1), adaptation to novel situations, and social relationships (Barkley 1997; Braver et al. 2007; Duckworth et al. 2013; Engle 2002; Gardner and Gerdes 2015; Lee and Therriault 2013; Mischel et al. 1989; Moffitt et al. 2011).

A case for increased study of dog EF

While the human EF literature is vast, we are only beginning to understand this construct in dogs. How dogs regulate (or fail to regulate) their own behavior is useful knowledge given the dog’s ubiquity in human society. Dogs often share households with children, other animals, and items that can be soiled, torn, or eaten. To coexist peacefully with humans, dogs must be able to inhibit behaviors like eliminating in the house, playing too boisterously with children or other pets, and being destructive. Although training can help prevent these unwanted behaviors, a dog’s level of EF likely plays a large role in facilitating such training (Hasher and Zacks 1979), allowing the dog to overcome natural urges and meet its owner’s expectations for proper behavior. Conducting more, and better, research on canine EF, including the effects of genetics and ontogeny, has the potential to produce several tangible benefits to both humans and dogs, as I discuss below.

Modeling human EF

One benefit of a greater understanding of EF in dogs is, perhaps unexpectedly, a greater understanding of our own EF. In the field of comparative psychology, researchers have leveraged similarities between humans and other animals to study human psychology and its evolution (Footnote 2). Although humans and dogs are certainly different animals, researchers have noted many parallels between human and canid societies: Both species are communal, living in cooperative family groups in which individuals hunt and move together, forge and maintain bonds, and rear young (Mech 2000; Schleidt and Shalter 2003). Selection pressures imposed by social living are believed to have shaped cognition in a variety of ways. For example, cooperative hunting requires that members of a group be able to coordinate maneuvers and inhibit chasing behavior until the right moment (Marshall-Pescini et al. 2015). Group members must also be able to inhibit impulsive, aggressive behavior for the sake of maintaining group cohesion. Additionally, information processing might become more complex as the social group increases in size: individuals must be able to identify each member of the group and, if there is a social hierarchy, know where each member ranks relative to the others. Overall, one would expect that, compared with solitary animals, animals living in social groups should be capable of more complex cognition and better self-control. Evidence for this notion includes the positive correlation between neocortex size and social group size in primates (Dunbar 1998), as well as the greater inhibitory control exhibited by primates living in dynamic “fission–fusion” groups (Amici et al. 2008).

Dogs have been particularly useful models, given their trainability, availability, and cooperative nature, especially in the area of cognitive aging. For example, researchers have found that dogs, like humans (Cepeda et al. 2001), experience declines in EF as they age (Mongillo et al. 2013; Tapp et al. 2003; Wallis et al. 2014, 2016). Researchers have also identified canine analogs of ADHD (Vás et al. 2007) and Alzheimer’s disease (canine cognitive dysfunction; Azkona et al. 2009; Landsberg et al. 2003, 2012; Ruehl et al. 1995). Such research provides a starting point for developing methods of testing various cognitive abilities and for evaluating the efficacy of interventions, such as those aiming to reduce age-related cognitive decline (Cotman et al. 2002; Milgram 2003; Milgram et al. 2002, 2005). Increased knowledge of EF in dogs can show us which aspects of this ability (and associated brain regions) are shared by humans and dogs and which are distinct to each species, and it can inform the conclusions we draw from research using dogs as models for human cognition and mental illness.

Improved owner–dog dynamics

As pointed out by Arden et al. (2016), most canine cognition studies are approached from an ethological angle. Ethology is the biological study of animal behavior, with a focus on how animals behave in their natural environment and how these behaviors contribute to their survival (Tinbergen 1963; Range and Virányi 2015). Many dog researchers are interested in understanding dog behavior in the context of the species’ shared environment with humans, both in the present day and during the domestication process. Many disagreements in the literature have arisen from differing views on how genetics and ontogeny determine the cognitive abilities of dogs (Dorey et al. 2009, 2010; Hare et al. 2002, 2009; Hare and Tomasello 2005; Merritt 2015; Virányi et al. 2008), and both of these factors likely influence EF in important ways.

Given the close relationship dogs share with humans, the dynamic between a dog and its owner is a crucial element of the dog’s development and of how it responds to the environment. Clark and Chalmers (1998) argued that, although cognitive processes are generally discussed as occurring internally, they extend into our environment. For example, we often use external aids in memory, self-regulation, and problem-solving, including calculators, writing, and even language. In this sense, we “extend” our mind into the environment. Regarding canine cognition, Merritt (2015) endorses this notion and argues that dogs use us as such aids, nearly always thinking “with” us. He further argues that researchers should approach the study of canine cognition with an appreciation for the “co-cognition” that takes place between humans and dogs. Researchers have found evidence for such co-cognition, showing how it has been influenced by domestication (Miklósi et al. 2003), breed (Udell et al. 2014), and training (Marshall-Pescini et al. 2009, 2016).

One of the primary ways humans influence their dogs’ behavior and cognition is through training. In terms of training techniques, positive punishment (e.g., striking a dog or rubbing its nose in its own excrement) is associated with an increased likelihood of human-directed aggression (Casey et al. 2014), which is the end result of a failure to inhibit an inappropriate response. These findings mirror those in human children subjected to harsh parenting (Deater-Deckard et al. 2012). In the child psychology literature, researchers have shown that a mother’s level of EF shapes parenting practices that can ultimately help or hinder the child’s development of EF. In particular, mothers with low levels of EF were more likely than mothers with higher EF to become frustrated and use harsh parenting practices (Deater-Deckard et al. 2010, 2012).

Future research could investigate how owner EF influences training and discipline practices. Are owners with low EF more likely than owners with high EF to endorse and use harsh training methods? If so, does this predict lower EF or more problem behaviors in their dogs? Interactions between owner and dog EF could be probed in more benign settings as well. For example, the sport of dog agility requires attentional control and flexible responding on the part of both canine and human participants. The owner must decide how to navigate a complex obstacle course while visually tracking the dog, and the dog must attend to the owner’s body language as well as to the obstacles on the course. EF, particularly its WMC and selective attention components, is likely involved in successfully navigating the course and in the team’s subsequent score. Researchers could examine how much of a team’s performance is predicted by the EF of the owner, the EF of the dog, and the interaction between the two. In short, the role human behavior plays in a dog’s ability to self-regulate and interact with its environment should not be neglected in favor of more intuitive factors such as breed, training, and ontogeny.

Improved dog welfare

As a general rule, humans dictate most events in their dogs’ lives: Whether they receive training and what type of training they receive, how much physical and mental stimulation they receive, and whether they ultimately remain in the home. The amount and quality of attention owners pay their dogs can influence the development and occurrence of problem behaviors and thus increase the likelihood of aggression, relinquishment, and euthanasia. In fact, one study (Salman et al. 2000) found that the top five reasons owners cited for relinquishing their dogs to a shelter were biting, house soiling, aggression toward people, escaping, and being destructive indoors, all behaviors that plausibly relate to low levels of EF.

Learning more about how human behavior—including training philosophy, amount of owner–dog interaction, and previous experience with dogs—influences EF-related problem behaviors in dogs has the potential to reduce the number of dogs relinquished to shelters each year, as well as euthanasia rates of otherwise healthy dogs. Although many owners cite behavioral issues as the reason for relinquishing their dog to a shelter (Salman et al. 1998, 2010), these issues might be less reflective of a dog’s ability to behave and more reflective of their former owner’s inability to provide proper care, exercise, and training. Evidence of this notion comes from a study reporting that owners who relinquish their dogs are more likely to be young, first-time pet owners and have idealistic expectations for how much “work” the dog would require (Salman et al. 1998). Further, very few dogs who were relinquished had ever received obedience training. Unfortunately, dogs relinquished for behavioral reasons are less likely to be adopted than dogs relinquished due to cost or changing home dynamics (Lepper et al. 2002), and of the approximately 3.3 million dogs that are relinquished to shelters in the USA each year, about 670,000 of these dogs are euthanized (ASPCA, n.d.).

Increased human safety and evidence-based legislation

Legislation, local regulations, and even landlord-enacted breed restrictions would greatly benefit from improved knowledge of dog EF. With a better understanding of the factors underlying EF in dogs, we might be able to more accurately predict which dogs are at greater risk of responding to stress with aggression, allowing restrictions to be enacted on a case-by-case basis, rather than on the basis of size or breed, as is often the case. For example, researchers might find that low levels of EF are related to higher levels of aggression in dogs, as has been found to be the case in humans. Sprague et al. (2011) found that humans with low EF were more likely to respond to perceived stress with physical aggression. They concluded that EF, particularly inhibitory components, is important in managing inappropriate behavioral responses to stressors, as well as down-regulation of emotions that lead to such reactions. This supports the notion that behavioral inhibition might be an underlying factor uniting the various EF abilities (Barkley 1997). Further research might also show that problem behaviors owing to low EF are more influenced by owner characteristics and training than breed, reducing negative breed stereotypes and restrictions based on these stereotypes. If aggression or destructive tendencies are better predicted by EF than breed or size, restrictions should be modified accordingly. Further, if EF can be modified by training, this could provide a powerful intervention for dogs that might otherwise be relinquished or euthanized.

Summary

EF encompasses several distinct cognitive functions that promote flexible and context-appropriate responding. These functions include selective attention, cognitive flexibility, inhibitory control, and WMC, all of which rely, to some degree, on the frontal lobe. Researchers in the human domain have found that EF influences performance in academic contexts, finances, and social interactions, all of which are consequential to our survival. Canine cognition research suggests that EF is important for dogs as well, possibly implicated in behaviors like obedience, aggression, and suitability for working contexts. Given the potential impact of these behaviors on human safety and dog welfare, continued study of canine EF is needed.

Previous investigations into EF in dogs

EF has proven a challenging phenomenon to understand in our own species. It should not come as a surprise, therefore, that researchers have met with considerable difficulty in studying this construct in dogs, who do not share our linguistic abilities or perceptual world. Although the field of canine cognition has often focused on the dog’s understanding of human pointing and emotional expression, EF has received more attention in recent years. Hoping to better understand how genetics and ontogeny influence EF, researchers have employed a variety of cognitive tasks. Many of these tasks have been adapted from tasks used to study object permanence in human infants (e.g., A-not-B and cylinder task), whereas others were created specifically for use with dogs (e.g., social task and leash task). The purpose of this section is to review some of the behavioral tasks that have been used to study EF in dogs.

Cylinder task

The cylinder task is a modified form of the detour-reaching task, in which subjects must suppress the tendency to reach directly for a visible food reward (hereafter referred to as a “treat”) and instead approach it via a learned “detour.” The task traces its roots back to studies of object permanence in infants (Lockman 1984; Piaget 1954) and has since been modified to assess inhibitory control in a variety of nonhuman animals (see Amici et al. 2008; Boogert et al. 2011; Rørvang et al. 2015; Wallis et al. 2001), including dogs (Bray et al. 2014; Fagnani et al. 2016; MacLean et al. 2014; Marshall-Pescini et al. 2015). In this task, researchers test a dog’s ability to retrieve a treat from inside an open-ended cylindrical apparatus laid on its side. First, the dog is trained to retrieve the treat from the apparatus. During training, the dog is held in place approximately two meters from an opaque cylinder and watches as a human experimenter places a treat inside it. Once the cylinder has been baited, the dog is released and allowed to retrieve the treat. This protocol is repeated until the dog successfully retrieves the treat in four of five consecutive trials (Bray et al. 2014; Fagnani et al. 2016; MacLean et al. 2014; Marshall-Pescini et al. 2015), at which point the dog advances to the testing phase. The testing phase is identical to the training procedure with one exception: The cylinder is now transparent rather than opaque. To retrieve the treat, the dog must inhibit the response of approaching the now-visible reward directly (and thus contacting the barrier) and instead approach it using the detour learned during training. The dog’s EF is measured as accuracy across ten test trials.
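The training criterion and the test score described above can be made concrete with a short sketch. It assumes each trial is coded as a success (treat retrieved via the open end) or a failure (dog contacted the cylinder wall first), and it reads “four of five consecutive trials” as a sliding window of five trials. The function names and the example records are hypothetical and are not taken from any of the cited studies.

```python
from typing import Optional, Sequence

# Hypothetical scoring helpers for the cylinder task (illustrative only).
# Assumption: each trial is True (detour used, treat retrieved) or False
# (dog contacted the cylinder wall first).


def trials_to_criterion(trials: Sequence[bool], window: int = 5,
                        required: int = 4) -> Optional[int]:
    """Return the 1-based trial number at which the dog first has at least
    `required` successes within the last `window` consecutive trials,
    or None if the criterion is never met."""
    for end in range(window, len(trials) + 1):
        if sum(trials[end - window:end]) >= required:
            return end
    return None


def detour_accuracy(trials: Sequence[bool], n_trials: int = 10) -> float:
    """Proportion of correct detour responses across the test phase."""
    if len(trials) != n_trials:
        raise ValueError(f"expected {n_trials} test trials, got {len(trials)}")
    return sum(trials) / n_trials


# Hypothetical training record: the criterion is first met on trial 6.
training = [False, True, False, True, True, True, True]
print(trials_to_criterion(training))  # 6

# Hypothetical transparent-cylinder test: 7 of 10 trials correct.
test_trials = [True] * 7 + [False] * 3
print(detour_accuracy(test_trials))  # 0.7
```

A sliding window is used because the protocol only requires that some run of five consecutive trials contain four successes; a study that instead counted blocks of five non-overlapping trials would need a different check.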

To better understand how domestication might have affected EF, Marshall-Pescini et al. (2015) compared the cylinder task performance of human-reared wolves with that of trained and untrained pet dogs and human-reared “free-ranging” dogs. They found that dogs, regardless of training or housing situation, outperformed wolves, and they suggested that the dogs might have used inadvertent human cues that led to their successful performance; however, the evidence is conflicting as to whether wolves and dogs differ in their ability to follow human social cues (Hare and Tomasello 2005; Udell et al. 2008; Virányi et al. 2008). Further, researchers should use caution when comparing the performance of dogs with that of modern-day wolves to understand the effects of domestication (see Kruska 1988).

Researchers have also examined the influence of a dog’s environment. Because shelter dogs have previously performed worse than pet dogs on socio-cognitive tasks (Udell et al. 2010a, b), Fagnani et al. (2016) reasoned that differences in lifetime experience with humans might influence a dog’s level of EF. Residing in a home requires more context-appropriate behavior in order to coexist peacefully with human owners, so one would expect pet dogs to exhibit greater EF than shelter dogs, as indicated by better performance on the cylinder task. However, Fagnani et al.’s data indicated no difference in performance between the two groups. Although it is possible that shelter dogs and pet dogs simply do not differ on this measure, two limitations of this study should be addressed before drawing such a conclusion. First, the researchers used a relatively small sample. As discussed by Arden et al. (2016), many canine cognition studies are too underpowered to detect existing effects. Replicating this study with a larger sample would provide more reliable data and might reveal differences in performance between shelter dogs and pet dogs. Second, accuracy is often remarkably high in the cylinder task (Fagnani et al. 2016; MacLean et al. 2014; Marshall-Pescini et al. 2015), even for dogs who did not partake in training (refer to Table 1), which led Fagnani et al. to suggest a possible ceiling effect: if the task was “too easy” for the dogs, existing differences between the two groups might have been masked.
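The sample-size concern can be quantified with a standard normal-approximation power calculation for comparing two independent groups, such as shelter dogs and pet dogs. The sketch below is a generic textbook approximation, not the analysis used by Fagnani et al. (2016) or Arden et al. (2016); the effect sizes (Cohen’s d) and the 15 dogs per group are hypothetical values chosen for illustration. Because cylinder task accuracy is bounded and often near ceiling, a careful calculation for that task would need a model suited to proportions.

```python
import math
from statistics import NormalDist

# Normal-approximation power calculations for a two-sided comparison of two
# independent groups on a continuous score. Generic approximation only; it
# slightly underestimates the n an exact t-test calculation would give.
_STD_NORMAL = NormalDist()


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects needed per group to detect standardized effect d:
    n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


def approx_power(d: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power with n subjects per group (ignores the negligible
    chance of rejecting the null in the wrong direction)."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(d * math.sqrt(n / 2) - z_alpha)


print(n_per_group(0.5))                 # 63 per group for a "medium" effect
print(round(approx_power(0.5, 15), 2))  # 0.28 with a hypothetical 15 per group
```

Under these assumptions, a study with 15 dogs per group would miss a real medium-sized difference roughly seven times out of ten, which is why a null result from a small sample says little about whether the groups truly differ.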

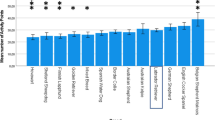

Finally, Bray et al. (2014) found that age-related differences were reflected in performance on the cylinder task, but not on the other two tasks in their battery. Age was negatively correlated with accuracy during test trials, such that older dogs were less accurate than younger dogs. This finding is consistent with previous observations that cognitive functioning, particularly EF, tends to decline with increasing age in humans (Cepeda et al. 2001; Craik and Bialystok 2006) and dogs (Wallis et al. 2014, 2016). For example, Wallis et al. (2014) found that, as in humans, selective attention in dogs follows a quadratic (inverted-U) function over the lifespan: middle-aged dogs show the highest level of selective attention, while adolescent and older dogs show lower levels. The authors suggest that these age-related reductions in attention are due to deficits in EF. Older dogs have also been shown to exhibit impaired inhibitory control relative to young dogs (Mongillo et al. 2013; Lazarowski et al. 2014; Tapp et al. 2003), mirroring the pattern observed in humans, whose inhibitory processes break down in the later years of life (Spieler et al. 1996).

Future studies using the cylinder task should modify it to make it more challenging. As has been shown in human research, individual differences are more apparent when a task is difficult than when it is easy. Researchers have increased difficulty by increasing the amount of information a participant must hold in memory (Conway and Engle 1994; Turner and Engle 1989), requiring the participant to exert self-control before performing the task (Baumeister et al. 1998), and reducing external reminders of the task goal (Hutchison 2011; Kane and Engle 2003). Canine researchers could use similar strategies, such as requiring a dog to exert self-control before completing the cylinder task (refer to the self-control task devised by Miller et al. 2010, discussed later in this section) or testing the dog in a distracting environment, which could increase working memory load. As shown in Table 1, the ceiling effect observed in the previously described studies is absent in the study by Bray et al. (2014). Because dogs in this study performed another socio-cognitive task (the A-not-B task) before the cylinder task, it is possible that their reduced accuracy is due to fatigue.

It is also important to consider the relative novelty of the cylinder task in testing canine EF. It was first used with dogs by Bray et al. (2014), who tested thirty dogs on a battery of three tasks presumed to reflect a dog’s level of EF: the cylinder task, the social task, and the A-not-B task. Thus far, its reliability has not been demonstrated. Before seeking correlations between tasks that presumably measure the same construct (as was done in all three studies discussed), a researcher must know that the tasks in the battery are reliable (Miyake et al. 2000). Because the cylinder task has not been subjected to this kind of testing, its utility is limited, particularly in task batteries.

Detour-fence task

The detour-fence task is another detour task in which a small section of fencing is used to separate a dog from a treat. The treat is placed on the side of the fence opposite to the dog, and the dog must go around the fence to obtain it. In other words, the dogs must first move away from the food to obtain it, requiring dogs to overcome the prepotent response of approaching the food head-on. EF has been measured in terms of latency to successfully retrieve the treat (Marshall-Pescini et al. 2015); however, this task might be more reflective of problem-solving ability than EF.

In their study, Marshall-Pescini et al. (2015) tested wolves and dogs on both the cylinder task and the detour-fence task. Although the tasks are thought to be conceptually similar, the researchers failed to find any correlation in performance between the two tasks. Additionally, they found that wolves outperformed dogs on the detour-fence task, while dogs outperformed wolves on the cylinder task. Did selection for cooperation with humans increase EF or decrease it? The former possibility would lead to better performance in both tasks by dogs, while the latter would lead to better performance by wolves. However, this line of thinking assumes that both tasks are measuring the same thing and that no other factors should significantly influence performance in either task. Given the lack of correlation in performance between the tasks and the evidence for species-level specialization, the data shed little light on the original research question. There is also a possibility that the detour task is more reliant on another ability, such as spatial problem-solving, than is the cylinder task. Indeed, numerous studies (Bray et al. 2015; Marshall-Pescini et al. 2016; Pongrácz et al. 2005; Smith and Litchfield 2010) have labeled the detour-fence task as a measure of problem-solving ability. Thus, researchers should consider omitting this task from studies examining EF.

Leash task

Used in only one study as part of a three-task battery, the leash task is similar in nature to the cylinder and detour-fence tasks in that it requires dogs to first move away from a desired object in order to ultimately reach it. In this task, each dog was trained while being walked on a leash by its owner. During the walk, the dog encountered a situation in which the leash became caught on an object, such as a tree or post. The owner then called the dog, which had to go around the object to return to its owner within 20 s (Müller et al. 2016). The distance the dog had to travel to untangle itself from the obstacle and reach its owner was gradually increased up to 2 m. After approximately 1 month of training on this task, the owner tested the dog and video-recorded the test for later scoring by the experimenter. Scores on the task ranged from 0 (poor performance) to 2 (good performance).

Ultimately, Müller and colleagues found significant, but weak, correlations between performance on the leash task and the two other supposed EF tasks in their battery. They suggested that this might imply context specificity of EF (an argument similar to the one Bray et al. 2014 presented based on similar results). However, one could argue that, like the detour-fence task, the leash task requires a substantial degree of spatial problem-solving ability; dogs might simply not understand how to negotiate the obstacle presented by a tangled leash. This could have weakened the correlations between tasks, as could low reliability of this measure. Like the cylinder task, the leash task is a novel method of testing EF and has not been subjected to tests of its reliability. Reliability must first be demonstrated before searching for evidence of validity (i.e., correlation with other putative EF tasks). Thus, the claim that the leash task reflects EF should be treated with caution until the task has undergone such scrutiny.

Delay discounting

Delay discounting refers to the devaluing of future rewards relative to immediate ones (Riemer et al. 2014). Generally, preference for a reward decreases as the delay to obtain that reward increases. Inability to delay gratification can lead to negative outcomes in humans, including poor financial habits, obesity, and risky behavior (Duckworth et al. 2013; Moffitt et al. 2011), and is also related to various problem behaviors in dogs as reported by their owners (Wright et al. 2011).

Delay-discounting tasks are more demanding than some of the other tasks discussed in this paper, both in the time needed to train and test the dogs and in the construction of the apparatus. In these tasks, dogs choose between a small, immediate reward and a larger, delayed reward. Using delay discounting as a measure of canine inhibitory control, Riemer et al. (2014) differentiated between cognitive impulsivity (the ability to delay gratification) and motor impulsivity (the ability to inhibit prepotent responses) and examined how stable these two abilities were over time. Dogs were first given ten forced-choice trials on two different apparatuses: one dispensed one treat after each press, and the other dispensed three treats after a 3-s delay. After learning these contingencies, dogs were given free access to both apparatuses for 15 min. During this time, the delay on the second apparatus increased by 1 s each time a dog chose the delayed reward. The maximum delay a dog reached during the 15 min served as a measure of cognitive impulsivity, and the number of extra presses between the first press and the delivery of the reward served as a measure of motor impulsivity. Dogs were tested again 6 years later in the same manner.
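The titrating procedure just described lends itself to a simple scoring routine. The Python sketch below is illustrative only and is not taken from Riemer et al. (2014): the log format (`DelayedChoice`) and the function names are hypothetical, the "maximum delay" is assumed to be the longest delay a dog actually accepted, and extra presses are assumed to be summed across the session.

```python
from dataclasses import dataclass


@dataclass
class DelayedChoice:
    """One choice of the delayed (three-treat) apparatus; a hypothetical log record."""
    delay_s: int        # delay in effect when the dog chose this apparatus
    extra_presses: int  # presses after the first press, before the treats arrived


def titrated_delays(n_delayed_choices, start_delay_s=3, step_s=1):
    """Delays a dog experiences on successive choices of the delayed apparatus.

    The delay starts at 3 s and grows by 1 s after each delayed choice,
    following the procedure described in the text.
    """
    return [start_delay_s + i * step_s for i in range(n_delayed_choices)]


def summarize_session(choices):
    """Return (maximum delay accepted, total extra presses) for one session.

    Assumption: a dog that never chose the delayed apparatus scores 0 on both.
    """
    if not choices:
        return 0, 0
    max_delay = max(c.delay_s for c in choices)
    total_extra = sum(c.extra_presses for c in choices)
    return max_delay, total_extra


# Hypothetical session: four delayed choices with 0, 2, 1, and 0 extra presses.
delays = titrated_delays(4)  # [3, 4, 5, 6]
log = [DelayedChoice(d, p) for d, p in zip(delays, [0, 2, 1, 0])]
print(summarize_session(log))  # (6, 3)
```

Keeping the two summaries separate mirrors the distinction drawn between cognitive impulsivity (the maximum delay) and motor impulsivity (the extra presses).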

For the ten dogs that completed testing, the maximum delay achieved was stable over the 6-year period, whereas the number of extra presses increased. In other words, a dog’s ability to delay gratification seems more stable than the ability to inhibit prepotent responses, which declined over time. From this finding, Riemer et al. (2014) concluded that cognitive and motor aspects of EF, particularly inhibitory control, might be independent. The cognitive aspect might be more of a trait that remains stable over the lifetime, whereas the motor aspect might be more influenced by the current environment. Their results are consistent with the notion that inhibitory control, at least the inhibition of prepotent responses, is context specific (Bray et al. 2014; Fagnani et al. 2016). Additionally, the finding that inhibition of prepotent responses declined with age mirrors the results obtained in the cylinder task (Bray et al. 2014).

Self-control task

While not directly used to test EF, Miller et al. (2010) created a delay-type task to better understand the mechanisms responsible for ego depletion. Ego depletion refers to the observation that people tend to persist less in a difficult cognitive task after being required to exert self-control (Baumeister et al. 1998). The causes for this phenomenon have been hotly disputed (Baumeister 2014; Beurms and Miller 2016; Inzlicht and Schmeichel 2012). One theory is that both self-control and performance on difficult cognitive tasks are dependent upon a shared, limited resource. Miller and her team tested blood glucose level as a contender for this limited resource using a sample of dogs.

Dogs in the study were first assigned to one of two conditions: kennel or self-control. Dogs in the kennel condition were led into a kennel, which the owner then closed. The owner left the room, and the dog remained in the kennel for 10 min before being released. In the self-control condition, owners commanded their dogs to sit and stay and then left the room, positioning themselves so that they could watch the dog in a mirror without being visible to it. If the dog broke the sit–stay, the owner walked back in, told the dog to sit and stay again, and left the room. This continued until 10 min had passed. An experimenter recorded both the number of sit–stay “breaks” and when they occurred.

For their first experiment, Miller et al. (2010) found that, compared to dogs who had spent 10 min in a kennel, dogs who exerted self-control persisted less on an “impossible” task requiring them to retrieve a treat from a Tug-a-Jug toy (the opening to the toy was blocked such that the dog could see the treat inside but could not get it to fall out). However, in a follow-up 2 (condition: kennel/self-control) × 2 (drink: sugar-free/glucose) factorial design, the researchers found that consuming 2 oz. of a glucose drink erased the difference in persistence between dogs who had been in the kennel and dogs who had been in a sit–stay. For dogs who had consumed the sugar-free drink, exerting self-control still reduced persistence in the impossible task compared to dogs who had been in the kennel. The authors thus argued that both dogs and humans rely on glucose as a limited resource. Regardless of how ego depletion functions, engaging in sustained inhibition clearly reduced later persistence in a difficult task. The authors later extended these findings by showing that dogs who held the 10-min sit–stay were more likely to approach and maintain proximity to an aggressive caged dog, suggesting that engaging in self-control can also increase risky, impulsive behavior (Miller et al. 2012).

Although this self-control task was intended as a manipulation, it seems to reflect EF in its own right. Remaining in a seated position for 10 min is challenging for most dogs (Miller et al. 2010, 2012) and likely requires sufficient WMC to maintain the goal of obeying the “stay” command in the absence of the commanding human (see Footnote 3).

This task might be useful in assessing WMC, with the number of “breaks” and the time elapsed before the first “break” used as dependent measures (similar to the conceptualizations of motor impulsivity and cognitive impulsivity measured by Riemer et al. 2014). It could also be used to remedy the ceiling effects reported for the cylinder task: having dogs exert self-control using this method before performing such tasks could increase their difficulty and make individual differences in EF more likely to emerge.
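The two dependent measures proposed above could be extracted from the times at which a dog broke the sit–stay. This Python sketch is hypothetical: the function name and input format are illustrative, and giving a dog that never breaks the full 10-min session as its latency is an assumption (such observations are right-censored and might call for survival-analysis methods instead).

```python
def sit_stay_measures(break_times_s, session_s=600.0):
    """Summarize one sit-stay session from the times (s) at which the stay was broken.

    Returns (number of breaks, latency to the first break in s). Assumption:
    a dog that never breaks is given the full session length as its latency.
    """
    times = sorted(t for t in break_times_s if 0 <= t <= session_s)
    n_breaks = len(times)
    latency_s = times[0] if times else session_s
    return n_breaks, latency_s


# Hypothetical dog that broke the stay three times during the 10-min session.
print(sit_stay_measures([250.0, 95.5, 410.0]))  # (3, 95.5)
print(sit_stay_measures([]))                    # (0, 600.0)
```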

Wait-for-treat task

The wait-for-treat task was used by Müller et al. (2016) as part of an inhibitory control battery to learn whether EF influences problem-solving ability in dogs. In this task, dogs were first trained by their owners to wait for permission before consuming a treat. During training, the owner placed a treat on the floor within 10 cm in front of the dog and commanded the dog to “wait.” After a few seconds, the owner said “go” and allowed the dog to consume the treat. The delay between the “wait” and “go” commands then became progressively longer with each successive trial. During testing, the dog was presented with the same task and given an inhibitory control score ranging from 0 (the dog never waited at least 5 s before consuming the treat) to 2 (the dog waited for a “go” command given after at least 5 s before consuming the treat on the first two trials or on three of four consecutive trials). This task was intended to measure a dog’s ability to delay gratification in a shorter, simpler manner than the delay-discounting task described earlier in this section. After combining scores on this task and the other two tasks (the leash task and the middle cup task) into a composite score, the researchers found that EF (specifically, inhibitory control ability) predicted subsequent problem-solving ability better than experience with the object used in the problem-solving task did.
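To make the scoring rule concrete, the following Python sketch encodes it under stated assumptions. Each test trial is reduced to a single Boolean: whether the dog waited for a “go” command given after at least 5 s. The text defines only scores 0 and 2, so assigning 1 to all remaining patterns is an assumption, and the function name is hypothetical.

```python
def wait_for_treat_score(waited):
    """Score a sequence of wait-for-treat test trials on the 0-2 scale.

    waited[i] is True if, on trial i, the dog waited for a "go" command given
    after at least 5 s before eating the treat.
    """
    if not any(waited):
        return 0  # the dog never waited at least 5 s
    first_two = len(waited) >= 2 and waited[0] and waited[1]
    # Any run of four consecutive trials containing at least three successes.
    three_of_four = any(sum(waited[i:i + 4]) >= 3 for i in range(len(waited) - 3))
    if first_two or three_of_four:
        return 2
    return 1  # assumption: intermediate performance


print(wait_for_treat_score([False, False, False]))              # 0
print(wait_for_treat_score([False, True, False, True, True]))   # 2
print(wait_for_treat_score([False, True, False, False, True]))  # 1
```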

One might argue that this task merely reflects obedience, that is, a dog’s willingness to follow its owner’s commands. Although this might be true of the initial response to the command, sustained compliance with the command to “wait” likely relies on more than obedience, especially at longer durations. To be successful at the task, a dog must (1) inhibit the response of consuming the treat in favor of following the command to “wait” on a moment-to-moment basis and (2) internally maintain the command to wait over a progressively longer period of time. Thus, this task might reflect both inhibitory control and WMC. However, because the authors acknowledge that performance might rely partly on a dog’s trainability, training history should be accounted for when using this task and interpreting its findings. Additionally, researchers should report the range of maximum delays achieved by the subjects to evaluate task difficulty, as well as the potential influences of breed and training.

Reversal task

Reversal tasks are used to measure EF, particularly cognitive flexibility, and are especially popular in the aging literature. These discrimination tasks typically consist of an acquisition phase and a reversal phase. During the acquisition phase, subjects are rewarded for making a specific response, such as selecting a particular item from an array (wooden blocks: Tapp et al. 2003) or exiting a maze by a specific route (Mongillo et al. 2013). The acquisition phase continues until the subject has met pre-specified criteria for having learned the task, at which point the stimulus–reward contingencies are reversed. During the reversal phase, subjects must overcome the urge to perform the previously rewarded response in favor of performing the opposite response (e.g., selecting a small block when the large block had previously been rewarded; Tapp et al. 2003). This task has been used with a variety of species to examine how aging affects EF and to test the efficacy of treatments for age-related cognitive decline (Cotman et al. 2002; Milgram 2003; Milgram et al. 2002, 2005).

Using a reversal task, Tapp et al. (2003) tested young, middle-aged, old, and senior laboratory-raised beagles to examine the effects of aging on initial acquisition and reversal ability. They found that young and middle-aged dogs learned the initial discrimination more quickly than old and senior dogs and were also more successful in responding to the change in contingencies: whereas younger dogs quickly adapted to the new contingency, older dogs took markedly longer to respond correctly after the reversal. Although both old and senior dogs had difficulty reversing, they showed different patterns of responding, leading the authors to suggest that different mechanistic failures underlay each group’s performance. Specifically, old dogs showed impairment in learning stimulus–response (S-R) contingencies, whereas senior dogs showed impairment in withholding responses to previously rewarded locations. Such patterns are not specific to laboratory-raised beagles: similar results were obtained in a sample of Labrador retrievers trained as explosive detection dogs (Lazarowski et al. 2014) and in a large sample of pet dogs tested by Mongillo et al. (2013), who used a T-maze to test learning and reversal abilities rather than the size-discrimination task used by Tapp et al.
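Acquisition and reversal performance in such tasks is often summarized as the number of trials needed to reach the learning criterion in each phase. The Python sketch below illustrates one way to compute this; the particular criterion (at least 9 correct within the most recent 10 trials) and the “reversal cost” summary are hypothetical choices for illustration, not the criteria used by Tapp et al. (2003) or Mongillo et al. (2013).

```python
def trials_to_criterion(correct, window=10, required=9):
    """Trials needed to reach criterion, or None if it is never reached.

    Hypothetical criterion: at least `required` correct responses within the
    most recent `window` trials. `correct` is a sequence of 0/1 or bool outcomes.
    """
    for end in range(window, len(correct) + 1):
        if sum(correct[end - window:end]) >= required:
            return end
    return None


def reversal_cost(acquisition, reversal, window=10, required=9):
    """Extra trials needed to reach criterion after the contingencies are reversed."""
    a = trials_to_criterion(acquisition, window, required)
    r = trials_to_criterion(reversal, window, required)
    if a is None or r is None:
        return None
    return r - a


# Hypothetical dog: 5 errors then 9 correct in acquisition;
# 12 errors then 10 correct after the reversal.
acq = [0] * 5 + [1] * 9
rev = [0] * 12 + [1] * 10
print(trials_to_criterion(acq), trials_to_criterion(rev), reversal_cost(acq, rev))  # 14 21 7
```

A dog that perseverates on the previously rewarded response would accumulate errors early in the reversal phase and therefore show a larger reversal cost.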

The finding that EF diminished with increasing age in this study mirrors age-related patterns found by other dog researchers (Bray et al. 2014; Wallis et al. 2014, 2016), as well as those observed in monkeys (Gray et al. 2017; Lai et al. 1995), humans (Cepeda et al. 2001; Craik and Bialystok 2006), and people diagnosed with Alzheimer’s disease (Hutchison et al. 2010). Together, these results also suggest that older dogs are more susceptible to proactive interference, a process by which information from previous trials interferes with performance on the current trial. In humans, overcoming proactive interference requires attention during encoding of the new S-R contingency as well as during retrieval on subsequent trials (Kane and Engle 2000). Resilience to proactive interference thus relies on EF supported by proper functioning of the PFC, which has been shown to deteriorate with age in both humans and dogs, especially in individuals with Alzheimer’s disease or canine cognitive dysfunction syndrome (Hutchison et al. 2010; Landsberg et al. 2003, 2012; Ruehl et al. 1995).

A-not-B task

Like the cylinder task, the A-not-B task originated with Piaget’s (1954) study of the development of object permanence in human infants. In these studies, an experimenter places a toy under one of two boxes while an infant watches and then lets the infant try to retrieve the toy. For several successive trials, the toy is placed under the same box (typically referred to as “box A”). On the final, critical trial, the experimenter places the toy under box A and then, while the infant watches, removes it and places it under the other box (“box B”). Resolution of the fourth stage of object permanence (and onset of inhibitory control ability; Garavan et al. 1999) is inferred if the infant successfully reaches for box B. The A-not-B error (also known as the perseveration error) occurs when the infant reaches for the now-empty box A instead.

Initially created to test object permanence, the A-not-B task has since been adapted to assess aspects of EF in human infants and nonhuman primates (Amici et al. 2008; Diamond 2002; Diamond and Goldman-Rakic 1989; Topál et al. 2008, 2009), as well as dogs (Bray et al. 2014; Cook et al. 2016a, b; Fagnani et al. 2016; MacLean et al. 2014). Successful performance on the A-not-B task requires holding an object’s new location in WM, overcoming proactive interference from previous trials, and inhibiting the prepotent response of searching for the object at the previously rewarded location.

In the canine cognition literature, the A-not-B task typically consists of two phases: training and testing. In the training phase, a dog observes the experimenter place a treat under one of three opaque cups. The dog is then released and permitted to search for the treat and eat it (if it is found on the first attempt). Across a variable number of trials, the dog watches as the same cup (cup A) is baited. If the dog retrieves the treat on a pre-specified majority of the trials, it moves on to the testing phase. In each test trial, the dog once again watches as the experimenter baits cup A, but then watches as the experimenter removes the treat from cup A and places it under a different cup (cup B). The dog is then released and must avoid approaching the now-empty cup A and instead approach cup B to retrieve the treat. The number of test trials and the performance metrics vary from study to study: researchers have reported the percentage of dogs that respond accurately on a single test trial (Bray et al. 2014; MacLean et al. 2014), dogs’ accuracy across multiple test trials (Fagnani et al. 2016), or the number of trials preceding the first accurate response (Cook et al. 2016a, b; Fagnani et al. 2016).
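Because these studies summarize A-not-B performance in different ways, it may help to see how two of the per-dog metrics relate when computed from the same data. In this Python sketch, each test trial is coded True if the dog searched cup B first; the function name and the convention for a dog that is never correct are assumptions.

```python
def a_not_b_metrics(test_trials):
    """Return (proportion correct, trials preceding the first correct response).

    test_trials[i] is True if the dog searched cup B first on test trial i.
    Assumption: a dog that is never correct gets the total number of trials
    as its second score.
    """
    n = len(test_trials)
    if n == 0:
        raise ValueError("at least one test trial is required")
    accuracy = sum(test_trials) / n
    first_correct = next((i for i, ok in enumerate(test_trials) if ok), n)
    return accuracy, first_correct


# Hypothetical dog that erred on the first two test trials, then succeeded twice.
print(a_not_b_metrics([False, False, True, True]))  # (0.5, 2)
```

With a single test trial, per-dog accuracy is either 0 or 1, so the only informative group-level summary is the percentage of dogs responding correctly, which is what the single-trial studies report.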

Overall, dogs tend to perform exceptionally well on the A-not-B task (see Table 1), with eighty to ninety percent of dogs responding correctly in studies using only one test trial (Bray et al. 2014; MacLean et al. 2014). As with the cylinder task, the A-not-B task might not be sufficiently challenging to allow individual differences to emerge. Some researchers have argued that this task is not a strong measure of aspects of EF such as inhibitory control or WMC, but rather reflects the domestic dog’s ability to use inadvertent social cues from the human experimenter. Indeed, studies have shown that dogs show significantly lower accuracy when human cues are hidden than when they are present (Kis et al. 2012; Péter et al. 2015; Topál et al. 2009, 2014). However, the authors of two separate studies (Bray et al. 2014; Fagnani et al. 2016) took the same precaution to minimize such cuing: after baiting cup A, the experimenters stepped away from the cups and faced the wall opposite the dog, making cuing impossible. Thus, susceptibility to the A-not-B error likely reflects a combination of factors, including EF failures owing to proactive interference and/or inhibitory control failure, sensitivity to human cuing (if precautionary measures are not taken during testing), and motivation to perform the task (Sümegi et al. 2014).

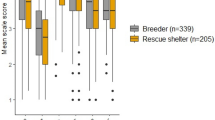

Departing from other studies showing generally high performance on this task, Fagnani et al. (2016) have provided data suggestive of lower EF in shelter dogs than in pet dogs. In their design, both populations of dogs were given fifteen test trials, and performance was measured as the number of trials before the first accurate response and as the overall percentage of accurate responses. While the pet dogs showed a mean accuracy similar to that obtained in previous studies (M = 78%), the shelter dogs’ mean accuracy was only half that (M = 39%). Future research should examine why shelter dogs exhibit such difficulty on this task. Is this finding truly due to lower EF in shelter dogs, the consequences of which might have led to their relinquishment? Might the stresses inherent in the shelter environment deplete a dog’s capacity for EF, as previous research suggests (Alexander et al. 2007; Arnsten and Goldman-Rakic 1998; Baumeister et al. 1998)? Learning whether shelter and pet dogs differ in this respect, as well as the origins of reduced EF in shelter dogs, could help lay the foundation for interventions that increase EF while dogs are in the shelter or that reduce environmental stressors that may lead to suboptimal behavior when dogs interact with shelter staff and potential adoptive families.

Overall, the A-not-B task seems to be a shorter version of the reversal task described earlier in this paper. Unlike performance on the reversal task and the other measures discussed above, which show detrimental effects of aging on human and dog EF, A-not-B performance has not yet been shown to be affected by age. However, the apparent ceiling effect suggests that the task may be too easy for age differences to become apparent. As with the cylinder task, future studies using the A-not-B task should (1) assess the reliability of this measure, (2) use larger samples (such as that used by MacLean et al. 2014) to further examine differences relating to age and environment, and (3) explore the effects of proactive interference on EF in dogs.

Visual displacement task

The visual displacement task is another task derived from object permanence studies initially developed by Piaget (1954) and further modified to study other aspects of cognition (Uzgiris and Hunt 1975). In this task, the dog watches as an experimenter “hides” a desired object (such as a toy) behind one of four boxes. The dog’s view of the boxes is then obstructed by an opaque screen. After the passage of a pre-specified delay (ranging from 0 s to over 2 min), the screen is removed and the dog is permitted to search for the object. This task requires the dog to encode and maintain information about the object’s location over a delay and is similar to the Brown–Peterson task used to examine WMC in humans (Brown 1958; Peterson and Peterson 1959).

Using this task, Fiset et al. (2003) found that all dogs in their sample performed above chance levels across all delays (ranging from 0 to 240 s); however, performance was significantly worse at longer delays. In their first experiment, accuracy was significantly higher at delays of 0 and 10 s than at delays of 30 and 60 s. In their second experiment, performance was significantly better at 0 and 30 s than at 60, 120, and 240 s. This pattern suggests that dogs have greater difficulty holding spatial information in working memory for durations longer than 30 s, possibly because such delays exceed the limits of a dog’s short-term memory capacity. However, it could instead reflect proactive interference from previous trials (see Keppel and Underwood 1962), which increases at longer delays on the current trial by impairing temporal discrimination between current and recent trials (Bjork and Whitten 1974). In tasks with nonhuman animals, proactive interference is inferred on a given trial when a subject revisits the location rewarded on the previous trial. Fiset et al. found no evidence of proactive interference in either experiment: the dogs were no more likely to search the box rewarded on the previous trial at long delays than at short delays. Further, only two out of eleven dogs in Experiment 1 and two out of eight dogs in Experiment 2 searched a previously rewarded box at above-chance levels, suggesting that proactive interference was not a significant issue in this task. According to the authors, the visual displacement task, with its opaque screen, can be a useful method for examining an animal’s working memory for disappearing objects. Although their results are compelling, this study should be replicated with a larger sample.
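Whether an individual dog searched a previously rewarded box “at above chance levels” can be assessed with an exact one-sided binomial test. The Python sketch below assumes that, with four boxes and random searching, the chance probability of choosing any particular box is 1/4, and that only trials on which the previously rewarded box differs from the current hiding place are counted; this chance level, the function name, and the counts in the example are hypothetical and are not taken from Fiset et al. (2003).

```python
from math import comb


def binomial_tail_p(successes, trials, chance):
    """One-sided exact binomial p-value: P(X >= successes) for X ~ Binomial(trials, chance)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical dog: searched the previously rewarded box on 6 of 12 eligible trials.
p = binomial_tail_p(6, 12, 0.25)
print(f"p = {p:.3f}")
```

Applying such a test separately to each dog, as the per-dog counts above imply, raises multiple-comparison issues that a full analysis would need to address.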

Summary

A variety of tasks have been used to understand EF in dogs, from the limits of their WMC to how they withhold counterproductive urges. Studies using these tasks have uncovered further parallels between human and dog cognition, such as the negative effect of aging on processes like cognitive flexibility and selective attention. However, some of the most frequently used tasks (e.g., the cylinder and A-not-B tasks) show robust ceiling effects, which might limit their utility for studying EF, a capacity that requires the effortful allocation of attention. Researchers might consider modifying these tasks to make them more difficult and thus more reflective of a dog’s EF.

Insights and limitations of previous studies

So far, I have described the importance of EF, presented an argument for its continued study in dogs, and reviewed some of the research methods used to increase scientific understanding of canine EF. I now summarize what researchers have learned thus far (i.e., what we know) and limitations of the current body of research (i.e., what we do not know).

First, researchers have provided preliminary evidence that the aging process affects dog and human EF similarly (Bray et al. 2014; Mongillo et al. 2013; Tapp et al. 2003; Wallis et al. 2014, 2016). Specifically, older dogs have a harder time inhibiting prepotent responses (Bray et al. 2014), selectively attending to information in their environment and sustaining attention (Wallis et al. 2014), learning new tasks, and flexibly adjusting their responding based on changing environmental conditions (Mongillo et al. 2013; Tapp et al. 2003). Because small and large dogs age at different rates, future research could examine whether dogs demonstrate different trajectories of cognitive decline based on their relative size.

Second, it is possible that shelter dogs possess lower inhibitory control than pet dogs (Fagnani et al. 2016). Although the two groups did not differ significantly on the cylinder task, pet dogs performed twice as well as shelter dogs on the A-not-B task. If this finding reflects a true deficit of EF in shelter dogs, it is of practical importance that this deficit be examined more closely. Where does this deficit come from? Might the stressful environment of the shelter place a cognitive load on these dogs, making it difficult for them to engage EF? Does reduced human contact, sometimes in both quantity and quality, lead to this deficit? Or is the deficit preexisting, with low EF being the reason for relinquishment? These hypotheses are not mutually exclusive, and further exploration of the root of this deficit carries practical implications for owners, shelters, and the dogs themselves. If EF can somehow be increased through training, it might be possible to reduce relinquishment, increase the likelihood of adoption, and shorten dogs’ length of stay at the shelter.

Although all of the studies mentioned in this paper ask (and attempt to test) important questions regarding canine EF, myriad methodological issues limit the interpretation of the findings contained therein. I have already discussed many of these issues, but briefly reiterate them here. Recurrent issues in the current body of research are consistently small sample sizes, lack of reliability assessment of measures, weak or absent attempts to address the task-impurity problem, and inconsistency in conceptualizing factors like breed and training.

First, the use of small sample sizes in dog research must be addressed, especially when researchers are testing for potential differences between groups. The first concern is statistical: using a small sample can prevent a researcher from detecting existing effects or lead to the finding of spurious effects that do not truly exist (Arden et al. 2016; Button et al. 2013). This can lead to low replicability or contradictory findings, even when the exact same methods are used, which slows the acquisition of knowledge in the field and can create misperceptions of dogs’ abilities. The second concern is both ethical and economic. If small samples yield unreliable and incorrect data, the researcher has wasted the time and money spent conducting the study, and the accumulation of such waste across many underpowered studies is likely substantial. Additionally, the subjects themselves can be viewed as wasted resources, a problem that violates reduction, one of the “three Rs” that Institutional Animal Care and Use Committees require researchers to follow. Reduction demands that animal researchers use as few animals as possible to address their research question and is likely an important contributor to the small sample sizes in the studies described in this paper. In other words, researchers may be using small samples not for the sake of expediency, but to satisfy requirements that allow them to conduct research in the first place. Striking a balance between ethical and methodological considerations thus poses a challenge to animal researchers when determining a sample size. Power analysis could be a useful tool in guiding researchers toward an appropriate sample size. Alternatively, researchers could turn to citizen science, in which members of the general public assist in data collection and analysis (Hecht and Rice 2014, 2015; Stewart et al. 2015).
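As a rough illustration of such a power analysis, the Python sketch below estimates the number of dogs needed per group to detect a standardized mean difference (Cohen’s d) between two groups with a two-sided test. It uses the common normal approximation rather than the exact t-distribution calculation, so it slightly underestimates the required sample; dedicated power-analysis software would be preferable in practice. The function name and the example effect sizes are illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2,
    rounded up to a whole dog.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


for d in (0.8, 0.5, 0.2):  # conventionally "large", "medium", and "small" effects
    print(d, n_per_group(d))  # 25, 63, and 393 dogs per group
```

Even for a medium effect (d = 0.5), this gives 63 dogs per group, which exceeds the total sample in several of the studies reviewed here.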

Second, some of the tasks currently used to measure EF have not been tested for reliability. The cylinder task, the leash task, and the wait-for-treat task are examples of measures new to canine cognition research that have been used, sometimes repeatedly, without knowledge of their reliability. This problem is not unique to dog research; Miyake et al. (2000) describe several tasks used in human cognition research without adequate inquiry into their reliability. In particular, unknown reliability complicates the interpretation of the null or weak correlations observed in various test batteries. Unreliable tasks attenuate correlations between tasks, even when the tasks measure the same ability. This might explain why, for example, there were no correlations among tasks in batteries that included the cylinder task; researchers’ assertions that EF is context specific are thus premature. In short, reliability estimates must be obtained before a task is included in a battery.
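The attenuation argument can be stated precisely with the classical test theory result that underlies Spearman’s correction for attenuation: the expected observed correlation between two measures equals the correlation between their true scores multiplied by the square root of the product of their reliabilities. The Python sketch below applies it; the reliability values in the example are hypothetical, since reliabilities for most dog EF tasks are unknown.

```python
import math

def attenuated_r(true_r: float, reliability_x: float, reliability_y: float) -> float:
    """Expected observed correlation between two tasks under classical test
    theory: r_observed = r_true * sqrt(r_xx * r_yy)."""
    return true_r * math.sqrt(reliability_x * reliability_y)

def disattenuated_r(observed_r: float, reliability_x: float, reliability_y: float) -> float:
    """Spearman's correction for attenuation: estimated true-score correlation."""
    return observed_r / math.sqrt(reliability_x * reliability_y)

# Two hypothetical tasks that tap exactly the same ability (true r = 1.0)
# but have reliabilities of only 0.3 and 0.4
print(round(attenuated_r(1.0, 0.3, 0.4), 2))  # 0.35
```

Even a perfect underlying relationship would thus appear as a modest correlation, which a small sample could easily fail to detect.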

Third, some of the existing tasks used to measure EF in dogs likely reflect other abilities, including spatial problem-solving and trainability. For example, dogs who are better at inhibiting suboptimal responses might have a higher level of EF or might simply have a stronger training history. In particular, the wait-for-treat task seems to rely on a dog knowing both the “sit” and “wait” commands. Additionally, the detour-fence task and the leash task seem to rely heavily on spatial awareness and problem-solving ability. A dog that cannot untangle its leash from a tree might have high EF but lack the spatial skills required to free itself. As human cognitive researchers have learned, no task is “process pure,” measuring only the ability of interest to the researcher. Using larger task batteries and latent variable analyses can help canine researchers extract the underlying abilities common across tasks.

Finally, our knowledge of the effects of genetics and training is limited by the variety of ways in which these factors are defined across studies. For example, Marshall-Pescini et al. (2016) found that trained dogs were faster and more accurate than untrained dogs at navigating the detour-fence task. This finding should be considered in light of two things: (1) The detour-fence task was used in this study as a measure of problem-solving, not executive or inhibitory ability, and (2) the researchers defined “trained” dogs as those who had competed in agility or another dog sport or were certified as working dogs (police dogs, guard dogs, etc.), whereas “untrained” dogs had either no training at all or only basic obedience training. These definitions are not problematic in and of themselves, but readers should keep them in mind when interpreting the data. When examining the impact of genetics, researchers often use breed as a factor. However, breed can be defined in many different ways. Researchers can use breeds as recognized by the American Kennel Club (AKC; e.g., Great Dane), breeds grouped by genetic similarity (e.g., Mastiff type), or breeds grouped by intended function (e.g., herding breeds). Again, it is not necessarily problematic that researchers conceptualize “breed” differently, but this variation should be taken into account when comparing results across studies and drawing conclusions about how breed might (or might not) determine abilities like inhibitory control or working memory capacity.

Summary

Although canine scientists have illuminated potential influences of aging and environment on a dog’s EF, future studies must address methodological issues to achieve a more complete understanding of this construct in dogs. These issues include small samples, failure to account for other abilities reflected in EF task performance, and the inclusion of tasks in batteries without knowledge of their reliability. Clarifying how genetics and training influence EF is also a fruitful area for future research.

Future directions

Because of dogs’ unique niche in human society, studying them can help us improve their behavior and welfare and better understand ourselves. Their continued success in our society will also be enhanced if we dedicate more research to learning which factors influence their ability to behave appropriately. The following are suggestions for future research that could help us better understand how EF works in “man’s best friend.”

Task battery development and latent variable analysis

In both dogs and humans, EF is better viewed as a multifaceted phenomenon rather than a unitary construct (Miyake et al. 2000; Müller et al. 2016; Pennington and Ozonoff 1996). Because of EF’s complexity, human researchers have used methods including task batteries and latent variable analysis to learn how it contributes to the use of semantic memory, aggression, and general fluid intelligence (Hutchison 2007; Sprague et al. 2011; Unsworth et al. 2014). When used properly, these methods indicate how much of the variance observed in performance among participants is due to specific factors, such as primary memory, secondary memory, and attentional control (Shipstead et al. 2014; Unsworth et al. 2014). Given the utility of latent variable analysis demonstrated in the human literature and the repeated calls for task batteries measuring EF in dogs (Marshall-Pescini et al. 2015; Bray et al. 2014; Fagnani et al. 2016), this is a fruitful direction for future research.

To conduct a latent variable analysis, researchers first have participants complete several cognitive tasks that have been shown to measure specific cognitive abilities and then use factor analysis to identify what is common in task performance (Hutchison 2007; Miyake et al. 2000; Unsworth et al. 2014). The reason for using so many tasks is the task-impurity problem: Each task also reflects task-specific abilities, so using several tasks allows researchers to extract what the tasks share in terms of underlying ability. Because it separates this shared variance from variance introduced by task-specific abilities irrelevant to the construct of interest, latent variable analysis has been described as a more “pure” method of assessing complex abilities like EF than using a single task (see Conway et al. 2003 for a discussion).

As previously discussed, task batteries created for use with dogs show either weak or null correlations. Although “context specificity” has become the preferred explanation for these findings, the pattern is reminiscent of problems cognitive psychologists encountered in studying EF in humans. As Miyake et al. (2000) discussed, some of the tasks used to measure EF were not well validated and, further, had low reliability. The authors warn against testing correlations between such tasks, because unreliable tasks are unlikely to correlate with other tasks. Nonetheless, researchers at that time offered an explanation similar to that of some canine researchers (Bray et al. 2014; Fagnani et al. 2016): that the measures did not correlate because each relied on independent processes. Given that reliability estimates for the tasks used in recent EF batteries in dogs are unknown, Miyake et al.’s explanation seems more likely.

The careful construction of task batteries and use of latent variable analysis could shed light on the structure of EF in dogs and on whether it is, indeed, context specific. The utility of such methods depends on researchers addressing problems well known to those studying human cognition, as well as problems unique to canine cognition. First, any task included in a battery must be shown to be reliable. Test–retest reliability seems most feasible for tasks like the cylinder task and the A-not-B task, whereas split-half reliability could be used for the visual displacement task. However, measures of EF tend to be most valid when the task is novel (Miyake et al. 2000), which poses a challenge to assessing reliability in dogs, particularly by test–retest, because a retested dog no longer encounters the task as novel. Additionally, reliability estimates are only meaningful across studies if researchers administer each task using the same methods. Second, larger samples must be used. The consistent use of only a few dozen subjects in canine research reduces statistical power to detect real effects, a problem compounded when task reliability is questionable. Finally, researchers can directly test the possibility of context specificity. As Bray et al. (2014) suggested, their social task might have been more difficult for socially motivated dogs, whereas the cylinder and A-not-B tasks might have been more difficult for food-motivated dogs. A variety of questionnaires have been created to assess traits in dogs, including trainability, extraversion, and excitability (Hsu and Serpell 2003; Ley et al. 2008; Mirkó et al. 2012). If Bray et al.’s proposal is correct, dogs rated high on extraversion or affection-seeking should perform worse on the social task than dogs rated lower on these traits.

In conclusion, meticulous battery construction and the discovery of latent EF variables could be an invaluable way to learn about relationships between EF and factors like breed, training, and environmental demands, as well as to predict a dog’s potential success in a working role, general trainability, or likelihood of adoption from a shelter.

Training EF

Because researchers have proposed that shelter dogs may have lower EF (more specifically, lower inhibitory control) than pet dogs (Fagnani et al. 2016), whether dogs with low EF can overcome this deficit through training is of practical and ethical interest. Studies in humans have shown that, despite its strong genetic component, EF can be improved through training (Rueda et al. 2005); however, others argue that the benefits of such training are limited to short-term, task-specific gains (Redick et al. 2015). Some canine researchers have found evidence that training can increase problem-solving ability (Marshall-Pescini et al. 2008), but the effects of training on EF remain unexplored. Discovering whether training can improve EF could lead to interventions that strengthen the bond between a dog and its owner, increase successful adoptions, and reduce euthanasia rates at shelters.

Eye tracking

Eye-tracking technology has been used in human research since the 1960s and uses an apparatus that reflects infrared light off the eye to record ocular activity, such as pupil dilation and gaze fixation, in response to presented stimuli. Researchers using this technology have found, for example, that pupil diameter increases when an individual engages in a challenging task relative to an easy task (Beatty 1982; Peavler 1974) and in response to emotionally arousing stimuli relative to neutral stimuli (Partala and Surakka 2003). Eye trackers have helped researchers understand not only effortful cognitive processing but also affective processing in humans (reviewed in Goldwater 1972), and they could potentially shed light on EF in dogs.

Although using such methods with dogs may seem implausible, dogs’ high level of trainability makes them amenable to study with eye trackers and, as I discuss next, imaging techniques. In fact, several researchers have successfully employed eye trackers to study dog cognition in ways that traditional behavioral measures cannot. To learn how dogs process visual information presented in pictures, Somppi et al. (2012, 2014) used a contact-free eye tracker of the kind typically used in human cognition studies. Dogs were trained to lie still with their chin resting on a pad and were then shown pictures from different categories (including upright and inverted human faces, upright and inverted dog faces, inanimate objects, and alphabetic characters) on a computer monitor. The researchers found that dogs tended to focus their gaze on information-rich areas of the images, such as the eye regions of dogs and humans, and preferred images of dog faces to other image categories (e.g., inanimate objects and alphabetic characters). The researchers concluded that dogs are amenable to study with eye-tracking technology and that data from such studies could provide useful information about visual perception in dogs, including how they attend to humans and other dogs.

Subsequent investigations using eye tracking have focused on the roles of familiarity, species, and experience with humans in dogs’ visual responses. Somppi et al. (2016) showed that dogs attend differently to threatening emotional expressions depending on whether they are exhibited by a human or a conspecific. Specifically, subjects in this study increased visual attention toward the threatening expressions of other dogs but visually avoided those of humans. Other research has shown that dogs’ processing of human faces is influenced by familiarity and lifetime experience with humans (Barber et al. 2016) and that administration of oxytocin can influence visual attention (Somppi et al. 2017).