Abstract

The common history of Homo sapiens and Canis lupus familiaris dates back to between 11,000 and 32,000 years ago, when some wolves (Canis lupus) started living closely with humans. Although we cannot reach back into the past to measure the relative roles of wolves and humans in the ensuing domestication process, it was perhaps the first involving humans and another animal species. Yet its consequences for both species’ history are not completely understood. One of the puzzling aspects yet to be understood about the human–dog dyad is how dogs so readily engage in communication in the context of social interactions with humans. To be sensitive to the meaning of human speech and gestures, dogs need to attend to various visual and vocal cues, in order to reconstruct the messages from patterns of human behavior that remain stable over time, while also generalizing to unfamiliar, novel contexts. This chapter will discuss this topic in light of some of the recent findings about dogs’ perceptual capacities for social cues. We describe some of the new technologies that are being used to better describe these perceptual processes, and present the results of a preliminary experiment using a portable eye-tracking system to gather data about dogs’ visual attention in a social interaction with humans, ending with a discussion of the possible cognitive mechanisms underlying dogs’ use of human social cues.


1 Introduction

The eyes, according to proverb, are the windows to the soul. The more mundane truth is that humans and many other social animals cannot help but indicate their attentional and intentional states with their eyes. The eyes, of course, are not the only source of such information; other cues such as head movements, changes in body posture, and pointing gestures provide essential information for the everyday lives of many social animals. Because vision is a primary mode by which the members of many gregarious species detect social cues, as well as mediating their goal-directed interactions with the environment, it is possible for observers to use the overt eye movements of others as a proxy for their intentions and as cues toward the location of significant objects (Shepherd 2010). As scientific observers, we may exploit the importance of eye movements to improve our understanding of the mechanisms of social perception by studying where species look for relevant information when performing a given task. Advances in the study of comparative cognition can be achieved by combining behavioral tasks with techniques coming from the cognitive and neural sciences, and in this chapter we describe the work of our group in applying eye-tracking technology to the study of how dogs use cues provided by humans.

Because of their long history of domestication, dogs present a very important model for making progress in understanding the evolution of social cognition. Technological progress toward capturing the dynamics of dog–human interactions could provide a very productive means of furthering this understanding, as well as furthering the aims for integrative ethological work described by Tinbergen (1963). We have been developing a fully portable eye-tracking system for use with dogs that provides excellent opportunities for gathering data about the abilities of dogs to read human social gestures. In this chapter we describe the technological and methodological challenges presented by our attempt to develop and use the eye-tracking system, and we describe some preliminary results, which should encourage further exploration of these techniques.

Before getting into the details of our methods and results, it is useful to highlight the significance of studying the relationship between humans and dogs for understanding the evolution of social cognition by outlining the likely events that led to this long-standing relationship. The history between dogs (Canis lupus familiaris) and humans (Homo sapiens) represents what is almost certainly the longest, closest ongoing relationship humans have ever had with another species of mammal. Dogs were the first species to be domesticated by humankind (Clutton-Brock 1995; Davis and Valla 1978; Hemmer 1990; Vila et al. 1997). A proto-dog (an early version of dogs) lived in a close relationship with humans even before they were a ‘properly’ domesticated species. That is, some members of Canis lupus were most probably cohabiting with humans, even before humans started exerting conscious control over their diet and reproduction. This close relationship resulted in genetic, behavioral, morphological and physiological changes that were passed on to subsequent generations (Diamond 2002). The relationship has been so close that dogs have evolved in ways that range from acquiring the ability to metabolize carbohydrates, as a result of being permitted to eat human food (Axelsson et al. 2013), to the ability to use human gestures in a way that has no equivalent in the animal kingdom (e.g. Hare and Tomasello 2005; Miklósi et al. 2005; Soproni et al. 2001, 2002) (see also Prato-Previde and Marshall-Pescini, this volume).

Only recently have researchers started paying attention to the full range of implications of the long relationship between dogs and humans. One reason for this may be that dogs were often dismissed as “artificial” or “unnatural”, thus not worthy of serious attention from behavioral biologists. However, researchers have started to realize that the domestic dog is a unique yet informative case in nature and have begun to investigate its particularities.

Our focus is on the implications of this relationship for social behavior and cognition. Dogs have become keen observers of human movements and gestures. But importantly, in order for dogs (or any species) to be able to understand or make use of another species’ movements and gestures, they have to selectively allocate perceptual and cognitive resources to the detection and processing of particular social aspects of the environment (a process known as orienting of attention). Dogs are especially capable of utilizing human pointing gestures and of following our eye-gaze direction. To be able to use these cues efficiently, individuals must be able to exploit their referential nature. Pointing with hands or looking in a particular direction does not have an intrinsic value or meaning in the world; rather these actions allow perceivers to orient their attention to specific environmental cues within the particular context of a social interaction.

To arrive at an understanding of how humans and dogs have managed to achieve such tight social coordination, it is necessary to talk about the process of domestication. The exact period of time, the location or locations of dogs’ domestication, and the reasons behind it are matters of substantial debate in the scientific community (Driscoll et al. 2009; Hare and Tomasello 2005; Larson et al. 2012; Serpell 1995). Nevertheless, one thing that most researchers do agree on is that dogs are so closely related to gray wolves (C. lupus) that they most probably descend from them (Clutton-Brock 1995; Driscoll et al. 2009) (but see Koler-Matznick 2002, for an argument that Canis familiaris did not descend from C. lupus). It is also widely accepted that there were probably several independent centers of dog domestication beginning in the Late Pleistocene (126,000–11,000 years ago) and early Holocene (11,000–5,000 years ago) (Crockford and Iaccovoni 2000). This is especially relevant if we take into account that the emergence of dogs as an identifiable subspecies is estimated to have occurred between 32,000 and 12,000 years ago. The later part of this range (from 12,000 years ago) coincides with the Neolithic revolution in which humans started to settle down, slowly changing the hunter-gatherer lifestyle to a more sedentary one (Bar-Yosef 1998; Weisdorf 2005). In that respect, as the first domesticated animal species, dogs were probably a strategic factor in this process, since it has been suggested that dogs helped modern humans in hunting and herding, as well as in defending territory, searching and guiding, in addition to being eaten in some cultures (Lupo 2011; Ruusila and Pesonen 2004). Humans, in turn, might have provided a secure source of food to dogs.

The fact that dogs adapted to the human environment in such a distinctive way allows us to explore whether the basis of human–dog social interactions can be informative about the evolution of relevant aspects of sociality such as communication and cooperation. Because of the long history of domestication, dogs’ particular responsiveness to human words (Fukuzawa et al. 2005; Kaminski et al. 2004; Pilley and Reid 2011; Ramos and Ades 2012) and their apparent understanding of human emotional expressions (Buttelmann and Tomasello 2013; Merola et al. 2012), it becomes possible to ask to what extent, if any, these capacities are a case of convergent evolution, the process by which species that are not closely related evolve similar traits as they adapt over time to similar ecological and social environments. During the past 12,000–30,000 years dogs and humans have helped to shape each other’s environments in ways that might also have facilitated a co-evolutionary process. For example, playful interactions between puppies and human children could scaffold developmental processes that have strong implications for the evolution of both species’ social capacities.

There are several reasons to postulate why it would have been necessary for organisms to develop a new set of social skills enabling them to predict and to manipulate other agents’ behaviors (Shultz and Dunbar 2007). It is reasonable to suppose that individuals benefit from creating, discovering, and taking advantage of others’ solutions to ecological challenges (Barton 1996; Reader and Laland 2002). Thus, the particularly social way in which wolves (the most probable ancestors of dogs) solve ecologically relevant issues such as foraging could be one of the sources in which dogs’ communicative abilities are rooted. The evolution of social and communication skills, and related cognitive abilities, has been related to the hunting patterns of a species (Bailey et al. 2013). The gray wolf is well-known as a cooperative hunter. Hence, it has been suggested that the evident communicative skills of dogs with respect to humans originate from wolves being able to cooperate with each other to hunt (Hare and Tomasello 2005).

Wolves belong to the category of pack-living canids with gregarious but independent natures (Koler-Matznick 2002). Though they live in packs of eight to twelve animals (Clutton-Brock and Wilson 2002), it has been reported that they can also live and survive independently of the group (Mech 1970, 2012; Sullivan 1978). Wolves and primates share a highly hierarchical social structure, but unlike primates, only the dominant pair of the pack reproduces (Mech 1970) and most of the time they do so for life (Clutton-Brock and Wilson 2002). Wolves hunt both small and large prey (Clutton-Brock and Wilson 2002). As mentioned before, they hunt large prey cooperatively (Clutton-Brock 1999; Olsen 1985) and they learn to do so in their adolescence, which lasts up to 2 years (Koler-Matznick 2002).

Although it is difficult to determine the exact role of cooperative hunting, it is believed that such hunting carried significant implications for the evolution of sociality and cognition (Bailey et al. 2013). For instance, wolves have been shown to coordinate their actions in order to fan out and encircle prey (Muro et al. 2011). The gray wolf also has highly developed reconciliation behaviors, very similar to those seen in non-human primates (Palagi and Cordoni 2009). These reconciliation behaviors would help to decrease aggression between group members and preserve cooperative relationships and social cohesiveness (Cordoni and Palagi 2008; Palagi and Cordoni 2009), which in turn would increase the efficiency of cooperative hunting (Bailey et al. 2013). Some biological models indicate, however, that hunting both large and small prey provides evidence that wolves engage in both cooperation and cheating (Packer and Ruttan 1988).

Cooperation and cheating both require a level of vigilance directed toward the behavior of other group members. Therefore, the production of intentional signals (i.e., intended communication) and unintentional signals (e.g. involuntary behavioral cues), and the ability to recognize such signals, probably play a crucial role in the decision-making process of group members (Bailey et al. 2013).

Taking this evidence as a whole, it is reasonable to conclude that for social species that hunt cooperatively, a well-developed understanding of others’ behaviors is extremely important and probably advantageous (Bernstein 1970; Moore and Dunham 1995).

Capacities such as responding differentially to facial expressions and paying attention to another’s focus of attention can help an individual recognize, for example, when another group member is seeing a predator or a prey approaching. Similarly, attention to such cues allows organisms to predict each other’s behavior, emotional state or intentions (Shepherd 2010).

Effective prediction of other organisms requires individuals to integrate different sensory information automatically through time. Understanding how the information is integrated over time, therefore, is relevant to understanding the basis of social cognition in different species. It is possible to study this issue in a detailed way by making use of new technologies. Technological advances allow us to study how salient environmental cues (e.g. information provided by movements and gestures) are being used in many different modalities. Some of these advances aim to integrate sensory information, getting us closer to studying sociality as it occurs day to day. One example of such a technology is eye-tracking.

Eye-tracking technology allows researchers to access participants’ overt visual attention (Duchowski 2007). Research using eye-tracking techniques has revealed much about the cognitive processes underlying human behavior (e.g. Dalton et al. 2005; Felmingham et al. 2011; Gredebäck et al. 2009; Holzman et al. 1974; Yarbus et al. 1967), and recently researchers in animal behavior have started to make use of this method to answer questions regarding animals’ overt attention (Kano and Tomonaga 2013; Teglas et al. 2012; Williams et al. 2011), with greater accuracy than third-person perspectives (e.g. video-cameras and inferences from subjects’ head orientation) (Duchowski 2007). It is worth noting that the use of eye-tracking methods to study animal cognition is still quite preliminary. There are a number of unresolved issues in expanding a technique developed for humans to non-human animals. These include constraints related to calibration of the eye-tracker system, and the lack of development of non-invasive and appropriate gaze-estimation models for non-human species (Kjaersgaard et al. 2008). Other difficulties include getting a suitable and ecologically valid task for the species, and being aware of possible anthropocentric interpretations of results.

Notwithstanding these issues, eye-tracking systems have been recently used in comparative cognition research with nonhuman animals, primarily primates (Hattori et al. 2010; Hirata et al. 2010; Kano and Tomonaga 2010, 2013; Machado and Nelson 2011; Zola and Manzanares 2011) but also recently with dogs (Somppi et al. 2011; Teglas et al. 2012; Williams et al. 2011). This research has produced intriguing results. Using a video-based eye-tracker with a wide-angle lens, Kano and colleagues (Kano et al. 2011; Kano and Tomonaga 2009, 2013) recorded the eye movements of great apes and humans. Their research suggests that these species show similar visual scanning patterns for scenes and faces (particularly eyes and mouth) with respect to both conspecifics and members of other species. Differences were seen in fixation duration: shorter and stereotyped in apes, and longer and variable in humans. Humans also present more frequent and longer fixations to the eye region compared to apes. Similarly, Hirata et al. (2010) showed that when presented with pictures of conspecifics’ faces, chimpanzees focus more frequently and for a longer time on the eye region of the faces, but only for upright faces in which chimpanzees had their eyes open (vs. inverted faces or pictures of chimpanzees with closed eyes). By comparison, eye-tracking research on dogs is still very preliminary. Somppi et al. (2011) trained their participants to stay still on a cleverly designed apparatus that allows them to study a dog’s visual scanning without the use of more constrictive methods. They showed that dogs are able to focus their attention on informative regions of images displayed on a screen without any task-specific pre-training. Interestingly, they also showed that dogs seem to prefer looking at faces of conspecifics over other images (e.g. human faces, objects) and that they fixate on familiar images longer than on novel ones. Similarly, adopting a system widely used in human and infant research that measures responses to images on a screen, Teglas et al. (2012) showed that dogs’ use of human cues is highly sensitive to the ostensive and referential use of the signals, supporting, according to the authors, the existence of a functionally infant-analog social competence in both dogs and humans.

However, existing approaches to studying dogs’ visual attention suffer from various drawbacks if one is interested in studying the naturalistic behavior of these animals. For example, maintaining the animal’s natural mobility is important when researchers aim to study behaviors in a naturalistic fashion; however, all eye-tracking systems currently in use with dogs either inhibit the dogs’ movements in some way, or limit the location and type of stimuli that can be presented to the dogs (e.g. to those that can be presented on a video monitor).

Thus, although there is plenty of evidence supporting dogs’ skillfulness at reading human gestures, there are still many open questions about how dogs visually process human social cues. Such questions include: Where do dogs focus their overt visual attention when presented with human gestures? How much does familiarity with the human signaler modulate dogs’ visual behavior? How differently do dogs scan pointing with the hand to an object versus head-gaze cues directed at an object? Answering these questions will support several scientific objectives. First, it will allow detailed comparison of the visual behavior of dogs to that of primates and thus to explore further the influence of domestication in the evolution of the species’ social skills. Second, knowing whether dogs scan familiar versus unfamiliar persons differently might influence the design of experiments that tap into communicative skills of dogs. Finally, taking a step forward in understanding the salient aspects of our own behavior for dogs might allow scientists and engineers to tap into the properties that make the comprehension of human commands and intentions possible for nonhuman forms of intelligence. Thus, for example, a better understanding of the social-cognitive capacities of dogs could support appropriate developments in robotics, where robots must detect and respond to human social cues.

To start exploring these questions we made use of our head-mounted eye-tracking system (described below) and the so-called object choice paradigm, a well-known experimental paradigm that has been widely used with dogs (e.g. Hare et al. 2002; Miklósi et al. 2005; Soproni et al. 2002). The object choice task consists of giving a dog a choice between two cups (one of them containing a piece of food or a preferred toy) and then directing the dog to the correct container by human pointing gestures. We aimed to investigate, first, what the most salient regions are for dogs when scanning human gestures; second, whether familiarity modulates visual tracking in dogs; and third, whether dogs’ ability to follow a pointing gesture is related to the kind of motion (head vs. arms). For this preliminary study a familiar person (owner of the dog) and an unfamiliar person (experimenter) displayed static distal pointing and head-gazing to signal to the subject where a piece of food was hidden, while the dog’s overt visual attention was monitored by a head-mounted eye-tracker system.

With fewer spatial and visual constraints than other methods, the use of head-mounted systems such as ours might be especially relevant to the investigation of the communicative and social behavior of canines and other animals in a naturalistic setting.

Since our system allows the full eye-tracking system to be carried in a backpack worn by the dog, the dog is able to move relatively freely. Mobility has the benefit of capturing most spontaneous behaviors of dogs, albeit within the confined circumstances of a room in the lab (but see Fugazza and Miklósi, this volume, for discussion of the naturalism of a lab for dogs). Despite the fact that movements are confined to “indoor behavior” (no rough-and-tumble social play, for example), the freely-moving dogs in our experiments often changed direction abruptly, affecting the stability of the system. It is important, nevertheless, to work with freely-moving dogs because mobility brings the opportunity to study the full range of dogs’ visual behavior before they make a choice. Decision-making in dogs involves actions, which translate to movements. The system must be recalibrated after every movement, contributing a source of noise that is sometimes impossible to clean (thus decreasing the number of usable trials). Re-calibrating the system is not difficult per se, though dogs do seem to lose motivation over time. Thus, sessions have to be short in duration, including only a few trials per session.

In this preliminary study, the number of dogs providing usable data was limited because of problems with camera and recording quality. More subjects need to be run with the next-generation equipment, but we offer this study as a proof of concept and illustration of the capacity to collect interesting new kinds of data bearing on canine cognitive processes.

2 Eye-Tracking Study

2.1 Methods

Participants. Six privately owned pet dogs (5 females and 1 male, age range: 2–5 years old; breeds: mixed, American pitbull terrier, Akita mix, Shiba/terrier mix and two schnauzer/poodle mixes) took part in this study. Dogs and their owners were asked to visit the lab twice on different days, one for each experimental session. All experiments were conducted at the Canine Behavior and Cognition (CBC) Laboratory of Indiana University. The Institutional Animal Care and Use Committee and the Institutional Review Board of Indiana University approved the experimental protocol.

For an average period of two weeks prior to coming to the lab, dogs were trained at home by their owners to wear an off-the-shelf set of goggles specially designed for different breeds of dogs. The criteria for inclusion in the study were, first, that the dog could successfully wear the goggles for about 10 min without disruption of the dog’s natural behavior (according to their owner’s opinion). Second, the dog should not try to take the goggles off by pawing at them or by any other means during those 10 min.

Three people were involved in running the experiment: Experimenter 1 (E1 hereafter), who gave instructions to the owner and carried out the warm-up and the unfamiliar person (UP) trials; Experimenter 2 (E2 hereafter), who assisted E1 throughout the session; and the owner of the dog, who carried out the familiar person (FP) trials and helped in handling her/his dog when required.

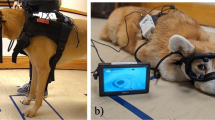

Apparatus. A head-mounted dog eye tracker system designed in the CBC Lab was used. The system consists of two small lightweight cameras, mounted on a set of commercially available dog goggles (the same ones worn by the dogs in the pre-training phase), slightly modified to improve stability. One of the cameras is strategically located, pointing at the eye of the participant [eye camera: Sony high-resolution ultra-low-light black-and-white snake camera], while the second camera sits on top of the goggle frame, right above the participant’s eye, collecting images of the world [scene camera: RMRC-MINI 12 V (6–24 V) compact camera PAL] (Fig. 6.1). Both streams of data were recorded and stored at 60 frames per second using two lightweight portable video recorders for further processing and analysis.

Warm-up trials. Before each session (‘familiar’ and ‘unfamiliar person’ sessions) dogs took part in four warm-up trials that were intended to both familiarize dogs and owners with the procedures and also to motivate dogs to participate in the study by increasing their interest in the plastic cups used in the experiment. Owners were carefully instructed about their participation before each session. Specifically, after a verbal explanation of the owners’ role in the experiment (without mentioning the purpose of it), E1 demonstrated several times the two types of pointing involved in the experiment. It is worth noting that if at some point during a trial an owner confused or misapplied the gestures, E1 stopped the trial to inform the owner and to again demonstrate how the gesture should be displayed. In that case, since the trial was disrupted it was not included in the analysis. The floor in the experimental room was marked in order to facilitate consistent location of the dogs during the experiment (2.5 m in front of the signaler) and the placement of the cups (0.5 m either side of the signaler). Before each warm-up trial, E2 led the dog and asked him to sit 2.5 m in front of E1. After E1 made sure that the dogs were calm and paying attention to her (making eye-contact) she proceeded with the two static distal pointing (1 left and 1 right) and two head-gaze (1 left and 1 right) trials (described below). The order of presentation of these trials was counterbalanced across participants. The warm-up trials were conducted in the same room as the experimental trials, but the participants were not wearing the eye-tracking system. Participants successfully selected the food container at a rate of 100 % for distal pointing and 60 % for head gazing.

Calibration. After the warm-up trials the calibration phase took place. When the dog was calm, E1 mounted the eye-tracker system on the dog’s head. When the system was correctly placed (with the eye camera correctly pointing to and capturing the whole eye), the eye-tracker was calibrated for each participant. In order to calibrate the eye-tracker, E1 stood directly in front of the dog and, after calling the dog’s name and making eye contact, immediately showed the dog a piece of food and slowly moved it to one of nine Velcro-marked points on the wall, making sure the dog was following the food with his gaze. When the piece of food was in the middle of the calibration dot (a black cardboard circle attached to the Velcro marks on the wall), E1 held it there for approximately 2 s, indicating to the cameras when the calibration trial was successful (i.e., when the dog stared at the food for the entire 2 s). The same sequence was executed for the other eight marked dots on the wall. Calibration was considered adequate if participants fixated their gaze on at least eight of the nine calibration points. If for any reason participants did not reach the criterion, the experimenter took a break before the second and last attempt. One participant out of the six did not meet the threshold and was consequently not considered for the experimental trials.

This calibration procedure was used for the experimental trials, though extra calibration trials (same procedures) were conducted if the goggles moved from the original position. In order to correct for small movements, additional drift-correction was done at arbitrary moments throughout the study.

Experimental trials. Immediately after the calibration procedure the experimental trials took place. Familiar and unfamiliar sessions consisted of exactly the same procedure as in the warm-up, with the only difference being the identity of the signaler (experimenter or owner).

Trials started with the signaler (whether E1 or FP) standing in front of the dog and calling the dog’s name to draw his attention; when the dog was attentive the signaler proceeded to hide a piece of food (i.e. dried treats previously approved by the owner) in one of two plastic cups. To control for olfactory cues, both cups were pre-baited with dried food that was taped to the bottom inside the cups (bubble wrap was used to avoid possible interference of auditory cues). Immediately after hiding the food, E1 shuffled the cups behind her back so as to avoid giving the dog visual access to the target cup. Then, the signaler placed the two cups at their corresponding marked locations from a standing position midway between them. Following that, after calling the dog’s name again and making eye-contact, the signaler displayed one of two types of pointing gestures: a static distal point or a momentary head gaze. Static distal (SD) pointing consisted of the signaler extending her arm and hand toward the target cup (the one containing the food) and staying in the same position until participants made their choice by approaching one of the cups, or for a maximum of 15 s. The tip of the signaler’s finger was approximately 50 cm from the closest edge of the target cup. Head gaze (HG) pointing consisted of the signaler turning her head and gaze to the target cup and remaining looking at it until the participants made their choice, or for a maximum of 15 s. Each session consisted of six SD and four HG trials. The low number of trials was intended to avoid fatigue and lack of interest in the dogs. The exact sequence of trials was determined before the session and written on a whiteboard visible to the signaler throughout the experiment. Each sequence was pseudo-randomized for each participant, with the only restriction that no one side was baited more than twice in a row.
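The pseudo-randomization constraint described above can be illustrated with a minimal sketch. It assumes that the baited side is drawn at random for each trial and that candidate sequences are simply rejected until none baits the same side more than twice in a row; the function names `make_trial_sequence` and `longest_run` are hypothetical and are not taken from the original study materials.

```python
import random


def longest_run(items):
    """Length of the longest run of identical consecutive items."""
    longest = current = 0
    previous = object()  # sentinel that never equals a real item
    for item in items:
        current = current + 1 if item == previous else 1
        previous = item
        longest = max(longest, current)
    return longest


def make_trial_sequence(n_sd=6, n_hg=4, max_run=2, rng=None):
    """Return a list of (gesture, baited_side) pairs for one session.

    Assumption: sides are sampled independently per trial and the whole
    sequence is redrawn until no side repeats more than max_run times.
    """
    rng = rng or random.Random()
    gestures = ["SD"] * n_sd + ["HG"] * n_hg
    while True:
        rng.shuffle(gestures)
        sides = [rng.choice(["left", "right"]) for _ in gestures]
        if longest_run(sides) <= max_run:
            return list(zip(gestures, sides))


if __name__ == "__main__":
    for number, (gesture, side) in enumerate(make_trial_sequence(), start=1):
        print(number, gesture, side)
```

Rejection sampling is used here only because it keeps the constraint explicit; with ten trials a valid sequence is typically found within a handful of draws.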

Trials were considered passed and food retrieved if the participant touched or approached the target cup within 10 cm, prior to any contact with the other cup. In order to discount the effects of possible extraneous variables, participants also went through two control trials in which no signal was given to the dog by the signaler. These control trials were randomly positioned within the experimental session. For the control trial the signaler, after calling the dog’s name to draw her/his attention, maintained a neutral position facing forward and looking directly at the dog for about ten seconds.

Data analysis. A two-way repeated-measures ANOVA was used to analyze the dog’s behavior on the choice task: Familiarity (familiar, unfamiliar) X Type of Gesture (HG, SD) using SPSS for MAC 18.0 (SPSS Inc). Significant effects were identified at p values of less than 0.05. For eye-tracking data, the language archiving tool, ELAN (Brugman and Russel 2004) was used to annotate the movie of each participant’s session with the appropriate fixation location and duration. In order to analyze the eye-tracking data we used the ExpertEyes software package that was developed to perform human eye-tracking studies under demanding conditions (Parada et al. submitted). ExpertEyes permits video to be analyzed in an offline mode. Using this mode we were able to export movies containing a fixation cross indicating the subject’s overt focus of attention. Movies were imported to ELAN and, making use of the fixation cross, were coded frame-by-frame. Because the experiment was run in naturalistic conditions, it was impossible to control for factors such as consistency of duration for all trials. Thus, we only coded the first 2000 ms immediately after the signaler started making the gesture, independent of dogs’ final choice (correct or incorrect). In order to analyze the trials we first divided each image into several features (areas of interest: AOI) to quantitatively analyze participants’ viewing patterns. The AOI to be coded in all trials were: face, torso (the arm region was combined with torso for the HG trials, since the signalers stood with their arms behind their backs and extended the respective arm only to point with the hand), legs, correct cup, and incorrect cup. For the distal pointing trials only, correct hand area and incorrect hand area were also coded. After analyzing the data, legs were discarded since no dog looked at this area during the analyzed temporal window.

We used two dependent variables indicating attention: gaze time (duration of gaze points) and the frequency with which dogs directed their gaze to the AOIs—calculated by using the proportion of frames in which any AOI was the target of the gaze points. A gaze point was scored if the gaze remained stationary for at least 70 ms. Otherwise, the recorded sample was considered as part of a saccade or noise. In order to limit analysis to the visual information actually available to the participants, we excluded the samples recorded after the first 2000 ms post-gesture. Likewise, we did not code samples recorded during saccades.
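The scoring rule described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB routine. It assumes a 30 fps video (the frame rate is not stated in the text), one hand-coded AOI label per frame starting at gesture onset, and it treats a run of consecutive frames on the same AOI as a stationary gaze. The names `score_trial`, `FPS`, and the example labels are hypothetical.

```python
from itertools import groupby

FPS = 30                 # assumed video frame rate; not stated in the text
MIN_FIXATION_MS = 70     # minimum stationary time for a gaze point
WINDOW_MS = 2000         # analysis window after gesture onset


def score_trial(frame_labels, fps=FPS):
    """Return {aoi: (gaze_time_ms, proportion_of_frames)} for one trial.

    frame_labels has one entry per video frame, starting at gesture onset:
    an AOI name, or None when the fixation cross is on no AOI.
    """
    # Keep only the frames inside the first 2000 ms.
    window = frame_labels[: WINDOW_MS * fps // 1000]
    if not window:
        return {}
    frames_per_aoi = {}
    for label, run in groupby(window):
        run_length = len(list(run))
        # Runs shorter than 70 ms count as saccade or noise and are dropped.
        if label is None or run_length * 1000 < MIN_FIXATION_MS * fps:
            continue
        frames_per_aoi[label] = frames_per_aoi.get(label, 0) + run_length
    return {
        aoi: (n * 1000 / fps, n / len(window))
        for aoi, n in frames_per_aoi.items()
    }


# Hypothetical trial: 10 frames on the face, 2 uncoded, 2 on the torso, 6 on a cup.
trial = ["face"] * 10 + [None] * 2 + ["torso"] * 2 + ["correct cup"] * 6
scores = score_trial(trial)
```

In this made-up trial the two torso frames last about 67 ms at 30 fps, so they fall below the 70 ms threshold and are excluded, while the face and cup runs are kept.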

Raw data from each participant were converted to fixation duration and fixation frequency, taking into account the number of usable trials per participant per session, using custom in-house routines running in the MATLAB 7 environment (The MathWorks).

For statistical analyses, differences in fixation frequency and gaze time (duration) were separately evaluated using a three-way repeated-measures ANOVA: Familiarity (unfamiliar vs. familiar person) × Location (face, torso, correct cup and incorrect cup) × Type of Gesture (SD vs. HG). Additionally, a two-way repeated-measures ANOVA, Familiarity (unfamiliar vs. familiar person) × Location (correct hand vs. incorrect hand), was used to analyze within-subject differences in looking at the correct versus the incorrect hand for SD trials only. All statistical analyses were run using SPSS for Mac 18.0 (SPSS Inc.). Significant main effects were identified at p values of less than 0.05 (when sphericity did not hold, the Greenhouse-Geisser correction was used). Post hoc comparisons were evaluated using the Bonferroni criterion to correct for multiple comparisons, with p values of less than 0.05 identifying significant effects.
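As an illustration of the Bonferroni criterion mentioned above, the following Python sketch shows one common formulation: each raw p value is multiplied by the number of comparisons (capped at 1) and then compared with 0.05. This is a generic sketch, not the SPSS procedure itself, and the p values are made up for the example.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted_p, is_significant) for each raw p value."""
    m = len(p_values)  # number of comparisons being corrected for
    results = []
    for p in p_values:
        adjusted = min(1.0, p * m)  # adjusted p values cannot exceed 1
        results.append((adjusted, adjusted < alpha))
    return results


# Made-up raw p values for three pairwise comparisons.
adjusted = bonferroni([0.004, 0.02, 0.5])
```

With three comparisons, a raw p value of 0.02 becomes 0.06 after adjustment and no longer counts as significant, which shows how quickly the correction becomes conservative when the sample is small.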

2.2 Results

Behavioral data. Dogs were above chance for all the gestures except head gazing by FP, where they performed at chance. Specifically, the proportion of correct choices per condition was 0.77 for UP SD, 0.5 for UP HG, 0.8 for FP SD, and 0.48 for FP HG (Fig. 6.2a). Statistical analysis showed no significant differences in accuracy by type of gesture (F(1,4) = 0.433, p = 0.127) or familiarity (F(1,4) = 0.003, p = 0.962). No interaction effect was seen (F(1,4) = 0.003, p = 0.687).

Fig. 6.2 a Proportion of correct choices: mean (+SEM) proportions of participants’ correct choices in each condition. Chance performance (0.5) noted. The x-axis depicts trial mean proportions for the four conditions: UP (unfamiliar person, E1) static distal pointing, UP (unfamiliar person, E1) head gazing, FP (familiar person) static distal pointing, and FP (familiar person) head gazing. b Responses to control: proportions of behavioral responses to control trials (no gestures). The x-axis depicts the choices our participants made: No choice (dog did not approach either cup), Incorrect Cup (dog approached the incorrect cup), Correct Cup (dog approached the cup that contained the food)

In the control trials, participants mostly did not move from the starting point (no-choice responses were seen 60 % of the time for FP and 70 % for UP); on the few occasions when they did move, they could not accurately predict where the food was hidden (incorrect cup responses: 30 % for FP, 20 % for UP) (Fig. 6.2b).

Frequency. The repeated-measures ANOVA showed a significant main effect of location (F(3,12) = 9.14, p = 0.002), and a significant interaction effect of location by gesture (F(3,12) = 10.44, p = 0.001). No other significant effects or interactions were seen.

A post hoc analysis comparing the effects of locations did not show any significant differences. This might reflect the small sample size rather than a small effect, since the effect size for this analysis (d = 1.5) exceeds Cohen’s (1988) convention for a large effect (d = 0.8). However, post hoc tests comparing the effect of location by gesture revealed that dogs looked more frequently at faces when presented with head gazing gestures than with static distal pointing. Similarly, they looked more often at the torso area when presented with distal pointing gestures than with head gazing gestures (HG).

As expected, the analysis restricted to distal pointing gestures (correct vs. incorrect hand) showed that dogs looked only at the correct hand area and never at the incorrect hand area (F(1,4) = 80.35, p = 0.001). No significant effect of familiarity was seen.

Gaze time (duration). Analysis showed a significant main effect of location (F(3,12) = 6.61, p = 0.007) and a significant interaction between location and gesture (F(3,12) = 6.01, p = 0.01). As in the frequency analysis, owing to the small sample size, a post hoc test did not show significant differences between locations. Nevertheless, post hoc tests for the interaction effect of location by gesture showed that the dogs looked longer at the face region than at other regions in the head gaze trials (Figs. 6.3 and 6.4). Similarly, dogs looked marginally longer at the incorrect cup in the head gaze trials.

Fig. 6.3 Total average duration (gaze time) that dogs spent looking at each of the six AOIs for static distal pointing trials as a function of familiarity. AOIs (roughly represented by dashed lines): face area, torso, correct hand area, incorrect hand area (not depicted because dogs did not look in that direction during the 2000 ms after the gesture), correct cup, incorrect cup. a Familiar person (owner), b Unfamiliar person (experimenter)

Fig. 6.4 Total average duration (gaze time) that dogs spent looking at each of the five AOIs for head gazing trials as a function of familiarity. AOIs (roughly represented by dashed lines): face area, torso, correct cup, incorrect cup. a Familiar person (owner), b Unfamiliar person (experimenter)

As with frequency, the gaze time analysis restricted to distal pointing gestures (correct vs. incorrect hand) showed that, since dogs did not look at the incorrect hand area, looks to the correct hand area were significantly longer (F(1,4) = 23.16, p = 0.009) (Figs. 6.3 and 6.4). There was no significant effect of familiarity.

2.3 Discussion of Results

By presenting our eye-tracking system we aimed to add to the limited literature on dogs’ visual scanning of human gestures and to invite researchers to make use of this and other technologies widely employed in the human cognitive sciences.

Previous work with eye-tracking on dogs had already suggested that dogs focus their attention on informative regions of images without any task-specific pre-training. This work had also shown that dogs make use of human ostensive and referential signals. In the study presented here we wanted to further explore dogs’ visual behavior in a relatively natural situation, which allowed them to move freely. The data acquired in the current study raise some interesting issues regarding the use of eye-tracking on dogs in communicative tasks. We discuss three: first, dogs’ focus of visual attention during human gestures; second, the influence of familiarity on dogs’ visual behaviors; third, pointing with the hand versus head-gaze cues. We then conclude with a more general discussion.

2.3.1 Dogs’ Focus of Visual Attention During Human Gestures

Our eye-tracking data show that dogs looked longer and gazed more frequently at the face area than at the other locations in the HG trials, and at the torso area in the SD trials. This can be understood if we consider that dogs’ overt visual attention might, as in humans, function in a reflexive way. Human infants as young as 6 months old shift their attention in the direction of a pointing hand (Bertenthal and Boyer 2012). By this age they also turn their gaze after a model starts turning hers in order to attend to a toy (Gredebäck et al. 2009), and show longer looking times to the attended objects. The apparent similarity of dogs’ visual scanning behavior to human infant behavior is something that should be further explored.

2.3.2 Influence of Familiarity on Dogs’ Visual Behaviors

Our preliminary study did not find any effect of the signaler’s familiarity on dogs’ gaze. Communicative behaviors of dogs toward an unfamiliar person have received some attention. Elgier et al. (2009), studying extinction of the response to a human pointing gesture in an object-choice task, showed that extinction took longer when the signaler was the owner; likewise, reversal learning (following the not-pointed-to container) was faster when the owner gave the cue than when a stranger did. This suggests that familiarity does matter for dogs. However, Kaminski et al. (2011) showed that dogs differentially communicated the location of a hidden object according to whether the item was an object of their own interest or an object of the person’s interest, irrespective of whether the person was their owner or a stranger. Merola et al. (2012) had owners and strangers display emotional information (facial and vocal expressions of either happiness or fear) with respect to an electric fan. The authors found that this behavior elicited both referential looking and gaze alternation in dogs irrespective of the informant’s identity. Nevertheless, dogs did make different use of the emotional information depending on its source: the owners’ emotional reactions influenced the dogs’ subsequent behavior, whereas the strangers’ emotional reactions did not.

Rossi et al. (submitted) found that dogs show referential looking between an unfamiliar person and inaccessible food, matching the result of a previous study that had been conducted with familiar people only (Miklósi et al. 2005). But whereas Miklósi and colleagues also demonstrated that, in an apparent effort to communicate with their owners, dogs increased their vocalizations when engaged in referential looking between food and an inattentive (book-reading) owner, the dogs in the study by Rossi et al., when paired with an unfamiliar person who was being similarly inattentive, engaged in similar amounts of referential looking but did not vocalize more. Taken together, these studies suggest that the vocalization rate of dogs is modulated by familiarity when dogs are trying to get a person’s attention, even though referential looking is unmodulated.

The preliminary eye-tracking study reported in this chapter found that familiarity did not modulate dogs’ behavior in this particular experimental task. This might be related to the nature of the task, which may have been approached by the dogs in our study as a foraging task. Because the dogs in our study, as in the majority of studies using the object-choice task, were pre-trained with warm-up trials before the experimental session, the dogs might have generated some level of expectation about the receipt of food and taken the signaler, regardless of her identity, as a cooperative partner. Further experimentation is needed in order to investigate this possibility.

2.3.3 Pointing with the Hand Versus Head-Gaze Cues

As previously mentioned, dogs looked longer and gazed more often at the face area than other locations during the HG trials. Interestingly, there was a trend towards dogs looking marginally longer at the incorrect cup in the HG trials compared to the time spent looking at the incorrect cup in the SD trials (Fig. 6.4). This suggests that dogs might have scanned the scene more thoroughly during the HG trial. One possible reason is that dogs actually perceived that the signaler was signaling to one of the cups—i.e., recognizing communicative intent—but they failed when deciding which cup to approach. Perhaps this was because the means of communicating information (head-gaze) did not rise above their threshold for the decision. Similarly, a general lack of experience with gaze-following should not be discarded as an explanation for the subjects’ failure to use the cue to find the hidden food.

Our data showed that for both SD and HG dogs did not fail to attend to the source of information. Rather, they failed more frequently at the moment of making the decision during HG, being practically at chance—taking both FP and UP together—at retrieving the food from the cups. That is, for dogs in our study the provided gaze cues were probably ambiguous signals that did not provide enough information about the environment. Similar effects have been shown in other studies with dogs (Hare et al. 2002; Ittyerah and Gaunet 2009). This might be related to the kind of gesture and the motion involved. Pointing with the arm and hand is different from pointing with the head or eyes. Although pointing with the arm and hand may be harder to follow, because the relationship to the intended target is more indirect and abstractly geometrical than a direct look at the target (Cappuccio and Shepherd 2013), it also occupies more of the visual field than pointing with the eyes, likely drawing more attention and thus facilitating recognition of its target. This, along with the possibility that pet dogs might have more experience following arm and hand pointing gestures than following eye and head gazing makes a case for eye- or head-gaze being more difficult to use than arm-hand gestures.

An interesting possibility is suggested by taking a dynamical systems perspective (Spencer et al. 2001) towards our preliminary data. In the SD trials, adding the time spent looking at the arm/hand and at the correct cup yields 42 % (with the familiar person) and 47 % (unfamiliar) of the total time budget directed towards the side that the dogs should choose to obtain the food. In contrast, for the HG trials, only 28 % (familiar person) and 33 % (unfamiliar) of the total time is spent oriented towards the direction the dog needs to go. Thus, the total time in which the dog is physically oriented towards the “correct” direction in the SD trials versus the HG trials could activate an embodied action trace which lasts long enough to support the decision in the SD case but not the HG case. Although our data are currently insufficient to ground or test a dynamical model, we believe that this is an important future goal that will be facilitated by the use of cameras and other technology allowing the dog’s gaze to be tracked in real time. It will also be useful to use data from eye-tracking to look at the sequencing of the saccades the dogs make. In this way, it will become possible to connect comparative psychology to similar work in human developmental psychology (Smith et al. 2011; Spencer et al. 2001) in a more rigorous fashion than the all-too-common statements concerning the mental equivalence of animal subjects to toddlers of a specific age [dogs are frequently described as having the mental capacity of two-year-old children (e.g. Coren 2005; Lakatos et al. 2009)].
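The time-budget arithmetic in the preceding paragraph can be sketched in Python as follows. The function name `share_toward` and all durations are hypothetical and chosen only to show the calculation; they are not the study's data. The sketch also assumes that the "total time budget" is the summed gaze time over all coded AOIs, which the text does not specify.

```python
def share_toward(durations_ms, toward):
    """Fraction of total coded gaze time spent on the AOIs listed in `toward`."""
    total = sum(durations_ms.values())
    if total == 0:
        return 0.0  # no coded gaze time in this trial
    return sum(durations_ms.get(aoi, 0) for aoi in toward) / total


# Made-up gaze times (ms) for one static distal pointing trial.
sd_trial = {
    "face": 600,
    "torso": 300,
    "correct hand": 350,
    "correct cup": 450,
    "incorrect cup": 300,
}
# Time on the arm/hand plus the correct cup, as a share of the whole budget.
share = share_toward(sd_trial, {"correct hand", "correct cup"})
```

For these invented numbers, 800 of 2000 ms fall on the side the dog should choose, giving a share of 0.4. Applying the same calculation per condition is what would allow the SD and HG budgets to be compared.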

If one considers gaze-following from a developmental perspective, it is likely to be the result of a developmental interplay between inherited dispositions to attend to gaze cues and experience with the significant use of such cues (Gomez 2005). Accordingly, it makes sense to think that there are shared underlying mechanisms between species that are capable of following gaze. However, underlying differences in the phylogenetic roots of this ability may help explain why attentional orientation to movement is different in different species.

3 General Discussion

Primates and canids both live in complex social systems. Though the idiosyncrasies of each social structure are different, the convergences between the two, plus the long history of domestication of dogs, might have facilitated orientation responses to humans. This can be seen in the data coming from the small number of eye-tracking studies reported above. Among the great apes there seem to be shared visual scanning patterns toward scenes and both conspecific and non-conspecific faces (with eyes and mouth being particularly salient). Consequently, furthering our knowledge of visual scanning patterns of dogs (and, if possible, of human-reared highly socialized wolves) will be extremely informative for assessing several factors that might be playing a role in the typical human preference for looking at the eye region.

We have shown that it is possible to accurately track the way in which dogs allocate attention to human faces, bodies, and locations when humans are providing social cues relevant to the dog as indicators of food location. However, more fine-grained analyses than we have provided are certainly desirable. For instance, how are dogs allocating attention within components of the face, such as eyes and mouth? How important is the finger in a pointing gesture, or is the dog simply extrapolating from the direction of the whole arm? Such questions are answerable in principle given further improvements in the head-mounted eye-tracking system that we have developed. Our present study cannot answer these questions due to our small sample size and due to the fact that, ironically, the mobility that is one of the main advantages of our eye-tracking system was found to be a consistent source of noise as well, which translated into having to drop several trials from the analysis.

Answering questions such as the aforementioned, and tying them to issues such as prior experience of the dogs with particular people could help tease apart ontogenetic differences. Furthermore, physiological work pairing eye-tracking with hormonal assays could help integrate findings about cognitive processes with proximal mechanisms. Although it is difficult to imagine strong comparative methods that could use eye-tracking with (unsocialized) wolves, comparative work with eye-tracking in humans will help us to quantify the degree of convergence between dogs and humans with respect to visual processing of social cues. Of particular interest might be to investigate the differences in visual behavior of dogs performing different tasks; this may help reveal underlying cognitive processes, making it possible to relate the processes to questions about the adaptive value of their interactions with humans in specific contexts.

The field of comparative animal cognition has grown enormously in the past couple of decades, and yet it still lags behind human cognitive science in many ways. Here we hope to have convinced readers that eye-tracking in particular, and the adoption of technologies used to gather data in human cognitive psychology more generally, can help advance an integrative understanding of the evolution, development, mechanisms, and adaptiveness of canine cognition in its natural environment: the dog–human social group.

References

Axelsson, E., Ratnakumar, A., Arendt, M. L., Maqbool, K., Webster, M. T., Perloski, M., et al. (2013). The genomic signature of dog domestication reveals adaptation to a starch-rich diet. Nature, 495(7441), 360–364.

Bailey, I., Myatt, J. P., & Wilson, A. M. (2013). Group hunting within the Carnivora: Physiological, cognitive and environmental influences on strategy and cooperation. Behavioral Ecology and Sociobiology, 67(1), 1–17.

Bar-Yosef, O. (1998). On the nature of transitions: The middle to upper Palaeolithic and the Neolithic revolution. Cambridge Archaeological Journal, 8(2), 141–163.

Barton, R. A. (1996). Neocortex size and behavioural ecology in primates. Proceedings of the Royal Society B, 263(1367), 173–177.

Bernstein, I. S. (1970). Primate status hierarchies. Primate Behavior, 1, 71–109.

Bertenthal, B., & Boyer, T. (2012). Developmental changes in Infants' visual attention to pointing. Journal of Vision, 12(9), 480.

Brugman, H., & Russel, A. (2004). Annotating multimedia/multi-modal resources with ELAN. Proceedings of the Fourth International Conference on Language Resources and Evaluation (pp. 2065–2068). Citeseer.

Buttelmann, D., & Tomasello, M. (2013). Can domestic dogs (Canis familiaris) use referential emotional expressions to locate hidden food? Animal Cognition, 16(1), 137–145.

Cappuccio, M. L., & Shepherd, S. V. (2013). Pointing hand: Joint attention and embodied symbols. The Hand, an Organ of the Mind: What the Manual Tells the Mental, 303.

Clutton-Brock, J. (1995). Origins of the dog: Domestication and early history. In: J. Serpell (Ed.), The domestic dog: Its evolution, behaviour, and interactions with people (pp. 7–20). Cambridge: Cambridge University Press.

Clutton-Brock, J. (1999). A natural history of domesticated mammals (2nd ed.). New York, USA: Natural History Museum.

Clutton-Brock, J., & Wilson, D. E. (2002). Mammals. Smithsonian handbooks (1st US ed.). London: DK.

Cordoni, G., & Palagi, E. (2008). Reconciliation in wolves (Canis lupus): New evidence for a comparative perspective. Ethology, 114(3), 298–308.

Coren, S. (2005). How dogs think: Understanding the canine mind. New York: Simon and Schuster.

Crockford, S. J., & Iaccovoni, A. (2000). Dogs through time: An archaeological perspective. Proceedings of the 1st ICAZ Symposium on the History of the Domestic Dog. BAR international series, Vol. 889. Oxford, England: Archaeopress.

Dalton, K. M., Nacewicz, B. M., Johnstone, T., Schaefer, H. S., Gernsbacher, M. A., Goldsmith, H. H., Alexander, A. L., et al. (2005). Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience, 8(4), 519–526.

Davis, S. J. M., & Valla, F. R. (1978). Evidence for domestication of dog 12,000 years ago in Natufian of Israel. Nature, 276(5688), 608–610.

Diamond, J. (2002). Evolution, consequences and future of plant and animal domestication. Nature, 418(6898), 700–707.

Driscoll, C. A., Macdonald, D. W., & O’Brien, S. J. (2009). From wild animals to domestic pets, an evolutionary view of domestication. Proceedings of the National Academy of Sciences USA, 106, 9971–9978.

Duchowski, A. T. (2007). Eye tracking methodology: Theory and practice. New York: Springer.

Elgier, A. M., Jakovcevic, A., Mustaca, A. E., & Bentosela, M. (2009). Learning and owner-stranger effects on interspecific communication in domestic dogs (Canis familiaris). Behavioural Processes, 81(1), 44–49.

Felmingham, K. L., Rennie, C., Manor, B., & Bryant, R. A. (2011). Eye tracking and physiological reactivity to threatening stimuli in posttraumatic stress disorder. Journal of Anxiety Disorders, 25(5), 668–673.

Fukuzawa, M., Mills, D. S., & Cooper, J. J. (2005). More than just a word: Non-semantic command variables affect obedience in the domestic dog (Canis familiaris). Applied Animal Behaviour Science, 91(1), 129–141.

Gomez, J. C. (2005). Species comparative studies and cognitive development. Trends in Cognitive Sciences, 9(3), 118–125.

Gredebäck, G., Johnson, S., & Von Hofsten, C. (2009). Eye tracking in infancy research. Developmental Neuropsychology, 35(1), 1–19.

Hare, B., Brown, M., Williamson, C., & Tomasello, M. (2002). The domestication of social cognition in dogs. Science, 298(5598), 1634–1636.

Hare, B., & Tomasello, M. (2005). Human-like social skills in dogs? Trends in Cognitive Sciences, 9(9), 439–444.

Hattori, Y., Kano, F., & Tomonaga, M. (2010). Differential sensitivity to conspecific and allospecific cues in chimpanzees and humans: A comparative eye-tracking study. Biology Letters, 6(5), 610–613.

Hemmer, H. (1990). Domestication: The decline of environmental appreciation (2nd ed.). Cambridge, England: Cambridge University Press.

Hirata, S., Fuwa, K., Sugama, K., Kusunoki, K., & Fujita, S. (2010). Facial perception of conspecifics: Chimpanzees (Pan troglodytes) preferentially attend to proper orientation and open eyes. Animal Cognition, 13(5), 679–688.

Holzman, P. S., Proctor, L. R., Levy, D. L., Yasillo, N. J., Meltzer, H. Y., & Hurt, S. W. (1974). Eye-tracking dysfunctions in schizophrenic patients and their relatives. Archives of General Psychiatry, 31(2), 143.

Ittyerah, M., & Gaunet, F. (2009). The response of guide dogs and pet dogs (Canis Familiaris) to cues of human referential communication (pointing and gaze). Animal Cognition, 12(2), 257–265.

Kaminski, J., Call, J., & Fischer, J. (2004). Word learning in a domestic dog: Evidence for “fast mapping”. Science, 304(5677), 1682–1683.

Kaminski, J., Neumann, M., Brauer, J., Call, J., & Tomasello, M. (2011). Dogs, Canis familiaris, communicate with humans to request but not to inform. Animal Behaviour, 82(4), 651–658.

Kano, F., Hirata, S., Call, J., & Tomonaga, M. (2011). The visual strategy specific to humans among hominids: A study using the gap-overlap paradigm. Vision Research, 51(23–24), 2348–2355.

Kano, F., & Tomonaga, M. (2009). How chimpanzees look at pictures: A comparative eye-tracking study. Proceedings of the Royal Society of London. Series B: Biological, 276(1664), 1949–1955.

Kano, F., & Tomonaga, M. (2010). Attention to Emotional Scenes Including Whole-Body Expressions in Chimpanzees (Pan troglodytes). Journal of Comparative Psychology, 124(3), 287–294.

Kano, F., & Tomonaga, M. (2013). Head-mounted eye tracking of a chimpanzee under naturalistic conditions. PLoS ONE, 8(3), e59785.

Kjaersgaard, A., Pertoldi, C., Loeschcke, V., & Hansen, D. W. (2008). Tracking the gaze of birds. Journal of Avian Biology, 39(4), 466–469.

Koler-Matznick, J. (2002). The origin of the dog revisited. Anthrozoos, 15(2), 98–118.

Lakatos, G., Soproni, K., Doka, A., & Miklósi, A. (2009). A comparative approach to dogs’ (Canis familiaris) and human infants’ comprehension of various forms of pointing gestures. Animal Cognition, 12(4), 621–631.

Larson, G., Karlsson, E. K., Perri, A., Webster, M. T., Ho, S. Y., Peters, J., et al. (2012). Rethinking dog domestication by integrating genetics, archeology, and biogeography. Proceedings of the National Academy of Sciences USA, 109(23), 8878–8883.

Lupo, K. (2011). A dog is for hunting. Ethnozooarchaeology: The present and past of human–animal relationships, 4–12.

Machado, C. J., & Nelson, E. E. (2011). Eye-tracking with Nonhuman primates is now more accessible than ever before. American Journal of Primatology, 73(6), 562–569.

Mech, L. D. (1970). The wolf: The ecology and behavior of an endangered species. (1st ed.). Published for the American Museum of Natural History. Garden City, New York: Natural History Press.

Mech, L. D. (2012). Wolf. New York: Random House Digital.

Merola, I., Prato-Previde, E., & Marshall-Pescini, S. (2012). Dogs’ social referencing towards owners and strangers. PLoS ONE, 7(10), e47653.

Miklósi, A., Pongracz, P., Lakatos, G., Topal, J., & Csanyi, V. (2005). A comparative study of the use of visual communicative signals in interactions between dogs (Canis familiaris) and humans and cats (Felis catus) and humans. Journal of Comparative Psychology, 119(2), 179–186.

Moore, C. E., & Dunham, P. J. (1995). Joint attention: Its origins and role in development. New York: Lawrence Erlbaum Associates, Inc.

Muro, C., Escobedo, R., Spector, L., & Coppinger, R. P. (2011). Wolf-pack (Canis lupus) hunting strategies emerge from simple rules in computational simulations. Behavioural Processes, 88(3), 192–197.

Olsen, S. J. (1985). Origins of the domestic dog: The fossil record. Tucson, Arizona: University of Arizona Press.

Packer, C., & Ruttan, L. (1988). The evolution of cooperative hunting. The American Naturalist, 132(2), 159–198.

Palagi, E., & Cordoni, G. (2009). Postconflict third-party affiliation in Canis lupus: Do wolves share similarities with the great apes? Animal Behaviour, 78(4), 979–986.

Pilley, J. W., & Reid, A. K. (2011). Border collie comprehends object names as verbal referents. Behavioural Processes, 86(2), 184–195.

Ramos, D., & Ades, C. (2012). Two-item sentence comprehension by a dog (Canis familiaris). PLoS ONE, 7(2), e29689.

Reader, S. M., & Laland, K. N. (2002). Social intelligence, innovation, and enhanced brain size in primates. Proceedings of the National Academy of Sciences USA, 99(7), 4436–4441.

Ruusila, V., & Pesonen, M. (2004). Interspecific cooperation in human (Homo sapiens) hunting: The benefits of a barking dog (Canis familiaris). Annales Zoologici Fennici, 41(4), 545–549.

Serpell, J. (1995). The domestic dog: Its evolution, behaviour, and interactions with people. Cambridge: Cambridge University Press.

Shepherd, S. V. (2010). Following gaze: Gaze-following behavior as a window into social cognition. Frontiers in Integrative Neuroscience, 4(5), 1–13.

Shultz, S., & Dunbar, R. I. (2007). The evolution of the social brain: Anthropoid primates contrast with other vertebrates. Proceedings of the Royal Society B: Biological Sciences, 274(1624), 2429–2436.

Smith, L. B., Yu, C., & Pereira, A. F. (2011). Not your mother’s view: The dynamics of toddler visual experience. Developmental Science, 14(1), 9–17.

Somppi, S., Tornqvist, H., Hanninen, L., Krause, C., & Vainio, O. (2011). Dogs do look at images: Eye tracking in canine cognition research. Animal Cognition, 11(1), 167–174.

Soproni, K., Miklósi, A., Topal, J., & Csanyi, V. (2001). Comprehension of human communicative signs in pet dogs (Canis familiaris). Journal of Comparative Psychology, 115(2), 122–126.

Soproni, K., Miklósi, A., Topal, J., & Csanyi, V. (2002). Dogs’ (Canis familiaris) responsiveness to human pointing gestures. Journal of Comparative Psychology, 116(1), 27–34.

Spencer, J. P., Smith, L. B., & Thelen, E. (2001). Tests of a dynamic systems account of the A-not-B error: The influence of prior experience on the spatial memory abilities of two-year-olds. Child Development, 72(5), 1327–1346.

Sullivan, J. O. (1978). Variability in the wolf, a group hunter. In R. L. Hall & H. S. Sharp (Eds.), Wolf and man: Evolution in parallel (pp. 31–40). New York: Academic Press.

Teglas, E., Gergely, A., Kupan, K., Miklósi, A., & Topal, J. (2012). Dogs’ gaze following is tuned to human communicative signals. Current Biology, 22(3), 209–212.

Tinbergen, N. (1963). On aims and methods of ethology. Zeitschrift für Tierpsychologie, 20(4), 410–433.

Vila, C., Savolainen, P., Maldonado, J. E., Amorim, I. R., Rice, J. E., Honeycutt, R. L., et al. (1997). Multiple and ancient origins of the domestic dog. Science, 276(5319), 1687–1689.

Weisdorf, J. L. (2005). From foraging to farming: Explaining the Neolithic Revolution. Journal of Economic Surveys, 19(4), 561–586.

Williams, F. J., Mills, D. S., & Guo, K. (2011). Development of a head-mounted, eye-tracking system for dogs. Journal of Neuroscience Methods, 194(2), 259–265.

Yarbus, A. L., Haigh, B., & Riggs, L. A. (1967). Eye movements and vision (vol 2. vol 5.10). New York: Plenum Press.

Zola, S., & Manzanares, C. (2011). A Simple behavioral task combined with Noninvasive infrared eye-tracking for examining the potential impact of viruses and vaccines on memory and other cognitive functions in humans and Nonhuman primates. Journal of Medical Primatology, 40(4), 282–283.

Acknowledgments

This research and the first author were supported by the Office of Vice Provost for Research of Indiana University. We wish to thank our participants and their owners for participating in this study. The authors would also like to thank Mr. Jeff Sturgeon for technical advice, Dr. Bennett Bertenthal for helpful comments and discussion, Dr. Nicholas Port and the IU School of Optometry for their generous help, and, last but not least, the Indiana Statistical Consulting Center, particularly Stephanie Dickinson, for her guidance.

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Rossi, A., Smedema, D., Parada, F.J., Allen, C. (2014). Visual Attention in Dogs and the Evolution of Non-Verbal Communication. In: Horowitz, A. (eds) Domestic Dog Cognition and Behavior. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-53994-7_6

DOI: https://doi.org/10.1007/978-3-642-53994-7_6

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-53993-0

Online ISBN: 978-3-642-53994-7

eBook Packages: Biomedical and Life Sciences (R0)