Abstract

Mining dense subgraphs on multi-layer graphs is an interesting problem, which has witnessed lots of applications in practice. To overcome the limitations of the quasi-clique-based approach, we propose d-coherent core (d-CC), a new notion of dense subgraph on multi-layer graphs, which has several elegant properties. We formalize the diversified coherent core search (DCCS) problem, which finds kd-CCs that can cover the largest number of vertices. We propose a greedy algorithm with an approximation ratio of \(1 - 1/e\) and two search algorithms with an approximation ratio of 1/4. Furthermore, we propose some optimization techniques to further speed up the algorithms. The experiments verify that the search algorithms are faster than the greedy algorithm and produce comparably good results as the greedy algorithm in practice. As opposed to the quasi-clique-based approach, our DCCS algorithms can fast detect larger dense subgraphs that cover most of the quasi-clique-based results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Dense subgraph mining, that is, finding vertices cohesively connected by internal edges, is an important task in graph mining. In the literature, many dense subgraph notions have been formalized [16], e.g., clique, quasi-clique, k-core, k-truss, k-plex, and k-club. Meanwhile, a large number of dense subgraph mining algorithms have also been proposed.

In many real-world scenarios, a graph often contains various types of edges, which represent various types of relationships between entities. For example, in biological networks, interactions between genes can be detected by different methods [13, 27]; in social networks, users can interact through different social media [21]. In [5, 20], such a graph with multiple types of edges is modeled as a multi-layer graph, where each layer independently accommodates a certain type of edges.

Finding dense subgraphs on multi-layer graphs has witnessed many real-world applications. We show two examples as follows.

Application 1 (joint mining of biological modules) Biological networks represent interactions between proteins and genes. These interactions can be detected by several different methods such as biological experiments, co-expression, gene co-occurrence, and text mining [27]. Finding groups of genes and proteins cohesively interacting with each other, called biological modules, is interesting and important in bioinformatics [13, 20]. However, the interaction data obtained by a certain method are usually very noisy, which makes the detection results unconvincing or unreliable [20]. To filter out the effects of spurious interactions, biologists try to jointly analyze the interactions in a collection of biological networks. In particular, they model these biological networks as a multi-layer graph, where each layer contains all interactions (edges) detected by a certain method. A set of vertices is regarded as a reliable biological module if they are simultaneously densely connected across multiple layers [20].

Application 2 (extracting active co-author groups) Co-authorship networks such as DBLP represent collaboration between authors. Following active co-author groups that frequently occur in research communities helps learn more about research trends and hot spots in research domains. To extract active co-author groups, scientists often organize co-authorship networks as multi-layer graphs. All connections between authors are categorized into different layers either based on time periods [31] or based on conferences [5]. A group of authors are active if they have close collaborations in multiple time periods or in multiple conferences.

Different from dense subgraph mining on single-layer graphs, dense subgraphs on multi-layer graphs must be evaluated by the following two metrics:

-

(1)

Density The interconnections between the vertices must be sufficiently dense on some individual layers.

-

(2)

Support The vertices must be densely connected on a sufficiently large number of layers.

In the literature, the most representative and widely used notion of dense subgraphs on multi-layer graphs is cross-graph quasi-clique [5, 20, 32]. On a single-layer graph, a vertex set Q is a \(\gamma \)-quasi-clique if every vertex in Q is adjacent to at least \(\gamma (|Q| - 1)\) vertices in Q, where \(\gamma \in [0, 1]\). Given a set of graphs \(G_1, G_2, \ldots , G_n\) with the same vertices (i.e., layers in our terminology), \(\gamma \in [0, 1]\) and \(min_s \in {\mathbb {N}}\), a vertex set Q is a cross-graph quasi-clique if Q is a \(\gamma \)-quasi-clique on all of \(G_1, G_2, \ldots , G_n\) and \(|Q| \ge min_s\). Although the cross-graph quasi-clique notion considers both density and support, it has several intrinsic limitations inherited from \(\gamma \)-quasi-cliques.

The lower bound of a vertex’s degree in a \(\gamma \)-quasi-clique Q is \(\gamma (|Q| - 1)\), which linearly increases with |Q|. This constraint is too strict for large dense subgraphs in real graphs. The diameter of a \(\gamma \)-quasi-clique is often too small. As proved in [20], the diameter of a \(\gamma \)-quasi-clique is at most 2 for \(\gamma \ge 0.5\). Hence, in cross-graph quasi-clique mining, a large dense subgraph tends to be decomposed into many quasi-cliques. It leads to the following limitations:

-

(1)

Finding all quasi-cliques is computationally hard and is not scalable to large graphs [5].

-

(2)

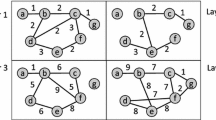

Quasi-cliques are useful in the study of micro-clusters (e.g., motifs [12]) but are not suitable for studying large clusters (e.g., communities). To alleviate this problem, quasi-cliques are merged together to restore large dense subgraphs in post-processing [16]. However, the merging process not only takes additional time but also the quality of the restored subgraphs depends on the discovered quasi-cliques. Since \(\gamma \) and \(min_s\) indirectly affects the properties of the restored subgraphs, it is difficult for users to specify appropriate parameters. For example, in the four-layer graph in Fig. 1, the vertex set \(Q = \{a, b, c, d, e, f, g, h, i, x, y ,z\}\) naturally induces a large dense subgraph on all layers. However, for \(\gamma \ge 0.5\) and \(min_s = 6\), the restored subgraph is \(\{ c, f, i, x, y, z\}\), which miss many vertices in Q.

Hence, there naturally arises the first question:

Q1 What is a better notion of dense subgraphs on multi-layer graphs, which can avoid the limitations of cross-graph quasi-cliques?

Additionally, as discovered in [5], dense subgraphs on multi-layer graphs have significant overlaps. For practical usage, it is better to output a small subset of diversified dense subgraphs with little overlaps. Reference [5] proposes an algorithm to find diversified cross-graph quasi-cliques. One of our goal in this paper is to find dense subgraphs on even larger multi-layer graphs. There will be even more dense subgraphs, so the problem of finding diversified dense subgraphs will be even more critical. Hence, we face the second question:

Q2 How to design efficient algorithms to find diversified dense subgraphs according to the new notion?

To deal with the first question Q1, we present a new notion called d-coherent core (d-CC for short) to characterize dense subgraphs on multi-layer graphs. It is extended from the d-core notion on single-layer graphs [3]. Specifically, given a multi-layer graph \({\mathcal {G}}\), a subset L of layers of \({\mathcal {G}}\) and \(d \in {\mathbb {N}}\), the d-CC with respect to (w.r.t. for short) L is the maximum vertex subset S such that each vertex in S is adjacent to at least d vertices in S on all layers in L. The d-CC w.r.t. L is unique. The d-CC notion is a natural fusion of density and support. In comparison with cross-graph quasi-clique, the constraint d on the degree of the vertices in a d-CC is independent of the size of the d-CC. There is no limit on the diameter of a d-CC, and a d-CC often consists of a large number of densely connected vertices. The d-CC notion has the following advantages:

-

(1)

A d-CC can be computed in linear time w.r.t. the size of a multi-layer graph.

-

(2)

A d-CC itself is a large dense subgraph. It is unnecessary to use a post-processing phase to restore large dense subgraphs. The parameter d directly controls the properties of the expected results. For example, in Fig. 1, for \(d = 3\), the d-CC on all layers is \(\{a, b, c, d, e, f, g, h, i, x, y, z\}\), which is directly the large dense subgraph in the multi-layer graph.

-

(3)

The d-CC notion inherits the hierarchy property of d-core: The \((d + 1)\)-CC w.r.t. L is a subset of the d-CC w.r.t. L; The d-CC w.r.t. L is a subset of the d-CC w.r.t. \(L^{\prime }\) if \(L^{\prime } \subseteq L\).

The d-CC notion overcomes the limitations of cross-graph quasi-cliques. Based on this notion, we formalize the diversified coherent core search (DCCS) problem that finds dense subgraphs on multi-layer graphs with little overlaps: Given a multi-layer graph \({\mathcal {G}}\), a minimum degree threshold d, a minimum support threshold s, and the number k of d-CCs to be detected, the DCCS problem finds k most diversified d-CCs recurring on at least s layers of \({\mathcal {G}}\). Like [2, 5], we assess the diversity of the k discovered d-CCs by the number of vertices they cover and try to maximize the diversity of these d-CCs. We prove that the DCCS problem is NP-complete.

To deal with the second question Q2, we propose a series of approximation algorithms for the DCCS problem. First, we propose a simple greedy algorithm, which finds kd-CCs in a greedy manner. The algorithm has an approximation ratio of \(1 - 1/e\). However, it must compute all candidate d-CCs and therefore is not scalable to large multi-layer graphs.

To prune unpromising candidate d-CCs early, we propose two search algorithms, namely the bottom-up search algorithm and the top-down search algorithm. In both algorithms, the process of generating candidate d-CCs and the process of updating diversified d-CCs interact with each other. Many d-CCs that are unpromising to appear in the final results are pruned in early stage. The bottom-up and top-down algorithms adopt different search strategies. In practice, the bottom-up algorithm is preferable if \(s < l/2\), and the top-down algorithm is preferable if \(s \ge l/2\), where l is the number of layers. Both of the algorithms have an approximation ratio of 1 / 4.

To further speed up the algorithms for the DCCS problem, we develop some optimization techniques. We introduce an index structure, which organizes all the vertices hierarchically. Base on this index, we propose a faster d-CC computation method with less examination of vertices. The faster d-CC computation method can be applied to all the proposed algorithms.

We conducted extensive experiments on a variety of real-world datasets to evaluate the proposed algorithms and obtain the following results:

-

(1)

The bottom-up and top-down algorithms are 1–2 orders of magnitude faster than the greedy algorithm for small and large s, respectively.

-

(2)

The optimized greedy, bottom-up, and top-down algorithms run faster than the original ones, respectively.

-

(3)

The practical approximation quality of the bottom-up and top-down algorithms is comparable to that of the greedy algorithm.

-

(4)

Our DCCS algorithms outperform the cross-graph quasi-clique mining algorithms [5, 20, 32] on multi-layer graphs in terms of both execution time and result quality.

The rest of the paper is organized as follows. Section 2 introduces the basic concepts and formalizes the DCCS problem. Section 3 introduces a method for computing d-CCs. Section 4 presents the greedy algorithm. The bottom-up and the top-down search algorithms are described in Sects. 5 and 6, respectively. The optimized algorithms are proposed in Sect. 7. The experimental results are reported in Sect. 8. Section 9 reviews the related work, and Sect. 10 concludes this paper.

2 Problem definition

In this section, we introduce some basic concepts and formalize the problem.

Multi-layer graphs A multi-layer graph is a set of graphs \(\{G_1, G_2, \dots , G_l\}\), where l is the number of layers, and \(G_i\) is the graph on layer i. Without loss of generality, we assume that \(G_1, G_2, \dots , G_l\) contain the same set of vertices because if a vertex is missing from layer i, we can add it to \(G_i\) as an isolated vertex. Hence, a multi-layer graph \(\{G_1, G_2, \dots , G_l\}\) can be equivalently represented by the tuple \((V, E_1, E_2, \dots , E_l)\), where V is the universal vertex set and \(E_i\) is the edge set of \(G_i\).

Let V(G) and E(G) be the vertex set and the edge set of graph G, respectively. For a vertex \(v \in V(G)\), let \(N_G(v) = \{u | (v, u) \in E(G)\}\) be the set of neighbors of v in G, and let \(d_G(v) = |N_G(v)|\) be the degree of v in G. The subgraph of G induced by a vertex subset \(S \subseteq V(G)\) is \(G[S] = (S, E[S])\), where E[S] is the set of edges with both endpoints in S.

Symbols and notations related to basic concepts (in Sect. 2) | |

G | Single-layer graph |

\({\mathcal {G}}\) | Multi-layer graph |

u, v, w | Vertex |

(u, v) | Edge |

\(V(G) (V({\mathcal {G}}))\) | The vertex set of single-layer graph G (multi-layer \({\mathcal {G}}\)) |

\(G[S] ({\mathcal {G}}[S])\) | The subgraph of single-layer graph G (multi-layer graph \({\mathcal {G}}\)) induced by vertex subset S |

\(E_i({\mathcal {G}}), E(G_i)\) | The edge set on layer i of multi-layer graph \({\mathcal {G}}\) |

\(l({\mathcal {G}})\) | The number of layers in multi-layer graph \({\mathcal {G}}\) |

[n] | The set \(\{1, 2, \dots , n\}\) |

\(d_{G}(v)\) | The degree of vertex v in single-layer graph G |

\(C^{d}(G)\) | The d-core on single-layer graph G |

\(C^{L}_{d}({\mathcal {G}})\) | The d-CC on multi-layer graph \({\mathcal {G}}\) w.r.t. layer subset L |

Symbols and notations related to theDCCSproblem (in Sect. 2) | |

d | Degree threshold |

k | The desired number of diversified d-CCs |

s | Support threshold |

\({\mathcal {F}}_{d, s}({\mathcal {G}})\), \({\mathcal {F}}\) | The set of d-CCs on s layers of multi-layer graph \({\mathcal {G}}\) |

\({\mathcal {R}}\) | The result set of diversified d-CCs |

\(\textsf {Cov}({\mathcal {R}})\) | The cover set of \({\mathcal {R}}\), that is, \(\bigcup _{C \in {\mathcal {R}}} C\) |

Symbols and notations related to the algorithms (in Sects. 5and6) | |

\(C^{*}({\mathcal {R}})\) (in Sect. 5.1) | The d-CC in \({\mathcal {R}}\) that covers the minimum number of vertices exclusively by itself |

\(U_{L}^{d}({\mathcal {G}})\) (in Sect. 6.1) | The potential vertex set w.r.t. the d-CC \(C_{L}^{d}(G)\) |

\(M_L (N_L)\) (in Sect. 6.2) | The set of layer numbers in Class 1 (Class 2) in L |

I (in Sect. 6.3) | The index of the multi-layer graph |

Given a multi-layer graph \({\mathcal {G}}= (V, E_1, E_2, \dots , E_l)\), let \(l({\mathcal {G}})\) be the number of layers of \({\mathcal {G}}\), \(V({\mathcal {G}})\) the vertex set of \({\mathcal {G}}\), and \(E_i({\mathcal {G}})\) the edge set of the graph on layer i. The multi-layer subgraph of \({\mathcal {G}}\) induced by a vertex subset \(S \subseteq V({\mathcal {G}})\) is \({\mathcal {G}}[S] = (S, E_1[S], E_2[S], \dots , E_l[S])\), where \(E_i[S]\) is the set of edges in \(E_i\) with both endpoints in S.

d-coherent cores We define the notion of d-coherent core (d-CC) on a multi-layer graph by extending the d-core notion on a single-layer graph [3]. A graph G is d-dense if \(d_G(v) \ge d\) for all \(v \in V(G)\), where \(d\in {\mathbb {N}}\). The d-core of graph G, denoted by \(C^{d} (G)\), is the maximum subset \(S \subseteq V(G)\) such that G[S] is d-dense. As stated in [3], \(C^{d} (G)\) is unique, and we have \(C^{d}(G) \subseteq C^{d - 1}(G) \subseteq \dots \subseteq C^{1}(G) \subseteq C^{0}(G)\) for \(d \in {\mathbb {N}}\).

For ease of notation, let \([n] = \{1, 2, \ldots , n\}\), where \(n \in {\mathbb {N}}\). Let \({\mathcal {G}}\) be a multi-layer graph and \(L \subseteq [l({\mathcal {G}})]\) be a non-empty subset of layer numbers. For \(S \subseteq V({\mathcal {G}})\), the induced subgraph \({\mathcal {G}}[S]\) is d-dense w.r.t. L if \(G_i[S]\) is d-dense for all \(i \in L\). The d-coherent core (d-CC) of \({\mathcal {G}}\) w.r.t. L, denoted by \(C^{d}_{L}({\mathcal {G}})\), is the maximum subset \(S \subseteq V({\mathcal {G}})\) such that \({\mathcal {G}}[S]\) is d-dense w.r.t. L. Similar to d-core, the concept of d-CC has the following three properties.

Property 1

(Uniqueness) Given a multi-layer graph \({\mathcal {G}}\) and a subset \(L \subseteq [l({\mathcal {G}})]\), \(C^{d}_{L}({\mathcal {G}})\) is unique for \(d \in {\mathbb {N}}\).

Property 2

(Hierarchy) Given a multi-layer graph \({\mathcal {G}}\) and a subset \(L \subseteq [l({\mathcal {G}})]\), we have \(C^{d}_{L}({\mathcal {G}}) \subseteq C^{d - 1}_{L}({\mathcal {G}}) \subseteq \dots \subseteq C^{1}_{L}({\mathcal {G}}) \subseteq C^{0}_L({\mathcal {G}})\) for \(d \in {\mathbb {N}}\).

Property 3

(Containment) Given a multi-layer graph \({\mathcal {G}}\) and two subsets \(L, L^{\prime } \subseteq [l({\mathcal {G}})]\), if \(L \subseteq L^{\prime }\), we have \(C^{d}_{L^{\prime }}({\mathcal {G}}) \subseteq C^{d}_{L}({\mathcal {G}})\) for \(d \in {\mathbb {N}}\).

For readability, we put the some proofs of properties, lemmas and theorems in Appendix A of this paper.

Problem statement Given a multi-layer graph \({\mathcal {G}}\), a minimum degree threshold \(d \in {\mathbb {N}}\) and a minimum support threshold \(s \in {\mathbb {N}}\), let \({\mathcal {F}}_{d, s}({\mathcal {G}})\) be the set of d-CCs of \({\mathcal {G}}\) w.r.t. all subsets \(L \subseteq [l({\mathcal {G}})]\) such that \(|L| = s\). When \({\mathcal {G}}\) is large, \(|{\mathcal {F}}_{d, s}({\mathcal {G}})|\) is often very large, and a large number of d-CCs in \({\mathcal {F}}_{d, s}({\mathcal {G}})\) significantly overlap with each other. For practical usage, it is better to output k diversified d-CCs with little overlaps, where k is a number specified by users. Like [2, 5], we assess the diversity of the discovered d-CCs by the number of vertices they cover and try to maximize the diversity of these d-CCs. Let the cover set of a collection of sets \({\mathcal {R}}= \{R_1, R_2, \dots , R_n\}\) be \(\textsf {Cov}({\mathcal {R}}) = \bigcup _{i = 1}^{n} R_i\). We formally define the DiversifiedCoherentCoreSearch (DCCS) problem as follows.

Given a multi-layer graph \({\mathcal {G}}\), a minimum degree threshold \(d \in {\mathbb {N}}\), a minimum support threshold \(s \in {\mathbb {N}}\), and the number \(k \in {\mathbb {N}}\) of d-CCs to be discovered, find the subset \({\mathcal {R}}\subseteq {\mathcal {F}}_{d, s}({\mathcal {G}})\) such that (1) \(|{\mathcal {R}}| = k\), and (2) \(|\textsf {Cov}({\mathcal {R}})|\) is maximized. The d-CCs in \({\mathcal {R}}\) are called the top-kdiversifiedd-CCs of \({\mathcal {G}}\) on s layers.

Theorem 1

The DCCS problem is NP-complete.

Figure 2 shows a six-layer graph \({\mathcal {G}}\). Let \(d= 3\), \(s = 2\), and \(k = 2\). The d-cores on all layers are highlighted in blue. The result of the DCCS problem is \({\mathcal {R}}= \{ C^{d}_{ \{ 1, 6 \} }({\mathcal {G}}), C^{d}_{ \{ 4, 5\} }({\mathcal {G}}) \}\), where \(C^{d}_{ \{ 1, 6 \} }({\mathcal {G}}) = \{ a, b, c, d, n, w, y\}\) and \(C^{d}_{ \{ 4, 5\} }({\mathcal {G}}) = \{ a, b, c, d, f, i, k, x, z\}\). We have \(|\textsf {Cov}({\mathcal {R}})| = 12\).

3 The d-CC computation algorithm

In this section, we propose an algorithm for finding \(C^{d}_{L}({\mathcal {G}})\), the d-CC in a multi-layer graph \({\mathcal {G}}\) w.r.t. a set L of layer numbers. This algorithm is a key component of the algorithms described in the next sections for the DCCS problem.

Our d-CC computation algorithm is inspired by the d-core decomposition algorithm [3]. In this d-core decomposition algorithm, the vertices whose degrees are less than d are iteratively removed from the input graph. Finally, the remaining vertices form the d-core of the graph. Our d-CC computation algorithm follows a similar paradigm. By the definition of d-CC, each vertex v in \(C^{d}_{L}({\mathcal {G}})\) is adjacent to at least d vertices in \(C^{d}_{L}({\mathcal {G}})\) on each layer in L. According to this fact, the central idea of our algorithm for computing \(C^{d}_{L}({\mathcal {G}})\) is removing from \({\mathcal {G}}\) the vertices that cannot satisfy this degree constraint. Specifically, let \(m(v) = \min _{i \in L} d_{G_i}(v)\) be the minimum degree of vertex v on all layers in L. If \(m(v) < d\), the degree of v must be less than d on a certain layer in L, so we have \(v \not \in C^{d}_{L}({\mathcal {G}})\) and thus remove v from \({\mathcal {G}}\). Notice that removing v may cause some vertices adjacent to v on some layers in L not satisfy the degree constraint any more. Hence, we iteratively remove all such irrelevant vertices from \({\mathcal {G}}\) until \(m(v) \ge d\) for all vertices v remaining in \({\mathcal {G}}\). Finally, the set of vertices remaining in \({\mathcal {G}}\) is identical to \(C^{d}_{L}({\mathcal {G}})\).

In the following, we present an efficient implementation of the d-CC computation method. The dCC procedure in Fig. 3 describes the pseudocode of this algorithm. The procedure takes as input a multi-layer graph \({\mathcal {G}}\), an integer \(d \in {\mathbb {N}}\), and a subset \(L \subseteq [l({\mathcal {G}})]\) and outputs the d-CC \(C^{d}_{L}({\mathcal {G}})\) in linear time w.r.t. the size of \({\mathcal {G}}\). It works as follows. At the beginning, we compute m(v) for all vertices \(v \in V({\mathcal {G}})\) (line 1). In the dCC procedure, we scan all vertices of \({\mathcal {G}}\) only once and update m(v) when needed. To this end, we exploit four arrays, namely ver, pos, sbin, and bin.

-

The array ver stores all vertices v of \({\mathcal {G}}\) in the ascending order of m(v). In ver, the consecutive vertices having the same value of m(v) constitute a bin in ver.

-

The array pos records the position (offset) of each vertex v of \({\mathcal {G}}\) in the array ver, i.e., \(\texttt {ver}[\texttt {pos}[v]] = v\).

-

The array sbin records the size of each bin in ver, i.e., \(\texttt {sbin}[i]\) is the number of vertices v such that \(m(v) = i\).

-

The array bin records the starting position (offset) of each bin in ver, i.e., \(\texttt {bin}[i]\) is the position of the first vertex v in ver such that \(m(v) = i\).

Lines 2–13 set up the arrays. Let \(M = \max _{v \in V({\mathcal {G}})} m(v)\). Obviously, the respective size of sbin and bin is \(M + 1\). First, we scan all vertices in \(V({\mathcal {G}})\) to determine the size of each bin (lines 4–5). Then, we accumulate the bins’ size in sbin to obtain the starting position of each bin (lines 6–8). Based on the starting positions, we scan all vertices of \({\mathcal {G}}\) and place them into ver and pos accordingly (lines 9–13).

In the main iterations (lines 14–27), each time we check the first vertex v in the array ver (line 15). If \(m(v) < d\), we have \(v \not \in C^{d}_{L}({\mathcal {G}})\), so we delete v from the array ver (line 17) and remove v and its incident edges from all layers of \({\mathcal {G}}\) (line 18). Then, for each vertex u adjacent to v on some layers, m(u) may change. Note that m(u) can be decreased by at most 1 since we remove only one neighbor of u from \({\mathcal {G}}\). Therefore, we recompute m(u) as \(m(u)^{\prime }\) (line 20). If \(m(u)^{\prime } \ne m(u)\), we move vertex u from the m(u)th bin to the \((m(u)-1)\)th bin. Let w be the first vertex in the m(u)th bin (line 22). We can exchange the positions of u and w in the array ver and exchange \(\texttt {pos}[w]\) and \(\texttt {pos}[v]\) accordingly (lines 23–25). After that, \(\texttt {bin}[m(u)]\) is increased by 1 to indicate that u is removed from its bin (line 26).

When the iterations terminate, all the vertices remaining in \({\mathcal {G}}\) are returned as \(C^{d}_{L}({\mathcal {G}})\) (line 28). The correctness of the dCC procedure is obvious.

Complexity analysis Let \(n = |V({\mathcal {G}})|\), \(l = l({\mathcal {G}})\), \(m_{i} = |E_i({\mathcal {G}})|\), and \(m = |\bigcup _{i \in [l({\mathcal {G}})]} E_i({\mathcal {G}}) |\). Line 1 computes m(v) for all vertices \(v \in V({\mathcal {G}})\) in O(nl) time. Lines 2–13 set up elements in the four arrays in O(n) time. In the main iterations, for each vertex u, the time for updating m(u) and changing the position of u is O(l). Let \(d_{{\mathcal {G}}}(u)\) denote \(|\bigcup _{i \in [l({\mathcal {G}})]} N_{G_i}(u)|\). The vertex u can be accessed by its neighbors at most \(d_{{\mathcal {G}}}(u)\) times. Thus, the total time cost of the main iterations is at most \(O(\sum _{u \in V({\mathcal {G}})} d_{{\mathcal {G}}}(u)l) = O(2ml)\). Consequently, the time complexity of dCC is \(O(nl + n + 2ml) = O(nl+ ml)\). The dCC procedure needs O(n) extra space to store the three arrays and m(v) of each vertex \(v \in V({\mathcal {G}})\). Thus, the space complexity of dCC is O(n).

4 The greedy algorithm

A straightforward solution to the DCCS problem is generating all candidate d-CCs and selecting kd-CCs that cover the maximum number of vertices. However, the search space of all k-combinations of d-CCs is extremely large, so this method is intractable even for small multi-layer graphs. Alternatively, fast algorithms with provable performance guarantees may be more preferable. This section proposes a simple greedy algorithm with an approximation ratio of \(1 - 1/e\). Before describing the algorithm, we present the following lemma based on Property 3. The lemma enables us to remove irrelevant vertices earlier.

Lemma 1

(Intersection bound) Given a multi-layer graph \({\mathcal {G}}\) and two subsets \(L_1, L_2 \subseteq [l({\mathcal {G}})]\), we have \(C_{L_1 \cup L_2}^{d} ({\mathcal {G}}) \subseteq C_{L_1}^{d} ({\mathcal {G}}) \cap C_{L_2}^{d}({\mathcal {G}})\) for \(d \in {\mathbb {N}}\).

The greedy algorithm GD-DCCS is described in Fig. 4. The input is a multi-layer graph \({\mathcal {G}}\) and \(d, s, k \in {\mathbb {N}}\). GD-DCCS works as follows. Line 1 preprocesses \({\mathcal {G}}\) by Procedure VertexDeletion, which will be described later. Line 2 initializes the candidate d-CC set \({\mathcal {F}}\) and the result set \({\mathcal {R}}\) to be empty. Lines 3–4 compute the d-core \(C^d(G_i)\) on each layer \(G_i\) by the algorithm in [3]. Indeed, we have \(C^{d}_{\{i\}}({\mathcal {G}}) = C^{d}(G_i)\). In order to find \(C_{L}^d({\mathcal {G}})\) for each \(L \subseteq [l({\mathcal {G}})]\) with \(|L| = s\), we first compute the intersection \(S = \bigcap _{i \in L} C^{d}(G_i)\) (line 6). By Lemma 1, we have \(C_{L}^{d}({\mathcal {G}}) \subseteq S\). Thus, we compute \(C_{L}^{d}({\mathcal {G}})\) by Procedure dCC on the induced subgraph \({\mathcal {G}}[S]\) instead of on \({\mathcal {G}}\) (line 7) and add \(C_{L}^{d}({\mathcal {G}})\) to \({\mathcal {F}}\) (line 8).

Lines 9–11 select kd-CCs from \({\mathcal {F}}\) in a greedy manner. Each time, we select the d-CC \(C^* \in {\mathcal {F}}\) that maximizes \(|\textsf {Cov}({\mathcal {R}}\cup \{C^*\})| - |\textsf {Cov}({\mathcal {R}})|\), add \(C^*\) to \({\mathcal {R}}\), and remove \(C^*\) from \({\mathcal {F}}\). Finally, \({\mathcal {R}}\) is output as the result (line 12).

Complexity analysis Let \(l = l({\mathcal {G}})\), \(n = |V({\mathcal {G}})|\) and \(m = |\bigcup _{i = 1}^{l} E_i({\mathcal {G}}) |\). Procedure dCC in line 7 runs in O(ms) time as shown in Sect. 3. Line 10 runs in \(O(n|{\mathcal {F}}|)\) time since computing \(|\textsf {Cov}({\mathcal {R}}\cup \{C\})| - |\textsf {Cov}({\mathcal {R}})|\) takes O(n) time for each \(C \in {\mathcal {F}}\). In addition, \(|{\mathcal {F}}| = {l \atopwithdelims ()s}\). Therefore, the time complexity of GD-DCCS is \(O((ns + ms + kn){l \atopwithdelims ()s})\), and the space complexity is \(O(n{l \atopwithdelims ()s})\).

Theorem 2

The approximation ratio of GD-DCCS is \(1 - \frac{1}{e}\).

Proof

At lines 1–8, GD-DCCS exactly finds \({\mathcal {F}}\), the set of all candidate d-CCs. The remaining part of GD-DCCS aims at finding the set \({\mathcal {R}}\) of kd-CCs from \({\mathcal {F}}\) that maximizes \(|\textsf {Cov}({\mathcal {R}})|\). This is an instance of the max-k-cover problem [2]. Lines 9–11 of GD-DCCS actually use the greedy algorithm [2] to solve this problem. The approximation ratio of this greedy algorithm is \(1 - 1/e\) [2]. Thus, the theorem holds. \(\square \)

Vertex deletion procedure In line 1 of GD-DCCS, we apply Procedure VertexDeletion to remove some unpromising vertices from \({\mathcal {G}}\). Let \(\textsf {Supp}(v)\) denote the number of layers i such that \(v \in C^d(G_i)\). If \(\textsf {Supp}(v) < s\), v cannot be contained in any d-CCs \(C_L^d({\mathcal {G}})\) with \(|L| = s\). Thus, we can iteratively remove all such vertices from \({\mathcal {G}}\) until \(\textsf {Supp}(v) \ge s\) for all vertices v remaining in \({\mathcal {G}}\).

The VertexDeletion procedure takes as input a multi-layer graph \({\mathcal {G}}\) and two integers \(d, s \in {\mathbb {N}}\). It removes the vertices v with \(\textsf {Supp}(v) < s\) in iterations. In each iteration, we compute the d-core \(C^{d}(G_i)\) on each layer i (lines 2–3) and then remove the vertices v from all layers in \({\mathcal {G}}\) if \(\textsf {Supp}(v) < s\) (line 6). This process is repeated until \(\textsf {Supp}(v) \ge s\) for all vertices v remaining in \({\mathcal {G}}\). Finally, the remaining graph \({\mathcal {G}}\) is returned as the result (line 8).

Limitations As verified by the experimental results in Sect. 8, GD-DCCS is not scalable to large multi-layer graphs. This is due to the following reasons:

-

(1)

GD-DCCS must keep all candidate d-CCs in \({\mathcal {F}}\). Since \(|{\mathcal {F}}| = {l({\mathcal {G}}) \atopwithdelims ()s}\), we have \(|{\mathcal {F}}| = \frac{{(l({\mathcal {G}}) - s)}^{s}}{\sqrt{2 \pi s} s^s} {(\frac{l({\mathcal {G}})}{l({\mathcal {G}}) - s})}^{l({\mathcal {G}}) + 1/2}\) according to Stirling’s approximation [9]. Obviously, for fixed s, \(|{\mathcal {F}}|\) grows exponentially as \(l({\mathcal {G}})\) increases. When \({\mathcal {F}}\) cannot fit in main memory, we store all d-CCs on the disk, and the space cost is \(O({l({\mathcal {G}}) \atopwithdelims ()s} n)\).

-

(2)

The exponential growth on the size of \({\mathcal {F}}\) significantly increases the time cost for selecting k diversified d-CCs from \({\mathcal {F}}\) (lines 9–11). When \({\mathcal {F}}\) is stored on the disk, the I/O cost for d-CC selection is \(O({l({\mathcal {G}}) \atopwithdelims ()s} n k/B)\), where B is the block size. The I/O cost is very high for large graphs.

-

(3)

The candidate d-CC generation phase (lines 2–8) is separate from the diversified d-CC selection phase (lines 9–11). There is no guidance on candidate generation, so a large number of unpromising candidates are generated in vain.

5 The bottom-up algorithm

This section proposes a bottom-up approach to the DCCS problem. In this approach, the candidate d-CC generation phase and the top-k diversified d-CC selection phase are interleaved. On the one hand, we maintain a set of temporary top-k diversified d-CCs and use each newly generated d-CC to update them. On the other hand, we guide candidate d-CC generation by the temporary top-k diversified d-CCs.

In addition, candidate d-CCs are generated in a bottom-up manner. Like the frequent pattern mining algorithm [30], we organize all d-CCs by a search tree and search candidate d-CCs on it. The bottom-up d-CC generation has the following advantage: If the d-CC w.r.t. subset L\((|L| < s)\) is unlikely to improve the quality of the temporary top-k diversified d-CCs, the d-CCs w.r.t. all \(L^{\prime }\) such that \(L \subseteq L^{\prime }\) and \(|L^{\prime }| = s\) need not be generated. As verified by the experimental results in Sect. 8, the bottom-up approach reduces the search space by 80–90% in comparison with the greedy algorithm and thus saves large amount of time. Moreover, the bottom-up DCCS algorithm has an approximation ratio of 1 / 4.

5.1 Maintenance of top-k diversified d-CCs

Let \({\mathcal {R}}\) be a set of temporary top-k diversified d-CCs. Initially, \({\mathcal {R}}= \emptyset \). To improve the quality of \({\mathcal {R}}\), we try to update \({\mathcal {R}}\) whenever we find a new candidate d-CC C. In particular, we update \({\mathcal {R}}\) with C by one of the following rules:

Rule 1: If \(|{\mathcal {R}}| < k\), we directly add C into \({\mathcal {R}}\) to enlarge its coverage.

Rule 2: For \(C^{\prime } \in {\mathcal {R}}\), let \({\varDelta }({\mathcal {R}}, C^{\prime }) = C^{\prime } - \textsf {Cov}({\mathcal {R}}- \{C^{\prime }\})\), \({\varDelta }({\mathcal {R}}, C^{\prime })\) is the set of vertices in \(\textsf {Cov}({\mathcal {R}})\) exclusively covered by \(C^{\prime }\). Let \(C^*({\mathcal {R}}) = \arg \min _{C^{\prime } \in {\mathcal {R}}} |{\varDelta }({\mathcal {R}}, C^{\prime })|\), \(C^*({\mathcal {R}})\) exclusively covers the least number of vertices by itself among all d-CCs in \({\mathcal {R}}\). If \(|{\mathcal {R}}| = k\), we can replace \(C^*({\mathcal {R}})\) by a new d-CC C if the coverage of \({\mathcal {R}}\) can be enlarged by a sufficiently large factor. According to the framework for solving the max-k-cover problem [2], we replace \(C^*({\mathcal {R}})\) with C if \(|{\mathcal {R}}| = k\) and

As proved in [2], applying these two rules can lead to a final result with guaranteed performance. The details of Update are described in Appendix B of this paper. By using two index structures, Update runs in \(O(\max \{|C|, |C^{*}({\mathcal {R}})|\})\) time.

5.2 Bottom-up candidate generation

Candidate d-CCs \(C^d_L({\mathcal {G}})\) with \(|L| = s\) are generated in a bottom-up fashion. As shown in Fig. 5, all d-CCs \(C^d_L({\mathcal {G}})\) are conceptually organized by a search tree, in which \(C_L^d({\mathcal {G}})\) is the parent of \(C_{L^{\prime }}^d({\mathcal {G}})\) if \(L \subset L^{\prime }\), \(|L^{\prime }| = |L| + 1\), and the only number \(i \in L^{\prime } - L\) satisfies \(i > \max (L)\), where \(\max (L)\) is the largest number in L (specially, \(\max (\emptyset ) = -\infty \)). Conceptually, the root of the search tree is \(C^{d}_{\emptyset }({\mathcal {G}}) = V({\mathcal {G}})\).

The d-CCs in the search tree are generated in a depth-first order. The depth-first search is realized by recursive Procedure BU-Gen described in Fig. 6. The BU-Gen takes as input a multi-layer graph \({\mathcal {G}}\), integers \(d, s, k \in {\mathbb {N}}\), two-layer subset \(L, L_Q \subseteq [l({\mathcal {G}})]\), the d-CC \(C^{d}_{L}({\mathcal {G}})\) w.r.t. L, and the result set \({\mathcal {R}}\). The BU-Gen procedure works as follows.

Given the d-CC \(C^d_L({\mathcal {G}})\), we first expand L by adding a layer number j into L. Notably, the input layer subset \(L_Q\) records some layers that cannot be used to expand L. \(L_Q\) is generated by the pruning method which will be described later. Therefore, let \(L_{P} = \{ j | \max (L) < j \le l({\mathcal {G}})\} - L_Q\) (line 1). \(L_P\) is the set of layers potentially used to expand L. We also initialize a layer subset \(L_R\) to collect all layers in \(L_P\) that can be actually used to expand L (line 2). For each \(j \in L_P\), let \(L^{\prime } = L \cup \{j\}\). By Lemma 1, we have \(C_{L^{\prime }}^{d}({\mathcal {G}}) \subseteq C_L^d({\mathcal {G}}) \cap C_{\{j\}}^d({\mathcal {G}}) = C_L^d({\mathcal {G}}) \cap C^d(G_j)\). Thus, we can compute \(C_{L^{\prime }}^d({\mathcal {G}})\) on the induced subgraph \({\mathcal {G}}[C_L^d({\mathcal {G}}) \cap C^d(G_j)]\) by Procedure dCC described in Sect. 3 (line 6 and line 20). Next, we process \(C_{L^{\prime }}^d({\mathcal {G}})\) according to the following cases:

Case 1 (lines 7–8) If \(|{\mathcal {R}}| < k\) and \(|L^{\prime }| = s\), we update \({\mathcal {R}}\) with \(C_{L^{\prime }}^d({\mathcal {G}})\) by Rule 1 specified in Sect. 5.2.

Case 2 (lines 9–10) If \(|{\mathcal {R}}| < k\) and \(|L^{\prime }| < s\), j can be used to expand L. Thus, we add j into \(L_R\).

Case 3 (lines 21–22) If \(|{\mathcal {R}}| = k\) and \(|L^{\prime }| = s\), we update \({\mathcal {R}}\) with \(C_{L^{\prime }}^d({\mathcal {G}})\) by Rule 2 specified in Sect. 5.2.

Case 4 (lines 23–25) If \(|{\mathcal {R}}| = k\) and \(|L^{\prime }| < s\), we check if \(C_{L^{\prime }}^d({\mathcal {G}})\) satisfies Eq. (1) to update \({\mathcal {R}}\). If not satisfied, none of the descendants of \(C_{L^{\prime }}^d({\mathcal {G}})\) is qualified to be a candidate, so we prune the entire subtree rooted at \(C_{L^{\prime }}^d({\mathcal {G}})\); otherwise, j can be used to expand L so we add j into \(L_R\). The correctness is guaranteed by the following lemma.

Lemma 2

(Search tree pruning) For a d-CC \(C^d_{L}({\mathcal {G}})\), if \(C^d_{L}({\mathcal {G}})\) does not satisfy Eq. (1), none of the descendants of \(C^d_{L}({\mathcal {G}})\) can satisfy Eq. (1).

Pruning methods To further improve the efficiency of the bottom-up search, we exploit several pruning methods when \(|{\mathcal {R}}| = k\) (Cases 3 and 4).

Method 1: support-based pruning (lines 12–13) For the d-CC \(C^d_L({\mathcal {G}})\), we need to add \(s - |L|\) layers into L to obtain a d-CC on s layers to update \({\mathcal {R}}\). Let \(D \subseteq L_P\) be a layer subset such that \(|D| = s - |L|\), and let \(I_{D} = \cap _{i \in D} C^{d}(G_i)\). Clearly, if \(|\arg \max _{D} C^d_L({\mathcal {G}}) \cap I_D| < \frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\), none of the decedents of \(C^d_L({\mathcal {G}})\) can update \({\mathcal {R}}\). However, the computation of the exact D that maximizes \(|C^d_L({\mathcal {G}}) \cap I_D|\) is NP-Hard and hard to approximate [6]. Alternatively, we derive a bound for all \(I_D\) according to the following lemma.

Lemma 3

Let I be the set of vertices that are contained in at least \(s - |L|\)d-cores on the layers in \(L_P\). For any subset \(D \subseteq L_P\) such that \(|D| = s - |L|\), we have \(I_D \subseteq I\).

With the bounding vertex subset I, we can stop searching the subtree rooted at \(C^d_L({\mathcal {G}})\) if we have \(|C^d_L({\mathcal {G}}) \cap I | < \frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\). The correctness is ensured by the following lemma.

Lemma 4

(Support-based pruning) For a d-CC \(C^d_L({\mathcal {G}})\) and the bounding vertex subset I, if we have \(|C^d_L({\mathcal {G}}) \cap I| < \frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\), for any descendant \(C^d_{S}({\mathcal {G}})\) of \(C^d_{L}({\mathcal {G}})\) such that \(|S| = s\), \(C_{S}^d({\mathcal {G}})\) cannot satisfy Eq. (1).

Method 2: Order-based pruning (lines 14–17) For all \(j \in L_P\), we can order the layer numbers j in decreasing order of \(|C_L^d({\mathcal {G}}) \cap C^d(G_j)|\) and generate \(C_{L \cup \{j\}}^d({\mathcal {G}})\) according to this order. For some j, if \(|C_{L}^d({\mathcal {G}}) \cap C^d(G_j)| < \frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\), we can stop searching the subtrees rooted at \(C_{L \cup \{j\}}^d({\mathcal {G}})\) and \(C_{L \cup \{j^{\prime }\}}^d({\mathcal {G}})\) for all \(j^{\prime }\) succeeding j in the order. The correctness is ensured by the following lemma.

Lemma 5

(Order-based pruning) For a d-CC \(C^d_L({\mathcal {G}})\) and each \(j \in L_P\), if \( |C_{L}^d({\mathcal {G}}) \cap C^d(G_j)| < \frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\), \(C_{L \cup \{j\}}^d({\mathcal {G}})\) cannot satisfy Eq. (1).

Method 3: Internal d-CC computation pruning (line 20) Recall that in the dCC procedure, each time we remove an unpromising vertex that cannot exist in the d-CC from the graph. Therefore, the size of the input multi-layer graph decreases gradually. At some moment when the size of the remaining vertices is too small, the generated d-CC is impossible to update the result set \({\mathcal {R}}\). Therefore, we can immediately terminate the d-CC computation at this time. We call this pruning method the internal d-CC computation pruning.

We can apply this pruning method to the dCC procedure at line 20 of BU-Gen which computes \(C^{d}_{L^{\prime }}({\mathcal {G}})\). To achieve this, several minor modifications need to be made to the dCC procedure. Specifically, the dCC procedure takes the size \(\frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\) as an additional input parameter. After line 18 of the dCC procedure, the unpromising vertex is removed from the graph, so we can check if the number of remaining vertices, i.e., \(V({\mathcal {G}})\), is less than \(\frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\). If so, the generated d-CC \(C^{d}_{L^{\prime }}({\mathcal {G}})\) is unable to update \({\mathcal {R}}\). Therefore, we can immediately terminate the dCC procedure and safely skip the execution of lines 21–25 of the BU-Gen procedure. Otherwise, the dCC procedure works as usual. The correctness of this pruning method is obvious.

Method 4: Layer pruning (lines 27–29) For each \(j \in L_P\), if \(C^{d}_{L \cup \{j\}}({\mathcal {G}})\) does not satisfy Eq. (1), we need not generate \(C^{d}_{S}({\mathcal {G}})\) for all S such that \(L \cup \{j\} \subseteq S \subseteq [l({\mathcal {G}})]\). The correctness is guaranteed by the following lemma.

Lemma 6

(Layer pruning) For a d-CC \(C^d_{L}({\mathcal {G}})\) and each \(j \in L_P\), if \(C^d_{L \cup \{j\}}({\mathcal {G}})\) does not satisfy Eq. (1), \(C^d_{S \cup \{j\}}({\mathcal {G}})\) cannot satisfy Eq. (1) for all S such that \(L \subseteq S \subseteq [l({\mathcal {G}})]\).

For each \(j \in L_R\), let \(L^{\prime } = L - \{j\}\) (line 28). By Lemma 6, any layer in \(L_Q \cup (L_{P} - L_{R})\) cannot be used to expand the d-CC \(C^d_{L^{\prime }}({\mathcal {G}})\). As a result, for each \(L^{\prime }\), we make a recursive call to BU-Gen with parameters \({\mathcal {G}}\), d, s, k, \(L^{\prime }\), \(L_Q \cup (L_{P} - L_{R})\), \(C^d_{L^{\prime }}({\mathcal {G}})\) and \({\mathcal {R}}\) (line 29).

5.3 The bottom-up algorithm

Figure 7 describes the complete bottom-up DCCS algorithm BU-DCCS. Given a multi-layer graph \({\mathcal {G}}\) and three parameters \(d, s, k \in {\mathbb {N}}\), we can solve the DCCS problem by calling BU-Gen\(({\mathcal {G}}, d, s, k, \emptyset , \emptyset , V({\mathcal {G}}), {\mathcal {R}})\) (line 4). To further speed up the algorithm, the preprocessing method (Procedure VertexDeletion) proposed in Sect. 4 is applied in line 1. In addition, we propose two additional preprocessing methods.

Sorting layers We sort the layers of \({\mathcal {G}}\) in descending order of \(|C^d(G_i)|\), where \(1 \le i \le l({\mathcal {G}})\). Intuitively, the larger \(|C^d(G_i)|\) is, the more likely \(G_i\) contains a large candidate d-CC. Although there is no theoretical guarantee on the effectiveness of this method, it is indeed effective in practice. Line 3 of BU-DCCS applies this preprocessing method.

Initialization of\({{\mathcal {R}}}\) The pruning techniques in BU-Gen are not applicable unless \(|{\mathcal {R}}| = k\), so a good initial state of \({\mathcal {R}}\) can greatly enhance the pruning power. We develop a greedy procedure InitTopK to initialize \({\mathcal {R}}\) such that \(|{\mathcal {R}}| = k\).

The InitTopK procedure takes as input a multi-layer graph \({\mathcal {G}}\), three integers \(d, s, k \in {\mathbb {N}}\) and the set \({\mathcal {R}}\) of temporary top-k diversified d-CCs. First, we set \({\mathcal {R}}\) as an empty set (line 1). The for loop (lines 2–11) executes k times. In each loop, a candidate d-CC is added to \({\mathcal {R}}\) in the following way: First, we select layer i such that the d-core \(C^d(G_i)\) can maximally enlarges \(\textsf {Cov}({\mathcal {R}})\) (line 3). Let \(C = C^{d}(G_i)\) and \(L = \{i\}\) (lines 4–5). Then, we add \(s - 1\) other layer numbers to L in a greedy manner. In each time, we choose layer \(j \in [l({\mathcal {G}})] - L\) that maximizes \(|C \cap C^{d}(G_j)|\), update L to \(L \cup \{j\}\), and update C to \(C \cap C^{d}(G_j)\) (lines 7–9). When \(|L| = s\), we compute the d-CC \(C^d_L({\mathcal {G}})\) and update \({\mathcal {R}}\) with \(C^d_L({\mathcal {G}})\) (lines 11–12).

Theorem 3

The approximation ratio of BU-DCCS is 1 / 4.

6 The top-down algorithm

The bottom-up algorithm traverses a search tree from the root down to level s. When \(s \ge l({\mathcal {G}})/2\), the efficiency of the algorithm degrades significantly. As verified by the experiments in Sect. 8, the performance of the bottom-up algorithm is close to or even worse than the greedy algorithm when \(s \ge l({\mathcal {G}})/2\). To handle this issue, we propose a top-down approach for the DCCS problem when \(s \ge l({\mathcal {G}})/2\).

In this section, we assume \(s \ge l({\mathcal {G}})/2\). In the top-down algorithm, we maintain a temporary top-k result set \({\mathcal {R}}\) and update it in the same way as in the bottom-up algorithm. However, candidate d-CCs are generated in a top-down manner. Given that we now have a d-CC C w.r.t. layer subset L, we generate the d-CC \(C^{\prime }\) w.r.t. layer subset \(L^{\prime }\) such that \(L^{\prime } \subseteq L\) in the top-down algorithm. Obviously, we have \(C \subseteq C^{\prime }\), so the pruning techniques in the bottom-up algorithm based on the containment property (Property 3) of d-CC are certainly not applicable. Therefore, we must propose a series of new pruning techniques suitable for the top-down search. Specifically, for each d-CC, we associate it with a potential set that contains all vertices in the descendants of this d-CC in the top-down search tree. We observe that the potential set satisfies the containment property. Let U and \(U^{\prime }\) be the potential set of C and \(C^{\prime }\), respectively. We have \(C^{\prime } \subseteq U^{\prime }\) and \(U^{\prime } \subseteq U\). Therefore, if \(U^{\prime }\) is unlikely to improve the quality of the result, none of the descendants of \(C^{\prime }\) can do. The top-down algorithm also has an approximation ratio of 1 / 4. As verified by the experiments in Sect. 8, the top-down algorithm is superior to the other algorithms when \(s \ge l({\mathcal {G}})/2\).

6.1 Top-down candidate generation

We first introduce how to generate d-CCs in a top-down manner. In the top-down algorithm, all d-CCs are conceptually organized as a search tree as illustrated in Fig. 8, where \(C^{d}_{L}({\mathcal {G}})\) is the parent of \(C^{d}_{L^{\prime }}({\mathcal {G}})\) if \(L^{\prime } \subset L\), \(|L| = |L^{\prime }| + 1\), and the only layer number \(i \in L - L^{\prime }\) satisfies \(i > \max ([l({\mathcal {G}})] - L)\). Except the root \(C^{d}_{[l({\mathcal {G}})]}\), all d-CCs in the search tree have a unique parent. We generate candidate d-CCs by depth-first searching the tree from the root down to level s and update the temporary result set \({\mathcal {R}}\) during search.

Let \(C^{d}_{L}({\mathcal {G}})\) be the d-CC currently visited in DFS, where \(|L| > s\). We must generate the children of \(C^{d}_{L}({\mathcal {G}})\). By Property 3 of d-CCs, we have \(C^{d}_{L}({\mathcal {G}}) \subseteq C^{d}_{L^{\prime }}({\mathcal {G}})\) for all \(L^{\prime } \subseteq L\). Thus, to generate \(C^{d}_{L^{\prime }}({\mathcal {G}})\), we only have to add some vertices to \(C^{d}_{L}({\mathcal {G}})\) but need not delete any vertex from \(C^{d}_{L}({\mathcal {G}})\).

To this end, we associate \(C^{d}_{L}({\mathcal {G}})\) with a vertex set \(U^{d}_{L}({\mathcal {G}})\). \(U^{d}_{L}({\mathcal {G}})\) must contain the vertices in all descendants \(C^{d}_{S}({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) such that \(|S| = s\). \(U^{d}_{L}({\mathcal {G}})\) serves as the scope for searching for the descendants of \(C^{d}_{L}({\mathcal {G}})\). We call \(U^{d}_{L}({\mathcal {G}})\) the potential vertex set w.r.t. \(C^{d}_{L}({\mathcal {G}})\). Obviously, we have \(C^{d}_{L}({\mathcal {G}}) \subseteq U^{d}_{L}({\mathcal {G}})\). Initially, \(U^{d}_{[l({\mathcal {G}})]}({\mathcal {G}}) = V({\mathcal {G}})\). Section 6.2 will describe how to shrink \(U^{d}_{L}({\mathcal {G}})\) to \(U^{d}_{L^{\prime }}({\mathcal {G}})\) for \(L^{\prime } \subseteq L\), so we have \(U^{d}_{L^{\prime }}({\mathcal {G}}) \subseteq U^{d}_{L}({\mathcal {G}})\) if \(L^{\prime } \subseteq L\). The relationships between \(C^{d}_{L}({\mathcal {G}})\), \(U^{d}_{L}({\mathcal {G}})\), \(C^{d}_{L^{\prime }}({\mathcal {G}})\), and \(U^{d}_{L^{\prime }}({\mathcal {G}})\) are illustrated in Fig. 9. The arrows in Fig. 9 indicate that \(C^{d}_{L^{\prime }}({\mathcal {G}})\) is expanded from \(C^{d}_{L}({\mathcal {G}})\), and \(U^{d}_{L^{\prime }}({\mathcal {G}})\) is shrunk from \(U^{d}_{L}({\mathcal {G}})\). Keeping this in mind, we focus on top-down candidate generation in this subsection. Sections 6.2 will describe how to compute \(U^{d}_{L^{\prime }}({\mathcal {G}})\) in details.

The top-down candidate d-CC generation is implemented by the recursive procedure TD-Gen in Fig. 10. Let \(L_{R} = \{j | \max ([l({\mathcal {G}})] - L) < j \le l({\mathcal {G}})\} \cap L\) be the set of layer numbers possible to be removed from L (line 1). For each \(j \in L_{R}\), let \(L^{\prime } = L - \{j\}\). We have that \(C^{d}_{L^{\prime }}({\mathcal {G}})\) is a child of \(C^{d}_{L}({\mathcal {G}})\). We first obtain \(U^{d}_{L^{\prime }}({\mathcal {G}})\) by the method in Sect. 6.2 (line 4). After obtaining \(U^{d}_{L^{\prime }}({\mathcal {G}})\), \(C^{d}_{L^{\prime }}({\mathcal {G}})\) can be easily computed by applying the dCC procedure on input \({\mathcal {G}}[U^{d}_{L^{\prime }}({\mathcal {G}})]\), d and \(L^{\prime }\) (lines 8 and 20). Next, we process \(C_{L^{\prime }}^d({\mathcal {G}})\) based on the following cases:

Case 1 (lines 9–10) If \(|{\mathcal {R}}| < k\) and \(|L^{\prime }| = s\), we update \({\mathcal {R}}\) with \(C_{L^{\prime }}^d({\mathcal {G}})\) by Rule 1 specified in Sect. 5.2.

Case 2 (lines 11–12) If \(|{\mathcal {R}}| < k\) and \(|L^{\prime }| > s\), we recursively call TD-Gen to generate the descendants of \(C_{L^{\prime }}^d({\mathcal {G}})\).

Case 3 (lines 21–22) If \(|{\mathcal {R}}| = k\) and \(|L^{\prime }| = s\), we update \({\mathcal {R}}\) with \(C_{L^{\prime }}^d({\mathcal {G}})\) by Rule 2 specified in Sect. 5.2.

Case 4 (lines 23–30) Similar to Lemma 2, if \(|{\mathcal {R}}| = k\) and \(|L^{\prime }| > s\), we apply \(U^d_{L^{\prime }}({\mathcal {G}})\) to check whether to extend the descendants of \(C_{L^{\prime }}^d({\mathcal {G}})\) (line 24).

Lemma 7

(Search tree pruning) For a d-CC \(C^d_L({\mathcal {G}})\) and its potential vertex set \(U^d_L({\mathcal {G}})\), where \(|L| > s\), if \(U^d_{L}({\mathcal {G}})\) does not satisfy Eq. (1), any descendant \(C^d_{L^{\prime }}({\mathcal {G}})\) of \(C^d_L({\mathcal {G}})\) with \(|L^{\prime }| = s\) cannot satisfy Eq. (1).

Pruning methods If \(|{\mathcal {R}}| = k\) (Cases 3 and 4), we present several pruning methods to further speed up the top-down search process as follows.

Method 1: Order-based pruning (lines 14–17) Similar to Lemma 5, we can also order the layer numbers \(j \in L_{R}\) in descending order of \(|U^{d}_{L - \{ j \} }({\mathcal {G}})|\) (line 14) and prune some subtrees earlier (lines 17–18).

Lemma 8

(Order-based pruning) For a d-CC \(C^d_L({\mathcal {G}})\), its potential vertex set \(U^d_L({\mathcal {G}})\) and \(j > \max ([l({\mathcal {G}})] - L)\), if \(|U^d_{L - \{j\}}({\mathcal {G}})| < \frac{1}{k}{|\textsf {Cov}({\mathcal {R}})|} + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\), any descendant \(C^d_{L - \{ j \}}({\mathcal {G}})\) of \(C^d_L({\mathcal {G}})\) cannot satisfy Eq. (1).

Method 2: Potential set pruning (lines 25–28) More interestingly, for Case 4, in some optimistic cases, we need not to search the descendants of \(C^{d}_{L}({\mathcal {G}})\). Instead, we can randomly select a descendant \(C^{d}_{S}({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) with \(|S| = s\) to update \({\mathcal {R}}\) (lines 26–28). The correctness is ensured by the following lemma.

Lemma 9

(Potential set pruning) For a d-CC \(C^d_L({\mathcal {G}})\) and its potential vertex set \(U^d_L({\mathcal {G}})\), where \(|L| > s\), if \(C^{d}_{L}({\mathcal {G}})\) satisfies Eq. (1), and \(U^d_L({\mathcal {G}})\) satisfies

the following proposition holds: For any two distinct descendants \(C^d_{S_1}({\mathcal {G}})\) and \(C^d_{S_2}({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) such that \(|S_1| = |S_2| = s\), if \(|{\mathcal {R}}| = k\) and \({\mathcal {R}}\) has already been updated by \(C^{d}_{S_1}({\mathcal {G}})\), then \(C^{d}_{S_2}({\mathcal {G}})\) cannot update \({\mathcal {R}}\) any more.

6.2 Refinement of potential vertex sets

Let \(C^{d}_{L}({\mathcal {G}})\) be the d-CC currently visited by the DFS and \(C^{d}_{L^{\prime }}({\mathcal {G}})\) be a child of \(C^{d}_{L}({\mathcal {G}})\). To generate \(C^{d}_{L^{\prime }}({\mathcal {G}})\), Procedure TD-Gen first refines \(U^{d}_{L}({\mathcal {G}})\) to \(U^{d}_{L^{\prime }}({\mathcal {G}})\) and then generates \(C^{d}_{L^{\prime }}({\mathcal {G}})\) based on \(U^{d}_{L^{\prime }}({\mathcal {G}})\). This subsection introduces how to shrink \(U^{d}_{L}({\mathcal {G}})\) to \(U^{d}_{L^{\prime }}({\mathcal {G}})\).

First we introduce some useful notation. Given a subset of layer numbers \(L \subseteq [l({\mathcal {G}})]\), we can divide all layer numbers in L into two disjoint classes:

Class 1: By the relationship of d-CCs in the top-down search tree, for any layer number \(i \in L\) and \(i < \max ([l({\mathcal {G}})] - L)\), layer i will not be removed from L in any descendant of \(C^{d}_{L}({\mathcal {G}})\). Thus, for any descendant \(C^d_S({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) with \(|S| = s\), we have \(i \in S\).

Class 2: By the relationship of d-CCs in the top-down search tree, for any layer number \(i \in L\) and \(i > \max ([l({\mathcal {G}})] - L)\), layer i can be removed from L to obtain a descendant of \(C^{d}_{L}({\mathcal {G}})\). Thus, for a descendant \(C^d_S({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) with \(|S| = s\), it is undetermined whether \(i \in S\).

Let \(M_L\) and \(N_L\) denote the Class 1 and Class 2 of layer numbers w.r.t. L, respectively. Procedure RefineU in Fig. 11 refines \(U^{d}_{L}({\mathcal {G}})\) to \(U^{d}_{L^{\prime }}({\mathcal {G}})\). Let \(U = U^{d}_{L}({\mathcal {G}})\) (line 1). First, we obtain \(M_{L^{\prime }}\) and \(N_{L^{\prime }}\) w.r.t. \(L^{\prime }\) (line 2). Then, we repeat the following two refinement methods to remove irrelevant vertices from U until no vertices can be removed any more (lines 3–8). Finally, U is output as \(U_{L^{\prime }}^{d}({\mathcal {G}})\) (line 9).

Refinement Method 1 (lines 4–5) For each layer number \(i \in M_{L^{\prime }}\), we have \(i \in S\) for all descendants \(C^{d}_{S}({\mathcal {G}})\) of \(C^{d}_{L^{\prime }}({\mathcal {G}})\) with \(|S| = s\). Note that \(C^{d}_{S}({\mathcal {G}})\) must be d-dense in \(G_i\). Thus, if the degree of a vertex v in \(G_i[U]\) is less than d, we have \(v \not \in C^{d}_{S}({\mathcal {G}})\), so we can remove v from U and \({\mathcal {G}}\).

Refinement Method 2 (lines 6–7) If a vertex \(v \in U\) is contained in a descendant \(C^{d}_{S}({\mathcal {G}})\) of \(C^{d}_{L}({\mathcal {G}})\) with \(|S| = s\), v must occur in all the d-cores \(C^d(G_i)\) for \(i \in M_{L^{\prime }}\) and must occur in at least \(s - |M_{L^{\prime }}|\) of the d-cores \(C^d(G_j)\) for \(j \in N_{L^{\prime }}\). Therefore, if v occurs in less than \(s - |M_{L^{\prime }}|\) of the d-cores \(C^d(G_j)\) for \(j \in N_{L^{\prime }}\), we can remove v from U and \({\mathcal {G}}\).

6.3 Top-down algorithm

We present the complete top-down DCCS algorithm TD-DCCS in Fig. 12. The input is a multi-layer graph \({\mathcal {G}}\) and parameters \(d, s, k \in {\mathbb {N}}\). First, we apply the preprocessing methods of vertex deletion (line 1) and initializing of \({\mathcal {R}}\) (line 2). For the preprocessing method of sorting layers, we sort all layers i of \({\mathcal {G}}\) in ascending order of \(|C^d(G_i)|\) at line 3 since a layer whose d-core is small is less likely to support a large d-CC. Next, we compute \(C_{[l({\mathcal {G}})]}^{d}\) (line 4) and invoke recursive Procedure TD-Gen\(({\mathcal {G}}, d, s, k, [l({\mathcal {G}})], C_{[l({\mathcal {G}})]}^{d}, V({\mathcal {G}}), {\mathcal {R}})\) to generate candidate d-CCs and update the result set \({\mathcal {R}}\) (line 5). Finally, \({\mathcal {R}}\) is returned as the result (line 6).

Theorem 4

The approximation ratio of TD-DCCS is 1 / 4.

7 Optimized algorithms

In this section, we propose several optimized algorithms for fast finding the diversified d-CCs in a multi-layer graph. In all aforementioned algorithms for the DCCS problem, we invoke the dCC procedure proposed in Sect. 3 to compute d-CCs in the search process. However, this method is still not efficient enough since it involves lots of redundant examinations of vertices. In this section, we introduce an index structure, which organizes all vertices of the input multi-layer graph hierarchically. Base on this index, we propose a faster d-CC computation method with less examination of vertices. By applying this method to the previous DCCS algorithms, the experimental results in Sect. 8 show that all of the optimized algorithms run faster than the original algorithms.

7.1 The index structure

We introduce the index structure in this subsection. The index I organizes all the vertices of \({\mathcal {G}}\) hierarchically and helps filter out the vertices irrelevant to \(C^{d}_{L^{\prime }}({\mathcal {G}})\) efficiently. Recall that \(\textsf {Supp}(v)\) is the number of layers whose d-cores contain v. The index I is constructed based on \(\textsf {Supp}(v)\). Specifically, for \(1 \le h \le l({\mathcal {G}})\), let \(J_h\) be the set of vertices v iteratively removed from \({\mathcal {G}}\) due to \(\textsf {Supp}(v) \le h\). Let \(I_h = J_{h} - J_{h-1}\). Obviously, \(I_1, I_2, \dots , I_{l({\mathcal {G}})}\) is a disjoint partition of all vertices of \({\mathcal {G}}\)Footnote 1.

We present the BuildIndex procedure for constructing the index in Fig. 13. BuildIndex takes a multi-layer graph \({\mathcal {G}}\) and an integer \(d \in {\mathbb {N}}\) as input. At the very beginning, we compute the d-core on each layer (line 2) and initialize the index I as empty (line 3). The index I is basically the hierarchy of the vertices following \(I_1, I_2, \dots , I_{l({\mathcal {G}})}\), that is, the vertices in \(I_{i+1}\) are placed on higher levels than those in \(I_i\). Internally, the vertices in \(I_i\) are also placed on a stack of levels. Initially, we set \(h = 1\) (line 4). For each h, vertices in \(I_h\) are placed as follows.

Suppose the vertices in \(I_1, I_2, \ldots , I_{i - 1}\) have been removed from \({\mathcal {G}}\). Although the vertices \(v \in I_i\) are iteratively removed from \({\mathcal {G}}\) due to \(\textsf {Supp}(v) \le i\), they are actually removed in different batches. In each batch (lines 7–12), we select all the vertices v with \(\textsf {Supp}(v) \le i\) into S (line 7) and remove them together from all layers of \({\mathcal {G}}\) (line 12). In addition, let L(v) be the set of layer numbers on which v is contained in the d-core just before v is removed from \({\mathcal {G}}\) in batch (line 10). We associate each vertex v in the index with L(v) (line 11).

After a batch, due to the removal of vertices, we need to update the d-core on each layer (line 14). After that, some vertices v originally satisfying \(\textsf {Supp}(v) > i\) may become \(\textsf {Supp}(v) \le i\) and thus will be removed in next batch. Therefore, in \(I_i\), the vertices removed in the same batch are place on the same sub-level, and the vertices removed in a later batch are placed on a higher sub-level than the vertices removed in an early batch. We repeat the removal process until S is empty. After that, we increase h by 1 (line 16) and continue to process vertices in \(I_{h+1}\). The iteration is repeated until all vertices have been removed from the graph.

After placing all vertices of \({\mathcal {G}}\) into the index I, we add an edge between vertices u and v in the index if (u, v) is an edge on a layer of \({\mathcal {G}}\) (line 19). Finally, the index I is returned as the result (line 20).

Complexity analysis Let \(n = |V({\mathcal {G}})|\), \(l = l({\mathcal {G}})\), \(m_{i} = |E_i({\mathcal {G}})|\), \(m = |\bigcup _{i \in [l({\mathcal {G}})]} E_i({\mathcal {G}}) |\), and \(m^{\prime } = \sum _{i \in [l({\mathcal {G}})]} m_{i}\). By [3], the d-core computation consumes \(O(\sum _{i \in [l({\mathcal {G}})]} m_i) = O(m^{\prime })\) time. Let \({\varDelta }\) be the maximum degree of a vertex across all layers. Note that, for each \(1 \le h \le l({\mathcal {G}})\), there exist at most \({\varDelta }\) batches. This is because if the support number of a vertex decreases, at least one of its neighbors is removed from the graph. Thus, the time complexity of the index building process is at most \(O(m^{\prime } + {\varDelta } m l)\). Obviously, the space to store the index is at most \(O(nl + m)\).

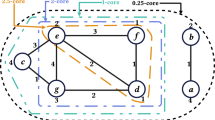

For ease of understanding, we illustrate an example of the index structure in Fig. 14 for the multi-layer graph \({\mathcal {G}}\) shown in Fig. 2 when \(d = 3\). In the index, level h represents all vertices in \(I_h\). In general, the vertices in \(I_h\) may be removed in a sequence of batches. The vertices removed together in a batch forms a sub-level of level h.

Initially \((h = 1)\), we have \(\textsf {Supp}(v) > 1\) for all vertices v in \({\mathcal {G}}\), so level 1 contains no vertex. Then, h is increased to 2, and we have \(\textsf {Supp}(w) = 2\) because \(w \in C^{d}(G_1)\) and \(w \in C^{d}(G_6)\). For each vertex v in \({\mathcal {G}}\), the table in Fig. 14 lists L(v), the set of layer numbers on which v is contained in the d-cores just before v is removed from \({\mathcal {G}}\). Hence, we place w on level 2 and remove it from \({\mathcal {G}}\). After that, we have \(\textsf {Supp}(v) > 2\) for all vertices v remaining in \({\mathcal {G}}\), so we set \(h = 3\). Now, we have \(\textsf {Supp}(m) = \textsf {Supp}(y) = \textsf {Supp}(n) = 3\), so they are placed on level 3 and removed from \({\mathcal {G}}\). Since no vertex v in \({\mathcal {G}}\) now satisfies \(\textsf {Supp}(v) > 3\), we increase h to 4. At this time, we have \(\textsf {Supp}(f) = \textsf {Supp}(g) = \textsf {Supp}(h) = 4\) and \(\textsf {Supp}(e) = \textsf {Supp}(k) =\textsf {Supp}(i) = 5\). Thus, vertices f, g, and h are removed together in the same batch, so f, g, and h are placed on the first sub-level of level 4. After removing f, g, and h, we have \(\textsf {Supp}(e) = 0\), \(\textsf {Supp}(k) = 1\), and \(\textsf {Supp}(i) = 2\). Hence, vertices e, k, and i must be removed in the same batch, so they are placed on the second sub-level of level 4. After removing e, k, and i, no vertex v in \({\mathcal {G}}\) satisfies \(\textsf {Supp}(v) \le 4\), so we increase h to 5. Later, vertices x and z are placed on level 5. Finally, h is increased to 6, and vertices a, b, c, and d are placed on level 6.

7.2 The faster d-CC computation method

In this subsection, we propose a faster d-CC computation method based on the index structure.

Main idea Recall that, in the dCC procedure proposed in Sect. 3, we need to repeatedly check whether each remaining vertex satisfies the degree constraint of the d-CC. Clearly, there involve lots of redundant vertex examinations. To alleviate this, in the fast d-CC computation method, we accelerate the d-CC computation process by using the following two strategies.

Strategy 1: Safely eliminating vertices without examination In the dCC procedure, for each remaining vertex v, we need to check the degree of v on each layer to decide whether to remove v. However, if we have already known that v must not exist in the d-CC by other means in advance, we can directly eliminate it without examination.

Strategy 2: Terminating vertices’ examination early During the dCC procedure, if a vertex v is removed from the graph, for each vertex u adjacent to v on some layer, we need to update the degree of u on each layer, whereas, if we have already known that u must in or must not in the d-CC, there is no need to update u’s degree afterward. In other words, we can terminate the examination of such vertices early.

The two strategies can help reduce lots of redundant vertex examinations in the d-CC computation. In the following, we describe their implementation details.

Details of Strategy 1 By exploiting the index structure, we can detect some vertices that must not in the d-CC \(C^{d}_{L}({\mathcal {G}})\) before examination. To this end, we first introduce some useful concepts as follows.

For each vertex w in the index, we call w a candidate vertex if \(L \subseteq L(w)\). w is possible to exist in \(C^{d}_{L}({\mathcal {G}})\). For any two vertices w and z in the index, we denote \(w \prec z\) if z is placed on a higher level than w and (w, z) is an edge in the index. If there exists a sequence of vertices \(w_0, w_1, \dots , w_n\) in the index such that \(w_0\) is a candidate vertex, and \(w_i \prec w_{i+1}\) for \(0 \le i < n\), we say there exists a candidate path in the index to the vertex \(w_n\).

With the concept of candidate path, the following lemma states a necessary condition for a vertex w existing in \(C^{d}_{L}({\mathcal {G}})\). In the d-CC computation, we can directly eliminate all vertices that do not satisfy the following condition.

Lemma 10

For each vertex \(w \in C^{d}_{L}({\mathcal {G}})\), there must exist a candidate path in the index to w.

For example, consider the index in Fig. 14. Let \(L = \{ 3, 4, 5\}\). We find that vertex w does not satisfy \(L \subseteq L(w)\), so w is not in \(C^{d}_{L}({\mathcal {G}})\). In the next level, there are no candidate paths to vertices m, y, and n, so they can also be removed. On the remaining levels, we can also remove vertices g, h and e. Therefore, we only need to examine vertices a, b, c, d, f, k, i, x, and z.

Details of Strategy 2 To achieve the early termination, we set each vertex v in the index to be in one of the following four states:

-

discarded if it has been determined that \(v \not \in C^{d}_{L^{\prime }}({\mathcal {G}})\);

-

existing if it has been determined that \(v \in C^{d}_{L^{\prime }}({\mathcal {G}})\);

-

undetermined if v has been checked but has not be determined if \(v \in C^{d}_{L^{\prime }}({\mathcal {G}})\);

-

unexplored if it has not been checked by the search process.

In the d-CC computation process, a discarded vertex or an existing vertex will not be involved in the following computation; an undetermined vertex may become discarded due to the deletion of some edges or become existing if it connects to sufficient number of existing vertices; an unexplored vertex will become undetermined after examination or directly become discarded if it does not satisfy the condition stated in Lemma 10.

For each vertex v whose state is firstly setting to be undetermined, discarded, and existing, we use the ProcessUnd procedure, the ProcessDis procedure and the ProcessEst procedure (in Fig. 15) to process the effects to other vertices of changing v’s state, respectively. The details of the three procedures are elaborated as follows.

For each \(i \in L\), let \(d_{i}^{+}(v)\) be the number of non-discarded vertices adjacent to v in \(G_i\) and let \(d_{i}^{*}(v)\) be the number of existing vertices adjacent to v in \(G_i\). Clearly, \(d_{i}^{+}(v)\) is an upper bound on v’s degree in \(G_i\). If \(d_{i}^{+}(v) < d\) for some \(i \in L\), we must have \(v \not \in C^{d}_{L}({\mathcal {G}})\), so we set v as discarded (line 2) and invoke the ProcessDis procedure on v (line 3). If \(d_{i}^{*}(v) \ge d\) for each \(i \in L\), we must have \(v \in C^{d}_{L}({\mathcal {G}})\), so we set v as existing (line 5) and invoke the ProcessEst procedure on v (line 6). Otherwise, since v is undetermined, there may exist a candidate path to each vertex u such that \(v \prec u\). By Lemma 10, u may exist in \(C^{d}_{L}({\mathcal {G}})\). Therefore, if u is unexplored, we set u as undetermined (line 10) and recursively invoke the ProcessUnd procedure to further check the vertex u (line 11).

For each \(i \in L\), let \(d_{i}^{+}(v)\) be the number of non-discarded vertices adjacent to v in \(G_i\) and let \(d_{i}^{*}(v)\) be the number of existing vertices adjacent to v in \(G_i\). Clearly, \(d_{i}^{+}(v)\) is an upper bound on v’s degree in \(G_i\). If \(d_{i}^{+}(v) < d\) for some \(i \in L\), we must have \(v \not \in C^{d}_{L}({\mathcal {G}})\), so we set v as discarded (line 2) and invoke the ProcessDis procedure on v (line 3). If \(d_{i}^{*}(v) \ge d\) for each \(i \in L\), we must have \(v \in C^{d}_{L}({\mathcal {G}})\), so we set v as existing (line 5) and invoke the ProcessEst procedure on v (line 6). Otherwise, since v is undetermined, there may exist a candidate path to each vertex u such that \(v \prec u\). By Lemma 10, u may exist in \(C^{d}_{L}({\mathcal {G}})\). Therefore, if u is unexplored, we set u as undetermined (line 10) and recursively invoke the ProcessUnd procedure to further check the vertex u (line 11).

If v is set as discarded, the removal of v may trigger the removal of other vertices. Therefore, for each undetermined or unexplored vertex u adjacent to v in the index, we decrease \(d_{i}^{+}(u)\) by 1 if (u, v) is an edge on a layer \(i \in L\) (line 3). If \(d_{i}^{+}(u) < d\) for some \(i \in L\), we also set u as discarded (line 5) and recursively invoke the ProcessDis procedure on u (line 6).

If v is set as discarded, the removal of v may trigger the removal of other vertices. Therefore, for each undetermined or unexplored vertex u adjacent to v in the index, we decrease \(d_{i}^{+}(u)\) by 1 if (u, v) is an edge on a layer \(i \in L\) (line 3). If \(d_{i}^{+}(u) < d\) for some \(i \in L\), we also set u as discarded (line 5) and recursively invoke the ProcessDis procedure on u (line 6).

If v is set as existing, we may find more existing vertices from v. Specifically, for each undetermined or unexplored vertex u adjacent to v in the index I, we increase \(d_{i}^{*}(u)\) by 1 if (u, v) is an edge on a layer \(i \in L\) (line 3). If \(d_{i}^{*}(u) \ge d\) for each \(i \in L\), we also set u as existing (line 5) and recursively invoke the ProcessEst procedure on u (line 6).

If v is set as existing, we may find more existing vertices from v. Specifically, for each undetermined or unexplored vertex u adjacent to v in the index I, we increase \(d_{i}^{*}(u)\) by 1 if (u, v) is an edge on a layer \(i \in L\) (line 3). If \(d_{i}^{*}(u) \ge d\) for each \(i \in L\), we also set u as existing (line 5) and recursively invoke the ProcessEst procedure on u (line 6).

Fast d-CC computation We present the FastdCC procedure to faster compute the d-CC in Fig. 16. The input of FastdCC includes the multi-layer graph \({\mathcal {G}}\), integers \(d, s \in {\mathbb {N}}\), the layer subset L, the index I, and two vertices subset X and Y. The two vertices subset X and Y satisfy that \(X \subseteq C^{d}_{L}({\mathcal {G}}) \subseteq Y\). At the very beginning, we obtain the vertex subset \(Z = Y \cap (\bigcup _{h = |L^{\prime }|}^{l({\mathcal {G}})} I_{h} )\) (line 1) and remove all vertices not in Z from the graph \({\mathcal {G}}\) and the index I (line 2). This is because we only need to consider vertices in Z to compute \(C^{d}_{L}({\mathcal {G}}) \) by the following lemma.

Lemma 11

\(C^{d}_{L}({\mathcal {G}}) \subseteq Y \cap \left( \bigcup _{h = |L|}^{l({\mathcal {G}})} I_{h} \right) \).

Before the search starts, we compute \(d_{i}^{+}(v)\) and \(d_{i}^{*}(v)\) for all vertices \(v \in V({\mathcal {G}})\) and for all layers \(i \in L\) (line 4). Initially, the state of a vertex v of \({\mathcal {G}}\) is set to be existing if it is in X (line 6) and unexplored otherwise (line 8).

In the main search process, we check the vertices in the index in a level-by-level fashion from lower levels to higher levels. In each iteration (lines 10–17), we examine all vertices on a level of the index. Based on the states of vertices on the level, they are processed in two cases:

Case 1 (lines 10–13) There exist some undetermined vertices in the level. At this time, since all vertices in lower levels have been examined, it implies that there exist no candidate paths to each unexplored vertex v in the level. By Lemma 10, we must have \(v \not \in C^{d}_{L}({\mathcal {G}})\). Therefore, we can directly set v to be discarded (line 12) and invoke Procedure ProcessDis on v (line 13).

Case 2 (lines 14–17) Otherwise, each unexplored vertex v on the level is potential to exist in \(C^{d}_{L}({\mathcal {G}})\). Therefore, we set v as undetermined (line 16) and invoke Procedure ProcessUnd to further check v (line 17).

After examining all levels in the index, \(C^{d}_{L}({\mathcal {G}})\) is exactly the set of all undetermined and existing vertices (line 18).

Following the previous example, let \(L =\{3, 4, 5\}\), \(X = \{a, b, c, d\}\), and \(Y = \{a, b, c, d, f, k, i, x, z\}\). All vertices in X are set to be existing. First, we examine vertex f in the lowest level. f remains to be undetermined. Therefore, we further check vertices k and i in the next level of f. For vertex k, we have \(d_{3}^{+}(k) = 1\) and \(d_{3}^{-}(k) = 0\), so k is set to be discarded. Then, we proceed to check k’s neighbors. For vertex i, we have \(d_{4}^{+}(i) = 1\) and \(d_{4}^{-}(i) = 1\), so i is also set to be discarded. Next, we have \(d_{3}^{+}(f) = 1\) and \(d_{3}^{-}(f) = 1\). Therefore, f is set to be discarded. After that, we check vertices x and z. The states of x and z remain undetermined. Finally, we obtain \(C^{d}_{\{3, 4, 5\}} = \{ a, b, c, d, x, z\}\).

Correctness analysis The correctness of the FastdCC procedure can be guaranteed by the following lemma.

Lemma 12

For any vertex \(v \not \in C^{d}_{L}({\mathcal {G}})\), v must be set to discarded in the FastdCC procedure.

Complexity analysis Let \(n = |V({\mathcal {G}})|\), \(l = l({\mathcal {G}})\), \(m_{i} = |E_i({\mathcal {G}})|\), \(m = |\bigcup _{i \in [l({\mathcal {G}})]} E_i({\mathcal {G}}) |\), and \(m^{\prime } = \sum _{i \in [l({\mathcal {G}})]} m_{i}\). The following lemma states the time complexity of FastdCC. Since \(m^{\prime } \le ml\), the time complexity of FastdCC is always lower than that of dCC. For the space complexity, the FastdCC procedure needs O(n) extra space to store the states of all vertices and O(nl) extra space to \(d^{+}_{i}(v)\) and \(d^{*}_{i}(v)\) of all vertices in all layers in L. Therefore, the space complexity of FastdCC is \(O(n + nl) = O(nl)\).

Lemma 13

The time complexity of the FastdCC procedure is \(O(nl + m^{\prime })\).

7.3 The optimized algorithms

We present several optimized DCCS algorithms, namely GD-DCCS+, BU-DCCS+, TD-DCCS+, in this subsection.

Optimized greedy algorithm The GD-DCCS+ algorithm can be simply obtained by two minor modifications of the GD-DCCS algorithm:

-

(1)

Before line 2 of GD-DCCS, we add a statement “\(I \leftarrow \textsf {BuildIndex}({\mathcal {G}}, d)\)” to construct the index I.

-

(2)

Line 7 of GD-DCCS is replaced by the statement “\(C^{d}_{L}({\mathcal {G}}) \leftarrow \textsf {FastdCC}({\mathcal {G}}, d, s, L, I, \emptyset , S)\).”

Optimized bottom-up algorithm The BU-DCCS+ algorithm can be simply obtained by several minor modifications of the BU-DCCS algorithm, the InitTopk procedure, and the BU-Gen procedure:

-

(1)

Before line 4 of BU-DCCS, we add a statement “\(I \leftarrow \textsf {BuildIndex}({\mathcal {G}}, d)\)” to construct the index I.

-

(2)

InitTopK adds the index I as an input parameter. Line 10 of InitTopK is replaced by the statement “\(C^{\prime } \leftarrow \textsf {FastdCC}({\mathcal {G}}, d, s, L, I, \emptyset , C)\).”

-

(3)

BU-Gen adds the index I as an input parameter. Line 6 and line 20 of BU-Gen are replaced by the statement “\(C^{d}_{L^{\prime }}({\mathcal {G}}) \leftarrow \textsf {FastdCC}({\mathcal {G}}, d, s, L^{\prime }, I, \emptyset , C^{d}_{L}({\mathcal {G}}) \cap C^{d}(G_j))\).”

-

(4)

The internal d-CC computation pruning method proposed in Sect. 5.2 needs to be adapted to be applied in the BU-Gen procedure. In the FastdCC procedure, we can also terminate the computation of d-CC early sometimes. Specifically, the FastdCC procedure, and the ProcessDis procedure take the size \(\frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\) as an input parameter. In the ProcessDis procedure, we check whether the number of the non-discarded vertices is less than \(\frac{1}{k}|\textsf {Cov}({\mathcal {R}})| + |{\varDelta }({\mathcal {R}}, C^*({\mathcal {R}}))|\) at the very beginning. If so, we can immediately terminate the FastdCC procedure and safely skip the execution of lines 21–25 in the BU-Gen procedure.

Optimized top-down algorithm The TD-DCCS+ algorithm can be simply obtained by several minor modifications of the TD-DCCS algorithm, the InitTopk procedure, and the TD-Gen procedure:

-

(1)

Before line 4 of TD-DCCS, we add a statement “\(I \leftarrow \textsf {BuildIndex}({\mathcal {G}}, d)\)” to construct the index I.

-

(2)

Line 4 of TD-DCCS is replaced by the statement “\(C^{d}_{[l({\mathcal {G}})]}({\mathcal {G}}) \leftarrow \textsf {FastdCC}({\mathcal {G}}, d, s, L, I, \emptyset , V({\mathcal {G}}))\).”

-

(3)

InitTopK adds the index I as an input parameter. Line 10 of InitTopK is replaced by the statement ”\(C^{\prime } \leftarrow \textsf {FastdCC}({\mathcal {G}}, d, s, L, I, \emptyset , C)\).”

-

(4)