Abstract

A reaction–diffusion–advection predator–prey model with a Holling type-II predator functional response is considered. We show the stability/instability of the positive steady state and the existence of a Hopf bifurcation when the diffusion and advection rates are large. Moreover, we show that the advection rate can affect not only the occurrence of Hopf bifurcations but also the Hopf bifurcation values.


1 Introduction

The influence of environmental heterogeneity on population dynamics has been studied extensively. For example, environmental heterogeneity can increase the total population size for a single species [21]. For two competing species in heterogeneous environments, if they are identical except for their dispersal rates, then the slower diffuser wins [7], whereas they can coexist in homogeneous environments. The global dynamics for the weak competition case was investigated in [19, 21], and it was completely classified in [12]. The heterogeneity of environments can also induce complex patterns for predator–prey interaction models, see [9, 10, 20] and references therein.

In heterogeneous environments, the population may have a tendency to move up or down along the gradient of the environments, which is described by an “advection” term [2]. That is, the random diffusion term \(d\Delta u\) is replaced by

$$\begin{aligned} \nabla \cdot \left[ d\nabla u-\alpha u\nabla m\right] , \qquad (1.1) \end{aligned}$$

where d is the diffusion rate, \(\alpha \) is the advection rate, and m(x) represents the heterogeneity of environment. The effect of advection as (1.1) on population dynamics has been studied extensively for single and two competing species, see, e.g., [1,2,3,4,5,6, 16, 17, 22, 45]. There also exists another kind of advection for species in streams, and the random diffusion term \(du_{xx}\) is now replaced by

$$\begin{aligned} du_{xx}-\alpha u_x, \qquad (1.2) \end{aligned}$$

where \(\alpha u_x\) represents the unidirectional flow from the upstream end to the downstream end. It is shown that, if the two competing species in streams are identical except for their dispersal rates, then the faster diffuser wins [23, 26, 27, 44]. In [24, 33, 37, 39, 42], the authors showed the effect of advection as (1.2) on the persistence of the predator and prey. One can also refer to [13,14,15, 18, 28,29,30, 32, 36] and references therein for population dynamics in streams.

As is well known, periodic solutions occur commonly for predator–prey models [31], and Hopf bifurcation is a mechanism to induce these periodic solutions. For diffusive predator–prey models in homogeneous environments, Hopf bifurcations can be investigated following the framework of [11, 43], see also [8, 35, 40, 41] and references therein. A natural question is how advection affects Hopf bifurcations for predator–prey models in heterogeneous environments.

In this paper, we give an initial exploration of this question and investigate the effect of advection as (1.1) on Hopf bifurcations for the following predator–prey model:

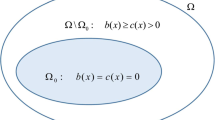

Here \(\Omega \) is a bounded domain in \(\mathbb R^N\;(1\le N\le 3)\) with a smooth boundary \(\partial \Omega \); n is the outward unit normal vector on \(\partial \Omega \), and no-flux boundary conditions are imposed; u(x, t) and v(x, t) denote the population densities of the prey and predator at location x and time t, respectively; \(d_1,d_2>0\) are the diffusion rates; \(\alpha _1\ge 0\) is the advection rate; \(l>0\) is the conversion rate; \(r>0\) is the death rate of the predator; and the function \(u/(1 + u)\) denotes the Holling type-II functional response of the predator to the prey density. The function m(x) represents the intrinsic growth rate of the prey, which depends on the spatial environment.

Throughout the paper, we impose the following assumptions:

- \((\textbf{H}_1)\): \(m(x)\in C^2 (\overline{\Omega })\), \(m(x)\ge (\not \equiv )0\) in \(\overline{\Omega }\), and m(x) is non-constant.

- \((\textbf{H}_2)\): \(\displaystyle \frac{d_2}{d_1}=\theta >0\) and \(\displaystyle \frac{\alpha _1}{d_1}=\alpha \ge 0\).

Here \((\textbf{H}_2)\) is a mathematically technical condition, and it means that the dispersal and advection rates of the prey and predator are proportional. Then letting \(\tilde{u}=e^{-\alpha m(x)} u,\tilde{t}=d_1 t\), denoting \(\lambda = 1/ d_1\), and dropping the tilde sign, model (1.3) can be transformed to the following model:

where \(\theta \) and \(\alpha \) are defined in assumption \((\textbf{H}_2)\).
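The following short computation may help to see why this substitution removes the advection term. It is only a sketch, and it assumes that the prey dispersal term in (1.3) has the form (1.1) with \(d=d_1\) and \(\alpha =\alpha _1\), together with the corresponding no-flux condition \(d_1\partial _n u-\alpha _1u\partial _n m=0\) on \(\partial \Omega \). Writing \(u=e^{\alpha m(x)}\tilde{u}\) with \(\alpha =\alpha _1/d_1\), we get

$$\begin{aligned} \nabla u&=e^{\alpha m}\left( \nabla \tilde{u}+\alpha \tilde{u}\nabla m\right) ,\\ d_1\nabla u-\alpha _1u\nabla m&=d_1e^{\alpha m}\nabla \tilde{u}+(d_1\alpha -\alpha _1)e^{\alpha m}\tilde{u}\nabla m=d_1e^{\alpha m}\nabla \tilde{u},\\ \nabla \cdot \left[ d_1\nabla u-\alpha _1u\nabla m\right]&=d_1\nabla \cdot \left[ e^{\alpha m}\nabla \tilde{u}\right] . \end{aligned}$$

Under the same assumption, the no-flux condition reduces to \(\partial _n\tilde{u}=0\) on \(\partial \Omega \). Since \(\partial _t=d_1\partial _{\tilde{t}}\), dividing the equations by \(d_1\) leaves the factor \(\lambda =1/d_1\) in front of the reaction terms and the ratio \(\theta =d_2/d_1\) in front of the predator diffusion term.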

We remark that model (1.3) with \(\alpha _1=0\) (or respectively, model (1.4) with \(\alpha =0\)) was investigated in [25, 38], where it was shown that the heterogeneity of the environment can influence the local dynamics, and that multiple positive steady states can bifurcate from the semi-trivial steady state when \(d_1,d_2\) (or respectively, \(\lambda \)) are used as the bifurcation parameters. In this paper, we consider model (1.4) for the case that \(\alpha \ne 0\) and \(0<\lambda \ll 1\). We show that when \(0<\lambda \ll 1\), the local dynamics of model (1.4) are similar to those of the following “weighted” ODEs:

A direct computation implies that model (1.5) admits a unique positive equilibrium \((c_{0l},q_{0l})\) if and only if \(l>\tilde{l}\), where \((c_{0l},q_{0l})\) and \(\tilde{l}\) are defined in Lemma 2.1. From the proof of Lemma 3.4, one can obtain the local dynamics of model (1.5) as follows:

- (i) If \({\mathcal {T}}(\alpha )<0\), then the positive equilibrium \((c_{0l},q_{0l})\) of model (1.5) is stable for \(l>\tilde{l}\);

- (ii) If \({\mathcal {T}}(\alpha )>0\), then there exists \(l_0>\tilde{l}\) such that \((c_{0l},q_{0l})\) is stable for \(\tilde{l}<l<l_0\) and unstable for \(l>l_0\), and model (1.5) undergoes a Hopf bifurcation when \(l=l_0\).

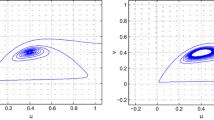

Here \({\mathcal {T}}(\alpha )\) and \(l_0\) are defined in Lemma 3.3. Similarly, model (1.4) admits a unique positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) for \((l,\lambda )\in [\tilde{l}+\epsilon ,1/\epsilon ]\times (0,\delta _\epsilon ]\) with \(0<\epsilon \ll 1\), where \(\delta _\epsilon \) depends on \(\epsilon \) (Theorem 2.5), and its local dynamics are similar to those of model (1.5) when \(l\in [\tilde{l}+\epsilon ,1/\epsilon ]\) and \(0<\lambda \ll 1\) (Theorem 3.10), see also Fig. 1. Moreover, we show that the sign of \({\mathcal {T}}(\alpha )\) determines whether a Hopf bifurcation curve exists for model (1.4). We obtain that if \(\int _{\Omega }(m(x)-1)dx<0\) and \(\{x\in \Omega :m(x)>1\}\ne \emptyset \), then there exists \(\alpha _*>0\) such that \({\mathcal {T}}(\alpha _*)=0\), \({\mathcal {T}}(\alpha )<0\) for \(0\le \alpha <\alpha _*\), and \({\mathcal {T}}(\alpha )>0\) for \(\alpha >\alpha _*\) (Theorem 4.2). Therefore, the advection rate affects the occurrence of Hopf bifurcations (Proposition 4.3). Moreover, we find that the advection rate can also affect the Hopf bifurcation values (Proposition 4.4).

Fig. 1 Local dynamics of model (1.4) for \((l,\lambda )\in [\tilde{l}+\epsilon ,1/\epsilon ]\times (0,\tilde{\lambda }_j(\alpha ,\epsilon ))\) with \(0<\epsilon \ll 1\). Here \(\tilde{\lambda }_j(\alpha ,\epsilon )\) means that \(\tilde{\lambda }_j\) depends on \(\alpha \) and \(\epsilon \) for \(j=1,2\). (Left): \({\mathcal {T}}(\alpha )<0\); (Right): \({\mathcal {T}}(\alpha )>0\)
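The sign change of \({\mathcal {T}}(\alpha )=\int _\Omega e^{\alpha m(x)}(m(x)-1)dx\) described above can be explored numerically. The following is a minimal sketch, not part of the analysis: the domain \(\Omega =(0,1)\), the environment \(m(x)=1.5x\), the midpoint quadrature and the bisection bracket \([0,5]\) are all hypothetical choices made for illustration. This m satisfies \(\int _\Omega (m-1)dx=-0.25<0\) and \(m>1\) for \(x>2/3\), which are the hypotheses quoted above.

```python
import math

def m(x):
    # Hypothetical environment on Omega = (0, 1): its mean is 0.75 < 1,
    # but m(x) > 1 on the subinterval x > 2/3.
    return 1.5 * x

def T(alpha, n=2000):
    # Midpoint-rule approximation of
    # T(alpha) = int_0^1 exp(alpha * m(x)) * (m(x) - 1) dx.
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        total += math.exp(alpha * m(x)) * (m(x) - 1.0)
    return total * h

def find_alpha_star(lo=0.0, hi=5.0, iters=60):
    # Bisection for the sign change of T; it needs T(lo) < 0 < T(hi).
    if not (T(lo) < 0.0 < T(hi)):
        raise ValueError("bracket does not enclose a sign change of T")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if T(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

alpha_star = find_alpha_star()
```

For this particular m, the computed `alpha_star` plays the role of \(\alpha _*\): the approximation of \({\mathcal {T}}\) is negative to its left and positive to its right on the bracket.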

For simplicity, we list some notations for later use. We denote

Denote the complexification of a real linear space Z by

$$\begin{aligned} Z_{\mathbb C}:=Z\oplus \textrm{i}Z=\{x_1+\textrm{i}x_2\,|\,x_1,x_2\in Z\}, \end{aligned}$$

and the kernel and range of a linear operator T by \({\mathcal {N}} (T)\) and \({\mathcal {R}} (T)\), respectively. For \( Y_{\mathbb C}\), we choose the standard inner product \(\langle u, v \rangle =\int _\Omega {\overline{u}(x)v(x)}dx,\) and the norm is defined by \(\Vert u\Vert _2={\langle u, u \rangle }^{\frac{1}{2}}.\)

The rest of the paper is organized as follows. In Sect. 2, we show the existence and uniqueness of the positive steady state for a range of parameters, see the rectangular region in Fig. 1. In Sect. 3, we obtain the local dynamics of model (1.4) when \((l,\lambda )\) is in the above rectangular region. In Sect. 4, we show the effect of advection on Hopf bifurcations.

2 Positive steady states

In this section, we consider the positive steady states of model (1.4), which satisfy the following system:

Denote

and we have the following decompositions:

where

Let

Then substituting (2.5) into (2.1), we see that (u, v) (defined in (2.5)) is a solution of (2.1) if and only if \((c,q,\xi ,\eta )\in \mathbb R^2\times X_1^2\) solves

where \(F(c,q,\xi ,\eta ,l,\lambda ):\mathbb R^2\times X_1^2\times \mathbb R^2\rightarrow \left( \mathbb R\times Y_1\right) ^2\), and

We first solve \(F(c,q,\xi ,\eta ,l,\lambda )=0\) for \(\lambda =0\).

Lemma 2.1

Suppose that \(\lambda =0\), and let

Then, for any \(l>0\), \( F(c,q,\xi ,\eta ,l,\lambda )= 0\) has three solutions: (0, 0, 0, 0), \((\tilde{c},0,0,0)\) and \((c_{0l},q_{0l},0,0)\), where \((c_{0l},q_{0l})\) satisfies

Moreover, \(c_{0l},q_{0l}>0\) if and only if \(l>\tilde{l}\).

Proof

Substituting \(\lambda =0\) into \(f_2=0\) and \(f_4=0\), respectively, we have \( \xi = 0\) and \( \eta = 0\). Then substituting \(\xi =\eta =0\) into \(f_1=0\) and \(f_3=0\), respectively, we have

Therefore, (2.10) has three solutions: (0, 0), \((\tilde{c},0)\), \((c_{0l},q_{0l})\), where \(\tilde{c}\) is defined in (2.8), and \((c_{0l},q_{0l})\) satisfies

A direct computation implies that \(c_{0l}\) and \(q_{0l}\) satisfy (2.9). By the second equation of (2.9), we see that \(c_{0l},q_{0l}>0\) if and only if \(0<c_{0l}<\tilde{c}\). It follows from the first equation of (2.9) that

Then we obtain that \(0<c_{0l}<\tilde{c}\) if and only if \(l>\tilde{l}\), where \(\tilde{l}\) is defined in (2.8). This completes the proof.\(\square \)

We remark that \(\tilde{l}\) is the critical value for the successful invasion of the predator for model (1.4) with \(0<\lambda \ll 1\) (or respectively, (1.5)). In the following, we consider the monotonicity of \(\tilde{l}\) with respect to \(\alpha \) and show the effect of the advection rate on the invasion of the predator.

Proposition 2.2

Let \(\tilde{l}(\alpha )\) be defined in (2.8). Then

where

Moreover, the following statements hold:

- (i) \(\tilde{l}'(\alpha )|_{\alpha =0} < 0;\)

- (ii) If \(\displaystyle \lim \nolimits _{\alpha \rightarrow +\infty } V(\alpha )=0\), then \(\displaystyle \lim \nolimits _{\alpha \rightarrow +\infty } \tilde{l}(\alpha )=\infty \). In particular, if \(\Omega =(0,1)\) and \(m'(x)>0\) (or respectively, \(m'(x)<0\)) for all \(x\in [0,1]\), then \(\displaystyle \lim \nolimits _{\alpha \rightarrow +\infty } V(\alpha )=0\).

Proof

Since the function \(\displaystyle \frac{x}{1+x}\) is concave, it follows from Jensen’s inequality that

where \(\tilde{c}(\alpha )\) is defined in (2.8). This combined with (2.8) implies that (2.12) holds.

(i) We define

and consequently, \(\displaystyle \tilde{l}(\alpha )=\frac{r |\Omega |}{{\mathcal {F}}(\alpha )}\). A direct computation yields

Since m(x) is non-constant, it follows from the Hölder inequality that \({\mathcal {F}}'(0)>0\), and consequently \(\tilde{l}'(0)<0\).

(ii) By (2.12), we see that \(\displaystyle \lim \nolimits _{\alpha \rightarrow +\infty } \tilde{l}(\alpha )=\infty \) if \(\displaystyle \lim \nolimits _{\alpha \rightarrow \infty } V(\alpha )=0\). Next, we give a sufficient condition for \(\displaystyle \lim \nolimits _{\alpha \rightarrow \infty } V(\alpha )=0\). We only consider the case that \(m'(x)>0\) for all \(x\in \Omega \), and the other case can be proved similarly. Since

which implies that

Therefore,

which yields \(\displaystyle \lim \nolimits _{\alpha \rightarrow +\infty } V(\alpha )=0\).\(\square \)

It follows from Proposition 2.2 that \(\tilde{l}(\alpha )\) is strictly decreasing when \(\alpha \) is small, and that it changes its monotonicity at least once under certain conditions. We conjecture that \(\tilde{l}(\alpha )\) changes its monotonicity even for a general function m(x) and all \(\lambda >0\).
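The behaviour of \(\tilde{l}(\alpha )\) can be illustrated numerically. The sketch below rests on assumptions: display (2.8) is not reproduced in this text, so the formulas \(\tilde{c}(\alpha )=\int _\Omega e^{\alpha m}m\,dx/\int _\Omega e^{2\alpha m}dx\) and \({\mathcal {F}}(\alpha )=\int _\Omega \tilde{c}e^{\alpha m}/(1+\tilde{c}e^{\alpha m})dx\) are inferred from how \(\tilde{c}\) and \(\tilde{l}=r|\Omega |/{\mathcal {F}}(\alpha )\) are used in the proofs, and they should be checked against (2.8). The choices \(\Omega =(0,1)\), \(m(x)=x\) and \(r=1\) are hypothetical.

```python
import math

R = 1.0  # hypothetical predator death rate r

def m(x):
    # Hypothetical increasing environment on Omega = (0, 1), so |Omega| = 1.
    return x

def integrate(f, n=4000):
    # Midpoint rule on (0, 1).
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) for i in range(n))

def c_tilde(alpha):
    # Assumed form: c~ = int e^{alpha m} m dx / int e^{2 alpha m} dx.
    num = integrate(lambda x: math.exp(alpha * m(x)) * m(x))
    den = integrate(lambda x: math.exp(2.0 * alpha * m(x)))
    return num / den

def l_tilde(alpha):
    # Assumed form: l~ = r |Omega| / F(alpha), where
    # F(alpha) = int c~ e^{alpha m} / (1 + c~ e^{alpha m}) dx and |Omega| = 1.
    c = c_tilde(alpha)

    def holling(x):
        g = c * math.exp(alpha * m(x))
        return g / (1.0 + g)

    return R / integrate(holling)

# Sample values of l~ for a few advection rates.
values = {a: l_tilde(a) for a in (0.0, 0.1, 1.0, 5.0, 20.0)}
```

Under these assumptions, the sampled values decrease for small \(\alpha \) and exceed \(\tilde{l}(0)\) for large \(\alpha \), which is the non-monotone behaviour suggested by Proposition 2.2 for a strictly increasing m on \((0,1)\).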

Now we solve (2.6) for \(\lambda >0\) by virtue of the implicit function theorem.

Lemma 2.3

For any \( l_*>\tilde{l}\), where \(\tilde{l}\) is defined in (2.8), there exists \(\tilde{\delta }_{l_*}>0\), a neighborhood \({\mathcal {O}}_{l_*}\) of \((c_{0l_*},q_{0l_*},0,0)\) in \(\mathbb R^2\times X_1^2\), and a continuously differentiable mapping

such that \(\left( c^{(\lambda ,l)},q^{(\lambda ,l)},\xi ^{(\lambda ,l)},\eta ^{(\lambda ,l)}\right) \in \mathbb R^2\times X_1^2\) is a unique solution of (2.6) in \({\mathcal {O}}_{l_*}\) for \((\lambda ,l)\in [0,\tilde{\delta }_{l_*}]\times [l_*-\tilde{\delta }_{l_*},l_*+\tilde{\delta }_{l_*}]\). Moreover,

Here \(c_{0l}\) and \(q_{0l}\) are defined in Lemma 2.1.

Proof

It follows from Lemma 2.1 that

where F is defined in (2.6). Then the Fréchet derivative of F with respect to \((c,q,\xi ,\eta )\) at \((c_{0l_*},q_{0l_*}, 0, 0,l_*,0)\) is as follows:

where \(\hat{c},\hat{q}\in \mathbb R\), \(\hat{\xi },\hat{\eta }\in X_1\), and

If \( G(\hat{c},\hat{q},\hat{\xi },\hat{\eta })= 0\), then \(\hat{\xi }= 0\) and \( \hat{\eta }= 0\). Substituting \(\hat{\xi }=\hat{\eta }= 0\) into \(g_1=0\) and \(g_3=0\), respectively, we have

where

Noticing that

we obtain that \(\hat{c}=0\) and \(\hat{q}=0\). Therefore, G is injective and thus bijective. Then, we can complete the proof by the implicit function theorem.\(\square \)

By virtue of Lemma 2.3, we have the following result.

Theorem 2.4

Assume that \(l_*>\tilde{l}\), where \(\tilde{l}\) is defined in (2.8). Let

where \(0<\delta _{l_*}\ll 1\), and \(\left( c^{(\lambda ,l)},q^{(\lambda ,l)},\xi ^{(\lambda ,l)},\eta ^{(\lambda ,l)}\right) \) is obtained in Lemma 2.3. Then \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) is the unique positive solution of (2.1) for \((\lambda ,l)\in (0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\). Moreover,

where \(c_{0l}\) and \(q_{0l}\) are defined in Lemma 2.1.

Proof

It follows from Lemma 2.3 that when \(\delta _{l_*}<\tilde{\delta }_{l_*}\), \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) is a solution of (2.1) for \((\lambda ,l)\in (0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\), and

Note from Lemma 2.1 that \(c_{0l_*},q_{0l_*}>0\) if \(l_*>\tilde{l}\). Then \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) is a positive solution of (2.1) for \((\lambda ,l)\in (0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\) with \(0<\delta _{l_*}\ll 1\).

Next, we show that \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) is the unique positive solution of (2.1) for \((\lambda ,l)\in (0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\) with \(0<\delta _{l_*}\ll 1\). If it is not true, then there exists a sequence \(\{(\lambda _k,l_k)\}_{k=1}^\infty \) such that

and (2.1) admits a positive solution \((u_k,v_k)\) for \((\lambda ,l)=(\lambda _k,l_k)\) with

It follows from (2.3) that \((u_k,v_k)\) can also be decomposed as follows:

Plugging \((u,v,\lambda ,l)=(u_k, v_k,\lambda _k,l_k)\) into (2.1), we see that

where \(f_i\;(i=1,2,3,4)\) are defined in (2.7). It follows from (2.1) that

where we have used the divergence formula to obtain the second equation. From the maximum principle and the first equation of (2.19), we have

This, together with the second equation of (2.19), implies that

Here we remark that \(\max _{k\ge 1}l_k<\infty \) from (2.16). Consequently,

Note from (2.1) and (2.16) that

where we have used \(0<\lambda _k\ll 1\) (see (2.16)) for the first inequality. Note that \(\Omega \) is a bounded domain in \(\mathbb R^N\) with \(1\le N\le 3\). Then we see from [34, Lemma 2.1] that there exists a positive constant \(C_0\), depending only on r and \(\Omega \), such that

where we have used (2.21) in the last step.

Note from (2.20) and (2.22) that \( \{u_k\}_{k=1}^\infty \) is bounded in \(L^{\infty } (\Omega )\), and \( \{v_k\}_{k=1}^\infty \) is bounded in \(L^2 (\Omega )\). Since \(\lim _{k\rightarrow \infty }\lambda _k=0\), we see from (2.18) with \(i=2,4\) that

which implies that

Here \(X_1\) and \(Y_1\) are defined in (2.4). By (2.5), (2.20) and (2.22), we see that

and \(\{c_k\}_{k=1}^\infty \), \(\{q_k\}_{k=1}^\infty \) are bounded. Then, up to a subsequence, we see that

Taking the limits of (2.18) with \(i=1,3\) on both sides as \(k\rightarrow \infty \), respectively, we obtain that \((c^*,q^*)\) satisfies (2.10) with \(l=l_*\).

We first claim that

Suppose that it is not true. Then by (2.23) and the embedding theorems, we see that, up to a subsequence,

This yields, for sufficiently large k,

Substituting \((u,v,\lambda ,l)=(u_k,v_k,\lambda _k,l_k)\) into (2.1), and integrating the result over \(\Omega \), we obtain that

which is a contradiction. Next, we show that

Suppose, by way of contradiction, that \((c^*,q^*)=(\tilde{c},0)\). Similarly, by (2.23) and the embedding theorems, we see that, up to a subsequence,

From the second equation of (2.1), we have

Then taking \(k\rightarrow \infty \) on both sides of (2.24) yields

which is a contradiction.

Therefore,

This, combined with (2.23) and Lemma 2.3, implies that, for sufficiently large k,

and consequently, for sufficiently large k,

This contradicts (2.17), and the uniqueness is obtained.

By using similar arguments as in the proof of the uniqueness, we can show that, for any \(l\in [l_*-\delta _{l_*},l_*+\delta _{l_*}]\),

Note from Lemma 2.3 that \(\left( c^{(\lambda ,l)},q^{(\lambda ,l)},\xi ^{(\lambda ,l)},\eta ^{(\lambda ,l)}\right) \) is continuously differentiable for \((\lambda ,l)\in [0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\). Then we see that (2.15) holds. This completes the proof.\(\square \)

From Theorem 2.4, we see that (2.1) admits a unique positive solution when \((l,\lambda )\) is in a small neighborhood of \((l_*,0)\) with \(l_*>\tilde{l}\). In the following, we will solve (2.1) for a wide range of parameters, see the rectangular region in Fig. 1.

Theorem 2.5

Let \({\mathcal {L}}:=[ \tilde{l}+\epsilon ,1/\epsilon ]\), where \(0<\epsilon \ll 1\) and \( \tilde{l}\) is defined in Lemma 2.1. Then the following statements hold.

- (i) There exists \(\delta _{\epsilon } >0\) such that, for \((\lambda ,l)\in (0,\delta _\epsilon ]\times {\mathcal {L}}\), model (2.1) admits a unique positive solution \((u^{(\lambda ,l)},v^{(\lambda ,l)})\).

- (ii) Let \((u^{(0,l)},v^{(0,l)})=\left( c_{0l},q_{0l}\right) \) for \(l\in {\mathcal {L}}\), where \(\left( c_{0l},q_{0l}\right) \) is defined in Lemma 2.1. Then \((u^{(\lambda ,l)},v^{(\lambda ,l)})\) is continuously differentiable for \((\lambda ,l)\in [0,\delta _\epsilon ]\times {\mathcal {L}}\), and \((u^{(\lambda ,l)},v^{(\lambda ,l)})\) can be decomposed as follows:

$$\begin{aligned} u^{(\lambda ,l)}=c^{(\lambda ,l)}+\xi ^{(\lambda ,l)},\;\;v^{(\lambda ,l)}=q^{(\lambda ,l)}+\eta ^{(\lambda ,l)}, \end{aligned}$$

where \(\left( c^{(\lambda ,l)},q^{(\lambda ,l)},\xi ^{(\lambda ,l)},\eta ^{(\lambda ,l)}\right) \in \mathbb R^2\times X_1^2\) solves Eq. (2.6) for \((\lambda ,l)\in [0,\delta _\epsilon ]\times {\mathcal {L}}\).

Proof

It follows from Theorem 2.4 that, for any \(l_*\in {\mathcal {L}}\), there exists \(\delta _{l_*}\) (\(0<\delta _{l_*}\ll 1\)) such that, for \((\lambda ,l)\in (0,\delta _{l_*}]\times [ l_*-\delta _{l_*}, l_*+\delta _{l_*}]\), system (2.1) admits a unique positive solution \((u^{(\lambda ,l)},v^{(\lambda ,l)})\), where \(u^{(\lambda ,l)}\) and \(v^{(\lambda ,l)}\) are defined in (2.14) and continuously differentiable for \((\lambda ,l)\in [0,\delta _{l_*}]\times [l_*-\delta _{l_*},l_*+\delta _{l_*}]\). Clearly,

Noticing that \({\mathcal {L}}\) is compact, we see that there exist finitely many open intervals, denoted by \(\left( {l_*^{(i)}} -\delta _{ l^{(i)}_*},l^{(i)}_* +\delta _{l^{(i)}_*} \right) \) for \(i=1,\ldots ,s\), such that

Choose \( \delta _\epsilon = \min _{1 \le i\le s} \delta _{ l_*^{(i)}}\). Then, for \((\lambda ,l)\in (0,\delta _\epsilon ]\times {\mathcal {L}}\), system (2.1) admits a unique positive solution \((u^{(\lambda ,l)},v^{(\lambda ,l)})\). By Lemma 2.3 and Theorem 2.4, we see that \((u^{(\lambda ,l)},v^{(\lambda ,l)})\) is continuously differentiable on \([0,\delta _\epsilon ]\times {\mathcal {L}}\), if \((u^{(0,l)},v^{(0,l)})=\left( c_{0l},q_{0l}\right) \).\(\square \)

Using arguments similar to those in Lemma 2.3 and Theorems 2.4 and 2.5, we can show the nonexistence of positive solutions under a certain condition; we omit the proof for brevity.

Theorem 2.6

Let \({\mathcal {L}}_1:=[\epsilon _1,\tilde{l}-\epsilon _1]\), where \(0<\epsilon _1\ll 1\) and \( \tilde{l}\) is defined in (2.8). Then there exists \(\delta _{\epsilon _1} >0\) such that, for \((\lambda ,l)\in (0,\delta _{\epsilon _1}]\times {\mathcal {L}}_1\), model (2.1) admits no positive solution.

3 Stability and Hopf bifurcation

Throughout this section, we let \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) be the unique positive solution of model (2.1) obtained in Theorem 2.5. In the following, we use l as the bifurcation parameter, and show that under a certain condition there exists a Hopf bifurcation curve \(l=l_\lambda \) when \(\lambda \) is small.

Linearizing model (1.4) at \(\left( u^{(\lambda ,l)}, v^{(\lambda ,l)}\right) \), we obtain

where

Let

Then, \(\mu \in \mathbb C\) is an eigenvalue of \(A_{l}(\lambda )\) if and only if there exists \( (\varphi , \psi )^T\left( \ne (0,0)^T\right) \in X^2_{\mathbb C}\) such that

We first give a priori estimates for solutions of (3.3) for later use.

Lemma 3.1

Let \({\mathcal {L}}\) and \(\delta _\epsilon \) be defined and obtained in Theorem 2.5. Suppose that \((\mu _\lambda ,l_\lambda , \varphi _\lambda , \psi _\lambda )\) solves (3.3) for \(\lambda \in (0,\delta _\epsilon ]\), where \({\mathcal {R}}e \mu _\lambda \ge 0\), \((\varphi _\lambda , \psi _\lambda )^T\left( \ne (0,0)^T\right) \in X^2_{\mathbb C}\) and \(l_\lambda \in {\mathcal {L}}\). Then \(\left| \mu _\lambda /\lambda \right| \) is bounded for \(\lambda \in (0, \delta _\epsilon ]\).

Proof

Substituting \((\mu _\lambda ,l_\lambda , \varphi _\lambda , \psi _\lambda )\) into Eq. (3.3), we have

Then multiplying the first and second equations of (3.4) by \( e^{\alpha m(x)}\overline{\varphi }_\lambda \) and \(\overline{\psi }_\lambda \), respectively, summing these two equations, and integrating the result over \(\Omega \), we have

We see from the divergence theorem that

Noticing from Theorem 2.5 that \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) :[0,\delta _\epsilon ]\times {\mathcal {L}}\rightarrow X^2 \) is continuously differentiable, we see from the embedding theorems that there exists a positive constant \({\mathcal {P}}_*\) such that

Then, we see from (3.5)–(3.7) that

Similarly, we can obtain that

This completes the proof.\(\square \)

To analyze the stability of \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \), we need to consider whether the eigenvalues of (3.3) could pass through the imaginary axis. It follows from Lemma 3.1 that if \(\mu =\textrm{i} \sigma \) is an eigenvalue of (3.3), then \(\nu =\sigma /\lambda \) is bounded for \(\lambda \in (0,\delta _\epsilon ]\), where \(\delta _\epsilon \) is obtained in Theorem 2.5. Substituting \(\mu =\textrm{i}\lambda \nu \;(\nu \ge 0)\) into (3.3), we have

Ignoring a scalar factor, \(( \varphi , \psi )^T\in X^2_{\mathbb C}\) in (3.8) can be decomposed as follows:

We now obtain a problem equivalent to (3.8).

Lemma 3.2

Let \(( \varphi , \psi )\) be defined in (3.9). Then \((\varphi , \psi ,\nu ,l)\) solves (3.8) with \(\nu \ge 0\), \(l\in {\mathcal {L}}\), if and only if \(( \delta , s_1,s_2, \nu ,w, z,l)\) solves

Here

is a continuously differentiable mapping from \(\mathbb R^4\times \left( \left( X_{1}\right) _{\mathbb C}\right) ^2\times {\mathcal {L}}\times [0,\delta _\epsilon ]\) to \(\left( \mathbb C\times \left( Y_{1}\right) _{\mathbb C}\right) ^2\times \mathbb R\), where

and \(M_{i}^{(\lambda ,l)}\) are defined in (3.1) for \(i=1,2,3,4\).

Proof

Note that \(f=c+z\) for any \(f\in Y_{\mathbb C}\), where

A direct computation implies that \({ H}(\delta , s_1,s_2, \nu , w,z,l,\lambda )\) is a continuously differentiable mapping from \(\mathbb R^4\times \left( \left( X_{1}\right) _{\mathbb C}\right) ^2\times {\mathcal {L}}\times [0,\delta _\epsilon ]\) to \(\left( \mathbb C\times \left( Y_{1}\right) _{\mathbb C}\right) ^2\times \mathbb R\). Denote the left sides of the two equations of (3.8) as \(G_1\) and \(G_2\), respectively. That is,

Plugging (3.9) into (3.8), we see from (3.11) that \(G_1(\varphi , \psi ,\nu ,l,\lambda )=0\) if and only if

and \(G_2(\varphi , \psi ,\nu ,l,\lambda )=0\) if and only if

This completes the proof.\(\square \)
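The splitting \(f=c+z\) used in this proof can be illustrated on sampled data. The sketch below assumes that c is the mean value \(\frac{1}{|\Omega |}\int _\Omega f\,dx\) and that \(z=f-c\) has zero mean, which is how the decompositions with \(X_1\) and \(Y_1\) appear to be used; the defining display is not reproduced in this text, so this reading is an assumption. The helper name `split_mean` and the equally weighted samples are hypothetical.

```python
def split_mean(values):
    # values: samples of f (real or complex) at equally weighted nodes on Omega.
    # c is the discrete analogue of (1/|Omega|) * int_Omega f dx.
    c = sum(values) / len(values)
    # z is the remainder, which has zero discrete mean by construction.
    z = [v - c for v in values]
    return c, z

# Hypothetical complex-valued samples, as for an eigenfunction component.
f = [1 + 2j, 3 + 0j, 5 - 2j]
c, z = split_mean(f)
```

Here `c` carries the constant component and `z` the zero-mean component, the discrete counterparts of the constant and zero-mean parts in the assumed decomposition.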

Note from (3.1) that

where \(c _{0l}\) and \(q_{0l}\) are defined in Lemma 2.1. Then we give the following result for further application.

Lemma 3.3

Let \({\mathcal {S}}(l):=\int _\Omega e^{\alpha m(x)} M_1^{(0,l)}dx\), where \(l\in {\mathcal {L}}\), and \({\mathcal {L}}:=[ \tilde{l}+\epsilon ,1/\epsilon ]\) is defined in Theorem 2.5 with \(0<\epsilon \ll 1\), and let \({\mathcal {T}} (\alpha ):=\int _\Omega e^{\alpha m(x)}( m(x) -1) dx\). Then the following two statements hold:

- (i) If \({\mathcal {T}} (\alpha )<0\), then \({\mathcal {S}}(l)<0\) for all \(l\in {\mathcal {L}}\);

- (ii) If \({\mathcal {T}} (\alpha )>0\), then there exists \(l_0\in \textrm{int}({\mathcal {L}})=(\tilde{l}+\epsilon ,1/\epsilon )\) such that \({\mathcal {S}}(l_0)=0\), \({\mathcal {S}}'(l_0)>0\), \({\mathcal {S}}(l)<0\) for \(l\in [ \tilde{l}+\epsilon ,l_0)\), and \({\mathcal {S}}(l)>0\) for \(l\in (l_0,1/\epsilon ]\).

Proof

We construct an auxiliary function:

A direct computation implies that

Clearly, we see from (3.12) that

It follows from the first equation of (2.11) that

and plugging (3.15) into (3.14), we get

Here

where we have used (3.13) in the last step. Let

and consequently, we have

It follows from the first equation of (2.9) that

where \(\tilde{c}\) and \( \tilde{l}\) are defined in Lemma 2.1 (see (2.8)). Therefore, to determine the zeros of \({\mathcal {S}}(l)\) in \((\tilde{l},\infty )\), we only need to consider the zeros of \({\mathcal {S}}_3(c)\) in \((0,\tilde{c})\).

It follows from the Hölder inequality and (3.13) that

and consequently,

Note from (2.8) that \(\int _\Omega \left[ e^{\alpha m(x)} m(x)- c e^{2 \alpha m(x)}\right] dx>0\) for \(c\in (0,\tilde{c})\). This, combined with (3.13), (3.17) and (3.20), yields

It follows from (2.8) that \(\lim _{c\rightarrow \tilde{c}}{\mathcal {S}}_3(c)=\int _\Omega e^{2 \alpha m(x)} dx>0\), and

Therefore, if \({\mathcal {T}}(\alpha )<0\), then \({\mathcal {S}}_3(c)>0\) for all \(c\in (0,\tilde{c})\). If \({\mathcal {T}}(\alpha )>0\), then there exists \(c_0\in (0,\tilde{c})\) such that \({\mathcal {S}}_3(c_0)=0\), \({\mathcal {S}}_3(c)<0\) for \(c\in (0,c_0)\) and \({\mathcal {S}}_3(c)>0\) for \(c\in (c_0,\tilde{c})\). Then, we see from (3.16), (3.18) and (3.19) that if \({\mathcal {T}}(\alpha )<0\), then (i) holds; and if \({\mathcal {T}}(\alpha )>0\), then there exists \(l_0>\tilde{l}\) such that

It follows from (3.16) and (3.18) that

where we have used \(c_{0l_0}=c_0\) in the last step. Noting that \({\mathcal {S}}_3(c_0)=0\), we see from (3.19) and (3.21) that \({\mathcal {S}}'(l_0)>0\). Note from Theorem 2.5 that \({\mathcal {L}}=[ \tilde{l}+\epsilon ,1/\epsilon ]\) with \(0<\epsilon \ll 1\). Then, for sufficiently small \(\epsilon \), \(l_0\in \textrm{int}({\mathcal {L}})=(\tilde{l}+\epsilon ,1/\epsilon )\) and (ii) holds. This completes the proof.\(\square \)

By Lemma 3.3, we can solve (3.10) for \(\lambda =0\).

Lemma 3.4

Suppose that \(\lambda =0\), and let \({\mathcal {T}} (\alpha )\) be defined in Lemma 3.3. Then the following statements hold:

- (i) If \({\mathcal {T}} (\alpha )<0\), then (3.10) has no solution;

- (ii) If \({\mathcal {T}} (\alpha )>0\), then (3.10) has a unique solution

$$\begin{aligned} (\delta ,s_1,s_2,\nu , w, z,l)=(\delta _0,s_{10},s_{20},\nu _0, w_0, z_0,l_0), \end{aligned}$$

where \(l_0\) is obtained in Lemma 3.3,

$$\begin{aligned} \begin{aligned}&s_{10}=0,\;w_0= 0,\; z_0= 0,\;\nu _0=\sqrt{ -\frac{ \int _\Omega e^{\alpha m(x)} M_{2}^{(0,l_0)} dx \int _\Omega M_{3}^{(0,l_0)} dx }{ | \Omega | \int _\Omega e^{\alpha m(x)} dx } },\\&\delta _0= \sqrt{ \displaystyle \frac{1 }{1+\left( \frac{\int _\Omega M_{3}^{(0,l_0)} dx }{\nu _0|\Omega |} \right) ^2}},\;\;s_{20}=-\displaystyle \frac{\delta _0}{\nu _0|\Omega |}\displaystyle \int _\Omega M_{3}^{(0,l_0)} dx, \end{aligned} \end{aligned}$$

and \(M_{i}^{(0,l)}\) is defined in (3.12) for \(i=1,2,3,4\).

Proof

It follows from (3.12) that

which implies that \(\nu _0\) is well defined.

Substituting \(\lambda =0\) into \( h_{2}=0\) and \(h_{4}=0\), respectively, we have \( w= w_0= 0\) and \( z= z_0= 0\). Note from the second equation of (2.11) that

Then plugging \( w= z=0\) and \(\lambda =0\) into \( h_i=0\) for \(i=1,3,5\), respectively, we have

and

Therefore, (3.10) has a solution if and only if (3.25)–(3.26) is solvable for some value of \((\delta ,s_1,s_2,\nu ,l)\) with \(\delta ,\nu \ge 0\), \(s_1,s_2\in \mathbb R\) and \(l\in {\mathcal {L}}\). It follows from (3.23) that (3.25)–(3.26) is solvable (or (3.10) is solvable) if and only if

is solvable for some \(l\in {\mathcal {L}}\). From Lemma 3.3, we see that if \({\mathcal {T}} (\alpha )<0\), then (3.27) has no solution in \({\mathcal {L}}\); and if \({\mathcal {T}} (\alpha )>0\), then (3.27) has a unique solution \(l_0\) in \({\mathcal {L}}\) with \({\mathcal {S}}(l_0)=0\).

Substituting \(l=l_0\) into (3.25), we compute that

Then, plugging (3.28) into (3.26), we get \(\delta =\delta _0\) and \(s_2=s_{20}\). This completes the proof.\(\square \)
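The closed-form expressions for \(\nu _0\), \(\delta _0\) and \(s_{20}\) in Lemma 3.4 (ii) can be evaluated directly once the three integrals are known. The sketch below only transcribes those formulas; the function name `hopf_constants` and the numerical inputs are hypothetical placeholders, not values computed from the model. A useful consistency check, which follows algebraically from the stated formulas, is \(\delta _0^2+s_{20}^2=1\).

```python
import math

def hopf_constants(int_e_M2, int_M3, int_e, omega_measure):
    # int_e_M2      = int_Omega e^{alpha m} M_2^{(0,l_0)} dx
    # int_M3        = int_Omega M_3^{(0,l_0)} dx
    # int_e         = int_Omega e^{alpha m} dx
    # omega_measure = |Omega|
    radicand = -int_e_M2 * int_M3 / (omega_measure * int_e)
    if radicand <= 0.0:
        raise ValueError("nu_0 needs the two M-integrals to have opposite signs")
    nu0 = math.sqrt(radicand)
    ratio = int_M3 / (nu0 * omega_measure)
    delta0 = math.sqrt(1.0 / (1.0 + ratio ** 2))
    s20 = -delta0 * ratio
    return nu0, delta0, s20

# Hypothetical integral values, chosen only to exercise the formulas.
nu0, delta0, s20 = hopf_constants(-2.0, 3.0, 2.0, 1.0)
```

Keeping the integrals as inputs separates the quadrature, which depends on m(x) and on \((c_{0l_0},q_{0l_0})\), from the algebra of the lemma.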

Now, we solve (3.10) for \(\lambda >0\).

Theorem 3.5

Suppose that \({\mathcal {T}} (\alpha )>0\), where \({\mathcal {T}} (\alpha )\) is defined in Lemma 3.3. Then there exists \(\tilde{\lambda }\in (0,\delta _\epsilon )\), where \(\delta _\epsilon \) is obtained in Theorem 2.5, and a continuously differentiable mapping

such that (3.10) has a unique solution \((\delta _{\lambda },s_{1\lambda },s_{2\lambda }, \nu _{\lambda },w_{\lambda }, z_\lambda ,l_\lambda )\) for \(\lambda \in [0,\tilde{\lambda }]\), and

for \(\lambda =0\), where \((\delta _{0},s_{10},s_{20}, \nu _0,w_0, z_0,l_0)\) is defined in Lemma 3.4.

Proof

We first show the existence. It follows from Lemma 3.4 that \( H\left( K_0\right) = 0\), where \(K_0=(\delta _{0},s_{10},s_{20}, \nu _0,w_0, z_0,l_0,0)\). Note from (3.24) that

Then the Fréchet derivative of \(H (\delta , s_1,s_2, \nu ,w, z,l,\lambda )\) with respect to \((\delta ,s_1,s_2, \nu ,w, z, l)\) at \(K_0\) is as follows:

where \((\hat{\delta },\hat{s}_1,\hat{s}_2,\hat{\nu },\hat{w},\hat{z},\hat{l})\in \mathbb R^4\times \left( \left( X_{1}\right) _{\mathbb C}\right) ^2\times {\mathcal {L}}\), and

where we have used (3.29) to obtain \(k_3\). If \( K( \hat{\delta },\hat{s}_1,\hat{s}_2,\hat{\nu },\hat{w},\hat{z},\hat{l})= 0\), then \( \hat{w}= 0\) and \(\hat{z}= 0\). Substituting \(\hat{w}=\hat{z}= 0\) into \(k_1=0\) and \(k_3=0\), respectively, separating the real and imaginary parts, and noting that \(k_5=0\), we get

Here \(D_{ij}=0\) except

where \({\mathcal {S}}(l)\) is defined in Lemma 3.3. A direct computation implies that

Since \((\delta _0,s_{10},s_{20},\nu _0)\) satisfies (3.25), we have

Plugging (3.31) into (3.30), we have

where we have used Lemma 3.3 (ii) in the last step. Therefore, \(\hat{\delta }=0,\hat{s}_1=0,\hat{s}_2=0,\hat{\nu }=0\) and \(\hat{l}=0\), which implies that K is injective and thus bijective. From the implicit function theorem, we see that there exists \(\tilde{\lambda }\in (0,\delta _\epsilon )\) and a continuously differentiable mapping

such that \( H\left( \delta _{\lambda },s_{1\lambda },s_{2\lambda }, \nu _\lambda ,w_\lambda , z_\lambda ,l_\lambda ,\lambda \right) = 0\), and for \(\lambda =0\),

Now, we show the uniqueness. From the implicit function theorem, we only need to verify that if \(\left( \delta ^{\lambda },s_{1}^{\lambda },s_{2}^{\lambda }, \nu ^{\lambda },w^{\lambda },z^{\lambda },l^{\lambda }\right) \) is a solution of (3.10) for \(\lambda \in (0,\tilde{\lambda }]\), then

Noticing that \(h_5\left( \delta ^{\lambda },s_{1}^{\lambda },s_{2}^{\lambda },\nu ^{\lambda }, w^{\lambda },z^{\lambda },l^{\lambda }\right) =0\), we see that \(|\delta ^{\lambda }|\), \(|s_{1}^{\lambda }|\), \(|s_{2}^{\lambda }|\), \(\Vert w^{\lambda }\Vert _2\) and \(\Vert z^{\lambda }\Vert _2\) are bounded for \(\lambda \in [0,\tilde{\lambda }]\). Since \(l^\lambda \in {\mathcal {L}}\), we obtain that \(l^{\lambda }\) is bounded for \(\lambda \in [0,\tilde{\lambda }]\). Moreover, \(|\nu ^\lambda |\) is also bounded for \(\lambda \in [0,\tilde{\lambda }]\) from Lemma 3.1. Let

Noting that \(\left( \delta ^{\lambda },s_{1}^{\lambda },s_{2}^{\lambda }, \nu ^{\lambda },w^{\lambda },z^{\lambda },l^{\lambda }\right) \) is bounded in \(\mathbb R^4\times \left( (Y_1)_{\mathbb C}\right) ^2\times \mathbb R\), we see from (3.7) that \(\lim _{\lambda \rightarrow 0} \mathcal {Q}_1(\lambda )=0\) and \(\lim _{\lambda \rightarrow 0} \mathcal {Q}_2(\lambda )=0\) in \((Y_1)_{\mathbb C}\). Since

where \(L^{-1}\) and \(\Delta ^{-1}\) are bounded operators from \(\left( Y_{1}\right) _{\mathbb C}\) to \(\left( X_{1}\right) _{\mathbb C}\), we get

Since \(\left( \delta ^{\lambda },s_{1}^{\lambda },s_{2}^{\lambda }, \nu ^{\lambda },l^{\lambda }\right) \) is bounded in \(\mathbb R^5\) for \(\lambda \in (0,\tilde{\lambda }]\), we see that, up to a subsequence,

Taking \(\lambda \rightarrow 0\) on both sides of

we see that \(H\left( \delta ^{*},s_{1}^{*},s_{2}^{*},\nu ^{*}, 0,0,l^{*}\right) =0\). This combined with Lemma 3.4 implies that \(\delta ^*=\delta _0\), \(s_1^*=s_{10}\), \(s_2^*=s_{20}\), \(\nu ^*=\nu _0\) and \(l^*=l_0\). This completes the proof.\(\square \)

Then from Lemma 3.2 and Theorem 3.5, we have the following result.

Theorem 3.6

Let \({\mathcal {L}}\) be defined in Theorem 2.5. Suppose that \({\mathcal {T}} (\alpha )>0\) and \(\lambda \in (0,\tilde{\lambda }]\), where \(0<\tilde{\lambda }\ll 1\), and \({\mathcal {T}} (\alpha )\) is defined in Lemma 3.3. Then \((\varphi ,\psi ,\sigma ,l)\) solves

if and only if

where \(A_l(\lambda )\) is defined in (3.2), I is the identity operator, \(\kappa \in \mathbb C\) is a nonzero constant, and \((\delta _\lambda ,s_{1\lambda },s_{2\lambda }, \nu _{\lambda },w_{\lambda }, z_\lambda , l_\lambda )\) is obtained in Theorem 3.5.

To show that \(\textrm{i}\lambda \nu _\lambda \) is a simple eigenvalue of \(A_{l_\lambda }(\lambda )\), we need to consider the following operator

Let

Then \(A^H(\lambda )\) is the adjoint operator of \(\mathcal {I}\left( A_{l_\lambda }(\lambda )- \textrm{i}\lambda \nu _\lambda I \right) \). That is, for any \((\phi _1,\phi _2)^T\in X_{\mathbb C}\) and \((\psi _1,\psi _2)^T\in X_{\mathbb C}\), we have

Lemma 3.7

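Let \(A^H(\lambda )\) be defined in (3.33). Suppose that \({\mathcal {T}} (\alpha )>0\) and \(\lambda \in (0,\tilde{\lambda }]\), where \(0<\tilde{\lambda }\ll 1\) and \({\mathcal {T}} (\alpha )\) is defined in Lemma 3.3. Then

$$\begin{aligned} {\mathcal {N}} [ A^H(\lambda )]=\textrm{span} [(\tilde{\varphi }_\lambda ,\tilde{\psi }_\lambda )^T], \end{aligned}$$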

and, ignoring a scalar factor, \((\tilde{\varphi }_\lambda ,\tilde{\psi }_\lambda )\) can be represented as

Moreover, \( \lim _{\lambda \rightarrow 0}\left( \tilde{\delta }_\lambda ,\tilde{s}_{1\lambda },\tilde{s}_{2\lambda },\tilde{w}_{\lambda },\tilde{z}_\lambda \right) =(\tilde{\delta }_0,\tilde{s}_{10},\tilde{s}_{20},\tilde{w}_0,\tilde{z}_0 )\) in \(\mathbb R^3\times \left( (X_1)_{\mathbb C}\right) ^2\), where \(\tilde{w}_0= 0,\;\tilde{z}_0= 0,\)

and \(\nu _0,l_0\) are defined in Lemma 3.4.

Proof

It follows from Theorem 3.6 that 0 is an eigenvalue of \( \mathcal {I}\left( A_{l_\lambda }(\lambda )- \textrm{i}\lambda \nu _\lambda I \right) \) and \({\mathcal {N}} \left[ \mathcal {I}\left( A_{l_\lambda }(\lambda )- \textrm{i}\lambda \nu _\lambda I \right) \right] \) is one-dimensional for \(\lambda \in (0,\tilde{\lambda }]\), where \({\mathcal {I}}\) is defined in (3.34). Hence 0 is also an eigenvalue of \( A^H(\lambda )\), and \({\mathcal {N}} [ A^H(\lambda )]\) is also one-dimensional. Therefore \({\mathcal {N}} [ A^H(\lambda )]=\textrm{span} [(\tilde{\varphi }_\lambda ,\tilde{\psi }_\lambda )^T]\), and \(( \tilde{\varphi }_\lambda ,\tilde{\psi }_\lambda )\) can be represented as (3.36). By arguments similar to those in the proof of Theorem 3.5, we see that

and up to a subsequence,

where \(\left( \delta _0^{*}, s_{10}^*, s_{20}^*\right) \) satisfies \(\left( \delta _0^*\right) ^2+\left( s_{ 10}^*\right) ^2+\left( s_{ 20}^*\right) ^2=1\), and

A direct computation implies that \(\left( \delta _0^{*}, s_{10}^*, s_{20}^*\right) =\left( \tilde{\delta }_0, \tilde{s}_{10}, \tilde{s}_{20}\right) \), and consequently, \(\lim _{\lambda \rightarrow 0}\left( \tilde{\delta }_\lambda ,\tilde{s}_{1\lambda },\tilde{s}_{2\lambda }\right) =\left( \tilde{\delta }_0, \tilde{s}_{10}, \tilde{s}_{20}\right) \). This completes the proof.\(\square \)

Then, by virtue of Lemma 3.7, we show that \(\textrm{i} \lambda \nu _\lambda \) is simple.

Theorem 3.8

Suppose that \({\mathcal {T}} (\alpha )>0\) and \(\lambda \in (0,\tilde{\lambda }]\), where \(0<\tilde{\lambda }\ll 1\) and \({\mathcal {T}} (\alpha )\) is defined in Lemma 3.3. Then \(\textrm{i} \lambda \nu _\lambda \) is a simple eigenvalue of \( A_{l_\lambda }(\lambda ) \), where \( A_l(\lambda ) \) is defined in (3.2).

Proof

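It follows from Theorem 3.6 that \({\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I] =\textrm{span} [( \varphi _\lambda , \psi _\lambda )^T]\), where \( \varphi _\lambda \) and \(\psi _\lambda \) are defined in Theorem 3.5. Then we show that

$$\begin{aligned} {\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]^2={\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]. \end{aligned}$$

Letting \(( \Psi _1, \Psi _2)^T \in {\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]^2\), we have

$$\begin{aligned} {[} A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]( \Psi _1, \Psi _2)^T\in {\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]=\textrm{span} [( \varphi _\lambda , \psi _\lambda )^T], \end{aligned}$$

and consequently, there exists a constant \(s\in \mathbb C\) such that

$$\begin{aligned} {[} A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]( \Psi _1, \Psi _2)^T=s( \varphi _\lambda , \psi _\lambda )^T. \end{aligned}$$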

Then

where \({\mathcal {I}}\) is defined in (3.34). Note that \(A^H(\lambda )\) is the adjoint operator of \({\mathcal {I}}[ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]\), and it follows from Lemma 3.7 that

Then, by (3.35) and (3.37), we have

where

It follows from Lemmas 3.4 and 3.7 and Theorem 3.5 that

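which implies that \(s=0\) for \(\lambda \in (0,\tilde{\lambda }]\) with \(0<\tilde{\lambda }\ll 1\). Therefore,

$$\begin{aligned} {\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]^2={\mathcal {N}} [ A_{l_\lambda }(\lambda ) - \textrm{i}\lambda \nu _\lambda I]. \end{aligned}$$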

This completes the proof.\(\square \)
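
The mechanism of this proof can be illustrated in finite dimensions. The following is a minimal sketch, not the operator \(A_{l_\lambda }(\lambda )\) of the paper: for a matrix with a one-dimensional eigenspace spanned by \(x\) and adjoint eigenvector \(y\), any relation \((A-\mu I)\Psi =s x\) paired with \(y\) gives \(s\langle y,x\rangle =0\), so a nonzero pairing forces \(s=0\). The two \(2\times 2\) matrices and all function names below are illustrative assumptions.

```python
# Finite-dimensional analogue of the simplicity argument (illustration only).

def matvec(A, x):
    """Matrix-vector product for a square matrix stored as nested lists."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) for i in range(len(A))]

def adjoint(A):
    """Conjugate transpose of A."""
    n = len(A)
    return [[complex(A[j][i]).conjugate() for j in range(n)] for i in range(n)]

def pairing(y, x):
    """Complex inner product <y, x> = sum_i conj(y_i) * x_i."""
    return sum(complex(yi).conjugate() * xi for yi, xi in zip(y, x))

def is_eigenpair(A, mu, x, tol=1e-12):
    """Check A x = mu x componentwise."""
    Ax = matvec(A, x)
    return all(abs(Ax[i] - mu * x[i]) < tol for i in range(len(x)))

# Case 1: eigenvalue i of a rotation generator. The pairing is nonzero,
# so the eigenvalue is simple.
rotation = [[0, -1], [1, 0]]
mu_rot = 1j
x_rot = [1, -1j]   # rotation   x = mu_rot       x
y_rot = [1, -1j]   # rotation^H y = conj(mu_rot) y
pair_rot = pairing(y_rot, x_rot)

# Case 2: a Jordan block. The pairing vanishes, and a generalized
# eigenvector exists: (A - 2I) psi = 1 * x with s = 1 != 0.
jordan = [[2, 1], [0, 2]]
x_jor = [1, 0]
y_jor = [0, 1]
pair_jor = pairing(y_jor, x_jor)
shifted = [[0, 1], [0, 0]]          # jordan - 2I
chain = matvec(shifted, [0, 1])     # equals x_jor

print("rotation pairing:", pair_rot)
print("Jordan pairing:", pair_jor, "chain:", chain)
```

In the proof above, the quantity playing the role of the pairing is \(\mathcal {W}(\lambda )\), and its nonzero limit as \(\lambda \rightarrow 0\) is what rules out a generalized eigenfunction.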

Note that \(\textrm{i}\lambda \nu _\lambda \) is simple whenever it exists. Then, by the implicit function theorem, there exist a neighborhood \(P_\lambda \times V_\lambda \times O_\lambda \) of \( ( \varphi _\lambda , \psi _\lambda , \textrm{i}\nu _\lambda ,l_\lambda )\) (where \(P_\lambda \), \(V_\lambda \) and \(O_\lambda \) are neighborhoods of \(( \varphi _\lambda , \psi _\lambda )\), \(\textrm{i}\nu _\lambda \) and \(l_\lambda \), respectively) and a continuously differentiable function \( ( \varphi (l), \psi (l), \mu (l)):O_\lambda \rightarrow P_\lambda \times V_\lambda \) such that \(\mu (l_\lambda )=\textrm{i}\nu _\lambda ,\;( \varphi (l_\lambda ), \psi (l_\lambda ))=( \varphi _\lambda , \psi _\lambda )\), where \(\nu _\lambda \), \(\varphi _\lambda \) and \(\psi _\lambda \) are defined in Theorem 3.5. Moreover, for each \(l \in O_\lambda \), the only eigenvalue of \(A_{l}(\lambda )\) in \(V_\lambda \) is \(\mu (l)\), and

Then, we show that the following transversality condition holds.

Theorem 3.9

Let \(l_\lambda \) be obtained in Theorem 3.5. Then

Proof

Multiplying both sides of (3.40) by \({\mathcal {I}}\) to the left, and differentiating the result with respect to l at \(l=l_\lambda \), we have

where \(M^{(\lambda ,l)}_i\;(i=1,\ldots ,4)\) and \({\mathcal {I}}\) are defined in (3.1) and (3.34), respectively. Note from (3.35) and Lemma 3.7 that

where \(\tilde{\varphi }_\lambda \) and \(\tilde{\psi }_\lambda \) are defined in Lemma 3.7. Then, multiplying both sides of (3.41) by \((\tilde{\varphi }_\lambda , \tilde{\psi }_\lambda )\) to the left, and integrating the result over \(\Omega \), we have

where \(\mathcal {W}(\lambda )\) is defined in (3.38). From Theorem 3.5 and Lemma 3.7, we see that

It follows from Theorem 2.5 that \(( u^{(\lambda ,l)}, v^{(\lambda ,l)})\) is continuously differentiable. This, combined with the embedding theorems and Eq. (3.43), implies that

It follows from Lemma 3.3 and Eq. (3.29) that

This, together with (3.39), (3.42) and (3.44), yields

This completes the proof.\(\square \)
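
The strategy of this proof (differentiate the eigenvalue equation in \(l\), pair with the adjoint eigenfunction, and divide by the pairing) has a simple matrix counterpart, \(\mu '(l)=\langle y,A'(l)x\rangle /\langle y,x\rangle \). The sketch below checks this identity against a finite difference on a hypothetical \(2\times 2\) family \(A(l)\) whose eigenvalues cross the imaginary axis at \(l=1\). The matrix and all names are illustrative assumptions and are unrelated to the coefficients \(M^{(\lambda ,l)}_i\) of the paper.

```python
import cmath

def A(l):
    """Hypothetical matrix family: trace l - 1, determinant 2."""
    return [[l - 1.0, -2.0], [1.0, 0.0]]

def mu(l):
    """Eigenvalue of A(l) with positive imaginary part (valid near l = 1)."""
    trace, det = l - 1.0, 2.0
    return (trace + cmath.sqrt(trace * trace - 4.0 * det)) / 2.0

def matvec(M, x):
    return [sum(M[i][j] * x[j] for j in range(len(x))) for i in range(len(M))]

def pairing(y, x):
    """Complex inner product <y, x> = sum_i conj(y_i) * x_i."""
    return sum(complex(yi).conjugate() * xi for yi, xi in zip(y, x))

l_star = 1.0
mu_star = mu(l_star)              # purely imaginary at the crossing
x = [mu_star, 1.0]                # A(l_star)   x = mu_star       x
y = [1.0, mu_star.conjugate()]    # A(l_star)^H y = conj(mu_star) y
dA = [[1.0, 0.0], [0.0, 0.0]]     # derivative of A(l) with respect to l

# Derivative of the eigenvalue from the adjoint pairing.
formula = pairing(y, matvec(dA, x)) / pairing(y, x)

# Independent check by a central finite difference.
h = 1e-6
finite_diff = (mu(l_star + h) - mu(l_star - h)) / (2.0 * h)

print("pairing formula:", formula)
print("finite difference:", finite_diff)
```

A positive real part of this derivative is what a transversality condition expresses: the eigenvalue crosses the imaginary axis with nonzero speed as the parameter passes the critical value.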

From Theorems 2.5, 3.5, 3.8 and 3.9, we can obtain the following results on the dynamics of model (1.4), see also Fig. 1.

Theorem 3.10

Let \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) be the unique positive steady state (obtained in Theorem 2.5) of model (1.4) for \(l\in {\mathcal {L}}:=[\tilde{l}+\epsilon ,1/\epsilon ]\) and \(\lambda \in (0,\delta _\epsilon ]\) with \(0<\epsilon \ll 1\), where \(\tilde{l}\) and \(\delta _\epsilon \) are defined in Eq. (2.8) and Theorem 2.5, respectively. Then the following statements hold.

(i) If \({\mathcal {T}}(\alpha )<0\), where \({\mathcal {T}} (\alpha )\) is defined in Lemma 3.3, then there exists \(\tilde{\lambda }_1\in (0,\delta _\epsilon )\) such that, for each \(\lambda \in (0,\tilde{\lambda }_1]\), the positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) of model (1.4) is locally asymptotically stable for \(l\in {\mathcal {L}}\).

(ii) If \({\mathcal {T}}(\alpha )>0\), then there exists \(\tilde{\lambda }_2\in (0,\delta _\epsilon )\) and a continuously differentiable mapping

$$\begin{aligned} \lambda \mapsto l_\lambda :(0,\tilde{\lambda }_2]\rightarrow {\mathcal {L}}=[\tilde{l}+\epsilon ,1/\epsilon ] \end{aligned}$$

such that, for each \(\lambda \in (0,\tilde{\lambda }_2]\), the positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) of model (1.4) is locally asymptotically stable when \(l \in [ \tilde{l}+\epsilon ,l_\lambda )\) and unstable when \(l \in (l_\lambda , 1/\epsilon ]\). Moreover, system (1.4) undergoes a Hopf bifurcation at \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) when \(l=l_\lambda \).

Proof

To prove (i), we need to show that if \({\mathcal {T}}(\alpha )<0\), there exists \(\tilde{\lambda }_1>0\) such that

If it is not true, then there exists a sequence \(\{ (\lambda _k ,l_k )\}_{k=1}^\infty \) such that \(\lim _{k\rightarrow \infty }\lambda _k=0\), \(\lim _{k\rightarrow \infty }l_k=l^*\in {\mathcal {L}}\), and

Then, for \(k\ge 1\),

is solvable for some value of \((\mu _{k},\varphi _{k}, \psi _{k})\) with \({\mathcal {R}}e \mu _{k}\ge 0\) and \( (\varphi _{k}, \psi _{k})^T(\ne (0,0)^T)\in (X_{\mathbb C})^2\). Substituting \((\mu ,\varphi ,\psi )=(\mu _{k},\varphi _{k},\psi _{k})\) into (3.45), we have

Ignoring a scalar factor, we see that \(( \varphi _{k}, \psi _{k})^T(\ne ( 0,0)^T)\in (X_{\mathbb C})^2\) can be represented as

and \((\mu _{k},\delta _{k}, s_{1k}, s_{2k}, w_{k},z_{k})\) satisfies

where

Using similar arguments as in the proof of Theorem 3.5, we see that

Since \({\mathcal {H}}_5(\mu _{k},\delta _{k}, s_{1k}, s_{2k}, w_{k},z_{k},l_k,\lambda _k)=0\), we see that, up to a subsequence, \(\lim _{k\rightarrow \infty } \delta _{k } =\delta ^*, \lim _{k\rightarrow \infty } s_{1k } =s_1^*\) and \(\lim _{k\rightarrow \infty }s_{2k }=s_2^*\). It follows from Lemma 3.1 that, up to a subsequence, \(\lim _{k\rightarrow \infty } \mu _{k }=\mu ^*\) with \({\mathcal {R}}e \mu ^*\ge 0\). Then, taking \(k\rightarrow \infty \) on both sides of \({\mathcal {H}}_j(\mu _{k},\delta _{k}, s_{1k}, s_{2k}, w_{k},z_{k},l_k ,\lambda _k)=0\) for \(j=1,3\), we have

where \(\mathcal {S}(l)\) is defined in Lemma 3.3. Note from (3.24) that \(\displaystyle \int _\Omega M_{4}^{(0,l^*)} dx=0\), and consequently, \(\mu ^*\) is an eigenvalue of the following matrix

It follows from Lemma 3.3 that \(\mathcal {S}(l^*)<0\), which contradicts the fact that \({\mathcal {R}}e \mu ^*\ge 0\). Therefore, (i) holds.

Now we consider the case of \({\mathcal {T}}(\alpha )>0\). Then we only need to show that there exists \(\tilde{\lambda }_2>0\) such that

Note from Lemma 3.3 that \(\mathcal {S}(\tilde{l}+\epsilon )<0\). Then substituting \(l_k= \tilde{l}+\epsilon \) in the proof of (i) and using similar arguments, we can also obtain a contradiction. This proves (ii).\(\square \)

Remark 3.11

We remark that, in Theorem 3.10, \(\tilde{\lambda }_i\) depends on \(\alpha \) and \(\epsilon \) for \(i=1,2\).

4 The effect of the advection

In this section, we show the effect of advection. For later use, we first show the properties of the following auxiliary sequence:

Lemma 4.1

Let \(\{ B_k\}_{k=0}^\infty \) be defined in (4.1), and let \(\mathcal {B}=\{x\in \Omega :\;m(x)>1\}\). Then \(B_{k+1}\ge B_k\) for \(k=0,1,2,\ldots \); \(\lim _{k\rightarrow \infty } B_k =\infty \) if \({\mathcal {B}}\ne \emptyset \); and \(\lim _{k\rightarrow \infty } B_k =0\) if \({\mathcal {B}}=\emptyset \).

Proof

A direct computation implies that

where

and

Note that

Then we obtain that \(B_{k+1}\ge B_k\) for \(k=0,1,2,\ldots \), \(\lim _{k\rightarrow \infty } f_k (x)=f_* (x)\), and \(\lim _{k\rightarrow \infty } g_k (x)=g_*(x)\), where \(g_* (x)\equiv 0\), and

Then we see from Lebesgue’s monotone convergence theorem that

Clearly, \(\int _\Omega f_* (x)dx=\infty \) if \({\mathcal {B}}\ne \emptyset \), and \(\int _\Omega f_* (x)dx=0\) if \({\mathcal {B}}=\emptyset \). This, combined with (4.2) and (4.3), implies that \(\lim _{k\rightarrow \infty } B_k =\infty \) if \({\mathcal {B}}\ne \emptyset \), and \(\lim _{k\rightarrow \infty } B_k =0\) if \({\mathcal {B}}=\emptyset \).\(\square \)
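
The behaviour described in Lemma 4.1 can be checked numerically. The sketch below assumes that (4.1) has the form \(B_k=\int _\Omega m^k(x)(m(x)-1)dx\); this form is an assumption, chosen because it agrees with \(B_0={\mathcal {T}}(0)=\int _\Omega (m(x)-1)dx\) in Theorem 4.2 and with the three conclusions of the lemma. The domain \(\Omega =(0,1)\), the two sample functions \(m\), and all names are hypothetical.

```python
def midpoint_integral(f, a=0.0, b=1.0, n=2000):
    """Composite midpoint rule on (a, b)."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def B(k, m):
    """ASSUMED form of (4.1): integral over (0, 1) of m^k * (m - 1)."""
    return midpoint_integral(lambda x: m(x) ** k * (m(x) - 1.0))

def m_rich(x):
    """Sample m exceeding 1 on (0.6, 1), so the set {m > 1} is nonempty."""
    return 0.4 + x

def m_poor(x):
    """Sample m staying below 1 everywhere, so the set {m > 1} is empty."""
    return 0.4 + 0.5 * x

seq_rich = [B(k, m_rich) for k in range(41)]
seq_poor = [B(k, m_poor) for k in range(41)]

for k in (0, 10, 20, 40):
    print(k, seq_rich[k], seq_poor[k])
```

Under this assumed form, \(B_{k+1}-B_k=\int _\Omega m^k(x)(m(x)-1)^2dx\ge 0\), which is why both sequences are nondecreasing; the first grows without bound and the second increases to 0.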

Now we consider the function \({\mathcal {T}}(\alpha )\), which, by Theorem 3.10, determines whether a Hopf bifurcation occurs.

Theorem 4.2

Let \({\mathcal {T}} (\alpha )\) and \({\mathcal {B}}\) be defined in Lemmas 3.3 and 4.1, respectively. Then the following statements hold:

(i) If \({\mathcal {T}}(0)=\int _\Omega ( m(x) -1) dx\ge 0\), then \({\mathcal {T}}(\alpha )>0\) for any \(\alpha >0\);

(ii) If \({\mathcal {T}}(0)<0\) and \({\mathcal {B}}\ne \emptyset \), then there exists \(\alpha _*>0\) such that \({\mathcal {T}}(\alpha _*)=0\), \({\mathcal {T}}(\alpha )<0\) for \(0<\alpha <\alpha _*\), and \({\mathcal {T}}(\alpha )>0\) for \(\alpha >\alpha _*\);

(iii) If \({\mathcal {B}}=\emptyset \), then \({\mathcal {T}}(\alpha )\le 0\) for any \(\alpha \ge 0\).

Proof

For simplicity, we denote

A direct computation yields

Note that m(x) is non-constant. Then we see that, for \(k\ge 0\),

which yields

and consequently,

Here \({\mathcal {T}}^{(k)} (0) =B_k\), where \(B_k\) is defined in (4.1).

Now we show that (i) holds. Note that \({\mathcal {T}} (0) ={\mathcal {T}}^{(0)} (0) = B_0\ge 0\). Then we see from (4.4) that \({\mathcal {T}} (\alpha )>0\) for all \(\alpha >0\). Then we show that (iii) holds. Since \({\mathcal {B}}=\emptyset \), we have \(0\le m(x)\le 1\), and consequently, \({\mathcal {T}}(\alpha )\le 0\) for all \(\alpha \ge 0\).

Finally, we consider (ii). Note that \({\mathcal {T}} (0) = B_0<0\). It follows from Lemma 4.1 that there exists an integer \(k_*\ge 1\) such that \(B_k\ge 0\) for \(k\ge k_*\) and \(B_k<0\) for \(0\le k< k_*\). This, combined with (4.4), implies that

Then \({\mathcal {T}}^{(k_*-1)} (\alpha )\) is strictly increasing for \(\alpha >0\), and consequently,

We claim that \(\alpha ^\infty _{k_*-1}=\infty \). If it is not true, then

Note from (4.5) that \({\mathcal {T}}^{(k_*)} (\alpha )\) is also strictly increasing for \(\alpha >0\). This, combined with the fact \({\mathcal {T}}^{(k_*)} (0)=B_{k_*}\ge 0\), implies that

Then we see from L’Hôpital’s rule that

which contradicts (4.6). Therefore, the claim is true and \(\lim _{\alpha \rightarrow \infty } {\mathcal {T}}^{(k_*-1)} (\alpha )=\infty \). This, combined with the fact that \({\mathcal {T}}^{(k_*-1)}(0)=B_{k_*-1}\), implies that there exists \(\alpha _{k_*-1}\) such that \({\mathcal {T}}^{(k_*-1)}(\alpha _{k_*-1} )=0\), and

This implies that \({\mathcal {T}}^{(k_*-2)}(\alpha )\) is strictly decreasing for \(\alpha \in [0,\alpha _{k_*-1}]\) and strictly increasing for \(\alpha \in [\alpha _{k_*-1},\infty )\). Therefore, \( \lim _{\alpha \rightarrow \infty } {\mathcal {T}}^{(k_*-2)} (\alpha )=\alpha ^\infty _{k_*-2}\). Then we claim that \(\alpha ^\infty _{k_*-2}=\infty \). If it is not true, then

By L’Hôpital’s rule again, we have

which contradicts (4.7). Therefore, \( \lim _{\alpha \rightarrow \infty } {\mathcal {T}}^{(k_*-2)} (\alpha )=\infty \). Then there exists \(\alpha _{k_*-2}\) such that \({\mathcal {T}}^{(k_*-2)}(\alpha _{k_*-2} )=0\), and

Therefore, we can repeat the previous arguments to obtain (ii). This completes the proof.\(\square \)
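
Case (ii) of Theorem 4.2 can be explored numerically. The sketch below assumes \({\mathcal {T}}(\alpha )=\int _\Omega e^{\alpha m(x)}(m(x)-1)dx\); this is an assumption about the definition in Lemma 3.3, chosen because it gives \({\mathcal {T}}(0)=\int _\Omega (m(x)-1)dx\) and \({\mathcal {T}}^{(k)}(0)=\int _\Omega m^k(x)(m(x)-1)dx\). The domain \(\Omega =(0,1)\), the sample \(m(x)=0.4+x\) (for which \({\mathcal {T}}(0)<0\) and \({\mathcal {B}}\ne \emptyset \)), and all names are hypothetical.

```python
import math

def midpoint_integral(f, a=0.0, b=1.0, n=4000):
    """Composite midpoint rule on (a, b)."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def m(x):
    """Sample heterogeneity with mean below 1 but m > 1 on (0.6, 1)."""
    return 0.4 + x

def T(alpha):
    """ASSUMED form of T(alpha): integral of exp(alpha*m) * (m - 1)."""
    return midpoint_integral(lambda x: math.exp(alpha * m(x)) * (m(x) - 1.0))

def find_alpha_star(tol=1e-10):
    """Bisection for the sign change of T, which case (ii) says is unique."""
    lo, hi = 0.0, 1.0
    while T(hi) <= 0.0:      # enlarge the bracket until T becomes positive
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if T(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

alpha_star = find_alpha_star()
print("T(0) =", T(0.0))
print("alpha_* =", alpha_star)
```

Bisection is used here only because the theorem guarantees a single sign change for \(\alpha >0\); any bracketing root finder would serve equally well.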

Then, by virtue of Theorem 4.2, we show the effect of the advection rate \(\alpha \) on the occurrence of Hopf bifurcations for model (1.4).

Proposition 4.3

Assume that \({\mathcal {T}}(0)<0\), \({\mathcal {B}}\ne \emptyset \), and let \(\alpha _*\) be defined in Theorem 4.2. Then for any \(\epsilon \) with \(0<\epsilon \ll 1\) and \(\alpha \ne \alpha _*\), there exists \(\tilde{\lambda }(\alpha ,\epsilon )>0\) such that the following statements hold.

(i) If \(\alpha <\alpha _*\), then for each \(\lambda \in (0,\tilde{\lambda }(\alpha ,\epsilon )]\), the positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) of model (1.4) is locally asymptotically stable for \(l\in {\mathcal {L}}:=[\tilde{l}+\epsilon ,1/\epsilon ]\), where \(\tilde{l}\) is defined in (2.8) and depends on \(\alpha \).

(ii) If \(\alpha >\alpha _*\), then there exists a continuously differentiable mapping

$$\begin{aligned} \lambda \mapsto l_\lambda :[0,\tilde{\lambda }(\alpha ,\epsilon )]\rightarrow {\mathcal {L}}=[\tilde{l}+\epsilon ,1/\epsilon ] \end{aligned}$$

such that, for each \(\lambda \in (0,\tilde{\lambda }(\alpha ,\epsilon )]\), the positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) of model (1.4) is locally asymptotically stable for \(l \in [ \tilde{l}+\epsilon ,l_\lambda )\) and unstable for \(l \in (l_\lambda , 1/\epsilon ]\), where \(\tilde{l}\) is defined in (2.8) and depends on \(\alpha \). Moreover, system (1.4) undergoes a Hopf bifurcation at \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) when \(l=l_\lambda \).

By Proposition 4.3, we see that the advection rate affects the occurrence of Hopf bifurcations if \({\mathcal {T}}(0)<0\) and \({\mathcal {B}}\ne \emptyset \). More precisely, there exists a critical value \(\alpha _*\) such that a Hopf bifurcation can occur when \(\alpha >\alpha _*\) and cannot occur when \(\alpha <\alpha _*\). Next, we show that the advection rate can also affect the values of Hopf bifurcations if \({\mathcal {T}}(0)>0\).

Proposition 4.4

Assume that \({\mathcal {T}}(0)>0\). Then for any \(\epsilon \) with \(0<\epsilon \ll 1\) and \(\alpha \ge 0\), there exists \(\tilde{\lambda }(\alpha ,\epsilon )>0\) and a continuously differentiable mapping

such that, for each \(\lambda \in (0,\tilde{\lambda }(\alpha ,\epsilon )]\), the positive steady state \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) of model (1.4) is locally asymptotically stable for \(l \in [ \tilde{l}+\epsilon ,l_\lambda )\) and unstable for \(l \in (l_\lambda , 1/\epsilon ]\), and system (1.4) undergoes a Hopf bifurcation at \(\left( u^{(\lambda ,l)},v^{(\lambda ,l)}\right) \) when \(l=l_{\lambda }\), where \(\tilde{l}\) is defined in (2.8) and depends on \(\alpha \). Moreover, \(\lim _{\lambda \rightarrow 0}l_{\lambda }=l_0\), and \(l_0\) (defined in Lemma 3.3) depends on \(\alpha \) and satisfies the following properties:

(i) If \({\mathcal {H}}>0\), then \(l'_0(\alpha )|_{\alpha =0}>0\), and \(l_0(\alpha ) \) is strictly increasing for \(\alpha \in (0,\epsilon )\) with \(0<\epsilon \ll 1\);

(ii) If \({\mathcal {H}}<0\), then \(l'_0(\alpha )|_{\alpha =0}<0\), and \(l_0(\alpha )\) is strictly decreasing for \(\alpha \in (0,\epsilon )\) with \(0<\epsilon \ll 1\).

Here \({\mathcal {H}}=2\left( \displaystyle \int _{\Omega }m(x)dx\right) ^2-|\Omega |\displaystyle \int _\Omega m(x) dx-|\Omega |\displaystyle \int _{\Omega }m^2(x)dx\).

Proof

Let \({\mathcal {S}}_1(c), {\mathcal {S}}'_1(c),{\mathcal {S}}_3(c), c_0\) be defined in the proof of Lemma 3.3, where \('\) is the derivative with respect to c, and they all depend on \(\alpha \). Therefore, we denote them by \({\mathcal {S}}_1(c,\alpha ), \displaystyle \frac{\partial {\mathcal {S}}_1}{\partial c}(c,\alpha ),{\mathcal {S}}_3(c,\alpha ), c_0(\alpha )\), respectively. By (3.19) and (3.22), we see that \(c_0(\alpha )=c_{0l}\) with \(l=l_{0}(\alpha )\) and \(\displaystyle \frac{d c_{0l}}{dl}<0\), which implies that \(l'_0(\alpha )\) has the same sign as \(-c'_0(\alpha )\).

Since \({\mathcal {T}}(0)>0\), it follows from Theorem 4.2 that \({\mathcal {T}}(\alpha )>0\) for all \(\alpha \ge 0\). This combined with Lemma 3.3 implies that \(c_0(\alpha )\) exists for all \(\alpha \ge 0\). From the proof of Lemma 3.3, we see that

and \(\displaystyle \frac{\partial {\mathcal {S}}_3}{\partial c}>0\) for all \(\alpha \ge 0\). Therefore, \(-c'_0(\alpha )\) has the same sign as \(\displaystyle \frac{\partial {\mathcal {S}}_3}{\partial \alpha }(c_0(\alpha ),\alpha )\). By (3.17), a direct computation yields

where

Substituting \(\alpha =0\) into (4.8), we obtain that

Then plugging \(c=c_0(0)\) and \(\alpha =0\) into (4.9), we have

which implies that \(l_0(\alpha )\) satisfies (i) and (ii).\(\square \)
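
The sign condition in Proposition 4.4 is easy to evaluate for a given \(m\). The sketch below computes \({\mathcal {H}}=2\left( \int _{\Omega }m\,dx\right) ^2-|\Omega |\int _\Omega m\,dx-|\Omega |\int _{\Omega }m^2dx\) exactly as stated in the proposition, on the hypothetical domain \(\Omega =(0,1)\) and for two hypothetical sample functions that both satisfy \({\mathcal {T}}(0)=\int _\Omega (m(x)-1)dx>0\). The function names and the quadrature rule are illustrative choices.

```python
def midpoint_integral(f, a=0.0, b=1.0, n=4000):
    """Composite midpoint rule on (a, b)."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def hopf_shift_indicator(m, a=0.0, b=1.0):
    """H = 2*(int m)^2 - |Omega|*int m - |Omega|*int m^2 on Omega = (a, b)."""
    size = b - a
    int_m = midpoint_integral(m, a, b)
    int_m2 = midpoint_integral(lambda x: m(x) ** 2, a, b)
    return 2.0 * int_m ** 2 - size * int_m - size * int_m2

def m_mild(x):
    """Weakly varying environment: mean 1.6, small variance."""
    return 1.5 + 0.2 * x

def m_steep(x):
    """Strongly varying environment: mean 1.2, large variance."""
    return 0.1 + 2.2 * x

H_mild = hopf_shift_indicator(m_mild)
H_steep = hopf_shift_indicator(m_steep)
print("H (mild)  =", H_mild)    # positive: case (i)
print("H (steep) =", H_steep)   # negative: case (ii)
```

When \(|\Omega |=1\), writing \(M=\int _\Omega m\,dx\) and \(V=\int _\Omega (m-M)^2dx\) gives \({\mathcal {H}}=M(M-1)-V\), so in these examples the sign of \({\mathcal {H}}\) reflects a competition between the mean excess of \(m\) over 1 and its spatial variance.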

Here we only show the effects of advection rate on the values of Hopf bifurcations for \(0<\alpha \ll 1\), and the general case still awaits further investigation.

Data availability statement

All data generated or analyzed during this study are included in this manuscript.

References

Averill, I., Lam, K.-Y., Lou, Y.: The role of advection in a two-species competition model: a bifurcation approach. Mem. Am. Math. Soc. 245(1161), v+117 (2017)

Belgacem, F., Cosner, C.: The effects of dispersal along environmental gradients on the dynamics of populations in heterogeneous environments. Can. Appl. Math. Q. 3(4), 379–397 (1995)

Cantrell, R.S., Cosner, C., Lou, Y.: Movement toward better environments and the evolution of rapid diffusion. Math. Biosci. 204(2), 199–214 (2006)

Cantrell, R.S., Cosner, C., Lou, Y.: Advection-mediated coexistence of competing species. Proc. R. Soc. Edinb. Sect. A 137(3), 497–518 (2007)

Chen, X., Lou, Y.: Principal eigenvalue and eigenfunctions of an elliptic operator with large advection and its application to a competition model. Indiana Univ. Math. J. 57(2), 627–658 (2008)

Cosner, C., Lou, Y.: Does movement toward better environments always benefit a population? J. Math. Anal. Appl. 277(2), 489–503 (2003)

Dockery, J., Hutson, V., Mischaikow, K., Pernarowski, M.: The evolution of slow dispersal rates: a reaction diffusion model. J. Math. Biol. 37(1), 61–83 (1998)

Dong, Y., Li, S., Zhang, S.: Hopf bifurcation in a reaction–diffusion model with Degn–Harrison reaction scheme. Nonlinear Anal. Real World Appl. 33, 284–297 (2017)

Du, Y., Hsu, S.-B.: A diffusive predator–prey model in heterogeneous environment. J. Differ. Equ. 203(2), 331–364 (2004)

Du, Y., Shi, J.: Allee effect and bistability in a spatially heterogeneous predator–prey model. Trans. Am. Math. Soc. 359(9), 4557–4593 (2007)

Hassard, B.D., Kazarinoff, N.D., Wan, Y.H.: Theory and applications of Hopf bifurcation. In: London Mathematical Society Lecture Note Series, vol. 41. Cambridge University Press, Cambridge (1981)

He, X., Ni, W.-M.: Global dynamics of the Lotka–Volterra competition–diffusion system: diffusion and spatial heterogeneity I. Commun. Pure Appl. Math. 69(5), 981–1014 (2016)

Huang, Q.-H., Jin, Y., Lewis, M.A.: \(R_0\) analysis of a benthic-drift model for a stream population. SIAM J. Appl. Dyn. Syst. 15(1), 287–321 (2016)

Jin, Y., Lewis, M.A.: Seasonal influences on population spread and persistence in streams: critical domain size. SIAM J. Appl. Math. 71(4), 1241–1262 (2011)

Jin, Y., Peng, R., Shi, J.: Population dynamics in river networks. J. Nonlinear Sci. 29(6), 2501–2545 (2019)

Lam, K.-Y.: Concentration phenomena of a semilinear elliptic equation with large advection in an ecological model. J. Differ. Equ. 250(1), 161–181 (2011)

Lam, K.-Y., Lou, Y.: Evolutionarily stable and convergent stable strategies in reaction–diffusion models for conditional dispersal. Bull. Math. Biol. 76(2), 261–291 (2014)

Lam, K.-Y., Lou, Y., Lutscher, F.: The emergence of range limits in advective environments. SIAM J. Appl. Math. 76(2), 641–662 (2016)

Lam, K.-Y., Ni, W.-M.: Uniqueness and complete dynamics in heterogeneous competition–diffusion systems. SIAM J. Appl. Math. 72(6), 1695–1712 (2012)

Li, S., Wu, J.: Asymptotic behavior and stability of positive solutions to a spatially heterogeneous predator–prey system. J. Differ. Equ. 265(8), 3754–3791 (2018)

Lou, Y.: On the effects of migration and spatial heterogeneity on single and multiple species. J. Differ. Equ. 223(2), 400–426 (2006)

Lou, Y.: Some reaction diffusion models in spatial ecology. Sci. Sin. Math. 45, 1619–1634 (2015). (in Chinese)

Lou, Y., Lutscher, F.: Evolution of dispersal in open advective environments. J. Math. Biol. 69(6–7), 1319–1342 (2014)

Lou, Y., Nie, H.: Global dynamics of a generalist predator-prey model in open advective environments. J. Math. Biol. 84(6), 1–40 (2022)

Lou, Y., Wang, B.: Local dynamics of a diffusive predator–prey model in spatially heterogeneous environment. J. Fixed Point Theory Appl. 19(1), 755–772 (2017)

Lou, Y., Zhao, X.-Q., Zhou, P.: Global dynamics of a Lotka–Volterra competition–diffusion–advection system in heterogeneous environments. J. Math. Pures Appl. 9(121), 47–82 (2019)

Lou, Y., Zhou, P.: Evolution of dispersal in advective homogeneous environment: the effect of boundary conditions. J. Differ. Equ. 259(1), 141–171 (2015)

Lutscher, F., Lewis, M.A., McCauley, E.: Effects of heterogeneity on spread and persistence in rivers. Bull. Math. Biol. 68(8), 2129–2160 (2006)

Lutscher, F., McCauley, E., Lewis, M.A.: Spatial patterns and coexistence mechanisms in systems with unidirectional flow. Theor. Popul. Biol. 71(3), 267–277 (2007)

Lutscher, F., Pachepsky, E., Lewis, M.A.: The effect of dispersal patterns on stream populations. SIAM Rev. 47(4), 749–772 (2005)

May, R.M.: Limit cycles in predator–prey communities. Science 177, 900–902 (1972)

McKenzie, H.W., Jin, Y., Jacobsen, J., Lewis, M.A.: \(R_0\) analysis of a spatiotemporal model for a stream population. SIAM J. Appl. Dyn. Syst. 11(2), 567–596 (2012)

Nie, H., Wang, B., Wu, J.: Invasion analysis on a predator–prey system in open advective environments. J. Math. Biol. 81(6–7), 1429–1463 (2020)

Peng, R., Shi, J.: Non-existence of non-constant positive steady states of two Holling type-II predator–prey systems: strong interaction case. J. Differ. Equ. 247(3), 866–886 (2009)

Shi, H.-B., Ruan, S., Su, Y., Zhang, J.-F.: Spatiotemporal dynamics of a diffusive Leslie–Gower predator–prey model with ratio-dependent functional response. Int. J. Bifurc. Chaos Appl. Sci. Eng. 25(5), 1530014, 16 (2015)

Tang, D., Chen, Y.: Global dynamics of a Lotka–Volterra competition–diffusion system in advective heterogeneous environments. SIAM J. Appl. Dyn. Syst. 20(3), 1232–1252 (2021)

Tang, D., Chen, Y.: Predator-prey systems in open advective heterogeneous environments with Holling–Tanner interaction term. J. Differ. Equ. 334, 280–308 (2022)

Wang, B., Zhang, Z.: Bifurcation analysis of a diffusive predator–prey model in spatially heterogeneous environment. Electron. J. Qual. Theory Differ. Equ. 42, 1–17 (2017)

Wang, J., Nie, H.: Invasion dynamics of a predator–prey system in closed advective environments. J. Differ. Equ. 318, 298–322 (2022)

Wang, J., Shi, J., Wei, J.: Dynamics and pattern formation in a diffusive predator–prey system with strong Allee effect in prey. J. Differ. Equ. 251(4–5), 1276–1304 (2011)

Wang, M.: Stability and Hopf bifurcation for a prey–predator model with prey-stage structure and diffusion. Math. Biosci. 212(2), 149–160 (2008)

Xin, S., Li, L., Nie, H.: The effect of advection on a predator–prey model in open advective environments. Commun. Nonlinear Sci. Numer. Simul. 113, Paper No. 106567, 17 (2022)

Yi, F., Wei, J., Shi, J.: Bifurcation and spatiotemporal patterns in a homogeneous diffusive predator–prey system. J. Differ. Equ. 246(5), 1944–1977 (2009)

Zhou, P.: On a Lotka–Volterra competition system: diffusion vs advection. Calc. Var. Partial Differ. Equ. 55(6), Art. 137, 29 (2016)

Zhou, P., Xiao, D.: Global dynamics of a classical Lotka–Volterra competition–diffusion–advection system. J. Funct. Anal. 275(2), 356–380 (2018)

Additional information

Communicated by A. Neves.

This research is supported by Shandong Provincial Natural Science Foundation of China (No. ZR2020YQ01) and the National Natural Science Foundation of China (No. 12171117).

Cite this article

Sun, Y., Chen, S. Stability and bifurcation in a reaction–diffusion–advection predator–prey model. Calc. Var. 62, 61 (2023). https://doi.org/10.1007/s00526-022-02405-2
