Abstract

Parameter estimation is always the focus of constructing differential equations to simulate dynamic systems. In order to estimate unknown parameters in multi-factor uncertain differential equations, the definition of residuals is presented and important properties of residuals are demonstrated. Based on the property that the residuals obey the linear uncertainty distribution, moment estimation of the unknown parameters in the multi-factor uncertain differential equation is performed and the reasonableness of the parameter estimation results is verified. Some examples with real data are given to demonstrate the feasibility of the method.


1 Introduction

Using observational data to build dynamic models and explore patterns of development is a common research method. In practice, however, data are often scarce or difficult to measure. To address this problem, Liu (2007) proposed constructing dynamic models based on experts' belief degrees and founded uncertainty theory on four axioms. As the research progressed, Liu (2009) introduced a class of stationary independent increment processes, now known as Liu processes. Uncertainty theory has attracted the interest of many scholars and has developed rapidly in recent years. It has also proved useful in practical applications such as modelling infectious diseases (Lio and Liu 2021), predicting stock prices (Yao 2015a) and optimising logistics networks (Peng et al. 2022).

The uncertain differential equation (UDE) driven by the Liu process was proposed by Yao (2016) to model and analyze dynamical systems subject to uncertain factors. To model complex dynamic systems in different situations, more and more types of uncertain differential equations have been proposed. Backward uncertain differential equations were presented by Ge and Zhu (2013), who also established existence theorems for their solutions. Yao (2015b) studied uncertain differential equations with jumps, driven by both a renewal process and a Liu process, and proved the existence and uniqueness of their solutions as well as their stability in the sense of uncertain measure. Yao (2016) proposed high-order uncertain differential equations. Li et al. (2015) proposed the multi-factor uncertain differential equation. The focus of this study is parameter estimation for multi-factor uncertain differential equations.

Parameter estimation has long been a major research topic in the field of differential equations, and various parameter estimation methods for uncertain differential equations have been proposed in succession. Sheng et al. (2020) presented a least squares estimation method for unknown parameters. Yao and Liu (2020) suggested a moment estimation technique based on the difference form. To handle the case in which the system of moment estimation equations has no solution, Liu (2021) proposed generalized moment estimation. In addition, Lio and Liu (2020) proposed the uncertain maximum likelihood method. Sheng and Zhang (2021) introduced three methods for parameter estimation based on different types of solutions. Liu and Liu (2022) first defined the residual of an uncertain differential equation and used residuals to estimate unknown parameters. Zhang et al. (2021a) estimated the parameters of high-order uncertain differential equations. Zhang and Sheng (2022) adapted the least squares estimation method to estimate time-varying parameters in UDEs. In parameter estimation, testing the estimates is equally important. Ye and Liu (2023) proposed an uncertain hypothesis test for verifying that an uncertain differential equation is consistent with the observed data. Zhang et al. (2023) used uncertain hypothesis testing to determine the reasonableness of parameter estimates. Ye and Liu (2022) applied uncertain hypothesis testing to uncertain regression analysis.

For multi-factor uncertain differential equations, Zhang et al. (2021b) proposed a weighted method for moment estimation and least squares estimation of the unknown parameters. However, that paper did not give a specific criterion for judging whether the weighting is reasonable. To avoid complicated weighting and the question of its rationality, this paper proposes a new residual-based method for estimating the unknown parameters of multi-factor uncertain differential equations.

This paper introduces the idea of residuals into parameter estimation for multi-factor uncertain differential equations. It presents the definition of residuals and proves some of their properties. Moment estimation of the unknown parameters is then performed by treating the residuals as samples from a linear uncertainty distribution. In Section 2, some basic definitions and theorems of uncertainty theory are introduced. In Section 3, the concept of residuals for multi-factor uncertain differential equations is presented, the properties of the residuals are proved and analytical expressions for the residuals are derived. In Section 4, based on the fact that the residuals follow the linear uncertainty distribution \({\mathcal {L}}(0,1)\), moment estimation of the unknown parameters in the multi-factor uncertain differential equation is performed and the estimation results are tested. In Section 5, two examples with real data are given to verify the reliability of the method. Section 6 summarizes the paper.

2 Preliminary

In this section, some necessary definitions and theorems in uncertainty theory are introduced to help readers understand what follows.

Definition 1

(Liu 2007, 2009) Let \(\text{ L }\) be a \(\sigma \)-algebra on a nonempty set \(\Gamma .\) A set function \(\text{ M }:\) \(\text{ L }\rightarrow [0, 1]\) is called an uncertain measure if it satisfies the following three axioms:

- Axiom 1 (normality axiom): \(\text{ M }\{\Gamma \}=1\) for the universal set \(\Gamma .\)

- Axiom 2 (duality axiom): \(\text{ M }\{\Lambda \}+\text{ M }\{\Lambda ^c\}=1\) for any event \(\Lambda \).

- Axiom 3 (subadditivity axiom): For every countable sequence of events \(\Lambda _1, \Lambda _2, \ldots ,\) we have

$$\begin{aligned} \text{ M }\left\{ \bigcup _{i=1}^{\infty }\Lambda _i\right\} \le \sum _{i=1}^{\infty }\text{ M }\left\{ \Lambda _i\right\} . \end{aligned}$$

The triplet \((\Gamma ,\text{ L },\text{ M})\) is called an uncertainty space. In addition, the product uncertain measure on the product \(\sigma \)-algebra \(\text{ L }\) was defined by Liu through a fourth axiom:

- Axiom 4 (product axiom): Let \((\Gamma _k,\text{ L}_k,\text{ M}_k)\) be uncertainty spaces for \(k=1, 2, \ldots \). The product uncertain measure \(\text{ M }\) is an uncertain measure satisfying

$$\begin{aligned} \text{ M }\left\{ \prod _{k=1}^{\infty }\Lambda _k\right\} =\bigwedge _{k=1}^{\infty }\text{ M}_k\left\{ \Lambda _k\right\} , \end{aligned}$$

where \(\Lambda _k\) are arbitrarily chosen events from \(\text{ L}_k\) for \(k=1, 2, \ldots \), respectively.

Definition 2

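(Liu 2009) An uncertain process \(C_t\) is called a Liu process if

- (i) \(C_0=0\) and almost all sample paths are Lipschitz continuous,

- (ii) \(C_t\) has stationary and independent increments,

- (iii) the increment \(C_{s+t}-C_s\) has a normal uncertainty distribution

$$\begin{aligned} \Phi _t(x)=\left( 1+\exp \left( -\frac{\pi x}{\sqrt{3}t}\right) \right) ^{-1},\quad x\in {\mathbb {R}}. \end{aligned}$$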

Definition 3

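(Liu 2007) Let \(\xi \) be an uncertain variable. Its uncertainty distribution is defined by

$$\begin{aligned} \Phi (x)=\text{ M }\left\{ \xi \le x\right\} \end{aligned}$$

for any real number x.

Common uncertainty distributions include linear uncertainty distributions \({\mathcal {L}}(a,b)\), zigzag uncertainty distributions \({\mathcal {Z}}(a,b,c)\) and normal uncertainty distributions \({\mathcal {N}}(e,\sigma )\). For example, an uncertain variable \(\xi \) that follows the linear uncertainty distribution \({\mathcal {L}}(0,1)\) has the uncertainty distribution

$$\begin{aligned} \Phi (x)=\left\{ \begin{array}{ll} 0, &{} \text{ if } x\le 0\\ x, &{} \text{ if } 0<x\le 1\\ 1, &{} \text{ if } x>1. \end{array}\right. \end{aligned}$$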

Definition 4

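(Li et al. 2015) Let \(C_{1t}\),\(C_{2t}\),\(\ldots \),\(C_{nt}\) be independent Liu processes, and let f and \(g_{1},g_{2},\ldots ,g_{n}\) be given functions. The multi-factor uncertain differential equation with respect to \(C_{jt}\) \((j=1,2,\ldots ,n)\)

$$\begin{aligned} \textrm{d}X_{t}=f(t,X_{t})\textrm{d}t+\sum _{j=1}^{n}g_{j}(t,X_{t})\textrm{d}C_{jt} \end{aligned}$$

is said to have an \(\alpha \)-path \(X_{t}^{\alpha }\) if it solves the corresponding ordinary differential equation

$$\begin{aligned} \textrm{d}X_{t}^{\alpha }=f(t,X_{t}^{\alpha })\textrm{d}t+\sum _{j=1}^{n}\left| g_{j}(t,X_{t}^{\alpha })\right| \Phi ^{-1}(\alpha )\textrm{d}t, \end{aligned}$$

where \(\Phi ^{-1}(\alpha )\) is the inverse standard normal uncertainty distribution, i.e.

$$\begin{aligned} \Phi ^{-1}(\alpha )=\frac{\sqrt{3}}{\pi }\ln \frac{\alpha }{1-\alpha }. \end{aligned}$$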
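Because the \(\alpha \)-path in Definition 4 solves an ordinary differential equation, it can be approximated with any standard ODE scheme. The following is a minimal sketch using the explicit Euler method. The function names, the step count and the two-factor example equation are illustrative assumptions and are not taken from the paper.

```python
import math

def inv_std_normal_uncertainty(alpha):
    """Inverse standard normal uncertainty distribution: (sqrt(3)/pi) * ln(alpha / (1 - alpha))."""
    return math.sqrt(3.0) / math.pi * math.log(alpha / (1.0 - alpha))

def alpha_path(f, gs, x0, t0, t1, alpha, steps=1000):
    """Explicit Euler approximation of the alpha-path at time t1, started from x0 at time t0.

    f  : drift function f(t, x)
    gs : list of diffusion functions g_j(t, x), one per Liu process
    """
    h = (t1 - t0) / steps
    c = inv_std_normal_uncertainty(alpha)
    t, x = t0, x0
    for _ in range(steps):
        # Right-hand side of the alpha-path ODE: f + sum_j |g_j| * Phi^{-1}(alpha)
        slope = f(t, x) + c * sum(abs(g(t, x)) for g in gs)
        x += h * slope
        t += h
    return x

# Hypothetical two-factor equation: dX = 1.0 dt + 0.5 dC1 + 0.3 dC2, X_0 = 0.
f = lambda t, x: 1.0
gs = [lambda t, x: 0.5, lambda t, x: 0.3]
median_path = alpha_path(f, gs, x0=0.0, t0=0.0, t1=1.0, alpha=0.5)
upper_path = alpha_path(f, gs, x0=0.0, t0=0.0, t1=1.0, alpha=0.9)
```

For \(\alpha =0.5\) the correction term vanishes, so the path follows the drift alone; larger values of \(\alpha \) give higher paths.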

Theorem 1

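(Ye and Liu 2023) Let \(\xi \) be an uncertain variable that follows a linear uncertainty distribution \({\mathcal {L}} (a,b)\) with unknown parameters a and b. The test for the hypotheses

$$\begin{aligned} H_0: a=a_0 \text{ and } b=b_0 \quad \text{ versus } \quad H_1: a\ne a_0 \text{ or } b\ne b_0 \end{aligned}$$

at significance level \(\alpha \) is

$$\begin{aligned} W=\Bigg \{(z_1,z_2,\ldots ,z_n): \text{ there are at least } \alpha \text{ of the indexes } i \text{ with } 1\le i\le n \text{ such that } z_i <\Phi _{\theta _0}^{-1}\left( \frac{\alpha }{2}\right) \text{ or } z_i >\Phi _{\theta _0}^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where \(\Phi _{\theta _0}^{-1}\) is the inverse uncertainty distribution of \({\mathcal {L}} (a_0,b_0)\), i.e.

$$\begin{aligned} \Phi _{\theta _0}^{-1}(\alpha )=(1-\alpha )a_0+\alpha b_0. \end{aligned}$$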

3 Residuals of multi-factor uncertain differential equations

This section presents the definition and two important properties of residuals for multi-factor uncertain differential equations. Analytic and numerical examples of residuals are given.

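Consider a multi-factor uncertain differential equation with n observations \((t_i,x_{t_i})\) \((i=1,2,\ldots ,n)\)

$$\begin{aligned} \textrm{d}X_{t}=f(t,X_{t})\textrm{d}t+\sum _{j=1}^{n}g_{j}(t,X_{t})\textrm{d}C_{jt}, \qquad (1) \end{aligned}$$

where f and \(g_{j}\) \((j=1,2,\ldots ,n)\) are given continuous functions and \(C_{jt}\) \((j=1,2,\ldots ,n)\) are independent Liu processes. From Eq. (1) and its observations, the i-th updated uncertain differential equation is obtained:

$$\begin{aligned} \left\{ \begin{array}{l} \textrm{d}X_{t}=f(t,X_{t})\textrm{d}t+\sum \limits _{j=1}^{n}g_{j}(t,X_{t})\textrm{d}C_{jt}\\ X_{t_{i-1}}=x_{t_{i-1}}, \end{array}\right. \qquad (2) \end{aligned}$$

where \(2\le i \le n\) and \(x_{t_{i-1}}\) is the new initial value at the new initial time \(t_{i-1}\).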

Definition 5

For any multi-factor uncertain differential equation with discrete observations \((t_i,x_{t_i})\) \((i=1,2,\ldots ,n)\), the i-th residual \(\varepsilon _{i}\) is defined by evaluating the uncertainty distribution \(\Phi _{t_{i}}\) of the uncertain variable \(X_{t_i}\) in Eq. (2) at the observation,

$$\begin{aligned} \varepsilon _{i}=\Phi _{t_{i}}(x_{t_i}), \end{aligned}$$

where \(2\le i\le n\) and \(x_{t_i}\) is the observation of \(X_{t_i}\) at time \(t_i\).

Example 1

Consider a multi-factor uncertain differential equation with discrete observations \((t_i,x_{t_i})\) \((i=1,2,\ldots ,n)\)

where \(\mu \) and \(\sigma _{j}\) are constants. By solving the i-th updated multi-factor uncertain differential equation below \((2\le i\le n)\)

we can get

Since the Liu integral

we can get the uncertainty distribution of \(X_{t_{i}}\)

By Definition 5, we get the i-th residual \(\varepsilon _{i}=\Phi _{t_{i}}(x_{t_i})\)

Example 2

Consider a multi-factor uncertain differential equation with discrete observations \((t_i,x_{t_i})\) \((i=1,2,\ldots ,n)\)

where \(\mu \), \(\sigma _{j}\) and \(\alpha \) \((0<\alpha <1)\) are constants. By solving the i-th updated multi-factor uncertain differential equation below \((2\le i\le n)\)

we can get

Since the Liu integrals

we can get the uncertainty distribution of \(X_{t_{i}}\)

By Definition 5, we get the i-th residual corresponding to \(\Phi _{t_{i}}(x_{t_i})\)

3.1 Important properties of residuals

The two properties of the residuals provide an important basis for subsequent processing of the data and parameter estimation.

Property 1

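From Eq. (2), the updated ordinary differential equation is obtained:

$$\begin{aligned} \left\{ \begin{array}{l} \textrm{d}X_{t}^{\alpha }=f(t,X_{t}^{\alpha })\textrm{d}t+\sum \limits _{j=1}^{n}\left| g_{j}(t,X_{t}^{\alpha })\right| \Phi ^{-1}(\alpha )\textrm{d}t\\ X_{t_{i-1}}^{\alpha }=x_{t_{i-1}}, \end{array}\right. \qquad (3) \end{aligned}$$

where

$$\begin{aligned} \Phi ^{-1}(\alpha )=\frac{\sqrt{3}}{\pi }\ln \frac{\alpha }{1-\alpha } \end{aligned}$$

and \(X_{t_{i}}^{\alpha }\) is the \(\alpha \)-path of \(X_{t_{i}}\). The residual \(\varepsilon _{i}\) equals the value of \(\alpha \) for which the \(\alpha \)-path \(X_{t_{i}}^{\alpha }\) coincides with the observation \(x_{t_i}\).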

Proof

Since for any \(\alpha \in (0,1)\), we have

then there must be an inverse uncertainty distribution \(\Phi _{t_{i}}^{-1}\) of \(X_{t_{i}}\).

Writing \(x=\Phi ^{-1}_{t_{i}}(\alpha )\), we get \(\alpha =\Phi _{t_{i}}(x)\) and

Therefore, \(\varepsilon _{i}\) can be regarded as the value of \(\alpha \) in \(X^{\alpha }_{t_{i}}\) at time \(t_{i}\). \(\square \)
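Property 1 suggests a direct numerical recipe: since the \(\alpha \)-path value at \(t_i\) increases with \(\alpha \), the residual can be found by searching for the \(\alpha \) whose path hits the observation. The sketch below does this by bisection for a toy equation \(\textrm{d}X_t=\mu \textrm{d}t+\sum _j\sigma _j\textrm{d}C_{jt}\) with constant coefficients, whose \(\alpha \)-path is available in closed form. The model, the parameter values and the function names are assumptions chosen only for illustration.

```python
import math

def alpha_path_linear(x_prev, dt, mu, sigmas, alpha):
    """Alpha-path value after time dt for the assumed toy model dX = mu dt + sum_j sigma_j dC_j."""
    c = math.sqrt(3.0) / math.pi * math.log(alpha / (1.0 - alpha))
    return x_prev + mu * dt + sum(abs(s) for s in sigmas) * c * dt

def residual_by_bisection(x_prev, x_obs, dt, mu, sigmas, tol=1e-12):
    """Find the alpha whose alpha-path passes through the observation x_obs."""
    lo, hi = 1e-12, 1.0 - 1e-12
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        # The alpha-path value is increasing in alpha, so bisection applies.
        if alpha_path_linear(x_prev, dt, mu, sigmas, mid) < x_obs:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical numbers: previous observation 0.0, next observation 1.2 one time unit later.
eps = residual_by_bisection(x_prev=0.0, x_obs=1.2, dt=1.0, mu=1.0, sigmas=[0.5, 0.3])
```

Here the observation lies above the drift-only prediction of 1.0, so the resulting residual is larger than 0.5.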

Property 2

The residuals of a multi-factor uncertain differential equation obey the linear uncertainty distribution \({\mathcal {L}} (0,1)\).

Proof

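The uncertainty distribution evaluated at the uncertain variable, \(\Phi _{t_{i}}(X_{t_i})\) \((2\le i\le n)\), is itself an uncertain variable with \(0\le \Phi _{t_{i}}(X_{t_i})\le 1\). For any \(0<x<1\), we have

$$\begin{aligned} \text{ M }\left\{ \Phi _{t_{i}}(X_{t_i})\le x\right\} =\text{ M }\left\{ X_{t_i}\le \Phi _{t_{i}}^{-1}(x)\right\} =\Phi _{t_{i}}\left( \Phi _{t_{i}}^{-1}(x)\right) =x. \end{aligned}$$

Hence the uncertainty distribution of \(\Phi _{t_{i}}(X_{t_i})\) is

$$\begin{aligned} \text{ M }\left\{ \Phi _{t_{i}}(X_{t_i})\le x\right\} =\left\{ \begin{array}{ll} 0, &{} \text{ if } x\le 0\\ x, &{} \text{ if } 0<x\le 1\\ 1, &{} \text{ if } x>1. \end{array}\right. \end{aligned}$$

Therefore, the uncertain variable \(\Phi _{t_{i}}(X_{t_i})\) follows the linear uncertainty distribution \({\mathcal {L}} (0,1)\), and the residuals \(\varepsilon _{i}=\Phi _{t_{i}}(x_{t_i})\) \((i=2,\ldots ,n)\) can be regarded as samples from \({\mathcal {L}} (0,1)\). \(\square \)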

3.2 Approximate analytical expression of residuals

For the general multi-factor uncertain differential equation, if the uncertainty distribution cannot be obtained by solving the equation, the approximate expression of the residual can be obtained by the following method.

According to Eq. (3), \(X^{\alpha }_{t_{i}}\) can be expressed as

where

Since \(\alpha \) solves the following minimization problem

it follows that \(X^{\alpha }_{t_{i}} \approx x_{t_{i}}\).

According to Eq. (3) and Property 1 of the residuals, we can get

Therefore, Eq. (4) can be expressed as

After rearranging, the expression for the residuals \(\varepsilon _{i}\) is

Example 3

Assuming a multi-factor uncertain differential equation,

the updated uncertain differential equation can be obtained

The corresponding updated ordinary differential equation is

Using the method described above, we can get

Therefore, when we have observational data, we can substitute it into the solution. The observed data of Example 3 and its residual calculation results are shown in Table 1.
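As a rough illustration of how an approximate residual can be computed from data, the sketch below replaces the integrals of the \(\alpha \)-path by a single explicit Euler step over \([t_{i-1},t_i]\) and then solves for \(\alpha \). This one-step approximation is an assumption made here for simplicity and may differ from the expression derived in this section; the function name and the example coefficients are likewise hypothetical.

```python
import math

def approx_residual(f, gs, t_prev, x_prev, t_i, x_i):
    """One-step Euler approximation of the i-th residual.

    Solves  x_prev + f*dt + sum_j |g_j| * (sqrt(3)/pi) * ln(a/(1-a)) * dt = x_i  for a,
    with f and g_j evaluated at (t_prev, x_prev). Assumes sum_j |g_j| > 0 and dt > 0.
    """
    dt = t_i - t_prev
    drift = f(t_prev, x_prev)
    spread = sum(abs(g(t_prev, x_prev)) for g in gs)
    z = math.pi * (x_prev + drift * dt - x_i) / (math.sqrt(3.0) * spread * dt)
    if z > 700.0:  # guard against overflow in exp; the residual is numerically 0 here
        return 0.0
    return 1.0 / (1.0 + math.exp(z))

# Hypothetical two-factor equation: dX = 0.1 X dt + 0.2 X dC1 + 0.1 dC2.
f = lambda t, x: 0.1 * x
gs = [lambda t, x: 0.2 * x, lambda t, x: 0.1]
r_mid = approx_residual(f, gs, 0.0, 1.0, 0.5, 1.05)   # observation equals the Euler drift prediction
r_high = approx_residual(f, gs, 0.0, 1.0, 0.5, 1.20)  # observation above the prediction
r_low = approx_residual(f, gs, 0.0, 1.0, 0.5, 0.90)   # observation below the prediction
```

An observation that matches the drift-only prediction gives a residual close to 0.5, while observations above or below it push the residual towards 1 or 0, respectively.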

4 Parameter estimation and result testing

This section presents moment estimates of the unknown parameters in multi-factor uncertain differential equations based on residuals and uses uncertainty hypothesis testing to confirm the accuracy of the estimates. Some numerical examples are provided to demonstrate the feasibility of the method.

4.1 Moment estimation based on residual

Assume a multi-factor uncertain differential equation with n observations \((t_i,x_{t_i})\) \((i=1,2,\ldots ,n)\)

where \(\mu \) and \(\sigma _{j}(j=1, 2, \ldots , n)\) are the parameters to be estimated.

Based on the observed data and the definition of residuals, a series of residuals \(\varepsilon _{2}, \varepsilon _{3}, \ldots ,\varepsilon _{n}\) can be obtained and used as a set of samples for the linear uncertainty distribution \({\mathcal {L}} (0,1)\).

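According to the method of moments, the p-th sample moment is

$$\begin{aligned} \frac{1}{n-1}\sum _{i=2}^{n}\varepsilon _{i}^{p}(\mu ;\sigma _{1},\sigma _{2},\ldots ,\sigma _{n}) \end{aligned}$$

and the corresponding p-th population moment of \({\mathcal {L}} (0,1)\) is

$$\begin{aligned} \int _{0}^{1}x^{p}\textrm{d}x=\frac{1}{p+1}, \end{aligned}$$

where \(p=1, 2, \ldots ,K\) (K is the number of unknown parameters). According to the principle of the moment estimation method, we obtain the following system of equations:

$$\begin{aligned} \frac{1}{n-1}\sum _{i=2}^{n}\varepsilon _{i}^{p}(\mu ;\sigma _{1},\sigma _{2},\ldots ,\sigma _{n})=\frac{1}{p+1},\quad p=1,2,\ldots ,K. \qquad (6) \end{aligned}$$

The estimates \(({\hat{\mu }};{\hat{\sigma }}_{1},{\hat{\sigma }}_{2},\ldots ,{\hat{\sigma }}_{n})\) of the unknown parameters can be obtained by solving this system.

However, for some observations the system of equations (6) has no solution, and the moment estimation method is then not directly applicable. In this case, the unknown parameters can be obtained by solving the following minimization problem based on the principle of generalized moment estimation:

$$\begin{aligned} \min _{\mu ;\sigma _{1},\ldots ,\sigma _{n}}\sum _{p=1}^{K}\left( \frac{1}{n-1}\sum _{i=2}^{n}\varepsilon _{i}^{p}(\mu ;\sigma _{1},\sigma _{2},\ldots ,\sigma _{n})-\frac{1}{p+1}\right) ^{2}. \end{aligned}$$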
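The moment conditions described above compare the sample moments of the residuals with the moments of \({\mathcal {L}}(0,1)\), whose p-th moment is \(\int _0^1x^p\textrm{d}x=1/(p+1)\). A minimal sketch of these quantities, and of the squared-gap objective used when the moment equations have no exact solution, is given below. The function names and the two residual samples are hypothetical and serve only to show how the objective behaves.

```python
def sample_moment(residuals, p):
    """p-th sample moment of the residuals."""
    return sum(e ** p for e in residuals) / len(residuals)

def population_moment(p):
    """p-th moment of the linear uncertainty distribution L(0,1): 1 / (p + 1)."""
    return 1.0 / (p + 1)

def moment_objective(residuals, K):
    """Sum of squared gaps between the first K sample and population moments."""
    return sum(
        (sample_moment(residuals, p) - population_moment(p)) ** 2
        for p in range(1, K + 1)
    )

# Hypothetical residual samples: one spread evenly over (0,1), one clustered near 0.9.
evenly_spread = [(i + 0.5) / 10 for i in range(10)]
clustered = [0.9] * 10
gap_even = moment_objective(evenly_spread, K=2)
gap_clustered = moment_objective(clustered, K=2)
```

In an actual estimation the residuals depend on the unknown parameters, and the objective would be minimised over those parameters with a numerical optimiser; residuals that resemble samples from \({\mathcal {L}}(0,1)\) yield a small objective value.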

4.2 Reasonableness test of estimated results

By means of moment estimation, we obtain estimates of the unknown parameters \(({\hat{\mu }};{\hat{\sigma }}_{1}, {\hat{\sigma }}_{2},\ldots ,{\hat{\sigma }}_{n})\), and the residual values which obey a linear uncertainty distribution \({\mathcal {L}} (0,1)\). Next, we will test the estimation results using hypothesis testing methods.

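For the residuals \(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _n\), which should follow the linear uncertainty distribution \({\mathcal {L}} (0,1)\) if the estimates are reasonable, the test for the hypotheses

$$\begin{aligned} H_0: a=0 \text{ and } b=1 \quad \text{ versus } \quad H_1: a\ne 0 \text{ or } b\ne 1 \end{aligned}$$

at significance level \(\alpha \) is

$$\begin{aligned} W=\Bigg \{(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _n): \text{ there are at least } \alpha \text{ of the indexes } i \text{ with } 2\le i\le n \text{ such that } \varepsilon _i <\Phi ^{-1}\left( \frac{\alpha }{2}\right) \text{ or } \varepsilon _i >\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where the inverse uncertainty distribution of \({\mathcal {L}} (0,1)\) is

$$\begin{aligned} \Phi ^{-1}(\alpha )=\alpha . \end{aligned}$$

If the number of residuals \(\varepsilon _i\) satisfying

$$\begin{aligned} \varepsilon _i<\frac{\alpha }{2} \quad \text{ or } \quad \varepsilon _i>1-\frac{\alpha }{2} \end{aligned}$$

is at least \((n-1)\alpha \), then \((\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _n)\in W\). In that case the null hypothesis \(H_0\) is rejected, meaning that the parameter estimates are unreasonable.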
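The rejection rule described above amounts to counting how many residuals fall outside \([\alpha /2,1-\alpha /2]\) and comparing that count with \(\alpha \) times the number of residuals. A minimal sketch follows; the function name and the residual values are made up for illustration and are not the data of the examples.

```python
def rejects_h0(residuals, alpha):
    """Return True if the residuals fall in the rejection region W at level alpha.

    For L(0,1) the inverse distribution is the identity, so the critical
    values are simply alpha/2 and 1 - alpha/2.
    """
    lower, upper = alpha / 2.0, 1.0 - alpha / 2.0
    outside = sum(1 for e in residuals if e < lower or e > upper)
    # Small tolerance so that floating-point error in alpha * len(residuals)
    # does not change the comparison when the product is an integer.
    return outside >= alpha * len(residuals) - 1e-12

# Hypothetical sample of 19 residuals with a single value outside [0.05, 0.95].
one_outlier = [0.02] + [0.10 + 0.04 * k for k in range(18)]
# Same sample with a second value outside the interval.
two_outliers = [0.02, 0.99] + [0.10 + 0.04 * k for k in range(17)]

keep = rejects_h0(one_outlier, alpha=0.1)    # 1 outlier, threshold 1.9
drop = rejects_h0(two_outliers, alpha=0.1)   # 2 outliers, threshold 1.9
```

With 19 residuals and \(\alpha =0.1\) the threshold is 1.9, so one value outside the interval is tolerated while two lead to rejection.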

Example 4

Consider a multi-factor uncertain differential equation with parameters \(\mu \), \(\sigma _{1}\) and \(\sigma _{2}\)

Then we can get the related updated multi-factor uncertain differential equation

By solving the uncertain variable \(X_{t_{i}}\) and its uncertainty distribution \(\Phi (X_{t_{i}})\), the residual can be expressed as

and \(\varepsilon _{i}\sim {\mathcal {L}}(0,1)\). The observed data are shown in Table 2.

According to the principle of the moment estimation, we obtain the following equations

By solving the above system of equations, the unknown parameter results are obtained

Therefore the residuals are determined as

and the values of the residuals are shown in Table 2.

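For the residuals \(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{25}\), the test for the hypotheses

$$\begin{aligned} H_0: a=0 \text{ and } b=1 \quad \text{ versus } \quad H_1: a\ne 0 \text{ or } b\ne 1 \end{aligned}$$

at significance level \(\alpha =0.05\) is

$$\begin{aligned} W=\Bigg \{(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{25}): \text{ there are at least 2 indexes } i \text{ with } 2\le i\le 25 \text{ such that } \varepsilon _i <\Phi ^{-1}\left( \frac{\alpha }{2}\right) \text{ or } \varepsilon _i >\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where \(\Phi ^{-1}(\alpha )=\alpha \) is the inverse uncertainty distribution of \({\mathcal {L}} (0,1)\), so that \(\Phi ^{-1}(\frac{\alpha }{2})=0.025\) and \(\Phi ^{-1}(1-\frac{\alpha }{2})=0.975\).

Every \(\varepsilon _i\) lies in \([0.025,0.975]\), so \((\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{25})\notin W\). The null hypothesis \(H_0\) is therefore not rejected and \(({\hat{\mu }},{\hat{\sigma }}_1,{\hat{\sigma }}_2)\) is reasonable for the equation.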

Therefore, the multi-factor uncertain differential equation is obtained

Example 5

Assuming a multi-factor uncertain differential equation,

where \(\sigma _{1}\) and \(\sigma _{2}\) are unknown parameters. The observed data are shown in Table 3.

The updated uncertain differential equation can be obtained

The corresponding updated ordinary differential equation is

and the residual expression is

According to the principle of the moment estimation, we obtain the following equations

By solving the above system of equations, we can obtain the estimated results of the unknown parameters

Therefore the residuals are determined as

and the values of the residuals are shown in Table 3.

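For the residuals \(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{20}\), the test for the hypotheses

$$\begin{aligned} H_0: a=0 \text{ and } b=1 \quad \text{ versus } \quad H_1: a\ne 0 \text{ or } b\ne 1 \end{aligned}$$

at significance level \(\alpha =0.1\) is

$$\begin{aligned} W=\Bigg \{(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{20}): \text{ there are at least 2 indexes } i \text{ with } 2\le i\le 20 \text{ such that } \varepsilon _i <\Phi ^{-1}\left( \frac{\alpha }{2}\right) \text{ or } \varepsilon _i >\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where \(\Phi ^{-1}(\alpha )=\alpha \) is the inverse uncertainty distribution of \({\mathcal {L}} (0,1)\), so that \(\Phi ^{-1}(\frac{\alpha }{2})=0.05\) and \(\Phi ^{-1}(1-\frac{\alpha }{2})=0.95\).

Only \(\varepsilon _2 \notin [0.05,0.95]\), which is fewer than 2 residuals, thus \((\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{20})\notin W\). The null hypothesis \(H_0\) is therefore not rejected and \(({\hat{\sigma }}_1,{\hat{\sigma }}_2)\) is reasonable for the equation.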

Therefore, the multi-factor uncertain differential equation is determined as

5 Numerical example

In this section, two examples of multi-factor uncertain differential equations with real data are shown to check the practicability of the parameter estimation method.

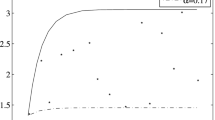

Example 6

Consider the multi-factor uncertain pharmacokinetic model with unknown parameters proposed by Liu and Yang (2021):

where \(X_{t}\) is the drug concentration at time t and \(k_{0}\), \(k_{1}\), \(\sigma _{1}\), \(\sigma _{2}\) are the unknown constant parameters.

Data from the study of the drug JNJ-53718678 by Huntjens et al. (2017) are used as the discrete observations for the model. JNJ-53718678 is a small-molecule fusion inhibitor for the treatment of respiratory syncytial virus. A single injection of 250 mg of JNJ-53718678 was administered, and the plasma drug concentration was measured before injection and at 0.5 h, 1.0 h, 1.5 h, 2.0 h, 3.0 h, 4.0 h, 6.0 h, 8.0 h, 12.0 h, 16.0 h and 24.0 h after injection. The data are shown in Table 4.

The corresponding updated multi-factor uncertain differential equation is

and the corresponding updated ordinary differential equation is

Therefore, the residual expression can be obtained as

According to the principle of the moment estimation, we obtain the following equations

By solving the above system of equations, the moment estimates of the unknown parameters are:

The residuals can be obtained as

and the values of the residuals are shown in Table 4.

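For the residuals \(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{12}\), the test for the hypotheses

$$\begin{aligned} H_0: a=0 \text{ and } b=1 \quad \text{ versus } \quad H_1: a\ne 0 \text{ or } b\ne 1 \end{aligned}$$

at significance level \(\alpha =0.1\) is

$$\begin{aligned} W=\Bigg \{(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{12}): \text{ there are at least 2 indexes } i \text{ with } 2\le i\le 12 \text{ such that } \varepsilon _i <\Phi ^{-1}\left( \frac{\alpha }{2}\right) \text{ or } \varepsilon _i >\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where \(\Phi ^{-1}(\alpha )=\alpha \) is the inverse uncertainty distribution of \({\mathcal {L}} (0,1)\), so that \(\Phi ^{-1}(\frac{\alpha }{2})=0.05\) and \(\Phi ^{-1}(1-\frac{\alpha }{2})=0.95\).

Only \(\varepsilon _2 \notin [0.05,0.95]\), which is fewer than 2 residuals, thus \((\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{12})\notin W\). The null hypothesis \(H_0\) is therefore not rejected and \(({\hat{k}}_0,{\hat{k}}_1,{\hat{\sigma }}_1,{\hat{\sigma }}_2)\) is reasonable for the equation.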

Eventually, the multi-factor uncertain pharmacokinetic model equation is determined as

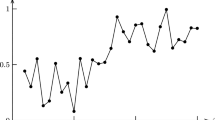

Example 7

Consider a multi-factor uncertain stock model for the Alibaba stock price data (Liu and Liu 2022) from January 1 to January 30, 2019, shown in Table 5:

where \(X_{t}\) is the stock price at time t and m, a, \(\sigma _{1}\), \(\sigma _{2}\) are the unknown constant parameters.

The corresponding updated multi-factor uncertain differential equation is

and the residual expression can be obtained as

According to the principle of the moment estimation, we obtain the following equations

By solving the above system of equations, the moment estimates of the unknown parameters are:

The residuals can be obtained as

and the values of the residuals are shown in Table 5.

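For the residuals \(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{30}\), the test for the hypotheses

$$\begin{aligned} H_0: a=0 \text{ and } b=1 \quad \text{ versus } \quad H_1: a\ne 0 \text{ or } b\ne 1 \end{aligned}$$

at significance level \(\alpha =0.1\) is

$$\begin{aligned} W=\Bigg \{(\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{30}): \text{ there are at least 3 indexes } i \text{ with } 2\le i\le 30 \text{ such that } \varepsilon _i <\Phi ^{-1}\left( \frac{\alpha }{2}\right) \text{ or } \varepsilon _i >\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \Bigg \}, \end{aligned}$$

where \(\Phi ^{-1}(\alpha )=\alpha \) is the inverse uncertainty distribution of \({\mathcal {L}} (0,1)\), so that \(\Phi ^{-1}(\frac{\alpha }{2})=0.05\) and \(\Phi ^{-1}(1-\frac{\alpha }{2})=0.95\).

Only \(\varepsilon _2\) and \(\varepsilon _{21}\) lie outside \([0.05,0.95]\), which is fewer than 3 residuals, thus \((\varepsilon _2,\varepsilon _3,\ldots ,\varepsilon _{30})\notin W\). The null hypothesis \(H_0\) is therefore not rejected and \(({\hat{m}},{\hat{a}},{\hat{\sigma }}_1,{\hat{\sigma }}_2)\) is reasonable for the equation.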

Eventually, the multi-factor uncertain stock model equation is determined as

6 Conclusion

This paper presents the definition of residuals for multi-factor uncertain differential equations and proves two of their important properties. Examples of computing residuals demonstrate how residual expressions can be obtained in various contexts. Based on the fact that the residuals obey the linear uncertainty distribution \({\mathcal {L}}(0,1)\), moment estimation of the unknown parameters in the multi-factor uncertain differential equation is performed and the estimates are tested. Several numerical examples are shown to verify the feasibility of the method. In contrast to previously proposed estimation methods, the residual-based moment estimation method does not require weighting and normalisation of the unknown parameters in the multi-factor uncertain differential equation. The residual method can also be used for estimation when the time interval is relatively large and the difference method is not applicable. In the future, the residual-based moment estimation method can be used for parameter estimation of high-order uncertain differential equations or multi-dimensional uncertain differential equations.

Data availability

The data that support the findings of this study are openly available in Soft Computing.

References

Ge XT, Zhu YG (2013) A necessary condition of optimality for uncertain optimal control problem. Fuzzy Optim Decis Mak 12:41–51

Huntjens DRH, Ouwerkerk-Mahadevan S, Brochot A et al (2017) Population pharmacokinetic modeling of JNJ-53718678, a novel fusion inhibitor for the treatment of respiratory syncytial virus: results from a phase I, double-blind, randomized, placebo-controlled first-in-human study in healthy adult subjects. Clin Pharmacokinet 56:1331–1342

Li SG, Peng J, Zhang B (2015) Multifactor uncertain differential equation. J Uncertain Anal Appl 3(1):1–19

Lio WJ, Liu B (2020) Uncertain maximum likelihood estimation with application to uncertain regression analysis. Soft Comput 24:9351–9360

Lio WJ, Liu B (2021) Initial value estimation of uncertain differential equations and zero-day of COVID-19 spread in China. Fuzzy Optim Decis Mak 20:177–188

Liu B (2007) Uncertainty theory, 2nd edn. Springer, Berlin

Liu B (2009) Some research problems in uncertainty theory. J Uncertain Syst 3(1):3–10

Liu Z (2021) Generalized moment estimation for uncertain differential equations. Appl Math Comput 392:125724

Liu Y, Liu B (2022) Residual analysis and parameter estimation of uncertain differential equations. Fuzzy Optim Decis Mak 21:1–18

Liu Z, Yang Y (2021) Pharmacokinetic model based on multifactor uncertain differential equation. Appl Math Comput 392:125722

Peng J, Chen L, Zhang B (2022) Transportation planning for sustainable supply chain network using big data technology. Inf Sci 609:781–798

Sheng YH, Zhang N (2021) Parameter estimation in uncertain differential equations based on the solution. Math Method Appl Sci 44:9441–9452

Sheng YH, Yao K, Chen XW (2020) Least squares estimation in uncertain differential equations. IEEE Trans Fuzzy Syst 28(10):2651–2655

Yao K (2015a) Uncertain contour process and its application in stock model with floating interest rate. Fuzzy Optim Decis Mak 14(4):399–424

Yao K (2015b) Uncertain differential equation with jumps. Soft Comput 19:2063–2069

Yao K (2016) Uncertain differential equations, 2nd edn. Springer, New York

Yao K, Liu B (2020) Parameter estimation in uncertain differential equations. Fuzzy Optim Decis Mak 19(1):1–12

Ye TQ, Liu B (2022) Uncertain hypothesis test with application to uncertain regression analysis. Fuzzy Optim Decis Mak 21:157–174

Ye TQ, Liu B (2023) Uncertain hypothesis test for uncertain differential equations. Fuzzy Optim Decis Mak 22:195–211

Zhang GD, Sheng YH (2022) Estimating time-varying parameters in uncertain differential equations. Appl Math Comput 425:127084

Zhang J, Sheng YH, Wang XL (2021a) Least squares estimation of high-order uncertain differential equations. J Intell Fuzzy Syst 41(2):2755–2764

Zhang N, Sheng YH, Zhang J, Wang XL (2021b) Parameter estimation in multifactor uncertain differential equation. J Intell Fuzzy Syst 41(2):2865–2878

Zhang GD, Shi YX, Sheng YH (2023) Uncertain hypothesis testing and its application. Soft Comput 27:2357–2367

Funding

This work was funded by the National Natural Science Foundation of China (Grant Nos. 12061072 and 62162059) and the Xinjiang Key Laboratory of Applied Mathematics (Grant No. XJDX1401).

Author information

Contributions

All authors contributed to the research concept and paper design. The topic selection of the thesis, the analysis of the research results, the selection of references and the revision of the article were completed by LY and YS. The first draft of the thesis was completed by LY. Final manuscript read and approved by all authors.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This paper does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Yao, L., Sheng, Y. Moments estimation for multi-factor uncertain differential equations based on residuals. Soft Comput 27, 11193–11203 (2023). https://doi.org/10.1007/s00500-023-08317-3

DOI: https://doi.org/10.1007/s00500-023-08317-3