Abstract

Parameter estimation has become a crucial issue in the development of uncertain differential equation. This paper presents a new parameter estimation method in uncertain differential equation based on uncertain maximum likelihood estimation, and gives some analytical formulae of the uncertain maximum likelihood estimators in special linear uncertain differential equations. In addition, some numerical examples are provided to illustrate this parameter estimation method.

1 Introduction

To model human belief degrees rationally, uncertainty theory was founded by Liu (2007) and refined by Liu (2009). Uncertainty theory has since become a branch of axiomatic mathematics and has been widely applied in many fields of science and technology.

To handle dynamic systems with continuous-time noise, uncertain differential equation was first proposed by Liu (2008) as a type of differential equation driven by Liu processes. Since then, uncertain differential equation has been studied extensively and has made significant progress. On the theoretical side, Chen and Liu (2010) first proved the existence and uniqueness theorem for the solution of an uncertain differential equation under the linear growth condition and the Lipschitz condition. Beyond existence and uniqueness, Liu (2009) first defined the concept of stability of uncertain differential equation. Later, Yao et al. (2013) gave some stability theorems to develop the stability analysis of uncertain differential equation, and other types of stability were then discussed by Sheng and Wang (2014), Yao et al. (2015), Yang et al. (2017), etc. As the most significant contribution to uncertain differential equation, the Yao-Chen formula was proposed by Yao and Chen (2013). It associates an uncertain differential equation with ordinary differential equations and shows that the solution of an uncertain differential equation can be represented by the solutions of a family of ordinary differential equations. Based on the Yao-Chen formula, Yao and Chen (2013) first proposed a numerical method for solving uncertain differential equation, which was then extended by Yang and Shen (2015), Yang and Ralescu (2015), Gao (2016), etc. On the practical side, uncertain differential equation has been applied in various fields and has spawned many theoretical branches. For example, uncertain differential equation was applied to financial markets by Liu (2013), which gave rise to uncertain finance theory. In addition, uncertain differential equation was applied in uncertain optimal control (Zhu 2010), uncertain differential game (Yang and Gao 2013), uncertain population model (Zhang and Yang 2020), uncertain heat conduction equation (Yang and Yao 2017), uncertain string vibration equation (Gao and Ralescu 2019), uncertain spring vibration equation (Jia and Dai 2018) and uncertain epidemic model (Li et al. 2017).

However, models established in the real world usually contain unknown parameters. Therefore, how to estimate the unknown parameters from observations of the solution of an uncertain differential equation is a critical problem. To solve this problem, Yao and Liu (2020) proposed a method of moment estimation based on the difference form of uncertain differential equation. Following that, Liu and Yang (2019) applied the method of moment estimation to the parameter estimation of high-order uncertain differential equation. Later, Sheng et al. (2020) presented a method of least squares estimation for estimating the unknown parameters. In addition, Lio and Liu (2020a) proposed a method of estimating the unknown initial value of uncertain differential equation based on observed data. The parameter estimation of uncertain differential equation has thus received increasing attention from scholars.

As another important method of parameter estimation, uncertain maximum likelihood estimation was proposed by Lio and Liu (2020b) under the framework of uncertainty theory, and was applied in regression analysis to estimate the unknown parameters of uncertain regression models. Since then, uncertain maximum likelihood estimation has attracted the attention of many scholars. The goal of this paper is to present a parameter estimation method for uncertain differential equation based on uncertain maximum likelihood estimation. The paper is organized in five sections, including this introduction. Section 2 introduces some concepts of uncertainty theory. Section 3 proposes the parameter estimation method for uncertain differential equation and gives some analytical formulae of the uncertain maximum likelihood estimators in special linear uncertain differential equations. Section 4 applies the proposed estimation method to some numerical examples. Finally, a concise conclusion is given in Sect. 5.

2 Preliminary

This section will introduce some concepts and theorems about uncertainty theory. The following symbols are used throughout this paper:

Definition 1

(Liu 2007) Let \(\varGamma \) be a universal set, let \({\mathscr {L}}\) be a \(\sigma \)-algebra over \(\varGamma \), and let \({\mathscr {M}}\) be a set function on the \(\sigma \)-algebra \({\mathscr {L}}\) satisfying the following three axioms:

Axiom 1. (Normality Axiom) \({\mathscr {M}}\{\varGamma \}=1\).

Axiom 2. (Duality Axiom) \({\mathscr {M}}\{\varLambda \}+{\mathscr {M}}\{\varLambda ^{c}\}=1\) for any event \(\varLambda \in {\mathscr {L}}\).

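Axiom 3. (Subadditivity Axiom) For any countable sequence of events \(\{\varLambda _i\}\), we always have

\[{\mathscr {M}}\left\{ \bigcup _{i=1}^{\infty }\varLambda _i\right\} \le \sum _{i=1}^{\infty }{\mathscr {M}}\{\varLambda _i\}.\]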

Then the set function \({\mathscr {M}}\) is called an uncertain measure, and the triplet \((\varGamma ,{\mathscr {L}},{\mathscr {M}})\) is called an uncertainty space.

For the purpose of obtaining the uncertain measure of composite event, the product uncertain measure \({\mathscr {M}}\) on the product \(\sigma \)-algebra \({\mathscr {L}}\) was defined by Liu (2009) by the following product axiom.

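Axiom 4. (Product Axiom) Assume \((\varGamma _i,{\mathscr {L}}_i,{\mathscr {M}}_i)\) are uncertainty spaces for \(i=1, 2, \cdots \). The product uncertain measure \({\mathscr {M}}\) is an uncertain measure on the product \(\sigma \)-algebra satisfying

\[{\mathscr {M}}\left\{ \prod _{i=1}^{\infty }\varLambda _i\right\} =\bigwedge _{i=1}^{\infty }{\mathscr {M}}_i\{\varLambda _i\}\]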

where \(\varLambda _i\) are arbitrarily chosen events from \({\mathscr {L}}_i\) for \(i=1, 2, \cdots \), respectively. Then, the uncertain measure \({\mathscr {M}}\) is called a product uncertain measure.

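An uncertain variable \(\xi \) is a measurable function from an uncertainty space \((\varGamma ,{\mathscr {L}},{\mathscr {M}})\) to the set of real numbers, i.e., the set

\[\{\xi \in B\}=\{\gamma \in \varGamma \mid \xi (\gamma )\in B\}\]

is always an event for any Borel set B of real numbers. The uncertainty distribution of an uncertain variable \(\xi \) is defined by

\[\varPhi (x)={\mathscr {M}}\{\xi \le x\},\quad x\in \Re .\]

A normal uncertain variable \(\xi \sim {\mathcal {N}}(e,\sigma )\) has a normal uncertainty distribution

\[\varPhi (x)=\left( 1+\exp \left( \frac{\pi (e-x)}{\sqrt{3}\sigma }\right) \right) ^{-1},\quad x\in \Re ,\]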

and a normal uncertainty distribution is called standard if \(e = 0\) and \(\sigma = 1\).
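The normal uncertainty distribution is used repeatedly in what follows, so a small numerical helper may be useful. The sketch below assumes the standard closed form \(\varPhi (x)=(1+\exp (\pi (e-x)/(\sqrt{3}\sigma )))^{-1}\) and its inverse \(\varPhi ^{-1}(\alpha )=e+(\sqrt{3}\sigma /\pi )\ln (\alpha /(1-\alpha ))\). The function names are illustrative and do not come from the paper.

```python
import math


def normal_uncertainty_cdf(x, e=0.0, sigma=1.0):
    """Normal uncertainty distribution Phi(x) for N(e, sigma), sigma > 0."""
    z = math.pi * (e - x) / (math.sqrt(3.0) * sigma)
    if z > 700.0:  # exp(z) would overflow; Phi(x) is numerically 0 here
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


def normal_uncertainty_inverse(alpha, e=0.0, sigma=1.0):
    """Inverse distribution Phi^{-1}(alpha) for 0 < alpha < 1."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    return e + sigma * math.sqrt(3.0) / math.pi * math.log(alpha / (1.0 - alpha))
```

With the defaults \(e=0\) and \(\sigma =1\), both functions describe the standard normal uncertainty distribution.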

Definition 2

(Liu 2009) An uncertain process \(C_t\) is said to be a Liu process if

(i) \(C_0=0\) and almost all sample paths are Lipschitz continuous,

(ii) \(C_t\) has stationary and independent increments,

(iii) every increment \(C_{s+t}-C_s\) is a normal uncertain variable with expected value 0 and variance \(t^2\).

3 Parameter estimation

In this section, we first introduce a parameter estimation method for uncertain differential equation based on the uncertain maximum likelihood estimation.

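Consider the uncertain differential equation

\[\mathrm {d}X_t=f(t,X_t;\mu )\mathrm {d}t+g(t,X_t;\theta )\mathrm {d}C_t, \tag{1}\]

where \(C_t\) is a Liu process, and \(f(t,x;\mu )\) and \(g(t,x;\theta )\) are two real-valued measurable functions on \(T\times \Re \) such that (1) has a unique solution, which is guaranteed when \(f(t,x;\mu )\) and \(g(t,x;\theta )\) satisfy the linear growth condition

\[|f(t,x;\mu )|+|g(t,x;\theta )|\le L(1+|x|)\]

and the Lipschitz condition

\[|f(t,x;\mu )-f(t,y;\mu )|+|g(t,x;\theta )-g(t,y;\theta )|\le L|x-y|\]

for any \(x,y\in \Re \) and \(t\ge 0\) with some constant L. Here \(\mu \) and \(\theta \) are two unknown parameters to be estimated on the basis of the observations of the solution \(X_t\). Now we write equation (1) in difference form by using the Euler method:

\[X_{t_{i+1}}=X_{t_i}+f(t_i,X_{t_i};\mu )(t_{i+1}-t_i)+g(t_i,X_{t_i};\theta )(C_{t_{i+1}}-C_{t_i}),\]

i.e.,

\[\frac{X_{t_{i+1}}-X_{t_i}-f(t_i,X_{t_i};\mu )(t_{i+1}-t_i)}{g(t_i,X_{t_i};\theta )(t_{i+1}-t_i)}=\frac{C_{t_{i+1}}-C_{t_i}}{t_{i+1}-t_i}.\]

According to the definition of Liu process,

\[\frac{C_{t_{i+1}}-C_{t_i}}{t_{i+1}-t_i}\]

follows a standard normal uncertainty distribution. That is, we can get

\[\frac{X_{t_{i+1}}-X_{t_i}-f(t_i,X_{t_i};\mu )(t_{i+1}-t_i)}{g(t_i,X_{t_i};\theta )(t_{i+1}-t_i)}\sim {\mathcal {N}}(0,1). \tag{2}\]

Suppose that there are n observed data \(x_{t_{1}},x_{t_{2}},\cdots ,x_{t_{n}}\) of the solution \(X_t\) at time-points \(t_{1}<t_{2}<\cdots <t_{n}\). By substituting the observed data into equation (2), we write

\[h_i(\mu ,\theta )=\frac{x_{t_{i+1}}-x_{t_i}-f(t_i,x_{t_i};\mu )(t_{i+1}-t_i)}{g(t_i,x_{t_i};\theta )(t_{i+1}-t_i)} \tag{3}\]

for \(i=1,2,\cdots ,n-1\), which are \(n-1\) functions containing the unknown parameters. According to equation (2), we can regard the values of \(h_1(\mu ,\theta ),h_2(\mu ,\theta ),\cdots ,h_{n-1}(\mu ,\theta )\) as \(n-1\) samples of a standard normal uncertainty distribution \({\mathcal {N}}(0,1)\). Then, the following theorem gives the estimates of the parameters \(\mu \) and \(\theta \) by the uncertain maximum likelihood estimation.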
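The residual functions \(h_i(\mu ,\theta )\) can be computed directly from the observations. The following is a minimal sketch, assuming that (3) is the Euler residual \(h_i=(x_{t_{i+1}}-x_{t_i}-f(t_i,x_{t_i};\mu )\Delta t_i)/(g(t_i,x_{t_i};\theta )\Delta t_i)\) with \(\Delta t_i=t_{i+1}-t_i\). The function name `residuals` and the callable signatures `f(t, x, mu)` and `g(t, x, theta)` are illustrative choices, not part of the paper.

```python
def residuals(times, observations, f, g, mu, theta):
    """Return [h_1, ..., h_{n-1}] for observations x_{t_1}, ..., x_{t_n}.

    f(t, x, mu) is the drift term and g(t, x, theta) the diffusion term of
    equation (1); both are supplied by the caller.
    """
    if len(times) != len(observations):
        raise ValueError("times and observations must have the same length")
    h = []
    for i in range(len(times) - 1):
        dt = times[i + 1] - times[i]
        drift = f(times[i], observations[i], mu)
        diffusion = g(times[i], observations[i], theta)
        increment = observations[i + 1] - observations[i]
        h.append((increment - drift * dt) / (diffusion * dt))
    return h
```

For instance, with the hypothetical choices `f = lambda t, x, mu: mu * x` and `g = lambda t, x, theta: theta * x`, the call `residuals(times, xs, f, g, mu, theta)` returns the \(n-1\) values that are treated as samples of \({\mathcal {N}}(0,1)\).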

Theorem 1

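Assume that \(x_{t_{1}},x_{t_{2}},\cdots ,x_{t_{n}}\) are observations of the solution \(X_t\) of the uncertain differential equation (1) at the times \(t_1,t_2,\cdots ,t_n\) with \(t_{1}<t_{2}<\cdots <t_{n}\), respectively. Then, the estimates \(\mu ^{*}\) and \(\theta ^{*}\) obtained by means of the uncertain maximum likelihood estimation solve the following system of equations

\[\left\{ \begin{array}{l} \displaystyle \bigvee \limits _{i=1}^{n-1}h_i(\mu ^{*},\theta ^{*})+\bigwedge \limits _{i=1}^{n-1}h_i(\mu ^{*},\theta ^{*})=0\\ \displaystyle \bigvee \limits _{i=1}^{n-1}h_i(\mu ^{*},\theta ^{*})-\bigwedge \limits _{i=1}^{n-1}h_i(\mu ^{*},\theta ^{*})=\frac{2\sqrt{3}\lambda }{\pi } \end{array}\right. \tag{4}\]

where \(h_i(\mu ,\theta )\) are defined by (3), and \(\lambda \) is the positive root of the transcendental equation \(1+x+\exp (x)-x\exp (x)=0\), which can be taken as approximately 1.5434 in numerical computation.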
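The constant \(\lambda \) can be checked numerically. The sketch below uses plain bisection on the transcendental equation \(1+x+\exp (x)-x\exp (x)=0\). The bracket \([1,2]\) is an assumption made here: the left-hand side equals 2 at \(x=1\) and \(3-\exp (2)<0\) at \(x=2\), so a positive root lies in between.

```python
import math


def lambda_equation(x):
    """Left-hand side of 1 + x + exp(x) - x*exp(x) = 0."""
    return 1.0 + x + math.exp(x) - x * math.exp(x)


def solve_lambda(lo=1.0, hi=2.0, tol=1e-12):
    """Bisection for the root of lambda_equation inside [lo, hi]."""
    f_lo, f_hi = lambda_equation(lo), lambda_equation(hi)
    if f_lo * f_hi > 0.0:
        raise ValueError("the bracket [lo, hi] does not contain a sign change")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = lambda_equation(mid)
        if f_lo * f_mid <= 0.0:
            hi = mid  # the sign change is in the left half
        else:
            lo, f_lo = mid, f_mid  # the sign change is in the right half
    return 0.5 * (lo + hi)
```

Calling `solve_lambda()` should agree with the value 1.5434 quoted in the theorem to the digits shown there.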

Proof

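At first, we can regard the values of \(h_1(\mu ,\theta ),h_2(\mu ,\theta ),\cdots ,h_{n-1}(\mu ,\theta )\) as \(n-1\) samples of the population \({\mathcal {N}}(e,\sigma )\) with uncertainty distribution

\[\varPhi (x)=\left( 1+\exp \left( \frac{\pi (e-x)}{\sqrt{3}\sigma }\right) \right) ^{-1}.\]

Notice that \(\displaystyle \varPhi (x)\) is differentiable and

\[\varPhi '(x)=\frac{\pi }{\sqrt{3}\sigma }\exp \left( \frac{\pi (e-x)}{\sqrt{3}\sigma }\right) \left( 1+\exp \left( \frac{\pi (e-x)}{\sqrt{3}\sigma }\right) \right) ^{-2}.\]

According to the definition of uncertain likelihood function presented by Lio and Liu (2020b), the likelihood function is

\[\bigwedge \limits _{i=1}^{n-1}\varPhi '(h_i(\mu ,\theta ))=\bigwedge \limits _{i=1}^{n-1}\frac{\pi }{\sqrt{3}\sigma }\exp \left( \frac{\pi (e-h_i(\mu ,\theta ))}{\sqrt{3}\sigma }\right) \left( 1+\exp \left( \frac{\pi (e-h_i(\mu ,\theta ))}{\sqrt{3}\sigma }\right) \right) ^{-2}.\]

Since \(\varPhi '(x)\) is symmetric about e and decreases as \(|e-x|\) increases, we can rewrite the likelihood function as

\[\frac{\pi }{\sqrt{3}\sigma }\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| \right) \left( 1+\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| \right) \right) ^{-2}.\]

Then, we can get the maximum likelihood estimates of e and \(\sigma \) by solving the maximization problem

\[\max \limits _{e,\,\sigma >0}\ \frac{\pi }{\sqrt{3}\sigma }\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| \right) \left( 1+\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| \right) \right) ^{-2}.\]

Since, for any fixed \(\sigma \), the likelihood function is decreasing with respect to

\[\bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| ,\]

the maximum likelihood estimate \(e^*\) solves the following minimization problem

\[\min \limits _{e}\ \bigvee \limits _{i=1}^{n-1}\left| e-h_i(\mu ,\theta )\right| ,\]

whose minimum solution is

\[e^*=\frac{1}{2}\left( \bigvee \limits _{i=1}^{n-1}h_i(\mu ,\theta )+\bigwedge \limits _{i=1}^{n-1}h_i(\mu ,\theta )\right) , \tag{5}\]

and then the maximum likelihood estimate \(\sigma ^*\) solves the maximization problem

\[\max \limits _{\sigma >0}\ \frac{\pi }{\sqrt{3}\sigma }\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e^*-h_i(\mu ,\theta )\right| \right) \left( 1+\exp \left( \frac{\pi }{\sqrt{3}\sigma }\bigvee \limits _{i=1}^{n-1}\left| e^*-h_i(\mu ,\theta )\right| \right) \right) ^{-2}. \tag{6}\]

Here we set \(\displaystyle y=\frac{\pi }{\sqrt{3}\sigma }\) and \(\displaystyle k=\bigvee \limits _{i=1}^{n-1} \left| e^*-h_i(\mu ,\theta )\right| \). Then, the maximization problem (6) is transformed into the following maximization problem

\[\max \limits _{y>0}\ y\exp (ky)\left( 1+\exp (ky)\right) ^{-2}. \tag{7}\]

Let us write

\[p(y)=y\exp (ky)\left( 1+\exp (ky)\right) ^{-2}.\]

Notice that

\[p'(y)=\exp (ky)\left( 1+ky+\exp (ky)-ky\exp (ky)\right) \left( 1+\exp (ky)\right) ^{-3}.\]

It is easy to see that \(p'\left( \lambda /k\right) =0\), where \(\lambda \) is the positive root of the transcendental equation \(1+x+\exp (x)-x\exp (x)=0\) and can be taken as approximately 1.5434 in numerical computation. Then we can obtain \(p'(y)>0\) when \(0<y<\lambda /k\) and \(p'(y)<0\) when \(y>\lambda /k\). Thus, \(y^*=\lambda /k\) is the maximum point of p(y) in the feasible region, which implies that \(y^*\) is the maximum solution of the maximization problem (7). Then,

\[\sigma ^*=\frac{\pi }{\sqrt{3}\lambda }\bigvee \limits _{i=1}^{n-1}\left| e^*-h_i(\mu ,\theta )\right| \tag{8}\]

is the maximum solution of the maximization problem (6) immediately. Thus, \(e^*\) and \(\sigma ^{*}\) are the maximum likelihood estimates of e and \(\sigma \), respectively.

Since \(h_1(\mu ,\theta ),h_2(\mu ,\theta ),\cdots ,h_{n-1}(\mu ,\theta )\) are actually the samples of the standard normal uncertainty distribution \({\mathcal {N}}(0,1)\), we must have

\[e^*=0,\quad \sigma ^*=1.\]

Therefore, it follows from (5) and (8), together with \(\bigvee _{i=1}^{n-1}|e^*-h_i(\mu ,\theta )|=\frac{1}{2}\left( \bigvee _{i=1}^{n-1}h_i(\mu ,\theta )-\bigwedge _{i=1}^{n-1}h_i(\mu ,\theta )\right) \), that

\[\left\{ \begin{array}{l} \displaystyle \bigvee \limits _{i=1}^{n-1}h_i(\mu ,\theta )+\bigwedge \limits _{i=1}^{n-1}h_i(\mu ,\theta )=0\\ \displaystyle \bigvee \limits _{i=1}^{n-1}h_i(\mu ,\theta )-\bigwedge \limits _{i=1}^{n-1}h_i(\mu ,\theta )=\frac{2\sqrt{3}\lambda }{\pi }, \end{array}\right. \]

whose solutions \(\mu ^{*}\) and \(\theta ^{*}\) are the estimates of the parameters \(\mu \) and \(\theta \), respectively. That is, we can get the estimates of the parameters \(\mu \) and \(\theta \) by solving the system of equations (4). The theorem is proved. \(\square \)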

The above method of estimating the parameters of uncertain differential equations is called the method of uncertain maximum likelihood.
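The closed-form estimates used in the proof can be sanity-checked numerically. The sketch below assumes, as read from the proof, that for samples of \({\mathcal {N}}(e,\sigma )\) the estimate \(e^*\) is the midrange of the samples and \(\sigma ^*=\pi k/(\sqrt{3}\lambda )\) with \(k=\bigvee |e^*-h_i|\), and that the likelihood is the minimum of \(\varPhi '\) over the samples. All function names are illustrative.

```python
import math

LAMBDA = 1.5434  # approximate root quoted in Theorem 1


def normal_uncertain_mle(samples, lam=LAMBDA):
    """Return (e_star, sigma_star) for samples of N(e, sigma)."""
    highest, lowest = max(samples), min(samples)
    e_star = 0.5 * (highest + lowest)            # midrange, as in (5)
    k = max(abs(e_star - s) for s in samples)    # largest deviation from e_star
    sigma_star = math.pi * k / (math.sqrt(3.0) * lam)  # as in (8)
    return e_star, sigma_star


def density(x, e, sigma):
    """Phi'(x) for N(e, sigma); it is even in (e - x), so -|z| avoids overflow."""
    z = math.pi * (e - x) / (math.sqrt(3.0) * sigma)
    ez = math.exp(-abs(z))
    return math.pi / (math.sqrt(3.0) * sigma) * ez / (1.0 + ez) ** 2


def likelihood(samples, e, sigma):
    """Uncertain likelihood: the minimum of Phi' over all samples."""
    return min(density(s, e, sigma) for s in samples)
```

Evaluating `likelihood` at the returned estimates and at nearby values of e and \(\sigma \) gives a quick check that the closed form is indeed a maximum for a given data set.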

Remark 1

Sometimes the system of equations (4) has no solution, or its exact solution cannot be found, for example when \(f(t,x;\mu )\) and \(g(t,x;\theta )\) are nonlinear functions with respect to \(\mu \) and \(\theta \), respectively. In this case, we can get a numerical solution of the system of equations (4) by solving the following minimization problem,

\[\min \limits _{\mu ,\theta }\ \left( \bigvee \limits _{i=1}^{n-1}h_i(\mu ,\theta )+\bigwedge \limits _{i=1}^{n-1}h_i(\mu ,\theta )\right) ^{2}+\left( \bigvee \limits _{i=1}^{n-1}h_i(\mu ,\theta )-\bigwedge \limits _{i=1}^{n-1}h_i(\mu ,\theta )-\frac{2\sqrt{3}\lambda }{\pi }\right) ^{2}, \tag{10}\]

where \(h_1(\mu ,\theta ),h_2(\mu ,\theta ),\cdots ,h_{n-1}(\mu ,\theta )\) are defined by (3), and numerical methods such as Newton’s method, the secant method and the simplex method can be used.
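The numerical route described in this remark can be sketched as follows. Several assumptions are made here and should be read as such: the objective is taken to be the sum of squared residuals of the two equations in (4), the residuals \(h_i\) are the Euler residuals of (3), and a simple derivative-free compass (pattern) search is used instead of the simplex method so that the example needs only the standard library. The test model \(\mathrm {d}X_t=\mu \,\mathrm {d}t+\theta \,\mathrm {d}C_t\) and the data are hypothetical and chosen only because the minimizer can be written down by hand.

```python
import math

LAMBDA = 1.5434  # approximate root quoted in Theorem 1
TARGET_RANGE = 2.0 * math.sqrt(3.0) * LAMBDA / math.pi


def residuals(times, xs, f, g, mu, theta):
    """Euler residuals h_i for drift f(t, x, mu) and diffusion g(t, x, theta)."""
    h = []
    for i in range(len(times) - 1):
        dt = times[i + 1] - times[i]
        h.append((xs[i + 1] - xs[i] - f(times[i], xs[i], mu) * dt)
                 / (g(times[i], xs[i], theta) * dt))
    return h


def objective(params, times, xs, f, g):
    """Squared violation of the two equations in (4); theta > 0 is enforced."""
    mu, theta = params
    if theta <= 0.0:
        return math.inf
    try:
        h = residuals(times, xs, f, g, mu, theta)
    except ZeroDivisionError:
        return math.inf
    highest, lowest = max(h), min(h)
    return (highest + lowest) ** 2 + (highest - lowest - TARGET_RANGE) ** 2


def compass_search(func, start, step=0.5, tol=1e-10, max_iter=10000):
    """Derivative-free search: try +/- step on each coordinate, halve on failure."""
    point, best = list(start), func(start)
    for _ in range(max_iter):
        if step <= tol:
            break
        improved = False
        for j in range(len(point)):
            for delta in (step, -step):
                trial = point[:]
                trial[j] += delta
                value = func(trial)
                if value < best:
                    point, best, improved = trial, value, True
        if not improved:
            step *= 0.5
    return point, best


# Hypothetical model and data, for illustration only.
drift = lambda t, x, mu: mu
diffusion = lambda t, x, theta: theta
times = [0.0, 1.0, 2.0, 3.0, 4.0]
xs = [0.0, 1.5, 2.0, 4.0, 4.5]
estimate, value = compass_search(
    lambda p: objective(p, times, xs, drift, diffusion), [0.0, 1.0])
```

For this hypothetical model the system (4) can be solved by hand: writing \(r_i=(x_{t_{i+1}}-x_{t_i})/(t_{i+1}-t_i)\), it gives \(\mu =(\bigvee r_i+\bigwedge r_i)/2\) and \(\theta =\pi (\bigvee r_i-\bigwedge r_i)/(2\sqrt{3}\lambda )\), which offers a reference value for the search result. A compass search can stall on objectives that are not smooth, so for general f and g a more robust optimizer may be preferable.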

As an important class of uncertain differential equations, linear uncertain differential equations have been widely used in financial markets. For example, Liu (2009) first proposed a stock model in which the stock price is determined by a linear uncertain differential equation. Later, Peng and Yao (2011) studied a new stock model in which the stock price follows a mean-reverting process. After that, Chen and Gao (2013) investigated an uncertain interest rate model by assuming that the interest rate follows a linear uncertain differential equation, and Liu et al. (2015) presented an uncertain currency model where the exchange rate follows a linear uncertain differential equation. Next we will give some analytical formulae of the uncertain maximum likelihood estimators in special linear uncertain differential equations.

Corollary 1

Consider the uncertain differential equation

where \(\mu \) and \(\theta >0\) are two unknown parameters to be estimated. Assume that \(x_{t_{1}},x_{t_{2}},\cdots ,x_{t_{n}}\) are observations of the solution \(X_t\) of the uncertain differential equation at the times \(t_1,t_2,\cdots ,t_n\) with \(t_{1}<t_{2}<\cdots <t_{n}\), respectively. Then, the estimates of the parameters \(\mu \) and \(\theta \) are

Proof

By substituting the observed data into equation (3), we can get

According to Theorem 1, the estimates of the unknown parameters solve

By solving the above system of equations, we can get the estimates of \(\mu \) and \(\theta \) shown in (11). \(\square \)

Corollary 2

Consider the uncertain differential equation

where \(\mu \) and \(\theta >0\) are two unknown parameters to be estimated. Assume that \(x_{t_{1}},x_{t_{2}},\cdots ,x_{t_{n}}\) are observations of the solution \(X_t\) of the uncertain differential equation at the times \(t_1,t_2,\cdots ,t_n\) with \(t_{1}<t_{2}<\cdots <t_{n}\), respectively. Then, the estimates of the parameters \(\mu \) and \(\theta \) are

Proof

By substituting the observed data into equation (3), we can get

for \(i=1,2,\cdots ,n-1.\) According to Theorem 1, the estimates of the unknown parameters solve

By solving the above system of equations, we can get the estimates of \(\mu \) and \(\theta \) shown in (12). \(\square \)

4 Numerical examples

Now we apply the method of uncertain maximum likelihood in three numerical examples to estimate the unknown parameters.

Example 1

Consider the uncertain differential equation

with 16 observations given in Table 1, where the two parameters \(\mu \) and \(\theta >0\) are unknown and are to be estimated. By substituting the observed data into (11), we have

Therefore, the uncertain differential equation is

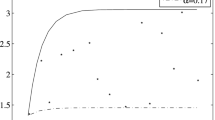

whose 0.27-path and 0.85-path are shown in Fig. 1. As we can see, all the observations fall between these two \(\alpha \)-paths, which indicates that the estimates

are acceptable.

Fig. 1 \(\alpha \)-paths and observations of \(X_{t}\) in Example 1
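The check used in this example, whether all observations lie between two \(\alpha \)-paths, can be sketched in code. The sketch assumes the Yao-Chen characterization in which the \(\alpha \)-path \(X_t^{\alpha }\) of \(\mathrm {d}X_t=f(t,X_t)\mathrm {d}t+g(t,X_t)\mathrm {d}C_t\) solves the ordinary differential equation \(\mathrm {d}X_t^{\alpha }=f(t,X_t^{\alpha })\mathrm {d}t+|g(t,X_t^{\alpha })|\varPhi ^{-1}(\alpha )\mathrm {d}t\) with \(\varPhi ^{-1}(\alpha )=(\sqrt{3}/\pi )\ln (\alpha /(1-\alpha ))\), and integrates it with the Euler method. The function names, the step count and the callables `f(t, x)` and `g(t, x)` (with the estimated parameters already substituted) are illustrative assumptions.

```python
import math


def inverse_standard_normal_uncertainty(alpha):
    """Phi^{-1}(alpha) of the standard normal uncertainty distribution."""
    return math.sqrt(3.0) / math.pi * math.log(alpha / (1.0 - alpha))


def alpha_path_value(f, g, x0, t0, t_end, alpha, steps=200):
    """Euler approximation of the alpha-path at time t_end, started at (t0, x0)."""
    q = inverse_standard_normal_uncertainty(alpha)
    dt = (t_end - t0) / steps
    x = x0
    for n in range(steps):
        t = t0 + n * dt
        x += (f(t, x) + abs(g(t, x)) * q) * dt
    return x


def observations_between_paths(times, xs, f, g, x0, t0, alpha_low, alpha_high):
    """True if every observation lies between the two alpha-paths."""
    for t, x in zip(times, xs):
        lower = alpha_path_value(f, g, x0, t0, t, alpha_low)
        upper = alpha_path_value(f, g, x0, t0, t, alpha_high)
        if not lower <= x <= upper:
            return False
    return True
```

In the setting of this example one would pass the fitted drift and diffusion terms, take the initial observation as `x0`, and use `alpha_low=0.27` and `alpha_high=0.85`.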

Remark 2

In fact, the true values of parameters in Example 1 are

the moment estimation and least squares estimation of parameters are

and

respectively. For these observations, the uncertain maximum likelihood estimation performs best. A likely reason is that, when the sample size is small, the sample moments cannot provide good estimates of the corresponding population moments, and outliers interfere more strongly with the noise term, which makes the least squares estimation worse than the uncertain maximum likelihood estimation. Therefore, when the sample size is small, the method of uncertain maximum likelihood is preferable to the other methods.

Example 2

Consider the uncertain differential equation

with 14 observations given in Table 2, where the two parameters \(\mu \) and \(\theta >0\) are unknown and are to be estimated. By substituting the observed data into (12), we have

Therefore, the uncertain differential equation is

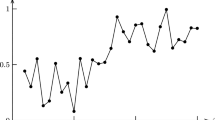

whose 0.29-path and 0.80-path are shown in Fig. 2. As we can see, all the observations fall between these two \(\alpha \)-paths, which indicates that the estimates

are acceptable.

Fig. 2 \(\alpha \)-paths and observations of \(X_{t}\) in Example 2

Example 3

Consider the uncertain differential equation

with 16 observations given in Table 3, where the two parameters \(\mu >0\) and \(\theta >0\) are unknown and are to be estimated. By substituting the observations into equation (3), we can get

for \(i=1,2,\cdots ,15\). Then, we can solve the minimization problem (10) by using MATLAB (see Notes), and get the estimates of \(\mu \) and \(\theta \), which are

Therefore, the uncertain differential equation is

whose 0.15-path and 0.82-path are shown in Fig. 3. As we can see, all the observations fall between these two \(\alpha \)-paths, which indicates that the estimates

are acceptable.

Fig. 3 \(\alpha \)-paths and observations of \(X_{t}\) in Example 3

5 Conclusion

This paper first proposed the method of uncertain maximum likelihood to estimate the unknown parameters in uncertain differential equation, and gave some analytical formulae of the uncertain maximum likelihood estimators in special linear uncertain differential equations. In addition, some numerical examples were also provided to illustrate the method of uncertain maximum likelihood in this paper.

Notes

MATLAB R2019a, 9.6.0.1072779, maci64, Optimization Toolbox, “fminsearch” function.

References

Chen X, Liu B (2010) Existence and uniqueness theorem for uncertain differential equations. Fuzzy Optim Decis Mak 9(1):69–81

Chen X, Gao J (2013) Uncertain term structure model of interest rate. Soft Comput 17(4):597–604

Gao R (2016) Milne method for solving uncertain differential equations. Appl Math Comput 274:774–785

Gao R, Ralescu D (2019) Uncertain wave equation for vibrating string. IEEE Trans Fuzzy Syst 27(7):1323–1331

Jia L, Dai W (2021) Uncertain Spring Vibration Equation. J Ind Manag Optim. https://doi.org/10.3934/jimo.2021073

Li Z, Sheng Y, Teng Z, Miao H (2017) An uncertain differential equation for SIS epidemic model. J Intell Fuzzy Syst 33(4):2317–2327

Lio W, Liu B (2020a) Initial value estimation of uncertain differential equations and zero-day of COVID-19 spread in China. Fuzzy Optim Decis Mak 20(2):177–188

Lio W, Liu B (2020b) Uncertain maximum likelihood estimation with application to uncertain regression analysis. Soft Comput 24(13):9351–9360

Liu B (2007) Uncertainty theory, 2nd edn. Springer-Verlag, Berlin

Liu B (2008) Fuzzy process, hybrid process and uncertain process. J Uncertain Syst 2(1):3–16

Liu B (2009) Some research problems in uncertainty theory. J Uncertain Syst 3(1):3–10

Liu B (2013) Toward uncertain finance theory. J Uncertain Anal Appl 1:5 (Article 1)

Liu Y, Chen X, Ralescu D (2015) Uncertain currency model and currency option pricing. Int J Intell Syst 30(1):40–51

Liu Z, Yang Y (2019) Moment estimation for parameters in high-order uncertain differential equations. Appl Math Comput 392:125724

Peng J, Yao K (2011) A new option pricing model for stocks in uncertainty markets. Int J Oper Res 8(2):18–26

Sheng Y, Wang C (2014) Stability in the p-th moment for uncertain differential equation. J Intell Fuzzy Syst 26(3):1263–1271

Sheng Y, Yao K, Chen X (2020) Least squares estimation in uncertain differential equations. IEEE Trans Fuzzy Syst 28(10):2651–2655

Yang X, Gao J (2013) Uncertain differential games with application to capitalism. J Uncertain Anal Appl 1:1 (Article 17)

Yang X, Ralescu D (2015) Adams method for solving uncertain differential equations. Appl Math Comput 270:993–1003

Yang X, Shen Y (2015) Runge-Kutta method for solving uncertain differential equations. J Uncertain Anal Appl 3:2 (Article 17)

Yang X, Ni Y, Zhang Y (2017) Stability in inverse distribution for uncertain differential equations. J Intell Fuzzy Syst 32(3):2051–2059

Yang X, Yao K (2017) Uncertain partial differential equation with application to heat conduction. Fuzzy Optim Decis Mak 16(3):379–403

Yao K, Chen X (2013) A numerical method for solving uncertain differential equations. J Intell Fuzzy Syst 25(3):825–832

Yao K, Gao J, Gao Y (2013) Some stability theorems of uncertain differential equation. Fuzzy Optim Decis Mak 12(1):3–13

Yao K, Ke H, Sheng Y (2015) Stability in mean for uncertain differential equation. Fuzzy Optim Decis Mak 14(3):365–379

Yao K, Liu B (2020) Parameter estimation in uncertain differential equations. Fuzzy Optim Decis Mak 19(1):1–12

Zhang Z, Yang X (2020) Uncertain population model. Soft Comput 24(2):2417–2423

Zhu Y (2010) Uncertain optimal control with application to a portfolio selection model. Cybern Syst 41(7):535–547

Acknowledgements

This work was supported by National Natural Science Foundation of China Grant No.61873329.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by the author.

Cite this article

Liu, Y., Liu, B. Estimating unknown parameters in uncertain differential equation by maximum likelihood estimation. Soft Comput 26, 2773–2780 (2022). https://doi.org/10.1007/s00500-022-06766-w
