Abstract

In this paper, we study efficient, asymptotically correct a posteriori error estimates for the numerical approximation of first-order Fredholm–Volterra integro-differential equations. First, we derive the deviation of the error for Fredholm–Volterra integro-differential equations using the defect correction principle. We then show that, for the piecewise polynomial collocation method of degree m, the deviation of the error is of order \(\mathcal {O}(h^{m+1})\). We also improve the piecewise polynomial collocation method by means of the deviation of the error estimate. Numerical results in the last section confirm the theoretical results.

1 Introduction

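In this work, we study the deviation of the error estimate for linear and nonlinear Fredholm–Volterra integro-differential equations. The first-order Fredholm–Volterra integro-differential (FVID) equation has the form

\[ y^{\prime }(t)=F\big (t,y(t),z_\mathbf {f}[y](t),z_\mathbf {v}[y](t)\big ),\qquad t\in I:=[a,b], \tag{1.1}\]

with

\[ \alpha \, y(a)+\beta \, y(b)=r, \tag{1.2}\]

where \(a,b,\alpha ,\beta ,r\in \mathbb {R}\), \(\alpha +\beta \ne 0\), \(b>a\), and the Fredholm and Volterra integral terms are

\[ z_\mathbf {f}[y](t):=\int _a^b K_\mathbf {f}(t,s,y(s),y^{\prime }(s))\,ds,\qquad z_\mathbf {v}[y](t):=\int _a^t K_\mathbf {v}(t,s,y(s),y^{\prime }(s))\,ds. \]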

Integro-differential equations arise in many branches of science and engineering, for example in electrical circuit analysis (Moura and Darwazeh 2005) and mechanical engineering (Yogi Goswami 2004; Fidlin 2005). They also appear in computer vision and image processing, in particular in image deblurring, denoising and regularization (Chen et al. 2016; Huang et al. 2009; Athavale and Tadmor 2011), as well as in pattern recognition and machine intelligence (Doroshenko et al. 2011). Numerical methods for integro-differential equations therefore play an important role in science and in computer vision. Piecewise polynomial collocation methods are studied in Hangelbroek et al. (1977), Brunner (2004) and Boor and Swartz (1973). Other methods can be found in Volk (1988), Daşcioğlu and Sezer (2005) and Reutskiy (2016). In this work, an improved piecewise polynomial collocation method is introduced. In previous work (Parvaz et al. 2016), the deviation of the error estimate was analyzed for second-order Fredholm–Volterra integro-differential equations. That work showed that, for the piecewise polynomial collocation method of degree m, the deviation of the error is of order at least \(m+1\), that is, \(\mathcal {O}(h^{m+1})\). Here we study first-order Fredholm–Volterra integro-differential equations. We prove that, for piecewise polynomials of degree m, the collocation method yields a deviation of the error of order \(\mathcal {O}(h^{m+1})\). Based on the defect correction principle and the deviation of the error, the piecewise polynomial collocation method can then be improved. General studies of the defect correction principle can be found in Stetter (1978) and Bohmer et al. (1984). The deviation of the error estimate for boundary value problems has been analyzed in Saboor Bagherzadeh (2011). Error estimation based on a locally weighted defect for linear and nonlinear second-order boundary value problems can be found in Saboor Bagherzadeh (2011) and Auzinger et al. (2014).

The article is organized as follows. The method is presented in Sect. 2. A complete analysis of the deviation of the error for the linear and nonlinear cases is given in Sect. 3. Numerical results are presented in Sect. 4, and the main conclusions are summarized in Sect. 5.

2 Description of the method

We define W and S as follows

Throughout this paper, we assume that F and \(K_l\) \((l=\mathbf {f},\mathbf {v})\) are uniformly continuous on W and S, respectively. We say that \(z_\mathbf {f}[y](t)\) and \(z_\mathbf {v}[y](t)\) are linear if they can be written as

When studying the linear case, we assume that \(\Lambda _{l,\mathbf {f}}(t,s)\) and \(\Lambda _{l,\mathbf {v}}(t,s)\) \((l=0,1)\) are sufficiently smooth on \(J_{\mathbf {f}}:=\{(t,s)\,|\,t,s \in I\}\) and \(J_{\mathbf {v}}:=\{(t,s)\,|\,a\le s \le t\le b\}\), respectively. We also say that F is semilinear if \(F(t,y(t),z_\mathbf {f}[y](t),z_\mathbf {v}[y](t))\) can be written as

In the nonlinear case, we assume that \(F(t,y,z_\mathbf {f},z_\mathbf {v})\) and its partial derivatives \(F_l(t,y,z_\mathbf {f},z_\mathbf {v})\) \((l=t,y,{z_\mathbf {f}},{z_\mathbf {v}})\) are Lipschitz-continuous. When \(z_l[y](t)\) \((l=\mathbf {f},\mathbf {v})\) are nonlinear, we also assume that \(K_l(t,s,y,y^{\prime })\) and \((K_{l})_j(t,s,y,y^{\prime })\) \((l=\mathbf {f},\mathbf {v};\ j=y,y^{\prime })\) are Lipschitz-continuous. We say that the FVID equation with boundary condition (1.2) is linear if (1.1) can be written as

with linear \(z_l[y](t)\) \((l=\mathbf {f},\mathbf {v})\). In the linear case, we also assume that \(a_1(t)\) and \(a_2(t)\) are sufficiently smooth on I.

2.1 Collocation method

In this subsection, we introduce the piecewise polynomial collocation method for solving the FVID problem (1.1), (1.2). Let

and \(h_i:=\tau _{i+1}-\tau _{i}\). We define \(X_n,\,Z_n\) and \(S^{(0)}_{m}(Z_n)\) as follows

where \(\Pi _m([\tau _i,\tau _{i+1}])\) is the space of real polynomials on \([\tau _i,\tau _{i+1}]\) of degree \(\leqslant m\). We also define the collocation points as

We define h (the diameter of the grid \(Z_n\)) and \(h^{\prime }\) as

The set \(X(n):=\bigcup ^{n-1}_{i=0}X_i\) is called the set of collocation points. The piecewise polynomial collocation method seeks \(p\in S^{(0)}_{m}(Z_n)\) such that (1.1) holds at every \(t\in X(n)\) and p satisfies the boundary condition (1.2). The exact values of \(z_\mathbf {f}[\cdot ](t)\) and \(z_\mathbf {v}[\cdot ](t)\) cannot in general be computed, so we approximate them by the following quadrature rules

where \(\tilde{t}^{z}_{i,j}:=\tau _i+\rho _z(t_{i,j}-\tau _i)\) and the quadrature weights are given by

where

In summary, the collocation method can be written as Algorithm 1.
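The main ingredients of Algorithm 1 can be sketched in Python with NumPy. These are the mesh, the collocation points and the interpolatory quadrature weights. The sketch rests on several assumptions:

- a uniform mesh;
- points \(t_{i,j}=\tau_i+\rho_j h_i\) with \(\rho_0=0<\rho_1<\cdots<\rho_m<\rho_{m+1}=1\), matching the parameter sets used in Sect. 4;
- collocation sets \(X_i=\{t_{i,1},\ldots,t_{i,m}\}\);
- an interpolatory rule on the \(\rho\)-nodes, mapped as in \(\tilde{t}^{z}_{i,j}=\tau_i+\rho_z(t_{i,j}-\tau_i)\) for Volterra-type integrals.

The function names are hypothetical, and the paper's exact weight formulas are not reproduced here.

```python
import numpy as np

def collocation_grid(a, b, n, rho):
    """Uniform mesh tau_0 < ... < tau_n and points t_{i,j} = tau_i + rho_j * h_i.

    rho holds 0 = rho_0 < rho_1 < ... < rho_{m+1} = 1; row i of t holds
    t_{i,0}, ..., t_{i,m+1} (assumed layout, not taken from the paper)."""
    tau = np.linspace(a, b, n + 1)
    h_i = np.diff(tau)
    t = tau[:-1, None] + np.asarray(rho, dtype=float)[None, :] * h_i[:, None]
    return tau, h_i, t

def interpolatory_weights(nodes):
    """Weights of the interpolatory rule on [0, 1]: solves the moment
    equations sum_k w_k * nodes_k**d = 1/(d+1), d = 0, ..., len(nodes)-1."""
    x = np.asarray(nodes, dtype=float)
    d = np.arange(len(x))
    return np.linalg.solve(x[None, :] ** d[:, None], 1.0 / (d + 1.0))

def quad(g, lo, hi, nodes):
    """Approximate int_lo^hi g(s) ds using the nodes mapped to lo + rho*(hi - lo)."""
    w = interpolatory_weights(nodes)
    return (hi - lo) * np.dot(w, g(lo + np.asarray(nodes, dtype=float) * (hi - lo)))

m = 2
rho = np.arange(m + 2) / (m + 1)          # equidistant parameters 0, 1/3, 2/3, 1
tau, h_i, t = collocation_grid(0.0, 1.0, 4, rho)
X = t[:, 1:m + 1]                         # interior points: one row per set X_i
h = h_i.max()                             # mesh diameter
# Volterra-type piece int_{tau_1}^{t_{1,1}} exp(s) ds with the scaled nodes
approx = quad(np.exp, tau[1], t[1, 1], rho)
print(X.shape, h, approx - (np.exp(t[1, 1]) - np.exp(tau[1])))
```

The weights come from the moment equations rather than from explicit Lagrange-basis integrals. For a handful of nodes, the two approaches give the same rule, and the moment form is shorter to write.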

Using the interpolation error theorem (see Stoer and Bulirsch 2002, Section 2.1), we obtain the following lemma.

Lemma 2.1

For sufficiently smooth f, the following estimates hold
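The sketch below is a quick numerical check of the classical interpolation error theorem behind Lemma 2.1. For smooth f, piecewise polynomial interpolation of degree m with m+1 nodes per subinterval has error \(\mathcal{O}(h^{m+1})\), so halving h should divide the error by about \(2^{m+1}\). The node choice (equidistant, endpoints included) and the function name are assumptions of this sketch. The estimates of Lemma 2.1 themselves are not reproduced.

```python
import numpy as np

def piecewise_interp_error(f, a, b, n, m, n_eval=2001):
    """Max (sampled) error of the continuous piecewise degree-m interpolant
    of f on n equal subintervals, with m+1 equidistant nodes per subinterval."""
    tau = np.linspace(a, b, n + 1)
    t = np.linspace(a, b, n_eval)
    # index of the subinterval containing each evaluation point
    idx = np.minimum(np.searchsorted(tau, t, side="right") - 1, n - 1)
    err = 0.0
    for i in range(n):
        nodes = np.linspace(tau[i], tau[i + 1], m + 1)
        # m+1 nodes and degree m: polyfit interpolates exactly; the local
        # variable s = t - tau_i keeps the fit well conditioned
        coeffs = np.polyfit(nodes - tau[i], f(nodes), m)
        ti = t[idx == i]
        err = max(err, np.max(np.abs(np.polyval(coeffs, ti - tau[i]) - f(ti))))
    return err

orders = {}
for m in (1, 2, 3):
    e_coarse = piecewise_interp_error(np.exp, 0.0, 1.0, 8, m)
    e_fine = piecewise_interp_error(np.exp, 0.0, 1.0, 16, m)
    orders[m] = np.log2(e_coarse / e_fine)   # expected to be close to m + 1
print(orders)
```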

Proceeding as in Brunner (2004), we obtain the following theorem for the piecewise polynomial collocation method.

Theorem 2.2

Assume that the FVID problem (1.1), (1.2) has a unique and sufficiently smooth solution y(t), and that p(t) is a piecewise polynomial collocation solution of degree \(\le m\). Then, for sufficiently small h, the collocation solution p(t) is well defined and at least the following uniform estimates hold:

Remark 2.3

In the special case of equidistant collocation points with odd m, the following uniform estimates hold
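The behavior described in Theorem 2.2 and Remark 2.3 can be observed with a minimal collocation solver. It is applied to a hypothetical linear test problem,

\(y'(t)=y(t)+\int_0^1 y(s)\,ds+\int_0^t y(s)\,ds+g(t)\), with \(y(0)=1\),

that is, \(\alpha=1,\ \beta=0,\ r=1\). The forcing g is chosen so that \(y(t)=e^t\). This is a sketch, not Algorithm 1, and it differs in three ways:

- the integrals of the piecewise polynomial are computed exactly rather than with the quadrature rules of Sect. 2.1;
- the interior collocation parameters are taken equidistant, \(\rho_j=j/(m+1)\);
- the error is measured only at the nodes used to represent p.

```python
import numpy as np

def solve_fvid_collocation(g, n, m, alpha=1.0, beta=0.0, r=1.0):
    """Collocation in S_m^(0) for the hypothetical linear test problem
        y'(t) = y(t) + int_0^1 y(s) ds + int_0^t y(s) ds + g(t),  0 <= t <= 1,
        alpha*y(0) + beta*y(1) = r,
    with interior parameters rho_j = j/(m+1), j = 1..m.
    Unknowns: values of p at the nodes k/(n*m); integrals of p are exact."""
    h = 1.0 / n
    tau = np.linspace(0.0, 1.0, n + 1)
    N = n * m + 1                                    # dimension of S_m^(0)
    d = np.arange(m + 1)
    xk = np.arange(m + 1) / m                        # local Lagrange nodes in [0, 1]
    Vinv = np.linalg.inv(xk[:, None] ** d[None, :])  # monomial coefficients of the basis

    def val(x):      # basis values at local coordinate x
        return (x ** d) @ Vinv

    def der(x):      # basis derivatives with respect to t (chain rule factor 1/h)
        dp = np.zeros(m + 1)
        dp[1:] = d[1:] * x ** (d[1:] - 1)
        return (dp @ Vinv) / h

    def integ(x):    # int_{tau_i}^{tau_i + x*h} of each basis function
        return h * ((x ** (d + 1)) / (d + 1)) @ Vinv

    full = integ(1.0)                                # integral over a whole subinterval
    A, rhs = np.zeros((N, N)), np.zeros(N)
    row = 0
    for i in range(n):
        for x in np.arange(1, m + 1) / (m + 1):
            # p'(t) - p(t) - (Volterra piece on the current subinterval)
            A[row, i * m:i * m + m + 1] += der(x) - val(x) - integ(x)
            for l in range(n):                       # Fredholm term: all subintervals
                A[row, l * m:l * m + m + 1] -= full
            for l in range(i):                       # Volterra term: completed subintervals
                A[row, l * m:l * m + m + 1] -= full
            rhs[row] = g(tau[i] + x * h)
            row += 1
    A[row, 0] += alpha                               # boundary condition row
    A[row, N - 1] += beta
    rhs[row] = r
    return np.linspace(0.0, 1.0, N), np.linalg.solve(A, rhs)

g = lambda t: 2.0 - np.e - np.exp(t)                 # chosen so that y(t) = exp(t)
col_orders, col_errors = {}, {}
for m in (2, 3):
    errs = []
    for n in (8, 16):
        nodes, U = solve_fvid_collocation(g, n, m)
        errs.append(np.max(np.abs(U - np.exp(nodes))))
    col_errors[m] = errs
    col_orders[m] = np.log2(errs[0] / errs[1])
print(col_orders)
```

Classical collocation theory for ODEs, a related but simpler setting, suggests roughly order 2 for m = 2 and order 4 for m = 3 with these symmetric parameters. The parity of m therefore matters, which is the situation singled out in Remark 2.3. The precise rates stated in the paper are not reproduced here.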

By using Theorem 2.2 and Lemma 2.1, we have the following lemma.

Lemma 2.4

For linear and nonlinear \(z_l[\cdot ](t)~(l=\mathbf {f},\mathbf {v})\), we have

2.2 Finite difference scheme

In this subsection, we define \(\Delta _{i,j}\), \(\mathcal {A}\) and \(\mathcal {B}\) as follows

Also we define

where \(\delta _{i,j}:=t_{i,j+1}-t_{i,j}\).

We write a general one-step finite difference scheme as

Definition 2.5

For any function u, we define

also we define

The following lemma follows easily from Taylor expansions.

Lemma 2.6

For sufficiently smooth f, the following estimates hold

By using Taylor expansion and Lemma 2.6, we can find the following estimates

where \(\eta \) and \(L^{(1)}_{\mathcal {A}}\eta \) are defined in Definition 2.5.

2.3 Deviation of the error estimation

In this subsection, we study the deviation of the error estimate for (1.1), (1.2) by using the defect correction principle. First, we consider \(y^{\prime }(t)=f(t),\,a\le t \le b\), where f(t) may have jump discontinuities at the points of \(Z_n\). Using Taylor expansion, we obtain an “exact finite difference scheme” for \(y^{\prime }(t)=f(t)\), that is, a scheme satisfied by the exact solution.
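For the model problem \(y^{\prime }(t)=f(t)\), the fundamental theorem of calculus gives the identity that any exact finite difference scheme on the grid points \(t_{i,j}\) must reproduce. The paper's own Taylor-expansion form is not reproduced here, so the display below is only an illustrative sketch:

```latex
\[
y(t_{i,j+1}) - y(t_{i,j}) = \int_{t_{i,j}}^{t_{i,j+1}} f(s)\,ds,
\qquad \delta_{i,j} = t_{i,j+1}-t_{i,j}.
\]
```

Assume that consecutive points \(t_{i,j}, t_{i,j+1}\) lie in the same subinterval and that the jumps of f occur only at mesh points of \(Z_n\). Then each integral involves f only where it is continuous, so the identity holds despite the discontinuities. For (1.1), f(t) is replaced by \(F(t,y(t),z_\mathbf {f}[y](t),z_\mathbf {v}[y](t))\).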

Consequently, a solution of problem (1.1), (1.2) satisfies the following exact finite difference scheme

According to the collocation method, we have

We now define the defect at \(t_{i,j}\) as

We use a quadrature formula to compute the integral in (2.36)

where

For sufficiently smooth f, the following error estimate holds

Moreover, when m is odd and the nodes \(\rho _i\) are symmetric, we obtain the following relation.
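The gain for odd m with symmetric nodes can be traced to the degree of exactness of interpolatory quadrature. A rule on k nodes is exact for polynomials of degree \(k-1\), and symmetric nodes with odd k gain one more degree. This sketch assumes that the rule at this step is interpolatory on the m + 2 parameters \(\rho_0,\ldots,\rho_{m+1}\). Under that assumption, odd m gives an odd number of nodes. The hypothetical helper below measures the degree of exactness numerically.

```python
import numpy as np

def degree_of_exactness(nodes, max_deg=20, tol=1e-9):
    """Largest d such that the interpolatory rule on `nodes` in [0, 1]
    integrates 1, s, ..., s**d exactly (up to tol)."""
    x = np.asarray(nodes, dtype=float)
    k = np.arange(len(x))
    # interpolatory weights from the moment equations
    w = np.linalg.solve(x[None, :] ** k[:, None], 1.0 / (k + 1.0))
    d = -1
    while d < max_deg and abs(w @ x ** (d + 1) - 1.0 / (d + 2)) < tol:
        d += 1
    return d

for m in (2, 3, 4):
    rho = np.arange(m + 2) / (m + 1)   # symmetric nodes rho_0 = 0, ..., rho_{m+1} = 1
    print(m, degree_of_exactness(rho))
```

For equidistant parameters, the helper reports degree 3 for m = 2, 5 for m = 3 and 5 for m = 4. The rule gains a degree beyond m + 1 only when m is odd.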

We then consider the defect at \(t_{i,j}\) in the following form

In this step, we define \({{\pi }}=\{\pi _{i,j}\,;\,(i,j)\in \mathcal {A}\}\) as the solution of the following finite difference scheme

We define \(\mathbf {D}:=\{D_{i,j}\,;\,(i,j)\in \mathcal {B}\}\). For small \(\mathbf {D}\), we have

where \(\eta \) is given in (2.28), (2.29). We define \(\varepsilon \) and e as follows

An estimate of the error e is given in Theorem 2.2. We define the deviation of the error as follows

Based on the above discussion, the improved collocation method can be written as Algorithm 2.
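The defect correction idea behind Algorithm 2 can be illustrated on a scalar ODE \(y'=f(t,y)\), a simplified setting without the integral terms. This is a sketch of the classical principle under stated assumptions, not a transcription of Algorithm 2. The following are choices made here:

- a forward Euler auxiliary scheme on a uniform grid;
- Gauss–Legendre quadrature for the defect integrals;
- the hypothetical function name `defect_based_estimate`.

The estimate is \(\varepsilon=\pi-p\) on the grid, and in this sketch \(\theta:=e-\varepsilon\) plays the role of the deviation of the error estimate.

```python
import numpy as np

def defect_based_estimate(f, p, t, n_gauss=5):
    """Defect-correction error estimate for y' = f(t, y) on the grid t.

    p is a callable approximation with the correct initial value p(t_0) = y(t_0).
    Defect of p in the exact scheme y_{k+1} - y_k = int_{t_k}^{t_{k+1}} f(s, y(s)) ds:
        D_k = p(t_{k+1}) - p(t_k) - int_{t_k}^{t_{k+1}} f(s, p(s)) ds.
    pi solves the forward Euler auxiliary scheme
        pi_{k+1} - pi_k - delta_k f(t_k, pi_k) = p_{k+1} - p_k - delta_k f(t_k, p_k) - D_k
    with pi_0 = p_0; the returned eps = pi - p estimates e = y - p on the grid."""
    xg, wg = np.polynomial.legendre.leggauss(n_gauss)
    pk = p(t)
    pi_grid = np.empty_like(pk)
    pi_grid[0] = pk[0]
    for k in range(len(t) - 1):
        delta = t[k + 1] - t[k]
        s = t[k] + 0.5 * delta * (xg + 1.0)              # Gauss nodes on [t_k, t_{k+1}]
        integral = 0.5 * delta * np.dot(wg, f(s, p(s)))
        D = pk[k + 1] - pk[k] - integral                  # defect of p in the exact scheme
        rhs = pk[k + 1] - pk[k] - delta * f(t[k], pk[k]) - D
        pi_grid[k + 1] = pi_grid[k] + delta * f(t[k], pi_grid[k]) + rhs
    return pi_grid - pk

f = lambda t, y: -y                          # test problem y' = -y, y(0) = 1
c = 1e-3
p = lambda t: np.exp(-t) + c * np.sin(t)     # hypothetical approximation with p(0) = y(0)
deviation = {}
for N in (200, 400):
    t = np.linspace(0.0, 1.0, N + 1)
    eps = defect_based_estimate(f, p, t)
    e = np.exp(-t) - p(t)                    # true error e = y - p
    deviation[N] = np.max(np.abs(e - eps))   # deviation theta = e - eps
print(np.max(np.abs(e)), deviation)
```

Here p is an artificial perturbation of the exact solution, so the true error is known and the deviation can be measured directly. In this linear example, the deviation scales with the grid step times the size of \(e'\), so refining the grid roughly halves it. A corrected approximation \(p+\varepsilon\) is a natural by-product. Whether Algorithm 2 uses exactly this correction is an assumption here.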

In the next section, we study the order of the deviation of the error estimate for FVID equations. The following lemmas are easily verified.

Lemma 2.7

The defect defined in (2.41) is of order \(\mathcal {O}(h^{m})\).

Lemma 2.8

The difference \(\pi -\eta \) is of order \(\mathcal {O}(h^{m})\).

3 Analysis of the deviation of the error

Definition 3.1

In this section, we define \(\overline{\varepsilon }\) and \(\widehat{\varepsilon }\) as follows

Definition 3.2

We define

where \((l=\mathbf {f},\mathbf {v})\)

We also define \(\widehat{\chi }^{\,l}[\widehat{\varepsilon }\,]_{i,j}\) \((l=\mathbf {f},\mathbf {v})\) as follows

where \((l=\mathbf {f},\mathbf {v})\)

From Theorem 2.2, (2.31), (2.32) and Lemma 2.8, we obtain the following lemma.

Lemma 3.3

Both \(\overline{\varepsilon }\) and \(\widehat{\varepsilon }\) are of order \(\mathcal {O}(h)\).

Lemma 3.4

We have

Proof

By using Lemma 2.8 and Theorem 2.2, we can write

\(\square \)

From Definition 3.2, it follows that

Lemma 3.5

For linear and nonlinear \(z_{l}[\cdot ](t)\quad (l=\mathbf {f},\mathbf {v})\), we have

Proof

To prove (3.15), we use Lemmas 2.1 and 2.6 and obtain

Similarly, we can prove (3.16). Now we prove (3.17) for \(l=\mathbf {f}\). When \(z_{l}[\cdot ](t)\) is linear, we find

In the nonlinear case, we obtain

From the Lipschitz condition for \(K_{\mathbf {f}}\) and Lemma 2.8, we get

In the same way, we obtain (3.17) for \(l=\mathbf {v}\). Similarly, we can prove (3.18), (3.19) and (3.20). \(\square \)

We first consider the linear case.

Theorem 3.6

Assume that the FVID problem (2.6) with boundary condition (1.2) has a unique and sufficiently smooth solution. Then the following estimate holds

where e is the error, \(\varepsilon \) is the error estimate and \(\theta \) is the deviation of the error estimate.

Proof

Since F is linear, (2.28) and (2.42) give

We can also write

From (3.26) and (3.27), we have

Since \(\sum _{k=1}^{m+1}\gamma ^{k}_{i,j}=1\), we can rewrite \(S_1\) as

and by using Taylor expansion, we have

where \(\xi _{i}\in [\tau _{i},\tau _{i+1}]\). From Theorem 2.2 and (3.30), we obtain \(S_1=\mathcal {O}(h^{m+1})\). For \(S_2\), Lemma 2.1 gives

In a similar way, we can find

By using (3.31), (3.32), we obtain

where \(\xi ^{k}_{i,j},\, \zeta ^{i}_{k,r},\, \zeta ^{\prime }_{k,r}\in [a,b]\). Therefore, we can rewrite (3.28) as

By the stability of the forward Euler scheme, we find

\(\square \)
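The last step of the proof uses the stability of the forward Euler scheme. The argument can be written in generic form as a standard discrete Gronwall estimate. Here \(\lambda_k\), \(r_k\) and L are placeholders for the linearized coefficients, the local residuals and a Lipschitz bound. They are not the specific terms appearing in the proof:

```latex
\[
\begin{aligned}
&\theta_{k+1} = (1+\delta_k\lambda_k)\,\theta_k + r_k,
  \qquad |\lambda_k|\le L, \qquad \textstyle\sum_{k=0}^{N-1} \delta_k = b-a,\\
&|\theta_{k+1}| \le (1+\delta_k L)\,|\theta_k| + |r_k| \le e^{\delta_k L}\,|\theta_k| + |r_k|,\\
&\max_{0\le k\le N} |\theta_k| \le e^{L(b-a)}\Big(|\theta_0| + \textstyle\sum_{k=0}^{N-1} |r_k|\Big).
\end{aligned}
\]
```

Hence, suppose \(|\theta_0|=\mathcal{O}(h^{m+1})\), \(\max_k|r_k|=\mathcal{O}(h^{m+2})\) and the number of steps N is \(\mathcal{O}(h^{-1})\), as on a quasi-uniform mesh. Then \(\max_k|\theta_k|=\mathcal{O}(h^{m+1})\).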

For the nonlinear case, we have the following theorem.

Theorem 3.7

Consider the FVID equation (1.1) with boundary condition (1.2), where \(F(t,y,z_\mathbf {f},z_\mathbf {v})\) and its partial derivatives \(F_l(t,y,z_\mathbf {f},z_\mathbf {v})\) \((l=t,y,z_\mathbf {f},z_\mathbf {v})\) are Lipschitz-continuous. When \(z_\mathbf {f}\) and \(z_\mathbf {v}\) are nonlinear, we also assume that \(K_{l}(t,s,y,y^{\prime })\) and \((K_{l})_{j}(t,s,y,y^{\prime })\) \((l=\mathbf {f},\mathbf {v};\ j=y,y^{\prime })\) are Lipschitz-continuous. Assume further that the FVID problem has a unique and sufficiently smooth solution. Then the following estimate holds

where e is the error, \(\varepsilon \) is the error estimate and \(\theta \) is the deviation of the error estimate.

Proof

In the nonlinear case, we have

We can rewrite \(I_1,I_2,I_3\) and \(I_4\) as

with

Using the Lipschitz conditions for \(F_y,F_{z_{\mathbf {f}}},F_{z_{\mathbf {v}}}\), Lemma 3.4 and Lemma 2.8, we get

We also obtain

Analogously, we obtain

Based on the above discussion, we rewrite (3.37) in the following form

Using the Lipschitz condition for F and Lemma 2.1, we have

Therefore, we can write (3.56) as

where

Using Taylor expansion, we have

We also find

Combining the above results, we have

By the stability of the forward Euler scheme, we obtain

\(\square \)

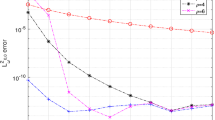

4 Numerical illustration

In this section, we present numerical results that illustrate the theoretical results. In all examples, we use n collocation subintervals of length 1/n. All computations were carried out in Mathematica 9.0.
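The orders predicted by Theorems 2.2, 3.6 and 3.7 can be checked against errors on successively refined meshes. The sketch below is a small helper for the experimental order of convergence, \(\log(e_{n}/e_{n'})/\log(n'/n)\). The function name is hypothetical. The check uses synthetic errors of the form \(C h^3\), not data from this paper.

```python
import math

def observed_orders(ns, errors):
    """Experimental orders log(e_k / e_{k+1}) / log(n_{k+1} / n_k) for
    errors measured on meshes with n_k subintervals of length 1/n_k."""
    return [math.log(errors[k] / errors[k + 1]) / math.log(ns[k + 1] / ns[k])
            for k in range(len(ns) - 1)]

# synthetic check: errors behaving exactly like C * h**3 with h = 1/n
ns = [8, 16, 32, 64]
errs = [0.5 * (1.0 / n) ** 3 for n in ns]
print(observed_orders(ns, errs))   # each entry equals 3 up to rounding
```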

Example 4.1

To verify Theorem 3.6, we consider the following FVID equation

In this example, we take \(\alpha =1,\beta =0\) and \(I:=[0,1]\), and a(t) is chosen so that the exact solution is \(y(t)=\exp (2t)\). In Tables 1 and 2, we choose \(m=2\) and \(m=3\), respectively, with equidistant \(\rho _i\,(i=0,\ldots ,m+1)\). In Table 3, we choose \(m=3\) and \(\{\rho _0,\rho _1,\rho _2,\rho _3\}=\{0,0.15,0.80,1\}\).

Example 4.2

We now consider the following nonlinear case

We take \(I=[0,1]\), and a(t) is chosen so that the exact solution is \(y(t)=\cos (t)\). In Table 4, we choose \(\alpha =1,\beta =0\) and \(m=4\). In Tables 5 and 6, we choose \(m=2\) and \(\alpha =\beta =1\). In Tables 4 and 5, the \(\rho _i\,(i=0,\ldots ,m+1)\) are equidistant, and in Table 6 we choose \(\{\rho _0,\rho _1,\rho _2,\rho _3\}=\{0,0.2,0.7,1\}\). This example illustrates Theorem 3.7.

Example 4.3

We now present numerical results for Algorithm 2. In this example, Eq. (1.1) takes the form

on the interval [0, 1], where a(t) is chosen so that the exact solution is \(y(t)=\exp (-t)\). Tables 7, 8 and 9 compare the results of the improved method with those of the collocation method, where \(e^*\) denotes the error of the improved collocation method. Table 7 is obtained with \(\alpha =1,~\beta =0,~m=2,~ \{\rho _0,\rho _1,\rho _2,\rho _3\}=\{0,0.2,0.7,1\}\). For Table 8, we use \(\alpha =1,~\beta =0,~m=3,~\rho _i=i/4~(i=0,\ldots ,4)\), and for Table 9, \(\alpha =\beta =1,~m=4,~\rho _i=i/5~(i=0,\ldots ,5)\).

Example 4.4

Consider the FVID equation

where

The exact solution of this problem is \(y(t)=t^2\). We choose \(m=2\) with equidistant \(\rho _i\,(i=0,\ldots ,m+1)\). In Table 10, the improved collocation method is compared with the methods of Siraj-ul-Islam et al. (2014), Babolian et al. (2009) and Maleknejad et al. (2011). Here 1/n is the length of the subintervals. The results show that, for this example, the proposed method is more accurate than the other methods.

5 Conclusion

In this paper, we studied the deviation of the error for linear and nonlinear first-order FVID equations. We showed that the deviation of the error estimate is of order at least \(m+1\), that is, \(\mathcal {O}(h^{m+1})\), where m is the degree of the piecewise polynomial. We also improved the piecewise polynomial collocation method by using the defect correction principle and the deviation of the error estimate. The numerical examples confirm the theoretical results. Based on these theoretical and numerical results, the improved method can be applied to linear and nonlinear first-order Fredholm–Volterra integro-differential equations.

References

Athavale P, Tadmor E (2011) Integro-differential equations based on \((BV, L^1)\) image decomposition. SIAM J Imaging Sci 4(1):300–312

Auzinger W, Koch O, Saboor Bagherzadeh A (2014) Error estimation based on locally weighted defect for boundary value problems in second order ordinary differential equations. BIT Numer Math 54:873–900

Babolian E, Masouri Z, Hatamzadeh-Varmazyar S (2009) Numerical solution of nonlinear Volterra–Fredholm integro-differential equations via direct method using triangular functions. Comput Math Appl 58:239–247

Bohmer K, Hemker P, Stetter HJ (1984) The defect correction approach. Comput Suppl 5:1–32

Boor CD, Swartz B (1973) Collocation at Gaussian points. SIAM J Numer Anal 10:582–606

Brunner H (2004) Collocation methods for Volterra integral and related functional differential equations. Cambridge University Press, Cambridge

Chen K, Fairag F, Al-Mahdi A (2016) Preconditioning techniques for an image deblurring problem. Numer Linear Algebra Appl 23(3):570–584

Daşcioğlu AA, Sezer M (2005) Chebyshev polynomial solutions of systems of higher-order linear Fredholm–Volterra integro-differential equations. J Franklin Inst 342:688–701

Doroshenko J, Dulkin L, Salakhutdinov V, Smetanin Y (2011) Principle and method of image recognition under diffusive distortions of image. In: International conference on pattern recognition and machine intelligence, 2011 Jun 27. Springer, Berlin, Heidelberg, pp 130–135

Fidlin A (2005) Nonlinear oscillations in mechanical engineering. Springer, Berlin

Hangelbroek RJ, Kaper HG, Leaf GK (1977) Collocation methods for integro-differential equations. SIAM J Numer Anal 14:377–390

Huang HY, Jia CY, Huan ZD (2009) On weak solutions for an image denoising–deblurring model. Appl Math Ser B 24(3):269–281

Maleknejad K, Basirat B, Hashemizadeh E (2011) Hybrid Legendre polynomials and block-pulse functions approach for nonlinear Volterra–Fredholm integro-differential equations. Comput Math Appl 61:2821–2828

Moura L, Darwazeh I (2005) Introduction to linear circuit analysis and modelling: from DC to RF. Newnes, Oxford

Parvaz R, Zarebnia M, Saboor Bagherzadeh A (2016) Deviation of the error estimation for second order Fredholm–Volterra integro differential equations. Math Model Anal 21(6):719–740

Reutskiy SYu (2016) The backward substitution method for multipoint problems with linear Volterra–Fredholm integro-differential equations of the neutral type. J Comput Appl Math 296:724–738

Saboor Bagherzadeh A (2011) Defect-based error estimation for higher order differential equations. PhD thesis, Vienna University of Technology

Siraj-ul-Islam, Aziz I, Al-Fhaid AS (2014) An improved method based on Haar wavelets for numerical solution of nonlinear integral and integro-differential equations of first and higher orders. J Comput Appl Math 260:449–469

Stetter HJ (1978) The defect correction principle and discretization methods. Numer Math 29:425–443

Stoer J, Bulirsch R (2002) Introduction to numerical analysis, 3rd edn. Springer, Berlin

Volk W (1988) The iterated Galerkin methods for linear integro differential equations. J Comput Appl Math 21:63–74

Yogi Goswami D (2004) The CRC handbook of mechanical engineering, 2nd edn. CRC Press, Boca Raton

Ethics declarations

Conflict of interest

Authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

About this article

Cite this article

Parvaz, R., Zarebnia, M. & Bagherzadeh, A.S. An improved algorithm based on deviation of the error estimation for first-order integro-differential equations. Soft Comput 23, 7055–7065 (2019). https://doi.org/10.1007/s00500-018-3348-x

DOI: https://doi.org/10.1007/s00500-018-3348-x