Abstract

We investigate efficient, asymptotically correct a posteriori error estimates for the numerical approximation of two-point boundary value problems for second order ordinary differential equations by piecewise polynomial collocation methods. Our error indicators are based on the defect of the collocation solution with respect to an associated exact difference scheme and on backsolving with a cheap, low-order finite-difference scheme. We prove a high order of asymptotic correctness of this error indicator and illustrate the theoretical analysis by numerical examples.



1 Introduction

This paper is devoted to efficient and accurate a posteriori error estimation for numerical approximations to boundary value problems (BVPs) for second order ordinary differential equations (ODEs). We consider the class of BVPs

$$\begin{aligned} u''(t) = f\big (t,u(t),u'(t)\big ), \quad t \in [a,b], \end{aligned}\tag{1.1a}$$

with general linear boundary conditions

We assume that the problem is well-posed, with Lipschitz continuous right-hand side and a sufficiently smooth solution \( u(t) \).

Problem (1.1) can be cast into the standard form of a system of first-order ODEs, and techniques developed for the first-order case may then be applied (cf. e.g. [5]). The alternative, which we pursue here, is direct discretization, since we explicitly exploit it in the design and analysis of a method for estimating the global error. As we shall see, this estimate is particularly accurate on arbitrary meshes. The present paper is of a theoretical nature; more practical questions, such as adaptive mesh selection and comparison with other established adaptive strategies, are not addressed. However, it has been demonstrated in [9] that direct treatment of the second-order problem promises computational advantages, particularly with respect to adaptive mesh selection, and the conditioning may also be favorable in some situations, see [13].

A preliminary version of this analysis was given in the PhD thesis of the third author [18]. In particular, we consider asymptotically correct global error estimators, designed for the purpose of practical mesh adaptation, for given piecewise polynomial collocation solutions. This continues the work in [5, 6, 15], where related techniques for first order ODE systems were analyzed. Our particular focus on collocation approximations is motivated by their satisfactory and robust performance for large classes of ODE problems, see for instance [1–3, 14].

A collocation solution is a piecewise polynomial function whose defect (i.e., its pointwise residual with respect to the given ODE) is well-defined and vanishes at the collocation nodes. The overall behavior of the defect is a quality measure which may be used for mesh adaptation, but it yields no direct indication of the behavior of the global error. In [20] it was proposed, in a general setting, to combine defect computation with a backsolving procedure based on an auxiliary, simple finite-difference scheme (SDS) in order to estimate the global error. The heuristic motivation for this approach is the well-known principle of defect correction [20]. However, already for first order ODEs it turns out that the precise definition of the defect and its interplay with the auxiliary scheme are essential for the successful performance of defect correction algorithms, see, for instance, [3, 7, 8, 11, 15] and the references therein, and in particular [4, 5].

The concept of exact difference schemes (EDS) for ODEs is very useful in this context. An EDS is a system of difference equations involving integral terms obtained by locally integrating the given ODE over a grid, which is exactly satisfied by the solution of the ODE. This serves as the conceptual basis for the design of high order schemes. Detailed material on EDS and their direct or iterative computational realization can for example be found in [8, 10, 12, 16, 19]. Here, in contrast, we are using an appropriately designed EDS for defect computation only, i.e., the defect of a given numerical solution will be defined as its residual with respect to the EDS. In practice this is realized via sufficiently accurate quadrature.

1.1 Overview

The analysis of a defect-based global error estimate in the context of first-order nonlinear regular problems was given in [5]. It was shown there that for a collocation method with stage order \( m \) on a mesh of width \( \mathcal {O}(h) \), the error of the estimate (the difference between the global error and its estimate) is \(\mathcal {O}(h^{m+1})\).

In the case of second order ODEs, the analysis of the error estimator is significantly more involved. Therefore, for the details of this analysis, we restrict ourselves to a standard class of scalar second order semilinear ODEs with Dirichlet boundary conditions, see Sect. 3. The focus of our analysis is on robustness with respect to nonequidistant grids and on the particularly high asymptotic quality of the estimator. Indeed, it turns out that the deviation of the error estimator for a collocation method of order \(m\) is at least of order \(m+2\) due to a supraconvergence effect, and it may be even higher in special situations. The approach is applicable well beyond the setting presented in this paper; more general BVPs, however, await closer investigation, see the discussion in Sect. 7.3.

Remark 1.1

In our analysis we assume that (1.1) is well-posed and that the methods considered (a collocation method and a simple auxiliary finite-difference scheme) are stable. We do not explicitly recapitulate the standard stability arguments for these schemes, which are available in the literature. The stability and convergence arguments given in [2], for instance, are natural in the sense that the stability of the discrete schemes is directly related to the conditioning of the given BVP; see [2, Section 5].

However, in order to obtain sharp estimates for the deviation of the advocated global error estimate, we will make use of refined finite-difference stability estimates based on the structure of Green’s function of the (linearized) BVP, see Sect. 3.3.

2 Error estimation based on EDS defect: general setting

2.1 Design of an a posteriori error estimate

In an abstract, informal setting, the defect-based error estimation procedure can be described as follows:

- Let $$\begin{aligned} L\,u = F(u) \end{aligned}\tag{2.1a}$$ represent the given ODE problem, with exact solution \( u\). Here, \( L \) is to be identified with the leading, explicit linear part of the given differential operator.

- An EDS is a discrete version of (2.1a) in the form of a set of finite-difference equations $$\begin{aligned} L_{\tiny \Delta }u = {\mathcal {I}}(F(u)), \end{aligned}\tag{2.1b}$$ which is exactly satisfied by the solution \( u\) of (2.1a). Here, \( L_{\tiny \Delta }\) is a discretization of \( L \) over a given grid \( \Delta \), and \( {\mathcal {I}}(\cdot ) \) is a linear operator, typically defined via weighted local integral means of \( F(u) \). For the computational realization, \( {\mathcal {I}}(\cdot ) \) is replaced by a higher-order quadrature approximation \( {\mathcal {Q}}(\cdot ) \).

- Furthermore, let \( {\widehat{u}}\) be defined as the solution of an auxiliary (discrete) problem $$\begin{aligned} L_{\tiny \Delta }{\widehat{u}}= {\widehat{F}}({\widehat{u}}), \end{aligned}\tag{2.2}$$ which is chosen as a simple, stable, low-order finite-difference approximation (SDS) to (2.1a) over the grid \( \Delta \). Note that the operators \( L_{\tiny \Delta }\) in (2.1b) and (2.2) are assumed to be identical.

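Now, for a given approximation \( {\widetilde{u}}\approx u\) (in our case computed by a high-order collocation method), we consider its (discrete) defect

$$\begin{aligned} d := L_{\tiny \Delta }{\widetilde{u}}- {\mathcal {Q}}(F({\widetilde{u}})), \end{aligned}\tag{2.3a}$$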

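such that \( {\widetilde{u}}\) is an exact solution of the ‘neighboring problem’

$$\begin{aligned} L_{\tiny \Delta }{\widetilde{u}}= {\mathcal {Q}}(F({\widetilde{u}})) + d. \end{aligned}\tag{2.3b}$$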

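We approximate (2.3b) by its SDS analog,

$$\begin{aligned} L_{\tiny \Delta }\widehat{\widetilde{u}}= {\widehat{F}}(\widehat{\widetilde{u}}) + d. \end{aligned}\tag{2.4}$$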

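For sufficiently small \( d\), we expect

$$\begin{aligned} \widehat{\widetilde{u}}- {\widetilde{u}}\approx {\widehat{u}}- u, \end{aligned}\tag{2.5a}$$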

i.e., the known error of the SDS approximation \( \widehat{\widetilde{u}}\) of the neighboring problem (2.3b) serves as an estimate for the unknown error of the SDS approximation \( {\widehat{u}}\) to the original problem (2.1) over the grid \( \Delta \). Equivalently,

$$\begin{aligned} \varepsilon := \widehat{\widetilde{u}}- {\widehat{u}}\approx {\widetilde{u}}- u = e, \end{aligned}\tag{2.5b}$$

i.e., the left-hand side \( \varepsilon \) of (2.5b), computed at low cost compared to the typical effort for computing \( {\widetilde{u}}\), provides an estimate for the error \( e = {\widetilde{u}}- u\).

Remark 2.1

In the concrete setting which we consider in the sequel, two different grids are involved (see Sects. 3, 4): a coarse grid \( {\Delta ^N}\) which corresponds to \( N \) collocation intervals, and a fine grid \( \Delta \) comprising \( {\Delta ^N}\) together with all interior collocation nodes. The auxiliary scheme (2.2) operates on the fine grid \( \Delta \).

2.2 How to analyze the deviation of the error estimate

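Let

$$\begin{aligned} {\theta }:= e - \varepsilon \end{aligned}\tag{2.6}$$

denote the deviation of the error estimate. For the analysis of the asymptotic behavior of \( {\theta }\) we proceed as follows. From (2.1b) and (2.3b),

$$\begin{aligned} L_{\tiny \Delta }e = {\mathcal {Q}}(F({\widetilde{u}})) - {\mathcal {I}}(F(u)) + d. \end{aligned}\tag{2.7}$$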

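On the other hand, from (2.2) and (2.4),

$$\begin{aligned} L_{\tiny \Delta }\varepsilon = {\widehat{F}}(\widehat{\widetilde{u}}) - {\widehat{F}}({\widehat{u}}) + d. \end{aligned}\tag{2.8}$$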

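Subtracting (2.8) from (2.7), the defect \( d \) cancels out, and we obtain

$$\begin{aligned} L_{\tiny \Delta }{\theta }&= \big [{\widehat{F}}({\widetilde{u}}) - {\widehat{F}}(u)\big ] - \big [{\widehat{F}}(\widehat{\widetilde{u}}) - {\widehat{F}}({\widehat{u}})\big ] \\&\quad + \big [({\mathcal {Q}}F-{\widehat{F}})({\widetilde{u}}) - ({\mathcal {Q}}F-{\widehat{F}})(u)\big ] + ({\mathcal {Q}}-{\mathcal {I}})(F(u)). \end{aligned}\tag{2.9}$$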

Relation (2.9) is a nonlinear difference equation of SDS type for \( {\theta }\). In order to show that \( \varepsilon \) is asymptotically correct, i.e., that \( {\theta }\) is of higher order than \( e \) itself, one uses quasilinearization combined with stability arguments and, in addition, estimates the asymptotic behavior of both contributions to the inhomogeneity in (2.9):

- (i) the difference \( ({\mathcal {Q}}F-{\widehat{F}})({\widetilde{u}}) - ({\mathcal {Q}}F-{\widehat{F}})(u) \),

- (ii) the quadrature error \( ({\mathcal {Q}}-{\mathcal {I}})(F(u)) = ({\mathcal {Q}}-{\mathcal {I}})(L\,u)\).

Assuming sufficient smoothness of the problem data and of \( u\), (ii) indicates the required order for the quadrature formula \( {\mathcal {Q}}_{\tiny \Delta }(\cdot ) \). On the other hand, (i) is typically determined by the asymptotic behavior of the error \( e \) and some of its lower derivatives. This has to be analyzed in detail to ensure that (i) is of higher order than the error \( e \) itself.

These relations become particularly simple for linear problems, \( F(u) = H u + q \), where \(H\) denotes the linear part of the affine operator \( F \). In this case, \( \varepsilon \) can be directly obtained by solving the SDS

Using an analogous notation for \( {\widehat{F}}_{\tiny \Delta }\,\) we obtain from (2.9):

The analysis in Sect. 6 will be based on (2.11) and (2.9), respectively.
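To make the procedure of Sect. 2.1 concrete, the following Python/NumPy sketch runs it for a linear model problem \( u'' = c_1 u' + c_0 u + q \) with \( c_1(t)=t \), \( c_0 \equiv 1 \) and exact solution \( u(t)=\sin (\pi t) \), on a nonuniform grid whose steps alternate between \( h \) and \( 2h \). Everything concrete here is an illustrative assumption and not the authors' code:

- the grid notation \( h_\ell = t_\ell - t_{\ell -1} \), \( \overline{\delta }_\ell = (h_\ell + h_{\ell +1})/2 \), \( \alpha _\ell = h_\ell /\overline{\delta }_\ell \), \( \beta _\ell = h_{\ell +1}/\overline{\delta }_\ell \), chosen to be consistent with the hat kernel of Sect. 5.1;
- the quadrature \( {\mathcal {Q}}_\ell \), which here is a piecewise Gauss-Legendre rule for the hat-weighted integral rather than the interpolatory formulas of Sect. 5.1;
- the approximation \( {\widetilde{u}}\), a hypothetical stand-in (exact solution plus a smooth perturbation) for a collocation solution.

In this linear setting we take \( \varepsilon \) as the solution of \( L_{\tiny \Delta }\varepsilon = {\widehat{H}}_{\tiny \Delta }\varepsilon + d \), where \( {\widehat{H}}_{\tiny \Delta }\) is the centered discretization of \( H \).

```python
import numpy as np

def alternating_grid(n_pairs):
    """Grid on [0, 1] whose steps alternate h, 2h, h, 2h, ... (h = 1/(3*n_pairs))."""
    h = 1.0 / (3 * n_pairs)
    return np.concatenate(([0.0], np.cumsum(np.tile([h, 2 * h], n_pairs))))

def hat_quadrature(w, t, n_gauss=8):
    """Q_k(w) ~ I_k(w) = int K_k(xi) w(t_k + dbar_k xi) dxi at all interior points (piecewise Gauss)."""
    x, wg = np.polynomial.legendre.leggauss(n_gauss)
    h = np.diff(t)
    out = np.empty(len(t) - 2)
    for k in range(1, len(t) - 1):
        dbar = 0.5 * (h[k - 1] + h[k])
        al, be = h[k - 1] / dbar, h[k] / dbar
        xl, xr = 0.5 * al * (x - 1.0), 0.5 * be * (x + 1.0)   # Gauss nodes on [-al, 0] and [0, be]
        out[k - 1] = (0.5 * al * np.sum(wg * (1.0 + xl / al) * w(t[k] + dbar * xl))
                      + 0.5 * be * np.sum(wg * (1.0 - xr / be) * w(t[k] + dbar * xr)))
    return out

# Linear model problem u'' = c1 u' + c0 u + q, u(0) = u(1) = 0, exact solution u = sin(pi t)
def c1(t): return t
def c0(t): return 1.0 + 0.0 * t
def u(t): return np.sin(np.pi * t)
def du(t): return np.pi * np.cos(np.pi * t)
def q(t): return -np.pi**2 * u(t) - c1(t) * du(t) - c0(t) * u(t)

# Hypothetical approximation u_tilde (stand-in for a collocation solution) and its derivative
delta = 1e-2
def ut(t): return u(t) + delta * t * (1.0 - t)
def dut(t): return du(t) + delta * (1.0 - 2.0 * t)
def F_ut(t): return c1(t) * dut(t) + c0(t) * ut(t) + q(t)   # F(u_tilde) as a function of t

t = alternating_grid(20)
ti, h = t[1:-1], np.diff(t)
hl, hr = h[:-1], h[1:]
dbar = 0.5 * (hl + hr)

v = ut(t)
L_ut = ((v[2:] - v[1:-1]) / hr - (v[1:-1] - v[:-2]) / hl) / dbar   # (L_Delta u_tilde) at interior points
d = L_ut - hat_quadrature(F_ut, t)                                 # EDS defect, cf. (2.3a)

# Backsolve L_Delta eps - H_Delta eps = d with eps_0 = eps_n = 0 (centered H_Delta)
M = (np.diag(-(1.0 / hl + 1.0 / hr) / dbar - c0(ti))
     + np.diag(1.0 / (hl[1:] * dbar[1:]) + c1(ti[1:]) / (2.0 * dbar[1:]), -1)
     + np.diag(1.0 / (hr[:-1] * dbar[:-1]) - c1(ti[:-1]) / (2.0 * dbar[:-1]), 1))
eps = np.linalg.solve(M, d)

e = ut(ti) - u(ti)          # true error at interior grid points
theta = e - eps             # deviation of the estimate
print(np.max(np.abs(theta)) / np.max(np.abs(e)))
```

We would expect the printed ratio \( \Vert {\theta }\Vert /\Vert e \Vert \) to be small. Under grid refinement it should decrease roughly like \( h^2 \): on this grid the inhomogeneity of the equation for \( {\theta }\) is an \( \mathcal {O}(h) \) difference quotient, and it is damped in the same way as in the supraconvergence argument of Sect. 3.3.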

Remark 2.2

If these considerations are repeated with the ‘classical’ pointwise defect \( L\,{\widetilde{u}}- F({\widetilde{u}}) \) instead of the modified EDS defect (2.3a), an additional term arises which influences the quality of \( \varepsilon \), namely \( (L_{\tiny \Delta }- L)(e) \). This term depends on higher derivatives of the error \( e \), and in typical applications it does not show the desired asymptotic behavior. We also note that for a collocation solution \( {\widetilde{u}}\), the pointwise defect vanishes at the collocation nodes and thus provides no information there.

Another feature of the EDS formulation is its robustness with respect to nonequidistant grids, e.g., for the case of Gaussian collocation points. See also the discussion in [5, 7] for the case of first order ODE systems, where the quadrature realization of the EDS is closely related to a higher-order implicit Runge-Kutta scheme. This way of estimating the global error for first-order problems has also been analyzed for the case of singular BVPs, see [6, 15], and it is implemented in the Matlab package sbvp, an adaptive solver for first order boundary value problems, see [3].

3 Second order boundary value problems, SDS and EDS

3.1 Problem class

For the problem class (1.1), a complete analysis will be given for the case of Dirichlet boundary conditions

$$\begin{aligned} u(a) = u_a, \quad u(b) = u_b. \end{aligned}\tag{3.1}$$

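We will first consider well-posed linear Dirichlet problems

$$\begin{aligned} u''(t) = c_1(t)\,u'(t) + c_0(t)\,u(t) + q(t) =: (H u)(t) + q(t), \quad t \in [a,b], \end{aligned}\tag{3.2a}$$

$$\begin{aligned} u(a) = u_a, \quad u(b) = u_b, \end{aligned}\tag{3.2b}$$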

with bounded, smooth data functions. It will then be shown how this analysis extends to nonlinear problems (1.1a). The treatment of more general boundary conditions (1.1b) will be briefly discussed from the algorithmic point of view in Sect. 7.

For \( v \in C[a,b] \), as usual we denote

In the following subsections we introduce some further notation and briefly recall relevant facts about finite-difference approximations to be used later on.

3.2 Simple finite-difference scheme (SDS)

Consider a grid

For an interior grid point \( t_\ell \), we denote

Then,

Furthermore we denote

We assume that \( \Delta \) is quasiuniform, i.e.,

with a moderate-sized constant \( K \), see [2, Assumption 5.92]. On \( \Delta \), we define corresponding grid functions

The max-norm on the space of grid functions is denoted by

For a continuous function \( v \in C[a,b]\), we denote by \( R_{\tiny \Delta }\) its pointwise projection onto the space of grid functions,

We also denote \( v(t_\ell ) \) simply by \( v_\ell \), and \( f(t_\ell ,\cdots ) \) by \( f_\ell (\cdots ) \). The second difference quotient

is an approximation to \( (L\,v)(t_\ell ) \). Furthermore, let

denote the centered difference approximation to \( v'(t_\ell ) \). On nonequidistant grids, both (3.6a) and (3.6b) are only first-order accurate; on equidistant grids they are second-order accurate.

The standard compact, centered finite-difference approximation (SDS) to (1.1a) reads

Solution of (3.7) subject to boundary conditions (3.2b) provides a grid function

with \( {\widehat{u}}_0 = u_a \), \( {\widehat{u}}_n = u_b \), and \( {\widehat{u}}_\ell \approx u(t_\ell ) \).

For the linear BVP (3.2), the SDS (3.7) takes the form

where \({\widehat{H}}_{\tiny \Delta }v_{\tiny \Delta }\) denotes the centered discretization of \( H(v)(t_\ell ) \), i.e.,

We assume that the SDS discretization is stable with respect to \( \Vert \cdot \Vert _{\tiny \Delta }\) for sufficiently small \( h \). For a quasiuniform grid \( \Delta \), stability typically depends on \( \Delta \) only in an uncritical way.
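For a nonlinear right-hand side, the SDS (3.7) is a nonlinear algebraic system for the interior values. A minimal Newton sketch in Python/NumPy follows. It assumes the forms \( (L_{\tiny \Delta }v)_\ell = \big ((v_{\ell +1}-v_\ell )/h_{\ell +1}-(v_\ell -v_{\ell -1})/h_\ell \big )/\overline{\delta }_\ell \) and \( (\partial _{\tiny \Delta }v)_\ell = (v_{\ell +1}-v_{\ell -1})/(2\overline{\delta }_\ell ) \) for (3.6a) and (3.6b), with \( h_\ell = t_\ell -t_{\ell -1} \) and \( \overline{\delta }_\ell = (h_\ell +h_{\ell +1})/2 \). The user is assumed to supply the partial derivatives \( f_u \) and \( f_{u'} \) (named `f_u`, `f_v` in the code). The test problem \( u''=-(u')^2 \), \( u(0)=0 \), \( u(1)=\ln 2 \), with solution \( u(t)=\ln (1+t) \), the alternating grid, and the step-size stopping rule are all illustrative choices.

```python
import numpy as np

def alternating_grid(n_pairs):
    """Grid on [0, 1] whose steps alternate h, 2h, h, 2h, ... (h = 1/(3*n_pairs))."""
    h = 1.0 / (3 * n_pairs)
    return np.concatenate(([0.0], np.cumsum(np.tile([h, 2 * h], n_pairs))))

def sds_newton(f, f_u, f_v, ua, ub, t, tol=1e-10, max_iter=25):
    """Solve (L_Delta u)_l = f(t_l, u_l, (d_Delta u)_l), u_0 = ua, u_n = ub, by Newton's method."""
    h = np.diff(t)
    hl, hr = h[:-1], h[1:]
    dbar = 0.5 * (hl + hr)
    ti, k = t[1:-1], np.arange(len(t) - 2)
    L = np.zeros((len(ti), len(t)))          # interior rows, all grid columns
    D = np.zeros((len(ti), len(t)))
    L[k, k], L[k, k + 1], L[k, k + 2] = 1 / (hl * dbar), -(1 / hl + 1 / hr) / dbar, 1 / (hr * dbar)
    D[k, k], D[k, k + 2] = -1 / (2 * dbar), 1 / (2 * dbar)   # centered first difference
    u = ua + (ub - ua) * (t - t[0]) / (t[-1] - t[0])         # linear initial guess
    for _ in range(max_iter):
        v = D @ u
        res = L @ u - f(ti, u[1:-1], v)
        # Jacobian of the residual with respect to the interior values
        J = L[:, 1:-1] - np.diag(f_u(ti, u[1:-1], v)) - f_v(ti, u[1:-1], v)[:, None] * D[:, 1:-1]
        step = np.linalg.solve(J, res)
        u[1:-1] -= step
        if np.max(np.abs(step)) < tol:
            return u
    raise RuntimeError("Newton iteration did not converge")

def f(t, u, v): return -v**2
def f_u(t, u, v): return np.zeros_like(u)
def f_v(t, u, v): return -2.0 * v

errs = []
for n_pairs in (20, 40):
    t = alternating_grid(n_pairs)
    uh = sds_newton(f, f_u, f_v, 0.0, np.log(2.0), t)
    errs.append(np.max(np.abs(uh - np.log1p(t))))
print(errs, errs[0] / errs[1])
```

On this grid we would expect an error ratio close to 4. That is second-order (supra)convergence in the sense of (3.18), even though each difference quotient is only first-order accurate.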

3.3 Discrete Green’s function and supraconvergence of the SDS

Since (3.6a) and (3.6b) are only first-order accurate in the nonequidistant case, the local truncation error of the SDS (3.7) is only \( \mathcal {O}(h) \). Nevertheless, it is well known that the global error of the resulting approximation is \(\mathcal {O}(h^2)\), a phenomenon called supraconvergence. This has been analyzed in [17]; see also the references therein.

We do not repeat a proof of supraconvergence in detail here, but we list the essential technical tools, since in Sect. 6 we rely on related techniques; in particular, we will show that the global error estimate defined in Sect. 5 is also ‘supra-accurate’.
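Supraconvergence is easy to observe numerically. The Python/NumPy sketch below takes the following as its reading of (3.6a):

\( (L_{\tiny \Delta }v)_\ell = \big ((v_{\ell +1}-v_\ell )/h_{\ell +1} - (v_\ell - v_{\ell -1})/h_\ell \big )/\overline{\delta }_\ell \), with \( h_\ell = t_\ell - t_{\ell -1} \) and \( \overline{\delta }_\ell = (h_\ell + h_{\ell +1})/2 \).

The grid, whose steps alternate between \( h \) and \( 2h \), and the test problem \( u''=r \) with \( u(t)=\sin (\pi t) \) are our own choices. On such a grid the local truncation error contains the term \( (h_{\ell +1}-h_\ell )\,u'''(t_\ell )/3 = \pm h\,u'''(t_\ell )/3 \), so it is only first order, while the global error should still behave like \( h^2 \).

```python
import numpy as np

def alternating_grid(n_pairs):
    """Grid on [0, 1] whose steps alternate h, 2h, h, 2h, ... (h = 1/(3*n_pairs))."""
    h = 1.0 / (3 * n_pairs)
    return np.concatenate(([0.0], np.cumsum(np.tile([h, 2 * h], n_pairs))))

def second_difference_matrix(t):
    """Matrix of (L_Delta v)_l at interior points, acting on interior values (v_0 = v_n = 0)."""
    h = np.diff(t)
    hl, hr = h[:-1], h[1:]                  # h_l and h_{l+1} at interior points
    dbar = 0.5 * (hl + hr)
    return (np.diag(-(1.0 / hl + 1.0 / hr) / dbar)
            + np.diag(1.0 / (hl[1:] * dbar[1:]), -1)
            + np.diag(1.0 / (hr[:-1] * dbar[:-1]), 1))

def sds_errors(n_pairs):
    """Max local truncation error and max global error of the SDS for u'' = r, u = sin(pi t)."""
    t = alternating_grid(n_pairs)
    u = np.sin(np.pi * t[1:-1])             # exact solution at interior points (zero at t = 0, 1)
    r = -np.pi**2 * u                       # right-hand side r = u''
    A = second_difference_matrix(t)
    lte = A @ u - r                         # local truncation error: O(h) on this grid
    err = np.linalg.solve(A, r) - u         # global error: O(h^2), i.e. supraconvergence
    return np.max(np.abs(lte)), np.max(np.abs(err))

lte1, err1 = sds_errors(20)
lte2, err2 = sds_errors(40)
print(f"LTE ratio {lte1 / lte2:.2f}, global error ratio {err1 / err2:.2f}")
```

When the grid is refined, we would expect the two printed ratios to be close to 2 and 4, respectively.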

We will make use of the following facts. Consider the simple Dirichlet problem

with solution

where \( G(t,\tau ) \) is Green’s function

The proof of the following lemma is straightforward.

Lemma 3.1

Consider an arbitrary interior grid point \(t_\ell \) and let \( g_{\Delta ;\ell }:=G(\cdot ,t_\ell )\), i.e., \( (g_{\Delta ;\ell })_k=G(t_k,t_\ell ) \). Then,

Discretization of (3.10a) according to (3.6a), i.e.,

leads to a system of \( n-1 \) difference equations for \( {\widehat{u}}_{\tiny \Delta }\), with \( {\widehat{u}}_0 = {\widehat{u}}_n = 0 \). As a consequence of Lemma 3.1, the inverse of this discrete system, i.e., its discrete Green’s function, is the exact pointwise restriction of \( G(t,\tau ) \) onto \( \Delta \times \Delta \). The following discrete solution representation is a direct consequence of this fact.
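This property can be checked numerically. The Python/NumPy sketch below makes the following assumptions:

- the Green's function of \( u''=r \), \( u(a)=u(b)=0 \) is \( G(t,\tau ) = (t-a)(\tau -b)/(b-a) \) for \( t\le \tau \) and \( (\tau -a)(t-b)/(b-a) \) for \( t\ge \tau \), so that \( u(t)=\int _a^b G(t,\tau )\,r(\tau )\,d\tau \);
- (3.6a) is the unscaled second difference quotient \( \big ((v_{\ell +1}-v_\ell )/h_{\ell +1} - (v_\ell - v_{\ell -1})/h_\ell \big )/\overline{\delta }_\ell \), with \( \overline{\delta }_\ell = (h_\ell + h_{\ell +1})/2 \).

With this scaling, the inverse of the matrix representing \( L_{\tiny \Delta }\) on the interior points should be \( \overline{\delta }_k\,G(t_\ell ,t_k) \). In other words, the discrete solution is \( {\widehat{u}}_\ell = \sum _k \overline{\delta }_k\,G(t_\ell ,t_k)\,r_k \), with \( \overline{\delta }_k \) acting as quadrature weights. We presume this is the content of Lemma 3.2, but the weighting is our assumption.

```python
import numpy as np

def second_difference_matrix(t):
    """Matrix of (L_Delta v)_l at interior points, acting on interior values (v_0 = v_n = 0)."""
    h = np.diff(t)
    hl, hr = h[:-1], h[1:]
    dbar = 0.5 * (hl + hr)
    return (np.diag(-(1.0 / hl + 1.0 / hr) / dbar)
            + np.diag(1.0 / (hl[1:] * dbar[1:]), -1)
            + np.diag(1.0 / (hr[:-1] * dbar[:-1]), 1))

def green(t, s, a=0.0, b=1.0):
    """Green's function of u'' = r, u(a) = u(b) = 0, so that u(t) = int_a^b G(t, s) r(s) ds."""
    return np.where(t <= s, (t - a) * (s - b), (s - a) * (t - b)) / (b - a)

rng = np.random.default_rng(0)
n = 12
t = np.linspace(0.0, 1.0, n + 1)
t[1:-1] += 0.3 / n * rng.uniform(-1.0, 1.0, n - 1)   # nonuniform, still strictly increasing

ti = t[1:-1]
dbar = 0.5 * (t[2:] - t[:-2])                          # (h_l + h_{l+1}) / 2 at interior points
A_inv = np.linalg.inv(second_difference_matrix(t))
G = green(ti[:, None], ti[None, :])                    # G[l, k] = G(t_l, t_k)
print(np.max(np.abs(A_inv - G * dbar[None, :])))       # difference at rounding level
```

The agreement is exact up to rounding, because \( G(\cdot ,t_k) \) is piecewise linear with a unit slope jump at \( t_k \). Every second difference therefore vanishes except the one centered at \( t_k \), which equals \( 1/\overline{\delta }_k \).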

Lemma 3.2

The unique solution \( {\widehat{u}}_{\tiny \Delta }\) of (3.11) is given by

In the remainder of this section we discuss the solution structure of an SDS with a special inhomogeneity, which provides the technical basis for supraconvergence proofs and for the analysis to be given in Sect. 6. In particular, we consider

with given \( z_k \) and \( r_\ell \). Using Lemma 3.2 and applying partial summation, the solution \( {\check{v}}_{\tiny \Delta }\) of (3.13a) with \( {\check{v}}_0={\check{v}}_n=0 \) can be written as

Since \( G(t,\tau ) \) is bounded and smooth away from the diagonal \( t=\tau \), this shows that an estimate

is valid. Furthermore, from (3.13b) we obtain

with

and

This shows that there exists a constant \( \mathcal {C} \) such that we also have

For a proof of supraconvergence of the SDS one makes use of (3.13), representing the local truncation error in the form of the right-hand side in (3.13a), i.e., as a difference quotient which is \( \mathcal {O}(h) \) plus an \( \mathcal {O}(h^2) \) term. This is combined with a stability argument for the full scheme.

In a way analogous to (3.13), the solution \({\check{v}}_{\tiny \Delta }\) of an SDS of the form

with \( {\check{v}}_0={\check{v}}_n=0 \), can be written as

resulting in estimates analogous to (3.13c) and (3.16).

As a result of these considerations, a perturbation argument combined with stability of the full SDS, involving some minor technicalities, implies supraconvergence of \( {\widehat{u}}_{\tiny \Delta }\) and \( (\partial _{\tiny \Delta }{\widehat{u}}_{\tiny \Delta })_{\tiny \Delta }\), that is,

3.4 Exact finite-difference scheme (EDS)

Definition 3.1

By

we denote the class of functions from \( C^1[t_{\ell -1},t_{\ell +1}] \) for which \( v'' \) is allowed to have a jump discontinuity at \( t=t_\ell \).

Lemma 3.3

For \( v \in {\widehat{C}}_2[t_{\ell -1},t_\ell ,t_{\ell +1}] \) and \( L_{\tiny \Delta }v \) defined in (3.6a), we have

with kernel

Proof

A routine argument based on integration by parts [12, 19]. \(\square \)

Lemma 3.3 is a special instance of an identity sometimes called the Marchuk or Chawla identity, which relates a general second order differential operator to its finite-difference analog via local weighted integral means.
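The identity of Lemma 3.3 can be tested numerically. Consistent with the kernel used in Sect. 5.1, the Python/NumPy sketch below assumes

\( (L_{\tiny \Delta }v)_\ell = \int _{-\alpha _\ell }^{\beta _\ell } K_\ell (\xi )\,v''(t_\ell + \overline{\delta }_\ell \xi )\,d\xi \),

with \( K_\ell (\xi ) = 1+\xi /\alpha _\ell \) on \( [-\alpha _\ell ,0] \), \( K_\ell (\xi ) = 1-\xi /\beta _\ell \) on \( [0,\beta _\ell ] \), \( \alpha _\ell = h_\ell /\overline{\delta }_\ell \), and \( \beta _\ell = h_{\ell +1}/\overline{\delta }_\ell \). The integral is evaluated by Gauss-Legendre quadrature on each half separately, so a jump of \( v'' \) at \( t_\ell \), as allowed in Definition 3.1, does not degrade accuracy. The second test function, \( v(t)=\max (t-t_\ell ,0)^2 \), belongs to \( {\widehat{C}}_2 \), and for it both sides equal \( \beta _\ell \).

```python
import numpy as np

def eds_integral(w, tl, hl, hr, n_gauss=20):
    """I_l(w) = int_{-alpha}^{beta} K_l(xi) w(t_l + dbar*xi) dxi, by Gauss-Legendre on each half."""
    dbar = 0.5 * (hl + hr)
    alpha, beta = hl / dbar, hr / dbar
    x, wg = np.polynomial.legendre.leggauss(n_gauss)
    xl, xr = 0.5 * alpha * (x - 1.0), 0.5 * beta * (x + 1.0)   # nodes in (-alpha, 0) and (0, beta)
    left = 0.5 * alpha * np.sum(wg * (1.0 + xl / alpha) * w(tl + dbar * xl))
    right = 0.5 * beta * np.sum(wg * (1.0 - xr / beta) * w(tl + dbar * xr))
    return left + right

def second_difference(v, tl, hl, hr):
    """(L_Delta v)_l from the values at t_l - hl, t_l, t_l + hr."""
    dbar = 0.5 * (hl + hr)
    return ((v(tl + hr) - v(tl)) / hr - (v(tl) - v(tl - hl)) / hl) / dbar

tl, hl, hr = 0.4, 0.05, 0.12                     # strongly nonuniform neighbourhood of t_l

# Smooth case: v = exp, v'' = exp
print(second_difference(np.exp, tl, hl, hr) - eds_integral(np.exp, tl, hl, hr))

# Function in C-hat_2: v' continuous, v'' jumps from 0 to 2 at t_l
def v_kink(t): return np.maximum(t - tl, 0.0) ** 2
def v_kink_2(t): return 2.0 * (t > tl)           # v'' away from t_l
print(second_difference(v_kink, tl, hl, hr), eds_integral(v_kink_2, tl, hl, hr))   # both approx. beta
```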

For functions \(w=w(t)\) we adopt the notation

with \( K_\ell \) from (3.19b). By locally integrating the ODE (1.1a), we see that, at all interior grid points \( t_\ell \), its solution \( u(t) \) exactly satisfies the EDS equations

Later on, (3.21) together with appropriate quadrature formulas \( {\mathcal {Q}}_\ell \approx {\mathcal {I}}_\ell \) will be used for defining a higher-order EDS-type defect of a given numerical solution, see Sect. 5.

4 Review of collocation methods

We recapitulate classical results for piecewise polynomial collocation methods for second order BVPs (1.1), see [2, Section 5]. For the numerical approximation we define a mesh

and set \( l_i := \tau _i-\tau _{i-1},\, i=1 \ldots N \). Here \( l_i = \mathcal {O}(h), \) with \( h \) defined in (3.5).

For collocation, \( m \) points are inserted in each subinterval \([\tau _i,\tau _{i+1}]\). This yields the (fine) grid

with given nodes

This grid \( \Delta \) contains \(n+1 = (m+1)N + 1\) points and is to be identified with \( \Delta \) from (3.3), with flattened indexing

Definition 4.1

Consider a quasiuniform grid \( \Delta \) as specified in (4.1a). A collocation solution \( {\widetilde{u}}(t):={\widetilde{u}}_i(t),~t \in [\tau _i,\tau _{i+1}],~i=0 \ldots N-1 \), of (1.1) is a continuously differentiable piecewise polynomial function of degree \( m+1 \) which satisfies (1.1a) at the \( m \) collocation points in each of the \( N \) subintervals, i.e.

and, in addition, the boundary conditions (1.1b). Here, \({\widetilde{u}}(t)\) and \({\widetilde{u}}'(t)\) are required to be continuous at the endpoints of the subintervals \( [\tau _i,\tau _{i+1}] \), but \( {\widetilde{u}}''(t) \) will have jump discontinuities at the \( \tau _i \). Thus, in the neighborhood of \( \tau _i \), \( {\widetilde{u}}(t) \) is of the type \( {\widehat{C}}_2[\tau _{i-1},\tau _i,\tau _{i+1}] \) in the sense of Definition 3.1.

Let

denote the collocation error. Under standard assumptions on the given BVP, a collocation solution is stable and convergent (see [2, Chapter 5]). The following theorem states a convergence result for problem (1.1a); its proof follows from the general convergence results in [2, Sect. 5.6].

Theorem 4.1

Assume that the given BVP is well-posed, i.e., it has a locally unique and sufficiently smooth solution \( u(t)\). For a collocation solution \({\widetilde{u}}(t)\) according to Definition 4.1, the following uniform estimates hold:

The higher derivatives satisfy

In the special case where \( m \) is odd and the nodes \( \rho _j \) are symmetrically distributed, i.e., \( \rho _j = \rho _{m+1-j} \), the following improved uniform estimates hold:

5 Construction of the error estimate

We design an a posteriori estimate for the global error \( e(t) \) of a given collocation solution \( {\widetilde{u}}(t) \). The construction principle was introduced in Sect. 2.1; we now apply it in our concrete setting. We mainly consider the case of Dirichlet conditions (3.1), where no defect occurs at the boundary. Remarks on handling more general boundary conditions are given in Sect. 7.

Let us describe the procedure and its algorithmic components in detail:

- The given approximation is the collocation solution \( {\widetilde{u}}(t) \), for which the error \( e(t) = {\widetilde{u}}(t) - u(t) \) is to be estimated.

- The (discrete) defect \( d_{\tiny \Delta }\) of \( {\widetilde{u}}\) is computed by inserting \( {\widetilde{u}}(t) \) into the EDS reformulation of the original problem [see (2.1b)], which has been introduced in Sect. 3.4. In the computational realization, the integral operators \( {\mathcal {I}}_\ell = {\mathcal {I}}_{i,j} \) are replaced by a set of higher-order quadrature formulas \( {\mathcal {Q}}_\ell = {\mathcal {Q}}_{i,j} \), see (2.3a) and Sect. 5.1 below.

- The auxiliary scheme (2.2) is the SDS (3.7) operating on the fine grid \( \Delta \) given by (4.1a). Its solution is denoted by \( {\widehat{u}}_{\tiny \Delta }= ({\widehat{u}}_0,{\widehat{u}}_1,\ldots ,{\widehat{u}}_{n-1},{\widehat{u}}_n) \), see (3.8).

- Solving the auxiliary scheme with the additional inhomogeneity \( d_{\tiny \Delta }\) [see (2.4)] gives another grid function \( \widehat{\widetilde{u}}_{\tiny \Delta }= \big (\widehat{\widetilde{u}}_0,\widehat{\widetilde{u}}_1,\ldots ,\widehat{\widetilde{u}}_{n-1},\widehat{\widetilde{u}}_n\big ) \).

With these settings, the (discrete) error estimator \( \varepsilon _{\tiny \Delta }\) is defined according to (2.5b) as

5.1 Quadrature formulas for defect computation

At each interior grid point \( t_\ell = t_{i,j} \in \Delta \), the exact EDS defect of \( {\widetilde{u}}\) reads [see (3.21)]

Here,

is the integral operator defined in (3.20), with kernel

from (3.19b). Practical approximation of integrals of the form (5.3) by interpolatory quadrature can be performed in a standard way. We consider two different cases:

- For the case where \( t_{i,j} \in (\tau _i,\tau _{i+1}) \) is an interior collocation node, we construct interpolatory quadrature formulas \( {\mathcal {Q}}_{i,j} \approx {\mathcal {I}}_{i,j} \) in the usual way via $$\begin{aligned} {\mathcal {I}}_{i,j}( w ) \approx {\mathcal {Q}}_{i,j}( w ) := \int \limits _{-\alpha _{i,j}}^{\beta _{i,j}} K_{i,j}(\xi )\,\pi _i\big (t_{i,j} + \overline{\delta }_{i,j}\,\xi \big )\,d\xi = \sum _{k=0}^{m+1} \omega _{j,k}\, w (t_{i,k}), \end{aligned}$$ where \(\pi _i\) is the local Lagrange interpolant of degree \(m+1\) to \(w\) at the nodes \( t_{i,0},\ldots ,t_{i,m+1} \).

- For the case where \( t_{i,0} = \tau _i \in (a,b) \) is an endpoint between two neighboring collocation subintervals (\( i=1 \ldots N-1 \)), we split the integral into two parts, $$\begin{aligned} {\mathcal {I}}_{i,0}( w )&= {\mathcal {I}}_{i,0}^{-}( w ) + {\mathcal {I}}_{i,0}^{+}( w ) \\&:= \int \limits _{-\alpha _{i,0}}^{0} \left( 1 + \frac{\xi }{\alpha _{i,0}} \right) w \big (\tau _i + \overline{\delta }_{i,0}\,\xi \big )\,d\xi + \int \limits _{0}^{\beta _{i,0}} \left( 1 - \frac{\xi }{\beta _{i,0}} \right) w \big (\tau _i + \overline{\delta }_{i,0}\,\xi \big )\,d\xi , \end{aligned}$$ and we approximate the two terms separately, using data from the left and right subintervals, respectively, in the same way as before. This gives $$\begin{aligned} {\mathcal {I}}_{i,0}( w ) \approx {\mathcal {Q}}_{i,0}( w )&= {\mathcal {Q}}_{i,0}^{-}( w ) + {\mathcal {Q}}_{i,0}^{+}( w ) \\&= \sum _{k=0}^{m+1} \omega _{0,k}^{-}\, w (t_{i-1,k}) + \sum _{k=0}^{m+1} \omega _{0,k}^{+}\, w (t_{i,k}) \end{aligned}$$ for \( i=1 \ldots N-1 \).

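Since the distribution of the collocation nodes is fixed [defined via \( \rho _j \in [0,1] \), see (4.1)], the coefficients \( \omega _{j,k} \) of the interior formulas do not depend on \( i \). The endpoint coefficients \( \omega _{0,k}^{\pm } \) depend on \( i \) only through the scaling factors \( \alpha _{i,0} \) and \( \beta _{i,0} \), i.e., through the length ratio of the two neighboring subintervals; they are independent of \( i \) if the coarse mesh is uniform. All reference coefficients can therefore be computed in a preprocessing phase. In this way, the defect of \( {\widetilde{u}}(t) \) is defined by the computable object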

at all interior grid points \( t_{i,j} \in \Delta \).
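The preprocessing of the interior weights \( \omega _{j,k} \) can be sketched as follows in Python/NumPy. The sketch works in the local variable \( \xi \) on a reference subinterval \( [0,1] \) with nodes \( 0=\rho _0<\rho _1<\cdots <\rho _m<\rho _{m+1}=1 \); we assume that \( \rho _0 \) and \( \rho _{m+1} \) are the subinterval endpoints.

Each weight is \( \omega _{j,k} = \int _{-\alpha }^{\beta } K(\xi )\,\ell _k(\xi )\,d\xi \). Here \( \ell _k \) is the Lagrange basis polynomial of degree \( m+1 \) for the nodes \( \xi _k = (\rho _k-\rho _j)/\overline{\delta }_j \), and \( \overline{\delta }_j \), \( \alpha \), \( \beta \) are formed from the neighboring nodes as in the hat-kernel setting above. These integrals are computed exactly by Gauss-Legendre quadrature on \( [-\alpha ,0] \) and \( [0,\beta ] \). The endpoint formulas \( \omega ^{\pm }_{0,k} \) could be treated analogously.

As a consistency check via Lemma 3.3, \( \sum _k \omega _{j,k}\,v''(\rho _k) \) must reproduce the second difference quotient of \( v \) whenever \( v'' \) is a polynomial of degree at most \( m+1 \).

```python
import numpy as np

def lagrange_basis(nodes, x):
    """B[k, i] = value at x[i] of the k-th Lagrange basis polynomial for `nodes`."""
    B = np.ones((len(nodes), len(x)))
    for k, xk in enumerate(nodes):
        for j, xj in enumerate(nodes):
            if j != k:
                B[k] *= (x - xj) / (xk - xj)
    return B

def hat_gauss_rule(alpha, beta, n=16):
    """Nodes/weights for int_{-alpha}^{beta} K(xi) p(xi) dxi (hat kernel K), exact for deg p <= 2n-2."""
    x, w = np.polynomial.legendre.leggauss(n)
    xl, xr = 0.5 * alpha * (x - 1.0), 0.5 * beta * (x + 1.0)
    wl = 0.5 * alpha * w * (1.0 + xl / alpha)
    wr = 0.5 * beta * w * (1.0 - xr / beta)
    return np.concatenate((xl, xr)), np.concatenate((wl, wr))

def interior_weights(rho):
    """omega[j, :] for the interior nodes j = 1..m; rho = (0, rho_1, ..., rho_m, 1)."""
    m = len(rho) - 2
    omega = np.zeros((m + 2, m + 2))            # rows 0 and m+1 (endpoints) are not filled here
    for j in range(1, m + 1):
        dbar = 0.5 * (rho[j + 1] - rho[j - 1])
        alpha = (rho[j] - rho[j - 1]) / dbar
        beta = (rho[j + 1] - rho[j]) / dbar
        xq, wq = hat_gauss_rule(alpha, beta)
        omega[j] = lagrange_basis((rho - rho[j]) / dbar, xq) @ wq
    return omega

# Example: m = 3 Gauss points plus the subinterval endpoints
m = 3
gauss = np.sort(0.5 * (np.polynomial.legendre.leggauss(m)[0] + 1.0))
rho = np.concatenate(([0.0], gauss, [1.0]))
omega = interior_weights(rho)

# Check via Lemma 3.3 with v = t^5 (v'' = 20 t^3 has degree 3 <= m + 1)
v, v2 = rho**5, 20.0 * rho**3
for j in range(1, m + 1):
    hl, hr = rho[j] - rho[j - 1], rho[j + 1] - rho[j]
    second_diff = ((v[j + 1] - v[j]) / hr - (v[j] - v[j - 1]) / hl) / (0.5 * (hl + hr))
    print(j, second_diff - omega[j] @ v2)
```

Each row of interior weights sums to one, since \( \int K\,d\xi = (\alpha +\beta )/2 = 1 \). The weights are also unchanged when \( [0,1] \) is mapped to a physical subinterval, because \( \alpha \), \( \beta \) and the \( \xi _k \) are scale invariant.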

Standard arguments show

for sufficiently smooth \( w=w(t) \). In the special case where \( m \) is odd and the nodes \( \rho _j \) are symmetrically distributed, i.e., \( \rho _j = \rho _{m+1-j} \), we have

In particular, we shall make use of (5.5) for \( w = u'' \).

6 Analysis of the error estimate

For simplicity of notation we write \( e,\,\varepsilon ,\,{\theta }\), etc. instead of \( R_{\tiny \Delta }(e),\,\varepsilon _{\tiny \Delta },\,{\theta }_{\tiny \Delta }\), etc., and we use the flattened index notation (4.2).

For the deviation \( {\theta }=e-\varepsilon \), an estimate of the form \( \Vert {\theta }\Vert _{\tiny \Delta }=\Vert e-\varepsilon \Vert _{\tiny \Delta }=\mathcal {O}(h^{m+1}) \) can be derived in a manner similar to [5] for the case of first-order systems. Here, the asymptotic behavior of the collocation error described in Theorem 4.1 is essential. In the sequel we prove that the error estimate is even supra-accurate, i.e., \( \Vert {\theta }\Vert _{\tiny \Delta }=\Vert e-\varepsilon \Vert _{\tiny \Delta }=\mathcal {O}(h^{m+2}) \). In particular, this implies that the error estimate is asymptotically correct even in the case where \( e = \mathcal {O}(h^{m+1}) \) holds, see Theorem 4.1.

Remark 6.1

We believe that for the case where \( e = \mathcal {O}(h^{m+1}) \), i.e., for \( m \) odd and symmetrically distributed \( \rho _j \), the estimates (4.4) and (5.5b) can be exploited to show that even \( \Vert {\theta }\Vert _{\tiny \Delta }=\mathcal {O}(h^{m+3}) \) holds; this is also observed in numerical experiments, see Sect. 7. A proof would involve further technicalities, in particular an extension of Lemma 6.1 below and coping with the reduced order \( e''=\mathcal {O}(h^m) \), and is beyond the scope of the present work.

For the sake of clarity we consider the linear case first. The proof for the nonlinear case in Sect. 6.2 is an extension of the linear version, based on quasilinearization and supraconvergence arguments.

6.1 The linear case

We first derive a representation for the leading term in the inhomogeneity of the linear difference equation (2.11) for \( {\theta }\) in the form of a difference quotient plus a higher-order term.

Lemma 6.1

For the linear BVP (3.2), at all grid points \( t_\ell \) we have

Here,

Proof

By definition of \(H\) and \( {\widehat{H}}_{\tiny \Delta }\) [see (3.2), (3.9b)],

Representation and estimation of \( \mathbf {D}_0 \). By definition of \( {\mathcal {I}}_\ell \) [see (3.20)] and with \( \alpha _\ell +\beta _\ell =2 \),

In \(\mathbf {I}_{0}^{-}\) we substitute \(\frac{1}{2}+\frac{\xi }{\alpha _\ell }=\zeta \) and integrate by parts to obtain

hence

with \( z^{[0]}_{{\ell -\frac{1}{2}}} \) defined in (6.2a). Here, \( z^{[0]}_{\ell -\frac{1}{2}}= \mathcal {O}(h^{m+1}) \) follows from \( e'=\mathcal {O}(h^m) \), and after integration by parts,

follows from \( e''=\mathcal {O}(h^m) \) [see (4.3a)].

For \(\mathbf {I}_{0}^{+}\), substituting \( -\frac{1}{2}+\frac{\xi }{\beta _\ell }=\zeta \) we obtain in an analogous way

with analogous estimates. Altogether, this shows

Representation and estimation of \( \mathbf {D}_1 \). By definition of \( {\mathcal {I}}_\ell \) (see (5.3)) and with \( \alpha _\ell +\beta _\ell =2 \),

For \(\mathbf {I}_{1}^{-}\) we substitute \(\frac{1}{2}+\frac{\xi }{\alpha _\ell }=\zeta \) to obtain

By Taylor expansion of \( c_1(t) \) about \( t=t_{\ell -\frac{1}{2}}\),

and using arguments analogous to those for \( \mathbf D _0 \) above, we obtain

i.e.,

with \( z^{[1]}_{{\ell -\frac{1}{2}}} \) defined in (6.2b), which is indeed seen to be \( \mathcal {O}(h^{m+1}) \).

For \(\mathbf {I}_{1}^{+}\), substituting \( -\frac{1}{2}+\frac{\xi }{\beta _\ell }=\zeta \) we obtain in an analogous way

with \( z^{[1]}_{{\ell +\frac{1}{2}}} = \mathcal {O}(h^{m+1}) \) defined in (6.2b). Altogether, this shows

Combining (6.3) with (6.4a) and (6.4b) completes the proof. \(\square \)

Theorem 6.1

For the linear BVP (3.2), the deviation of the error estimate satisfies

Proof

According to (2.11), the deviation \( {\theta }=e-\varepsilon =({\widetilde{u}}-u)-(\widehat{\widetilde{u}}-{\widehat{u}}) \) satisfies the SDS-type difference equation

for \( \ell =1 \ldots n-1 \), with homogeneous boundary conditions. Here \( ({\mathcal {Q}}_\ell -{\mathcal {I}}_\ell )(F(u)) = ({\mathcal {Q}}_\ell -{\mathcal {I}}_\ell )(L\,u) \) is a higher-order quadrature error, see Sect. 5.1.

Representation and estimation of \( \mathbf {J}_1 \) and \( \mathbf {J}_2 \). According to Lemma 6.1,

with

see (6.2). The quadrature error \( \mathbf {J}_2 \) depends on the \( (m+2) \)-nd derivative of \( H e=c_1\,e' + c_0\,e \),

In order to guarantee \( \mathbf {J}_2 = \mathcal {O}(h^{m+2}) \) we thus have to show that the higher derivatives \( e^{(\nu )}={\widetilde{u}}^{(\nu )}-u^{(\nu )} \) are bounded independently of \( h \) up to \( \nu = m + 3 \). Since the degree of \({\widetilde{u}}\) is \(m+1\), we have \( e^{(\nu )} = -u^{(\nu )} \) for \( \nu =m+2,m+3 \). For \( \nu =0 \ldots m+1 \), the uniform boundedness of \( e^{(\nu )} \) immediately follows from (4.3) (Theorem 4.1). Altogether this shows

Estimation of \({\theta }\). From (6.5)–(6.7),

with homogeneous boundary conditions. Let us first consider the simplified scheme

of type (3.13a) with solution according to (3.13b),

see (3.13c). It remains to be shown that \( \Vert {\theta }-{\check{\theta }} \Vert _{\tiny \Delta }= \mathcal {O}(h^{m+2}) \) holds. Subtracting (6.8b) from (6.8a) yields

and, due to stability of the SDS, it remains to show that

is valid. Here, \( c_0(t_\ell ){\check{\theta }}_\ell = \mathcal {O}(h^{m+2}) \), and from (6.9) we obtain

with \( z_{k+\frac{1}{2}} = \mathcal {O}(h^{m+2}) \) and \( (\partial _{\tiny \Delta }^{[\nu ]} G)_{\ell ,k} \) from (3.15), which implies \( c_1(t_\ell )(\partial _{\tiny \Delta }{\check{\theta }})_\ell = \mathcal {O}(h^{m+2}) \). Altogether this shows that (6.10) indeed holds, which completes the proof. \(\square \)

6.2 The nonlinear case

Supra-accuracy of the error estimate extends to the nonlinear case. We present the proof concisely, using quasilinearization arguments; the focus is on organizing the argument such that Lemma 6.1 and the (supra)convergence of the SDS can be exploited.

Theorem 6.2

For the nonlinear BVP (1.1a), (3.1), the deviation of the error estimate satisfies

Proof

We use vector notation for continuous and discrete functions,

i.e., capital or boldface capital letters typographically represent the named object together with its derivative or centered difference quotient, respectively. Furthermore, employing the mean value theorem, we denote

Difference equation for \( {\theta }\) via quasilinearization. According to (2.9), the deviation \( {\theta }=e-\varepsilon =({\widetilde{u}}-u)-(\widehat{\widetilde{u}}-{\widehat{u}}) \) satisfies

for \( \ell =1 \ldots n-1 \), with homogeneous boundary conditions. Here,

and

After rearranging terms, (6.11) now takes the form of a linearized scheme of SDS type for \( \varvec{\Theta }_\ell = \varvec{E}_\ell - \varvec{\mathcal {E}}_\ell \),

Estimation of \( \mathbf {J}_0 \). In the linear case this term vanishes. In the nonlinear case, we exploit the supraconvergence (3.18) of the SDS, i.e., \( U(t_\ell )-{\widehat{\varvec{U}}}_\ell = \mathcal {O}(h^2) \), together with \( E(t) = \mathcal {O}(h^m) \) [Theorem 4.1, (4.3)], to conclude \( \mathbf {J}_0 = \mathcal {O}(h^{m+2}) \).
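The bound for \( \mathbf {J}_0 \) is of product type. As a sketch, assuming that (1.1a) reads \( u''=f(t,U) \) with \( U=(u,u') \) and that \( \nabla f \) is Lipschitz continuous with constant \( L \), the integral form of the mean value theorem gives the following; the averaged gradient \( A \) is introduced here only for illustration and need not coincide with the exact structure of \( \mathbf {J}_0 \).

```latex
\begin{aligned}
f(t_\ell,U+E)-f(t_\ell,U) &= A(t_\ell;U,E)\,E, \qquad
  A(t_\ell;U,E) := \int_0^1 \nabla f\bigl(t_\ell,U+sE\bigr)\,ds,\\
\bigl|\bigl(A(t_\ell;U(t_\ell),E(t_\ell))-A(t_\ell;\widehat{\varvec{U}}_\ell,E(t_\ell))\bigr)E(t_\ell)\bigr|
  &\le L\,\bigl|U(t_\ell)-\widehat{\varvec{U}}_\ell\bigr|\,\bigl|E(t_\ell)\bigr|
  = \mathcal{O}(h^2)\cdot\mathcal{O}(h^m) = \mathcal{O}(h^{m+2}).
\end{aligned}
```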

Estimation of \( \mathbf {J}_1 \). For all grid points \( t_\ell \),

with smooth coefficient functions

additionally depending on \( U=(u,u') \) and \( E=(e,e') \). Furthermore,

with the same functions \( c_0 \) and \( c_1 \). Thus, for \( \mathbf {J}_1 \) we can resort to Lemma 6.1 as in the linear case.

Estimation of \( \mathbf {J}_2 \). The estimate

is obtained in the same way as in the linear case.

The assertion of the theorem now follows in the same way as in the proof of Theorem 6.1. \(\square \)

7 Implementation, extensions, and numerical illustration

7.1 Implementation

The collocation method and the a posteriori error estimator were implemented in a Matlab test code. For an arbitrary collocation mesh \( {\Delta ^N}\) and given collocation nodes \( \{ \rho _j \} \), the algebraic equations defining the piecewise polynomial solution \( {\widetilde{u}}(t) \) are generated via differentiation matrices, which express \( {\widetilde{u}}'(t_{i,j}) \) and \( {\widetilde{u}}''(t_{i,j}) \) in terms of the values \( {\widetilde{u}}(t_{i,j}) \). These coefficients, as well as the quadrature coefficients required for the evaluation of the defect, are static data which can be generated in a preprocessing step.
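A minimal sketch, in Python rather than Matlab, of how such differentiation matrices can be generated on one subinterval \( [\tau_{i-1},\tau_i] \). It assumes nodes \( t_{i,j} = \tau_{i-1} + \rho_j h_i \) with \( \rho = (0,\rho_1,\ldots,\rho_m,1) \) and uses barycentric Lagrange weights, a standard construction chosen here for illustration; it is not the authors' code, and all function names are hypothetical.

```python
def barycentric_weights(x):
    """Barycentric weights w_k = 1 / prod_{j != k} (x_k - x_j)."""
    w = []
    for k, xk in enumerate(x):
        p = 1.0
        for j, xj in enumerate(x):
            if j != k:
                p *= xk - xj
        w.append(1.0 / p)
    return w


def differentiation_matrix(x):
    """D with sum_j D[i][j] * p(x_j) = p'(x_i) for every polynomial p of degree <= len(x) - 1."""
    n = len(x)
    w = barycentric_weights(x)
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                D[i][j] = (w[j] / w[i]) / (x[i] - x[j])
        # Each row sums to zero, since constants have zero derivative.
        D[i][i] = -sum(D[i][j] for j in range(n) if j != i)
    return D


def matmul(A, B):
    """Plain matrix product of two lists of lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def local_matrices(tau_left, h, rho):
    """Nodes t_{i,j} = tau_{i-1} + rho_j * h and the matrices D1, D2 on one subinterval."""
    t = [tau_left + r * h for r in rho]
    D1 = differentiation_matrix(t)
    D2 = matmul(D1, D1)  # exact: p' is again interpolated exactly at the same nodes
    return t, D1, D2
```

Computing \( D_2 \) as \( D_1 D_1 \) is exact because the derivative of a polynomial of degree at most \( m+1 \) is again reproduced exactly at the \( m+2 \) nodes; like the coefficients mentioned above, these matrices depend only on \( \rho \) and \( h_i \) and can be precomputed.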

For linear problems, the resulting systems of linear algebraic equations are solved by direct elimination; for nonlinear problems, Newton iteration with residual-based control is used.
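The following sketch illustrates Newton iteration with a residual-based stopping test on a finite-difference analogue of the problem. For illustration only, it assumes that the SDS is the classical three-point scheme on a nonuniform grid with \( h_\ell = t_\ell - t_{\ell-1} \), second difference \( \frac{2}{h_\ell+h_{\ell+1}}\bigl(\frac{u_{\ell+1}-u_\ell}{h_{\ell+1}}-\frac{u_\ell-u_{\ell-1}}{h_\ell}\bigr) \), centered quotient \( (u_{\ell+1}-u_{\ell-1})/(h_\ell+h_{\ell+1}) \), Dirichlet data, and user-supplied partial derivatives \( f_u, f_{u'} \). It is not the authors' Matlab implementation, and the function names are hypothetical.

```python
def thomas(a, b, c, d):
    """Solve a[i] x[i-1] + b[i] x[i] + c[i] x[i+1] = d[i] (a[0], c[-1] unused)."""
    n = len(d)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = c[0] / b[0], d[0] / b[0]
    for i in range(1, n):
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def sds_residual_and_jacobian(t, u, f, f_u, f_p):
    """Residual F_l = D2u_l - f(t_l, u_l, Du_l), l = 1..n-1, and its tridiagonal Jacobian."""
    n = len(t) - 1
    F, a, b, c = [], [], [], []
    for l in range(1, n):
        hl, hr = t[l] - t[l - 1], t[l + 1] - t[l]
        H = hl + hr
        d2u = 2.0 / H * ((u[l + 1] - u[l]) / hr - (u[l] - u[l - 1]) / hl)
        du = (u[l + 1] - u[l - 1]) / H
        F.append(d2u - f(t[l], u[l], du))
        fu, fp = f_u(t[l], u[l], du), f_p(t[l], u[l], du)
        a.append(2.0 / (H * hl) + fp / H)
        b.append(-2.0 / (H * hl) - 2.0 / (H * hr) - fu)
        c.append(2.0 / (H * hr) - fp / H)
    a[0], c[-1] = 0.0, 0.0  # Dirichlet data: no corrections at the boundary nodes
    return F, a, b, c


def newton_sds(t, alpha, beta, f, f_u, f_p, tol=1e-8, maxit=50):
    """Newton iteration for the SDS with u(t_0) = alpha, u(t_n) = beta; stop when max|F| <= tol."""
    n = len(t) - 1
    # Initial guess: linear interpolant of the boundary values.
    u = [alpha + (beta - alpha) * (tl - t[0]) / (t[-1] - t[0]) for tl in t]
    for _ in range(maxit):
        F, a, b, c = sds_residual_and_jacobian(t, u, f, f_u, f_p)
        if max(abs(v) for v in F) <= tol:
            return u
        delta = thomas(a, b, c, [-v for v in F])
        for l in range(1, n):
            u[l] += delta[l - 1]
    raise RuntimeError("Newton iteration did not converge")
```

As a usage example, the standard test problem \( u'' = \tfrac32 u^2 \), \( u(0)=4 \), \( u(1)=1 \), with exact solution \( u(t) = 4/(1+t)^2 \), can be solved with `newton_sds(t, 4.0, 1.0, lambda t, u, p: 1.5*u*u, lambda t, u, p: 3.0*u, lambda t, u, p: 0.0)`. Note that the residual scales like \( h^{-2} \), so on strongly graded grids the tolerance would have to be relaxed or scaled.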

7.2 Treatment of general linear boundary conditions

For boundary conditions (1.1b), the idea is again to convert them into exact difference equations by means of the given differential equation. For illustration, let us consider the case where Neumann data are given at \( t_n=b \), i.e., \( u'(b) = s_1 \). In the SDS (2.2) for computing \( {\widehat{u}}_{\tiny \Delta }\), the Neumann boundary condition is discretized by the one-sided difference quotient

Due to the Peano representation

the solution \( u(t) \) of the BVP exactly satisfies

The corresponding defect of the collocation solution \( {\widetilde{u}}(t) \) is

where \( {\mathcal {Q}}_n \) is the quadrature approximation to \( {\mathcal {I}}_n \) obtained in the same way as in Sect. 5.1, with quadrature nodes from the rightmost collocation interval \( [\tau _{N-1},\tau _N] \). In contrast to Dirichlet boundary conditions, which are exactly satisfied by \( {\widetilde{u}}\), we now have to account for the defect with respect to the boundary condition. This means that the boundary equation

is incorporated into the SDS (2.4) defining the auxiliary grid function \( \widehat{\widetilde{u}}_{\tiny \Delta }\).
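To illustrate the kind of exact relation provided by a Peano representation, consider Taylor's formula with integral remainder. This sketch assumes that (1.1a) has the form \( u'' = f(t,u,u') \), writes \( h_n = t_n - t_{n-1} \), and uses the first-order quotient \( (u_n-u_{n-1})/h_n \); the normalization actually used in (7.1a) and in \( {\mathcal {I}}_n \) may differ.

```latex
\frac{u(t_n)-u(t_{n-1})}{h_n}
  = u'(t_n) - \frac{1}{h_n}\int_{t_{n-1}}^{t_n} (s-t_{n-1})\,u''(s)\,ds
  = s_1 - \frac{1}{h_n}\int_{t_{n-1}}^{t_n} (s-t_{n-1})\,f\bigl(s,u(s),u'(s)\bigr)\,ds .
```

For instance, \( u(t)=t^2 \) on \( [t_{n-1},t_n]=[0,1] \) gives \( 1 = 2 - \int_0^1 2s\,ds \). Inserting \( {\widetilde{u}} \) into such an identity, with the integral replaced by quadrature, yields a boundary defect of the type described above.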

To further enhance the asymptotic quality of the error estimate obtained in this way, one can modify the SDS using a one-sided second-order approximation \( \partial _2^- v \) to \( v' \) involving three consecutive grid points (as in BDF2 schemes), and replace (7.1a) by

The respective coefficients, the corresponding ‘EDS integral operator’ \( {\mathcal {I}}_n \) and its quadrature approximation \( {\mathcal {Q}}_n \) are obtained by a routine calculation. This redefines \( d_n \), and the rest of the procedure is the same as before. For a numerical illustration, see Sect. 7.3.
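The coefficients of a three-point approximation \( \partial _2^- v \) on a nonuniform grid follow from differentiating the quadratic interpolant through \( t_{n-2}, t_{n-1}, t_n \) at \( t_n \). A sketch, with the first-order quotient for comparison; the function names are hypothetical, and the spacings are \( h_{n-1} = t_{n-1}-t_{n-2} \) and \( h_n = t_n - t_{n-1} \).

```python
def one_sided_first_order(h_n):
    """Coefficients (c_{n-1}, c_n) of the quotient (v_n - v_{n-1}) / h_n."""
    return (-1.0 / h_n, 1.0 / h_n)


def one_sided_bdf2(h_prev, h_n):
    """Coefficients (c_{n-2}, c_{n-1}, c_n) of the three-point backward approximation
    to v'(t_n), with h_prev = t_{n-1} - t_{n-2} and h_n = t_n - t_{n-1}.
    They are the derivatives at t_n of the quadratic Lagrange basis polynomials."""
    c_nm2 = h_n / (h_prev * (h_prev + h_n))
    c_nm1 = -(h_prev + h_n) / (h_prev * h_n)
    c_n = 1.0 / h_n + 1.0 / (h_prev + h_n)
    return (c_nm2, c_nm1, c_n)


def apply(coeffs, values):
    """Evaluate sum_k coeffs[k] * values[k], with values ordered like the coefficients."""
    return sum(c * v for c, v in zip(coeffs, values))
```

On a uniform grid the coefficients reduce to the familiar BDF2 weights \( (1,-4,3)/(2h) \). The formula is exact for quadratics, so halving \( h \) reduces the error roughly by a factor of four, compared with a factor of two for the first-order quotient.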

The extension of the procedure to general linear boundary conditions (1.1b) is straightforward. For the treatment of nonlinear boundary conditions, see Sect. 7.4.1.

7.3 Examples

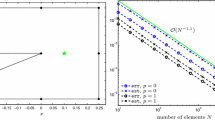

We present two selected numerical examples. These serve to illustrate the theoretical results; in addition, Example 2 indicates that the convergence results extend to the case of odd \( m \) with symmetric collocation nodes and to general linear boundary conditions. Further numerical results are reported in [18].

Example 1

A nonlinear BVP with Dirichlet boundary conditions. For

the analytic solution is known. We choose \( m=4 \) (collocation degree \( m+1=5 \)), \( \rho = (0,\rho _1,\rho _2,\rho _3,\rho _4,1) \) with interior Chebyshev nodes \( \rho _1,\rho _2,\rho _3,\rho _4 \), and \( N \) collocation intervals of length \( 1/N \). The results are shown in Table 1.
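The node choice can be sketched as follows. We assume here that "Chebyshev nodes" means the zeros of the Chebyshev polynomial \( T_m \) mapped from \( [-1,1] \) to \( (0,1) \), and that \( t_{i,j} = \tau_{i-1} + \rho_j h_i \); both the node family and the helper names are assumptions for illustration. Such nodes are symmetric, \( \rho_j + \rho_{m+1-j} = 1 \), which is the property relevant for Example 2 with odd \( m \).

```python
import math


def chebyshev_collocation_nodes(m):
    """rho = (0, rho_1, ..., rho_m, 1), with rho_j the zeros of T_m mapped to (0, 1)
    (assumed node family), listed in increasing order."""
    inner = [(1.0 - math.cos((2 * j - 1) * math.pi / (2 * m))) / 2.0 for j in range(1, m + 1)]
    return [0.0] + inner + [1.0]


def collocation_points(tau, rho):
    """All points t_{i,j} = tau_{i-1} + rho_j * h_i on a (possibly nonuniform) mesh tau."""
    pts = []
    for i in range(1, len(tau)):
        h = tau[i] - tau[i - 1]
        for j, r in enumerate(rho):
            if i > 1 and j == 0:
                continue  # t_{i,0} coincides with t_{i-1,m+1}
            pts.append(tau[i - 1] + r * h)
    return pts
```

For \( m=4 \) and \( N \) intervals this produces \( N(m+1)+1 \) distinct points, since neighbouring subintervals share their common mesh point.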

As the practical purpose of an error estimate is its use for mesh adaptation, it is essential that collocation intervals of variable lengths are admitted. We note that our theoretical results from Sect. 6 do not rely on the assumption of an equidistant collocation mesh \( {\Delta ^N}\). We illustrate this point by repeating the experiment documented in Table 1, but starting with a non-uniformly distributed mesh \( {\Delta ^N}\), followed by coherent refinement of this mesh to observe the convergence orders. The results are shown in Table 2.
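Experimental orders of the kind reported in such tables are typically computed from errors on successively refined meshes. A minimal sketch, assuming that "coherent refinement" means bisecting every interval of the initial mesh (so that the relative distribution of the mesh points is preserved) and that the observed order is \( \log_2 \) of the ratio of consecutive errors; the helper names are hypothetical.

```python
import math


def bisect_mesh(tau):
    """Halve every interval of the mesh tau (our reading of 'coherent refinement')."""
    fine = [tau[0]]
    for left, right in zip(tau[:-1], tau[1:]):
        fine.extend([(left + right) / 2.0, right])
    return fine


def observed_orders(errors):
    """Orders p_k = log2(err_k / err_{k+1}) for errors on successively bisected meshes."""
    return [math.log2(e0 / e1) for e0, e1 in zip(errors[:-1], errors[1:])]
```

For example, errors behaving like \( C h^6 \) decrease by a factor of \( 64 \) per bisection, and `observed_orders` then returns values close to \( 6 \).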

Example 2

A linear BVP with general linear boundary conditions. For

with boundary conditions

and known analytic solution,

we choose \( m=3 \) (collocation degree \( m+1=4 \)), \( \rho = (0, \rho _1,\rho _2,\rho _3 ,1) \) with interior Chebyshev nodes \( \rho _1,\rho _2,\rho _3 \), and \( N \) collocation intervals of length \( 1/N \). The results are shown in Table 3.

7.4 Further extensions

7.4.1 General nonlinear boundary conditions

Linear boundary conditions frequently occur in applications, but the case of nonlinear boundary conditions

is of course also relevant. For the collocation solver, incorporation of (7.2) is straightforward; for the finite-difference schemes, \( u'(a) \) and \( u'(b) \) are again approximated by difference quotients. Since our approach relies on an underlying EDS for the computation of the defect, the question is how to translate (7.2) into the EDS context. This can be accomplished on the basis of the considerations from Sect. 7.2. Consider, for instance, a right boundary condition of the form

In the notation from Sect. 7.2, the solution \( u(t) \) of the BVP exactly satisfies

On the basis of this identity, the defect of an approximate solution \( {\widetilde{u}}\) with respect to (7.3) reads

In the SDS defining the auxiliary grid function \( \widehat{\widetilde{u}}_{\tiny \Delta }\), the corresponding boundary equation becomes

7.4.2 Variable coefficients, ODE systems, and higher-order problems

Since the concept of EDS is rather general and well developed, see [12, 19], we expect that a similar approach can be successfully applied to more general problem classes, e.g., to the case where \( Lu \) is a differential operator with variable coefficients or of higher order. The extension to systems or mixed-order systems also seems to be within reach. We stress again that in our approach the EDS formulation is only used for evaluating the defect, which is significantly simpler to realize than solving the EDS equations to high order.

Notes

See Sect. 3.3 for an explanation of the notion of supraconvergence.

In this introductory section, we refrain from denoting continuous and discrete objects in different styles.

Matlab is a trademark of The MathWorks, Inc. sbvp is available at www.mathworks.de/matlabcentral/fileexchange/1464-sbvp-1-0-package.

For convenience, we denote \( \tau _i \) by \( t_{i,0} \equiv t_{i-1,m+1},\, i=1 \ldots N-1\).

Here, \( \nabla f(t,V) \) is the gradient with respect to \( V \).

References

[1] Ascher, U., Christiansen, J., Russell, R.: A collocation solver for mixed order systems of boundary value problems. Math. Comp. 33, 659–679 (1978)

[2] Ascher, U., Mattheij, R., Russell, R.: Numerical Solution of Boundary Value Problems for Ordinary Differential Equations. Prentice-Hall, Englewood Cliffs (1988)

[3] Auzinger, W., Kneisl, G., Koch, O., Weinmüller, E.: A collocation code for boundary value problems in ordinary differential equations. Numer. Algorithms 33, 27–39 (2003)

[4] Auzinger, W., Koch, O., Praetorius, D., Weinmüller, E.: New a posteriori error estimates for singular boundary value problems. Numer. Algorithms 40, 79–100 (2005)

[5] Auzinger, W., Koch, O., Weinmüller, E.: Efficient collocation schemes for singular boundary value problems. Numer. Algorithms 31, 5–25 (2002)

[6] Auzinger, W., Koch, O., Weinmüller, E.: Analysis of a new error estimate for collocation methods applied to singular boundary value problems. SIAM J. Numer. Anal. 42(6), 2366–2386 (2005)

[7] Auzinger, W., Kreuzer, W., Hofstätter, H., Weinmüller, E.: Modified defect correction algorithms for ODEs. Part I: General theory. Numer. Algorithms 36, 135–155 (2004)

[8] Butcher, J.C., Cash, J., Moore, G.: Defect correction for 2-point boundary value problems on nonequidistant meshes. Math. Comp. 64, 629–648 (1995)

[9] Cash, J., Kitzhofer, G., Koch, O., Moore, G., Weinmüller, E.: Numerical solution of singular two point BVPs. JNAIAM J. Numer. Anal. Indust. Appl. Math. 4, 129–149 (2009)

[10] Doedel, E.J.: The construction of finite difference approximations to ordinary differential equations. SIAM J. Numer. Anal. 15, 451–465 (1978)

[11] Dutt, A., Greengard, L., Rokhlin, V.: Spectral deferred correction methods for ordinary differential equations. BIT 40, 241–266 (2000)

[12] Gavrilyuk, I.P., Hermann, M., Makarov, V.L., Kutniv, M.V.: Exact and Truncated Difference Schemes for Boundary Value ODEs. Birkhäuser, Basel (2010)

[13] Kitzhofer, G., Koch, O., Lima, P., Weinmüller, E.: Efficient numerical solution of the density profile equation in hydrodynamics. J. Sci. Comput. 32, 411–424 (2007)

[14] Kitzhofer, G., Koch, O., Pulverer, G., Simon, C., Weinmüller, E.: The new MATLAB code BVPSUITE for the solution of singular implicit boundary value problems. JNAIAM J. Numer. Anal. Indust. Appl. Math. 5, 113–134 (2010)

[15] Koch, O.: Asymptotically correct error estimation for collocation methods applied to singular boundary value problems. Numer. Math. 101, 143–164 (2005)

[16] Lynch, R.E., Rice, J.R.: A high-order difference method for differential equations. Math. Comp. 34, 333–372 (1980)

[17] Manteuffel, T.A., White Jr., A.B.: The numerical solution of second-order boundary value problems on nonuniform meshes. Math. Comp. 47, 511–535 (1986)

[18] Saboor Bagherzadeh, A.: Defect-based error estimation for higher order differential equations. PhD thesis, Vienna University of Technology (2011)

[19] Samarskii, A.A.: The Theory of Difference Schemes. Marcel Dekker Inc., New York, Basel (2001)

[20] Stetter, H.J.: The defect correction principle and discretization methods. Numer. Math. 29, 425–443 (1978)

Acknowledgments

We would like to thank Mechthild Thalhammer for helpful comments, and Gerhard Kitzler for carrying out numerical experiments in Matlab.


Additional information

Communicated by Anne Kværnø.


About this article

Cite this article

Auzinger, W., Koch, O. & Saboor Bagherzadeh, A. Error estimation based on locally weighted defect for boundary value problems in second order ordinary differential equations. Bit Numer Math 54, 873–900 (2014). https://doi.org/10.1007/s10543-014-0488-y


DOI: https://doi.org/10.1007/s10543-014-0488-y

Keywords

- Second order boundary value problems

- Collocation methods

- Asymptotically correct a posteriori error estimates

- Defect correction principle

- Exact difference scheme

- Supraconvergence