Abstract

Classical discrete-time adaptive controllers provide asymptotic stabilization and tracking; neither exponential stabilization nor a bounded noise gain is typically proven. In our recent work, it is shown, in both the pole placement stability setting and the first-order one-step-ahead tracking setting, that if the original, ideal, projection algorithm is used (subject to the common assumption that the plant parameters lie in a convex, compact set and that the parameter estimates are restricted to that set) as part of the adaptive controller, then a linear-like convolution bound on the closed-loop behaviour can be proven; this immediately confers exponential stability and a bounded noise gain, and it can be leveraged to provide tolerance to unmodelled dynamics and plant parameter variation. In this paper, we solve the much harder problem of adaptive tracking; under classical assumptions on the set of unmodelled parameters, including the requirement that the plant be minimum phase, we are able to prove not only the linear-like properties discussed above, but also very desirable bounds on the tracking performance. We achieve this by using a modified version of the ideal projection algorithm, termed a vigilant estimator: it is equally alert when the plant state is large or small and is turned off when it is clear that the disturbance is overwhelming the estimation process.


1 Introduction

Adaptive control is an approach used to deal with systems with uncertain and/or time-varying parameters. In the classical approach to adaptive control, one combines a linear time-invariant (LTI) compensator together with a tuning mechanism to adjust the compensator parameters to match the plant. While adaptive control has been studied as far back as the 1950s, the first general proofs that parameter adaptive controllers work came around 1980, e.g. see [3, 5, 27, 32, 33]. However, the original controllers are typically not robust to unmodelled dynamics, do not tolerate time-variations well, have poor transient behaviour and do not handle noise (or disturbances) well, e.g. see [34]. During the following two decades, a good deal of research was carried out to alleviate these shortcomings. A number of small controller design changes were proposed, such as the use of signal normalization, deadzones and \(\sigma \)-modification, e.g. see [9, 11, 14, 15, 37]; arguably the simplest is that of using projection onto a convex set of admissible parameters, e.g. see [13, 31, 41, 42, 43, 44]. However, in general these redesigned controllers provide asymptotic stability and not exponential stability, with no bounded gain on the noise; that being said, some of them, especially those which use projection, provide a bounded-noise bounded-state property, as well as tolerance of some degree of unmodelled dynamics and/or time-variations.

The goal of this paper is to design adaptive controllers for which the closed-loop system exhibits highly desirable LTI-like system properties, such as exponential stability, a bounded gain on the noise and ideally a convolution bound on the input-output behaviour (Footnote 1); in addition, we wish to obtain “good tracking” of a reference signal. As far as the authors are aware, in the classical approach to adaptive control a bounded gain on the noise is proven only in [44]; however, neither a “crisp” exponential bound on the effect of the initial condition nor a convolution bound on the closed-loop behaviour is proven. While it is possible to prove a form of exponential stability if the reference input is sufficiently persistently exciting, e.g. see [2], this places a stringent requirement on an exogenous input, which we would like to avoid.

There are several non-classical approaches to adaptive control which provide some of the LTI-like system properties. First of all, in [4, 23] a logic-based switching approach was used to sequence through a predefined list of candidate controllers; while exponential stability is proven, the transient behaviour can be quite poor and a bounded gain on the noise is not proven. In a related approach, a high-gain controller is used to provide excellent transient and steady-state tracking for minimum phase systems [22]; in this case as well, a bounded gain on the noise is not proven. A more sophisticated logic-based approach, labelled supervisory control, was proposed by Morse—see [7, 8, 28, 29, 39]; here a supervisor switches in an efficient way between candidate controllers, and in certain circumstances a bounded gain on the noise can be proven—see [30, 40], and the Concluding Remarks section of [29]. A related approach, called localization-based switching adaptive control, uses a falsification approach to prove exponential stability as well as a degree of tolerance of disturbances, e.g. see [45], though a bounded gain on the noise is not proven. In none of the above cases is a convolution bound on the closed-loop behaviour proven.

Another non-classical approach to adaptive control, proposed by the first author, is based on periodic probing, estimation and control: rather than estimate the plant or controller parameters, the goal is to estimate what the control signal would be if the plant parameters and plant state were known and the ‘ideal controller’ were applied. Under suitable assumptions on the plant uncertainty, exponential stability and a bounded gain on the noise are achieved, and a degree of unmodelled dynamics and slow time-variations are allowed; for non-minimum phase systems, near optimal transient performance is also provided—see [17, 18, 38], while for minimum-phase systems, near exact tracking is provided, even in the presence of rapid time-variations—see [16, 24]. While a convolution bound is not proven, the biggest drawback is that while a bounded gain on the noise is always achieved, it tends to increase dramatically the closer that one gets to optimality. Furthermore, because of the nature of the approach, it only works in the continuous-time domain.

This brings us to the proposed approach of the paper, wherein we show how to achieve our objectives in the discrete-time setting under some classical assumptions on the set of plant parameters. We adopt a common approach to classical adaptive control: the use of a projection algorithm-based estimator together with a tuneable compensator whose parameters are chosen via the certainty equivalence principle. However, while in the literature it is very common to use a modified version of the ideal projection algorithm in order to avoid division by zero (Footnote 2), here we adopt another approach to alleviate this numerical concern. We label the resulting estimator a “vigilant estimator”: in the absence of noise it is equally alert to small signals as large signals (unlike the modified version), and in the noisy case we turn off the estimator update if it is clear that the noise is overwhelming the data. Indeed, in earlier work by the authors on the first-order setting [19] and in the pole placement setting of [20, 25], versions of this estimator are used as a building block of adaptive controllers which provide exponential stability, a bounded gain on the noise, and linear-like convolution bounds on the closed-loop behaviour; as far as the authors are aware, such LTI-like bounds have never before been proven in the adaptive setting. The objective of the present paper is to use the general approach of [19, 20, 25] to analyse the much harder adaptive tracking control problem for high-order systems.

We initially expected the analysis to follow in a straight-forward manner from either the first-order tracking approach of [19] or the pole placement approach of [20, 25]; this has not proven to be the situation. It turns out that the importance of the system delay in this setting creates significant additional complexity not found in either case, and the tracking objective is not present in the latter case. That being said, we have adopted ideas from the pole placement setting of [20, 25] as a starting point, but we have carried out several innovative modifications in order to allow us to prove not only the same highly desirable linear-like properties, but also several highly desirable tracking results:

(i) if there is no noise, we prove an explicit 2-norm bound on the size of the tracking error in terms of the size of the initial condition and the reference signal (in the literature on classical adaptive control it is typically proven only that the tracking error is square summable);

(ii) if there is no noise but there are slow time-variations, then we prove that we can bound the size of the average tracking error by the size of the time-variation (in the literature this situation is rarely considered);

(iii) if there is noise, then under some technical conditions, we prove a bound on the average tracking error in terms of the average disturbance times another complicated quantity (in the literature it is usually proven only that the tracking error is bounded).

The proofs of the results contained herein are significantly more involved than the proofs of the classical results in the literature, such as the seminal work of Goodwin et al. [5]. The main reason is that here we are interested in obtaining crisp quantitative bounds on the closed-loop response, rather than the typical qualitative bounds provided in most of the literature (Footnote 3). Last of all, we would like to point out that an early version of this work appears in [26].

At this point, we provide an outline of the paper. In Sect. 2, we introduce the plant, rewrite it into a form more amenable to analysis and introduce the parameter estimator as well as the adaptive control law. In Sect. 3, we introduce three high-level models for use in system analysis; we provide several technical results which will be useful in the closed-loop analysis. In Sect. 4, we use the three models of the previous section to prove that highly desirable convolution bounds on the system state hold. In Sect. 5, we show that convolution bounds still hold even in the presence of a degree of unmodelled dynamics and plant parameter variations. In Sect. 6, we derive bounds on the tracking error in a variety of situations: the noise-free case, the noise-free case with time-variation and the time-invariant noisy case. In Sect. 7, we will provide several illustrative simulation examples. Last of all, we wrap up with a Summary and Conclusions in Sect. 8.

Before proceeding, we present some mathematical preliminaries. Let \(\mathbf{Z}\) denote the set of integers, \(\mathbf{Z}^+\) the set of non-negative integers, \(\mathbf{N}\) the set of natural numbers, \(\mathbf{R}\) the set of real numbers, and \(\mathbf{R}^+\) the set of non-negative real numbers. We use the Euclidean 2-norm for vectors and the corresponding induced norm for matrices and denote the norm of a vector or matrix by \(\Vert \cdot \Vert \). We let \(s ( \mathbf{R}^{n \times m} ) \) denote the set of all \(\mathbf{R}^{ n \times m}\)-valued sequences, and we let \({l_{\infty }}( \mathbf{R}^{n \times m})\) denote the subset of bounded sequences; we define the norm of \(u \in {l_{\infty }}( \mathbf{R}^{n \times m})\) by \(\Vert u \Vert _{\infty } := \sup _{k \in \mathbf{Z}} \Vert u(k) \Vert \).

If \(\mathcal{S} \subset \mathbf{R}^p\) is a convex and compact set, we define \(\Vert \mathcal{S} \Vert := \max _{x \in \mathcal{S} } \Vert x \Vert \) and the function \(\pi _\mathcal{S} : \mathbf{R}^p \rightarrow \mathcal{S}\) denotes the projection onto \(\mathcal{S}\); it is well-known that \(\pi _\mathcal{S}\) is well-defined.

2 The setup

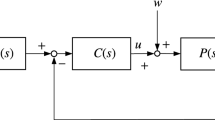

In this paper, we start with a linear time-invariant discrete-time plant described by

with

- \(y(t) \in \mathbf{R}\) the measured output,

- \(u(t) \in \mathbf{R}\) the control input,

- \(w(t) \in \mathbf{R}\) the disturbance (or noise) input;

- the parameters regularized so that \(a_0 = 1\), and

- the system delay \(d \ge 1\) being known (so \(b_0 \ne 0 \)).

Associated with this plant model are the polynomials \(A(z^{-1} ) := \sum _{i=0}^n a_i z^{-i}\) and \( B(z^{-1}) := \sum _{i=0}^{m} b_i z^{-i} \), the transfer function \(z^{-d} \frac{B(z^{-1} )}{A(z^{-1} )}\), and the list of plant parameters:

Remark 1

It is straight-forward to verify that if the system has a disturbance at both the input and output, then it can be converted to a system of the above form.

The goal is closed-loop stability and asymptotic tracking of an exogenous reference input \(y^*(t)\). We impose several assumptions on the set of admissible parameters. The first set is standard in the literature, e.g. [5, 6]:

Assumption 1

(i) n is known;

(ii) m is known;

(iii) the system delay d is known;

(iv) \(sgn( b_0) \) is known;

(v) the polynomial \(B(z^{-1} )\) has all of its zeros in the open unit disk.

Remark 2

Since we do not require \(a_n \ne 0\), Assumption 1 (i) can be interpreted as assuming that an upper bound on n is known; similarly, Assumption 1 (ii) can be interpreted as assuming that an upper bound on m is known. The constraint on the zeros of \(B(z^{-1} )\) in Assumption 1 (v) is a requirement that the plant be minimum phase; this is necessary to ensure tracking of an arbitrary bounded reference signal [21].

The second assumption is less standard:

Assumption 2

The set of admissible parameters, which we label \(\mathcal{S}_{ab} \subset \mathbf{R}^{n+m+1}\), is compact.

Remark 3

The boundedness requirement on \(\mathcal{S}_{ab} \) is quite reasonable in practical situations; it is used here to prove uniform bounds and decay rates on the closed-loop behaviour.

There are many ways to pose and solve an adaptive tracking problem, with the d-step-ahead approach and the more general model reference control approach being the standard ones—see [5, 6]. To minimize complexity, we choose the first one, since it is the simpler of the two. To proceed we use a parameter estimator together with an adaptive d-step-ahead control law. To design the estimator, it is convenient to put the plant into the so-called predictor form. To this end, following [6], we carry out long division by dividing \(A(z^{-1} )\) into one, and define \(F(z^{-1}) = \sum _{i=0}^{d-1} f_i z^{-i} \) and \( G( z^{-1} ) = \sum _{i=0}^{n-1} g_i z^{-i} \) satisfying

Hence, if we define

then we can rewrite the plant model as

Let \(\mathcal{S}_{\alpha \beta }\) denote the set of admissible \(\theta ^*\) which arise from the original plant parameters which lie in \(\mathcal{S}_{ab}\); since the associated mapping is analytic, it is clear that the compactness of \(\mathcal{S}_{ab}\) means that \(\mathcal{S}_{\alpha \beta }\) is compact as well. Furthermore, it is easy to see that \(f_0 =1\), so \(\beta _0 = b_0\), which means that the sign of \(\beta _0\) is always the same. It is convenient that the set of admissible parameters in the new parameter space be convex and closed; so at this point let \(\mathcal{S} \subset \mathbf{R}^{n+m+d}\) be any compact and convex set containing \(\mathcal{S}_{\alpha \beta }\) for which the \((n+1)^{th}\) element (the one which corresponds to \(\beta _0\)) is never zero, e.g. the convex hull of \(\mathcal{S}_{\alpha \beta }\) would do.
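The long-division step that produces \(F(z^{-1})\) and \(G(z^{-1})\) can be sketched in a few lines. The sketch below assumes the standard identity \(1 = F(z^{-1}) A(z^{-1}) + z^{-d} G(z^{-1})\) with \(a_0 = 1\); the function name `predictor_polynomials` and the numerical example are hypothetical and serve only as an illustration.

```python
def predictor_polynomials(a, d):
    """Divide A(z^-1) into one, stopping after d quotient terms.

    a : coefficients [a_0, ..., a_n] of A(z^-1), with a_0 == 1 (assumed)
    d : system delay, d >= 1
    Returns (f, g) with f = [f_0, ..., f_{d-1}] and g = [g_0, ..., g_{n-1}]
    such that 1 = F(z^-1) A(z^-1) + z^-d G(z^-1).
    """
    n = len(a) - 1
    # Running remainder 1 - F*A, stored as coefficients of z^0 ... z^-(d+n-1).
    rem = [0.0] * (d + n)
    rem[0] = 1.0
    f = []
    for i in range(d):
        f_i = rem[i]                 # next quotient coefficient (a_0 = 1)
        f.append(f_i)
        for j, a_j in enumerate(a):  # subtract f_i * z^-i * A(z^-1)
            rem[i + j] -= f_i * a_j
    g = rem[d:d + n]                 # what is left is z^-d G(z^-1)
    return f, g


# Hypothetical example: A(z^-1) = 1 - 1.5 z^-1 + 0.5 z^-2 and d = 2.
f, g = predictor_polynomials([1.0, -1.5, 0.5], d=2)
```

For this example the quotient is \(F(z^{-1}) = 1 + 1.5 z^{-1}\) and the remainder gives \(G(z^{-1}) = 1.75 - 0.75 z^{-1}\); in particular \(f_0 = 1\), in line with the observation above.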

The d-step-ahead control law is the one given by

or equivalently

in the absence of a disturbance, and assuming that this controller is applied for all \(t \in \mathbf{Z}\), we have \(y (t) = y^* (t)\) for all \( t \in \mathbf{Z}\). Of course, if the plant parameters are unknown, we need to use estimates; also, the adaptive version of the d-step-ahead control law is only applied after some initial time, i.e. for \( t \ge t_0\).
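The exact-tracking property of the known-parameter d-step-ahead law can be checked numerically. The following is a minimal sketch, not the authors' simulation: it assumes a noise-free plant of the form \(\sum_i a_i y(t-i) = \sum_i b_i u(t-d-i)\) with the hypothetical choices \(A(z^{-1}) = 1 - 1.5 z^{-1} + 0.5 z^{-2}\), \(B(z^{-1}) = 1 + 0.5 z^{-1}\) and \(d = 2\), and the predictor coefficients in the docstring were worked out by hand for this example.

```python
import math


def simulate_ideal_tracking(steps=50):
    """Known-parameter d-step-ahead control of a hypothetical plant, d = 2.

    Plant (noise-free): y(t) - 1.5 y(t-1) + 0.5 y(t-2) = u(t-2) + 0.5 u(t-3)
    Predictor form:     y(t+2) = 1.75 y(t) - 0.75 y(t-1)
                                 + u(t) + 2 u(t-1) + 0.75 u(t-2)
    The controller starts at t0 = 0 from arbitrary past data.
    """
    def y_ref(t):
        return math.sin(0.3 * t)      # hypothetical reference signal

    y = {-1: 0.3, 0: -0.2}            # arbitrary initial outputs
    u = {-2: 0.1, -1: 0.0}            # arbitrary initial inputs
    for t in range(steps):
        # Choose u(t) so that the predicted y(t+2) equals y_ref(t+2).
        u[t] = (y_ref(t + 2) - 1.75 * y[t] + 0.75 * y[t - 1]
                - 2.0 * u[t - 1] - 0.75 * u[t - 2]) / 1.0
        # Plant update; y(t+1) does not depend on u(t) because d = 2.
        y[t + 1] = 1.5 * y[t] - 0.5 * y[t - 1] + u[t - 1] + 0.5 * u[t - 2]
    return [y[t] - y_ref(t) for t in range(steps + 1)]


errors = simulate_ideal_tracking()
```

In this sketch the tracking error at \(t = t_0 + 1\) is fixed by the initial data and is not zero, while from \(t = t_0 + d\) onward it vanishes up to rounding.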

2.1 Initialization

In most adaptive control results, the goal is to prove asymptotic behaviour, so the details of the initial condition are unimportant. Here, however, we wish to get a bound on the transient behaviour so we must proceed carefully. In the pole placement setting of [20], this was relatively straight-forward: the delay plays no role, the controller is strictly causal, and we start the plant estimator off at time \(t_0\), with an initial “plant state” of \(\phi (t_0) = \phi _0\). Here we have a more complicated situation, even in the case of \(d=1\), since the proposed controller is not strictly causal.

To proceed, observe that if we wish to solve (2) for y(t) starting at time \(t_0\), then it is clear that we need an initial condition of

Observe that this is sufficient information to obtain \(\{ \phi (t_0-1 ) ,\ldots , \phi (t_0 -d) \}\).

2.2 Parameter estimation

We can rewrite the plant (2) as

with an initial condition of \(x_0\). Given an estimate \(\hat{\theta } (t)\) of \(\theta ^*\) at time t, we define the prediction error by

this is a measure of the error in \(\hat{\theta } (t)\). A common way to obtain a new estimate is from the solution of the optimization problem

yielding the ideal (projection) algorithm

at this point, we can also constrain the estimate to \(\mathcal{S}\) by projection. Of course, if \(\phi (t-d+1)\) is close to zero, numerical problems can occur, so it is very common in the literature (e.g. [5, 6]) to add a constant to the denominator and possibly another gain in the numerator: with \(0< \bar{\alpha } < 2\) and \(\bar{\beta } > 0\), consider the classical algorithm (Footnote 4)

However, as pointed out in [19, 20, 25], this can lead to the loss of exponential stability and a loss of a bounded gain on the noise.

We propose a middle ground: as proposed in [20, 25], we turn off the estimation if it is clear that the disturbance signal \(\bar{w} (t)\) is swamping the estimation error (Footnote 5). More specifically, if we examine the update law in (6) when \(\phi (t-d+1) \ne 0\), we see that

Suppose that \(\theta _0 \in \mathcal{S}\) and \(\hat{\theta } (t) \in \mathcal{S}\); if this update quantity is large but less than \(2 \Vert \mathcal{S }\Vert \), then it could very well be that \(\hat{\theta } (t+1) \in \mathcal{S}\) and it could be that the large update is due to \(\hat{\theta } (t)\) being very inaccurate; on the other hand, if this quantity is larger than \(2 \Vert \mathcal{S }\Vert \), then it is clear that \( \hat{\theta } (t+1) \notin \mathcal{S }\) and probably quite inaccurate—in this case the disturbance may be fairly large relative to the other signals. To this end, with \(\delta \in (0, \infty ]\), we turn off the estimator if the update is larger than \(2 \Vert \mathcal{S} \Vert + \delta \) in magnitude; so define \({\rho _{\delta }}: \mathbf{R}^{n+m+d} \times \mathbf{R}\rightarrow \{ 0,1 \}\) by

given \(\hat{\theta } (t_0 -1) = \theta _0\), for \(t \ge t_0-1\) we define (Footnote 6)

which we then project onto \(\mathcal{S}\):

Remark 4

We label this approach vigilant estimation for two reasons.

(i) First of all, suppose that the disturbance is zero, i.e. \(w(t)=0\), and \(\phi (t-d+1) \ne 0\). Then a careful examination of the update quantity reveals that \({\rho _{\delta }}( \cdot , \cdot ) =1\) and

$$\begin{aligned} {\rho _{\delta }}( \cdot , \cdot ) \frac{ \phi (t-d+1)}{\Vert \phi (t-d+1) \Vert ^2} e(t+1) = - \frac{ \phi (t-d+1) \phi (t-d+1)^T}{\Vert \phi (t-d+1) \Vert ^2} [ \hat{\theta } (t) - \theta ^* ] ; \end{aligned}$$

so we see that the gain on the parameter estimate error \(\hat{\theta } (t) - \theta ^* \) is exactly

$$\begin{aligned} -\frac{ \phi (t-d+1) \phi (t-d+1)^T}{\Vert \phi (t-d+1) \Vert ^2}, \end{aligned}$$

which is scale invariant: if we replace \(\phi (t-d+1)\) by \(c \phi (t-d+1)\) for any non-zero c, then the quantity is the same. Hence, the estimator is as alert when \(\phi \) is small as when it is large; this differs from the classical algorithm, wherein the gain gets small when \(\phi \) is small.

(ii) Second of all, as discussed above, the update algorithm turns off if it is clear that the disturbance is overwhelming the estimation process.
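The two properties in Remark 4 can be illustrated with a small sketch of one estimator step. Several things here are assumptions rather than the authors' definitions: \(\mathcal{S}\) is taken to be a box (so that projection is a coordinate-wise clamp), the update is switched off when its magnitude is at least \(2 \Vert \mathcal{S} \Vert + \delta \) (the strict versus non-strict inequality is a guess), and the names `vigilant_update` and `project_box` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_box(theta, lower, upper):
    # Euclidean projection onto the box {lower <= theta <= upper}.
    return [min(max(x, lo), hi) for x, lo, hi in zip(theta, lower, upper)]


def vigilant_update(theta_hat, phi, y_next, lower, upper, delta):
    """One estimator step; phi plays the role of phi(t-d+1), y_next of y(t+1).

    Returns (new_estimate, updated), where updated is False when the
    estimator is switched off for this step.
    """
    # ||S|| for a box: the norm of its farthest corner from the origin.
    size_s = math.sqrt(sum(max(abs(lo), abs(hi)) ** 2
                           for lo, hi in zip(lower, upper)))
    e = y_next - dot(phi, theta_hat)              # prediction error
    phi_norm = math.sqrt(dot(phi, phi))
    if phi_norm == 0.0:
        return list(theta_hat), False             # no information: hold
    if abs(e) / phi_norm >= 2.0 * size_s + delta:
        return list(theta_hat), False             # update too large: hold
    theta_check = [th + p * e / phi_norm ** 2
                   for th, p in zip(theta_hat, phi)]
    return project_box(theta_check, lower, upper), True


# Hypothetical noise-free data generated by theta_star = [1.5, 1.0].
theta_star = [1.5, 1.0]
lower, upper = [-2.0, 0.5], [2.0, 2.0]
phi = [0.2, -0.1]
y_next = dot(phi, theta_star)
estimate, updated = vigilant_update([0.0, 2.0], phi, y_next,
                                    lower, upper, delta=1.0)
```

Scaling `phi` and `y_next` by the same non-zero constant leaves `estimate` unchanged, which is the scale invariance described in (i); adding a large offset to `y_next` makes the function return the old estimate, which is the switching behaviour described in (ii). Passing `delta=math.inf` disables the switch.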

2.3 Properties of the estimation algorithm

Analysing the closed-loop system will require a careful analysis of the estimation algorithm. We define the parameter estimation error by \(\tilde{\theta } (t): = \hat{\theta } (t) - \theta ^* \) and the corresponding Lyapunov function associated with \(\tilde{\theta } (t)\), namely \(V(t) : = \tilde{\theta } (t)^T \tilde{\theta } (t) \). In the following result, we list a property of V(t); it is a straight-forward generalization of what holds in the pole placement setup of [20, 25].
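For intuition about why \(V(t)\) is a natural choice, the noise-free case can be worked out directly from the gain identified in Remark 4. This is only a sketch under the assumptions \(w = 0\), \(\phi (t-d+1) \ne 0\) and \(\theta ^* \in \mathcal{S}\); it is not a substitute for the precise statement of Proposition 1. Write \(\phi := \phi (t-d+1)\) and let \(\check{\theta } (t+1)\) denote the estimate before projection (a symbol introduced here for illustration). Then

$$\begin{aligned} \check{\theta } (t+1) - \theta ^* = \left[ I - \frac{ \phi \phi ^T}{\Vert \phi \Vert ^2} \right] \tilde{\theta } (t) , \end{aligned}$$

and since the matrix in brackets is an orthogonal projection and \(e(t+1) = - \phi ^T \tilde{\theta } (t)\) in this case,

$$\begin{aligned} \Vert \check{\theta } (t+1) - \theta ^* \Vert ^2 = V(t) - \frac{ [ \phi ^T \tilde{\theta } (t) ]^2}{\Vert \phi \Vert ^2} = V(t) - \frac{ e(t+1)^2}{\Vert \phi \Vert ^2} . \end{aligned}$$

Projection onto a convex set containing \(\theta ^*\) cannot increase the distance to \(\theta ^*\), so under these assumptions \(V(t+1) \le V(t) - \frac{e(t+1)^2}{\Vert \phi \Vert ^2}\); in particular \(V\) is non-increasing.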

Proposition 1

For every \(t_0 \in \mathbf{Z}\), \(x_0 \in \mathbf{R}^{n+m+3d-2}\), \({\theta }_0 \in \mathcal{S}\), \(\theta _{ab}^* \in \mathcal{S}_{ab}\), \(y^*, w \in {l_{\infty }}\), and \(\delta \in ( 0, \infty ]\), when the estimator (8) and (9) is applied to the plant (1), the following holds:

2.4 The control law

The elements of \(\hat{\theta } (t)\) are partitioned in a natural way as

The d-step-ahead adaptive control law is that of

or equivalently

Hence, as is common in this setup, we assume that the controller has access to the reference signal \(y^*(t)\) exactly d time units in advance.
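To make the interplay between estimator and control law concrete, here is a minimal simulation sketch for the simplest case \(n = 1\), \(m = 0\), \(d = 1\), with no noise. Everything specific in it is an assumption for illustration: the plant \(y(t+1) = 1.5 y(t) + u(t)\), the box used for \(\mathcal{S}\), the reference signal, and the choice \(\delta = \infty \) (so the estimator is never switched off); the names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_box(theta, lower, upper):
    return [min(max(x, lo), hi) for x, lo, hi in zip(theta, lower, upper)]


def simulate_adaptive_tracking(steps=200):
    """Certainty-equivalence one-step-ahead control, phi(t) = [y(t), u(t)]."""
    theta_star = [1.5, 1.0]                  # true [g_0, beta_0], unknown to
    lower, upper = [-2.0, 0.5], [2.0, 2.0]   # the controller; S keeps beta_0 > 0
    theta_hat = [0.0, 2.0]                   # initial estimate in S
    y, phi_prev = 1.0, None
    v_hist, err_hist = [], []
    for t in range(steps):
        # Estimator: ideal projection update (delta = inf), then project on S.
        if phi_prev is not None and dot(phi_prev, phi_prev) > 0.0:
            e = y - dot(phi_prev, theta_hat)             # prediction error
            gain = e / dot(phi_prev, phi_prev)
            theta_hat = project_box(
                [th + p * gain for th, p in zip(theta_hat, phi_prev)],
                lower, upper)
        v_hist.append(sum((th - ts) ** 2
                          for th, ts in zip(theta_hat, theta_star)))
        # Control: pick u(t) so that the *estimated* prediction of y(t+1)
        # equals the reference one step ahead.
        y_ref_next = math.sin(0.2 * (t + 1))
        u = (y_ref_next - theta_hat[0] * y) / theta_hat[1]
        phi_prev = [y, u]
        y = dot(phi_prev, theta_star)                    # plant response
        err_hist.append(y - y_ref_next)                  # tracking error
    return v_hist, err_hist, theta_hat


v_hist, err_hist, theta_final = simulate_adaptive_tracking()
```

In this sketch the division by the estimate of \(\beta _0\) is safe because the box keeps it at or above 0.5, which mirrors the requirement that the corresponding element of \(\mathcal{S}\) is never zero. The squared parameter error `v_hist` is non-increasing along the run, as expected in the noise-free case.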

Remark 5

With this choice of control law, it is easy to prove that the prediction error e(t) and the tracking error

are different if \(d \ne 1\). Indeed, it is easy to see that

Notice, in particular, that (12) provides a nice closed-form expression for the tracking error \({\varepsilon }(t)\) only for \(t \ge t_0 + d\); the reason is that the tracking error for \(t= t_0 ,\ldots , t_0+d-1\) is determined by \(x_0\), w and \(y^*\).

The goal of this paper is to prove that the adaptive controller consisting of the estimator (8), (9) together with the control equation (11) yields highly desirable linear-like convolution bounds on the closed-loop behaviour as well as provides good tracking of \(y^*\). In the next section, we develop several models used in the development, after which we state and prove the main result.

Remark 6

While the proposed adaptive controller which consists of the estimator (8), (9) together with the controller (11) is nonlinear, when it is applied to the plant it turns out that the closed-loop system enjoys the homogeneity property. While it does not enjoy the additivity property needed for linearity, we will soon see that we are still able to prove linear-like convolution bounds on the closed-loop behaviour.

3 Preliminary analysis

In the pole-placement adaptive control setup of our earlier work [20, 25], a key closed-loop model consists of an update equation for \(\phi (t)\), with the state matrix consisting of controller and plant estimates; this was effective—the characteristic polynomial of this matrix is time-invariant and has all roots in the open unit disk. If we were to mimic this in the d-step-ahead setup, the characteristic polynomial would have roots which are time-varying, with some at zero and the rest at the zeros of the naturally defined polynomial \(\hat{\beta } (t, z^{-1})\), which is time-varying and may not have roots in the open unit disk. Hence, at this point we make an important deviation from the approach of [20, 25]: we construct three different models for use in the analysis.

3.1 A good closed-loop model

In the first model, we obtain an update equation for \(\phi (t)\) which avoids the use of plant parameter estimates, but which is driven by the tracking error. Only two elements of \(\phi \) have a complicated description:

and the \(u(t+1)\) term, for which we use the original plant model to write

With \(e_i \in \mathbf{R}^{n+m+d}\) the \(i^{th}\) standard basis vector, if we now define

then it is easy to see that there exists a matrix \(A_g \in \mathbf{R}^{(n+m+d) \times (n+m+d )}\) (which depends implicitly on \(\theta _{ab}^* \in \mathcal{S}_{ab}\)) so that the following equation holds:

The characteristic polynomial of \(A_g\) equals \(\frac{1}{b_0} z^{n+m+d} B( z^{-1} )\), so all of its roots are in the open unit disk.

3.2 A crude closed-loop model

At times, we will need to use a crude model to bound the size of the growth of \(\phi (t)\) in terms of the exogenous inputs. Once again, only two elements of \(\phi (t)\) have a complicated description: to describe \(y(t+1)\) we use the plant model (1):

and to describe \(u(t+1)\) we use the control law:

it is easy to define \(\bar{\theta }_{\alpha \beta } (t)\) in terms of the elements of \(\hat{\theta } (t+1)\) so that

If we combine this with the formula for \(y(t+1)\) above, we end up with

Hence, we can define matrices \(A_b (t)\), \(B_3 (t)\) and \(B_4 (t)\) so that

due to the compactness of \(\mathcal{S}_{ab}\), \(\mathcal{S}_{\alpha \beta }\) and \(\mathcal{S}\), the following is immediate:

Proposition 2

There exists a constant \(c_1\) so that for every \(t_0 \in \mathbf{Z}\), \(x_0 \in \mathbf{R}^{n+m+3d-2}\), \({\theta }_0 \in \mathcal{S}\), \(\theta _{ab}^* \in \mathcal{S}_{ab}\), \(y^*,w \in {l_{\infty }}\), and \(\delta \in ( 0, \infty ]\), when the adaptive controller (8), (9) and (11) is applied to the plant (1), the following holds:

3.3 A better closed-loop model

The good closed-loop model (15) is driven by future tracking error signals. We can now combine this with the crude closed-loop model (16) to create a new model which is driven by perturbed versions of the present and past values of \(\phi \), with the weights associated with the parameter update law. Motivated by the form of the term in the parameter estimator, we first define

The following result plays a pivotal role in the analysis of the closed-loop system.

Proposition 3

There exists a constant \(c_2\) so that for every \(t_0 \in \mathbf{Z}\), \(x_0 \in \mathbf{R}^{n+m+3d-2}\), \({\theta }_0 \in \mathcal{S}\), \(\theta _{ab}^* \in \mathcal{S}_{ab}\), \(y^*, w \in {l_{\infty }}\), and \(\delta \in ( 0, \infty ]\), when the adaptive controller (8), (9) and (11) is applied to the plant (1), the following holds:

with

and

Proof

See Appendix. \(\square \)

To make the model of Proposition 3 amenable to analysis, we define a new extended state variable and associated matrices:

and

which gives rise to a state-space model which will play a key role in our analysis:

Now \(A_g\) arises from \(\theta _{ab}^* \in \mathcal{S}_{ab}\) and lies in a corresponding compact set \(\mathcal{A}\); furthermore, its eigenvalues are at zero and at the zeros of \(B(z^{-1})\), which has all of its roots in the open unit disk, so we can use classical arguments to prove that there exist constants \(\gamma \) and \(\sigma \in (0,1)\) so that for all \(\theta _{ab}^* \in \mathcal{S}_{ab}\), we have

indeed, for every \(\sigma \) larger than

we can choose \(\gamma \) so that (21) holds.
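The existence of such a pair \((\gamma , \sigma )\) can be explored numerically for a single matrix. The sketch below assumes a bound of the form \(\Vert A^k \Vert \le \gamma \sigma ^k\) for \(k \ge 0\) and uses a hypothetical \(2 \times 2\) companion matrix with eigenvalues 0.2 and 0.3; it searches a finite horizon only, so it is a numerical check rather than a proof, and it does not address uniformity over a compact set of matrices.

```python
import math


def mat_mul(a, b):
    # Product of two 2x2 matrices given as nested lists.
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def spectral_norm_2x2(m):
    # Largest singular value of a 2x2 matrix in closed form:
    # the eigenvalues of M^T M have sum s and product det(M)^2.
    (a, b), (c, d) = m
    s = a * a + b * b + c * c + d * d
    det = a * d - b * c
    disc = max(s * s - 4.0 * det * det, 0.0)
    return math.sqrt((s + math.sqrt(disc)) / 2.0)


def decay_constant(a_mat, sigma, horizon=60):
    """Smallest gamma with ||A^k|| <= gamma * sigma^k for k = 0..horizon."""
    gamma = 0.0
    power = [[1.0, 0.0], [0.0, 1.0]]
    for k in range(horizon + 1):
        gamma = max(gamma, spectral_norm_2x2(power) / sigma ** k)
        power = mat_mul(power, a_mat)
    return gamma


# Hypothetical example: characteristic polynomial z^2 - 0.5 z + 0.06.
A_example = [[0.0, 1.0], [-0.06, 0.5]]
gamma = decay_constant(A_example, sigma=0.5)
```

Because \(\sigma = 0.5\) exceeds the spectral radius 0.3, the ratio \(\Vert A^k \Vert / \sigma ^k\) eventually decays, so the maximum is attained at a small \(k\); choosing \(\sigma \) closer to the spectral radius generally requires a larger \(\gamma \).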

Equations of the form given in (20) arise in classical adaptive control approaches. While we can view (20) as a linear time-varying system, we have to bear in mind that both \(\Delta (t)\) and \(\eta (t)\) are (nonlinear) implicit functions of all of the data: \(\theta _{ab}^*\), \(\theta _0\), \(x_0\), \(y^*\) and w. That being said, the linear time-varying interpretation is very convenient for analysis; to this end, we let \(\Phi _{ {A}}\) denote the state transition matrix of a general time-varying square matrix A. The following result will prove useful in analysing our closed-loop systems on sub-intervals for which the constraints hold.

Proposition 4

With \(\sigma \in ( \underline{\lambda } , 1)\), suppose that \(\gamma \ge 1\) is such that (21) is satisfied for every \(A_g \in \mathcal{A}\). For every \(\mu \in ( \sigma , 1 )\), \(\beta _0 \ge 0\), \(\beta _1 \ge 0\), and

there exists a constant \(\bar{\gamma } \ge 1\) so that for every \(A_g \in \mathcal{A}\) and \(\Delta \in s( \mathbf{R}^{( n+m+d)d \times ( n+m+d)d )} )\) satisfying

we have

Proof

Fix \(\sigma \in ( \underline{\lambda } , 1)\) and \(\gamma \ge 1\) so that (21) is satisfied for every \(A_g \in \mathcal{A}\). For every \(\mu \in ( \sigma , 1 )\), \(\beta _0 \ge 0\), \(\beta _1 \ge 0\), and

it follows from the lemma of Kreisselmeier [10] that there exists a constant \(\bar{\gamma }\), which is independent of \(A_g \in \mathcal{A}\) (though dependent on \(\beta _2\), \(\gamma \), \(\mu \), and \(\sigma \)), so that if (22) holds then (23) holds as well. \(\square \)

In the next section, we prove that the closed-loop system is exponentially stable and that there is a convolution bound on the closed-loop behaviour. Following that, we analyse robustness and address the tracking problem.

4 Closed-loop stability

Theorem 1

For every \(\delta \in ( 0 , \infty ]\) and \(\lambda \in ( \underline{\lambda } ,1)\), there exists a constant \(c>0\) so that for every \(t_0 \in \mathbf{Z}\), plant parameter \({\theta }_{ab}^* \in \mathcal{S}_{ab}\), exogenous signals \(y^*, w \in \ell _{\infty }\), estimator initial condition \(\theta _0 \in \mathcal{S}\), and plant initial condition

when the adaptive controller (8), (9) and (11) is applied to the plant (1), the following bound holds:

Remark 7

Theorem 1 implies that the system has a bounded gain (from \(y^*\) and w to y) in every p-norm.
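The step from a convolution bound to a bounded gain can be sketched for a generic sequence. The symbols \(x\), \(r\) and \(x_0\) below are placeholders introduced here for illustration, and the assumed form of the bound is generic; the exact quantities are those in Theorem 1. Suppose that \(x(k) \ge 0\) and \(r(k) \ge 0\) satisfy, for some \(c > 0\) and \(\lambda \in (0,1)\),

$$\begin{aligned} x(k) \le c \lambda ^{k-t_0} x_0 + \sum _{j=t_0}^{k} c \lambda ^{k-j} r(j) , \quad k \ge t_0 . \end{aligned}$$

Summing the geometric series gives

$$\begin{aligned} \sup _{k \ge t_0} x(k) \le c x_0 + \frac{c}{1- \lambda } \sup _{j \ge t_0} r(j) , \end{aligned}$$

and, for \(1 \le p < \infty \), Minkowski's inequality together with Young's convolution inequality gives

$$\begin{aligned} \left( \sum _{k \ge t_0} x(k)^p \right) ^{1/p} \le \frac{c}{(1- \lambda ^p )^{1/p}} x_0 + \frac{c}{1- \lambda } \left( \sum _{j \ge t_0} r(j)^p \right) ^{1/p} . \end{aligned}$$

In both cases the gain from \(r\) to \(x\) is at most \(\frac{c}{1-\lambda }\), independently of \(p\).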

Remark 8

Most adaptive controllers are proven to yield a weak form of stability, such as boundedness (in the presence of a non-zero disturbance) or asymptotic stability (in the case of a zero disturbance), which means that details surrounding initial conditions can be ignored. Here the goal is to prove a stronger, linear-like, convolution bound as well as exponential stability, so it requires a much more detailed analysis.

Proof

Fix \(\delta \in (0, \infty ]\) and \(\lambda \in ( \underline{\lambda }, 1)\), and let \(t_0 \in \mathbf{Z}\), \(\theta _{ab}^* \in \mathcal{S}_{ab}\), \(y^*, w\in {l_{\infty }}\), \(\theta _0 \in \mathcal{S}\), and \(x_0 \in \mathbf{R}^{n+m+3d-2}\) be arbitrary. Now choose \(\sigma \in ( \underline{\lambda } , \lambda )\). Observe that \(x_0\) gives rise to \(\phi (t_0 -1)\),..., \(\phi (t_0 - d+1)\), and therefore \(\bar{\phi } (t_0 -1)\), which we label \(\bar{\phi }_0\); it is clear that \(\Vert \bar{\phi }_0 \Vert \le d \Vert x_0 \Vert \) and

Step 1 Preamble and preliminary results

To proceed we will analyse (20), namely

and obtain a bound on \(\bar{\phi } (t)\) in terms of \(\eta (t)\), \(\bar{w} (t)\), and \(y^* (t)\), which we will then convert to the desired form. First of all, we see from Proposition 3 that there exists a constant \(\gamma _1\) so that (Footnote 7)

and

Second of all, following Sect. 3.3 we see that there exists a constant \(\gamma _2\) so that for every \(A_g \in \mathcal{A}\), we have that the corresponding matrix \(\bar{A}_g\) satisfies

Before proceeding, we provide several preliminary results. The first shows that we can always obtain a nice bound on the closed-loop behaviour on a short interval.

Claim 1

There exists a constant \(\gamma _3\) so that for every \(\underline{t} \ge t_0 -1\), we have

Proof of Claim 1

Using the crude model given in (16) together with Proposition 2, we see that there exists a constant \(c_1 \ge 1\) so that

Using the definition of \(\tilde{w} (t)\), this immediately implies that

Now let \(\underline{t} \ge t_0 -1\) be arbitrary. By solving (28) from \(t = \underline{t}\), we have

If we set \(\gamma _3 := \left( \frac{c_1}{ \lambda } \right) ^{4d }\), then the result follows. \(\square \)

In order to apply Proposition 4, we need to compute a bound on the sum of \(\Vert \Delta (t) \Vert \) terms; the following result provides an avenue.

Claim 2

There exists a constant \(\gamma _4\) so that

Proof of Claim 2

Since there are 2d terms in the RHS of (27), it is easy to see that

If we apply the Cauchy–Schwarz inequality, then we have

But

so the result follows. \(\square \)

Step 2 Partition the time-line.

Now we consider the closed-loop system behaviour. To proceed, we partition the timeline into two parts: one in which the noise is small and one where it is not; the idea is that when the noise is small, the closed-loop behaviour should be similar to that when the noise is zero. Of course, here we have to choose the notion of “small” carefully: we do so by using the scaled version of the noise, namely \( \frac{ | \bar{w} (t) |}{\Vert \phi (t) \Vert }\), which appears in the bound on V given in Proposition 1. To this end, with \(\xi >0 \) to be chosen shortly, partition \(\{ j \in \mathbf{Z}: j \ge t_0 \}\) into

clearly \(\{ j \in \mathbf{Z}: \;\; j \ge t_0 \} = S_\mathrm{good} \cup S_\mathrm{bad} \) [Footnote 8]. Observe that this partition implicitly depends on the system parameters \(\theta _{ab}^* \in \mathcal{S}_{ab}\), as well as the initial conditions. We will apply Proposition 4 to analyse the closed-loop system behaviour on \(S_\mathrm{good}\); on the other hand, we will easily obtain bounds on the system behaviour on \(S_\mathrm{bad}\). Before doing so, we partition the time index set \(\{ j \in \mathbf{Z}: j \ge t_0 \}\) into intervals which alternate between \(S_\mathrm{good}\) and \(S_\mathrm{bad}\). To this end, it is easy to see that we can define a (possibly infinite) sequence of intervals of the form \([ k_i , k_{i+1} )\) satisfying:

-

(i)

\(k_1 = t_0 \);

-

(ii)

\([ k_i , k_{i+1} )\) either belongs to \(S_\mathrm{good}\) or \(S_\mathrm{bad}\); and

-

(iii)

if \(k_{i+1} \ne \infty \) and \([ k_i , k_{i+1} )\) belongs to \(S_\mathrm{good}\) (respectively, \(S_\mathrm{bad}\)), then the interval \([ k_{i+1} , k_{i+2} )\) must belong to \(S_\mathrm{bad}\) (respectively, \(S_\mathrm{good}\)).
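The partition and the alternating intervals can be illustrated numerically. The Python fragment below is a minimal sketch on a finite horizon, not the construction used in the proof. It assumes, consistent with the characterization of \(S_\mathrm{bad}\) used in Step 3, that a time \(j\) is good when \(\phi (j) \ne 0\) and \([ \bar{w} (j)]^2 / \Vert \phi (j) \Vert ^2 < \xi \); the function names `classify` and `intervals` and the sample data are hypothetical.

```python
def classify(phi_norms, w_bar, xi):
    """Label each time index True (good) or False (bad).

    Assumed rule: j is good iff phi(j) != 0 and w_bar(j)^2 / ||phi(j)||^2 < xi.
    """
    labels = []
    for p, w in zip(phi_norms, w_bar):
        labels.append(p > 0.0 and (w * w) / (p * p) < xi)
    return labels


def intervals(labels, t0=0):
    """Split [t0, t0 + len(labels)) into maximal runs with a constant label.

    Returns a list of (k_i, k_next, is_good) describing half-open runs
    [k_i, k_next); consecutive runs alternate between good and bad.
    """
    runs = []
    start = 0
    for j in range(1, len(labels) + 1):
        if j == len(labels) or labels[j] != labels[start]:
            runs.append((t0 + start, t0 + j, labels[start]))
            start = j
    return runs


# Hypothetical data: state norms and scaled-noise samples on five time steps.
phi_norms = [1.0, 2.0, 0.0, 0.5, 4.0]
w_bar = [0.1, 0.1, 0.3, 1.0, 0.2]
runs = intervals(classify(phi_norms, w_bar, xi=0.25), t0=10)
print(runs)  # [(10, 12, True), (12, 14, False), (14, 15, True)]
```

By construction the first run starts at \(t_0\), each run lies entirely in one of the two sets, and adjacent runs carry opposite labels, which mirrors properties (i) to (iii).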

Now we analyse the behaviour during each interval.

Step 3 The closed-loop behaviour on \(S_\mathrm{bad}\).

Suppose that \([ k_i , k_{i+1} )\) lies in \(S_\mathrm{bad}\), and let \(j \in [ k_i , k_{i+1} )\) be arbitrary. Then either \(\phi (j) = 0\) or \(\frac{[ \bar{w} (j)]^2}{ \Vert \phi (j) \Vert ^2} \ge \xi \) holds. In either case, we have

From the crude model (16) and Proposition 2, we have

If we combine this with (29), we end up with

Step 4 The closed-loop behaviour on \(S_\mathrm{good}\).

Suppose that \([ k_i , k_{i+1} )\) lies in \(S_\mathrm{good}\); this case is much more involved than in the proofs of [20, 25], since the bound on \(\Vert \Delta (t) \Vert \) provided by Claim 2 extends both forward and backward in time, occasionally outside \(S_\mathrm{good}\). Furthermore, the difference equation for \(\bar{\phi }\) given in (25) only holds for \( t \ge t_0 + 2d-2\), so if \(k_i = t_0\) then we cannot use it until \(k_i + 2d -2\). For this reason, we will handle the first 2d and last 2d time units separately.

To this end, first suppose that \(k_{i+1} \le k_i + 4d\). Then by Claim 1, we see that there exists a constant \(\gamma _5\) so that

Now suppose that \(k_{i+1} > k_i+4d\). Define \(\bar{k}_i:= k_i +2d \) and \( \underline{k}_{i+1}:= k_{i+1} -2d \). By the definition of \(S_\mathrm{good}\) and the fact that \(\rho _{\delta } ( \cdot , \cdot ) \in \{ 0 , 1 \}\), it follows that

so

By Proposition 1, we see that

Using the fact that the first term in the sum is \(\nu ( j)\), using (32) to provide a bound on the second term in the sum, and using the fact that

it follows that

We would like to leverage this bound on \(\nu (j)\) to obtain a bound on \(\Vert \Delta (j) \Vert \). From Claim 2

as long as we keep

or equivalently

then we can use (33) to provide a bound on the RHS; this will definitely be the case if we restrict

resulting in

If we restrict \(\xi \le 1\), then we obtain

if we define

then for \(\xi \le 1\) we have

Now we will apply Proposition 4: we set

we need \({\beta _2} < \frac{1}{ \gamma } ( \mu - \sigma ) \), or equivalently

so we set

So from Proposition 4, we see that there exists a constant \(\gamma _7\) so that the state transition matrix \(\Phi _{\bar{A}_g + \Delta } (t , \tau )\) satisfies

In order to solve (25), we need a bound on \(\eta (t)\). But (33) implies that

so from (26) we see that

If we use this in the difference equation (25), we see that there exists a constant \(\gamma _8\) so that

However, we would like a bound on the whole interval \([k_i , k_{i+1} )\), and we would like it on \(\phi \) rather than \(\bar{\phi }\).

Claim 3

There exists a constant \(\gamma _9\) so that

Proof of Claim 3

First of all, on the interval \([k_i , \bar{k}_i]\) we can apply Claim 1:

This provides a bound of the desired form on the first sub-interval of \([k_i , k_{i+1})\).

Next, from (34) we have

Using the definition of \(\bar{\phi }\) and Claim 1 (with \(\underline{t} = k_i\)), we have

If we now substitute this into (35), then we have

if we define \(\bar{\gamma }_8 := \gamma _8 + \gamma _8 \bar{\gamma }_3\), then it follows that

This provides a bound of the desired form on the second sub-interval of \([k_i , k_{i+1} ]\).

Last of all, we would like to obtain a bound of the desired form on \([ \underline{k}_{i+1} , k_{i+1} ]\). By Claim 1 we have

Using (36) to obtain a bound on \( \Vert \phi ( \underline{k}_{i+1} ) \Vert \), we obtain

So if we set

then the result holds. \(\square \)

Step 5 Analysing the whole time-line.

At this point, we glue together the bounds obtained on \(S_\mathrm{bad}\) and \(S_\mathrm{good}\) to obtain a bound which holds on all of \([ t_0 , \infty )\).

Claim 4

There exists a constant \(\gamma _{10}\) so that the following bound holds:

Proof of Claim 4

If \([k_1 , k_2 ) = [t_0 , k_2) \subset S_\mathrm{good}\), then (37) holds for \(k \in [t_0 , k_2 ]\) by Claim 3 as long as

If \([k_1 , k_2 ) = [t_0 , k_2) \subset S_\mathrm{bad}\), then from (30) we see that the bound holds as long as

We now use induction: suppose that (37) holds for \(k \in [k_1 , k_i ]\); we need to prove that it holds for \(k \in ( k_i, k_{i+1} ]\) as well. If \( [k_i, k_{i+1}) \subset S_\mathrm{bad}\), then from (30) we see that the bound holds on \((k_i , k_{i+1} ]\) as long as (39) holds. On the other hand, if \([k_i, k_{i+1}) \subset S_\mathrm{good}\), then \(k_i -1 \in S_\mathrm{bad}\); from (30) we have that

combining this with Claim 3, which provides a bound on \(\Vert \phi (k)\Vert \) for \(k \in [k_i , k_{i+1} )\), we have

So the bound holds in this case as long as

\(\square \)

Step 6 Obtaining a bound of the desired form

The last step is to convert the bound proven in Claim 4 to one of the desired form, i.e. we need to replace \( \tilde{w} \) with w and \(y^*\). First of all, using the definition of \(\tilde{w}\) given in (26), we see that

From the definition of \(\bar{w} (t)\), we have that

the \(f_i\)'s arise from long division and are continuous functions of \(\theta _{ab}^* \in \mathcal{S}_{ab}\), so it follows that there exists a constant \(\gamma _{11}\) so that for all \(\theta _{ab}^* \in \mathcal{S}_{ab}\), we have

It turns out that some of the terms in the sum are superfluous:

-

(i)

We see from the control law (11) that \(y^* (t)\) affects \(\phi (t)\) only via u(t). Indeed, u(t) explicitly depends on \(y^* (t+d)\) which means, by causality, that \(y^*( t+d+1)\) has no effect on \(\phi (t)\).

-

(ii)

We see from the original plant equation (1) that w(t) affects \(\phi (t)\) via y(t). Indeed, y(t) explicitly depends on w(t), which means, by causality, that \( \sum _{j=1}^{2d+1} | w(t+j) | \) can have no effect on \(\phi (t)\).

If we combine these observations with Claim 4, we see that the bound becomes

if we examine each term in the summation, it is straightforward to verify that

Now \(y^*\) affects \(\phi \) via the control signal, and it is clear that \(y^* (k)\) for \(k < t_0 + d \) has no effect on \(\phi (k)\), \(k \ge t_0\), so the \(\psi _1 (k)\) term can be ignored [Footnote 9]. On the other hand, the \(\psi _2 (k)\) term does have an effect: first observe that \(\psi _2\) can be rewritten as

second of all, it follows from the plant equation (1) that each of \(\{ w(t_0 -d+1),\ldots , w(t_0-1) \}\) can be rewritten as a linear function of \(x_0\), so it follows that there exists a constant \(\gamma _{12}\) so that

Since \(\Vert \phi (t_0 ) \Vert \le \Vert x_0 \Vert \), we conclude that

as desired. \(\square \)

5 Robustness to time-variations and unmodelled dynamics

It turns out that the exponential stability property and the convolution bounds proven in Theorem 1 will guarantee robustness to a degree of time-variations and unmodelled dynamics; in this way, the approach has a lot in common with an LTI closed-loop system, which also enjoys this feature. Indeed, as we have recently proven in [36], this is true even in a more general situation than that considered here. At this point, we will state the key result and then simply refer to that paper for the proof.

To proceed, consider a time-varying version of the reparameterized plant (2), in which \(\theta ^*\) is now time-varying and there are some unmodelled dynamics which enter the system via \(\bar{w} _{\Delta } (t)\):

-

(i)

Time variation model. Since the mapping from \(\mathcal{S}_{ab}\) to \(\mathcal{S}_{\alpha \beta }\) is analytic and both sets are compact, we may as well consider the time-variations in the latter setting. We adopt a common model of acceptable time-variations used in adaptive control, e.g. see [10]: with \(c_0 \ge 0\) and \(\epsilon >0\), we let \({s} ( \mathcal{S}_{ \alpha \beta } , c_0, \epsilon )\) denote the subset of \({l_{\infty }}( \mathbf{R}^{n+m+d})\) whose elements \(\theta ^*\) satisfy \(\theta ^* (t) \in \mathcal{S}_{ \alpha \beta }\) for every \(t \in \mathbf{Z}\) as well as

$$\begin{aligned} \sum _{t=t_1}^{t_2-1} \Vert \theta ^* (t+1) - \theta ^* (t) \Vert \le c_0 + \epsilon ( t_2 - t_1 ) , \; t_2 > t_1 \end{aligned} \tag{41}$$

for every \(t_1 \in \mathbf{Z}\).

-

(ii)

Unmodelled dynamics. We also adopt a common model of unmodelled dynamics:

$$\begin{aligned} {m} (t+1) = \beta {m} (t) + \beta \Vert \phi (t) \Vert , \; m(t_0)= m_0 , \end{aligned} \tag{42}$$

$$\begin{aligned} \bar{w} _{\Delta } (t) \le \mu m(t) + \mu \Vert \phi (t) \Vert . \end{aligned} \tag{43}$$

As argued in [25], this encapsulates a large class of additive uncertainty, multiplicative uncertainty and uncertainty in a coprime factorization, which is common in the robust control literature, e.g. see [46], and is commonly used in the adaptive control literature, e.g. see [11].
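As a concrete reading of (41)–(43), the sketch below checks the variation budget (41) on a finite record of parameter vectors and propagates the scalar recursion (42) to evaluate the right-hand side of (43). It is only an illustration on a finite horizon: the function names, the tolerance and the sample data are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def satisfies_variation_bound(theta, c0, eps, tol=1e-12):
    """Check (41) on every window t1 < t2 of a finite record theta[0..N-1].

    theta has one parameter vector per row; tol absorbs rounding error.
    """
    theta = np.asarray(theta, dtype=float)
    theta = theta.reshape(len(theta), -1)
    # steps[t] = ||theta(t+1) - theta(t)||
    steps = np.linalg.norm(np.diff(theta, axis=0), axis=1)
    cum = np.concatenate(([0.0], np.cumsum(steps)))
    n = len(theta)
    for t1 in range(n - 1):
        for t2 in range(t1 + 1, n):
            # cum[t2] - cum[t1] is the sum of steps[t] for t = t1, ..., t2 - 1
            if cum[t2] - cum[t1] > c0 + eps * (t2 - t1) + tol:
                return False
    return True


def unmodelled_bound(phi_norms, beta, mu, m0=0.0):
    """Propagate (42) and return mu*m(t) + mu*||phi(t)||, the RHS of (43)."""
    m = m0
    bounds = []
    for p in phi_norms:
        bounds.append(mu * m + mu * p)
        m = beta * m + beta * p
    return bounds


# Hypothetical record: a slow drift in the first parameter, then a jump of 0.5.
t = np.arange(100)
drift = np.column_stack((0.001 * t, np.ones(100)))
jump = drift.copy()
jump[50:, 1] += 0.5

print(satisfies_variation_bound(drift, c0=0.0, eps=0.001))
print(satisfies_variation_bound(jump, c0=0.0, eps=0.001))
print(satisfies_variation_bound(jump, c0=0.5, eps=0.001))
```

In this example the pure drift fits within the rate \(\epsilon \) alone, whereas the record with a jump only fits once the offset \(c_0\) is large enough to absorb it, which is the role \(c_0\) plays in (41).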

We will now show that if the time-variations are slow enough and the size of the unmodelled dynamics is small enough, then the closed-loop system retains exponential stability as well as the convolution bounds.

Theorem 2

For every \(\delta \in ( 0 , \infty ]\), \(c_0 \ge 0\) and \(\beta \in (0,1)\), there exist an \(\epsilon >0\), \(\mu > 0\), \({\lambda } \in ( \max \{ \beta , \underline{\lambda } \} ,1)\) and \({c} >0\) so that for every \(t_0 \in \mathbf{Z}\), plant parameter \({\theta }^* \in s ( \mathcal{S}_{\alpha \beta }, c_0 , \epsilon )\), exogenous signals \(y^*, w \in \ell _{\infty }\), estimator initial condition \(\theta _0 \in \mathcal{S}\), and plant initial condition

when the adaptive controller (8), (9) and (11) is applied to the plant (40) with \(\bar{w}_{\Delta }\) satisfying (42), (43), the following bound holds:

Proof

This was proven in [25] for the case of pole placement and then extended to a more general setting in Theorems 1 and 3 of [36]; the setup here is a special case of the latter, so the result follows immediately from that. \(\square \)

6 Tracking

We now move from the stability problem to the much harder tracking problem. We first derive a very useful bound on the tracking error in terms of the prediction error. Following that, we analyse the case when the disturbance is absent: we start with the original LTI case, and then we move to the situation in which the parameters are slowly time-varying. Last of all, we consider the original LTI case with a disturbance.

6.1 A useful bound

The d-step-ahead control law (11) can be rewritten as

Since the tracking error is \(\varepsilon (t)=y(t)-y^*(t)\), we have

Combining the above with the prediction error definition in (5), we easily obtain

Observe that the relationship in (45) is true irrespective of the plant model, i.e. it need not satisfy (1) or (2).

Now we turn to the prediction and tracking errors scaled by the state \(\phi \). For \(t\ge t_0+d\), if \(\Vert \phi (t-d)\Vert \ne 0\) then it follows from (45) that

multiplying both sides by \(\rho _{\delta }(\phi (t-d),e(t))\) yields

It turns out that the estimator property in (10) holds irrespective of the plant model; if we use this to yield a bound on the second term on the right-hand side above, then we obtain

By the Cauchy–Schwarz inequality,

Hence, it follows that for \(\bar{t}\ge t_0+2d-1 \) we obtain

The inequality in (46) will prove to be useful in the forthcoming analysis.

In the next two sub-sections, we analyse the case when the disturbance is absent; we start with the original LTI case, and then we move to the situation in which the parameters are slowly time-varying. After that, we analyse the general case.

6.2 The LTI case: no disturbance

In the literature, it is typically proven that the tracking error is square summable, e.g. see [5]. Here we can prove an explicit bound on the 2-norm of the error signal in terms of the plant initial condition and the size of the reference signal, which is a significant improvement.

Theorem 3

For every \(\delta \in ( 0 , \infty ]\) and \(\lambda \in ( \underline{\lambda } ,1)\) there exists a constant \(c>0\) so that for every \(t_0 \in \mathbf{Z}\), plant parameter \({\theta }_{ab}^* \in \mathcal{S}_{ab}\), exogenous signal \(y^*\in \ell _{\infty }\), estimator initial condition \(\theta _0 \in \mathcal{S}\), and plant initial condition

when the adaptive controller (8), (9) and (11) is applied to the plant (1) in the presence of a zero disturbance w, the following bound holds:

Proof

Fix \(\delta \in ( 0 , \infty ]\) and \(\lambda \in (\underline{\lambda },1)\). Let \(t_0 \in \mathbf{Z}\), \({\theta }_{ab}^* \in \mathcal{S}_{ab}\), \(y^*\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S}\), and \(x_0\) be arbitrary. Now suppose that \(w=0\); for this case, by the definition of the function \(\rho _{\delta }\), for \(t\ge t_0+d\) we have

If we incorporate this into the relation (46) with \(\bar{t} = t_0 + 2d-1\), then for \(T > t_0 + 2d-1\) we obtain

Since \({\varepsilon }(t) = 0\) if \(\phi (t-d) = 0\), if we now apply the bound on \(\phi (t)\) proven in Theorem 1, we conclude that there exist constants \(c_1 > 0\) and \(\lambda \in (0,1)\) so that

which yields the desired result. \(\square \)

6.3 The slowly time-varying case: no disturbance

Now we turn to the case in which the plant parameter is slowly time-varying (with no jumps in the parameters) and the disturbance w(t) is zero. We should not expect to get exact tracking; we will be able to prove, roughly speaking, that the tracking error is small on average if the time-variation is small. To proceed, we consider the time-varying plant of (40) without the unmodelled dynamics and with zero noise:

Theorem 4

For every \(\delta \in ( 0 , \infty ]\), there exist constants \(\bar{\epsilon } >0\) and \(\gamma >0\) so that for every \(t_0 \in \mathbf{Z}\), \(\epsilon \in ( 0, \bar{\epsilon })\), \(\theta ^*\in s(\mathcal{S}_{\alpha \beta },0,\epsilon )\), \(y^*\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S}\), and plant initial condition

when the adaptive controller (8), (9) and (11) is applied to the time-varying plant (47), the following holds:

Proof

Fix \(\delta \in ( 0 , \infty ]\) and \(\lambda _1\in (\underline{\lambda },1)\), and set \(w=0\). By Theorem 2, there exist constants \(\gamma _1>0 \) and

so that for every \(t_0\in \mathbf{Z}\), \(y^*\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S} \), initial condition \(x_0\), and \(\theta ^*\in s(\mathcal{S}_{\alpha \beta },0,\bar{\epsilon })\), when the adaptive controller (8), (9) and (11) is applied to the plant (47), the following holds:

Now, let \(t_0\in \mathbf{Z}\), \(y^*\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S} \), \(x_0\), \(\epsilon \in ( 0 , \bar{\epsilon } )\) and \(\theta ^*\in s(\mathcal{S}_{\alpha \beta },0,{\epsilon })\) be arbitrary. We first want to find a bound on the tracking error in terms of the prediction error. Note that the relationships in (45) and (46) hold irrespective of the plant model. Since \(w=0\), it follows that for \(t \ge t_0 +d\):

Now apply (46); by changing the index to facilitate analysis, we obtain

Now we incorporate time-variation. Let \(t_{i}\ge t_{0}\) be arbitrary; then from plant equation (47) we have

Note from (41) that \( \Vert \Delta _i(t) \Vert \le \epsilon \Vert \phi (t)\Vert \left( t-t_i \right) . \) Define \(\tilde{\theta }_i(t):= \hat{\theta }(t)-\theta ^*(t_i)\). Since \( w=0\), by applying Proposition 1 to the plant (50), we obtain [Footnote 10]

Using the above bound in (49) with \(\bar{t} = t_i\), we obtain

Since the disturbance is zero here, it follows that \(\Vert \phi (j)\Vert =0 \) implies that \( \varepsilon (j+d)=0\) [Footnote 11]. So from (51), we conclude that

As \(t_i\ge t_0 \) is arbitrary, we can relabel the indexes and obtain

We now analyse the average tracking error. From (53), we obtain

Now define

and \(T_{\beta }\in \mathbf{N}\) by

this means that

Since \(\epsilon \le 2\Vert \mathcal{S}\Vert \) by design, we can easily check that \(T_\beta \ge d\), which means that

Incorporating this and the definition of \(T_\beta \) into (54), by choosing \(t=t_i+T_\beta \) we have

We would like to obtain a bound on \(\epsilon ^2 T_{\beta }^2\). But it follows from (55) that

so

if we define \( x = \frac{ \beta _{\epsilon }}{2 d \Vert \mathcal{S} \Vert ^2} \), then we see that

But \(\epsilon \in ( 0 , 2^{3/2} d^{1/2} \Vert \mathcal{S } \Vert )\) by hypothesis, so

so

Substituting this into (56) and simplifying yields

We now analyse the average tracking error over the whole time horizon; we do so by considering time intervals of length \(T_\beta \). From (57) we easily obtain

The bound in (58) provides a bound on the average tracking error over time intervals of lengths that are multiples of \(T_\beta \). To extend this to intervals of arbitrary length, first observe that (58) can be rewritten as

For \(k \in \{ 0,1,\ldots , T_{\beta }-1 \}\), this inequality implies that

adding these two inequalities and simplifying yields

Changing variables to enhance clarity, we see that this implies that

which means that

This means that

From (48), we see that

the boundedness of the tracking error ensures that for every \(\bar{t} \ge t_0\) we have

so we conclude that

Since \(\beta _\epsilon = ( d \Vert \mathcal{S} \Vert ^2)^{2/3} \epsilon ^{2/3}\), the result follows. \(\square \)
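The quantity \(\beta _\epsilon \) above scales as \(\epsilon ^{2/3}\) rather than linearly in \(\epsilon \). The short Python sketch below evaluates the stated formula for \(\beta _\epsilon \) to make this scaling visible; the function name `beta_eps` and the numerical values of \(d\) and \(\Vert \mathcal{S} \Vert \) are hypothetical and chosen only for illustration.

```python
def beta_eps(eps, d, s_norm):
    """Evaluate beta_eps = (d * ||S||^2)^(2/3) * eps^(2/3)."""
    return (d * s_norm ** 2) ** (2.0 / 3.0) * eps ** (2.0 / 3.0)


# Hypothetical values of the delay d and the size ||S|| of the parameter set.
d, s_norm = 2, 3.0

# Slowing the parameter variation by a factor of 8 reduces beta_eps
# by a factor of 8^(2/3) = 4.
ratio = beta_eps(0.001, d, s_norm) / beta_eps(0.008, d, s_norm)
print(ratio)
```

In other words, halving this quantity requires slowing the parameter variation by a factor of roughly \(2^{3/2} \approx 2.83\).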

6.4 Tracking in the presence of a disturbance

Now we turn to the much harder problem of tracking in the presence of a disturbance; throughout this sub-section, we assume that the plant is LTI. Our goal is to show that if the noise is small, then the tracking error is small; this is a stringent requirement, since in adaptive control it is usually only proven that if the noise is bounded, then the error is bounded. We can, of course, measure signal sizes in a variety of ways, with the 2-norm and the \(\infty \)-norm the most common; given that a large disturbance can lead the estimator astray and cause “temporary instability”, the 2-norm seems to be the most appropriate here.

If the closed-loop system were LTI, then by Parseval’s Theorem we could conclude that the average power of the tracking error is bounded by the average power of the disturbance, i.e. there exists a constant c so that

unfortunately, while the closed-loop system has some desirable linear-like closed-loop properties, the controller is nonlinear so the closed-loop system is clearly not LTI. However, we can prove this bound in two extreme cases:

-

if \(y^* = 0\), then \(y= {\varepsilon }\), so with c and \(\lambda \) as given in Theorem 1, it is easy to see that

$$\begin{aligned} \limsup _{T \rightarrow \infty } \frac{1}{T} \sum _{j=t_0}^{t_0 + T-1} [ {\varepsilon }(j) ]^2 \le \frac{c^2}{(1- \lambda )^2} \times \limsup _{T \rightarrow \infty } \frac{1}{T} \sum _{j=t_0}^{t_0 + T-1} [ w (j) ]^2 ; \end{aligned}$$

-

on the other hand, if \(y^* \ne 0\) and \(w =0\), then from Theorem 3 we see that

$$\begin{aligned} \limsup _{T \rightarrow \infty } \frac{1}{T} \sum _{j=t_0}^{t_0 + T-1} [ {\varepsilon }(j) ]^2 = 0. \end{aligned}$$
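The factor \(c^2 / (1- \lambda )^2\) in the first of these two cases reflects a standard fact: the kernel \(c \lambda ^k\), \(k \ge 0\), has \(\ell _1\)-norm \(c/(1- \lambda )\), so convolving with it amplifies the 2-norm, and hence the average power, by at most that factor. The Python sketch below illustrates this numerically. It assumes that, for \(y^* = 0\) and once the initial-condition term has decayed, the bound of Theorem 1 reduces to \(| {\varepsilon }(k) | \le \sum _{j \le k} c \lambda ^{k-j} | w(j) |\); the names `average_power` and `convolution_envelope`, the noise sample and the values of \(c\) and \(\lambda \) are hypothetical.

```python
import numpy as np


def average_power(x):
    """Finite-horizon average power (1/T) * sum of x(j)^2."""
    x = np.asarray(x, dtype=float)
    return float(np.mean(x ** 2))


def convolution_envelope(w, c, lam):
    """Return z(k) = sum_{j=0}^{k} c * lam^(k-j) * |w(j)| via a recursion."""
    z = np.zeros(len(w))
    acc = 0.0
    for k, wk in enumerate(w):
        acc = lam * acc + c * abs(wk)
        z[k] = acc
    return z


# Hypothetical noise record and constants.
rng = np.random.default_rng(0)
w = rng.standard_normal(2000)
c, lam = 2.0, 0.8

z = convolution_envelope(w, c, lam)
gain = average_power(z) / average_power(w)
print(gain <= (c / (1.0 - lam)) ** 2)  # True
```

Since any signal dominated pointwise by the envelope has no more average power than the envelope itself, the same factor bounds the ratio for the tracking error under the stated assumption.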

In the general case, we will prove something weaker than (60), but with much the same flavour; it is, however, much stronger than the standard result in the literature.

Theorem 5

For every \(\delta \in ( 0 , \infty ]\), there exists a \(\gamma >0\) so that for every \(t_0 \in \mathbf{Z}\), \({\theta }_{ab}^* \in \mathcal{S}_{ab}\), \(y^*,w\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S}\), and plant initial condition

when the adaptive controller (8), (9) and (11) is applied to the plant (1) with \(\liminf _{t\rightarrow \infty } |y^*(t)|>0 \), the following holds:

Remark 9

So we see that the bound proven here is similar to that of (60) which holds in the LTI case, although we have an extra term multiplied on the RHS:

If the reference signal is larger than the noise, which is what one would normally expect, then this would be bounded by

if \(y^* (t) \in \{ -1, 1 \}\) then this is exactly two. It is curious that the quantity gets large if \(y^* (t)\) gets close to zero; we suspect that this is an artifact of the proof, since all of our simulations indicate that the LTI-like bound (60) holds.

Proof

Fix \(\delta \in ( 0 , \infty ]\) and \(\lambda \in (\underline{\lambda },1)\). Let \(t_0 \in \mathbf{Z}\), \({\theta }_{ab}^* \in \mathcal{S}_{ab}\), \(y^*,w\in \varvec{\ell }_{\infty }\), \(\theta _0 \in \mathcal{S}\), and \(x_0\) be arbitrary, but so that \(\liminf _{t \rightarrow \infty } | y^* (t) | > 0\). Before proceeding, choose \(\underline{t} \ge t_0 + 2d-1\) so that

Now, by using (46) and applying Proposition 1, for \(\bar{t}\ge t_0+2d-1 \) we obtain

From the controller equation (11), we have

which means that

if we substitute this into (62), then we obtain

Now we analyse the above bound for two cases: when the estimator is turned on, i.e. when \(\rho _{\delta } ( \cdot , \cdot ) =1\) and when the estimator is turned off, i.e. when \(\rho _{\delta } ( \cdot , \cdot ) =0\). Before proceeding, we define some notation: for \(t_2\ge t_1\ge t_0\), we define

Case 1 The estimator is turned on: \(\rho _{\delta } ( \phi ( j-d ) , e(j) ) =1\).

From (63), we have

which means that

Case 2 The estimator is turned off : \(\rho _{\delta } ( \phi ( j-d) , e(j) ) =0\).

In this case, we know from the definition of \(\rho _{\delta }\) that when \(\rho _{\delta } ( \phi (t-d) , e(t))=0\):

-

if \(\delta = \infty \) then \(\phi (t-d) = 0\), so

$$\begin{aligned} \Vert \phi (t-d) \Vert \le \frac{1}{\delta } | \bar{w} ( t-d ) |; \end{aligned}$$

-

if \(\delta \in (0 , \infty ) \), then we have that

$$\begin{aligned} |e(t)| \ge (2\Vert \mathcal{S}\Vert +\delta )\Vert \phi (t-d) \Vert ; \end{aligned}$$

using the formula for the prediction error given in (13) we see that

$$\begin{aligned} | e(t) |&\le \Vert \phi (t-d) \Vert \times \Vert \tilde{\theta } (t-1) \Vert + | \bar{w} (t-d) | \\&\le 2 \Vert \mathcal{S} \Vert \times \Vert \phi (t-d) \Vert + | \bar{w} (t-d) | ; \end{aligned}$$

combining these two equations yields

$$\begin{aligned} \Vert \phi (t-d) \Vert \le \frac{1}{\delta } | \bar{w} (t-d) | . \end{aligned}$$
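The two cases above can be checked numerically. The Python sketch below encodes a switching rule inferred from them, namely that the estimator is off exactly when \(|e(t)| \ge (2\Vert \mathcal{S}\Vert +\delta )\Vert \phi (t-d) \Vert \), with the convention \(\infty \times 0 = 0\) when \(\delta = \infty \); this is an assumption, since the defining equation of \(\rho _{\delta }\) appears earlier in the paper. The function name `rho_delta` and the test values are hypothetical.

```python
import math
import random


def rho_delta(phi_norm, e, delta, s_norm):
    """Return 1 if the estimator is on and 0 if it is off (inferred rule)."""
    if phi_norm == 0.0:
        # The threshold is 0 here (using inf * 0 = 0 when delta = inf),
        # so |e| < threshold can never hold.
        return 0
    if math.isinf(delta):
        return 1  # infinite threshold: always on when phi != 0
    return 1 if abs(e) < (2.0 * s_norm + delta) * phi_norm else 0


# Check the conclusion of Case 2 on random samples with finite delta:
# if the estimator is off and |e| <= 2||S|| ||phi|| + |w_bar|,
# then ||phi|| <= |w_bar| / delta.
random.seed(0)
s_norm, delta = 1.5, 0.7
violations = 0
for _ in range(1000):
    phi = random.uniform(0.0, 5.0)
    w_bar = random.uniform(-3.0, 3.0)
    coef = random.uniform(-2.0 * s_norm, 2.0 * s_norm)  # |coef| <= 2||S||
    e = coef * phi + w_bar
    if rho_delta(phi, e, delta, s_norm) == 0 and phi > abs(w_bar) / delta + 1e-9:
        violations += 1
print(violations)  # 0
```

The count is zero because \((2\Vert \mathcal{S}\Vert +\delta )\Vert \phi \Vert \le |e| \le 2\Vert \mathcal{S}\Vert \Vert \phi \Vert + | \bar{w} |\) forces \(\delta \Vert \phi \Vert \le | \bar{w} |\), which is exactly the combination step used in the second case.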

Using the formula for the tracking error given in (12), we have

Hence,

We can now combine (65) and (66) of Case 1 and Case 2, respectively, to yield

By Theorem 1, there exist constants \(c>0\) and \(\lambda \in (0,1)\) so that

so we can choose \(\bar{t} \ge \underline{t}\) (which depends implicitly on \(x_0\), \(y^*\), \(\theta _0\), and \(\theta ^*\)) such that

as well as

If we incorporate this into (67), then we obtain

which means that

But \(\bar{w} (t)\) is a weighted sum of \(\{ w(t+1) ,\ldots , w ( t+d) \}\), and the boundedness of all variables makes the starting point of the average sums irrelevant, so the desired bound (61) follows. \(\square \)

7 A simulation example

Here we provide several simulation examples to illustrate the results of this paper. Consider the second-order time-varying plant with relative degree one:

with \(a_1(t)\in [-3,3], a_2(t)\in [-4,4], b_0(t)\in [1.5,5]\) and \(b_1(t)\in [-1,1]\). When the parameters are fixed, we see that the corresponding system has a zero in \([- \frac{2}{3} , \frac{2}{3} ]\) and poles which may be stable or unstable.
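The stated range of the zero can be verified directly from the parameter intervals. The Python sketch below assumes that the numerator of the frozen-parameter plant is \(b_0 z + b_1\), so that the zero sits at \(-b_1/b_0\); this form is inferred from the stated interval rather than taken from the plant equation, and the names `zero_location`, `B0_RANGE` and `B1_RANGE` are hypothetical.

```python
import itertools


def zero_location(b0, b1):
    """Zero of the assumed numerator b0*z + b1."""
    return -b1 / b0


B0_RANGE = (1.5, 5.0)   # interval for b_0(t)
B1_RANGE = (-1.0, 1.0)  # interval for b_1(t)

# For b0 > 0 the map (b0, b1) -> -b1/b0 is monotone in each argument
# separately, so its extremes over the box occur at the corners.
corners = [zero_location(b0, b1)
           for b0, b1 in itertools.product(B0_RANGE, B1_RANGE)]
zero_min, zero_max = min(corners), max(corners)
print(zero_min, zero_max)
```

Under this assumption the zero always lies strictly inside the unit disk, so every frozen-parameter plant in the set is minimum phase, as the analysis requires.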

7.1 Simulation 1: the benefit of vigilant estimation

In the first simulation, we illustrate that the vigilant estimation algorithm provides better performance than the classical algorithm does. More specifically, we compare the adaptive controller using the vigilant estimator (8), (9) (with \(\delta = \infty \)) with the adaptive controller using the classical estimation algorithm (7) suitably modified to incorporate projection onto \(\mathcal{S}\) (and with \(\bar{\alpha } = \bar{\beta } = 1 \)). In each case, we apply the adaptive controller to this plant with the parameters chosen as

We set \(y^*=0\) (so \(\varepsilon (t)=y(t)\)) and the noise to

we set \(y(-1)=y(0)=-0.1\), \(u(-1)= u(-2)=0\), and the initial parameter estimates to the midpoint of the respective intervals. Fig. 1 shows tracking errors during the transient phase of 200 steps, and Fig. 2 shows the tracking error and parameter estimation for the rest of the simulation time of 2000 steps. We can clearly see that the proposed controller provides better transient performance as well as better disturbance rejection than the classical algorithm. Furthermore, the proposed controller does a much better job of tracking the parameter variations.

Column a shows results using vigilant estimation, and column b shows results using classical estimation. In each column, the upper plot shows the tracking error for \(t\ge 200 \); the next plot shows the control signal for \(t \ge 200\); the last four plots show the parameter estimates (solid) and actual parameters (dashed) for \(t\ge 200 \)

7.2 Simulation 2: illustrating robustness

In the second simulation, we illustrate the tolerance to time-variation and unmodelled dynamics. We apply the proposed adaptive controller (with \(\delta = \infty \)) to the plant when the parameters are time-varying:

and when the unmodelled dynamics (in the associated plant model (40)) are described by

We set the reference signal to

and the noise signal to

we choose initial conditions of \(y(-1)=y(0)=-1\) and \(u(-1)= u(-2)=0\), and the initial parameter estimates to \(\theta _0=[-2 \;\; -2 \;\; 2 \;\; 1]^\top \); Fig. 3 shows the results. The adaptive controller clearly shows robust performance to both unmodelled dynamics and time-variations, including parameter jumps: the tracking is quite good, and the parameter estimator (approximately) tracks the time-varying parameters.

7.3 Simulation 3: tracking with no noise

Theorem 3 says that there exists a constant c so that

i.e. the 2-norm of the tracking error is not only finite, but is bounded by a constant times the sizes of the reference signal and plant initial condition. In this simulation, we apply the proposed adaptive controller (with \(\delta = \infty \)) to the plant when

We set the disturbance signal to zero and the reference signal to

with amplitudes of

we set the plant initial condition to \(y(-1)=y(0)=-1\) and \(u(-1)=u(-2)=0\), and we set the initial parameter estimate to the midpoint of the respective intervals. We simulate for 100,000 steps. Because there is no noise, we get asymptotic tracking; we compute the sum of squares of the tracking error and compare it to the square of the reference amplitude and the square of the norm of the plant initial condition; the results are in Table 1. We see that the result is consistent with Theorem 3: the gain is bounded above by approximately 1000.

7.4 Simulation 4: tracking with slowly varying parameters

In our fourth simulation, we illustrate the result in Theorem 4; we show that the average tracking error is proportional to the speed of the parameter variation. We apply the proposed controller (with \(\delta = \infty \)) with plant parameters of

with

We choose plant initial conditions of \(y(-1)=y(0)=-1\) and \(u(-1)= u(-2)=0\), and the initial parameter estimate equal to the midpoint of the respective intervals. With a zero disturbance and

we simulate the closed-loop system for \(T=5000\) steps; we plot the tracking error for the last 3000 steps in Fig. 4, i.e. after the transient effect has been eliminated. We see that, consistent with Theorem 4, the average tracking error increases with the speed of the plant parameter variation.

7.5 Simulation 5: tracking with noise

In the final simulation, we illustrate the tracking result in the presence of noise, namely Theorem 5. We show that, on average, the tracking error is proportional to the size of the exogenous noise. We apply the proposed adaptive controller (with \(\delta = \infty \)) to the plant when

We choose an initial condition of \(y(-1)=y(0)=-1\) and \(u(-1)=u(-2)=0\) and set the initial parameter estimates to the midpoint of the respective intervals. We set the noise to

with different amplitudes:

we choose the reference signal \(y^* \) to be a square wave of amplitude one and period 300; observe that \(\liminf _{t\rightarrow \infty } |y^*(t) |=1 \), \(\Vert y^*\Vert _\infty =1 \) and \(\Vert w\Vert _\infty =|W_0| \). We simulate for \(T=5000\) steps; in Fig. 5, we plot the tracking error for the last 3000 steps, so that we focus on the “steady-state behaviour”. We clearly see that the average tracking error magnitude is roughly proportional to the average noise signal magnitude.
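The properties of the reference signal quoted above are easy to confirm. The Python sketch below generates a square wave of amplitude one and period 300 and computes a steady-state average over the last 3000 of 5000 steps. The 50% duty cycle, the starting phase and the names `square_wave` and `steady_state_average` are assumptions made for illustration, and NumPy is assumed to be available.

```python
import numpy as np


def square_wave(n_steps, amplitude=1.0, period=300):
    """Square wave taking the values +amplitude and -amplitude.

    Assumes a 50% duty cycle that starts with the positive half-period.
    """
    t = np.arange(n_steps)
    return np.where((t % period) < period // 2, amplitude, -amplitude)


def steady_state_average(x, keep_last=3000):
    """Average magnitude of x over its last keep_last samples."""
    x = np.asarray(x, dtype=float)
    return float(np.mean(np.abs(x[-keep_last:])))


y_star = square_wave(5000)
print(np.min(np.abs(y_star)), np.max(np.abs(y_star)))
print(steady_state_average(y_star))
```

Because \(| y^* (t) | = 1\) at every step, the reference stays bounded away from zero, which is the condition \(\liminf _{t\rightarrow \infty } |y^*(t) | > 0\) needed to apply Theorem 5; the same averaging helper can be applied to a simulated tracking error or noise record.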

8 Summary and conclusions

Under common assumptions on the plant model, in this paper we use a modified version of the original, ideal, projection algorithm (termed a vigilant estimator) to carry out parameter estimation; the corresponding d-step-ahead adaptive controller guarantees linear-like convolution bounds on the closed-loop behaviour, which confers exponential stability and a bounded noise gain, unlike almost all other parameter adaptive controllers. This is then leveraged in a modular way to prove tolerance to unmodelled dynamics and plant parameter variation. We examine the tracking ability of the approach and are able to prove properties which most adaptive controllers do not enjoy:

-

(i)

in the absence of a disturbance, we obtain an explicit bound on the 2-norm of the tracking error in terms of the size of the initial condition and the reference signal;

-

(ii)

if there is no noise but there are slow time-variations, then we prove that we can bound the size of the average tracking error by the size of the time-variation;

-

(iii)

if there is noise, then under some technical conditions we bound the size of the average tracking error in terms of the size of the average disturbance times another complicated quantity.

We are working on several extensions of the approach. First of all, we would like to use a multi-estimator approach to reduce the amount of structural information on the plant; we have proven this already in the case of first-order systems [35], but extending this to the general case has proven to be challenging. Second of all, we would like to obtain a crisper bound on the average tracking error than that discussed in (iii) above, i.e. prove that the size of the average tracking error is bounded above by a constant times the size of the average disturbance; while all of our simulations confirm this, as of yet we have not been able to prove it.

Notes

It is proven in [36] that for a large class of plant/controller combinations, a convolution bound on the input-output behaviour can be leveraged to prove tolerance to slow variations in the plant parameters as well as to a small amount of unmodelled dynamics.

An exception is the work of Ydstie [43, 44], who considers the ideal projection algorithm as a special case; however, a crisp bound on the effect of the initial condition and a convolution bound on the effect of the exogenous inputs are not proven. Another notable exception is the work of Akhtar and Bernstein [1], where they are able to prove Lyapunov stability; however, they do not prove a convolution bound on the effect of the exogenous inputs either, and they assume that the high-frequency gain is known.

An exception is the work of [12]: they provide crisp bounds on the size of the tracking error; however, they do not prove the nice linear-like properties which we desire.

We can make this even more general by letting \(\bar{\alpha }\) be time-varying.

It is common in the literature to turn off the estimator if the prediction error is small, e.g. see [6]; this is a completely different idea.

If \(\delta = \infty \), then we adopt the understanding that \(\infty \times 0 = 0\), in which case this formula collapses into the original version (6).

We have chosen to define \(\tilde{w} (t)\) in this way to simplify the analysis later on in the proof.

If the disturbance is zero, then \(S_\mathrm{good}\) may be the whole time-line \( [ t_0 , \infty )\).

Alternatively, we can argue that the closed-loop system response for \(k \ge t_0\) is identical if we were to replace \(y^*\) by another signal which is the same for \(t \ge t_0+d\) and zero elsewhere, in which case the corresponding \(\psi _1 (k) =0\) for \(k \ge t_0\).

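Observe that \( \sum _{k=1}^{m} k^2 = \frac{m(m+1)(2m+1)}{6} = \frac{m^3}{3}+\frac{m^2}{2}+\frac{m}{6} \le m^3 \), where the final inequality holds for every integer \(m \ge 1\) because \(m^2 \le m^3\) and \(m \le m^3\).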

Observe that this is true even when \(\theta ^* (t)\) is time-varying.

It is similar to \(\nu (t-1)\) except for the \({\varepsilon }(t)\) rather than \(e(t)\) at the end.

References

Akhtar S, Bernstein DS (2005) Lyapunov-stable discrete-time model reference adaptive control. In: 2005 American control conference, Portland, OR, USA, pp 3174–3179

Anderson BDO, Johnson CR (1982) Exponential convergence of adaptive identification and control algorithms. Automatica 18(1):1–13

Feuer A, Morse AS (1978) Adaptive control of single-input, single-output linear systems. IEEE Trans Autom Control 23(4):557–569

Fu M, Barmish BR (1986) Adaptive stabilization of linear systems via switching control. IEEE Trans Autom Control AC–31:1097–1103

Goodwin GC, Ramadge PJ, Caines PE (1980) Discrete time multivariable control. IEEE Trans Autom Control AC–25:449–456

Goodwin GC, Sin KS (1984) Adaptive filtering prediction and control. Prentice Hall, Englewood Cliffs, NJ

Hespanha JP, Liberzon D, Morse AS (2003) Hysteresis-based switching algorithms for supervisory control of uncertain systems. Automatica 39:263–272

Hespanha JP, Liberzon D, Morse AS (2003) Overcoming the limitations of adaptive control by means of logic-based switching. Syst Control Lett 49(1):49–65

Ioannou PA, Tsakalis KS (1986) A robust direct adaptive controller. IEEE Trans Autom Control AC–31(11):1033–1043

Kreisselmeier G (1986) Adaptive control of a class of slowly time-varying plants. Syst Control Lett 8:97–103

Kreisselmeier G, Anderson BDO (1986) Robust model reference adaptive control. IEEE Trans Autom Control AC–31:127–133

Krstic M, Kokotovic PV, Kanellakopoulos I (1993) Transient-performance improvement with a new class of adaptive controllers. Syst Control Lett 21(6):451–461

Li Y, Chen H-F (1996) Robust adaptive pole placement for linear time-varying systems. IEEE Trans Autom Control AC–41:714–719

Middleton RH et al (1988) Design issues in adaptive control. IEEE Trans Autom Control 33(1):50–58

Middleton RH, Goodwin GC (1988) Adaptive control of time-varying linear systems. IEEE Trans Autom Control 33(2):150–155

Miller DE (2003) A new approach to model reference adaptive control. IEEE Trans Autom Control AC–5:743–757

Miller DE (2006) Near optimal LQR performance for a compact set of plants. IEEE Trans Autom Control 9:1423–1439

Miller DE (2016) An adaptive controller to provide near optimal LQR performance. In: 2016 IEEE 55th conference on decision and control, Las Vegas, Nevada, pp 3813–3818

Miller DE (2017) A parameter adaptive controller which provides exponential stability: the first order case. Syst Control Lett 103:23–31

Miller DE (2017) Classical discrete-time adaptive control revisited: exponential stabilization. In: 1st IEEE conference on control technology and applications, Mauna Lani, HI, pp 1975–1980

Miller DE (1996) On necessary assumptions in discrete-time model reference adaptive control. Int J Adapt Control Signal Process 10(6):589–602

Miller DE, Davison EJ (1991) An adaptive controller which provides an arbitrarily good transient and steady-state response. IEEE Trans Autom Control AC–36:68–81

Miller DE, Davison EJ (1989) An adaptive controller which provides Lyapunov stability. IEEE Trans Autom Control AC–34:599–609

Miller DE, Mansouri N (2010) Model reference adaptive control using simultaneous probing, estimation, and control. IEEE Trans Autom Control 55(9):2014–2029

Miller DE, Shahab MT (2018) Classical pole placement adaptive control revisited: linear-like convolution bounds and exponential stability. Math Control Signals Syst 30(4):19

Miller DE, Shahab MT (2019) Classical d-step-ahead adaptive control revisited: linear-like convolution bounds and exponential stability. In: 2019 American control conference, Philadelphia, pp 417–411

Morse AS (1980) Global stability of parameter-adaptive control systems. IEEE Trans Autom Control AC–25:433–439

Morse AS (1996) Supervisory control of families of linear set-point controllers—part 1: exact matching. IEEE Trans Autom Control AC–41:1413–1431

Morse AS (1997) Supervisory control of families of linear set-point controllers—part 2: robustness. IEEE Trans Autom Control AC–42:1500–1515

Morse AS (1998) A bound for the disturbance to tracking error gain of a supervised set-point control system. In: Normand-Cyrot D (ed) Perspectives in control. Springer, London

Naik SM, Kumar PR, Ydstie BE (1992) Robust continuous-time adaptive control by parameter projection. IEEE Trans Autom Control AC–37:182–297

Narendra KS, Lin YH (1980) Stable discrete adaptive control. IEEE Trans Autom Control AC–25(3):456–461

Narendra KS, Lin YH, Valavani LS (1980) Stable adaptive controller design, part II: proof of stability. IEEE Trans Autom Control AC–25:440–448

Rohrs CE et al (1985) Robustness of continuous-time adaptive control algorithms in the presence of unmodelled dynamics. IEEE Trans Autom Control AC–30:881–889

Shahab MT, Miller DE (2018) Multi-estimator based adaptive control which provides exponential stability: the first-order case. In: 2018 IEEE conference on decision and control, Miami Beach, USA, pp 2223–2228

Shahab MT, Miller DE (2019) A convolution bound implies tolerance to time-variations and unmodelled dynamics. arXiv:1910.02112

Tsakalis KS, Ioannou PA (1989) Adaptive control of linear time-varying plants: a new model reference controller structure. IEEE Trans Autom Control 34(10):1038–1046

Vale JR, Miller DE (2011) Step tracking in the presence of persistent plant changes. IEEE Trans Autom Control 1:43–58

Vu L, Chatterjee D, Liberzon D (2007) Input-to-state stability of switched systems and switching adaptive control. Automatica 43:639–646

Vu L, Liberzon D (2011) Supervisory control of uncertain linear time-varying systems. IEEE Trans Autom Control 1:27–42

Wen C (1994) A robust adaptive controller with minimal modifications for discrete time-varying systems. IEEE Trans Autom Control AC–39(5):987–991

Wen C, Hill DJ (1992) Global boundedness of discrete-time adaptive control using parameter projection. Automatica 28(2):1143–1158

Ydstie BE (1989) Stability of discrete-time MRAC revisited. Syst Control Lett 13:429–439

Ydstie BE (1992) Transient performance and robustness of direct adaptive control. IEEE Trans Autom Control 37(8):1091–1105

Zhivoglyadov PV, Middleton RH, Fu M (2001) Further results on localization-based switching adaptive control. Automatica 37:257–263

Zhou K, Doyle JC, Glover K (1995) Robust and optimal control. Prentice Hall, Englewood Cliffs, NJ

This research was supported by a grant from the Natural Sciences Research Council of Canada.

Appendix

Proof of Proposition 3

To proceed, we analyse the good closed-loop model of Sect. 3.1. From (15), it is clear that we need to obtain a bound on the terms \(B_1 {\varepsilon }(t+1)\) and \(B_2 {\varepsilon }( t+j)\) for \(j=1,\ldots ,d+1\). It will be convenient to define an intermediate quantity (see Footnote 12):

Step 1 Obtain a desirable bound on \(B_i {\varepsilon }(t)\) in terms of \(\bar{\nu } (t-1)\), \(\phi (t-d)\) and \(\bar{w} (t-d)\).

First of all, for \(i=1,2\) define

It is easy to see that

So

Using (12) and (13) and the definition of \(\rho _{\delta } ( \phi (t-d) , e(t))\), it is easy to show that

furthermore, it is clear that

Step 2 Bound \(\bar{\nu } (t-1)\) in terms of \( \nu (t) ,\ldots , \nu (t-d)\).

It follows from the formulas for \({\varepsilon }(t)\) and \(e(t)\) given in (12) and (13) that

Using the definition of \(\bar{\nu } (t-1)\), we have

Now it follows from the estimator update law that

We conclude that

Step 3 Obtain a bound on \(B_i {\varepsilon }(t)\) in terms of \(\{ \nu (t) ,\ldots , \nu (t-d) \}\), \(\phi (t-d)\) and \(\bar{w} (t-d)\).

If we combine (68), (69), (70) and (71), we see that

with

and

Step 4 Apply the result of Step 3 to (15).

We can apply the above result to each of the terms on the RHS of (15) containing \({\varepsilon }( \cdot )\). So from Step 3, we have

and

Each term except one is of the desired form: the case of \(j=d\) is problematic since it contains a \(\phi (t+1)\) term. However, we can use the crude model given in (16) to see that

If we now combine (72), (73) and (74), we see that we should define

and

It is clear from Step 3 that this choice of \(\Delta _i\) has the desired property for \(t \ge t_0 + d - 1\). Last of all, we group all of the remaining terms into \(\eta (t)\):

If we apply Proposition 2 and use the bound on \(\eta _0 (t)\) given in Step 3, then we see that \(\eta (t)\) has the desired property for \(t \ge t_0 + d -1 \) as well. \(\square \)

Cite this article

Miller, D.E., Shahab, M.T. Adaptive tracking with exponential stability and convolution bounds using vigilant estimation. Math. Control Signals Syst. 32, 241–291 (2020). https://doi.org/10.1007/s00498-020-00255-x

DOI: https://doi.org/10.1007/s00498-020-00255-x