Abstract

This paper addresses the problem of simulating multivariate random fields with stationary Gaussian increments in a d-dimensional Euclidean space. To this end, one considers a spectral turning-bands algorithm, in which the simulated field is a mixture of basic random fields made of weighted cosine waves associated with random frequencies and random phases. The weights depend on the spectral density matrix of the direct and cross variograms of the desired random field, evaluated at the random frequencies. The algorithm is applied to synthetic examples corresponding to different spatial correlation models. The properties of these models and of the algorithm are discussed, highlighting its computational efficiency, accuracy and versatility.

1 Introduction

Random fields are extensively used in the earth and environmental sciences for spatial prediction and uncertainty quantification. To ease the inference of the spatial correlation structure, an assumption of stationarity is often made, by considering that the finite-dimensional distributions of the random field of interest are invariant under a translation in space (strict stationarity) or that the expectation and covariance function exist and are invariant under a translation in space (second-order stationarity) (Chilès and Delfiner 2012). However, these assumptions are sometimes questionable, in particular, in the presence of spatial trends, long-range dependence and persistence characteristics.

To cope with this situation, intrinsic random fields, i.e., random fields with second-order stationary increments, can be considered. A well-known example of such fields is the fractional Brownian surface, which has the property of repeating itself at all spatial scales (self-similarity). This model has been used in landscape modeling (Mandelbrot 1982; Palmer 1992; Arakawa and Krotkov 1994), seafloor topography (Malinverno 1995), geophysics (Jensen et al. 1991; Turcotte 1986, 1997), geology (Herzfeld 1993), hydrology (Chi et al. 1973; Molz et al. 2004), soil sciences (Comegna et al. 2013), environmental sciences (Ott 1981), image analysis (Peitgen and Saupe 1988; Chen et al. 1989; Huang and Turcotte 1989), telecommunication (Willinger et al. 1995), biology (Collins and De Luca 1994), ecology (Pozdnyakov et al. 2014), econometrics (Smith 1994; Asmussen and Taksar 1997) and social sciences (Romanow 1984), among other disciplines. Currently, a few exact algorithms exist for simulating a fractional Brownian surface, in particular the Cholesky factorization (Asmussen 1998; Michna 1999), circulant embedding approaches and discrete spectral representations (Beran 1994; Stein 2002; Danudirdjo and Hirose 2011). Approximate algorithms have also been designed, such as midpoint displacement approaches (Fournier et al. 1982; Voss 1985; Peitgen and Saupe 1988), wavelet representations (Combes et al. 1989; Flandrin 1989, 1992; Walker 1997; Dale and Mah 1998; Pipiras 2005; Albeverio et al. 2012), turning bands (Emery and Lantuéjoul 2008) and iterative algorithms based on Markov chains (Arroyo and Emery 2015). The reader is referred to Coeurjolly (2000), Chilès and Delfiner (2012) and references therein for details. Although most of these algorithms can be extended to the simulation of intrinsic random fields, they are applicable only for simulating at a limited number of locations or at evenly-spaced locations in \(\mathbb {R}^d\). 
Two notable exceptions are the Gibbs sampler by Arroyo and Emery (2015) and the spectral turning-bands algorithm by Emery and Lantuéjoul (2008), which can approximately simulate intrinsic random fields at any set of locations in \(\mathbb {R}^d\), irrespective of their spatial configuration.

The mathematical setting of the intrinsic random field theory is the following (Chilès and Delfiner 2012). A random field defined in a d-dimensional Euclidean space, say \(Y=\{Y(\mathbf {x}):\mathbf {x}\in \mathbb {R}^d\}\), is an intrinsic random field without drift if the following conditions are satisfied:

(1) Expectation of increments: \(\forall \mathbf {x}, \mathbf {x}^{\prime} \in \mathbb {R}^{d},\mathbb {E}\left\{ Y(\mathbf {x}^{\prime}) - Y(\mathbf {x})\right\} = 0\).

(2) Variance of increments: \(\forall \mathbf {x}, \mathbf {x}^{\prime} \in \mathbb {R}^{d},\mathbb {E}\left\{ \left[ Y(\mathbf {x}^{\prime}) - Y(\mathbf {x})\right] ^{2}\right\} = 2 \gamma (\mathbf {x}^{\prime} - \mathbf {x})\), where \(\gamma \) is known as the variogram.

It can be shown (Appendix 1) that the covariance between any two increments exists and is invariant under a translation of the locations supporting these increments, therefore the intrinsic random field has second-order stationary increments. Since adding a constant to the random field does not change the above properties, a particular representation of the random field is often considered by setting \(Y(\mathbf {0}) = 0\). Under this additional constraint, the previous two conditions are equivalent to expressing the expectation and covariance function of the intrinsic random field as follows (Appendix 1):

(1′) Expectation: \(\forall \mathbf {x}\in \mathbb {R}^{d},\mathbb {E}\left\{ Y(\mathbf {x})\right\} = 0\).

(2′) Covariance function: \(\forall \mathbf {x}, \mathbf {x}^{\prime} \in \mathbb {R}^{d},\mathbb {E}\left\{ Y(\mathbf {x}^{\prime})\cdot Y(\mathbf {x})\right\} = \gamma (\mathbf {x}^{\prime}) + \gamma (\mathbf {x}) - \gamma (\mathbf {x}^{\prime}-\mathbf {x})\).

For the purpose of simulation, one has to specify the finite-dimensional distributions of the intrinsic random field, not only its expectation and covariance function. In the following, we will consider the case of Gaussian random fields, i.e., random fields whose finite-dimensional distributions are multivariate Gaussian (multinormal). In such a case, the distribution of the increments is fully characterized by their first two moments (expectation and covariance function), so that the second-order stationarity of the increments is actually equivalent to their strict stationarity.
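Relation (2′) makes exact simulation possible at a modest number of scattered locations, using the Cholesky factorization mentioned in Sect. 1. The minimal sketch below builds the covariance matrix from the variogram and draws one Gaussian realization. It assumes a scalar field with the power variogram \(\gamma (\mathbf {h})=\Vert \mathbf {h}/b\Vert ^{\theta }\). The values b = 10 and θ = 1.5, the random locations and the helper names are illustrative only. The cubic cost of the factorization in the number of locations limits this approach to small configurations.

```python
import numpy as np

def power_variogram(h, b=10.0, theta=1.5):
    """Power variogram gamma(h) = ||h / b||**theta (valid for 0 < theta < 2)."""
    return (np.linalg.norm(h, axis=-1) / b) ** theta

def intrinsic_covariance(x, variogram):
    """Covariance matrix of Y at locations x (n x d) under Y(0) = 0, per (2'):
    E{Y(x_i) Y(x_j)} = gamma(x_i) + gamma(x_j) - gamma(x_j - x_i)."""
    g0 = variogram(x)                                  # gamma(x_i), shape (n,)
    gij = variogram(x[:, None, :] - x[None, :, :])     # gamma(x_i - x_j) = gamma(x_j - x_i)
    return g0[:, None] + g0[None, :] - gij

rng = np.random.default_rng(0)
x = rng.uniform(-50.0, 50.0, size=(300, 2))            # scattered target locations
C = intrinsic_covariance(x, power_variogram)
jitter = 1e-10 * np.mean(np.diag(C))                   # guards against round-off only
chol = np.linalg.cholesky(C + jitter * np.eye(len(x)))
y = chol @ rng.standard_normal(len(x))                 # one exact Gaussian realization
```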

One can generalize the definition of an intrinsic Gaussian random field without drift to the multivariate case, by considering a vector random field \(\mathbf {Y}=\{\mathbf {Y}(\mathbf {x}):\mathbf {x}\in \mathbb {R}^d\}\) with P components, such that:

(1) \(\mathbf {Y}(\mathbf {0}) = \mathbf {0}\).

(2) Expectation: \(\forall \mathbf {x}\in \mathbb {R}^{d},\mathbb {E}\left\{ \mathbf {Y}(\mathbf {x})\right\} = \mathbf {0}\).

(3) Covariance of increments:

$$\begin{aligned} \forall \mathbf {x}, \mathbf {x}+\mathbf {h} \in \mathbb {R}^{d}, \frac{1}{2}\mathbb {E}\left\{ \left[ \mathbf {Y}(\mathbf {x}+\mathbf {h})-\mathbf {Y}(\mathbf {x})\right] \left[ \mathbf {Y}(\mathbf {x}+\mathbf {h})-\mathbf {Y}(\mathbf {x})\right] ^T\right\} = \Upsilon (\mathbf {h}) \end{aligned}\tag{1}$$

where T indicates vector transposition and \(\Upsilon (\mathbf {h})\) is the \(P \times P\) matrix of direct (diagonal terms) and cross (off-diagonal terms) variograms of the vector random field for a given separation vector \(\mathbf {h}\).

(4) The finite-dimensional distributions of \(\mathbf {Y}\) are multivariate Gaussian.

To our knowledge, there is no available algorithm for simulating such a vector random field for any number and configuration of the target locations in \(\mathbb {R}^d\), a problem that will be addressed in the next sections. The outline is the following: Sect. 2 introduces a spectral simulation algorithm for generating multivariate intrinsic Gaussian random fields, while Sect. 3 shows applications of this algorithm to synthetic examples. Discussions and conclusions are presented in Sect. 4 and proofs are given in the Appendices.

2 Methodology

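To simulate \(\mathbf {Y}\), let us consider a vector random field \(\mathbf {Y}_S\) defined as follows:

$$\mathbf {Y}_S(\mathbf {x}) = \sum _{p=1}^{P} \boldsymbol{\alpha }_p(\mathbf {U}_p)\left[ \cos \left( 2\pi \langle \mathbf {x},\mathbf {U}_p\rangle + \phi _p\right) - \cos (\phi _p)\right] ,\quad \mathbf {x}\in \mathbb {R}^d \tag{2}$$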

where \(\langle ,\rangle \) represents the inner product in \(\mathbb {R}^d\), \(\{\mathbf {U}_{p}: p = 1,\ldots , P\}\) are mutually independent vectors (frequencies) with probability density \(g: \mathbb {R}^d \rightarrow \mathbb {R}_+\), \(\{\phi _{p}: p = 1,\ldots ,P\}\) are mutually independent scalars (phases) uniformly distributed over the interval \(\left[ 0, 2\pi \right) \), and \(\{\varvec{\alpha }_p: p = 1,\ldots , P\}\) are deterministic vector-valued mappings with P real-valued components.

The vector random field \(\mathbf {Y}_S\) so simulated clearly fulfills the first two properties of an intrinsic Gaussian random field:

(1) \(\mathbf {Y}_S(\mathbf {0}) = \mathbf {0}\).

(2) \(\forall \mathbf {x}\in \mathbb {R}^{d},\mathbb {E}\left\{ \mathbf {Y}_S(\mathbf {x})\right\} = \mathbf {0}\).

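To characterize the spatial correlation structure of \(\mathbf {Y}_S\), let us calculate its matrix of direct and cross variograms:

$$\frac{1}{2}\mathbb {E}\left\{ \left[ \mathbf {Y}_S(\mathbf {x}+\mathbf {h})-\mathbf {Y}_S(\mathbf {x})\right] \left[ \mathbf {Y}_S(\mathbf {x}+\mathbf {h})-\mathbf {Y}_S(\mathbf {x})\right] ^T\right\} = \frac{1}{2}\sum _{p=1}^{P}\sum _{q=1}^{P}\mathbb {E}\left\{ \boldsymbol{\alpha }_p(\mathbf {U}_p)\boldsymbol{\alpha }_q(\mathbf {U}_q)^T\left[ \cos (2\pi \langle \mathbf {x}+\mathbf {h},\mathbf {U}_p\rangle +\phi _p)-\cos (2\pi \langle \mathbf {x},\mathbf {U}_p\rangle +\phi _p)\right] \left[ \cos (2\pi \langle \mathbf {x}+\mathbf {h},\mathbf {U}_q\rangle +\phi _q)-\cos (2\pi \langle \mathbf {x},\mathbf {U}_q\rangle +\phi _q)\right] \right\}$$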

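Accounting for the fact that \(\{\phi _{p}: p = 1,\ldots ,P\}\) are independent and uniformly distributed in \([0,2\pi )\), the only terms that do not vanish are found when \(p = q\). The previous equation then simplifies into

$$\frac{1}{2}\mathbb {E}\left\{ \left[ \mathbf {Y}_S(\mathbf {x}+\mathbf {h})-\mathbf {Y}_S(\mathbf {x})\right] \left[ \mathbf {Y}_S(\mathbf {x}+\mathbf {h})-\mathbf {Y}_S(\mathbf {x})\right] ^T\right\} = \frac{1}{2}\sum _{p=1}^{P}\mathbb {E}\left\{ \boldsymbol{\alpha }_p(\mathbf {U}_p)\boldsymbol{\alpha }_p(\mathbf {U}_p)^T\left[ 1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {U}_p\rangle \right) \right] \right\}$$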

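It is seen that these direct and cross variograms only depend on the separation vector \(\mathbf {h}\), which indicates that the simulated vector random field \(\mathbf {Y}_S\) has second-order stationary increments. Let us denote by \(\Upsilon _S(\mathbf {h})\) its \(P\times P\) matrix of direct and cross variograms. It follows that:

$$\Upsilon _S(\mathbf {h}) = \frac{1}{2}\int _{\mathbb {R}^d}\mathbf {A}(\mathbf {u})\mathbf {A}(\mathbf {u})^T\left[ 1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {u}\rangle \right) \right] g(\mathbf {u})\,\mathrm{d}\mathbf {u}$$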

where \(\mathbf {A}(\mathbf {u})\) is the \(P\times P\) matrix whose p-th column is \(\alpha _p(\mathbf {u})\).
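The phase average behind this simplification can be written out explicitly. The derivation below assumes that frequencies and phases are all mutually independent. It also assumes that the basic waves have the form \(\cos (2\pi \langle \mathbf {x},\mathbf {U}_p\rangle +\phi _p)-\cos (\phi _p)\), consistent with the \(4\pi ^2\Vert \mathbf {u}\Vert ^2\) spectral representation used below. The shorthand s and t is introduced only for this derivation.

```latex
% For phi uniform on [0, 2pi) and fixed reals s, t (uses 2 cos A cos B = cos(A-B) + cos(A+B)):
\begin{aligned}
\mathbb{E}_{\phi}\left\{[\cos(s+\phi)-\cos(t+\phi)]^2\right\}
  &= \mathbb{E}_{\phi}\{\cos^2(s+\phi)\} + \mathbb{E}_{\phi}\{\cos^2(t+\phi)\}
     - \mathbb{E}_{\phi}\{\cos(s-t) + \cos(s+t+2\phi)\} \\
  &= \tfrac{1}{2} + \tfrac{1}{2} - \cos(s-t) \;=\; 1-\cos(s-t).
\end{aligned}
% With s = 2\pi<x+h,U_p> and t = 2\pi<x,U_p>, s - t = 2\pi<h,U_p>; for p \neq q the factor
% E_{\phi_p}\{\cos(s+\phi_p)-\cos(t+\phi_p)\} = 0 removes the cross terms, and
% \sum_p \alpha_p(u)\alpha_p(u)^T = A(u)A(u)^T gives
\Upsilon_S(\mathbf{h})
  = \frac{1}{2}\sum_{p=1}^{P}\mathbb{E}\left\{\boldsymbol{\alpha}_p(\mathbf{U}_p)\boldsymbol{\alpha}_p(\mathbf{U}_p)^T
      \left[1-\cos(2\pi\langle\mathbf{h},\mathbf{U}_p\rangle)\right]\right\}
  = \frac{1}{2}\int_{\mathbb{R}^d}\mathbf{A}(\mathbf{u})\mathbf{A}(\mathbf{u})^T
      \left[1-\cos(2\pi\langle\mathbf{h},\mathbf{u}\rangle)\right]g(\mathbf{u})\,\mathrm{d}\mathbf{u}.
```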

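Compare this expression with the spectral representation of a variogram (Chilès and Delfiner 2012):

$$\gamma (\mathbf {h}) = \int _{\mathbb {R}^d}\frac{1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {u}\rangle \right) }{4\pi ^2\Vert \mathbf {u}\Vert ^2}\,\chi (\mathrm{d}\mathbf {u})$$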

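where \(\chi \) is a positive symmetric measure with no atom at the origin and satisfying

$$\int _{\mathbb {R}^d}\frac{\chi (\mathrm{d}\mathbf {u})}{1+4\pi ^2\Vert \mathbf {u}\Vert ^2} < \infty$$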

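If \(\chi (\mathrm{d}\mathbf {u})\) is absolutely continuous, then the previous representation can be rewritten as:

$$\gamma (\mathbf {h}) = \int _{\mathbb {R}^d}\left[ 1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {u}\rangle \right) \right] f(\mathbf {u})\,\mathrm{d}\mathbf {u}$$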

with \(f(\mathbf {u}) \mathrm{d}\mathbf {u} = \displaystyle {\frac{\chi (\mathrm{d}\mathbf {u})}{4\pi ^2\Vert \mathbf {u}\Vert ^2}}\). Henceforth, f will be referred to as the spectral density of the variogram \(\gamma (\mathbf {h})\). In the multivariate context, this spectral density becomes a \(P \times P\) matrix \(\mathbf {f}: \mathbb {R}^d \rightarrow H^+_P\), associated with the matrix \(\Upsilon (\mathbf {h})\) of direct and cross variograms, where \(H^+_P\) denotes the set of Hermitian positive semi-definite matrices of size \(P\times P\) (Chilès and Delfiner 2012).

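For the simulated vector random field \(\mathbf {Y}_S\) to have direct and cross variograms associated with a given spectral density matrix \(\mathbf {f}\), the following must be satisfied:

$$\int _{\mathbb {R}^d}\left[ 1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {u}\rangle \right) \right] \mathbf {f}(\mathbf {u})\,\mathrm{d}\mathbf {u} = \frac{1}{2}\int _{\mathbb {R}^d}\left[ 1-\cos \left( 2\pi \langle \mathbf {h},\mathbf {u}\rangle \right) \right] \mathbf {A}(\mathbf {u})\mathbf {A}(\mathbf {u})^T g(\mathbf {u})\,\mathrm{d}\mathbf {u},\quad \forall \mathbf {h}\in \mathbb {R}^d$$

or, equivalently,

$$\mathbf {A}(\mathbf {u})\mathbf {A}(\mathbf {u})^T = \frac{2\mathbf {f}(\mathbf {u})}{g(\mathbf {u})} \tag{4}$$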

A real-valued matrix \(\mathbf {A}(\mathbf {u})\) fulfilling the above equation exists if and only if \(\mathbf {f}(\mathbf {u})\) is a real-valued symmetric positive semi-definite matrix for every \(\mathbf {u} \in \mathbb {R}^{d}\) and the support of g contains the support of \(\mathbf {f}\). These conditions ensure that the right-hand side member of Eq. (4) is defined for any \(\mathbf {u}\) in \(\mathbb {R}^d\) and is a real-valued symmetric positive semi-definite matrix. In such a case, \(\mathbf {A}(\mathbf {u})\) can be taken as a square root matrix of \(\dfrac{2\mathbf {f}(\mathbf {u})}{g(\mathbf {u})}\), that is, any real matrix whose product with its own transpose equals \(\dfrac{2\mathbf {f}(\mathbf {u})}{g(\mathbf {u})}\). Consequently, the only restriction on the direct and cross variograms is that the spectral density matrix \(\mathbf {f}(\mathbf {u})\) be positive semi-definite for all \(\mathbf {u} \in \mathbb {R}^d\).
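Any real matrix \(\mathbf {A}(\mathbf {u})\) with \(\mathbf {A}\mathbf {A}^T = 2\mathbf {f}/g\) will do. The sketch below uses the symmetric square root obtained from an eigendecomposition. For positive definite matrices, a Cholesky factor would serve equally well. The function name `weight_matrix` and the tolerance are illustrative choices, not part of the paper. The weight vector \(\boldsymbol{\alpha }_p(\mathbf {u})\) is then read off as the p-th column.

```python
import numpy as np

def weight_matrix(f_u, g_u, tol=1e-12):
    """Real P x P matrix A with A @ A.T == 2 f(u) / g(u) (symmetric square root).

    f_u : real symmetric positive semi-definite spectral density matrix at u.
    g_u : value of the frequency density g at u; must be > 0 where f_u != 0.
    """
    m = 2.0 * np.asarray(f_u, dtype=float) / g_u
    m = 0.5 * (m + m.T)                                 # remove round-off asymmetry
    w, v = np.linalg.eigh(m)                            # m = v diag(w) v^T
    if w.min() < -tol * max(np.abs(w).max(), 1.0):
        raise ValueError("2 f(u) / g(u) is not positive semi-definite")
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T   # v diag(sqrt(w)) v^T

f_u = np.array([[2.0, 0.5], [0.5, 1.0]])
A = weight_matrix(f_u, 0.25)
alpha = [A[:, p] for p in range(A.shape[1])]            # alpha_p(u) = p-th column of A
```

An indefinite input, such as a cross spectral density larger than the geometric mean of the direct ones, is rejected instead of silently producing wrong weights.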

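Finally, to obtain a vector random field with multivariate Gaussian finite-dimensional distributions, one can add and properly scale many independent basic random fields defined as in Eq. (2):

$$\mathbf {Y}_S(\mathbf {x}) = \frac{1}{\sqrt{L}}\sum _{l=1}^{L}\sum _{p=1}^{P}\boldsymbol{\alpha }_p(\mathbf {U}_{l,p})\left[ \cos \left( 2\pi \langle \mathbf {x},\mathbf {U}_{l,p}\rangle + \phi _{l,p}\right) - \cos (\phi _{l,p})\right] ,\quad \mathbf {x}\in \mathbb {R}^d \tag{5}$$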

with \(L \in \mathbb {N}^{*}\). If L is large, the finite-dimensional distributions of \(\mathbf {Y}_S\) are approximately multinormal, by virtue of the multivariate central limit theorem, while its expectation and its spatial correlation structure (direct and cross variograms) remain the same as those of the random field defined in Eq. (2).

Accordingly, the first three properties introduced in Sect. 1 to define an intrinsic vector Gaussian random field are exactly reproduced, while the fourth property is only approximate as the simulated random field is not perfectly Gaussian. To determine whether or not the approximation is acceptable, one approach is to compare the distribution of a linear combination of \(\mathbf {Y}_S\) at specific locations with the distribution that would be obtained if \(\mathbf {Y}_S\) were perfectly Gaussian; an upper bound of the Kolmogorov distance between both distributions can be obtained by using the Berry-Esseen theorem (Lantuéjoul 1994; Emery and Lantuéjoul 2008). Another approach is to check the fluctuations of regional statistics calculated over a set of realizations, by means of hypothesis testing (Emery 2008).

In summary, the steps for simulating a P-variate intrinsic Gaussian random field at a given set of target locations in \(\mathbb {R}^d\) are the following:

1. Identify the spectral density matrix \(\mathbf {f}: \mathbb {R}^d \rightarrow H^+_P\) associated with the direct and cross variograms of the target random field.

2. Choose a probability density \(g: \mathbb {R}^d \rightarrow \mathbb {R}_{+}\) with support containing the support of \(\mathbf {f}\).

3. Choose a large integer L.

4. For \(p=1,\ldots ,P\) and \(l=1,\ldots ,L\):

   (a) Generate a random phase \(\phi _{l,p}\) uniformly distributed on \([0,2\pi )\).

   (b) Generate a random vector \(\mathbf {U}_{l,p}\) with density g.

   (c) Calculate the square root of matrix \(\dfrac{2\mathbf {f}(\mathbf {U}_{l,p})}{g(\mathbf {U}_{l,p})}\).

   (d) Identify \(\alpha _p(\mathbf {U}_{l,p})\) as the p-th column of the square root matrix calculated at step (c).

5. Calculate the simulated random field \(\mathbf {Y}_S\) at all target locations as per Eq. (5).
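The five steps above can be sketched in Python. This is a minimal, unoptimized sketch under the following stated assumptions:

- The basic waves are taken as \(\cos (2\pi \langle \mathbf {x},\mathbf {U}_{l,p}\rangle +\phi _{l,p})-\cos (\phi _{l,p})\), following the \(2\pi \) convention of the spectral representation above. The subtracted term enforces \(\mathbf {Y}_S(\mathbf {0})=\mathbf {0}\).
- The sum is scaled by \(1/\sqrt{L}\).
- The callables `f`, `g` and `sample_g`, as well as the function names, are hypothetical user-supplied interfaces.

```python
import numpy as np

def sqrt_psd(m):
    """Symmetric square root S of a real symmetric PSD matrix m (S @ S.T == m)."""
    w, v = np.linalg.eigh(0.5 * (m + m.T))
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def simulate_intrinsic(x, f, g, sample_g, P, L=500, rng=None):
    """Sketch of steps 1-5 for a P-variate intrinsic field at arbitrary locations.

    x        : (n, d) array of target locations (any configuration).
    f        : f(u) -> (P, P) real symmetric PSD spectral density matrix at u.
    g        : g(u) -> value of the frequency density at u (g > 0 where f != 0).
    sample_g : sample_g(rng) -> one frequency vector of shape (d,) drawn from g.
    Returns an (n, P) array whose i-th row approximates Y(x_i); Y(0) = 0 exactly.
    """
    rng = np.random.default_rng(rng)
    y = np.zeros((x.shape[0], P))
    for _ in range(L):
        for p in range(P):
            phi = rng.uniform(0.0, 2.0 * np.pi)                      # step 4(a)
            u = sample_g(rng)                                         # step 4(b)
            A = sqrt_psd(2.0 * np.atleast_2d(f(u)) / g(u))            # step 4(c)
            alpha_p = A[:, p]                                         # step 4(d)
            wave = np.cos(2.0 * np.pi * (x @ u) + phi) - np.cos(phi)  # zero at x = 0
            y += np.outer(wave, alpha_p)
    return y / np.sqrt(L)                                             # step 5
```

The cost grows linearly with L × P × (number of locations), and no grid structure is required. A first optimization would be to vectorize the loop over l.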

3 Examples

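The proposed algorithm is now tested to simulate intrinsic random fields on a regular two-dimensional grid (\(d=2\)) with \(500\times 500\) nodes and a unit mesh. The simulation is performed by adding \(L = 500\) basic random fields in Eq. (5). The probability density g is chosen as the following function (depending on two positive scalar parameters a and \(\nu \)) with support equal to \(\mathbb {R}^d\):

$$g(\mathbf {u}) = \frac{\Gamma \left( \nu +\frac{d}{2}\right) }{\Gamma (\nu )\,\pi ^{d/2}}\,(2\pi a)^d\left( 1+4\pi ^2a^2\Vert \mathbf {u}\Vert ^2\right) ^{-\nu -\frac{d}{2}},\quad \mathbf {u}\in \mathbb {R}^d$$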

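which is the spectral density of an isotropic Matérn variogram with unit sill, scale parameter a and shape parameter \(\nu \) (Lantuéjoul 2002):

$$\gamma (\mathbf {h}) = 1-\frac{1}{2^{\nu -1}\Gamma (\nu )}\left( \frac{\Vert \mathbf {h}\Vert }{a}\right) ^{\nu }K_{\nu }\left( \frac{\Vert \mathbf {h}\Vert }{a}\right) ,\quad \mathbf {h}\in \mathbb {R}^d$$

where \(K_{\nu }\) is the modified Bessel function of the second kind of order \(\nu \).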

According to Emery and Lantuéjoul (2006), a random vector in \(\mathbb {R}^d\) with probability density g can be simulated by dividing a standard Gaussian random vector by the square root of an independent standard gamma random variable with shape parameter \(\nu \), and rescaling the result according to a. In the following examples, which differ by the expression assumed for the cross variograms of the simulated random fields, we will consider the specific values \(a = 30\) and \(\nu = 0.25\), although the algorithm is applicable with any other choice for these parameters.
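The sketch below implements the density g and the Gaussian/gamma construction. It assumes the Matérn spectral density in the \(2\pi \) Fourier convention, \(g(\mathbf {u})\propto (1+4\pi ^2a^2\Vert \mathbf {u}\Vert ^2)^{-\nu -d/2}\). Under this convention, a frequency can be drawn as \(\mathbf {X}/(2\pi a\sqrt{2G})\), with \(\mathbf {X}\) standard Gaussian in \(\mathbb {R}^d\) and G a standard gamma variable with shape \(\nu \). The constant \(2\pi a\sqrt{2}\) follows from this convention and would change under another one. Function names are illustrative.

```python
import numpy as np
from scipy.special import gammaln

def matern_density(u, a, nu):
    """Density g(u) proportional to (1 + 4 pi^2 a^2 ||u||^2)^(-nu - d/2); u has shape (n, d)."""
    u = np.atleast_2d(u)
    d = u.shape[1]
    r2 = np.sum(u ** 2, axis=1)
    log_c = (gammaln(nu + d / 2) - gammaln(nu) - (d / 2) * np.log(np.pi)
             + d * np.log(2 * np.pi * a))
    return np.exp(log_c - (nu + d / 2) * np.log1p(4 * np.pi ** 2 * a ** 2 * r2))

def sample_matern_frequencies(size, d, a, nu, rng):
    """Draw `size` frequency vectors with density g as X / (2 pi a sqrt(2 G)),
    with X ~ N(0, I_d) and G ~ Gamma(shape=nu, scale=1) independent."""
    G = rng.gamma(nu, 1.0, size=size)
    X = rng.standard_normal((size, d))
    return X / (2 * np.pi * a * np.sqrt(2 * G))[:, None]

rng = np.random.default_rng(1)
U = sample_matern_frequencies(200_000, d=2, a=30.0, nu=0.25, rng=rng)
```

In d = 2, the radial distribution function of g has the closed form \(1-(1+4\pi ^2a^2r^2)^{-\nu }\), which gives a quick Monte Carlo check of the sampler. With \(\nu = 0.25\) the distribution is heavy-tailed, so a few sampled frequencies are very large.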

3.1 Example 1: Intrinsic vector random field with power variograms

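In this subsection, let us consider the case when all the direct and cross variograms are of the form \(\mathbf {h}\longrightarrow \Vert \frac{\mathbf {h}}{b}\Vert ^{\theta }\), where \(b > 0\) and \(\theta \in (0, 2)\). The spectral density of such a power variogram is (Chilès and Delfiner 2012):

$$f(\mathbf {u}) = \frac{\theta \,\Gamma \left( \frac{\theta +d}{2}\right) }{2\pi ^{\theta +d/2}\,\Gamma \left( 1-\frac{\theta }{2}\right) b^{\theta }}\,\Vert \mathbf {u}\Vert ^{-\theta -d},\quad \mathbf {u}\in \mathbb {R}^d\setminus \{\mathbf {0}\} \tag{8}$$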
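The spectral density of the power variogram can be checked numerically in d = 1. The check evaluates \(\int (1-\cos 2\pi \langle \mathbf {h},\mathbf {u}\rangle )f(\mathbf {u})\,\mathrm{d}\mathbf {u}\) and compares it with \(\Vert \mathbf {h}/b\Vert ^{\theta }\). The sketch assumes the constant \(\theta \Gamma (\frac{\theta +d}{2})/(2\pi ^{\theta +d/2}\Gamma (1-\frac{\theta }{2})b^{\theta })\) in the \(2\pi \) convention. It uses SciPy's `quad`, with its Fourier-weight option for the oscillatory tail. Function names are illustrative.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma

def power_spectral_constant(theta, b, d):
    """Constant c in f(u) = c * ||u||**(-theta - d) for gamma(h) = ||h / b||**theta."""
    return theta * gamma((theta + d) / 2) / (
        2 * np.pi ** (theta + d / 2) * gamma(1 - theta / 2) * b ** theta)

def variogram_from_density_1d(h, theta, b):
    """Numerically evaluate int_R (1 - cos(2 pi u h)) f(u) du for d = 1."""
    c = power_spectral_constant(theta, b, 1)
    w = 2 * np.pi * h
    # On (0, 1] the integrand behaves like u**(1 - theta) near 0: integrable.
    near, _ = quad(lambda u: (1 - np.cos(w * u)) * c * u ** (-theta - 1), 0, 1)
    # On [1, inf): the non-oscillatory part is c / theta in closed form; the
    # oscillatory part uses QUADPACK's Fourier-integral routine (weight='cos').
    tail_cos, _ = quad(lambda u: c * u ** (-theta - 1), 1, np.inf, weight='cos', wvar=w)
    return 2 * (near + c / theta - tail_cos)    # factor 2: integrand is even in u

print(variogram_from_density_1d(3.0, 0.5, 2.0), (3.0 / 2.0) ** 0.5)
```

For \(\theta = 1\) and b = 1, the integral reduces to the classical identity \(\int _{\mathbb {R}}(1-\cos 2\pi uh)/(2\pi ^2u^2)\,\mathrm{d}u=|h|\).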

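Consider a bivariate random field (\(P=2\)) with a matrix of direct and cross variograms of the form:

$$\Upsilon (\mathbf {h})=\begin{pmatrix} \left\Vert \frac{\mathbf {h}}{b}\right\Vert ^{\theta _1} & \rho \left\Vert \frac{\mathbf {h}}{b}\right\Vert ^{\theta _{12}}\\ \rho \left\Vert \frac{\mathbf {h}}{b}\right\Vert ^{\theta _{12}} & \left\Vert \frac{\mathbf {h}}{b}\right\Vert ^{\theta _2}\end{pmatrix}$$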

with \(\theta _1 \in (0,2)\), \(\theta _2 \in (0,2)\), \(\theta _{12} \in (0,2)\) and \(\rho \in \mathbb {R}\).

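The corresponding spectral density matrix for a given frequency vector \(\mathbf {u} \in \mathbb {R}^d\) is:

$$\mathbf {f}(\mathbf {u}) = \begin{pmatrix} \omega (\theta _1)\Vert \mathbf {u}\Vert ^{-\theta _1-d} & \rho \,\omega (\theta _{12})\Vert \mathbf {u}\Vert ^{-\theta _{12}-d}\\ \rho \,\omega (\theta _{12})\Vert \mathbf {u}\Vert ^{-\theta _{12}-d} & \omega (\theta _2)\Vert \mathbf {u}\Vert ^{-\theta _2-d}\end{pmatrix},\quad \omega (\theta )=\frac{\theta \,\Gamma \left( \frac{\theta +d}{2}\right) }{2\pi ^{\theta +d/2}\,\Gamma \left( 1-\frac{\theta }{2}\right) b^{\theta }} \tag{10}$$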

This matrix is real-valued and symmetric. It is positive semi-definite for any \(\mathbf {u}\) in \(\mathbb {R}^d\) if and only if the following conditions hold (proof in Appendix 2):

1. \(\theta _{12}=\displaystyle {\frac{\theta _1+\theta _2}{2}}\)

2. \(|\rho |\le \displaystyle {\frac{2\Gamma \left( 1-\frac{\theta _1+\theta _2}{4}\right) }{(\theta _1+\theta _2)\Gamma \left( \frac{\theta _1+\theta _2}{4}+\frac{d}{2}\right) }\sqrt{\frac{\theta _1\theta _2\Gamma \left( \frac{\theta _1+d}{2}\right) \Gamma \left( \frac{\theta _2+d}{2}\right) }{\Gamma \left( 1-\frac{\theta _1}{2}\right) \Gamma \left( 1-\frac{\theta _2}{2}\right) }}}.\)
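Condition 2 is straightforward to evaluate. The transcription below (function name hypothetical) shows that, for \(\theta _1=0.5\), \(\theta _2=1.5\) and d = 2, the bound on \(|\rho |\) is exactly 0.75. This follows from \(\Gamma (1.25)=0.25\,\Gamma (0.25)\) and \(\Gamma (1.75)=0.75\,\Gamma (0.75)\). Hence the value \(\rho = 0.5\) used for Fig. 1 is admissible. When \(\theta _1=\theta _2\), the bound reduces to 1.

```python
from math import gamma, sqrt

def rho_max_power(theta1, theta2, d):
    """Largest |rho| allowed by condition 2 (with theta12 = (theta1 + theta2) / 2)."""
    s = (theta1 + theta2) / 4
    pref = 2 * gamma(1 - s) / ((theta1 + theta2) * gamma(s + d / 2))
    root = sqrt(theta1 * theta2 * gamma((theta1 + d) / 2) * gamma((theta2 + d) / 2)
                / (gamma(1 - theta1 / 2) * gamma(1 - theta2 / 2)))
    return pref * root

print(rho_max_power(0.5, 1.5, 2))  # ≈ 0.75
```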

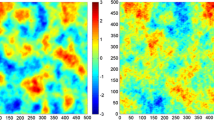

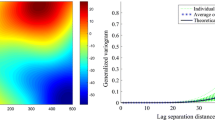

As an example, Fig. 1 shows the map of one realization obtained by running the proposed algorithm with \(\theta _1=0.5, \theta _2=1.5, \theta _{12}=1.0\) and \(\rho =0.5\). Figure 2 compares the experimental direct and cross variograms of one hundred realizations (calculated along the abscissa axis) with the theoretical power variograms. The average experimental variograms almost perfectly match the theoretical ones, which corroborates that the simulated random field reproduces the desired spatial correlation structure.
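The experimental direct and cross variograms along the abscissa axis are half the average product of increments, mirroring Eq. (1). The sketch assumes that each realization is stored as an array indexed by (x, y, component) on a unit mesh. The function name is illustrative.

```python
import numpy as np

def experimental_variograms_x(y, max_lag):
    """Experimental direct and cross variograms along the first (abscissa) grid axis.

    y: array of shape (nx, ny, P) holding one realization on a regular grid.
    Returns gam of shape (max_lag, P, P), where gam[k-1, i, j] estimates
    0.5 * E{[Y_i(x+h) - Y_i(x)] [Y_j(x+h) - Y_j(x)]} for a lag of k grid units.
    """
    nx, ny, P = y.shape
    gam = np.empty((max_lag, P, P))
    for k in range(1, max_lag + 1):
        inc = (y[k:, :, :] - y[:-k, :, :]).reshape(-1, P)   # all increments at lag k
        gam[k - 1] = 0.5 * inc.T @ inc / inc.shape[0]
    return gam
```

Averaging this output over the realizations yields the average experimental curves that are compared with the theoretical models.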

3.2 Example 2: Intrinsic vector random field with power-Matérn variograms

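In this subsection, one is interested in simulating a bivariate intrinsic random field whose direct variograms are power variograms with scale parameters \(b_{1} > 0\) and \(b_{2} > 0\) and exponents \(\theta _{1}\) and \(\theta _{2}\) in (0, 2), respectively, and whose cross variogram is a Matérn variogram with sill \(\rho \) in \(\mathbb {R}\), scale parameter \(a_{12}>0\) and shape parameter \(\nu _{12}>0\):

$$\Upsilon (\mathbf {h}) = \begin{pmatrix} \left\Vert \frac{\mathbf {h}}{b_1}\right\Vert ^{\theta _1} & \rho \,\gamma _{12}(\mathbf {h})\\ \rho \,\gamma _{12}(\mathbf {h}) & \left\Vert \frac{\mathbf {h}}{b_2}\right\Vert ^{\theta _2}\end{pmatrix},\quad \gamma _{12}(\mathbf {h}) = 1-\frac{1}{2^{\nu _{12}-1}\Gamma (\nu _{12})}\left( \frac{\Vert \mathbf {h}\Vert }{a_{12}}\right) ^{\nu _{12}}K_{\nu _{12}}\left( \frac{\Vert \mathbf {h}\Vert }{a_{12}}\right)$$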

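The corresponding spectral density matrix for a given frequency vector \(\mathbf {u} \in \mathbb {R}^d\) is:

$$\mathbf {f}(\mathbf {u}) = \begin{pmatrix} \omega _1\Vert \mathbf {u}\Vert ^{-\theta _1-d} & \rho \,g_{12}(\mathbf {u})\\ \rho \,g_{12}(\mathbf {u}) & \omega _2\Vert \mathbf {u}\Vert ^{-\theta _2-d}\end{pmatrix} \tag{12}$$

with \(\omega _i = \dfrac{\theta _i\,\Gamma \left( \frac{\theta _i+d}{2}\right) }{2\pi ^{\theta _i+d/2}\,\Gamma \left( 1-\frac{\theta _i}{2}\right) b_i^{\theta _i}}\) for \(i=1,2\), and \(g_{12}(\mathbf {u}) = \dfrac{\Gamma \left( \nu _{12}+\frac{d}{2}\right) }{\Gamma (\nu _{12})\,\pi ^{d/2}}(2\pi a_{12})^d\left( 1+4\pi ^2a_{12}^2\Vert \mathbf {u}\Vert ^2\right) ^{-\nu _{12}-\frac{d}{2}}\).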

Again, this matrix is real-valued and symmetric. It is positive semi-definite for any \(\mathbf {u}\) in \(\mathbb {R}^d\) if and only if the following conditions hold (proof in Appendix 3):

1. \(\displaystyle {\nu _{12}\ge \frac{\theta _1+\theta _2}{4}}\)

2.

As an example, Fig. 3 shows the map of one realization for \(\theta _1=0.5, \theta _2=1.5, b_1 = 10, b_2=20, a_{12}=10, \nu _{12} = 1\) and \(\rho =0.4\), while Fig. 4 compares the experimental direct and cross variograms of one hundred realizations (calculated along the abscissa axis) with the theoretical power and Matérn variogram models. As for the first example, the match between the average experimental variograms and the theoretical variogram models is almost perfect.
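For Example 2, the bound on \(|\rho |\) can also be found by brute force. It is the minimum over \(\Vert \mathbf {u}\Vert \) of \(\sqrt{f_{11}(\mathbf {u})f_{22}(\mathbf {u})}/f_{12}(\mathbf {u})\). The sketch makes the following assumptions:

- It uses the spectral densities of the power variogram and of the unit-sill Matérn variogram in the \(2\pi \) Fourier convention; both are written out in the code.
- It replaces the closed-form maximum of Appendix 3 with a logarithmic radial grid, so it is a numerical check rather than the paper's derivation.
- Function names are illustrative.

With the parameters of Fig. 3, it returns a bound of about 0.69, so \(\rho = 0.4\) is admissible.

```python
import numpy as np
from scipy.special import gammaln

def log_power_density(r, theta, b, d):
    """log of the spectral density of ||h/b||**theta at ||u|| = r (2*pi convention)."""
    return (np.log(theta) + gammaln((theta + d) / 2) - np.log(2.0)
            - (theta + d / 2) * np.log(np.pi) - gammaln(1 - theta / 2)
            - theta * np.log(b) - (theta + d) * np.log(r))

def log_matern_density(r, a, nu, d):
    """log of the spectral density of a unit-sill Matern variogram at ||u|| = r."""
    return (gammaln(nu + d / 2) - gammaln(nu) - (d / 2) * np.log(np.pi)
            + d * np.log(2 * np.pi * a) - (nu + d / 2) * np.log1p((2 * np.pi * a * r) ** 2))

def rho_max_power_matern(theta1, theta2, b1, b2, a12, nu12, d, n_grid=200_001):
    """Largest |rho| keeping det f(u) >= 0, found by minimizing
    sqrt(f11 f22) / f12 over a logarithmic grid of radii ||u||."""
    if nu12 < (theta1 + theta2) / 4:      # condition 1 fails: no admissible rho != 0
        return 0.0
    r = np.logspace(-6, 3, n_grid)
    log_ratio = (0.5 * (log_power_density(r, theta1, b1, d) + log_power_density(r, theta2, b2, d))
                 - log_matern_density(r, a12, nu12, d))
    return float(np.exp(log_ratio.min()))

# Parameters of Fig. 3: theta1 = 0.5, theta2 = 1.5, b1 = 10, b2 = 20, a12 = 10, nu12 = 1, d = 2
bound = rho_max_power_matern(0.5, 1.5, 10.0, 20.0, 10.0, 1.0, d=2)
```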

4 Discussion and conclusions

A few comments on the proposed algorithm are in order. At a given location \(\mathbf {x}\), the simulated random field \(\mathbf {Y}_S\) (Eq. (5)) is calculated by projecting \(\mathbf {x}\) onto a set of frequency vectors \(\{\mathbf {U}_{l,p}: l = 1, \ldots , L; p = 1,\ldots , P\}\), which makes the proposed algorithm a particular case of the turning bands method (Matheron 1973). Moreover, since the basic random field defined in Eq. (2) is a weighted sum of cosine waves with weights \((\varvec{\alpha }_{p}(\mathbf {U}_{l,p}))\) that depend on the spectral density of the target direct and cross variograms, the proposal can be classified as a spectral turning-bands algorithm. This algorithm generalizes previous approaches for simulating stationary Gaussian vector random fields (Shinozuka 1971; Mantoglou 1987; Emery et al. 2016) or univariate random fields with stationary Gaussian increments (Emery and Lantuéjoul 2008).

Interestingly, the frequency vectors \(\{\mathbf {U}_{l,p}: l = 1, \ldots , L;\,\,p = 1,\ldots , P\}\) are generated with a probability density g that can be chosen by the user. They are not generated from the spectral density f of the variogram associated with a specific component of the desired intrinsic vector random field (Eq. 8), which is not a genuine probability density function (f is not integrable over \(\mathbb {R}^d\)). Some solutions based on the spectral density f were proposed in past decades, but they are approximate since they require truncating f at low frequencies (Chilès 1995). Also note that the algorithm proposed in this paper is intrinsically different from other spectral approaches based on discrete Fourier transforms, which rely on periodizations and/or circulant embeddings and allow simulating the desired random field only at evenly-spaced locations in \(\mathbb {R}^d\). Here, the simulation can be performed for any number and any configuration of the target locations. Apart from this versatility, the proposed spectral turning-bands algorithm appears to be faster than existing algorithms, with a computational cost to generate a simulated field directly proportional to the number of target locations (see Emery et al. 2016 for an analysis of the necessary floating point operations). It also has low memory storage requirements.

The presented examples also show models for bivariate intrinsic random fields with different spatial correlation structures. In particular, in the second example (power-Matérn model), each component of the simulated random field has a power variogram and is therefore self-similar. However, the bivariate random field is no longer self-similar when its two components are considered jointly, because the cross variogram is not self-similar. In contrast, in the first example (power-power model), the direct and cross variograms are self-similar and so is the simulated bivariate random field (Herzfeld 1993). This random field is an approximation of a bivariate fractional Brownian surface (Amblard et al. 2013), which would be obtained if the number L of basic random fields were infinitely large; in that case, the increments would have multivariate Gaussian distributions. This example is quite restrictive, insofar as the exponent associated with the cross-structure must be the average of the exponents associated with the direct structures. It is nevertheless of interest because it can be used to generate a long-range dependent field (with exponent greater than 1.0) cross-correlated with a short-range dependent field (with exponent less than 1.0). The procedure to find the conditions for admissible models, based on analyzing the positive semi-definiteness of the spectral density matrices, can easily be extended to cross variogram models other than the power or Matérn ones and to more components \((P > 2)\).

In conclusion, we designed a continuous spectral algorithm that simulates vector random fields with the spatial correlation structure of a desired multivariate intrinsic random field. Its only approximation is that the finite-dimensional distributions of the simulated random field are not exactly multivariate Gaussian, because the number L of basic random fields summed in Eq. (5) is finite. The algorithm stands out for its versatility, speed and low computational cost.

References

Albeverio S, Jorgensen PET, Paolucci AM (2012) On fractional Brownian motion and wavelets. Complex Anal Oper Theory 6:33–63

Amblard PO, Coeurjolly JF, Lavancier F, Philippe A (2013) Basic properties of the multivariate fractional Brownian motion. Séminaires et Congrès 28:65–87

Arakawa K, Krotkov E (1994) Modeling of natural terrain based on fractal geometry. Syst Comput Jpn 25(11):99–113

Arroyo D, Emery X (2015) Simulation of intrinsic random fields of order k with Gaussian generalized increments by Gibbs sampling. Math Geosci 47(8):955–974

Asmussen S, Taksar M (1997) Controlled diffusion models for optimal dividend pay-out. Insur Math Econ 20(1):1–15

Asmussen S (1998) Stochastic simulation with a view towards stochastic processes. University of Aarhus, Centre for Mathematical Physics and Stochastics

Beran J (1994) Statistics for long-memory processes. Chapman and Hall, London

Chen CC, Daponte J, Fox M (1989) Fractal feature analysis and classification in medical imaging. IEEE Trans Med Imaging 8:133–142

Chi M, Neal E, Young GK (1973) Practical application of fractional Brownian motion and noise to synthetic hydrology. Water Resour Res 9(6):1523–1533

Chilès JP (1995) Quelques méthodes de simulations de fonctions aléatoires intrinsèques. In: Cahiers de Géostatistique 5, Paris School of Mines, pp 97–112

Chilès JP, Delfiner P (2012) Geostatistics: modeling spatial uncertainty, 2nd edn. John Wiley & Sons, New York

Coeurjolly JF (2000) Simulation and identification of the fractional Brownian motion: a bibliographical and comparative study. J Stat Softw 5(7):1–53

Collins JJ, De Luca CJ (1994) Upright, correlated random walks: a statistical-biomechanics approach to the human postural control system. Chaos 5(1):57–63

Combes JM, Grossmann A, Tchmitchian PH (1989) Wavelets. Springer, New York

Comegna A, Coppola A, Comegna V, Sommella A, Vitale CD (2013) Use of a fractional Brownian motion model to mimic spatial horizontal variation of soil physical and hydraulic properties displaying a power-law variogram. Proc Environ Sci 19:416–425

Dale MRT, Mah M (1998) The use of wavelets for spatial pattern analysis in ecology. J Veg Sci 9:805–814

Danudirdjo D, Hirose A (2011) Synthesis of two-dimensional fractional Brownian motion via circulant embedding. In: 18th IEEE International Conference on Image Processing, pp 1085–1088

Emery X, Arroyo D, Porcu E (2016) An improved spectral turning-bands algorithm for simulating stationary vector Gaussian random fields. Stoch Environ Res Risk Assess, 1–11

Emery X (2008) Statistical tests for validating geostatistical simulation algorithms. Comput Geosci 34(11):1610–1620

Emery X, Lantuéjoul C (2006) TBSIM: a computer program for conditional simulation of three-dimensional Gaussian random fields via the turning bands method. Comput Geosci 32(10):1615–1628

Emery X, Lantuéjoul C (2008) A spectral approach to simulating intrinsic random fields with power and spline generalized covariances. Comput Geosci 12(1):121–132

Flandrin P (1989) On the spectrum of fractional Brownian motion. IEEE Trans Inf Theory 35:197–199

Flandrin P (1992) Wavelet analysis and synthesis of fractional Brownian motion. IEEE Trans Inf Theory 38(2):910–917

Fournier A, Fussell D, Carpenter RL (1982) Computer rendering of stochastic models. Graph Image Process 25(6):371–384

Herzfeld UC (1993) Fractals in geosciences: challenges and concerns. Computers in geology: 25 years of progress. Oxford University Press, New York, pp 176–230

Horn RA, Johnson CR (1985) Matrix analysis. Cambridge University Press, Cambridge

Huang J, Turcotte DL (1989) Fractal mapping of digitized images: application to the topography of Arizona and comparisons with synthetic images. J Geophys Res 94:7491–7495

Jensen OG, Todoeschuk JP, Crossley DJ, Gregotski M (1991) Fractal linear models of geophysical processes. In: Schertzer D, Lovejoy S (eds) Non-linear variability in geophysics, scaling and fractals. Kluwer Academic, Dordrecht, pp 227–239

Lantuéjoul C (1994) Non conditional simulation of stationary isotropic multigaussian random functions. In: Armstrong M, Dowd PA (eds) Geostatistical simulations. Kluwer Academic, Dordrecht, pp 147–177

Lantuéjoul C (2002) Geostatistical simulation: models and algorithms. Springer, Berlin

Malinverno A (1995) Fractals and ocean floor topography: a review and a model. In: Barton CC, La Pointe PR (eds) Fractals in the earth sciences. Springer, New York, pp 107–130

Mandelbrot BB (1982) The fractal geometry of nature. Freeman, San Francisco

Mantoglou A (1987) Digital simulation of multivariate two- and three-dimensional stochastic processes with a spectral turning bands method. Math Geol 19(2):129–149

Matheron G (1973) The intrinsic random functions and their applications. Adv Appl Probab 5:439–468

Michna Z (1999) On tail probabilities and first passage times for fractional Brownian motion. Math Methods Oper Res 49:335–354

Molz FJ, Rajaram H, Lu S (2004) Stochastic fractal-based models of heterogeneity in subsurface hydrology: origins, applications, limitations, and future research questions. Rev Geophys 42(1):1–42

Ott WR (1981) A Brownian motion model of pollutant concentration distributions. Department of Statistics and SIAM Institute for Mathematics and Society, Stanford University, p 57

Palmer MW (1992) The coexistence of species in fractal landscapes. Am Nat 139:375–397

Peitgen HO, Saupe D (1988) The science of fractal images. Springer, New York

Pipiras V (2005) Wavelet-based simulation of fractional Brownian motion revisited. Appl Comput Harmonic Anal 19(1):49–60

Pozdnyakov V, Meyer T, Wang YB, Yan J (2014) On modeling animal movements using Brownian motion with measurement error. Ecology 95(2):247–253

Romanow AL (1984) A Brownian motion model for decision making. J Math Sociol 10:1–28

Shinozuka M (1971) Simulation of multivariate and multidimensional random processes. J Acoust Soc Am 49(1B):357–367

Smith WT (1994) Investment, uncertainty and price stabilization schemes. J Econ Dyn Control 18:561–579

Stein ML (2002) Fast and exact simulation of fractional Brownian surfaces. J Comput Graph Stat 11(3):587–599

Turcotte DL (1986) A fractal model for crustal deformation. Tectonophysics 132:261–269

Turcotte DL (1997) Fractals and chaos in geology and geophysics, 2nd edn. Cambridge University Press, New York

Voss RF (1985) Random fractal forgeries. In: Earnshaw RA (ed). Springer, Berlin, pp 805–835

Walker JS (1997) Fourier analysis and wavelet analysis. Notices AMS 44(6):658–670

Willinger W, Taqqu MS, Leland WE, Wilson DV (1995) Self-similarity in high-speed packet traffic: analysis and modeling of Ethernet traffic measurements. Stat Sci 10(1):67–85

Acknowledgments

The authors acknowledge the funding by the Chilean Commission for Scientific and Technological Research, through Projects CONICYT / FONDECYT / POSTDOCTORADO / N\(^{\circ }\)3140568, CONICYT / FONDECYT / REGULAR / N\(^{\circ }\)1130085 and CONICYT PIA Anillo ACT1407. Constructive comments from two anonymous reviewers helped to improve the manuscript.


Appendices

Appendix 1: Covariance and variogram

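Consider a scalar intrinsic random field \(Y=\{Y(\mathbf {x}):\mathbf {x}\in \mathbb {R}^d\}\) and denote by \(\gamma (\mathbf {h})\) its variogram for the separation vector \(\mathbf {h}\). For given locations \(\mathbf {x}\), \(\mathbf {x}'\) and \(\mathbf {x}_0\), let us express the covariance \(C(\mathbf {x}, \mathbf {x}^{\prime}, \mathbf {x}_0)\) between the two zero-mean increments \(Y(\mathbf {x}) - Y(\mathbf {x}_0)\) and \(Y(\mathbf {x}') - Y(\mathbf {x}_0)\) in terms of the variogram:

$$2\gamma (\mathbf {x}^{\prime}-\mathbf {x}) = \mathbb {E}\left\{ \left[ \left( Y(\mathbf {x}^{\prime})-Y(\mathbf {x}_0)\right) - \left( Y(\mathbf {x})-Y(\mathbf {x}_0)\right) \right] ^2\right\} = 2\gamma (\mathbf {x}^{\prime}-\mathbf {x}_0) + 2\gamma (\mathbf {x}-\mathbf {x}_0) - 2\,C(\mathbf {x}, \mathbf {x}^{\prime}, \mathbf {x}_0)$$

Accordingly:

$$C(\mathbf {x}, \mathbf {x}^{\prime}, \mathbf {x}_0) = \gamma (\mathbf {x}-\mathbf {x}_0) + \gamma (\mathbf {x}^{\prime}-\mathbf {x}_0) - \gamma (\mathbf {x}^{\prime}-\mathbf {x})$$

Note that this covariance is unchanged by shifting the locations \(\mathbf {x}\), \(\mathbf {x}'\) and \(\mathbf {x}_0\) by a given vector \(\mathbf {h}\), i.e., \(C(\mathbf {x}, \mathbf {x}^{\prime}, \mathbf {x}_0) = C(\mathbf {x}+\mathbf {h}, \mathbf {x}^{\prime}+\mathbf {h}, \mathbf {x}_0+\mathbf {h})\), showing that the intrinsic random field has second-order stationary increments. Furthermore, by taking \(\mathbf {x}_0 = \mathbf {0}\) and considering that \(Y(\mathbf {0}) = 0\), it follows that:

$$\mathbb {E}\left\{ Y(\mathbf {x})\,Y(\mathbf {x}^{\prime})\right\} = \gamma (\mathbf {x}) + \gamma (\mathbf {x}^{\prime}) - \gamma (\mathbf {x}^{\prime}-\mathbf {x})$$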

which shows that the knowledge of the variogram is equivalent to that of the covariance function of the random field.

Appendix 2: Existence conditions for Example 1

The spectral density matrix \(\mathbf {f}(\mathbf {u})\) defined in Eq. (10) is real and symmetric, hence Hermitian, and its diagonal entries (the spectral densities of the direct power variograms) are positive. For such a \(2\times 2\) matrix, positive semi-definiteness is equivalent to the non-negativity of its determinant (Horn and Johnson 1985), that is:

that is:

For this inequality to hold, one first needs to verify the following:

If \(2\theta _{12}<\theta _1+\theta _2\), inequality (14) is not satisfied for \(\Vert \mathbf {u}\Vert >1\), whereas if \(2\theta _{12}>\theta _1+\theta _2\), inequality (14) is not satisfied for \(\Vert \mathbf {u}\Vert <1\). Therefore, one must have

in which case Eq. (14) obviously holds \(\forall \mathbf {u}\in \mathbb {R}^d\). Under this condition, one obtains from Eq. (13):

Appendix 3: Existence conditions for Example 2

The spectral density matrix \(\mathbf {f}(\mathbf {u})\) defined in Eq. (12) is real and symmetric with positive diagonal entries; as in Appendix 2, it is therefore Hermitian positive semi-definite if and only if its determinant is non-negative, i.e.:

that is:

with

The mapping \(\varphi :\mathbb {R}_+\rightarrow \mathbb {R}\) is unbounded if \(\theta _1+\theta _2>4\nu _{12}\), in which case inequality (15) cannot be satisfied. Conversely, if \(\theta _1+\theta _2\le 4\nu _{12}\), the maximum of \(\varphi \) is found to be

Therefore, for the spectral density matrix \(\mathbf {f}(\mathbf {u})\) to be positive semi-definite for all \(\mathbf {u}\in \mathbb {R}^d\), the following necessary and sufficient conditions must be fulfilled:

and

In the particular case when \(\theta _1+\theta _2=4\nu _{12}\), then \(\varphi _{\max }=(2\pi a_{12})^{-(\theta _1+\theta _2)}\), and the limit value for \(|\rho|\) is given by


Cite this article

Arroyo, D., Emery, X. Spectral simulation of vector random fields with stationary Gaussian increments in d-dimensional Euclidean spaces. Stoch Environ Res Risk Assess 31, 1583–1592 (2017). https://doi.org/10.1007/s00477-016-1225-7


DOI: https://doi.org/10.1007/s00477-016-1225-7