Abstract

Failure-probability-based global sensitivity (FP-GS) analysis measures the effect of input uncertainty on the failure probability. State-of-the-art methods for estimating the FP-GS are inefficient for rare failure events and for implicit performance functions. Thus, an adaptive Kriging nested Importance Sampling (AK-IS) method is proposed in this work to estimate the FP-GS efficiently. To remove the dependence of the computational cost on the input dimensionality, the proposed method employs an equivalent form of the FP-GS obtained through Bayes’ formula. The AK model is then nested into IS to recognize the failure samples. After all failure samples in the IS sample pool are correctly identified, the Metropolis–Hastings algorithm transforms them into samples that follow the original conditional probability density function (PDF) on the failure domain. From these samples, the conditional PDF of each input on the failure domain, and finally the FP-GS, can be estimated. Several examples in this paper show that the proposed method substantially improves the efficiency of FP-GS estimation compared with the state-of-the-art.



1 Introduction

In recent years, uncertainty analysis of engineering structures, such as those in aerospace engineering, has attracted much attention [1]. The failure probability measures the safety of a structural system under the random uncertainties that are widely present in practice. Failure-probability-based global sensitivity (FP-GS) analysis quantifies the effect of input uncertainty on the failure probability. Once the important random inputs are identified by the FP-GS, the failure probability can be reduced at low cost by reducing the uncertainties of those inputs.

Failure-probability-based sensitivity measures generally fall into two categories. The first is the failure-probability-based local sensitivity [2, 3], defined as the partial derivative of the failure probability with respect to a distribution parameter of an input. It can be estimated by moment-based methods [4,5,6], sampling-based methods and surrogate-based methods. The moment-based methods usually include the second-, third- and fourth-moment methods. The sampling-based methods include the Monte Carlo Simulation (MCS) and advanced MCS variants such as Importance Sampling (IS) [7], Subset Simulation (SS) [8] and Line Sampling (LS) [9]. The surrogate-based methods include the Response Surface Method (RSM) [10], the Support Vector Machine (SVM) [11], the Neural Network (NN) [12] and the adaptive Kriging (AK) model [13]. However, the local sensitivity only reflects the effect of a distribution parameter on the failure probability at its nominal value. The second category is the failure-probability-based global sensitivity (FP-GS), which accounts for the full uncertainty of the random inputs. Cui et al. [14] defined the FP-GS as the average absolute difference between the unconditional failure probability and the conditional failure probability obtained by fixing the input at a realization drawn from its PDF, as shown in Eq. (1).

where \(E[ \cdot ]\) is the expectation operator, \(F\) means the failure event, \(f_{{X_{i} }} (x_{i} )\) is the probability density function (PDF) of the input \(X_{i}\), and \(P\{ \cdot \}\) is the probability operator.

It can be seen from Eq. (1) that the FP-GS reflects the expected effect of the input \(X_{i}\) over its whole distribution on the failure probability. For the sake of computation, Li et al. [15] re-expressed Eq. (1) as the form shown in Eq. (2)

$$ \delta_{i} = E_{X_{i}} \left[ \left( P\{ F\} - P\{ F|X_{i} \} \right)^{2} \right] = \int_{ - \infty }^{ + \infty } \left( P\{ F\} - P\{ F|X_{i} = x_{i} \} \right)^{2} f_{X_{i}} (x_{i} )dx_{i} . $$(2)

Based on Eq. (2), Li et al. discovered that the FP-GS is the exact expression of the variance-based global sensitivity of the failure domain indicator function \(I_{F} ({\varvec{X}})\), i.e.,

$$ \delta_{i} = V_{X_{i}} \left[ E\left( I_{F} ({\varvec{X}})|X_{i} \right) \right], $$(3)

where \(V[ \cdot ]\) is the variance operator, \(I_{F} ({\varvec{X}}){ = }1\) in case the random input vector \({\varvec{X}} \in F\), and \(I_{F} ({\varvec{X}}){ = 0}\) in case \({\varvec{X}} \notin F\).

The direct method for estimating the FP-GS defined in Eq. (2) is the double-loop MCS. The unconditional failure probability \(P\{ F\}\) is first estimated by the MCS. The outer loop then samples realizations of \(X_{i}\); for each realization, the inner loop estimates the conditional failure probability \(P\{ F|X_{i} \}\) by the MCS, and the outer loop averages the squared difference \([P\{ F\} - P\{ F|X_{i} \} ]^{2}\). The double-loop MCS is clearly time-consuming, especially for rare failure events, in which the failure probability is small, e.g., \(10^{ - 4} \sim 10^{ - 7}\) (example 5.2.1 in this work), and for implicit performance functions, which cannot be written as an analytical formula (example 5.2.2 in this work). By the law of large numbers, the double-loop MCS estimate converges to the true value as the number of samples tends to infinity, so its results can serve as the reference.
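For a sense of scale, the standard binomial variance of the MCS estimator gives the sample size needed to meet the 5% coefficient-of-variation criterion used in Sect. 3.1. The figures below are illustrative arithmetic, not results reported in this paper.

$$ \mathrm{CoV}\left( \hat{P}\{ F\} \right) = \sqrt{\frac{1 - P\{ F\} }{N\,P\{ F\} }} \le 0.05 \quad \Rightarrow \quad N \ge \frac{1 - P\{ F\} }{0.05^{2} \,P\{ F\} } $$

$$ P\{ F\} = 10^{ - 4} :\; N \ge \frac{0.9999}{0.0025 \times 10^{ - 4} } \approx 4.0 \times 10^{6} , \qquad P\{ F\} = 10^{ - 7} :\; N \ge \frac{1 - 10^{ - 7} }{0.0025 \times 10^{ - 7} } \approx 4.0 \times 10^{9} $$

In the double loop, a requirement of the same kind applies to the inner estimate of \(P\{ F|X_{i} \}\) at each of the \(n \times N_{1}\) outer realizations.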

Wei et al. [16] divided Eq. (3) by the variance of \(I_{F} \left( {\mathbf{X}} \right)\), and the normalized FP-GS is obtained as Eq. (4),

$$ \xi_{i} = \frac{V_{X_{i}} \left[ E\left( I_{F} ({\mathbf{X}})|X_{i} \right) \right]}{V\left( I_{F} ({\mathbf{X}}) \right)} = \frac{E_{X_{i}} \left[ \left( P\{ F\} - P\{ F|X_{i} \} \right)^{2} \right]}{P\{ F\} - P^{2} \{ F\} }, $$(4)

where \(V\left( {I_{F} \left( {\mathbf{X}} \right)} \right) = E\left( {I_{F}^{2} \left( {\mathbf{X}} \right)} \right) - E^{2} \left( {I_{F} \left( {\mathbf{X}} \right)} \right) = E\left( {I_{F} \left( {\mathbf{X}} \right)} \right) - E^{2} \left( {I_{F} \left( {\mathbf{X}} \right)} \right){ = }P\left\{ F \right\} - P^{2} \left\{ F \right\}\), the second equality holding because \(I_{F}^{2} \left( {\mathbf{X}} \right) = I_{F} \left( {\mathbf{X}} \right)\) for an indicator function. Equation (4) shows that the normalized FP-GS is the main (first-order) sensitivity index of \(I_{F} \left( {\mathbf{X}} \right)\).

Since \(\xi_{i}\) is the normalized form of \(\delta_{i}\), the two indices rank the importance of the inputs identically. This work focuses on an efficient method for estimating \(\xi_{i}\), the main sensitivity index based on the failure probability, which can be used to prioritize the important random inputs for effectively reducing the failure probability.

The rest of the paper is organized as follows. Section 2 reviews methods for estimating the FP-GS and the techniques used in this work. Section 3 briefly introduces the double-loop MCS for estimating the FP-GS according to the definition in Eq. (4), and describes the Bayes’ formula transformation of the FP-GS together with its estimation by the direct MCS. Section 4 constructs an efficient method for estimating the FP-GS by nesting the AK model into IS. In Sect. 5, several examples verify the accuracy and efficiency of the proposed method by comparison with the state-of-the-art. Conclusions are drawn in Sect. 6.

2 Literature review

The single-loop MCS, the IS method and the truncated IS method were presented to estimate the FP-GS in [16]. The computational efficiencies of the single-loop MCS, IS method and truncated IS method are greatly improved compared to the double-loop MCS, but their computational cost is still too large for practical engineering. Through the maximum entropy constrained by the fractional moments, Ref. [17] presented an efficient method to estimate the FP-GS. However, this method is limited by the applicability of the multiplication dimensionality reduction method (M-DRM) for approximating the fractional moments [18].

Recently, several methods based on classifying the model output have been developed for estimating the FP-GS [19,20,21,22]. The classification approach originates from Bayes’ formula. The model output is classified into two categories according to whether it is less than or equal to zero (failure) or greater than zero (safety), after which the FP-GS can be evaluated easily by Bayes’ formula. In this approach, the failure probability and the conditional PDFs of all inputs on the failure domain are necessary and sufficient for obtaining the FP-GS. When the failure probability is estimated by a sampling-based method such as the MCS, IS or SS, the conditional PDFs of all inputs on the failure domain can be obtained simultaneously from the failure samples as byproducts. Since the FP-GS of all inputs is obtained from the same set of samples, the classification method removes the dependence of the computational cost on the dimensionality.

However, efficient estimation of the FP-GS remains a challenge for engineering applications with rare failure events and implicit performance functions.

From the definition of \(\xi_{i}\), the key to estimating \(\xi_{i}\) is to obtain the conditional failure probability \(P\{ F|X_{i} \}\) efficiently at each realization \(X_{i} = x_{i}\). Bayes’ formula [23] provides an alternative way of obtaining \(P\{ F|X_{i} = x_{i} \}\),

$$ P\{ F|X_{i} = x_{i} \} = \frac{f_{X_{i}} \left( x_{i} |F \right)}{f_{X_{i}} \left( x_{i} \right)}P\{ F\} , $$(5)

where \(f_{{X_{i} }} \left( {x_{i} } \right)\) is the known original PDF of \(X_{i}\), and \(f_{{X_{i} }} \left( {x_{i} |F} \right)\) is the unknown conditional PDF of \(X_{i}\) on the failure domain \(F\).

By the Bayes’ formula transformation, \(P\{ F|X_{i} = x_{i} \}\) equals the ratio of \(f_{{X_{i} }} \left( {x_{i} |F} \right)\) to \(f_{{X_{i} }} \left( {x_{i} } \right)\) at \(X_{i} = x_{i}\), multiplied by the failure probability \(P\left\{ F \right\}\). If the conditional PDF \(f_{{X_{i} }} \left( {x_{i} |F} \right)\) of \(X_{i}\) on the failure domain \(F\) can be estimated easily, the FP-GS \(\xi_{i}\) can then be obtained without any additional performance function evaluations. To estimate \(f_{{X_{i} }} \left( {x_{i} |F} \right)\), the classification method uses the \(i\)-th components \(x_{li}^{F}\) \(\left( {l = 1,2, \ldots ,M_{F} } \right)\) of the failure samples \({\mathbf{x}}_{l}^{F}\) \(\left( {l = 1,2, \ldots ,M_{F} } \right)\) of the input vector \({\mathbf{X}} = \left\{ {X_{1} ,X_{2} , \ldots ,X_{n} } \right\}\), where \(M_{F}\) is the number of failure samples. These failure samples are identified as a byproduct when the failure probability \(P\left\{ F \right\}\) is estimated by an AK-based sampling method.

AK-based sampling methods have recently become popular for estimating the failure probability because they balance accuracy and efficiency well. These methods first construct an initial Kriging model from training samples chosen by a design of experiments (DOE), and then iteratively add new samples selected by a learning function to the training set to update the Kriging model until a convergence criterion is satisfied. The failure probability can then be obtained accurately from the convergent Kriging model [24]. Examples include AK-MCS [24], AK-IS [25,26,27], AK-SS [28] and AK-LS [29], which combine the AK model with the MCS, IS, SS and LS, respectively. In these methods, the AK model serves as a surrogate of the performance function, so whether a sample is a failure sample or a safe sample can be evaluated efficiently by the convergent AK model instead of the performance function. Because the AK model is nested into the sampling method to estimate the failure probability, the sample classification obtained in this process can be reused directly for estimating the FP-GS without extra evaluations of the performance function.

Two strategies are usually adopted: separating the AK model from the sampling process, or nesting the AK model into it. In the separating strategy, one sample pool is first generated for constructing the AK model; after the AK model is fully updated, the failure probability is estimated with another sample pool, in which the performance function value of each sample is predicted by the constructed AK model. This strategy may not guarantee precision, because the samples used to estimate the failure probability may not be included in the pool used to train the AK model. In the nesting strategy, the same sample pool is used both for training the AK model and for estimating the failure probability. The AK model is iteratively updated by screening all samples in the pool, and the failure probability is estimated by the convergent AK model trained on that same pool, so the precision of the sample classification can be guaranteed. The nesting strategy is therefore preferred and adopted in this work for the FP-GS.

IS is an advanced MCS for estimating the failure probability [30]. By introducing the IS PDF, the sampling efficiency can be improved. In theory, the optimal IS PDF denoted as \(h_{{\varvec{X}}}^{*} ({\varvec{x}})\) is shown in Eq. (6).

$$ h_{\varvec{X}}^{*} ({\varvec{x}}) = \frac{I_{F} ({\varvec{x}})f_{\varvec{X}} ({\varvec{x}})}{P\{ F\} }. $$(6)

Because the failure probability \(P\{ F\}\) is unknown in advance and the performance function is implicit, the optimal IS PDF in Eq. (6) is not available analytically. In practice, the IS PDF is usually constructed by shifting the sampling center of the original PDF to the most probable failure point (MPP), which can be found by existing methods [31, 32] such as the advanced first-order second-moment (AFOSM) method.
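A minimal sketch of this construction follows. It assumes independent standard normal inputs (so the original space is already the standard normal space) and a differentiable performance function whose gradient is available. The HL-RF iteration is used here as one common AFOSM variant, and the IS PDF is the original PDF shifted to the MPP, with the failure probability estimated by the sample mean of Eq. (20). The names `hlrf_mpp`, `is_failure_probability` and `linear_pf` are hypothetical helpers for illustration only.

```python
import math
import numpy as np

def hlrf_mpp(g, grad_g, n, tol=1e-8, max_iter=100):
    """HL-RF iteration (a common AFOSM variant) for the MPP in standard normal space."""
    u = np.zeros(n)
    for _ in range(max_iter):
        grad = grad_g(u)
        # Closest point to the origin on the linearized limit state g(u) + grad.(v - u) = 0
        u_new = (grad @ u - g(u)) / (grad @ grad) * grad
        if np.linalg.norm(u_new - u) < tol:
            return u_new
        u = u_new
    return u

def is_failure_probability(g, mpp, M, rng):
    """Eq. (20) with f_X = N(0, I) and the IS PDF h_X = N(mpp, I)."""
    x = rng.standard_normal((M, mpp.size)) + mpp
    # log f_X(x) - log h_X(x); the Gaussian normalizing constants cancel
    log_w = -0.5 * np.sum(x ** 2, axis=1) + 0.5 * np.sum((x - mpp) ** 2, axis=1)
    return np.mean((g(x) <= 0) * np.exp(log_w))

def linear_pf(a, b):
    """Exact P{F} for g(x) = b - a.x with standard normal x (reference value only)."""
    beta = b / np.linalg.norm(a)
    return 0.5 * math.erfc(beta / math.sqrt(2))
```

For the linear limit state g(x) = 5 - x_1 - x_2 (written as `lambda x: b - x @ a`, which works on one point or on an array of rows), the iteration reaches the MPP (2.5, 2.5) in one step, and 20 000 IS samples typically give an estimate within a few percent of the exact value Φ(-5/√2) ≈ 2.03 × 10^-4. For nonlinear or non-Gaussian problems, the transformation to standard normal space and a more robust MPP search would be needed.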

The Metropolis–Hastings (M–H) algorithm generates random samples from a probability distribution that is difficult to sample directly [33]. It produces a Markov chain, in which the next sample depends only on the current one. At each iteration, a candidate for the next sample is drawn from a proposal distribution and is then either accepted (the candidate becomes the next sample) or rejected (the current sample is repeated as the next sample) according to the Metropolis–Hastings criterion [33].

Kernel density estimation (KDE) was first proposed by Rosenblatt [34] and further developed by Parzen [35] and Cacoullos [36]. Density estimation by a histogram is simple and intuitive, but its result depends on the bin width, which is difficult to choose. KDE avoids binning by approximating the unknown density with a sum of smooth kernel functions centred at the available samples. Many kernel functions can be used, such as the uniform, triangular and Gaussian kernels [34]. The Gaussian kernel is adopted in this work because it usually performs well for both linear and nonlinear problems and is widely used in engineering applications.

In summary, Bayes’ formula transforms the key task of estimating the conditional failure probability in the FP-GS into estimating the conditional PDF of each input on the failure domain. The latter can be estimated for all inputs simultaneously from the failure samples recognized by the AK nested IS method. After the failure samples from the IS sample pool are transformed by the Metropolis–Hastings algorithm into samples that follow the original conditional PDF on the failure domain, the conditional PDF of each input on the failure domain can be estimated by the KDE method.

3 The definition of the FP-GS and its Bayes’ formula transformation

3.1 Definition of the FP-GS and its estimation by the double-loop MCS

For a structure with an \(n\)-dimensional vector of mutually independent inputs \({\varvec{X}} = (X_{1} ,X_{2} ,...,X_{n} )\) described by the joint PDF \(f_{{\varvec{X}}} ({\varvec{x}}) = \prod\nolimits_{i = 1}^{n} {f_{{X_{i} }} \left( {x_{i} } \right)}\) (where \(f_{{X_{i} }} (x_{i} )\) is the marginal PDF of the \(i\)-th input \(X_{i}\)), let the performance function be \(Y = g({\varvec{X}})\). The failure domain is \(F = \{ {\varvec{x}}:g({\varvec{x}}) \le 0\}\). The FP-GS is defined in Eq. (4), where the failure probability of the structure is given by Eq. (7).

$$ P\{ F\} = \int_{R^{n}} I_{F} ({\varvec{x}})f_{\varvec{X}} ({\varvec{x}})d{\varvec{x}} = E\left[ I_{F} ({\varvec{X}}) \right], $$(7)

where the failure domain indicator function \(I_{F} ({\varvec{x}})\) is defined by Eq. (8).

$$ I_{F} ({\varvec{x}}) = \left\{ \begin{array}{ll} 1, & {\varvec{x}} \in F \\ 0, & {\varvec{x}} \notin F \end{array} \right. $$(8)

The conditional failure probability on fixing \(X_{i} = x_{i}\) can be estimated by Eq. (9).

$$ P\{ F|X_{i} = x_{i} \} = \int_{R^{n - 1}} I_{F} ({\varvec{x}}_{\sim i} ,x_{i} )f_{{\varvec{X}}_{\sim i}} ({\varvec{x}}_{\sim i} )d{\varvec{x}}_{\sim i} , $$(9)

where \({\varvec{X}}_{\sim i} = \left( {X_{1} , \ldots ,X_{i - 1} ,X_{i + 1} \ldots X_{n} } \right)\) and \(f_{{{\varvec{X}}_{\sim i} }} ({\varvec{x}}_{\sim i} )\) is the joint PDF of \({\varvec{X}}_{\sim i}\).

Based on Eqs. (4), (7) and (9), the following double-loop MCS can be constructed to estimate the FP-GS.

-

(1)

Estimate the failure probability by the MCS.

Generate an \(N\)-size sample set \(({\varvec{x}}_{1} , \cdots ,{\varvec{x}}_{k} , \cdots ,{\varvec{x}}_{N} )^{T}\)(where \({\varvec{x}}_{k} = (x_{k1} ,x_{k2} ,...,x_{kn} )\)) according to the joint PDF \(f_{{\varvec{X}}} \left( {\varvec{x}} \right)\) for the failure probability estimation \(\hat{P}\{ F\}\), where \(N\) is determined by the criterion that the variation coefficient of \(\hat{P}\{F\}\) is less than 5%. The MCS estimation \(\hat{P}\{F\}\) of the failure probability \(P\{ F\}\) is shown in Eq. (10).

$$ \hat{P}\{ F\} = \frac{1}{N}\sum\nolimits_{k = 1}^{N} {I_{F} ({\varvec{x}}_{k} )} . $$(10)

-

(2)

Estimate the conditional failure probability by the MCS.

Generate an \(N_{1}\)-size sample set \((x_{1i} ,x_{2i} ,...,x_{{N_{1} i}} )^{T}\) according to the PDF \(f_{{X_{i} }} (x_{i} )\) of the input \(X_{i}\), where \(N_{1}\) is chosen so that the variation coefficient of the estimate \(\hat{\xi }_{i}\) of \(\xi_{i}\) is less than 5%. For each realization \(x_{ji}\) (\(j = 1, \cdots ,N_{1}\)), generate an \(N_{2}\)-size sample set \(({\varvec{x}}_{{1,\sim i}} ,{\varvec{x}}_{{2,\sim i}} , \cdots ,{\varvec{x}}_{{N_{2} ,\sim i}} )^{T}\) according to the joint PDF \(f_{{{\varvec{X}}_{\sim i} }} \left( {{\varvec{x}}_{\sim i} } \right)\), where \(N_{2}\) is chosen so that the variation coefficient of the estimate \(\hat{P}\{ F|x_{ji} \}\) of \(P\{ F|x_{ji} \}\) is less than 5%. The conditional failure probability \(P\{ F|x_{ji} \}\) (\(j = 1, \cdots ,N_{1}\)) can then be estimated by Eq. (11)

$$ \hat{P}\{ F|x_{ji} \} = \frac{1}{{N_{2} }}\sum\nolimits_{l = 1}^{{N_{2} }} {I_{F} } ({\varvec{x}}_{l,\sim i} ,x_{ji} ) = \frac{1}{{N_{2} }}\sum\nolimits_{l = 1}^{{N_{2} }} {I_{F} } (x_{l,1} , \cdots ,x_{l,i - 1} ,x_{ji} ,x_{l,i + 1} , \cdots ,x_{l,n} ). $$(11)

-

(3)

Estimate the FP-GS \(\xi_{i}\) by the estimation \(\hat{\xi }_{i}\) shown in Eq. (12).

$$ \hat{\xi }_{i} = \frac{{\frac{1}{{N_{1} }}\sum\nolimits_{j = 1}^{{N_{1} }} {[\hat{P}\{ F\} - \hat{P}\{ F|x_{ji} {{\} ]}}^{2} } }}{{\hat{P}\{ F\} - \hat{P}^{2} \{ F\} }}. $$(12)

The double-loop MCS requires \(N + n \times N_{1} \times N_{2}\) performance function evaluations in total to estimate the FP-GS of all inputs: \(N\) for \(\hat{P}\{ F\}\) and \(n \times N_{1} \times N_{2}\) for \(\hat{P}\{ F|X_{i} \}\) over the \(n\)-dimensional input vector. The computational cost therefore grows with the dimensionality of the input vector. For rare failure events, which are common in engineering, \(N\) and \(N_{2}\) must be large to obtain convergent estimates \(\hat{P}\{ F\}\) and \(\hat{P}\{ F|X_{i} \}\), respectively, so the double-loop MCS is too expensive for engineering applications.
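The three steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' code: the inputs are taken as independent standard normal variables, `g` is assumed to be vectorized over the rows of an array, and fixed sizes `N`, `N1` and `N2` replace the 5% coefficient-of-variation rules. The hypothetical helper `mcs_sample_size` only shows what that rule implies for Eq. (10), and the counter `calls` reproduces the cost \(N + n \times N_{1} \times N_{2}\).

```python
import math
import numpy as np

def double_loop_mcs(g, n, N, N1, N2, rng):
    """Double-loop MCS estimate of xi_i, Eqs. (10)-(12), for independent N(0, 1) inputs."""
    calls = 0
    # Step (1): unconditional failure probability, Eq. (10)
    p_f = np.mean(g(rng.standard_normal((N, n))) <= 0)
    calls += N
    xi = np.empty(n)
    for i in range(n):
        # Step (2): outer loop over N1 realizations x_ji of X_i
        x_i = rng.standard_normal(N1)
        p_cond = np.empty(N1)
        for j in range(N1):
            x = rng.standard_normal((N2, n))   # inner samples of X_~i
            x[:, i] = x_i[j]                   # fix X_i = x_ji, Eq. (11)
            p_cond[j] = np.mean(g(x) <= 0)
            calls += N2
        # Step (3): Eq. (12)
        xi[i] = np.mean((p_f - p_cond) ** 2) / (p_f - p_f ** 2)
    return xi, p_f, calls

def mcs_sample_size(p_f, cov=0.05):
    """Smallest N with sqrt((1 - P{F}) / (N P{F})) <= cov for the estimator of Eq. (10)."""
    return math.ceil((1.0 - p_f) / (cov ** 2 * p_f))
```

With g(x) = 3 - x_1 - 2x_2, for example, the sketch ranks X_2 well above X_1, at a price of 8.2 × 10^6 evaluations for N = 2 × 10^5 and N_1 = N_2 = 2000; this is the cost that the Bayes’ formula transformation of Sect. 3.2 removes.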

3.2 The Bayes’ formula transformation of the FP-GS and its corresponding MCS estimation

Substituting Eq. (5) into Eq. (4) gives a new expression of the FP-GS \(\xi_{i}\), Eq. (13) [21].

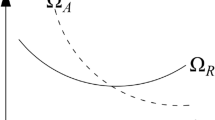

Equation (13) shows that the FP-GS of \(X_{i}\) requires the failure probability and the integral of the squared difference between the original PDF \(f_{{X_{i} }} (x_{i} )\) and the conditional PDF \(f_{{X_{i} }} (x_{i} |F)\) of \(X_{i}\) on the failure domain, weighted by \(1/f_{{X_{i} }} (x_{i} )\). The larger this weighted squared difference, the larger the effect of \(X_{i}\) on the failure probability.
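The step from Eqs. (4) and (5) to Eq. (13) can be written out explicitly. The sketch below only rearranges those two equations; it shows that the squared difference enters with the weight \(1/f_{X_{i}} (x_{i} )\), so the integral is the Pearson \(\chi^{2}\) divergence of \(f_{X_{i}} (x_{i} |F)\) from \(f_{X_{i}} (x_{i} )\).

$$ P\{ F\} - P\{ F|X_{i} = x_{i} \} = P\{ F\} \frac{f_{X_{i}} (x_{i} ) - f_{X_{i}} (x_{i} |F)}{f_{X_{i}} (x_{i} )} $$

$$ E_{X_{i}} \left[ \left( P\{ F\} - P\{ F|X_{i} \} \right)^{2} \right] = P^{2} \{ F\} \int_{ - \infty }^{ + \infty } \frac{[f_{X_{i}} (x_{i} ) - f_{X_{i}} (x_{i} |F)]^{2} }{f_{X_{i}}^{2} (x_{i} )}f_{X_{i}} (x_{i} )dx_{i} = P^{2} \{ F\} \int_{ - \infty }^{ + \infty } \frac{[f_{X_{i}} (x_{i} ) - f_{X_{i}} (x_{i} |F)]^{2} }{f_{X_{i}} (x_{i} )}dx_{i} $$

$$ \xi_{i} = \frac{P^{2} \{ F\} }{P\{ F\} - P^{2} \{ F\} }\int_{ - \infty }^{ + \infty } \frac{[f_{X_{i}} (x_{i} ) - f_{X_{i}} (x_{i} |F)]^{2} }{f_{X_{i}} (x_{i} )}dx_{i} = \frac{P\{ F\} }{1 - P\{ F\} }\int_{ - \infty }^{ + \infty } \frac{[f_{X_{i}} (x_{i} ) - f_{X_{i}} (x_{i} |F)]^{2} }{f_{X_{i}} (x_{i} )}dx_{i} $$

Because both densities integrate to one, the integral also equals \(\int f_{X_{i}}^{2} (x_{i} |F)/f_{X_{i}} (x_{i} )dx_{i} - 1\), a form that is convenient when \(f_{X_{i}} (x_{i} |F)\) is known only through samples.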

Equation (13) also shows that \(P\{ F|X_{i} \}\) no longer needs to be estimated; \(f_{{X_{i} }} \left( {x_{i} |F} \right)\) is needed instead, and it can be estimated simultaneously from the failure samples used for estimating \(P\{F\}\). In other words, the sample information obtained when estimating the failure probability by a sampling-based method can be reused to estimate \(f_{{X_{i} }} \left( {x_{i} |F} \right)\). If the MCS with an \(N\)-size sample set is used to estimate the failure probability, the total number of performance function evaluations for the FP-GS is also \(N\), far fewer than the \(N + n \times N_{1} \times N_{2}\) of the double-loop MCS. The MCS procedure for estimating \(\xi_{i}\) based on Bayes’ formula is as follows.

-

(1)

Estimate failure probability by the MCS.

Generate an \(N\)-size sample set \({\varvec{x}}_{k} (k = 1,2,...,N)\) (\({\varvec{x}}_{k} = \{x_{k1} ,x_{k2} ,...,x_{kn} \}\)) according to the joint PDF \(f_{{\varvec{X}}} \left( {\varvec{x}} \right)\), then estimate the failure probability \(P\{ F\}\) by the sample mean in Eq. (10).

-

(2)

Employ the failure samples repeatedly for estimating \(f_{{X_{i} }} (x_{i} |F)\).

Assume \(M_{F}\) failure samples are found in the \(N\)-size sample set \({\varvec{x}}_{k} (k = 1,2,...,N)\) in the previous step, and denote them \({\varvec{x}}_{l}^{F} (l = 1,2,...,M_{F} )\). These failure samples are reused to obtain the estimate \(\hat{f}_{{X_{i} }} (x_{i} |F)\) by the KDE method [33, 37], as given in Eq. (14):

$$ \hat{f}_{{X_{i} }} (x_{i} |F) = \frac{1}{{M_{F} }}\sum\limits_{l = 1}^{{M_{F} }} {\frac{1}{{\left( {\omega \lambda_{l} } \right)^{n} }}K\left( {\frac{{x_{i} - x_{li}^{F} }}{{\omega \lambda_{l} }}} \right)} , $$(14)where \(\omega\) is the bandwidth parameter, which determines the smoothness of the estimated density, \(\lambda_{l}\) is the local bandwidth factor, and \(n\) is the dimension of the kernel argument (\(n = 1\) when the marginal density \(f_{{X_{i} }} (x_{i} |F)\) is estimated). \(\omega\) and \(\lambda_{l}\) are determined by the following equations:

$$ \omega = M_{d}^{{ - \frac{1}{n + 4}}} , $$(15)$$ \lambda_{l} = \left\{ {\frac{{\left[ {\prod\limits_{m = 1}^{{M_{F} }} {f_{{X_{i} }} \left( {x_{mi}^{F} } \right)} } \right]^{{\frac{1}{{M_{F} }}}} }}{{f_{{X_{i} }} \left( {x_{li}^{F} } \right)}}} \right\}^{\alpha } , $$(16)where \(M_{d}\) is the number of distinct sample values, and \(0 \le \alpha \le 1\) is the sensitivity parameter.

\(K\left( \cdot \right)\) is the kernel function; the Gaussian kernel adopted in this paper is given by Eq. (17)

$$ K\left( \cdot \right) = \frac{1}{{\sqrt {\left( {2\pi } \right)^{n} \left| {\mathbf{S}} \right|} }}\exp \left( { - \frac{1}{2}x^{T} {\mathbf{S}}^{ - 1} x} \right), $$(17)where \({\mathbf{S}}\) is the covariance matrix of the failure samples \(\left\{ {x_{li}^{F} } \right\}\) (the sample variance when \(n = 1\)), i.e., \({\mathbf{S}} = \frac{1}{{M_{F} - 1}}\sum\limits_{l = 1}^{{M_{F} }} {\left( {x_{li}^{F} - \overline{x}_{i}^{F} } \right)} \left( {x_{li}^{F} - \overline{x}_{i}^{F} } \right)^{T}\), and \(\overline{x}_{i}^{F}\) is the sample mean.

-

(3)

Estimate the FP-GS.

Substituting \(\hat{P}\{F\}\) from step (1) and \(\hat{f}_{{X_{i} }} (x_{i} |F)\) from step (2) into Eq. (13) gives the estimate \(\hat{\xi }_{i}\) (\(i = 1,2, \cdots ,n\)) of \(\xi_{i}\) in Eq. (18)

$$ \hat{\xi }_{i} = \frac{{\hat{P}\{ F\} }}{{1 - \hat{P}\{ F\} }}\int_{ - \infty }^{ + \infty } {\frac{{[f_{{X_{i} }} (x_{i} ) - \hat{f}_{{X_{i} }} (x_{i} |F)]^{2} }}{{f_{{X_{i} }} (x_{i} )}}} dx_{i} . $$(18)
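A single-loop MCS version of steps (1) to (3) might look as follows. It is a sketch under several assumptions: the inputs are independent standard normal variables; the KDE of Eqs. (14) to (17) is applied to one input at a time (kernel dimension 1); and, in Eq. (16), the density at the samples is taken as a fixed-bandwidth pilot KDE, which is the usual adaptive-KDE construction but is our reading of that equation. The integral of Eq. (13) is evaluated through the equivalent form \(\int \hat{f}_{X_{i}}^{2} (x_{i} |F)/f_{X_{i}} (x_{i} )dx_{i} - 1\) and only over the range covered by the failure samples, because the \(1/f_{X_{i}} (x_{i} )\) weight amplifies any kernel mass that leaks into the far tails. This truncation is a design choice of the sketch, not part of the method described in the text, and `adaptive_kde` and `bayes_mcs_xi` are hypothetical names.

```python
import numpy as np

def _kernel_mean(x, centers, bw, s, chunk=512):
    """Average of 1-D Gaussian kernels, Eqs. (14) and (17): kernel l has std s * bw[l]."""
    h = s * bw[None, :]
    out = np.empty(x.size)
    for start in range(0, x.size, chunk):            # chunking bounds memory use
        d = (x[start:start + chunk, None] - centers[None, :]) / h
        out[start:start + chunk] = np.mean(np.exp(-0.5 * d ** 2) / (np.sqrt(2 * np.pi) * h), axis=1)
    return out

def adaptive_kde(x, samples, alpha=0.5):
    """Estimate of f_{X_i}(x_i | F) per Eqs. (14)-(17) with kernel dimension n = 1."""
    omega = np.unique(samples).size ** (-1.0 / 5.0)           # Eq. (15)
    s = np.std(samples, ddof=1)                               # 1-D S of Eq. (17)
    pilot = _kernel_mean(samples, samples, np.full(samples.size, omega), s)
    lam = (np.exp(np.mean(np.log(pilot))) / pilot) ** alpha   # Eq. (16), pilot density (assumption)
    return _kernel_mean(x, samples, omega * lam, s)

def bayes_mcs_xi(g, n, N, rng, alpha=0.5):
    """Single-loop MCS of xi_i through Eq. (13), independent N(0, 1) inputs."""
    x = rng.standard_normal((N, n))
    fail = g(x) <= 0
    p_f = fail.mean()                                         # Eq. (10)
    xi = np.empty(n)
    for i in range(n):
        s_i = x[fail, i]                                      # i-th coordinates of the failure samples
        grid = np.linspace(s_i.min(), s_i.max(), 2001)        # integrate only where data exist
        f = np.exp(-0.5 * grid ** 2) / np.sqrt(2 * np.pi)     # original PDF f_{X_i}
        ratio = adaptive_kde(grid, s_i, alpha) ** 2 / f
        chi2 = np.sum(0.5 * (ratio[1:] + ratio[:-1]) * np.diff(grid)) - 1.0   # trapezoid rule
        xi[i] = p_f / (1.0 - p_f) * chi2
    return xi, p_f
```

For g(x) = 3 - x_1 - 2x_2 with 4 × 10^4 samples, this uses 4 × 10^4 performance function evaluations in total, whatever the number of inputs. Setting `alpha = 0` gives a fixed bandwidth; larger values widen the kernels of tail samples, which the \(1/f_{X_{i}} (x_{i} )\) weight tends to penalize, so `alpha` is worth checking on each problem.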

With the Bayes’ formula transformation in Eq. (13), the MCS can be employed directly to estimate \(\xi_{i}\). Although estimating \(\xi_{i}\) from Eq. (13) by the MCS is much cheaper than the double-loop MCS of Sect. 3.1, its efficiency still needs to be improved: for rare failure events the number of samples \(N\) required for a convergent estimate of \(P\{ F\}\) is very large, and for implicit performance functions each of these \(N\) evaluations is expensive. As the literature review shows, sampling methods alone cannot provide satisfactory efficiency in these cases. Combining the AK model with a sampling method is therefore a feasible way to overcome this inefficiency, and the next section pursues it by nesting the AK model into the IS method to estimate the FP-GS.

4 Nesting AK model into IS for efficiently estimating the FP-GS

4.1 The direct IS method for \(\hat{\xi }_{i}\) based on the Bayes’ formula transformation of \(\xi_{i}\)

When the direct IS method is used to estimate the failure probability \(P\{F\}\), the IS PDF \(h_{{\varvec{X}}} ({\varvec{x}})\) should be constructed first, and then the failure probability can be estimated by the following equation:

$$ P\{ F\} = \int_{R^{n}} I_{F} ({\varvec{x}})\frac{f_{\varvec{X}} ({\varvec{x}})}{h_{\varvec{X}} ({\varvec{x}})}h_{\varvec{X}} ({\varvec{x}})d{\varvec{x}} = E_{h} \left[ I_{F} ({\varvec{X}})\frac{f_{\varvec{X}} ({\varvec{X}})}{h_{\varvec{X}} ({\varvec{X}})} \right]. $$(19)

Generate an \(M\)-size IS sample pool, denoted \({\varvec{x}}_{k}^{h} (k = 1,2,...,M)\) (\({\varvec{x}}_{k}^{h} = \{x_{k1}^{h} ,x_{k2}^{h} ,...,x_{kn}^{h} \}\)), according to the IS PDF \(h_{{\varvec{X}}} ({\varvec{x}})\); the failure probability can then be estimated by the sample mean in Eq. (20).

$$ \hat{P}\{ F\} = \frac{1}{M}\sum\nolimits_{k = 1}^{M} I_{F} ({\varvec{x}}_{k}^{h} )\frac{f_{\varvec{X}} ({\varvec{x}}_{k}^{h} )}{h_{\varvec{X}} ({\varvec{x}}_{k}^{h} )}. $$(20)

Comparing the MCS estimate of the failure probability in Eq. (10) with the IS estimate in Eq. (20), the variance of Eq. (20) is smaller than that of Eq. (10) when the IS PDF is well chosen [6]. Assume that there are \(M_{F}\) IS failure samples, denoted \({\varvec{x}}_{l}^{hF} (l = 1,2,...,M_{F} )\), in the \(M\)-size IS sample pool. From these \(M_{F}\) IS failure samples, kernel density estimation (KDE) could estimate the conditional IS PDF \(h_{{X_{i} }} (x_{i} |F)\) on the failure domain for all \(n\) inputs simultaneously. Note, however, that this estimate \(\hat{h}_{{X_{i} }} (x_{i} |F)\) cannot replace the conditional PDF estimate \(\hat{f}_{{X_{i} }} (x_{i} |F)\) in the FP-GS of Eq. (13), because the IS failure samples follow \(h_{{\varvec{X}}}\) rather than \(f_{{\varvec{X}}}\) on \(F\). Fortunately, the Metropolis–Hastings algorithm [35, 38, 39] can transform the \(M_{F}\) failure samples \({\varvec{x}}_{l}^{hF} (l = 1,2,...,M_{F} )\) following the conditional IS PDF \(h_{{X_{i} }} (x_{i} |F)\) on \(F\) into \(M_{F}\) failure samples \({\varvec{x}}_{l}^{F} (l = 1,2,...,M_{F} )\) following the conditional PDF \(f_{{X_{i} }} (x_{i} |F)\) on \(F\). Set the first sample as \({\varvec{x}}_{l}^{F(1)} = {\varvec{x}}_{l}^{hF(1)}\); the remaining samples \({\varvec{x}}_{l}^{F} (l = 2,...,M_{F} )\) are obtained by repeating the following two steps:

-

(1)

Compute the ratio

$$ r\left( {\varvec{x}}_{l}^{F(l)} ,{\varvec{x}}_{l}^{hF(l + 1)} \right) = \frac{f_{\mathbf{X}} \left( {\varvec{x}}_{l}^{hF(l + 1)} \right)h_{\mathbf{X}} \left( {\varvec{x}}_{l}^{F(l)} \right)}{f_{\mathbf{X}} \left( {\varvec{x}}_{l}^{F(l)} \right)h_{\mathbf{X}} \left( {\varvec{x}}_{l}^{hF(l + 1)} \right)}. $$(21)

-

(2)

The next sample \({\varvec{x}}_{l}^{{F\left( {l + 1} \right)}}\) is selected from two candidates, as shown in Eq. (22)

$$ {\varvec{x}}_{l}^{F(l + 1)} = \left\{ \begin{array}{ll} {\varvec{x}}_{l}^{hF(l + 1)} , & \min (1,r) > u \\ {\varvec{x}}_{l}^{F(l)} , & \min (1,r) \le u \end{array} \right. $$(22)where \(u\) is a random number uniformly distributed on \(\left[ {0,1} \right]\).
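The two steps can be sketched as an independence M–H sampler in which the IS failure samples themselves serve as the successive candidates. This is an illustrative sketch: `mh_transform` is a hypothetical name, `log_f` and `log_h` are assumed to return log-densities up to additive constants (the constants cancel in Eq. (21)), and because both the current state and the candidate lie in \(F\), the indicator \(I_{F}\) cancels from the ratio.

```python
import numpy as np

def mh_transform(x_hf, log_f, log_h, rng):
    """Independence M-H, Eqs. (21)-(22): IS failure samples -> chain targeting f_X(x | F).

    x_hf  : (M_F, n) failure samples drawn from h_X restricted to F (the candidates)
    log_f : log of the original joint PDF f_X, up to an additive constant
    log_h : log of the IS PDF h_X, up to an additive constant
    """
    chain = np.empty_like(x_hf)
    chain[0] = x_hf[0]                                  # first state = first IS failure sample
    log_w_cur = log_f(x_hf[0]) - log_h(x_hf[0])
    for l in range(1, len(x_hf)):
        log_w_cand = log_f(x_hf[l]) - log_h(x_hf[l])
        accept_prob = np.exp(min(0.0, log_w_cand - log_w_cur))   # min(1, r), r from Eq. (21)
        if accept_prob > rng.uniform():                          # Eq. (22): accept the candidate
            chain[l] = x_hf[l]
            log_w_cur = log_w_cand
        else:                                                    # reject: repeat the current state
            chain[l] = chain[l - 1]
    return chain
```

As a usage example, for a standard normal input with F = {x ≥ 2} and h = N(2, 1), the raw IS failure samples have a mean near 2.80, whereas the chain mean approaches the truncated-normal value φ(2)/Φ(-2) ≈ 2.373. Rejected candidates repeat the current state, so the output contains duplicates and is autocorrelated; this is expected for M–H and lowers the effective sample size available to the KDE, whose bandwidth in Eq. (15) is based on the number of distinct values \(M_{d}\).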

Although IS can be combined with the Bayes’ formula transformation to estimate the FP-GS, the performance function must still be evaluated at all \(M\) IS samples \({\varvec{x}}_{k}^{h} (k = 1,2,...,M)\), which is too costly for engineering applications. Therefore, the next subsection constructs an efficient method that nests the AK model into the IS method for estimating the FP-GS, in which the failure samples are recognized by the AK model.

4.2 AK nested IS method for the FP-GS

In the AK nested IS method (AK-IS), the sample pool consists of the \(M\) IS samples \({\varvec{x}}_{k}^{h} (k = 1,2,...,M)\), and the AK model is updated stepwise within this pool. After the AK model converges, the failure samples in the pool can be recognized accurately by the convergent AK model, so the FP-GS estimated by the AK-IS method has almost the same precision as that estimated by the direct IS method. The detailed steps of the AK-IS method for the FP-GS are as follows, and its flowchart is given in Fig. 1.

-

(1)

Search the MPP by the AFOSM method, and construct the IS PDF \(h_{{\varvec{X}}} ({\varvec{x}})\).

-

(2)

Generate the sample pool \({\varvec{S}}^{h}\) by drawing \(M\) IS samples, denoted \({\varvec{x}}_{k}^{h} (k = 1,2,...,M)\) (\({\varvec{x}}_{k}^{h} = \{x_{k1}^{h} ,x_{k2}^{h} ,...,x_{kn}^{h} \}\)), according to \(h_{{\varvec{X}}} ({\varvec{x}})\), so that \({\varvec{S}}^{h} = \{ {\varvec{x}}_{1}^{h} ,{\varvec{x}}_{2}^{h} ,...,{\varvec{x}}_{M}^{h} \}\).

-

(3)

Nest the AK model into the IS method to accurately recognize the failure samples in \({\varvec{S}}^{h}\).

-

(3.1)

Construct the initial AK model \(g_{K} ({\varvec{x}})\).

Randomly select \(M_{1}\)(\(M_{1} \ll M\)) samples \({\varvec{x}}_{j}^{hT} (j = 1,2,...,M_{1} )\) from \({\varvec{S}}^{h}\) and evaluate \(g({\varvec{x}}_{j}^{hT} )\). Then the training set \({\varvec{T}}^{h} = \{ ({\varvec{x}}_{1}^{hT} ,g({\varvec{x}}_{1}^{hT} )),({\varvec{x}}_{2}^{hT} ,g({\varvec{x}}_{2}^{hT} )),...,({\varvec{x}}_{{M_{1} }}^{hT} ,g({\varvec{x}}_{{M_{1} }}^{hT} ))\}\) is established to construct the initial AK model \(g_{K} ({\varvec{x}})\) by the Matlab toolbox.

-

(3.2)

Judge the convergence of \(g_{K} ({\varvec{x}})\).

The convergence of the AK model is judged by the minimum value of the U learning function, which is shown in Eq. (23)

$$ U({\varvec{x}}_{k}^{h} ) = \left| {\frac{{\mu_{{g_{K} }} ({\varvec{x}}_{k}^{h} )}}{{\sigma_{{g_{K} }} ({\varvec{x}}_{k}^{h} )}}} \right|_{{{\varvec{x}}_{k}^{h} \in {\varvec{S}}^{h} }} , $$(23)where \(\mu_{{g_{K} }} ({\varvec{x}}_{k}^{h} )\) and \(\sigma_{{g_{K} }} ({\varvec{x}}_{k}^{h} )\) are the prediction mean and the standard deviation of the AK model \(g_{K} ({\varvec{x}})\) respectively.

It is known that \(\mathop {\min }\limits_{{{\varvec{x}}_{k}^{h} \in {\varvec{S}}^{h} }} (U({\varvec{x}}_{k}^{h} )) \ge 2\) means that, for every sample in \({\varvec{S}}^{h}\), the probability that \(g_{K} ({\varvec{x}})\) misjudges the sign of \(g({\varvec{x}})\) is at most \(\Phi \left( { - 2} \right) \approx 2.28\%\) [24]. This stopping threshold is widely accepted in the literature. Thus, stop updating the AK model \(g_{K} ({\varvec{x}})\) and go to step (4) when \(\mathop {\min }\limits_{{{\varvec{x}}_{k}^{h} \in {\varvec{S}}^{h} }} (U({\varvec{x}}_{k}^{h} )) \ge 2\); otherwise, go to step (3.3).

(3.3) Update the AK model \(g_{K} ({\varvec{x}})\).

Search \({\varvec{S}}^{h}\) for a new training point \({\varvec{x}}_{new}^{hT}\) to refine \(g_{K} ({\varvec{x}})\) using the U learning function. The sample \({\varvec{x}}_{k}^{h}\) with the smallest \(U({\varvec{x}}_{k}^{h} )\), i.e., the sample whose sign is most likely to be misjudged, is selected by the following equation:

$$ {\varvec{x}}_{new}^{hT} = \arg \mathop {\min }\limits_{{{\varvec{x}}_{k}^{h} \in {\varvec{S}}^{h} }} U({\varvec{x}}_{k}^{h} ). $$(24)

Evaluate \(g({\varvec{x}}_{new}^{hT} )\) and add the new training point \(({\varvec{x}}_{new}^{hT} ,g({\varvec{x}}_{new}^{hT} ))\) to the current training set, i.e., \({\varvec{T}}^{h} = {\varvec{T}}^{h} \cup \{ ({\varvec{x}}_{new}^{hT} ,g({\varvec{x}}_{new}^{hT} ))\}\). Rebuild the AK model and return to step (3.2).
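Steps (3.1) to (3.3) form an active-learning loop, sketched below in Python. The sketch replaces the MATLAB toolbox with a hypothetical `SimpleKriging` class (constant mean, squared-exponential correlation with a fixed length scale and a small nugget, no hyperparameter optimization), so it is an illustration under stated assumptions rather than the paper's implementation. Training points are excluded from the U search because their true sign is already known; this is a common implementation choice that the text does not state. `misjudge_prob` evaluates \(\Phi(-U)\), the sign-misjudgment probability quoted in step (3.2).

```python
import math
import numpy as np


def rbf_corr(a, b, ell):
    """Squared-exponential correlation between the rows of a and b."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / ell**2)


class SimpleKriging:
    """Hypothetical minimal Kriging model: constant mean, fixed length scale,
    small nugget for numerical stability, no hyperparameter optimization."""

    def __init__(self, ell=1.5, nugget=1e-6):
        self.ell, self.nugget = ell, nugget

    def fit(self, X, y):
        self.X, self.m = X, y.mean()
        self.s2 = max(y.var(), 1e-12)  # plug-in process variance
        K = self.s2 * (rbf_corr(X, X, self.ell) + self.nugget * np.eye(len(X)))
        self.L = np.linalg.cholesky(K)
        self.alpha = np.linalg.solve(self.L.T, np.linalg.solve(self.L, y - self.m))
        return self

    def predict(self, Xq):
        k = self.s2 * rbf_corr(Xq, self.X, self.ell)  # (q, n) cross-covariances
        mu = self.m + k @ self.alpha                  # prediction mean
        v = np.linalg.solve(self.L, k.T)              # (n, q)
        var = np.maximum(self.s2 - (v**2).sum(axis=0), 1e-300)
        return mu, np.sqrt(var)                       # mean and standard deviation


def misjudge_prob(u):
    """Probability of misjudging the sign of g for a Gaussian prediction: Phi(-U)."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))


def ak_is_classify(g, pool, M1, rng, u_stop=2.0, max_iter=100):
    """Steps (3.1)-(3.3); returns failure flags for the pool and the number of g calls."""
    idx = [int(i) for i in rng.choice(len(pool), size=M1, replace=False)]  # step (3.1)
    y = [g(pool[i]) for i in idx]
    while True:
        model = SimpleKriging().fit(pool[idx], np.array(y))
        mu, sd = model.predict(pool)
        U = np.abs(mu) / sd            # Eq. (23)
        U[idx] = np.inf                # sign already known at training points
        if U.min() >= u_stop or len(y) >= M1 + max_iter:  # step (3.2)
            break
        j = int(np.argmin(U))          # step (3.3), Eq. (24)
        idx.append(j)
        y.append(g(pool[j]))
    failed = mu <= 0
    failed[idx] = np.array(y) <= 0     # use the true values at training points
    return failed, len(y)


rng = np.random.default_rng(0)
pool = np.array([3.0, 0.0]) + rng.standard_normal((2000, 2))
g = lambda x: 3.0 - x[0]               # toy limit state, failure when g <= 0
failed, n_calls = ak_is_classify(g, pool, M1=12, rng=rng, max_iter=60)
print(round(misjudge_prob(2.0), 4))    # 0.0228
```

The `max_iter` guard is an addition for the sketch: with a fixed nugget, a few pool samples lying almost exactly on the limit state may never reach \(U \ge 2\).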

(4) Estimate the failure probability.

Assume that \(M_{F}\) failure samples \({\varvec{x}}_{l}^{hF} (l = 1,2,...,M_{F} )\) in \({\varvec{S}}^{h}\) are recognized by the converged \(g_{K} ({\varvec{x}})\). The failure probability can then be estimated by the following equation, simplified from Eq. (20):

$$ \hat{P}(F) = \frac{1}{M}\sum\limits_{l = 1}^{{M_{F} }} {\frac{{f_{{\varvec{X}}} ({\varvec{x}}_{l}^{hF} )}}{{h_{{\varvec{X}}} ({\varvec{x}}_{l}^{hF} )}}} . $$(25)
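Eq. (25) is a weighted count over the recognized failure samples. The sketch below uses the same hypothetical setting as before (\(f_{{\varvec{X}}} = N(\mathbf{0}, \mathbf{I})\), \(h_{{\varvec{X}}} = N({\varvec{x}}^{*}, \mathbf{I})\)), in which the likelihood ratio has the closed form \(f_{{\varvec{X}}}({\varvec{x}})/h_{{\varvec{X}}}({\varvec{x}}) = \exp(-{\varvec{x}}^{T}{\varvec{x}}^{*} + \|{\varvec{x}}^{*}\|^{2}/2)\). The returned variation coefficient uses the usual sample-variance estimate of an IS estimator and is an assumption; it may differ from the expression in Appendix A. The exact failure indicator stands in for the converged \(g_{K}\).

```python
import math
import numpy as np


def is_failure_probability(pool, failed, mpp):
    """Eq. (25) plus a sample-variance COV, for f_X = N(0, I), h_X = N(mpp, I)."""
    mpp = np.asarray(mpp, dtype=float)
    # Likelihood ratio f_X(x) / h_X(x) = exp(-x.mpp + |mpp|^2 / 2)
    w = np.exp(-pool @ mpp + 0.5 * mpp @ mpp)
    terms = np.where(failed, w, 0.0)   # only recognized failure samples contribute
    M = len(pool)
    p_hat = terms.sum() / M
    var_hat = (np.mean(terms**2) - p_hat**2) / (M - 1)
    return p_hat, math.sqrt(max(var_hat, 0.0)) / p_hat


rng = np.random.default_rng(2)
mpp = np.array([3.0, 0.0])
pool = mpp + rng.standard_normal((20000, 2))
failed = (3.0 - pool[:, 0]) <= 0       # exact indicator stands in for g_K here
p_hat, cov = is_failure_probability(pool, failed, mpp)
exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))  # Phi(-3), about 1.35e-3
```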

(5) Transform the failure samples by the M–H algorithm and estimate \(\hat{f}_{{X_{i} }} (x_{i} |F)\) by the KDE method.

Use the Metropolis–Hastings algorithm to transform the \(M_{F}\) failure samples \({\varvec{x}}_{l}^{hF} (l = 1,2,...,M_{F} )\), which follow the IS conditional PDF on the failure domain, into \(M_{F}\) failure samples \({\varvec{x}}_{l}^{F} (l = 1,2,...,M_{F} )\) that follow the original conditional PDF on the failure domain, whose \(i\)-th marginal is \(f_{{X_{i} }} (x_{i} |F)\); the details are given in Sect. 4.1. The KDE procedure for obtaining \(\hat{f}_{{X_{i} }} (x_{i} |F)\) from these samples is described in Sect. 3.2.
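One way to carry out this transformation is an independence Metropolis–Hastings sampler that uses the IS failure samples themselves as proposals. Because the proposal PDF is \(h_{{\varvec{X}}}({\varvec{x}}|F) \propto h_{{\varvec{X}}}({\varvec{x}}) I_F({\varvec{x}})\) and the target is \(f_{{\varvec{X}}}({\varvec{x}}|F) \propto f_{{\varvec{X}}}({\varvec{x}}) I_F({\varvec{x}})\), the acceptance ratio reduces to \(w({\varvec{x}}')/w({\varvec{x}})\) with \(w = f_{{\varvec{X}}}/h_{{\varvec{X}}}\), so no extra performance function calls are needed. This variant and the helper `independence_mh` are assumptions for illustration; the scheme actually used is given in Sect. 4.1.

```python
import math
import numpy as np


def independence_mh(fail_samples, log_w, rng):
    """Independence Metropolis-Hastings chain over the IS failure samples.

    fail_samples: (M_F, n) draws from h_X(x|F); log_w: log f_X - log h_X at each.
    The returned chain (M_F states) has f_X(x|F) as its stationary PDF.
    """
    M_F = len(fail_samples)
    chain = np.empty_like(fail_samples)
    log_u = np.log(rng.uniform(size=M_F))
    cur = 0                              # index of the current state
    chain[0] = fail_samples[0]
    for l in range(1, M_F):
        # Candidate is the next IS failure sample; accept w.p. min(1, w_l / w_cur)
        if log_u[l] < log_w[l] - log_w[cur]:
            cur = l
        chain[l] = fail_samples[cur]
    return chain


rng = np.random.default_rng(3)
mpp = np.array([2.0, 0.0])
pool = mpp + rng.standard_normal((100000, 2))   # IS pool from h_X = N(mpp, I)
fail = pool[pool[:, 0] >= 2.0]                  # failure domain of g = 2 - x1
log_w = -fail @ mpp + 0.5 * mpp @ mpp           # log N(0, I) - log N(mpp, I)
chain = independence_mh(fail, log_w, rng)
# Mean of x1 under f_X(x|F) is phi(2) / Phi(-2), about 2.373
target = math.exp(-2.0) / math.sqrt(2.0 * math.pi) / (0.5 * math.erfc(math.sqrt(2.0)))
```

The raw IS failure samples have a mean of about 2.80 in \(x_1\); after the transformation the chain mean moves to about 2.37, the mean of the original conditional PDF.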

(6) Estimate the FP-GS. After \(\hat{P}(F)\) and \(\hat{f}_{{X_{i} }} (x_{i} |F)\) have been estimated by the above steps, the FP-GS of all inputs is obtained by substituting \(\hat{P}(F)\) and \(\hat{f}_{{X_{i} }} (x_{i} |F)\) into Eq. (13).

5 Test examples

Since random variables following other distributions can be transformed into normally distributed ones [40], the test examples focus on normal random inputs.

The variation coefficient of \(\xi_{i}\) (denoted \({\text{COV}}\left( {\xi_{i} } \right)\)) and that of \(\hat{P}\{ F\}\) (denoted \({\text{COV}}\left( {\hat{P}\{ F\} } \right)\)) are used to evaluate the accuracy of the results. Accurate estimates of \(\xi_{i}\) are important for ranking the inputs: the larger the sensitivity index (SI) value of an input, the greater its effect on the failure probability. By keeping the variation coefficient of \(\xi_{i}\) below 5%, the accuracy of the FP-GS for every input is ensured.

In engineering applications, a single evaluation of the performance function is usually time-consuming because it requires a finite element analysis of the structure. In this work, the number of performance function evaluations (\(N_{pf}\)) needed to obtain converged FP-GS estimates is used to measure the efficiency of each method.

5.1 Numerical example

5.1.1 Example 1

Consider the following numerical performance function:

where \(x_{1}\) and \(x_{2}\) follow the normal distributions \(N(6,1)\) and \(N(0,1)\), respectively.

Table 1 lists the SI values estimated by the analytical method, by state-of-the-art methods, namely the MCS, the single-loop MCS [16], the single-loop IS [16] and the Subset Simulation [21], and by the proposed method. Provided that \({\text{COV}}\left( {\hat{P}\{ F\} } \right)\) and \({\text{COV}}\left( {\xi_{{X_{i} }} } \right)\) are below 5%, the state-of-the-art methods require millions of performance function evaluations to estimate \(\xi_{{X_{i} }}\), far more than the proposed method. The proposed method is therefore shown to be more efficient than the compared methods for estimating the FP-GS in this example.

5.1.2 Example 2

A simplified riveting model with a headless rivet [41] is analyzed in this work. The rivet fails when the maximum squeeze stress exceeds the ultimate squeeze strength during the riveting process. The maximum squeeze stress for a given riveting process can be estimated as

where the strain hardening exponent of the material is \(n_{{{\text{SHE}}}} = 0.15\), the ultimate squeeze strength is \(\sigma_{{{\text{sq}}}} = 585\) MPa and the height of the driven rivet head is \(H = 2.2\) mm. Thus, the following performance function is obtained:

All inputs follow normal distributions, with the distribution parameters given in Table 2.

Table 3 lists the SI values for the headless rivet. Although the strength coefficient \(K\) has the largest SI value, its uncertainty cannot be reduced in practical engineering problems. The inputs \(h\), \(d\), \(D_{0}\) and \(t\) can therefore be considered for reducing the failure probability of the rivet. Table 3 shows that \(h\) influences the failure probability more than \(d\), \(D_{0}\) and \(t\), which indicates that reducing the uncertainty of \(h\) should be given priority.

To further observe the effect of the input uncertainty on the failure probability, the curves of the failure probability versus the variation coefficients of the inputs are plotted in Fig. 2. Figure 2 shows that reducing the variation coefficient of \(h\), the most influential of these inputs, reduces the failure probability the most. If a 5% reduction in the failure probability is required, reducing the variation coefficient of \(h\) from 0.01 to 0.009 is much more efficient than reducing that of \(d\) or \(D_{0}\) from 0.01 to 0.001. The SI value of \(t\) is so small that reducing its variation coefficient is an inefficient, and even impracticable, way to reduce the failure probability. The uncertainty of \(h\) can be reduced by improving the processing technology so as to decrease the variance of \(h\).

The proposed method aims to estimate the FP-GS efficiently. Table 3 shows the SI values and the failure probability estimated by the single-loop IS, the Subset Simulation and the proposed method; the MCS results are not provided because the MCS is too time-consuming. Although the single-loop IS and the Subset Simulation reduce the computational burden to some degree, their cost is still huge: they require \(40 + 7 \times 10^{7}\) and \(3 \times 10^{6}\) performance function evaluations, respectively. The proposed method needs only 40 + 36 evaluations. Therefore, for the rare failure event in this example, the state-of-the-art methods require far more performance function evaluations than the proposed method at the given accuracy level, and the proposed method greatly improves the efficiency of estimating the FP-GS.

5.2 Engineering examples

Two engineering models are presented in this subsection for demonstrating the high efficiency and the practical applicability of the proposed method.

5.2.1 The stiffener rib on the leading edge of a civil aircraft

Figure 3 shows a stiffener rib [42] on the leading edge of a civil aircraft; the simplified structure, with its detailed size parameters, is shown in Fig. 4. The stiffener rib has six circular holes. The largest is used to fix the engine that controls the movement of the slat, the hole at the top lets the pipelines and cables pass through, and the remaining four holes support the slideway of the slat. The stiffener rib is made of aluminum alloy 7050-T7451, with Poisson’s ratio \(\nu = 0.3\). As shown in Fig. 5, the loads on the stiffener rib include the concentrated loads \(F_{1}\), \(F_{2}\), \(F_{3}\), \(F_{4}\), \(F_{5}\) and \(F_{6}\) acting on the web and the aerodynamic loads \(P_{1}\) and \(P_{2}\) on the edges. The random inputs, namely the thickness of the web \(d\), the elastic modulus of the aluminum alloy \(E\), the aerodynamic loads \(P_{1}\) and \(P_{2}\), and the concentrated loads \(F_{1}\) to \(F_{6}\), are normally distributed; their distribution parameters are given in Table 4.

The finite element analysis of the stiffener rib at the mean values of the random variables proceeds as follows; the process is shown in Fig. 6.

1. Establish the finite element model of the stiffener rib in the finite element analysis software ANSYS;

2. Mesh the finite element model and apply the constraints, aerodynamic loads and concentrated loads to the model;

3. Obtain the displacement of the model.

The performance function is constructed from the requirement that the maximum longitudinal displacement of the structure not exceed 0.065 mm. The SI values for the stiffener rib are listed in Table 5.

In this example, the uncertainties of the working-environment inputs of the stiffener rib, \(F_{1}\) to \(F_{6}\), \(P_{1}\) and \(P_{2}\), cannot be reduced. Their FP-GS results therefore only show how these inputs affect the failure probability; the inputs themselves cannot be adjusted to meet a required failure probability. Table 5 shows that the aerodynamic loads \(P_{1}\) and \(P_{2}\) have a much greater effect on the failure probability than the concentrated loads \(F_{1}\) to \(F_{6}\).

The uncertainties of two inputs can be changed: the thickness \(d\) of the web and the elastic modulus \(E\). The FP-GS results in Table 5 show that \(d\) is more important than \(E\), so the uncertainty of \(d\) should be reduced before that of \(E\) to decrease the failure probability effectively. The curves of the failure probability versus the variation coefficients of these two inputs are illustrated in Fig. 7. When the variation coefficient of \(d\) decreases from 0.05 to 0.02, the failure probability is reduced by almost 90%. The SI value of \(E\) is so small that reducing its variation coefficient has almost no effect on the failure probability. The variability of the important input \(d\) can be reduced by improving the processing technology of the stiffener web, which decreases its variance.

In this engineering case, the performance function cannot be written as an explicit mathematical expression, i.e., it is implicit, and its value is computed by finite element analysis (ANSYS in this work). Calling the finite element software is generally expensive; in this example, one performance function evaluation takes about 3 s. Table 5 lists the failure probability and \(\xi_{{X_{i} }}\) estimated by the Subset Simulation and the proposed method. The computational cost of estimating \(\xi_{{X_{i} }}\) by the MCS, the single-loop MCS and the single-loop IS methods is unaffordable, so their results are not listed in Table 5. As Table 5 shows, the proposed method provides a feasible way, more efficient than the Subset Simulation, to estimate the FP-GS for engineering applications with implicit performance functions.

5.2.2 The turbine blade structure

The turbine blade of an aeroengine bears several cyclic loads during operation. Failure may occur because of fatigue-creep damage caused by the centrifugal load, the aerodynamic load, the temperature load and so on. The fatigue-creep life of the turbine blade can be evaluated from the structural response at checking points, which is obtained by finite element analysis (FEA). A simplified turbine blade is considered in this example; its geometry and mesh model are shown in Fig. 8a and b, respectively. The FEA is performed with the ANSYS software, and the loads applied to the turbine blade and the boundary conditions are illustrated in Fig. 9.

The turbine blade operates under three conditions: the maximum, idling and cruising working conditions. The inputs affecting the fatigue-creep life of the turbine blade are listed below, and their distribution parameters are given in Table 6.

(1) The geometry parameters of the film hole, i.e., the radius of the film hole \(r\), the horizontal coordinate of the film hole center \(L\) and the vertical coordinate of the film hole center at the bottom \(M\);

(2) The temperature loads applied on the tip of the turbine blade \(T_{1}^{\max }\), \(T_{1}^{{{\text{idl}}}}\) and \(T_{1}^{{{\text{cru}}}}\), the temperature loads applied on the bottom of the tenon \(T_{2}^{\max }\), \(T_{2}^{{{\text{idl}}}}\) and \(T_{2}^{{{\text{cru}}}}\), and the rotational speeds \(\omega^{\max }\), \(\omega^{{{\text{idl}}}}\) and \(\omega^{{{\text{cru}}}}\), where the superscripts denote the working conditions;

(3) The auxiliary input variable for the fatigue life \(u_{L}\) and the auxiliary input variable for the creep life \(u_{C}\).

Figure 10 shows the stress response of the turbine blade under the maximum working condition at the mean values of the inputs; the responses under the idling and cruising working conditions are similar. The stress concentration point of the film hole at the bottom is selected as the checking point for evaluating the fatigue-creep life of the turbine blade. The performance function is constructed from the requirement that the fatigue-creep life of the turbine blade not fall below 1000 cycles. The SI values for the turbine blade structure are listed in Table 7.

The temperature loads, the rotational speeds and the auxiliary input variables are related to the working environment, so their uncertainties cannot be reduced. As Table 7 shows, the auxiliary input variable \(u_{C}\) has the greatest effect on the failure probability. Among the geometry inputs, the radius of the film hole \(r\) is the most important. The rotational speeds \(\omega^{\max }\), \(\omega^{{{\text{idl}}}}\) and \(\omega^{{{\text{cru}}}}\) are more influential on the failure probability than the temperature loads \(T_{1}^{\max }\), \(T_{1}^{{{\text{idl}}}}\), \(T_{1}^{{{\text{cru}}}}\), \(T_{2}^{\max }\), \(T_{2}^{{{\text{idl}}}}\) and \(T_{2}^{{{\text{cru}}}}\).

The uncertainties of the three geometry inputs, the radius of the film hole \(r\), the horizontal coordinate of the film hole center \(L\) and the vertical coordinate of the film hole center at the bottom \(M\), can be reduced by improving the processing technology. The curves of the failure probability versus the variation coefficients of \(r\), \(L\) and \(M\) are illustrated in Fig. 11. When the variation coefficient of \(r\) decreases from 0.01 to 0.001, the failure probability is reduced by 38%, whereas reducing the variation coefficients of \(L\) and \(M\) has almost no effect on the failure probability, which is consistent with the FP-GS estimates.

Table 7 shows that the Subset Simulation method requires \(2 \times 10^{5}\) performance function calls to estimate \(\xi_{{X_{i} }}\). Since each FEA run is time-consuming, the proposed method, which needs only 292 performance function evaluations, is much more efficient and practical for this implicit performance function.

6 Conclusions

The FP-GS evaluates the effect of the input uncertainty on the failure probability, which helps designers identify the important random inputs. Reducing the variation coefficients of the important random inputs reduces the failure probability at low cost. Accurate SI values are needed to rank the random inputs correctly, so that the important ones can be prioritized to reduce the failure probability more efficiently. Inputs with large SI values cause a significant reduction in the failure probability when their variation coefficients are reduced.

Two numerical examples and two engineering examples are presented to validate the accuracy and efficiency of the proposed method. The results show that the proposed method greatly reduces the computational cost compared with existing methods such as the traditional MCS method and the Subset Simulation method.

Since the AFOSM method for searching the MPP is not suitable for problems with multiple MPPs or multiple failure domains, the proposed method addresses problems with a unique most probable failure point, as in the test examples of this paper. For problems with multiple MPPs or multiple failure domains, other surrogate-based methods that do not require locating MPPs could be employed to estimate the failure-probability-based global sensitivity (FP-GS). This issue is left for future work.

References

Wu XJ, Zhang WW, Song SF, Ye ZY (2018) Sparse grid-based polynomial chaos expansion for aerodynamics of an airfoil with uncertainties. Chin J Aeronaut 31(5):997–1011

Cooke RM, van Noortwijk JM (1999) Local probabilistic sensitivity measures for comparing FORM and Monte Carlo calculations illustrated with dike ring reliability calculations. Comput Phys Commun 117(1):86–98

Song SF, Lu ZZ, Qiao HW (2009) Subset simulation for structural reliability sensitivity analysis. Reliab Eng Syst Saf 94(2):658–665

Zhao YG, Ono T (2001) Moment methods for structural reliability. Struct Saf 23(1):47–75

Zhao YG, Ono T (2004) On the problems of the fourth moment method. Struct Saf 26(3):343–347

Xu L, Cheng GD (2003) Discussion on: moment methods for structural reliability. Struct Saf 25(2):193–199

Song K, Zhang Y, Zhuang X et al (2019) Reliability-based design optimization using adaptive surrogate model and importance sampling-based modified SORA method. Eng Comput 37(2):1295–1314

Abdollahi A, Azhdary Moghaddam M, Hashemi Monfared SA et al (2020) Subset simulation method including fitness-based seed selection for reliability analysis. Eng Comput. https://doi.org/10.1007/s00366-020-00961-9

Song SF, Lu ZZ, Zhang WW, Ye ZY (2009) Reliability and sensitivity analysis of transonic flutter using improved line sampling technique. Chin J Aeronaut 22(5):513–519

Rocha LCS, Rotela Junior P, Aquila G et al (2020) Toward a robust optimal point selection: a multiple-criteria decision-making process applied to multi-objective optimization using response surface methodology. Eng Comput. https://doi.org/10.1007/s00366-020-00973-5

Yang I, Hsieh Y (2013) Reliability-based design optimization with cooperation between support vector machine and particle swarm optimization. Eng Comput 29:151–163

Gordan B, Koopialipoor M, Clementking A et al (2019) Estimating and optimizing safety factors of retaining wall through neural network and bee colony techniques. Eng Comput 35:945–954

Zhai Z, Li H, Wang X (2020) An adaptive sampling method for Kriging surrogate model with multiple outputs. Eng Comput. https://doi.org/10.1007/s00366-020-01145-1

Cui LJ, Lu ZZ, Zhao XP (2010) Moment-independent importance measure of basic random variable and its probability density evolution solution. Sci China Technol Sci 53(4):1138–1145

Li LY, Lu ZZ, Feng J, Wang BT (2012) Moment-independent importance measure of basic variable and its state dependent parameter solution. Struct Saf 38:40–47

Wei PF, Lu ZZ, Hao WR, Feng J, Wang BT (2012) Efficient sampling methods for global reliability sensitivity analysis. Comput Phys Commun 183(8):1728–1743

Yun WY, Lu ZZ, Jiang X (2019) An efficient method for moment-independent global sensitivity analysis by dimensional reduction technique and principle of maximum entropy. Reliab Eng Syst Saf 187:174–182

Zhang XF, Pandey MD (2013) Structural reliability analysis based on the concepts of entropy, fractional moment and dimensional reduction method. Struct Saf 43:28–40

Wang YP, Xiao SN, Lu ZZ (2019) An efficient method based on Bayes’ theorem to estimate the failure-probability-based sensitivity measure. Mech Syst Signal Process 115:607–620

Wang YP, Xiao SN, Lu ZZ (2018) A new efficient simulation method based on Bayes’ theorem and importance sampling Markov chain simulation to estimate the failure-probability-based global sensitivity measure. Aerosp Sci Technol 79:364–372

Yun WY, Lu ZZ, Zhang Y, Jiang X (2018) An efficient global reliability sensitivity analysis algorithm based on classification of model output and subset simulation. Struct Saf 74:49–57

Xiao SN, Lu ZZ (2017) Structural reliability sensitivity analysis based on classification of model output. Aerosp Sci Technol 71:52–61

Zellner A (2007) Generalizing the standard product rule of probability theory and Bayes’s Theorem. J Econom 138(1):14–23

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining Kriging and Monte Carlo simulation. Struct Saf 33(2):145–154

Echard B, Gayton N, Lemaire M, Relun N (2013) A combined Importance Sampling and Kriging reliability method for small failure probabilities with time-demanding numerical models. Reliab Eng Syst Saf 111:232–240

Cadini F, Santos F, Zio E (2014) An improved adaptive kriging-based importance technique for sampling multiple failure regions of low probability. Reliab Eng Syst Saf 131:109–117

Dubourg V, Sudret B, Deheeger F (2013) Metamodel-based importance sampling for structural reliability analysis. Probab Eng Mech 33:47–57

Huang XX, Chen JQ, Zhu HP (2016) Assessing small failure probabilities by AK–SS: an active learning method combining Kriging and Subset Simulation. Struct Saf 59:86–95

Depina I, Le TMH, Fenton G, Eiksund G (2016) Reliability analysis with Metamodel line sampling. Struct Saf 60:1–15

Engelund S, Rackwitz R (1993) A benchmark study on importance sampling techniques in structural reliability. Struct Saf 12(4):255–276

Alibrandi U, Der Kiureghian A (2012) A gradient-free method for determining the design point in nonlinear stochastic dynamic analysis. Probab Eng Mech 28:2–10

Ibrahim Y (1991) Observations on applications of importance sampling in structural reliability analysis. Struct Saf 9(4):269–281

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57(1):97–109

Rosenblatt M (1956) Remarks on some nonparametric estimates of a density function. Ann Math Stat 27(3):832–837

Parzen E (1962) On estimation of a probability density function and mode. Ann Math Stat 33(3):1065–1076

Cacoullos T (1966) Estimation of a multivariate density. Ann Inst Stat Math 18:179–189

Botev ZI, Grotowski JF, Kroese DP (2010) Kernel density estimation via diffusion. Ann Stat 38(5):2916–2957

Au SK (2004) Probabilistic failure analysis by importance sampling Markov Chain simulation. J Eng Mech 130(3):303–311

Johnson AA, Jones GL, Neath RC (2013) Component-Wise Markov Chain Monte Carlo: uniform and geometric ergodicity under mixing and composition. Stat Sci 28(3):360–375

Rackwitz R, Flessler B (1978) Structural reliability under combined random load sequences. Comput Struct 9(5):489–494

Wang P, Lu ZZ, Tang ZC (2013) A derivative based sensitivity measure of failure probability in the presence of epistemic and aleatory uncertainties. Comput Math Appl 65:89–101

Lv ZY, Lu ZZ, Wang P (2015) A new learning function for Kriging and its applications to solve reliability problems in engineering. Comput Math Appl 70(5):1182–1197

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant 51775439).


Appendices

Appendix A: The variation coefficient of the estimates

The variation coefficient of \(\hat{P}\left\{ F \right\}\) is estimated by the following equation:

The variation coefficient of \(\hat{P}\left\{ {F\left| {x_{ji} } \right.} \right\}\) is estimated by the following equation:

Deriving the variation coefficient of \(\hat{\xi }_{i}\) analytically is difficult, and no such derivation has been found in the existing literature. The variation coefficient of \(\hat{\xi }_{i}\) is therefore usually obtained by repeating the calculation. Assume that \(\hat{\xi }_{i}\) is computed \(N_{C}\) times, each time with \(N_{1}\) newly generated random samples. The variation coefficient of \(\hat{\xi }_{i}\) can then be obtained by the following equation:

where \(\hat{\xi }_{i}^{\left( q \right)}\) is the estimate of \(\xi_{i}\) obtained in the \(q\)-th run, \(q = 1,2, \ldots ,N_{C}\).
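The repeated-calculation procedure above can be sketched as follows. Because the equation itself is not reproduced in this excerpt, the sketch assumes the usual definition: the sample standard deviation of the \(N_{C}\) estimates (with \(N_{C} - 1\) in the denominator) divided by their mean. The toy estimator is a crude MCS estimate of \(P(X > 1)\) for \(X \sim N(0,1)\), chosen only because its coefficient of variation is known, \(\sqrt{(1-p)/(Np)} \approx 0.023\) for \(N = 10^{4}\); `cov_by_repetition` is a hypothetical helper.

```python
import numpy as np


def cov_by_repetition(estimator, n_repeats, rng):
    """Repeat a randomized estimator N_C times; return the mean of the estimates
    and their coefficient of variation (sample std with ddof=1 over the mean)."""
    vals = np.array([estimator(rng) for _ in range(n_repeats)], dtype=float)
    mean = vals.mean()
    return mean, vals.std(ddof=1) / abs(mean)


# Toy estimator: crude MCS estimate of P(X > 1), X ~ N(0, 1), with 10 000 samples
rng = np.random.default_rng(5)
estimate = lambda r: np.mean(r.standard_normal(10_000) > 1.0)
mean, cov = cov_by_repetition(estimate, n_repeats=200, rng=rng)
```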

Appendix B: Determination of \(N\), \(N_{1}\), \(N_{2}\) and \(M\)

Taking \(N\) as an example, \(N\) is determined by the following process so that the variation coefficient of \(\hat{P}\left\{ F \right\}\) meets the requirement:

(1) Generate \(N\) random samples of the inputs to construct the sample pool \({\mathbf{S}}^{f}\); \(N = 10000\) is recommended the first time step (1) is executed;

(2) Estimate the failure probability;

(3) Calculate the variation coefficient of \(\hat{P}\left\{ F \right\}\), i.e., \(COV\left( {\hat{P}\left\{ F \right\}} \right)\). If \(COV\left( {\hat{P}\left\{ F \right\}} \right) < 5\%\), go to step (5); otherwise, go to step (4);

(4) Set \(N = N + 5000\) and return to step (1);

(5) Record \(\hat{P}\left\{ F \right\}\) and \(COV\left( {\hat{P}\left\{ F \right\}} \right)\).

The processes for determining \(N_{1}\), \(N_{2}\) and \(M\) are similar to that for \(N\) and are not repeated here.
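The loop for \(N\) can be sketched for crude MCS on independent standard normal inputs. Since the Appendix A equation is not reproduced in this excerpt, step (3) assumes the standard MCS coefficient of variation \(\sqrt{(1-\hat{P})/(N\hat{P})}\); the helper `choose_sample_size` and its safety cap `n_max` are hypothetical.

```python
import numpy as np


def choose_sample_size(g, n_dim, rng, n_start=10_000, n_step=5_000,
                       cov_target=0.05, n_max=10_000_000):
    """Appendix B loop for N with crude MCS on independent standard normal inputs."""
    N = n_start
    while True:
        x = rng.standard_normal((N, n_dim))        # step (1): sample pool S^f
        p = float(np.mean(g(x) <= 0))              # step (2): failure probability
        cov = np.sqrt((1.0 - p) / (N * p)) if p > 0 else np.inf  # step (3)
        if cov < cov_target or N >= n_max:
            return N, p, float(cov)                # step (5): record the results
        N += n_step                                # step (4)


rng = np.random.default_rng(6)
N, p, cov = choose_sample_size(lambda x: 2.0 - x[:, 0], n_dim=2, rng=rng)
```

For \(g = 2 - x_1\), \(P\{F\} = \Phi(-2) \approx 0.0228\), and the 5% criterion requires \(N > (1-P)/(0.05^{2}P) \approx 1.7 \times 10^{4}\), so the loop typically stops at \(N = 2 \times 10^{4}\).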


Cite this article

Lei, J., Lu, Z. & Wang, L. An efficient method by nesting adaptive Kriging into Importance Sampling for failure-probability-based global sensitivity analysis. Engineering with Computers 38, 3595–3610 (2022). https://doi.org/10.1007/s00366-021-01402-x


DOI: https://doi.org/10.1007/s00366-021-01402-x