Abstract

Reliability-based design optimization (RBDO) has become an important research field owing to the increasing demand for product reliability in practical applications. This paper presents a new RBDO method that combines adaptive surrogate models with an Importance Sampling-based Modified Sequential Optimization and Reliability Assessment (IS-based modified SORA) method. Its aim is to reduce the number of calls to the expensive objective and constraint functions in RBDO. The proposed method consists of three stages. First, samples are sequentially selected to construct, for each constraint function, a Kriging model with high classification accuracy. Second, samples are generated by Markov Chain Monte Carlo (MCMC) in the safety domain of the design space. Another Kriging model for the objective function is then sequentially constructed by adding suitable samples to update the Design of Experiment (DoE) of the objective function. Third, the expensive objective and constraint functions of the original optimization problem are replaced by the surrogate models. The IS-based modified SORA method then decouples the reliability optimization problem into a series of deterministic optimization problems, which are solved by a Genetic Algorithm (GA). Several examples are used to verify the proposed method. Compared with alternative methods, the results show that the proposed method reduces the number of calls to the original objective and constraint functions without loss of precision, which demonstrates its efficiency and accuracy.


1 Introduction

In traditional engineering design optimization, the optimum design is obtained by deterministic design optimization (DDO) subject to the limits of the problem constraints [1]. However, practical engineering problems involve various uncertainties, such as material properties, structural dimensions, boundary conditions and loads. These uncertainties can give the constraint functions a high failure probability at the deterministic optimal solution [2]. Therefore, reliability-based design optimization (RBDO) methods have been developed to balance cost and safety, and thus to produce designs that are both reliable and economical for engineering systems with uncertainties [3,4,5]. A typical RBDO problem can be formulated as:

$$\begin{aligned} &\text{find } \varvec{d} \\ &\min\; C\left( \varvec{d} \right) \\ &\text{s.t. } P\left[ G_{j}\left( \varvec{x} \right) \le 0 \right] \le \varPhi\left( -\beta_{j}^{t} \right),\quad j = 1,2, \ldots ,n_{y} \\ &\qquad \varvec{d}_{\varvec{L}} \le \varvec{d} \le \varvec{d}_{\varvec{U}} \end{aligned} \tag{1}$$
where \(C\) is the objective or cost function; \(G_{j}\) is the jth constraint function (i.e. performance function); \(\beta_{j}^{t}\) is the target reliability index for the jth probabilistic constraint; \(\varPhi\) is the standard normal cumulative distribution function; \(\varvec{d}\) represents distribution parameters of the random variable vector \(\varvec{x}\); \(n_{y}\) is the number of probabilistic constraints; \(\varvec{d}_{\varvec{L}}\) and \(\varvec{d}_{\varvec{U}}\) denote the lower and upper limits of distribution parameters \(\varvec{d}\), respectively.

Equation (1) shows that RBDO intrinsically involves a so-called double-loop procedure where the outer optimization loop includes inner loops of reliability analysis. In general, RBDO methods include simulation-based methods and analytical methods for solving the probabilistic constraints in Eq. (1) [2, 3].

Simulation-based methods are accurate and robust. They are mainly used to assess the reliability of the constraint functions of RBDO problems in complex engineering applications. Among them, Monte Carlo simulation (MCS) is one of the most accurate methods for approximating the failure probability, but it usually requires heavy computation [6, 7]. Many sampling techniques have been proposed to reduce the number of required samples, such as the Importance Sampling (IS) method [8], the Subset Simulation (SS) method [9, 10] and the Line Sampling (LS) method [11]. Nevertheless, these techniques still have difficulty solving RBDO problems with small failure probabilities or implicit constraint functions. Researchers have also developed simulation-based methods specifically for RBDO to improve computational efficiency and effectiveness. Examples are the cell evolution method [12], the sampling-based method with a reweighting scheme [13] and the weighted simulation method (WSM) [14, 15]. WSM is one of the most promising simulation-based methods. It generates uniformly distributed samples and assigns each sample a weight index equal to the product of the probability density functions (PDFs) of the variables at that sample. The failure probability is then estimated as the ratio of the sum of the weight indices in the failure domain to the sum of the weight indices over the entire design domain. Many practical WSM-based methods have been proposed for RBDO [1, 2, 16, 17], such as the WSM–RBDO method [1], the hybrid PSO–WSM method [2], the improved weighted average simulation approach [16], and the hybrid WSM–NSGA-II method [17]. However, all of the above simulation methods require many expensive black-box function calls. This is often unacceptable, especially for RBDO problems with time-consuming simulation models.
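The weighted simulation idea described above can be illustrated with a short sketch. It is a minimal illustration under stated assumptions, not the implementation of the cited works:

- the random variables are independent and normal;
- uniform samples are drawn in a box of ±k standard deviations around the means (the half-width `k` is an illustrative choice);
- the limit state G(x) = 2 − x1 is hypothetical, so the result can be checked against the exact value Φ(−2).

The function and parameter names are also hypothetical.

```python
import math

import numpy as np


def wsm_failure_probability(g, means, stds, n_samples=200_000, k=5.0, seed=0):
    """Weighted-simulation estimate of P[g(X) <= 0] for independent normal X.

    Uniform samples are drawn in the box mean +/- k*std. The weight of each sample
    is the product of the marginal normal PDFs at that sample, and P_F is the sum of
    weights over failed samples divided by the total sum of weights.
    """
    rng = np.random.default_rng(seed)
    means = np.asarray(means, dtype=float)
    stds = np.asarray(stds, dtype=float)
    x = rng.uniform(means - k * stds, means + k * stds, size=(n_samples, means.size))
    z = (x - means) / stds
    pdf = np.exp(-0.5 * z**2) / (math.sqrt(2.0 * math.pi) * stds)
    weights = pdf.prod(axis=1)          # weight index of every sample
    failed = g(x) <= 0.0                # failure domain: G(x) <= 0
    return weights[failed].sum() / weights.sum()


def limit_state(x):
    """Hypothetical linear limit state G(x) = 2 - x1."""
    return 2.0 - x[:, 0]


pf = wsm_failure_probability(limit_state, means=[0.0, 0.0], stds=[1.0, 1.0])
exact = 0.5 * math.erfc(2.0 / math.sqrt(2.0))   # Phi(-2)
print(f"WSM estimate {pf:.5f}, exact {exact:.5f}")
```

Because the estimate is a ratio of weight sums, the constant density of the uniform samples cancels. This is why only the product of the variable PDFs is needed as the weight.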

In general, analytical methods are more efficient than simulation-based methods for special RBDO problems, such as linear systems with normal random variables, because most of them are gradient based. They include the moment matching method [18] and Most Probable Point (MPP)-based methods [19, 20]. Among analytical methods, the reliability index approach (RIA) and the performance measure approach (PMA) are the most commonly used MPP-based methods [2, 3].

RIA converts the reliability constraints into constraints on the reliability indices at the current design point using the First-Order Reliability Method (FORM) or the Second-Order Reliability Method (SORM). The RBDO problem is thereby transformed into a relatively simple DDO problem [21, 22]. PMA was proposed to reduce the computational cost of RBDO. It transforms each reliability constraint into a deterministic constraint by solving an inverse-MPP optimization problem, which is easier to solve than the MPP search in RIA [23, 24]. Lee et al. showed that PMA is more stable and efficient than RIA [25].

Moreover, Du et al. proposed the SORA method, in which each cycle comprises two procedures: an optimization with modified constraints and a search for the inverse MPPs [3, 26]. In this way, the two-level RBDO optimization is decoupled into a series of single-level optimizations, which greatly reduces the computational cost. More recently, several modified methods based on RIA, PMA and SORA, as well as other analytical methods [27,28,29,30,31,32,33,34,35], have been suggested. These include the single loop approach (SLA) [27], sequential approximate programming [28], the reliable design space (RDS) method [5], the penalty-based decoupled approach (PDA) [29], and the approximate sequential optimization and reliability assessment (ASORA) method [30].
In recent years, Keshtegar and co-workers have made substantial contributions to analytical methods for RBDO [36,37,38,39,40,41,42]. Their methods include:

- the adaptive hybrid approach (AHA) [36];
- the modified mean value (MMV) method [37];
- the self-adaptive modified chaos control (SMCC) method [38];
- the hybrid descent mean value (HDMV) approach [39];
- the enhanced single-loop method (ESM) [40];
- the second-order reliability method-based stepped up sequential optimization and reliability assessment (SSORA–SORM) approach [41];
- the adaptive conjugate single-loop approach (AC-SLA) [42].

Other relatively efficient analytical methods for RBDO have also been proposed recently, such as the enriched self-adjusted mean value (ESMV) method [43], the augmented step size adjustment (ASSA) method [44] and the hybrid conjugate mean value (HCMV) method [45]. However, these methods still require a large number of function calls. Moreover, methods based on MPPs or gradients can produce large errors for highly nonlinear constraint functions, and they are difficult to apply to complex engineering problems with implicit functions.

In addition, as engineering problems grow more complex, more and more researchers are turning to approximation techniques (also called surrogate models or meta-models). Surrogate models are often constructed to replace the original objective or constraint functions in RBDO [6, 26], thereby increasing computational efficiency.

Chen et al. proposed a variety of Kriging-based methods for RBDO, such as the local adaptive sampling (LAS) method [46], the important boundary sampling (IBS) method [47] and the local approximation method using the most probable point (LMPP) [48]. Shi et al. [49] developed an RBDO method that uses first-order score function analysis and an adaptive response surface. The response surface is obtained by integrating a Bayesian metric with a Gaussian process-based model bias correction method. Lehký et al. [50] proposed an inverse reliability method based on an Artificial Neural Network (ANN) and small-sample simulation. Yang et al. [6] proposed an RBDO framework that combines Particle Swarm Optimization (PSO), Support Vector Machine (SVM) and SS to enhance computational efficiency. Li et al. [26] proposed a sequential surrogate model method for reliability-based optimization (SSRBO), based on radial basis functions (RBF) and MCS, to reduce the number of expensive black-box function calls in RBDO.

Although these surrogate model-based RBDO methods can greatly reduce the number of calls to the original model, their computational efficiency is limited by the reliability assessment method they use. For example, SSRBO with MCS [26] and the method in [6] with SS are relatively inefficient at assessing the reliability of the constraints in RBDO problems.

In this paper, a new RBDO method based on adaptive surrogate models and an importance sampling-based modified SORA method is proposed. It reduces the number of calls to the original objective and constraint functions while ensuring computational efficiency. In practical engineering problems, constraint conditions or boundary conditions usually depend on each other, so the samples required to construct the surrogate models of the constraint functions are shared among them. We therefore assume in this paper that the constraint functions depend on each other and that the constraint functions are independent of the objective function.

The method proceeds as follows:

1. Adaptive Kriging models of the constraint functions are sequentially constructed until the accuracy requirements are met.
2. Sufficient samples are generated by MCMC in the safety domain of the design space. Another Kriging model for the objective function is then constructed by adaptively adding suitable samples to update the DoE of the objective function. Once all these Kriging models have been constructed, they replace the expensive objective and constraint functions of the original optimization problem.
3. The IS-based modified SORA method, which combines the accuracy of IS with the efficiency of SORA, decouples the reliability optimization problem into a series of deterministic optimization problems that are solved by a Genetic Algorithm (GA).

Finally, the optimal solution of the original RBDO problem is obtained.

The remainder of this paper is organized as follows. Section 2 introduces the basic theories and formulations of the main methods used in the proposed method. Section 3 describes the principles and detailed procedure of the proposed method. Section 4 uses four numerical examples to validate the efficiency and accuracy of the proposed method. Finally, Sect. 5 summarizes the conclusions and future work.

2 Basic theories and formulations

2.1 Kriging model

The Kriging model is a statistics-based interpolation technique that consists of a parametric linear regression model and a nonparametric stochastic process [51,52,53,54]. Denoting the input variables by x and the corresponding response by G(x), the Kriging model is given as:

$$\widehat{G}\left( \varvec{x} \right) = F\left( {\varvec{\beta},\varvec{x}} \right) + \varvec{\delta}\left( \varvec{x} \right) \tag{2}$$
where the mean approximation response \(F\left( {\varvec{\beta},\varvec{x}} \right)\) is an ordinary polynomial regression of x. In ordinary Kriging it is simplified to a constant, \(F\left( {\varvec{\beta},\varvec{x}} \right) = \beta\). All of the following formulas are derived under this ordinary Kriging simplification, so \(\widehat{G}\left( \varvec{x} \right)\) can be simplified as

$$\widehat{G}\left( \varvec{x} \right) = \beta + \varvec{\delta}\left( \varvec{x} \right) \tag{3}$$
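Here \(\varvec{\delta}\left( \varvec{x} \right)\) is a zero-mean stationary Gaussian process, and the auto-covariance of samples x and w is expressed as

$$\operatorname{Cov}\left[ {\varvec{\delta}\left( \varvec{x} \right),\varvec{\delta}\left( \varvec{w} \right)} \right] = \sigma^{2} R\left( {\varvec{x},\varvec{w}} \right) \tag{4}$$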
where σ2 is the variance of the process. The auto-correlation function \(R\left( {\varvec{x},\varvec{w}} \right)\) can take several forms, such as the cubic, exponential and Gaussian correlation functions. The Gaussian correlation function is employed in this paper and is defined in Eq. (5).

$$R\left( {\varvec{x},\varvec{w}} \right) = \prod\limits_{i = 1}^{n_{x}} \exp\left[ { - \theta_{i} \left( {x_{i} - w_{i} } \right)^{2} } \right] \tag{5}$$
where \(n_{x}\) is the dimension of the design variables, and \(x_{i}\) and \(w_{i}\) denote the ith components of x and w, respectively. \(\theta_{i}\) is the ith correlation parameter, which gives the Kriging model high flexibility.

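\(\varvec{X} = \left[ {\varvec{x}_{1} ,\varvec{x}_{2} , \ldots ,\varvec{x}_{{N_{d} }} } \right]^{T} \left( {\varvec{x}_{i} \in R^{n} } \right)\) and \(\varvec{Z} = \left[ {G\left( {\varvec{x}_{1} } \right),G\left( {\varvec{x}_{2} } \right), \ldots ,G\left( {\varvec{x}_{{N_{d} }} } \right)} \right]^{T} \left( {G\left( {\varvec{x}_{i} } \right) \in R} \right)\) denote the \(N_{d}\) experimental samples in the DoE and their true responses, respectively. Define the correlation matrix \(\varvec{R} = \left[ {R\left( {\varvec{x}_{i} ,\varvec{x}_{j} } \right)} \right]_{{N_{d} \times N_{d} }}\), and let \(\varvec{F}\) be an \(N_{d} \times 1\) vector of ones. The estimators \(\widehat{\beta }\) and \(\widehat{\sigma }^{2}\) of the unknown parameters β and σ2 are then obtained by the generalized least squares method:

$$\widehat{\beta } = \left( {\varvec{F}^{T} \varvec{R}^{ - 1} \varvec{F}} \right)^{ - 1} \varvec{F}^{T} \varvec{R}^{ - 1} \varvec{Z} \tag{6}$$

$$\widehat{\sigma }^{2} = \frac{1}{{N_{d} }}\left( {\varvec{Z} - \widehat{\beta }\varvec{F}} \right)^{T} \varvec{R}^{ - 1} \left( {\varvec{Z} - \widehat{\beta }\varvec{F}} \right) \tag{7}$$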
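The regression coefficient β and the process variance σ2 depend on the correlation parameters θ through the correlation matrix \(\varvec{R}\). Therefore, θ must first be determined by maximum likelihood estimation, Eq. (8).

$$\varvec{\theta} = \arg \mathop {\max }\limits_{\varvec{\theta} > 0} \left[ { - \frac{1}{2}\left( {N_{d} \ln \widehat{\sigma }^{2} + \ln \left| \varvec{R} \right|} \right)} \right] \tag{8}$$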
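Once θ is determined and β and σ2 are estimated, the response and the Kriging variance at a prediction point \(\varvec{x}_{p}\) can be computed. The best linear unbiased predictor \(\widehat{G}\left( {\varvec{x}_{p} } \right)\) is given by Eq. (9) and the Kriging variance \(\widehat{\sigma }_{{\widehat{G}}}^{2} \left( {\varvec{x}_{p} } \right)\) by Eq. (10).

$$\widehat{G}\left( {\varvec{x}_{p} } \right) = \widehat{\beta } + \varvec{r}\left( {\varvec{x}_{p} } \right)^{T} \varvec{R}^{ - 1} \left( {\varvec{Z} - \widehat{\beta }\varvec{F}} \right) \tag{9}$$

$$\widehat{\sigma }_{{\widehat{G}}}^{2} \left( {\varvec{x}_{p} } \right) = \widehat{\sigma }^{2} \left[ {1 + \varvec{u}\left( {\varvec{x}_{p} } \right)^{T} \left( {\varvec{F}^{T} \varvec{R}^{ - 1} \varvec{F}} \right)^{ - 1} \varvec{u}\left( {\varvec{x}_{p} } \right) - \varvec{r}\left( {\varvec{x}_{p} } \right)^{T} \varvec{R}^{ - 1} \varvec{r}\left( {\varvec{x}_{p} } \right)} \right] \tag{10}$$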
where \(\varvec{r}\left( {\varvec{x}_{p} } \right) = \left[ {R\left( {\varvec{x}_{p} ,\varvec{x}_{1} } \right),R\left( {\varvec{x}_{p} ,\varvec{x}_{2} } \right), \ldots ,R\left( {\varvec{x}_{p} ,\varvec{x}_{{N_{d} }} } \right)} \right]^{T}\) is an \(N_{d}\)-dimensional vector. It represents the correlation between the prediction point \(\varvec{x}_{p}\) and each sample in \(\varvec{X}\). The remaining term is \(\varvec{u}\left( {\varvec{x}_{p} } \right) = \varvec{F}^{T} \varvec{R}^{ - 1} \varvec{r}\left( {\varvec{x}_{p} } \right) - 1\).

The predicted response \(\widehat{G}\left( {\varvec{x}_{p} } \right)\) and the Kriging variance \(\widehat{\sigma }_{{\widehat{G}}}^{2} \left( {\varvec{x}_{p} } \right)\) can be computed with the MATLAB DACE toolbox [52], which has been applied in several studies [53, 54].
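As a rough cross-check of Eqs. (5)–(10), the following NumPy sketch implements ordinary Kriging with the Gaussian correlation function. It is not the DACE toolbox and should be read as an illustration under simplifying assumptions:

- a single isotropic θ is chosen from a hypothetical grid by the likelihood of Eq. (8), instead of a full multi-dimensional optimization;
- a tiny nugget is added for numerical stability;
- an explicit matrix inverse is used for readability, where a Cholesky factorization would normally be preferred.

```python
import numpy as np


def gauss_corr(A, B, theta):
    """Gaussian correlation of Eq. (5) between every row of A and every row of B."""
    diff = A[:, None, :] - B[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=2))


class OrdinaryKriging:
    """Ordinary Kriging with a constant trend, following Eqs. (6), (7), (9) and (10)."""

    def __init__(self, X, Z, theta, nugget=1e-10):
        self.X = np.atleast_2d(np.asarray(X, dtype=float))
        self.Z = np.asarray(Z, dtype=float)
        self.theta = np.asarray(theta, dtype=float)
        n = self.X.shape[0]
        self.R = gauss_corr(self.X, self.X, self.theta) + nugget * np.eye(n)
        self.Rinv = np.linalg.inv(self.R)          # explicit inverse kept for readability
        self.F = np.ones(n)                        # N_d x 1 vector of ones
        self.FtRinvF = self.F @ self.Rinv @ self.F
        self.beta = (self.F @ self.Rinv @ self.Z) / self.FtRinvF     # Eq. (6)
        resid = self.Z - self.beta * self.F
        self.sigma2 = (resid @ self.Rinv @ resid) / n                # Eq. (7)
        self.gamma = self.Rinv @ resid

    def log_likelihood(self):
        """Concentrated log-likelihood that Eq. (8) maximises over theta."""
        _, logdet = np.linalg.slogdet(self.R)
        return -0.5 * (len(self.Z) * np.log(self.sigma2) + logdet)

    def predict(self, Xp):
        """Return the predictor of Eq. (9) and the Kriging variance of Eq. (10)."""
        Xp = np.atleast_2d(np.asarray(Xp, dtype=float))
        r = gauss_corr(Xp, self.X, self.theta)                 # each row is r(x_p)^T
        mean = self.beta + r @ self.gamma                      # Eq. (9)
        u = r @ self.Rinv @ self.F - 1.0                       # u(x_p) for every x_p
        rRr = np.einsum("ij,jk,ik->i", r, self.Rinv, r)
        var = self.sigma2 * (1.0 + u**2 / self.FtRinvF - rRr)  # Eq. (10)
        return mean, np.maximum(var, 0.0)


def fit_isotropic(X, Z, grid=np.logspace(1.0, 2.5, 16)):
    """Crude version of Eq. (8): pick one common theta from a grid by maximum likelihood."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    models = [OrdinaryKriging(X, Z, np.full(X.shape[1], t)) for t in grid]
    return max(models, key=lambda m: m.log_likelihood())


X = np.linspace(0.0, 1.0, 6).reshape(-1, 1)
Z = np.sin(2.0 * np.pi * X[:, 0])
model = fit_isotropic(X, Z)
mean, var = model.predict([[0.5], [0.7]])
```

Because Kriging interpolates, the predictor reproduces the training responses and the Kriging variance vanishes at the training samples. This property is what the misclassification-based learning functions later in the paper rely on.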

2.2 Markov chain Monte Carlo

Markov Chain Monte Carlo (MCMC) simulation is a powerful approach for sampling from an arbitrary probability distribution. In MCMC, samples are simulated as the states of a Markov chain whose limiting stationary distribution is the target distribution, under the ergodicity assumption. The original Metropolis–Hastings (M–H) algorithm becomes inefficient when the sample vector has many independent components. Au and Beck [10] therefore proposed a component-wise (modified M–H) algorithm to improve the sampling efficiency of MCMC. Instead of using an n-dimensional proposal PDF as in the original method, each coordinate \(\xi_{j}\) of the pre-candidate \(\xi\) is generated from a one-dimensional proposal PDF \(p_{j}^{ *} \left( {\xi_{j} |x_{j} } \right)\), which depends on the jth coordinate \(x_{j}\) of the current state. The proposal PDF is symmetric, i.e. \(p_{j}^{*} \left( {\xi |x} \right) = p_{j}^{*} \left( {x |\xi } \right)\).

In this paper, MCMC simulation with the component-wise algorithm is employed to generate \(N_{m}\) samples that lie in the required safety region. Starting from a given or randomly generated sample \(\varvec{x}_{1}\), the sequence \(\left\{ {\varvec{x}_{1} ,\varvec{x}_{2} , \ldots ,\varvec{x}_{{N_{m} }} } \right\}\) is generated as follows [55, 56]:

(1) Generate candidate sample \(\widetilde{\varvec{x}}\). Each component \(j = 1,2, \ldots ,n\) is treated as follows.

(a) Generate \(\xi_{j}\) by sampling from the proposal PDF \(p_{j}^{*} \left( {\xi_{j} |x_{kj} } \right)\).

In the Markov chain, the transition from one state to the next is controlled by the proposal distribution. In this paper, a uniform distribution on the interval \([x_{kj} - l_{j} /2,x_{kj} + l_{j} /2]\) is selected as the proposal distribution for each component. The pre-candidate \(\varvec{\xi}\) is therefore uniformly distributed in an n-dimensional hypercube centred at the current sample, that is,

$$p_{j}^{*} \left( {\xi_{j} |x_{kj} } \right) = \begin{cases} 1/l_{j} , & \text{if } \left| {\xi_{j} - x_{kj} } \right| \le l_{j} /2 \\ 0, & \text{otherwise} \end{cases} \tag{11}$$

where \(\xi_{j}\) and \(x_{kj}\) are the jth components of the n-dimensional vector \(\varvec{\xi}\) and of the kth sample \(\varvec{x}_{k}\) of the chain, respectively. \(l_{j}\) is the side length of the hypercube along the jth coordinate. It determines the maximum allowable deviation of the next sample from the current one, and it takes the empirical value \(6N_{m}^{{ - 1/\left( {n + 4} \right)}}\).

(b) Accept or reject \(\xi_{j}\):

$$\widetilde{x}_{j} = \begin{cases} \xi_{j} , & \text{with probability } \min \left\{ {1,\dfrac{{q_{j} \left( {\xi_{j} } \right)}}{{q_{j} \left( {x_{kj} } \right)}}} \right\} \\ x_{kj} , & \text{with probability } 1 - \min \left\{ {1,\dfrac{{q_{j} \left( {\xi_{j} } \right)}}{{q_{j} \left( {x_{kj} } \right)}}} \right\} \end{cases} \tag{12}$$

where \(q_{j} \left( \cdot \right)\) is the marginal PDF of the jth coordinate of the limiting stationary distribution q(x) of the Markov chain.

(2) Accept or reject \(\widetilde{\varvec{x}}\):

$$\varvec{x}_{k + 1} = \begin{cases} \widetilde{\varvec{x}}, & \widetilde{\varvec{x}} \in \varvec{D}_{\varvec{S}} \\ \varvec{x}_{k} , & \widetilde{\varvec{x}} \notin \varvec{D}_{\varvec{S}} \end{cases} \tag{13}$$

where \(\varvec{D}_{\varvec{S}}\) is the required safety region. Equation (13) states that the next sample \(\varvec{x}_{k + 1}\) is set to the candidate sample \(\widetilde{\varvec{x}}\) if \(\widetilde{\varvec{x}}\) lies in \(\varvec{D}_{\varvec{S}}\), and to the current sample \(\varvec{x}_{k}\) otherwise.

Here, only a brief introduction to MCMC simulation is provided. For more details, the reader may refer to the references [10, 56].
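The sampling steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' code, and it relies on these assumptions:

- the stationary distribution q(x) has independent components, supplied through a hypothetical per-coordinate log-density `log_q`;
- the safety region \(\varvec{D}_{\varvec{S}}\) is given as a hypothetical Boolean test `in_safe_region`;
- the starting sample already lies in \(\varvec{D}_{\varvec{S}}\).

The demonstration uses a standard normal q and a hypothetical linear safety region x1 + x2 < 3.

```python
import numpy as np


def componentwise_mcmc(x1, log_q, in_safe_region, n_samples, l=None, seed=0):
    """Component-wise (modified Metropolis-Hastings) chain restricted to D_S.

    x1: starting sample, assumed to lie inside D_S.
    log_q: returns the per-coordinate log marginal densities for a 1-D array.
    in_safe_region: returns True if a sample lies in D_S.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x1, dtype=float)
    n = x.size
    if l is None:
        l = np.full(n, 6.0 * n_samples ** (-1.0 / (n + 4)))   # empirical side length l_j
    chain = [x.copy()]
    while len(chain) < n_samples:
        xi = x + rng.uniform(-0.5, 0.5, size=n) * l            # Eq. (11): uniform proposal
        ratio = np.exp(np.minimum(log_q(xi) - log_q(x), 0.0))  # min{1, q_j(xi_j)/q_j(x_kj)}
        accept = rng.uniform(size=n) < ratio                   # Eq. (12), per coordinate
        candidate = np.where(accept, xi, x)
        x = candidate if in_safe_region(candidate) else x     # Eq. (13)
        chain.append(x.copy())
    return np.array(chain)


def safe(x):
    """Hypothetical safety region D_S: 3 - x1 - x2 > 0."""
    return 3.0 - x[0] - x[1] > 0.0


samples = componentwise_mcmc(np.zeros(2), lambda v: -0.5 * v**2, safe, n_samples=2000)
```

Rejected candidates repeat the current sample, as Eq. (13) prescribes. Successive samples are therefore correlated, and the chain stays inside the safety region by construction.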

2.3 Importance sampling for reliability assessment

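In general, the failure probability \(P_{F}\) can be expressed as follows [8, 57]:

$$P_{F} = \int_{{R^{n} }} {I_{F} \left( \varvec{x} \right)f_{\varvec{x}} \left( \varvec{x} \right){\text{d}}\varvec{x}} \tag{14}$$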
where \(\varvec{x}\) denotes the basic input variables and \(G\left( \varvec{x} \right)\) is the performance function of the constraint. \(R^{n}\) is the n-dimensional variable space. \(I_{F}\) is the indicator function, with \(I_{F} \left( \varvec{x} \right) = 1\) when \(G\left( \varvec{x} \right) \le 0\) and \(I_{F} \left( \varvec{x} \right) = 0\) when \(G\left( \varvec{x} \right) > 0\). Finally, \(f_{\varvec{x}} \left( \varvec{x} \right)\) is the joint probability density function of the basic variables.

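The basic principle of importance sampling is to shift the sampling center towards the failure region so that information is gained more efficiently. By introducing the importance sampling density function \(h_{\varvec{x}} \left( \varvec{x} \right)\), Eq. (14) can be rewritten as Eq. (15):

$$P_{F} = \int_{{R^{n} }} {I_{F} \left( \varvec{x} \right)\frac{{f_{\varvec{x}} \left( \varvec{x} \right)}}{{h_{\varvec{x}} \left( \varvec{x} \right)}}h_{\varvec{x}} \left( \varvec{x} \right){\text{d}}\varvec{x}} \approx \frac{1}{N}\sum\limits_{i = 1}^{N} {I_{F} \left( {\varvec{x}_{i} } \right)\frac{{f_{\varvec{x}} \left( {\varvec{x}_{i} } \right)}}{{h_{\varvec{x}} \left( {\varvec{x}_{i} } \right)}}} \tag{15}$$

where the N samples \(\varvec{x}_{i}\) are drawn from \(h_{\varvec{x}} \left( \varvec{x} \right)\).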
In this paper, the sampling density function is obtained by moving the sampling center to the design point (i.e. the most probable failure point), which is found by a simulation-based method.
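A minimal importance sampling sketch of Eq. (15) is given below. It makes two simplifications:

- it works in standard normal space;
- it uses a hypothetical linear limit state whose design point is known in closed form, instead of locating that point by simulation as the paper does.

The sampling density h is a unit-variance normal centred at the design point, and the exact value Φ(−β) serves as a check. Names are illustrative only.

```python
import math

import numpy as np


def is_failure_probability(g, design_point, n_samples=20_000, seed=0):
    """Importance-sampling estimate of Eq. (15) in standard normal space.

    f is the standard normal joint PDF; h is a unit-variance normal centred at
    design_point. The common normalising constants of f and h cancel in f/h.
    """
    rng = np.random.default_rng(seed)
    centre = np.asarray(design_point, dtype=float)
    u = rng.standard_normal((n_samples, centre.size)) + centre   # samples from h
    log_f = -0.5 * np.sum(u**2, axis=1)
    log_h = -0.5 * np.sum((u - centre) ** 2, axis=1)
    indicator = g(u) <= 0.0                                      # I_F of Eq. (14)
    return np.mean(indicator * np.exp(log_f - log_h))


beta = 3.5
alpha = np.array([0.6, 0.8])                  # unit normal of the hypothetical limit state


def g(u):
    """Hypothetical linear limit state G(u) = beta - alpha.u, exact P_F = Phi(-beta)."""
    return beta - u @ alpha


pf_is = is_failure_probability(g, design_point=beta * alpha)
pf_exact = 0.5 * math.erfc(beta / math.sqrt(2.0))
```

With only 20,000 samples the estimate lands within a few percent of Φ(−3.5) ≈ 2.3 × 10⁻⁴. Crude MCS would observe only about five failures with the same budget.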

2.4 Sequential optimization and reliability assessment

The sequential optimization and reliability assessment (SORA) method was proposed to decouple the double-loop process of conventional RBDO [3]. The flowchart of the SORA approach is shown in Fig. 1.

In SORA, deterministic optimization and reliability assessment are performed sequentially in each cycle. The PMA method is used to estimate the MPP \(\varvec{x}_{j}^{{\varvec{*}\left( k \right)}}\) of the jth constraint at the design variables \(\varvec{d}^{\left( k \right)}\) of the kth cycle. A shift vector \(\varvec{s}_{j}^{\left( k \right)}\) is then calculated as follows:

$$\varvec{s}_{j}^{\left( k \right)} = \varvec{d}^{\left( k \right)} - \varvec{x}_{j}^{{*\left( k \right)}} \tag{16}$$
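The limit-state surface of each probabilistic constraint is shifted toward the feasible region by the shift vector \(\varvec{s}_{j}^{\left( k \right)}\). Deterministic optimization is then performed with the shifted constraints to find the new design variables:

$$\begin{aligned} &\text{find } \varvec{d}^{\left( k + 1 \right)} \\ &\min\; C\left( \varvec{d} \right) \\ &\text{s.t. } G_{j}\left( \varvec{d} - \varvec{s}_{j}^{\left( k \right)} \right) \ge 0,\quad j = 1,2, \ldots ,n_{y} \\ &\qquad \varvec{d}_{\varvec{L}} \le \varvec{d} \le \varvec{d}_{\varvec{U}} \end{aligned} \tag{17}$$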
The process continues until the convergence condition is satisfied. In this way, SORA uses the reliability information from the previous cycle to shift the violated deterministic constraints into the feasible domain.
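One SORA loop in the form of Eqs. (16) and (17) can be sketched as below. The sketch makes the following assumptions:

- the random variables are independent and normal, and their means are the design variables;
- the PMA inverse MPP is found with the advanced mean value (AMV) iteration using finite-difference gradients;
- the deterministic subproblem is solved with SciPy's SLSQP, not the Genetic Algorithm used later in this paper.

The test problem is hypothetical: minimize d1² + d2² subject to the constraint G = x1 + x2 − 5 with σ = 0.3 and target β = 3. Its closed-form optimum is d1 = d2 = (5 + 0.9√2)/2 ≈ 3.1364.

```python
import numpy as np
from scipy.optimize import minimize


def inverse_mpp(g, d, sigma, beta_t, n_iter=50, h=1e-6, tol=1e-8):
    """PMA inverse-MPP search with the AMV iteration; x = d + sigma * u."""
    gu = lambda u: g(d + sigma * u)
    u = np.zeros_like(d)
    for _ in range(n_iter):
        # central finite-difference gradient of G in standard normal space
        grad = np.array([(gu(u + h * e) - gu(u - h * e)) / (2 * h) for e in np.eye(d.size)])
        u_new = -beta_t * grad / np.linalg.norm(grad)   # failure side is G <= 0
        converged = np.linalg.norm(u_new - u) < tol
        u = u_new
        if converged:
            break
    return d + sigma * u


def sora(cost, constraints, d0, sigma, beta_t, bounds, max_cycles=20, tol=1e-4):
    d = np.asarray(d0, dtype=float)
    shifts = [np.zeros_like(d) for _ in constraints]
    for _ in range(max_cycles):
        # Eq. (17): deterministic optimization with shifted constraints G_j(d - s_j) >= 0
        cons = [{"type": "ineq", "fun": (lambda dd, g=g, s=s: g(dd - s))}
                for g, s in zip(constraints, shifts)]
        d_new = minimize(cost, d, method="SLSQP", bounds=bounds, constraints=cons).x
        # Eq. (16): shift vectors from the inverse MPPs at the new design
        shifts = [d_new - inverse_mpp(g, d_new, sigma, beta_t) for g in constraints]
        if np.linalg.norm(d_new - d) < tol:
            return d_new
        d = d_new
    return d


d_opt = sora(lambda d: d @ d, [lambda x: x[0] + x[1] - 5.0], d0=[5.0, 5.0],
             sigma=np.array([0.3, 0.3]), beta_t=3.0, bounds=[(0.0, 10.0)] * 2)
```

For this linear constraint, the shift vector is the same at every design. The loop therefore settles after the second deterministic optimization, and the third cycle only confirms convergence.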

3 The proposed RBDO method

3.1 Basic principles

The proposed method can be viewed as an improvement of the sequential optimization and reliability assessment (SORA) method based on surrogate models and importance sampling. It constructs surrogate models of the constraint functions and the objective function of the original RBDO problem and uses them to replace the original functions. The surrogate model-based RBDO problem is then solved by the Importance Sampling-based modified Sequential Optimization and Reliability Assessment (IS-based modified SORA) method proposed in this paper.

As shown in Fig. 2, the proposed method can be divided into three phases:

1. constructing surrogate models of the constraint functions;
2. constructing a surrogate model of the objective function;
3. solving the RBDO problem by the IS-based modified SORA method.

In the first phase, the surrogate models are constructed to approximate the classification boundaries of the constraint functions with an active learning mechanism, which gives them high classification accuracy. In the second phase, a large number of samples are generated in the safety region by the MCMC method described above, using the constructed surrogate models of the constraint functions. An initial surrogate model of the objective function is then constructed and sequentially updated with another active learning mechanism until the accuracy requirements are satisfied. After the first two phases are completed, the objective function and constraint functions of the original RBDO problem are replaced by the corresponding surrogate models. Finally, the third phase is executed with the IS-based modified SORA method. It decouples the RBDO problem into a sequence of deterministic optimization problems, which are solved by a Genetic Algorithm (GA).

Detailed computational steps of each stage in the proposed method are explained in Sects. 3.2, 3.3 and 3.4, respectively.

3.2 Phase 1: constructing surrogate models of constraint functions

To further reduce the number of calls to the constraint functions and the objective function during RBDO, surrogate models are constructed by an approximation technique. These surrogate models then replace the constraint functions and the objective function of the original RBDO problem, respectively. The Kriging model, one of the most popular surrogate models, is used in this paper.

In this section, ‘Phase 1’ of the proposed method, which constructs the surrogate models of the constraint functions, is described in the following steps. The flowchart of ‘Phase 1’ is shown in Fig. 2.

- Step 1.1:

Transform design space. To eliminate the influence of variable magnitudes, the first step transforms the following quantities:

- the design variables, i.e. the design space \(\left[ {\varvec{d}_{\varvec{L}} ,\varvec{d}_{\varvec{U}} } \right]\);
- the constraint functions \(G_{j} \left( \varvec{x} \right)\left( {j = 1,2, \ldots ,n_{y} } \right)\);
- the objective function \(C\left( \varvec{x} \right)\).

In reliability optimization problems, the design interval of each variable is usually a closed interval that can be determined directly. A half-open or open range can be bounded using the “6σ criterion”. For simplicity, we assume that the design interval of each variable is a closed interval. The design space \(\left[ {\varvec{d}_{\varvec{L}} ,\varvec{d}_{\varvec{U}} } \right]\) is mapped to \(\left[ {0,1} \right]^{{n_{x} }}\) (\(n_{x}\) is the number of random variables), and the transformation between \(\varvec{y} \in \left[ {0,1} \right]^{{n_{x} }}\) and \(\varvec{x} \in \left[ {\varvec{d}_{\varvec{L}} ,\varvec{d}_{\varvec{U}} } \right]\) is given by Eq. (18). The constraint functions \(G_{j} \left( \varvec{x} \right)\) are then expressed as functions \({\text{g}}_{j} \left( \varvec{y} \right)\left( {j = 1,2, \ldots ,n_{y} } \right)\) of the normalized variables by Eq. (19). Likewise, the objective function \(C\left( \varvec{x} \right)\) is expressed as the function \(c\left( \varvec{y} \right)\) by Eq. (20):

$$\varvec{y} = \frac{{\varvec{x} - \varvec{d}_{\varvec{L}} }}{{\varvec{d}_{\varvec{U}} - \varvec{d}_{\varvec{L}} }} \tag{18}$$

$$G_{j} \left( \varvec{x} \right) = G_{j} \left( {\varvec{y}\left( {\varvec{d}_{\varvec{U}} - \varvec{d}_{\varvec{L}} } \right) + \varvec{d}_{\varvec{L}} } \right) = {\text{g}}_{j} \left( \varvec{y} \right) \tag{19}$$

$$C\left( \varvec{x} \right) = C\left( {\varvec{y}\left( {\varvec{d}_{\varvec{U}} - \varvec{d}_{\varvec{L}} } \right) + \varvec{d}_{\varvec{L}} } \right) = c\left( \varvec{y} \right) \tag{20}$$

where the division in Eq. (18) and the products in Eqs. (19) and (20) are taken component-wise.

- Step 1.2:

Generate uniform sample set. The surrogate models \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\) of the constraint functions \({\text{g}}_{j} \left( \varvec{y} \right)\) should have high classification accuracy in reliability analysis. The training samples used to construct them should therefore be updated to improve their accuracy. To this end, a uniform sample set, denoted \(\varvec{Y}_{U}\), is adopted to capture the global classification capability of the models and to update the training samples.

In this paper, the Halton sequence [58], one of the available uniform sampling approaches, is employed to generate \(N_{us}\) uniform samples forming the set \(\varvec{Y}_{U} = \left[ {\varvec{y}_{U}^{1} ,\varvec{y}_{U}^{2} , \ldots ,\varvec{y}_{U}^{{N_{us} }} } \right]^{T}\) in \(\left[ {0,1} \right]^{{n_{x} }}\). The recommended value of \(N_{us}\) is \(\left[ {a\sqrt {n_{x} } } \right]\), where the symbol ‘\([ \cdot ]\)’ denotes rounding up to the nearest integer. Since the diagonal of \(\left[ {0,1} \right]^{{n_{x} }}\) has length \(\sqrt {n_{x} }\), this places on average \(a\) samples per unit length of the diagonal. Because evaluating the surrogate models of the constraint functions is inexpensive, \(a\) can be set to a large value; \(a = 10^{5}\) is sufficient for accurate results in the proposed method.
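Steps 1.1 and 1.2 can be sketched as follows. The sketch assumes SciPy's `scipy.stats.qmc.Halton` generator (unscrambled) for the uniform set and uses hypothetical helper names. The demonstration takes a = 1000 instead of 10^5 only to keep it fast, together with a hypothetical two-variable constraint.

```python
import math

import numpy as np
from scipy.stats import qmc


def to_unit(x, d_L, d_U):
    """Eq. (18): map x in [d_L, d_U] to y in [0, 1]^n_x, component-wise."""
    return (np.asarray(x, dtype=float) - d_L) / (d_U - d_L)


def from_unit(y, d_L, d_U):
    """Inverse map used in Eqs. (19)-(20): x = y (d_U - d_L) + d_L."""
    return np.asarray(y, dtype=float) * (d_U - d_L) + d_L


def wrap(fun, d_L, d_U):
    """Turn a function of x into the function of y, e.g. g_j(y) = G_j(x(y))."""
    return lambda y: fun(from_unit(y, d_L, d_U))


def halton_set(n_x, a=1e5):
    """Step 1.2: N_us = ceil(a * sqrt(n_x)) Halton points in [0, 1]^n_x."""
    n_us = math.ceil(a * math.sqrt(n_x))
    return qmc.Halton(d=n_x, scramble=False).random(n_us)


d_L = np.array([0.0, 1.0])
d_U = np.array([10.0, 5.0])


def G(x):
    """Hypothetical constraint function G(x) = x1 * x2 - 20."""
    return x[..., 0] * x[..., 1] - 20.0


g = wrap(G, d_L, d_U)
Y_U = halton_set(2, a=1000)       # ceil(1000 * sqrt(2)) = 1415 samples
x = np.array([4.0, 3.0])
```

Evaluating the surrogate models on `Y_U` is cheap, so the large default a = 10^5 mainly costs memory rather than calls to the original constraint functions.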

- Step 1.3:

Construct initial Design of Experiment (DoE) for constraint functions. An initial DoE is required to construct the initial surrogate models of the constraint functions, and z is set to 1. To ensure the space-filling property of the initial samples, the maximin (maximum minimum distance) criterion is adopted to sequentially select \(N_{d}\) samples from \(\varvec{Y}_{U}\). The size of the initial DoE is \(N_{d} = \left[ {b\sqrt {n_{x} } } \right]\), where \(b\) can generally be chosen between 6 and 12. It is taken as 6 in this paper, and a larger value can be used for a more accurate initial surrogate model. After the \(N_{d}\) samples are selected, the functions \({\text{g}}_{j} \left( \varvec{y} \right)\left( {j = 1,2, \ldots ,n_{y} } \right)\) are called to compute the values of the corresponding constraint functions \(G_{j} \left( \varvec{x} \right)\left( {j = 1,2, \ldots ,n_{y} } \right)\). The initial DoE \(\varvec{S}\) can be expressed as follows.

$$\varvec{S} = \begin{bmatrix} \varvec{y}_{S}^{1} & {\text{g}}_{1} \left( {\varvec{y}_{S}^{1} } \right) & {\text{g}}_{2} \left( {\varvec{y}_{S}^{1} } \right) & \cdots & {\text{g}}_{{n_{y} }} \left( {\varvec{y}_{S}^{1} } \right) \\ \varvec{y}_{S}^{2} & {\text{g}}_{1} \left( {\varvec{y}_{S}^{2} } \right) & {\text{g}}_{2} \left( {\varvec{y}_{S}^{2} } \right) & \cdots & {\text{g}}_{{n_{y} }} \left( {\varvec{y}_{S}^{2} } \right) \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ \varvec{y}_{S}^{{N_{d} }} & {\text{g}}_{1} \left( {\varvec{y}_{S}^{{N_{d} }} } \right) & {\text{g}}_{2} \left( {\varvec{y}_{S}^{{N_{d} }} } \right) & \cdots & {\text{g}}_{{n_{y} }} \left( {\varvec{y}_{S}^{{N_{d} }} } \right) \end{bmatrix} \tag{21}$$

- Step 1.4:

Build or rebuild Kriging models for constraint functions. In this step, the surrogate model \(\widehat{g}_{j} \left( \varvec{y} \right)\) is constructed or reconstructed from the current DoE \(\varvec{S}_{j}\), given in Eq. (22). \(\varvec{S}_{j}\) is the DoE for the jth function \({\text{g}}_{j} \left( \varvec{y} \right)\), which corresponds to the jth constraint function \(G_{j} \left( \varvec{x} \right)\).

$$\varvec{S}_{j} = \begin{bmatrix} \varvec{y}_{S}^{1} & {\text{g}}_{j} \left( {\varvec{y}_{S}^{1} } \right) \\ \varvec{y}_{S}^{2} & {\text{g}}_{j} \left( {\varvec{y}_{S}^{2} } \right) \\ \vdots & \vdots \\ \varvec{y}_{S}^{{N_{d} }} & {\text{g}}_{j} \left( {\varvec{y}_{S}^{{N_{d} }} } \right) \end{bmatrix} \tag{22}$$

- Step 1.5:

Implement active learning process and identify the optimal next sample. For adaptive surrogate model methods, a main concern is where to place the new training sample in each iteration. A learning strategy is therefore needed to select the optimal new training samples while the surrogate models are constructed adaptively. In this paper, a learning strategy for the constraint functions selects new training samples that have two properties: they lie far from the existing training samples, and they have a high probability of being misclassified.

To keep the next sample far from the existing training samples, the Euclidean distance \(d_{i}^{j}\) between the ith sample \(\varvec{y}_{U}^{i}\) in \(\varvec{Y}_{U}\) and the jth sample \(\varvec{y}_{S}^{j}\) in \(\varvec{S}\) is calculated by Eq. (23). This gives the distance matrix \(\varvec{D}\) in Eq. (24). Let \(D_{ \hbox{min} }^{i}\) be the minimum value of the ith row of \(\varvec{D}\), i.e. the distance from \(\varvec{y}_{U}^{i}\) to its nearest existing sample in \(\varvec{S}\). \(D_{ \hbox{min} }^{i}\) is calculated by Eq. (25), and the minimum distance vector \(\varvec{D}_{ \hbox{min} }\) is given in Eq. (26).

$$d_{i}^{j} = \parallel \varvec{y}_{U}^{i} - \varvec{y}_{S}^{j} \parallel ,$$ (23)

$$\varvec{D} = \left[ \begin{array}{cccc} d_{1}^{1} & d_{1}^{2} & \cdots & d_{1}^{N_{d}} \\ d_{2}^{1} & d_{2}^{2} & \cdots & d_{2}^{N_{d}} \\ \vdots & \vdots & \ddots & \vdots \\ d_{N_{us}}^{1} & d_{N_{us}}^{2} & \cdots & d_{N_{us}}^{N_{d}} \end{array} \right],$$ (24)

$$D_{\min}^{i} = \min \left[ \varvec{D}\left( i,: \right) \right], \quad i = 1,2, \ldots ,N_{us} ,$$ (25)

$$\varvec{D}_{\min} = \left[ D_{\min}^{1}, D_{\min}^{2}, \ldots, D_{\min}^{N_{us}} \right]^{T} ,$$ (26)

After \(\varvec{D}_{\min}\) is obtained, the samples in \(\varvec{Y}_{U}\) are evaluated with each \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\) to obtain the predicted response \(\widehat{\text{g}}_{j} \left( \varvec{y}_{U}^{i} \right)\) and the Kriging standard deviation \(\widehat{\sigma }_{\widehat{\text{g}}_{j}} \left( \varvec{y}_{U}^{i} \right)\). The results are stored in \(\varvec{Y}_{P}\) and \(\varvec{\sigma}_{P}\), given in Eqs. (27) and (28), respectively, where \(y_{j}^{i}\) and \(\sigma_{j}^{i}\) denote the predicted response and the standard deviation of the jth surrogate \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\) at the ith sample \(\varvec{y}_{U}^{i}\) in \(\varvec{Y}_{U}\). The misclassification probability \(p_{m}^{ij}\) of \(\varvec{y}_{U}^{i}\) with respect to \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\) is then calculated by Eq. (29) [50, 54], which gives the misclassification probability matrix \(\varvec{P}_{m}\) in Eq. (30).

$$\varvec{Y}_{P} = \left[ \begin{array}{cccc} y_{1}^{1} & y_{2}^{1} & \cdots & y_{n_{y}}^{1} \\ y_{1}^{2} & y_{2}^{2} & \cdots & y_{n_{y}}^{2} \\ \vdots & \vdots & \ddots & \vdots \\ y_{1}^{N_{us}} & y_{2}^{N_{us}} & \cdots & y_{n_{y}}^{N_{us}} \end{array} \right],$$ (27)

$$\varvec{\sigma}_{P} = \left[ \begin{array}{cccc} \sigma_{1}^{1} & \sigma_{2}^{1} & \cdots & \sigma_{n_{y}}^{1} \\ \sigma_{1}^{2} & \sigma_{2}^{2} & \cdots & \sigma_{n_{y}}^{2} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{1}^{N_{us}} & \sigma_{2}^{N_{us}} & \cdots & \sigma_{n_{y}}^{N_{us}} \end{array} \right],$$ (28)

$$p_{m}^{ij} \left( \varvec{y}_{U}^{i} \right) = \Phi \left( - \frac{\left| y_{j}^{i} \right|}{\sigma_{j}^{i}} \right),$$ (29)

$$\varvec{P}_{m} = \left[ \begin{array}{cccc} p_{m}^{11} & p_{m}^{12} & \cdots & p_{m}^{1n_{y}} \\ p_{m}^{21} & p_{m}^{22} & \cdots & p_{m}^{2n_{y}} \\ \vdots & \vdots & \ddots & \vdots \\ p_{m}^{N_{us}1} & p_{m}^{N_{us}2} & \cdots & p_{m}^{N_{us}n_{y}} \end{array} \right],$$ (30)

- Step 1.6:


To implement this learning strategy, the product \(L_{DP} \left( i,j \right)\) of the minimum distance \(\varvec{D}_{\min} \left( i \right)\) and the misclassification probability \(\varvec{P}_{m} \left( i,j \right)\) is calculated by Eq. (31), which gives the matrix \(\varvec{L}_{DP}\) in Eq. (32). The maximum value \(L_{\max}\) of \(\varvec{L}_{DP}\) is found by Eq. (33), and the element satisfying \(L_{DP} \left( i_{\text{opt}}, j_{\text{opt}} \right) = L_{\max}\) is located. The optimal next sample is then the \(i_{\text{opt}}\)th sample of \(\varvec{Y}_{U}\), denoted \(\varvec{y}_{U}^{i_{\text{opt}}}\). Because the score is the product of a distance and a misclassification probability, this learning strategy favors samples that are both likely to be misclassified and far from the existing training samples.

$$L_{DP} \left( i,j \right) = D_{\min}^{i} p_{m}^{ij} ,$$ (31)

$$\varvec{L}_{DP} = \left[ \begin{array}{cccc} L_{DP}\left( 1,1 \right) & L_{DP}\left( 1,2 \right) & \cdots & L_{DP}\left( 1,n_{y} \right) \\ L_{DP}\left( 2,1 \right) & L_{DP}\left( 2,2 \right) & \cdots & L_{DP}\left( 2,n_{y} \right) \\ \vdots & \vdots & \ddots & \vdots \\ L_{DP}\left( N_{us},1 \right) & L_{DP}\left( N_{us},2 \right) & \cdots & L_{DP}\left( N_{us},n_{y} \right) \end{array} \right],$$ (32)

$$L_{\max} = \max_{i,j} \left[ L_{DP} \left( i,j \right) \right], \quad i = 1,2, \ldots ,N_{us}, \; j = 1,2, \ldots ,n_{y},$$ (33)

- Step 1.7:


Calculate convergence index and judge convergence criteria for Kriging models of constraint functions. Because the DoE and the Kriging models of the constraint functions are updated successively, a stopping criterion is required. To exploit the binary (safe or failed) classification that reliability analysis requires of the constraint functions, a misclassification index between two adjacent iterations is proposed to judge whether the classification boundary of each Kriging model has stabilized.

At the zth iteration, the classification label \(C_{\text{label}}^{\left( z \right)} \left( i,j \right)\) of the ith sample \(\varvec{y}_{U}^{i}\) in \(\varvec{Y}_{U}\), based on its prediction \(y_{j}^{i}\) by the jth Kriging model \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\), is calculated by Eq. (34), with \(C_{\text{label}}^{\left( 0 \right)} \left( i,j \right) = 0\) by assumption. Arranging these labels in the same layout as Eq. (27) gives the classification label matrix \(\varvec{C}_{\text{label}}^{\left( z \right)}\) in Eq. (35). The misclassification index \(\delta_{\text{mis}}^{\left( z \right)} \left( j \right)\), calculated by Eq. (36), measures how much the classification boundary of the jth Kriging model \(\widehat{\text{g}}_{j}\) changes between the (z-1)th and the zth iterations. Since each label is \(\pm 1\), a label flip contributes 2 to the sum, so for \(z \ge 2\) the index equals the fraction of samples in \(\varvec{Y}_{U}\) whose label has changed.

$$C_{\text{label}}^{\left( z \right)} \left( i,j \right) = \begin{cases} 1, & y_{j}^{i} > 0 \\ -1, & y_{j}^{i} \le 0 \end{cases} ,$$ (34)

$$\varvec{C}_{\text{label}}^{\left( z \right)} = \left[ \begin{array}{cccc} C_{\text{label}}^{\left( z \right)}\left( 1,1 \right) & C_{\text{label}}^{\left( z \right)}\left( 1,2 \right) & \cdots & C_{\text{label}}^{\left( z \right)}\left( 1,n_{y} \right) \\ C_{\text{label}}^{\left( z \right)}\left( 2,1 \right) & C_{\text{label}}^{\left( z \right)}\left( 2,2 \right) & \cdots & C_{\text{label}}^{\left( z \right)}\left( 2,n_{y} \right) \\ \vdots & \vdots & \ddots & \vdots \\ C_{\text{label}}^{\left( z \right)}\left( N_{us},1 \right) & C_{\text{label}}^{\left( z \right)}\left( N_{us},2 \right) & \cdots & C_{\text{label}}^{\left( z \right)}\left( N_{us},n_{y} \right) \end{array} \right],$$ (35)

$$\delta_{\text{mis}}^{\left( z \right)} \left( j \right) = \frac{1}{2N_{us}} \sum_{i = 1}^{N_{us}} \left| C_{\text{label}}^{\left( z \right)} \left( i,j \right) - C_{\text{label}}^{\left( z - 1 \right)} \left( i,j \right) \right|,$$ (36)

To ensure that the Kriging model of each constraint function meets the classification-accuracy requirement, the stopping criterion is defined as

$$\delta_{\text{mis}}^{\max} \le \Delta \delta ,$$ (37)

where \(\delta_{\text{mis}}^{\max} = \max_{j} \left[ \delta_{\text{mis}}^{\left( z \right)} \left( j \right) \right]\), \(j = 1,2, \ldots ,n_{y}\), and \(\Delta \delta\) is a small positive tolerance set by the user. To balance accuracy and efficiency, \(\Delta \delta\) can be taken between \(1 \times 10^{-5}\) and \(1 \times 10^{-4}\), with smaller values giving more accurate results. In this paper, \(\Delta \delta = 5 \times 10^{-5}\), which is sufficient to obtain Kriging models of each constraint function with accurate classification at acceptable cost in the proposed method.

If the stopping criterion is satisfied, the active learning process stops and the algorithm proceeds to Phase 2 (Step 2.1); otherwise, it goes to Step 1.8.
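The label and index computations of Eqs. (34) to (37) are simple enough to sketch directly. The following minimal Python sketch assumes that the surrogate predictions at the candidate set \(\varvec{Y}_{U}\) are already available as a nested list laid out like \(\varvec{Y}_{P}\) in Eq. (27) (row i, column j); the function names are illustrative and not taken from the original implementation.

```python
def classification_labels(predictions):
    """Eq. (34): +1 where the surrogate predicts safe (y > 0), -1 otherwise.

    predictions[i][j] is the prediction of the j-th constraint surrogate at the
    i-th candidate sample, i.e. one row of Y_P (Eq. (27)) per candidate.
    """
    return [[1 if y > 0 else -1 for y in row] for row in predictions]


def misclassification_index(labels_now, labels_prev):
    """Eq. (36): per-constraint index; for z >= 2 it is the fraction of flipped labels."""
    n_us = len(labels_now)
    n_y = len(labels_now[0])
    return [
        sum(abs(labels_now[i][j] - labels_prev[i][j]) for i in range(n_us)) / (2 * n_us)
        for j in range(n_y)
    ]


def phase1_converged(labels_now, labels_prev, delta_tol=5e-5):
    """Eq. (37): stop when the largest index over all constraints is <= delta_tol."""
    return max(misclassification_index(labels_now, labels_prev)) <= delta_tol
```

With the initial labels \(C_{\text{label}}^{(0)} = 0\), the first index is 1/2 for every constraint, so the criterion can never be met at the first iteration.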

- Step 1.8:


Update previous DoE. If the stopping criterion is not satisfied, the current Kriging models of the constraint functions are considered not yet accurate enough to approximate the classification boundaries of the actual constraint functions, so the DoE \(\varvec{S}\) must be updated. First, the optimal next sample identified in Steps 1.5 and 1.6 is evaluated by calling the functions \({\text{g}}_{j} \left( \varvec{y} \right)\) \(\left( j = 1,2, \ldots ,n_{y} \right)\), and the sample and its responses are added to \(\varvec{S}\). Then set z = z + 1 and \(N_{d} = N_{d} + 1\), and return to Step 1.4 to rebuild the Kriging models.
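Steps 1.5, 1.6 and 1.8 together form one active-learning iteration. The minimal sketch below illustrates the sample-selection rule of Eqs. (23) to (33) and the DoE update, assuming the Kriging means and standard deviations at the candidates (Eqs. (27) and (28)) have already been computed. The helper names are hypothetical, and the guard that sets \(p_m = 0\) when the standard deviation is zero (as happens at existing training points) is an illustrative addition, not part of the original method.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal; its cdf is Phi in Eq. (29)


def select_next_sample(candidates, doe_points, means, stds):
    """Return (i_opt, j_opt) maximizing L_DP(i, j) = D_min^i * p_m^{ij}.

    candidates: points y_U^i; doe_points: current DoE points y_S^k.
    means[i][j], stds[i][j]: Kriging mean and standard deviation of the j-th
    constraint surrogate at candidate i (assumed precomputed).
    """
    best_score, best_ij = -1.0, None
    for i, y in enumerate(candidates):
        # Eqs. (23)-(25): distance to the nearest existing training sample
        d_min = min(math.dist(y, s) for s in doe_points)
        for j, (mu, sd) in enumerate(zip(means[i], stds[i])):
            # Eq. (29); hypothetical guard for a zero standard deviation
            p_m = _STD_NORMAL.cdf(-abs(mu) / sd) if sd > 0 else 0.0
            score = d_min * p_m  # Eq. (31)
            if score > best_score:  # Eq. (33): running maximum over (i, j)
                best_score, best_ij = score, (i, j)
    return best_ij


def update_doe(doe_points, doe_values, y_new, true_constraints):
    """Step 1.8: evaluate every true constraint g_j at y_new and append it."""
    doe_points.append(list(y_new))
    doe_values.append([g(y_new) for g in true_constraints])
```

Only \(i_{\text{opt}}\) determines the new sample; \(j_{\text{opt}}\) records which constraint drove the choice, while all \({\text{g}}_{j}\) are evaluated at the new point.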

3.3 Phase 2: constructing surrogate model of objective function

Since the optimal design must satisfy the reliability constraints, it lies in the safety domain determined by the constraint functions. The surrogate model of the objective function therefore needs sufficient prediction accuracy within the safety domain, while the accuracy requirement in the failure domain can be relaxed. Phase 2 of the proposed method, which constructs the surrogate model of the objective function, consists of the following steps; its flowchart is presented in Fig. 2.

- Step 2.1:


Determine initial sample of each Markov chain. An RBDO problem generally includes at least one constraint function. To sample the safety domain more uniformly, the Markov chain Metropolis algorithm is run with multiple chains, one per constraint function, so the number of chains equals the number \(n_{y}\) of constraint functions. The initial point \(\varvec{y}_{0}\) of the optimization is defined as the center of the normalized design space, \(\varvec{y}_{0} = \left[ 0.5,0.5, \ldots ,0.5 \right]_{1 \times n_{x}}\). The joint probability density of each sample in \(\varvec{Y}_{U}\) is evaluated with \(\varvec{y}_{0}\) as the mean point of the reliability analysis, and the most probable failure point (MPP) \(\varvec{y}_{\text{MPP}}^{j}\) in \(\varvec{Y}_{U}\) is then determined with each Kriging model \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\).

- Step 2.2:


Generate Markov chains and set up candidate sample set. \(n_{y}\) Markov chains are generated, each containing \(N_{m}\) samples and starting from the MPP \(\varvec{y}_{\text{MPP}}^{j}\) of the corresponding constraint function. All samples in these chains form the candidate sample set \(\varvec{Y}_{M}\), so \(\varvec{Y}_{M}\) contains \(N_{M} = n_{y} N_{m}\) samples. In this work, \(N_{m}\) is recommended to be \(\left[ h\sqrt{n_{x}} \right]\). Since the diagonal of the unit hypercube has length \(\sqrt{n_{x}}\), this means that the average number of samples per unit length of the diagonal is \(h\). Because the surrogate models of the constraint functions are inexpensive to evaluate when generating the Markov chains, \(h\) can be set to a large value; to balance the generation efficiency and the space-filling ability of each chain, \(h = 10^{4}\) is used in the proposed method.
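A minimal sketch of Step 2.2 follows, written as a random-walk Metropolis sampler whose target is the uniform distribution on the predicted safe set. Several details are assumptions not stated in the section: the Gaussian proposal and its step size `step` are hypothetical choices, \([\cdot]\) in \(N_m\) is read as rounding, the safe set is taken as \(\widehat{\text{g}}_{j}(\varvec{y}) > 0\) for all j inside the unit hypercube, and a start point outside the safe set is simply held until the first safe proposal is accepted.

```python
import math
import random


def chain_length(h, n_x):
    """Step 2.2: N_m = [h * sqrt(n_x)]; [.] is assumed here to mean rounding."""
    return int(round(h * math.sqrt(n_x)))


def metropolis_safe_chain(y_start, surrogates, n_samples, step=0.05, rng=None):
    """Random-walk Metropolis chain targeting the uniform distribution on the
    predicted safe set {y in [0, 1]^n : g_hat_j(y) > 0 for every j}.

    surrogates: callables g_hat_j(y) (cheap Kriging predictors in practice).
    step: standard deviation of the Gaussian proposal (hypothetical choice).
    """
    rng = rng or random.Random()

    def is_safe(y):
        inside_box = all(0.0 <= v <= 1.0 for v in y)
        return inside_box and all(g(y) > 0 for g in surrogates)

    current = list(y_start)
    chain = []
    for _ in range(n_samples):
        proposal = [v + rng.gauss(0.0, step) for v in current]
        # Uniform target + symmetric proposal: accept iff the proposal is safe;
        # otherwise the current state is repeated (standard Metropolis rule).
        if is_safe(proposal):
            current = proposal
        chain.append(list(current))
    return chain
```

Running one chain per constraint, each from its own \(\varvec{y}_{\text{MPP}}^{j}\), and concatenating the chains gives \(\varvec{Y}_{M}\) with \(n_{y} N_{m}\) samples.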

- Step 2.3:


Construct initial Design of Experiment (DoE) for objective function. As in Step 1.3, an initial DoE is required to construct the initial surrogate model of the objective function; set z = 1. To ensure the space-filling property of the initial samples, \(N_{c}\) samples are selected sequentially from \(\varvec{Y}_{M}\) with the maximin distance criterion. The size \(N_{c}\) of the initial DoE equals \(N_{d}\) in Step 1.3. After the \(N_{c}\) samples are determined, the function \(c\left( \varvec{y} \right)\) is called to compute the corresponding values of the objective function \(C\left( \varvec{x} \right)\). The initial DoE \(\varvec{S}_{c}\) can be expressed as

$$\varvec{S}_{c} = \left[ \begin{array}{c} \varvec{y}_{S_{c}}^{1},\; c\left( \varvec{y}_{S_{c}}^{1} \right) \\ \varvec{y}_{S_{c}}^{2},\; c\left( \varvec{y}_{S_{c}}^{2} \right) \\ \vdots \\ \varvec{y}_{S_{c}}^{N_{c}},\; c\left( \varvec{y}_{S_{c}}^{N_{c}} \right) \end{array} \right],$$ (38)

- Step 2.4:


Build or rebuild Kriging model for objective function. In this step, the surrogate model \(\widehat{c}\left( \varvec{y} \right)\) is constructed or reconstructed with the current DoE \(\varvec{S}_{c}\).
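The initial DoE used in Steps 2.3 and 2.4 comes from a sequential maximin selection over \(\varvec{Y}_{M}\). A minimal greedy sketch is shown below: each new point is the candidate whose distance to its nearest already-selected point is largest. The choice of the first sample (`first_index`) is a hypothetical assumption, since the section does not state how the first point is chosen.

```python
import math


def maximin_select(candidates, n_select, first_index=0):
    """Greedy sequential maximin selection of n_select indices from candidates.

    first_index: hypothetical choice of the first selected sample.
    """
    selected = [first_index]
    # Distance from every candidate to its nearest already-selected point
    nearest = [math.dist(c, candidates[first_index]) for c in candidates]
    while len(selected) < min(n_select, len(candidates)):
        # Candidate farthest from the current selection (first one on ties)
        i_new = max(range(len(candidates)), key=lambda i: nearest[i])
        selected.append(i_new)
        nearest = [min(nearest[i], math.dist(c, candidates[i_new]))
                   for i, c in enumerate(candidates)]
    return selected
```

Already-selected points have a nearest distance of zero, so they are not picked again unless the candidate set contains duplicates.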

- Step 2.5:


Implement active learning process and identify the optimal next sample. As with the constraint functions, constructing the surrogate model of the objective function requires an adaptive strategy to determine the optimal next sample. The learning strategy developed for the objective function selects new training samples that are both far from the existing training samples and likely to change the current surrogate model. Using the same calculation as in Step 1.5, the minimum-distance vector \(\varvec{D}_{\min}^{c}\) between the samples in \(\varvec{Y}_{M}\) and those in \(\varvec{S}_{c}\) is calculated, as presented in Eq. (39).

$$\varvec{D}_{\min}^{c} = \left[ D_{\min}^{c1}, D_{\min}^{c2}, \ldots, D_{\min}^{cN_{M}} \right]^{T} ,$$ (39)

After \(\varvec{D}_{\min}^{c}\) is obtained, the samples in \(\varvec{Y}_{M}\) are evaluated with \(\widehat{c}\left( \varvec{y} \right)\) to obtain the predicted response \(\widehat{c}\left( \varvec{y}_{M}^{i} \right)\) and the Kriging standard deviation \(\widehat{\sigma }_{\widehat{c}} \left( \varvec{y}_{M}^{i} \right)\). The results are stored in \(\varvec{Y}_{c}\) and \(\varvec{\sigma}_{c}\), given in Eqs. (40) and (41), where \(y_{c}^{i}\) and \(\sigma_{c}^{i}\) denote the predicted response and the standard deviation at the ith sample \(\varvec{y}_{M}^{i}\) in \(\varvec{Y}_{M}\), respectively.

$$\varvec{Y}_{c} = \left[ y_{c}^{1}, y_{c}^{2}, \ldots, y_{c}^{N_{M}} \right]^{T} ,$$ (40)

$$\varvec{\sigma}_{c} = \left[ \sigma_{c}^{1}, \sigma_{c}^{2}, \ldots, \sigma_{c}^{N_{M}} \right]^{T} ,$$ (41)

To implement this learning strategy for the objective function, the product \(L_{D\sigma} \left( i \right)\) of the minimum distance \(\varvec{D}_{\min}^{c} \left( i \right)\) and the standard deviation \(\varvec{\sigma}_{c} \left( i \right)\) is calculated by Eq. (42), which gives the \(N_{M} \times 1\) vector \(\varvec{L}_{D\sigma}\) in Eq. (43). The maximum value \(L_{D\sigma}^{\max}\) of \(\varvec{L}_{D\sigma}\) is computed by Eq. (44), and the element satisfying \(L_{D\sigma} \left( i_{\text{opt}}^{M} \right) = L_{D\sigma}^{\max}\) is located. The optimal next sample for updating the surrogate model of the objective function is then the \(i_{\text{opt}}^{M}\)th sample of \(\varvec{Y}_{M}\), denoted \(\varvec{y}_{M}^{i_{\text{opt}}^{M}}\).

$$L_{D\sigma} \left( i \right) = D_{\min}^{ci} \sigma_{c}^{i} ,$$ (42)

$$\varvec{L}_{D\sigma} = \left[ L_{D\sigma} \left( 1 \right), L_{D\sigma} \left( 2 \right), \ldots, L_{D\sigma} \left( N_{M} \right) \right]^{T} ,$$ (43)

$$L_{D\sigma}^{\max} = \max_{i} \left[ L_{D\sigma} \left( i \right) \right], \quad i = 1,2, \ldots ,N_{M} ,$$ (44)

- Step 2.6:


Calculate convergence index and judge convergence criteria for surrogate model of objective function. Because the DoE and the Kriging model of the objective function are updated sequentially, a stopping criterion is required. To obtain a Kriging model of the objective function with global precision in the safety domain, the relative error between two adjacent iterations is used to judge whether the prediction accuracy of the Kriging model is sufficient at the zth iteration. The predicted response vector \(\varvec{Y}_{c}\) from Step 2.5 is stored as \(\varvec{Y}_{c}^{\left( z \right)}\), with \(\varvec{Y}_{c}^{\left( 0 \right)} \left( i \right) = 0\) \(\left( i = 1,2, \ldots ,N_{M} \right)\) by assumption. The relative error \(r^{\left( z \right)} \left( i \right)\), calculated by Eq. (45), describes the change in the prediction of \(\widehat{c}\left( \varvec{y} \right)\) at the ith candidate sample between the (z-1)th and the zth iterations.

$$r^{\left( z \right)} \left( i \right) = \left| \frac{ \varvec{Y}_{c}^{\left( z \right)} \left( i \right) - \varvec{Y}_{c}^{\left( z - 1 \right)} \left( i \right) }{ \varvec{Y}_{c}^{\left( z \right)} \left( i \right) } \right| ,$$ (45)

To ensure that the Kriging model of the objective function meets the accuracy requirement, the stopping criterion is defined as

$$r_{\max}^{\left( z \right)} \le \Delta r ,$$ (46)

where \(r_{\max}^{\left( z \right)} = \max_{i} \left[ r^{\left( z \right)} \left( i \right) \right]\), \(i = 1,2, \ldots ,N_{M}\), and \(\Delta r\) is a small positive tolerance set by the user. To balance accuracy and efficiency, \(\Delta r\) can usually be taken between \(1 \times 10^{-5}\) and \(1 \times 10^{-4}\), with smaller values giving more accurate results. In this paper, \(\Delta r = 5 \times 10^{-5}\), which is sufficient to obtain an accurate Kriging model of the objective function efficiently.

If the stopping criterion is satisfied, the active learning process is stopped, and the algorithm goes to Step 2.8, otherwise it goes to Step 2.7.
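The stopping rule of Eqs. (45) and (46) can be sketched in a few lines. This minimal sketch assumes the objective-surrogate predictions at \(\varvec{Y}_{M}\) are stored as plain lists for two successive iterations; the `floor` argument, which avoids division by zero when a prediction is exactly zero, is an illustrative safeguard that is not part of Eq. (45).

```python
def max_relative_change(pred_now, pred_prev, floor=1e-300):
    """Eqs. (45)-(46): largest relative change of the objective surrogate's
    predictions at the candidate samples between two iterations.

    floor: hypothetical guard against a zero denominator (not in Eq. (45)).
    """
    return max(abs(a - b) / max(abs(a), floor) for a, b in zip(pred_now, pred_prev))


def objective_converged(pred_now, pred_prev, tol=5e-5):
    """Stopping criterion of Eq. (46) with the tolerance used in this paper."""
    return max_relative_change(pred_now, pred_prev) <= tol
```

Because \(\varvec{Y}_{c}^{(0)} = \varvec{0}\), the first relative error is exactly 1, so at least one update of \(\varvec{S}_{c}\) always occurs.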

- Step 2.7:


Update previous DoE. If the stopping criterion is not satisfied, the current Kriging model of the objective function is considered not yet accurate enough to approximate the function \(c\left( \varvec{y} \right)\), so the DoE \(\varvec{S}_{c}\) must be updated. First, the optimal next sample identified in Step 2.5 is evaluated with the function \(c\left( \varvec{y} \right)\); the sample and its response are then added to \(\varvec{S}_{c}\). Set z = z + 1 and \(N_{c} = N_{c} + 1\), and return to Step 2.4 to rebuild the Kriging model.
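The selection rule of Step 2.5 (Eqs. (39) and (42) to (44)) and the update of Step 2.7 can be sketched in the same way as for the constraints, with the Kriging standard deviation replacing the misclassification probability. This is a minimal sketch assuming precomputed standard deviations at the candidates; the function names are hypothetical.

```python
import math


def select_objective_sample(candidates, doe_points, stds):
    """Step 2.5: index i_opt maximizing L_Dsigma(i) = D_min^{ci} * sigma_c^i.

    stds[i]: Kriging standard deviation of c_hat at candidate i (assumed given).
    """
    def score(i):
        d_min = min(math.dist(candidates[i], s) for s in doe_points)  # Eq. (39)
        return d_min * stds[i]                                        # Eq. (42)
    return max(range(len(candidates)), key=score)                     # Eq. (44)


def add_objective_sample(doe_points, doe_values, y_new, objective):
    """Step 2.7: evaluate the true objective c(y) at y_new and append it."""
    doe_points.append(list(y_new))
    doe_values.append(objective(y_new))
```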

- Step 2.8:


Inverse transform design space. After the Kriging model \(\widehat{c}\left( \varvec{y} \right)\) of the objective function is determined, all Kriging models are converted back to the original design space so that they can replace the objective function and the constraint functions of the original RBDO problem. The Kriging models \(\widehat{c}\left( \varvec{y} \right)\) and \(\widehat{\text{g}}_{j} \left( \varvec{y} \right)\) \(\left( j = 1,2, \ldots ,n_{y} \right)\) are converted to the functions \(\widehat{C}\left( \varvec{x} \right)\) and \(\widehat{G}_{j} \left( \varvec{x} \right)\) by Eqs. (47) and (48). These functions then replace the objective function \(C\left( \varvec{x} \right)\) and the constraint functions \(G_{j} \left( \varvec{x} \right)\) in the original RBDO problem of Eq. (1), and the algorithm proceeds to Phase 3 to solve the surrogate-based RBDO problem in Eq. (49).

$$\widehat{c}\left( \varvec{y} \right) = \widehat{c}\left( \frac{ \varvec{x} - \varvec{x}_{L} }{ \varvec{d}_{U} - \varvec{d}_{L} } \right) = \widehat{C}\left( \varvec{x} \right),$$ (47)

$$\widehat{\text{g}}_{j} \left( \varvec{y} \right) = \widehat{\text{g}}_{j} \left( \frac{ \varvec{x} - \varvec{x}_{L} }{ \varvec{d}_{U} - \varvec{d}_{L} } \right) = \widehat{G}_{j} \left( \varvec{x} \right),$$ (48)

$$\begin{array}{ll} \text{Find} & \varvec{d} \\ \min & \widehat{C}\left( \varvec{d} \right) \\ \text{s.t.} & P\left[ \widehat{G}_{j}\left( \varvec{x} \right) \le 0 \mid \varvec{d} \right] \le \Phi\left( -\beta_{j}^{t} \right), \quad j = 1,2,\ldots,n_{y}, \\ & \varvec{d}_{L} \le \varvec{d} \le \varvec{d}_{U} \end{array}$$ (49)
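The coordinate mappings of Eqs. (47), (48) and (50) amount to a component-wise affine transform. The minimal sketch below assumes that the lower bound in the numerator of Eqs. (47) and (48) coincides with \(\varvec{d}_{L}\), so that the forward map is exactly the inverse of Eq. (50); the helper names are hypothetical.

```python
def to_design_space(y, d_lower, d_upper):
    """Eq. (50): x = y * (d_U - d_L) + d_L, applied component-wise."""
    return [yi * (hi - lo) + lo for yi, lo, hi in zip(y, d_lower, d_upper)]


def to_unit_space(x, d_lower, d_upper):
    """Assumed inverse of Eq. (50): y = (x - d_L) / (d_U - d_L), component-wise."""
    return [(xi - lo) / (hi - lo) for xi, lo, hi in zip(x, d_lower, d_upper)]


def wrap_surrogate(surrogate_y, d_lower, d_upper):
    """Eqs. (47)-(48): turn a surrogate in y into C_hat(x) or G_hat_j(x)."""
    return lambda x: surrogate_y(to_unit_space(x, d_lower, d_upper))
```

Wrapping each surrogate once this way lets Phase 3 call \(\widehat{C}\) and \(\widehat{G}_{j}\) directly in the physical design variables.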

3.4 Phase 3: solving RBDO by IS-based modified SORA

After the first two phases have been executed, the objective function \(C\left( \varvec{x} \right)\) and the constraint functions \(G_{j} \left( \varvec{x} \right)\) \(\left( j = 1,2, \ldots ,n_{y} \right)\) of the original RBDO problem (Eq. (1)) are replaced with \(\widehat{C}\left( \varvec{x} \right)\) and \(\widehat{G}_{j} \left( \varvec{x} \right)\), respectively, as presented in Eq. (49). In this section, the RBDO problem of Eq. (49) is solved by the IS-based modified SORA (IS-based MSORA) method, which can be regarded as an improvement of SORA. The method consists of the following steps, and the flowchart of Phase 3 is shown in Fig. 2.

- Step 3.1:


Set initial point and construct failed sample set of each constraint function. Like most analytical RBDO methods, the proposed IS-based MSORA method requires an initial point. Set k = 1 and define the initial point \(\varvec{d}^{\left( 1 \right)}\) as the center of the design space, \(\varvec{d}^{\left( 1 \right)} = 0.5\left( \varvec{d}_{L} + \varvec{d}_{U} \right)\). To avoid computing the gradient of the performance function in the MPP search, as traditional methods require, a simulation-based approach is used: the MPP is taken as the sample in the failure domain with the highest probability density. To this end, the failed sample sets \(\varvec{X}_{F}^{j}\) \(\left( j = 1,2, \ldots ,n_{y} \right)\) are constructed. All samples in \(\varvec{Y}_{U}\) are converted to the design space \(\left[ \varvec{d}_{L}, \varvec{d}_{U} \right]\) with Eq. (50) to obtain the sample set \(\varvec{X}_{U}\). All samples in \(\varvec{X}_{U}\) are then classified by the sign of \(\widehat{G}_{j} \left( \varvec{x} \right)\), and those with negative responses are stored in \(\varvec{X}_{F}^{j}\).

$$\varvec{x} = \varvec{y}\left( \varvec{d}_{U} - \varvec{d}_{L} \right) + \varvec{d}_{L} .$$ (50)

- Step 3.2:


Find MPPs and assess reliability with importance sampling. At the current design \(\varvec{d}^{\left( k \right)}\), the sample \(\varvec{x}_{j}^{*\left( k \right)}\) in \(\varvec{X}_{F}^{j}\) with the highest probability density is taken as the MPP of \(\widehat{G}_{j} \left( \varvec{x} \right)\). Then \(N_{IS}\) importance samples are generated around each \(\varvec{x}_{j}^{*\left( k \right)}\) with the importance sampling algorithm and stored in \(\varvec{X}_{IS}^{j}\). All samples in \(\varvec{X}_{IS}^{j}\) are classified by the sign of \(\widehat{G}_{j} \left( \varvec{x} \right)\), and those with negative responses are added to \(\varvec{X}_{F}^{j}\). At the same time, the failure probability \(P_{IS}^{j}\) of \(\widehat{G}_{j} \left( \varvec{x} \right)\) is calculated by Eq. (15). In this paper, \(N_{IS} = 1 \times 10^{5}\), which is sufficient to obtain an accurate estimate of the failure probability.
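A minimal sketch of Step 3.2 is given below. It assumes independent normal random variables \(x_i \sim N(d_i, \sigma_i^2)\), as in the numerical examples, and a normal importance sampling density with the same standard deviations centered at the MPP. Eq. (15) is not reproduced in this section, so the estimator here is the standard importance-sampling estimator and may differ in detail from the paper's; the function names are hypothetical.

```python
import math
import random


def find_mpp(failed_samples, d, sigma):
    """MPP among failed samples: highest joint normal PDF at design d, i.e. the
    smallest standardized distance to d (independent normals assumed)."""
    return min(failed_samples,
               key=lambda x: sum(((xi - di) / si) ** 2
                                 for xi, di, si in zip(x, d, sigma)))


def is_failure_probability(limit_state, d, sigma, mpp, n_is=100_000, rng=None):
    """Standard IS estimate of P[G(x) <= 0] for x ~ N(d, diag(sigma^2)), with
    an assumed sampling density N(mpp, diag(sigma^2)).

    Returns the estimate and the failed IS samples (to be added to X_F^j).
    """
    rng = rng or random.Random()
    total = 0.0
    failed = []
    for _ in range(n_is):
        x = [rng.gauss(m, s) for m, s in zip(mpp, sigma)]
        if limit_state(x) <= 0:
            failed.append(x)
            # log f(x) - log h(x); the normalizing constants cancel
            log_w = sum(((xi - mi) ** 2 - (xi - di) ** 2) / (2 * si ** 2)
                        for xi, di, mi, si in zip(x, d, mpp, sigma))
            total += math.exp(log_w)
    return total / n_is, failed
```

For a linear limit state such as \(G(x) = 3 - x\) with \(x \sim N(0, 1)\), the estimate should approach \(\Phi(-3) \approx 1.35 \times 10^{-3}\).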

- Step 3.3:


Calculate correction factors and modified shifting vectors. In the traditional SORA method, the failure probability is evaluated at the MPP under a linear approximation of the limit state, which is exact only when the performance function is linear in standard normal space. SORA must therefore be modified to obtain an accurate optimal design for RBDO problems with nonlinear constraint functions. In this paper, the shifting vector \(\varvec{s}_{j}^{\left( k \right)}\) is modified with a correction factor \(\lambda_{j}\), calculated by Eq. (51), and the modified shifting vector is calculated by Eq. (52). The correction factor is the ratio of the reliability index implied by the standardized distance to the MPP to the reliability index \(\Phi^{-1}\left( 1 - P_{IS}^{j} \right)\) implied by the importance-sampling estimate, so \(\lambda_{j} \approx 1\) when the limit state is linear.

$$\lambda_{j} = \frac{ \parallel \left( \varvec{d}^{\left( k \right)} - \varvec{x}_{j}^{*\left( k \right)} \right) \varvec{\sigma}^{-1} \parallel }{ \Phi^{-1}\left( 1 - P_{IS}^{j} \right) },$$ (51)

$$\varvec{s}_{j}^{\left( k \right)} = \beta_{j}^{t} \lambda_{j} \frac{ \left( \varvec{d}^{\left( k \right)} - \varvec{x}_{j}^{*\left( k \right)} \right) \varvec{\sigma}^{-1} }{ \parallel \left( \varvec{d}^{\left( k \right)} - \varvec{x}_{j}^{*\left( k \right)} \right) \varvec{\sigma}^{-1} \parallel } \varvec{\sigma} ,$$ (52)

- Step 3.4:


Solve deterministic optimization by GA. With each shifting vector \(\varvec{s}_{j}^{\left( k \right)}\) determined, the limit-state surfaces of the probabilistic constraints in Eq. (49) are shifted toward the feasible region. A deterministic optimization with the shifted constraints, Eq. (53), is then solved by a genetic algorithm (GA) to find a new design.

$$\begin{array}{ll} \text{Find} & \varvec{d}^{\left( k + 1 \right)} \\ \min & \widehat{C}\left( \varvec{d}^{\left( k + 1 \right)} \right) \\ \text{s.t.} & \widehat{G}_{j}\left( \varvec{d}^{\left( k + 1 \right)} \right) \ge 0, \\ & \widehat{G}_{j}\left( \varvec{d}^{\left( k + 1 \right)} - \varvec{s}_{j}^{\left( k \right)} \right) \ge 0, \\ & \varvec{d}_{L} \le \varvec{d} \le \varvec{d}_{U}, \quad j = 1,2,\ldots,n_{y} \end{array}$$ (53)

- Step 3.5:


Check stopping criterion. The stopping criterion is defined by Eq. (54), with \(\varepsilon\) calculated by Eq. (55). If it is satisfied, stop and go to Step 3.6; otherwise, set k = k + 1 and go to Step 3.2. In this paper, the tolerance \(\Delta \varepsilon\) is set to \(1 \times 10^{-3}\).

$$\varepsilon \le \Delta \varepsilon ,$$ (54)

$$\varepsilon = \sum_{i = 1}^{n_{x}} \left| \frac{ \varvec{d}^{\left( k + 1 \right)} \left( i \right) - \varvec{d}^{\left( k \right)} \left( i \right) }{ \varvec{d}^{\left( k + 1 \right)} \left( i \right) } \right| ,$$ (55)

- Step 3.6:


End the algorithm of the proposed RBDO method. The last design \(\varvec{d}^{\left( k + 1 \right)}\) is taken as the final result of the proposed RBDO method and is considered accurate enough for the original RBDO problem.
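The per-iteration quantities of Steps 3.3 and 3.5 (Eqs. (51), (52) and (55)) can be sketched as below, assuming independent normal variables with standard deviations `sigma`. The sketch uses the usual SORA sign convention \(\varvec{s} = \varvec{d} - \varvec{x}_{\text{MPP}}\), under which the point \(\varvec{d} - \varvec{s}\) checked in Eq. (53) lies on the failure side of \(\varvec{d}\); this sign choice is an assumption of the sketch, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def correction_factor(d, mpp, sigma, p_is):
    """Eq. (51): lambda_j = beta_MPP / beta_IS, with beta_MPP the standardized
    distance from d to the MPP and beta_IS = Phi^{-1}(1 - P_IS)."""
    beta_mpp = math.sqrt(sum(((di - xi) / si) ** 2
                             for di, xi, si in zip(d, mpp, sigma)))
    beta_is = NormalDist().inv_cdf(1.0 - p_is)
    return beta_mpp / beta_is


def shifting_vector(d, mpp, sigma, beta_target, lam):
    """Eq. (52) under the assumed convention s = d - x_MPP: the standardized
    unit direction from the MPP to d, scaled by beta_target * lambda and
    mapped back to x-space."""
    u = [(di - xi) / si for di, xi, si in zip(d, mpp, sigma)]
    norm = math.sqrt(sum(v * v for v in u))
    return [beta_target * lam * v / norm * si for v, si in zip(u, sigma)]


def relative_design_change(d_new, d_old):
    """Eq. (55): sum of component-wise relative changes of the design."""
    return sum(abs((a - b) / a) for a, b in zip(d_new, d_old))
```

For a linear limit state, \(P_{IS}^{j} \approx \Phi(-\beta_{\text{MPP}})\), so \(\lambda_{j} \approx 1\) and the shift reduces to the ordinary SORA shift of length \(\beta_{j}^{t}\) in standard normal space.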

The proposed method is designed to ensure both the classification accuracy of the surrogate models of the constraint functions and the approximation accuracy of the surrogate model of the objective function, while inheriting the accuracy of importance sampling and the efficiency of SORA in solving the RBDO problem. Compared with conventional MPP-based or gradient-based RBDO methods, it can therefore substantially improve computational efficiency without sacrificing accuracy, as the following examples illustrate.

4 Numerical examples

4.1 A nonlinear 2D test problem

To illustrate the proposed method, a two-dimensional example that has been used for verification in the literature [32, 42, 44, 45, 59] is tested. The problem has three constraints: the second is highly nonlinear, and the other two are weakly nonlinear. The two random variables are normally distributed, \(x_{i} \sim N\left( d_{i}, 0.3^{2} \right)\), \(i = 1,2\), and the target reliability index is \(\beta_{j}^{t} = 3\), \(j = 1,2,3\).

The formulation for RBDO can be given by Eq. (56).

The three main processes of the proposed method, namely constructing the surrogate models of the constraint functions, constructing the surrogate model of the objective function, and solving the RBDO problem by IS-based modified SORA, are shown in Figs. 3, 4 and 5, respectively. Figure 3a shows the initial surrogate models of the constraint functions built from the initial DoE \(\varvec{S}\) with 9 samples. As samples are added to \(\varvec{S}\), the surrogate models gradually converge: those of the first, second and third constraint functions are considered stable at the 11th iteration (Fig. 3b), the 27th iteration (Fig. 3c) and the 40th iteration (Fig. 3d), respectively. The surrogate models obtained at the 40th iteration are then used to generate the Markov chains and set up the candidate sample set \(\varvec{Y}_{M}\), as shown in Fig. 4a. Next, the DoE \(\varvec{S}_{c}\) is constructed (Fig. 4b) and the surrogate model of the objective function is built. Once all surrogate models are determined, they replace the original constraint and objective functions, and the IS-based modified SORA method is executed to find the optimal solution. Figure 5 shows how the design evolves during the optimization.

The probabilistic constraints at the optimal solution are evaluated by Monte Carlo simulation (MCS) with ten million samples and listed in Table 1, together with the results of previously published methods. Table 1 also summarizes, for each method, the optimal design variables, the optimal objective value, and the numbers of function calls: \(N_{\text{con}}\) and \(N_{\text{obj}}\) are the numbers of calls to the original constraint functions and to the original objective function, respectively, and \(N_{\text{total}}\) is their sum.
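The MCS check of a probabilistic constraint can be sketched as crude Monte Carlo with independent normal variables, reporting the failure probability and the generalized reliability index \(\beta = -\Phi^{-1}(P_f)\). This is a minimal sketch; the limit state used to exercise it is a hypothetical linear function, not the constraints of Eq. (56).

```python
import math
import random
from statistics import NormalDist


def mcs_reliability(limit_state, d, sigma, n_samples, rng=None):
    """Crude MCS estimate of P[G(x) <= 0] for x_i ~ N(d_i, sigma_i^2),
    returned together with beta = -Phi^{-1}(P_f)."""
    rng = rng or random.Random()
    n_fail = sum(
        limit_state([rng.gauss(m, s) for m, s in zip(d, sigma)]) <= 0
        for _ in range(n_samples)
    )
    if n_fail == 0:
        return 0.0, math.inf  # no failures observed: beta is unbounded
    if n_fail == n_samples:
        return 1.0, -math.inf  # every sample failed
    p_f = n_fail / n_samples
    return p_f, -NormalDist().inv_cdf(p_f)
```

The coefficient of variation of the crude MCS estimate is \(\sqrt{(1 - P_f)/(N P_f)}\); with \(N = 10^7\) and \(P_f \approx \Phi(-3) \approx 1.35 \times 10^{-3}\) it is about 0.9%, which is why such a large sample is used to verify \(\beta \ge 3\).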

Almost all methods listed in Table 1 converge to similar optima. AC-SLA yields a lower objective value than the other methods, including the proposed one, but requires many more constraint and objective function calls than the proposed method; moreover, its optimum does not satisfy the reliability requirement \(\beta^{t} \ge 3\) for the first constraint function. These results indicate that, for this example, the proposed method is more efficient and accurate than the other methods.

4.2 Optimal design of a roof truss

A roof truss subject to uniform loads is to be designed; this problem has been studied in the literature [1, 39, 43, 56]. As shown in Fig. 6, the top members and compression bars are made of reinforced concrete, and the bottom members and tension bars are made of steel. Table 2 presents the parameters of the random variables. The vertical deflection at the peak of the structure (node C) is limited to 0.03 m, and the problem is given as follows:

This example involves four normal random variables and two normal random design variables, i.e., \(A_{S}\) and \(A_{C}\). The distribution parameters of the random variables are listed in Table 2.

Similar to Example 1, the optimal results of the proposed method and of the methods from previous publications are listed in Table 3. It can be seen that the HCC, RMV, MMV, AAMV, DMV, HDMV and ESMV methods converge to similar optimal results that are unreliable, i.e., they do not satisfy the reliability constraint \(\beta^{t} \ge 3\), whereas the DCC, RBDO + WAM and the proposed method converge to reliable optimal results that satisfy \(\beta^{t} \ge 3\). RBDO + WAM attains a better objective function value than DCC and the proposed method, but it is less efficient than all the other methods in this example. As presented in Table 3, the proposed method requires far fewer constraint and objective function calls in total than the other methods to obtain a reliable, nearly optimal result. Therefore, the proposed method is more efficient than the other methods listed in Table 3.

4.3 Welded beam problem

As shown in Fig. 7, this example is the welded beam problem, which many scholars, such as Yi et al. [30], Chen et al. [33] and Keshtegar et al. [37, 40, 45, 60], have used as a benchmark for comparison with previous publications. The problem has five probabilistic constraints involving four random variables; they are related to the limits on shear stress, bending stress, buckling and displacement. The objective is to minimize the welding cost. All random variables are statistically independent and normally distributed. The fixed system parameters \(z\) and \(c\) of the welded beam problem are listed in Table 4.

The RBDO model of the welded beam problem can be formulated as:

The RBDO results of this problem obtained by the proposed method and by previous methods are summarized in Table 5. The objective function value at the optimum obtained by the proposed method agrees reasonably well with those reported in previous publications. Among the methods listed in Table 5, SORA, ASORA, ADA, ESM, HCMV and the proposed method are relatively efficient in terms of the total number of constraint and objective function calls, and the proposed method performs best in both the total number of calls and the objective function value. Therefore, the proposed method is more efficient than the other methods for solving the RBDO problem of the welded beam.

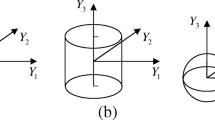

4.4 Speed reducer RBDO problem

The speed reducer illustrated in Fig. 8 is used in a light aircraft to allow the engine and the propeller to rotate at their efficient speeds, and it is commonly studied as a benchmark example [2, 26, 30, 33, 36, 42, 45, 46, 47]. This RBDO problem has 11 probabilistic constraints involving 7 random variables. The probabilistic constraints are related to physical quantities such as bending stress, contact stress, longitudinal displacement and shaft stress, as well as to geometric limits. The random design variables are the gear width (\(d_{1}\)), the gear module (\(d_{2}\)), the number of pinion teeth (\(d_{3}\)), the distances between bearings (\(d_{4} ,d_{5}\)), and the diameters of the two shafts (\(d_{6} ,d_{7}\)).

All random variables are statistically independent and normally distributed. The objective is to minimize the weight of the speed reducer. The RBDO formulation of the speed reducer can be expressed as

The optimization results are summarized in Table 6. The optimal results of all the approaches are almost identical to those in Ref. [36]. The probabilistic constraints at the optimal solution of each method listed in Table 6 are evaluated by MCS with ten million samples. Almost all the probabilistic constraints satisfy the target reliability at the optimum of each method. Among the methods, LAS, IBS and the proposed method are more efficient than the others, each requiring fewer than 100 constraint and objective function calls in total. Although the SSRBO method is also efficient for this problem, its optimal solution violates the target reliability of the sixth probabilistic constraint. These results verify the efficiency of the proposed method.

5 Conclusions

In this paper, an efficient method combining an adaptive surrogate model and an importance sampling-based modified SORA method is proposed for reliability-based design optimization. The optimization process is divided into three phases: 1) constructing surrogate models of the constraint functions, 2) constructing a surrogate model of the objective function, and 3) solving the RBDO problem by the IS-based modified SORA method. The proposed method is applied to four classical RBDO examples, and its accuracy and efficiency are verified by comparison with previous methods.

The accuracy and efficiency of the proposed method depend on those of the surrogate-model construction phases and of the optimization phase. Future work toward practical engineering applications will therefore follow two main directions. The first is to improve the accuracy and efficiency of surrogate-model construction, either through a more efficient construction procedure or with a more efficient surrogate model, such as radial basis functions (RBF) and support vector machines (SVM). The second is to employ a more efficient analytical method in the third phase of the proposed method, such as the ASORA, AHA or AC-SLA method.

References

Rashki M, Miri M, Azhdary Moghaddam M (2014) A simulation-based method for reliability based design optimization problems with highly nonlinear constraints. Autom Constr 47:24–36

Hamzehkolaei NS, Miri M, Rashki M (2016) An enhanced simulation-based design method coupled with meta-heuristic search algorithm for accurate reliability-based design optimization. Eng Comput 32:477–495

Du X, Chen W (2004) Sequential optimization and reliability assessment method for efficient probabilistic design. J Mech Des 126(2):225–233

Zhao J, Chan AHC, Roberts C, Madelin KB (2007) Reliability evaluation and optimisation of imperfect inspections for a component with multi-defects. Reliab Eng Syst Saf 92:65–73

Shan S, Wang GG (2008) Reliable design space and complete single-loop reliability-based design optimization. Reliab Eng Syst Saf 93:1218–1230

Yang T, Hsieh YH (2013) Reliability-based design optimization with cooperation between support vector machine and particle swarm optimization. Eng Comput 29:151–163

Pradlwarter HJ, Schuëller GI (2010) Local domain Monte Carlo simulation. Struct Saf 32:275–280

Choi SK, Grandhi RV, Canfield RA (2007) Reliability-based structural design. Springer, London

Song S, Lu Z, Qiao H (2009) Subset simulation for structural reliability sensitivity analysis. Reliab Eng Syst Saf 94:658–665

Au SK, Beck J (2001) Estimation of small failure probabilities in high dimensions by subset simulation. Probab Eng Mech 16(4):263–277

de Angelis M, Patelli E, Beer M (2015) Advanced line sampling for efficient robust reliability analysis. Struct Saf 52:170–182

Chen CT, Chen MH, Horng WT (2014) A cell evolution method for reliability-based design optimization. Appl Soft Comput 15:67–79

Zhu P, Shi L, Yang RJ, Lin SP (2015) A new sampling-based RBDO method via score function with reweighting scheme and application to vehicle designs. Appl Math Model 39:4243–4256

Rashki M, Miri M, Azhdary Moghaddam M (2012) A new efficient simulation method to approximate the probability of failure and most probable point. Struct Saf 39:22–29

Rashki M, Miri M, Azhdary Moghaddam M (2014) Closure to “A new efficient simulation method to approximate the probability of failure and most probable point”. Struct Saf 46:15–16

Okasha NM (2016) An improved weighted average simulation approach for solving reliability-based analysis and design optimization problems. Struct Saf 60:47–55

Hamzehkolaei NS, Miri M, Rashki M (2018) New simulation-based frameworks for multi-objective reliability-based design optimization of structures. Appl Math Model 62:1–20

Du X, Chen W (2001) A most probable point-based method for efficient uncertainty analysis. J Des Manuf Autom 4(1):47–66

Hasofer AM, Lind NC (1974) Exact and invariant second-moment code format. J Eng Mech Div 100(1):111–121

Lee I, Choi KK, Du L, Gorsich D (2008) Inverse analysis method using MPP-based dimension reduction for reliability-based design optimization of nonlinear and multi-dimensional systems. Comput Methods Appl Mech Eng 198(1):14–27

Grandhi RV, Wang L (1998) Reliability-based structural optimization using improved two-point adaptive nonlinear approximations. Finite Elem Anal Des 29:35–48

Tu J, Choi KK, Park YH (1999) A new study on reliability-based design optimization. J Mech Des 121:557–564

Youn BD, Choi KK, Yang RJ, Gu L (2004) Reliability-based design optimization for crashworthiness of vehicle side impact. Struct Multidiscip Optim 26:272–283

Tsompanakis Y, Lagaros ND, Papadrakakis M (2010) Structural design optimization considering uncertainties. Taylor and Francis, London

Youn BD, Choi KK (2004) An investigation of nonlinearity of reliability-based design optimization approaches. J Mech Des 126(3):403–411

Li Xu, Gong Chunlin, Liangxian Gu, Jing Zhao, Fang Hai, Gao Ruichao (2019) A reliability-based optimization method using sequential surrogate model and Monte Carlo simulation. Struct Multidiscip Optim 59(2):439–460

Liang J, Mourelatos ZP, Nikolaidis E (2007) A single-loop approach for system reliability-based design optimization. J Mech Des 129(12):1215–1224

Cheng G, Xu L, Jiang L (2006) A sequential approximate programming strategy for reliability-based structural optimization. Comput Struct 84(21):1353–1367

Li Fan, Teresa Wu, Mengqi Hu, Dong Jin (2010) An accurate penalty-based approach for reliability-based design optimization. Res Eng Des 21:87–98

Yi P, Zhu Z, Gong J (2016) An approximate sequential optimization and reliability assessment method for reliability-based design optimization. Struct Multidiscip Optim 54:1367–1378

Ho-Huu V, Nguyen-Thoi T, Le-Anh L, Nguyen-Trang T (2016) An effective reliability-based improved constrained differential evolution for reliability-based design optimization of truss structures. Adv Eng Softw 92:48–56

Jeong SB, Park GJ (2017) Single loop single vector approach using the conjugate gradient in reliability based design optimization. Struct Multidiscip Optim 55:1329–1344

Chen Z, Qiu H, Gao L, Liu S, Li P (2013) An adaptive decoupling approach for reliability-based design optimization. Comput Struct 117:58–66

Jiang C, Qiu H, Gao L, Cai X, Li P (2017) An adaptive hybrid single-loop method for reliability-based design optimization using iterative control strategy. Struct Multidiscip Optim 56:1271–1286

Chen Z, Li X, Chen G, Gao L, Qiu H, Wang S (2018) A probabilistic feasible region approach for reliability-based design optimization. Struct Multidiscip Optim 57:359–372

Li G, Meng Z, Hu H (2015) An adaptive hybrid approach for reliability-based design optimization. Struct Multidiscip Optim 51:1051–1065

Keshtegar B (2017) A modified mean value of performance measure approach for reliability-based design optimization. Arab J Sci Eng 42:1093–1101

Keshtegar B, Hao P, Meng Z (2017) A self-adaptive modified chaos control method for reliability-based design optimization. Struct Multidiscip Optim 55:63–75

Keshtegar B, Hao P (2018) A hybrid descent mean value for accurate and efficient performance measure approach of reliability-based design optimization. Comput Methods Appl Mech Eng 336:237–259

Keshtegar B, Hao P (2018) Enhanced single-loop method for efficient reliability-based design optimization with complex constraints. Struct Multidiscip Optim 57:1731–1747

Hao P, Wang Y, Ma R, Liu H, Wang B, Li G (2019) A new reliability-based design optimization framework using isogeometric analysis. Comput Methods Appl Mech Eng 345:476–501

Meng Z, Keshtegar B (2019) Adaptive conjugate single-loop method for efficient reliability-based design and topology optimization. Comput Methods Appl Mech Eng 344:95–119

Keshtegar B, Hao P (2018) Enriched self-adjusted performance measure approach for reliability-based design optimization of complex engineering problems. Appl Math Model 57:37–51

Hao P, Ma R, Wang Y, Feng S, Wang B, Li G, Xing H, Yang F (2019) An augmented step size adjustment method for the performance measure approach: toward general structural reliability-based design optimization. Struct Saf 80:32–45

Zhu SP, Keshtegar B, Nguyen-Thoi T, Yaseen ZM, Bui DT (2019) Reliability-based structural design optimization: hybridized conjugate mean value approach. Eng Comput. https://doi.org/10.1007/s00366-019-00829-7

Chen Z, Qiu H, Gao L, Li X, Li P (2014) A local adaptive sampling method for reliability-based design optimization using Kriging model. Struct Multidiscip Optim 49:401–416

Chen Z, Peng S, Li X, Qiu H, Xiong H, Gao L, Li P (2015) An important boundary sampling method for reliability-based design optimization using kriging model. Struct Multidiscip Optim 52:55–70

Li X, Qiu H, Chen Z, Gao L, Shao X (2016) A local kriging approximation method using MPP for reliability-based design optimization. Comput Struct 162:102–115

Shi L, Lin SP (2016) A new RBDO method using adaptive response surface and first-order score function for crashworthiness design. Reliab Eng Syst Saf 156:125–133

Lehký D, Slowik O, Novák D (2018) Reliability-based design: artificial neural networks and double-loop reliability-based optimization approaches. Adv Eng Softw 117:123–135