Abstract

This paper investigates second order properties of a stationary continuous time process after random sampling. While a short memory process always gives rise to a short memory one, we prove that long-memory can disappear when the sampling law has very heavy tails. Despite the fact that the normality of the process is not maintained by random sampling, the normalized partial sum process converges to the fractional Brownian motion, at least when the long memory parameter is preserved.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Most of the papers on time series analysis assume that observations are equally spaced in time. However, irregularly spaced time series data appear in many applications, for instance in astrophysics, climatology, high frequency finance, signal processing. Elorrieta et al. (2019) and Eyheramendy et al. (2018) propose generalisations of autoregressive models for irregular time series motivated by an application in astronomy. The spectral analysis of these data is also studied in many papers with applications into astrophysics, climatology, physics [see for instance Scargle (1982), Broersen (2007), Mayo (1978), Masry and Lui (1975)].

A possible way to address the problem of non-equally spaced data is to transform the data into equally spaced observations using some methods of interpolation [see for instance Adorf (1995), Friedman (1962), Nieto-Barajas and Sinha (2014)].

An alternative one consists in assuming that time series can be embedded into a continuous time process. The data are then interpreted as a realization of a continuous temporal process observed at random times [see for instance Jones (1981), Jones and Tryon (1987), Brockwell et al. (2007)]. This approach requires the study of the effects of random sampling on the properties of continuous time process, as well as the development of inference methods for these models. Mykland (2003) studies the effects of the random sampling on the parameter estimation of time-homogeneous diffusion [see also Duffie and Glynn (2004)]. Masry (1994) establishes the properties of spectral density function estimation.

In this paper we focus particularly on time series with long-range dependence [see Beran et al. (2013), Giraitis et al. (2012) for a review of available results for long-memory processes].

Some models and estimation methods have been proposed for continuous-time processes [see Tsai and Chan (2005a), Viano et al. (1994), Comte and Renault (1996), Comte (1996)]. Tsai and Chan (2005a) introduced the continuous-time autoregressive fractionally integrated moving average (CARFIMA(p,d,q)) model. Under the long-range dependence condition \(d\in (0,1/2)\), they calculate the autocovariance function of the stationary CARFIMA process and its spectral density function [see Tsai and Chan (2005b)]. These properties are extended to the case \(d\in (-1/2,1/2)\) in Tsai (2009). In Viano et al. (1994), continuous-time fractional ARMA processes are constructed. They establish the \(L^2\) properties (spectral density and autocovariance function) and the dependence structure. Comte and Renault (1996) study the continuous time moving average fractional process, a family of long memory model. The statistical inference for continuous-time processes is generally constructed from the sampled process [see Tsai and Chan (2005a, b), Chambers (1996), Comte (1996)]. Different schemes of sampling can be considered. In Tsai and Chan (2005a), the estimation method is based on the maximum likelihood estimation for irregularly spaced deterministic time series data. Under the assumption of identifiability, Chambers (1996) considers the estimation of the long memory parameter of a continuous time fractional ARMA process with discrete time data using the low-frequency behaviour of the spectrum. Comte (1996) studied two methods for the estimation with regularly spaced data: Whittle likelihood method and the semiparametric approach of Geweke and Porter-Hudak. In this article we are interested in irregularly spaced data when the sampling intervals are independent and identically distributed positive random variables. In the light of previous results in discrete time, there was an effect of the random sampling on the dependence structure of the process. Indeed, Philippe and Viano (2010) show that the intensity of the long memory is preserved when the law of sampling intervals has finite first moment, but they also pointed out situations where a reduction of the long memory is observed.

We adopt the most usual definition of second order long memory process. Namely, a stationary process \(\mathbf {U}\) has the long memory property if its autocovariance function \(\sigma _U\) satisfies the condition

We study the effect of random sampling on the properties of a stationary continuous time process. More precisely, we start with \(\mathbf {X}=(X_t)_{t\in {\mathbb {R}}^+}\), a second-order stationary continuous time process. We assume that it is observed at random times \((T_n)_{n\ge 0}\) where \((T_n)_{n\ge 0}\) is a non-decreasing positive random walk independent of \(\mathbf {X}\). We study the discrete-time process \(\mathbf {Y}\) defined by

The process \({\mathbf {Y}}\) obtained by random sampling is called the sampled process.

In this paper, we study the properties of this. In particular, we show that the results obtained by Philippe and Viano (2010) on the auto-covariance function are preserved for continuous time process \(\mathbf {X}\). The large-sample statistical inference relies often on limit theorems of probability theory for partial sums. We show that Gaussianity is lost by random sampling. However, we prove that the asymptotic normality of the partial sum is preserved with the same standard normalization [see Giraitis et al. (2012), Chapter 4 for a review].

In Sect. 2, we study the behavior of the sampled process (1.1) for the general case. We establish that Gaussianity of \(\mathbf{X} \) is not transmitted to \(\mathbf {Y}\). Under rather weak conditions on the covariance \(\sigma _X\), the weak dependence is preserved. A stronger assumption on the first moment of \(T_1 \) is necessary to preserve the long memory property. Under the condition \({\mathbb {E}}[T_{1}]<\infty \), if X is a long-memory process then Y also in the sense of definition above. In Sect. 3, we present the more specific situation of a regularly varying covariance where preservation or non-preservation of the memory can be quantified. In particular, we prove that for heavy tailed sampling distribution, a long memory process \(\mathbf {X}\) can give raise to a short memory process \(\mathbf {Y}\). In Sect. 4, we establish a Donsker’s invariance principle when the initial process \(\mathbf {X}\) is Gaussian and the long memory parameter is preserved.

2 General properties

Throughout this document we assume that the following properties hold on the initial process \({\mathbf {X}}\) and the random sampling scheme:

Assumption \(\mathcal {H}\):

- \(\mathcal {H}\)1::

-

\(\mathbf {X}=(X_t)_{t\in {\mathbb {R}}^+}\) is a second-order stationary continuous time process with zero mean and autocovariance function \(\sigma _X\).

- \(\mathcal {H}\)2::

-

The random walk \((T_n)_{n\ge 0}\) is independent of \(\mathbf {X}\).

- \(\mathcal {H}\)3::

-

\(T_0= 0\).

- \(\mathcal {H}\)4::

-

The increments \(\Delta _j = T_{j+1}-T_j\) (\( j \in {\mathbb {N}}\) ) are independent and identically distributed. The common distribution admits a probability density function s (with respect to the Lebesgue measure) supported by \({\mathbb {R}}^+\).

Remark 1

In Assumption \(\mathcal {H}3\), we impose the specific initialization \(T_0=0\) only to simplify our notations since it implies that \(\Delta _j = T_{j+1}-T_j\) for all \(j\in {\mathbb {N}}\). However, all the results remain true if we take \(T_0 = \Delta _0 \) and \(\Delta _j = T_{j}-T_{j-1}\), for \(j\ge 1\).

The following proposition gives the \(L^2\)-properties of the sampled process \(\mathbf {Y}\).

Proposition 2.1

Under Assumption \(\mathcal {H}\), the discrete-time process \(\mathbf {Y}\) defined in (1.1) is also second-order stationary with zero mean and its autocovariance sequence is

Proof

These properties are obtained by applying Fubini’s theorem, and using the independence between \(\mathbf {X}\) and \((T_n)_{n\ge 0}\). Indeed, for all \(h\in {\mathbb {N}}\), we have

and

\(\square \)

2.1 Distribution of the sampled process

This part is devoted to the properties of the finite-dimensional distributions of the process \(\mathbf {Y}\).

Proposition 2.2

If \(\mathbf {X}\) is a strictly stationary process satisfying \( \mathcal {H}2- \mathcal {H}4\), then the sampled process \(\mathbf {Y}\) is a strictly stationary discrete-time process.

Proof

We arbitrarily fix \(n\ge 1\), \(p\in {\mathbb {N}}^*\) and \(k_1,\dots k_n \in {\mathbb {N}}\) such that \(0\le k_1<\dots <k_n\). We show that the joint distribution of \((Y_{k_1+p},\dots ,Y_{k_n+p})\) does not depend on \(p\in {\mathbb {N}}\).

For \((y_1,\dots ,y_n)\in {\mathbb {R}}^n\), we have

where \((\Delta _j)_{j\in {\mathbb {N}}}\) are the increments defined in Assumption \(\mathcal {H}\). By the strict stationarity of \( \mathbf {X}\) the right-hand-side of the last equation is equal to

where \( U_i=\Delta _{i+p}\) are i.i.d with density s. This concludes the proof. \(\square \)

The following proposition is devoted to the particular case of a Gaussian process. We establish that the Gaussianity is not preserved by random sampling.

Proposition 2.3

Under Assumption \(\mathcal {H}\), if \(\mathbf {X}\) is a Gaussian process then the marginals of the sampled process \(\mathbf {Y}\) are Gaussian. Furthermore, if \(\sigma _X\) is not almost everywhere constant on the set \(\{ x: s(x) >0 \}\), then \(\mathbf {Y}\) is not a Gaussian process.

Proof

We first prove the normality of marginal distributions.

Let U be a random variable, we denote \(\Phi _{U}\) its characteristic function. We have, for all \(t\in {\mathbb {R}}\)

Conditionally on \(T_k\), the probability distribution of \(X_{T_k}\) is the Gaussian distribution with zero mean and variance \(\sigma _X(0)\). We get

and thus \(Y_k\) is a Gaussian variable with zero mean and variance \(\sigma _X(0)\).

We are now proving that the process is not Gaussian by contraposition, i.e. if \({\mathbf {Y}}\) is a Gaussian process then \(\sigma _X\) is almost everywhere constant on the set \(\{ x: s(x) >0 \}\).

If \({\mathbf {Y}}\) is a Gaussian process then the random variable \(Y_1+Y_2\) has a Gaussian distribution (since it is a linear combination of two components of \({\mathbf {Y}}\)),

and

Then, for all \(t\in {\mathbb {R}}\),

According to Jensen’s inequality, this equality is achieved if and only if \(\sigma _X(T_2-T_1)\) is constant almost everywhere. \(\square \)

Example 1

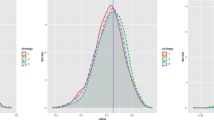

In Fig. 1, we illustrate the non-Gaussianity of the sampled process from the distribution of \((Y_1,Y_2)\). To simulate a realization from the distribution of \((Y_1,Y_2)\), we proceed as follows:

1. Generate the time interval \(T_2-T_1\) according to an exponential distribution with mean 1.

2. Generate \((Y_1,Y_2)\) as a Gaussian vector with zero mean and covariance

We take \(\mathbf {X}\) a long memory Gaussian process with the standard form of autocovariance function,

and \((T_i)_{i\in {\mathbb {N}}}\) is a homogeneous Poisson counting process with rate 1. The parameter \(d\in (0,1/2)\) in 2.2 measures the intensity of long-range dependence. In Sect 3 we study this specific form of autocovariance function, and specify the effect of subsampling on the value of d.

We simulate from the distribution of \((Y_1,Y_2)\) a sample of size p. In Fig. 1a we represent the kernel estimate of the joint probability density function of \((Y_1,Y_2)\). In order to compare the probability distribution of the sampled process with the corresponding Gaussian one, we simulate a sample of centered Gaussian vector \((W_1,W_2)\) having the same variance matrix as \((Y_1,Y_2)\) i.e.

where \(\Sigma _{1,2}=\int _0^\infty \sigma _X(t) e^{-t} {\ \mathrm {d}}t = \int _0^\infty e^{-t} (1+t ^{1-2d} ) ^{-1} {\ \mathrm {d}}t \) can be calculated numerically. In Fig. 1b, we represent the kernel estimate of the density of \((W_1,W_2)\). The simulations are done with \(d= .05\). We see that the form of the distribution of sampled process differs widely from Gaussian distribution. Note that for stronger long memory, the difference is visually more difficult to detect.

a The estimated density of the centered couple \((Y_1,Y_2)\) is represented for intervals \(\Delta _j\) having an exponential distribution with mean 1 and Gaussian initial process with autocovariance function \(\sigma _X(t)=(1+t^{0.9})^{-1}\). b Represents the estimated density of the centered Gaussian vector \((W_1,W_2)\) with the same covariance matrix \(\Sigma _{Y_1,Y_2}\) as \((Y_1,Y_2)\). Estimations are calculated on sample of size \(p=50{,}000\)

2.2 Dependence of the sampled process

We are interested in the dependence structure of the \({\mathbf {Y}}\) process. In the following propositions, we provide sufficient conditions to preserve the weak (respectively long) memory after sampling.

Proposition 2.4

Assume Assumption \({\mathcal {H}}\) holds. Let p be a real greater than 1 (\(p \ge 1\)). If there is a positive bounded function \(\sigma _*(.)\), non-increasing on \({\mathbb {R}}^+\), such that

-

1.

\(\vert \sigma _X(t)\vert \le \sigma _*(t),\quad \forall t\in {\mathbb {R}}^+\)

-

2.

\(\displaystyle \int _{{\mathbb {R}}^+} \sigma _*^p(t) d t<\infty \)

then, the sampled process Y has an autocovariance function (2.1) in \(\ell ^p\), i.e \(\displaystyle \sum _{h\ge 0} \vert \sigma _Y(h)\vert ^p <\infty \).

Remark 2

The proposition confirms an intuitive claim: random sampling cannot produce long memory from short memory. The particular case \(p=1\) implies that if \(\mathbf {X}\) has short memory then, the sampled process \(\mathbf {Y}\) has short memory too.

Proof

It is clearly enough to prove that

Since \(\sigma _* \) is a decreasing function, we have

Taking the expectation of the left-hand-side and noting that \(\Delta _h\) and \(T_h\) are independent, we obtain, for every \(a>0\),

Since \(\sigma _*^p(T_h+u)\le \sigma _*^p(T_h)\) and \(u-a\le 0\), we get

It is possible to choose a such that \( P(\Delta _0\in [0,a])<1\). For such a choice we obtain

After summation, the inequalities (2.5) give, for every \(K\ge 0\)

which implies

Then, using (2.4)

and consequently, as \(a-\ell (a)>0\)

\(\square \)

We now consider the case of long memory processes. We give conditions on \(T_1\) that ensure the preservation of the long memory property.

Proposition 2.5

Assume Assumption \({\mathcal {H}}\) holds. We suppose that \(\sigma _X(.)\) is ultimately positive and non-increasing on \({\mathbb {R}}^+\), i.e there exists \(t_{0}\ge 0\) such that \(\sigma _X(.)\) is positive and non-increasing on the interval \([t_0,\infty )\). If \({\mathbb {E}}[T_1]<\infty \), then the long memory is preserved after the subsampling, i.e. \(\int _{ {\mathbb {R}}^+} \vert \sigma _X(x)\vert {\ \mathrm {d}}x =\infty \) implies \(\sum _{h \ge 0} \vert \sigma _Y(h)\vert =\infty \).

Remark 3

In this proposition, we only show that the long memory is preserved in the sense of the non-summability of autocovariance function. Additional assumptions are required to compare the convergence rates of \(\sigma _X\) and \(\sigma _Y\). This question is addressed in Sect. 3 where we impose semi parametric form on \(\sigma _X\).

Remark 4

The assumptions on positivity and the decrease of the auto-covariance function are not too restrictive. They are satisfied in most of studied models. The condition of integrability of intervals \(\Delta _j\) is the most difficult to verify since the underlying process is generally not observed.

Proof

Let \(h_0\) be the (random) first index such that \(T_{h_0}\ge t_0\). For every \(h\ge h_0\),

Summing up gives

Now, taking expectations, and noting that, since \({\mathbb {E}}[T_1]={\mathbb {E}}[\Delta _1]>0\), the law of large numbers implies that \(T_h\xrightarrow []{a.s.} \infty \), and in particular \(h_0<\infty \) a.s., whence

The left hand side is infinite. Since \(\Delta _h\) is independent of \(\sigma _X(T_h)\,\mathbb {I}_{h_0\le h}\), the right hand side is \(\displaystyle {\mathbb {E}}[T_1]\sum _{h\ge 1}{\mathbb {E}}[\sigma _X(T_h)\,\mathbb {I}_{h_0\le h}]\). Consequently, since \({\mathbb {E}}[T_1]<\infty \), we have

It remains to be noted that \({\mathbb {E}}[h_0]<\infty \) [see for example Feller (1966) p. 185], which implies

leading, via (2.8) to \(\sum _{h\ge 1}\vert {\mathbb {E}}[\sigma _X(T_h)]\vert =\infty \). \(\square \)

3 Long memory processes

We consider a long memory process \(\mathbf {X}\) and we impose a semi parametric form to autocovariance function. We assume that the autocovariance \(\sigma _X\) is regularly varying function at infinity of the form

where \(0<d<1/2\) and L is ultimately non-increasing and slowly varying at infinity, in the sense that L is positive on \([t_0,\infty )\) for some \(t_0>0\) and

This class of models contains for instance CARFIMA models.

The parameter d characterizes the intensity of the memory of \(\mathbf{X}\). In the following propositions, we evaluate the long memory parameter of the sampled process \({\mathbf {Y}}\) as a function of d and the probability distribution of \(T_1\).

3.1 Preservation of the memory when \({\mathbb {E}}[T_{1}]<\infty \)

Theorem 3.1

Under Assumption \({\mathcal {H}}\) and (3.1), if \(0<{\mathbb {E}}[T_1]<\infty \), the discrete time process \(\mathbf {Y}\) has a long memory and its covariance function behaves as

Remark 5

We can rewrite

where \(\tilde{L}\) is slowly varying at infinity and \( \tilde{L}(h)\sim ({\mathbb {E}}[T_1])^{-1+2d}L(h)\) as \(h\rightarrow \infty \). In particular, \({\mathbf {X}}\) and \({\mathbf {Y}}\) have the same memory parameter d.

Proof

-

We show first that

$$\begin{aligned} \liminf _{h\rightarrow \infty }\frac{\sigma _Y(h)}{(h{\mathbb {E}}[T_1])^ {-1+2d}L(h)}\ge 1. \end{aligned}$$Let \(0<c<{\mathbb {E}}[T_1]\), and \(h\in {\mathbb {N}}\) such that \(ch\ge 1\),

$$\begin{aligned} \sigma _Y(h)\ge & {} {\mathbb {E}}\left[ \sigma _X(T_h)\,\mathbb {I}_{T_h>ch}\right] \ge \inf _{t>ch}\{L(t)t^{2d}\}{\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h}\right] . \end{aligned}$$Thanks to Hölder inequality,

$$\begin{aligned} \left( P(T_h> ch)\right) ^2 \le {\mathbb {E}}[T_h] {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h}\right] , \end{aligned}$$that is

$$\begin{aligned} {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h}\right] \ge \frac{\left( P(T_h> ch)\right) ^2}{h{\mathbb {E}}[T_1]}. \end{aligned}$$Summarizing,

$$\begin{aligned} \sigma _Y(h)\ge & {} \inf _{t>ch}\{L(t)t^{2d}\} \frac{\left( P(T_h> ch)\right) ^2}{h{\mathbb {E}}[T_1]}, \nonumber \\&\frac{\sigma _Y(h)}{ (h{\mathbb {E}}[T_1])^{-1+2d}L(h)}\ge \inf _{t>ch}\{L(t)t^{2d}\} \frac{\left( P(T_h> ch)\right) ^2}{(h{\mathbb {E}}[T_1])^{2d}L(h)}. \end{aligned}$$(3.2)Using Bingham et al. (1989) (Th 1.5.3, p23), we obtain, since \(d > 0 \)

$$\begin{aligned} \inf _{t\ge ch}\{L(t)t^{2d}\} \sim L(ch)(ch)^{2d}, \text { as } h \rightarrow \infty . \end{aligned}$$(3.3)The law of large numbers implies that \( T_h/h\xrightarrow []{a.s.} {\mathbb {E}}[T_1]\). As \(c<{\mathbb {E}}[T_1]\), we have \(P(T_{h}>ch)\rightarrow 1\) and the r.h.s. of (3.2) tends to \(\displaystyle \left( c/{\mathbb {E}}[T_1]\right) ^{2d}\) as \(h\rightarrow \infty \). Finally, for all \(c<{\mathbb {E}}[T_1]\),

$$\begin{aligned} \liminf _{h\rightarrow \infty }\frac{\sigma _Y(h)}{(h{\mathbb {E}}[T_1])^ {-1+2d}L(h)}\ge \left( \frac{c}{{\mathbb {E}}[T_1]}\right) ^{2d}. \end{aligned}$$Taking the limit as \(c\rightarrow {\mathbb {E}}[T_1]\), we get the lower bound.

-

Let us now prove

$$\begin{aligned} \limsup _{h\rightarrow \infty }\frac{\sigma _Y(h)}{(h{\mathbb {E}}[T_1])^{-1+2d}L(h)}\le 1. \end{aligned}$$We use a proof similar to that presented in Shi et al. (2010) (Theorem 1). We denote for \(h\ge 1\) and \(0<s<1\),

$$\begin{aligned} \mu _h= & {} {\mathbb {E}}[T_h]=h{\mathbb {E}}[T_1],\\ T_{h,s}= & {} \sum _{j=0}^{h-1}\Delta _j\,\mathbb {I}_{\Delta _j\le \mu _h^s/\sqrt{h}} \ ,\\ \mu _{h,s}= & {} {\mathbb {E}}\left[ T_{h,s}\right] =h{\mathbb {E}}\left[ \Delta _0 \,\mathbb {I}_{\Delta _0\le \mu _h^s/\sqrt{h}}\right] . \end{aligned}$$Since \({\mathbb {E}}[T_1]<\infty \), we have for \(\frac{1}{2}<s<1\), \(\mu _{h,s}\sim \mu _h\) as \(h\rightarrow \infty \). Let \(\frac{1}{2}<s<\tau <1\), \(t_0\) such that L(.) is non-increasing on \([t_0,\infty )\) and h such that \(\mu _{h,s}-\mu _{h,s}^\tau \ge t_0\),

$$\begin{aligned} \sigma _Y(h)= & {} {\mathbb {E}}\left[ T_h^{-1+2d}L(T_h) \,\mathbb {I}_{T_{h,s}\ge \mu _{h,s}-\mu _{h,s}^\tau }\right] +{\mathbb {E}}\left[ T_h^{-1+2d}L(T_h)\,\mathbb {I}_{T_{h,s}<\mu _{h,s}-\mu _{h,s}^\tau }\right] \\=: & {} M_1+M_2. \end{aligned}$$We will now establish upper bounds for the terms M1 and M2. We have

$$\begin{aligned} M_1\le & {} {\mathbb {E}}\left[ T_{h,s}^{-1+2d}L(T_{h,s}) \,\mathbb {I}_{T_{h,s}\ge \mu _{h,s}-\mu _{h,s}^\tau }\right] \le \left( \mu _{h,s}-\mu _{h,s}^\tau \right) ^{-1+2d}L(\mu _{h,s}-\mu _{h,s}^\tau ) \nonumber \\= & {} (h{\mathbb {E}}[T_1])^{-1+2d}L(h)\left( \frac{\mu _{h,s} -\mu _{h,s}^\tau }{h{\mathbb {E}}[T_1]}\right) ^{-1+2d}\frac{L(\mu _{h,s} -\mu _{h,s}^\tau )}{L(h)} . \end{aligned}$$(3.4)As \(\tau <1\) and \(1/2<s<1\), \(\left( \frac{\mu _{h,s}-\mu _{h,s}^\tau }{h{\mathbb {E}}[T_1]}\right) ^{-1+2d}\rightarrow 1\) as \(h\rightarrow \infty \). Then,

$$\begin{aligned} \frac{L(\mu _{h,s}-\mu _{h,s}^\tau )}{L(h)}=\frac{L\left( h{\mathbb {E}}[T_1] \frac{\mu _{h,s}-\mu _{h,s}^\tau }{h{\mathbb {E}}[T_1]}\right) }{L(h{\mathbb {E}}[T_1])}\frac{L(h{\mathbb {E}}[T_1])}{L(h)}. \end{aligned}$$As we have uniform convergence of \(\lambda \mapsto \frac{L(h{\mathbb {E}}[T_1] \lambda )}{L(h{\mathbb {E}}[T_1])}\) to 1 (as \(h \rightarrow \infty \)) in each interval [a, b] and as \(\frac{\mu _{h,s}-\mu _{h,s}^\tau }{h{\mathbb {E}}[T_1]}\rightarrow 1\), we get

$$\begin{aligned} \frac{L(\mu _{h,s}-\mu _{h,s}^\tau )}{L(h)}\rightarrow 1, \end{aligned}$$as \(h\rightarrow \infty \). We obtain

$$\begin{aligned} M_1\le \left( \mu _{h,s}-\mu _{h,s}^\tau \right) ^{-1+2d}L(\mu _{h,s}-\mu _{h,s}^\tau )\sim (h{\mathbb {E}}[T_1])^{-1+2d}L(h). \end{aligned}$$(3.5)Since \(\displaystyle \sup _{t\in {\mathbb {R}}^+}\vert \sigma _X(t)\vert =\sigma _X(0) <\infty \), we have

$$\begin{aligned} M_2\le & {} \sigma _X(0) P \left( T_{h,s}<\mu _{h,s}-\mu _{h,s}^\tau \right) = \sigma _X(0) P \left( -T_{h,s}+{\mathbb {E}}[T_{h,s}]>\mu _{h,s}^\tau \right) . \end{aligned}$$We apply Hoeffding inequality to variables \(Z_j=-\Delta _j \,\mathbb {I}_{\Delta _j\le \mu _h^s/\sqrt{h}}\) which are a.s in \([-\frac{\mu _h^s}{\sqrt{h}} ,0] \) to get,

$$\begin{aligned} M_2\le \sigma _X(0) \exp \left( -2\left( \frac{\mu _{h,s}^\tau }{\mu _h^s}\right) ^2\right) \end{aligned}$$and \(\displaystyle \left( \frac{\mu _{h,s}^\tau }{\mu _h^s}\right) ^2\sim (h{\mathbb {E}}[T_1])^{2(\tau -s)}\). Finally

$$\begin{aligned} M_2=o((h{\mathbb {E}}[T_1])^{-1+2d}L(h)). \end{aligned}$$(3.6)

\(\square \)

3.2 Decrease of memory

The phenomenon is the same as in the discrete case [see Philippe and Viano (2010)]: starting from a long memory process, a heavy tailed sampling distribution can lead to a short memory process.

Proposition 3.2

Assume that the covariance of \(\mathbf {X}\) satisfies

where \(0<d<1/2\). If there exists \(\beta \in (0,1)\) such that

then, there exists \(C>0\) such that

Remark 6

The condition (3.8) implies that \({\mathbb {E}}[T_1^\beta ]= \infty \). As \(\beta \in (0,1)\), the first moment of increments is infinite contrary to assumption of Sect. 3.1.

Proof

From assumption (3.7),

We have

As \({\mathbb {E}}[\min \{1,T_h^{-1+2d}\}]\le 1\), the integral in the right hand side is finite

Since \(-2+2d \in [-2 \, , \, -1[\), we also have

Thus, the integration by parts can be applied, and we get

and

From assumption (3.8) on the tail of the sampling law, it follows that, there exists \(C>0\) and \(x_0\ge 1\) such that

Furthermore for \(x\in [1,x_0],\)

We obtain: \(\forall x\ge 1, ~ P(T_1 >x)\ge \tilde{C} x^{-\beta } \) with \(\tilde{C}=Cx_0^{-\beta }\), and then

Gathering (3.10) and (3.11) then gives

Since

we obtain

Thus (3.9) is proven with \(C = c D \frac{1-2d}{\beta } \). \(\square \)

Under some additional assumptions, we show that the bound obtained in Proposition 3.2 is equal to the convergence rate (up to a multiplicative constant).

Proposition 3.3

Assume that

where \(0<d<1/2\) and where L is slowly varying at infinity and ultimately monotone. We denote

If \(\beta \in (0,1)\) then, for every \(\varepsilon > 0\), there exists \(C_\varepsilon >0\) such that

Proof

Let \(\varepsilon > 0 \). We have

where

Using Proposition 1.3.6 in Bingham et al. (1989),

Moreover \(\delta >\frac{1}{\beta }\). From (3.12), this implies \({\mathbb {E}}[T_1^{1/\delta }]<\infty \). Then, the law of large numbers of Marcinkiewicz-Zygmund [see Stout (1974) Theorem 3.2.3] yields

From (3.14) and (3.15) we obtain

Therefore by Fatou’s Lemma, we get

This convergence implies the inequality (3.13). \(\square \)

Remark 7

In this context the long memory parameter d of the initial process \(\mathbf {X}\) is not identifiable using the sampled process. Information on probability distribution of \(\Delta _1\) is required.

4 Limit theorems in semiparametric case

We consider the process of partial sums

In Theorem 4.2, we show that if \(\mathbf {X}\) is a Gaussian process and \({\mathbf {X}}\) and \({\mathbf {Y}}\) have the same long memory parameter, the normalized partial sum process converges to a fractional Brownian motion. According to Proposition 2.3, Gaussianity is lost after sampling, however we get the classical behavior obtained by Taqqu (1975) and Davydov (1970).

4.1 Convergence of the partial sum process

To prove the convergence of the normalized partial sum process, we first need a result on the convergence in probability of conditional variance of \(S_n\).

Lemma 4.1

Let \(\mathbf {X}\) be a Gaussian process with regularly varying covariance function

where \(0<d<1/2\) and where L is slowly varying at infinity and ultimately non-increasing.

If \({\mathbb {E}}[T_1]<\infty \), then we have

where \(\gamma _d:=\frac{{\mathbb {E}}[T_1]^{-1+2d}}{d(1+2d)}\).

Proof

See “Appendix”. \(\square \)

Theorem 4.2

Assume Assumption \({\mathcal {H}}\) holds. If \(\mathbf {X}\) is a Gaussian process with regularly varying covariance function \(\sigma _X(t) = L(t) t^{-1+2d}\), with \(0<d<1/2\) and L slowly varying at infinity and ultimately non-increasing (Hypothesis 3.1). Then, if \({\mathbb {E}}[T_1]<\infty \), we get

where \(B_{\frac{1}{2}+d}\) is the fractional Brownian motion with parameter \(\frac{1}{2}+d\), and where \(\gamma _d\) is defined in Lemma 4.1.

Proof

We first prove the weak convergence in finite-dimensional distributions of

to the corresponding finite-dimensional distributions of \(B_{\frac{1}{2}+d}(.)\).

It suffices to show that for every \(k\ge 1\), \((b_1,~\dots ~,b_k)\in {\mathbb {R}}^k\), \(0\le t_1,\dots ,t_k \le 1\),

satisfies

If \(t_1=\dots =t_k=0\), then \(\gamma _d^{-1/2}L(n)^{-1/2}n^{-1/2-d} \sum _{i=1}^k b_i S_n(t_i)=\sum _{i=1}^k b_i B_{\frac{1}{2}+d}(t_i)=0\). So we fix n large enough to have \([n\max _i(t_i)]\ge 1\) and denote \(T^{(n)}=(T_1,\dots ,T_{[n\max _i(t_i)]})\). The characteristic function of \(A_n\) is

Moreover, we have

By Lemma 4.1,

Therefore

and for \(t_i>t_j\)

Finally, we have

where \(r_{\frac{1}{2}+d}\) is the covariance function of a fractional Brownian motion, and hence

Therefore, applying dominated convergence theorem, we get

The sequence of partial-sum processes \(L(n)^{-1/2} n^{-1/2-d}S_n(.)\) is tight with respect to the uniform norm [see Giraitis et al. (2012) Prop 4.4.2 p78, for the proof of the tightness] and then we get the convergence in \(\mathcal {D}[0,1]\) with the uniform metric. \(\square \)

4.2 Estimation of the long memory parameter

An immediate consequence of this limit theorem is to provide a nonparametric estimation of the long memory parameter d using the well-known R/S statistics. This is a heuristic method for estimating the long memory parameter. To validate the convergence of more efficient estimates (e.g. Whittle’s estimate or estimators based on the spectral approach), a non trivial study of the asymptotic properties of periodogram is required. Indeed the sampled process do not satisfied the classical assumptions (Gaussian process, Linear process with independent and identically distributed innovations) under which the properties are established [see Giraitis et al. (2012), Beran et al. (2013)].

The R/S statistic is defined as the quotient between \(R_n\) and \(S_n\) where

and

Proposition 4.3

Under the same assumptions as Theorem 4.2, we have

where \(B_{\frac{1}{2}+d}^0(t)=B_{\frac{1}{2}+d}(t)-tB_{\frac{1}{2}+d}(1)\) is a fractional Brownian bridge and \(\gamma _d\) is a constant defined in Lemma 4.1.

Proof

Using the equality

and the convergence of the partial-sum process given in Theorem 4.2, we get

Then, we establish the convergence in probability of \(S_n^2\) defined in (4.5). As

we have for \(\varepsilon >0\)

and

For \((s,t)\in ({\mathbb {R}}^+)^2\), we decompose \(X_s^2\) and \(X_t^2\) in the complete orthogonal system of Hermite polynomials \((H_k)_{k\ge 0}\):

thus, we get

Using the orthogonality property of Hermite polynomials for a bivariate normal density with unit variances [see Giraitis et al. (2012), Prop 2.4.1], we obtain

Finally,

If \(0\le d\le 1/4\), we apply Proposition 2.4 with \(p=1\) and the function \(\sigma _X^2\) to obtain

If \(1/4< d< 1/2\), Theorem 3.1 can be applied to \(\sigma _X^2\), and we get

Since L is positive, ultimately non-increasing, it admits a limit \(L(x)\xrightarrow []{x\rightarrow \infty } L_\infty \), and so

According to Giraitis et al. (2012) (Proposition 3.3.1 page 43), we get

Therefore, we get in both cases

We conclude that \(S_n\xrightarrow [n\rightarrow \infty ]{p} \sqrt{\sigma _X(0)}\) and

\(\square \)

An application of this result is the estimation of long-memory parameter by the R/S estimate [see e.g. Mandelbrot and Wallis (1969), Li et al. (2019)]. We assume that the autocovariance function satisfies the following condition \(\sigma _X(t) \sim c t^{2d-1} \) as \(t\rightarrow \infty \) (or equivalently \(L(t) \rightarrow c>0 \) as \(t\rightarrow \infty \)). Taking the logarithm of both sides of (4.6) we get the heuristic identity

where \((\varepsilon _n)_n\) is a sequence of random variables which converges to zero in probability. Then we estimate the slope of the regression line of \((\log (n),\log (R_n/S_n))\), which gives the R/S estimate of d. Because of the asymptotic linear relation, we do not fit the straight line on all points, but only on values of n large enough.

Example 2

Let us consider the same model as in Example 1, i.e. the intervals \(\Delta _j\) have an exponential distribution and \(\mathbf {X}\) is a Gaussian process with autocovariance function (2.2).

To evaluate the effects of random sampling on the R/S estimator, we also estimate the long memory parameter of Gaussian FARIMA(0, d, 0) processes. A FARIMA(0, d, 0) process is a stationary discrete-time process whose autocovariance function behaves as

See Giraitis et al. (2012) and Beran et al. (2013) for detailed presentations of this model.

We compare the performance of R/S estimate \({\hat{d}}_n\) for different values of \({\mathbb {E}}[T_1] \in \{ 1/2, \ 1, \ 2 \}\) and different sample sizes \(n\in \{1000,\ 5000, \ 100{,}000\}\). We fix \(d = 0.25\), the long memory parameter is the same for all simulated processes. We regress \(\log (R_k/S_k)\) against \(\log (k)\) with \(k > m\). The value of m is fixed according to the bias-variance tradeoff on the FARIMA model. In Fig. 2 we represent the boxplots of estimation errors. For all the models, the bias and the variance decrease as function of the sample size n. The boxplots show that the precision is of the same order of magnitude for sampled processes and for the FARIMA process. The R/S estimate is less efficient in terms of mean squared error for the sampled processes in particular for the small values of \({\mathbb {E}}[T_1]\). This effect can be explained by the fact that continuous time process is observed inside a random time interval \([0,T_n]\) with \(T_n\sim {\mathbb {E}}[T_1] n\) as \(n\rightarrow \infty \).

Boxplots of the estimation error \({\hat{d}}_n-d\) and representation of the mean squared error (MSE) \({\mathbb {E}}\left[ ({\hat{d}}_n -d)^2\right] \) for different values of n and \({\mathbb {E}}[T_1]\). The samples are simulated from the models defined in Example 2. The true value of the long memory parameter is \(d=0.25\). Estimations are done on \(p=500\) independent copies

References

Adorf HM (1995) Interpolation of irregularly sampled data series—a survey. In: Astronomical society of the pacific conference series, vol 77

Beran J, Feng Y, Ghosh S, Kulik R (2013) Long-memory processes. Probabilistic properties and statistical methods. Springer, Heidelberg

Bingham NH, Goldie CM, Teugels JL (1989) Regular variation. In: Encyclopedia of mathematics and its applications, vol 27, Cambridge University Press, Cambridge

Brockwell PJ, Davis RA, Yang Y (2007) Continuous-time Gaussian autoregression. Stat Sin 17(1):63–80

Broersen PM (2007) Time series models for spectral analysis of irregular data far beyond the mean data rate. Meas Sci Technol 19(1):015103

Chambers MJ (1996) The estimation of continuous parameter long-memory time series models. Econom Theory 12(2):374–390

Comte F (1996) Simulation and estimation of long memory continuous time models. J Time Ser Anal 17(1):19–36

Comte F, Renault E (1996) Long memory continuous time models. J Econom 73(1):101–149

Davydov YA (1970) The invariance principle for stationary processes. Theory Probab Appl 15:487–498

Duffie D, Glynn P (2004) Estimation of continuous-time markov processes sampled at random time intervals. Econometrica 72:1773–1808

Elorrieta F, Eyheramendy S, Palma W (2019) Discrete-time autoregressive model for unequally spaced time-series observations. Astron Astrophys 627:A120

Eyheramendy S, Elorrieta F, Palma W (2018) An irregular discrete time series model to identify residuals with autocorrelation in astronomical light curves. Mon Not R Astron Soc 481:4311–4322

Feller W (1966) An introduction to probability theory and its applications, vol 2. Wiley, New York

Friedman M (1962) The interpolation of time series by related series. J Am Stat Assoc 57(300):729–757

Giraitis L, Koul HL, Surgailis D (2012) Large sample inference for long memory processes. Imperial College Press, London

Jones RH (1981) Fitting a continuous time autoregression to discrete data. In: Applied time series analysis II, Elsevier, pp 651–682

Jones RH, Tryon PV (1987) Continuous time series models for unequally spaced data applied to modeling atomic clocks. SIAM J Sci Stat Comput 8(1):71–81

Li D, Robinson PM, Shang HL (2019) Long-range dependent curve time series. J Am Stat Assoc. https://doi.org/10.1080/01621459.2019.1604362

Mandelbrot BB, Wallis JR (1969) Robustness of the rescaled range r/s in the measurement of noncyclic long run statistical dependence. Water Resour Res 5:967–988

Masry E, Lui M-C (1975) A consistent estimate of the spectrum by random sampling of the time series. SIAM J Appl Math 28(4):793–810

Masry K-SLE (1994) Spectral estimation of continuous-time stationary processes from random sampling. Stoch Process Appl 52:39–64

Mayo WT (1978) Spectrum measurements with laser velocimeters. In: Hansen BW (ed) Proceedings of the dynamic flow conference 1978 on dynamic measurements in unsteady flows, Springer, Dordrecht, Netherlands, pp 851–868

Mykland YAPA (2003) The effects of random and discrete sampling when estimating continuous-time diffusions. Econometrica 71:483–549

Nieto-Barajas LE, Sinha T (2014) Bayesian interpolation of unequally spaced time series. Stoch Environ Res Risk Assess 29:577–587

Philippe A, Viano M-C (2010) Random sampling of long-memory stationary processes. J Stat Plan Inference 140(5):1110–1124

Scargle JD (1982) Studies in astronomical time series analysis. ii—statistical aspects of spectral analysis of unevenly spaced data. Astrophys J 263:835–853

Shi X, Wu Y, Liu Y (2010) A note on asymptotic approximations of inverse moments of nonnegative random variables. Stat Probab Lett 80(15–16):1260–1264

Stout WF (1974) Almost sure convergence. In: Probability and mathematical statistics, vol 24, Academic Press, New York

Taqqu MS (1975) Weak convergence to fractional Brownian motion and to the Rosenblatt process. Z Wahrscheinlichkeitstheorie Verw Gebiete 31:287–302

Tsai H (2009) On continuous-time autoregressive fractionally integrated moving average processes. Bernoulli 15(1):178–194

Tsai H, Chan KS (2005a) Maximum likelihood estimation of linear continuous time long memory processes with discrete time data. J R Stat Soc Ser B Stat Methodol 67(5):703–716

Tsai H, Chan KS (2005b) Quasi-maximum likelihood estimation for a class of continuous-time long-memory processes. J Time Ser Anal 26(5):691–713

Viano M-C, Deniau C, Oppenheim G (1994) Continuous-time fractional ARMA processes. Stat Probab Lett 21(4):323–336

Acknowledgements

We thank the Associate Editor and the referees for valuable comments that led to an improved this article.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

To prove Lemma 4.1, we need the following intermediate result:

Lemma 5.1

If \({\mathbb {E}}[T_1]<\infty \) and \(\mathbf {X}\) has a regularly varying covariance function

with \(0<d<1/2\) and L slowly varying at infinity and ultimately non-increasing. Then,

Proof

By Theorem 3.1, we have \( {\mathbb {E}}[\sigma _X(T_h)] \underset{h\rightarrow \infty }{\sim }L(h)(h{\mathbb {E}}[T_1])^{-1+2d}\). To get the result, it is enough to prove that

To prove the asymptotic behavior of \({\mathbb {E}}[\sigma _X(T_h)^2]\), we will follow a similar proof as theorem 3.1:

-

Let \(0<c<{\mathbb {E}}[T_1]\), and \(h\in {\mathbb {N}}\) such that \(ch\ge 1\),

$$\begin{aligned} {\mathbb {E}}[\sigma _X(T_h)^2]\ge & {} {\mathbb {E}}\left[ \sigma _X(T_h)^2\,\mathbb {I}_{T_h>ch}\right] \ge {\mathbb {E}}\left[ L(T_h)^2T_h^{-2+4d}\,\mathbb {I}_{T_h> ch}\right] \\\ge & {} \inf _{t>ch}\{L(t)^2t^{4d}\}{\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h^2}\right] . \end{aligned}$$Thanks to Jensen and Hölder inequalities,

$$\begin{aligned} {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h^2}\right] \ge {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h}\right] ^2 \text { and } P(T_h> ch)^2 \le {\mathbb {E}}[T_h] {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h}\right] , \end{aligned}$$that is

$$\begin{aligned} {\mathbb {E}}\left[ \frac{\,\mathbb {I}_{T_h> ch}}{T_h^2}\right] \ge \frac{P(T_h> ch)^4}{{\mathbb {E}}[T_h]^2}. \end{aligned}$$Summarizing,

$$\begin{aligned} \frac{{\mathbb {E}}[\sigma _X(T_h)^2] }{ L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}\ge \frac{\inf _{t>ch}\{L(t)^2t^{4d}\}}{L(h)^2h^{4d}{\mathbb {E}}[T_1]^{4d}}P(T_h> ch)^4. \end{aligned}$$(5.2)Then, for \(c<{\mathbb {E}}[T_1]\), we have \(P(T_{h}>ch)\rightarrow 1\) and \(\inf _{t>ch}\{L(t)^2t^{4d}\}\sim L(ch)^2(ch)^{4d}\). Finally, for all \(c<{\mathbb {E}}[T_1]\),

$$\begin{aligned} \liminf _{h\rightarrow \infty }\frac{{\mathbb {E}}[\sigma _X(T_h)^2] }{ L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}\ge \left( \frac{c}{{\mathbb {E}}[T_1]}\right) ^{4d}. \end{aligned}$$Taking the limit as \(c\rightarrow {\mathbb {E}}[T_1]\), we get

$$\begin{aligned} \liminf _{h\rightarrow \infty }\frac{{\mathbb {E}}[\sigma _X(T_h)^2] }{ L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}\ge 1. \end{aligned}$$ -

Let \(\frac{1}{2}<s<\tau <1\), \(t_0\) such that L(.) is non-increasing and positive on \([t_0,\infty )\) and h such that \(\mu _{h,s}-\mu _{h,s}^\tau \ge t_0\), with the same notation as Theorem 3.1,

$$\begin{aligned} {\mathbb {E}}[\sigma _X(T_h)^2]= & {} {\mathbb {E}}\left[ L(T_h)^2T_h^{-2+4d} \,\mathbb {I}_{T_{h,s}\ge \mu _{h,s}-\mu _{h,s}^\tau }\right] +{\mathbb {E}}\left[ \sigma (T_h)^2\,\mathbb {I}_{T_{h,s}<\mu _{h,s}-\mu _{h,s}^\tau }\right] \\\le & {} L(\mu _{h,s}-\mu _{h,s}^\tau )^2\left( \mu _{h,s}-\mu _{h,s} ^\tau \right) ^{-2+4d}+\sigma _X(0)^2 P \left( T_{h,s}<\mu _{h,s}-\mu _{h,s}^\tau \right) . \end{aligned}$$We get

$$\begin{aligned} \frac{{\mathbb {E}}[\sigma _X(T_h)^2] }{ L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}&\le \left( \frac{L(\mu _{h,s}-\mu _{h,s}^\tau )}{L(h)}\right) ^{2} \left( \frac{\mu _{h,s}-\mu _{h,s}^\tau }{h{\mathbb {E}}[T_1]}\right) ^{-2+4d}\\ {}&\quad +\sigma _X(0)^2 \frac{P \left( T_{h,s}<\mu _{h,s}-\mu _{h,s}^\tau \right) }{L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}, \end{aligned}$$and finally

$$\begin{aligned} \limsup _{h\rightarrow \infty }\frac{{\mathbb {E}}[\sigma _X(T_h)^2] }{ L(h)^2(h{\mathbb {E}}[T_1])^{-2+4d}}\le 1. \end{aligned}$$

\(\square \)

Proof of Lemma 4.1:

Denote

We want to prove that \(W_n\) converges in probability to \(\gamma _d\). To do this, we will show that \({\mathbb {E}}[W_n]\xrightarrow [n\rightarrow \infty ]{} \gamma _d\) and \({\mathrm {Var}}(W_n)\xrightarrow [n\rightarrow \infty ]{} 0\).

-

As \(\mathbf {X}\) is a centered process \(E[W_n]=L(n)^{-1}n^{-1-2d}{\mathrm {Var}}(Y_1+\dots +Y_n)\). By Theorem 3.1, we have

$$\begin{aligned} \sigma _Y(h) \sim L(h)(h{\mathbb {E}}[T_1])^{-1+2d} \qquad h\rightarrow \infty , \end{aligned}$$then

$$\begin{aligned} L(n)^{-1}n^{-1-2d}{\mathrm {Var}}(Y_1+\dots +Y_n)\xrightarrow [n\rightarrow \infty ]{} \gamma _d, \end{aligned}$$(5.3)[see Giraitis et al. (2012) Proposition 3.3.1, page 43]. Therefore we obtain

$$\begin{aligned} E[W_n]\xrightarrow [n\rightarrow \infty ]{} \gamma _d . \end{aligned}$$ -

Furthermore,

$$\begin{aligned} {\mathrm {Var}}(W_n)&=L(n)^{-2}n^{-2-4d}{\mathrm {Var}}\left( \sum _{i=1}^n\sum _{j=1}^n \sigma _X(T_j-T_i)\right) \\&\le L(n)^{-2}n^{-2-4d}\left( \sum _{i=1}^n\sum _{j=1}^n \sqrt{{\mathrm {Var}}(\sigma _X(T_j-T_i))}\right) ^2\\&=\left( 2n^{-1-2d}L(n)^{-1} \sum _{h=1}^n(n-h)\sqrt{{\mathrm {Var}}(\sigma _X(T_h))}\right) ^2. \end{aligned}$$Then, by Lemma 5.1, \(\sqrt{{\mathrm {Var}}(\sigma _X(T_h))}=\circ (L(h)h^{-1+2d})\) and \( 2 \sum _{h=1}^n (n-h)L(h)h^{-1+2d}\sim \frac{L(n)n^{1+2d}}{d(1+2d)}\). We get

$$\begin{aligned} 2 \sum _{h=1}^n(n-h)\sqrt{{\mathrm {Var}}(\sigma _X(T_h))}=\circ (L(n)n^{1+2d}). \end{aligned}$$Finally, \({\mathrm {Var}}(W_n)=\circ (1)\) which means that \({\mathrm {Var}}(W_n)\xrightarrow [n\rightarrow \infty ]{} 0\). We obtain

$$\begin{aligned} W_{n}\xrightarrow [n\rightarrow \infty ]{L^2,~ p} \gamma _d. \end{aligned}$$

Rights and permissions

About this article

Cite this article

Philippe, A., Robet, C. & Viano, MC. Random discretization of stationary continuous time processes. Metrika 84, 375–400 (2021). https://doi.org/10.1007/s00184-020-00783-1

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00184-020-00783-1