Abstract

Here, we develop numerical methods for finite-state mean-field games (MFGs) that satisfy a monotonicity condition. MFGs are determined by a system of differential equations with initial and terminal boundary conditions. These non-standard conditions make the numerical approximation of MFGs difficult. Using the monotonicity condition, we build a flow that is a contraction and whose fixed points solve both for stationary and time-dependent MFGs. We illustrate our methods with a MFG that models the paradigm-shift problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The mean-field game (MFG) framework [24, 25, 27, 28] models systems with many rational players (see the surveys [18] and [19]). In finite-state MFGs, players switch between a finite number of states (see [16] for discrete-time and [7, 14, 17, 22], and [23] for continuous-time problems). Finite-state MFGs have applications in socio-economic problems, for example, in paradigm-shift and consumer choice models [8, 20, 21] or in corruption models [26]. They also arise in the approximation of continuous-state MFGs [1, 3, 6]. The MFG framework is a major paradigm change in the analysis of N-agent games. MFGs are an alternative approach to particle or agent-based models, which frequently are intractable from the analytical and numerical point of view and often provide no insight on the qualitative properties of the models. Finite-state MFGs are amenable to analytical tools and flexible enough to address a wide range of applications and to provide quantitative and qualitative information. However, in many cases of interest, they have no simple closed-form solution. Hence, the development of numerical methods is critical to applications of MFGs.

Finite-state MFGs comprise systems of ordinary differential equations with initial-terminal boundary conditions. Because of these conditions, the numerical computation of solutions is challenging. Often, MFGs satisfy a monotonicity condition that was first used in [27] and [28] to study the uniqueness of solutions. Besides the uniqueness of solutions, monotonicity implies the long-time convergence of MFGs (see [14] and [17] for finite-state models and [10] and [11] for continuous-state models). Moreover, monotonicity conditions were used in [15] to prove the existence of solutions to MFGs and in [6] to construct numerical methods for stationary MFGs. Here, we consider MFGs that satisfy a monotonicity condition and develop a numerical method to compute their solutions. For stationary problems, our method is a modification of the one in [6]. Our main advance here is how we handle initial-terminal boundary conditions, to which the methods from [6] cannot be applied directly.

We consider MFGs in which each player can be at a state in \(I_d=\{1,\ldots ,d\}\), \(d\in {\mathbb {N}}\), \(d>1\), the players’ state space. Let \(\mathcal {S}^d=\{\theta \in ({\mathbb {R}}_0^+)^d : \sum _{i=1}^d \theta ^i=1\}\) be the probability simplex in \(I_d\). For a time horizon, \(T>0\), the macroscopic description of the game is determined by a path \(\theta : [0,T] \rightarrow \mathcal {S}^d\) that gives the probability distribution of the players in \(I_d\). All players seek to minimize an identical cost. Each coordinate, \(u^i(t)\), of the value function, \(u: [0,T] \rightarrow {\mathbb {R}}^d\), is the minimum cost for a typical player at state \(i\in I_d\) at time \(0\le t\le T\). Finally, at the initial time, the players are distributed according to the probability vector \(\theta _0\in \mathcal {S}^d\) and, at the terminal time, are charged a cost \(u_T\in {\mathbb {R}}^d\) that depends on their state.

In the framework presented in [16], finite-state MFGs have a Hamiltonian, \(h:{\mathbb {R}}^d\times \mathcal {S}^d\times I_d\rightarrow {\mathbb {R}}\), and a switching rate, \(\alpha ^*_i:{\mathbb {R}}^d\times \mathcal {S}^d\times I_d\rightarrow {\mathbb {R}}_0^+\), given by

where \(\Delta _i:{\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\) is the difference operator

We suppose that h and \(\alpha ^*\) satisfy the assumptions discussed in Sect. 2. Given the Hamiltonian and the switching rate, we assemble the following system of differential equations:

which, together with initial-terminal data

with \(\bar{\theta }_0\in \mathcal {S}^d\) and \(\bar{u}_T\in {\mathbb {R}}^d\), determines the MFG.

Solving (2) under the non-standard boundary condition (3) is a fundamental issue in time-dependent MFGs. There are several ways to address this issue, although prior approaches are not completely satisfactory. First, we can solve (2) using initial conditions \(\theta (0)=\bar{\theta }_0\) and \(u(0)=u_0\) and then solve for \(u_0\) such that \(u(T)=\bar{u}_T\). However, this requires solving (2) multiple times, which is computationally expensive. A more fundamental difficulty arises in the numerical approximation of continuous-state MFGs by finite-state MFGs. There, the Hamilton–Jacobi equation is a backward parabolic equation whose initial-value problem is ill-posed. Thus, a possible way to solve (2) is to use a Newton-like iteration. This idea was developed in [1, 5] and used to solve a finite-difference scheme for a continuous-state MFG. However, Newton’s method involves inverting large matrices, whereas it is convenient to have algorithms that do not require matrix inversions. A second approach is to use a fixed-point iteration as in [12, 13]. Unfortunately, this iteration is not guaranteed to converge. A third approach (see [20, 21]) is to solve the master equation, which is a partial differential equation whose characteristics are given by (2). To approximate the master equation, we can use a finite-difference method constructed by solving an N-player problem. Unfortunately, even for a modest number of states, this approach is computationally expensive.

Our approach to the numerical solution of (2) relies on the monotonicity of the operator, \(A:{\mathbb {R}}^d\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\times {\mathbb {R}}^d\), given by

More precisely, we assume that A is monotone (see Assumption 2) in the sense that

for all \(\theta , \tilde{\theta }\in \mathcal {S}^d\) and \(u, \tilde{u}\in {\mathbb {R}}^d\). Building upon the ideas in [6] for stationary problems (also see the approaches for stationary problems in [4, 9, 29, 30]), we introduce the flow

Up to the normalization of \(\theta \), the foregoing flow is a contraction provided that \(\theta \in \mathcal {S}^d\). Moreover, its fixed points solve

In Sect. 3, we construct a discrete version of (5) that preserves probabilities; that is, it preserves both the total mass of \(\theta \) and its non-negativity.

The time-dependent case is substantially more delicate. Our method to approximate its solutions is our main contribution. The operator associated with the time-dependent problem, \(A:H^1(0,T; {\mathbb {R}}^d\times {\mathbb {R}}^d)\rightarrow L^2(0,T; \mathcal {S}^d\times {\mathbb {R}}^d)\), is

Under the initial-terminal condition in (3), A is a monotone operator. Thus, the flow

for \((\theta , u)\in L^2(0,T; {\mathbb {R}}^d\times {\mathbb {R}}^d)\) is formally a contraction. Unfortunately, even if this flow is well defined, the preceding system neither preserves probabilities nor such boundary conditions (3). Thus, in Sect. 4, we modify (7) in a way that it becomes a contraction in \(H^1\) and preserves the boundary conditions. Finally, we discretize this modified flow and build a numerical algorithm to approximate solutions of (2)-(3). Unlike Newton-based methods, our algorithm does not need the inversion of large matrices and scales linearly with the number of states. This is particularly relevant for finite-state MFGs that arise from the discretization of continuous-state MFGs. We illustrate our results in a paradigm-shift problem introduced in [8] and studied from a numerical perspective in [21].

We conclude this introduction with a brief outline of the paper. In the following section, we discuss the framework, main assumptions, and the paradigm-shift example that illustrates our methods. Next, we address stationary solutions. Subsequently, in Sect. 4, we discuss the main contribution of this paper by addressing the initial-terminal value problem. There, we outline the projection method, explain its discretization, and present numerical results. The paper ends with a brief concluding section.

2 Framework and main assumptions

Following [17], we present the standard finite-state MFG framework and describe our main assumptions. Then, we discuss a paradigm-shift problem from [8] that we use to illustrate our methods.

2.1 Standard Setting for Finite-State MFGs

Finite-state MFGs model systems with many identical players who act rationally and non-cooperatively. These players switch between states in \(I_d\) in seeking to minimize a cost. Here, the macroscopic state of the game is a probability vector \(\theta \in \mathcal {S}^d\) that gives the players’ distribution in \(I_d\). A typical player controls the switching rate, \(\alpha _j(i)\), from its state, \(i\in I_d\), to a new state, \(j\in I_d\). Given the players’ distribution, \(\theta (r),\) at time r, each player chooses a non-anticipating control, \(\alpha \), that minimizes the cost

In the preceding expression, \(c:I_d\times \mathcal {S}^d\times ({\mathbb {R}}_0^+)^d\rightarrow {\mathbb {R}}\) is a running cost, \(\Psi \in {\mathbb {R}}^d\) is the terminal cost, and \({\mathbf{i}}_s\) is a Markov process in \(I_d\) with switching rate \(\alpha \). The Hamiltonian, h, is the generalized Legendre transform of \(c(i,\theta ,\cdot )\):

The first equation in (2) determines the value function, u, for (8). The optimal switching rate from state i to state \(j\ne i\) is given by \(\alpha ^*_j(\Delta _iu,\theta ,i)\), where

Moreover, at points of differentiability of h, we have (1). The rationality of the players implies that each of them chooses the optimal switching rate, \(\alpha ^*\). Hence, \(\theta \) evolves according to the second equation in (2).

2.2 Main Assumptions

Because we work with the Hamiltonian, h, rather than the running cost, c, it is convenient to state our assumptions in terms of the former. For the relation between assumptions on h and c, see [17].

We begin by stating a mild assumption that ensures the existence of solutions for (2).

Assumption 1

The Hamiltonian \(h(z,\theta , i)\) is locally Lipschitz in \((z, \theta )\) and differentiable in z. The map \(z\mapsto h(z, \theta , i)\) is concave for each \((\theta , i)\). The function \(\alpha ^*(z, \theta , i)\) given by (1) is locally Lipschitz.

Under Assumption 1, there exists a solution to (2)–(3) (see [17]). This solution may not be unique as the examples in [20] and [21] show. Monotonicity conditions are commonly used in MFGs to prove the uniqueness of solutions. For finite-state MFGs, the appropriate monotonicity condition is stated in the next Assumption. Before proceeding, we define \(\Vert v \Vert _{\sharp }=\inf _{\lambda \in \mathbb {R}}\Vert v +\lambda \mathbf {1} \Vert \).

Assumption 2

There exists \(\gamma >0\) such that the Hamiltonian, h, satisfies the following monotonicity property:

Moreover, for each \(M>0\), there exist constants, \(\gamma _i\), such that on the set \(\Vert w\Vert ,\Vert z\Vert _\sharp \le M\), h satisfies the following concavity property:

Under the preceding assumptions, (2)–(3) has a unique solution (see [17]). Here, the previous condition is essential to the convergence of our numerical methods, for both stationary problems in Sect. 3 and for the general time-dependent case in Sect. 4.

Remark 1

As shown in [17], Assumption 2 implies the inequality

for any \(u, \tilde{u}\in {\mathbb {R}}^d\), \(\theta , \tilde{\theta }\in \mathcal {S}^d\), and \(k, \tilde{k}\in {\mathbb {R}}\).

2.3 Solutions and Weak Solutions

Because the operator, A, in (6) is monotone, we have a natural concept of weak solutions for (2)–(3). These weak solutions were considered for continuous-state MFGs in [6] and [15]. We say that \((u, \theta )\in L^2((0,T), {\mathbb {R}}^d)\times L^2((0,T), \mathcal {S}^d) \) is a weak solution of (2)–(3) if for all \((\tilde{u}, \tilde{\theta })\in H^1((0,T), {\mathbb {R}}^d)\times H^1((0,T), \mathcal {S}^d)\) satisfying (3), we have

Any solution of (2)–(3) is a weak solution, and any sufficiently regular weak solution with \(\theta >0\) is a solution.

Now, we turn our attention to the stationary problem. We recall (see [17]) that a stationary solution of (2) is a triplet, \((\bar{\theta },\bar{u}, \bar{k}) \in \mathcal {S}^d \times {\mathbb {R}}^d \times {\mathbb {R}},\) satisfying

for \(i=1,\ldots ,d\). As discussed in [17], the existence of solutions to (10) holds under an additional contractivity assumption. In general, as for continuous-state MFGs, solutions for (10) may not exist. Thus, we need to consider weak solutions. For a finite-state MFG, a weak solution of (10) is a triplet, \((\bar{u}, \bar{\theta }, \bar{k})\in {\mathbb {R}}^d\times \mathcal {S}^d\times {\mathbb {R}},\) that satisfies

for \(i=1,\ldots ,d\), with equality in the first equation for all indices, i, such that \(\bar{\theta }^i>0\).

2.4 Potential MFGs

In a potential MFG, the Hamiltonian takes the form

where \(\tilde{h}: {\mathbb {R}}^d \times I_d \rightarrow {\mathbb {R}}\), \(f:{\mathbb {R}}^d \times I_d \rightarrow {\mathbb {R}}\) and f is the gradient of a convex function, \(F:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\); that is, \(f(\theta ,\cdot ) = \nabla _{\theta }F(\theta )\). We define \(H:{\mathbb {R}}^d \times {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) as

Then, (2) can be written in Hamiltonian form as

In particular, H is conserved as follows:

In Sect. 4.6, we use this last property as an additional test for our numerical method.

2.5 A Case Study: The Paradigm-Shift Problem

A paradigm shift is a change in a fundamental assumption within a scientific theory. Scientists can simultaneous work in the context of multiple competing theories or problems. Their choice of theoretical grounding is made to maximize recognition (citations, awards, or prizes) and scientific activity (conferences or collaborations, for example). The paradigm-shift problem was formulated as a two-state MFG in [8]. Subsequently, it was studied numerically in [21] and [20] using an N-player approximation and PDE methods. Here, we present the stationary and time-dependent versions of this problem. Later, we use these versions to validate our numerical methods.

We consider the running cost, \(c:I_d\times \mathcal {S}^d \times ({\mathbb {R}}_0^+)^2\rightarrow {\mathbb {R}},\) given by

The functions \(f=f(i,\theta )\) are productivity functions with constant elasticity of substitution, given by

for \(r\ge 0\) and \(0\le a_1, a_2\le 1\). The Hamiltonian is

and the optimal switching rates are

For illustration, we examine the case where \(a_1=1\), \(a_2=0\), and \(r=1\) in the productivity functions above. In this case, \(f=\nabla _\theta F(\theta )\) with

Moreover, the game is potential with

Furthermore, \((\bar{\theta }, \bar{u},k)\) is a stationary solution if it solves

and

Since \(\theta ^1+\theta ^2=1\), and using the symmetry of (13)–(14), we conclude that

The time-dependent paradigm-shift problem is determined by

and

together with initial-terminal conditions

for \(i=1,2\), \(\theta _0\in \mathcal {S}^2\), and \(u_T\in {\mathbb {R}}^2\).

3 Stationary Problems

To approximate the solutions of (10), we introduce a flow closely related to (5). This flow is the analog for finite-state problems of the one considered in [6]. The monotonicity in Assumption 2 gives the contraction property. Then, we construct a numerical algorithm using a Euler step combined with a projection step to ensure that \(\theta \) remains a probability. Finally, we test our algorithm in the paradigm-shift model.

3.1 Monotone Approximation

To preserve the mass of \(\theta \), we introduce the following modification of (5):

where \(k:{\mathbb {R}}_0^+\rightarrow {\mathbb {R}}\) is such that \(\sum _{i=1}^d \theta ^i(s) = 1\) for every \(s\ge 0\). For this condition to hold, we need \(\sum _{i=1}^d \theta _s^i=0\). Therefore,

Proposition 1

Suppose that Assumptions 1–2 hold. Let \((u,\theta )\) and \((\tilde{u}, \tilde{\theta })\) solve (18)–(19). Assume that \(\sum _i \theta ^i(0)= \sum _i \tilde{\theta }^i(0)=1\) and that \(\theta (s), \tilde{\theta }(s)\ge 0\). Then,

Proof

We begin with the identity

Using (18) in the previous equality, we obtain

by Remark 1. \(\square \)

3.2 Numerical Algorithm

Let A be given by (4). Due to the monotonicity, for small \(\mu \), the Euler map,

is a contraction, provided that \(\theta \) is nonnegative; that is, the case when \(\theta \) is a probability vector. However, \(E_\mu \) may not keep \(\theta \) non-negative and, in general, \(E_\mu \) also does not preserve the mass. Thus, we introduce the following projection operator on \(\mathcal {S}^d\times {\mathbb {R}}^d\):

where \(\varpi (\theta )_i=(\theta ^i+\xi )^+\) and \(\xi \) is such that

Clearly, P is a contraction because it is a projection on a convex set. Finally, to approximate weak solutions of (10), that is solutions (11), we consider the iterative map

We have the following result:

Proposition 2

Let \((\bar{\theta }, \bar{u}, \bar{k})\) solve (11). Then, \((\bar{\theta }, \bar{u} )\) is a fixed point for (20). Moreover, for any fixed point of (20), there exists \(\bar{k}\) such that \((\bar{\theta }, \bar{u}, \bar{k})\) solves (11).

Finally, if \(\mu \) is small enough and (11) has a weak solution, \((\bar{\theta }, \bar{u}, \bar{k})\), with \(\bar{\theta }>0\), then the iterates in (20) are bounded and converge to \((\bar{\theta }, \bar{u} )\). Moreover, the solution is unique.

Proof

Clearly, a solution of (11) is a fixed point for (20). Conversely, let \((\bar{\theta }, \bar{u} )\) be a fixed point for (20). Then,

Hence,

Additionally, we have

for some \(\xi \). Thus, for \(\bar{k}=\frac{\xi }{\mu }\),

with equality when \(\bar{\theta }^i>0\).

If \(\mu \) is small enough, \(E_\mu \) is a contraction because A is a monotone Lipschitz map. Thus, if there is a solution of (11), the iterates in (20) are bounded. Then, the convergence follows from the monotonicity of \(E_\mu \) and the strict contraction given by \(\bar{\theta }>0\). \(\square \)

Remark 2

Concerning the convergence rate and the choice of the parameter \(\mu \) in the preceding theorem, we observe the following. The operator A is locally Lipschitz. Thus, given bound on the initial conditions, we can assume the Lipschitz constant to be a number, \(L>0\). By selecting

we get that \(E_{\mu }\) is a contraction and we may assume that the initial bound on data is preserved (for example, by looking at the norm of the difference between the iterates and a given stationary solution). If there is a strictly positive stationary solution, the convergence is exponential because, for u and \(\tilde{u}\) with mean 0, we have

The constant, however, depends on the lower bounds on the stationary solution and thus, we do not have a direct estimate on the rate of convergence.

3.3 Numerical Examples

To illustrate our algorithm, we consider the paradigm-shift problem. The monotone flow in (18) is

and

According to (19),

Now, we present the numerical results for this model using the iterative method in (20). We set \(s\in [0,8]\) and discretize this interval into \(N=300\) subintervals. First, we consider the following initial conditions:

The convergence towards the stationary solution is illustrated in Fig. 1a, b for \(\theta \) and u. The behavior of k is shown in Fig. 2a. In Fig. 2b, we illustrate the contraction of the norm

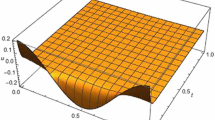

where \((\bar{\theta }, \bar{u})\) is the stationary solution in (15). Next, we consider the case in which the iterates of \(E_\mu \) do not preserve positivity. In Fig. 3, we compare the evolution of \(\theta \) by iterating \(E_\mu \), without the projection and using (20). In the first case, \(\theta \) may not remain positive, although, in this example, convergence holds. In Fig. 3, we plot the evolution through (20) of \(\theta \) towards the analytical solution \(\theta ^1=\theta ^2=0.5\). As expected from its construction, \(\theta \) is always non-negative and a probability. The contraction of the norm is similar to the previous case, see Fig. 4.

Comparison between the iterates of \(E_\mu \) and \(PE_\mu \) for \(\theta ^1_0=0.8\), \(\theta ^2_0=0.2\), \(u^1_0=5\), and \(u^2_0=2\). The quantities corresponding to the state 1 and 2 are depicted in blue and orange, respectively. a Non positivity of the distribution using \(E_\mu \). b Convergence using (20) while preserving the positivity of \(\theta \) (Color figure online)

4 Initial-Terminal Value Problems

The initial-terminal conditions in (3) are the key difficulty in the design of numerical methods for the time-dependent MFG, (2). Here, we extend the strategy from the previous section to handle initial-terminal conditions. We start with an arbitrary pair of functions, \((u(t,0),\theta (t,0))\), that satisfies (3) and build a family \((u(t,s),\theta (t,s))\), \(s\ge 0\), that converges to a solution of (2)–(3) as \(s\rightarrow \infty \), while preserving the boundary conditions for all \(s\ge 0\).

4.1 Representation of Functionals in \(H^1\)

We begin by discussing the representation of linear functionals in \(H^1\). Consider the Hilbert space, \(H^1_T=\{\phi \in H^1([0,T], {\mathbb {R}}^d): \phi (T)=0\}\). For \(\theta , u\in H^1([0,T], {\mathbb {R}}^d)\), we consider the variational problem

A minimizer, \(\phi \in H^1_T\), of the preceding functional represents the linear functional

for \(\eta \in H^1_T\), as an inner product in \(H^1_T\); that is,

for \(\phi ,\eta \in H^1_T\). The last identity is simply the weak form of the Euler–Lagrange equation for (23),

whose boundary conditions are \(\phi (T)=0\) and \(\dot{\phi }(0)=0\). For \(\theta , u\in H^1([0,T], {\mathbb {R}}^d)\), we define

Next, let \(H^1_I=\{\psi \in H^1([0,T], {\mathbb {R}}^d): \psi (0)=0\}\). For \(\theta , u\in H^1([0,T], {\mathbb {R}}^d)\), we consider the variational problem

The Euler–Lagrange equation for the preceding problem is

with the boundary conditions \(\psi (0)=0\) and \(\dot{\psi }(T)=0\). Moreover, if \(\psi \in H^1_I\) minimizes the functional in (26), we have

for \(\eta , \psi \in H^1_I\). For \(\theta , u\in H^1([0,T], {\mathbb {R}}^d)\), we define

4.2 Monotone Deformation Flow

Next, we introduce the monotone deformation flow,

where \(\Phi \) and \(\Psi \) are given in (25) and (28). As we show in the next proposition, for smooth enough solutions, the previous flow is a contraction in \(H^1\). Moreover, if \((\theta , u)\) solve (2)–(3), we have

Hence, solutions of (2)–(3) are fixed points for (29).

Before stating the contraction property, we recall that the \(H^1\)-norm of a pair of functions is given by

for \(v,\eta :[0,T]\rightarrow {\mathbb {R}}^d\).

Proposition 3

Let \((u, \theta )\) and \((\tilde{u}, \tilde{\theta })\) be \(C^2\) solutions of (29). Suppose that \(\theta , \tilde{\theta } \ge 0\). Then,

with strict inequality if \((u, \theta )\ne (\tilde{u}, \tilde{\theta })\).

Proof

We have

Using (29), the term in the right-hand side of the previous equality becomes

where we used integration by parts in the last equality. Because \(u(T)= \tilde{u}(T)\), \(\theta (0) = \tilde{\theta }(0)\), \(\phi _t(0) = \tilde{\phi }_t(0)\), \(\psi _t(T) = \tilde{\psi }_t(T)\), and using (24) and (27), we obtain

due to Remark 1. \(\square \)

4.3 Monotone Discretization

To build our numerical method, we begin by discretizing (29). We look for a time-discretization of

that preserves monotonicity, where \(f(u,\theta )=\sum _j \tilde{\theta }^j \alpha ^*(\Delta _j \tilde{u}, \tilde{\theta },j)\).

With Hamilton–Jacobi equations, implicit schemes have good stability properties. Because the Hamilton–Jacobi equation in (29) is a terminal value problem, we discretize it using an explicit forward-in-time scheme (hence, implicit backward-in-time scheme). Then, to keep the adjoint structure of A at the discrete level, we are then required to choose an implicit discretization forward in time for the first component of A. Usually, implicit schemes have the disadvantage of requiring the numerical solution of non-linear equations at each time step. Here, we discretize the operator, A, globally, and we never need to solve implicit equations.

More concretely, we split [0, T] into N intervals of length \(\delta t=\frac{T}{N}\). The vectors \(\theta _n\in \mathcal {S}^d\) and \(u_n\in {\mathbb {R}}^d\), \(0\le n\le N\) approximate \(\theta \) and u at time \(\frac{nT}{N}\). We set \(\mathcal {M}_N=(\mathcal {S}^d\times {\mathbb {R}}^{d})^{N+1}\) and define

where

and \(\delta \theta ^i_n = \theta _{n+1}^i-\theta _n^i\). Next, we show that \(A^N\) is a monotone operator in the convex subset of vectors in \(\mathcal {M}\) that satisfy the initial-terminal conditions in (3). We denote by \(\langle \cdot , \cdot \rangle \), the duality pairing in \((\mathcal {S}^d\times {\mathbb {R}}^d)^{N+1}\). More precisely, for \((\theta , u), (\tilde{\theta }, \tilde{u})\in (\mathcal {S}^d\times {\mathbb {R}}^d)^{N+1}\)

Proposition 4

\(A^N\) is monotone in the convex subset \(\mathcal {M}_N\) of all \((\theta , u)\in (\mathcal {S}^d\times {\mathbb {R}}^d)^{N+1}\) such that \(\theta _0=\bar{\theta }_0\) and \(u_{N}=\bar{u}_T\). Moreover, we have the inequality

Proof

We begin by computing

With the sums developed and the indices relabeled, the preceding expression becomes

The second and last lines above are zero since \(\theta _0=\tilde{\theta }_0=\bar{\theta }_0\) and \(u_{N}=\tilde{u}_{N}=\bar{u}_T\). Using Remark 1, we obtain

We now show that the last two lines add to zero. Let \(a_n = \theta _n-\tilde{\theta }_n\) and \(b_n = u_n-\tilde{u}_n\). Accordingly, we have

where we summed the first term by parts and relabeled the index, n, in the last term of the first line. The last equality follows from the assumption in the statements, \(a_0=\theta _0-\tilde{\theta }_0 = 0\) and \(b_N = u_N-\tilde{u}_N = 0\). \(\square \)

Using the techniques in [6], we prove the convergence of the solutions of the discretized problem as \(\delta t \rightarrow 0\). As usual, we discretize the time interval, [0, T], into \(N+1\) equispaced points.

Proposition 5

Let \((\theta ^N, u^N)\in {\mathcal {M}}_N\) be a solution of

satisfying the initial-terminal conditions in (3). Suppose that \(u^N\) is uniformly bounded. Consider the step functions \(\bar{u}^N\) and \(\bar{\theta }^N\) taking the values \(\bar{u}^{N i}_n \in {\mathbb {R}}\) and \(\bar{\theta }_n^{N i} \in \mathcal {S}\) in \([\frac{(n-1)T}{N},\frac{n T}{N}]\), with \(0\le n \le N\), for \(i\in I_d\), respectively. Then, extracting a subsequence if necessary, \(\bar{u}^{N i} \rightharpoonup \bar{u}^i\) and \(\bar{\theta }^{N i} \rightharpoonup \theta ^i\) weakly-* in \(L^\infty \) for \(i \in I_d\). Furthermore, \((\bar{u}, \bar{\theta })\) is a weak solution of (2).

Proof

Because \(u^N\) is bounded by hypothesis and \(\theta ^N\) is bounded since it is a probability measure, the weak-* convergence in \(L^\infty \) is immediate. Hence, there exist \(\bar{u}^i \in L^\infty ([0,T])\) and \(\theta ^i \in L^\infty ([0,T])\) as claimed.

Let \(\tilde{u}^{i}, \tilde{\theta }^{i} \in C^{\infty }([0,T])\), with \(\tilde{\theta }^{i} \ge 0\) for all \(i\in I_d\), and \(\sum _{i \in I_d} \tilde{\theta }^i =1\). Suppose further that \(\tilde{u}^{i}, \tilde{\theta }^{i}\) satisfy the boundary conditions in (3). Let \(\tilde{u}_n^{N} = \tilde{u} \left( \frac{n}{N}T \right) \), \(\tilde{\theta }_n^{N} = \tilde{\theta } \left( \frac{n}{N}T \right) \) be the vectors whose components are \(\tilde{u}_n^{N i}\) and \(\tilde{\theta }_n^{N i}\), respectively. By the monotonicity of \(A^N\), we have

and taking the limit \(N\rightarrow \infty \) gives the result. \(\square \)

4.4 Monotone Discretization for the \(H^1\) Projections

Next, we discuss the representation of linear functionals for the discrete problem. For that, proceeding as in Sect. 4.1, we compute the optimality conditions of the discretized versions of (23) and (26).

Fix \((u,\theta )\in \mathcal {M}_N\) and consider the following discrete analog to (23):

where \(\delta g_n = g_{n+1}-g_n\), and \(\tilde{H}^1_T =\{\phi =(\phi _0, \ldots , \phi _N)\in ({\mathbb {R}}^d)^{(N+1)}:\phi _N=0\}\). The corresponding optimality conditions (the discrete Euler–Lagrange equation) is

for \(n=1,\ldots ,N-1\), coupled with the boundary conditions \(\phi _N = 0\) and \(\phi _{1}=\phi _0\).

A minimizer of the problem above represents the following discrete linear functional

as an inner product in \(\tilde{H}^1_T\)

For \((\theta _n,u_n) \in \mathcal {M}_N\), we define

We now examine a second discrete variational problem corresponding to (26). For \((u,\theta )\in \mathcal {M}_N\), we consider

where \(\tilde{H}^1_I =\{\psi =(\psi _0, \ldots , \psi _N)\in ({\mathbb {R}}^d)^{(N+1)}:\psi _0=0\}\).

The discrete Euler–Lagrange equation is

for \(n=1,\ldots ,N-1\), together with the conditions \(\psi _0=0\) and \(\psi _N=\psi _{N-1}\).

From the Euler–Lagrange equation, we obtain the following representation formula in the Hilbert space \(\{\psi \in H_n^1(\{0,\ldots ,N\}): \psi _0 = 0\}\):

Finally, we define

for \((u,\theta )\in \mathcal {M}_N\).

Proposition 6

Let \(\Phi \) and \(\Psi \) be given by (33) and (35). Consider the following operator:

Let \({\mathcal {M}}_N^{\bar{\theta }_0,\bar{u}_T}\) be the set of all \((\theta , u) \in {\mathcal {M}}_N\) that satisfy the initial condition \(\theta _0=\bar{\theta }_0\) and the terminal condition \(u_N=\bar{u}_T\). Then, \(Q_A\) is monotone with respect to the discrete \(H^1_N\) inner product corresponding to the norm

Proof

Let \((u,\theta )\in {\mathcal {M}}_N^{\bar{\theta }_0,\bar{u}_T}\) and \((\tilde{u}, \tilde{\theta })\in {\mathcal {M}}_N^{\bar{\theta }_0,\bar{u}_T}\). Let \(\phi , \tilde{\phi }\) and \(\psi , \tilde{\psi }\) be given by (33) and (35). We begin by computing

Reorganizing, we see that the previous two lines are equal to

Using the notation

we write (39) multiplied by \((\delta t)^2\) as

where we used summation by parts in the last equality. Because \(\psi _N=\psi _{N-1}\), we have \(b_{N-1}=0\). Moreover, since \(\theta _0=\tilde{\theta }_0\), we have \(a_0=0\), and \(\phi _1=\phi _0\) implies that \(d_0=0\). Thus, we further have

where we used the terminal condition \(u_N=\tilde{u}_N\). According to these identities, (40) becomes

Therefore, (39) can be written as

Shifting the index \(n+1\) into n in (41), we obtain

Using the Euler–Lagrange equations (32) and (34) in the preceding expression yields

Finally, plugging the previous result into (38), we obtain

by using Remark 1 and arguing as at the end of Sect. 4.3. \(\square \)

4.5 Projection Algorithm

As shown in Sect. 3, the monotone flow may not keep \(\theta \) positive. Thus, to preserve probabilities and prevent \(\theta \) from taking negative values, we define a projection operator through the following optimization problem. Given \((\eta , w)\in {\mathcal {M}}_N\), we solve

for \(n\in \{0,\ldots , N\}\). Then, we set

for \(0\le n \le N\). We note that if \(\eta _n\) is a probability, then \(\lambda _n=\eta _n\). Moreover, P is a contraction.

Now, we introduce the following iterative scheme:

where \(w_k = (\theta _k, u_k)\), \(Q_A\) is defined in (36), and \(\upsilon >0\) is the step size.

Proposition 7

For small enough \(\upsilon \), the map (43) is a contraction. Moreover, if there exists a solution \((\tilde{\theta }, \tilde{u})\) of

satisfying the initial-terminal conditions \(\tilde{\theta }_0=\bar{\theta }_0\) and \(\tilde{u}^N=\bar{u}_T\), the iterates of (43) satisfy

as \(k\rightarrow \infty \).

Proof

The operator \(E_{\upsilon }\) is a contraction because \(Q_A\) is a monotone Lipschitz map (see Proposition 6). The convergence in the statement follows from the series

being convergent. \(\square \)

Proposition 8

Let \((\bar{\theta }, \bar{u})\in {\mathcal {M}}_N\) solve

with \(u_N=\bar{u}_T\) and \(\theta _0=\bar{\theta }_0\). Then, \((\bar{\theta }, \bar{u})\) is a fixed point of (43).

Conversely, let \((\tilde{\theta }, \tilde{u})\in {\mathcal {M}}_N\) be a fixed point of (43) with \(\tilde{\theta }>0\). Then, there exists a solution to (44), \((\bar{\theta }, \bar{u}),\) with \(\bar{\theta }=\tilde{\theta }\) and \(\bar{u}\) given by

with \(\bar{u}_N=\bar{u}_T\).

Proof

The first claim of the proposition follows immediately from the definition of \(Q_A\). To prove the second part, let \((\tilde{\theta },\tilde{u})\in {\mathcal {M}}_N\) be a fixed point of (43). For all \(n\in \{0,\ldots ,N\}\) and \(i \in I_d\), we have

Therefore, \(\phi _n(\tilde{u}_n, \tilde{\theta }_n)=0\). Hence, from (32), we conclude that

Furthermore, for \(\tilde{\theta }_n^i = \lambda _n^i\), where \(\lambda _n^i\) solves (42), we have

for some \(\kappa _n\ge 0\). If \(\tilde{\theta }^i_n > 0\), \(\psi _n(\tilde{u}_n, \tilde{\theta }_n)=\kappa _n\). Otherwise, \(\psi _n(\tilde{u}_n, \tilde{\theta }_n) \ge \kappa _n\).

If \(\tilde{\theta }^i_n > 0\), using the fact that \(\psi \) solves (34), we gather

Now, we define \(\bar{u}\) as in the statement of the proposition. A simple computation gives

Hence, \(\Delta _j \bar{u}_n=\Delta _j \tilde{u}_n\). Consequently,

Thus, \((\bar{\theta }, \bar{u})\) solves (2). \(\square \)

Remark 3

The convergence of solutions of (44) to weak solutions of (2) follows from the Minty’s method and the monotonicity of the operator A as shown in Proposition 5.

4.6 Numerical Examples

Finally, we present numerical simulations for the time-dependent paradigm-shift problem. As explained before, we discretize the time variable, \(t \in [0,T]\), into N intervals of length \(\delta t=\frac{T}{N}\). We then have N equations for each state. Because \(d=2\), this system consists of 4N evolving equations according to (43).

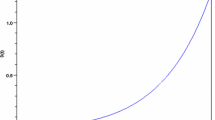

To compute approximate solutions to (16)–(17), we use the projection algorithm, (43), with \(N=400\). We first consider a case in which the analytical solution can be computed explicitly. We choose \(\theta ^1=\theta ^2=\frac{1}{2}\). Thus, from (16), it follows that \(u^1=u^2\) are affine functions of t with \(u^1_t=u^2_t-\frac{1}{2}\). Our results are depicted in Figs. 5, 6, and 7. In Fig. 5, for \(t\in [0,T]\), \(T=8\), we plot the initial guess (\(s=0\)) for \(\theta \) and u, and the analytical solution. In Fig. 6, we see the evolution of the density of players and the value functions for \(s\in [0,20]\). The final results, \(s=20\), are shown in Fig. 7. Finally, in Fig. 8, we show the evolution of the \(H^1\) norm of the difference between the analytical, \((\tilde{u}, \tilde{\theta })\), and computed, \((u,\theta )\), solutions. The norm \(\Vert (\tilde{u}, \tilde{\theta }) - (u,\theta ) \Vert _{H^1([0,T])}^2(s)\) is computed as

for \(s\ge 0\), where \(v^i_j=v^i(t_j,s)\) and \(\delta t\) is the size of the time-discretization step.

The blue lines correspond to the initial values (\(s=0\)) for state 1, \((\theta ^1,u^1)\): the orange lines correspond to the initial values for state 2, \((\theta ^2,u^2)\); the green lines correspond to the analytical solution \(\theta ^1=\theta ^2\) and \(u^1=u^2\) for \(t\in [0,8]\). a Initial condition \(\theta (\cdot ,0)\) versus exact solution. b Initial condition \(u(\cdot ,0)\) versus exact solution (Color figure online)

The paradigm-shift problem is a potential MFG with the Hamiltonian corresponding to

in (12). Thus, as a final test to our numerical method, we investigate the evolution of the Hamiltonian. In this case, as expected, the Hamiltonian converges to a constant (see Fig. 9).

In the preceding example, while iterating (43), \(\theta \) remains away from 0. In the next example, we consider a problem in which, without the projection P in (43), positivity is not preserved. We set \(N=400\) and choose initial conditions as in Fig. 10. In Fig. 11, we show the evolution by (43) for \(s\in [0,20]\). In Fig. 12, we see the final result for \(s=20\). Finally, in Fig. 13, we show the evolution of the \(H^1\) norm of the difference \(\Vert (\tilde{u}, \tilde{\theta }) - (u,\theta ) \Vert _{H^1([0,T])}^2(s)\) for \(s\in [0,20]\).

In Fig. 14, we plot the evolution of the Hamiltonian determined using the projection method. Again, we obtain the numerical conservation of the Hamiltonian.

5 Conclusions

As the examples in the preceding sections illustrate, we have developed an effective method for the numerical approximation of monotonic finite-state MFGs. As observed previously, [1,2,3, 5], monotonicity properties are essential for the construction of effective numerical methods to solve MFGs and were used explicitly in [6]. Here, in contrast with earlier approaches, we do not use a Newton-type iteration as in [1, 3] nor do we require the solution of the master equation as in [20, 21]. While for \(d=2\), the master equation can be handled numerically as in the preceding references, this approach becomes numerically prohibitive when there is a large number of states. The master equation determines the value function \(U(\theta , i, t)\), where \(\theta \in \mathcal {S}^d\). A direct approach to the master equation requires a grid in \(\mathcal {S}^d\), or equivalently in a subset of \({\mathbb {R}}^{d-1}\). However, when d is moderately large, a direct approach requires the storage of an extremely large number of points. With our approach, we only need 2d values for each time step. The key contribution of this work is the projection method that makes addressing the initial-terminal value problem possible. This was an open problem since the introduction of monotonicity-based methods in [6]. Our methods can be applied to discretizing continuous-state MFGs, and we foresee additional extensions. The first one concerns the planning problem considered in [2]. A second extension regards boundary value problems, which are natural in many applications of MFGs. Finally, our methods may be improved by using higher-order integrators in time, provided that monotonicity is preserved. These matters will be the subject of future research.

References

Achdou, Y.: Finite difference methods for mean field games. In: Hamilton-Jacobi Equations: Approximations, Numerical Analysis and Applications, Lecture Notes in Mathematics, vol. 2074, pp. 1–47. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-36433-4_1

Achdou, Y., Camilli, F., Capuzzo-Dolcetta, I.: Mean field games: numerical methods for the planning problem. SIAM J. Control Optim. 50(1), 77–109 (2012)

Achdou, Y., Capuzzo-Dolcetta, I.: Mean field games: numerical methods. SIAM J. Numer. Anal. 48(3), 1136–1162 (2010)

Achdou, Y., Cirant, M., Bardi, M.: Mean field games models of segregation. Math. Models Methods Appl. Sci. 27(1), 75–113 (2017)

Achdou, Y., Perez, V.: Iterative strategies for solving linearized discrete mean field games systems. Netw. Heterog. Media 7(2), 197–217 (2012)

Al-Mulla, N., Ferreira, R., Gomes, D.: Two numerical approaches to stationary mean-field games. Dyn. Games Appl. 7(4), 657–682 (2016)

Basna, R., Hilbert, A., Kolokoltsov, V.N.: An epsilon-Nash equilibrium for non-linear Markov games of mean-field-type on finite spaces. Commun. Stoch. Anal. 8(4), 449–468 (2014)

Besancenot, D., Dogguy, H.: Paradigm shift: a mean-field game approach. Bull. Econ. Res. 67(3), 289–302 (2015). https://doi.org/10.1111/boer.12024

Briceño Arias, L.M., Kalise, D., Silva, F.J.: Proximal methods for stationary mean field games with local couplings. SIAM J. Control Optim. 56(2), 801–836 (2018). https://doi.org/10.1137/16M1095615

Cardaliaguet, P., Lasry, J.-M., Lions, P.-L., Porretta, A.: Long time average of mean field games. Netw. Heterog. Media 7(2), 279–301 (2012)

Cardaliaguet, P., Lasry, J.-M., Lions, P.-L., Porretta, A.: Long time average of mean field games with a nonlocal coupling. SIAM J. Control Optim. 51(5), 3558–3591 (2013)

Carlini, E., Silva, F.J.: A fully discrete semi-Lagrangian scheme for a first order mean field game problem. SIAM J. Numer. Anal. 52(1), 45–67 (2014)

Carlini, E., Silva, F.J.: A semi-Lagrangian scheme for a degenerate second order mean field game system. Discret. Contin. Dyn. Syst. 35(9), 4269–4292 (2015)

Ferreira, R., Gomes, D.: On the convergence of finite state mean-field games through \(\Gamma \)-convergence. J. Math. Anal. Appl. 418(1), 211–230 (2014)

Ferreira, R., Gomes, D.: Existence of weak solutions for stationary mean-field games through variational inequalities. Preprint (2016)

Gomes, D., Mohr, J., Souza, R.R.: Discrete time, finite state space mean field games. J. Math. Pures Appl. 93(2), 308–328 (2010)

Gomes, D., Mohr, J., Souza, R.R.: Continuous time finite state mean-field games. Appl. Math. Optim. 68(1), 99–143 (2013)

Gomes, D., Pimentel, E., Voskanyan, V.: Regularity theory for mean-field game systems. Springer Briefs in Mathematics. Springer, Cham (2016)

Gomes, D., Saúde, J.: Mean field games models—a brief survey. Dyn. Games Appl. 4(2), 110–154 (2014)

Gomes, D., Velho, R.M., Wolfram, M.-T.: Dual two-state mean-field games. In: Proceedings CDC 2014 (2014)

Gomes, D., Velho, R.M., Wolfram, M.-T.: Socio-economic applications of finite state mean field games. In: Proceedings of the Royal Society A, Bd. 372(2028(S.)) (2014)

Guéant, O.: Existence and uniqueness result for mean field games with congestion effect on graphs. Appl. Math. Optim. 72(2), 291–303 (2015). https://doi.org/10.1007/s00245-014-9280-2

Guéant, O.: From infinity to one: the reduction of some mean field games to a global control problem. Preprint (2011)

Huang, M., Caines, P.E., Malhamé, R.P.: Large-population cost-coupled LQG problems with nonuniform agents: individual-mass behavior and decentralized \(\epsilon \)-Nash equilibria. IEEE Trans. Autom. Control 52(9), 1560–1571 (2007)

Huang, M., Malhamé, R.P., Caines, P.E.: Large population stochastic dynamic games: closed-loop McKean-Vlasov systems and the Nash certainty equivalence principle. Commun. Inf. Syst. 6(3), 221–251 (2006)

Kolokoltsov, V.N., Malafeyev, O.A.: Mean-field-game model of corruption. Dyn. Games Appl. 7(1), 34–47 (2017)

Lasry, J.-M., Lions, P.-L.: Jeux à champ moyen. I. Le cas stationnaire. C. R. Math. Acad. Sci. Paris 343(9), 619–625 (2006)

Lasry, J.-M., Lions, P.-L.: Jeux à champ moyen. II. Horizon fini et contrôle optimal. C. R. Math. Acad. Sci. Paris 343(10), 679–684 (2006)

Mészáros, A., Silva, F.J.: On the variational formulation of some stationary second-order mean field games systems. SIAM J. Math. Anal. 50(1), 1255–1277 (2018). https://doi.org/10.1137/17M1125960

Mészáros, A.R., Silva, F.J.: A variational approach to second order mean field games with density constraints: the stationary case. J. Math. Pures Appl. (9) 104(6), 1135–1159 (2015)

Author information

Authors and Affiliations

Corresponding author

Additional information

D. Gomes was partially supported by KAUST baseline and start-up funds and KAUST SRI, Center for Uncertainty Quantification in Computational Science and Engineering. J. Saúde was partially supported by FCT/Portugal through the CMU-Portugal Program.

Rights and permissions

About this article

Cite this article

Gomes, D.A., Saúde, J. Numerical Methods for Finite-State Mean-Field Games Satisfying a Monotonicity Condition. Appl Math Optim 83, 51–82 (2021). https://doi.org/10.1007/s00245-018-9510-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00245-018-9510-0