Abstract

We propose and investigate a general class of discrete time and finite state space mean field game (MFG) problems with potential structure. Our model incorporates interactions through a congestion term and a price variable. It also allows hard constraints on the distribution of the agents. We analyze the connection between the MFG problem and two optimal control problems in duality. We present two families of numerical methods and detail their implementation: (i) primal-dual proximal methods (and their extension with nonlinear proximity operators), (ii) the alternating direction method of multipliers (ADMM) and a variant called ADM-G. We give some convergence results. Numerical results are provided for two examples with hard constraints.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The class of mean field game (MFG) problems was introduced by Lasry and Lions in [34,35,36] and Huang et al. in [33] to study interactions among a large population of agents. The agents of the game optimize their own dynamical system with respect to a criterion; the criterion is parameterized by some endogenous coupling terms. These coupling terms are linked to the collective behavior of all agents and thus induce an interaction between them. It is assumed that an isolated agent has no impact on the coupling terms and that all agents are identical. At a mathematical level, MFGs typically take the form of a system of coupled equations: a dynamic programming equation (characterizing the optimal behavior of the agents), a Kolmogorov equation (describing the distribution of the agents), and coupling equations.

In this work we study a class of discrete time and finite state space mean field games with potential structure. The dynamical system of each agent is a Markov chain, with controlled probability transitions. Few publications deal with fully discrete models; in a seminal work, Gomes et al. [25] have studied the existence of a Nash equilibrium via a fixed point approach and investigated the long-term behavior of the game. The proof relies on a monotonicity assumption for the congestion term. In [31], in a similar setting, the convergence of the fictitious play algorithm is established. In addition [31] proves the convergence of a discrete mean field game problem (with an entropic regularization of the running cost) toward a continuous first-order mean field game.

Potential (also called variational) MFGs are coupled systems which can be interpreted as first-order conditions of two control problems in duality whose state equations are respectively a Kolmogorov equation and a dynamic programming equation. The primal problem (involving the Kolmogorov equation) can be interpreted as a stochastic optimal control problem with cost and constraints on the law of the state and the control. Its numerical resolution is thus of interest beyond the context of MFGs.

1.1 Framework

In our model, the agents interact with each other via two coupling terms: a congestion variable \(\gamma \) and a price variable P. The congestion \(\gamma \) is linked to the distribution of the agents via the subdifferential of a proper convex and l.s.c. potential F. The price P is linked to the joint law of states and controls of the agents via the subdifferential of a proper convex and l.s.c. potential \(\phi \). A specificity of our discrete model is that the potentials F and \(\phi \) can take the value \(+\infty \) and thus induce constraints on the distribution of the agents, referred to as hard constraints. In the continuous case, four classes of variational MFGs can be identified. Our model is general enough to be seen as the discrete counterpart of these four cases. Case 1: MFGs with monotone congestion terms (F is differentiable, \(\phi =0\)). The first variational formulation was given in [35] and has been widely studied in following works [8, 14, 16, 37, 38]. Case 2: MFGs with density constraints (F has a bounded domain, \(\phi =0\)). These models are of particular interest for describing crowd motions. The coupling variable \(\gamma \) has there an incentive role. The reader can refer to [16, 37, 41, 42]. Case 3: MFGs with Cournot interactions (\(F=0\), \(\phi \) is differentiable). In this situation, each agent optimally chooses a quantity to be sold at each time step of the game. Interactions with the other players occur through the gradient of \(\phi \) which maps the mean strategy (the market demand) to a market price. See for example [9, 27,28,29,30]. Case 4: MFGs with price formation (\(F=0\), \(\phi \) has a bounded domain). These models incorporate a hard constraint on the demand. The price variable is the associated Lagrange multiplier and has a incentive role. We refer to [26].

The first part of the article is devoted to the theoretical analysis of the MFG system. We first introduce a potential problem, shown to be equivalent to a convex problem involving the Kolmogorov equation via a change of variable, similar to the one widely employed in the continuous setting (e.g. in [5]). Under a suitable qualification condition, we establish a duality result between this problem and an optimal control problem involving the dynamic programming equation. We show the existence of solutions to these problems and finally we show the existence of a solution to the MFG system. A uniqueness result is proved (when F and \(\phi \) are differentiable).

The second part of the article is devoted to the numerical resolution of the MFG system. We focus on two families of methods: primal-dual methods and augmented Lagrangian methods. These two classes exploit the duality structure discussed above and can deal with hard constraints. They have already been applied to continuous MFGs, see for example the survey article [3]. Primal-dual methods have been applied to stationary MFGs with hard congestion terms in Briceno-Arias et al. [13] and to time-dependent MFGs in [12]. In a closely related setting, [21] applies a primal-dual method to solve a discretized optimal transport problem. Augmented Lagrangian methods have been applied to MFGs in [6] and to MFGs with hard congestion terms in [8]. Other methods exploiting the potential structure have been investigated in the literature, they are out of the scope of the current article. Let us mention the Sinkhorn algorithm [7]. The fictitious play method has been investigated in various settings: [23] shows the connection between the fictitious play method and the Frank-Wolfe algorithm in a discrete and potential setting; [15] considers a continuous setting, with a non-convex potential.

Let us emphasize that the above references all deal with interaction terms depending on the distribution of the states of the agents; very few publications are concerned by interactions through the controls (see [2]). The present work is the first to address methods for “Cournot" mean field games.

1.2 Contributions

Let us comment further on the families of methods under investigation and our contributions. The primal-dual algorithms that we have implemented were introduced by Chambolle and Pock [17] and applied to mean field games in [13]. A novelty of our work is also to show that the extension of primal-dual methods of [18], involving nonlinear proximity operators (based on Bregman divergences), can also be used to solve MFGs. The augmented Lagrangian method that we have implemented is applied to the dual problem (involving the dynamic programming equation), as originally proposed in [5] for optimal transportation problems. As in [5], we have actually implemented a variant of the augmented Lagrangian method, called alternating direction method of multipliers (ADMM). The method was introduced by Glowinski and Marroco [24] and studied by Gabay and Mercier [22]. It relies on a successive minimization of the augmented Lagrangian function. One of the main limitations of ADMM is that when the number of involved variables is greater or equal to three, as it is the case for our problem, convergence is not granted. A novelty of our work is to consider a variant of ADMM, the alternating direction method with Gaussian back substitution (ADM-G), introduced in [32]. At each iteration of this method, the ADMM step is followed by a Gaussian back substitution step. Convergence is ensured. The practical implementation of the additional step turns out to be inexpensive in our framework.

The last contribution of this work is to propose and solve numerically two hard constraints problems: a congestion mean field game problem and a “Cournot" mean field game. Following our analysis we define a notion of residuals allowing us to compare the empirical convergence of each method in a common setting.

1.3 Organization of the article

The article is organized as follows. In Sect. 2 we provide the main notations, the mean field game system under study and the underlying individual player problem. In Sect. 3 we formulate a potential problem and perform the announced change of variable. In Sect. 4 we form a dual problem and we establish a duality result. In Sect. 5 we provide our main results: existence and uniqueness of a solution to the mean field game. In Sect. 6 we provide a detailed implementation of the primal-dual proximal algorithms, ADMM and ADM-G, and we give theoretical convergence results when possible. In Sect. 7 we present numerical results for two concrete problems. We provide outputs obtained for each method: errors, value function, equilibrium measure, mean displacement, congestion, demand and price.

2 Discrete mean field games

2.1 Notation

Sets. Let \(T \in {\mathbb {N}}^\star \) denote the duration of the game. We set \({\mathcal {T}}= \{ 0,..., T-1 \}\) and \(\bar{{\mathcal {T}}} = \{ 0,...,T \}\). Let \(S = \{ 0,..., n-1 \}\) denote the state space. We set

For any finite set A, we denote by \({\mathbb {R}}(A)\) the finite-dimensional vector space of mappings from A to \({\mathbb {R}}\). For any finite set B and linear operator \(L :{\mathbb {R}}(A) \rightarrow {\mathbb {R}}(B)\), we denote \(L^\star :{\mathbb {R}}(B) \rightarrow {\mathbb {R}}(A)\) the adjoint operator satisfying the relation

All along the article, we make use of the following spaces:

Convex analysis. For any function \(g :{\mathbb {R}}^d \rightarrow {\mathbb {R}} \cup \{+ \infty \}\), we denote

The subdifferential of g is defined by

By convention, \(\partial g(x)= \emptyset \) if \(g(x)= + \infty \). Note also that \(x^\star \in \partial g(x)\) if and only if \(g(x) + g^\star (x^\star ) = \langle x, x^\star \rangle \), where \(g^\star \) is the Fenchel transform of g, defined by

Note that the subdifferential and Fenchel transforms of \(\ell \), F, and \(\phi \) (introduced in the next paragraph) are considered for fixed values of the time and space variables.

We denote by \(\chi \) the indicator function of \(\{ 0 \}\) (without specifying the underlying vector space). For any subset \(C \subseteq {\mathbb {R}}^d\), we denote by \(\chi _C\) the indicator function of C. For any \(x \in C\), we denote by \(N_{C}(x)\) the normal cone to C at x,

We set \(N_{C}(x)= \emptyset \) if \(x \notin C\).

Nemytskii operators. Given two mappings \(g :{\mathcal {X}} \times {\mathcal {Y}} \rightarrow {\mathcal {Z}}\) and \(u :{\mathcal {X}} \rightarrow {\mathcal {Y}}\), we call Nemytskii operator the mapping \(\varvec{g}[u] :{\mathcal {X}} \rightarrow {\mathcal {Z}}\) defined by

We will mainly use this notation in order to avoid the repetition of time and space variables, for example, we will write \(\varvec{\ell }[\pi ](t,x)\) instead of \(\ell (t,x,\pi (t,x))\).

All along the article, we will transpose some notions associated with g to the Nemytskii operator \(\varvec{g}[u]\). When \({\mathcal {Y}}= {\mathbb {R}}^d\) and \({\mathcal {Z}}= {\mathbb {R}}\cup \{ + \infty \}\), we define the domain of g by

We define \(\varvec{g}^\star [v] :{\mathbb {R}}^{d} \rightarrow {\mathbb {R}}\cup \{+\infty \}\) by \(\varvec{g}^\star [v](x) = g^\star (x,v(x)), \) where \(g^\star \) is the Fenchel transform of g with respect to the second variable.

2.2 Coupled system

Data and assumption. We fix an initial distribution \(m_0 \in \varDelta (S)\) and four maps: a running cost \(\ell \), a potential price function \(\phi \), a potential congestion cost F, and a displacement cost \(\alpha \),

The following convexity assumption is in force all along the article. Note that we will later make use of an additional qualification assumption (Assumption 2).

Assumption 1

(Convexity) For any \((t,s,x) \in {\mathcal {T}} \times \bar{{\mathcal {T}}} \times S\), the maps \(\ell (t,x,\cdot )\), \(F(s,\cdot )\), and \(\phi (t,\cdot )\) are proper, convex and lower semicontinuous. In addition \({{\,\textrm{dom}\,}}(\ell (t,x,\cdot )) \subseteq \varDelta (S)\).

Coupled system. The unknowns of the MFG system introduced below are denoted \(((m,\pi ),(u,\gamma ,P)) \in {\mathcal {R}} \times {\mathcal {K}}\). They can be described as follows:

-

\(\gamma \) and P are the coupling terms of the MFG: \(\gamma (t,x)\) is a congestion term incurred by agents located at \(x \in S\) at time \(t \in \bar{{\mathcal {T}}}\) and P(t) is a price variable

-

\(\pi (t,x,y)\) denotes the probability transition from \(x \in S\) to \(y \in S\), for agents located at x at time t

-

m(t, x) denotes the proportion of agents located at \(x \in S\) at time \(t \in \bar{{\mathcal {T}}}\)

-

u(t, x) is the value function of the agents.

For any \((\gamma ,P) \in {\mathcal {U}}\), we define the individual cost \(c :{\mathcal {T}} \times S \times S \times \varDelta (S) \rightarrow {\mathbb {R}}\),

Given \((m,\pi ) \in {\mathcal {R}}\), we denote

We aim at finding a quintuplet \((m,\pi ,u,\gamma ,P)\) such that for any \((t,s,x) \in {\mathcal {T}} \times \bar{{\mathcal {T}}} \times S\),

Heuristic interpretation.

-

The dynamical system of each agent is a Markov chain \((X_s^\pi )_{s\in \bar{{\mathcal {T}}}}\) controlled by \(\pi \in \varDelta \), with initial distribution \(m_0\): for any \( (t,x,y) \in {\mathcal {T}} \times S^2\),

$$\begin{aligned} {\mathbb {P}}\left( X_{t+1}^\pi = y \vert X_{t}^\pi = x \right) = \pi (t,x,y), \quad {\mathbb {P}}( X_{0}^\pi = x) = m_0(x). \end{aligned}$$(1)Given the coupling terms \((\gamma , P) \in {\mathcal {U}}\), the individual control problem is

$$\begin{aligned} \inf _{\pi \in \varDelta } J_{\gamma ,P}(\pi ) := {\mathbb {E}} \Big ( \sum _{t \in {\mathcal {T}}} c_{\gamma ,P}(t,X_{t}^\pi ,X_{t+1}^\pi ,\pi (t,X_t^\pi )) + \gamma (T,X_T^\pi ) \Big ). \end{aligned}$$(2)The equations (MFG,i-ii) are the associated dynamic programming equations: given \((\gamma ,P) \in {\mathcal {U}}\), if u and \(\pi \) satisfy these equations, then \(\pi \) is a solution to (2). The reader can refer to [10, Chapter 7] for a detailed presentation of the dynamic programming approach for the optimal control of Markov chains.

-

Given \(\pi \in \varDelta \), denote by \(m^\pi \) the probability distribution of \(X^\pi \), that is, \(m^\pi (t,x)={\mathbb {P}}(X_t^\pi = x)\). Then \(m^\pi \) is obtained by solving the Kolmogorov equation (MFG,iii). In the limit when the number of agents tends to \(\infty \), the distribution \(m^\pi \) coincides with the empirical distribution of the agents.

-

Finally, the equations (MFG,iv-v) link the coupling terms \(\gamma \) and P to the distribution of the agents m and their control \(\pi \).

In summary: given a solution \(((m,\pi ),(u,\gamma ,P)) \in {\mathcal {R}} \times {\mathcal {K}}\) to (MFG), the triplet \((\pi ,\gamma ,P)\) is a solution to the mean field game

Potential problem. The next section of the article will be dedicated to the connection between the coupled system and the potential problem (P), introduced page 10. We provide here a stochastic formulation of (P) as an optimal control problem of a Markov chain, which has its own interest:

where \((X^{\pi }_t)_{t\in \bar{{\mathcal {T}}}}\) is a controlled Markov satisfying (1).

Remark 1

-

At any time \(t \in {\mathcal {T}}\), it is possible to encode constraints on the transitions of the agents located at \(x \in S\) by defining \(\ell \) in such a way that \({{\,\textrm{dom}\,}}(\ell (t,x,\cdot ))\) is strictly included into \(\varDelta (S)\). An example will be considered in Sect. 7.

-

If F and \(\phi \) are differentiable, then their subdifferentials are singletons and thus the coupling terms \(\gamma \) and P are uniquely determined by m and \(\pi \) through the equations (MFG, iv-v).

-

The equations (MFG, iv-v) imply that \(m \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{F})\) and \(\varvec{Q}[m,\pi ] \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\phi })\). Thus they encode hard constraints on m and \(\pi \) if the coupling functions F or \(\phi \) take the value \(+\infty \). For example, they can be chosen in the form \(G :{\mathbb {R}}^d \rightarrow {\mathbb {R}} \cup \{ + \infty \}\), \(G = g + \chi _K\), where \(g :{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) is convex and differentiable and where K is a closed and convex subset of \({\mathbb {R}}^d\). Then by [4, Corollary 16.38],

$$\begin{aligned} \partial G(x) = \nabla g(x) + N_K(x), \quad \forall x \in {\mathbb {R}}^d. \end{aligned}$$

2.3 Further notation

We introduce now two linear operators, \(\varvec{A}\) and \(\varvec{S}\). They will allow to bring out the connection between the coupled system and the potential problem. The operator \(\varvec{A} :{\mathbb {R}}({\mathcal {T}} \times S^2) \rightarrow {\mathbb {R}}({\mathcal {T}})\) and its adjoint \(\varvec{A}^\star :{\mathbb {R}}({\mathcal {T}}) \rightarrow {\mathbb {R}}({\mathcal {T}} \times S^2)\) are given by

The operator \(\varvec{S} :{\mathbb {R}}({\mathcal {T}} \times S^2) \rightarrow {\mathbb {R}}(\bar{{\mathcal {T}}} \times S)\) and its adjoint \(\varvec{S}^\star :{\mathbb {R}}(\bar{{\mathcal {T}}} \times S) \rightarrow {\mathbb {R}}({\mathcal {T}} \times S^2)\) are given by

We can now reformulate the dynamic programming equations of the coupled system (MFG,i-ii) as follows:

3 Potential problem and convex formulation

3.1 Perspective functions

Given \(h :{\mathbb {R}}^d \rightarrow {\mathbb {R}}\cup \{+\infty \}\) a proper l.s.c. and convex function with bounded domain, we define the perspective function \({\tilde{h}} :{\mathbb {R}} \times {\mathbb {R}}^d \rightarrow {\mathbb {R}}\cup \{+\infty \}\) (following [39, Section 3]) by

Lemma 1

The perspective function \({\tilde{h}}\) is proper, convex, l.s.c. and its domain is given by \({{\,\textrm{dom}\,}}({\tilde{h}}) = \big \{ (\theta ,x) \in {\mathbb {R}}_+ \times {\mathbb {R}}^d \, \big | \, x \in \theta {{\,\textrm{dom}\,}}(h) \big \}.\) For any \((\theta ^\star , x^\star ) \in {\mathbb {R}} \times {\mathbb {R}}^d\), we have

where \(Q := \{ (\theta ^\star , x^\star ) \in {\mathbb {R}} \times {\mathbb {R}}^d, \, h^\star (x^\star ) + \theta ^\star \le 0 \}\).

Proof

The proof is a direct application of [11, Lemmas 1.157, 1.158] when h has a bounded domain. In this case the recession function of h is the indicator function of zero. \(\square \)

Lemma 2

Let \((\theta , x),(\theta ^\star , x^\star ) \in {\mathbb {R}} \times {\mathbb {R}}^d\). Then \((\theta ^\star ,x^\star ) \in \partial {\tilde{h}}(\theta ,x)\) if and only if

Proof

Direct application of [20, Proposition 2.3]. \(\square \)

3.2 Potential problem

We define the following criterion

and the following potential problem (recall that \(m^\pi \) is the solution to the Kolmogorov equation (MFG,iii), given \(\pi \in \varDelta \)):

The link between the mean field game system (MFG) and the potential problem (P) will be exhibited in Sect. 5. Notice that Problem (P) is not convex. Yet we can define a closely related convex problem, whose link with (P) is established in Lemma 3.

We denote by \({\tilde{\ell }} :{\mathcal {T}} \times S \times {\mathbb {R}} \times {\mathbb {R}}(S) \rightarrow {\mathbb {R}}\cup \{+\infty \}\) the perspective function of \(\ell \) with respect to the third variable. By Lemma 1 the function \({\tilde{\ell }}(t,x,\cdot ,\cdot )\) is proper convex and l.s.c. for any \((t,x) \in {\mathcal {T}} \times S\). We define

In the above definition, \(\tilde{\varvec{\ell }}\) is the Nemytskii operator of \({\tilde{\ell }}\), that is, for any \((t,x) \in {\mathcal {T}} \times S\),

We consider now the following convex problem:

where \({\bar{m}}_0 \in {\mathbb {R}}(\bar{{\mathcal {T}}} \times S)\) is defined by

Lemma 3

Let \({{\,\textrm{val}\,}}({ \text {P}})\) and \({{\,\textrm{val}\,}}({ \tilde{\text {P}}})\) respectively denote the values of problems (P) and (\(\tilde{P}\)). Then \({{\,\textrm{val}\,}}({ \text {P}}) = {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\). In addition, if Problem (P) is feasible, then both problems (P) and (\(\tilde{P}\)) have a non-empty bounded set of solutions.

Proof

Step 1: \({{\,\textrm{val}\,}}({ \text {P}}) \ge {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\). Let \((m,\pi ) \in {{\,\textrm{dom}\,}}({\mathcal {J}})\) be such that \(m=m^\pi \). Let

for any \((t,x) \in {\mathcal {T}} \times S\). Then (m, w) is feasible for problem (\(\tilde{P}\)) and

for any \((t,x)\in {\mathcal {T}}\times S\). Indeed by definition of \({\tilde{\ell }}(t,x,\cdot ,\cdot )\), if \(m(t,x) > 0\) then (5) holds and if \(m(t,x) = 0\) then \(w(t,x,\cdot ) = 0\) and (5) still holds. It follows that \({\mathcal {J}}(m,\pi ) = \tilde{{\mathcal {J}}}(m,w)\) and consequently, \({{\,\textrm{val}\,}}({ \text {P}}) \ge {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\).

Step 2: \({{\,\textrm{val}\,}}({ \text {P}}) \le {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\). Let \((m,w) \in {{\,\textrm{dom}\,}}(\tilde{{\mathcal {J}}})\) be such that \(\varvec{S}w-m = {\bar{m}}_0\) and let \(\pi \) be such that

for all \((t,x)\in {\mathcal {T}}\times S\). Then (5) is satisfied and \((m,\pi )\) is feasible for (P). Thus \({\mathcal {J}}(m,\pi ) = \tilde{{\mathcal {J}}}(m,w)\), and consequently, \({{\,\textrm{val}\,}}({ \text {P}}) \le {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\).

Step 3: non-empty and bounded sets of solutions. Since \({\mathcal {J}}(m^\pi ,\pi )\) is l.s.c. with non-empty bounded domain, it reaches its minimum on its domain. Then the set of solutions to (P) is non-empty and bounded. Now let \((m,\pi )\) be a solution to (P) and let w be given by (4). We have that

thus we deduce that the set of solutions to (\(\tilde{P}\)) is non-empty. It remains to show that the set of solutions to (\(\tilde{P}\)) is bounded. Let (m, w) be a solution to (\(\tilde{P}\)). The Kolmogorov equation implies that \(0 \le m(t,x) \le 1\), for any \((t,x) \in \bar{{\mathcal {T}}} \times S\). By Lemma 1, we have \(w(t,x,\cdot ) \in m(t,x) \varDelta (S)\), which implies that \(0 \le w(t,x,y) \le 1\). \(\square \)

Note that the above proof shows how to deduce a solution to (\(\tilde{P}\)) out of a solution to (P) and vice-versa, thanks to relations (4) and (6).

4 Duality

We show in this section that Problem (\(\tilde{P}\)) is the dual of an optimization problem, denoted (D), itself equivalent to an optimal control problem of the dynamic programming equation, problem (\(\tilde{D}\)). For this purpose, we introduce a new assumption (Assumption 2), which is assumed to be satisfied all along the rest of the article.

4.1 Duality result

The dual problem is given by

Note that the above kind of dynamic programming equation involves inequalities (and not equalities as in (MFG,i)).

We introduce now a qualification condition, which will allow to prove the main duality result of the section. For any \(\varepsilon = (\varepsilon _1,\varepsilon _2,\varepsilon _3) \in {\mathcal {K}}\) and \(\pi \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\ell })\) we define \(m_1[\varepsilon ,\pi ]\) the solution to the following perturbed Kolmogorov equation

We also define, for any \((t,x,y) \in {\mathcal {T}}\times S \times S\),

Assumption 2

(Qualification) There exists \(\alpha >0\) such that for any \(\varepsilon = (\varepsilon _1,\varepsilon _2,\varepsilon _3)\) in \({\mathcal {K}}\) with \(\Vert \varepsilon \Vert \le \alpha \), there exists \(\pi \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\ell })\) such that

Note that the qualification assumption implies the feasibility of Problems (\(\tilde{P}\)) and (P).

Remark 2

Assume that \({{\,\textrm{int}\,}}({{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{F}))\) and \({{\,\textrm{int}\,}}({{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\phi }))\) are non-empty sets. Then in this case, Assumption 2 is satisfied if there exists \(\pi \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\ell })\) such that

Remark 3

Let \({\bar{m}}_T \in \varDelta (S)\) be such that \({\bar{m}}_T(x) > 0\) for any \(x \in S\), let \(F(T) = \chi _{\left\{ {\bar{m}}_T\right\} }\) and let \(\phi = 0\). In this case (MFG) is a discrete mean field game planning problem (see [1]).

Now further assume that \(F(t) = 0\) for all \(t\in {\mathcal {T}}\), then (MFG) can be interpreted as an optimal transport problem (see [1, 5]). In this setting, Assumption 2 is satisfied if and only if there exists \(\alpha >0\) such that the following holds: for any \(\varepsilon \in {\mathbb {R}}(\bar{{\mathcal {T}}} \times S)\) with \(\Vert \varepsilon \Vert \le \alpha \), there exists \(\pi \in {{\,\mathrm{{{\textbf {dom}}}}\,}}(\varvec{\ell })\) and \(m_1\) such that

Theorem 1

Let Assumption 2 hold true. Then the dual problem (D) has a bounded set of solutions and \({{\,\textrm{val}\,}}({ \text {D}}) = {{\,\textrm{val}\,}}({ \tilde{\text {P}}})\).

Proof

The primal problem (\(\tilde{P}\)) can formulated as follows:

where the maps \({\mathcal {F}}:{\mathcal {C}} \rightarrow {\mathbb {R}} \cup \{ + \infty \}\) and \({\mathcal {G}}:{\mathcal {K}} \rightarrow {\mathbb {R}}\cup \{ + \infty \}\) and the operator \({\mathcal {A}} :{\mathcal {C}} \rightarrow {\mathcal {K}}\) are defined by

We next prove that the qualification condition

is satisfied. This is equivalent to show the existence of \(\alpha >0\) such that for any \(\varepsilon = (\varepsilon _1,\varepsilon _2,\varepsilon _3) \in {\mathcal {K}}\), with \(\Vert \varepsilon \Vert \le \alpha \), there exists \((m_1,w,m_2,D) \in {{\,\textrm{dom}\,}}({\mathcal {F}})\) satisfying

This is a direct consequence of Assumption 2. Therefore, we can apply the Fenchel-Rockafellar theorem (see [40, Theorem 31.2]) to problem (\({\mathfrak {P}}\)). It follows that the following dual problem has the same value as (\({\mathfrak {P}}\)) and possesses a solution:

It remains to calculate \({\mathcal {F}}^\star \), \({\mathcal {G}}^\star \), and \({\mathcal {A}}^\star \). For any \((s,x) \in \bar{{\mathcal {T}}} \times S\), we define

We then define

For any \((y_1,y_2,y_3,y_4) \in {\mathcal {C}}\) we have by Lemma 1 that

The adjoint operator \({\mathcal {A}}^\star :{\mathcal {K}}\rightarrow {\mathcal {C}}\) is given by

It follows that

Moreover, \({\mathcal {G}}^\star (u,\gamma ,P) = - \langle u(0,\cdot ), m_0\rangle .\) It follows that (D) and (\({\mathfrak {D}}\)) are equivalent, which concludes the proof of the theorem. \(\square \)

4.2 A new dual problem

We introduce in this section a new optimization problem, equivalent to (D). We define the mapping \(\varvec{U} :{\mathcal {U}} \rightarrow {\mathbb {R}}(\bar{{\mathcal {T}}} \times S)\) which associates with \((\gamma ,P) \in {\mathcal {U}}\) the solution \(u \in {\mathbb {R}}(\bar{{\mathcal {T}}} \times S)\) to the dynamic programming equation

We define the following problem

Note that the above dual criterion is of similar nature as the dual criterion in [19, Remark 2.3].

Lemma 4

Problems (D) and (\(\tilde{D}\)) have the same value. Moreover, for any solution \((u,\gamma ,P)\) to (D), \((\gamma ,P)\) is a solution to (\(\tilde{D}\)); conversely, for any solution \((\gamma ,P)\) to (\(\tilde{D}\)) (there exists at least one), \((\varvec{U}[\gamma ,P],\gamma ,P)\) is a solution to (D).

Proof

Let \((\gamma ,P) \in {\mathcal {U}}\). Then \((u:=\varvec{U}[\gamma ,P],\gamma ,P)\) is feasible for problem (D) and by definition, \({\mathcal {D}}(u,\gamma ,P)= \tilde{{\mathcal {D}}}(\gamma ,P)\). Therefore, val(D) \(\ge \) val(\(\tilde{D}\)).

Conversely, let \((u,\gamma ,P)\) be feasible for (D). Let \({\hat{u}}= \varvec{U}[\gamma ,P]\). Now we claim that \({\hat{u}}(t,x) \ge u(t,x)\), for any \((t,x) \in \bar{{\mathcal {T}}} \times S\) (this is nothing but a comparison principle for our dynamic programming equation). The proof of the claim relies on a monotonicity property of \(\ell ^\star \). Given b and \(b' \in {\mathbb {R}}(S)\), we say that \(b \le b'\) if \(b(x) \le b'(x)\), for all \(x \in S\). Since \(\ell (t,x,\cdot )\) has its domain included in \(\varDelta (S)\), we have

Using the above property, it is easy to prove the claim by backward induction. It follows that \(\tilde{{\mathcal {D}}}(\gamma ,P) = {\mathcal {D}}({\hat{u}},\gamma ,P) \ge {\mathcal {D}}(u,\gamma ,P)\) and finally, val(\(\tilde{D}\)) \(\ge \) val(D). Thus the two problems have the same value.

The other claims of the lemma are then easy to verify. \(\square \)

Lemma 5

For any \((t,x) \in {\mathcal {T}} \times S\), the map \((\gamma ,P) \in {\mathcal {U}} \mapsto \varvec{U}[\gamma ,P](t,x)\) is concave.

Proof

Let \((t,x) \in {\mathcal {T}} \times S\). Given \(\pi \in \varDelta \), consider the Markov chain \((X_s^\pi )_{s=t,...,T}\) defined by

By the dynamic programming principle, we have

The criterion to be minimized in the above equality is affine with respect to \((\gamma ,P)\), thus it is concave. The infimum of a family of concave functions is again concave, therefore, \({\mathcal {U}}[\gamma ,P](t,x)\) is concave with respect to \((\gamma ,P)\). \(\square \)

As a consequence of the above Lemma, the criterion \(\tilde{{\mathcal {D}}}\) is concave.

5 Connection between the MFG system and potential problems

The connection between the MFG system and the potential problems can be established with the help of seven conditions, which we introduce first. We say that \((m_1,w,m_2,D) \in {\mathcal {C}}\) and \((u,\gamma ,P) \in {\mathcal {K}}\) satisfy the condition (C1) if for any \((t,x) \in {\mathcal {T}} \times S\),

where \( \pi (t,x) = w(t,x)/m_1(t,x)\). We say that the conditions (C2-C7) are satisfied if

We show in the next lemma that the conditions (C1-C7) are necessary and sufficient optimality conditions for (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)).

Lemma 6

We have that \((m_1,w,m_2,D) \in {\mathcal {C}}\) and \((u,\gamma ,P) \in {\mathcal {K}}\) are respectively solutions of (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)) if and only if the conditions (C1-C7) hold.

Proof

Let \((m_1,w,m_2,D) \in {\mathcal {C}}\) and \((u,\gamma ,P) \in {\mathcal {K}}\). We define the two quantities a and b as follows:

By Theorem 1, \((m_1,w,m_2,D) \in {\mathcal {C}}\) and \((u,\gamma ,P) \in {\mathcal {K}}\) are respectively solutions of (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)) if and only if \(a+b = 0\). Then we have the following decomposition

where

for any \((t,s,x) \in {\mathcal {T}} \times \bar{{\mathcal {T}}} \times S\). By the Fenchel-Young inequality,

Then \(a+b = 0\) if and only if

By Lemma 2 we have that (C1) holds if and only if \(a_1(s,x) = 0\) and it is obvious that (C2-C7) holds if and only if \(a_2(x) = a_3(s) = a_4(t) = b_1(t) = b_2(s) = b_3(s) = 0\). Then the conditions (C1-C7) hold if and only if (13) holds, which concludes the proof. \(\square \)

Proposition 1

Let \((m_1,\pi ,u,\gamma ,P) \in {\mathcal {R}} \times {\mathcal {K}}\) be a solution to (MFG) and let

for any \((t,x) \in {\mathcal {T}} \times S\). Then \((m_1,w,m_2,D)\) and \((u,\gamma ,P)\) are respectively solutions to (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)). Moreover, \((m_1,w)\) is solution to (\(\tilde{P}\)), \((m_1,\pi )\) is solution to (P), and \((\gamma ,P)\) is solution to (\(\tilde{D}\)).

Proof

The conditions (C1-C7) are obviously satisfied. It immediately follows from Lemma 6 that \((m_1,w,m_2,D)\) and \((u,\gamma ,P)\) are optimal for (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)). The optimality of \((m_1,w)\) and \((m_1,\pi )\) is then deduced from the proof of Lemma 3. The optimality of \((\gamma ,P)\) is a consequence of Lemma 4. \(\square \)

For any \((m,w) \in {\mathcal {R}}\), \((u,\gamma ,P)\in {\mathcal {K}}\) we define the set \(\varvec{\pi }[m,w,u,\gamma ,P]\) of controls \( \pi \in \varDelta \) satisfying

if \(m(t,x) >0\) and

if \(m(t,x) = 0\), for any \((t,x)\in {\mathcal {T}}\times S\). Note that for any \(\pi \in \varvec{\pi }[m,w,u,\gamma ,P]\), we have \(w(t,x,\cdot )= m(t,x) \pi (t,x,\cdot )\), for any \((t,x) \in {\mathcal {T}} \times S\). We have now the following converse property to Proposition 1.

Proposition 2

Let \((m_1,w,m_2,D)\) and \((u,\gamma ,P)\) be respectively solutions to (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)). Let \({\hat{u}}= \varvec{U}[\gamma ,P]\) and let \(\pi \in \varvec{\pi }[m,w,{\hat{u}},\gamma ,P]\). Then \((m,{\hat{\pi }},{\hat{u}},\gamma ,P)\) is a solution to (MFG).

Proof

By Lemma 4, \(({\hat{u}},\gamma ,P)\) is a solution to (D). The pairs \((m_1,w,m_2,D)\) and \(({\hat{u}},\gamma ,P)\) are solutions to (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\))), respectively, therefore they satisfy conditions (C1–C7), by Lemma 6. Equations (MFG,iii–v) are then obviously satisfied. By definition, \({\hat{u}}\) satisfies (MFG,i). Finally, (MFG,ii) is satisfied, by condition (C1) and by definition of the set \(\varvec{\pi }[m,w,u,\gamma ,P]\). It follows that \((m,{\pi },{\hat{u}},\gamma ,P) \in {\mathcal {R}} \times {\mathcal {K}}\) is solution to (MFG). \(\square \)

Since the existence of solutions to (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)) has been established in Lemmas 3 and 4, we have the following corollary.

Corollary 1

There exists a solution to (MFG).

We finish this section with a uniqueness result.

Proposition 3

Let \((m,\pi ,u,\gamma ,P)\) and \( (m',\pi ',u',\gamma ',P')\) be two solutions to the coupled system (MFG). Assume that F and \(\phi \) are differentiable with respect to their second variable. Then \((u,\gamma ,P)=(u',\gamma ',P')\). If moreover, for any \((t,x) \in {\mathcal {T}} \times S\), \(\ell (t,x,\cdot )\) is strictly convex, then \((m,\pi )=(m',\pi ')\) and thus (MFG) has a unique solution.

Proof

It follows from Proposition 1 that \((m,w:=m\pi ,m,D:=\varvec{A}w )\) is a solution to (\({\mathfrak {P}}\)) and that \((u,\gamma ,P)\) and \((u',\gamma ',P')\) are solutions to (\({\mathfrak {D}}\)). Thus by Lemma 6, the conditions (C3) and (C4) are satisfied, both for (m, w, m, D) and \((u,\gamma ,P)\) and for (m, w, m, D) and \((u',\gamma ',P')\), which implies that \(\gamma = \nabla \varvec{F}[m] = \gamma '\) and \(P= \nabla \varvec{\phi }[D]= P'\). It further follows that \(u= \varvec{U}[\gamma ,P]= \varvec{U}[\gamma ',P']= u'\).

If moreover \(\ell (t,x,\cdot )\) is strictly convex for any \((t,x) \in {\mathcal {T}} \times S\) then the minimal argument in (MFG,ii) is unique, which implies that \(\pi =\pi '\) and finally that \(m=m^\pi =m^{\pi '}=m'\). \(\square \)

6 Numerical methods

In this section we investigate the numerical resolution of the problems (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)). We investigate different methods: primal-dual proximal algorithms, ADMM and ADM-G. For all methods, it is assumed that the computation of the prox operators (defined below) of \({\tilde{\ell }}(t,x,\cdot )\), \(F(t,\cdot )\) and \(\phi (t,\cdot )\) are tractable. Note that for the method involving the Kullback-Leibler distance in Sect. 6.2.2, the prox of \({\tilde{\ell }}\) is replaced by a stightly more complex optimization problem.

We explain in the “Appendix A” how to calculate the prox of \(\ell \) (and the nonlinear proximator based on the entropy function) in the special case where \(\ell \) is linear on its domain. We explain in Sect. 6.4 how to recover a solution to (MFG).

6.1 Notations

Let \(X_1\) be a subset of \({\mathbb {R}}^d\), let \({\bar{X}}_1\) denote its closure. Let \(f :{\bar{X}}_1 \rightarrow {\mathbb {R}}\). Assume that the following assumption holds true.

Assumption 3

The set \({\bar{X}}_1\) is convex and the map f is continuous and 1-strongly convex on \({\bar{X}}_1\). There exists an open subset \(X_2\) containing \(X_1\) such that f can be extended to a continuous differentiable function on \(X_2\).

We define then the Bregman distance \(d_f :X_1 \times X_1 \rightarrow {\mathbb {R}}\) by

If f is the Euclidean distance \(\frac{1}{2}|\cdot |^2\), then \(d_f(x,y) = \frac{1}{2} |x-y|^2\).

Given a l.s.c., convex and proper function \(g :{\mathbb {R}}^d \rightarrow {\mathbb {R}}\), we define its proximal operator \({{\,\textrm{prox}\,}}_g :{\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) as follows:

For any non-empty, convex and closed \(K \subseteq {\mathbb {R}}^d\), we define the projection operator \({{\,\textrm{proj}\,}}_K\) of \(x\in {\mathbb {R}}^d\) on K by

Finally, we denote \({\bar{\alpha }}(t) = \sum _{(x,y) \in S \times S} \alpha (t,x,y)^2\) for any \(t \in {\mathcal {T}}\).

6.2 Primal-dual proximal algorithms

In this subsection we present the primal-dual algorithms proposed by Chambolle and Pock in [17] and [18]. For the sake of simplicity, we denote by x the primal variable \((m_1,w,m_2,D)\) and by y the dual variable \((u,\gamma ,P)\). The primal-dual algorithms rely on the following saddle-point problem

which is equivalent to problem (\({\mathfrak {P}}\)) (defined in the proof of Theorem 1). Let \({\mathcal {C}}_1\) and \({\mathcal {K}}_1\) be two subsets of \({\mathcal {C}}\) and \({\mathcal {K}}\), respectively. Let \(f :\bar{{\mathcal {C}}}_1 \rightarrow {\mathbb {R}}\) and let \(g :\bar{{\mathcal {K}}}_1 \rightarrow {\mathbb {R}}\) satisfy Assumption 3.

For any \(\tau ,\sigma > 0\) and for any \((x',y') \in {\mathcal {C}} \times {\mathcal {K}}\) we define:

Then we define the following algorithm.

Theorem 2

Let \(\tau , \sigma >0 \) be such that \(\tau \sigma \Vert {\mathcal {A}}\Vert ^2 < 1\), where \(\Vert {\mathcal {A}} \Vert \) denotes the operator norm of \({\mathcal {A}}\) (for the Euclidean norm). Assume that \(\text {dom}({\mathcal {F}}) \subseteq \bar{{\mathcal {C}}}_1\) and \(\text {dom}({\mathcal {G}}^\star ) \subseteq \bar{{\mathcal {K}}}_1\). Assume that the iteration (15) is well-defined, that is, the minimal arguments in (i) and (iii) exist. Let \((x^k,y^k)_{k\in {\mathbb {N}}}\) denote the sequence generated by the algorithm. For any \(k \in {\mathbb {N}}\) we set

Let \((x,y) \in {\mathcal {C}} \times {\mathcal {K}}\). Then the following holds:

-

1.

The sequence \(({\bar{x}}^k)_{k\in {\mathbb {N}}}\) converges to a solution of (\(\tilde{P}\)) and the sequence \(({\bar{y}}^k)_{k\in {\mathbb {N}}}\) converges to a solution of (D). In addition the saddle-point gap is such that

$$\begin{aligned}{} & {} {\mathcal {L}}({\bar{x}}^k,y) - {\mathcal {L}}(x,{\bar{y}}^k) \nonumber \\{} & {} \quad \le \frac{1}{k}\left( d_f(x,{\bar{x}}^k)/\tau + d_g(y,{\bar{y}}^k))/\sigma - \langle {\mathcal {A}}(x-x^0), (y-y^0) \rangle \right) .\nonumber \\ \end{aligned}$$(17) -

2.

If f and g are the Euclidean distance \(\frac{1}{2}|\cdot |^2\), then the sequence \((x^k)_{k\in {\mathbb {N}}}\) converges to a solution of (\(\tilde{P}\)) and the sequence \((y^k)_{k\in {\mathbb {N}}}\) converges to a solution of (D).

Proof

Point 1 holds as a direct application of [18, Theorem 1, Remark 3]. Point 2 holds as a direct application of [17, Theorem 1], applied with \(\theta = 1\). \(\square \)

Remark 4

Fix (x, y), solution to (14). Let \(({{\hat{x}}},{{\hat{y}}}) \in {\mathcal {C}} \times {\mathcal {K}}\) . Then we have that \(0 \le \delta ({{\hat{x}}}) := {\mathcal {L}}({{\hat{x}}},y) - {\mathcal {L}}(x,y)\) and \(0\le \delta '({{\hat{y}}}) := {\mathcal {L}}(x,y) - {\mathcal {L}}(x,{{\hat{y}}})\), with equality if \({{\hat{x}}}\) (resp. \({{\hat{y}}}\)) is a primal (resp. dual) solution. These measures of optimality (for the saddle-point problem) trivially satisfy

for which an upper-bound is provided by (17).

Lemma 7

Let \(a = \max _{t \in {\mathcal {T}}} {\bar{\alpha }}(t)\). Then \(\Vert {\mathcal {A}} \Vert \le \sqrt{\max \left\{ n + a, 4 \right\} }\), where n is the cardinal of the set S.

Proof

For any \((m_1,w,m_2,D)\in {\mathcal {C}}\), we have

We have \(\Vert \varvec{A}\Vert \le a\) and \(\Vert \varvec{S}\Vert \le n\), which concludes the proof. \(\square \)

6.2.1 Euclidean distance

Now we explicit the update rule (15) in the case where f and g are both equal to the Euclidean distance \(\frac{1}{2}|\cdot |^2\) and defined on \({\mathcal {C}}\) and \({\mathcal {K}}\) respectively. In this situation (i,15) and (iii,15) can be expressed via proximal operators:

Now we detail the computation of the proximal steps in the above algorithm.

Primal step. For any \(x = (x_1,x_2,x_3,x_4) \in {\mathcal {C}}\), we have by Moreau’s identity

As a consequence of (12), the proximal operator of \({\mathcal {F}}^\star \) is given by

Then (i,19) is given by

and for any \((t,s) \in {\mathcal {T}} \times \bar{{\mathcal {T}}}\),

Dual step. It follows from (10) that \({{\,\textrm{prox}\,}}_{\sigma {\mathcal {G}}^\star }(y_1,y_2,y_3) = (y_1 + \sigma {\bar{m}}_0, y_2 ,y_3).\) Then (iii,19) is given by

Remark 5

An alternative formulation of the primal problem (avoiding the decoupling \(m_1\) and \(m_2\)) is as follows:

where

This formulation may have numerical advantages since the operator \(\bar{{\mathcal {A}}}\) has a smaller norm than \({\mathcal {A}}\). In full generality, there is however no analytic form for the proximal operator of the \(\bar{{\mathcal {F}}}\) that would be based on the proximal operators of \({\tilde{\ell }}(t,\cdot )\) and \(F(t,\cdot )\). Therefore we do not explore any further this formulation.

6.2.2 Kullback–Leibler divergence

In this section we slightly modify the Euclidean framework above. Instead of considering a Euclidean distance \(d_f\) in (i,15), we consider an entropy based Bregman distance called Kullback-Leibler divergence. Let us define

For any \((m_1,w,m_2,D) \in \bar{{\mathcal {C}}}_1\), we define

with the convention that \(0 \ln (0) = 0\). Then, given \((m_1,w,m_2,D) \in {\mathcal {C}}_1\) and \((m_1',w',m_2',D') \in {\mathcal {C}}_1\), we have

where

As can be easily verified, the map f is 1-strongly convex on \(\bar{{\mathcal {C}}}_1\). The domain of \({\mathcal {F}}\) is not contained in \(\bar{{\mathcal {C}}}_1\) in general (as required by Theorem 2), however f is not 1-strongly convex on \({\mathcal {C}}\). This is a minor issue, since any solution to (14) lies in \(\bar{{\mathcal {C}}}_1\), thus we can replace \({\mathcal {F}}\) by \({\mathcal {F}} + \chi _{\bar{{\mathcal {C}}}_1}\) without modifying the solution set to the problem.

Compared to the Sect. 6.2.1, the computations of (21) still hold. The projection step (20) is now replaced by

Note that it is not necessary to explicit the constraint \(w(t,x,y) \le 1\) in the above problem; it is satisfied as a consequence of Assumption 1 and Lemma 1. In general, the computation of this proximal operator can be difficult. In Sect. 6 we consider a linear running cost and explain (in Appendix 1) how to solve explicitly problem (24) in this specific case.

6.3 ADMM and ADM-G

We now present ADMM and ADM-G. Introducing the variables

and recalling the definition of Q and \({\mathcal {F}}^*\) (see the proof of Theorem 1), the problem (D) can be written as follows:

Remark 6

Let \(D_t \) and \(D_x\) be finite difference operators defined for any \((t,x,y) \in {\mathcal {T}} \times S \times S\) by

Since \({{\,\textrm{dom}\,}}(\ell (t,x,\cdot )) \subseteq \varDelta (S)\), for any \((u,b) \in {\mathcal {R}}\) we have that

for any \((t,x) \in {\mathcal {T}} \times S\). Then we have that \((a,b) \in Q\) if and only if \(({\tilde{a}},{\tilde{b}}) \in Q\), where

Thus the problem (25) can be alternatively written

This problem is close to the problem studied in [5, Section 4] in the context of optimal transport theory.

Let \(r>0\). The Lagrangian and augmented Lagrangian associated with problem (25) are defined by

when evaluated at \((u,\gamma ,P,a,b,m,w)\). Note that their definition is different from the one introduced in (14). We define an ADMM step which consists in the updates of u, \((\gamma ,P)\) and (a, b) via three successive minimization steps and in the update of (m, w) via a gradient ascent step of the augmented Lagrangian:

6.3.1 ADMM

The ADMM method is given by Algorithm 2.

Unlike in [5] this algorithm does not reduce to ALG2, thus we have no theoretical guarantee about the convergence. But as we will see in Sect. 6.3.2, convergence results are available for ADM-G. The relation (28,i) is given by

The relation (28,ii) can be written under a proximal form,

for any \((t,s) \in {\mathcal {T}} \times \bar{{\mathcal {T}}}\). The relation (28, iii) can be written as a projection step

6.3.2 ADM-G

We explicit now the implementation of the ADM-G algorithm introduced in [32]. To fit their framework we define

with appropriate dimensions, so that the constraint of problem (25) writes \(A_1 u + A_2 (\gamma ,P)+ A_3 (a,b) = 0\). We define

Then we have

Theorem 3

Let \((u^k,\gamma ^k,P^k,a^k,b^k,m_1^k,w^k)_{k\in {\mathbb {N}}}\) be the sequence generated by Algorithm 3, and let \(m_2^k = m_1^k\), \(D^k = \varvec{A}w^k\), for any \(k\in {\mathbb {N}}\). Then the sequence \((m_1^k,w^k,m_2^k,D^k)_{k\in {\mathbb {N}}}\) converges to a solution of (\(\tilde{P}\)) and the sequence \((u^k,\gamma ^k,P^k)_{k\in {\mathbb {N}}}\) converges to a solution of (D).

Proof

By [32, Theorem 4.7] we have that \((u^k,\gamma ^k,P^k,a^k,b^k,m_1^k,w^k)_{k\in {\mathbb {N}}}\) converges to a saddle-point of the Lagrangian (26). Thus by definition of \((m_2^k,D^k)\), the sequence \((m_1^k,w^k,m_2^k,D^k)_{k\in {\mathbb {N}}}\) converges to a solution of (\({\mathfrak {P}}\)) and the sequence \((u^k,\gamma ^k,P^k)_{k\in {\mathbb {N}}}\) converges to a solution of (\({\mathfrak {D}}\)). \(\square \)

Remark 7

In our case the first equality of the Gaussian back substitution step in Algorithm (3) can be written

The Gaussian back substitution step is thus given by

where \((\varvec{A} \varvec{A}^\star )^{-1} P(t) = P(t)/ {\bar{\alpha }}(t)\) for any \(t \in {\mathcal {T}}\). Then the differences between ADM-G and ADMM can be summarized by the two corrections (30) and (31).

6.4 Residuals

Let \((m_1^k,w^k,m_2^k,D^k)_{k\in {\mathbb {N}}}\) and \((u^k,\gamma ^k,P^k)_{k\in {\mathbb {N}}}\) denote the two sequences generated by a numerical method. Let us consider

It was shown in Proposition 2 that if for some \(k \in {\mathbb {N}}\), \((m_1^k,w^k,m_2^k,D^k)\) and \((u^k,\gamma ^k,P^k)\) are solutions to (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)), then \((m_1^k,\pi ^k,{\hat{u}}^k,\gamma ^k,P^k)\) is a solution to (MFG).

Therefore, we look the sequence \((m_1^k,\pi ^k,{\hat{u}}^k,\gamma ^k,P^k)_{k \in {\mathbb {N}}}\) as a sequence of approximate solutions to (MFG). Note that (MFG,i) is exactly satisfied, by construction. We consider the residuals \((\varepsilon _m,\varepsilon _\pi , \varepsilon _\gamma , \varepsilon _P) \in {\mathcal {R}} \times {\mathcal {U}}\) defined as follows, in order to measure the satisfaction of the remaining relations in the coupled system:

for all \((t,s,x) \in {\mathcal {T}} \times \bar{{\mathcal {T}}}\times S\). If the residuals are null, then \((m_1^k,\pi ^k,{\hat{u}}^k,\gamma ^k,P^k)\) is a solution to (MFG). The errors are then defined as the norms of \(\varepsilon _\pi \), \(\varepsilon _m\), \(\varepsilon _\gamma \), and \(\varepsilon _P\).

Remark 8

In the following numerical Sect. 7, we plot residuals for ADMM, ADMG, Chambolle–Pock and Chambolle–Pock–Bregman algorithms. The difference in nature of the convergence results (ergodic versus classical) makes the performance comparison between different methods delicate:

-

In view of the ergodic convergence result (Theorem 2, point 1), we plot the residuals associated with the averaged iterates (as defined in (16)). In particular, the performances of Chambolle–Pock and Chambolle–Pock–Bregman can be compared.

-

Following Theorem 3, we plot the sequence of residuals for ADM-G and ADMM.

7 Numerical results

In this section we provide two problems that we solve with the algorithms presented in the previous section. We set \(n = T = 50\) and we define two scaling coefficients \(\varDelta _x = 1/n\) and \(\varDelta _x = 1/T\). We solve two instances of the following scaled system:

One can show that this system is connected to two optimization problems of very similar nature as Problems (\({\mathfrak {P}}\)) and (\({\mathfrak {D}}\)), which can be solved as described previously. For both examples, \(\ell \) is defined by

where \(S_x= \{ x, x-1, x+1 \} \cap \{ 0,..., n-1 \}\). Since \(\ell \) is linear with respect to \(\rho \), we can interpret \(\beta \) as displacement cost from state x to state y that is fixed (in opposition to the displacement cost induced by \(P(t)\alpha (t,x,y)\) that depends on the price). In Appendix 1 the reader can find detailed computations of the Euclidean projection (20) (Sect. 1) and the computation of (24) (Sect. 2) for this particular choice of running cost \(\ell \). The notion of residuals that we use in the following is adapted from Sect. 6.4 to the scaled system (MFG\(_\varDelta \)). In all subsequent graphs, the state space is represented by \(\{ 0, \varDelta _x,..., 1 \}\) and the set of time steps by \(\{ 0, \varDelta _t,...1 \}\).

7.1 Example 1

In our first example, we take \(\varvec{\phi } = 0\) and \(\alpha = 0\). We consider a potential \(\varvec{F}\) of the form \(\varvec{F}[m] = \varvec{F}_1[m] + \varvec{F}_2[m]\), where

and where \(\eta \in {\mathbb {R}}_{+}(\bar{{\mathcal {T}}} \times S)\) is given by

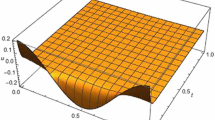

for any \((s,x) \in \bar{{\mathcal {T}}} \times {\mathbb {R}}(S)\). We refer to \(\varvec{F}_1\) as the soft congestion term and to \(\varvec{F}_2\) as the hard congestion term (Fig. 1). We call narrow region the set of points (s, x) for which \(\eta (s,x)= 0.5\) and we call spacious region the set of points for which \(\eta (s,x)=3\). In this situation the state of an agent represents its physical location on the interval [0, 1]. Each agent aims at minimizing the displacement cost induced by \(\ell \) and avoids congestion as time evolves from time \(t=0\) to \(t=1\). The congestion term is linked to \(\eta \) by the following relation (see Remark 1):

As shown on the graphs below, we have two regimes at equilibrium: in the spacious regions \(\gamma \) plays the role of a classical congestion term and \(\gamma = \nabla \varvec{F}_1[m]\). In the narrow region the constraint is binding, \(\gamma \) is such that the constraint \(m\in [0,\eta ]\) is satisfied at the equilibrium and is maximal for the dual problem.

We give a representation of the solution to the mean field system in Fig. 2. Since it is hard to give a graphical representation of \(\pi \), we give instead a graph of the mean displacement v, defined by

For each variable, a 3D representation of the graph and a 2D representation of the contour plots are provided (Fig. 2). For the contour plots, the horizontal axis corresponds to the state space and the vertical axis to the time steps (to be read from the bottom to the top).

Let us comment the results. We start with the interpretation of the measure m and the mean displacement v. At the beginning of the game, the distribution of players is given by the initial condition \(m(0) = {\bar{m}}_0\). Then the players spread since they are in the spacious region to avoid congestion.

Thus the mean displacement is negative on the left (black region) and positive on the right (yellow region), around \(t= 0\). The distribution becomes uniform after some time. In a second phase, the agents move again towards the border of the state space, anticipating the narrow region. They start their displacement before entering into the narrow region due to their limited speed and displacement cost. Then we are in a stationary regime (purple region), the mean displacement is null and the mass does not vary until the end of the narrow region. At the end of the narrow region, the agents spread again along the state axis and the distribution m becomes uniform.

We now interpret the value u and the congestion \(\gamma \). The value function has to be interpreted backward in time. At the end of the game, the terminal condition imposes that the value is equal to the congestion. Since the congestion is positive and accumulates backward in the value function (which can be seen in the dynamic programming equation), the value function increases backward in time. At the end and at the beginning of the narrow region we observe irregularities in the value function due to the irregularities of the congestion term \(\gamma \). But the impact on the value function is limited due to the trade-off between the variables u and \(\gamma \) in the dual problem. At the beginning of the game the value function is higher at the middle of the space because of the initial distribution of players that are accumulated at this point. The congestion term \(\gamma \) is high enough at the beginning of the narrow region to ensure that the constraint on the distribution of players is satisfied at this point. Then \(\gamma \) is high enough at the end of the narrow region to ensure that the constraint on the distribution of players is satisfied for all time indices \(T/3\le s \le 2T/3\). At the exception of these two moments, \(\gamma \) plays the role of a classical congestion term.

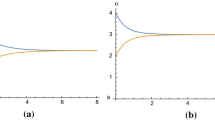

Figure 3 shows the evolution of the error terms in function of the iterations. The execution time of each algorithm is given in Table 1.

7.2 Example 2

Here we assume that \(\varvec{F} = 0\). In this situation the state of an individual agent represents a level of stock. We set \(\alpha (t,x,y) = y-x\); it represents the quantity bought in order to “move" from x to y. Therefore the variable D (used in the primal problem) is the average quantity which is bought; it has to be understood as a demand, since at equilibrium,

We define the potential \(\varvec{\phi }[D] = \varvec{\phi }_1[D] + \varvec{\phi }_2[D]\), where

The potential \(\varvec{\phi }\) is the sum of a convex and differential term \(\varvec{\phi }_1\) with full domain and a convex non-differentiable term \(\varvec{\phi }_2\). The quantity \({\bar{D}}\) is a given exogenous quantity which represent a net demand (positive or negative) to be satisfied by the agents. In this example \({\bar{D}}(t) = 2\sin (4\pi t/(T-1))\) for any \(t \in {\mathcal {T}}\) and \(D_{\text {max}} = 0\).

In this situation each agent faces a price and chooses to increase or deplete her stock. The price mechanism is given by

where \(D_{\text {eff}} := D + {\bar{D}}\) is called the effective demand and follows two regimes.

When the constraint on the demand D is not binding, we are in a soft regime, the price plays the role of a classical price term and is given by \(P(t) = \frac{1}{2}D_{\text {eff}}(t)\). The quantity \({\bar{D}}\) is an exogenous quantity which can be positive or negative. If the quantity \({\bar{D}}(t) > 0\), the exogenous quantity is interpreted as being a demand and the agents have an incentive to deplete their stock to satisfy this demand. If \({\bar{D}}(t) < 0\), the exogenous quantity is interpreted as being a supply. In the absence of a hard constraint, the agents would have interest to increase their stock to absorb this supply. When the constraint on the demand is binding, we are in a hard regime and the price plays the role of an adjustment variable so that the constraint \(D(t) \le D_{max}\) is satisfied and is maximal for the dual problem.

In the case where it is not profitable to buy or sell, we have that \(D(t) = 0\) and thus \(D_{\text {eff}}(t) = {\bar{D}}(t)\). This situation occurs when the quantity \({\bar{D}}(t) \le 0\), since the hard constraint prevents the agents from buying on the market. On the graph this corresponds to the case where the red and the black curves coincide (Fig. 4).

When \({\bar{D}}(t) \ge 0\) we observe that the red curve is lower than the black curve meaning that a certain amount (given by the blue curve on the following graph) of the demand has been satisfied by the agents. Three effects prevent the agents from fully satisfying the demand: their level of stock, their trading cost and their depletion speed limitation.

At the optimum we observe that the demand D is indeed below the threshold \(D_{\text {max}}\), meaning that the constraint is satisfied. We now comment the measure m, the mean displacement v and the value function u (Fig. 5). For a given initial distribution of the level of stock, we observe that the measure m is shifted to the left with time. This means that the agents deplete their stocks with time. This is consistent with the mean displacement v where we observe two regimes: either the agents choose to sell as much as possible or the agents choose not to sell on average. The value function u can be interpreted backward. At the end of the game the value is null due to the terminal condition. Then the higher the level of stock, the lower the value function that is to say the value function is increasing in time and decreasing in space. This comes from the definition of \(\alpha \) and the constraint \(D \le 0\), which implies that the price is positive.

ADMM and ADM-G again converge faster (Fig. 6). The execution time of each algorithm is given in Table 2.

References

Achdou, Y., Camilli, F., Capuzzo-Dolcetta, I.: Mean field games: numerical methods for the planning problem. SIAM J. Control Optim. 50(1), 77–109 (2012)

Achdou, Y., Kobeissi, Z.: Mean field games of controls: finite difference approximations (2020)

Achdou, Y., Laurière, M.: Mean Field Games and Applications: Numerical Aspects. In: Cardaliaguet, P., Porretta, A. (eds) Mean Field Games. Lecture Notes in Mathematics, vol 2281. Springer, Cham. (2020). https://doi.org/10.1007/978-3-030-59837-2_4

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, vol. 408. Springer, New York (2011)

Benamou, J.D., Brenier, Y.: A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numer. Math. 84(3), 375–393 (2000)

Benamou, J.D., Carlier, G.: Augmented Lagrangian methods for transport optimization, mean field games and degenerate elliptic equations. J. Optim. Theory Appl. 167(1), 1–26 (2015)

Benamou, J.D., Carlier, G., Di Marino, S., Nenna, L.: An entropy minimization approach to second-order variational mean-field games. Math. Models Methods Appl. Sci. 29(08), 1553–1583 (2019)

Benamou, J.D., Carlier, G., Santambrogio, F.: Variational mean field games. In: Active Particles, vol. 1, pp. 141–171. Springer (2017)

Bonnans, J., Hadikhanloo, S., Pfeiffer, L.: Schauder estimates for a class of potential mean field games of controls. Appl. Math. Optim. 83(3), 1431–1464 (2021)

Bonnans, J.F.: Convex and Stochastic Optimization. Universitext Series. Springer-Verlag, Berlin (2019)

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer Science & Business Media, Berlin (2000)

Briceño-Arias, L., Kalise, D., Kobeissi, Z., Laurière, M., González, A.M., Silva, F.J.: On the implementation of a primal-dual algorithm for second order time-dependent mean field games with local couplings. ESAIM: Proceedings and Surveys 65, 330–348 (2019)

Briceno-Arias, L.M., Kalise, D., Silva, F.J.: Proximal methods for stationary mean field games with local couplings. SIAM J. Control Optim. 56(2), 801–836 (2018)

Cardaliaguet, P., Graber, P.J., Porretta, A., Tonon, D.: Second order mean field games with degenerate diffusion and local coupling. Nonlinear Differ. Equ. Appl. 22(5), 1287–1317 (2015)

Cardaliaguet, P., Hadikhanloo, S.: Learning in mean field games: the fictitious play. ESAIM Control Optim. Calcul. Var. 23(2), 569–591 (2017)

Cardaliaguet, P., Mészáros, A.R., Santambrogio, F.: First order mean field games with density constraints: pressure equals price. SIAM J. Control Optim. 54(5), 2672–2709 (2016)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chambolle, A., Pock, T.: On the ergodic convergence rates of a first-order primal-dual algorithm. Math. Program. 159(1), 253–287 (2016)

Chow, Y., Li, W., Osher, S., Yin, W.: Algorithm for Hamilton–Jacobi equations in density space via a generalized Hopf formula. J. Sci. Comput. 80(2), 1195–1239 (2019)

Combettes, P.L.: Perspective functions: properties, constructions, and examples. Set-Valued Var. Anal. 26(2), 247–264 (2018)

Erbar, M., Rumpf, M., Schmitzer, B., Simon, S.: Computation of optimal transport on discrete metric measure spaces. Numer. Math. 144(1), 157–200 (2020)

Gabay, D., Mercier, B.: A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 2(1), 17–40 (1976)

Geist, M., Pérolat, J., Laurière, M., Elie, R., Perrin, S., Bachem, O., Munos, R., Pietquin,O. :Concave utility reinforcement learning: the mean-field game viewpoint. In: Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, pp. 489–497 (2022)

Glowinski, R., Marroco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité d’une classe de problèmes de dirichlet non linéaires. ESAIM: Mathematical Modelling and Numerical Analysis-Modélisation Mathématique et Analyse Numérique 9(R2), 41–76 (1975)

Gomes, D.A., Mohr, J., Souza, R.R.: Discrete time, finite state space mean field games. J. Math. Pures et Appl. 93(3), 308–328 (2010)

Gomes, D.A., Saude, J.: A mean-field game approach to price formation. Dyn. Games Appl. pp. 1–25 (2020)

Graber, P., Mullenix, A., Pfeiffer, L.: Weak solutions for potential mean field games of controls. Nonlinear Differ. Equ. Appl. 28(5), 1–34 (2021)

Graber, P.J., Bensoussan, A.: Existence and uniqueness of solutions for Bertrand and Cournot mean field games. Appl. Math. Optim. vol 77. https://doi.org/10.1007/s00245-016-9366-0 (2015)

Graber, P.J., Ignazio, V., Neufeld, A.: Nonlocal Bertrand and Cournot mean field games with general nonlinear demand schedule. J. Math. Pures Appl. 148, 150–198 (2021)

Graber, P.J., Mouzouni, C.: Variational mean field games for market competition. In: PDE models for multi-agent phenomena, pp 93–114. Springer (2018)

Hadikhanloo, S., Silva, F.J.: Finite mean field games: fictitious play and convergence to a first order continuous mean field game. J. Math. Pures Appl. 132, 369–397 (2019)

He, B., Tao, M., Yuan, X.: Alternating direction method with gaussian back substitution for separable convex programming. SIAM J. Optim. 22(2), 313–340 (2012)

Huang, M., Malhamé, R.P., Caines, P.E.: Large population stochastic dynamic games: closed-loop McKean-Vlasov systems and the Nash certainty equivalence principle. Commun. Inf. Syst. 6(3), 221–252 (2006)

Lasry, J.M., Lions, P.L.: Jeux à champ moyen. i-le cas stationnaire. Comptes Rendus Math. 343(9), 619–625 (2006)

Lasry, J.M., Lions, P.L.: Jeux à champ moyen. ii-horizon fini et contrôle optimal. Comptes Rendus Math. 343(10), 679–684 (2006)

Lasry, J.M., Lions, P.L.: Mean field games. Jpn. J. Math. 2(1), 229–260 (2007)

Mészáros, A.R., Silva, F.J.: A variational approach to second order mean field games with density constraints: the stationary case. J. Math. Pures Appl. 104(6), 1135–1159 (2015)

Prosinski, A., Santambrogio, F.: Global-in-time regularity via duality for congestion-penalized mean field games. Stochastics 89(6–7), 923–942 (2017)

Rockafellar, R.T.: Level sets and continuity of conjugate convex functions. Trans. Am. Math. Soc. 123(1), 46–63 (1966)

Rockafellar, R.T.: Convex Analysis, vol. 36. Princeton University Press, Princeton (1970)

Santambrogio, F.: A modest proposal for MFG with density constraints. Netw. Heterog. Media 7(2), 337–347 (2012)

Santambrogio, F.: Crowd motion and evolution PDEs under density constraints. ESAIM Proc. Surv. 64, 137–157 (2018)

Acknowledgements

The first author was supported by the FiME Lab Research Initiative (Institut Europlace de Finance). The third author was supported by a public grant as part of the Investissement d’avenir project, reference ANR-11-LABX-0056-LMH, LabEx LMH.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix

A Appendix

We detail here the calculation of the projection on Q and the non-linear proximity operator in (24), for a running cost of the form

The adaptation to the case where \(\ell \) is defined by (33) is straightforward.

1.1 A.1 Projection on Q

We detail the computation of \({{\,\textrm{proj}\,}}_Q\), as it appears in (20) and (29). First notice that the projection is decoupled in space and time, then for any \((t,x) \in {\mathcal {T}} \times S\) and \(({\bar{a}},{\bar{b}}) \in {\mathbb {R}} \times {\mathbb {R}}(S)\), we need to compute

where \(Q_{t,x} = \left\{ (a,b) \in {\mathbb {R}} \times {\mathbb {R}}(S), \, a + b(y) - \beta (y) \le 0 \right\} \). The corresponding problem is

For any \(a\in {\mathbb {R}}\), the solution of the inner minimization problem is given by

Then replacing into (35), the minimization problem is now given by

where \({\tilde{\beta }}(y) := \beta (y)-{\bar{b}}(y)\). It is now relatively easy to minimize g. Let us sort the sequence \(({\tilde{\beta }}(y))_{y \in S}\), that is, let us consider \((y_i)_{i\in \{0,\ldots ,n-1\}}\) such that \({\tilde{\beta }}(y_0) \le \cdots \le {\tilde{\beta }}(y_{n-1})\). It is obvious that the function g is strictly convex and polynomial of degree 2 on each of the intervals \((-\infty , {\tilde{\beta }}(y_0))\), \(({\tilde{\beta }}(y_0),{\tilde{\beta }}(y_1))\),..., and \(({\tilde{\beta }}(y_{n-1}),+\infty )\). One can identify on which of these intervals a stationary point of g exists, by evaluating \(\partial g({\tilde{\beta }}(y_i)\), for all \(i=0,...,n-1\). Then one can obtain an analytic expresison of the (unique) stationary point \(a^\star \), which minimizes g. Finally, we have \({{\,\textrm{proj}\,}}_{Q_{t,x}}({\bar{a}},{\bar{b}})= (a^\star ,b^\star (a^\star , \cdot ))\).

1.2 A.2 Entropic proximity operator

Here we detail the computation of the solution to (24). For notational purpose we set \(c_1 = \tau (- u' + \gamma ')\) and \(c_2 =\tau (\beta + \varvec{A}^\star P' + \varvec{S}^\star u')\). By definition of the running cost \(\ell \), we have that

Problem (24) writes

To find the solution, we define the following Lagrangian with associated multipliers \((\lambda _1,\lambda _2) \in {\mathbb {R}}({\mathcal {T}} \times S) \times {\mathbb {R}}_{+}(\bar{{\mathcal {T}}} \times S)\):

For any \((t,s,x,y) \in {\mathcal {T}} \times \bar{{\mathcal {T}}} \times S \times S\), a saddle point of the Lagrangian is given by the following first order conditions,

Case 1: \(\lambda _2(s,x) > 0\). At time \(s=T\) we have that \({\hat{m}}_1(s,x) = 1\). For any \(s<T\) we have that \({\hat{m}}_1(s,x) = 1\) and \(\sum _{y \in S}{\hat{w}}(s,x,y) = 1\) and by a direct computation we have that

where \(C(s,x) = \sum _{y \in S } w'(s,x,y) \exp (-c_2(s,x,y))\).

Case 2: \(\lambda _2(s,x) = 0\). At time \(s=T\) we have that \({\hat{m}}_1(s,x) = m_1'(s,x) \exp (-c_1(s,x))\). For any \(s<T\) we have by a direct computation

where \(C(s,x) = \Big (m_1'(s,x) \exp (-c_1(s,x)) / \sum _{y \in S } w'(s,x,y) \exp (- c_2(s,x,y)) \Big )^{1/2}. \)

In order to identify which of the two cases arises, one can compute a solution with formula (37) and check a posteriori that \(\lambda _2(s,x) > 0\). If this is not the case, we deduce that the solution to (36) is given by (38).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bonnans, J.F., Lavigne, P. & Pfeiffer, L. Discrete potential mean field games: duality and numerical resolution. Math. Program. 202, 241–278 (2023). https://doi.org/10.1007/s10107-023-01934-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-023-01934-8

Keywords

- Mean field games

- Dynamic programming

- Kolmogorov equation

- Duality theory

- Primal-dual optimization

- ADMM

- ADM-G