Abstract

Laser light is a useful tool in welding because, when projected onto the weld seam, it reveals the deepest point of the metal being joined. However, laser imaging can be challenging because the laser produces various reflection profiles on the workpiece, which make it difficult to extract the desired laser image. In this paper, an extension of feature point extraction is proposed to determine the depth of a V-groove. Because the laser image contains intensity noise around its edges, a noise rejection technique is applied to improve the image quality. A data fitting method based on a reference row is proposed for extracting feature points, as it is well suited to use after the laser centerline search. The feature points are then obtained by assigning orientation positions based on the “V” shape. Building on the extracted feature points, the study then determines the V-groove depth using an intersection and distance measurement method. To validate the accuracy of the proposed method, several samples of straight-line and half-moon line types were tested, and the system's performance was evaluated against the actual values. The proposed method was found to be acceptable under laser reflection and lighting variations, with error percentages of 2 to 6% for the respective line types. This study extends feature point extraction and can support further analysis for weld seam tracking applications.



1 Introduction

Many manufacturing industries have used welding robots over the past decade. Welding robots that replace the ‘teach-and-play’ mode offer clear benefits in welding speed, productivity, and operating cost [1,2,3,4]. Many sensing techniques, such as acoustic, magnetic, electrical, and mechanical sensing, have been used in welding processes [1, 5], but vision sensor technology currently provides a better platform for advancing image processing toward improved welding production. The image processing algorithm therefore plays an important role in the robustness and accuracy of weld seam detection [6,7,8]. Weld seam tracking and weld pool monitoring are applications that use vision sensors to guide the robot and improve welding accuracy [1, 2, 9, 10].

Vision sensors work together with a laser to obtain the desired feature points of the weld seam. The laser light reflected from the surface of the workpiece illuminates the weld seam groove, which in turn allows the position and height of the groove joint to be determined. In image processing, the high contrast between the laser line and the background allows the laser line to be segmented cleanly from the background. The main challenge when working with a camera and a laser is lighting. In addition, strong noise in the image degrades the performance of weld seam tracking; this noise may come from the arc light and welding spatter produced during actual welding [9, 11,12,13]. Although many methods have been presented to remove noise caused by lighting, uneven workpiece surfaces, and material reflection, little has been reported about laser reflection on the workpiece, except in [2]. The laser line is often much wider than the joint gap, so more studies are needed for narrow-gap joints [2]. The width of the laser line in the image varies from one laser to another, and the wider the laser line (as emitted by a low-quality laser, for example), the more difficult it is to extract the laser centerline.

Image pre-processing is the first and most important step toward successful feature extraction, and it depends on the laser line orientation [10]. The accuracy of weld seam tracking and weld pool monitoring relies on laser centerline extraction, since an accurate centerline reduces errors in the feature points. Line fitting is a common process in weld seam tracking that repairs a broken centerline into a continuous line, thus giving accurate feature points. The Hough transform and the least-squares method are widely used for line fitting. However, they cannot detect a proper line if the centerline consists of many randomly distributed segments. Qin et al. [14] used a double local search method and a modified least-squares method to extract and fit the centerline. However, the double local search method is unreliable in some cases, and the modified least-squares method is unsuitable when the centerline has more than 200 points. Wang et al. [15] and Fan et al. [16] each used a single local search, extracting the centerline with sub-pixel precision and with their proposed operator, respectively. Liangyu et al. [11] applied a stalk transformation to extract the centerline, but it required too much computation. Fang et al. [17] and Park et al. [18] applied skeleton thinning to extract the centerline, but unconnected lines remained along the centerline. Wu et al. [19] and Li et al. [20] instead used a Hough transform to extract the centerline from the binary image. The Hough transform detects lines by mapping each line in the image to a single point in Hough (parameter) space. This method is fast but works only under low laser reflection, where the pixel edges remain connected. Multiple laser lines have been proposed to improve weld seam accuracy [21, 22]. The main purpose of projecting multiple laser lines onto a workpiece is to obtain accurate multi-range data and thereby reduce processing time.
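The following is a minimal Python sketch of a Hough accumulator over white-pixel coordinates, which illustrates the voting idea described above. It is not the implementation used in [19, 20]. The function name `hough_peak`, the 1° angular step, and rounding ρ to whole pixels are all assumptions.

```python
import math
from collections import Counter


def hough_peak(points, theta_step_deg=1):
    """Return the strongest line as ((rho, theta_deg), votes).

    points: (x, y) coordinates of white pixels. A line is written in normal
    form x*cos(theta) + y*sin(theta) = rho, with theta in [0, 180) degrees.
    """
    accumulator = Counter()
    for x, y in points:
        for theta_deg in range(0, 180, theta_step_deg):
            t = math.radians(theta_deg)
            # Each white pixel votes for every (rho, theta) line through it.
            rho = round(x * math.cos(t) + y * math.sin(t))
            accumulator[(rho, theta_deg)] += 1
    # The most-voted accumulator cell corresponds to the detected line.
    return accumulator.most_common(1)[0]


# A horizontal laser segment on row y = 5, columns x = 0..99.
peak, votes = hough_peak([(x, 5) for x in range(100)])
print(peak, votes)  # (5, 90) 100
```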

To reduce weld seam position error, feature point tracking depends on the result of centerline extraction. Xiuping et al. [23] and Kiddee et al. [24] used an iterative search method and thresholded the distance from the current point to the centerline to obtain the feature points. This method needs a new centerline for every line fitting. Kiddee et al. [24] also used template matching: three sample templates (left, bottom, and right) in a specified ROI are matched against the weld seam centerline. However, template matching can return a false location if the search window does not contain the matching region. Instead of using templates within an ROI, [25] proposed elements based on line segment and junction properties to form character strings, which are then matched with a template model to obtain the feature points. Sun et al. [26] applied a second-order difference of the column index, where rapid changes in column value indicate a feature point. Du et al. [12] used slope analysis and intercept parameters to find feature points and then applied an intersection method to determine the groove point. The result was compared with manual measurement to test its validity. Wang et al. [27] reduced the template matching error to detect the center of the groove, but measured only the groove center without determining the groove depth. In image processing, the groove depth can be obtained from the coordinates of the feature points. Lü et al. [28] adopted a pixel subtraction method between the feature points of the top line and the bottom line along the y-axis. In addition to the V shape, Y- and I-shaped welds were chosen to test the accuracy of the method. However, no further analysis was performed on various V-shaped samples.

In summary, using a laser in an active vision sensor makes it possible to obtain the groove depth: the laser projects its light onto the workpiece surface and reaches the deepest location of the groove. Feature point extraction is one of the most significant current topics in image-processing-based weld seam tracking, and feature point tracking has therefore been a major focus for most researchers [10, 12, 19, 29, 30]. However, determining the groove depth from the extracted feature points has received little attention, except in [28]. In addition, most researchers have said little about strong laser reflections from poor-quality lasers and edge noise, except in [10, 31]. For this reason, this paper proposes an extension of feature point extraction using a low-quality laser and its reflection. The main objective is to determine the groove depth from the feature points and to evaluate the system's performance by comparing measured values against actual values. To overcome the lighting issues in weld seam tracking, this paper uses a CMOS camera sensor with laser light. No actual welding is performed in this work, so arc and welding sparks can be neglected. The main subject of this study is laser reflection in the absence of any external light.

2 Methodology

2.1 Experimental set-up

A laser-based vision sensor (LBVS) consists of a structured-light laser and a camera, and is used to digitize and interpret images. The laser light is projected onto the workpiece, producing a laser line that follows the profile of its surface. The laser and camera are mounted on a fixed stand. Figure 1 depicts the basic principle of the LBVS system. The angle between the camera and the laser is less than \(90^{\circ }\) to facilitate image acquisition. The captured image size is 1920 \(\times \) 1080 pixels, and the camera pixel size is 2.2 \(\mu \)m. The distance between the camera stand and the laser stand is approximately 433 mm. The CMOS camera is positioned 533 mm above the worktable, perpendicular to it. The workpiece measures 10 cm \(\times \) 10 cm. To preserve precision, the workpiece is placed under the camera, and all images are captured from the same height and distance. No additional calibration between the camera, the laser, and the tracked object is performed because the vision system is mounted on a fixed stand parallel to the worktable. As noted in [28], the feature points may not be at their exact locations because of surface defects on the workpiece produced during cutting. An unlevel laser line and laser reflection along the weld seam can also produce different extraction results. Therefore, an analysis is carried out to evaluate the effectiveness of the imaging system.

2.2 Image processing of the proposed system

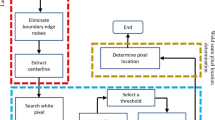

A CMOS camera captures the laser and weld seam image, which is then processed by a computer. The three main image processing stages discussed in this study are image acquisition, image pre-processing, and image post-processing. Image pre-processing consists of three steps: ROI (region of interest) extraction, image segmentation, and edge boundary noise rejection. Image post-processing consists of five steps: laser centerline extraction, line detection and fitting, feature point extraction, groove point extraction, and groove depth measurement. In some literature, feature extraction is approached through the parameters of the weld groove [32]. In this work, feature point extraction expresses the V-groove butt weld joint parameters as x-y coordinates. All procedures are described in detail in the following sections, and the flowchart of the proposed image processing is shown in Fig. 2. One issue that needs to be highlighted is laser reflection on the workpiece. As displayed in Fig. 3, the laser stands out in the captured image because of its higher brightness and can therefore be extracted easily from the background. However, the laser reflection on the workpiece is strong and scattered around the edge boundary of the laser line. The low-quality laser could be the source of this reflection [10]. The reflected laser light produces light pollution around the laser line, which appears as noise in the image. It is also important to adjust the laser focus carefully to obtain the required laser width, because the wider the laser line, the more difficult it is to extract the laser centerline.

2.3 Image pre-processing

As shown in Fig. 2, there are three pre-processing steps before post-processing: ROI extraction, image segmentation, and edge boundary noise rejection. The main purpose of image pre-processing is to remove unwanted information, that is, irrelevant pixels that would otherwise affect the results, while ensuring that all required features are retained.

2.3.1 ROI extraction

The laser line occupies only a small area of the workpiece surface. A region of interest (ROI) is therefore used so that only the required area is processed. In this paper, the ROI coordinates vary from one image to another because the laser position on the workpiece differs between image frames. Figure 4a shows how the ROI is extracted from the original image, and Fig. 4b shows that the ROI contains only the weld seam line and the groove joint line within the laser area. Equation (1) gives the coordinate position used to extract the ROI from the original image.

where xmin and ymin denote the starting coordinates of the ROI along the x- and y-axes, respectively, and w and h are the width and height of the ROI.
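The sketch below shows the crop, assuming that Eq. (1) describes a rectangle given by its starting corner (xmin, ymin), width w, and height h. The list-of-rows image representation, the 0-based indices, and the name `extract_roi` are illustrative assumptions and are not part of the original method.

```python
def extract_roi(image, x_min, y_min, w, h):
    """Crop a w-by-h region starting at column x_min and row y_min.

    image is a list of rows (image[y][x]); indices are 0-based here.
    """
    return [row[x_min:x_min + w] for row in image[y_min:y_min + h]]


frame = [[10 * r + c for c in range(6)] for r in range(5)]
roi = extract_roi(frame, x_min=2, y_min=1, w=3, h=2)
print(roi)  # [[12, 13, 14], [22, 23, 24]]
```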

2.3.2 Image segmentation

The next step is to segment the laser image from the background. Binary conversion is a simple segmentation method that compares the intensity of each pixel of the grayscale or RGB input image with a threshold T, which takes a value between 0 and 1. According to Eq. (2), each input pixel value g(i,j) is compared with T, and the corresponding output value u(i,j) is set to either 0 or 1. As shown in Fig. 5, the laser image is assigned pixel value 1 and appears white, whereas pixels with value 0 form the dark background. Thresholding is very sensitive to noise in the image. A noise rejection technique is therefore required to remove the noise before laser centerline extraction and line detection.

where T is the threshold, g(i,j) is the input pixel value at coordinate (i,j), and u(i,j) is the binary output pixel value.
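The following is a minimal sketch of the thresholding in Eq. (2), assuming the intensities are already normalized to [0, 1]. The text does not say whether a pixel exactly equal to T maps to 1 or 0. The sketch assumes g(i,j) ≥ T gives 1. The function name `binarize` is hypothetical.

```python
def binarize(gray, T):
    """Return u(i,j) = 1 where g(i,j) >= T, else 0 (the >= is an assumption).

    gray: list of rows of intensities normalized to [0, 1].
    """
    if not 0.0 <= T <= 1.0:
        raise ValueError("T must lie in [0, 1]")
    return [[1 if g >= T else 0 for g in row] for row in gray]


g = [[0.1, 0.9, 0.2],
     [0.8, 0.5, 0.95]]
print(binarize(g, T=0.5))  # [[0, 1, 0], [1, 1, 1]]
```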

2.3.3 Edge boundary noise rejection

A noise rejection algorithm is required in pre-processing to handle data noise and thus improve image quality. As shown in Fig. 6, noise occurs most frequently around the edge boundary when the laser reflections, which produce light pollution, are particularly intense. This noise makes centerline extraction in the next step difficult, which in turn makes it hard to extract the feature points if the centerline is not in its exact position. A morphological opening operation (MOO) is applied because it eliminates undesirable objects while preserving shape [33]. MOO is built from two basic operations, erosion and dilation. The idea behind MOO is to preserve the shape of an already-binary image while altering the thickness of the laser image. The opening operation is more suitable here than the closing operation, which fills gaps and holes, because the reference image has no holes to fill. Instead, its contour must be smoothed by removing false connections and thin branches. Figure 7 shows the resulting image, where the opening operation starts with erosion using a disk structuring element of radius 8, followed by dilation using a disk structuring element of radius 20. With the MOO operation, the laser image becomes more homogeneous and less disconnected, and the relevant edge noise is eliminated. However, the radius is adjustable and can differ for another image, depending on the shape of the image.
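The erosion-then-dilation step can be sketched in plain Python as follows. This is an unoptimized illustration and all names are hypothetical. Pixels outside the image are treated as background, although libraries differ on this padding choice. The example uses a toy radius of 1 in place of the radii 8 and 20 reported above. With equal radii, the function reduces to the classical opening.

```python
def disk(radius):
    """Offsets (di, dj) of a disk-shaped structuring element."""
    return [(di, dj) for di in range(-radius, radius + 1)
            for dj in range(-radius, radius + 1) if di * di + dj * dj <= radius * radius]


def _inside(img, i, j):
    # Out-of-bounds pixels count as background (0): an assumption.
    return 0 <= i < len(img) and 0 <= j < len(img[0]) and img[i][j] == 1


def erode(img, se):
    """Keep a pixel only if the whole structuring element fits in the object."""
    return [[1 if all(_inside(img, i + di, j + dj) for di, dj in se) else 0
             for j in range(len(img[0]))] for i in range(len(img))]


def dilate(img, se):
    """Set a pixel if the structuring element centred there touches the object."""
    return [[1 if any(_inside(img, i + di, j + dj) for di, dj in se) else 0
             for j in range(len(img[0]))] for i in range(len(img))]


def erode_then_dilate(img, erode_radius, dilate_radius):
    """Erosion with one disk followed by dilation with another (Sect. 2.3.3)."""
    return dilate(erode(img, disk(erode_radius)), disk(dilate_radius))


# A 3-pixel-thick laser band plus one isolated noise pixel.
img = [[1 if 2 <= i <= 4 and 1 <= j <= 7 else 0 for j in range(9)] for i in range(7)]
img[0][8] = 1
opened = erode_then_dilate(img, erode_radius=1, dilate_radius=1)
print(opened[0][8], opened[3])  # 0 [0, 1, 1, 1, 1, 1, 1, 1, 0]
```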

2.4 Laser centerline extraction

Image post-processing involves two important processes: extracting the feature points and then determining the groove depth from their pixel locations in 2D coordinates. This is a new approach to groove depth in weld seam tracking applications, since the topic has received little discussion except in [28]. It is difficult to locate the feature points directly in a 2D binary image such as the one in Fig. 7. Therefore, a skeleton technique is used to extract the centerline from the 2D binary image. This centerline is one pixel wide and forms a line along the V shape. Such lines are referred to as skeleton lines. Skeletonization is a morphological operation. It removes pixels from the boundary of the binary object while preserving the endpoints of line segments, until no more thinning is possible, and what remains approximates the skeleton. The results of the skeleton technique without and with MOO are shown in Fig. 8. MOO improves the image quality by eliminating branches connected to the endpoints of line segments. After the centerline is extracted from the 2D binary image, the next step is to recover the broken lines with a line fitting method in order to obtain the feature points. In the image shown in Fig. 9, the centerline consists of short and long lines that are not properly connected because of the uneven width of the laser boundary during the morphological process. These unconnected lines are called broken lines. They make feature point extraction more challenging because the corner points are not in their correct positions.
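The text does not name the thinning algorithm behind its skeleton technique. The sketch below substitutes the classical Zhang-Suen thinning algorithm, which likewise removes boundary pixels in repeated passes until no more can be removed. It should not be read as the authors' exact implementation. The function name is hypothetical.

```python
def zhang_suen_thin(img):
    """Thin a binary image (list of rows of 0/1) to a one-pixel-wide skeleton.

    Classical Zhang-Suen thinning; border pixels are assumed to be background.
    """
    img = [row[:] for row in img]  # work on a copy
    h, w = len(img), len(img[0])

    def neighbours(i, j):
        # P2..P9, clockwise starting from the pixel directly above.
        return [img[i - 1][j], img[i - 1][j + 1], img[i][j + 1], img[i + 1][j + 1],
                img[i + 1][j], img[i + 1][j - 1], img[i][j - 1], img[i - 1][j - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_delete = []
            for i in range(1, h - 1):
                for j in range(1, w - 1):
                    if not img[i][j]:
                        continue
                    p = neighbours(i, j)
                    b = sum(p)  # number of object neighbours
                    a = sum(p[k] == 0 and p[(k + 1) % 8] == 1 for k in range(8))  # 0->1 transitions
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_delete.append((i, j))
            # Delete simultaneously so each sub-iteration sees a consistent image.
            for i, j in to_delete:
                img[i][j] = 0
            if to_delete:
                changed = True
    return img


# A 3-pixel-thick horizontal bar (rows 1-3, columns 1-7) in a 5 x 9 image.
bar = [[1 if 1 <= i <= 3 and 1 <= j <= 7 else 0 for j in range(9)] for i in range(5)]
skeleton = zhang_suen_thin(bar)
print(skeleton[2])  # [0, 0, 1, 1, 1, 1, 0, 0, 0]
```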

2.5 A new approach of line detection and fitting method

Line fitting is used to reduce the error of feature point extraction. The Hough transform is currently the dominant line detection method, but it cannot reliably detect a line whose pixels are randomly distributed because of its sensitivity to laser reflection. Therefore, this study proposes a novel method for detecting and fitting disconnected lines. For groove depth measurement, this study considers only the weld seam feature line and the groove feature line in the horizontal direction, shown as the regions outlined in red in Fig. 10.

For a V-shaped image, the algorithm traces the line from the left side. It moves along a straight line, then moves down, continues along a straight line, then moves up, and finally ends in a straight line on the right. A corner point is a point where the direction of movement changes. For this V shape, the point where the straight motion at \(90^{\circ }\) changes to downward motion at \(45^{\circ }\) is called the left corner point. The point where the upward motion from the bottom groove at \(135^{\circ }\) changes to a straight line at \(90^{\circ }\) is called the right corner point. Hence, there are two corner points, left and right, on the weld seam feature line. The third point is the groove point on the bottom line, as shown in Fig. 11. These three points are important for feature point extraction and groove depth measurement. Figure 12 shows the flowchart for feature point extraction. The method starts by finding the row index with the greatest number of pixels with value “1” (white pixels). This row is taken as the maximum row (reference row) and serves three functions: line detection, line fitting, and corner point extraction.

2.5.1 Weld seam feature line detection

For weld seam feature line detection, there are two aspects that need to be highlighted which are (1) the number of white pixels for each row and (2) the highest number of white pixels.

Step 1: The algorithm searches for the white pixel in each column from the first row until the last row of the image.

Step 2: If the algorithm finds a white pixel in the column, it saves the row index that belongs to the white pixel as expressed in Eq. (3).

Here, u(i,j) is the pixel at row i and column j. The column index is the column in which the algorithm searches for white pixels as it scans down the column. A row index is saved if the algorithm detects a white pixel in that column. Otherwise, no row index is saved and the entry is recorded as 0.

Step 3: For each row, the algorithm scans from the first to the last column and counts the white pixels. The count increases by one (+1) when the next pixel is white. If it is not, the count is held until the next white pixel is encountered, at which point counting resumes, as illustrated in Fig. 13. This is called the incho (increment-and-hold) process. Equation (4) shows how the white pixels are counted based on Fig. 13.

where u(i,j)+1 denotes that the count is incremented by 1 if the next pixel is white; otherwise, the count is held at its current value. columncount(j) is the number of white pixels counted up to column j.

Step 4: When the counting is complete, as shown in Eq. (5), the row with the highest number of white pixels is found and saved as the reference row for later use, as shown in Eq. (6).

Here, H(i) is the number of white pixels counted across the columns of row i, \(\sum \) denotes the sum of white pixels over all columns, max[H(i)] searches for the maximum count, and Refrow is the row with the highest number of white pixels.
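Steps 3 and 4 can be sketched as follows. This assumes the incho count is a running count along each row whose final value equals H(i). The function names are hypothetical. Ties for the maximum are resolved by taking the first (uppermost) row, which is also an assumption since the text does not specify it.

```python
def incho_counts(row):
    """Increment-and-hold running count of white pixels along one row."""
    counts, count = [], 0
    for pixel in row:
        if pixel:  # white pixel: increment the count
            count += 1
        counts.append(count)  # black pixels leave the count unchanged (hold)
    return counts


def reference_row(binary):
    """Return (Refrow, H) where H[i] is the number of white pixels in row i."""
    H = [incho_counts(row)[-1] if row else 0 for row in binary]
    # Eq. (6): the row with the maximum count; ties go to the first such row.
    ref = max(range(len(H)), key=lambda i: H[i])
    return ref, H


img = [[0, 0, 0, 0, 0, 0, 0],
       [1, 1, 0, 0, 0, 1, 1],
       [0, 0, 1, 0, 1, 0, 0],
       [0, 0, 0, 1, 0, 0, 0]]
print(incho_counts(img[1]))  # [1, 2, 2, 2, 2, 3, 4]
print(reference_row(img))    # (1, [0, 4, 2, 1])
```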

2.5.2 Weld seam feature line fitting

This step recovers the broken lines as a continuous line using the reference row index. For more accurate corner points, a one-way direction algorithm is proposed. The following criteria are used to detect the line in the upper row (weld seam feature line):

1) The distance between a white pixel in a column and the reference row is less than a threshold. If there is no white pixel, or the distance between the white pixel and the reference row exceeds the threshold, no line is detected.

2) The line changes rapidly in one direction.

Figure 14 shows the detected weld seam feature line as a red line after line fitting is applied. The red line clearly stops at the corners on the left and right sides. Using criterion 2), the pixel coordinates of those corners can be obtained.
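The following is a simplified, hypothetical sketch of the fitting step. Criterion 1) is applied directly. In place of the one-way direction criterion 2), the sketch assumes the V opening is the widest gap between consecutive line columns and takes its two ends as the left and right corners. All names are illustrative.

```python
def fit_weld_seam_line(binary, ref_row, threshold):
    """Hypothetical sketch of weld seam feature line fitting.

    binary: list of rows of 0/1; ref_row: Refrow; threshold: max row distance.
    Returns (fitted_points, left_corner, right_corner) as (row, col) tuples.
    """
    h, w = len(binary), len(binary[0])
    # Criterion 1: a column belongs to the line if it has a white pixel
    # within `threshold` rows of the reference row.
    cols = [j for j in range(w)
            if any(binary[i][j] and abs(i - ref_row) <= threshold for i in range(h))]
    if len(cols) < 2:
        raise ValueError("weld seam feature line not found")
    # Assumption: the V opening is the widest gap between consecutive line columns.
    _, k = max((cols[n + 1] - cols[n], n) for n in range(len(cols) - 1))
    left_corner, right_corner = (ref_row, cols[k]), (ref_row, cols[k + 1])
    # Refill the broken line as two continuous segments on the reference row.
    fitted = [(ref_row, j) for j in range(cols[0], cols[k] + 1)]
    fitted += [(ref_row, j) for j in range(cols[k + 1], cols[-1] + 1)]
    return fitted, left_corner, right_corner


img = [[0] * 11 for _ in range(6)]
for j in (0, 1, 3, 8, 10):  # upper line on row 1 with missing pixels
    img[1][j] = 1
img[0][2] = 1  # one pixel displaced upward
for i, j in ((3, 4), (4, 5), (4, 6), (3, 7)):  # V groove below
    img[i][j] = 1
fitted, left, right = fit_weld_seam_line(img, ref_row=1, threshold=1)
print(left, right, len(fitted))  # (1, 3) (1, 8) 7
```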

To find the bottom row, that is, the groove feature line, Kiddee et al. [24] used the farthest point algorithm, which calculates the distance from the outlier (bottom) point to the reference line. This paper likewise uses the farthest point method. Assuming that the last row has N points, two criteria must be fulfilled:

(1) the number of points that lie on the same row, and

(2) the distance between the reference row and the white pixels of the current row.

Both values, (1) and (2), must exceed their set thresholds. The row index of the groove feature line, Grooverow, is then known. It is used in Sect. 2.6 to determine the pixel coordinates of the groove line. Figure 15 shows the groove feature line in red. No line fitting is needed here because the line is already straight.
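The two criteria can be sketched as a bottom-up scan that returns the farthest qualifying row. The strict comparisons follow the text's requirement that both values exceed their thresholds. Everything else, including the function and parameter names, is an assumption for illustration.

```python
def find_groove_row(binary, ref_row, point_threshold, distance_threshold):
    """Farthest row below ref_row meeting both criteria of Sect. 2.5.2.

    (1) its white-pixel count exceeds point_threshold, and
    (2) its distance from ref_row exceeds distance_threshold.
    Returns the row index (Grooverow) or None.
    """
    for i in range(len(binary) - 1, ref_row, -1):  # bottom row first
        if sum(binary[i]) > point_threshold and i - ref_row > distance_threshold:
            return i
    return None


img = [[0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
       [1, 1, 1, 0, 0, 0, 0, 1, 1, 1],  # weld seam feature line (Refrow = 1)
       [0, 0, 0, 1, 0, 0, 1, 0, 0, 0],
       [0, 0, 0, 0, 1, 1, 0, 0, 0, 0],  # groove feature line
       [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]]  # isolated noise pixel
print(find_groove_row(img, ref_row=1, point_threshold=1, distance_threshold=1))  # 3
```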

2.6 Feature point extraction

As stated in Sect. 2.5, the V shape has three distinguishing points: the left corner point, the right corner point, and the groove point. These three points are essential for determining the depth of the groove. This section describes how the pixel coordinates (x,y) of each corner point are assigned. However, in the skeleton image shown in Fig. 15, the groove feature line has two more corner points, at its left and right ends, giving a total of four corner points for the V-groove. Reference [25] used line segment and junction properties to determine the feature points. In this work, the algorithm begins on the left side of the V-shaped line and progresses to its right end. Three orientations, \(90^{\circ }\), \(45^{\circ }\), and \(135^{\circ }\), are therefore introduced to describe the movement of the line from one corner to the next. Two rows serve as the main references for assigning the pixel coordinates: Refrow for the weld seam corner points and Grooverow for the groove corner points. They are used to determine whether the distance between pixels on the current line and Refrow is less than or greater than a threshold Tf. Figure 16 depicts the orientation labels of the V shape. Table 1 shows the relationship between the orientation positions and the threshold used to extract the feature points.

According to Table 1, the weld seam line is extracted first, followed by the groove line. The weld seam feature line covers only the upper line, so the orientations involved are \(90^{\circ }\) on the upper straight line and \(45^{\circ }\) and \(135^{\circ }\) on the sloped lines. The groove feature line likewise involves \(90^{\circ }\) on the bottom line and \(45^{\circ }\) and \(135^{\circ }\) on the sloped lines. Each corner feature point is determined by a pair of orientations: the orientation before the corner and the orientation after it.

To detect the left corner of the weld seam feature line, the line is traced from the left side of the image, where it is a straight line at \(90^{\circ }\). It then moves down at \(45^{\circ }\). The transition from the straight line to the downward-sloping line is the left corner point. The pixel coordinates of the left corner are obtained if the distance between Refrow and the row of the current white pixel is greater than Tf and the point lies at the transition between the two movements. No pixel coordinate is extracted for the transition from \(45^{\circ }\) to \(135^{\circ }\), because it is not a transition between a straight line and a sloped line. Next, the line moves up at \(135^{\circ }\) and then continues straight at \(90^{\circ }\). This transition from a sloped line to a straight line is the right corner point. The pixel coordinates of the right corner are found if the distance between Refrow and the row of the current white pixel is smaller than Tf and the point lies at the transition between the two movements.

No threshold is needed to find the left corner point on the groove feature line. The distance between the white pixels of the current line and Grooverow is 0 because the groove line already lies on the groove row. The left corner point is obtained at the transition from \(45^{\circ }\) to \(90^{\circ }\). The right corner point is extracted when both the orientation and the threshold requirements are met.
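The orientation labelling can be sketched as follows. The sketch assumes the centerline is available as (row, col) points ordered from left to right and that rows increase downward. The threshold Tf checks against Refrow and Grooverow are omitted for brevity. The mapping of the \(90^{\circ }\) to \(135^{\circ }\) transition to the groove's right corner is inferred from the V geometry rather than taken from Table 1. All names are hypothetical.

```python
def orientation(p, q):
    """Orientation code of the step from p to q, both (row, col).

    90: horizontal; 45: moving down (row increases); 135: moving up.
    """
    d_row = q[0] - p[0]
    if d_row == 0:
        return 90
    return 45 if d_row > 0 else 135


# Transition (before, after) -> corner name; 45 -> 135 yields no corner.
CORNERS = {
    (90, 45): "weld seam left corner",
    (45, 90): "groove left corner",
    (90, 135): "groove right corner",
    (135, 90): "weld seam right corner",
}


def find_corners(path):
    """Return {corner name: point} for each orientation transition on path."""
    labels = [orientation(path[k], path[k + 1]) for k in range(len(path) - 1)]
    corners = {}
    for k in range(1, len(labels)):
        name = CORNERS.get((labels[k - 1], labels[k]))
        if name is not None and name not in corners:
            corners[name] = path[k]  # the point shared by both steps
    return corners


path = [(2, 0), (2, 1), (2, 2), (3, 3), (4, 4), (4, 5), (4, 6), (3, 7), (2, 8), (2, 9)]
corners = find_corners(path)
print(corners["weld seam left corner"], corners["weld seam right corner"])  # (2, 2) (2, 8)
```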

2.7 A new groove point extraction

Ideally, the groove feature line should yield a single point instead of two. However, after considering several factors, such as the laser width and the actual value, it was found that the midpoint between the two groove corner points did not locate the lowest point. The lowest line indicates the deepest point of the workpiece. However, after image processing, especially with the radius chosen for the MOO operation, this line may lie slightly above the actual value. Since the actual value is measured at the deepest position, this must be taken into account to place the groove point correctly. To keep the measured value consistent with the actual value, an intersection method is used to find a new point slightly below the groove feature line. The new groove point is obtained by extending the side lines and intersecting them. As shown in Fig. 17, the lines are divided into a left side and a right side, with four points Al, Bl, Ar, and Br. Points Al and Bl lie on the first (left) line, and points Ar and Br lie on the second (right) line.

The steps for determining the new groove point with the intersection method are given below. Steps 1 to 3 apply to the first (left) line.

Step 1: Calculate the difference vector Cdiff [xc, yc] of the first line by subtracting Al [x1, y1] from Bl [xb, yb].

Step 2: Extend Cdiff by p%, where p% is declared as factor_distance.

Step 3: Determine the pixel coordinate of the end point of the extended line using Eq. (7):

$$D_{l} = A_{l} + \left(C_{diff} \times factor\_distance\right) \tag{7}$$

where Dl is the end point of the extended first line, Al is the start point on the left side, Cdiff is the difference vector between points Al and Bl, and factor_distance is a coefficient that can be a percentage or an integer.

Step 4: Repeat Steps 1 to 3 for the second (right) line, replacing Al, Bl, and Dl with Ar [x2, y2], Br, and Dr.

Step 5: Apply the line intersection formula to both extended lines. The intersection is the new groove point (Ug,Vg).
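Steps 1 to 5 can be sketched as follows. The sketch assumes that points are (x, y) pixel coordinates, that Al and Bl are the upper (weld seam) and lower (groove) left corner points, and that Ar and Br are the corresponding points on the right, as described in Sect. 3.2. Step 5 uses the standard two-line intersection formula. The function names and the default factor_distance of 1.5 are hypothetical.

```python
def extend(a, b, factor_distance):
    """Eq. (7): D = A + Cdiff * factor_distance, with Cdiff = B - A."""
    c_diff = (b[0] - a[0], b[1] - a[1])
    return (a[0] + c_diff[0] * factor_distance, a[1] + c_diff[1] * factor_distance)


def intersect(p1, p2, p3, p4):
    """Intersection of the infinite lines p1-p2 and p3-p4, or None if parallel."""
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if den == 0:
        return None
    t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
    return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))


def new_groove_point(al, bl, ar, br, factor_distance=1.5):
    """Steps 1-5: extend both side lines, then intersect them to get (Ug, Vg)."""
    dl = extend(al, bl, factor_distance)  # Steps 1-3, left line
    dr = extend(ar, br, factor_distance)  # Step 4, right line
    return intersect(al, dl, ar, dr)      # Step 5


# Image coordinates: y grows downward, so the result lies below Bl and Br.
print(new_groove_point((0, 0), (2, 2), (6, 0), (4, 2)))  # (3.0, 3.0)
```

Step 5 intersects infinite lines, so the result does not depend on factor_distance as long as it is non-zero. The extension only affects the result if the intersection is restricted to the drawn segments.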

2.8 Groove depth measurement

Lü et al. [28] subtracted the y-axis pixel coordinates of the weld seam line and the groove line. However, the result changes if the left and right corner points do not have the same y-coordinate. This paper presents a new approach to determining the depth that can be used even when the two corner points are not in exactly the same row. First, the midpoint (XA,YA) between the left and right corners of the weld seam line is found. Then, the Euclidean distance between the midpoint (XA,YA) and the new groove point (Ug,Vg) is computed. This distance is the groove depth, Gd. Figure 18 shows the groove depth measurement.
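The following is a short sketch of the groove depth computation, assuming (x, y) pixel coordinates. The function name is hypothetical. The toy example places the two corner points on different rows. In this case, subtracting y-values alone would give 31 or 29 pixels depending on which corner is used.

```python
import math


def groove_depth(left_corner, right_corner, groove_point):
    """Gd: Euclidean distance from midpoint (XA, YA) to the groove point (Ug, Vg)."""
    xa = (left_corner[0] + right_corner[0]) / 2
    ya = (left_corner[1] + right_corner[1]) / 2
    return math.hypot(groove_point[0] - xa, groove_point[1] - ya)


# Corners on different rows (y = 10 and y = 12): the midpoint is (4, 11).
print(groove_depth((0, 10), (8, 12), (4, 41)))  # 30.0
```

Applying this to the Fig. 23a coordinates (205,66), (378,66), and (281,152) gives about 86.6 pixels. This figure is plain arithmetic on the reported coordinates and is not taken from Tables 2 and 3.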

3 Results

Ten samples of the straight-line type and ten samples of the half-moon type were used to evaluate the proposed image processing algorithm. The workpiece and the camera were kept in fixed positions, while the laser was adjusted to obtain various laser positions on the workpiece. The images were captured in a controlled environment. All measured values were obtained experimentally and compared with the actual values. The actual coordinates were determined by visual inspection of their locations in the actual image.

3.1 Comparison between existing method and the proposed method of line detection

Figure 19 shows the results of image pre-processing for the half-moon type. The panels show the captured image (a), the laser area extracted from the whole image by ROI extraction (b), the MOO process with erosion using a disk structuring element of radius 10 (c), and dilation using a disk structuring element of radius 40 (d). The radius value is flexible. Depending on the size of the image features, it may or may not be the same for another half-moon sample. The radii stated above apply to the sample in Fig. 19d and e. As stated earlier, the Hough transform is a line detection method used by many researchers. Figures 20 and 21 show the results of line detection using the Hough transform for two types of V-groove weld seam: the half-moon line and the straight line. The lines are not properly detected for either type. It was difficult to extract the left and right corner points of the weld seam in Figs. 20b, c and 21b, c, where more than one line was detected. Moreover, no line was detected for the groove line in Figs. 20d and 21d. The proposed method overcomes this deficiency by detecting the lines and fitting the unconnected lines into a continuous line, as displayed in Fig. 22.


3.2 Groove depth matching result

Figure 23 shows the feature point coordinates for one sample of each line type. In Fig. 23a, the left corner point is (205,66), the right corner point is (378,66), and the groove point is (281,152). Both corner points lie on the same row, 66, which is the reference row used for line fitting. The groove point is obtained with the line intersection extended point method from four coordinates: two left corner points, one on the weld seam feature line (upper row) and one on the groove feature line (bottom row), and two right corner points on the upper and bottom rows, respectively. In Fig. 23b, the left corner point is (222,81), the right corner point is (719,81), and the groove point is (471,258). The pixel coordinates of all samples were collected and compared with the actual values. The Euclidean distance between the midpoint and the new groove point described in Sect. 2.5 was then computed to obtain the groove depth, Gd. These values are plotted in Figs. 24 and 25 to highlight the difference between the values measured by the proposed method and the actual values. Both figures show no significant difference between the measured and actual groove depths. The largest deviation, approximately 12 pixels, occurs in the 8th sample in Fig. 24 and in the 3rd sample in Fig. 25, while the smallest differences are 2 pixels and 4 pixels, respectively. To validate the accuracy of the proposed system, the APE (absolute percentage error) between the actual and measured values was calculated for each sample, and the MAPE (mean absolute percentage error) was used to estimate the average error over all samples. All data and percentage errors are provided in Tables 2 and 3.
From the results, the average error is acceptable: the MAPE is only 2.7% for the half-moon type and 5.5% for the straight-line type.
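The two computations named above, the line intersection that reduces the four corner coordinates to one groove point and the APE/MAPE error metrics, can be sketched as follows. This is a minimal sketch assuming the standard two-line intersection formula (one line through the left upper and left bottom points, the other through the right upper and right bottom points) and the usual definition APE = |actual - measured| / |actual| x 100. The function names and all sample values are hypothetical; they are not taken from Sect. 2 or from Tables 2 and 3.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinate: x = column, y = row


def line_intersection(p1: Point, p2: Point, p3: Point, p4: Point) -> Point:
    """Intersection of the line through p1, p2 with the line through p3, p4.

    Assumed usage: p1/p2 = left corner points on the upper (weld seam) and
    bottom (groove) rows, p3/p4 = right corner points on the same two rows.
    """
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        raise ValueError("lines are parallel; no single groove point")
    a = x1 * y2 - y1 * x2  # cross-product term of the first line
    b = x3 * y4 - y3 * x4  # cross-product term of the second line
    px = (a * (x3 - x4) - (x1 - x2) * b) / denom
    py = (a * (y3 - y4) - (y1 - y2) * b) / denom
    return px, py


def ape(actual: float, measured: float) -> float:
    """Absolute percentage error of one sample, in percent."""
    return 100.0 * abs(actual - measured) / abs(actual)


def mape(actual: Sequence[float], measured: Sequence[float]) -> float:
    """Mean absolute percentage error over all samples, in percent."""
    if len(actual) != len(measured) or not actual:
        raise ValueError("need equally long, non-empty sequences")
    return sum(ape(a, m) for a, m in zip(actual, measured)) / len(actual)


# Hypothetical V: left side (0, 0) -> (2, 4), right side (10, 0) -> (8, 4).
print(line_intersection((0, 0), (2, 4), (10, 0), (8, 4)))  # (5.0, 10.0)
# Hypothetical actual vs. measured groove depths, in pixels.
print(mape([100.0, 50.0], [98.0, 51.0]))  # 2.0
```

Image rows grow downward, so the extended sides of the "V" meet below the groove feature line, which is why the intersection row (10.0) is larger than both input rows.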

4 Conclusion

This research proposed an extension study on feature point extraction to measure the depth of the V-groove. The method requires feature points from the weld seam and groove feature lines to determine the groove depth, which is obtained with a distance measurement method. In the binary image, edge noise is strong and causes light pollution around the laser boundary. Morphological operations and the skeleton technique, however, eliminate the branches that appear at the line endpoints and thus improve the image quality. Line fitting is then applied to repair broken lines so that accurate feature points can be obtained. The intersection equation method reduces the groove line to a single groove point instead of two points. When tested under laser reflection and different lighting conditions, the proposed method proved acceptable, with a distance measurement accuracy of more than 94%. The proposed method is therefore advantageous where strong edge noise is distributed around the laser line, such as that caused by reflections on MIG metal.

References

Wu J, Smith J, Lucas J (1996) Weld bead placement system for multipass welding. IEE Proc-Sci Meas Technol 143(2):85–90

Shen X, Teng W (2011) A rapid image processing algorithm for structured light stripe welding seam tracking in laser welding. 30th International Congress on Laser Materials Processing, Laser Microprocessing and Nanomanufacturing, pp 118–124

Xiao R, Xu Y, Hou Z, Chen C, Chen S (2019) An adaptive feature extraction algorithm for multiple typical seam tracking based on vision sensor in robotic arc welding. Sensors and Actuators A: Physical 297

Fan J, Deng S, Jing F, Zhou C, Yang L, Long T, Tan M (2020) An initial point alignment and seam-tracking system for narrow weld. IEEE Trans Ind Inf 16:877–886

Manorathna P, Phairatt P, Ogun P, Widjanarko T, Chamberlain M, Justham L, Marimuthu S, Jackson MR (2014) Feature extraction and tracking of a weld joint for adaptive robotic welding. International Conference on Control Automation Robotics & Vision (ICARCV), pp 1368–1372

Shah HNM, Sulaiman M, Shukor AZ, Khamis Z, Rahman AA (2018) Butt welding joints recognition and location identification by using local thresholding. Robotics and Computer-Integrated Manufacturing 51:181–188

Shah HNM, Sulaiman M, Shukor AZ, Rashid MZA (2017) Recognition of butt welding joints using background subtraction seam path approach for welding robot. International Journal of Mechanical & Mechatronics Engineering 17(01):57–62

Shah HNM, Sulaiman M, Shukor AZ, Khamis Z (2018) An experiment of detection and localization in tooth saw shape for butt joint using KUKA welding robot. Int J Adv Manuf Technol 97(5):3153–3162

Liu X, Liu C (2017) Research on image processing in weld seam tracking with laser structured light. International Forum on Energy, Environment Science and Materials (IFEESM 2017) 120:803–807

Muhammad J, Altun H, Abo-Serie E (2018) A robust butt welding seam finding technique for intelligent robotic welding system using active laser vision. International Journal of Advanced Manufacturing Technology 94:13–29

Liangyu L, Lingjian F, Xin Z, Xiang L (2007) Image processing of seam tracking system using laser vision. Robotic Welding, Intelligence and Automation, pp 319–324

Du R, Xu Y, Hou Z, Shu J, Chen S (2019) Strong noise image processing for vision-based seam tracking in robotic gas metal arc welding. Int J Adv Manuf Technol 101(11):2135–2149

Li X, Li X, Khyam MO, Ge SS (2017) Robust welding seam tracking and recognition. IEEE Sensors J 17:5609–5617

Qin T, Zhang K, Deng J, Jin X (2011) Image processing methods for V-shape weld seam based on laser structured light. Foundations of Intelligent Systems, AISC 122:527–536

Wang Z, Jing F, Fan J (2018) Weld seam type recognition system based on structured light vision and ensemble learning. IEEE International Conference on Mechatronics and Automation (ICMA), pp 866–871

Fan J, Jing F, Yang L, Long T, Tan M (2019) An initial point alignment method of narrow weld using laser vision sensor. Int J Adv Manuf Technol 102:201–212

Fang Z, Xu D, Tan M (2010) Visual seam tracking system for butt weld of thin plate. Int J Adv Manuf Technol 49:519–526

Park MH, Wu QQ, Park CK, Lee JP, Kim IS (2015) A study on image processing algorithms for seam tracking system in GMA welding. Adv Mater Res 1088:819–823

Wu QQ, Lee JP, Park MH, Jin BJ, Kim DH, Park CK, Kim IS (2015) A study on the modified Hough algorithm for image processing in weld seam tracking. J Mech Sci Technol 29(11):4859–4865

Li C, Gong G (2018) Research on the adaptive recognition and location of the weld based on the characteristics of laser stripes. 10th International Conference on Intelligent Human-Machine Systems and Cybernetics, pp 163–166

Sung K, Lee H, Choi YS, Rhee S (2009) Development of a multiline laser vision sensor for joint tracking in welding. Weld J 88(4):79–85

Xiao Z (2011) Research on a trilines laser vision sensor for seam tracking in welding. Lecture Notes in Electrical Engineering 88, pp 139–144

Xiuping W, Ruilin B, Ziteng L (2014) Weld seam detection and feature extraction based on laser vision. Proceedings of the 33rd Chinese Control Conference, pp 8249–8252

Kiddee P, Fang Z, Tan M (2016) An automated weld seam tracking system for thick plate using cross mark structured light. Int J Adv Manuf Technol 87:3589–3603

Li X, Li X, Ge SS, Khyam MO, Luo C (2017) Automatic welding seam tracking and identification. IEEE Transactions on Industrial Electronics 64(9):7261–7271

Sun JD, Cao GZ, Huang SD, Chen K, Yang JJ (2016) Welding seam detection and feature point extraction for robotic arc welding using laser-vision. 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), pp 64–67

Wang X, Shi Y, Yu G, Liang B, Li Y (2016) Groove-center detection in gas metal arc welding using a template-matching method. Int J Adv Manuf Technol 86:2791–2801

Lü X, Gu D, Wang Y, Yan Qu, Qin C, Huang F (2018) Feature extraction of weld groove image based on laser vision. IEEE Sensors J 18(11):4715–4724

Yanbiao Z, Yanbo W, Weilin Z, Xiangzhi C (2018) Real-time seam tracking control system based on line laser visions. Optics & Laser Technology 103:182–192

Lee JP, Wu QQ, Park MH, Park C, Kim IS (2014) A study on optimal algorithms to find joint tracking in GMA welding. International Journal of Engineering Science and Innovative Technology (IJESIT) 3:370–380

Cibicik A, Tingelstad L, Egeland O (2021) Laser scanning and parametrization of weld grooves with reflective surfaces. Sensors (Basel) 21(14):4791

Cibicik A, Njaastad EB, Tingelstad L, Egeland O (2022) Robotic weld groove scanning for large tubular T joints using a line laser sensor. Int J Adv Manuf Technol 120:4525–4538

Said KAM, Jambek AB, Sulaiman N (2016) A study of image processing using morphological opening and closing processes. Int J Control Theory Appl 9(31):15–21

Funding

The authors are grateful for the support granted by the Center for Robotics and Industrial Automation, Universiti Teknikal Malaysia Melaka (UTeM) in conducting this research through grant RACER/2019/FKE-CeRIA/F00399 and the Ministry of Higher Education.


Ethics declarations

Ethics approval

All professional ethical standards have been followed.

Consent to participate

Not applicable

Consent for publication

Not applicable

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Johan, N.F., Shah, H.N.M., Sulaiman, M. et al. Groove depth measurement based on laser extraction and vision system. Int J Adv Manuf Technol 130, 4151–4167 (2024). https://doi.org/10.1007/s00170-023-12914-9
