Abstract

Vision sensors are used in weld seam applications for image capture and post-processing of image data. When combined with welding robots, they are essential for achieving adequate seam-tracking quality. Working together with a laser, the vision sensor makes it possible to extract the location of the weld seam. From a visual standpoint, however, challenges may arise from environmental illumination, imperfections on the workpiece surface such as stains and scratches, errors in the hardware setup, or laser reflections, which produce considerable image noise. Noise produced by the laser beam on the workpiece surface, owing to the specifications of the laser itself, can make the laser centerline uneven, which often makes it difficult to extract the feature points of the weld seam. This work presents a method that improves the quality of the centerline by constructing a more stable centerline, in order to obtain the pixel location of the weld seam points in x–y coordinates while accounting for the effect of laser reflection on the workpiece. Three main phases are employed to achieve this: laser extraction, broken-line fitting, and pixel location determination. To locate a pixel coordinate, a technique based on a qualitative description of the V-groove butt joint is developed. Butt-joint metal workpieces with two different line types (straight and semicircular), imaged at several laser positions, are tested to validate the accuracy of the proposed method. The approach yields small errors compared with the actual values and has no noticeable impact on the system's effectiveness.



1 Introduction

In the welding industry, seam tracking systems using vision sensors have been developed to increase welding automation. Vision sensors have frequently been used in welding applications to capture weld seam images without direct contact between the workpiece and the welding robot [1,2,3,4,5,6]. With advances in manufacturing and laser technology, vision sensors are considered beneficial for seam detection because they decrease the positioning error of weld seams and increase production quality in weld pool investigation [7]. Vision sensors used to track the weld seam are of two types, active sensors [8,9,10,11,12] and passive sensors [13, 14], and the two can also be combined [15]. The classification is mostly determined by the type of light used, such as arc light, natural light, LED light, or laser-structured light. An active vision sensor uses laser-structured light as the light source to capture the weld seam image, and this kind of sensor is generally more suitable for determining the weld pool area and the groove. By incorporating a CNN model into the GMAW tracking process with an active vision sensor, [8] increased the robustness of the image processing system, particularly for images with significant interference; the results demonstrated the accuracy and efficiency of that strategy. A passive sensor, in contrast, uses arc light or natural light as an additional light source. The challenge for a passive sensor is that proper lighting must be available for the sensor to detect the target image. The camera must be able to capture the weld seam image near the arc, and this kind of sensor is commonly used for narrow butt joints [14]. [15] combined natural light and structured light to measure horizontal and vertical torch deviation; however, because it relies on natural light, that system is easily disturbed by arc light.
An active sensor therefore overcomes these problems, because the laser provides information on the weld seam profile, especially for groove butt joint configurations.

Vision sensors and image processing have gained considerable attention in many applications. However, how an image can be processed depends heavily on the lighting, the workpiece surface, and many other factors. Limitations such as reflection from the metal surface, scratches, stains, and natural lighting during image capture affect the final result [12, 16]. Laser reflections with either low brightness or large laser widths also contribute to lighting issues [9, 17, 18].

Image pre-processing is an essential step in any visual imaging application. To obtain a quality image, the pre-processing steps must fulfill the requirements of the specific task [19]. Image pre-processing is widely used to reduce the noise in an image so that only the important information remains, which can simultaneously reduce computation time and increase processing speed. The first step in image pre-processing is image acquisition, followed by image filtering and image segmentation. Some applications apply a region of interest (ROI) to obtain smoother results [20]. However, the order of these steps can change, and not all of them are mandatory, as long as the target image can be obtained accurately. [21] applied cross-line structured light (CLSL) to search for the ROI: two laser lines are projected in different directions, and the cross mark at their intersection is located and used as a reference point to define the ROI.

In weld seam images, a key aspect of working with a laser is extracting the centerline of the laser image. There are many methods for centerline extraction, such as the Hough transform [5, 22], the skeleton technique, the Steger algorithm [20], the intensity method [15, 22], and a direction-template-based method with ridge line tracking [23]. [5] extracted the laser line using the Hough transform, but this method is sensitive to strong noise, welding dust, and spatter. The direction template and line tracking method reduced the processing time and works at the sub-pixel level; however, it is not suitable for poor-quality lasers [23]. In [24], the kernel size and intensity distribution were selected to enhance a vertically oriented median filter and eliminate noise.

Feature extraction is used in weld seam tracking to obtain feature information from the weld groove image. Typically, two feature points of the weld seam are used: one for the weld bead position and the other for weld seam tracking. Because of the shape of the V-groove, the line image consists of a straight line and a curved line, and these two lines together form a corner point. Therefore, slope analysis is the dominant approach for determining the weld seam position [1, 13]. To determine the feature points, [1] used slope analysis and threshold setting to track the corner points of the V-groove; it tracks the two positions of the V corner and determines the weld profile from those positions. The weld profile is important for ensuring that the weld bead filler reaches the required level during welding. In [1], the test images had a system precision of 0.01 mm per pixel. [13] applied slope analysis and the oblique intercept method to find the feature point, assuming that the corner point of a parallel line passing through the circle is a feature point. [25] proposed a new method using an internal propulsion algorithm, in which the ROI is divided into the left and right sides of the weld seam, and the weld seam point is identified where the detection operator between the center point and the current point reaches its maximum.

This paper introduces a method to reconnect the uneven lines produced by the morphological operation. Because laser reflection on the workpiece is a critical issue, this paper highlights the use of a laser with low-intensity reflection and a bright spot. Typically, the V-type butt groove image consists of a top line in the horizontal direction and a bottom line in the vertical direction with a specified orientation; however, this work considers only the horizontal top line for the weld seam tracking position. A novel method that uses three indication criteria and region-based rules is proposed for extracting the weld seam points. The algorithms were implemented in Matlab.

This paper is organized as follows. Section 2 gives an overview of the proposed system, which consists of three major phases and their sub-phases, and introduces the image pre-processing, noise elimination, broken-line fitting, and pixel location determination methods. The experimental results and discussion are given in Section 3, and Section 4 concludes the paper. Figure 1 presents the flowchart of the overall process for extracting weld seam feature points of the V-groove butt joint; each phase is explained in detail in its own section.

2 Methodology

2.1 Overview

The approach introduced in this research employs a laser-based vision sensor (LBVS) system, made up of a laser light source and an industrial camera, to pinpoint the coordinate location (x, y) of the V-groove butt joint. Weld seam feature point extraction using the LBVS is a critical step before coordinate locations can be obtained. It involves two primary tasks: detecting the laser line on the workpiece image and extracting the feature points on the weld seam line. Therefore, a sequential process with three main phases has been developed: laser extraction, broken-line fitting, and weld seam pixel location determination, as displayed in Fig. 2. One of the main issues addressed is image noise, which must be removed so that only the relevant information remains. The noise reduction technique is explained in detail in the laser extraction phase. Moreover, a technique based on pixel counting and reference row finding is proposed in the broken-line fitting phase to reconnect the uneven lines. Based on the V-shape configuration, this work designs three parameters based on a qualitative description in order to extract the weld seam coordinates. The three parameters, region, direction, and indicator, are explained in detail in Section 2.4 and belong to the final phase, weld seam pixel location determination. As shown in Fig. 3a, the laser line forms a V shape due to the direct reflection of the laser on the workpiece's surface. This formation contains two main lines, namely the laser line (red line) and the weld seam line (blue circle). The point where the laser line and the weld seam line intersect is known as the weld seam point.
The weld seam point is also known as the corner point; it denotes a sudden change at the corners of the welding joint and lies on the top line (on the surface of the workpiece). Because two metal sheets are joined, there are two weld seam points, at the left and right corners, as shown in Fig. 3b.

2.2 Laser extraction phase

Laser brightness offers an advantage in weld seam extraction because of the strong contrast of the laser image in the foreground: the laser light is more prominent and noticeable than the surrounding image. However, working with a vision sensor is challenging, because factors such as laser reflections, which contribute to a low-intensity distribution, can interfere with an algorithm's ability to analyze the images. Therefore, the laser extraction phase has two main steps, image pre-processing and centerline extraction, developed to tackle these problems and to provide a better strategy for extracting weld seam points using a vision sensor and a laser. Image pre-processing consists of three sub-steps performed before centerline extraction: the ROI boundary box, the Bi-level technique, and MNR processing. First, the ROI (region of interest) boundary box extracts only the target area of interest from the entire image, so that the laser image with its relevant features and characteristics is retained. Second, the Bi-level technique segments the laser image from the background. Third, MNR (morphological noise reduction) processing eliminates noise and thus improves image quality. Finally, a 1-D image extraction is applied to extract the centerline of the laser, as shown in Fig. 4. Some researchers apply image smoothing, such as median and Gaussian filtering, to reduce noise before a morphological operation. In contrast, this work applies direct image thresholding, converting the image into a binary image, to remove low-intensity noise without a smoothing step. A morphological operation is then applied to strengthen the visual appearance of the laser image by suppressing the remaining noise.

2.2.1 Image pre-processing

The input image is usually large, so processing it takes time, and even small particles can complicate processing. Therefore, an ROI boundary box is used to restrict processing to a small area of the entire image. This area contains the laser region that follows the shape of the V-groove and includes only the relevant features. Anything outside the ROI is eliminated in order to remove spurs that would lead to inaccurate extraction. The ROI is set according to the known, fixed position of the laser region in the image. Starting from the origin of the input image at \((X_{in}, Y_{in})\), a starting point for the ROI is selected; this point becomes the ROI origin with coordinates \((X_{ROI}, Y_{ROI})\). The height and width of the ROI are then chosen so that the desired V shape, including its relevant features, appears. The size and location of the ROI depend on the position of the laser on the workpiece, because weld seams are not consistent along the weld path. Figure 5 shows the input image with laser reflection on a metal surface; the reflection produces low-intensity noise around the high-intensity laser line. If no ROI is applied, or if the ROI includes the intensity below the groove line, the result for the bottom line will differ. However, because the objective of this work is to extract the weld seam feature points on the top line only, the ROI can exclude additional laser regions as long as the V shape is visible. The goal of the ROI is thus also to eliminate all areas that contribute noise, including any additional laser reflections below the groove that may result from poor laser quality or reflections on the workpiece surface.
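The ROI step amounts to cropping a fixed window whose top-left pixel is the ROI origin. The following is a minimal sketch, assuming the image is held as a list of pixel rows (`image[y][x]`) and that the origin, width, and height are chosen by hand as the text describes. The helper name `crop_roi` and the toy image are illustrative only; the paper implements this step in Matlab and does not give its code.

```python
def crop_roi(image, x_roi, y_roi, width, height):
    """Return the ROI whose top-left pixel is (x_roi, y_roi) in the input image.

    `image` is a list of rows: image[y][x]. The ROI origin and size are assumed
    to be chosen by hand for a fixed laser position.
    """
    if width <= 0 or height <= 0:
        raise ValueError("ROI width and height must be positive")
    if (x_roi < 0 or y_roi < 0
            or y_roi + height > len(image) or x_roi + width > len(image[0])):
        raise ValueError("ROI lies outside the input image")
    # Keep rows y_roi .. y_roi+height-1 and columns x_roi .. x_roi+width-1.
    return [row[x_roi:x_roi + width] for row in image[y_roi:y_roi + height]]


if __name__ == "__main__":
    # Toy 4 x 6 grayscale image where pixel (x, y) has value 10*y + x.
    toy = [[10 * y + x for x in range(6)] for y in range(4)]
    print(crop_roi(toy, x_roi=2, y_roi=1, width=3, height=2))
    # [[12, 13, 14], [22, 23, 24]]
```

Everything outside the window is simply discarded, which is what removes the additional laser reflections below the groove.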

Figure 6 depicts the algorithm flowchart for the binary transformation and boundary edge noise elimination; the details are explained in the respective sections. Conceptually, as shown in Fig. 6, the ROI image is converted into a binary image using a threshold level (T), and a morphological operation consisting of erosion, filling, and dilation is then applied for noise elimination. This ensures that the boundary edges of the laser image are unbroken, so that the line can finally be reduced to a width of one pixel. Binary images are easier to segment than grayscale images, because the system only has to handle two pixel levels, 1 and 0, which represent white and black, respectively. To replace all pixel values in the input image with binary values, a threshold level between 0 and 1 is determined; this step is called thresholding. The algorithm uses adaptive thresholding, in which the level is adjusted for each image. If the brightness of the input image g(i,j), normalized to [0, 1], is greater than or equal to the threshold value (T), the pixel is set to B(i,j) = 1 (white); otherwise, it is set to 0 (black), as expressed in Eq. (1).

$$B(i,j) = \begin{cases} 1, & g(i,j)/255 \ge T \\ 0, & \text{otherwise} \end{cases} \qquad (1)$$

Here, B(i,j) is the binary pixel value (1 or 0), g(i,j) is the input image value (0 to 255) at coordinate (i,j), and T is the adaptive threshold level, ranging from 0 to 1. In this research, threshold values in the range 0.6 < T < 0.9 performed well. The threshold selection is repeated until the binary image reaches an acceptable quality. As Fig. 7 shows, the foreground consists of white pixels and the background of black pixels, and the unwanted low-intensity noise has been successfully removed. Unlike the sub-pixel precision and maximum intensity methods, this approach exploits the high brightness of the laser region, which allows it to be segmented from the background with the simple Bi-level technique.
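The bi-level rule of Eq. (1) can be sketched in a few lines. This assumes that T, which lies in [0, 1], is compared against the intensity normalized by 255, since g(i,j) ranges from 0 to 255; the function name `binarize` and the ROI values are hypothetical.

```python
def binarize(gray, t):
    """Bi-level segmentation in the spirit of Eq. (1).

    gray: list of rows of 8-bit intensities g(i, j) in 0..255.
    t:    threshold level T in [0, 1], compared against g(i, j) / 255
          (assumed normalization, since T and g use different ranges).
    Returns B with B[i][j] = 1 (white) where g/255 >= T, else 0 (black).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("T must lie in [0, 1]")
    return [[1 if g / 255.0 >= t else 0 for g in row] for row in gray]


if __name__ == "__main__":
    roi = [[0, 40, 150], [200, 230, 255]]   # hypothetical ROI intensities
    print(binarize(roi, 0.75))  # [[0, 0, 0], [1, 1, 1]]
    print(binarize(roi, 0.85))  # [[0, 0, 0], [0, 1, 1]]
```

Raising T within the reported band removes progressively brighter noise, which is why the level has to be tuned per image.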

2.2.2 Noise elimination and centerline extraction

Image noise in weld seam applications that use vision sensors is commonly caused by factors such as environmental illumination, imperfections on the workpiece surface, poor hardware, or laser reflection. Few studies address the effects of laser reflection apart from [9, 17, 20]; most instead discuss the stains and scratches observed on the workpiece surface. As Fig. 8 shows, most of the noise resulting from these reflections consists of thin hairs and blobs (red circles) around the edge of the white image boundary. This noise may be caused by a weak laser reflection that makes the edge irregular and messy, although the V structure is still discernible. The challenge is to remove the noise along the boundary edge so that the centerline can be extracted successfully. Morphological image processing is a filtering method that has been shown to remove noise from binary images [15, 26]. The morphological opening operation consists of two steps performed sequentially, erosion followed by dilation, as stated in Eq. (2) [27].

$$A \circ B = (A \ominus B) \oplus B \qquad (2)$$

where \(\ominus\) and \(\oplus\) denote erosion and dilation, respectively, A is the binary image, and B is the structuring element. As shown in Fig. 6 and Eq. (2), the binary image A is first eroded using the DSE (disk structuring element, B). The radius can be varied to a certain extent, and an optimal radius must be selected to accommodate the subsequent dilation. The outcomes of the morphological opening operation (MOO) are shown in Fig. 9. Figure 9a clearly shows that erosion with a radius of 10 removes a thin layer of undesirable white pixels and small blobs from the outer boundary while slightly shrinking the larger blobs. In other words, most of the white pixels at the border edge have been changed to black pixels, making the white region smaller than before. A dilation is then applied to the eroded image to facilitate centerline extraction, using the same DSE B and the same radius selection procedure. The remaining issue is the radius size. Most research using MOO uses a small radius, usually less than 10, but because the image structure features here are relatively large, the radius can reach up to 30. As shown in Fig. 9b, the image dilated with a radius of 10 still has jagged boundary edges, which may lead to inaccurate centerline extraction. The larger the radius, the neater the boundary edge; however, a radius of 40, as shown in Fig. 11, is too large and also tends to give an inaccurate centerline because of the large width, or gap, between the top and bottom edges. In conclusion, erosion with a radius of 10 is applied first, followed by dilation with a radius of 30 along the boundary edge; gaps are filled, making the output of the morphological operation look smoother, as depicted in Fig. 10b. The next step is centerline extraction.
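The erosion-then-dilation sequence with a disk structuring element can be sketched in pure Python as follows. This is an illustrative re-implementation, not the Matlab code used in the paper: the function names are hypothetical, and neighbours falling outside the image are simply ignored (a simplifying assumption about border handling). The defaults reuse the radii of 10 and 30 reported above; with equal radii the function is exactly the opening of Eq. (2).

```python
def disk_offsets(radius):
    """(dr, dc) offsets of a flat disk structuring element of the given radius."""
    return [(dr, dc)
            for dr in range(-radius, radius + 1)
            for dc in range(-radius, radius + 1)
            if dr * dr + dc * dc <= radius * radius]


def erode(img, radius):
    """Binary erosion: a pixel stays 1 only if every in-image pixel under the disk is 1.
    Neighbours that fall outside the image are ignored."""
    h, w, offs = len(img), len(img[0]), disk_offsets(radius)
    return [[1 if all(img[r + dr][c + dc] for dr, dc in offs
                      if 0 <= r + dr < h and 0 <= c + dc < w) else 0
             for c in range(w)] for r in range(h)]


def dilate(img, radius):
    """Binary dilation: a pixel becomes 1 if any in-image pixel under the disk is 1."""
    h, w, offs = len(img), len(img[0]), disk_offsets(radius)
    return [[1 if any(img[r + dr][c + dc] for dr, dc in offs
                      if 0 <= r + dr < h and 0 <= c + dc < w) else 0
             for c in range(w)] for r in range(h)]


def clean_stripe(binary, erode_radius=10, dilate_radius=30):
    """Erosion followed by dilation with disk elements (opening when radii are equal)."""
    return dilate(erode(binary, erode_radius), dilate_radius)


if __name__ == "__main__":
    # Toy 7 x 9 image: a 3-pixel-thick stripe (rows 2-4) plus two isolated noise pixels.
    img = [[1 if 2 <= r <= 4 else 0 for c in range(9)] for r in range(7)]
    img[0][0] = img[6][8] = 1
    for row in clean_stripe(img, erode_radius=1, dilate_radius=1):
        print("".join(".#"[v] for v in row))
```

On the toy image the isolated pixels vanish after erosion and the stripe is restored by dilation. The brute-force loops are slow for a radius of 30 on a full-size ROI; they are meant only to make the operation explicit.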
Figure 12 illustrates the position of the centerline (blue dotted line) and the left and right corner points regarded as weld seam points (yellow circles). The centerline lies midway between the upper and bottom edges of the laser image (white region). Once the centerline is extracted, the next step is to determine the coordinates of the two corner points.
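The definition of the centerline as the midpoint between the upper and bottom edges can be illustrated directly, column by column. This is not the method the paper uses (it applies a skeleton, as described next); it is a hypothetical helper that assumes the stripe crosses each column at most once, which roughly holds for a V whose arms are not vertical.

```python
def column_midpoints(binary):
    """Centerline as the midpoint between the upper and lower edge of the white
    stripe in each column; None where a column has no white pixel.
    Assumes the stripe crosses each column at most once."""
    h, w = len(binary), len(binary[0])
    centers = []
    for c in range(w):
        rows = [r for r in range(h) if binary[r][c]]
        # rows[0] is the upper edge, rows[-1] the lower edge of the stripe.
        centers.append((rows[0] + rows[-1]) / 2 if rows else None)
    return centers


if __name__ == "__main__":
    # Toy 6 x 5 image: stripe on rows 2-4 in columns 0-3, one extra pixel at (1, 1),
    # and an empty last column.
    img = [[1 if 2 <= r <= 4 and c <= 3 else 0 for c in range(5)] for r in range(6)]
    img[1][1] = 1
    print(column_midpoints(img))  # [3.0, 2.5, 3.0, 3.0, None]
```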

Obtaining the centerline of the binary image is important for realizing the main purpose of this research. Several studies have found that the skeleton algorithm in Matlab is an effective way to extract the centerline after the morphological process. It produces a 1-pixel-wide image from a 2-D binary image and requires less computation than local search [3, 24] and the line tracking method [26]. Figure 13a displays the result of the skeleton technique, and Fig. 13b shows the centerline successfully extracted from the binary image. The skeleton is computed directly with Matlab's built-in function.
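The text does not name the specific Matlab function, so the following sketch swaps in a well-known thinning method, the Zhang–Suen algorithm, as a stand-in for skeletonization. It is not claimed to match Matlab's output pixel for pixel; it only shows how a thick binary stripe is reduced to a 1-pixel-wide line. Pixels outside the image are treated as 0.

```python
def zhang_suen_thin(binary):
    """Thin a binary image (lists of 0/1) to a 1-pixel-wide skeleton using the
    Zhang-Suen algorithm. Pixels outside the image are treated as 0."""
    img = [list(row) for row in binary]          # work on a copy
    h, w = len(img), len(img[0])

    def px(r, c):
        return img[r][c] if 0 <= r < h and 0 <= c < w else 0

    def neighbours(r, c):
        # P2..P9: clockwise, starting from the pixel directly above (north).
        return [px(r - 1, c), px(r - 1, c + 1), px(r, c + 1), px(r + 1, c + 1),
                px(r + 1, c), px(r + 1, c - 1), px(r, c - 1), px(r - 1, c - 1)]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):                      # the two sub-iterations
            to_delete = []
            for r in range(h):
                for c in range(w):
                    if not img[r][c]:
                        continue
                    p = neighbours(r, c)
                    p2, _, p4, _, p6, _, p8, _ = p
                    b = sum(p)                   # number of white neighbours
                    # a = number of 0 -> 1 transitions around P2, P3, ..., P9, P2
                    a = sum(p[k] == 0 and p[(k + 1) % 8] == 1 for k in range(8))
                    if step == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_delete.append((r, c))
            for r, c in to_delete:               # delete simultaneously
                img[r][c] = 0
            changed = changed or bool(to_delete)
    return img


if __name__ == "__main__":
    # Toy 5 x 10 image with a 3-pixel-thick bar (rows 1-3, columns 1-8).
    bar = [[1 if 1 <= r <= 3 and 1 <= c <= 8 else 0 for c in range(10)] for r in range(5)]
    for row in zhang_suen_thin(bar):
        print("".join(".#"[v] for v in row))
```

For the toy bar, only row 2, columns 2 to 6, survives; thinning algorithms typically shorten the ends of a stripe slightly, which is one reason the extracted line may not reach the exact stripe ends.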

2.3 New approach to broken-line fitting

Centerline extraction produces a cluttered 1-D image, and this imperfection gives a jagged line along the V shape. Starting from the leftmost pixel, the white pixels should lie in the same row so that the precise location of the corner points (left and right) can be determined. However, the extracted line occupies several different rows across the columns, especially in the top line of the weld seam, which is the main subject of this research, resulting in breaks between adjacent pixels, as shown in Fig. 14. As a result, the extracted laser line is far from the ideal shape, and the corner points may deviate from their actual values. Therefore, a broken-line fitting method is proposed to reconnect the discontinuous lines into a straight line in order to facilitate the extraction of weld seam points. Each pixel in a binary image is specified by its row and column coordinates. Using information from the 1-D image, and without complicated calculations, this work only considers the binary pixel values ‘1’ (white pixels) and ‘0’ (black pixels). Since the top line of the laser on the weld seam is horizontal, the approach searches for white pixels row by row across the columns, beginning at the top left of the image, where the ROI origin \((X_{ROI}, Y_{ROI})\) is located. After the search ends at the last row and column of the image (bottom right), the number of white pixels in each row is counted. The row with the maximum number of white pixels is called the reference row, and it serves as an indicator for the line fitting.

Line fitting on the top of the weld seam is done by finding the row with the most pixels ‘1’, assuming that the line on the top of the weld seam is the longest line among all rows. The search starts from the leftmost pixel and follows the line until the distance between the current pixel and the reference row exceeds a set threshold, beyond which no line is detected; it then continues until the rightmost pixel. Overall, the proposed local search method consists of three main steps: (a) local search, (b) reference row determination, and (c) reference line detection. The overall process of broken-line fitting and pixel location determination is shown in Fig. 15, and the steps are detailed as follows.

(a) Local search

As illustrated in Fig. 16a, the operation starts at the top left of the first row, moves horizontally across the columns, and repeats until the last row and last column (bottom right). Figure 16b shows the representation of black pixels as ‘0’ and white pixels as ‘1’. Since the operation starts at the topmost part of the image, the algorithm checks each pixel value in turn. If all pixels along a row are ‘0’, no row index is recorded and the entry is stored as ‘0’; if the algorithm finds a pixel ‘1’ in a row, that row index is stored as ‘R’.

(b) Reference row determination

The next step is to count the white pixels, with the main purpose of building a reference line. The white pixels in each row recorded as ‘R’ are counted, and this is repeated until the last row. After counting, the row with the highest number of pixels ‘1’ becomes the reference row. The reference row is used to detect the reference line, which in turn is what allows the broken lines to be fitted. The reference line is detected wherever the distance condition is met. Figure 17 illustrates white lines based on the row indexes (R1 to R6) and columns (C1 to C12) of the image. For example, R2 is an ordinary row like R1, R3, R4, R5, and R6, but it is designated the reference row because it contains the highest number of white pixels. The reference row gives the y-axis value of the corner points, while their x-axis values differ between the left and right corners.

(c) Reference line detection

Once the reference row has been identified, the next step is to construct the reference line along it wherever the distance requirement is met. Using the distance between rows, if the distance between the reference row and a white pixel does not exceed a predetermined threshold, the reference line is detected; otherwise, no line is detected. As shown in Fig. 17, the white pixels from C1 to C3 and C6 to C7 lie on the reference row, so the reference line is detected there (red line in Fig. 18). The white pixels in C4, C5, C8, C9, and C10 are not on the reference row but on R1, R3, and R4; because they are still within the threshold distance, the reference line is detected there as well. In contrast, the white pixels in C11 and C12 lie farther from the reference row than the threshold, so no reference line is detected there. The process continues until the rightmost pixel. In this way, the reference line is constructed on both the left and right sides of the laser line, which makes the proposed local search approach useful for extracting the positions of the weld seam points at the left and right corners.
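Steps (a) to (c) can be sketched as three small functions operating on a 1-pixel-wide binary centerline. The function names, the topmost-row tie-break, and the toy image are assumptions for illustration; the toy image only mimics the kind of layout described for Fig. 17 and is not taken from the figure.

```python
def local_search(binary):
    """Step (a): scan from the top-left pixel row by row and record the index of
    every row that contains at least one white pixel (the set 'R')."""
    return [r for r, row in enumerate(binary) if any(row)]


def reference_row(binary):
    """Step (b): the row in R with the most white pixels.
    Ties are resolved in favour of the topmost row (an arbitrary choice)."""
    rows = local_search(binary)
    if not rows:
        raise ValueError("image contains no white pixels")
    return max(rows, key=lambda r: (sum(binary[r]), -r))


def reference_line(binary, threshold):
    """Step (c): a column belongs to the reference line when one of its white
    pixels lies within `threshold` rows of the reference row.
    Returns (ref_row, mask) with mask[c] True for columns on the line."""
    ref = reference_row(binary)
    h, w = len(binary), len(binary[0])
    mask = []
    for c in range(w):
        dists = [abs(r - ref) for r in range(h) if binary[r][c]]
        mask.append(bool(dists) and min(dists) <= threshold)
    return ref, mask


if __name__ == "__main__":
    # Hypothetical 6 x 12 centerline (rows R1-R6 -> 0-5, columns C1-C12 -> 0-11).
    pixels = [(1, 0), (1, 1), (1, 2), (0, 3), (2, 4), (1, 5), (1, 6),
              (2, 7), (2, 8), (3, 9), (5, 10), (5, 11)]
    img = [[0] * 12 for _ in range(6)]
    for r, c in pixels:
        img[r][c] = 1
    ref, mask = reference_line(img, threshold=2)
    print(ref)                                          # 1, i.e. R2
    print([c + 1 for c, on in enumerate(mask) if on])   # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

The fitted straight line is then the set of pixels `(ref, c)` for every column `c` where `mask[c]` is true, which replaces the jagged pixels near the reference row.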

2.4 Weld seam pixel location determination

The previous step constructs a straight line from a broken line. With the proposed approach, the reference line that starts from the leftmost pixel stops at the left corner of the laser line because of the threshold. The process continues until it again finds a pixel that meets the distance requirement (less than the threshold); this pixel is at the right corner of the laser line. The next step is to extract the pixel locations of the left and right corner points. These corner points reflect the geometric characteristics of the V-groove and lie at the corners of the centerline. Therefore, this paper designs a scheme based on a qualitative description that defines three main parameters, region, direction, and indicator, to generate the weld seam pixel coordinates. First, following the V geometry, the V is divided into three regions, \(R_a\), \(R_b\), and \(R_c\). \(R_a\) and \(R_c\) are straight-line regions (same direction), while \(R_b\) is a curve region, so called because it marks a change from one straight line to another straight line in a different direction. The three regions are connected by sudden changes from \(R_a\) to \(R_b\) and from \(R_b\) to \(R_c\) (red arrows), as illustrated in Fig. 19. Next, to express the relationship between the regions and the sudden changes in direction, another parameter, the indicator, is defined; it determines whether a change is sudden or not. Three indicator values are used in this work: 0, 1, and 2. According to Fig. 19, \(R_a\) and \(R_c\) are straight lines with the indicator set to 0, while \(R_b\) has two indicators (1 and 2). Indicator 1 denotes the abrupt change from a straight line to a curve, and indicator 2 denotes the sudden change from a curve to a straight line.
Finally, the threshold and the indicators are related in sequence to determine the weld seam points; the steps are shown in Fig. 20.
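One possible encoding of the region and indicator rule is sketched below, assuming the input is the per-column reference-line mask from the broken-line fitting (true where a column lies within the row-distance threshold) together with the reference row index. The exact sequence in Fig. 20 is not reproduced in the text, so the function `weld_seam_points` is a hypothetical reading: the last \(R_a\) column before the sudden change receives indicator 1 (left corner), and the first \(R_c\) column after \(R_b\) receives indicator 2 (right corner).

```python
def weld_seam_points(mask, ref_row):
    """Hypothetical encoding of the region/indicator rule on a reference-line mask.

    Indicator 0: column on a straight region (R_a or R_c).
    Indicator 1: last R_a column before the sudden change into R_b (left corner).
    Indicator 2: first R_c column after R_b (right corner).
    Returns ((x_left, y), (x_right, y)); y is the reference row for both corners.
    """
    left = right = None
    seen_ra = False
    for c, on_line in enumerate(mask):
        if left is None:
            if on_line:
                seen_ra = True                  # still inside R_a (indicator 0)
            elif seen_ra:
                left = c - 1                    # indicator 1: straight -> curve
        elif on_line:
            right = c                           # indicator 2: curve -> straight
            break
    if left is None or right is None:
        raise ValueError("both corners were not found")
    return (left, ref_row), (right, ref_row)


if __name__ == "__main__":
    # Hypothetical mask: R_a = columns 0-2, R_b = columns 3-6, R_c = columns 7-9.
    mask = [True] * 3 + [False] * 4 + [True] * 3
    print(weld_seam_points(mask, ref_row=66))  # ((2, 66), (7, 66))
```

Both corners share the reference row as their y-coordinate, which matches the observation in the results that the left and right corner points have the same y value.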

3 Results

3.1 Experimental configuration

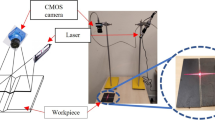

The proposed system consists of a CMOS industrial camera, a Z-laser light source, and a computer. The camera is mounted beside the laser on a fixed stand, perpendicular to the workpiece, and the laser is tilted at a certain angle to project its light onto the workpiece. The distance between the camera and the laser stand is 433 mm, and the height is 533 mm, as depicted in Fig. 21a. Raw data are captured with The ImagingSource CMOS camera at 6 frames per second and 5 MP resolution. To maintain precision, the workpiece is placed beneath the camera, and all images are captured at the same height and distance. To obtain samples at each position, the workpiece was moved manually in the transverse direction. This work considers two line types, a straight line and a semicircular line, as shown in Fig. 21b and c, respectively. The figures also show the positions of the weld seam points and the width between the left and right corners. For the straight line, there are two workpieces of different thicknesses, 6 mm and 9 mm. In total, three workpieces were used in this research, two for the straight line type and one for the semicircle line type. The camera brightness and environmental lighting were also adjusted for raw data collection during image acquisition.

3.2 Identification of pixel coordinate

Compared with the laser reflection in [9], the laser reflection in this work is weak when the laser is pointed at the metal workpiece. The proposed method is therefore advantageous in that it can be applied to strongly distributed noise, possibly caused by low laser quality. Figure 22 displays the pixel coordinate result for the straight line type in (x–y) as two blue dotted circles at the corners. As Fig. 22 shows, the y-axis value is the same for both the left and right corner points, because it refers to the reference row, which is 66 for this sample. The 2D pixel coordinate of the left corner is therefore (138, 66), and that of the right corner is (451, 66). These feature points can be regarded as weld seam points because they lie on the weld seam line. Figure 23 shows the image processing results for the semicircle line type, and Fig. 24 depicts its 2D pixel coordinates, (258, 69) for the left corner and (770, 69) for the right corner, with the reference row at 69.

3.3 Position error and width matching results

This work involves laser detection using a CMOS camera. Consequently, it is crucial to use an image processing method to extract the centerline of the laser. Additionally, since there is no contact with the welding robot in this study, the primary goal is to obtain the pixel position in 2D coordinate without converting to mm unit. From the pixel coordinate results, there are two measurements that can be done: (1) position error and (2) width matching. The position error is purposely to determine how much the pixel values in the x-axis and y-axis produced from the proposed approach deviate from the actual value. All the errors are display in (x,y) for both corner points as shown in Fig. 25, while the coordinate values for this position error determination can be referred to in Tables 1, 2, and 3. The width between the left and right corner points is measured to verify the effectiveness of the proposed approach by determining the accuracy of the proposed method. To determine the width, the y-axis value can be ignored because it is a reference row that has the same value on both corners. Therefore, the width can be found by considering the x-axis only at the left and right corners. For the analysis, the workpieces for the straight line type are divided into 2 categories of thickness, which are 6 mm and 9 mm, and there are 5 samples for each category. While for the semicircle, the thickness is only 9 mm with 10 samples. Due to the thickness of the laser and its scattering effect, the output value will not be the same as the actual value. However, the difference must not be too large since it is used to measure the effectiveness of the proposed approach. To obtain the actual value, the human eye observation method is employed. As shown in Tables 1, 2, and 3, the 2D coordinates (x, y) for the left and right corners of the workpiece having a straight line with a thickness of 6 mm and 9 mm, respectively, and a semicircular line have been successfully extracted. 
From the left and right corner values, the tables also give the width (in pixels) for both the proposed and the actual values. The proposed values are obtained experimentally and the actual values manually. The differences between them are easier to see when plotted, as shown in Figs. 26 and 27.

According to Fig. 27, the y-axis position error is small in most samples, within 0 to 3 pixels, for both the left and right corner points. For the straight line with 9 mm thickness, sample 2 has the largest x-axis error, about 14 pixels at the left corner, and a smaller x-axis error of 2 pixels at the right corner. For the 6 mm thickness, sample 2 likewise has the largest error: (10, 2) at the left corner and (5, 2) at the right corner. The best pixel-coordinate performance in the straight-line case is obtained for sample 5, which shows only slight errors in both the x and y coordinates. In the semicircle case, the x-axis position error is larger for most samples. Most errors lie between 5 and 17 pixels at the left corner point and between 1 and 11 pixels at the right corner point. Figures 26 and 28 show that the width-matching results of the proposed method (red line) follow the actual values (green line) with a relatively uniform fluctuation trend. The agreement is similar for the straight-line and semicircle cases.

This work uses a pixel accuracy measure to quantify width matching, based on the width values in Tables 1, 2, and 3. The width-matching accuracy is the ratio of the total width of the proposed values to the total width of the actual values. Overall, the accuracy is 98.21% for straight lines of 9 mm thickness, 96.21% for straight lines of 6 mm thickness, and 97.17% for semicircles, which supports the effectiveness of the proposed approach.
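The width-matching accuracy described above is a single ratio, A = 100% × ΣW_proposed / ΣW_actual. The Python sketch below computes it. The function name `width_matching_accuracy` is hypothetical, and the widths are invented rather than taken from Tables 1, 2, and 3. The sketch assumes the ratio is taken as proposed over actual, which is how the text phrases it. If a proposed total can exceed the actual total, a smaller-over-larger ratio would keep the figure at or below 100%. That variant is not stated in the source.

```python
def width_matching_accuracy(proposed: list[int], actual: list[int]) -> float:
    """Width-matching accuracy in percent: summed proposed widths over summed actual widths.

    Assumption: the ratio is proposed/actual, as the text describes it.
    """
    if len(proposed) != len(actual) or not actual:
        raise ValueError("need one proposed and one actual width per sample")
    return 100.0 * sum(proposed) / sum(actual)


# Hypothetical widths in pixels for five samples (not values from Tables 1-3).
proposed_widths = [106, 98, 101, 95, 100]
actual_widths = [111, 100, 103, 97, 100]
print(f"{width_matching_accuracy(proposed_widths, actual_widths):.2f}%")  # 97.85%
```

Summing before dividing weights each sample by its width. An alternative design would average the per-sample ratios, which gives every sample equal weight.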

4 Conclusions

A new method for broken-line fitting and weld seam point extraction was presented. The pixel locations of the two corner weld seam points on the weld seam path were successfully identified. For the V-type butt-groove configuration studied here, the method comprises three main stages: weld seam extraction, broken-line fitting, and pixel location determination. A broken-line fitting method was introduced to detect a reference line. Its main goal is to stabilize the extracted lines and make them continuous so that they can serve as the reference line. This reference line guides the tracking of pixel columns using a row distance threshold. The weld seam position is determined by two corner points, one at the left corner and one at the right corner. A qualitative description and an indicator rule were proposed to identify their pixel locations. The proposed method was compared with the actual values. The experimental results showed only small mismatch errors in the coordinates and width-matching accuracies between 96.21% and 98.21%, and the method also operated with low-quality lasers. The main contributions of this paper are as follows:

(1) Few earlier studies have addressed the noise around the boundary of a laser binary image, which may come from a low-quality laser. A morphological opening operation (erosion followed by dilation) can effectively remove this noise, after which the centerline can be extracted using the skeleton technique.

(2) Although the extracted centerline contains discontinuities, the proposed method can connect the broken segments by observing the reference row.

(3) Instead of slope analysis or corner detection methods, this paper proposed a method based on the V-shape line orientation and an indicator rule to extract the weld seam’s feature points.
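Contribution (1) combines two standard image operations. The dependency-free Python sketch below first applies a morphological opening (erosion, then dilation) with a 3 × 3 square structuring element. It then thins the result to a one-pixel skeleton. The paper says only that a skeleton technique was used. The structuring-element size and the choice of the Zhang–Suen thinning algorithm are therefore assumptions, and the test image is invented.

```python
# Binary image as a list of rows: 1 = laser pixel, 0 = background.
Image = list[list[int]]


def erode(img: Image) -> Image:
    """3x3 square erosion: a pixel survives only if its whole neighbourhood is set.

    Border pixels are cleared (the outside is treated as background).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = int(all(img[r + dr][c + dc]
                                for dr in (-1, 0, 1) for dc in (-1, 0, 1)))
    return out


def dilate(img: Image) -> Image:
    """3x3 square dilation: a pixel is set if any neighbour inside the image is set."""
    h, w = len(img), len(img[0])
    return [[int(any(img[rr][cc]
                     for rr in range(max(r - 1, 0), min(r + 2, h))
                     for cc in range(max(c - 1, 0), min(c + 2, w))))
             for c in range(w)] for r in range(h)]


def opening(img: Image) -> Image:
    """Opening = erosion followed by dilation; removes specks thinner than the 3x3 element."""
    return dilate(erode(img))


def zhang_suen_thin(img: Image) -> Image:
    """Thin a binary image to a one-pixel-wide skeleton with Zhang-Suen's two sub-iterations."""
    sk = [row[:] for row in img]
    h, w = len(sk), len(sk[0])
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for r in range(1, h - 1):
                for c in range(1, w - 1):
                    if not sk[r][c]:
                        continue
                    # Neighbours P2..P9, clockwise starting from north.
                    p = [sk[r - 1][c], sk[r - 1][c + 1], sk[r][c + 1], sk[r + 1][c + 1],
                         sk[r + 1][c], sk[r + 1][c - 1], sk[r][c - 1], sk[r - 1][c - 1]]
                    b = sum(p)  # number of set neighbours
                    a = sum(p[i] == 0 and p[(i + 1) % 8] == 1 for i in range(8))  # 0->1 transitions
                    if not (2 <= b <= 6 and a == 1):
                        continue
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0 and p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0:
                        to_clear.append((r, c))
                    elif step == 1 and p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0:
                        to_clear.append((r, c))
            for r, c in to_clear:  # delete in parallel after scanning
                sk[r][c] = 0
            changed = changed or bool(to_clear)
    return sk


# Hypothetical 9x20 image: a 3-pixel-thick horizontal stripe plus one noise speck.
img = [[0] * 20 for _ in range(9)]
for r in (3, 4, 5):
    for c in range(2, 18):
        img[r][c] = 1
img[7][10] = 1  # isolated noise pixel

clean = opening(img)
skeleton = zhang_suen_thin(clean)
print(clean[7][10])  # 0: the speck is removed
print(sorted({r for r, row in enumerate(skeleton) for v in row if v}))  # [4]
```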
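Contributions (2) and (3) rely on a reference row and a row distance threshold. The Python sketch below is a hypothetical illustration only and is not the authors' broken-line fitting or indicator rule. It bridges gaps in a per-column centerline by linear interpolation and takes the most frequent row as the reference row. It then places the left and right corners at the last reference-row columns before and after the groove, that is, where the centerline departs from the reference row by more than the threshold. The function names, the V-groove profile and the threshold value are all invented.

```python
from collections import Counter
from typing import Optional


def bridge_gaps(rows: list[Optional[int]]) -> list[int]:
    """Fill missing centerline rows (None) by linear interpolation between known columns."""
    known = [i for i, r in enumerate(rows) if r is not None]
    if not known:
        raise ValueError("centerline has no valid columns")
    filled = list(rows)
    for i in range(len(rows)):
        if filled[i] is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:      # gap at the start: copy the first known row
            filled[i] = rows[right]
        elif right is None:   # gap at the end: copy the last known row
            filled[i] = rows[left]
        else:
            t = (i - left) / (right - left)
            filled[i] = round(rows[left] + t * (rows[right] - rows[left]))
    return filled


def find_corners(rows: list[int], threshold: int) -> tuple[tuple[int, int], tuple[int, int]]:
    """Hypothetical corner rule: ((x_left, y_ref), (x_right, y_ref)) around the groove."""
    ref = Counter(rows).most_common(1)[0][0]  # flat plate surface dominates the profile
    off = [x for x, y in enumerate(rows) if abs(y - ref) > threshold]
    if not off:
        raise ValueError("no groove found for this threshold")
    x_left, x_right = max(off[0] - 1, 0), min(off[-1] + 1, len(rows) - 1)
    return (x_left, ref), (x_right, ref)


# Hypothetical V-groove profile: flat at row 50, dipping to row 60 in the groove.
profile = [50] * 10 + [52, 54, 56, 58, 60, 58, 56, 54, 52] + [50] * 11
broken = list(profile)
for x in (4, 12, 13):  # simulate discontinuities in the skeleton
    broken[x] = None

line = bridge_gaps(broken)
print(line == profile)                  # True
print(find_corners(line, threshold=1))  # ((9, 50), (19, 50))
```

Because both corners share the reference row, their x-values give the seam width directly (10 pixels here).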

References

Wu J, Smith JS, Lucas J (1996) Weld bead placement system for multipass welding. IEE Proc Sci Meas Technol 143:85–90. https://doi.org/10.1049/ip-smt:19960163

Naji OAAM, Shah HNM, Anwar NSN, Johan NF (2020) Advances in visual sensor based on laser structured light and its application for robotic welding. Int J Adv Trends Comput Sci Eng 9:9146–9154. https://doi.org/10.30534/ijatcse/2020/322952020

Zou Y, Zhou W (2019) Automatic seam detection and tracking system for robots based on laser vision. Mechatronics 63:102261. https://doi.org/10.1016/j.mechatronics.2019.102261

Fan J, Jing F, Yang L, Teng L, Tan M (2019) A precise initial weld point guiding method of micro-gap weld based on structured light vision sensor. IEEE Sens J 19:322–331. https://doi.org/10.1109/JSEN.2018.2876144

Wu QQ, Lee JP, Park MH, Jin BJ, Kim DH, Park CK, Kim IS (2015) A study on the modified Hough algorithm for image processing in weld seam tracking. J Mech Sci Technol 29:4859–4865. https://doi.org/10.1007/s12206-015-1033-x

Wang N, Zhong K, Shi X, Zhang X (2020) A robust weld seam recognition method under heavy noise based on structured-light vision. Robot Comput Integr Manuf 61:101821. https://doi.org/10.1016/j.rcim.2019.101821

Rout A, Deepak BBVL, Biswal BB (2018) Advances in weld seam tracking techniques for robotic welding: a review. Robot Comput Integr Manuf 56:12–37. https://doi.org/10.1016/j.rcim.2018.08.003

Li X, Li X, Khyam MO, Ge SS (2017) Robust welding seam tracking and recognition. IEEE Sens J 17:5609–5617. https://doi.org/10.1109/JSEN.2017.2730280

Muhammad J, Altun H, Abo-Serie E (2018) A robust butt welding seam finding technique for intelligent robotic welding system using active laser vision. Int J Adv Manuf Technol 94:13–29. https://doi.org/10.1007/s00170-016-9481-8

Hou Z, Xu Y, Xiao R, Chen S (2020) A teaching-free welding method based on laser visual sensing system in robotic GMAW. Int J Adv Manuf Technol 109:1755–1774. https://doi.org/10.1007/s00170-020-05774-0

Xue K, Wang Z, Shen J, Hu S, Zhen Y, Liu J, Wu D, Yang H (2019) Robotic seam tracking system based on vision sensing and human-machine interaction for multi-pass MAG welding. J Manuf Process 63:48–59. https://doi.org/10.1016/j.jmapro.2020.02.026

Tian YZ, Liu HF, Li L, Wang WB, Feng JC, Xi FF, Yuan GJ (2020) Robust identification of weld seam based on region of interest operation. Adv Manuf 8:473–485. https://doi.org/10.1007/s40436-020-00325-y

Yu D, Liao Q (2018) An algorithm for feature extraction of weld groove based on laser vision. Proc 2018 IEEE 3rd Adv Inf Technol Electron Autom Control Conf IAEAC 2018:1538–1542. https://doi.org/10.1109/IAEAC.2018.8577682

Lu J, Yang A, Chen X, Xu X, Lv R, Zhao Z (2022) A seam tracking method based on an image segmentation deep convolutional neural network. Metals (Basel) 12. https://doi.org/10.3390/met12081365

Shao WJ, Huang Y, Zhang Y (2018) A novel weld seam detection method for space weld seam of narrow butt joint in laser welding. Opt Laser Technol 99:9–51. https://doi.org/10.1016/j.optlastec.2017.09.037

Shah HNM, Sulaiman M, Shukor AZ, Kamis Z, Rahman AA (2017) Butt welding joints recognition and location identification by using local thresholding. Robot Comput Integr Manuf 51:181–188. https://doi.org/10.1016/j.rcim.2017.12.007

Shi X, Sun Y, Liu H, Bai L, Lin C (2021) Research on laser stripe characteristics and center extraction algorithm for desktop laser scanner. SN Appl Sci 3:1–12. https://doi.org/10.1007/s42452-021-04309-w

Muhammad J, Altun H, Abo-Serie E (2017) Welding seam profiling techniques based on active vision sensing for intelligent robotic welding. Int J Adv Manuf Technol 88:127–145. https://doi.org/10.1007/s00170-016-8707-0

Ye H, Liu Y, Liu W (2021) Weld seam tracking based on laser imaging binary image preprocessing. IEEE Adv Inf Technol Electron Autom Control Conf 2021:756–760. https://doi.org/10.1109/IAEAC50856.2021.9390791

Xiao R, Xu Y, Hou Z, Chen C, Chen S (2019) An adaptive feature extraction algorithm for multiple typical seam tracking based on vision sensor in robotic arc welding. Sensors Actuators, A Phys 297:111533. https://doi.org/10.1016/j.sna.2019.111533

Kiddee P, Fang Z, Tan M (2017) A geometry based feature detection method of V-groove weld seams for thick plate welding robots. Proc 2017 2nd Int Conf Control Robot Eng ICCRE 2017:43–48. https://doi.org/10.1109/ICCRE.2017.7935039

Wang Z, Jing F, Fan J (2018) Weld seam type recognition system based on structured light vision and ensemble learning. Proc 2018 IEEE Int Conf Mechatronics Autom ICMA 2018:866–871. https://doi.org/10.1109/ICMA.2018.8484570

Lu X, Gu D, Wang Y, Qu Y, Qin C, Huang F (2018) Feature extraction of welding seam image based on laser vision. IEEE Sens J 18:4715–4724. https://doi.org/10.1109/JSEN.2018.2824660

Cibicik A, Tingelstad L, Egeland O (2021) Laser scanning and parametrization of weld grooves with reflective surfaces. Sensors 203:47. https://doi.org/10.1016/s0262-4079(09)62497-0

Li W, Mei F, Hu Z, Gao X, Yu H, AldeenHousein A, Wei C (2022) Multiple weld seam laser vision recognition method based on the IPCE algorithm. Opt Laser Technol 155:108388. https://doi.org/10.1016/j.optlastec.2022.108388

Said KAM, Jambek AB, Sulaiman N (2016) A study of image processing using morphological opening and closing processes. Int J Control Theory Appl 9:15–21

Batchelor BG (2012) Machine vision handbook. Springer, pp 803–867. https://doi.org/10.1007/978-1-84996-169-1

Funding

The authors are grateful for the support granted by the Center for Robotics and Industrial Automation, Universiti Teknikal Malaysia Melaka (UTeM) in conducting this research through grant RACER/2019/FKE-CeRIA/F00399 and the Ministry of Higher Education.

Ethics declarations

Ethics approval

All professional ethical standards have been followed.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Johan, N.F., Mohd Shah, H.N., Sulaiman, M. et al. Weld seam feature point extraction using laser and vision sensor. Int J Adv Manuf Technol 127, 5155–5170 (2023). https://doi.org/10.1007/s00170-023-11776-5

DOI: https://doi.org/10.1007/s00170-023-11776-5