Abstract

At present, most groove cutting robots in practical production are still of the “teaching and playback” type, which cannot meet the requirements of quality and diversification. To make robotic groove cutting more flexible, the robot cutting path must be generated automatically regardless of how the workpiece is placed. This paper describes the automatic measurement of free-form workpieces by the cutting robot and the procedure for acquiring 3D data of the workpiece edge with a laser vision sensor. The geometric model of the workpiece contour is built from the sequential 3D data. Once the geometric model of the workpiece is determined, the path of the cutting robot is generated automatically. Experiments show that the proposed method performs successfully.

1 Introduction

Nowadays, welding technology is developing rapidly in industry. As a preparatory step for welding, groove cutting is attracting increasing attention. In modern manufacturing, workpieces of many shapes must be cut, for instance rectangular, round and irregular ones. There are currently three ways to cut grooves on plane workpieces: manual groove cutting, which relies entirely on workers; semi-automatic groove cutting, which relies on simple machine tools; and CNC groove cutting, which supports automatic programming.

Manual and semi-automatic groove cutting are traditional cutting methods. First, specific parts are cut from the steel and then processed two or three times by an edge planing machine, a groove-cutting robot and a small cutting machine. After these steps, the welding groove is complete. This method has many weaknesses, for example a complicated working procedure, high labor intensity and severe waste of steel. Therefore, the market urgently demands a groove cutting robot that is highly efficient, simple to operate and highly stable.

Automatic CNC groove cutting is performed by a cutting robot with a high degree of intelligence and automation. Workers only need to complete simple programming and enter parameters. The cutting robot can plan the cutting path automatically using advanced nesting software, which achieves high-precision, high-efficiency automatic cutting [1]. However, most cutting robots in practical production are still of the “teaching and playback” type, and they cannot meet the requirements of quality and diversification because they cannot adapt to changing circumstances and uncertain disturbances during the cutting process [2, 3]. Moreover, a groove cutting robot with nesting software often cuts the workpieces and their grooves in one pass from a whole plate. This approach improves production efficiency; nevertheless, it wastes steel.

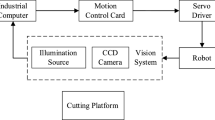

To overcome or mitigate these shortcomings, developing and improving intelligent technologies for cutting robots is an effective approach. After analyzing the characteristics of the various cutting methods, a machine vision system is adopted to address these problems and achieve automation. In this study, computer vision is applied in a closed-loop system to control the robot movement in real time.

2 System Configuration

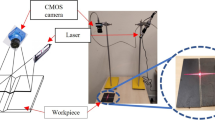

The vision-based groove cutting robot system includes a five-axis gantry structure robot, a laser vision sensor and a control computer. Figure 1 shows the set-up of the five-axis gantry structure robot. It consists of three Cartesian axes \( (X,Y,Z) \) and two rotary axes \( (U,V) \) to control the torch orientation for groove cutting.

The laser vision sensor is attached to the Z-axis. It is composed of a CCD camera, two laser generators and a white light-emitting diode (LED) light source, as shown in Fig. 2 [4]. The structured light generated by the cylindrical lens is projected onto the workpiece. The camera captures images of the workpiece edge and sends them to the image acquisition card. The images are then processed to detect the workpiece edge and the intersection between the workpiece edge and the structured light in the image coordinate system. The 3D data of the workpiece edge are computed from this intersection using the triangulation principle. After an accurate calibration between the laser and the CCD, the 3D data acquired from the sensor can be expressed in the robot coordinate frame. After one lap around the workpiece edge, sequential 3D data are obtained, and the geometric model of the workpiece contour is built from them [5].

3 Tracking Strategy and Image Processing

3.1 Tracking Strategy

In groove cutting, the 3D contour information of the workpiece must be acquired before cutting. Therefore, controlling the robot so that it tracks the workpiece edge is the primary problem for the visual system.

Because the shape and the dimensional position of the workpiece are uncertain, the camera must track the workpiece edge automatically in order to collect the 3D contour information. Owing to lens distortion, a point in the image coordinate system carries an error, and the error is proportional to the distance between the point and the image center: the farther the point, the greater the error. The tracking process must therefore achieve the following two goals:

(a) The robot is controlled to run along the workpiece edge, and the main goal is to collect the 3D information of the workpiece contour.

(b) The center of the image is always on the workpiece edge to ensure accuracy.

The schematic of edge tracking is shown in Fig. 3. The camera motion is equivalent to the motion of the optical center, i.e. the center point O. To achieve the above goals, the point to be reached in the next step is either M or N. In this study, the robot is designed to run clockwise around the workpiece edge. In Fig. 3 the motion trajectory is A → B → C → D → A, so the next point is M.

3.2 Image Processing

The next step of the robot is defined as the distance from the image center to the intersection between the workpiece edge and one of the image borders, chosen according to the running direction, as shown in Fig. 4. The image in Fig. 4 was captured from an edge of a workpiece model using the CCD under white LED illumination. For tracking, the next step is OM, where \( \Delta x \) is the distance along the X-axis and \( \Delta y \) is the distance along the Y-axis. For the structured light, T is the target point used to calculate the 3D information of the workpiece edge. The goal of image processing is therefore to obtain the coordinates of the points M and T.

To suppress noise and improve the inspection results, processing is restricted to a defined region of interest (ROI). The white rectangle in Fig. 4 marks the ROI. The center of the ROI coincides with the center of the original image. The ROI contains both workpiece edge information and structured light information.

The image processing sequence for obtaining the workpiece edge is: color space conversion, median filtering, threshold segmentation (using the Otsu method), edge detection with the Canny operator, line detection with the Hough transform, line classification and target point determination. The sequence for obtaining the structured light is similar: color space conversion, median filtering, threshold segmentation (global threshold), detection of the structured light with the Hough transform, line classification and target point determination. The image processing results for the workpiece edge and the structured light are shown in Figs. 5 and 6.
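As an illustration of the threshold segmentation step, Otsu's method selects the gray level that maximizes the between-class variance of the histogram. The following is a minimal pure-Python sketch of that idea, not the implementation used in this work (which relied on OpenCV); the function name `otsu_threshold` and the flat list of 8-bit pixel values are assumptions made for the example.

```python
def otsu_threshold(pixels):
    """Return the gray level t (0-255) that maximizes between-class variance.

    Pixels with value <= t form one class, the remaining pixels the other.
    `pixels` is a flat sequence of integers in the range 0..255.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # pixel count of the lower class so far
    sum0 = 0  # sum of gray levels in the lower class so far
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break             # upper class empty, nothing left to split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Hypothetical ROI with a dark background and a bright workpiece.
roi = [10] * 50 + [200] * 50
t = otsu_threshold(roi)
binary = [1 if p > t else 0 for p in roi]
```

The binary image produced this way would then be passed to edge detection and line detection.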

The Hough transform is used to detect the workpiece edge in the form of lines. Line classification distinguishes two cases: one line and two lines. One line means that the robot is located on a straight edge of the workpiece, and two lines mean that the robot is at a corner of the workpiece. Finally, the line information and the running direction of the robot are used to obtain the target point.
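The one-line versus two-line distinction can be sketched by grouping the detected Hough lines by orientation. The grouping criterion, the 10° tolerance and the names `angle_diff` and `classify_lines` are assumptions for illustration; the text does not specify how the classification is actually performed.

```python
import math


def angle_diff(t1, t2):
    """Smallest difference between two line orientations, in radians."""
    d = abs(t1 - t2) % math.pi
    return min(d, math.pi - d)


def classify_lines(lines, tol=math.radians(10)):
    """Group Hough lines (rho, theta) by orientation.

    Returns ("edge", groups) when one orientation is present,
    ("corner", groups) when two are present, and ("unknown", groups)
    otherwise.
    """
    groups = []
    for rho, theta in lines:
        for group in groups:
            # Compare against the first line of each existing group.
            if angle_diff(theta, group[0][1]) < tol:
                group.append((rho, theta))
                break
        else:
            groups.append([(rho, theta)])
    label = {1: "edge", 2: "corner"}.get(len(groups), "unknown")
    return label, groups


# Hypothetical detections: two nearly identical lines, then a perpendicular pair.
on_edge, _ = classify_lines([(100.0, 0.01), (101.0, 0.0)])
at_corner, _ = classify_lines([(100.0, 0.0), (80.0, math.pi / 2)])
```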

In this study, dual-beam structured light is adopted. The images of the two structured light stripes have obvious characteristics. One stripe is parallel to the x-axis of the image coordinate system; the other is parallel to the y-axis. In addition, the intersection of the two stripes ideally lies at the image center. Given these features, the structured light lines can be obtained easily with the Hough transform. Once the structured light lines are known, the intersection between the structured light and the workpiece edge can be computed.
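Once both the stripe and the workpiece edge are available as Hough lines, their intersection follows from solving two linear equations. The sketch below assumes the usual normal form \( x\cos\theta + y\sin\theta = \rho \) for each line; the function name `intersect` and the pixel values are hypothetical.

```python
import math


def intersect(line_a, line_b, eps=1e-9):
    """Intersect two lines given in Hough normal form (rho, theta).

    Each line satisfies x*cos(theta) + y*sin(theta) = rho.
    Returns (x, y), or None when the lines are (nearly) parallel.
    """
    r1, t1 = line_a
    r2, t2 = line_b
    det = math.cos(t1) * math.sin(t2) - math.sin(t1) * math.cos(t2)
    if abs(det) < eps:
        return None
    x = (r1 * math.sin(t2) - r2 * math.sin(t1)) / det
    y = (r2 * math.cos(t1) - r1 * math.cos(t2)) / det
    return x, y


# Hypothetical values: a stripe parallel to the image x-axis at y = 240
# and a vertical workpiece edge at x = 300.
stripe = (240.0, math.pi / 2)
edge = (300.0, 0.0)
target_t = intersect(edge, stripe)
```

In this example `target_t` lies at approximately (300, 240) in pixel coordinates, which would play the role of the target point T.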

4 Calibrations

4.1 Standard Transformation Formula for Tracking

To convert a distance in the image coordinate system to one in the robot coordinate system, a transformation formula is needed in advance. A calibration board with two parallel lines, as shown in Fig. 7, is used in this paper. In this visual system, the image frame is parallel to the camera frame, and the camera frame is parallel to the robot frame.

The two lines in Fig. 7a are parallel to the X-axis and the lines in Fig. 7b are parallel to the Y-axis of the image coordinate system. To obtain a more accurate result, the midpoint between the two lines is placed in a small area near the image center. The transformation formula is given by Eq. (1) and holds when the robot operates at a fixed height. In this paper the height is 120 mm.

where \( \Delta RobotX \) and \( \Delta RobotY \) are the displacements along the x-axis and the y-axis in the robot coordinate system; similarly, \( \Delta ImageX \) and \( \Delta ImageY \) are the displacements in the image coordinate system. In this paper, the distance between the two parallel lines is 20 mm, that is, \( \Delta RobotX \) and \( \Delta RobotY \) are both 20 mm.

According to Eq. (1), the distance that the robot runs in the next step in the robot coordinate system, \( (D_{real} (x),D_{real} (y)) \), is given as follows:

In these equations, \( (D_{image} (x),D_{image} (y)) \) is the distance that the robot runs in the image coordinate system, (\( target(x) \), \( target(y) \)) is the coordinate of the target, i.e. the point M in Fig. 4, \( CWidth \) is the width of the image and \( CHeight \) is the height of the image. Equation (3) expresses the tracking principle that the image center always stays on the edge of the workpiece.
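As a worked illustration of the conversion, the sketch below assumes that Eq. (1) reduces to a constant millimetre-per-pixel scale factor on each axis and that the image displacement is the offset of the target from the image center. The function names, the 640 × 480 image size and the 400-pixel line spacing are hypothetical; the spacing is chosen only so that the scale matches the 0.05 mm per pixel quoted in Sect. 5. Axis directions and signs are also assumed to coincide between the image and robot frames.

```python
def scale_factors(robot_dx_mm, robot_dy_mm, image_dx_px, image_dy_px):
    """Millimetres per pixel along x and y at the fixed working height."""
    return robot_dx_mm / image_dx_px, robot_dy_mm / image_dy_px


def next_step_mm(target, image_size, k):
    """Robot displacement that moves the image center onto the target point.

    target     -- (x, y) pixel coordinates of point M
    image_size -- (CWidth, CHeight) in pixels
    k          -- (kx, ky) scale factors in mm per pixel
    """
    tx, ty = target
    width, height = image_size
    d_image_x = tx - width / 2   # offset from the image center, in pixels
    d_image_y = ty - height / 2
    return k[0] * d_image_x, k[1] * d_image_y


# Calibration lines 20 mm apart, assumed to span 400 pixels on each axis.
k = scale_factors(20.0, 20.0, 400.0, 400.0)
step = next_step_mm(target=(420, 240), image_size=(640, 480), k=k)
```

With these assumed numbers the target is 100 pixels to the right of the image center, so the robot would move about 5 mm along x and 0 mm along y.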

4.2 Standard Transformation Formula for Structured Light

The vanishing point method is employed to calibrate the parameters of the structured light, namely the projection angle \( \beta \), the baseline length \( L \) and the focal length \( f \). The sensor operates on the principle of active triangulation ranging. The three parameters are calculated according to Ref. [6]. The relationship between the image coordinates and the camera coordinates is then given by Eqs. (4) and (5) [6]:

Equation (4) is the transformation formula for the case in which the projection surface is parallel to the y-axis, and Eq. (5) is the formula for the case in which the projection surface is parallel to the x-axis. \( (N_{x} ,N_{y} ) \) is the coordinate of the target T in the image coordinate system, \( (W_{x} ,W_{y} ) \) is the physical size of a pixel, and \( (x_{c} ,y_{c} ,z_{c} ) \) is the coordinate in the camera coordinate system. With these formulas, the three-dimensional coordinates of the workpiece edge can be obtained.

In practical applications, the 3D data derived from the two structured light stripes are not identical. The 3D coordinates obtained from the different stripes must therefore be converted to the same coordinate. For the same point, the 3D coordinate obtained from the structured light parallel to the y-axis is taken as the standard and the other one is converted to it; the modified transformation formula is given by Eq. (6).

Here (\( x_{c1} ,y_{c1} ,z_{c1} \)) is the 3D coordinate obtained from the structured light parallel to the y-axis, (\( x_{c2} ,y_{c2} ,z_{c2} \)) is the 3D coordinate obtained from the other stripe, and (\( \Delta x_{c} ,\Delta y_{c} ,\Delta z_{c} \)) is the difference between them. The precise value of (\( \Delta x_{c} ,\Delta y_{c} ,\Delta z_{c} \)) can be obtained through the calculation below.

where (\( x_{c1}^{\prime} ,y_{c1}^{\prime} ,z_{c1}^{\prime} \)) is the 3D coordinate converted from (\( x_{c2} ,y_{c2} ,z_{c2} \)). After this conversion, the 3D information derived from the two structured light stripes is consistent.
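One simple way to realize such a conversion, assuming the discrepancy between the two stripes is a constant translation, is to average the differences over paired measurements of the same points and add that offset to the coordinates from the second stripe. This is a sketch under that assumption and is not claimed to reproduce Eqs. (6) and (7); the function names and sample values are hypothetical.

```python
def mean_offset(points_c1, points_c2):
    """Average per-axis difference between paired 3D measurements.

    points_c1 -- coordinates from the stripe parallel to the y-axis (reference)
    points_c2 -- coordinates of the same points from the other stripe
    """
    n = len(points_c1)
    return tuple(
        sum(p1[i] - p2[i] for p1, p2 in zip(points_c1, points_c2)) / n
        for i in range(3)
    )


def convert(point_c2, offset):
    """Express a measurement from the second stripe in the reference coordinate."""
    return tuple(c + d for c, d in zip(point_c2, offset))


# Hypothetical paired measurements in millimetres.
c1 = [(1.0, 2.0, 3.0), (2.0, 3.0, 4.0)]
c2 = [(0.5, 2.0, 2.0), (1.5, 3.0, 3.0)]
delta = mean_offset(c1, c2)
converted = convert(c2[0], delta)
```

Averaging over several pairs reduces the influence of noise in any single measurement on the estimated offset.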

5 Experimental Results and Discussion

To investigate the performance of the developed visual system, a test was carried out. The proposed method was implemented in Visual C++ 6.0 with OpenCV in a real environment. Guided by the vision sensor, an edge tracking experiment was carried out on a gantry structure robot platform. The model shown in Fig. 8 was used as a test example.

From the 3D data, the geometric model of the workpiece can be recovered. The ideal model and the recovered model are plotted in the same coordinate system for comparison, as shown in Fig. 9 (the blue line represents the recovered model, and the red line represents the ideal model described by CAD). A detailed description is given in Table 1.

In Table 1, the largest error distance between the ideal workpiece and the recovered workpiece is 1 mm, and the smallest is only 0.1 mm. This system can therefore meet the accuracy required for cutting.
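An error distance of this kind can be computed, for example, as the shortest distance from each recovered contour point to the ideal CAD outline. The sketch below assumes a planar, closed polygonal outline; the function names and all coordinates are invented for illustration and are not the measurements reported in Table 1.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (2D)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def contour_errors(recovered, ideal_polygon):
    """Distance from each recovered point to the closed ideal outline."""
    edges = list(zip(ideal_polygon, ideal_polygon[1:] + ideal_polygon[:1]))
    return [
        min(point_segment_distance(p, a, b) for a, b in edges)
        for p in recovered
    ]


# Invented example: a 100 mm x 50 mm rectangle and three measured points.
ideal = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0), (0.0, 50.0)]
measured = [(10.0, 0.2), (100.5, 25.0), (50.0, 50.0)]
errors = contour_errors(measured, ideal)
largest, smallest = max(errors), min(errors)
```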

The developed visual measurement system is a multi-tasking data processing system that comprises image processing, workpiece edge tracking, 3D calculation of the workpiece edge and communication between the robot and the computer. The precision of the system is affected by various factors, including the light scanning system, calibration and image processing. The main error sources are analyzed as follows:

1. Calibration error. In this study, calibration consists of two parts: calibration between the robot and the camera, and structured light calibration. Once the robot is assembled, no further error is assumed to come from the robot itself. The error caused by lens distortion is not considered in the camera calibration. The structured light calibration is carried out by the “vanishing point” method. In the dual-beam structured light system, the three-dimensional coordinates obtained from the different stripes need to be converted to the same coordinate, which is one of the reasons that error exists in the transformation formula.

2. Image processing error. The image processing error arises when locating the intersection between the structured light and the workpiece edge. If the detected point differs from the ideal target point by one pixel in the image coordinate system, the corresponding difference in the robot coordinate system is 0.05 mm.

6 Conclusion

In this study, a dimensional position extraction system for automatic groove cutting is proposed based on dual-beam structured light. Images of the workpiece edge and the structured light are acquired with the proposed visual sensor, and the edge is extracted with the proposed image processing method. When tracking ends, the dimensional position of the workpiece is identified from the 3D information collected by the dual-beam structured light system. Finally, the experiment confirms the feasibility of the developed system.

References

1. Chen SB, Chen SZ, Qiu T (2005) Acquisition of weld seam dimensional position information for arc welding robot based on vision computing. J Intell Robot Syst 43:77–97

2. Chen H, Liu K, Xing G, Dong Y (2014) A robust visual servo control system for narrow seam double head welding robot. Int J Adv Manuf Technol

3. Luo H, Chen X (2005) Laser visual sensing for seam tracking in robotic arc welding of titanium alloys. Int J Adv Manuf Technol 26:1012–1017

4. Zhen Y, Fang G, Chen S, Zou J (2013) Passive vision based seam tracking system for pulse-MAG welding. Int J Adv Manuf Technol 67:1987–1996

5. Nele L, Sarno E, Keshari A (2013) An image acquisition system for real-time seam tracking. Int J Adv Manuf Technol 69:2099

6. Hai X, Ming L, Wang C, Ye S (1996) A line structured light 3D visual sensor calibration by vanishing point method. Opto-Electron Eng 23(3):53–58

Acknowledgement

The authors are particularly grateful to Harbin XiRobot Technology Co., Ltd. for its help during the study.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Chu, HH., Ji, Y., Wang, XJ., Wang, ZY. (2015). Study on Vision-Based Dimensional Position Extraction of Plane Workpiece for Groove Automatic Cutting. In: Tarn, TJ., Chen, SB., Chen, XQ. (eds) Robotic Welding, Intelligence and Automation. RWIA 2014. Advances in Intelligent Systems and Computing, vol 363. Springer, Cham. https://doi.org/10.1007/978-3-319-18997-0_24

DOI: https://doi.org/10.1007/978-3-319-18997-0_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18996-3

Online ISBN: 978-3-319-18997-0

eBook Packages: Engineering, Engineering (R0)