Abstract

This paper presents a simple method for conservatively solving a reliability-based design optimization (RBDO) problem of structures, when only a set of random samples of uncertain parameters is available. Specifically, we consider the truss design optimization under the stress constraints, where the external load is a random vector. The target confidence level, i.e., the probability that the structural reliability is no smaller than the target reliability, is specified, without any assumption on statistical information of the input distribution. We formulate a robust design optimization problem, any feasible solution of which satisfies the reliability constraint with the specified confidence level. The derived robust design optimization problem is solved with a sequential semidefinite programming. Two numerical examples are solved to show the trade-off between the specified confidence level and the structural volume.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consideration of uncertainty is indispensable for design of structures having high assurance of quality. Two major approaches to structural optimization against uncertainty are reliability-based design optimization (RBDO) and robust design optimization (see surveys by Park et al. (2006)), Beyer and Sendhoff (2007), Valdebenito and Schuëller (2010), Yao et al. (2011), and Jiang et al. (2018). Roughly speaking, robust design optimization makes use of a possibilistic (or bounded-but-unknown) model of uncertainty, which assumes only the set of values that the uncertain parameters can possibly take. In contrast, RBDO adopts a probabilistic model of uncertainty, which assumes statistic properties, i.e., a probabilistic distribution, of the uncertain parameters. As an emerging research topic, among others, in RBDO, diverse methods that can address uncertainty in distribution have received considerable attention (Ito and Kogiso 2016; Ito et al. 2018; Peng et al. 2017; Noh et al. 2011a; Cho et al. 2016; Moon et al. 2017, 2018; Picheny et al. 2010; Gunawan and Papalambros 2006; Youn and Wang 2008; Zaman and Mahadevan 2017; Jiang et al. 2013; Noh et al. 2011b; Huang and Zhang 2009; Choi et al. 2010).

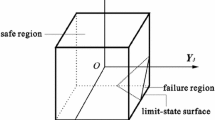

Naturally, uncertainty has also been taken into account in the research field of mathematical optimization. An optimization model with possibilistic uncertainty is called robust optimization (Ben-Tal et al. 2009), while the one with probabilistic uncertainty is called chance-constrained optimization (a.k.a. stochastic programming) (Shapiro et al. 2009). Robust optimization requires that each constraint is satisfied in its worst case, when the uncertain parameters can take any value in a prescribed uncertainty set. In chance-constrained optimization, the probability that the constraints are satisfied should be no smaller than the prescribed threshold, when the distribution of the uncertain parameters is given. Uncertainty in distribution is addressed in the framework of distributionally robust optimization (Goh and Sim 2010; Ben-Tal et al. 2013; Bertsimas et al. to appear; Delage and Ye 2010), in which the worst-case distribution is considered when a set of distributions is given.

In most literature on robust optimization, the uncertainty set is assumed to be given a priori, and means for designing the uncertainty set is rarely discussed. As an exception, Goldfarb and Iyengar (2003) proposed to use the linear regression for the market data to determine the uncertainty set for robust portfolio selection. Also, for making use of data to design the uncertainty set, Tulabandhula and Rudin (2014) proposed to apply statistical learning methods, and Bertsimas et al. (2018) used the statistical hypothesis testing. Recently, Hong et al. (2017) have proposed quite a simple method to design the uncertain set. In this method, a given data set is split into two sets, and one of them is used to determine the shape and the other is used to calibrate the size of the uncertainty set. The size calibration is based on the order statistics (Arnold et al. 2008) of the data set to give feasible solutions of the derived robust optimization problem a statistical feasibility guarantee. This paper is inspired by Hong et al. (2017).

This paper attempts to show how the idea in Hong et al. (2017) can be utilized in RBDO with an unknown input distribution. We assume that only the set of random samples of uncertain variables is available. Specifically, in this paper, the external load applied to a structure is considered a random vector. As usual in RBDO, we impose a lower bound (i.e., a target reliability) for the probability that the constraints on structural performance are satisfied. However, this probability itself is a random variable, because we do not know the (joint) distribution of the external load. Here, we consider any possible distribution such that the given data set forms a random sample from it. We then impose a lower bound (i.e., a target confidence level) for the probability that the structural reliability is not smaller than the target reliability. Using the idea in Hong et al. (2017), we can derive a sufficient condition for this confidence level constraint. Since this sufficient condition is in a form of robust constraints, we thus transform the RBDO problem with a confidence level to a robust design optimization problem; more precisely, any feasible solution of the latter problem is feasible for the former problem. Furthermore, by using a technique developed in robust design optimization (Kanno and Takewaki 2006a), we can transform the robust design optimization problem to an optimization problem in the conventional form. Thus, we convert the RBDO problem with a confidence level to a tractable form.

There exist several methods proposed for RBDO with incomplete statistical information on random variables. For example, Gunawan and Papalambros (2006) used the Bayesian inference to estimate the structural reliability, when for some of random variables only a finite number of samples are available. To reduce the computational cost for a Bayesian approach to reliability estimate, Youn and Wang (2008) adopted the eigenvector dimension reduction method. Noh et al. (2011a, b) proposed to use the adjusted mean and correlation coefficient for an input normal distribution model with a confidence level. Given the intervals of input variables as data, Zaman and Mahadevan (2017) proposed to use the Johnson distribution as an input distribution model. In Cho et al. (2016) and Moon et al. (2017, 2018), some candidates of the input distribution types are prepared, and distribution parameters are considered random variables to compute the confidence level of the probability of failure with a Bayesian approach. Ito et al. (2018) assumed that each random variable follows a normal distribution with the mean and the variance modeled as random variables, and converted RBDO with a confidence level to the conventional form of RBDO with a modified value of the target reliability index. In this paper, we do not make any assumption on the distribution type and the statistical parameters; we only assume that the given data is a set of independent and identically distributed samples drawn from an unknown distribution.

An application of order statistics in structural design can be found in determination of the so-called A-basis and B-basis values of allowable stresses of materials for, particularly, aircraft and aerospace vehicles; see, e.g., Bhachu et al. (2016). Here, the B-basis value refers to the value below which 10% of the observations fall with 95% confidence. From a given material data set, a non-parametric method finds (a conservative estimate of) the B-basis value according to order statistics (Bhachu et al. 2016, section II). Thus, from the view point of structural optimization, this method uses order statistics to determine the parameters (more precisely, the specified bounds of stresses) in constraints. In contrast, the method presented in this paper uses order statics to determine the magnitude (or the level) of uncertainty against which the constraints should be satisfied.

As for approximate solution methods for chance-constrained optimization, scenario-generation approaches have been developed (Calafiore and Campi 2005, 2006; Luedtke and Ahmed 2008; Campi and Garatti 2011). In these methods, a chance constraint is replaced by a set of constraints evaluated at generated samples, where the probability that the obtained solution violates the original constraint has a theoretical bound. Also, there exist integer programming approaches to chance-constrained optimization in the case that the random variables have finite supports (Luedtke et al. 2010; Luedtke 2014; Vielma et al. 2012).

The paper is organized as follows. In Section 2, we define the RBDO problem that we handle in this paper, and give an overview of the methodology that links the RBDO problem to a robust design optimization problem. Following Hong et al. (2017), Section 3 explains how to construct the uncertainty set used in the robust design optimization. As a concrete RBDO problem, Section 4 discusses a design optimization problem of trusses under the stress constraints. Section 5 reports the results of numerical experiments. Some conclusions are drawn in Section 6.

In our notation, ⊤ denotes the transpose of a vector or a matrix. The Euclidean norm of vector \({\boldsymbol {x}} \in \mathbb {R}^{n}\) is denoted by \(\| {\boldsymbol {x}} \| = \sqrt {{\boldsymbol {x}}^{\top } {\boldsymbol {x}}}\). We often write the (n + m)-dimensional column vector (x⊤,y⊤)⊤ consisting of \({\boldsymbol {x}} \in \mathbb {R}^{n}\) and \({\boldsymbol {y}} \in \mathbb {R}^{m}\) as (x,y). We use \({\mathcal {S}}^{n}\) to denote the set of n × n real symmetric matrices. We write X ≽ 0 and X ≼ 0, respectively, if \(X \in {\mathcal {S}}^{n}\) is positive semidefinite and negative semidefinite. For a, \(b\in \mathbb {R}\) with a < b, ]a,b[ designates the open interval between a and b. We use \({\mathcal {N}}({\boldsymbol {\mu }},\Sigma )\) to denote the multivariate normal distribution with mean vector μ and variance-covariance matrix Σ.

2 Problem setting

Throughout the paper, we assume that the input distribution in RBDO is known imprecisely. Some situations might match this assumption. For instance, suppose that only a set of some samples of random variables is given, and we try to use the data set directly rather to construct its empirical distribution model. Also, we may suppose that a distribution of random variables is given, but its statistical information is not reliable. Moreover, even if reliable statistic information of random variables is available, epistemic uncertainty stemming from, e.g., empirical modeling of a structural system, is inevitable. In this paper, we attempt to find a structural design which is robust against such uncertainty in a probabilistic model. By using the order statistics of the data set, we can give a structural design a confidence level for the prescribed reliability.

Consider a finite-dimensional structure. Let d denote the number of degrees of freedom of the nodal displacements. We use \({\boldsymbol {x}} \in \mathbb {R}^{m}\) to denote the vector of design variables, where m is the number of independent design variables.

Let \({\boldsymbol {q}} \in \mathbb {R}^{d}\) denote the external load vector. Throughout the paper, we assume that q is a random vector and that the other quantities are not uncertain. For notational convenience, let F denote the joint distribution functionFootnote 1 of q.Footnote 2 Suppose that the performance requirement for the structure is expressed as

Assuming that F is known, the conventional RBDO solves the following optimization problem:

Here, \(g_{0}: \mathbb {R}^{m} \to \mathbb {R}\) is the objective function, 𝜖 ∈]0,1[ is a constant, and \(X \subseteq \mathbb {R}^{m}\) is a given set, where constraint (1c) represents, e.g., the side constraints of the design variables. It is worth noting that 𝜖 is the threshold (or the upper bound) of the failure probability, and −Φ− 1(𝜖) is the target reliability index, where Φ is the distribution function of the standard normal distribution. For simplicity, in the following we often call 1 − 𝜖 the target reliability.

We now proceed to the situation that F is unknown, or known imprecisely. Instead, we are given a data set \(D=\{ \hat {{\boldsymbol {q}}}_{1},\dots ,\hat {{\boldsymbol {q}}}_{n} \}\), where \(\hat {{\boldsymbol {q}}}_{1},\dots ,\hat {{\boldsymbol {q}}}_{n}\) are independent and identically distributed samples drawn from F. It should be clear that we do not attempt to estimate F from D. Rather, we assume only that D forms a random sample from F. Then the reliability of the structure, P{gl(x;q) ≤ 0}, is considered a random variable with respect to F. In this regard, we use

to denote the probability that the structure has the reliability no smaller than 1 − 𝜖. With this notation, the design optimization problem that we attempt to solve is formulated as follows:

Here, δ ∈]0,1[ is a constant, and 1 − δ corresponds to the target confidence level. Namely, any feasible solution of this problem is guaranteed with probability at least 1 − δ to have the reliability no smaller than 1 − 𝜖.

Hong et al. (2017) proposed a robust optimization approach for approximately solving chance-constrained optimization problems. The methodology, adjusted to our context, can be summarized as follows. For α ≥ 0, define \(Q(\alpha ) \subset \mathbb {R}^{d}\) by

where \(\bar {{\boldsymbol {q}}} \in \mathbb {R}^{d}\) is a constant vector and \(\Omega \in {\mathcal {S}}^{d}\) is a constant positive definite real symmetric matrix. Suppose that Q(α) satisfies

Then we can easily see that, if x satisfies

then it satisfies

Therefore, problem (2a)–(2c) is approximated in a conservative manner by the following robust optimization problem with Q(α) satisfying (4):

More precisely, any feasible solution of problem (5a)–(5c) is feasible for problem (2a)–(2c).

What remain to be considered are how to construct Q(α) satisfying (4) and how to solve problem (2a)–(2c). Concerning the former, Section 3 briefly explains an idea found in Hong et al. (2017). The latter is dealt with in Section 4, for a concrete design optimization problem of trusses.

3 Construction of uncertainty set

As explained in Section 2, the confidence level in RBDO is guaranteed at a feasible solution of a robust optimization problem with the uncertainty set, Q(α), satisfying (4). We utilize the data set, D, to construct such a Q(α). In this section, we summarize the idea presented in Hong et al. (2017), with adjusting it to our purpose.

With referring to the definition in (3), we see that Q(α) is determined by \(\bar {{\boldsymbol {q}}}\), Ω, and α. Among them, we first determine \(\bar {{\boldsymbol {q}}}\) and Ω, and subsequently determine α. We choose some data points randomly from D. Let \(\hat {{\boldsymbol {\mu }}}\) and \(\hat {\Sigma }\) denote the mean and the variance-covariance matrix of the chosen data points. Then we put \(\bar {{\boldsymbol {q}}}=\hat {{\boldsymbol {\mu }}}\) and \(\Omega =\hat {\Sigma }^{-1}\).

In the following, we use the remaining data points in D to determine α satisfying (4). For simple notation, we use the same notation, \(D=\{ \hat {{\boldsymbol {q}}}_{1},\dots ,\hat {{\boldsymbol {q}}}_{n} \}\), to denote the set of remaining data points. A small value of α is preferable to avoid an overly conservative structural design. The key observation in Hong et al. (2017, Lemma 3) is obtained from fundamentals of the order statistics. For the convenience of the reader, essentials of the proof, with modification to adjust our context, are repeated here.

Proposition 1

LetFXbe a continuous distribution function, and\(X_{1},\dots ,X_{n} \in \mathbb {R}\)beits independent and identically distributed samples. We useX(1) < X(2) < … < X(n)to denote the order statistics ofX1,…,Xn.For a given𝜖 ∈]0,1[,chooseδsatisfying

Then, for any natural number p (1 ≤ p ≤ n) satisfying

we have

Proof

Let s1−𝜖 denote the (1 − 𝜖)-quantile of FX, i.e.,

As a simple computation, we obtain

As a fundamental of the order statistics (Arnold et al. 2008, section 2.2), we haveFootnote 3

It follows from (9) and (10) that (7) implies (8). □

Remark 1

The assumption in (6) of Proposition 1 ensures the existence of p satisfying (7); we can easily see that p = n satisfies (7).

Define \(a : \mathbb {R}^{d} \to \mathbb {R}\) by

Renumber the data points \(\hat {{\boldsymbol {q}}}_{1},\dots ,\hat {{\boldsymbol {q}}}_{n} \in D\) so that

holds. Then, \(a(\hat {{\boldsymbol {q}}}_{(1)}),\dots , a(\hat {{\boldsymbol {q}}}_{(n)})\) correspond to the order statistics of samples \(a(\hat {{\boldsymbol {q}}}_{1}),\dots , a(\hat {{\boldsymbol {q}}}_{n})\). Let \(\tilde {p}\) be the minimum natural number satisfying (7). It is worth noting that \(\tilde {p}\) depends only on n, 𝜖, and δ, i.e., the number of data points in D, the target reliability, and the target confidence level. From Proposition 1, we obtain

where FA is the distribution function of a(q). On the other hand, the definition of the distribution function yields

It follows from (11) and (12) that we obtain

with \(\tilde {\alpha }=a({\boldsymbol {q}}_{(\tilde {p})})\).

The upshot is that any feasible solution of problem (5a)–(5c) with the uncertainty set \(Q(\tilde {\alpha })\) is guaranteed to have the reliability 1 − 𝜖 with the confidence level no smaller than 1 − δ.

Remark 2

Although in this paper we restrict ourselves to single load cases for simplicity, the presented method can deal with multiple load cases. For a problem with s load cases, we suppose that corresponding data sets, \(D_{1},\dots ,D_{s} \subseteq \mathbb {R}^{d}\), of external loads are given, where Dj forms a random sample from Fj(j = 1,…,s). It is worth noting that Dj and \(D_{j^{\prime }} (j^{\prime }\not = j)\) may consist of different numbers of data points. For each j = 1,…,s, we compute \(\bar {{\boldsymbol {q}}}_{j}\) and Ωj by using some data points randomly chosen from Dj. Apply the procedure described above to determine \(\tilde {\alpha }_{j}\), so that we have

Thus, for a problem with multiple loads, we replace (5b) with

4 Application to truss design under stress constraints

In this section, we demonstrate how the methodology introduced in Section 2 can be applied to a concrete problem in RBDO. Specifically, we consider design optimization of trusses under uncertainty in the external load. As for the reliability, we consider the probability that the member stresses do not exceed the specified bound.

Consider a truss structure. We use xi(i = 1,…,m) to denote the member cross-sectional area, which is considered a design variable, where m is the number of members. We set the lower bound, denoted \(\underline {x} > 0\), for xi, and do not consider change in truss topology in the course of optimization. In the following, we assume small deformation and linear elasticity.

Let \(K({\boldsymbol {x}}) \in {\mathcal {S}}^{d}\) denote the stiffness matrix, which has the form

Here, li is the undeformed length of member i, E is the Young modulus, \({\boldsymbol {b}}_{i} \in \mathbb {R}^{d}\) is a constant vector, and \(K_{i} = \frac {E}{l_{i}} {\boldsymbol {b}}_{i} {\boldsymbol {b}}_{i}^{\top }\) is a constant matrix. The equilibrium equation is written as

Since we give positive lower bounds for all the member cross-sectional areas, it is natural to assume rankK(x) = d. Therefore, the equilibrium equation has a unique solution for any \({\boldsymbol {q}}\in \mathbb {R}^{d}\). We use u(x;q) to denote this solution.

For member i, let σi(u) denote the stress corresponding to displacement u. Since we do not allow change in the truss topology in the course of optimization, the stress constraint can be written simply as

where \(\underline {\sigma }_{i}\) and \(\overline {\sigma }_{i}\) are specified lower and upper bounds, respectively. This constraint can be rewritten as

with \(\sigma _{{\mathrm {c}}i} = (\overline {\sigma }_{i} - \underline {\sigma }_{i})/2\) and \(r_{i} = (\overline {\sigma }_{i} + \underline {\sigma }_{i})/2\). Since q is considered a random vector, σi(u(x;q)) is a random variable. Therefore, we handle constraint (13) within the framework of the reliability constraint with the specified confidence level, as explained in Section 2. Accordingly, the design optimization problem that we attempt to solve is formulated as follows:

Here, the objective function is the structural volume. As a conservative approximation of problem (14a)–(14c), we consider the following robust optimization problem:

The attention of the remainder of this section is focused on how to solve this robust optimization problem.

Define \(G_{i} \in {\mathcal {S}}^{d + 1}\) by

so that

holds. For \({\boldsymbol {x}} \in \mathbb {R}^{m}\) satisfying (15c), define \(\Psi ({\boldsymbol {x}}) \in {\mathcal {S}}^{d + 1}\) by

so that

holds. The following proposition can be obtained in a manner similar to (Kanno and Takewaki 2006a, Proposition 3.1).

Proposition 2

Suppose rankK(x) = d.Thenxsatisfies

if and only if there exists νi ≥ 0 satisfying

A proof appears in Appendix.

It follows from Proposition 2 that problem (15a)–(15c) is equivalent to the following optimization problem:

Here, \({\boldsymbol {x}}\in \mathbb {R}^{m}\) and \({\boldsymbol {\nu }}\in \mathbb {R}^{m}\) are variables to be optimized.

We next present an algorithm for finding a local optimal solution of problem (18a)–(18d). The algorithm is essentially based on the same idea as the sequential semidefinite programming (sequential SDP) proposed in Kanno and Takewaki (2006a); see also Kanno et al. (2001) and Kanzow et al. (2005). It is worth noting that a maximization problem of the robustness of a truss is considered in Kanno and Takewaki (2006a). In contrast, in problem (18a)–(18d), we fix the target robustness, \(\tilde {\alpha }\). Hence, the sequential SDP for problem (18a)–(18d), presented in Algorithm 1, is simpler than the one used in Kanno and Takewaki (2006a).

In the sequential SDP, the nonlinear matrix-valued function Ψ in (18b) is linearized at the incumbent solution, denoted x(k). Also, to prevent too large step length, the quadratic penalty on variations of variables, i.e., ρ∥x −x(k)∥2 and ρ∥v −v(k)∥2, are added to the objective function, where ρ > 0 is a penalty parameter. Accordingly, at the k th iteration we solve the following problem in variables x and ν:

Here, DΨ(x(k)) is the derivative of mapping \(\Psi : \mathbb {R}^{m} \to {\mathcal {S}}^{d + 1}\) at x(k) defined such that DΨ(x(k))z is a linear function of \({\boldsymbol {z}} = (z_{1},\dots ,z_{m})^{\top } \in \mathbb {R}^{m}\) given by

Problem (19a)–(19d) is an SDP problem, which can be solved efficiently with a primal-dual interior-point method (Anjos and Lasserre 2012).

5 Numerical experiments

This section presents two numerical examples. Algorithm 1 was implemented in MATLAB ver. 9.0.0. We solved the SDP problem in (19a)–(19d) by using CVX ver. 2.1 (Grant and Boyd 2008, 2018) with SDPT3 ver. 4.0 (Tütüncü et al. 2003). The cvx_precision parameter of CVX is set to best, which means that the solver continues as far as it can make progress (Grant and Boyd 2018). Computation was carried out on a 2.2GHz Intel Core i5 processor with 8GB RAM.

In the following examples, the lower bound for the member cross-sectional areas is set to \(\underline {x}= 100 \mathrm {mm^{2}}\). Note that the lower bound constraints on the member cross-sectional areas are inactive for all the solutions obtained in this section. The parameters of Algorithm 1 are ρ = 10− 3 and 𝜖0 = 10− 4mm2. The initial point is given as \(x^{(0)}_{i}= 2000 \mathrm {mm^{2}} (i = 1,\dots ,m)\).

5.1 Example (I): 2-bar truss

Consider the planar truss shown in Fig. 1. The truss has m = 2 members and d = 2 degrees of freedom of the nodal displacements. The Young modulus of the members is E = 20GPa. The lower and upper bounds for the member stresses are \(\underline {\sigma }_{i}=-100 \text {MPa}\) and \(\overline {\sigma }_{i}= 100 \text {MPa} (i = 1,2)\), respectively.

The data set \(D \subset \mathbb {R}^{2}\) of the external load vector consists of 250 data points drawn from the normal distribution with mean μ and variance-covariance matrix Σ given as

This does not mean that the external load is assumed to follow the normal distribution. It should be emphasized that no assumption is made on the distribution that uncertain parameters follow.

Figure 2 shows the data points, as well as the uncertainty set obtained from the data set. We used 50 data points, indicated by “∘” in Fig. 2, to determine \(\bar {{\boldsymbol {q}}}\) and Ω of the uncertainty set. The mean and the variance-covariance matrix of these data points are

from which we put

The remaining 200 points, indicated by “ ×” in Fig. 2, were used to determine \(\tilde {\alpha }\). For instance, if we set 𝜖 = 0.1 and δ = 0.08 (i.e., if we require that the failure probability should not be greater than 10%, with 8% confidence level), then we obtain

The boundary of \(Q(\tilde {\alpha })\) is shown in Fig. 2 by a solid curve.

The solution obtained by Algorithm 1 is

Figure 3a shows this solution, where the width of each member in the figure is proportional to its cross-sectional area. The algorithm terminated after 11 iterations. The computational time was 3.1s. To confirm the feasibility and quality of the obtained solution, we computed the extreme values of the member stresses, when the external load satisfies \({\boldsymbol {q}} \in Q(\tilde {\alpha })\). Figure 3b reports the results, where the member indices are shown in Fig. 1 and \(\sigma _{{\mathrm {c}}}:=\overline {\sigma }_{i}=-\underline {\sigma }_{i} (i = 1,2)\). We can confirm that, for each member, there exists an external load with which the stress constraint becomes active.

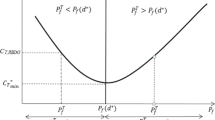

The optimal value increases as the required confidence level increases. Figure 4 shows this trade-off relationship. As for the threshold of the failure probability, we here consider three cases: 𝜖 = 0.1, 0.08, and 0.05. It is worth noting that, for 𝜖 = 0.05, the assumption in (6) of Proposition 1 reads δ ≥ (1 − 𝜖)n = (1 − 0.05)200 ≃ 3.5053 × 10− 5. This means that, to obtain a solution with a confidence level larger than 1 − (3.5053 × 10− 5) for 𝜖 = 0.05, the proposed method requires a larger number of data points than the present data set.

Figure 5 reports the results of Monte Carlo simulation for the solution obtained with 𝜖 = 0.1 and δ = 0.08. We generated 50000 data sets, each of which consists of 50000 samples drawn as \({\boldsymbol {q}} \sim {\mathcal {N}}({\boldsymbol {\mu }},\Sigma )\). For each data set, we checked the population of data points with which the stress constraint is violated. Figure 5 shows the ratio of the population divided by the total number of data points. We can see that the ratio of failure is much less than the specified threshold, 𝜖 = 0.1. However, this does not imply that the obtained solution is overly conservative. Namely, although in our problem setting we consider any distribution that can explain the given data in Fig. 2, the Monte Carlo simulation was performed for only one distribution.

We next investigate the gap between the proposed method and methods using an estimated distribution. Namely, we assume the normal distribution, and use all the data points in Fig. 2 to estimate the mean and the variance-covariance matrix. This estimation yields

Then, we generate 5 × 106 samples drawn as \({\boldsymbol {q}} \sim {\mathcal {N}}(\check {{\boldsymbol {\mu }}},\check {\Sigma })\), and compute the structural response of the solution in (21). Figure 6 reports the values of |σi(x∗;q)|/σc for these samples, where |σi(x∗;q)|/σc > 1 means that the stress constraint is violated. The obtained failure probabilities for member 1 and member 2, respectively, are 0.01442 and 0.00956. These values are less than the specified lower bound for failure probability, 𝜖 = 0.1, because in the proposed method the distribution type is not identified.

The results of Monte Carlo simulation for a normal distribution estimated from the data in Fig. 2. Example (I). a Member 1; and b member 2

A solution obtained by the proposed method generally depends on choice of data points used for learning the shape and for calibrating the size of the uncertainty set. In Fig. 7, we investigate robustness of the proposed method against this choice. Here, we used a single data set, consisting of 250 data points. Also, we fixed the number of data points used for shape determination as 50; the remaining 200 data points were used for size calibration. Partition of the data set into these two sets was determined randomly. Figure 7 reports the objective values obtained for 10000 randomly generated partitions. The mean of these objective values is 3.7966 × 106mm2, and the standard deviation is 0.0677 × 106mm2. Thus, variation of the objective value is quite small. It is worth noting that, since all the solutions in Fig. 7 satisfy the reliability constraint with at least the specified confidence level, we can adopt the best one (i.e., the solution having the minimal objective value) among them as the final solution.

We next examine robustness of the proposed method against choice of the number data points for shape determination; the other data points are used for size calibration. Figure 8 shows variation of the obtained solutions, where the the data set, consisting of 250 data points, is fixed. Figure 8a shows the variation of the minimal p satisfying (7) in Proposition 1. This value is used in the size calibration phase. It should be clear that the relation in Fig. 8a is not affine, although it looks almost affine. Figure 8b reports the objective values obtained by the proposed method. We can observe that variation of the objective values is only about 5%. Also, reducing the number of data points for size calibration does not necessarily imply increase in the objective value. Figure 8c and d show the constructed uncertainty set, as well as separation of the data set, when 30 data points and 227 data points are used for shape determination, respectively. In Fig. 8d, all of the remaining 23 data points are used for size calibration. Compared with Fig. 8c, some data points far from the center of the ellipsoid are not selected (accidentally) as the ones for size calibration, the objective value in Fig. 8d is not too overly conservative.

Variation of the solution for example (I) with (𝜖,δ) = (0.1,0.08) with respect to the number of data points for shape determination of the uncertainty set. a The minimum p satisfying (7) (i.e., \(\tilde {p}\) in Section 3); b the objective value; c the uncertainty set when 30 data points are used for shape determination; and d the uncertainty set when 227 data points are used for shape determination. “∘” The data points for shape determination; and “×” for size calibration

Finally, we vary the data set size and observe change in the obtained solutions. As for the data set size, we consider four cases, where data sets consist of 80, 150, 250, and 450 data points drawn from the normal distribution with mean μ and variance-covariance matrix Σ in (20). In each case, we prepared 10000 randomly generated data sets with randomly changing a seed of the random number generator of MATLAB. For every data set, we used 50 randomly chosen data points for shape determination of the uncertainty set; the remaining data points were used for size calibration. Figure 9 and Table 1 report the obtained results. It is natural that the mean and the standard deviation of the obtained objective value decrease as the number of data points increases, because a larger number of data points provide us with more information on the underlying distribution. In other words, the results shown in Fig. 9 and Table 1 do not necessarily mean that a solution obtained with a small number of data points is overly conservative, because, to ensure robustness against uncertainty in a distribution with a smaller number of data points, we need to consider an uncertainty set with a larger size.

5.2 Example (II): 29-bar truss

Consider the planar truss shown in Fig. 10. The truss consists of m = 29 members, and has d = 20 degrees of freedom of the nodal displacements. The undeformed lengths of horizontal members and vertical members are 1m. The Young modulus of the members is E = 20GPa. The lower and upper bounds for the member stresses are \(\underline {\sigma }_{i}=-100 \text {MPa}\) and \(\overline {\sigma }_{i}= 100 \text {MPa} (i = 1,\dots ,m)\), respectively.

Figure 11 shows the data set \(D \subset \mathbb {R}^{d}\) of the external load vector constituting of 300 samples drawn from a normal distribution. Thus, the number of random variables is d = 20. The mean of the external load, denoted μ, is as illustrated in Fig. 10, where the magnitudes of the two nonzero nodal forces are 100kN. The variance-covariance matrix is Σ = 250I in kN2. Again, it should be clear that in our framework no assumption is made on the distribution that the external load follows. Namely, the normal distribution mentioned above was used only for generating the data set.

Among 300 data points, we used 100 data points to learn \(\bar {{\boldsymbol {q}}}\) and Ω, and the remaining 200 points to determine \(\tilde {\alpha }\). We set 𝜖 = 0.1 and δ = 0.08. Figure 12a shows the solution obtained by Algorithm 1. The algorithm terminated after 120 iterations. The total computational time was 799.2s. Concerning the truss design in Fig. 12a, Fig. 12b collects the extreme values of the member stresses corresponding to \({\boldsymbol {q}} \in Q(\tilde {\alpha })\), where the member indices are shown in Fig. 12a and \(\sigma _{{\mathrm {c}}}:=\overline {\sigma }_{i}=-\underline {\sigma }_{i} (i = 1,\dots ,m)\). We can observe that, for each member, there exists a load case with which the stress constraint becomes active. For the target reliability 1 − 𝜖 = 0.9, Fig. 13 shows the trade-off between the confidence level, 1 − δ, and the structural volume of the solution obtained by the proposed method.

6 Conclusions

This paper has presented a data-driven approach to reliability-based design optimization (RBDO) of structures subjected to the uncertain external load. In this approach, a robust design optimization problem is solved to find a structural design that has the target reliability with the prescribed confidence level. The probability that the reliability of the obtained solution is no smaller than the target reliability is guaranteed to be no smaller than the prescribed confidence level, irrespective of the distribution of the external load. The uncertainty set, used in the robust design optimization problem, is determined from the given data (i.e., the set of given samples of the external load), by using the order statistics. This construction procedure of the uncertainty set basically follows the idea presented in Hong et al. (2017).

The methodology presented in this paper is quite versatile, in the sense that many of existing techniques for robust design optimization can be incorporated into the framework. This suggests possibilities of extensions of the presented method to RBDO problems in diverse problem settings. For instance, uncertainty in stiffnesses of structural elements (Guo et al. 2009, 2011, 2015; Kanno and Takewaki 2006b, 2008) could be explored. Also, for topology optimization, a topology-dependent uncertainty set of the external load, considered in Yonekura and Kanno (2010), Kanno and Guo (2010), and Kanno (2018) for robust design optimization, could be extended for RBDO. A key is that, roughly speaking, robust design optimization can consider a bit more complicated constraints, as well as larger-size problems, than RBDO, although the computational efficiency should be evaluated directly.

The presented method provides a feasible solution of the RBDO problem with a confidence level. The gap between the objective value obtained by the presented method and the optimal value of the original problem remains to be studied. When only very limited number of data points are available, the proposed method cannot provide with us sufficiently high confidence for high reliability. As a remedy, possibility of use of a non-parametric bootstrap method (Hanson and Koopmans 1964), which is often used for assessing allowable stresses of materials in structural design (Bhachu et al. 2016; Edwards et al. 2011), could be explored.

Notes

It should be clear that, throughout the paper, a distribution function means a cumulative distribution function (also called a cumulative density function).

Throughout the paper, we assume that F is unknown. We make no assumption on statistical properties of q, and do not attempt to estimate them. We use the notation F to clarify the notion of the confidence level defined below.

To see (10), we begin with the simplest case, p = n. We then obtain

$$\begin{array}{@{}rcl@{}} &&{}{\mathrm{P}}_{F_{X}}\{ X_{(n)} \le s_{1-\epsilon} \} \\ &&{}= {\mathrm{P}}_{F_{X}}\{ X_{j} \le s_{1-\epsilon} \ (j = 1,\dots,n) \} \\ &&{}= {\mathrm{P}}_{F_{X}}\{ X_{1} \le s_{1-\epsilon} \} \times {\dots} \times {\mathrm{P}}_{F_{X}}\{ X_{n} \le s_{1-\epsilon} \} \\ &&{}= F_{X}(s_{1-\epsilon})^{n} , \end{array} $$where the last equality follows the assumption that X1,…,Xn form a random sample from FX. Next, for p = n − 1 we can derive

$$\begin{array}{@{}rcl@{}} &&{}{\mathrm{P}}_{F_{X}}\{ X_{(n-1)} \le s_{1-\epsilon} \} \\ &&{}={\mathrm{P}}_{F_{X}}\{ X_{j} \le s_{1-\epsilon} \ (j = 1,\dots,n) \} \\ &&{} {\qquad} + \sum\limits_{i = 1}^{n} {\mathrm{P}}_{F_{X}}\{ X_{j} \le s_{1-\epsilon} \ (j\not=i) , \ X_{i} > s_{1-\epsilon} \} \\ &&{}= F_{X}(s_{1-\epsilon})^{n} + \binom{n}{1} F_{X}(s_{1-\epsilon})^{n-1} (1-F_{X}(s_{1-\epsilon})) \\ &&{}= \binom{n}{n} F_{X}(s_{1-\epsilon})^{n} + \binom{n}{n-1} F_{X}(s_{1-\epsilon})^{n-1} (1-F_{X}(s_{1-\epsilon})) . \end{array} $$Similarly, we can obtain the first equality of (10) for general p.

References

Anjos MF, Lasserre JB (eds) (2012) Handbook on semidefinite, conic and polynomial optimization. Springer, New York

Arnold BC, Balakrishnan N, Nagaraja HN (2008) A first course in order statistics. SIAM, Philadelphia

Ben-Tal A, El Ghaoui L, Nemirovski A (2009) Robust optimization. Princeton University Press, Princeton

Ben-Tal A, den Hertog D, De Waegenaere A, Melenberg B, Rennen G (2013) Robust solutions of optimization problems affected by uncertain probabilities. Manag Sci 59:341–357

Bertsimas D, Gupta V, Kallus N (2018) Data-driven robust optimization. Math Program 167:235–292

Bertsimas D, Sim M, Zhang M (to appear) Adaptive distributionally robust optimization. Management Science, https://doi.org/10.1287/mnsc.2017.2952

Beyer H. -G., Sendhoff B (2007) Robust optimization—a comprehensive survey. Comput Methods Appl Mech Eng 196:3190–3218

Bhachu KS, Haftka RT, Kim NH (2016) Comparison of methods for calculating B-basis crack growth life using limited tests. AIAA J 54:1287–1298

Calafiore G, Campi MC (2005) Uncertain convex programs: randomized solutions and confidence levels. Math Program 102:25–46

Calafiore G, Campi MC (2006) The scenario approach to robust control design. IEEE Trans Autom Control 51:742–753

Calafiore G, El Ghaoui L (2004) Ellipsoidal bounds for uncertain linear equations and dynamical systems. Automatica 40:773–787

Campi MC, Garatti S (2011) A sampling-and-discarding approach to chance-constrained optimization: feasibility and optimality. J Optim Theory Appl 148:257–280

Cho H, Choi KK, Gaul N, Lee I, Lamb D, Gorsich D (2016) Conservative reliability-based design optimization method with insufficient input data. Struct Multidiscip Optim 54:1609–1630

Choi J, An D, Won J (2010) Bayesian approach for structural reliability analysis and optimization using the Kriging dimension reduction method. J Mech Des (ASME), 132, Article No 051003

Delage E, Ye Y (2010) Distributionally robust optimization under moment uncertainty with application to data-driven problems. Oper Res 58:595–612

Edwards DJ, Guess FM, Young TM (2011) Improved estimation of the lower percentiles of material properties. Wood Sci Technol 45:533–546

Goh J, Sim M (2010) Distributionally robust optimization and its tractable approximations. Oper Res 58:902–917

Goldfarb D, Iyengar G (2003) Robust portfolio selection problems. Math Oper Res 28:1–38

Grant M, Boyd S (2008) Graph implementations for nonsmooth convex programs. In: Blondel V., Boyd S., Kimura H. (eds) Recent advances in learning and control (A Tribute to M. Vidyasagar). Springer, pp 95–110

Grant M, Boyd S (2018) CVX: Matlab software for disciplined convex programming, Ver. 2.1 http://cvxr.com/cvx/ (Accessed June 2018)

Gunawan S, Papalambros PY (2006) A Bayesian approach to reliability-based optimization with incomplete information. J Mech Des (ASME) 128:909–918

Guo X, Bai W, Zhang W, Gao X (2009) Confidence structural robust design and optimization under stiffness and load uncertainties. Comput Methods Appl Mech Eng 198:3378–3399

Guo X, Du J, Gao X (2011) Confidence structural robust optimization by non-linear semidefinite programming-based single-level formulation. Int J Numer Methods Eng 86:953–974

Guo X, Zhao X, Zhang W, Yan J, Sun G (2015) Multi-scale robust design and optimization considering load uncertainties. Comput Methods Appl Mech Eng 283:994–1009

Hanson DL, Koopmans LH (1964) Tolerance limits for the class of distributions with increasing hazard rates. Ann Math Stat 35:1561–1570

Hong LJ, Huang Z, Lam H (2017) Learning-based robust optimization: Procedures and statistical guarantees. arXiv:1704.04342

Huang H-Z, Zhang X (2009) Design optimization with discrete and continuous variables of aleatory and epistemic uncertainties. J Mech Des (ASME), 131, Article No 031006

Ito M, Kogiso N (2016) Information uncertainty evaluated by parameter estimation and its effect on reliability-based multiobjective optimization. J Adv Mech Des Syst Manuf, 10, Article No 16–00331

Ito M, Kim NH, Kogiso N (2018) Conservative reliability index for epistemic uncertainty in reliability-based design optimization. Struct Multidiscip Optim 57:1919–1935

Jiang Z, Chen W, Fu Y, Yang R-J (2013) Reliability-based design optimization with model bias and data uncertainty. SAE Int J Mater Manuf 6:502–516

Jiang C, Zheng J, Han X (2018) Probability-interval hybrid uncertainty analysis for structures with both aleatory and epistemic uncertainties: a review. Struct Multidiscip Optim 57:2485–2502

Kanno Y (2018) Robust truss topology optimization via semidefinite programming with complementarity constraints: a difference-of-convex programming approach. Comput Optim Appl 71:403–433

Kanno Y, Guo X (2010) A mixed integer programming for robust truss topology optimization with stress constraints. Int J Numer Methods Eng 83:1675–1699

Kanno Y, Takewaki I (2006a) Sequential semidefinite program for maximum robustness design of structures under load uncertainties. J Optim Theory Appl 130:265–287

Kanno Y, Takewaki I (2006b) Confidence ellipsoids for static response of trusses with load and structural uncertainties. Comput Methods Appl Mech Eng 196:393–403

Kanno Y, Takewaki I (2008) Semidefinite programming for uncertain linear equations in static analysis of structures. Comput Methods Appl Mech Eng 198:102–115

Kanno Y, Ohsaki M, Katoh N (2001) Sequential semidefinite programming for optimization of framed structures under multimodal buckling constraints. Int J Struct Stab Dyn 1:585–602

Kanzow C, Nagel C, Kato H, Fukushima M (2005) Successive linearization methods for nonlinear semidefinite programs. Comput Optim Appl 31:251–273

Luedtke J (2014) A branch-and-cut decomposition algorithm for solving chance-constrained mathematical programs with finite support. Math Program 146:219–244

Luedtke J, Ahmed S (2008) A sample approximation approach for optimization with probabilistic constraints. SIAM J Optim 19:674–699

Luedtke J, Ahmed S, Nemhauser GL (2010) An integer programming approach for linear programs with probabilistic constraints. Math Program 122:247–272

Moon M. -Y., Choi KK, Cho H, Gaul N, Lamb D, Gorsich D (2017) Reliability-based design optimization using confidence-based model validation for insufficient experimental data. J Mech Des (ASME), 139, Article No 031404

Moon M-Y, Cho H, Choi KK, Gaul N, Lamb D, Gorsich D (2018) Confidence-based reliability assessment considering limited numbers of both input and output test data. Struct Multidiscip Optim 57:2027–2043

Noh Y, Choi KK, Lee I, Gorsich D, Lamb D (2011a) Reliability-based design optimization with confidence level under input model uncertainty due to limited test data. Struct Multidiscip Optim 43:443–458

Noh Y, Choi KK, Lee I, Gorsich D, Lamb D (2011b) Reliability-based design optimization with confidence level for non-Gaussian distributions using bootstrap method. J Mech Des (ASME), 133, Article No 091001

Park G-J, Lee T-H, Lee KH, Hwang K-H (2006) Robust design: an overview. AIAA J 44:181–191

Peng X, Li J, Jiang S (2017) Unified uncertainty representation and quantification based on insufficient input data. Struct Multidiscip Optim 56:1305–1317

Picheny V, Kim NH, Haftka RT (2010) Application of bootstrap method in conservative estimation of reliability with limited samples. Struct Multidiscip Optim 41:205–217

Pólik I, Terlaky T (2007) A survey of S-lemma. SIAM Rev 49:371–418

Shapiro A, Dentcheva D, Ruszczyński A. (2009) Lectures on stochastic programming: modeling and theory. SIAM, Philadelphia

Tulabandhula T, Rudin C (2014) Robust optimization using machine learning for uncertainty sets. arXiv:1407.1097

Tütüncü RH, Toh KC, Todd MJ (2003) Solving semidefinite-quadratic-linear programs using SDPT3. Math Program B95:189–217

Valdebenito MA, Schuëller GI (2010) A survey on approaches for reliability-based optimization. Struct Multidiscip Optim 42:645–663

Vielma JP, Ahmed S, Nemhauser GL (2012) Mixed integer linear programming formulations for probabilistic constraints. Oper Res Lett 40:153–158

Yao W, Chen X, Luo W, van Tooren M, Guo J (2011) Review of uncertainty-based multidisciplinary design optimization methods for aerospace vehicles. Progress Aerosp Sci 47:450–479

Yonekura K, Kanno Y (2010) Global optimization of robust truss topology via mixed integer semidefinite programming. Optim Eng 11:355–379

Youn BD, Wang P (2008) Bayesian reliability-based design optimization using eigenvector dimension reduction (EDR) method. Struct Multidiscip Optim 36:107–123

Zaman K, Mahadevan S (2017) Reliability-based design optimization of multidisciplinary system under aleatory and epistemic uncertainty. Struct Multidiscip Optim 55:681–699

Acknowledgments

This work is partially supported by JSPS KAKENHI 17K06633.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible Editor: Michael Kokkolaras

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Proof of Proposition 2

Appendix: Proof of Proposition 2

This section presents a proof of Proposition 2. The following well-known fact, called the S-lemma, plays a pivotal role.

Proposition 3

Let f,\(g : \mathbb {R}^{n} \to \mathbb {R}\)be quadratic functions. Suppose that there existsan\(\hat {{\boldsymbol {x}}} \in \mathbb {R}^{n}\)satisfying\(f(\hat {{\boldsymbol {x}}}) < 0\).The following two statements are equivalent:

-

(a)

f(x) ≤ 0 ⇒ g(x) ≥ 0,

-

(b)

∃τ ≥ 0:\(g({\boldsymbol {x}}) + \tau f({\boldsymbol {x}}) \ge 0 (\forall {\boldsymbol {x}} \in \mathbb {R}^{n})\).

A proof can be found in a survey paper (Pólik and Terlaky 2007). A slight variance is made in the following.

Proposition 4

Let f,\(g : \mathbb {R}^{n} \to \mathbb {R}\)be quadratic functions. Suppose that there existan\(\hat {{\boldsymbol {x}}} \in \mathbb {R}^{n}\)satisfying\(f(\hat {{\boldsymbol {x}}}) < 0\)andan\(\tilde {{\boldsymbol {x}}} \in \mathbb {R}^{n}\)satisfying\(g(\tilde {{\boldsymbol {x}}}) > 0\).The following two statements are equivalent:

-

(a)

f(x) ≤ 0 ⇒ g(x) ≤ 0,

-

(b)

∃ν ≥ 0:\(f({\boldsymbol {x}}) - \nu g({\boldsymbol {x}}) \ge 0 (\forall {\boldsymbol {x}} \in \mathbb {R}^{n})\).

Proof

It follows from Proposition 2 that (a) holds if and only if there exists τ ≥ 0 satisfying \(\tau f({\boldsymbol {x}}) - g({\boldsymbol {x}}) \ge 0 (\forall {\boldsymbol {x}} \in \mathbb {R}^{n})\). The latter condition is not satisfied with τ = 0, because there exists \(\tilde {{\boldsymbol {x}}}\) such that \(g(\tilde {{\boldsymbol {x}}})>0\). By putting ν = 1/τ, we obtain (b).

□

The following fact can be found in, e.g., Calafiore and El Ghaoui (2004).

Proposition 5

Let \(Q \in {\mathcal {S}}^{n}\) , \({\boldsymbol {p}} \in \mathbb {R}^{n}\) , and \(r \in \mathbb {R}\) . The following two statements are equivalent:

-

(a)

\(\displaystyle \left [\begin {array}{cccccc} {\boldsymbol {x}} \\ 1 \end {array}\right ]^{\top } \left [\begin {array}{cccccc} Q & {\boldsymbol {p}} \\ {\boldsymbol {p}}^{\top } & r \end {array}\right ] \left [\begin {array}{cccccc} {\boldsymbol {x}} \\ 1 \end {array}\right ] \ge 0 (\forall {\boldsymbol {x}} \in \mathbb {R}^{n})\) ,

-

(b)

\(\displaystyle \left [\begin {array}{cccccc} Q & {\boldsymbol {p}} \\ {\boldsymbol {p}}^{\top } & r \end {array}\right ] \succeq 0 \) .

Proof

It is trivial that (ii) implies (i). We show that (i) implies (ii) by the contradiction. Suppose that (ii) does not holds, i.e., there exist \({\boldsymbol {x}}^{\prime } \in \mathbb {R}^{n}\) and \(\xi \in \mathbb {R}\) satisfying

If ξ≠ 0, then (22) is reduced to

which contradicts (i). Alternatively, if ξ = 0, then (22) is reduced to

Put x = ηx′ to see that the left-hand side of (i) is reduced to

which can be viewed as a quadratic function of η, when we fix x′. It follows from (23) that (24) is not bounded below. Therefore, there exists an η such that (24) becomes negative, which contradicts (i). □

As an immediate corollary of Proposition 4 and Proposition 5, we can obtain the following fact.

Proposition 6

Suppose that A,\(B \in {\mathcal {S}}^{n + 1}\)satisfiesA ≱ 0 andB ≰ 0. Thefollowing two statements are equivalent:

-

(a)

\(\displaystyle \left [\begin {array}{cccccc} x \\ 1 \end {array}\right ]^{\top } A \left [\begin {array}{cccccc} x \\ 1 \end {array}\right ] \le 0 \Rightarrow \displaystyle \left [\begin {array}{cccccc} x \\ 1 \end {array}\right ]^{\top } B \left [\begin {array}{cccccc} x \\ 1 \end {array}\right ] \le 0 \) ,

-

(b)

∃ν ≥ 0:A − νB ≽ 0.

Proof of Proposition 2

Observe that (17) can be rewritten equivalently as

which is further rewritten as

Since we assume rankK(x) = d, we have

Therefore, Ψ(x) ≱ 0. Also, from the definition of Gi in (16), we see that Gi ≰ 0. Therefore, we can apply Proposition 6 to (25), which completes the proof. □

Rights and permissions

About this article

Cite this article

Kanno, Y. A data-driven approach to non-parametric reliability-based design optimization of structures with uncertain load. Struct Multidisc Optim 60, 83–97 (2019). https://doi.org/10.1007/s00158-019-02199-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-019-02199-6