Abstract

We develop methodologies to enable applications of reliability-based design optimization (RBDO) to environmental policy setting problems. RBDO considers uncertainty as random variables and parameters in an optimization framework with probabilistic constraints. Three challenges in environmental decision-making problems not addressed by current RBDO methods are efficient methods in handling: (1) non-normally distributed random parameters, (2) discrete random parameters, and (3) joint reliability constraints (e.g., meeting constraints simultaneously with a single reliability). We propose a modified sequential quadratic programming algorithm to address these challenges. An active set strategy is combined with a reliability contour formulation to solve problems with multiple non-normal random parameters. The reliability contour formulation can also handle discrete random parameters by converting them to equivalent continuous ones. Joint reliability constraints are estimated by their theoretical upper bounds using reliability indexes and angles of normal vectors between active constraints. To demonstrate the methods, we consider a simplified airshed example where CO and NOx standards are violated and are brought into compliance by reducing the speed limits of two nearby highways. This analytical example is based on the CALINE4 model. Results show the potential of this approach to handle complex large-scale environmental regulation problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

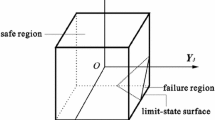

The field of reliability-based design optimization (RBDO) considers a class of problems where uncertainty is treated via random variables, parameters, and probabilistic constraints (Fletcher 1987, Tu et al. 1999). The objective function is deterministic and typically includes moments of the random variables. Equation 1 is a generalized single-objective RBDO formulation with random design variables X, random parameters P, deterministic design variables x and deterministic parameters p. The objective f is a function of deterministic quantities, namely the mean values of all random quantities in the formulation and \({{\mathcal{K}}}\) is the constraint set.

Constraints with random variables are formulated so that the probability of constraint violation is less than or equal to an acceptable failure limit \(P_{{\rm f}, j}\) . Deterministic constraints (i.e., constraints that are not functions of any random quantities) are considered in the probabilistic form as a special case with the failure probabilities \(P_{{\rm f}, j}\) being 0%, or alternatively one can also treat deterministic quantities x, p as special cases of X, P without variability. Equality constraints are not explicitly included in this formulation though they can be addressed via several approaches [e.g., see Chapter 10 of Fletcher (1987)].

Although the RBDO formulation has been the subject of numerous research investigations (for example, see Tu et al. 1999; Rackwitz 2001), three challenges have prevented the use of RBDO in environmental applications, namely the treatments of (1) non-normally distributed random parameters, (2) discrete random parameters, and (3) joint reliability constraints (e.g., meeting CO and NOx regulatory standards simultaneously with a single reliability). In this paper we propose a general solution methodology addressing these issues, and demonstrate the applicability of the solution methodology to a simplified airshed management problem summarized in Fig. 1.

In Fig. 1, we show the specific case of an airshed within which a population is subject to vehicle pollutants generated by two nearby roads. We assume that the airshed is subject to frequent violations of the National Ambient Air Quality Standards (NAAQS) for both carbon monoxide (CO) and nitrogen oxides (NOx) and that these two roads are the exclusive sources of the emissions. To reduce health risks to the exposed population, policymakers have several regulatory options to bring the airshed into compliance with the NAAQS, including fuel economy standards and incentives (National Highway Traffic Safety Administration 2000; Internal Revenue Service 2005), pollutant emissions standards (Office of Mobile Sources 1999), taxation on polluting vehicles (Office of Mobile Sources 1997), reducing traffic density using tolls (Mekky 1995; Yildrim and Hearn 2005), and modifying vehicle speed limits (Solomon 1964). Here we consider a hypothetical case where the policymaker is interested to regulate CO and NOx from these roads by reducing speed limits such that the probability of complying with both standards is achieved with a single reliability (e.g., 90%). While speed limits could be reduced to zero if the pollutant concentrations were the only concern, the policymaker must simultaneously promote human health and economic activity. Therefore the policymaking activity can be considered as an effort to achieve stricter environmental standards with a given reliability while minimizing impact on the economy, consumer choice, safety, and transportation time.

The policymaker’s problem is structurally similar to the class of RBDO problems described in the literature (Tu et al. 1999; Siddall 1983), though this problem has a number of features that distinguish it from previously investigated formulations that have exclusively considered engineering design variables that are continuous, time-invariant, and with symmetric distributions, predominately normal ones. Extending RBDO solution approaches to the airshed problem would require consideration of random parameters that are non-normal (McWilliams et al. 1979; Nadarajah and Kotz 2006; Flynn 2004), skewed (Zhang et al. 1994), time and season dependent (National Climate Data Center (NCDC) 2009), and sometimes discrete (Gilbert 1996). For instance, it is evident that the CO and NOx concentrations in the airshed depend on numerous factors, such as the combustion and emissions control characteristics of the vehicles on the road, driver responses to posted speed limits, traffic density, location of roads and regulatory points of interest, wind speed and direction, and atmospheric mixing conditions that depend on temperature and season. It is not appropriate to treat all of these uncertainties quantitatively using normal distributions. For example, while driver responses to posted speed limits might be reasonably modeled using normal distributions (Berry and Belmont 1951; Hossain and Iqbal 1999; Katti and Raghavachari 1986; Kumar and Rao 1998), the combination of windspeed and direction cannot be reasonably modeled using normal distributions (McWilliams et al. 1979; McWilliams and Sprevak 1980). Although methods for addressing non-normal variables within RBDO have been proposed, the methods are computationally expensive to the point that adding more constraints (as required to model large urban areas) would not be practical (e.g., see Ditlevsen 1981; Hohenbichler and Rackwitz 1981; Melchers 1987). In addition, within environmental systems there can exist discrete random variables that may be inherent to the modeling approach, such as the treatment of pollutant transport under discrete conditions (e.g., day, night, summer, winter, clouds, sun, etc.). To date, RBDO solution methods have not addressed the treatment of such discrete random parameters.

Several research articles considering environmental uncertainty in decision-making have focused on the use of simulation techniques and/or sensitivity analysis (e.g., see Fine et al. 2003; Huang et al. 2000; Vardoulakis et al. 2002). These techniques typically utilize models of remote sensing data from environmental parameter observations created by either Taylor series expansions or sampling techniques such as Monte Carlo Simulation (e.g., Stephens 1994; Andre and Hammarstrom 2000; Frey and Zheng 2002; Freeman et al. 1983; Bergin and Milford 2000; Yegnan et al. 2002). However, these models are not applicable to analytical formulations, such as RBDO, that would hold several advantages for the problem posed in Fig. 1. For instance, one can consider Fig. 1 as a basic building block that could be extended to analyze an entire city. As additional roads, road segments (Benson 1984), and receptors are applied to the basic formulation, the use of sampling techniques to evaluate probabilistic constraints would require too much computation for practical application. Even if the computations could be completed in a reasonable amount of time, finding a means to integrate the sampling techniques into an optimization algorithm would pose a significant challenge and greatly increase computational complexity. A simulation-based approach would also be less amenable to analytical post-optimality studies that provide additional insight.

Here we propose using extensions of the RBDO methodology developed in Chan et al. (2006, 2007) to study environmental policy design problems. This requires using realistic probability distributions that have been developed to analyze pollutant emissions and transport. For instance, Dabberdt et al. estimated the pollution density of a 3-h H2SO4 release accident on a neighborhood using probabilistic distributions (Dabberdt and Miller 2000). Turaglioglu et al. (2005) obtained the density of SO2 and the total suspended particulate in Erzerum, Turkey using probabilistic approaches. Zhang et al. (1994) modeled actual on-road CO and HC emissions data using Γ-distributions. Kentel et al. (2004) analyzed health risks associated with contaminated water probabilistically using both fuzzy and random variables. Benekos et al. (2007) obtained the distribution of groundwater contaminations using a two-stage Monte Carlo analysis.

Research activities that are the most relevant to the work presented here have employed fuzzy and stochastic mathematical programming (FMP and SMP). FMP uses a fuzzy membership function to quantify ‘vagueness’ of an uncertain quantity and then applies possibility theory in analyzing the uncertainty. For example, Lin et al. (2008) developed a hybrid-fuzzy two-stage stochastic energy systems planning model (IFTEM) to deal with various uncertainties that can be expressed as fuzzy or interval numbers. Guo et al. (2008) proposed a semidefinite programming in municipal solid waste (MSW) management under fuzzy uncertainty. SMP, on the other hand, is based on probability theory with information acquired from field measurements. For example, Cooper et al. (1996) surveyed the application of SMP in air pollution managements. Chance-constraints have been used extensively in SMP in studying the impact of regional air quality under uncertainty (Cooper et al. 1996; Liu et al. 2003). Although SMP considers random variables/parameters within an optimization framework, linear chance-constrained formulations have only limited application to environmental problems since they tend to be highly nonlinear.

To address these limitations, in Chan et al. (2007) we extended a filter-based sequential linear programming algorithm to handle RBDO problems with uncertainties modeled as random variables. As discussed in Fletcher and Leyffer (2002), similar structure and convergence arguments could be used in creating a filter-based SQP algorithm. In Chan et al. (2006), we showed that active set strategies can be integrated with the filter-SQP algorithm to improve the efficiency of the algorithm by only calculating constraints that are likely to be active in the next iteration. For problems with a large number of constraints or computational expensive constraints (such as the probability constraints in RBDO), the active set based algorithm significantly improves the computational efficiency. However, the algorithm still focus on uncertainties that are modeled as normal distributions. In addition, current RBDO formulation handles constraints individually while in environmental problems the decisions are made to consider all constraint reliability jointly.

The main contribution of this paper is that the filter-based SQP algorithm developed for RBDO problems in Chan et al. (2007) and the active set strategies proposed in Chan et al. (2006) are extended to handle a primary challenge in environmental decision-making, namely, handling non-normal and discrete random parameters. A further issue is the joint reliability of constraint satisfaction. This issue is important in environmental policymaking since it would be better for the population as a whole to establish a 90% reliability of compliance for all pollutants at all receptor locations in the airshed than it would be to establish a 90% reliability of compliance for each pollutant at each receptor site. Mathematically speaking, at the solution point to Eq. 1, the probability of violating any given constraint is determined by \(P_{{\rm f}, j}\). The greater the number of constraints considered, and the greater the independence of these constraints, the greater is the probability that some constraint in the constraint set will be violated. In other words, from the perspective of Fig. 1, the importance to consider the joint reliability of the constraint set rather than the separate reliability of the individual constraints increases with the number and independence of receptors and pollutants, to the extent that this increases the likelihood of a higher number of active constraints at the optimum.

The reformulation of Eq. 1 to consider joint constraint reliability is provided in Eq. 2. This type of problem is often referred to as a system reliability problem in the literature Kuo et al. (2000). While mathematically system reliability and joint reliability are equivalent, in practice system reliability is a term that is typically used in reference to mixed parallel and series systems (e.g., see Kuo et al. 2000). Joint reliability on the other hand is a statistical concept that can be used more generally to describe

This paper solves the problem of Eq. 2 in a computationally efficient manner using an algorithmic approach that can be readily scaled up to large problems. Section 2 solves Eq. 2 under discrete random parameters, non-normal random parameters, and joint constraint reliability building from the approach detailed in Chan et al. (2006). The application of the extended RBDO approach to the simplified airshed management problem of Fig. 1 is described in Sect. 3 with results and discussion provided in Sect. 4.

2 Methodology

2.1 Optimization algorithm

To solve Eq. 2, we begin with the sequential linear programming (SLP) algorithm for RBDO developed in Chan et al. (2006, 2007). Since the objective function is deterministic, the real challenge of the algorithm lies in the method of calculating the constraints. Let k be the iteration counter and \({\varvec{\mu}}_{\mathbf{X}}^k\) be the current design at the iteration. The SLP algorithm iteratively updates and improves a design until certain convergence criteria are satisfied. At the kth iteration, each probabilistic constraint is converted into an equivalent deterministic one using different reliability methods depending on the curvatures of the constraint and its relative importance on improving the design. This conversion transforms the original nonlinear optimization problem with probabilistic constraints (called the probabilistic NLP) to an equivalent deterministic nonlinear optimization problem (called the deterministic NLP). The SLP algorithm then linearizes the deterministic NLP at the current design as a linear programming subproblem (LP). By solving a sequence of LPs, we then solve the original RBDO problems. A similar structure can be used in creating a filter-based sequential quadratic programming algorithm (SQP) as shown in Fletcher and Leyffer (2002). The main difference is that instead of LP subproblems, QP subproblems are created. The QP subproblems have linear constraints, and SLP method developed in Chan et al. (2006, 2007) can be rapidly extended to SQP. A summary flowchart for the method employed in this paper is provided in Fig. 2.

Once a deterministic QP is formed at \({\varvec{\mu}}_{\mathbf{X}}^k\), standard methods such as those described in Luenberger and Ye (2008) for solving QP problems can then be used. The solution of the QP subproblem is the step vector s k. For convergence purposes, \({\varvec{\mu}}_{\mathbf{X}}^k + {\bf s}^k\) is temporarily assigned as a trial design. A trial design that either improves the objective function values or reduces the constraint violations will formally become the updated design of the iteration (k + 1). The design therefore proceeds to the next design value and iterates as shown in Fig. 2 until converged.

The methods in Chan et al. (2006, 2007) transform probabilistic constraints into deterministic ones using different approaches depending on the activity and the curvature of the constraint. Figure 3 illustrates the decision process for using different approaches, namely the reliability contour approach proposed in this paper, the first order reliability method (FORM), and the second order reliability method (SORM). When constraints are functions of non-normal random parameters, the proposed reliability contour approach will be used. For constraints that are functions of normal random design variables and/or parameters, we will first check whether the constraint is in the current extended active set. Constraints in the extended active set are possibly violated and therefore have larger influences in the next iteration. Principal curvatures of these constraints are then calculated and compared with a predetermined value. When curvatures of these constraints are greater than the predetermined value, using FORM will result in significant errors and therefore SORM is used instead. For constraints that are either unimportant (not in the extended active set) or relatively linear (small curvatures), FORM is used.

Converting probabilistic constraints into deterministic ones requires the majority of computation time. To reduce the computational burden, the active set strategies in Chan et al. (2006) assure that intense calculations are reserved only for constraints that are likely to be active at potentially optimal points being tested. The rest of the constraint set is only approximated to the level needed to determine whether constraints might become active in the next iteration.

A design is feasible when it satisfies all constraint requirements. The satisfaction of a joint reliability constraint at \({\varvec{\mu}}_{\mathbf{X}}^k\) requires calculating the values of all m constraints and the interactions between them. As will be discussed in Sec. 4, the calculation of reliability for joint constraints does not yield analytical solutions and therefore must be evaluated using a computationally intensive sampling approach such as Monte Carlo. Fortunately, the upper and lower bounds within which the exact reliability value should be located can be analytically estimated. Here we use as the feasibility index of the current design point the upper bound of the joint constraint reliability. A design with upper bound less than or equal to a preset value is likely feasible. Then the resultant design can be verified using a simulation technique such as Monte Carlo Analysis.

2.2 Random and discrete parameter uncertainty

Parameters remain fixed during optimization but stochastic parameters (e.g., windspeed and direction) will vary. To clearify our treatment of random parameters, we consider Eq. 3.

The failure probability of a constraint with both random design variables X and random parameters P is calculated by integrating the joint probability density function \(f_{\bf X}, {\bf P}\) over the failure domain g(X, P) > 0 as shown in Eq. 3. Since at a given design point the nominal values of X (i.e., μ X ) are fixed, one can treat a random design parameter as a random design variable with a fixed nominal value. Therefore, when treating parameters as design variables, here we will simply assign a new design vector \({\mathbf{X}}^{\prime}=[{\bf X}, {\bf P}] \). Given the ability to treat parameters as “unchanging variables” in an optimization routine, random parameters P will be included within X in the rest of this paper for notational simplicity.

To illustrate how we will treat discrete random parameters, we now consider the simple example of \(g({\bf X})=X_1+X_2\). Let X 1 have a standard normal distribution and let X 2 have the following discrete distribution:

The probability of constraint violation is calculated using total reliability theory (Ang and Tang 2007) as:

To represent X 2 in a continuous optimization algorithm, a scaled delta function \(\theta(x,\mu,\sigma)=e^{-\left(\frac{{x-\mu}}{ {2\sigma}}\right)^2}\) is used to approximate the probability mass function (PMF) of X 2 as a continuous probability density function (PDF) via Eq. 6.

The accuracy of this approximation depends on the value of σ. The smaller σ is, the better approximation it becomes. The conceptual approach is summarized in Fig. 4. The choice of σ is problem dependent and we suggest that σ is small enough such that the equivalent continuous PDF can be viewed as a reasonable approximate to the discrete PMF. For example in Fig. 4, the PDF for σ = 0.2 smoothes out the discrete behavior of the original PMF as compared with σ = 0.05. In addition the ‘modes’ of the equivalent PDF disconnected with each other when σ = 0.05. Therefore σ = 0.05 is a good PDF approximate to Eq. 5.

As we implemented the proposed method to treat discrete random variables, we found that the converted continuous distributions can have numerical challenges that prevent the arbitrarily small assignment of σ. In short, the selection of the σ for each θ in Eq. 6 will affect the approximation accuracy directly. Appropriate σ values need to be determined for different problems by conducting a pre-processing sensitivity analysis. This is computationally straightforward and only must be conducted one time.

2.3 Non-normal uncertainty

The calculation of probabilistic constraints in Eq. 1 represents the majority of function evaluations during optimization. Several methods have been proposed to improve the efficiency and accuracy of calculating constraint probabilities (Rackwitz 2001). Importantly, each of these methods has essentially focused on Gaussian distributed variables. The presence of non-Gaussian distributions makes calculating the constraint in Eq. 3 more challenging. Methods for dealing with non-Gaussian distributed random quantities have been discussed in the literature (Ditlevsen 1981; Melchers 1987; Hohenbichler and Rackwitz 1983), but they are computationally expensive and not well-suited for large problems as we discuss below.

In existing RBDO solution strategies, significant advantages in computing probabilities follow from the rotational symmetry of a Gaussian distribution. Due to this symmetry, no matter which direction a linear constraint is with respect to the current design point, the shortest distance from the design point to the constraint can be used to calculate the constraint probabilities. As described extensively in the literature (e.g., see Hohenbichler and Rackwitz 1981; Melchers 1987; Hohenbichler and Rackwitz 1983), the first order reliability method (FORM) and the second order reliability method (SORM) use this characteristic of a normal distribution by first converting a normal distribution X ∼N(μ X , σ 2 X ) into a standard normal distribution U ∼N(0, 12) via

After this conversion, the premise of the FORM method is that a constraint probability can be approximated as Φ(−β) where β is the shortest distance from the origin to the constraint function in U-space. The point that lies on the constraint boundary (also called the limit state function) having the shortest distance to the origin is called the most probable point (MPP). Each constraint has its own MPP and therefore the number of MPPs is the same as the number of limit state functions (constraints), m. SORM extends FORM by providing a more accurate estimation of constraint probabilities by considering the principal curvatures \(\varvec{\kappa}\) of each constraint as shown in Eq. 8.

For independent non-normal random variables, it has been shown that a transformation T can be applied to X such that

Several such transformations are available in the literature (Melchers 1987). The simplest transformation is given by Eq. 9 and requires that the cumulative distribution function (CDF) of the ith random variable X i at x i should be the same as the CDF of a standard normal variable at u i .

Due to the nonlinearity of the transformation, a linear constraint in the X-space will become nonlinear in the U-space. Then applying the first order Taylor series expansion to Eq. 9 around a point x e i , one can get the following result:

If the following assignments are made,

then the standard FORM and SORM techniques can be extended to non-normal variables (Melchers 1987; Hohenbichler and Rackwitz 1983).

It can be shown that x e i mathematically is the MPP of a design (Melchers 1987). The typical solution approach for non-normal random variables then involves using the equivalent normal distribution in finding MPPs and then using the MPP to update equivalent normal parameters. In practice this problem has convergence difficulties and is computationally intensive since calculating the location of the MPP for a nonlinear function is by itself an optimization process. The conversion of constraints from the X-space to the U-space via the nonlinear relationship of Eq. 9 is also computationally intensive.

To reduce the number of required calculations in Eq. 3, we start by applying an active set strategy which reduces the number of constraints that must be considered during any iteration of the optimization process. Following the approach detailed in Chan et al. (2006), a working set of constraints \({{\mathcal{G}}}^k\) is established at each design iteration k which only includes constraints that are active or possibly active.

For constraints inside the working set, we propose a means to improve the efficiency of calculating Eq. 3 relative to the approach represented by Eqs. 10 and 11. However, before describing this alternative solution approach for non-normal distributions, we define a reliability contour surface Ψ = 0. For standard normal random variables U, we define a reliability contour surface as a contour satisfying

for any linear constraint l. FORM states that the shortest distance from the origin to limit states l must be \({\beta}= \Upphi^{-1}(P_{\rm f})\). Hence a contour with radius β around the origin is formed in the U-space as Eq. 13.

When design variables are not normal, this common radius contour only exists in the standard U-space. By mapping this common radius contour from U-space to X-space using Eq. 9, a reliability contour surface in the X-space as shown in Eq. 14 is created.

This surface remains constant around the design point and therefore once the contour is created, the computational time at each iteration is reduced dramatically. Therefore, in contrast to previous RBDO approaches, we propose to use this X-space reliability contour surface Ψ(μ X , x) = 0 to identify probabilistic constraint feasibility.

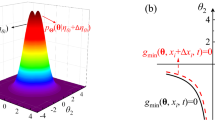

As an example, consider a constraint Pr[g(X 1, X 2) > 0] ≤ P f where both design variables have Weibull distributions, their PDF being in Eq. 15.

where η = 1, α = 1.5. This two dimensional Weibull reliability contour can be written as Eq. 16.

Figure 5 shows this Weibull reliability contour with \(P_{\rm f} = 1\%\) . Obtaining Eq. 14 only requires that all CDFs are analytical, which was also necessary using the method of finding equivalent normal distributions using Eq. 9. However, the reliability contour method has an advantage since the calculations only need to be performed one time, as a pre-processing step, rather than at each design iteration.

99% Reliability contour for Weibull distributions presented in Eq. 16

The accuracy of using the reliability contour surface approach is the same as using FORM and SORM. At every design point, the limit state function is transferred from the X-space to the U-space. In FORM, if the U-space limit state function, g U j , is tangent to the β-sphere, the tangent point is the MPP and the probability is equivalent to the probability of its linearization at the MPP point. A similar statement is true for SORM when the curvature corrected equivalent reliability index β e is used instead of β. The transformation in Eq. 9 ensures that when the MPP in U-space is mapped back to the X-space it will still be the tangent point between the reliability contour surface and the X-space limit state, g j . Let the linearization of the limit state at the MPP in X-space be \(\hat{g}_j\) and in the U-space be \(\hat{g}_j^U\). The probability of violating the constraint in X-space is approximated as the probability of violating \(\hat{g}_j\) which translates to \(\hat{g}^U_j\) in U-space. Hence, using the reliability contour surface method, we can obtain the same accuracy as FORM (via β) and SORM (via β e ).

We can achieve further computational efficiency by not considering constraints that can be proven to be inactive. In the optimization routine, the number of required computations is reduced significantly because the reliability contour method is integrated with an active set strategy, such that only constraints in the working set are considered. In addition the transformation of Eq. 9 is not calculated during any iteration and instead the reliability contour surface in the X-space (Eq. 14) is only calculated once.

At the current design step, a constraint g j is active if it has a common tangent with the reliability contour surface; it is inactive if μX is feasible and does not intersect with Ψ = 0; it is infeasible if μX is infeasible or μ X is feasible but it has more than one intersection with Ψ = 0. The point that lies on the reliability contour with the minimal distance to a constraint is called an MPP estimate. The MPP estimate will be the actual MPP for an active constraint, and will be an estimate of the actual MPP for an inactive constraint. Although the MPP estimate and the actual MPP can be significantly different for an inactive constraint, the constraint value at the MPP estimate will always provide correct feasibility information (i.e., “yes” or “no”) for the probabilistic constraint it considers. This is all that solving the problem requires.

The relationship between actual MPP values and MPP estimates is illustrated in Fig. 6 with two constraints g 1 and g 2. Constraint g 1 is active at the current design point μX while g 2 is inactive. Actual MPPs, denoted as \({\mathbf{x}}_{{{\rm{MPP}}_{j}}},\) are shown as triangles; MPP estimates, \(\hat{{\bf x}}_{{\rm MPP}_j}\) are shown as circles. The actual MPPs are on the constraint boundary and the MPP estimates are on the reliability contour. Since g 1 is active, \({\mathbf{x}}_{{\rm MPP}_1}=\hat{{\mathbf{x}}}_{{\rm MPP}_1}.\)

The location of the MPP estimate on the reliability contour is found by matching the normal vectors (Eq. 17a) and values (Eq. 17b) of the reliability contour and constraint boundary.

To reduce the computational burden in solving Eq. 17a and 17b, we propose the following. For inactive constraints, the exact locations of MPP estimates are not critical as long as they provide the correct feasibility information. Therefore we create a “safe zone” of feasibility that is computationally less intensive than finding the MPP estimate directly on the reliability contour. This safe zone is created with a common radius emanating from the design point that is constant in all directions and equal to the maximum distance from the design point to the reliability contour (radius = ρ in Fig. 6). If the constraint is found to intersect the safe zone reliability contour, then the constraint is included in the active set and the MPP estimate for that constraint is calculated on the actual reliability contour to determine feasibility of the constraint. The radius of the safe zone reliability contour is obtained by solving Eq. 18, with the point x t in Fig. 6 illustrating the maximal radius point that defines the value ρ.

The safe zone reliability contour is centered at the current design point and shown in Fig. 6.

For highly skewed distributions, the actual reliability contour surface will be very asymmetric and therefore using a constant radius safe zone will be conservative. This conservative estimate may result in additional constraints in the active set, but the net computational burden will usually be much lower given that considerable gains are made by avoiding unnecessary MPP calculations on the actual reliability surface. Overall, the method has significant speed advantages with equivalent accuracy to existing methods.

The solution approach using a reliability contour surface and its safe zone can be summarized as follows:

-

Step 1

At the current point μ k X , find the gradient of each constraint;

-

Step 2

Use Eq. 14 to construct the analytical form of reliability contours given all random quantities;

-

Step 3

Find the safe zone reliability contour radius using Eq. 18;

-

Step 4

For each constraint, use the constraint gradient to find the MPP estimate on the safe zone reliability contour;

-

Step 5

For inactive constraints, the safe zone reliability contour is used for calculating MPP estimates;

-

Step 6

For active constraints, the actual reliability contour surface is used to locate the MPP estimates;

-

Step 7

Constraint feasibilities are determined via comparison to their values at the MPP estimates.

Consider constraint g j . The first two steps require calculating ∇g j and \(\Uppsi(\mu_{\mathbf{X}}^k, {\bf x}) =0\) at μ k X . In Step 3, ρ is calculated via Eq. 18 and the safe zone reliability contour is formed as Eq. 19.

The MPP estimate \({\bf x}_{{{\rm{MPP}}_{j}}}\) is calculated as Eq. 20 in Step 4.

In Step 5, if \(g_j(\hat{{\bf x}}_{{\rm MPP}_j}) < 0\), g j is inactive. Otherwise it is active and the actual \({\bf x}_{{{\rm{MPP}}_{j}}}\) needs to be calculated in Step 6 via Eq. (17a–17b). If \(g_j ({\bf x}_{{{\rm{MPP}}_{j}}}) {\le}0\), the current design point μ k X is feasible to Eq. 1. Otherwise it is infeasible and the feasibility results are sent back to the optimizer. Compared with the standard RBDO approach for handling non-normal random parameters, reliability contours need only be calculated once as a preprocessing step. Another benefit of the process is that the integration of reliability contours in optimization algorithms will not have the convergence problems existing in the standard approach between using MPPs to update equivalent normal distributions.

2.4 Joint constraint reliability

Constraints in standard RBDO formulations are written such that the probability of violating each constraint does not exceed an acceptable limit.

Therefore in a hypothetical RBDO formulation with 100 independent constraints (whose constraint sets are mutually exclusive) and a reliability target of 99% in Eq. 4, the expected number of constraint violations out of the 100 constraints is one. Even though this is a very unlikely example, it demonstrates the usefulness of being able to optimize for joint reliability in critical problems such as those considering human and ecosystem health.

In the problem represented by Fig. 1, it is best to consider the probability of any constraint being violated, regardless of whether that constraint corresponds to multiple receptor points or pollutants. If we consider the probability that any constraint is violated, then we must consider a union of events. Let F j be the infeasible (failure) domain of constraint g j . The problem is then written as Eq. 22:

Calculating the constraint feasibility in Eq. 22 is challenging. In the simplest case with only linear constraints and normally distributed random variables, the probability of union of failure events yields the multivariate normal integral in Eq. 23 (Hohenbichler and Rackwitz 1983).

Calculating the exact solutions of Eq. 23 is impractical in most cases. Ditlevsen proposed a method in estimating the upper and the lower bounds within which the exact values of Eq. 23 will be located (Ditlevsen 1979). He calculates the general upper and lower bounds as

The union of multiple failure domains becomes increasingly difficult to calculate with an increasing number of constraints. One advantage of using the upper and lower bounds is that they are calculated from unions of any two failure domains. Once the relationship between two failure surfaces is known, Eq. 24 and 25 are much easier to calculate.

We propose here to use a conservative approach: stating that as long as the upper bound in Eq. 24 is less than or equal to an acceptable failure probability P f, then the overall system has feasible joint reliability (i.e., it is probabilistically feasible for the joint constraint case). To incorporate this approach within the active set strategy, the upper bound of the joint constraint reliability Eq. 24 is calculated only for constraints in the working set \({{\mathcal{G}}}^k\). For constraints that do not have the potential to be violated, it is assumed that their contribution to joint reliability is negligible. The overall process of considering joint constraint reliability in the active set strategy is as follows:

-

1.

Obtain the working set \({{\mathcal{G}}}^k\) at the kth iteration from the optimizer;

-

2.

Calculate individual constraint violations for \(j \notin {{\mathcal{G}}}^k\);

-

3.

Calculate the upper bound of joint constraint reliability for \(j \in {{\mathcal{G}}}^k;\)

-

4.

Return all constraint results to the optimizer.

2.5 Example with non-normal random variables and joint constraint reliability

This section provides a simple example pulling together the concepts from Sects. 3 and 4 for illustration. First we reiterate that the importance of calculating the joint constraint reliability depends on how much the failure domains of active constraints overlap with each other. For problems with a large portion of overlapping failure domains, considering joint reliability might not make a significant difference. However, for problems without overlapping failure domains of active constraints, joint reliability can change results quite significantly.

Figure 7 illustrates a simple problem with two variables and two linear constraints, Eq. 26, where the random variables X 1 and X 2 both have Weibull distributions with shape and scale parameters being 1.

With the probability of failure for each constraint set at 10% in Eq. 26, the optimum is found at [4.697, 4.697]. In this case, the probability of violating both constraints is negligible, in other words the quantity Pr[g 1 > 0 ∩ g 2 > 0] is approximately zero. The probability of violating either constraint becomes

and it is actually 20%, making the joint constraint reliability at this design point only 80%. This means that while there is a 10% chance of violating the first constraint and a 10% chance for violating the second constraint, there is actually a 20% chance of violating either constraint. To achieve a 10% chance of violating either constraint (i.e., to set the joint constraint reliability at 10%), the problem is reformulated as Eq. 28.

The optimum is now [4.004, 4.004] with a joint reliability being 90%. The failure probability of each constraint in this case is 5%. Figure 7 shows the reliability contours for the two problems. The probability of being in the joint failure domain of both constraints simultaneously is small. Therefore, to achieve a high joint reliability the reliability contour becomes larger and the design becomes more conservative.

Example of non-normal random variables considering joint constraint reliability from Eq. 26

3 Air pollution demonstration study: model development

To demonstrate the concepts developed in Sect. 2 and to evaluate their performance in a small-scale example problem with similarity to real-world modeling, we consider the case introduced in Fig. 1 with the angle ω = 50°. In this case study we are interested in reducing tailpipe emissions from vehicles on the highways to bring the area into compliance with the NAAQS by reducing speed limits. Vehicle operational speed has significant impact on tailpipe emissions (Joumard 1986) and fuel consumption (Kenworthy et al. 1986). These relationships are highly nonlinear and subject to inter-vehicle variability as well as operational uncertainties. Setting an appropriate speed limit is inherently a trade-off between driver safety, time savings, vehicle emissions, and fuel economy (Sinha 2000). In what follows, we create a scenario for the objective function and constraints and then apply the methods from Sect. 2. We validate the optimum using Monte Carlo simulation with one million samples.

3.1 Objective function

Several studies have focused on the effects of regulatory speed limits on safety and other economic metrics (e.g., see TRB 1998). Equation 29 describes an objective that considers safety and time savings as a function of speed limit (Ashenfelter and Greenstone 2004).

where

Here D(v) is a measure of safety in terms of property damage (in 1,000 dollars) per 100 million vehicle-miles and is defined as the probability of being involved in a crash multiplied by the severity of each crash at different speeds. Solomon (1983) and Hauer (1971) found that the probability of being involved in a crash per vehicle-mile as a function of on-road vehicle speeds follows a U-shaped curve. Speed values around the median speed have the lowest probability of being in a crash. Crash severity is measured by speed differences before and after the crash (Joksch 1993). Assuming the final speed after the crash is zero, this crash severity measure is proportional to the speed before the crash. Fitting the data from Solomon (1983), we obtain the safety measure given in Eq. 30 as an example of the overall price society might be willing to pay to avoid each accident.

The measure c(v) reflects the value of time savings associated with increased vehicle velocity. Assuming the average wage per person is w (dollars/h), c(v) is used as the cost of an hour spent travelling without working. The overall traveling time for a trip of length s (in km) is

where v(km/h) is the speed of an on-road vehicle. The overall cost (dollars) for trip s is then

Combining the societal costs of property damage (medical and social welfare) and cost of time spent on travelling, the objective function in Eq. 29 represents an economic measure of the pros and cons of driving at a specific speed.

3.2 Constraint functions

The constraints reflect the desire to keep overall emissions of CO and NOx from on-road vehicle tailpipe emissions within the values set by the NAAQS. Here we will consider only the one-hour standard. The current NAAQS states that the one-hour concentration of CO cannot exceed 40 mg/m3 and the annual average concentration of NOx cannot exceed 100 μg/m3. The NAAQS does not include a 1 h limit for NOx, and therefore in this example the one-hour ambient air quality standard for NOx (470 μg/m3) in California is considered here. For the demonstration problem of Fig. 1, we use the infinite line source dispersion model Eq. 33 as described by Gilbert (1996) while recognizing that this simplistic infinite line source can be readily replaced with more complex modeling approaches such as found in CALINE4 (Benson 1984).

In Eq. 33, the index i represents the two highway (infinite line source) systems in Fig. 1, j represents different pollutants (CO and NOx), and q is the emission rates of the pollutants on the roads that are a product of the emissions factors (EF) from the vehicles and the vehicle traffic density T in Eq. 34:

We assume that all vehicles on both highway systems are identical mid-size gasoline-powered passenger vehicles (it is readily possible to add variation here, but for this demonstration example we avoid it). The advanced vehicle simulator (ADVISOR) (Markel et al. 2002) was used to obtain the emission factors for the baseline vehicle at different speeds. A relationship between speed and emissions factors for CO and NOx was estimated as Eqs. 35 and 36 with the norms of the residuals (Montgomery 2005) being 0.0025 and 1.83 × 10−4, respectively.

Although emission rates may differ between vehicles due to operational variation and maintenance, these uncertainties were not considered in this example. Highway traffic is modeled as constant flow per second with each highway having four lanes in each direction. The constant-flow traffic is shown in Fig. 8 and modeled as Eq. 2. This source of variation is also not modeled for demonstration purposes but could be included on the basis of traffic studies.

3.3 Quantification of uncertainties

In Eq. 33, we consider uncertainties that arise from four sources: wind speed and direction, dispersion coefficient and driver speed responses to posted speed limits. The modeling of each of these uncertainties is described below.

3.3.1 Wind speed and direction

The probability density functions of wind speed and wind directions are modeled based on (McWilliams et al. 1979; McWilliams and Sprevak 1980). Unlike the method of Ramirez which uses a two-parameter Weibull distribution (Ramirez and Carta 2005), McWilliams et al. decompose wind speed into two components: one along the prevailing wind direction and one perpendicular to it. We assign the actual wind speed as U h , the prevailing wind direction as ψ, the wind speed along the prevailing wind direction as U y , and the wind speed perpendicular to the prevailing wind direction as U x . Figure 9 illustrates the relationship between the wind components. McWilliams et al. (1979) used this model with the wind speed distributions U y and U x being normally distributed as Eq. 38.

According to McWilliams and Sprevak (1980), the distributions for the observed wind speed U h and wind direction θ (which is the wind direction relative to the prevailing wind direction ψ) can then be calculated since \(U_h=\sqrt{U_x^2+U_y^2}\) and θ = tan(U x /U y ). Equation 39 is the resulting PDF for U h and θ, where I 0 is the modified Bessel function of the first kind.

Using the method described in McWilliams and Sprevak (1980), we obtained wind speed and direction data data from the NCDC (2009) for Detroit, Michigan during the summer evening rush hour (5 pm–6 pm). The data indicate that the prevailing wind direction is at 215° with μ = −0.825 and σ = 3.177 that can be utilized in Eq. 39. The evening rush hour is selected as it is expected for the situation in Fig. 1 that this is the time period when the constraints are most likely to be violated in the hypothetical airshed. Therefore, the 90% reliability can be considered a worst case since it is calculated using the most vulnerable time for the airshed. Figure 10a and b demonstrate a reasonable agreement between data histograms and the PDF predictions using Eq. 39.

3.3.2 Dispersion coefficient

In a Gaussian dispersion model, the dispersion coefficients σ z for both roads are a function of the distance from the site to the line source L. The dispersion coefficient σ z is typically represented by three parameters c, d, f (Martin 1976).

Values of c, d, f depend on an ambient atmospheric condition called ‘stability class’. Stability class describes the effective vertical mixing of a parcel of air existing in the airshed and can be determined as shown in Table 1. Table 2 shows the values of c, d, f for different stability classes (Gilbert 1996). Solar insolation from NCDC data is quantified as cloudiness or sky cover, which is measured from 0/8 to 8/8 with 0/8 being clear sky and 8/8 being overcast. 0/8 to 3/8 is considered as strong insolation, 3/8 to 6/8 is considered as moderate insolation, and above 6/8 is considered considered as slight insolation. The data for the Detroit area reveal that solar insolation in summer is independent of wind speed and reasonably modeled as a uniform distribution.

Given distributions of solar insolation from (NCDC 2009) and the wind speed from this study, distributions of c, d, f can be obtained by the stability class distribution from Table 1 and the corresponding dispersion coefficient values from Table 2. The resulting distribution of the c, d, f are discrete and the dispersion coefficient σ z is calculated as shown in Eq. 41. The existence of discrete variables in the Gaussian dispersion model means that a probabilistic constraint will be formulated as Bayesian conditional probability such that continuous distributions are used to approximate the distributions of c, d, f as discussed in Sect. 2.

where

3.3.3 Vehicle speed

A Federal Highway Administration report (FHWA 1995) indicates that for highways with speed limit 55 MPH, an average speed of 56.9 MPH and a SD of 7 are observed. Many studies in the literature also show that the observed vehicle speeds can be reasonably modeled using normal distributions (Berry and Belmont 1951; Hossain and Iqbal 1999; Katti and Raghavachari 1986; Kumar and Rao 1998). Based on these studies, we assume that the actual vehicle speeds on both roads follow normal distributions with mean equaling to the speed limit and a standard deviation σ V = 7 MPH (FHWA 1995). The original speed limits on both highways are 70 MPH.

4 Air pollution study: results and discussions

In this study, we have two design variables (speed limits of two nearby highways), one joint reliability with two probabilistic constraints on CO and NOx emissions regulations. Four random parameters are considered including wind speed, wind direction, on-road vehicle speeds and dispersion coefficient. Three different scenarios are formulated using the objective function and constraints as described in Sect. 3. The first scenario is a deterministic optimization problem, as shown in Eq. 42, that does not consider uncertainties. The second scenario considers uncertainties from wind speed, wind direction, dispersion coefficient as well as actual on-road vehicle speeds with constraint probabilities formulated separately as shown in Eq. 43. The third scenario, as shown in Eq. 44, is the RBDO formulation with joint probabilities. In all scenarios, the speed limits of both highways are set to be identical. In practice speed limits can be set independently. Policy makers can even partition one road into several segments and then assign each segment its own speed limit to match real-world problems.

Scenario 1: Deterministic optimal speed limit without considering uncertainties

Solving Scenario 1 (Eq. 42), it is found that none of the air quality constraints are active and the optimum is v* = 81.9 MPH (36.6 m/s). At this speed limit, pollution concentrations during rush hour at the receptor location of Fig. 1 are 12 mg/m3 for CO and 470 μg/m3 for NOx with the NOx constraint being active. After adding the uncertainties of wind speed, wind direction, stability class, and actual on-road vehicle random design variables/parameters to this deterministic optimum, it is found that the reliability of satisfying NAAQ standards is only 72.0% with respect to the CO standard and only 48.0% with respect to the NOx standard. The overall societal cost is $597,910. The example demonstrates that when significant variability in system parameters exists, constraint violations can occur even when constraints are not active deterministically. Therefore, without incorporating uncertainty into design optimization, the compliance of NAAQS using deterministic optimization may not be sufficiently reliable.

Scenarios 2 and 3: Probabilistic optimal speed limit considering uncertainties

If the desired compliance reliability is 90% for each constraint (Scenario 2, Eq. 43), the optimum speed limit is reduced to 62.9 MPH (28.1 m/s). The overall societal cost increases to $725,180, an approximately 21.3% increase relative to the 70 MPH speed limit associated with the constraint to achieve the NAAQS standards. By considering joint constraint reliability in Scenario 3 at 90%, the optimal speed limit is reduced to 44.3 MPH (19.8 m/s). Table 3 compares the results between the different scenarios. The probabilistic optimum of Eq. 43 has reliability to comply to current NAAQS of NOx and CO as 89.9 and 90.1%, respectively. The joint reliability at this speed limit is 79.9%. The probabilistic optimum of Eq. 44 has the desired joint reliability (90.0%). The example demonstrates that extensions of the active set SQP algorithm can integrate with reliability contour approach in solving problems with non-normal and/or discrete distributed random parameters with joint constraint reliability.

After solving this problem, we note that the complexity of the CDFs and PDFs in the problem makes sampling methods challenging to apply in this example. Typically, random samples are created by generating N random samples ξ i from [0,1] uniformly. Inverse CDFs are then used to find the corresponding random sample via Eq. 45.

Obtaining the inverse of CDF is computationally intensive and a large number of random samples need to be generated to maintain high accuracy. Our experience in this example shows that the computational requirement for generating random samples prohibit many sampling techniques from being plausible.

Overall, this example has shown that the RBDO methodology outlined in Sect. 2 is straightforward and efficient to apply. Running the case study which has two design variables (speed limits for both highways) and one joint reliability with four constraints takes 32 s on an Windows quad-core 2.4GHz with 4GB of memory. One issue we encountered is that calculating the maximum radius in Eq. 18 as a pre-processing step in the approach appears to be dependent on the algorithm used. For a gradient-based algorithm, a good starting point must be selected such that the problem can converge while satisfying the equality constraint. On the other hand, non-gradient based algorithms do not require the starting point knowledge as an a priori but can have difficulties satisfying the equality. Therefore we found that a combination of both algorithms with good reliability. A non-gradient based algorithm is first applied and the optimum is selected as the starting point for the gradient based algorithm. Although it is a process requiring significant effort, Eq. 18 needs only to be executed once for the entire problem. This modest amount of pre-processing opens the door to rapid calculation of design optima for large scale problems with non-normal variables/parameters and joint reliability.

The results of this case study naturally depend on the assumptions made in the model and are only presented here to show the feasibility of the approach and to suggest that the method can be applied to problems of a larger scale. For instance, consider a large city with q number of roads. Let the speed limits of all road be the policy decisions to be made, therefore the size of q. If r types of pollutants are of concern, the overall constraints have size r × q. The main difference from the demonstrated two-road example is the construction of the reliability contour. If one speed limit is assigned to each road, the overall design freedom is q. As a pre-processing step, a reliability contour and ‘safe zone’ surface of dimension q need to be formed. Once the reliability contour and the safe zone are both obtained, the policy decision problem simply scales up by the demonstration by q. Compared with the existing approach where adding one constraint would require an additional equivalent normal distribution finding process, the proposed method becomes more advantageous with the increase of problem dimensions. As the number of policy decision variables increases, complicated environmental regulation setting in airsheds and watersheds can still be efficiently treated using the RBDO extensions proposed in this paper. In this way analytical RBDO approaches can be applied to important stochastic environmental research problems.

5 Conclusions

In this research, we proposed a reliability contour approach for solving RBDO problems with non-normally distributed random parameters and discrete random parameters, and a approach that calculates the upper bound of the joint reliability in constraint evaluation. Both approaches are integrated in a modified sequential quadratic programming algorithm with active set strategies to solve problems with a large number of constaints efficiently. The proposed methodology was demonstrated on a simplified airshed example where CO and NOx standards are violated and to be brought into compliance by changing the speed limits of two nearby highways. The extended RBDO framework developed here can be applied to complex environmental regulations setting in airsheds and watersheds given its computational efficiency and accuracy.

References

Andre M, Hammarstrom U (2000) Driving speeds in europe for pollutant emissions estimation. Transp Res D: Transp Environ 5(5):321–335

Ang H-S, Tang WH (2007) Probability concepts in engineering: emphasis on application in civil and environmental engineering, 2nd edn. Wiley, New York

Ashenfelter O, Greenstone M (2004) Using mandated speed limits to measure the value of a statistical life. J Polit Econ 112(1):2

Benson P (1984) CALINE4—a dispersion model for predicting air quality concentrations near roadways. Technical Report FHWA/CA/TL-84/15, California Department of Transportation

Bergin MS, Milford JB (2000) Application of bayesian monte carlo analysis to a lagrangian photochemical air quality model. Atmos Environ 34(5):781–792

Berry DS, Belmont DM (1951) Distribution of vehicle speeds and travel times. In: Proceedings of the Berkeley symposium on mathematical statistics and probability, pp 589–602

Benekos ID, Shoemaker CA, Stedinger JR (2007) Probabilistic risk and uncertainty analysis for bioremediation of four chlorinated ethenes in groundwater. Stoch Environ Res Risk Assess 21(4):375–390

Chan K-Y, Skerlos SJ, Papalambros PY (2006) Monotonicity and active set strategies in probabilistic design optimization. J Mech Des 128(4):893–900

Chan K-Y, Skerlos SJ, Papalambros PY (2007) An adaptive sequential linear programming algorithm for optimal design problems with probabilistic constraints. J Mech Des 129(2):140–149

Cooper W, Hemphill H, Huang Z, Li S, Lelas V, Sullivan D (1996) Survey of mathematical programming models in air pollution management. Eur J Oper Res 96(1):1–35

Dabberdt WF, Miller E (2000) Uncertainty, ensembles and air quality dispersion modeling: applications and challenges. Atmos Environ 34(27):4667–4673

Ditlevsen O (1979) Narrow reliability bounds for structural systems. J Struct Mech 7(4):453–472

Ditlevsen O (1981) Principle of normal tail approximation. ASCE J Eng Mech Div 107(6):1191–1208

FHWA (1995) Travel speeds, enforcement efforts, and speed-related highway statistics. Technical Report FHWA-SA-95-051, U.S. Department of Transportation

Fine J, Vuilleumier L, Reynolds S, Roth P, Brown N (2003) Evaluating uncertainties in regional photochemical air quality modeling. Annu Rev Environ Resour 28:59–106

Fletcher R (1987) Practical methods of optimization. Wiley, New York

Fletcher R, Leyffer S (2002) Nonlinear programming without a penalty function. Math Program Ser B 91(2):239–369

Flynn MR (2004) The beta distribution—a physically consistent model for human exposure to airborne contaminants. Stoch Environ Res Risk Assess 18(5):306–308

Freeman DL, Egami RT, Robinson NF, Watson JG (1983) Methodology for propagating measurement uncertainties through dispersion models. In: Proceedings of 76th air pollution control association annual meeting, vol 2, pp 15

Frey HC, Zheng J (2002) Quantification of variability and uncertainty in air pollutant emission inventories: Method and case study for utility nox emissions. J Air Waste Manage Assoc 52(9):1083–1095

Gilbert (1996) Introduction to environmental engineering and science, 2nd edn. Prentice-Hall, New Jersey

Guo P, Huang G, He L, Sun B (2008) Itssip: Interval-parameter two-stage stochastic semi-infinite programming for environmental management under uncertainty. Environ Modell Softw 23(12):1422–1437

Hauer E (1971) Accidents, overtaking and speed control. Accid Anal Prevent 3(1):1–13

Hohenbichler M, Rackwitz R (1981) Non-normal dependent vectors in structural safety. ASCE J Eng Mech Div 107(6):1227–1238

Hohenbichler M, Rackwitz R (1983) First-order concepts in system reliability. Struct Safety 1(3):177–188

Hossain M, Iqbal GA (1999) Vehicular headway distribution and free speed characteristics on two-lane two-way highways of Bangladesh. J Inst Eng (India) 80(2):77–80

Huang H, Akutsu Y, Arai M, Tamura M (2000) Two-dimensional air quality model in an urban street canyon: evaluation and sensitivity analysis. Atmos Environ 34(5):689–698

Internal Revenue Service (2005). Publication 510—gas guzzler tax. Department of Treasury

Joksch H (1993) Velocity change and fatality risk in a crash—a rule of thumb. Accid Anal Prevent 25(1):103–104

Joumard R (1986) Influence of speed limits on road and motorway on pollutant emissions. Sci Total Environ 59:87–96

Katti BK, Raghavachari S (1986) Modeling of mixed traffic speed data as inputs for the traffic simulation models. Highway Research Bulletin, vol 28. Indian Road Congress, New Delhi, pp 35–48

Kentel E, Aral MM (2004) Probabilistic-fuzzy health risk modeling. Stoch Environ Res Risk Assess 18(5):324–338

Kenworthy JR, Rainford H, Newman PWG, Lyons TJ (1986) Fuel consumption, time saving and freeway speed limits. Traffic Eng Control 27(9):455–459

Kumar VM, Rao SK (1998) Headway and speed studies on two-lane highways. Indian Highways 26(5):23–36

Kuo W, Prasad VR, Tillman FA, Hwang C-L (2001) Optimal reliability design: fundamentals and applications. Cambridge University Press, New York

Lin Q, Huang G, Bass B, Qin X (2008) IFTEM: an interval-fuzzy two-stage stochastic optimization model for regional energy systems planning under uncertainty. Energy Policy. doi:10.1016/j.enpol.2008.10.038

Liu L, Huang GH, Liu Y, Fuller GA, Zeng GM (2003) A fuzzy-stochastic robust programming model for regional air quality management under uncertainty. Eng Optim 35(2):177–199

Luenberger D, Ye Y (2008) Linear and nonlinear programming, 3rd edn. Springer, New York

Office of Mobile Sources (1999) Emission facts—the history of reducing tailpipe emissions. Technical Report EPA420-F-99-017, Environmental Protection Agency, USA

Office of Mobile Sources (1997) Minor amendments to inspection/maintenance program evaluation requirements. Technical Report EPA420-F-97-052, Environmental Protection Agency, USA

Martin DO (1976) The change of concentration standard deviation with distance. J Air Pollut Control Assoc 26(2):145–146

Markel T, Brooker A, Hendricks T, Johnson V, Kelly K, Kramer B, O’Keefe M, Sprik S, Wipke K (2002) Advisor: a systems analysis tool for advanced vehicle modeling. J Power Sources 110(2):255–266

McWilliams B, Sprevak D (1980) Estimation of the parameters of the distribution of wind speed and direction. Wind Eng 4(4):227–238

McWilliams B, Newmann MM, Sprevak D (1979) Probability distribution of wind velocity and direction. Wind Eng 3(4):269–273

Mekky A (1995) Toll revenue and traffic study of highway 407 in toronto. Transp Res Rec 1498:5–15

Melchers RE (1987) Structural reliability—analysis and prediction. Ellis Horwood Limited, Chichester

Montgomery DC (2005) Design and analysis of experiments, 6th edn. Wiley, New York

Nadarajah S, Kotz S (2006) Sums, products, and ratios for downton’s bivariate exponential distribution. Stoch Environ Res Risk Assess 20(3):164–170

National Climate Data Center (NCDC) (2009). http://www.ncdc.noaa.gov/oa/ncdc.html. U.S. Department of Commerce

National Highway Traffic Safety Administration (2000) Automobile fuel economy. Technical Report Title 49 U.S. Code, Chap 329, U.S. Department of Transportation

Rackwitz R (2001) Reliability analysis—a review and some perspective. Struct Safety 23(4):365–395

Ramirez P, Carta JA (2005) Influence of the data sampling interval in the estimation of the parameters of the weibull wind speed probability density distribution: a case study. Energy Convers Manage 46(15–16):2419–2438

Siddall JN (1983) Probabilistic engineering design—principles and applications. Marcel Dekker, New York

Sinha KC (2000) To increase or not to increase speed limit: a review of safety and other issues. In: Proceedings of the Conference on Traffic and Transportation Studies, pp 1–8

Solomon D (1964) Accidents on main rural highways related to speed, driver, and vehicle. Technical report, U.S. Department of Commerce/Bureau of Public Roads

Stephens RD (1994) Remote sensing data and a potential model of vehicle exhaust emissions. J Air Waste Manage Assoc 44(11):1284–1292

Transportation Research Board (TRB) (1998) Managing speed, review of current practice for setting and enforcing speed limits. National Research Council, Washington, DC, 1998. Special Report 254

Tu J, Choi KK, Park YH (1999) New study on reliability-based design optimization. J Mech Des 121(4):557–564

Turalioglu FS, Bayraktar H (2005) Assessment of regional air pollution distribution by point cumulative semivariogram method at erzurum urban center, turkey. Stoch Environ Res Risk Assess 19(1):41–47

Vardoulakis S, Fisher BEA, Gonzalez-Flesca N, Pericleous K (2002) Model sensitivity and uncertainty analysis using roadside air quality measurements. Atmos Environ 36(13):2121–2134

Yegnan A, Williamson DG, Graettinger AJ (2002) Uncertainty analysis in air dispersion modeling. Environ Modell Softw 17(7):639–649

Yildirim MB, Hearn DW (2005) A first best toll pricing framework for variable demand traffic assignment problems. Transp Res B Methodol 39(8):659–678

Zhang Y, Bishop GA, Stedman DH (1994) Automobile emissions are statistically and gamma-distributed. Environ Sci Technol 28(7):1370–1374

Acknowledgments

This research was partially supported by the Automotive Research Center, a U.S. Army Center of Excellence in Modeling and Simulation of Ground Vehicles at the University of Michigan and by the National Science Council in Taiwan under NSC95-2218-E-006-045. These supports are gratefully acknowledged.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chan, KY., Papalambros, P.Y. & Skerlos, S.J. A method for reliability-based optimization with multiple non-normal stochastic parameters: a simplified airshed management study. Stoch Environ Res Risk Assess 24, 101–116 (2010). https://doi.org/10.1007/s00477-009-0304-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00477-009-0304-4