Abstract

In this paper we discuss the adjoint sensitivity analysis and optimization of hysteretic systems equipped with nonlinear viscous dampers and subjected to transient excitation. The viscous dampers are modeled via the Maxwell model, which accounts for both the stiffening and the damping contributions of the dampers. The time-history analysis adopted for the evaluation of the system response relies on the Newmark-β time integration scheme. In particular, the dynamic equilibrium in each time-step is achieved by means of the Newton-Raphson and the Runge-Kutta methods. The sensitivity of the system response is calculated with the adjoint variable method, adopting the discretize-then-differentiate approach so that the sensitivities are consistent with the discretized response. The importance and the generality of the sensitivity analysis discussed herein are demonstrated in two numerical applications: the retrofitting of a structure subject to seismic excitation, and the design of a quarter-car suspension system. The MATLAB code for the sensitivity analysis considered in the first application is provided as “Supplementary Material”.


1 Introduction

Energy dissipation systems are technologies able to improve the performance of structural systems subject to transient excitations. Their purpose is to dissipate or reduce part of the energy generated by the dynamic excitation and transferred to these systems. Thus, it is possible to reduce the deformation demand upon the structural systems considered. In fact, if properly designed, they can reduce specific systems’ responses of interest, such as selected displacements and accelerations. These technologies can be divided into passive systems, active and semi-active systems (Soong and Dargush 1997; Constantinou et al. 1998). In each of these categories, there are a wide variety of different technologies nowadays well developed and widely used. Examples of their applications are: The seismic retrofitting of buildings subject to seismic excitation with viscous dampers (e.g. Takewaki 1997; Lavan and Levy 2005; Kanno 2013) and tuned-mass dampers (e.g. Almazán et al. 2012; Daniel and Lavan 2015); The reduction of wind and earthquake induced vibrations in high-rise buildings (e.g. Yang et al. 2004; Smith and Willford 2007; Infanti et al. 2008); The seismic protection of bridges with viscous dampers (e.g. Infanti et al. 2004; Simon-Talero et al. 2006; Infanti and Castellano 2007); The control of human-induced vibrations (Caetano et al. 2010; Casado et al. 2013); The vibration control of offshore wind turbines (e.g. Brodersen and Høgsberg 2016); The shock absorption for high-speed boat seats (e.g. Klembczyk and Mosher 2000); The structural control of dynamic blast loading (e.g. Miyamoto and Taylor 2000); The design of passive and semi-active suspension systems for cars subject to road excitation (e.g. Georgiou et al. 2007; Suciu et al. 2012).

The efficacy of the energy dissipation systems is strongly related to their location in the system that needs to be controlled and to their size. For these reasons, often their design is based on optimization, to address the placement and sizing of these devices. For instance, in Lavan and Amir (2014) the authors distribute and size linear fluid viscous dampers in linear structures subject to seismic excitation with an optimization approach based on Sequential Linear Programming (SLP). This procedure is then further enhanced in Pollini et al. (2016) in order to consider a more complete and realistic objective cost function. In Kanno (2013) the author presents a mixed-integer programming approach for the optimal placement of viscous dampers in shear frames, where the damping coefficients are selected from a discrete set of values. A discrete optimization approach is presented in Dargush and Sant (2005), where different types of passive dissipation systems (i.e. metallic plate dampers, fluid viscous dampers, viscoelastic solid dampers) are sized and placed with genetic algorithms. Also in this case, the properties of the devices are selected from a predefined set of available sizes. In Georgiou et al. (2007) Georgiou, Verros, and Natsiavas present a methodology for the multi-objective optimization of the suspension damping and stiffness parameters of quarter-car models subjected to random road excitation. The authors consider both passive and semi-active suspension systems.

An important aspect of optimization-based design methodologies is the computational effort that they require. This aspect can influence the preference for one of these methodologies over more traditional design techniques. Broadly speaking, there are two major sets of optimization approaches: those that rely on gradient information (i.e. first or second order derivatives of the functions involved), and those that do not require this information and are often referred to as zero order methods (e.g. genetic algorithms). These two sets have different advantages and disadvantages. Gradient-based approaches typically require less computational effort, but more sophisticated mathematical tools. They are also less affected by the size of the problem considered. On the other hand, gradient-based optimization approaches are less robust from a computational point of view, meaning that, depending on the complexity of the problem considered, they may have difficulty converging smoothly to a local minimum. For this reason they require users who are more familiar with these types of approaches. Nevertheless, they are typically preferred because they achieve good final designs with reasonable computational resources, thus promoting the use of optimization-based methodologies among practitioners and researchers in their everyday activities. Zero order methods, in contrast, are simpler and easier to get started with, but they require very high computational resources that in many cases become prohibitive. Moreover, their computational effort increases significantly with the complexity and the size of the problems considered. For these reasons, significant effort and attention have been directed towards the development and application of gradient-based design approaches in several engineering fields.

A key element for the development of a gradient-based optimization approach is the sensitivity analysis (Haftka and Adelman 1989; Tortorelli and Michaleris 1994). In general there are three different approaches for the calculation of the sensitivities: the finite difference method; the direct differentiation method; and the adjoint variable method. The finite difference method is undoubtedly the easiest to implement, and it is typically used for comparison and verification of the sensitivities obtained with more accurate methodologies. The sensitivities obtained with the direct and adjoint methods are identical and exact with respect to the numerical solution (Tortorelli and Michaleris 1994). However, the adjoint method is to be preferred when there are fewer functions to differentiate than design variables, as in the applications towards which this paper is directed. In the adjoint variable method, the sensitivity is calculated by first expanding the function that needs to be differentiated into an augmented function and subsequently by differentiating the resulting augmented function. In principle, there are two ways to perform the adjoint sensitivity analysis. The first consists of differentiating the augmented function first, and introducing only at the end the particular discretization adopted for the numerical solution of the problem. This approach is also referred to as “differentiate-then-discretize”. The second consists of differentiating directly the discretized version of the problem at hand (Tortorelli and Michaleris 1994; Jensen et al. 2014). This approach, instead, is referred to as “discretize-then-differentiate”. In the literature there are several examples of optimization-based approaches that relied on the differentiate-then-discretize type of adjoint sensitivity analysis.
Some dealt with the design of linear viscous dampers in linear structures subject to seismic excitation (Lavan and Levy 2006a, 2006b); others with linear viscous dampers in nonlinear structures subject to seismic excitation (Lavan and Levy 2005, 2010). Other examples, in a different context, include: the work of Turteltaub (2005), who proposed an algorithm to optimize the performance of a two-phase composite under dynamic loading; a topology optimization-based method for the systematic design of one-dimensional band gap and pulse converting structures (Dahl et al. 2008); and the design of non-linear optical devices based on topology optimization (Elesin et al. 2012). However, as pointed out in Tortorelli and Michaleris (1994) and more recently in Jensen et al. (2014), optimization procedures based on a “differentiate-then-discretize” type of adjoint sensitivity analysis may lead to inconsistency errors. These may be caused, for example, by different time discretizations between the time-history analysis for the structural response and the sensitivity analysis. In order to avoid these sources of inconsistency, it is recommended to rely on a “discretize-then-differentiate” type of adjoint sensitivity analysis. For example, this type of adjoint sensitivity analysis is used in Le et al. (2012) for the topology optimization of linear elastic structures with spatially varying material micro-structures to tailor energy propagation. Also in Nakshatrala and Tortorelli (2016) the authors rely on a discretize-then-differentiate type of adjoint sensitivity analysis, but in this case for the topology optimization of nonlinear elastic material micro-structures for tailored energy propagation. Very recently, the sensitivity analysis for non-viscously damped dynamic systems has been discussed in Yun and Youn (2017). Essentially, Yun and Youn extended the methodology discussed in Jensen et al. (2014) to the case of linear structures with damping forces which depend on the history of motion via convolution integrals. The discretize-then-differentiate adjoint variable method was also adopted recently in Pollini et al. (2017) for the seismic retrofitting of 3-D linear frame structures with nonlinear viscous dampers. However, to the best of the authors’ knowledge, the adjoint sensitivity analysis for hysteretic dynamic systems with nonlinear viscous dampers has not yet been developed.

Thus, in this paper we formulate a consistent adjoint sensitivity analysis for the optimization of hysteretic dynamic systems subject to transient excitation and equipped with nonlinear viscous dampers. The responses of interest are evaluated with nonlinear time-history analyses based on the Newmark-β method. The equilibrium in each time-step is achieved by means of the Newton-Raphson and the Runge-Kutta methods. The dampers are modeled with the Maxwell model for visco-elasticity. In this way, both the stiffening and damping contributions of the dampers are accounted for. The adjoint sensitivity analysis discussed herein is presented through a step-by-step procedure, and its validity and generality are then demonstrated with two design applications based on optimization: the retrofitting of a structure subject to seismic excitation, and the design of a quarter-car suspension system.

The remainder of the paper is organized as follows: in Section 2 we present the governing equations of the systems considered, focusing on the equations for the dynamic equilibrium, and for the nonlinear behavior of the structures and dampers considered; the adjoint sensitivity analysis is then discussed in detail in Section 3; the general results of Section 3 are then applied to specific optimization problems in Section 4, followed by conclusions in Section 5.

2 Governing equations

In this paper we consider nonlinear systems equipped with nonlinear dampers and subjected to transient excitations. In what follows, we will focus on a single degree of freedom system for the sake of clarity. It should be noted that the methodology discussed herein can be generalized with no particular effort to the case of a multi-degree-of-freedom system. The governing equations for the dynamic equilibrium of these systems are the following:

where \(t_f\) is the final time; u(t), \(\dot {u}(t)\), and \(\ddot {u}(t)\) are the displacement, velocity, and acceleration of the system with respect to a ground reference system at time t; m is the mass of the system; \(c_s\) is the inherent damping of the system; \(f_s(t)\) is the restoring force generated by the structural elements; \(f_d(t)\) is the resisting force produced by the nonlinear viscous dampers; and P(t) is the external load acting on the system. In the following sections we will introduce the specific models considered for the description of the nonlinear behavior of the added damping system (i.e. \(g_{d}\left (f_{d}(t),\dot {u}(t),u(t),t\right )\)) and of the structural components (i.e. \(g_{s}\left (f_{s}(t),\dot {u}(t),u(t),t\right )\)).
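Consistent with the symbols defined above, the system (1) can be sketched as one second-order equilibrium equation coupled with two first-order rate equations for the internal forces. The at-rest initial conditions shown in the last row are an assumption made here for illustration.

```latex
\begin{aligned}
m\,\ddot{u}(t) + c_s\,\dot{u}(t) + f_s(t) + f_d(t) &= P(t), \\
\dot{f}_s(t) &= g_s\left(f_s(t),\dot{u}(t),u(t),t\right), \\
\dot{f}_d(t) &= g_d\left(f_d(t),\dot{u}(t),u(t),t\right), \qquad t \in [0,t_f], \\
u(0) = \dot{u}(0) &= 0, \qquad f_s(0) = f_d(0) = 0 \quad \text{(assumed)}.
\end{aligned}
```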

2.1 Nonlinear fluid viscous damper model

In what follows, we present the model considered for the definition of the mechanical behavior of the nonlinear dampers. In particular, we model the dampers with the Maxwell model, i.e. a spring and a dashpot in series, as shown in Fig. 1. The spring accounts for the stiffening property of the damper and its supporting member, and the dashpot for its viscous property.

Equation (2) defines the compatibility and equilibrium equations of the damper model considered:

where \(u_{el}(t)\) is the elastic deformation in the spring at time t, \(u_{vd}(t)\) is the deformation in the dashpot at time t, and \(\dot {u}_{el}(t)\) and \(\dot {u}_{vd}(t)\) are the velocities in the spring and in the dashpot. Similarly, \(f_{el}(t)\) and \(f_{vd}(t)\) are the forces in the spring and in the dashpot as functions of time, which are equal because of equilibrium. In the damper element, the spring is characterized by a stiffness coefficient \(k_d\). Therefore, the output force can be calculated with the following equation:

The viscous property of the dashpot is defined by a fractional power law (Seleemah and Constantinou 1997). More precisely, the nonlinear behavior of the dashpot is defined by the damping coefficient \(c_d\) and the fractional exponent α:

where sgn is the sign function. We observe that for α = 1, (4) represents the force-velocity behavior of a linear viscous damper. Similarly, for α → 0, (4) mimics the behavior of a friction damper. If we invert the force-velocity relation expressed in (4), we obtain the following velocity-force relation for the dashpot:

By differentiating (3) with respect to time, and substituting \(\dot {u}_{el}(t)\) with an equivalent expression based on the compatibility equation presented in (2), we obtain the following equation:

Last, we observe that the function \(g_d\) of (1) can now be written explicitly as follows:
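The relations (2)–(7) described in this subsection follow from the series arrangement of the spring and the dashpot. The block below is a reconstruction from the surrounding definitions rather than a verbatim copy of the original equations; it assumes that, for the single degree of freedom system, the total deformation of the damper coincides with the displacement u(t).

```latex
\begin{aligned}
u(t) &= u_{el}(t) + u_{vd}(t), \qquad f_d(t) = f_{el}(t) = f_{vd}(t) && \text{(2)} \\
f_d(t) &= k_d\,u_{el}(t) && \text{(3)} \\
f_d(t) &= c_d\,\mathrm{sgn}\left(\dot{u}_{vd}(t)\right)\left|\dot{u}_{vd}(t)\right|^{\alpha} && \text{(4)} \\
\dot{u}_{vd}(t) &= \mathrm{sgn}\left(f_d(t)\right)\left(\frac{\left|f_d(t)\right|}{c_d}\right)^{1/\alpha} && \text{(5)} \\
\dot{f}_d(t) &= k_d\,\dot{u}_{el}(t)
  = k_d\left(\dot{u}(t) - \mathrm{sgn}\left(f_d(t)\right)\left(\frac{\left|f_d(t)\right|}{c_d}\right)^{1/\alpha}\right) && \text{(6)} \\
g_d\left(f_d(t),\dot{u}(t),u(t),t\right) &= k_d\left(\dot{u}(t) - \mathrm{sgn}\left(f_d(t)\right)\left(\frac{\left|f_d(t)\right|}{c_d}\right)^{1/\alpha}\right) && \text{(7)}
\end{aligned}
```

The step from (3) to (6) uses the time derivative of the compatibility relation in (2), \(\dot {u}_{el}(t) = \dot {u}(t) - \dot {u}_{vd}(t)\), together with (5).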

2.2 Hysteretic model for inelastic systems

We now introduce the nonlinear model considered for the definition of the resisting force \(f_s(t)\). The model is represented in Fig. 2. In particular, we consider a hysteretic spring with elastic-perfectly plastic behavior, and a smooth transition from the elastic to the plastic range (Sivaselvan and Reinhorn 2000). The stiffness \(k_s\) is formulated as follows:

where the exponent N controls the smoothness of the transition from the elastic to the inelastic range, \(k_0\) is the elastic stiffness, \(f_s(t)\) is the current force in the spring at time t, \(f_y\) is the yielding force that marks the limit of the elastic range, and \(\dot {u}(t)\) is the current velocity imposed on the spring at time t.

The force in the spring can then be expressed in a differential form:

and the function \(g_s\) introduced in (1) can then be written as follows:

It is now possible to rewrite (1) more explicitly, introducing the functions \(g_s\) and \(g_d\) that have already been discussed.
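For reference, the hysteretic relations (8)–(10) and the resulting explicit system (11) can be sketched as below. The expression for \(k_s\) follows a common version of the smooth hysteretic model of Sivaselvan and Reinhorn (2000); the equal weights of 0.5 on the two terms in the inner bracket are an assumption made here, not values recovered from the text. The damper term uses the Maxwell model with stiffness \(k_d\), damping coefficient \(c_d\), and exponent α.

```latex
\begin{aligned}
k_s(t) &= k_0\left[1 - \left|\frac{f_s(t)}{f_y}\right|^{N}
  \left(0.5\,\mathrm{sgn}\left(f_s(t)\,\dot{u}(t)\right) + 0.5\right)\right] && \text{(8)} \\
\dot{f}_s(t) &= k_s(t)\,\dot{u}(t) && \text{(9)} \\
g_s\left(f_s(t),\dot{u}(t),u(t),t\right) &= k_s(t)\,\dot{u}(t) && \text{(10)} \\
m\,\ddot{u}(t) + c_s\,\dot{u}(t) + f_s(t) + f_d(t) &= P(t), \\
\dot{f}_s(t) &= k_s(t)\,\dot{u}(t), \\
\dot{f}_d(t) &= k_d\left(\dot{u}(t) - \mathrm{sgn}\left(f_d(t)\right)
  \left(\frac{\left|f_d(t)\right|}{c_d}\right)^{1/\alpha}\right) && \text{(11)}
\end{aligned}
```

With these weights, the tangent stiffness tends to zero as \(|f_s|\) approaches \(f_y\) during loading (\(f_s\dot {u} > 0\)) and returns to \(k_0\) upon unloading (\(f_s\dot {u} < 0\)), which matches the elastic-perfectly plastic behavior described above.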

It should be noted that the methodology discussed herein can accommodate any desired formulation for the functions \(g_s\) and \(g_d\). Figure 3 schematically represents the single degree of freedom system that we will consider in the following development.
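Because the two rate functions are the only model-specific ingredients, they can be isolated as small routines. The following Python sketch is illustrative only: the function names and argument order are hypothetical, the damper rate assumes the Maxwell model with a fractional power-law dashpot, and the spring rate assumes a smooth elastic-perfectly plastic law with equal loading and unloading weights (`eta = 0.5`), which is an assumption rather than a value taken from the text.

```python
def sgn(v):
    """Sign function returning -1, 0, or +1."""
    return (v > 0) - (v < 0)


def g_d(f_d, u_dot, k_d, c_d, alpha):
    """Rate of the Maxwell damper force: k_d times the spring velocity.

    The dashpot velocity is obtained by inverting the power law
    f_d = c_d * sgn(v) * |v|**alpha.
    """
    u_dot_vd = sgn(f_d) * (abs(f_d) / c_d) ** (1.0 / alpha)
    return k_d * (u_dot - u_dot_vd)


def g_s(f_s, u_dot, k_0, f_y, N, eta=0.5):
    """Rate of the hysteretic spring force (eta = 0.5 is an ASSUMED weight)."""
    ratio = abs(f_s / f_y) ** N
    # Loading (f_s * u_dot > 0) softens the spring; unloading restores k_0.
    k_s = k_0 * (1.0 - ratio * (eta * sgn(f_s * u_dot) + (1.0 - eta)))
    return k_s * u_dot
```

Swapping in a different constitutive law only requires replacing these two functions, provided their partial derivatives with respect to the force, the velocity, and the design variables are also available for the sensitivity analysis.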

2.3 Time-history analysis

The differential equations (11) are solved with an algorithm based on the Newmark-β integration scheme. In order to solve problem (1), we first discretize it in time:

for i = 1,…,N. Initially the problem is divided into \(N_{\Delta t}\) identical time intervals. If in a specific time interval the algorithm does not reach equilibrium with sufficient accuracy, the time interval is subdivided into n sub-intervals. More details on this aspect will be provided later. In every time-step \(\Delta t_{i+1} = t_{i+1} - t_i\) we rely on the constant acceleration method, which is a particular case of the more general Newmark-β method:

In every time-step, the first order differential equations for \(f_s(t)\) and \(f_d(t)\) can be seen as two Cauchy problems with suitable initial conditions. Briefly, a Cauchy problem with initial conditions can be written as follows:

The Cauchy problem can be approximated with the family of Runge-Kutta (RK) methods. These methods have the advantage of being one-step methods, which depend only on information related to time \(t_i\) for approximating the problem at time \(t_{i+1}\). Therefore, they are naturally suited for use in algorithms which rely on heterogeneous time discretizations. However, they achieve good accuracy at the price of an increased number of function evaluations in each time-step, compared to multi-step methods (Quarteroni et al. 2007). For this reason, we will use a fourth-order RK method, similarly to Kasai and Oohara (2001) and Oohara and Kasai (2002). This allows us to achieve a good compromise between accuracy of the approximation and complexity of the method. It should be noted that the complexity of the integration scheme directly affects the computational effort required in the sensitivity analysis, as will be discussed in a later section.
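To illustrate the one-step property, a minimal Python sketch of a fourth-order RK step for a scalar Cauchy problem y' = g(t, y) is given below. It uses the classical RK4 coefficients, which is an assumption since the specific tableau adopted in the paper is not reproduced here; the function name `rk4_step` is hypothetical.

```python
import math


def rk4_step(g, t_i, y_i, dt):
    """Advance y' = g(t, y) from t_i to t_i + dt with the classical RK4 scheme.

    Only information at time t_i is needed, so dt may change from step to step.
    """
    K1 = g(t_i, y_i)
    K2 = g(t_i + 0.5 * dt, y_i + 0.5 * dt * K1)
    K3 = g(t_i + 0.5 * dt, y_i + 0.5 * dt * K2)
    K4 = g(t_i + dt, y_i + dt * K3)
    return y_i + dt / 6.0 * (K1 + 2.0 * K2 + 2.0 * K3 + K4)


# Usage: one step of y' = -y from y(0) = 1, compared with the exact exp(-dt).
y1 = rk4_step(lambda t, y: -y, 0.0, 1.0, 0.1)
err = abs(y1 - math.exp(-0.1))
```

The four stage values K1 to K4 are the quantities that later enter the sensitivity analysis as additional state variables, which is why the number of stages affects its cost.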

The explicit four-stage RK method over the time step \(\Delta t_{i+1} = t_{i+1} - t_i\) can be written as follows:

The integration scheme (15) will be used for the approximation of both \(\dot {f}_{s,i}\) and \(\dot {f}_{d,i}\) in each time-step. Essentially, in every time-step we approximate \(u_i\), \(\dot {u}_{i}\), and \(\ddot {u}_{i}\) through the Newmark-β method. The resisting forces of the structural components and of the damper are approximated with the fourth order RK method presented in (15). The equilibrium at each point in time is achieved by means of an iterative procedure based on the Newton-Raphson method. In Table 1 we report the pseudo code for the integration scheme adopted in the time-history analysis, for a time-step \(t_i \rightarrow t_{i+1}\). The algorithm is based on the one discussed in Argyris and Mlejnek (1991), but it has been further enhanced to accommodate the nonlinear behavior of the systems considered herein, and the presence of nonlinear viscous dampers.
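The kinematic part of each time-step can be sketched in a few lines of Python. The snippet assumes the standard Newmark-β update with β = 1/4 and γ = 1/2 (constant average acceleration); the function name and signature are hypothetical, and the Newton-Raphson loop that determines the new acceleration from the equilibrium residual is deliberately left out.

```python
def newmark_update(u_i, v_i, a_i, a_next, dt, beta=0.25, gamma=0.5):
    """Newmark-beta kinematics for one step of size dt.

    Given the state (u_i, v_i, a_i) and a trial acceleration a_next at the
    end of the step, return the displacement and velocity at the end of the
    step. beta = 1/4 and gamma = 1/2 give the constant average acceleration
    method.
    """
    v_next = v_i + dt * ((1.0 - gamma) * a_i + gamma * a_next)
    u_next = u_i + dt * v_i + dt ** 2 * ((0.5 - beta) * a_i + beta * a_next)
    return u_next, v_next


# Usage: under a truly constant acceleration the update is exact.
u1, v1 = newmark_update(u_i=0.0, v_i=1.0, a_i=2.0, a_next=2.0, dt=0.5)
```

In a complete step, `a_next` would be the unknown that the iterative procedure adjusts until the equilibrium residual vanishes, with the internal forces re-integrated at each iteration.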

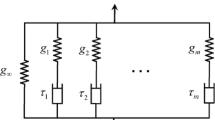

3 Adjoint sensitivity analysis

In the following section we calculate the sensitivity of a generic response functional h:

where x is a generic design variable. In particular we will rely on the discretize-then-differentiate adjoint variable method discussed in (Jensen et al. 2014). Therefore, from now on we will consider the discretized version of the problem at hand:

The goal is to calculate \(\frac {d\, h}{d\, x}\). To this end, we first define the augmented function \(\hat {h}\):

where \(\lambda_i\), \(\mu_i\), \(\nu_i\), \({\phi ^{1}_{i}}\), \({\psi ^{1}_{i}}\), etc., are the adjoint variables and \(R_{eq,i}\), \(R_{New1,i}\), \(R_{New2,i}\), \(R_{s,i}\), \(R_{ks1,i}\), \(R_{d,i}\), \(R_{kd1,i}\), etc., are the residuals of the equilibrium equations in each time-step. These residuals must be equal to zero in order to satisfy the dynamic equilibrium of the system. It follows that \(\frac {d\, \hat {h}}{d\, x} =\frac {d\, h}{d\, x}\). In particular, we first consider the residual of the global equilibrium equation (12):

We also consider the equations representing the residuals of the Newmark-β approximation (13):

Next, we consider the residuals of the local equilibrium equations for the restoring force of the system approximated with the Runge-Kutta method (15):

where we defined:

The last that remain to be considered are the residuals of the local equilibrium equations for the nonlinear damper approximated also with the Runge-Kutta method (15):

where we defined:

It is now possible to formulate the full sensitivity of the function \(\hat {h}\) by differentiating the equations of the residuals (i.e. (19)–(23)) with respect to a generic variable x:

It should be noted that the derivatives of the residuals are also calculated with the chain rule. For example:

where, for the sake of generality, we allowed each of the residuals to depend directly and indirectly on the independent design variable x. At this point we should also mention that the quantities \(K^{i-1}_{s,j}\) and \(K^{i-1}_{d,j}\), with j = 1,…,4, introduced by the RK approximations (15) of the forces \(f_{s,i}\) and \(f_{d,i}\), are treated as additional state variables. To avoid the calculation of the implicit derivatives of the state variables with respect to the design variables (e.g. \(\frac {d u_{i}}{d x}\), \(\frac {d \dot {u}_{i}}{d x}\), \(\frac {d \ddot {u}_{i}}{d x}\), \(\frac {d f_{s,i}}{d x}\), \(\frac {d f_{d,i}}{d x}\), \(\frac {d K^{i}_{s,1}}{d x}\), \(\frac {d K^{i}_{d,1}}{d x}\)), once (25) is differentiated all the terms multiplying these derivatives are collected and equated to zero. This requires regrouping the terms in (25), treating the implicit derivatives \(\frac {d u_{i}}{d x}\), \(\frac {d \dot {u}_{i}}{d x}\), etc. as mutually independent. In this way it is possible to eliminate these unknown derivatives from the final formulation of the gradient \(\frac {d\hat {h}}{dx}\).

As a result of this procedure, we obtain a system of linear equations where the unknowns are the adjoint variables (e.g. \(\lambda_i\), \(\mu_i\), \(\nu_i\), \({\phi ^{1}_{i}}\), \({\psi ^{1}_{i}}\)) with known terminal conditions. More precisely, for each time step i → i − 1 we solve the following linear system:

where:

The matrix \(A_{gr,i}\) and the vector \(b_{gr,i}\) have been presented in (28) divided into blocks. The details of each of these blocks are given in the Appendix. If we consider a generic system with \(N_{dof}\) degrees of freedom, \(N_d\) nonlinear viscous dampers, and \(N_s\) elements at the level of which the nonlinear behavior of the resisting forces is defined, the matrix \(A_{gr,i}\) has dimensions

The column vectors \(z_{gr,i}\) and \(b_{gr,i}\) have dimensions

Herein, as already mentioned, we consider a single degree of freedom system where \(N_{dof} = 1\), \(N_s = 1\), and \(N_d = 1\) (see Fig. 3). Therefore, in (28) the matrix \(A_{gr,i}\) has dimensions 13 × 13 and the vector \(b_{gr,i}\) has dimensions 13 × 1. The matrix \(A_{gr,i}\) in (27) may become very large in large scale problems, much larger than the number of degrees of freedom in the structural analysis. At first sight, this may seem to lead to a computationally very expensive approach for sensitivity analysis. However, this is not the case. In fact, as the structural analysis problem is comprised of “global” and “local” state variables, the sensitivity analysis problem too is characterized by “global” and “local” adjoint variables. For example, \(\lambda_i\), \(\mu_i\) and \(\nu_i\) can be viewed as global adjoint variables, while all the others may be treated as local ones. Due to the structure of the equations and their linearity, some sets of the local adjoint variables may be solved locally as a function of the global ones. This is relatively easy as each set of local equations is small. Then, the local adjoint variables can be substituted into the equations of the global ones. Thus, the system can be reduced to the size of the structural analysis problem while remaining linear. Solving it is computationally much cheaper than the structural analysis. Thus, if the structural analysis problem is manageable from a computational point of view, then so is the sensitivity analysis. It should be remembered that, for the class of problems considered here, this is the computationally cheapest option.
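The condensation argument can be made concrete with a generic block partition of the linear system solved in one time step. The subscripts g and l (global, local) and the block names below are illustrative notation introduced here; they are not the blocks of (28).

```latex
\begin{aligned}
\begin{bmatrix} A_{gg} & A_{gl} \\ A_{lg} & A_{ll} \end{bmatrix}
\begin{bmatrix} z_g \\ z_l \end{bmatrix}
&=
\begin{bmatrix} b_g \\ b_l \end{bmatrix}, \\
z_l &= A_{ll}^{-1}\left(b_l - A_{lg}\,z_g\right), \\
\left(A_{gg} - A_{gl}\,A_{ll}^{-1}A_{lg}\right) z_g &= b_g - A_{gl}\,A_{ll}^{-1}\,b_l .
\end{aligned}
```

If the local adjoint variables of different elements are not coupled to each other, \(A_{ll}\) is block diagonal with one small block per element, so it can be inverted element by element, and only the reduced system for \(z_g\) has the size of the structural analysis problem.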

The system of linear equations (27) is solved backward in time, starting from known terminal conditions for \(z_{gr}\) (i.e. for \(z_{gr,N} = z_{gr}(t_f)\)). Thus, the system (27) is organized such that the vector \(b_{gr,i}\) depends on \(z_{gr,i+1}\). The final conditions are written as follows:

The matrix \(A_{gr,N}\) is essentially equal to \(A_{gr,i}\). The vector \(z_{gr,N}\) contains the adjoint variables calculated at the final point in time \(t_N\). The vector \(b_{gr,N}\) has fewer nonzero entries than \(b_{gr,i}\), since the vector entries with subscript N + 1 do not exist. With reference to (28), the blocks of \(b_{gr,N}\) are written as follows:

The adjoint sensitivity analysis starts from (29), and then continues by solving sequentially the system of equations (27) for i = N − 1, …, 1. Once the adjoint variables \(z_{gr,i}\) have been calculated for each discrete point in time \(t_i\), it is possible to calculate the sensitivity of the functional h:

where:

In (31), it is assumed that the properties of the spring (i.e. \(k_s\) and \(f_y\)) and of the viscous damper (i.e. \(c_d\) and \(k_d\)) are formulated explicitly in terms of the design variable x. The mass m and the inherent damping \(c_s\), on the contrary, are assumed constant and not dependent on the design variable x. This is an approximation that relies on the assumption that a variation Δx of the design variable will not significantly affect the mass and the inherent damping of the system.

Table 2 contains the pseudo code for the adjoint sensitivity analysis.
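The overall logic of the procedure (forward analysis, terminal condition, backward sweep, accumulation of the gradient) can be illustrated on a deliberately simple model problem. The Python sketch below is not the algorithm of Table 2: it assumes a scalar state governed by u' = -x u, discretized with explicit Euler, and the response h = u_N, so that each step has the single residual R_{i+1} = u_{i+1} - u_i + Δt x u_i. All function names are hypothetical.

```python
def forward(x, u0, dt, n_steps):
    """Explicit Euler for u' = -x*u; returns the state history u_0..u_N."""
    u = [u0]
    for _ in range(n_steps):
        u.append(u[-1] - dt * x * u[-1])
    return u


def adjoint_gradient(x, u0, dt, n_steps):
    """dh/dx for h = u_N with the discretize-then-differentiate adjoint method.

    Residuals: R_{i+1} = u_{i+1} - u_i + dt*x*u_i = 0 for i = 0..N-1.
    """
    u = forward(x, u0, dt, n_steps)
    lam = -1.0  # terminal condition: dh/du_N + lam_N * dR_N/du_N = 0
    grad = 0.0
    for i in range(n_steps - 1, -1, -1):
        grad += lam * dt * u[i]      # lam_{i+1} * dR_{i+1}/dx
        lam = lam * (1.0 - dt * x)   # lam_i obtained from lam_{i+1}
    return grad


def finite_difference_gradient(x, u0, dt, n_steps, eps=1e-6):
    """Central finite difference, used only to verify the adjoint gradient."""
    h_plus = forward(x + eps, u0, dt, n_steps)[-1]
    h_minus = forward(x - eps, u0, dt, n_steps)[-1]
    return (h_plus - h_minus) / (2.0 * eps)


# Usage: compare the two gradients for one set of (arbitrary) parameters.
grad_adj = adjoint_gradient(0.8, 1.5, 0.01, 200)
grad_fd = finite_difference_gradient(0.8, 1.5, 0.01, 200)
```

Because the adjoint recursion is derived from the same discrete update used in the forward analysis, the adjoint gradient matches the derivative of the discrete response, and the finite difference value differs from it only by truncation and round-off error.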

In the following section we will show how the adjoint sensitivity analysis discussed in this section can be used in order to optimize particular dynamic systems.

4 Applications

The adjoint sensitivity analysis discussed in Section 3 can be generalized to the case of multi-degree-of-freedom systems with no particular additional effort, and it opens up new possibilities for optimizing complex dynamic systems equipped with nonlinear viscous dampers. In this section we present and discuss two different illustrative numerical applications. In both cases we apply the adjoint sensitivity analysis discussed previously. The gradients calculated with the adjoint sensitivity analysis were verified by comparing them successfully with the gradients calculated with the finite difference method in both applications. The MATLAB code for the sensitivity analysis considered in the first application, and its verification with the finite difference method is provided as “Supplementary Material”. The first system considered is a planar frame with two degrees of freedom. The structure is subject to a ground motion acceleration, which represents a realistic seismic excitation. The goal is to design the added damping system to reduce the peak displacement in time of the structure. It can be seen as a seismic retrofitting problem for a structure with nonlinear behavior equipped with nonlinear fluid viscous dampers. In the second application we consider a quarter-car suspension system. The suspension is made of a linear spring and a nonlinear damper in parallel. In this case the spring is expected to remain in the linear range. The design extends to both the spring and the viscous damper composing the suspension system. The system is subject to a sinusoidal excitation representing the road irregularity.

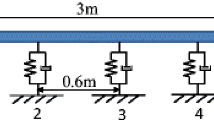

4.1 Two degrees of freedom planar frame

The first example consists of a planar shear frame with two degrees of freedom, subject to a ground acceleration as shown in Fig. 4. In particular, we consider the first 20 seconds of the acceleration record LA02 from the LA 10% in 50 years ensemble (National Information Service for Earthquake Engineering - University of California Berkeley 2013). Figure 5 shows the ground acceleration record considered over time. Due to the ground acceleration the structure is expected to experience significant displacements, and in particular inter-story drifts (i.e. relative displacements between contiguous stories). In this case, the inter-story drifts are:

and they are collected in the vector d(t). Inter-story drifts are a measure of both structural and non-structural damage in structures subject to earthquakes (Charmpis et al. 2012). Hence, in this example the goal is to reduce the deformation demand on the structure by distributing and sizing up to two nonlinear fluid viscous dampers. The mass matrix and the initial stiffness vector of the system are:

The nonlinear behavior of the structure is defined at the element level, as discussed in Section 2.2. In particular, for each column we consider an elastic-perfectly plastic behavior defined in terms of shear forces and relative horizontal displacement between the column ends. The initial stiffness for the columns of the first and second story is defined in the stiffness vector of (34). Each entry of the vector \(\mathbf {k}_{s0}\) is the sum of the stiffening contributions of the two columns of each story. Similarly, the yielding forces for the columns of the first and second story of the structure are defined in the vector \(\mathbf {f}_{y}\):

We assume 5% of critical damping for the two modes of the structure to construct the Rayleigh damping matrix of the structure (Chopra 2011):

This matrix is then considered constant throughout the optimization. We consider an aggregated constraint on the peak inter-story drifts in time. To this end, we first evaluate the maximum value in time of each inter-story drift with a differentiable approximation of the max function:

where \(t_f\) is the final time, and \(\mathcal {D}(\cdot )\) is an operator that transforms a vector into a diagonal matrix, and a diagonal matrix into a vector (similarly to the diag(⋅) MATLAB function). In (37) the inter-story drifts have been normalized by the allowable value \(d_{allow}\). The maximum allowable inter-story drift is set to \(d_{allow} = 9\) mm. For high values of the parameter r, (37) approximates the maximum value in time of the normalized inter-story drifts. Numerically, (37) is calculated as follows:

where \(w_{1}=\frac {\Delta t_{1}}{2}\), \(w_{N}=\frac {\Delta t_{N}}{2}\), and \(w_{i}=\frac {\Delta t_{i}+{\Delta } t_{i + 1}}{2}\). Subsequently, we aggregate the two normalized peak drifts:

Equation (39), for high values of the parameter q, approximates the maximum value of the two entries of the vector \(\tilde {\mathbf {d}}_{c}\). It should be noted that both r and q are even numbers. We consider an exponent α for the viscous dampers equal to 0.35, and a fixed ratio between the stiffness coefficient and the damping coefficient of each viscous damper: \(\rho = k_d/c_d\). The coefficient ρ in this example is set to 1.1042. For more information regarding the tuning procedure adopted for the definition of ρ the reader is referred to Pollini et al. (2017). As a result, the design variables of the problem are \(c_{d1}\) and \(c_{d2}\) for a given exponent α and ratio ρ. The two damping coefficients are defined as the product of a reference damping coefficient \(\bar {c}_{d}\) and a scaling variable \(x_i \in [0,1]\): \(c_{di}=x_{i}\bar {c}_{d}\), for i = 1,2. The reference damping coefficient represents the maximum allowed value of damping, and it is defined a priori. In this example it is set to \(\bar {c}_{d} = 100\, [kN(s/mm)^{\alpha }] \). As already mentioned, the properties of the structure are given, and the added viscous dampers are designed in order to satisfy a constraint on performance. Similarly to what is done in more traditional problems of structural optimization where structural weight or volume is minimized (e.g. truss optimization), here we are designing the smallest added damping system required. The dampers are characterized by their damping coefficients, therefore the most natural objective cost function is the sum of the dampers’ damping coefficients. Thus, the objective cost function minimized is the sum of the damping coefficients of the two fluid viscous dampers that could potentially be placed in the structure if needed. The final optimization problem formulation is presented in (40):

where T is a transformation matrix from local degrees of freedom to global ones, and I is the identity matrix. As already mentioned, we attach to this article the MATLAB code for the sensitivity analysis of the constraint on \(\tilde {d}_{c}\), and its verification with the finite difference method.

Problem (40) has been solved with a Sequential Linear Programming (SLP) approach, where in every iteration the sub-problems were solved with the Gurobi Optimizer solver for Linear Programming problems (Gurobi Optimization Inc. 2016). In every iteration five linearized constraints were considered: the linearized aggregated constraint corresponding to the current iteration point and the linearized constraints corresponding to the previous four iterations. Due to the non-convexity of the problem, it may happen that in a certain iteration one or more linearized constraints are active even though the current point lies strictly inside the feasible domain. This issue was already discussed in Lavan and Levy (2006b), and it is shown in Fig. 2 of the same reference. These constraints may lead to overly conservative solutions. Therefore they are removed and disregarded in the following iterations. Moving limits were also considered with respect to the design variables \(x_{1}\) and \(x_{2}\). In particular, we considered moving limits with a range of ±0.025 with respect to each current iteration point. Finally, the parameters r and q presented in (37) and (39) are initially set to 1000, and progressively increased through the iterations by steps of 50.
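The SLP iteration with moving limits described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions and not the authors' MATLAB implementation: the function `toy_constraint` and its coefficients are hypothetical stand-ins for the aggregated drift constraint (which in the paper requires a full time-history and adjoint analysis), only the current linearized constraint is kept instead of the five used in the paper, and the LP sub-problem is solved with a small greedy routine (valid for one linear constraint, box bounds and positive costs) rather than with Gurobi.

```python
def toy_constraint(x):
    """Hypothetical stand-in for the aggregated constraint; g(x) <= 0 is feasible."""
    g = 0.25 / (0.2 + x[0]) + 0.15 / (0.2 + x[1]) - 1.0
    grad = [-0.25 / (0.2 + x[0]) ** 2, -0.15 / (0.2 + x[1]) ** 2]
    return g, grad


def solve_lp_single_cut(cost, a, b, lower, upper):
    """Minimize cost.x subject to a.x <= b and lower <= x <= upper (costs > 0).

    With one linear constraint the LP reduces to a continuous knapsack:
    start from the cheapest corner and raise the variables that reduce
    the constraint most per unit cost until the cut is satisfied.
    """
    x = list(lower)
    excess = sum(ai * xi for ai, xi in zip(a, x)) - b
    helpful = [j for j in range(len(x)) if a[j] < 0.0]
    for j in sorted(helpful, key=lambda j: a[j] / cost[j]):
        if excess <= 0.0:
            break
        step = min(upper[j] - lower[j], excess / -a[j])
        x[j] += step
        excess += a[j] * step
    return x


def slp(x0, move=0.025, iterations=60):
    """Sequential linear programming with fixed moving limits of +/- move."""
    x = list(x0)
    cost = [1.0] * len(x)  # objective: sum of the scaled damping variables
    for _ in range(iterations):
        g, grad = toy_constraint(x)
        # Linearized cut: g + grad.(x_new - x) <= 0  <=>  grad.x_new <= grad.x - g
        b = sum(gi * xi for gi, xi in zip(grad, x)) - g
        lower = [max(0.0, xi - move) for xi in x]
        upper = [min(1.0, xi + move) for xi in x]
        x = solve_lp_single_cut(cost, grad, b, lower, upper)
    return x


x_final = slp([1.0, 1.0])  # start from the maximum damping solution
print(x_final, sum(x_final), toy_constraint(x_final)[0])
```

With fixed moving limits and a single cut, the iterates of this toy problem keep moving slightly around the optimum, and the linearization admits a small constraint violation. This is consistent with the role played in the paper by the additional cuts retained from previous iterations.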

Two degrees of freedom system with nonlinear fluid viscous dampers considered in Section 4.1

Ground acceleration record LA02 considered in Section 4.1

The starting point for the optimization analysis was the maximum damping solution, with both \(c_{d1}\) and \(c_{d2}\) equal to \(100\, [kN(s/mm)^{\alpha }]\) (i.e. \(x_{1} = 1\), \(x_{2} = 1\)). The optimization analysis converged after 36 iterations in MATLAB. The details regarding the final optimized design solution are listed in Table 3. In Fig. 6 we plot the contour of the objective function and of the aggregated constraint, and the optimization path followed by the algorithm. Each blue circle represents an optimal point identified in each iteration. The black circle represents the final solution. From the plot it is also possible to notice the high degree of non-convexity of the problem at hand. Nevertheless, the algorithm adopted in this application was able to identify a final solution that, judging graphically, lies close to the optimal solution of the problem.

From Table 3 it is possible to observe that the final solution is characterized by a small constraint violation. The aggregated inter-story drift constraint is highly non-convex and progressively approximated by (38) and (39). These two approximations of the max function improve their accuracy through the iterations according to the prescribed continuation scheme. Thus, the non-convex constraint is hard to enforce due to the approximation adopted. Additionally, the optimal solution sits in a corner of the feasible domain. Therefore, as the algorithm converges towards this corner, it becomes more difficult to enforce the approximated aggregated constraint through sequential linearizations. As a result, the final solution is identified accepting a negligible (from an engineering point of view) constraint violation. The values of the aggregated constraint and objective function for each iteration point are plotted in Fig. 7. In Fig. 8, we show the response of the structure with the optimized damping distribution. In particular, the first plot shows the response in terms of global coordinates \(u_{1}\) and \(u_{2}\). The second plot shows the response in terms of the dampers' local coordinates (i.e. inter-story drifts) \(d_{1}\) and \(d_{2}\).
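To illustrate why the accuracy of a differentiable approximation of the max function improves as the exponent r grows, the following Python sketch evaluates a weighted r-norm of a short sample signal. The weighted r-norm form used here is an assumption made for illustration and may differ from the exact expressions (37) to (39) of the paper; the sample values, the function names and the scaling by the largest entry (added to avoid floating-point overflow for large r) are also hypothetical. The weights follow the trapezoidal pattern \(w_{1}=\Delta t_{1}/2\), \(w_{N}=\Delta t_{N}/2\) quoted in the text, with an indexing convention chosen for this sketch.

```python
def trapezoidal_weights(times):
    """Half the sum of the time steps adjacent to each time point."""
    n = len(times)
    weights = []
    for i in range(n):
        left = times[i] - times[i - 1] if i > 0 else 0.0
        right = times[i + 1] - times[i] if i < n - 1 else 0.0
        weights.append(0.5 * (left + right))
    return weights


def smooth_peak(values, times, r):
    """Weighted r-norm approximation of max_t |values(t)| (assumed form)."""
    weights = trapezoidal_weights(times)
    scale = max(abs(v) for v in values)
    if scale == 0.0:
        return 0.0
    # Dividing by the largest entry keeps every power in [0, 1].
    mean_power = sum(
        w * (abs(v) / scale) ** r for w, v in zip(weights, values)
    ) / sum(weights)
    return scale * mean_power ** (1.0 / r)


# Hypothetical normalized drift samples on a non-uniform time grid.
times = [0.0, 0.1, 0.2, 0.4, 0.5]
drift = [0.0, 0.3, -0.9, 0.5, 0.1]

for r in (10, 100, 1000):
    print(r, smooth_peak(drift, times, r))
```

In this sketch the approximation approaches the true peak of 0.9 from below as r increases, which is one way to see why a constraint enforced on the smooth approximation can admit a small violation of the true peak constraint.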

Contour of the objective function (blue) and of the aggregated constraint (red) considered in Section 4.1. Each blue circle represents the optimal point of a linear programming sub-problem. The black circle represents the final optimized solution

4.2 Quarter-car with passive suspension system

The second application that we discuss consists of the design of a quarter-car suspension system equipped with a nonlinear fluid viscous damper. This particular application was inspired by the numerical examples discussed in Georgiou et al. (2007), where the authors formulated and solved with genetic algorithms a multi-objective optimization problem for the design of passive or semi-active suspension systems. In what follows, the quarter-car is modeled as a two degrees of freedom system, similarly to the previous application. The system is subject to a sinusoidal road excitation \(z_{g}(t) = A \sin (\omega t)\), with A = 50 mm, ω = 10 rad/sec, and 0 ≤ t ≤ 1.25 sec. This value of the forcing frequency was chosen because it is close to one of the undamped natural frequencies of the linear system. Essentially, this excitation mimics the dynamic load felt by a car driving over a road irregularity of amplitude 5 cm and length 4.36 m, with a speed of 50 km/h. The associated ground acceleration considered in the equations of motion is \(\ddot {z}_{g}(t) = - A\, \omega ^{2}\,\sin (\omega t)\). In Fig. 9 we show the dynamic system considered. In this case the first mass represents the unsprung mass of the wheel, and the second mass accounts for a quarter of the total sprung car mass. The coefficients \(k_{s1}\) and \(c_{1}\) represent the stiffness and the damping of the wheel. They both define linear behaviors, and they are considered fixed and not involved in the design. Their values are set to \(k_{s1} = 200\) kN/m and \(c_{1} = 7\) N s/m. The suspension system is characterized by a linear spring and a nonlinear fluid viscous damper. These two elements will be designed with an optimization-based approach. The spring is defined by the stiffness coefficient \(k_{s2}\), initially set to 15 N/mm. The damper is defined by the stiffness coefficient \(k_{d}\), the damping coefficient \(c_{d}\), and the exponent α. The mass matrix, the damping matrix, and the initial stiffness matrix are:

Quarter-car with nonlinear passive suspension considered in Section 4.2
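The road excitation parameters quoted in Section 4.2 can be cross-checked with a short Python calculation. The sketch assumes that the road irregularity corresponds to half a period of the sinusoid, which is one reading consistent with the quoted length of 4.36 m; the variable and function names are introduced here for illustration only.

```python
import math

A = 0.05            # road amplitude [m] (50 mm, from the text)
OMEGA = 10.0        # forcing frequency [rad/s] (from the text)
SPEED = 50.0 / 3.6  # vehicle speed [m/s] (50 km/h, from the text)


def road_displacement(t):
    """Sinusoidal road profile z_g(t) = A sin(omega t)."""
    return A * math.sin(OMEGA * t)


def road_acceleration(t):
    """Second time derivative of z_g(t): -A omega^2 sin(omega t)."""
    return -A * OMEGA ** 2 * math.sin(OMEGA * t)


# Assumption: the irregularity spans half a period of the sinusoid.
half_period = math.pi / OMEGA           # [s]
bump_length = SPEED * half_period       # [m]
peak_acceleration = A * OMEGA ** 2      # [m/s^2]

print(f"half period       = {half_period:.4f} s")
print(f"bump length       = {bump_length:.2f} m")
print(f"peak ground accel = {peak_acceleration:.2f} m/s^2")
```

Under this assumption the total duration of 1.25 sec covers roughly two full periods of the excitation, since one period is \(2\pi /\omega \approx 0.63\) sec.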

As in Section 4.1, also in this case we consider a fixed ratio ρ between the stiffness coefficient and the damping coefficient of the damper. In particular, in this application we considered a ratio ρ equal to 1.4519. For the calculation of ρ we followed the same tuning procedure discussed in Pollini et al. (2017), considering the linear system defined by the mass and stiffness matrices presented in (41). The exponent α in this case was set to 0.5. Therefore, the design variable related to the damper is the damping coefficient \(c_{d}\) for a given ratio ρ and exponent α. For convenience, from a numerical point of view, the two design variables are reformulated as follows:

\[ c_{d} = x_{1}\,\bar {c}_{d}, \qquad k_{s2} = x_{2}\,\bar {k}_{s} \qquad (42) \]

where \(\bar {c}_{d}\) and \(\bar {k}_{s}\) are reference damping and stiffness coefficients defined a priori, and \(x_{1,2} \in [0,1]\). In this example we considered \(\bar {c}_{d}= 150\) \(N(s/mm)^{\alpha }\) and \(\bar {k}_{s}= 15\) N/mm. The goal of this problem is to design a suspension system that minimizes the peak acceleration in time of the mass \(m_{2}\) while respecting a constraint on the peak stroke of the suspension. The peak acceleration of the second mass is a parameter related to the comfort of the occupants of the car. Therefore, we want to achieve the best level of comfort by minimizing the peak acceleration \(\ddot {u}_{2,max} = \max _{t} |\ddot {u}_{2}(t)|\). We calculate the peak acceleration through a differentiable approximation of the max function:

where r is a high even number. At the same time, we consider a constraint on the peak suspension stroke, or suspension travel. The peak stroke is also calculated with a differentiable formulation:

where the maximum allowed stroke was set to \(d_{allow} = 50\) mm. Both (43) and (44) have been calculated similarly to (37). The final optimization problem is presented in (45):

Problem (45) has been solved with the Method of Moving Asymptotes (MMA) (Svanberg 1987). The MMA algorithm, in fact, proved to be more effective for this particular optimization problem in converging smoothly towards the final optimized solution. Moreover, with this example we want to show that the sensitivity analysis discussed herein can be applied to different types of dynamic systems. We considered the same moving limits as in the previous application, and the same setting for the parameter r.

The variables \(x_{1}\) and \(x_{2}\) were initially set to one. As a consequence, the associated physical design variables initially were: \(c_{d} = 150\) \(N(s/mm)^{\alpha }\), and \(k_{s2} = 15\) N/mm. The optimization process converged after 24 iterations in MATLAB. The final results of the analysis are presented in Table 4. Figure 10 shows the contour of the objective function and of the constraint. It also shows the optimization path followed by the optimizer. Also in this case each blue circle represents the optimal point of each subsequent convex approximation of the problem at hand. The black circle highlights the final design solution. The values of the constraint and of the objective function over the iterations are plotted in Fig. 11. The response of the optimized system is shown in Figs. 12 and 13. In particular, Fig. 12 shows the acceleration response of the sprung mass \(m_{2}\) in time, and the stroke of the suspension system \(d(t) = u_{2}(t) - u_{1}(t)\) in time. Figure 13 shows the response of the damper in terms of force-displacement and force-velocity.

Contour of the objective function (blue) and of the aggregated constraint (red) considered in Section 4.2. Each blue circle represents the optimal point of a convex programming sub-problem. The black circle represents the final optimized solution

5 Conclusions

In this paper we discussed the adjoint sensitivity analysis and optimization for nonlinear dynamic systems coupled with nonlinear fluid viscous dampers. The dampers are modeled with the Maxwell model for visco-elasticity. It is thus possible to account for the stiffening and damping contributions of the device. The systems are subject to transient excitations, and their response is calculated with the Newmark-β method. In particular, the equilibrium in each time-step is iteratively achieved by means of the Newton-Raphson and Runge-Kutta methods. The core of this paper is the adjoint sensitivity analysis of these systems, and its application to two different design cases. The sensitivity of a generic response function is calculated consistently, and derived in detail, through the discretize-then-differentiate version of the adjoint variable method. The generic framework is then applied to the optimization-based design of an added damping system for seismic retrofitting, and to the optimization-based design of a quarter-car suspension system. Both applications show the importance of the adjoint sensitivity analysis discussed herein in the context of optimization-based design of hysteretic dynamic systems with nonlinear viscous dampers. The results presented could be extended and applied to different design cases, where we expect the methodology discussed here to promote the use of computationally efficient design procedures based on optimization.

References

Almazán JL, Espinoza G, Aguirre JJ (2012) Torsional balance of asymmetric structures by means of tuned mass dampers. Eng Struct 42:308–328

Argyris JH, Mlejnek HP (1991) Dynamics of structures. North Holland

Brodersen ML, Høgsberg J (2016) Hybrid damper with stroke amplification for damping of offshore wind turbines. Wind Energy 19(12):2223–2238

Caetano E, Cunha A, Moutinho C, Magalhaes F (2010) Studies for controlling human-induced vibration of the Pedro e Ines footbridge, Portugal. Part 2: implementation of tuned mass dampers. Eng Struct 32(4):1082–1091

Casado CM, Diaz IM, de Sebastian J, Poncela AV, Lorenzana A (2013) Implementation of passive and active vibration control on an in-service footbridge. Struct Control Health Monit 20(1):70–87

Charmpis DC, Komodromos P, Phocas MC (2012) Optimized earthquake response of multi-storey buildings with seismic isolation at various elevations. Earthq Eng Struct Dyn 41(15):2289–2310

Chopra AK (2011) Dynamics of structures: theory and applications to earthquake engineering. Prentice-Hall, Englewood Cliffs

Constantinou MC, Soong TT, Dargush GF (1998) Passive energy dissipation systems for structural design and retrofit. Multidisciplinary Center for Earthquake Engineering Research Buffalo, New York

Dahl J, Jensen JS, Sigmund O (2008) Topology optimization for transient wave propagation problems in one dimension: design of filters and pulse modulators. Struct Multidiscip Optim 36(6):585–595

Daniel Y, Lavan O (2015) Optimality criteria based seismic design of multiple tuned-mass-dampers for the control of 3D irregular buildings. Earthq Struct 8(1):77–100

Dargush GF, Sant RS (2005) Evolutionary aseismic design and retrofit of structures with passive energy dissipation. Earthq Eng Struct Dyn 34:1601–1626

Elesin Y, Lazarov BS, Jensen JS, Sigmund O (2012) Design of robust and efficient photonic switches using topology optimization. Photon Nanostruct - Fundamentals Appl 10(1):153–165

Georgiou G, Verros G, Natsiavas S (2007) Multi-objective optimization of quarter-car models with a passive or semi-active suspension system. Veh Syst Dyn 45:77–92

Gurobi Optimization Inc. (2016) Gurobi Optimizer Reference Manual. http://www.gurobi.com

Haftka RT, Adelman HM (1989) Recent developments in structural sensitivity analysis. Struct Optim 1(3):137–151

Infanti S, Castellano MG (2007) Sheikh Zayed bridge seismic protection system. In: 10th world conference on seismic isolation, energy dissipation and active vibrations control of structures. Istanbul, Turkey

Infanti S, Papanikolas P, Benzoni G, Castellano MG (2004) Rion antirion bridge: design and full-scale testing of the seismic protection devices. In: 13th world conference on earthquake engineering. Vancouver, B.C., Canada

Infanti S, Robinson J, Smith R (2008) Viscous dampers for high-rise buildings. In: 14th world conference on earthquake engineering (14WCEE). Beijing, China

Jensen JS, Nakshatrala PB, Tortorelli DA (2014) On the consistency of adjoint sensitivity analysis for structural optimization of linear dynamic problems. Struct Multidiscip Optim 49:831–837

Kanno Y (2013) Damper placement optimization in a shear building model with discrete design variables: a mixed-integer second-order cone programming approach. Earthq Eng Struct Dyn 42(11):1657–1676

Kasai K, Oohara K (2001) Algorithm and computer code to simulate nonlinear viscous dampers. In: Passively controlled structure symposium, Yokohama, Japan

Klembczyk AR, Mosher MW (2000) Analysis, optimization, and development of a specialized passive shock isolation system for high speed planing boat seats. Technical Report, Taylor Devices Inc. and Tayco Developments Inc., North Tonawanda

Lavan O, Levy R (2005) Optimal design of supplemental viscous dampers for irregular shear-frames in the presence of yielding. Earthq Eng Struct Dyn 34(8):889–907

Lavan O, Levy R (2010) Performance based optimal seismic retrofitting of yielding plane frames using added viscous damping. Earthq Struct 1(3):307–326

Lavan O, Amir O (2014) Simultaneous topology and sizing optimization of viscous dampers in seismic retrofitting of 3D irregular frame structures. Earthq Eng Struct Dyn 43:1325–1342

Lavan O, Levy R (2006a) Optimal peripheral drift control of 3D irregular framed structures using supplemental viscous dampers. J Earthq Eng 10(6):903–923

Lavan O, Levy R (2006b) Optimal design of supplemental viscous dampers for linear framed structures. Earthq Eng Struct Dyn 35(3):337–356

Le C, Bruns TE, Tortorelli DA (2012) Material microstructure optimization for linear elastodynamic energy wave management, vol 60

Miyamoto HK, Taylor D (2000) Structural control of dynamic blast loading. In: Proceedings from structures congress on advanced technology in structural engineering, 2000, pp 1–8

Nakshatrala PB, Tortorelli DA (2016) Nonlinear structural design using multiscale topology optimization. Part II: Transient formulation, vol 304

National Information Service for Earthquake Engineering - University of California Berkeley (2013) 10 pairs of horizontal ground motions for Los Angeles with a probability of exceedance of 10% in 50 years

Oohara K, Kasai K (2002) Time-history analysis model for nonlinear viscous dampers. In: Structural engineers world congress (SEWC). Yokohama, Japan

Pollini N, Lavan O, Amir O (2016) Towards realistic minimum-cost optimization of viscous fluid dampers for seismic retrofitting. Bull Earthq Eng 14(3):971–998

Pollini N, Lavan O, Amir O (2017) Minimum-cost optimization of nonlinear fluid viscous dampers and their supporting members for seismic retrofitting. Earthq Eng Struct Dyn 46:1941–1961

Quarteroni A, Sacco R, Saleri F (2007) Numerical mathematics, volume 37 of Texts in Applied Mathematics. Springer, Berlin

Seleemah AA, Constantinou MC (1997) Investigation of seismic response of buildings with linear and nonlinear fluid viscous dampers. Technical Report. University at Buffalo, Buffalo

Simon-Talero M, Merino RM, Infanti S (2006) Seismic protection of the Guadalfeo bridge by viscous dampers. In: 1st European conference on earthquake engineering and seismology. Geneva, Switzerland, pp 3–8

Sivaselvan MV, Reinhorn AM (2000) Hysteretic models for deteriorating inelastic structures. J Eng Mech 126(6):633–640

Smith RJ, Willford MR (2007) The damped outrigger concept for tall buildings. Struct Design Tall Special Buildings 16(4):501–517

Soong TT, Dargush GF (1997) Passive energy dissipation systems in structural engineering. Wiley, New York

Suciu CV, Tobiishi T, Mouri R (2012) Modeling and simulation of a vehicle suspension with variable damping versus the excitation frequency. J Telecommun Info Technol 2012(1):83–89

Svanberg K (1987) The method of moving asymptotes - a new method for structural optimization. Int J Numer Methods Eng 24(2):359–373

Takewaki I (1997) Optimal damper placement for minimum transfer functions. Earthq Eng Struct Dyn 26:1113–1124

Taylor D (2015) Personal communication

Tortorelli DA, Michaleris P (1994) Design sensitivity analysis overview and review. Inverse Prob Eng 1:71–105

Turteltaub S (2005) Optimal non-homogeneous composites for dynamic loading. Struct Multidiscip Optim 30(2):101–112

Yang JN, Agrawal AK, Samali B, Wu J-C (2004) Benchmark problem for response control of wind-excited tall buildings. J Eng Mech 130(4):437–446

Yun K-S, Youn S-K (2017) Design sensitivity analysis for transient response of non-viscously damped dynamic systems. Struct Multidiscip Optim 55(6):2197–2210

Acknowledgements

The authors would like to thank the anonymous reviewers for their helpful comments. The research presented in this paper was funded by the Israeli Ministry of Science, Technology and Space. The authors gratefully acknowledge this financial support.


Appendix: Explicit formulations of the matrices involved in the sensitivity analysis


To perform the sensitivity analysis discussed in Section 3, (27) must be solved iteratively backwards over the same time discretization adopted in the system response analysis. The matrix \(\mathbf {A}_{gr,i}\) and the vector \(\mathbf {b}_{gr,i}\) have been partitioned into blocks in order to facilitate their description. More precisely, the block \(\mathbf {A}^{11}_{i}\) of \(\mathbf {A}_{gr,i}\) is also a matrix and it is presented in Table 5. The blocks \(\mathbf {A}^{12}_{i}\) and \(\mathbf {A}^{21}_{i}\) are the following:

Finally, the block \(\mathbf {A}^{22}_{i}\) is defined in Table 6.

Similarly, the blocks of the vector \(\mathbf {b}_{gr,i}\) are also vectors, and they are written as follows:

where \(\dot {u}_{i+\frac {1}{2}} = \frac {1}{2}\left (\dot {u}_{i + 1}+\dot {u}_{i}\right )\). We remark that \(\Delta t_{i+1} = t_{i+1} - t_{i}\), and \(\Delta t_{i} = t_{i} - t_{i-1}\).
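The backward-in-time structure of a discretize-then-differentiate adjoint analysis can be illustrated on a much simpler problem than the one treated in this paper. The Python sketch below is a toy example under stated assumptions and does not reproduce (27) or the block matrices of this appendix: it considers the scalar equation \(\dot {u} = -x\,u + f(t)\) integrated with the backward Euler scheme (not Newmark-β), a response function \(h = \sum _{i} w_{i} u_{i}^{2}\), and a single design variable x. All function names are hypothetical. The adjoint variables are computed by sweeping backwards over the same time grid used in the response analysis, and the resulting gradient is compared with a central finite difference.

```python
import math


def forward(x, u0, forcing, dt):
    """States u_1..u_N from backward Euler: (1 + dt*x) u_i - u_{i-1} - dt*f_i = 0."""
    states = []
    u = u0
    for f in forcing:
        u = (u + dt * f) / (1.0 + dt * x)
        states.append(u)
    return states


def response(x, u0, forcing, dt, weights):
    """Response function h = sum_i w_i * u_i^2."""
    states = forward(x, u0, forcing, dt)
    return sum(w * u * u for w, u in zip(weights, states))


def adjoint_gradient(x, u0, forcing, dt, weights):
    """dh/dx from a backward sweep over the discrete residuals."""
    states = forward(x, u0, forcing, dt)
    lam_next = 0.0  # adjoint variable beyond the final time step
    grad = 0.0
    for u, w in zip(reversed(states), reversed(weights)):
        # Stationarity w.r.t. u_i: 2 w_i u_i + lam_i (1 + dt*x) - lam_{i+1} = 0
        lam = (lam_next - 2.0 * w * u) / (1.0 + dt * x)
        # Explicit dependence of the residual on x: dR_i/dx = dt * u_i
        grad += lam * dt * u
        lam_next = lam
    return grad


dt, n_steps, u0, x = 0.01, 125, 0.1, 2.0
forcing = [math.sin(10.0 * dt * (i + 1)) for i in range(n_steps)]
weights = [dt] * n_steps

g_adjoint = adjoint_gradient(x, u0, forcing, dt, weights)
eps = 1e-6
g_fd = (response(x + eps, u0, forcing, dt, weights)
        - response(x - eps, u0, forcing, dt, weights)) / (2.0 * eps)
print(g_adjoint, g_fd)
```

Because the adjoint equations are derived from the discretized residuals, the adjoint gradient of this toy problem agrees with the finite difference value up to the truncation and round-off error of the finite difference itself.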


Cite this article

Pollini, N., Lavan, O. & Amir, O. Adjoint sensitivity analysis and optimization of hysteretic dynamic systems with nonlinear viscous dampers. Struct Multidisc Optim 57, 2273–2289 (2018). https://doi.org/10.1007/s00158-017-1858-2
