Abstract

This paper presents a new hybrid algorithm that combines Particle Swarm Optimization (PSO) and Differential Evolution (DE). An alternative replication strategy is introduced to keep individuals from becoming trapped in suboptimal solutions. Two groups are generated at the start: one by the position updating method of PSO, the other by the mutation strategy of DE. Both groups are then updated according to the alternative replication strategy, in which the poorer half of the population is replaced by copies of the better half. The resulting group is passed through the optimization process of DE to improve population diversity. In addition, the scaling factor is adjusted to enhance the search ability. Simulations on eight benchmark functions show that the proposed algorithm outperforms the algorithms it is compared with.

1 Introduction

Particle Swarm Optimization (PSO), proposed by Kennedy and Eberhart [1], is a well-known swarm intelligence algorithm for global optimization. In every iteration, the particles are updated on the basis of individual and social experience, which improves both convergence speed and search accuracy. Many modifications have been developed since. A recent variant updates the particle positions using two selected functions combined with individual experience to avoid falling into local optima [2]. In [3], a fitness function was defined to identify the parameters of a nonlinear dynamic hysteretic model, which improved convergence to a certain extent. However, premature clustering in the early iterations remains a common problem in simulation experiments.

Differential Evolution (DE) [4] is a population-based function optimization method that, like the Genetic Algorithm (GA), consists of mutation, crossover, and selection. In DE, new individuals are generated from the information of several previous individuals, which helps the search escape stagnation. Several improved DE algorithms have been proposed to obtain better results. An improved DE using the Taguchi method with sliding levels showed a powerful global search ability and obtained better solutions [5]. A fuzzy selection operation was used in another improved DE to reduce the complexity of multiple attribute decisions and enhance population diversity [6]. Although DE has advantages in global optimization, it depends on control parameters that are hard to choose for high-dimensional complex problems.

Given the complementary strengths and weaknesses of PSO and DE, many hybrid algorithms have been proposed to combine the advantages of both and to better solve practical problems. The hybrid PSO and DE with population size reduction (HPSODEPSR) [7] reaches an optimal or near-optimal solution faster than the algorithms it was compared with. Bacterial Foraging Optimization (BFO) hybridized with PSO and DE [8] was introduced to handle the dynamic economic dispatch problem effectively. To improve diversity and let each subgroup reach a different optimal solution in the range of fitness values, Zuo and Xiao [9] used a hybrid operator and a multi-population strategy that perform the PSO and DE operations in turn. The hybrid algorithm PSO-DE [10] escaped stagnation, converged faster, and improved overall performance. A modified algorithm hybridizing PSO and DE with an aging leader and challengers was proposed to find the optimal parameters of a PID controller quickly [11]. Although these hybrid algorithms improve on the original algorithms (i.e. PSO and DE), premature stagnation is still a major problem.

This paper presents a novel hybrid algorithm of PSO and DE (DEPSO) with an alternative replication strategy to overcome the above-mentioned problems. In the proposed algorithm, the population is separated into two groups generated by two different methods, i.e. the velocity updating strategy of PSO and the mutation strategy of DE. A new population is produced according to the alternative replication strategy: the poorer half of the population is eliminated, while the other half is reproduced for the next round of evolution. To enhance population diversity, the scaling factor of DEPSO is adjusted according to a linearly decreasing rule.

The remainder of this paper is organized as follows. Section 2 gives a brief introduction to the PSO and DE algorithms. Section 3 describes the hybrid algorithm of PSO and DE with the alternative replication strategy in detail. Section 4 gives the experimental results of the simulations on benchmark functions. Finally, Sect. 5 presents the conclusion.

2 Description of Algorithms

2.1 Particle Swarm Optimization

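In the standard PSO algorithm, a group of particles flies through the search space to find the optimal location. The particles are given random positions x and velocities v at initialization. In each iteration, the best position found by each particle, $pbest_{id}$, and the best position found by the whole swarm, $gbest_{d}$, are used to guide the particle to its new position. Equations (1)–(2) give the update rule [1]:

$$ v_{id} = \omega v_{id} + c_{1} rand_{1} (pbest_{id} - x_{id} ) + c_{2} rand_{2} (gbest_{d} - x_{id} ) $$(1)

$$ x_{id} = x_{id} + v_{id} $$(2)

where $x_{id}$ and $v_{id}$ are the position and velocity of the ith particle in the dth dimension (i = 1, ..., N; d = 1, ..., D), N is the number of particles, D is the dimension of the search space, $c_{1}$ and $c_{2}$ are acceleration factors, and $rand_{1}$ and $rand_{2}$ are random numbers uniformly distributed in [0, 1]. Finally, ω is the inertia weight, adjusted by Eq. (3) as in [12]:

$$ \omega = \omega_{max} - (\omega_{max} - \omega_{min} )\frac{G}{G_{max} } $$(3)

where $\omega_{max}$ and $\omega_{min}$ are the maximum and minimum values of the inertia weight, respectively, and G and $G_{max}$ are the current iteration number and the maximum number of iterations, respectively.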
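The PSO update can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard velocity and position rules of [1] with the linearly decreasing inertia weight of [12]; the function names `inertia_weight` and `pso_step` and the list-of-lists representation are hypothetical choices made here, not the authors' implementation.

```python
import random


def inertia_weight(g, g_max, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia weight (assumed form of Eq. (3))."""
    return w_max - (w_max - w_min) * g / g_max


def pso_step(x, v, pbest, gbest, w, c1=2.0, c2=2.0, rng=random):
    """Apply the velocity and position updates once to every particle.

    x, v, pbest are lists of D-dimensional lists; gbest is one D-dimensional list.
    Returns the new positions and velocities without modifying the inputs.
    """
    new_x, new_v = [], []
    for xi, vi, pi in zip(x, v, pbest):
        # Velocity update: inertia term + cognitive term + social term.
        vi_next = [
            w * vd + c1 * rng.random() * (pd - xd) + c2 * rng.random() * (gd - xd)
            for xd, vd, pd, gd in zip(xi, vi, pi, gbest)
        ]
        new_v.append(vi_next)
        # Position update: move each particle by its new velocity.
        new_x.append([xd + vd for xd, vd in zip(xi, vi_next)])
    return new_x, new_v
```

A fresh random number is drawn for every dimension here; drawing one per particle is an equally common convention, and the text does not say which one was used.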

2.2 Differential Evolution Algorithm

DE, proposed by Storn and Price [4], has proved effective in solving practical problems. The outline of DE is as follows:

- Step 1: initialize the individuals within the upper and lower bounds of the search space, and evaluate the fitness of each individual.

- Step 2: compare the fitness of all individuals and record the best individual.

- Step 3: generate new vectors through the mutation process. The mutation rule is given by Eq. (4):

$$ v_{i} = x_{r_{1} } + F(x_{r_{2} } - x_{r_{3} } ), \quad i = 1, \ldots ,N $$(4)

where N is the number of individuals, $x_{i}$ is the ith individual, and $v_{i}$ is the ith mutant vector. F is the scaling factor, and $r_{1}$, $r_{2}$ and $r_{3}$ are mutually different indices randomly selected from [1, N].

- Step 4: cross the current individuals with the mutant vectors to obtain trial vectors. Equation (5) is the crossover formula:

$$ u_{ij} = \begin{cases} v_{ij} , & \text{if } rand_{j} (0,1) \le CR \text{ or } j = j_{rand} \\ x_{ij} , & \text{otherwise} \end{cases} \quad j = 1, \ldots ,D $$(5)

where D is the number of parameters, and $u_{ij}$ is the jth component of the ith trial vector after crossover. CR is the crossover probability, and $j_{rand}$ is a randomly selected index in the range [1, D].

- Step 5: greedy selection (i.e. Eq. (6)) is used to choose the individuals for the next iteration.

$$ x_{i} = \begin{cases} u_{i} , & \text{if } f(u_{i} ) \le f(x_{i} ) \\ x_{i} , & \text{otherwise} \end{cases} $$(6)
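Steps 3 to 5 can be sketched as a single Python function. This is an illustrative sketch of one DE generation following Eqs. (4)–(6); the name `de_generation`, the default values of `f` and `cr`, and the 0-based indexing are assumptions made for the example.

```python
import random


def de_generation(pop, fitness, f=0.5, cr=0.5, rng=random):
    """One DE generation: mutation (Eq. 4), crossover (Eq. 5), selection (Eq. 6).

    pop is a list of D-dimensional lists and needs at least three individuals;
    fitness maps an individual to a value to be minimized.
    """
    n, d = len(pop), len(pop[0])
    next_pop = []
    for i in range(n):
        # Eq. (4): three mutually different indices (0-based here).
        r1, r2, r3 = rng.sample(range(n), 3)
        mutant = [pop[r1][j] + f * (pop[r2][j] - pop[r3][j]) for j in range(d)]
        # Eq. (5): binomial crossover; j_rand forces at least one mutant component.
        j_rand = rng.randrange(d)
        trial = [
            mutant[j] if (rng.random() <= cr or j == j_rand) else pop[i][j]
            for j in range(d)
        ]
        # Eq. (6): greedy selection keeps the trial only if it is no worse.
        next_pop.append(trial if fitness(trial) <= fitness(pop[i]) else pop[i])
    return next_pop
```

Because of the greedy selection in Eq. (6), no individual in the returned population is worse than the individual it replaces.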

3 DEPSO Algorithm with Alternative Replication Strategy

Because PSO searches information rapidly, it tends to reach suboptimal solutions more often. In contrast, the population of DE tends to become more diverse as the number of iterations increases, but at a higher computational cost. To take advantage of both algorithms, this paper proposes a new hybrid DEPSO method that improves the search capability of the particles at a lower computational time.

To prevent individuals from sinking into suboptimal solutions, the following alternative replication strategy is used to optimize the initial particles of each iteration.

At the beginning of each iteration, a new group $P_{1}$ is generated by the PSO algorithm (i.e. Eqs. (1)–(2)). Taking into account the best position found by every individual, $x_{pbest}$, another group $P_{2}$ is generated by the mutation process of the DE algorithm (i.e. Eq. (7)). Based on the fitness values, the updated groups (i.e. $P_{1}$ and $P_{2}$) are compared with the initial group $P_{0}$, and the better individuals are preserved to form the new $P_{1}$ and $P_{2}$.

where N is the number of individuals, $x_{i}$ is the ith individual, F is the scaling factor, and $r_{4}$ and $r_{5}$ are indices selected from [1, N].

The individuals of the new groups (i.e. $P_{1}$ and $P_{2}$) are sorted according to their fitness values, and the sorted groups are compared so that the superior individuals are retained to form a group $P_{3}$. The half of $P_{3}$ with the poorer fitness values is then eliminated, and the remaining individuals are reproduced to keep the number of individuals unchanged.
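One possible reading of this strategy is sketched below in Python. The rank-wise comparison of the two sorted groups is an assumption, since the text does not spell out how the groups are compared, and the function name `alternative_replication` is hypothetical.

```python
def alternative_replication(p1, p2, fitness):
    """Form P3 from P1 and P2, then replace its poorer half with copies of the better half.

    p1 and p2 are equally sized lists of individuals; fitness is minimized.
    """
    s1 = sorted(p1, key=fitness)
    s2 = sorted(p2, key=fitness)
    # Assumed reading: compare the sorted groups rank by rank and keep the better one.
    p3 = [a if fitness(a) <= fitness(b) else b for a, b in zip(s1, s2)]
    p3.sort(key=fitness)
    n = len(p3)
    keep = n - n // 2  # size of the better half (rounded up when n is odd)
    # Survivors followed by copies of the best ones, so the group size stays n.
    return [list(ind) for ind in p3[:keep] + p3[:n - keep]]
```

For example, with `p1 = [[3.0], [1.0], [4.0], [2.0]]`, `p2 = [[0.5], [5.0], [6.0], [7.0]]` and the squared value as fitness, the merged group is `[[0.5], [2.0], [3.0], [4.0]]`, and replication returns `[[0.5], [2.0], [0.5], [2.0]]`.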

To overcome the shortcoming of population reduction, the mutation factor decreases linearly as the number of iterations increases during the mutation procedure. The scaling factor is controlled by Eq. (8):

$$ F = F_{max} - (F_{max} - F_{min} )\frac{G}{G_{max} } $$(8)

where $F_{max}$, $F_{min}$, G, and $G_{max}$ are the maximum mutation factor, the minimum mutation factor, the current iteration number, and the maximum number of iterations, respectively.
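As a small worked example, the linear schedule can be evaluated at a few iterations. The sketch assumes that Eq. (8) is the usual linear interpolation between $F_{max}$ and $F_{min}$; the default values 0.9 and 0.4 and the 5000-iteration budget are the settings reported in Sect. 4.2, and the function name `scaling_factor` is illustrative.

```python
def scaling_factor(g, g_max, f_max=0.9, f_min=0.4):
    """Scaling factor that decreases linearly from f_max (g = 0) to f_min (g = g_max)."""
    return f_max - (f_max - f_min) * g / g_max


# Early iterations use a large F (wide exploration), late iterations a small F
# (fine local search): roughly 0.9 at the start, 0.65 halfway, 0.4 at the end.
for g in (0, 2500, 5000):
    print(g, round(scaling_factor(g, 5000), 3))
```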

Finally, the DE algorithm is run again on the group obtained with the alternative replication strategy. The scheme of DEPSO is described in detail as follows:

- Step 1: initialize the position and velocity of every individual, and generate the initial group $P_{0}$.

- Step 2: calculate the fitness of each individual, and determine the best solution of each individual, pbest, and the best solution of all individuals, gbest.

- Step 3: update the position and velocity of the individuals using Eqs. (1)–(2), and generate the new group $P_{1}$.

- Step 4: mutate the initial individuals (i.e. Eq. (7)) to produce a new group $P_{2}$.

- Step 5: calculate the fitness of the two groups (i.e. $P_{1}$ and $P_{2}$), and compare it with the fitness of the initial group $P_{0}$ to update $P_{1}$ and $P_{2}$, respectively.

- Step 6: sort $P_{1}$ and $P_{2}$ by fitness. A new group $P_{3}$ is formed from the superior individuals found by comparing the sorted groups, and $P_{3}$ is then updated by the alternative replication strategy.

- Step 7: form new mutant vectors from the group $P_{3}$ by the mutation process (i.e. Eq. (4)).

- Step 8: apply Eq. (5) to obtain trial vectors through the crossover of individuals and mutant vectors, as in the DE algorithm.

- Step 9: select the best individuals using Eq. (6); the new offspring $P_{0}$ are passed to the next iteration.

Figure 1 presents the flowchart of DEPSO.
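The nine steps can be put together in a compact Python sketch. It is a hedged illustration rather than the authors' code: the pbest-based mutation `pbest_i + F * (x_r4 - x_r5)` is an assumed form of Eq. (7), the rank-wise merge in Step 6 is an assumed reading of the comparison of the sorted groups, both schedules are taken to be linear, velocities start at zero, and bound handling is omitted. The name `depso` and all parameter names are hypothetical.

```python
import random


def depso(fitness, dim, lo, hi, n=20, g_max=200, c1=2.0, c2=2.0,
          w_max=0.9, w_min=0.4, f_max=0.9, f_min=0.4, cr=0.5, seed=0):
    """Minimize fitness over [lo, hi]^dim with a DEPSO-style loop (needs n >= 3)."""
    rng = random.Random(seed)

    def better(a, b):
        return a if fitness(a) <= fitness(b) else b

    # Steps 1-2: initial group P0, velocities, personal and global bests.
    p0 = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    vel = [[0.0] * dim for _ in range(n)]
    pbest = [list(x) for x in p0]
    gbest = list(min(pbest, key=fitness))
    for g in range(g_max):
        w = w_max - (w_max - w_min) * g / g_max  # inertia weight schedule
        f = f_max - (f_max - f_min) * g / g_max  # scaling factor schedule
        # Step 3: PSO update (Eqs. 1-2) gives P1.
        p1 = []
        for i in range(n):
            vel[i] = [w * vel[i][d]
                      + c1 * rng.random() * (pbest[i][d] - p0[i][d])
                      + c2 * rng.random() * (gbest[d] - p0[i][d])
                      for d in range(dim)]
            p1.append([p0[i][d] + vel[i][d] for d in range(dim)])
        # Step 4: pbest-based mutation gives P2 (ASSUMED form of Eq. 7).
        p2 = []
        for i in range(n):
            r4, r5 = rng.sample(range(n), 2)
            p2.append([pbest[i][d] + f * (p0[r4][d] - p0[r5][d]) for d in range(dim)])
        # Step 5: keep the better of each candidate and its P0 counterpart.
        p1 = [better(a, b) for a, b in zip(p1, p0)]
        p2 = [better(a, b) for a, b in zip(p2, p0)]
        # Step 6: rank-wise merge into P3, then alternative replication.
        p3 = [better(a, b) for a, b in
              zip(sorted(p1, key=fitness), sorted(p2, key=fitness))]
        p3.sort(key=fitness)
        keep = n - n // 2
        p3 = [list(x) for x in p3[:keep] + p3[:n - keep]]
        # Steps 7-9: one DE generation (Eqs. 4-6) on P3 gives the next P0.
        nxt = []
        for i in range(n):
            r1, r2, r3 = rng.sample(range(n), 3)
            j_rand = rng.randrange(dim)
            trial = [p3[r1][d] + f * (p3[r2][d] - p3[r3][d])
                     if (rng.random() <= cr or d == j_rand) else p3[i][d]
                     for d in range(dim)]
            nxt.append(better(trial, p3[i]))
        p0 = nxt
        # Refresh personal and global bests for the next iteration.
        pbest = [better(x, pb) for x, pb in zip(p0, pbest)]
        gbest = list(min(pbest + [gbest], key=fitness))
    return gbest, fitness(gbest)
```

A call such as `depso(lambda x: sum(v * v for v in x), dim=5, lo=-5.0, hi=5.0)` returns the best position found and its fitness. Note that after replication the individual at index `i` is no longer tied to a single particle history, so the pairing of `p0[i]` with `pbest[i]` is one of several reasonable interpretations.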

4 Experiments and Analysis

4.1 Benchmark Functions and Algorithms

To verify the performance of the proposed DEPSO algorithm, eight benchmark functions [13] (i.e. Sphere f1, SumPowers f2, Rosenbrock f3, Quartic f4, Rastrigin f5, Griewank f6, Ackley f7, Schwefel2.22 f8) are used as test problems. These eight functions were chosen mainly because they include both unimodal functions (i.e. f1, f2, f3, and f4) and multimodal functions (i.e. f5, f6, f7, and f8). All of them are minimization problems whose minimum value is known to be zero. DEPSO is compared on these functions with several classic algorithms, i.e. PSO, DE, the Genetic Algorithm (GA) [14], the Artificial Bee Colony algorithm (ABC) [15, 16], and Bacterial Foraging Optimization (BFO) [17]. All algorithms are implemented and tested in MATLAB R2014a.
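For reference, six of the eight functions can be written in Python using their widely used textbook definitions. These definitions are assumed here and may differ in detail from the versions in [13]; SumPowers and Quartic are left out because their definitions vary between sources (for example, with or without a noise term).

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def rosenbrock(x):
    # Minimum 0 at x = (1, ..., 1).
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def rastrigin(x):
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def griewank(x):
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i + 1)) for i, v in enumerate(x))
    return s - p + 1.0


def ackley(x):
    d = len(x)
    a = -20.0 * math.exp(-0.2 * math.sqrt(sum(v * v for v in x) / d))
    b = -math.exp(sum(math.cos(2.0 * math.pi * v) for v in x) / d)
    return a + b + 20.0 + math.e


def schwefel_2_22(x):
    return sum(abs(v) for v in x) + math.prod(abs(v) for v in x)
```

All of these reach their minimum of zero at the origin except Rosenbrock, whose minimum lies at the all-ones vector.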

4.2 Experimental Parameters

The parameters shared by the six optimization algorithms are set as follows: the population size is 50 and the maximum number of iterations is 5000. Each function is run 30 times with search space dimensions of 30, 50, and 80. The parameters of GA, ABC and BFO are taken from [18], except that the number of reproductions in BFO is 25. The remaining parameters of PSO, DE and DEPSO are as follows. In PSO, $c_{1} = c_{2} = 2$, $\omega_{max} = 0.9$, and $\omega_{min} = 0.4$. In DE, CR = 0.5, and F ranges from 0.4 to 0.9. In DEPSO, $c_{1}$, $c_{2}$, $\omega_{max}$ and $\omega_{min}$ are the same as in PSO, and CR and F are the same as in DE. These values were chosen on the basis of multiple simulations.

4.3 Experiment Results and Discussion

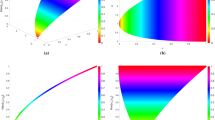

All benchmark functions were run with each of the six search methods. The mean fitness value and the standard deviation obtained by the six optimization algorithms are displayed in Table 1, where bold type marks the best result among the six algorithms. Figure 2 shows the convergence curves of all the algorithms on the different test functions with dimension 50. To make the curves easier to read, the results in Fig. 2 are plotted as base-10 logarithms.
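The reported statistics and the logarithmic curves could be computed along the following lines. This is a sketch under assumptions: the text does not say whether the population or the sample standard deviation was used (the population form is used here), and the clipping floor that keeps exact zeros finite under the logarithm is an illustrative choice. The helper names are hypothetical.

```python
import math
import statistics


def summarize_runs(final_values):
    """Mean and (population) standard deviation of the final fitness over independent runs."""
    return statistics.mean(final_values), statistics.pstdev(final_values)


def log10_curve(best_so_far, floor=1e-300):
    """Base-10 logarithm of a convergence curve, clipped so exact zeros stay finite."""
    return [math.log10(max(v, floor)) for v in best_so_far]
```

With 30 runs per function, `summarize_runs` would be called once per algorithm, function, and dimension on the list of 30 final fitness values.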

As shown in Table 1, DEPSO obtains the best mean and standard deviation among all the algorithms in almost all cases on the eight benchmark functions, which indicates that the hybrid DEPSO algorithm is better than the other algorithms in terms of search accuracy. In addition, the proposed algorithm reaches the minimum value (i.e. zero) on the Sphere, SumPowers, Quartic, Rastrigin, Griewank, and Schwefel2.22 functions, which indicates a high convergence precision on the selected functions.

In terms of dimensionality, DEPSO continues to perform better than the other algorithms as the dimension increases. From Fig. 2, we can conclude that DEPSO converges significantly faster than the other algorithms and that its converged results are closer to the optimal values. Overall, DEPSO performs better on both unimodal and multimodal functions.

5 Conclusion

A hybrid algorithm integrating the advantages of PSO and DE is proposed in this paper. The results show that the DEPSO algorithm outperforms the other algorithms in terms of mean and standard deviation. Although DE with a nonlinearly decreasing scaling factor can obtain similar solutions on some benchmark functions (e.g., Rosenbrock and Rastrigin), its convergence speed is not as good as that of the proposed DEPSO method. Thus, the proposed alternative replication strategy can enhance the performance of the hybrid algorithm. Our future work will focus on applying the proposed algorithm to real-world problems and on developing further hybrid methods to obtain better solutions.

References

1. Kennedy, J., Eberhart, R.C.: Particle swarm optimization. In: IEEE International Conference on Neural Networks, Piscataway, pp. 1942–1948 (1995)

2. Guedria, N.B.: Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl. Soft Comput. 40, 455–467 (2016)

3. Zhang, J., Xia, P.: An improved PSO algorithm for parameter identification of nonlinear dynamic hysteretic models. J. Sound Vib. 389 (2016)

4. Storn, R., Price, K.: Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11(4), 341–359 (1997)

5. Tsai, J.T.: Improved differential evolution algorithm for nonlinear programming and engineering design problems. Neurocomputing 148, 628–640 (2015)

6. Pandit, M., Srivastava, L., Sharma, M.: Environmental economic dispatch in multi-area power system employing improved differential evolution with fuzzy selection. Appl. Soft Comput. 28, 498–510 (2015)

7. Ali, A.F., Tawhid, M.A.: A hybrid PSO and DE algorithm for solving engineering optimization problems. Appl. Math. Inf. Sci. 10(2), 431–449 (2016)

8. Vaisakh, K., Praveena, P., Rao, S.R.M.: A bacterial foraging PSO-DE algorithm for solving reserve constrained dynamic economic dispatch problem. In: 2011 IEEE International Conference on Fuzzy Systems, pp. 153–159 (2011)

9. Zuo, X., Xiao, L.: A DE and PSO based hybrid algorithm for dynamic optimization problems. Soft Comput. 18(7), 1405–1424 (2014)

10. Liu, H., Cai, Z., Wang, Y.: Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl. Soft Comput. 10(2), 629–640 (2010)

11. Moharam, A., El-Hosseini, M.A., Ali, H.A.: Design of optimal PID controller using hybrid differential evolution and particle swarm optimization with an aging leader and challengers. Appl. Soft Comput. 38, 727–737 (2016)

12. Shi, Y., Eberhart, R.: A modified particle swarm optimizer. In: Proceedings of IEEE International Conference Evolutionary Computation, Anchorage, pp. 69–73 (1998)

13. Niu, B., Liu, J., Zhang, F., Yi, W.: A cooperative structure-redesigned-based bacterial foraging optimization with guided and stochastic movements. In: Huang, D.-S., Jo, K.-H. (eds.) ICIC 2016. LNCS, vol. 9772, pp. 918–927. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-42294-7_82

14. Holland, J.H.: Adaptation in natural and artificial systems. Control Artif. Intell. 6(2), 126–137 (1975)

15. Karaboga, D.: An idea based on honey bee swarm for numerical optimization. Engineering Faculty, Computer Engineering Department, Erciyes University, Technical report - TR06 (2005)

16. Karaboga, D., Basturk, B.: A powerful and efficient algorithm for numerical function optimization: Artificial Bee Colony (ABC) algorithm. J. Global Optim. 39(3), 459–471 (2007)

17. Passino, K.M.: Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. 22(3), 52–67 (2002)

18. Wang, H., Zuo, L., Liu, J., Yang, C., Li, Ya., Baek, J.: A comparison of heuristic algorithms for bus dispatch. In: Tan, Y., Takagi, H., Shi, Y., Niu, B. (eds.) ICSI 2017. LNCS, vol. 10386, pp. 511–518. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-61833-3_54

Acknowledgment

This work is partially supported by the National Natural Science Foundation of China (Grant No. 61472257), the Natural Science Foundation of Guangdong Province (2016A030310074), the Guangdong Provincial Science and Technology Plan Project (No. 2013B040403005), and the Research Foundation of Shenzhen University (85303/00000155).

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Zuo, L., Liu, L., Wang, H., Tan, L. (2018). A Hybrid Differential Evolution Algorithm and Particle Swarm Optimization with Alternative Replication Strategy. In: Tan, Y., Shi, Y., Tang, Q. (eds) Advances in Swarm Intelligence. ICSI 2018. Lecture Notes in Computer Science, vol 10941. Springer, Cham. https://doi.org/10.1007/978-3-319-93815-8_46

DOI: https://doi.org/10.1007/978-3-319-93815-8_46

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-93814-1

Online ISBN: 978-3-319-93815-8

eBook Packages: Computer Science, Computer Science (R0)