Abstract

Obesity is the result of a gene by environment interaction. A genetic legacy from our evolutionary past interacts with our modern environment to make some people obese. Why we have a genetic predisposition to obesity is problematic, because obesity has many negative consequences. How could natural selection favor the spread of such a disadvantageous trait? From an evolutionary perspective, three different types of explanation have been proposed to resolve this anomaly. The first is that obesity was once adaptive, in our evolutionary past. For example, it may have been necessary to support the development of large brains, or it may have enabled us to survive (or sustain fecundity) through periods of famine. People carrying so-called thrifty genes that enabled the efficient storage of energy as fat between famines would be at a selective advantage. In the modern world, however, people who have inherited these genes deposit fat in preparation for a famine that never comes, and the result is widespread obesity. The key problem with these adaptive scenarios is to understand why, if obesity was historically so advantageous, many people did not inherit these alleles and remain slim in modern society. The second type of explanation is that most mutations in the genes that predispose us to obesity are neutral and have been drifting over evolutionary time (so-called drifty genes), leading some individuals to be obesity prone and others obesity resistant. The third type of explanation is that obesity is neither adaptive nor neutral and may never even have existed in our evolutionary past; instead, the modern predisposition to it is a maladaptive by-product of positive selection on some other trait. Examples of this type of explanation are the suggestion that obesity results from variation in brown adipose tissue thermogenesis, and the idea that we overconsume energy to satisfy our need for protein (the protein leverage hypothesis).
This chapter reviews the evidence for and against these different scenarios, concluding that adaptive scenarios are unlikely, but the other ideas may provide possible evolutionary contexts in which to understand the modern obesity phenomenon.

Keywords

- Obesity

- Evolution

- Adaptation

- Natural selection

- Thrifty genotype

- Drifty genes

- Genetic drift

- Brown adipose tissue

- Protein leverage hypothesis

Introduction

The obesity epidemic is a recent phenomenon (Chap. 1, “Overview of Metabolic Syndrome” on “Epidemiology”). In as little as 50 years, there has been a progressive rise in the worldwide prevalence of obesity. A trend that started in the Western world (Flegal et al. 1998) has rapidly spread to developing countries; to date, the only places yet to experience the epidemic are a few areas in sub-Saharan Africa. A change in fatness over such a short timescale cannot reflect a change in the genetic makeup of the populations involved (Power and Schulkin 2009). Most of the recent change must therefore be driven by environmental factors (Chap. 15, “Diet and Obesity (Macronutrients, Micronutrients, Nutritional Biochemistry)” on “Environmental Factors”). Yet even in the most obese countries, large numbers of individuals remain lean (e.g., Ogden et al. 2006; Flegal et al. 2010). These individual differences in obesity susceptibility mostly reflect genetic factors (Allison et al. 1996; Ginsburg et al. 1998; Segal and Allison 2002; Chap. 9, “Genetics of Obesity” on “Genetic Factors”). The obesity epidemic is therefore a consequence of a gene by environment interaction (Speakman 2004; Levin 2010; Speakman et al. 2011). Some people have a genetic predisposition to deposit fat, reflecting their evolutionary history, which results in obesity when they are exposed to the modern environment.

However, this interpretation of why we become obese has a major problem. We know that obesity is a predisposing factor for several serious noncommunicable diseases (Chap. 35, “Metabolic Syndrome, GERD, Barrett’s Esophagus” on “Diseases Associated with Obesity”). That obesity has a large genetic component, yet increases the risk of these serious diseases, is puzzling, because evolutionary theory holds that natural selection favors only traits that increase fitness (survival or fecundity). How could natural selection have favored the spread of genes for obesity, a phenotype with a negative impact on survival? This might be explained if obesity increased fecundity enough to offset the survival disadvantage, but in fact obese people also have reduced fecundity (Zaadstra et al. 1993; but see Rakesh and Syam 2015), which makes the anomaly even worse. How did the predisposition to obesity evolve? What were the key events in our evolutionary history that led us to the current situation?

Why Do Animals and Humans Have Adipose Tissue?

The first law of thermodynamics states that energy can be neither created nor destroyed, but only transformed, and the second law states that there is an overall direction in the transformation, such that disorder (entropy) increases. Living organisms must obey these fundamental physical laws, and these laws have major consequences for them. As low-entropy systems, living things must continuously fight the tendency for entropy to increase. Complex organic molecules like proteins, lipids, DNA, and RNA become damaged and corrupted and must be continuously recycled and rebuilt to maintain their function. Doing this requires the continuous transformation of large amounts of energy. Hence, even when an organism is outwardly doing nothing, it still uses large amounts of energy to maintain its low-entropy state. Living organisms must also grow, move around to find mates and food, defend themselves against pathogens, and reproduce, all of which require energy. The requirement for energy by living beings is therefore continuous. In animals, however, energy can be obtained only by feeding, and feeding is discontinuous. Since energy cannot be created or destroyed, animals need some mechanism(s) to store energy so that the episodic supply can be matched to the continuous requirement. The key storage mechanisms that allow us to get from one meal to the next are glucose and, particularly, glycogen in the liver and skeletal muscle. A useful analogy for this system is a regular bank account (Speakman 2014). Money is periodically deposited into the account (similar to food intake), where it is stored temporarily (like glucose and glycogen stores), and is depleted by continuous spending (energy expenditure). The bank account acts as an essential buffer between discontinuous income and continuous spending.

There are, however, numerous situations where animals struggle to get enough food to meet their demands. In these instances, animals need a longer-term storage mechanism than glucose and glycogen, and this is generally provided by body fat. To return to the bank account analogy, body fat is like a savings account. During periods when food is abundant, animals can deposit energy into their body fat (the savings account), so that it is available in the future when demand exceeds supply (Johnson et al. 2013). Adipose tissue therefore exists primarily as a buffer that supplies energy during periods when food supply is insufficient to meet energy demands.

Why Do We Get Obese?

Given this background to why adipose tissue exists, there have been three different types of evolutionary explanation for why, in modern society, we fill these fat stores to tremendous levels (Table 1; also reviewed in Speakman 2013a, 2014). First, there is the adaptive viewpoint. This suggests that obesity was adaptive in the past, but in the changed environment of the modern world, the positive consequences of being obese have been replaced by negative impacts. Second, there is the neutral viewpoint. This suggests that obesity has not been subject to strong selection in the past; rather, the genetic predisposition has arisen by neutral evolutionary processes such as genetic drift. Finally, there is the maladaptive viewpoint. This suggests that obesity has never been advantageous and that historically people were never obese (except for carriers of some rare genetic mutations). Instead, the modern propensity to become obese is a by-product of positive selection on some other, advantageous trait. Because evolution is by definition a genetic process, evolutionary explanations seek to explain where the genetic variation that causes a predisposition to obesity comes from. Another set of ideas is related to evolutionary explanations but does not concern genetic change: for example, the “thrifty phenotype” hypothesis (Hales and Barker 1992; Wells 2007; Prentice et al. 2005), the “thrifty epigenotype” hypothesis (Stoger 2008), and the oxymoronic “nongenetic evolution” hypothesis (Archer 2015) (Table 1). This chapter does not address these non-evolutionary ideas, but some of them are treated elsewhere in this volume (Chap. 13, “Fetal Metabolic Programming,” Aitkin).

Adaptive Interpretations of Obesity

The primary adaptive viewpoint is that, during our evolution, the accumulation of fat tissue provided a fitness advantage and was therefore positively selected. This past positive selection is why some individuals have a predisposition to become obese today in spite of its negative effects. Humans are not the only animals to become obese (Johnson et al. 2013). Several other groups of mammals and birds deposit large amounts of body fat, at levels equivalent to human obesity: for example, some mammals fatten before hibernation (e.g., Krulin and Sealander 1972; Ward and Armitage 1981; Boswell et al. 1994; Kunz et al. 1998; Speakman and Rowland 1999; Martin 2008), and some birds fatten before migration (e.g., Moore and Kerlinger 1987; Klaasen and Biebach 1994; Moriguchi et al. 2010; Repenning and Fontana 2011). Several other animals, including voles (Krol et al. 2005; Li and Wang 2005; Krol and Speakman 2007) and hamsters (Bartness and Wade 1984; Wade and Bartness 1984), show annual cycles in fat storage even though they neither migrate nor hibernate, mostly to facilitate breeding. What these animal examples of obesity have in common is that fat deposition is a preparatory response to a future shortfall in energy supply or an increase in demand (Johnson et al. 2013). A hibernating animal cannot feed during winter, and a migrating animal has no access to food when crossing barriers such as large deserts or oceans. Although humans neither hibernate nor migrate seasonally, a number of authors have made direct comparisons between these processes in wild animals and obesity in humans (Johnson et al. 2013). This is because humans must often deal with shortfalls of energy supply during periods of famine. Famine reports go back almost as far as people have been able to write (McCance 1975; Harrison 1988; Elia 2000).
The argument was therefore made that obesity in our ancient past probably served to facilitate survival through famines (Neel 1962), just as fat storage in hibernators facilitates survival through hibernation. Famines would have exerted strong selection on genes that favored the deposition of fat in the periods between famines. Individuals with alleles that favored efficient fat deposition would survive subsequent famines, while individuals with alleles that were inefficient at fat storage would not (Neel 1962). This idea, called the “thrifty gene hypothesis,” was first published more than 50 years ago (Neel 1962), originally framed more in terms of selection for genes predisposing to diabetes (presumed to underpin efficient fat storage) than for genes predisposing to obesity. It has since been reiterated in various forms specifically with respect to obesity (Eaton et al. 1988; Lev-Ran 1999, 2001; Prentice 2001, 2005a, b, 2006; Campbell and Cajigal 2001; Chakravarthy and Booth 2004; Eknoyan 2006; Watnick 2006; Wells 2006; Prentice et al. 2008; O’Rourke 2014).

In detail, the hypothesis is as follows. When humans were experiencing periodic famines, thrifty alleles were advantageous because individuals carrying them would become fat between famines, and this fat would allow them to survive the next famine. They would pass their thrifty alleles to their offspring, who would then also have a survival advantage in subsequent famines. In contrast, individuals not carrying such alleles would not deposit as much fat in preparation for the next famine, and would die, along with their unthrifty alleles. Because food supplies were presumed to be always limited, even between famines, the levels of obesity attained, even by carriers of the thrifty alleles, were probably quite modest, so individuals never became fat enough to experience the detrimental impacts of obesity on health. What has changed since the 1950s is that the food supply in Europe and North America has increased dramatically, owing to enormous increases in agricultural production, and this abundance has gradually spread through the rest of the world. As a consequence, people in modern society who carry the thrifty alleles eat the abundant food and efficiently deposit enormous amounts of fat. Obese people are like boy scouts: always prepared. In this way, alleles that were once advantageous have been rendered detrimental by progress (Neel 1962).

Advocates of the thrifty gene idea agree on some fundamental details. First, famines are frequent: estimates vary, but a frequency of about once every 10 years is often cited, following Keys et al. (1950). Second, famines cause massive mortality (figures of 15–30 % are commonly quoted). However, advocates differ in some important respects. One area of discrepancy is how far back in our history humans have been exposed to periodic famine. Some have suggested that famine has been an “ever present” feature of our history (Chakravarthy and Booth 2004; Prentice 2005a). There is a problem, however, with this suggestion. If “thrifty alleles” provided a strong selective advantage in surviving famines, and famines have been with us throughout this time, then these alleles would have spread to fixation in the entire population (Speakman 2006a, b, 2007). We would all have the thrifty alleles, and in modern society we would all be obese. Yet even in the most obese societies, lean people make up about 20 % of the population (Ogden et al. 2006; Flegal et al. 2010). If famine provided a strong selective force for the spread of thrifty alleles, it is relevant to ask how so many people managed to avoid inheriting them (Speakman 2006a, b, 2007).

We can illustrate this issue more quantitatively. Suppose a thrifty allele existed that promoted greater fat storage, such that individuals carrying two copies of the allele survived famines 3 % better and those carrying one copy survived 1.5 % better. A random mutation creating this allele would then spread from a single individual to the entire population of the ancient world in about 600 famine events. Using the most conservative estimate of famine frequency, once per 150 years, this is about 90,000 years, or about 1/50th of the time since Australopithecus. Any mutation that produced a thrifty allele with this effect on mortality within roughly the first 98 % of hominin history would therefore have gone to fixation. We would all have inherited these alleles, and we would all be obese (Speakman 2006a, b).
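The kind of calculation described above can be sketched with the textbook deterministic recursion for viability selection at a single locus, applied once per famine event. This is a minimal sketch, not the model used by Speakman (2006a, b): random mating, selection acting only during famines, no drift, the hypothetical population sizes, and the definition of "fixation" as exceeding a frequency of 1 - 1/(2N) are all assumptions made here, so the counts it returns need not match the figure of about 600 famines quoted above.

```python
def next_freq(p, s_het, s_hom):
    """Thrifty-allele frequency after one famine of viability selection.

    Relative survival: non-carriers 1, one copy 1 + s_het, two copies 1 + s_hom.
    Assumes random mating (Hardy-Weinberg genotype proportions) before selection.
    """
    q = 1.0 - p
    w_hom, w_het, w_none = 1.0 + s_hom, 1.0 + s_het, 1.0
    mean_w = p * p * w_hom + 2 * p * q * w_het + q * q * w_none
    # Thrifty alleles among survivors: all from homozygotes, half from heterozygotes
    return (p * p * w_hom + p * q * w_het) / mean_w


def famines_to_fixation(pop_size, s_het=0.015, s_hom=0.03):
    """Famine events for a single new mutant copy to (almost) fix.

    pop_size is a hypothetical number of diploid individuals.
    """
    p = 1.0 / (2 * pop_size)             # one copy in the population
    threshold = 1.0 - 1.0 / (2 * pop_size)
    famines = 0
    while p < threshold:
        p = next_freq(p, s_het, s_hom)
        famines += 1
    return famines


FAMINE_INTERVAL_YEARS = 150  # the "most conservative" famine frequency in the text

for n in (1_000, 100_000):
    k = famines_to_fixation(n)
    print(f"N = {n:>7}: {k} famines, ~{k * FAMINE_INTERVAL_YEARS:,} years")
```

Because the allele's frequency rises roughly logistically, the waiting time grows only with the logarithm of the assumed population size, so under these assumptions fixation still falls well within the roughly 4 million years of hominin history.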

This calculation reveals a large difference between the “obesity” phenomena observed in animals and the obesity epidemic in humans. In animals, when a species prepares for hibernation, migration, or breeding, the entire population becomes obese. The reasons are clear (Speakman and O’Rahilly 2012). If a bird migrates across an area of ocean and does not deposit enough fat for the journey, it plunges into the ocean short of its destination and the genes that caused it to not deposit enough fat are purged from the population. Selection is intense, and consequently all the animals become obese. If the same intense selection processes had operated in humans, as suggested by advocates of adaptive interpretations of obesity like the thrifty gene hypothesis (Prentice 2001b, 2005), then we too would all become obese when the environmental conditions proved favorable for us to do so. We do not.

Another school of thought, however, is that famine has not been a feature of our entire history but is linked to the development of agriculture (Prentice et al. 2008). Benyshek and Watson (2006) suggested that hunter-gatherer lifestyles are resilient to food shortages because individuals are mobile: when food becomes short in one area, they can seek food elsewhere or modify their diet to exploit whatever is abundant. In contrast, agricultural societies depend on fixed crops, and if these fail, for example because of adverse weather, food supply can immediately become a problem (see also Berbesque et al. 2014). Because mutations arising in the last 12,000 years would not have had time to spread through the entire population, this shorter timescale for selection might explain why in modern society some of us become obese but others remain lean.

The problem with this scenario, however, is the opposite of the problem with the “ever present” idea. Humans developed agriculture only within the last 12,000 years (Diamond 1995), which at one famine per 150 years corresponds to only about 80 famine events with significant mortality. To be selected, a mutation causing a thrifty allele would consequently have to provide an enormous survival advantage to generate the current prevalence of obesity. Calculations suggest the per-allele survival benefit would need to be around 10 %. Although it is often suggested that mortality in famines is very high, so that a per-allele mortality effect of this magnitude could be theoretically feasible, such large estimates of famine mortality are generally confounded by emigration, and true mortality is probably considerably lower. An additional problem is that, for a mutation to be selected, all of this mortality would need to depend on differences in fat content attributable to a single genetic mutation. This also makes the critical assumption that people die in famines because they starve to death, so that individuals with greater fat reserves would on average be expected to survive longer than individuals with lower fat reserves. Although there are some famines where starvation was clearly the major cause of death (e.g., Hionidou 2002), for most famines this is not the case, and the major causes of death are generally disease related (Harrison 1988; Toole and Waldman 1988; Mokyr and Grada 1999; Adamets 2002). This does not completely refute the idea that body fatness is a key factor influencing famine survival. The spread of disease among famine victims is probably promoted by compromised immune systems. A key player in the relationship between energy status and immune status is leptin (Lord et al. 1998; Matarese 2000; Faggioni et al. 2001), and low levels of leptin may underpin the immunodeficiency of malnutrition. Because circulating leptin levels are directly related to adipose tissue stores, it is conceivable that leaner people would have more compromised immune systems and hence be more susceptible to disease during famines.
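The argument about the agricultural timescale can be sketched by running a standard single-locus selection recursion in reverse: how large a per-allele survival advantage would raise a thrifty allele to a given frequency within the roughly 80 famine events (12,000 years at one famine per 150 years) available since agriculture? This is a minimal sketch, not the calculation behind the 10 % figure: additive effects (relative survival 1, 1 + s, 1 + 2s for zero, one, or two copies), the starting frequency, and the target frequency are assumptions chosen for illustration.

```python
def freq_after(p0, s, n_famines):
    """Allele frequency after n famines with an additive per-allele advantage s."""
    p = p0
    for _ in range(n_famines):
        # With survival 1, 1+s, 1+2s the mean survival is 1 + 2sp, and the
        # change in allele frequency simplifies to s*p*(1-p) / (1 + 2sp).
        p += s * p * (1.0 - p) / (1.0 + 2.0 * s * p)
    return p


def required_advantage(p0, p_target, n_famines, tol=1e-6):
    """Smallest per-allele advantage reaching p_target, found by bisection.

    Relies on freq_after increasing with s, and assumes s = 1 is always enough.
    """
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if freq_after(p0, mid, n_famines) < p_target:
            lo = mid
        else:
            hi = mid
    return hi


famines_since_agriculture = 12_000 // 150  # 80 famine events
s_needed = required_advantage(p0=0.001, p_target=0.5,
                              n_famines=famines_since_agriculture)
print(f"per-allele survival advantage needed: {s_needed:.3f}")
```

With these particular assumptions the required advantage comes out of the same order as the roughly 10 % quoted above; lowering the starting frequency or raising the target frequency pushes it higher, while a longer selection period (more famines) lowers it.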

One way to evaluate the role of body fatness in famine survival is to examine patterns of famine mortality with respect to major demographic variables such as age and sex, and to compare these with the expectations based on known effects of sex and age on body fat storage and utilization (Speakman 2013b). Females have greater body fat stores and lower metabolic rates than men of equivalent body weight and stature. In theory, therefore, females should survive famines longer than males if body fatness plays a major role in survival (Henry 1990; Macintyre 2002). With respect to age, metabolic rate declines in older individuals, but they tend to preserve their fat stores until they are quite old (Speakman and Westerterp 2010). Consequently, older individuals would be expected to survive famines longer than younger adults if body fatness were the overriding consideration. Patterns of mortality during actual famines suggest that males have higher mortality than females (Macintyre 2002). With respect to age, however, the highest mortality usually occurs among the very young (less than 5 years of age, including elevated fetal losses) and the elderly (with the probability of mortality increasing with age from about 40 onwards) (Watkins and Menken 1985; Harrison 1988; Menken and Campbell 1992; Scott et al. 1995; Cai and Feng 2005). The age-related pattern of mortality in adults is thus the opposite of that predicted if body fatness were the most important consideration, whereas the effect of sex agrees with the theoretical expectation. Yet even in the many famines that show this correspondence, the female mortality advantage massively exceeds the expectation from differences in body fatness (Speakman 2013b), and in other famines there is no female mortality advantage at all. This points to famine mortality being a far more complex phenomenon than simple exhaustion of reserves. For instance, older individuals who have passed reproductive age may sacrifice themselves to provide food that enables their offspring to survive. Alternatively, they may succumb to disease more rapidly because of an age-related decline in immune function. The exaggerated effect of sex may similarly be explained by social factors: females, for example, may exchange sex for extra food or may have more access to food because they do more of the family cooking, the “proximity to the pot” phenomenon (Macintyre 2002). Overall, the data on causes of mortality during famine point to an extremely complex picture, in which differences in body fatness probably play a relatively minor role in defining who lives and who dies.
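The theoretical expectation in this paragraph rests on a simple reserve calculation: the time a person can live off stored fat is roughly fat mass times the energy yield of fat, divided by daily energy expenditure. The sketch below is deliberately crude. It assumes that all expenditure is met from fat, that expenditure stays constant, and that lipid yields about 37.7 MJ per kg (the usual 9 kcal/g); the two example individuals are illustrative inputs, not measured values for any population.

```python
ENERGY_PER_KG_FAT_MJ = 37.7  # ~9 kcal/g of lipid; assumed, ignores water in adipose tissue


def days_on_fat(fat_kg, expenditure_mj_per_day):
    """Days that fat stores alone could cover a constant daily expenditure."""
    return fat_kg * ENERGY_PER_KG_FAT_MJ / expenditure_mj_per_day


# Hypothetical adults of equal weight and stature (illustrative numbers only):
# more fat and a lower metabolic rate both lengthen the predicted survival time.
female_days = days_on_fat(fat_kg=18.0, expenditure_mj_per_day=6.5)
male_days = days_on_fat(fat_kg=12.0, expenditure_mj_per_day=8.0)
print(f"female ~{female_days:.0f} days, male ~{male_days:.0f} days")
```

Real starvation also draws on protein and lowers expenditure, so these are not survival predictions; the sketch only shows why, if reserves were decisive, both greater fat stores and lower metabolic rate should favor women and older adults.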

Recognizing the problem with the suggestion that selection for genes that cause obesity has been in force for only the past 12,000 years, Prentice et al. (2008) suggested that body fatness during famines affects fitness mostly through fertility rather than survival probability. There is strong support for this suggestion (e.g., Razzaque 1988). For many famines, there is considerable evidence that fertility is reduced. During the Dutch hunger winter, for example, when Nazi Germany imposed a blockade on parts of the Netherlands, there was a clear reduction in the number of births in the affected regions, detectable in army enrolments 18 years later, while adjacent regions that were not blockaded and did not suffer famine showed no such reduction. The effect was profound, with births falling by almost 50 % during the famine. The timing of the effects suggests that the major impact was on whether females became pregnant, rather than on fetal or infant mortality rates (Stein et al. 1975; Stein and Susser 1975). Unlike the effect of fatness on mortality, there is also good reason to anticipate that differences in fertility would be strongly linked to differences in body fatness. We know from eating disorders such as anorexia nervosa that individuals with chronically low body fat stop menstruating and become functionally infertile. Leptin appears to be a key molecule linking body fatness and reproductive capability (Ahima et al. 1997). This effect is not restricted to females: both male and female ob/ob mice, which cannot produce functional leptin, are sterile, a phenotype that can be reversed by administration of leptin in both sexes (females, Chehab et al. 1996; males, Mounzih et al. 1997). Note, however, that leptin responds to chronic food shortage as well as to body composition (Weigle et al. 1997), and there is a school of thought that amenorrhea in anorexia nervosa is due not to low body fatness but to low food intake. If this is the case, then lowered fertility need not be restricted to lean individuals. The same argument may apply to the link between fat stores and immune status described above. Moreover, reduced fertility is unlikely to be a major selective force during famines, because famines are usually followed by a compensatory boom in fertility that offsets the reduction during the famine years: individuals who fail to become pregnant during a famine tend to become pregnant as soon as it is over. Thus, if one considers only the famine years, fertility seems to have a major impact on demography (and hence selection), but when the period is expanded to include the post-famine years, the net impact of altered fertility on demography (and hence selection) is negligible, and certainly insufficient to provide the selective advantage needed to select genes for obesity since humans invented agriculture.
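The difference between counting only the famine years and counting the famine plus its aftermath can be made concrete with a small accounting sketch. The yearly fertility multipliers are hypothetical: a 50 % drop loosely echoing the Dutch figure above, followed by an assumed two-year rebound. The point is only that the size of the net birth deficit depends on the window chosen.

```python
def birth_deficit(baseline_births, fertility_multipliers):
    """Births missing relative to baseline over a run of years.

    fertility_multipliers[i] is births in year i as a fraction of baseline
    (1.0 = normal, 0.5 = halved, 1.25 = a 25 % post-famine boom).
    """
    return sum(baseline_births * (1.0 - m) for m in fertility_multipliers)


famine_only = birth_deficit(100, [0.5])                    # famine year alone
famine_and_after = birth_deficit(100, [0.5, 1.25, 1.25])   # plus assumed rebound
print(f"deficit, famine year only: {famine_only:.0f}")
print(f"deficit, famine plus two rebound years: {famine_and_after:.0f}")
```

A selective advantage for fatter individuals requires a net difference in lifetime reproduction, so a window that ends with the famine overstates the effect whenever the missed births are largely made up afterwards.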

These arguments about selection on genes favoring obesity were made before we had good information about the common polymorphisms that cause obesity or their effect sizes on fat storage. Without such information, it was plausible to suggest that genes might exist with a large impact on fat storage and hence on survival or fertility during famines. This view became untenable with the advent of genome-wide association studies (GWAS), which identified the main genes with common polymorphisms associated with increased obesity risk (Day and Loos 2011). These GWAS revolutionized our view of the genetics of obesity, since the majority of identified SNPs had nothing to do with the established hunger signaling pathway, and their effect sizes were all relatively small. At present, about 50 genes (SNPs) have been suggested to be associated with BMI, with per-allele effect sizes ranging from 100 g to 1.5 kg (Willer et al. 2009; Speliotes et al. 2010; Okada et al. 2012; Paternoster et al. 2012; Wen et al. 2012). On this basis, it has been suggested that the genetic architecture of obesity may involve hundreds or even thousands of genes, each with a very small effect (Hebebrand et al. 2010). This genetic architecture makes the model proposed by Prentice et al. (2008), in which selection on these genes has occurred only over the past 12,000 years, completely untenable, because SNPs causing differences in fat storage of 100–1000 g could not possibly cause differences of 10 % in survival or fecundity during famines.

Setting aside the suggestion that famines are a phenomenon of the agricultural age, if periodic food crises severe enough to cause significant mortality did affect us throughout our evolutionary history, one can imagine a scenario in which genes of small effect have such a small impact on fat storage, and hence on famine survival (or fecundity), that their spread in the population would be extremely slow. They might therefore not reach fixation over the duration of our evolutionary history, leaving us today with the observed genetic architecture of many incompletely fixed genes of small effect. Speakman and Westerterp (2013) evaluated this idea by first predicting the impact of such polymorphisms of small effect on famine survival and then modeling the spread of such genes over the 4 million years of hominin evolution (assuming a famine every 150 years). Using a mathematical model of body fat utilization under total starvation, combined with estimates of energy demand across the lifespan, they showed that genes with a per-allele effect on fat storage of 80 g would cause a mortality difference of about 0.3 %. This is ten times lower than the effect previously assumed when modeling the spread of thrifty genes (Speakman 2006b). Nevertheless, despite this very low impact on famine survival, a mutation causing such a difference in fat storage would move to fixation in about 6000 famine events (about 900,000 years). Thus polymorphisms of small effect would indeed move only slowly to fixation, but this implies that all the mutations identified as important in GWAS arose in the last million years or so, which we know is not correct. In addition, if the selection model is correct, we would anticipate, all else being equal, that genes with greater effect size would have greater prevalence, but that is not observed in the known GWAS SNPs (Speakman and Westerterp 2013, using data from Speliotes et al. 2010).
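Two quantitative points in this paragraph can be illustrated with the standard single-locus selection recursion: a tenfold weaker selective advantage slows an allele's spread roughly tenfold, and, for alleles that arose at the same time, larger effects should by now have reached higher frequencies. This is a minimal sketch, not the model of Speakman and Westerterp (2013). Treating the 0.3 % mortality difference as an additive per-allele survival advantage, the hypothetical population of 5,000 individuals, and the neglect of drift are all assumptions made here.

```python
def step(p, s):
    """One famine: relative survival 1, 1 + s, 1 + 2s for 0, 1, 2 copies."""
    return p + s * p * (1.0 - p) / (1.0 + 2.0 * s * p)


def famines_to_fixation(s, pop_size=5_000):
    """Famines for one new copy to exceed frequency 1 - 1/(2N); no drift."""
    p = 1.0 / (2 * pop_size)
    threshold = 1.0 - p
    famines = 0
    while p < threshold:
        p = step(p, s)
        famines += 1
    return famines


def freq_after(s, n_famines, pop_size=5_000):
    """Frequency of a new allele after n_famines of selection."""
    p = 1.0 / (2 * pop_size)
    for _ in range(n_famines):
        p = step(p, s)
    return p


FAMINE_INTERVAL_YEARS = 150

fast = famines_to_fixation(0.03)    # an effect ten times larger than 0.3 %
slow = famines_to_fixation(0.003)   # ~0.3 % per allele (assumed additive)
print(f"s = 0.03: {fast} famines; s = 0.003: {slow} famines "
      f"(~{slow * FAMINE_INTERVAL_YEARS:,} years), ratio {slow / fast:.1f}")

# Alleles that arose together (hypothetically 2,000 famines ago):
# under selection alone, larger effects should now be more common.
for s in (0.001, 0.002, 0.003):
    print(f"s = {s}: current frequency {freq_after(s, 2_000):.3f}")
```

Under these assumptions the waiting time for the 0.3 % case is of the same order as the ~6000 famine events quoted above; the monotonic rise of frequency with effect size is the prediction that the GWAS data do not bear out.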

Overall, the idea that the genetic basis of obesity is adaptive, resulting from past selection that favored “thrifty” alleles because of the elevated survival or fecundity of the obese during famines, is not supported by the available data. Other adaptive scenarios could be envisioned. For example, Power and Schulkin (2009) argue that we are fat because of the need to support the development of our large brains. Rakesh and Syam (2015) point to the benefits of milder levels of obesity for disease survival and fecundity. An alternative idea is that fat storage in human ancestors was promoted by the loss of the uricase gene in the Miocene (Johnson et al. 2013), which enabled more efficient utilization of fructose to deposit fat; this fat then enabled greater survival during periods of famine. A common problem faced by such scenarios is that even in the most obesogenic modern environments, many individuals do not become fat. Any proposed adaptive scenario must explain this variation. Perhaps the closest any adaptive idea comes to explaining it is the suggestion of Johnson et al. (2013) that we lost the uricase gene early in our evolution because of the advantages for converting fruit sugars to fat (i.e., everyone inherited this mutation), but that this leads to obesity in modern society only in individuals with high intakes of fructose. This, however, does not explain the known genetic variation between individuals that predisposes to obesity (Allison et al. 1996).

The Neutral Viewpoint

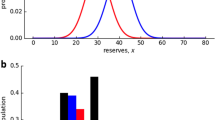

Evolution is a complex process. We often regard natural selection as the primary force generating genetic change. However, this is a naive viewpoint: evolutionary biologists widely recognize that natural selection is one of a number of processes, including phyletic heritage, founder effects, neutral mutations, and genetic drift, that underlie genetic variation between individuals in a population. We should be cautious not to interpret everything biological from the perspective of adaptation by natural selection. The emerging field of “evolutionary medicine” is rapidly learning to appreciate this fact, and there is increasing recognition that other “nonadaptive” evolutionary processes may be important for understanding the evolutionary background to many human diseases (Zinn 2010; Puzyrev and Kucher 2011; Valles 2012; Dudley et al. 2012). The “drifty gene” hypothesis is a nonadaptive explanation for the evolutionary background of the risk of developing obesity (Speakman 2007, 2008). This hypothesis starts from the observation that many wild animals can accurately regulate their body fatness. Several models are available to understand this regulation (Speakman et al. 2011), but a particularly useful idea is that body weight is bounded by upper and lower limits, or intervention points (Herman and Polivy 1984; Levitsky 2002; Speakman 2007), called the dual intervention point model (Speakman et al. 2011). While an individual's weight varies between the two limits, nothing happens, but if its body weight falls below the lower limit or rises above the upper limit, it intervenes physiologically to control its weight. Body weight is thus kept within the two limits in the face of environmental challenges. These upper and lower limits may be set by different evolutionary pressures: the lower limit by the risk of starvation and the upper limit by the risk of predation.
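The dual intervention point model can be sketched as a rule that does nothing while body weight lies between the two limits and pushes it back when it strays outside them. In this minimal sketch the limits, the strength of the correction (a fixed fraction of the excursion removed per day), and the random day-to-day perturbations are all hypothetical choices, not parameters from the cited models.

```python
import random


def intervene(weight, lower, upper, correction=0.5):
    """Weight after physiological intervention (toy dual intervention point rule).

    Between the limits nothing happens; outside them, a fraction `correction`
    of the excursion beyond the nearest limit is removed.
    """
    if weight < lower:
        return weight + correction * (lower - weight)
    if weight > upper:
        return weight - correction * (weight - upper)
    return weight


def simulate(days, start, lower, upper, noise_sd=0.5, seed=1):
    """Daily body weight under random perturbations plus the intervention rule."""
    rng = random.Random(seed)
    weight, trace = start, []
    for _ in range(days):
        weight += rng.gauss(0.0, noise_sd)  # environmental challenge (hypothetical)
        weight = intervene(weight, lower, upper)
        trace.append(weight)
    return trace


trace = simulate(days=365, start=70.0, lower=67.0, upper=73.0)
print(f"min {min(trace):.1f} kg, max {max(trace):.1f} kg")
```

Widening the gap between `lower` and `upper` lets weight wander further without any correction, which is the feature the drifty gene hypothesis builds on.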

Considerable research suggests that this fundamental balance of risks of starvation keeping body masses up (i.e., setting the lower intervention point) and risks of predation keeping body masses down (i.e., setting the upper intervention point) is a key component of body mass regulation in birds (Gosler et al. 1995; Kullberg et al. 1996; Fransson and Weber 1997; Cresswell 1998; Adriaensen et al. 1998; van der Veen 1999; Cuthill et al. 2000; Brodin 2001; Gentle and Gosler 2001; Covas et al. 2002; Zimmer et al. 2011), small mammals (Norrdahl and Korpimaki 1998; Carlsen et al. 1999, 2000; Banks et al. 2000; Sundell and Norrdahl 2002), and larger animals such as cetaceans (MacLeod et al. 2007). The “starvation-predation” trade-off has become a generalized framework for understanding the regulation of adiposity between and within species (Lima 1986; Houston et al. 1993; Witter and Cuthill 1993; Higginson et al. 2012), and laboratory studies are now starting to probe the metabolic basis of the effects of stochastic food supply and predation risk on body weight regulation (Tidhar et al. 2007; Zhang et al. 2012; Monarca et al. 2015a, b).
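
The starvation-predation trade-off can be sketched as the minimization of total mortality risk. Assume, purely for illustration, that starvation risk falls exponentially with fat reserves f, as a·exp(-b·f), and that predation risk rises linearly, as c·f; these functional forms and the names optimal_fat and total_risk are hypothetical, not taken from the cited work. Setting the derivative of the total risk to zero gives the optimum f* = ln(a·b/c)/b.

```python
import math


def total_risk(f, a, b, c):
    """Hypothetical total mortality risk at fat level f:
    starvation risk a*exp(-b*f) plus predation risk c*f."""
    return a * math.exp(-b * f) + c * f


def optimal_fat(a, b, c):
    """Fat level minimizing total_risk; from -a*b*exp(-b*f) + c = 0,
    f* = ln(a*b/c)/b, clamped at zero."""
    if c <= 0:
        # no predation cost: in this toy model nothing caps fat storage
        return math.inf
    return max(0.0, math.log(a * b / c) / b)
```

With a = 1, b = 0.5 and c = 0.05 the optimum is about 4.6 units of fat; lowering the predation slope c to 0.01 raises it to about 7.8, and with c = 0 the toy model no longer sets any upper bound at all.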

The drifty gene hypothesis suggests that early hominins probably also had such a regulation system. During the early period of human evolution, between 6 and 2 million years ago (roughly the Pliocene), large predatory animals were far more abundant than they are today (Hart and Susman 2005). Our ancestors (Paranthropines and Australopithecines) were also considerably smaller than modern humans, making them potential prey for a wide range of predators. At this stage of our evolution, the upper and lower intervention points most likely evolved to be relatively close together, and early hominins probably had close control over their body weights.

However, several major events occurred in our evolutionary history around 2.5 to 2.0 million years ago. The first was the evolution of social behavior, which would have allowed several individuals to band together to detect predators more effectively and to protect each other from attack. In a similar manner, some modern primates, for example, vervet monkeys, have evolved complex signaling systems to warn other members of their social groups of approaching predators (Cheney and Seyfarth 1985; Baldellou and Henzi 1992). This alone may have been sufficient to reduce predation risk dramatically. A second change was the discovery of fire and weapons (Stearns 2001; Platek et al. 2002), which gave early Homo powerful means of protecting themselves against predation; social structures would have greatly augmented these capacities. Modern non-hominin apes such as chimpanzees (Pan troglodytes) also use weapons such as sticks to protect themselves against predators such as large snakes, and it has been concluded that bands of early hominins with even quite primitive tools could easily have defended themselves in confrontations with potential predators (Treves and Naughton-Treves 1999).

The consequence was that the predation pressure that had maintained the upper intervention point effectively disappeared. It has been suggested that, because there was no longer any selective pressure holding this intervention point in place, the genes that defined it became subject to mutation and random drift (Speakman 2007), hence the “drifty” gene hypothesis (Speakman 2008). Genetic drift is stronger when effective population size is small. Early Homo species are thought to have had a small effective population size (around 10,000, despite a census population of around one million) (Harding et al. 1997; Eller et al. 2009), which would have created a genetic environment in which drift effects could be common. Mutation and drift over 2 million years would generate the necessary genetic architecture, but this alone is insufficient to cause an obesity epidemic. By this model, virtually the same genetic architecture would also have been present 20,000 years ago (after 1,980,000 years of mutation and drift, compared with 2 million years today). Why, then, did the obesity epidemic not happen at that time? Two separate factors restricted people’s ability to reach their drifted upper intervention points: the level of food supply and its social distribution (Power and Schulkin 2009). Before the Neolithic, the most important factor was probably the level of food supply. Paleolithic individuals probably could not increase their body masses enough to reach their drifted upper intervention points because there was insufficient food available. At this stage, each individual or small group would have been foraging entirely for its own needs. Things changed in the Neolithic with the advent of agriculture. Subsistence agriculture is not much different from hunting and gathering, in that each individual grows and harvests food for themselves and/or a small group. As agricultural yields improved, however, the number of people needed to grow and harvest food, as a percentage of the total population, declined. It was at this stage that more complex human societies emerged (Diamond 1995).
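
Why a small effective population size matters can be shown with the standard result for an idealized Wright-Fisher population, in which pure drift erodes heterozygosity as H_t/H_0 = (1 - 1/(2Ne))^t over t generations. The sketch below ignores mutation entirely, and the 25-year generation time and the function name heterozygosity_remaining are assumptions for illustration, not figures from the text.

```python
def heterozygosity_remaining(ne, years, generation_time=25.0):
    """Fraction of initial heterozygosity expected to remain under pure
    genetic drift in an idealized Wright-Fisher population:
        H_t / H_0 = (1 - 1/(2*Ne)) ** t
    Mutation is ignored, and the 25-year generation time is an assumption."""
    generations = years / generation_time
    return (1.0 - 1.0 / (2.0 * ne)) ** generations
```

Under these assumptions, with Ne = 10,000 only about 1.8 % of the initial heterozygosity would remain after 2 million years, so drift has ample time to reshape neutral variation; with an effective size equal to the census population of one million, about 96 % would remain. After 1.98 million years the figure is about 1.9 %, barely different from today, which echoes the point that the genetic architecture 20,000 years ago would have been virtually the same.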

Complex human societies are only feasible because a subset of individuals can grow and harvest enough food to sustain a larger number of people. This wider group is then able to perform activities that would be impossible if they had to spend all their time growing and harvesting food. Such activities include religion, sport, politics, the arts, and war, as well as building in stone, making pottery, iron, and bronze-ware (which require high-temperature kilns or furnaces), and mining ores. These activities became possible only when crop yields were high enough to allow some individuals to stop raising crops and do other things. A crucial additional element, however, was societal control of the food supply, so that food produced by one section of society could be distributed to those who did not produce it. This effectively required the development of monetary and class systems, most of which originated in the wake of Neolithic agriculture. Central control of the food supply matters here because people can only attain their drifted upper intervention points if there is enough food available for them to do so.

In the Paleolithic, most people could not reach their drifted upper intervention points because there was not enough food available. After the Neolithic, most people still could not obtain unlimited food because of central control of the food supply. Because most people would normally have had body weights between their upper and lower intervention points, they would not have experienced a physiological drive forcing them to seek out more food. An exception might have been the rare periods of famine (see above). This pattern of food access led to a class-related pattern of variation in body weight. In the lower classes, where food supply was restricted, people did not move to their upper intervention points, whereas in the higher levels of society, where access to food was effectively unlimited, attaining the drifted upper intervention point became possible. Consequently, at this stage, obesity was restricted to the wealthy and powerful. Not all wealthy and powerful people became obese (only those with a genetic predisposition to do so, i.e., with high drifted upper intervention points), but none of the poorer classes did. Obesity became a status symbol (Power and Schulkin 2009; Brewis 2010). Reports of obese people date from at least early Greek times. In the fifth century BC, Hippocrates suggested some potential cures for obesity (Procope 1952). This implies two things. First, there would be no need for a cure for obesity if nobody suffered from it, so it must have been common enough to warrant his attention. Second, Hippocrates did not regard obesity as advantageous or desirable, but as something that needed to be “cured.” This provides additional evidence against the famine-based “thrifty gene” hypothesis: if that theory were correct, obesity 2500 years ago, when famines were supposedly still a major selective pressure, should have been viewed as advantageous.
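
The class-related pattern described above can be sketched as a small simulation in which each person’s attained body mass index (BMI) is either their drifted upper intervention point (unrestricted food access) or a food-limited ceiling (restricted access). The normal distribution of upper intervention points (mean 27, SD 4 BMI units), the food-limited ceiling of 24, and the function names are all hypothetical assumptions; only the BMI cut-off of 30 for obesity is a conventional value.

```python
import random

OBESITY_BMI = 30.0  # conventional BMI threshold for obesity


def attained_bmi(upper_point, unrestricted_access, food_limited_bmi=24.0):
    """BMI an individual settles at in this hypothetical sketch.

    With unrestricted food access the individual rises to its drifted upper
    intervention point; with restricted access its BMI is capped by what the
    food supply allows (food_limited_bmi is an assumed value)."""
    if unrestricted_access:
        return upper_point
    return min(upper_point, food_limited_bmi)


def obesity_prevalence(n, fraction_unrestricted, seed=1):
    """Fraction of a simulated population that is obese when only a given
    fraction of people (the wealthy) have unrestricted access to food."""
    rng = random.Random(seed)
    n_unrestricted = round(n * fraction_unrestricted)
    obese = 0
    for i in range(n):
        # Drifted upper intervention points vary between people; this
        # normal distribution is an assumption for illustration.
        upper_point = rng.gauss(27.0, 4.0)
        unrestricted = i < n_unrestricted  # the wealthy minority
        if attained_bmi(upper_point, unrestricted) >= OBESITY_BMI:
            obese += 1
    return obese / n
```

In this sketch no one with restricted access becomes obese, only a minority of those with unrestricted access do (the genetically predisposed), and overall prevalence rises as access to food spreads through the population.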

Estimates by agricultural historians of past levels of food production support the idea that most people in the past lived with a socially restricted food supply. In the late 1700s, for example, it has been estimated that 70 % of the population of Britain and 90 % of the population of France were consuming less than 12 MJ/day. If only 10 % of the population had free access to unlimited energy, then only people in this fraction of the population would be expected to reach their drifted upper intervention points. Assuming that, as in modern Western populations, roughly 30 % of people with unrestricted access to food have a predisposition to become obese, obesity prevalence would be expected to be no more than about 3 %. This was the actual prevalence of obesity in the USA in 1890. It seems that social control of the food supply only started to change in Western societies after the First World War. This period (the 1920s) saw a wave of obesity in Western societies (DuBois 1936), but it was reversed when the Western world went back to war in the 1940s, especially in countries where food rationing was introduced. The modern obesity epidemic reflects a second wave of obesity, as easy access to food became widespread across all social levels after World War II ended. Nowadays, anyone in the West can afford to overconsume energy (Speakman 2014). For example, a person in the USA earning the 2013 minimum wage of $7.25 per hour and working a standard 38-hour week would have an annual income of about US$14,300. Assuming half of this was available to buy food, this person could buy 2865 McDonald’s Happy Meals a year (about eight per day), containing about 3700 kcal, which is about 47 % more than the daily energy requirement of a man and 84 % more than that of a woman. In 2013, it was estimated that minimum-wage earners had lower incomes than people on welfare in the majority of US states. It has frequently been noted that increases in obesity coincide with the economic transition from a largely rural to a largely urban society. Explanations for this trend have largely concerned changes in levels of physical activity and increased access to food. The drifty gene model is consistent with these interpretations because it suggests that individuals are able to reach their drifted upper intervention points only after such economic transitions.
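
The back-of-envelope figures in this paragraph can be reproduced as follows. The wage, hours, and half-of-income food budget come from the text; the meal price of $2.50, the 470 kcal per meal, and the daily requirements of 2500 kcal (men) and 2000 kcal (women) are assumptions chosen to be consistent with the quoted numbers, not values given in the text. The prevalence helper simply multiplies the fraction of people with unrestricted food access by the fraction assumed to be predisposed to obesity.

```python
def minimum_wage_meals(hourly_wage=7.25, hours_per_week=38, weeks_per_year=52,
                       food_share=0.5, meal_price=2.50, kcal_per_meal=470):
    """Reproduce the minimum-wage calculation in the text.

    meal_price and kcal_per_meal are assumptions, not figures from the text."""
    annual_income = hourly_wage * hours_per_week * weeks_per_year
    meals_per_year = int(annual_income * food_share // meal_price)
    meals_per_day = meals_per_year / 365
    kcal_per_day = meals_per_day * kcal_per_meal
    return annual_income, meals_per_year, meals_per_day, kcal_per_day


def expected_prevalence(fraction_with_access, fraction_predisposed):
    """Obesity prevalence if only those with unrestricted access to food can
    reach their drifted upper intervention points."""
    return fraction_with_access * fraction_predisposed
```

Under these assumptions the income is US$14,326, buying 2865 meals a year (about 7.8 per day) and roughly 3690 kcal per day, about 48 % and 84 % above the assumed requirements for a man and a woman, close to the figures quoted. expected_prevalence(0.10, 0.30) gives the 3 % estimate.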

GWAS findings provide some support for this model. SNPs predisposing to obesity have not been under strong positive selection (Southam et al. 2010; Koh et al. 2014), and a similar lack of strong positive selection is observed in GWAS loci linked to type 2 diabetes (Ayub et al. 2014). This absence of selection is further supported by the absence of any link between the prevalence of these SNPs and their effect sizes (Speakman and Westerterp 2013). Finally, the genes identified so far include a large proportion of centrally acting genes related to appetite and food intake (e.g., Fredriksson et al. 2008). It is conceivable that these centrally acting genes somehow define the upper intervention point. Overall, this model provides a nonadaptive explanation for why some people become obese but others do not.

The Maladaptive Scenario

The maladaptive viewpoint is that obesity has never been advantageous. Historically, it may never even have existed, except in rare individuals with unusual genetic abnormalities, perhaps represented in Paleolithic sculptures such as the “Venus of Willendorf.” Instead, the idea is that genes that predispose us to obesity were selected as a by-product of selection on some other, advantageous trait. The best example of a “maladaptive” interpretation of the evolution of obesity is the suggestion that it is caused by individual variability in the capacity of brown adipose tissue to burn off excess caloric intake (Sellayah et al. 2014).

Brown adipose tissue (BAT) is found only in mammals (Chap. 21, “Adipose Structure (White, Brown, Beige),” Vidal-Puig et al.). In contrast to white adipocytes, which contain a single large lipid droplet, brown adipocytes contain multiple small (multilocular) lipid droplets and abundant mitochondria. These mitochondria contain a unique protein, uncoupling protein 1 (UCP-1), which resides in the inner mitochondrial membrane. UCP-1 acts as a channel through which protons in the intermembrane space can return to the mitochondrial matrix. However, unlike protons traveling from the intermembrane space to the matrix via ATP synthase, protons moving via UCP-1 are not coupled to the formation of ATP (hence the name “uncoupling protein”). The chemiosmotic potential energy carried by the protons traveling via UCP-1 is therefore released directly as heat. Generating heat for thermoregulation is the primary function of BAT. Unsurprisingly, then, BAT is abundant in small mammals and in the neonates of larger mammals (including humans), whose high surface-to-volume ratio favors heat loss. The mass of BAT, and hence its capacity to generate heat, varies with thermoregulatory demands. During winter, the amount of BAT and UCP-1 increases (Feist and Feist 1986; Feist and Rosenmann 1976; McDevitt and Speakman 1994); during summer, BAT and UCP-1 levels are lower (Feist and Feist 1986; Wunder et al. 1977; McDevitt and Speakman 1996).

During the late 1970s, it was suggested that BAT might have an additional function: to “burn off” excess calorie intake (Rothwell and Stock 1979; Himms-Hagen 1979). This idea fell out of favor because it was commonly believed that adult humans do not have significant deposits of BAT. However, evidence for active BAT in adult humans emerged in 2007 (Nedergaard et al. 2007), and since then the idea that variability in BAT function might underlie variable susceptibility to obesity has reemerged (Sellayah et al. 2014). This idea has been supported by observations that the amount and activity of BAT are inversely related to obesity (Cypess et al. 2009; van Marken-Lichtenbelt et al. 2009) and that there is an age-related reduction in BAT activity, correlated with the age-related increase in body fatness (Cypess et al. 2009; Yoneshiro et al. 2011). Moreover, the seasonal changes and responses to cold exposure seen in animals are also observed in humans (Saito et al. 2009), suggesting important functional activity. Experimental studies in rodents have established that transplanting additional BAT into an individual can protect against both diet-induced (Stanford et al. 2013; Liu et al. 2013) and genetic obesity (Liu et al. 2015).

The “maladaptive” scenario for the evolution of obesity is therefore as follows. Individuals are presumed to vary in their brown adipose tissue thermogenesis as a result of variation in their ancestors’ exposure to cold (Sellayah et al. 2014), which necessitated the use of BAT for thermogenesis. Some individuals might have high levels of active BAT, while others might have lower levels, either because their ancestral exposure to cold was lower or because they avoided cold by other means, such as clothing and fire. High levels of BAT would therefore be one of a number of alternative adaptive strategies for thermoregulation. Because of this diversity of potential strategies, a genetic predisposition to develop high levels of active BAT would be present only in some individuals and populations. This would lead to individual and population variation in the ability to recruit BAT for its secondary function: burning off excess energy intake.

A key question, however, is why individuals might consume excess energy in the first place, especially since this notion appears diametrically opposed to the assumption underlying the thrifty gene hypothesis that energy supply is almost always limited. One potential explanation is that individuals eat food not only for energy but also for some critical nutrient. When food is of high quality, eating enough to meet daily energy demands may also be enough to meet demands for the critical nutrient, and any excess nutrient intake can be excreted. Two scenarios might alter this situation. First, energy demands might decline. This could, for example, be precipitated by an increase in sedentary behavior in modern society (Prentice and Jebb 1995; Church et al. 2011). If individuals continued to eat only to meet their energy demands, they would reduce their intake, but their intake of the critical nutrient might then fall below requirements, leaving them nutrient deficient. However, direct measurements of energy demand in humans in both Europe and North America since the 1980s do not support the idea that activity energy demands have declined (Westerterp and Speakman 2008; Swinburn et al. 2009). A second scenario is that the quality of the food might change so that the ratio of energy to the critical nutrient increases. Again, if individuals continued to eat only to meet their energy requirements, their intake of the nutrient would become deficient. In both scenarios, to avoid nutrient deficiency, individuals might eat more food to meet their demand for the nutrient. As a result, their energy intake would exceed their energy demands.
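
The logic of both scenarios can be captured in a few lines, assuming (hypothetically) that eating stops only once both the energy requirement and the critical-nutrient requirement are met. The function names, the 60 g/day nutrient requirement, and the nutrient densities used below are illustrative assumptions, not values from the text.

```python
def energy_intake_mj(energy_demand_mj, nutrient_demand_g, nutrient_g_per_mj):
    """Daily energy eaten (MJ) if intake continues until BOTH the energy and
    the critical-nutrient requirements are satisfied (hypothetical sketch)."""
    # energy that must be eaten to obtain the required amount of the nutrient
    energy_for_nutrient = nutrient_demand_g / nutrient_g_per_mj
    return max(energy_demand_mj, energy_for_nutrient)


def energy_surplus_mj(energy_demand_mj, nutrient_demand_g, nutrient_g_per_mj):
    """Energy eaten beyond what is needed (MJ/day)."""
    intake = energy_intake_mj(energy_demand_mj, nutrient_demand_g, nutrient_g_per_mj)
    return intake - energy_demand_mj
```

With an energy demand of 10 MJ/day, a nutrient requirement of 60 g/day and food supplying 7.5 g of the nutrient per MJ, meeting the energy demand also meets the nutrient demand and there is no surplus. If energy demand falls to 7 MJ/day (the first scenario), intake is still driven up to 8 MJ, a surplus of 1 MJ/day; if the food is diluted to 5 g per MJ (the second scenario), intake rises to 12 MJ, a surplus of 2 MJ/day. With protein as the critical nutrient, this is the mechanism proposed by the protein leverage hypothesis.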

A strong candidate for the nutrient that may drive overconsumption of energy is protein. This idea is called the “protein leverage hypothesis” (Simpson and Raubenheimer 2005) and is elaborated in full in the book The Nature of Nutrition by Simpson and Raubenheimer (2010). According to this hypothesis, the main driver of food intake is always the demand for protein: people and animals primarily eat to satisfy their protein requirements, and energy intake comes along as a passenger. The idea has much to commend it. Across human societies, protein intake is almost constant despite very diverse diets, consistent with protein being the primary regulated nutrient, whereas energy intakes diverge widely. Moreover, diets with a high ratio of protein to energy (e.g., the Atkins diet) are known to be effective for weight loss. A review of 34 studies of dietary intake showed that dietary protein was negatively associated with energy intake (Gosby et al. 2014). Several experimental studies of diet choice in rodents also point to protein content as the factor regulating energy intake and hence body weight (e.g., Sorensen et al. 2008; Huang et al. 2013). Hence, the protein leverage hypothesis may provide a necessary backdrop to the brown adipose tissue idea. It has also been noted that protein leverage may explain why, in modern society, individuals increase their body mass to their upper intervention points, as part of the “drifty gene” idea detailed above (Speakman 2014). Note, however, that other studies have found little evidence for the protein leverage hypothesis in food intake records over time in the USA (Bender and Dufour 2015), although this may reflect the poor quality of food intake reports rather than a failure of the theory (Dhurandhar et al. 2015).

If humans do overconsume energy because of their requirement for protein, then the ability to burn off the excess energy might depend on levels of brown adipose tissue. Individuals with large BAT depots might burn off the excess and remain lean, while those with less BAT might be unable to burn off the excess and become obese. By this interpretation, obesity is a maladaptive consequence of variation in adaptive selection on brown adipose tissue capacity. The environmental trigger is the change in the energy-to-nutrient ratio of modern food, which stimulates overconsumption of energy. By this viewpoint, there is no need to infer that obesity has ever provided an advantage, or even that we have ever been fat in our history.
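
This interpretation can be expressed as a simple energy budget: whatever part of the daily surplus exceeds an individual’s BAT thermogenic capacity is stored as fat. The sketch below is a toy model under stated assumptions; the function names are hypothetical, it treats stored energy as pure fat at about 37.7 kJ/g (about 9 kcal/g), and it ignores storage costs and any compensatory changes in expenditure.

```python
FAT_KJ_PER_G = 37.7  # energy density of fat (about 9 kcal/g); storage costs ignored


def stored_energy_mj(surplus_mj, bat_capacity_mj):
    """Daily energy (MJ) left to be stored after BAT thermogenesis has
    dissipated as much of the surplus as its assumed capacity allows."""
    return max(0.0, surplus_mj - bat_capacity_mj)


def fat_gain_kg_per_year(surplus_mj, bat_capacity_mj, days=365):
    """Annual fat gain; MJ divided by kJ/g gives kilograms directly."""
    return stored_energy_mj(surplus_mj, bat_capacity_mj) * days / FAT_KJ_PER_G
```

Under these assumptions, two people with the same 2 MJ/day surplus diverge sharply: one with 2.5 MJ/day of BAT capacity stores nothing, while one with only 0.5 MJ/day would gain roughly 14.5 kg of fat in a year.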

If brown adipose tissue is a key factor influencing the propensity to become fat, then one would anticipate that knocking out the UCP-1 gene in mice would lead to obesity. Enerbäck et al. (1997) knocked out UCP-1, but the result did not support the hypothesis: the mice did not become any more obese than wild-type mice when fed a high-fat diet. One potential issue with this experiment was that the genetic background of these mice was a mix of two strains, one susceptible and the other resistant to weight gain on a high-fat diet. The experiment was therefore repeated with the mice backcrossed onto a pure C57BL/6 background (a strain that is susceptible to high-fat diet-induced weight gain) (Liu et al. 2003). This time, however, the mice lacking UCP-1 were actually more resistant to high-fat diet-induced obesity than the wild-type mice, although the protective effect was abolished when the mice were raised at 27 °C. The confusion was further compounded when the same mice were studied at 30 °C, at which temperature the KO mice became fat even on a chow diet, an effect that was amplified by high-fat feeding (Feldmann et al. 2009). This is puzzling because at 30 °C one would expect UCP-1 to be inactive in the mice that had it, so they should not differ from the KO animals. The impact of knocking out the UCP-1 gene therefore ranges from protection against obesity at 20 °C, through no effect at 27 °C, to high susceptibility at 30 °C. These data for the UCP-1 KO mouse raise interesting questions about the hypothetical role of BAT in the development of obesity in humans. In particular, in some circumstances, lacking functional BAT is no impediment to burning off excess intake (i.e., the UCP-1 KO mice at 20 °C). It is then unclear why humans could not also burn off excess intake by other means, for example, physical activity or shivering.

A second major problem with the BAT idea is that the obesity genes identified so far by GWAS (Willer et al. 2009; Speliotes et al. 2010) are not associated with brown adipose tissue function; instead, most appear to be linked to development or expressed in the brain and linked to individual variation in food intake (e.g., the gene FTO: Cecil et al. 2008; Speakman 2015). This lack of a genetic link suggests that evolutionary variability in thermoregulatory requirements probably did not drive individual variation in BAT thermogenic capacity (but see Takenaka et al. (2012) for a perspective on the evolution of human thermogenic capacity relative to the great apes). Finally, there are other potential explanations for the observed association between BAT depot size and obesity (Cypess et al. 2009; van Marken-Lichtenbelt et al. 2009). Adipose tissue acts as an insulator, and thermoregulatory demands in obese people are reduced because of a downward shift in the thermoneutral zone (Kingma et al. 2012). Severely obese people may even be under heat stress, because of their reduced capacity to dissipate heat, at ambient temperatures where lean people are within their thermoneutral zone. In these circumstances, the requirement for thermoregulatory heat production would be reduced, so the association between BAT activity and adiposity may arise because obesity reduces the need for BAT, not because variation in BAT causes variation in the capacity to burn off excess intake.

Conclusion

Many ideas have been put forward to explain the evolutionary background of the genetic contribution to the obesity epidemic. They can be divided into three basic types. Adaptive interpretations suggest that fat has been advantageous during our evolutionary history; these include the thrifty gene hypothesis and the idea that high body fat was necessary to support our brain development. Such ideas generally struggle to explain the diversity in obesity levels observed in modern society. Neutral interpretations emphasize that the propensity to become obese has no advantage but is a by-product of mutation and genetic drift in some key control features; the dominant idea of this type is the drifty gene hypothesis. Finally, obesity may be a maladaptive consequence of positive selection on some other system. Examples of this type of explanation are the brown adipose tissue hypothesis and the protein leverage hypothesis.

References

Adamets S. Famine in nineteenth and twentieth century Russia: mortality by age, cause and gender. In: Dyson T, O’Grada C, eds. Famine Demography. Perspectives from the Past and Present. International Studies in Demography Series. Oxford: Oxford University Press; 2002:158-180. Chapter 8.

Adriaensen F et al. Stabilizing selection on blue tit fledgling mass in the presence of sparrowhawks. Proc R Soc Lond Ser B. 1998;265:1011-1016.

Ahima RS, Dushay J, Flier SN, et al. Leptin accelerates the onset of puberty in normal female mice. J Clin Invest. 1997;99:391-395.

Allison DB, Kaprio J, Korkeila M, et al. The heritability of BMI among an international sample of monozygotic twins reared apart. Int J Obes (Lond). 1996;20:501-506.

Archer E. The childhood obesity epidemic as a result of nongenetic evolution: the maternal resources hypothesis. Mayo Clin Proc. 2015;90:77-92.

Ayub Q et al. Revisiting the thrifty gene hypothesis via 65 loci associated with susceptibility to type 2 diabetes. Am J Hum Genet. 2014;94:176-185.

Baldellou M, Henzi SP. Vigilance, predator detection and the presence of supernumerary males in vervet monkey troops. Anim Behav. 1992;43:451-461.

Banks PB, Norrdahl K, Korpimaki E. Nonlinearity in the predation risk of prey mobility. Proc R Soc B. 2000;267:1621-1625.

Bartness TJ, Wade GN. Photoperiodic control of seasonal body weight cycles in hamsters. Neurosci Biobehav Rev. 1984;9:599-612.

Bender RL, Dufour DL. Protein intake, energy intake, and BMI among USA adults from 1999–2000 to 2009–2010: little evidence for the protein leverage hypothesis. Am J Hum Biol. 2015;27:261-262.

Benyshek DC, Watson JT. Exploring the thrifty genotype’s food-shortage assumptions, a cross-cultural comparison of ethnographic accounts of food security among foraging and agricultural societies. Am J Phys Anthropol. 2006;131:120-126.

Berbesque JC et al. Hunter-gatherers have less famine than agriculturalists. Biol Lett. 2014;10:20130853.

Boswell T, Woods SC, Kenagy GJ. Seasonal changes in body mass, insulin and glucocorticoids of free-living golden mantled ground squirrels. Gen Comp Endocrinol. 1994;96:339-346.

Brewis AA. Obesity: Cultural and Biocultural Perspectives. New Brunswick, NJ: Rutgers University Press; 2010.

Brodin A. Mass-dependent predation and metabolic expenditure in wintering birds: is there a trade-off between different forms of predation? Anim Behav. 2001;62:993-999.

Cai Y, Feng W. Famine, social disruption, and involuntary fetal loss: evidence from Chinese survey data. Demography. 2005;42:301-322.

Campbell BC, Cajigal A. Diabetes: energetics, development and human evolution. Med Hypotheses. 2001;57:64-67.

Carlsen M, Lodal J, Leirs H, et al. The effect of predation risk on body weight in the field vole, Microtus agrestis. Oikos. 1999;87:277-285.

Carlsen M, Lodal J, Leirs H, et al. Effects of predation on temporary autumn populations of subadult Clethrionomys glareolus in forest clearings. Int J Mamm Biol. 2000;65:100-109.

Cecil JE, Tavendale R, Watt P, et al. An obesity associated FTO gene variant and increased energy intake in children. N Engl J Med. 2008;359:2558-2566.

Chakravarthy MV, Booth FW. Eating, exercise, and “thrifty” genotypes: connecting the dots toward an evolutionary understanding of modern chronic diseases. J Appl Physiol. 2004;96:3-10.

Chehab FF, Lim ME, Lu RH. Correction of the sterility defect in homozygous obese female mice by treatment with human recombinant leptin. Nat Genet. 1996;12:318-320.

Cheney DL, Seyfarth RM. Vervet monkey alarm calls – manipulation through shared information. Behaviour. 1985;94:150-166.

Church TS, Thomas DM, Tudor-Locke C, et al. Trends over 5 decades in U.S. occupation-related physical activity and their associations with obesity. PLoS One. 2011;6:e19657.

Covas R et al. Stabilizing selection on body mass in the sociable weaver Philetairus socius. Proc R Soc Lond Ser B. 2002;269:1905-1909.

Cresswell W. Diurnal and seasonal mass variation in blackbirds Turdus merula: consequences for mass-dependent predation risk. J Anim Ecol. 1998;67:78-90.

Cuthill IC et al. Body mass regulation in response to changes in feeding predictability and overnight energy expenditure. Behav Ecol. 2000;11:189-195.

Cypess AM, Lehman S, Williams G, et al. Identification and importance of brown adipose tissue in adult humans. N Engl J Med. 2009;360:1509-1517.

Day FR, Loos RJF. Developments in obesity genetics in the era of genome wide association studies. J Nutrigenet Nutrigenomics. 2011;4:222-238.

Dhurandhar NV, Schoeller DA, Brown AW, et al. Energy balance measurement: when something is not better than nothing. Int J Obes (Lond). 2015;39:1109-1113.

Diamond J. Guns, Germs and Steel: The Fates of Human Societies. New York: WW Norton; 1995.

DuBois E. Basal Metabolism in Health and Disease. Philadelphia: Lea and Febiger; 1936.

Dudley JT, Kim Y, Liu L, et al. Human genomic disease variants: a neutral evolutionary explanation. Genome Res. 2012;22:1383-1394.

Eaton SB, Konner M, Shostak M. Stone agers in the fast lane: chronic degenerative diseases in evolutionary perspective. Am J Med. 1988;84:739-749.

Eknoyan G. A history of obesity, or how what was good became ugly and then bad. Adv Chronic Kidney Dis. 2006;13:421-427.

Elia M. Hunger disease. Clin Nutr. 2000;19:379-386.

Eller E, Hawks J, Relethford JH. Local extinction and recolonisation, species effective population size and modern human origins. Hum Biol. 2009;81:805-824.

Enerbäck S, Jacobsson A, Simpson EM, et al. Mice lacking mitochondrial uncoupling protein are cold-sensitive but not obese. Nature. 1997;387:90-94.

Faggioni R, Feingold KR, Grunfeld C. Leptin regulation of the immune response and the immunodeficiency of malnutrition. FASEB J. 2001;15:2565-2571.

Feist DD, Feist CF. Effects of cold, short day and melatonin on thermogenesis, body weight and reproductive organs in Alaskan red backed voles. J Comp Physiol B. 1986;156:741-746.

Feist DD, Rosenmann M. Norepinephrine thermogenesis in seasonally acclimatized and cold acclimated red-backed voles in Alaska. Can J Physiol Pharmacol. 1976;54:146-153.

Feldmann HM, Golozoubova V, Cannon B, et al. UCP1 ablation induces obesity and abolishes diet-induced thermogenesis in mice exempt from thermal stress by living at thermoneutrality. Cell Metab. 2009;9:203-209.

Flegal KM, Shepherd JA, Looker CA, et al. Overweight and obesity in the United States: prevalence and trends 1960–1994. Int J Obes (Lond). 1998;22:39-47.

Flegal KM et al. Prevalence and trends in obesity among US adults, 1999–2008. JAMA. 2010;303:235-241.

Franks PW. Gene by environment interactions in type 2 diabetes. Curr Diabetes Rep. 2011;11:552-561.

Fransson T, Weber TP. Migratory fuelling in blackcaps (Sylvia atricapilla) under perceived risk of predation. Behav Ecol Sociobiol. 1997;41:75-80.

Fredriksson R, Hagglund M, Olszewski PK, et al. The obesity gene, FTO, is of ancient origin, upregulated during food deprivation and expressed in neurons of feeding related nuclei of the brain. Endocrinology. 2008;149:2062-2071.

Gentle LK, Gosler AG. Fat reserves and perceived predation risk in the great tit, Parus major. Proc R Soc Lond Ser B. 2001;268:487-491.

Ginsburg E, Livshits G, Yakovenko K, et al. Major gene control of human body height, weight and BMI in five ethnically different populations. Ann Hum Genet. 1998;62:307-322.

Gosby AK, Conigrave AD, Raubenheimer D, et al. Protein leverage and energy intake. Obes Rev. 2014;15:183-191.

Gosler AG, Greenwood JJD, Perrins C. Predation risk and the cost of being fat. Nature. 1995;377:621-623.

Hales CN, Barker DJ. Type 2 (non-insulin-dependent) diabetes mellitus: the thrifty phenotype hypothesis. Diabetologia. 1992;35:595-601.

Harding RM, Fullerton SM, Griffiths RC, et al. Archaic African and Asian lineages in the genetic ancestry of modern humans. Am J Hum Genet. 1997;60:772-789.

Harrison GA, ed. Famine. Oxford: Oxford University Press; 1988.

Hart D, Susman RW. Man the Hunted: Primates, Predators and Human Evolution. Boulder, CO: Westview Press; 2005.

Hebebrand J, Volckmar AL, Knoll N, et al. Chipping away the ‘missing heritability’: GIANT steps forward in the molecular elucidation of obesity – but still lots to go. Obes Facts. 2010;3:294-303.

Henry CJK. Body mass index and the limits to human survival. Eur J Clin Nutr. 1990;44:329-335.

Herman CP, Polivy J. A boundary model for the regulation of eating. In: Stunkard AJ, Stellar E, eds. Eating and Its Disorders. New York: Raven Press; 1984.

Higginson AD, McNamara JM, Houston AI. The starvation-predation trade-off predicts trends in body size, muscularity and adiposity between and within taxa. Am Nat. 2012;179:338-350.

Himms-Hagen J. Obesity may be due to malfunctioning brown fat. Can Med Assoc J. 1979;121:1361-1364.

Hionidou V. Why do people die in famines? Evidence from three island populations. Popul Stud A J Demogr. 2002;56:65-80.

Houston AI, McNamara JM, Hutchinson JMC. General results concerning the trade-off between gaining energy and avoiding predation. Philos Trans R Soc Lond B. 1993;341:375-397.

Huang X, Hancock DP, Gosby AK, et al. Effects of dietary protein to carbohydrate balance on energy intake, fat storage, and heat production in mice. Obesity. 2013;21:85-92.

Johnson RJ, Stenvinkel P, Martin SL, et al. Redefining metabolic syndrome as a fat storage condition based on studies of comparative physiology. Obesity. 2013;21:659-664.

Keys A, Brozek J, Henschel A, et al. The Biology of Human Starvation. Vol. 2. Minneapolis, MN: University of Minnesota Press; 1950.

Kingma B, Frijns A, van Marken Lichtenbelt W. The thermoneutral zone: implications for metabolic studies. Front Biosci. 2012:e4.

Klaassen M, Biebach H. Energetics of fattening and starvation in the long-distance migratory garden warbler (Sylvia borin) during the migratory phase. J Comp Physiol B. 1994;164:362-371.

Koh XH, Liu XY, Teo YY. Can evidence from genome-wide association studies and positive natural selection surveys be used to evaluate the thrifty gene hypothesis in East Asians? PLoS One. 2014;10:e10974.

Krol E, Speakman JR. Regulation of body mass and adiposity in the field vole, Microtus agrestis: a model of leptin resistance. J Endocrinol. 2007;192:271-278.

Krol E, Redman P, Thomson PJ, et al. Effect of photoperiod on body mass, food intake and body composition in the field vole, Microtus agrestis. J Exp Biol. 2005;208:571-584.

Krulin G, Sealander JA. Annual lipid cycle of the gray bat Myotis grisescens. Comp Biochem Physiol. 1972;42A:537-549.

Kullberg C, Fransson T, Jakobsson S. Impaired predator evasion in fat blackcaps (Sylvia atricapilla). Proc R Soc Lond Ser B. 1996;263:1671-1675.

Kunz TH, Wrazen JA, Burnet CD. Changes in body mass and fat reserves in pre-hibernating little brown bats (Myotis lucifugus). Ecoscience. 1998;5:8-17.

Levin BE. Developmental gene × environmental interactions affecting systems regulating energy homeostasis and obesity. Front Neuroendocrinol. 2010;31:270-283.

Levitsky DA. Putting behavior back into feeding behavior: a tribute to George Collier. Appetite. 2002;38:143-148.

Lev-Ran A. Thrifty genotype: how applicable is it to obesity and type 2 diabetes? Diabetes Rev. 1999;7:1-22.

Lev-Ran A. Human obesity: an evolutionary approach to understanding our bulging waistline. Diabetes Metab Res Rev. 2001;17:347-362.

Li XS, Wang DH. Regulation of body weight and thermogenesis in seasonally acclimatized Brandt’s voles (Microtus brandtii). Horm Behav. 2005;48:321-328.

Lima SL. Predation risk and unpredictable feeding conditions – determinants of body mass in birds. Ecology. 1986;67:377-385.

Liu XM, et al. Brown adipose tissue directly regulates whole body energy metabolism and energy balance. Cell Res. 2013;23:851-854.

Liu XM, et al. Brown adipose tissue transplantation reverses obesity in the ob/ob mouse. Endocrinology. 2015;156:2461-2469.

Liu XT, Rossmeisl M, McClaine J, et al. Paradoxical resistance to diet-induced obesity in UCP1-deficient mice. J Clin Invest. 2003;111:399-407.

Lord GM, Matarese G, Howard LK, et al. Leptin modulates the T-cell immune response and reverses starvation-induced immunosuppression. Nature. 1998;394:897-901.

Macintyre K. Famine and the female mortality advantage. Chapter 12. In: Dyson T, Ó Gráda C, eds. Famine Demography: Perspectives from the Past and Present. International Studies in Demography Series. Oxford: Oxford University Press; 2002:240-260.

MacLeod R, MacLeod CD, Learmonth JA, et al. Mass-dependent predation risk and lethal dolphin-porpoise interactions. Proc R Soc B. 2007;274:2587-2593.

Martin SL. Mammalian hibernation: a naturally reversible model for insulin resistance in man. Diab Vasc Dis Res. 2008;5:76-81.

Matarese G. Leptin and the immune system: how nutritional status influences the immune response. Eur Cytokine Netw. 2000;11:7-13.

McCance RA. Famines of history and of today. Proc Nutr Soc. 1975;34:161-166.

McDevitt RM, Speakman JR. Central limits to sustainable metabolic rate have no role in cold acclimation of the short-tailed field vole (Microtus agrestis). Physiol Zool. 1994;67:1117-1139.

McDevitt RM, Speakman JR. Summer acclimatization in the short-tailed field vole, Microtus agrestis. J Comp Physiol B. 1996;166:286-293.

Menken J, Campbell C. Forum: on the demography of South Asian famines. Health Transit Rev. 1992;2:91-108.

Mokyr J, Ó Gráda C. Famine disease and famine mortality: lessons from Ireland, 1845–1850. Centre for Economic Research Working Paper 99/12. University College Dublin; 1999.

Monarca RI, da Luz Mathias M, Wang DH, et al. Predation risk modulates diet induced obesity in male C57BL/6 mice. Obesity. 2015a: in press.

Monarca RI, da Luz Mathias M, Speakman JR. Behavioural and physiological responses of wood mice (Apodemus sylvaticus) to experimental manipulations of predation and starvation risk. Physiol Behav. 2015b;149:331-339.

Moore F, Kerlinger P. Stopover and fat deposition by North American wood warblers (Parulinae) following spring migration over the Gulf of Mexico. Oecologia. 1987;74:47-54.

Moriguchi S, Amano T, Ushiyama K, et al. Seasonal and sexual differences in migration timing and fat deposition in greater white-fronted goose. Ornithol Sci. 2010;9:75-82.

Mounzih K, Lu RH, Chehab FF. Leptin treatment rescues sterility of genetically obese ob/ob males. Endocrinology. 1997;138:1190-1193.

Nedergaard J, Bengtsson T, Cannon B. Unexpected evidence for active brown adipose tissue in adult humans. Am J Physiol Endocrinol Metab. 2007;293:E444-E452.

Neel JV. Diabetes mellitus: a ‘thrifty’ genotype rendered detrimental by ‘progress’? Am J Hum Genet. 1962;14:352-353.

Norrdahl K, Korpimäki E. Does mobility or sex of voles affect risk of predation by mammalian predators? Ecology. 1998;79:226-232.

O’Rourke RW. Metabolic thrift and the basis of human obesity. Ann Surg. 2014;259:642-648.

Ogden CL, Carroll MD, Curtin LR, et al. Prevalence of overweight and obesity in the United States, 1999–2004. JAMA. 2006;295:1549-1555.

Okada Y, Kubo M, Ohmiya H, et al. Common variants at CDKAL1 and KLF9 are associated with body mass index in east Asian populations. Nat Genet. 2012;44:302-306.

Paternoster L, Evans DM, Nohr EA, et al. Genome-wide population-based association study of extremely overweight young adults: the GOYA study. PLoS One. 2012;6:e24303.

Platek SM, Gallup GG, Fryer BD. The fireside hypothesis: was there differential selection to tolerate air pollution during human evolution? Med Hypotheses. 2002;58:1-5.

Power ML, Schulkin J. The Evolution of Obesity. Baltimore: Johns Hopkins University Press; 2009. 408 p.

Prentice AM. Obesity and its potential mechanistic basis. Br Med Bull. 2001;60:51-67.

Prentice AM. Early influences on human energy regulation: thrifty genotypes and thrifty phenotypes. Physiol Behav. 2005a;86:640-645.

Prentice AM. Starvation in humans: evolutionary background and contemporary implications. Mech Ageing Dev. 2005b;126:976-981.

Prentice AM. The emerging epidemic of obesity in developing countries. Int J Epidemiol. 2006;35:93-99.

Prentice AM, Jebb SA. Obesity in Britain: gluttony or sloth? Br Med J. 1995;311:437-439.

Prentice AM, Rayco-Solon P, Moore SE. Insights from the developing world: thrifty genotypes and thrifty phenotypes. Proc Nutr Soc. 2005;64:153-161.

Prentice AM, Hennig BJ, Fulford AJ. Evolutionary origins of the obesity epidemic: natural selection of thrifty genes or genetic drift following predation release? Int J Obes (Lond). 2008;32:1607-1610.

Procope J. Hippocrates on Diet and Hygiene. London: Zeno; 1952.

Puzyrev VP, Kucher AN. Evolutionary ontogenetic aspects of pathogenetics of chronic human diseases. Russ J Genet. 2011;47:1395-1405.

Rakesh TP, Syam TP. Milder forms of obesity may be a good evolutionary adaptation: ‘fitness first’ hypothesis. Hypothesis. 2015;13:e4.

Razzaque A. Effect of famine on fertility in a rural area of Bangladesh. J Biosoc Sci. 1988;20:287-294.

Repenning M, Fontana CS. Seasonality of breeding, moult and fat deposition of birds in subtropical lowlands of southern Brazil. Emu. 2011;111:268-280.

Rothwell NJ, Stock MJ. Role for brown adipose tissue in diet induced thermogenesis. Nature. 1979;281:31-35.

Saito M, et al. High incidence of metabolically active brown adipose tissue in healthy adult humans: effects of cold exposure and adiposity. Diabetes. 2009;58:1526-1531.

Scott S, Duncan SR, Duncan CJ. Infant mortality and famine – a study in historical epidemiology in northern England. J Epidemiol Community Health. 1995;49:245-252.

Segal N, Allison DB. Twins and virtual twins: bases of relative body weight revisited. Int J Obes (Lond). 2002;26:437-441.

Sellayah D, Cagampang FR, Cox RD. On the evolutionary origins of obesity: a new hypothesis. Endocrinology. 2014;155:1573-1588.

Simpson SJ, Raubenheimer D. Obesity: the protein leverage hypothesis. Obes Rev. 2005;6:133-142.

Simpson SJ, Raubenheimer D. The Nature of Nutrition: A Unifying Framework from Animal Adaptation to Human Obesity. Princeton: Princeton University Press; 2010. 248pp.

Sorensen A, Mayntz D, Raubenheimer D, et al. Protein-leverage in mice: the geometry of macronutrient balancing and consequences for fat deposition. Obesity. 2008;16:566-571.

Southam L, Soranzo N, Montgomery SB, et al. Is the thrifty genotype hypothesis supported by evidence based on confirmed type 2 diabetes and obesity susceptibility variants? Diabetologia. 2009;52:1846-1851.

Speakman JR. Obesity – the integrated roles of environment and genetics. J Nutr. 2004;134:2090S-2105S.

Speakman JR. Thrifty genes for obesity and the metabolic syndrome – time to call off the search? Diab Vasc Dis Res. 2006a;3:7-11.

Speakman JR. The genetics of obesity: five fundamental problems with the famine hypothesis. In: Fantuzzi G, Mazzone T, eds. Adipose Tissue and Adipokines in Health and Disease. New York: Humana Press; 2006b.

Speakman JR. A nonadaptive scenario explaining the genetic predisposition to obesity: the “predation release” hypothesis. Cell Metab. 2007;6:5-12.

Speakman JR. Thrifty genes for obesity, an attractive but flawed idea, and an alternative perspective: the ‘drifty gene’ hypothesis. Int J Obes (Lond). 2008;32:1611-1617.

Speakman JR. Evolutionary perspectives on the obesity epidemic: adaptive, maladaptive and neutral viewpoints. Annu Rev Nutr. 2013a;33:289-317.

Speakman JR. Sex and age-related mortality profiles during famine: testing the body fat hypothesis. J Biosoc Sci. 2013b;45:823-840.

Speakman JR. If body fatness is under physiological regulation, then how come we have an obesity epidemic? Physiology. 2014;29:88-98.

Speakman JR. The ‘fat mass and obesity related’ (FTO) gene: mechanisms of impact on obesity and energy balance. Curr Obes Rep. 2015;4:73-91.

Speakman JR, O’Rahilly S. Fat – an evolving issue. Dis Model Mech. 2012;5:569-573.

Speakman JR, Rowland A. Preparing for inactivity: how insectivorous bats deposit a fat store for hibernation. Proc Nutr Soc. 1999;58:123-131.

Speakman JR, Westerterp KR. Association between energy demands, physical activity and body composition in adult humans between 18 and 96 years of age. Am J Clin Nutr. 2010;92:826-834.

Speakman JR, Westerterp KR. A mathematical model of weight loss during total starvation: more evidence against the thrifty gene hypothesis. Dis Model Mech. 2013;6:236-251.

Speakman JR, Levitsky DA, Allison DB, et al. Set-points, settling points and some alternative models: theoretical options to understand how genes and environments combine to regulate body adiposity. Dis Model Mech. 2011;4:733-745.

Speliotes EK, Willer CJ, Berndt SI, et al. Association analyses of 249,796 individuals reveal 18 new loci associated with body mass index. Nat Genet. 2010;42:937-948.

Stanford KI, et al. Brown adipose tissue regulates glucose homeostasis and insulin sensitivity. J Clin Invest. 2013;123:215-223.

Stearns PN. The Encyclopedia of World History. 6th ed. Houghton Mifflin; 2001.

Stein Z, Susser M. Fertility, fecundity, famine – food rations in Dutch famine 1944–45 have a causal relation to fertility and probably to fecundity. Hum Biol. 1975;47:131-154.

Stein ZA, Susser M, Saenger G, et al. Famine and Human Development: The Dutch Hunger Winter of 1944–1945. New York: Oxford University Press; 1975.

Stöger R. The thrifty epigenotype: an acquired and heritable predisposition for obesity and diabetes? Bioessays. 2008;30:156-166.

Sundell J, Norrdahl K. Body size-dependent refuges in voles: an alternative explanation of the Chitty effect. Ann Zool Fenn. 2002;39:325-333.