Abstract

In this paper, we establish the superconvergence properties of the Runge-Kutta discontinuous Galerkin method for solving a two-dimensional linear hyperbolic equation with constant coefficients, where the upwind-biased numerical flux is used. By suitably defining the correction function and carefully analyzing the mechanisms that arise when the spatial derivatives and the correction manipulations are carried out along the same or different directions, we obtain superconvergence results for the node averages, the numerical fluxes, the cell averages, the solution and the spatial derivatives. The superconvergence orders in space are the same as those of the semi-discrete method, and the time discretization contributes only an optimal-order error in time. Some numerical experiments are also given.

1 Introduction

In this paper, we study the superconvergence properties of the Runge-Kutta discontinuous Galerkin (RKDG) method with the upwind-biased numerical flux, for solving the two-dimensional linear hyperbolic equation with constant coefficients

$$\begin{aligned} U_t+\beta _1U_x+\beta _2U_y=0, \quad (x,y,t)\in (0,1)^2\times (0,T], \end{aligned}$$(1)

subject to the initial solution \(U(x,y,0)=U_0(x,y)\) and the periodic boundary condition. Here \(\beta _1\) and \(\beta _2\) are assumed to be positive numbers for simplicity, and \(T>0\) is the final time.

As an extension of the discontinuous Galerkin (DG) method [25] for the steady linear transport equation, the RKDG method was proposed to solve the unsteady nonlinear conservation law with the explicit Runge-Kutta (RK) time-marching and many numerical techniques [14, 15, 17, 18, 19]. Due to its high-order accuracy and its ability to capture shocks, this method has attracted more and more attention in recent years. For a fairly complete set of references, please see the review paper [20] and the references therein. Compared with the wide applications of this method, theoretical results are relatively scarce. Restricted to linear hyperbolic equations, many theoretical studies are available for the semi-discrete DG method on, for example, the stability and optimal error estimate [13, 23, 24, 27], the superconvergence analysis [2, 4, 5, 8, 10, 11, 21, 33], and the estimate for post-processed solutions [16, 22, 26]. However, there are only a few studies of the fully discrete DG method. Recently, Xu et al. [32] proposed a uniform framework to analyze the \(L^2\)-norm stability performance of arbitrary RKDG methods. This concept has been implicitly used in Refs. [34, 35], and the main technique is the matrix transferring process based on the temporal difference of stage solutions. We would like to mention that this framework is also well suited to obtaining the optimal error estimate [31] and the superconvergence results [30] for RKDG methods.

In this paper, we are interested in the superconvergence analysis of RKDG methods. To put this in context, let us recall some well-known works on the semi-discrete DG method for the linear hyperbolic equation with constant coefficients. For the one-dimensional scheme with the purely upwind numerical flux, Cheng and Shu [10] proved by a Fourier argument the \((k+3/2)\)th order supraconvergence on the uniform mesh, and then extended this conclusion to the quasi-uniform mesh with the help of the generalized slope and an energy argument [11]. Throughout this paper, k is the degree of piecewise polynomials in the finite element space, and supraconvergence means the high-order approximation between the numerical solution and a special projection of the exact solution. Later, Yang and Shu [33] applied the technique of dual arguments and established the \((k+2)\)th order supraconvergence result, as well as the \((k+2)\)th order superconvergence results on the solution at Radau points and cell averages, on the quasi-uniform mesh. As an important milestone in this field, Cao et al. [8] adopted the technique of correction functions and achieved the \((2k+1)\)th order supraconvergence results. As an application, the superconvergence results on the numerical fluxes, the cell averages, the solution at right Radau points and the derivative at left Radau points were presented. After that, the technique of correction functions was applied to many problems, such as the two-dimensional scheme with purely upwind numerical flux [4], the one-dimensional scheme with upwind-biased numerical flux [2], and some numerical methods for partial differential equations with high-order spatial derivatives [1, 3, 6]. For more details, please refer to [7] and the references therein.

The purpose of this paper is to carry out the superconvergence analysis of the two-dimensional RKDG(s, r, k) method, where s and r are, respectively, the stage number and the time order of the RK time-marching. Generally speaking, this work is an extension of the one-dimensional analysis in [30], since the key techniques still work well, for example, the \(L^2\)-norm stability analysis of RKDG methods by the matrix transferring process, the generalized Gauss-Radau (GGR) projection, the well-defined reference functions at every time stage, the technique of incomplete correction functions, and so on. However, the correction technique in two dimensions runs into difficulties, especially when the spatial derivatives and the correction manipulations are defined along different directions. The main developments on this issue are as follows.

- i) The correction technique in two dimensions is well defined for the upwind-biased numerical flux. This topic has been discussed in [4] for the semi-discrete DG method with purely upwind numerical flux. Based on the Radau expansion in each element, the correction objectives there are made up of an infinite number of expanded terms along the x- and y-directions, respectively. However, this treatment is not easily extended to the upwind-biased numerical flux in two dimensions. In this paper, we carry out the correction manipulation with the help of the GGR projections of given functions. This modification helps to reveal the essential difference between one dimension and multiple dimensions.

- ii) The analysis strategy depends on whether the correction manipulation and the spatial derivative (or its DG discretization) are in the same direction or not. If they are in the same direction, the treatment is almost the same as that in one dimension, using the so-called recursive structure. If not, we have to seek a new line of proof, since the recursive structure is lost. Fortunately, we find the high-order convergence hidden in each correction through a detailed study of the two-dimensional GGR projection and the one-dimensional GGR projection.

Based on the above developments, we are able to obtain almost the same supra- and super-convergence results as those in [30].

The supraconvergence results for the solution are easy to get. However, the supraconvergence analysis for the spatial derivatives is hampered by the multi-dimensional setting. To keep the optimal time order in this case, the spatial derivative was transformed into the temporal difference of stage solutions; see [30] for the one-dimensional case. However, this strategy does not work in multiple dimensions. To overcome this difficulty, we give a new line of proof in this paper using the commutative property of the discrete DG derivatives along two different spatial directions. See the proof of Theorem 3.

In this paper, we obtain the following superconvergence results of the RKDG method. They preserve the superconvergence orders in space of the semi-discrete DG method [4], and the optimal time order is supplemented as expected, provided that the RKDG method is \(L^2\)-norm stable under a suitable temporal-spatial restriction. All conclusions in this paper are independent of the stage number, and are measured by the root-mean-square of discrete data. Specifically, we obtain the \((2k+1)\)th order superconvergence in space with respect to the node averages, the cell averages, and the edge averages of the numerical fluxes. At some special points on element boundaries, there holds the \((k+2)\)th order superconvergence in space for the numerical fluxes and the \((k+1)\)th order for the tangential derivatives of numerical fluxes. We also obtain, respectively, the \((k+2)\)th order and the \((k+1)\)th order superconvergence in space for the solution and spatial derivatives at some special points (or lines).

The remainder of this paper is organized as follows. Section 2 presents the RKDG method in two dimensions. In Sect. 3, we give some preliminaries, including the inverse inequalities of the finite element space, the properties of the spatial DG discretization, the GGR projections in different spatial dimensions, and the technique of correction functions. Section 4 is devoted to establishing the supraconvergence results on the solution and the spatial derivatives, and to presenting some superconvergence results on the node average, the numerical flux, the cell average, the solution and the spatial derivatives. A convenient definition of the initial solution is also given at the end of that section. Section 5 shows some numerical experiments to verify the theoretical results in this paper. The concluding remarks and some technical proofs are given in Sect. 6 and Appendix A, respectively.

To help the readers better understand this paper, we list here some important notations with short descriptions.

| Notation | Description |
| --- | --- |
| s, r, k | The RKDG(s, r, k) method |
| \(\varOmega _h\), \(I_h\), \(J_h\) | The rectangle partition and two partitions along x- and y-directions |
| \(\varGamma _h^1\), \(\varGamma _h^2\) | Vertical and horizontal element boundaries |
| \((\cdot ,\cdot )\), \(\langle \cdot ,\cdot \rangle _{\varGamma _h^d}\) | The standard inner products in \(L^2(\varOmega _h)\) and \(L^2(\varGamma _h^d)\), \(d=1,2\) |
| \(\flat \) | A fixed number with respect to the Sobolev embedding |
| \(\mathbb {G}_{\gamma _1,\gamma _2},\mathbb {G}_{\gamma _1,\gamma _2}^\perp \) | Two-dimensional GGR projection and the projection error |
| \(\mathbb {X}_{\gamma _1}\), \(\mathbb {Y}_{\gamma _2}\), \(\mathbb {X}_{\gamma _1}^\perp \), \(\mathbb {Y}_{\gamma _2}^\perp \) | One-dimensional GGR projections and the projection errors |
| \(\mathbb {Q}\) | The combination of some two-dimensional GGR projections; see (24) |
| \(\mathbb {F}_{1,p}\), \(\mathbb {F}_{2,p}\) | The pth correction operator along x- and y-directions; see (27) |
| \(\partial _x^{-1}\), \(\partial _y^{-1}\) | The antiderivatives along x- and y-directions; see (28) |
| \(\mathcal {S}^x_{\kappa p}\), \(\mathcal {S}^y_{\kappa p}\) | Two elemental structures; see (32) |
| q | Total number of correction manipulations in time-marching |
| \(q_\mathrm{nt}\) | Total number of correction manipulations for the initial solution |
| \(\sigma \) | Maximal order of derivative in reference functions |
| \(U^{(\ell )}_{[\sigma ]}(x,t), \varrho ^{(\ell )}_{[\sigma ]}(x,t)\) | Reference function at the \(\ell \)th stage, and the corresponding truncation error in time |
| \(U^{n,\ell }\) | Reference function at each time stage, defined as \(U^{(\ell )}_{[r]}(x,t^n)\) |
| \(W^{n,\ell }\) | Truncated reference function at each time stage, defined as \(U^{(\ell )}_{[\min (q,r)]}(x,t^n)\) |
| \(z^{n,\ell }, z^{n,\ell }_{\text {c}},z^{n,\ell }_{\text {d}}\) | Arbitrary series \(z^{n,\ell }\) and their combinations; see (45) |
| \(\chi ^{n,\ell }\) | One stage function in the finite element space |
| \(e^{n,\ell }, \xi ^{n,\ell }, \eta ^{n,\ell }\) | Stage error and the decomposition \(e^{n,\ell }=\xi ^{n,\ell }-\eta ^{n,\ell }\); see (42) |
| \(\mathcal {Z}^{n,\ell }(v)\) | Functional to determine the residual of stage error; see (44) |
| \(\Psi _{k+1}^x(x)\), \(\Psi _{k+1}^y(y)\) | Parameter-dependent Radau polynomials of degree \(k+1\) along x- and y-directions |
| \(S_h^{\mathrm{R},x}\), \(S_h^{\mathrm{L},x}\), \(S_h^{\mathrm{R},y}\), \(S_h^{\mathrm{L},y}\) | Sets of roots and extrema of \(\Psi _{k+1}^x(x)\) and \(\Psi _{k+1}^y(y)\) |
| \(\vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^2(\cdot )}\) | The root-mean-square of discrete data; see Sect. 4.4 |

2 The RKDG Method

Given integers \(N_x\) and \(N_y\), consider the rectangle partition of \(\varOmega =(0,1)^2\), namely,

$$\begin{aligned} \varOmega _h=\{K_{ij}=I_i\times J_j: i=1,2,\cdots ,N_x,\ j=1,2,\cdots ,N_y\}, \end{aligned}$$

with \(I_i=(x_{i-1/2},x_{i+1/2})\) and \(J_j=(y_{j-1/2},y_{j+1/2})\). Here the mesh size h is the maximum of \(h_i^x=x_{i+1/2}-x_{i-1/2}\) and \(h_j^y=y_{j+1/2}-y_{j-1/2}\) for \(i=1,2,\cdots , N_x\) and \(j=1,2,\cdots , N_y\). In this paper we assume \(\varOmega _h\) to be quasi-uniform, namely, the ratios \(h/h_i^x\) and \(h/h_j^y\) for any i and j are bounded by a fixed constant as h goes to zero.

The discontinuous finite element space is then defined as

$$\begin{aligned} V_h=\{v\in L^2(\varOmega ): v|_{K_{ij}}\in \mathcal {Q}^k(K_{ij}),\ i=1,2,\cdots ,N_x,\ j=1,2,\cdots ,N_y\}, \end{aligned}$$

where \(\mathcal {Q}^k(K_{ij})=\mathcal {P}^k(I_i)\otimes \mathcal {P}^k(J_j)\) is the space of polynomials in \(K_{ij}\) of degree at most \(k\geqslant 1\) in each variable. Note that \(\mathcal {P}^k(I_i)\) and \(\mathcal {P}^k(J_j)\) consist of all polynomials of degree up to k on the corresponding domain.

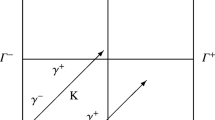

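Note that the function \(v\in V_h\) may be discontinuous across the element boundaries. In this paper, we use the notations following [9, 31]. Let \(\theta \) be a number, and denote \({\tilde{\theta }}=1-\theta \). On the vertical edges \(x=x_{i+1/2}\), the jump and the weighted average are denoted by

$$\begin{aligned} [\![v]\!]_{i+\frac{1}{2},y}=v_{i+1/2,y}^{+}-v_{i+1/2,y}^{-}, \quad \{\!\!\{v\}\!\!\}^{\theta ,y}_{i+\frac{1}{2},y}=\theta v_{i+1/2,y}^{-}+{\tilde{\theta }}v_{i+1/2,y}^{+}, \end{aligned}$$

where \(v_{i+1/2,y}^{+}\) and \(v_{i+1/2,y}^{-}\) are the two limiting traces from the right and the left, respectively. On the horizontal edges \(y=y_{j+1/2}\), the jump and the weighted average are denoted by

$$\begin{aligned} [\![v]\!]_{x,j+\frac{1}{2}}=v_{x,j+1/2}^{+}-v_{x,j+1/2}^{-}, \quad \{\!\!\{v\}\!\!\}^{x,\theta }_{x,j+\frac{1}{2}}=\theta v_{x,j+1/2}^{-}+{\tilde{\theta }}v_{x,j+1/2}^{+}, \end{aligned}$$

where \(v_{x,j+1/2}^{+}\) and \(v_{x,j+1/2}^{-}\) are the two limiting traces from the top and the bottom, respectively.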

Let \(I_h=\{I_i:i=1,2,\cdots ,N_x\}\) and \(J_h=\{J_j:j=1,2,\cdots ,N_y\}\) be two partitions along x- and y-directions, respectively. Denote by \(\varGamma _h^1\) and \(\varGamma _h^2\) the sets of vertical and horizontal element boundaries, respectively.

Let \((\cdot ,\cdot )\) and \(\langle \cdot ,\cdot \rangle _{\varGamma _h^d}, d=1,2,\) be the standard inner products in \(L^2(\varOmega _h)\) and \(L^2(\varGamma _h^d)\), respectively, with the associated norms \(\Vert \cdot \Vert _{L^2(\varOmega _h)}\) and \(\Vert \cdot \Vert _{L^2(\varGamma _h^d)}\).

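The semi-discrete DG method of (1) is defined as follows: find \(u(t):[0,T]\rightarrow V_h\), such that for \(t\in (0,T]\) there holds

$$\begin{aligned} (u_t,v)=\mathcal {H}(u,v)\equiv \mathcal {H}_1^{\theta _1}(u,v)+\mathcal {H}_2^{\theta _2}(u,v), \quad \forall v\in V_h, \end{aligned}$$(6)

coupled with a suitably defined initial solution. Here

$$\begin{aligned} \mathcal {H}_1^{\theta _1}(u,v)=\beta _1\Big [(u,v_x)+\langle \{\!\!\{u\}\!\!\}^{\theta _1,y},[\![v]\!]\rangle _{\varGamma _h^1}\Big ], \quad \mathcal {H}_2^{\theta _2}(u,v)=\beta _2\Big [(u,v_y)+\langle \{\!\!\{u\}\!\!\}^{x,\theta _2},[\![v]\!]\rangle _{\varGamma _h^2}\Big ] \end{aligned}$$(7)

are the DG spatial discretizations along x- and y-directions with given parameters \(\theta _1>1/2\) and \(\theta _2>1/2\). Note that \(\beta _1\{\!\!\{u\}\!\!\}^{\theta _1,y}\) and \(\beta _2\{\!\!\{u\}\!\!\}^{x,\theta _2}\) provide the upwind-biased numerical fluxes, and the periodic boundary condition is used here.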

The objective of this paper is the fully discrete RKDG(s, r, k) method, which adopts the s-stage and rth-order explicit Runge-Kutta algorithm to solve (6). For simplicity, let \(\{t^n=n\tau :0\leqslant n\leqslant M\}\) be a uniform partition of [0, T], where M is a positive integer and \(\tau =T/M\) is the time step. The detailed formulations from \(t^n\) to \(t^{n+1}\) are often represented in the Shu-Osher form [28] as follows.

- Let \(u^{n,0}=u^n\).

- For \(\ell =0,1,\cdots ,s-1\), successively seek \(u^{n,\ell +1}\) by the variational form

  $$\begin{aligned} (u^{n,\ell +1},v) = \sum _{0\leqslant \kappa \leqslant \ell } \Big [ c_{\ell \kappa }(u^{n,\kappa },v) +\tau d_{\ell \kappa }\mathcal {H}(u^{n,\kappa },v) \Big ], \quad \forall v\in V_h, \end{aligned}$$(8)

  where \(c_{\ell \kappa }\) and \(d_{\ell \kappa }\) are the parameters given by the used RK algorithm, satisfying \(\sum _{0\leqslant \kappa \leqslant \ell }c_{\ell \kappa }=1\) and \(d_{\ell \ell }\ne 0\).

- Let \(u^{n+1}=u^{n,s}\).
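The stage loop above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `shu_osher_step`, the callable `rhs` (standing for the spatial operator \(\mathcal {H}\) with the mass matrix already inverted), and the coefficient tables `C_SSP33` and `D_SSP33` (the classical three-stage third-order strong-stability-preserving scheme) are chosen here for the example and are not taken from the paper.

```python
import numpy as np


def shu_osher_step(u, rhs, tau, c, d):
    """Advance one time step of an explicit RK scheme written in Shu-Osher form.

    rhs(u) is a hypothetical stand-in for the spatial operator H with the mass
    matrix already inverted; c and d are s x s lower-triangular coefficient tables.
    """
    stages = [np.asarray(u, dtype=float)]          # u^{n,0} = u^n
    s = len(c)
    for ell in range(s):
        new = np.zeros_like(stages[0])
        for kappa in range(ell + 1):
            if c[ell][kappa] != 0.0:
                new = new + c[ell][kappa] * stages[kappa]
            if d[ell][kappa] != 0.0:
                new = new + tau * d[ell][kappa] * rhs(stages[kappa])
        stages.append(new)                         # u^{n,ell+1}
    return stages[-1]                              # u^{n+1} = u^{n,s}


# Illustrative coefficients (s = r = 3); each row of c sums to 1 and d[l][l] != 0.
C_SSP33 = [[1.0, 0.0, 0.0], [0.75, 0.25, 0.0], [1.0 / 3.0, 0.0, 2.0 / 3.0]]
D_SSP33 = [[1.0, 0.0, 0.0], [0.0, 0.25, 0.0], [0.0, 0.0, 2.0 / 3.0]]

if __name__ == "__main__":
    # Scalar test problem u' = -u, so one step multiplies u by a cubic in z = -tau.
    print(shu_osher_step(np.array([1.0]), lambda v: -v, 0.1, C_SSP33, D_SSP33))
```

For a linear operator, one step of this particular table reproduces the degree-three Taylor polynomial of the exponential, which is a quick sanity check on any coefficient table of third order.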

The initial solution \(u^0\in V_h\) is an approximation of the given function \(U_0\). The definition will be given at the end of Sect. 4 to ensure the supra- and super-convergence results.

3 Preliminaries

In this section, we present some preliminaries, such as the inverse inequalities, the properties of DG discretizations, the definition of GGR projections and the technique of correction functions. For notational convenience, we use the symbol C to denote a generic constant independent of n, h, \(\tau \), u and U. It may have different values at each occurrence.

3.1 Inverse Inequalities and Properties of DG Discretization

The following inverse inequalities will be mainly used in this paper. For any \(v\in V_h\), there is an inverse constant \(\mu >0\) independent of v and h, such that

For more details, please refer to [12].

In the following lemma, we give some properties of the DG discretization that will be explicitly used in this paper. More properties and discussions can be found in [9, 31].

Lemma 1

Let \(d=1,2\). For any parameter \(\theta \), there hold

- accurate skew-symmetric property, i.e., for piecewise smooth functions w and v,

  $$\begin{aligned} \mathcal {H}_d^{\theta }(w,v)+ \mathcal {H}_d^{{\tilde{\theta }}}(v,w)=0; \end{aligned}$$(10)

- weak boundedness in the finite element space, i.e., there is a constant \(C>0\) independent of w, v and h, such that

  $$\begin{aligned} \mathcal {H}_d^{\theta }(w,v) \leqslant Ch^{-1}\Vert w\Vert _{L^2(\varOmega _h)}\Vert v\Vert _{L^2(\varOmega _h)}, \quad \forall w,v\in V_h. \end{aligned}$$(11)

3.2 GGR Projection

In this subsection, we discuss in detail the GGR projection for \(w(x,y)\in H^\flat (\varOmega _h)\), where \(\flat \in (1,2)\) is a fixed number ensuring that \(H^\flat (\varOmega _h)\) is embedded into \(C({\bar{\varOmega }}_h)\). In this paper, we use \(H^{\ell }(\varOmega _h)\) and \(C({\bar{\varOmega }}_h)\) to denote, respectively, the spaces of piecewise \(H^\ell \)-functions and piecewise continuous functions. The notations in Sect. 2 can be extended to these spaces.

Remark 1

If the subscript h is dropped, the corresponding regularity in \(H^{\ell }(\varOmega )\) and \(C({\bar{\varOmega }})\) should be strengthened to the whole domain.

Given two parameters \(\gamma _1\) and \(\gamma _2\), let \(\mathbb {G}_{\gamma _1,\gamma _2} w\in V_h\) be the GGR projection of w. Denoting the projection error by \(\mathbb {G}_{\gamma _1,\gamma _2}^\perp w= w-\mathbb {G}_{\gamma _1,\gamma _2} w\), we can give the detailed definition as follows [9].

- \(\gamma _1\ne 1/2\) and \(\gamma _2\ne 1/2\): for any i and j there hold

  $$\begin{aligned} \int _{K_{ij}}\big (\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\big ) v\,{\text {d}}x{\text {d}}y&=0, \quad \forall v\in \mathcal {P}^{k-1}(I_i)\otimes \mathcal {P}^{k-1}(J_j), \end{aligned}$$(12a)

  $$\begin{aligned} \int _{J_j} \{\!\!\{\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\}\!\!\}^{\gamma _1,y}_{i+\frac{1}{2},y} v\,{\text {d}}y&=0, \quad \forall v\in \mathcal {P}^{k-1}(J_j), \end{aligned}$$(12b)

  $$\begin{aligned} \int _{I_i} \{\!\!\{\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\}\!\!\}^{x,\gamma _2}_{x,j+\frac{1}{2}} v\,{\text {d}}x&=0, \quad \forall v\in \mathcal {P}^{k-1}(I_i), \end{aligned}$$(12c)

  $$\begin{aligned} \{\!\!\{\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\}\!\!\}^{\gamma _1,\gamma _2}_{i+\frac{1}{2},j+\frac{1}{2}}&=0, \end{aligned}$$(12d)

  where \(\{\!\!\{v\}\!\!\}^{\gamma _1,\gamma _2} = \gamma _1\gamma _2v^{-,-} +\gamma _1\tilde{\gamma }_2v^{-,+} +\tilde{\gamma }_1\gamma _2v^{+,-} +\tilde{\gamma }_1\tilde{\gamma }_2v^{+,+} \) is the node average of four limiting traces from different elements.

- \(\gamma _1\ne 1/2\) and \(\gamma _2=1/2\): for any i and j there hold

  $$\begin{aligned} \int _{K_{ij}}\big (\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\big )v\,{\text {d}}x{\text {d}}y&= 0, \quad \forall v\in \mathcal {P}^{k-1}(I_i)\otimes \mathcal {P}^{k}(J_j), \end{aligned}$$(13a)

  $$\begin{aligned} \int _{J_j} \{\!\!\{\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\}\!\!\}^{\gamma _1,y}_{i+\frac{1}{2},y} v\,{\text {d}}y&=0, \quad \forall v\in \mathcal {P}^{k}(J_j). \end{aligned}$$(13b)

- \(\gamma _1=1/2\) and \(\gamma _2\ne 1/2\): for any i and j there hold

  $$\begin{aligned} \int _{K_{ij}}\big (\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\big ) v\,{\text {d}}x{\text {d}}y&=0, \quad \forall v\in \mathcal {P}^{k}(I_i)\otimes \mathcal {P}^{k-1}(J_j), \end{aligned}$$(14a)

  $$\begin{aligned} \int _{I_i} \{\!\!\{\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\}\!\!\}^{x,\gamma _2}_{x,j+\frac{1}{2}} v\,{\text {d}}x&=0, \quad \forall v\in \mathcal {P}^{k}(I_i). \end{aligned}$$(14b)

- \(\gamma _1=1/2\) and \(\gamma _2=1/2\): for any i and j there holds

  $$\begin{aligned} \int _{K_{ij}}\big (\mathbb {G}_{\gamma _1,\gamma _2}^\perp w\big )v\,{\text {d}}x{\text {d}}y=0, \quad \forall v\in \mathcal {P}^{k}(I_i)\otimes \mathcal {P}^{k}(J_j). \end{aligned}$$(15)

  That is to say, \(\mathbb {G}_{1/2,1/2} w\) is the local \(L^2\) projection of w.

It has been proved in [9] that the GGR projection \(\mathbb {G}_{\gamma _1,\gamma _2} w\) is well defined and there holds the approximation property

Here and below, we demand \(R\geqslant \flat \) unless otherwise specified. In fact, we are also allowed to take \(R\geqslant 1\) in (16), if either \(\gamma _1=1/2\) or \(\gamma _2=1/2\).

The following properties [9] play important roles in the optimal error estimate and superconvergence analysis. Associated with the DG discretization (7), there hold

and the superconvergence property (for instance \(R=k+2\))

It is easy to get (17) by the definition of the GGR projection. To obtain (18), we have to emphasize the continuity of w and make deep investigations on the two-dimensional GGR projection by virtue of one-dimensional GGR projections [9].

Let \(\mathcal {P}^k(I_h)\) and \(\mathcal {P}^k(J_h)\) be the spaces of piecewise polynomials with degree up to k, respectively, defined on \(I_h\) and \(J_h\). For convenience of notations and analysis, we would like to define one-dimensional GGR projections for w(x, y).

- Fix \(y\in [0,1]\). Let \(\mathbb {X}_{\gamma _1} w(x,y^{\pm })\in \mathcal {P}^k(I_h)\) be the one-dimensional GGR projection of \(w(x,y^{\pm })\) along the x-direction. It depends on the value of \(\gamma _1\).

  - \(\gamma _1\ne 1/2\): for any \(i=1,2,\cdots , N_x\), there holds

    $$\begin{aligned} \int _{I_i}\mathbb {X}_{\gamma _1}^\perp w(x,y^{\pm })v(x)\,{\text {d}}x=0, \quad \forall v(x)\in \mathcal {P}^{k-1}(I_i), \, \text{ and } \, \{\!\!\{\mathbb {X}_{\gamma _1}^\perp w(x,y^{\pm })\}\!\!\}^{\gamma _1,y}_{i+\frac{1}{2},y}=0. \end{aligned}$$

  - \(\gamma _1=1/2\): for any \(i=1,2,\cdots , N_x\), there holds

    $$\begin{aligned} \int _{I_i}\mathbb {X}_{\frac{1}{2}}^\perp w(x,y^\pm )v(x)\,{\text {d}}x=0, \quad \forall v(x)\in \mathcal {P}^{k}(I_i). \end{aligned}$$

    Namely, it is the local \(L^2\) projection along the x-direction.

  Here \(\mathbb {X}_{\gamma _1}^\perp w(x,y^{\pm })=w(x,y^{\pm })-\mathbb {X}_{\gamma _1} w(x,y^{\pm })\) is the projection error.

- Fix \(x\in [0,1]\). Let \(\mathbb {Y}_{\gamma _2} w(x^\pm ,y)\in \mathcal {P}^k(J_h)\) be the one-dimensional GGR projection of \(w(x^{\pm },y)\) along the y-direction. The definition is analogous to the one above and is therefore omitted.
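To make the one-dimensional definition concrete, the following Python sketch computes the projection along one direction in the case \(\gamma _1\ne 1/2\) on a periodic mesh. It is a minimal illustration, not the construction used in [9]: it assumes that w is continuous and 1-periodic, represents the projection by Legendre coefficients on each cell, and approximates the cell integrals by Gauss quadrature. The name `ggr_projection_1d`, its arguments, and the choice of \(k+3\) quadrature nodes are assumptions made for this example.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def ggr_projection_1d(w, edges, k, theta):
    """Legendre coefficients, shape (N, k+1), of a 1D GGR projection sketch.

    Assumptions: w is continuous and 1-periodic, theta != 1/2, and the cell
    integrals are approximated by Gauss-Legendre quadrature.
    """
    n_cells = len(edges) - 1
    mid = 0.5 * (edges[:-1] + edges[1:])
    half = 0.5 * (edges[1:] - edges[:-1])
    s, wq = leg.leggauss(k + 3)                  # nodes and weights on [-1, 1]
    coef = np.zeros((n_cells, k + 1))

    # Orthogonality to P^{k-1}: modes 0..k-1 coincide with the L2 coefficients.
    for i in range(n_cells):
        vals = w(mid[i] + half[i] * s)
        for m in range(k):
            basis = leg.legval(s, np.eye(k + 1)[m])
            coef[i, m] = 0.5 * (2 * m + 1) * np.sum(wq * vals * basis)

    # Weighted-average condition at x_{i+1/2} (periodic neighbour i+1):
    # theta * P(x^-) + (1 - theta) * P(x^+) = w(x_{i+1/2}),
    # using L_m(1) = 1 and L_m(-1) = (-1)^m.
    signs = (-1.0) ** np.arange(k + 1)
    left_low = coef[:, :k].sum(axis=1)
    right_low = (coef[:, :k] * signs[:k]).sum(axis=1)
    rhs = w(edges[1:]) - theta * left_low - (1 - theta) * np.roll(right_low, -1)
    shift = np.roll(np.eye(n_cells), 1, axis=1)  # entry (i, i+1 mod N) equals 1
    system = theta * np.eye(n_cells) + (1 - theta) * signs[k] * shift
    coef[:, k] = np.linalg.solve(system, rhs)
    return coef


if __name__ == "__main__":
    mesh = np.linspace(0.0, 1.0, 9)
    print(ggr_projection_1d(lambda x: np.sin(2 * np.pi * x), mesh, 2, 0.75)[0])
```

The sketch shows why the case \(\gamma _1\ne 1/2\) differs from the local \(L^2\) projection: the highest mode is coupled across all cells through a circulant system, which is solvable here because the weights \(\gamma _1\) and \(1-\gamma _1\) differ in magnitude.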

The widely used expression \(\mathbb {G}_{\gamma _1,\gamma _2} = \mathbb {X}_{\gamma _1}\otimes \mathbb {Y}_{\gamma _2}\) can be understood through the following fact: for any function with separated variables

with \(\tilde{v}_1(x)\in H^1(I_h)\) and \(\tilde{v}_2(y)\in H^1(J_h)\), there holds

This fact can be easily verified and will be used repeatedly.

Let \(\mathcal {P}^{2k+1}(\varOmega _h)\) be the piecewise polynomials of the total degree not greater than \(2k+1\). By the scaling argument, it is easy to get the following approximation property: for any function \(w\in H^R(\varOmega _h)\), there exists a function \(v=v(w)\in \mathcal {P}^{2k+1}(\varOmega _h)\) such that

where \(d=1,2, \kappa \geqslant 0\) and \(R\geqslant 1\). The next proposition will be used several times.

Proposition 1

For \(\mathcal {P}^{2k+1}(\varOmega _h)\), there exists a basis consisting of functions in the separated form (19), where either \(\tilde{v}_1(x)\) or \(\tilde{v}_2(y)\) is a piecewise polynomial of degree at most k.

Proof

Define the scaled Legendre polynomials in the form

where \(L_\ell (\cdot )\) is the standard Legendre polynomial of degree \(\ell \) on \([-1,1]\). For convenience, their zero extensions are denoted by the same notations.

It is well known that \(\mathcal {P}^{2k+1}(\varOmega _h)\) has the basis functions

where a and b are nonnegative integers satisfying \(a+b\leqslant 2k+1\). Since \(a\geqslant k+1\) and \(b\geqslant k+1\) together would imply \(a+b\geqslant 2k+2\), at least one of a and b does not exceed k. This completes the proof of this proposition.

Remark 2

Note that a similar proposition holds for \(V_h=\mathcal {Q}^k(\varOmega _h)\).

In what follows, we will use many times the combination of some GGR projections

By virtue of one-dimensional GGR projections, we have the next lemma.

Lemma 2

Let \(R\geqslant \flat \). There exists a constant \(C>0\) independent of w and h, such that

Proof

Consider (23), where either \(\tilde{v}_1(x)\) or \(\tilde{v}_2(y)\) is a piecewise polynomial of degree at most k. It follows from (20) that

since either \((\mathbb {X}_{\theta _1}-\mathbb {X}_{\frac{1}{2}}) \tilde{v}_1(x)=0\) or \((\mathbb {Y}_{\theta _2}-\mathbb {Y}_{\frac{1}{2}}) \tilde{v}_2(y)=0\). Hence, it follows from Proposition 1 that

since \(\mathbb {G}_{1/2,1/2} - \mathbb {Q}\) is a linear map. This property together with (16) and (21) implies

which completes the proof of this lemma.

3.3 Techniques of Correction Functions

Let \(0\leqslant p\leqslant k\) be the sequence number of correction manipulation. For any given function \(w(x,y)\in H^1(\varOmega _h)\), we would like to define two series of correction functions

where \(\partial _x^{-1}\) and \(\partial _y^{-1}\) are the antiderivatives along two spatial directions. They are defined element by element, namely

for \(x\in I_i\) or \(y\in J_j\). Here \(i=1, 2,\cdots , N_x\) and \(j=1,2,\cdots , N_y\). The definitions in (27) have clear and uniform expressions, and they are slightly different from those in [4].

Along the same line as that in [30], we have the following conclusions. We omit the proofs here to save space.

Lemma 3

Let \(0\leqslant p\leqslant k\). For \(R\geqslant 1\), there is a constant \(C>0\) independent of h and w, such that

Lemma 4

Let \(1\leqslant p\leqslant k\). There hold

- exact collocation of numerical flux: \(\{\!\!\{\mathbb {F}_{1,p} w\}\!\!\}^{\theta _1,y}_{i+\frac{1}{2},y}=0\) and \(\{\!\!\{\mathbb {F}_{2,p} w\}\!\!\}^{x,\theta _2}_{x,j+\frac{1}{2}}=0\);

- complementary of the \(L^2\)-orthogonality:

  $$\begin{aligned} (\mathbb {F}_{1,p-1}w,v)&= 0, \quad \forall v\in \mathcal {P}^{k-p}(I_h)\otimes \mathcal {P}^{k}(J_h), \end{aligned}$$(30a)

  $$\begin{aligned} (\mathbb {F}_{2,p-1}w,v)&= 0, \quad \forall v\in \mathcal {P}^{k}(I_h)\otimes \mathcal {P}^{k-p}(J_h); \end{aligned}$$(30b)

- the exact expression under the DG spatial discretization: for any \(v\in V_h\),

  $$\begin{aligned} \mathcal {H}_{1}^{\theta _1}(\mathbb {F}_{1,p} w,v)&= \beta _1(\mathbb {F}_{1,p-1} w,v), \quad \mathcal {H}_{2}^{\theta _2}(\mathbb {F}_{2,p} w,v) = \beta _2(\mathbb {F}_{2,p-1} w,v). \end{aligned}$$(31)

In the two-dimensional superconvergence analysis, we pay particular attention to the following elemental structures:

where \(\kappa =1,2\) and \(0\leqslant p\leqslant k\). Here the superscripts x and y refer to the directions of spatial derivatives (or its DG discretization), and the subscript \(\kappa \) refers to the direction of correction manipulation.

For \(\mathcal {S}^x_{1p}(\cdot ,\cdot )\) and \(\mathcal {S}^y_{2p}(\cdot ,\cdot )\), the correction function and the spatial derivative are defined along the same direction. In this case, the treatment is almost the same as that in one dimension. For \(p=0\), using (17), we easily have

For \(1\leqslant p\leqslant k\), as an application of (31), we have

These recursive structures reduce

where \(p'\leqslant k\) is any given integer. Similarly, we also have

Each term on the right-hand side of (33) and (34) helps us to achieve the high-order convergence rate.

For \(\mathcal {S}^x_{2p}(\cdot ,\cdot )\) and \(\mathcal {S}^y_{1p}(\cdot ,\cdot )\), the correction function and the spatial derivative are defined in different directions. In this case the recursive structures are lost. In the following lemma we show that each of them admits a bound of sufficiently high order. This is an important observation in multiple dimensions.

Lemma 5

Let \(0\leqslant p\leqslant k\) and \(R\geqslant 2\). For any \(v\in V_h\), there holds

Proof

Since the proofs are similar, we only take \(\mathcal {S}^y_{1p}(w,v)\) as an example. By the accurate skew-symmetric property (10), we have

Each term in (36) can be bounded by the Cauchy-Schwarz inequality, the application of the inverse inequality (9b) to \(\Vert \{\!\!\{v\}\!\!\}^{x,\tilde{\theta }_2}\Vert _{L^2(\varGamma _{\!h}^2)}\), and the conclusion

In Appendix A, we will prove (37), where the continuity of w plays an important role. This completes the proof of this lemma.

4 Superconvergence Analysis

In this section, we present some superconvergence results of the RKDG(s, r, k) method, under a mild regularity assumption on the exact solution. The line of proof is almost the same as that in one dimension [30], using the incomplete correction for the well-defined reference functions at each time stage, as well as the stability analysis of the RKDG method.

4.1 Reference Functions

Let \(\sigma \) be an integer such that \(0\leqslant \sigma \leqslant r\). Denote \(U_{[\sigma ]}^{(0)}=U\) and inductively define for \(\kappa =1,2,\cdots , s-1\), the reference functions

Here and below the arguments x, y and t are omitted when they are not needed. Let \(\ell \) be an integer and assume that \(U_{[\sigma ]}^{(0)},\cdots ,U_{[\sigma ]}^{(\ell )}\) have been well-defined in the form (38). In parallel with the \((\ell +1)\)th time stage marching, we get

where \(\partial _\beta =\beta _1\partial _x+\beta _2\partial _y\) is the streamline derivative, and (1) is used in the last step. By cutting off the term involving the \((\sigma +1)\)th order time derivative, if it exists, we define the successive reference function \(U_{[\sigma ]}^{(\ell +1)}\) in the form (38) with \(\kappa =\ell +1\). This inductive procedure stops when \(U_{[\sigma ]}^{(s-1)}\) is obtained.

Define the reference functions at every time stage by

Let \(U^{n,s}_{[\sigma ]}=U^{n+1,0}_{[\sigma ]}\). A simple application of the Taylor expansion in time yields that

for \(\ell =0,1,\cdots , s-1\). Here \(\varrho ^{n,\ell }_{[\sigma ]}\) is the truncation error (the cutting-off manipulation and/or the local error of one step time-marching), satisfying for all n and \(\ell \) that

For more details, see [30, 31].

In what follows, we assume \(U_0\in H^{r+\flat }(\varOmega )\). Since \(U(x,y,t)=U_0(x-\beta _1t, y-\beta _2t)\), the above reference functions are all continuous in space.
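The way (1) enters the construction of the reference functions can be spelled out in a short derivation. It is only a sketch, assuming the explicit formula \(U(x,y,t)=U_0(x-\beta _1t, y-\beta _2t)\) and enough smoothness of \(U_0\) for the derivatives to make sense; the index m is introduced here for illustration.

$$\begin{aligned} \partial _tU&=-\beta _1\partial _xU-\beta _2\partial _yU=-\partial _\beta U, \\ \partial _t^mU&=(-\partial _\beta )^mU, \quad m\geqslant 1. \end{aligned}$$

Hence every time derivative that appears in a Taylor expansion in time can be traded for a streamline derivative in space, and each such derivative of order m costs m orders of spatial regularity of \(U_0\).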

4.2 Framework

Let \(e^{n,\ell }=u^{n,\ell }-U^{n,\ell }\) be the stage error of the RKDG method, where \(U^{n,\ell }\equiv U^{n,\ell }_{[r]}\) is defined in the previous subsection. Inserting a series of functions \(\chi ^{n,\ell }\in V_h\), we have the error decomposition

with \(\xi ^{n,\ell }=u^{n,\ell }-\chi ^{n,\ell }\in V_h\) and \(\eta ^{n,\ell }=U^{n,\ell }-\chi ^{n,\ell }\). As is standard in finite element analysis, the main task is to obtain a sharp bound on \(\xi ^{n,\ell }\).

Since the DG discretization is consistent, the definition of reference function (40) and the RKDG method (8) yield the error equations for \(\ell =0,1,\cdots , s-1\),

The source terms \(F^{n,0}, \cdots , F^{n,s-1}\) are recursively defined by

with

Here the summation in (44a) is equal to zero if \(\ell =0\), and

Note that the subscripts “\(\text {c}\)” and “\(\text {d}\)” refer to the related series of coefficients.

Based on the above error equations, we have the following theorem as the starting point of superconvergence analysis.

Theorem 1

For the RKDG(s, r, k) method, there are a suitable temporal-spatial restriction and a bounding constant \(C>0\) independent of n, h, \(\tau \), u and U, such that

where \(\Vert \mathcal {Z}^{\kappa ,\ell }\Vert = \sup \limits _{0\ne v\in V_h}\mathcal {Z}^{\kappa ,\ell }(v)/\Vert v\Vert _{L^2(\varOmega _h)}\).

This theorem is a trivial extension of the results in [30,31,32], where a uniform framework was proposed to investigate the \(L^2\)-norm stability performance of any RKDG method. Many analysis techniques are involved there, for example, the temporal difference of stage solutions, the matrix transferring process, the termination index, the contribution index of spatial discretization, and so on. The studies in [30,31,32] show that most results and discussions are independent of the spatial dimension. Hence we omit the proof of this theorem for brevity.

To end this subsection, we would like to give some explanations on the temporal-spatial restriction in the theorem. Let us consider the RKDG(r, r, k) method, where the stage number is equal to the time order.

- If \(r\equiv 0\pmod 4\) or \(r\equiv 3\pmod 4\), the standard CFL condition \(\tau \leqslant \lambda h\) is enough for arbitrary degree k, where \(\lambda \) is a sufficiently small number.

- If \(r\equiv 1\pmod 4\) or \(r\equiv 2\pmod 4\), we may demand a strong temporal-spatial condition that \(\tau \) must be a high-order infinitesimal of h. The standard CFL condition is allowed only when the degree of piecewise polynomials is small enough.

Note that the maximal CFL number \(\lambda \) may depend on the upwind-biased parameter \(\theta \). For more context of the \(L^2\)-norm stability of RKDG methods, please refer to [30,31,32].
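The case distinction above can be encoded in a few lines. This is only an illustrative sketch with hypothetical names: it reproduces the \(r \bmod 4\) rule quoted for the RKDG(r, r, k) method and does not compute the admissible CFL number \(\lambda \) or the threshold on k, which the text leaves to [30,31,32].

```python
def stability_regime(r: int) -> str:
    """Classify RKDG(r, r, k) by the r mod 4 rule stated in the text."""
    if r < 1:
        raise ValueError("the time order r must be a positive integer")
    if r % 4 in (0, 3):
        # standard CFL condition tau <= lambda * h suffices for every degree k
        return "standard CFL"
    # r = 1 or 2 (mod 4): a stronger restriction may be needed unless k is small
    return "stronger restriction unless k is small"


def cfl_time_step(h: float, cfl_number: float) -> float:
    """Time step under the standard CFL condition; cfl_number is a user-supplied assumption."""
    return cfl_number * h


if __name__ == "__main__":
    for r in range(3, 7):
        print(r, stability_regime(r))
```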

4.3 Supraconvergence Results on the Solution and Spatial Derivatives

Now we give the specific definition of \(\xi ^{n,\ell }=u^{n,\ell }-\chi ^{n,\ell }\in V_h\), and establish the supraconvergence results on the solution and spatial derivatives.

Let \(0\leqslant q\leqslant k\) be the total number of correction manipulations along the spatial direction, and take

with the incomplete correction technique. Here \(U^{n,\ell }=U^{n,\ell }_{[r]}\) and \(W^{n,\ell }=U^{n,\ell }_{[\min (q,r)]}\) are two series of reference functions satisfying (40) with different \(\sigma \).

Substituting (47) into the definition of \(\mathcal {Z}^{n,\ell }(v)\) and making some manipulations, we have \(\mathcal {Z}^{n,\ell }(v)=\sum \limits_{1\leqslant i\leqslant 5}\mathcal {Z}^{n,\ell }_i(v)\) for any \(v\in V_h\), where

Note that \(\mathcal {S}^x_{\kappa p}(\cdot ,\cdot )\) and \(\mathcal {S}^y_{\kappa p}(\cdot ,\cdot )\), \(\kappa =1,2\), have been defined and discussed in Sect. 3.3. Here we have used (40), the identity

and the fact that \((\mathbb {G}_{1/2,1/2}^{\perp }W^{n,\ell }_{\text {c}},v)=0\) holds for any \(v\in V_h\).

Lemma 6

If \(\tau =\mathcal {O}(h)\), we have for \(\ell =0,1,\cdots ,s-1\), that

where the bounding constant \(C>0\) is independent of n, h, \(\tau \), u and U.

Proof

From the recurrence relationships (33) and (34), we have

where \(W^{n,\ell }_{\text {d}}\) can be linearly expressed by \(\tau ^i\partial _t^{i}U^n\) for \(0\leqslant i\leqslant k\). The combination coefficients depend on those parameters given in the RK time-marching. Take the first term in \(\mathcal {Z}^{n,\ell }_{1}(v)\) as an example, in which the typical term can be bounded in the form

where we use Lemma 3 with \(R=k+1-i\) in the first step, and \(\tau =\mathcal {O}(h)\) in the last step. This implies that \(\Vert \mathcal {Z}^{n,\ell }_{1}\Vert \) is bounded by the right-hand side of (49).

The term \(\mathcal {Z}^{n,\ell }_{2}(v)\) can be bounded similarly. For example, for \(0\leqslant i\leqslant k\) there holds

due to Lemma 5 with \(R=k+2+q-p-i\). Here \(\tau =\mathcal {O}(h)\) is also used. This implies that \(\Vert \mathcal {Z}^{n,\ell }_{2}\Vert \) is also bounded as expected.

The first two terms in \(\mathcal {Z}^{n,\ell }_{3}(v)\) can be bounded as follows. For example, noticing (41) and Lemma 3 with \(R=k+1-p\), we have

It is trivial to see that \((\varrho _{[r]}^{n,\ell },v)\leqslant C\tau ^r\Vert U_0\Vert _{H^{r+1}(\varOmega )}\Vert v\Vert _{L^2(\varOmega _h)}\). Hence, we get the same upper bound for \(\Vert \mathcal {Z}^{n,\ell }_{3}\Vert \) as that in (49).

Now we are going to estimate the next term \(\mathcal {Z}^{n,\ell }_{4}(v)\). The first term can be bounded by the fact that \(W^{n,\ell }_{\text {c}}\) is linearly expressed by \(\tau ^{i-1} \partial _t^i U^n\) for \(1\leqslant i\leqslant k\) and \((U^{n+1}-U^n)/\tau \). By applying Lemma 2, the typical terms are bounded by

for \(1\leqslant i\leqslant k\), and

Applying (11) and Lemma 2, as well as the linear expression of \(W^{n,\ell }_{\text {d}}\), the typical terms in the second term can be bounded by

for \(0\leqslant i\leqslant k\). Note that we have used \(\tau =\mathcal {O}(h)\) in the above discussion. This also implies the same upper bound as that in (49).

The definitions in Sect. 4.1 imply \(\gamma ^{(\ell )}_{\kappa [\sigma ]}=\gamma ^{(\ell )}_{\kappa [r]}\) if \(0\leqslant \kappa \leqslant \min (\sigma ,\ell )\). Hence, the terms \(U^{n,\ell }_{\text {c}}-W^{n,\ell }_{\text {c}}\) and \(U^{n,\ell }_{\text {d}}-W^{n,\ell }_{\text {d}}\) can be linearly expressed by \(\tau ^{i-1} \partial _t^i U^n\) and \(\tau ^i \partial _t^i U^n\), respectively, for \(q+1\leqslant i\leqslant r\). Using (16), we have

and using (18) we have

This implies that \(\Vert \mathcal {Z}^{n,\ell }_{5}\Vert \) is bounded by the right-hand side of (49).

Summing up the above estimates completes the proof of this lemma.

As an application of Lemma 6 and Theorem 1, we easily get the following conclusion. It implies that the supraconvergence order in space can achieve \(2k+1\).

Theorem 2

Assume that Theorem 1 holds, and let \(0\leqslant q\leqslant k\) be an integer. If the initial solution \(u^0\in V_h\) satisfies

then there is a constant \(C>0\) independent of n, h, \(\tau \), u and U, such that

If the exact solution has one more order regularity than that in Theorem 2, we have the following conclusion for the spatial derivatives. It also implies that the order in space can achieve \(2k+1\).

Theorem 3

Assume that Theorem 1 holds, and let \(0\leqslant q\leqslant k\) be a given integer. If the initial solution \(u^0\) satisfies

then there is a constant \(C>0\) independent of n, h, \(\tau \), u and U, such that

Proof

First, we give some notations and preliminaries. By the Riesz representation theorem, there exist two linear maps \(\mathbb {H}_1:V_h \rightarrow V_h\) and \(\mathbb {H}_2:V_h \rightarrow V_h\), such that

Let \(w(x,y)=w_1(x)w_2(y)\), where \(w_1(x)\in \mathcal {P}^k(I_h)\) and \(w_2(y)\in \mathcal {P}^k(J_h)\). We have

after a simple manipulation. Consequently,

Noticing Remark 2, we know that this equation holds for any \(w\in V_h\). This implies the commutative property \(\mathbb {H}_1\mathbb {H}_2=\mathbb {H}_2\mathbb {H}_1\).
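The commutative property has a simple matrix interpretation. The sketch below is an illustration under stated assumptions, not the operators of the paper: it assumes that, in an orthonormal tensor-product basis of \(V_h\), the x-direction map acts as \(A\otimes I\) and the y-direction map as \(I\otimes B\), and it uses random matrices A and B as stand-ins for the one-dimensional DG operators. All names are hypothetical.

```python
import random


def kron(A, B):
    """Kronecker product of two dense matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def matmul(A, B):
    """Dense matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def commutator_max(A, B):
    """Largest entry of H1*H2 - H2*H1 with H1 = A (x) I and H2 = I (x) B."""
    H1 = kron(A, identity(len(B)))
    H2 = kron(identity(len(A)), B)
    P, Q = matmul(H1, H2), matmul(H2, H1)
    return max(abs(p - q) for rp, rq in zip(P, Q) for p, q in zip(rp, rq))


def random_matrix(n, seed):
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]


if __name__ == "__main__":
    A, B = random_matrix(4, seed=0), random_matrix(3, seed=1)
    print(commutator_max(A, B))
```

Both products equal \(A\otimes B\), which mirrors the argument in the text: the identity is first checked on separable functions \(w_1(x)w_2(y)\) and then extended to all of \(V_h\) by linearity.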

Below we take \(\Vert \partial _x\xi ^n\Vert _{L^2(\varOmega _h)}\) as an example to show the proof of (53). The process looks a little long and complex.

Expressing the variational form (8) by two maps \(\mathbb {H}_1\) and \(\mathbb {H}_2\), making a left multiplication with \(\mathbb {H}_1\), and using the commutative property \(\mathbb {H}_1\mathbb {H}_2=\mathbb {H}_2\mathbb {H}_1\), we have that

That is to say, \(\mathbb {H}_1 u^{n,\ell }\) is the stage solution of the RKDG(s, r, k) method to solve the auxiliary problem with the periodic boundary condition

The exact solution of this auxiliary problem is \(\widetilde{U}=-\beta _1\partial _x U\). Let \(\widetilde{U}^{n,\ell }_{[r]}\) and \(\widetilde{U}^{n,\ell }_{[\min (q,r)]}\) be the reference functions for (56), define

Following the same line of argument as for Theorem 2, we obtain the similar estimate

where \(\mathcal {G}=(h^{k+1+q}+\tau ^r)\Vert \widetilde{U}_0\Vert _{H^{\max (k+2+q,r+\flat )}(\varOmega )}\). In Appendix A, we will prove

which together with the triangle inequality and (58) yields

where the boundedness of \(\Vert \xi ^0\Vert _{L^2(\varOmega _h)}\) is assumed in (52). Hence \(\Vert \partial _x\xi ^n\Vert _{L^2(\varOmega _h)}\) can be bounded in the form as stated in this theorem, provided that we show

In fact, \(({\widetilde{\xi }}^n-\mathbb {H}_1\xi ^n,v)\) can be divided into the following two terms:

since \(\mathcal {H}_1^{\theta _1}(U^n,v)+(\beta _1\partial _xU^n,v)=0\) holds for any \(v\in V_h\). Then we can prove (60) by estimating (I) and (II) along the same proof line as Lemma 1.

Remark 3

In this paper, we abandon the technique in [30], in which the spatial derivative is transformed into the temporal difference of the stage solution. For the multi-dimensional case, only the streamline derivative can be transformed into the temporal difference. Along this proof line we cannot establish the boundedness of the spatial derivatives along the x- and y-directions separately. Hence, the strategy in [30] does not work well.

Remark 4

The conclusion of Theorem 3 does not hold for the higher order spatial derivative, due to the absence of the commutative property with \(\mathbb {H}_1\) and \(\partial _x\), as well as \(\mathbb {H}_2\) and \(\partial _y\).

4.4 Superconvergence Results

In this subsection, we establish the superconvergence results for the RKDG method in two dimensions, as an extension of those in [4, 30].

To show that, we give some notations. Associated with the partition and the upwind-biased parameters, we seek two series of parameters \(\{\vartheta ^x_{i}\}_{i=1}^{N_x}\) and \(\{\vartheta ^y_{j}\}_{j=1}^{N_y}\) by two systems of linear equations

Since \(\theta _1\ne 1/2\) and \(\theta _2\ne 1/2\), all parameters are well-defined; furthermore, they are bounded by a fixed constant since the partition is quasi-uniform [9, 30].

Let \(\Psi _{k+1}^x(x)\) and \(\Psi _{k+1}^y(y)\) be two parameter-dependent Radau polynomials along different directions. They are both piecewise polynomials of degree \(k+1\), defined element by element such as

Let \(S_h^{\mathrm{R},x}\) and \(S_h^{\mathrm{L},x}\) be two discrete sets made up of the roots and the extrema of \(\Psi _{k+1}^x(x)\), respectively. Likewise, we can define the discrete sets \(S_h^{\mathrm{R},y}\) and \(S_h^{\mathrm{L},y}\) for \(\Psi _{k+1}^y(y)\). Here and below, for all discrete sets the symbol “R” refers to the roots and “L” refers to the extrema. Furthermore, we use “B” to represent the element boundaries.

Letting \({\text{RMS}}\{g_{1}, g_{2}, \ldots , g_{{\ell}} \}=[(g_{1}^{2}+\cdots +g_{\ell} ^{2})/\ell ]^{1/2}\) be the root-mean-square of discrete data, we present some notations below. With respect to the node average, define

With respect to the numerical flux, define

Here \(\star \) refers to the edge average, and the double superscripts point to the discrete sets along x- and y-directions. With respect to the cell average, define

Here \(\star \) refers to the cell average. With respect to the solution and the spatial derivatives, define

Now we are able to announce the superconvergence results in the following theorem.

Theorem 4

Let \(e^n=u^n-U(t^n)\) be the numerical error of the RKDG(s, r, k) method. Assume that Theorem 1 holds. Then the following results hold for any \(n\leqslant M\).

- i) If the initial solution is defined as in Theorem 2 with \(q=k\), then the node averages and the cell averages are superconvergent, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{\theta _1,\theta _2}}\vert \!\vert \!\vert _{L^2(\varOmega _h)} +\vert \!\vert \!\vert {e^n}\vert \!\vert \!\vert _{L_{\star }^2(\varOmega _h)} \leqslant C\Vert U_0\Vert _{H^{\max (2k+2,r+\flat )}(\varOmega )}\big (h^{2k+1}+\tau ^r\big ), \end{aligned}$$

and the edge averages of the numerical fluxes are superconvergent, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{\theta _1,y}}\vert \!\vert \!\vert _{L_{\star }^2(\varGamma _h^1)} + \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{x,\theta _2}}\vert \!\vert \!\vert _{L_{\star }^2(\varGamma _h^2)} \leqslant C\Vert U_0\Vert _{H^{\max (2k+2,r+\flat )}(\varOmega )} (h^{2k+1}+\tau ^r ). \end{aligned}$$

- ii) If the initial solution is defined as in Theorem 2 with \(q=1\), then the numerical fluxes are superconvergent at the roots set on element edges, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{\theta _1,y}}\vert \!\vert \!\vert _{L^2(S_h^{\mathrm{B,R}})} + \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{x,\theta _2}}\vert \!\vert \!\vert _{L^2(S_h^{\mathrm{R,B}})} \leqslant C\Vert U_0\Vert _{H^{\max (k+3,r+\flat )}(\varOmega )}\big (h^{k+2}+\tau ^r\big ). \end{aligned}$$

If the initial solution is defined as in Theorem 3 with \(q=0\), then the tangent derivatives of numerical fluxes are superconvergent at the extrema set on element edges, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\{\!\!\{\partial _ye^n\}\!\!\}^{\theta _1,y}}\vert \!\vert \!\vert _{L^2(S_h^{\mathrm{B,L}})} + \vert \!\vert \!\vert {\{\!\!\{\partial _x e^n\}\!\!\}^{x,\theta _2}}\vert \!\vert \!\vert _{L^2(S_h^{\mathrm{L,B}})} \leqslant C\Vert U_0\Vert _{H^{\max (k+3,r+1+\flat )}(\varOmega )}\big (h^{k+1}+\tau ^r\big ). \end{aligned}$$

- iii) If the initial solution is defined as in Theorem 2 with \(q=1\), then the solution is superconvergent at the roots set, namely,

$$\begin{aligned} \vert \!\vert \!\vert {e^n}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R,R})} \leqslant C\Vert U_0\Vert _{H^{\max (k+3,r+\flat )}(\varOmega )}\big (h^{k+2}+\tau ^r\big ). \end{aligned}$$

If the initial solution is defined as in Theorem 3 with \(q=0\), then the spatial derivatives are respectively superconvergent on the extrema lines, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\partial _x e^n}\vert \!\vert \!\vert _{L_y^2(S_h^{\mathrm{L},x})} + \vert \!\vert \!\vert {\partial _y e^n}\vert \!\vert \!\vert _{L_x^2(S_h^{\mathrm{L},y})} \leqslant C\Vert U_0\Vert _{H^{\max (k+3,r+1+\flat )}(\varOmega )}(h^{k+1}+\tau ^r). \end{aligned}$$

Here the above constants \(C>0\) are all independent of n, h, \(\tau \), u and U.

Proof

The proof line is almost the same as that in [30]. To shorten the length of this paper, we only give a snapshot to bound

under different measurements. For more details, please refer to [30].

The conclusions in item i) are easily proved by Theorem 2 with \(q=k\), the definition of the two-dimensional GGR projection, Lemma 3 and the first two conclusions in Lemma 4.

The conclusions in item ii) are proved as follows. The first term in (63) can be bounded by Theorem 2 with \(q=1\) and Theorem 3 with \(q=0\), respectively. The second term is bounded by the inequality (see Appendix A)

with \(w=U^n\). The third term is bounded by Lemma 3.

The conclusions in item iii) can be proved by Theorem 2 with \(q=1\), and Theorem 3 with \(q=0\), respectively, coupled with the inequality (see Appendix A)

with \(w=U^n\). The third term is bounded by Lemma 3.

Now we complete the proof of this theorem.

To end this section, we give a convenient implementation for the initial solution

Here \(q_\mathrm{nt}\) is an integer, satisfying \(q-1\leqslant q_\mathrm{nt}\leqslant k\) in Theorem 2 and \(q\leqslant q_\mathrm{nt}\leqslant k\) in Theorem 3, respectively.

Remark 5

The first term in (65) can be replaced by \(\mathbb {G}_{\theta _1,\theta _2}U_0\). However, this requires the numerical solution of a linear system with a block circulant tridiagonal matrix of order \(N_xN_y\).

5 Numerical Experiments

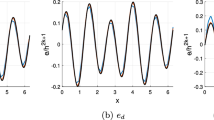

In this section, we present some numerical experiments to verify Theorems 2–4. Let \(\beta _1=\beta _2=1\) and \(T=1\). We carry out the RKDG(r, r, 2) method with \(\theta _1=\theta _2=0.75\) and \(r=3,4,5,6\). The non-uniform mesh is obtained by randomly perturbing the equidistant nodes by at most 5%. The initial solution is defined by (65) with the parameter \(q_\mathrm{nt}\) specified in each test. The time step is \(\tau =0.1h_{\min }\), where \(h_{\min }\) is the minimum of the lengths and widths of all elements.
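The mesh and time-step setup described above can be sketched as follows. This is a hedged illustration rather than the authors' code: it assumes the domain \([0,1]^2\), reads "at most 5%" as 5% of the uniform mesh size, keeps the two end nodes fixed, and slightly shrinks \(\tau \) so that an integer number of steps reaches T. All function names are hypothetical.

```python
import math
import random


def perturbed_nodes(n_cells: int, max_shift: float = 0.05, seed: int = 0) -> list:
    """Nodes of [0, 1]; interior nodes are shifted by at most max_shift * h."""
    rng = random.Random(seed)
    h = 1.0 / n_cells
    nodes = [i * h for i in range(n_cells + 1)]
    for i in range(1, n_cells):
        nodes[i] += rng.uniform(-max_shift, max_shift) * h
    nodes[-1] = 1.0  # keep the right end point exact
    return nodes


def min_cell_size(nodes: list) -> float:
    return min(b - a for a, b in zip(nodes, nodes[1:]))


def time_step(x_nodes: list, y_nodes: list, cfl: float = 0.1, final_time: float = 1.0):
    """Return (tau, n_steps) with tau <= cfl * h_min and tau * n_steps = final_time."""
    h_min = min(min_cell_size(x_nodes), min_cell_size(y_nodes))
    n_steps = math.ceil(final_time / (cfl * h_min))
    return final_time / n_steps, n_steps


if __name__ == "__main__":
    x, y = perturbed_nodes(20, seed=1), perturbed_nodes(20, seed=2)
    print(time_step(x, y))
```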

Example 1

Let \(U_0={\rm{e}}^{\sin (2\uppi (x+y))}\), which is infinitely differentiable.

Table 1 presents the supraconvergence results on the solution with \(q_\mathrm{nt}=k\) and \( k-1\), and the spatial derivatives with \(q_\mathrm{nt}=k\). We can see that the convergence orders exceed \(\min (5,r)\), as stated in Theorems 2 and 3.
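Convergence orders of this kind are usually computed from the errors on two successive meshes. The sketch below shows that computation together with the order \(\min (k+1+q,r)\) suggested by the estimate \(h^{k+1+q}+\tau ^r\) when \(\tau \) is proportional to h. The helper names are hypothetical, and the sample errors are synthetic values of the form \(h^5\), not data from the paper.

```python
from math import log


def observed_orders(mesh_sizes, errors):
    """Orders log(e_coarse / e_fine) / log(h_coarse / h_fine) for consecutive meshes."""
    return [
        log(errors[i - 1] / errors[i]) / log(mesh_sizes[i - 1] / mesh_sizes[i])
        for i in range(1, len(mesh_sizes))
    ]


def expected_order(k: int, r: int, q=None) -> int:
    """Order of h^(k+1+q) + tau^r when tau is proportional to h; q defaults to k."""
    q = k if q is None else q
    return min(k + 1 + q, r)


if __name__ == "__main__":
    h = [1.0 / 10, 1.0 / 20, 1.0 / 40]
    synthetic_errors = [hi**5 for hi in h]  # synthetic, for illustration only
    print(observed_orders(h, synthetic_errors))
    print([expected_order(2, r) for r in (3, 4, 5, 6)])
```

For \(k=2\) and \(q=k\) the second helper returns \(\min (5,r)\), which is the threshold quoted in the text.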

In the next six tables, we present some numerical experiments to verify Theorem 4. Besides the root-mean-square, we also consider the absolute maximum as the measurement. For example, \(\vert \!\vert \!\vert {\{\!\!\{z\}\!\!\}^{\theta _1,\theta _2}}\vert \!\vert \!\vert _{L^\infty (\varOmega _h)} =\max \{|\{\!\!\{z\}\!\!\}^{\theta _1,\theta _2}_{i+\frac{1}{2},j+\frac{1}{2}}| :i=1,\cdots , N_x, \text{ and } j=1,\cdots , N_y\}\), and so on.

- i) Table 2 shows the superconvergence results on the node average and the cell average, and Table 3 shows those on the edge average of the numerical flux. Both take \(q_\mathrm{nt}=k\) and \(q_\mathrm{nt}=k-1\) for the initial solution. The data indicate that the convergence orders exceed \(\min (5,r)\), as stated in item i).

- ii) Tables 4 and 5 show the superconvergence results on the numerical fluxes and their tangent derivatives at some special points. Take \(q_\mathrm{nt}=1\) and \(q_\mathrm{nt}=0\) for the initial solution. We can observe that the convergence orders exceed \(\min (4,r)\) for the numerical fluxes and \(\min (3,r)\) for the tangent derivatives. These data verify item ii).

- iii) Tables 6 and 7 give the superconvergence results on the solution and the spatial derivatives at some special points (or lines). One can see that the convergence orders exceed \(\min (4,r)\) for the solution and \(\min (3,r)\) for the spatial derivatives. These data support the conclusions in item iii).
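The two measurements used in these tables, the root-mean-square and the absolute maximum of discrete data, can be sketched as follows. The node average is written here in a tensor-product weighted form, which is an assumption, since the defining formula is given in an earlier section of the paper. The argument names (for example `z_mp` for the limit from the left in x and from above in y) are hypothetical.

```python
import math


def node_average(z_mm, z_pm, z_mp, z_pp, theta1, theta2):
    """Weighted average of the four one-sided limits at a node (assumed tensor-product form).

    The first subscript letter is the side in x, the second the side in y
    ('m' = minus side, 'p' = plus side).
    """
    return (theta1 * theta2 * z_mm
            + (1.0 - theta1) * theta2 * z_pm
            + theta1 * (1.0 - theta2) * z_mp
            + (1.0 - theta1) * (1.0 - theta2) * z_pp)


def rms(values):
    """Root-mean-square of discrete data, as in the RMS definition of Sect. 4.4."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def abs_max(values):
    """Absolute maximum of discrete data."""
    return max(abs(v) for v in values)


if __name__ == "__main__":
    samples = [node_average(0.1, -0.2, 0.05, 0.0, 0.75, 0.75), 0.3, -0.4]
    print(rms(samples), abs_max(samples))
```

For a continuous function the four one-sided limits coincide, and the weights sum to one, so the node average reduces to the point value.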

Example 2

Let \(\epsilon \) be a positive integer, and take \(U_0=[\sin (2\uppi (x+y))]^{\epsilon +2/3}\), which belongs to \(H^{\epsilon +1}(\varOmega )\) rather than \(H^{\epsilon +2}(\varOmega )\).
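When implementing this initial datum, note that a fractional power of a negative number is not real-valued in most languages. The sketch below uses one real-valued reading, \(s^{\epsilon }\,(\sqrt[3]{s})^2\) with \(s=\sin (2\uppi (x+y))\) and the real cube root. The source does not state which branch is intended, so this choice and the function names are assumptions.

```python
import math


def real_cbrt(s: float) -> float:
    """Real cube root, defined for negative arguments as well."""
    return math.copysign(abs(s) ** (1.0 / 3.0), s)


def initial_data_example2(x: float, y: float, eps: int) -> float:
    """Assumed real-valued reading of [sin(2*pi*(x+y))]^(eps + 2/3)."""
    s = math.sin(2.0 * math.pi * (x + y))
    return s**eps * real_cbrt(s) ** 2


if __name__ == "__main__":
    for point in (0.25, 0.5, 0.75):
        print(point, initial_data_example2(point, 0.0, 3))
```

A naive evaluation such as `s ** (eps + 2 / 3)` with a negative float `s` yields a complex number in Python, which is why the cube root is taken separately here.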

The data in Table 8 indicate the sharpness of the regularity assumption in theorems. We can observe the expected order from the right column in each group when the regularity assumption is satisfied. If the regularity becomes one order worse, the expected order is lost in the left column in each group.

6 Concluding Remarks

In this paper, we establish the superconvergence results for the RKDG method to solve the two-dimensional linear constant hyperbolic equation. We present a new technique of correction functions by virtue of the two-dimensional GGR projection, and reveal the interaction of the spatial derivatives and the correction technique along the same or different directions. The supraconvergence results for the solution and spatial derivatives, as well as many superconvergence results (see Theorem 4), are successfully extended from one dimension to two dimensions, and the Runge-Kutta time discretization does not destroy the superconvergence performance of the semi-discrete method. In future work, we will extend the above results to non-periodic boundary conditions and nonlinear conservation laws.

References

1. Cao, W., Huang, Q.: Superconvergence of local discontinuous Galerkin methods for partial differential equations with higher order derivatives. J. Sci. Comput. 72(2), 761–791 (2017)

2. Cao, W., Li, D., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods based on upwind-biased fluxes for 1D linear hyperbolic equations. ESAIM Math. Model. Numer. Anal. 51(2), 467–486 (2017)

3. Cao, W., Li, D., Zhang, Z.: Optimal superconvergence of energy conserving local discontinuous Galerkin methods for wave equations. Commun. Comput. Phys. 21(1), 211–236 (2017)

4. Cao, W., Shu, C.-W., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods for two-dimensional hyperbolic equations. SIAM J. Numer. Anal. 53(4), 1651–1671 (2015)

5. Cao, W., Shu, C.-W., Zhang, Z.: Superconvergence of discontinuous Galerkin methods for 1-D linear hyperbolic equations with degenerate variable coefficients. ESAIM Math. Model. Numer. Anal. 51(6), 2213–2235 (2017)

6. Cao, W., Zhang, Z.: Superconvergence of local discontinuous Galerkin methods for one-dimensional linear parabolic equations. Math. Comput. 85(297), 63–84 (2016)

7. Cao, W., Zhang, Z.: Some recent developments in superconvergence of discontinuous Galerkin methods for time-dependent partial differential equations. J. Sci. Comput. 77(3), 1402–1423 (2018)

8. Cao, W., Zhang, Z., Zou, Q.: Superconvergence of discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 52(5), 2555–2573 (2014)

9. Cheng, Y., Meng, X., Zhang, Q.: Application of generalized Gauss-Radau projections for the local discontinuous Galerkin method for linear convection-diffusion equations. Math. Comput. 86(305), 1233–1267 (2017)

10. Cheng, Y., Shu, C.-W.: Superconvergence and time evolution of discontinuous Galerkin finite element solutions. J. Comput. Phys. 227(22), 9612–9627 (2008)

11. Cheng, Y., Shu, C.-W.: Superconvergence of discontinuous Galerkin and local discontinuous Galerkin schemes for linear hyperbolic and convection-diffusion equations in one space dimension. SIAM J. Numer. Anal. 47(6), 4044–4072 (2010)

12. Ciarlet, P.G.: The Finite Element Method for Elliptic Problems. North-Holland Publishing Co., Amsterdam (1978)

13. Cockburn, B.: Discontinuous Galerkin methods for convection-dominated problems. In: Barth, T.J., Deconinck, H. (eds.) High-Order Methods for Computational Physics. Lecture Notes in Computer Science and Engineering, vol. 9, pp. 69–224. Springer, Berlin (1999)

14. Cockburn, B., Hou, S., Shu, C.-W.: The Runge-Kutta local projection discontinuous Galerkin finite element method for conservation laws. IV. The multidimensional case. Math. Comput. 54(190), 545–581 (1990)

15. Cockburn, B., Lin, S.Y., Shu, C.-W.: TVB Runge-Kutta local projection discontinuous Galerkin finite element method for conservation laws. III. One-dimensional systems. J. Comput. Phys. 84(1), 90–113 (1989)

16. Cockburn, B., Luskin, M., Shu, C.-W., Süli, E.: Enhanced accuracy by post-processing for finite element methods for hyperbolic equations. Math. Comput. 72(242), 577–606 (2003)

17. Cockburn, B., Shu, C.-W.: TVB Runge-Kutta local projection discontinuous Galerkin finite element method for conservation laws. II. General framework. Math. Comput. 52(186), 411–435 (1989)

18. Cockburn, B., Shu, C.-W.: The Runge-Kutta local projection \(P^1\)-discontinuous-Galerkin finite element method for scalar conservation laws. RAIRO Modél. Math. Anal. Numér. 25(3), 337–361 (1991)

19. Cockburn, B., Shu, C.-W.: The Runge-Kutta discontinuous Galerkin method for conservation laws. V. Multidimensional systems. J. Comput. Phys. 141(2), 199–224 (1998)

20. Cockburn, B., Shu, C.-W.: Runge-Kutta discontinuous Galerkin methods for convection-dominated problems. J. Sci. Comput. 16(3), 173–261 (2001)

21. Guo, L., Yang, Y.: Superconvergence of discontinuous Galerkin methods for linear hyperbolic equations with singular initial data. Int. J. Numer. Anal. Model. 14(3), 342–354 (2017)

22. King, J., Mirzaee, H., Ryan, J.K., Kirby, R.M.: Smoothness-increasing accuracy-conserving (SIAC) filtering for discontinuous Galerkin solutions: improved errors versus higher-order accuracy. J. Sci. Comput. 53(1), 129–149 (2012)

23. Li, J., Zhang, D., Meng, X., Wu, B.: Analysis of discontinuous Galerkin methods with upwind-biased fluxes for one dimensional linear hyperbolic equations with degenerate variable coefficients. J. Sci. Comput. 78(3), 1305–1328 (2019)

24. Meng, X., Shu, C.-W., Wu, B.: Optimal error estimates for discontinuous Galerkin methods based on upwind-biased fluxes for linear hyperbolic equations. Math. Comput. 85(299), 1225–1261 (2016)

25. Reed, W.H., Hill, T.R.: Triangular mesh methods for the neutron transport equation. Los Alamos Scientific Laboratory report LA-UR-73-479 (1973)

26. Ryan, J., Shu, C.-W., Atkins, H.: Extension of a postprocessing technique for the discontinuous Galerkin method for hyperbolic equations with application to an aeroacoustic problem. SIAM J. Sci. Comput. 26(3), 821–843 (2005)

27. Shu, C.-W.: Discontinuous Galerkin methods: general approach and stability. In: Numerical Solutions of Partial Differential Equations, Advanced Mathematics Training Courses, pp. 149–201. CRM Barcelona, Birkhäuser, Basel (2009)

28. Shu, C.-W., Osher, S.: Efficient implementation of essentially nonoscillatory shock-capturing schemes. J. Comput. Phys. 77(2), 439–471 (1988)

29. Wang, H., Zhang, Q., Shu, C.-W.: Implicit-explicit local discontinuous Galerkin methods with generalized alternating numerical fluxes for convection-diffusion problems. J. Sci. Comput. 81(3), 2080–2114 (2019)

30. Xu, Y., Meng, X., Shu, C.-W., Zhang, Q.: Superconvergence analysis of the Runge-Kutta discontinuous Galerkin methods for a linear hyperbolic equation. J. Sci. Comput. 84, 23 (2020)

31. Xu, Y., Shu, C.-W., Zhang, Q.: Error estimate of the fourth-order Runge-Kutta discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 58(5), 2885–2914 (2020)

32. Xu, Y., Zhang, Q., Shu, C.-W., Wang, H.: The \(L^2\)-norm stability analysis of Runge-Kutta discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 57(4), 1574–1601 (2019)

33. Yang, Y., Shu, C.-W.: Analysis of optimal superconvergence of discontinuous Galerkin method for linear hyperbolic equations. SIAM J. Numer. Anal. 50(6), 3110–3133 (2012)

34. Zhang, Q., Shu, C.-W.: Error estimates to smooth solutions of Runge-Kutta discontinuous Galerkin methods for scalar conservation laws. SIAM J. Numer. Anal. 42(2), 641–666 (2004)

35. Zhang, Q., Shu, C.-W.: Stability analysis and a priori error estimates of the third order explicit Runge-Kutta discontinuous Galerkin method for scalar conservation laws. SIAM J. Numer. Anal. 48(3), 1038–1063 (2010)

Acknowledgements

Yuan Xu is supported by the NSFC Grant 11671199. Qiang Zhang is supported by the NSFC Grant 11671199.

Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix A

In this section, we supplement some technical proofs.

1.1 Proofs of (37)

As an example, below we present the detailed proof with respect to \(\Vert [\![\mathbb {F}_{1,p}w]\!]\Vert _{L^2(\varGamma _{\!h}^2)}\). It depends on the one-dimensional correction technique.

Fix \(y\in [0,1]\) and assume \(v(x,y^{\pm })\in H^1(I_h)\). For \(0\leqslant p\leqslant k\), the correction function along the x-direction is defined in the form [30]

Consider the separation function \(v(x,y)=v_1(x)v_2(y)\), where either \(v_1(x)\) or \(v_2(y)\) is the piecewise polynomial of degree at most k. A direct application of (20) yields

As we have mentioned in Remark 2, we have

Note that \(\mathbb {F}_{1,p}^\mathrm{1d}(w^+_{x,j+1/2})=\mathbb {F}_{1,p}^\mathrm{1d}(w^-_{x,j+1/2})\), since \(w\in H^R(\varOmega )\subset H^2(\varOmega )\) is continuous. Then using (A2) and the triangle inequality, we get \(\Vert [\![\mathbb {F}_{1,p}w]\!]\Vert _{L^2(\varGamma _{\!h}^2)}\leqslant ({\text{I}})+({\text{II}})\), where

Each term in (I) is bounded in the form

where the inverse inequality (9b) and Lemma 3 with \(R=1\) are used. Each term in (II) is bounded in the form

where the result in [30, Lemma 4.3] is used for any horizontal edges. Finally using (21), we can get the boundedness of \(\Vert [\![\mathbb {F}_{1,p}w]\!]\Vert _{L^2(\varGamma _{\!h}^2)}\) as stated in (37).

Similarly, we can get the boundedness of the second term in (37), by showing

The detailed process is omitted here.

1.2 Proof of (59)

It is easy to prove the right inequality by taking \(v=\mathbb {H}_1 w\) in (39) and using Lemma 1. To prove the left inequality, we start from the formulation for any function \(w(x,y)\in V_h\), namely

To simplify the notation, the ranges in the summations are omitted. Due to the \(L^2\)-orthogonality of \(L^y_{j,\ell }(y)\), we can easily get that

where (55) has been used in the second conclusion. Then we can prove the left inequality using the inequality [29] for the single-variable function

1.3 Proof of (64)

Since \(w\in H^3(\varOmega )\) is continuous everywhere, we have

It has been proved in [30, Lemma 5.3] for every i that

which together with the standard trace inequality [12] yields (64a).

The proof of (64b) is almost the same and is omitted here. In what follows we prove (64c).

The proof depends on the local projection related to (62), the parameter-dependent Radau polynomials. For any given function \(w\in L^2(\varOmega _h)\), the projection \(\mathbb {C}w\) is defined element by element, namely,

which belongs to \(\mathcal {P}^{k+1}(K_{ij})\cap \mathcal {Q}^k(K_{ij})\). Here

is the \(L^2\) projection of w onto \(\mathcal {P}^{k+1}(K_{ij})\). Note that the definition of this local projection is slightly different from that in [2, 30], and we do not need to discuss whether \(\vartheta ^x_i\) and/or \(\vartheta ^y_j\) are equal to 0.

Let \(\mathbb {C}^\perp w=w-\mathbb {C}w\) be the projection error. By standard scaling argument, we have the approximation property

Furthermore, we can easily obtain the following lemmas.

Lemma A1

There exists a constant \(C>0\) independent of h and w, such that

Proof

As the applications of the Bramble-Hilbert lemma and the scaling argument, it is sufficient to prove for any \(w\in \mathcal {P}^{k+1}(K_{ij})\) that

which is implied by the definition of projection \(\mathbb {C}\), say (A6).

Lemma A2

There exists a constant \(C>0\) independent of h and w, such that

Proof

By the definitions of \(\mathbb {G}_{\theta _1,\theta _2}\) and \(\mathbb {C}\), we have

where \(\mathbb {R}w\) is the \(L^2\) projection of w onto \(\mathcal {P}^k(\varOmega _h)\), and \(\mathbb {R}^\perp w=w-\mathbb {R}w\) is the projection error. Here \(w_1\) and \(w_2\) are defined element-by-element by

Next we will estimate each term on the right-hand side of (A9).

Using the approximation properties of projections (16) and (A8), as well as the approximation property of projection \(\mathbb {R}\) (refer to (21)), we get

After some technical and direct manipulations, almost the same as that in [30], we also have

where definition (61), with respect to \(\{\vartheta ^x_{i}\}_{i=1}^{N_x}\) and \(\{\vartheta ^y_{j}\}_{j=1}^{N_y}\), plays an important role. For more details, please see [30].

Finally, summing up the above conclusions yields this lemma.

Using the inverse inequality \(\Vert v\Vert _{L^\infty (\varOmega _h)}\leqslant C h^{-1}\Vert v\Vert _{L^2(\varOmega _h)}\) for \(v\in V_h\), Lemma A2 implies

Hence it follows from Lemma A1 that

The others can be estimated similarly. Now we complete the proof of (64).

Cite this article

Xu, Y., Zhang, Q. Superconvergence Analysis of the Runge-Kutta Discontinuous Galerkin Method with Upwind-Biased Numerical Flux for Two-Dimensional Linear Hyperbolic Equation. Commun. Appl. Math. Comput. 4, 319–352 (2022). https://doi.org/10.1007/s42967-020-00116-z

DOI: https://doi.org/10.1007/s42967-020-00116-z

Keywords

- Runge-Kutta discontinuous Galerkin method

- Upwind-biased flux

- Superconvergence analysis

- Hyperbolic equation

- Two dimensions