Abstract

In this paper, we shall establish the superconvergence property of the Runge–Kutta discontinuous Galerkin (RKDG) method for solving a linear constant-coefficient hyperbolic equation. The RKDG method is made of the discontinuous Galerkin (DG) scheme with upwind-biased numerical fluxes coupled with the explicit Runge–Kutta algorithm of arbitrary orders and stages. Superconvergence results for the numerical flux, cell averages as well as the solution and derivative at some special points are shown, which are based on a systematical study of the \(\hbox {L}^2\)-norm stability for the RKDG method and the incomplete correction techniques for the well-defined reference functions at each time stage. The result demonstrates that the superconvergence property of the semi-discrete DG method is preserved, and the optimal order in time is provided under the smoothness assumption that is independent of the number of stages. As a byproduct of the above superconvergence study, the expected order of the post-processed solution is obtained when a special initial solution is used. Some numerical experiments are also given.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we shall study the superconvergence property of the explicit Runge–Kutta discontinuous Galerkin (RKDG) method with the upwind-biased numerical flux for solving the linear hyperbolic equation

equipped with the initial solution \(U(x,0)=U_0(x)\) and the periodic boundary condition. Here \(T>0\) is the final time. For simplicity, we assume in this paper that \(\beta \) is a positive constant. We remark that there is no essential difficulty to extend the above context to multi-dimensional problems and to variable-coefficient linear problems.

The discontinuous Galerkin (DG) method was first introduced by Reed and Hill [31], and then developed by Cockburn et al. [15, 16, 18,19,20] in the framework of explicit RKDG methods for solving time-dependent nonlinear hyperbolic conservation laws. Due to its flexibility in implementation and good numerical performance, especially on high order accuracy for smooth solutions and high resolution for discontinuities, this method has attracted increased attention in recent years. For more details, one can refer to [13, 21] and the references therein. However, in contrast to its wide applications, theoretical results are not plenty. Even when restricted to linear hyperbolic equations, many theoretical works have mainly been carried out for the semi-discrete DG method, for example, the stability and optimal error estimate [14, 28, 33], the superconvergence analysis [2,3,4,5, 7, 9, 10, 25, 39], and the post-processing [17, 26, 32]. In this paper, we continue the work in [37, 38] and investigate superconvergence properties of RKDG methods with arbitrary orders and stages, when solving the model problem (1.1). Superconvergence orders in space, together with the optimal order in time, will be shown for the numerical flux, the cell average, as well as the solution and derivative at some discrete points.

To achieve the above goals, we have to address two key points. One is the \(\hbox {L}^2\)-norm stability analysis for the fully-discrete RKDG method. It is well known that this cannot be directly obtained under the strong stability preserving (SSP) framework [24], since the DG method combined with forward Euler time-marching is not stable under the standard CFL condition for piecewise linear or higher degree polynomials. Hence we need to find another way to recover the stability performance in theory. In [40, 41], Zhang and Shu have derived the optimal error estimate for the second order and third order RKDG methods when solving the sufficiently smooth solution of nonlinear conservation laws. The \(\hbox {L}^2\)-norm stability is implicitly presented in [40, 41] for the linear hyperbolic equation. Recently, Xu et al. [38] have proposed an analysis framework of \(\hbox {L}^2\)-norm stability for linear hyperbolic equations, and have made a classification on the different stability performance for many RKDG methods of time order up to twelve. Specially, it is proved theoretically that the four stage fourth order RKDG method is actually stable under the standard CFL condition. The main technique is to rewrite the RKDG scheme into an equivalent representation by using the temporal differences of the stage solutions, and then carry out a matrix transferring process with the aid of computer, in order to automatically obtain a delicate energy equation that can essentially reveal the stability mechanism of the higher order RKDG method. Similar work has also been given by Sun and Shu [35] for the Runge–Kutta algorithms to solve ordinary differential equations with semi-negative operators. After that, the authors [37] have found the relationship between the multiple-steps and the single-step time-marching, which allows us to avoid a detailed computer-aided calculation on the evolution vector. Hence the matrix transferring process for multiple-step time-marching is not necessary to be carried out.

Another key point is how to define the reference functions [37, 40, 41] and the technique of correction functions [2, 7] at each time stage. The purpose of this paper is to verify in theory that the time discretization does not destroy the superconvergence performance. To this purpose, we have to overcome two technical difficulties. One is the definition of reference functions at every time stage, under the almost same regularity assumption as that in the semi-discrete method. This issue has been addressed in [37], where the optimal error estimate is obtained for the fourth order RKDG method and the additional regularity assumption solely depends on the time order, independent of the number of stages. The basic idea is the cutting treatment on the original reference functions proposed in [40, 41]. The other is the definition of the correction function without too much regularity requirement on the exact solution. To that end, we propose in this paper an incomplete correction technique for the above reference functions.

It is worthy mentioning that the technique of correction functions is important in the development of superconvergence analysis for DG methods. Below we recall some important works related to this issue, mainly restricted to the semi-discrete DG method for one dimensional problems. Cheng and Shu [10] proved the \((k+3/2)\)th order supraconvergence between the numerical solution and a particular projection of the exact solution for the linear hyperbolic equation, and then Meng et al. [29] extended this result to the nonlinear conservation law if the flow speed keeps its sign. Here and below k is the degree of piecewise polynomials. The word supraconvergence is used to mean the supercloseness of the numerical solution and a special function in the finite element space, in order to distinguish with the word superconvergence. For the linear hyperbolic equation, Yang and Shu [39] improved the work of [10] and proved the (\(k+2\))th order superconvergence at the downwind-biased Radau points with a suitable initial discretization, when the purely upwind numerical flux is used. As a milestone in this issue, Cao et al. [7] firstly adopted the technique of correction functions for the linear hyperbolic equation and proved the (\(2k+1\))th order supraconvergence of the numerical solution towards a particular function in the finite element space. As an application of this technique, the superconvergence results with respect to the cell average, the numerical flux, the solution at the right Radau points, and the derivative at the left Radau points were established in a uniform framework. After that, this correction technique has been implemented to many problems; see [2,3,4,5,6] for an incomplete list of references.

In this paper we shall investigate the superconvergence property for the fully discrete RKDG method. Although the technique of correction functions is inherited from [2, 7] for the semi-discrete DG method, some improvements are achieved on several issues. Firstly, we make a minor modification to the definition of correction functions, such that the superconvergence property can be correctly reduced for the non-uniform mesh and upwind-biased parameter. Secondly, the smoothness requirement on the exact solution is weakened, with the help of the Bramble-Hilbert lemma, instead of the Legendre expansion. In this paper, we only require the initial solution and its derivatives up to the \(\min (2k+2,r+1)\)-th order belong to \(L^2(I)\), rather than \(L^{\infty }(I)\). Here r is the temporal order of the RKDG method. Thirdly, some tedious treatments are presented to preserve the optimal order in time under the mild regularity assumption that is independent of the number of stages of the RKDG methods. Finally, by making a full use of the superconvergence results and the properties of divided differences, we are able to avoid the duality arguments [17] and present a new proof of the accuracy-enhancement of a post-processed solution when a special initial solution is taken.

The rest of the paper is organized as follows. In Sect. 2, we give the definition of the RKDG scheme and its equivalent representation by the temporal difference of the stage solutions. In Sect. 3, we recall the matrix transferring process and quickly set up the propositions of the termination index and the contribution index, which lead to different stability results. Section 4 is the main part of paper, in which we establish the supraconvergence results for the solution and its derivative, both \((2k+1)\)th order in space and rth order in time, if the initial solution is smooth enough. In Sect. 5, we present the superconvergence results in the discrete \(\hbox {L}^2\)-norm, including the numerical flux, the cell average, as well as the solution and the derivative, respectively, at the roots and the extrema of some Radau-type polynomials. As a byproduct, in Sect. 6 we give a new proof for the superconvergence result for post-processed solutions. Some numerical experiments are given in Sect. 7, and the concluding remarks are given in Sect. 8. Finally, the supplement of three technical issues are given in the appendix.

There are many notations in this paper. To help the readers better understand this paper, we list here some main important notations with short descriptions.

s, r, k | The RKDG(s, r, k) method |

m | Number of multiple-steps |

\(\varvec{\alpha }(m)\) | Evolution vector |

\({\mathbb {D}}_\ell (m)u^n\) | Temporal difference of stage solutions |

\(\zeta (m), \rho (m)\) | The termination index, and the contribution index |

\(m_{\star }\) | The minimum of integers m such that \(\zeta (m)=\rho (m)\) |

\(\psi _r\) | Quantity to quickly judge stability performance; see (3.17) |

q | Total number of correction manipulations in time-marching |

\(q_\mathrm{nt}\) | Total number of correction manipulations for the initial solution |

\(\sigma \) | Maximal order of derivative in reference functions |

\(U^{(\ell )}_{[\sigma ]}(x,t), \varrho ^{(\ell )}_{[\sigma ]}(x,t)\) | Reference function at the \(\ell \)th stage, and the corresponding truncation error in time |

\(U^{n,\ell }\) | Reference function at each time stage, defined as \(U^{(\ell )}_{[r]}(x,t^n)\) |

\(W^{n,\ell }\) | Truncated reference function at each time stage, defined as \(U^{(\ell )}_{[\min (q,r)]}(x,t^n)\) |

\(z^{n,\ell }, z^{n,\ell }_{\text {c}},z^{n,\ell }_{\text {d}}\) | Arbitrary series \(z^{n,\ell }\) and their combinations; see (4.8) |

\(\chi ^{n,\ell }\) | One stage function in the finite element space |

\(e^{n,\ell }, \xi ^{n,\ell }, \eta ^{n,\ell }\) | Stage error and its decomposition \(e^{n,\ell }=\xi ^{n,\ell }-\eta ^{n,\ell }\); see (4.10) |

\({\mathcal {Z}}^{n,\ell }(v)\) | Functional to determine the residual of stage error; see (4.12) |

\({\mathbb {P}}_h,{\mathbb {P}}_h^\perp \) | \(\hbox {L}^2\) projection and the projection error |

\({\mathbb {G}}_h,{\mathbb {G}}_h^\perp \) | GGR projection and the projection error |

\({\mathcal {F}}_p\) | The pth correction operator; see (4.19) |

\({\mathbb {D}}_h^{-1}\) | The antiderivative in each element; see (4.20) |

\(R_{j,k+1}\) | The parameter-dependent Radau polynomial of degree \(k+1\) on \(I_j\) |

\(r_{ij}, n_j^\mathrm{R}\) | Roots of \(R_{j,k+1}\), and the total number of roots on \(I_j\) |

\(l_{ij},n_j^\mathrm{L}\) | Eextrema of \(R_{j,k+1}\), and the total number of extrema on \(I_j\) |

\({\mathbb {C}}_h,{\mathbb {C}}_h^\perp \) | Parameter-dependent local projection and the projection error |

\(\vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^2(S_h^\mathrm{B})}, \vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{B})}\) | Discrete \(\hbox {L}^2\) (resp. \(\hbox {L}^\infty \)) norm at element boundary points |

\(\vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^2(S_h^\mathrm{E})}, \vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{E})}\) | Discrete \(\hbox {L}^2\) (resp. \(\hbox {L}^\infty \)) norm at element midpoints |

\(\vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R})}, \vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{R})}\) | Discrete \(\hbox {L}^2\) (resp. \(\hbox {L}^\infty \)) norm at roots of every \(R_{j,k+1}\) |

\(\vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^2(S_h^\mathrm{L})}, \vert \!\vert \!\vert {\cdot }\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{L})}\) | Discrete \(\hbox {L}^2\) (resp. \(\hbox {L}^\infty \)) norm at extrema of every \(R_{j,k+1}\) |

\(\partial _h^{\ell }\) | The \(\ell \)th order divided difference |

\(K_h^{2k+1,k+1}\) | Kernel function for post-processing |

2 The RKDG Method

In this section, we first present for the model problem (1.1) the RKDG method in the Shu-Osher form [34], and then write it into the equivalent representation [38] by the help of the temporal difference of the stage solutions.

2.1 Discontinuous Finite Element Space

Let \({\mathcal {T}}_h=\{I_j=(x_{j-1/2},x_{j+1/2})\}_{1\le j\le J}\) be a partition of the spatial domain \(I=(0,1)\), where \(J\in {\mathbb {Z}}^{+}=\{1,2,3,\ldots \}\) is an integer. The length of the element \(I_j\) is \(h_j=x_{j+1/2}-x_{j-1/2}\) for \(j=1,2,\ldots , J\). Denote \(h=h_{\max }=\max _{1\le j\le J} h_j\) and \(h_{\min }=\min _{1\le j\le J} h_j\). In this paper we assume that the partition is quasi-uniform, namely, the ratio \(h_{\max }/h_{\min }\) is upper bounded by a fixed constant as h goes to zero.

Associated with the partition \({\mathcal {T}}_h\), the discontinuous finite element space is defined as

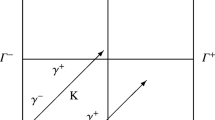

where \(P^k(I_j)\) is the space of polynomials in \(I_j\) of degree at most \(k\ge 1\). Note that the functions in \(V_h\) are allowed to have discontinuities at element endpoints. Dropping the subscript \(j+1/2\) for convenience, the jump and the weighted average are respectively denoted by

where \(v^{-}\) and \(v^{+}\), respectively, are the left- and right-limit, and \(\theta \) is a given constant.

Some inverse inequalities are used in this paper. Namely, for any \(v\in V_h\) there hold

where the inverse constant \(\mu >0\) is independent of h and v. Here \(\left\| {\cdot }\right\| _{L^2(I)}\) and \(\left\| {\cdot }\right\| _{L^{\infty }(I)}\) respectively are the usual norms in \(L^2(I)\) and \(L^{\infty }(I)\), and

with \(\varGamma _{\!h}\) being the set of all element endpoints. For more discussions, one can refer to [12, 30].

2.2 Semi-discrete DG Scheme

The semi-discrete DG method for (1.1) is defined as follows. Find the map \(u(x,t):[0,T]\rightarrow V_h\), such that

holds for any time \(t\in (0,T]\), and a suitable initial solution is enforced at \(t=0\). Here

is the DG spatial discretization with respect to the periodic boundary condition, and \((\cdot ,\cdot )\) is the inner product in \(L^2(I)\). In this paper we demand \(\theta >1/2\) such that \(\beta \{\!\!\{u\}\!\!\}^{(\theta )}_{j+1/2}\) forms an upwind-biased numerical flux, since \(\beta >0\). Actually, when \(\theta =1\) it yields the purely upwind flux.

The following important properties will be repeatedly used later in our analysis. The proofs are straightforward and hence are omitted. Please refer to [38] for more details.

Lemma 2.1

For the DG spatial discretization \({\mathcal {H}}(\cdot ,\cdot )\), we have the following conclusions.

-

1.

There holds the approximate skew-symmetric property, namely

$$\begin{aligned} {\mathcal {H}}(w,v)+{\mathcal {H}}(v,w) = -2\beta \Big (\theta -\frac{1}{2}\Big ) \sum _{1\le j\le J} [\![w]\!]_{j+\frac{1}{2}}[\![v]\!]_{j+\frac{1}{2}}, \quad \forall \, w,v\in V_h, \end{aligned}$$which implies \( {\mathcal {H}}(w,w) = -\beta \Big (\theta -\frac{1}{2}\Big )\left\| {[\![w]\!]}\right\| _{L^2(\varGamma _{\!h})}^2\), for any \(w\in V_h\);

-

2.

There holds the nonpositive property, namely

$$\begin{aligned} \sum _{i,j\in {\mathcal {G}}}g_{ij} {\mathcal {H}}(w_i,w_j) \le 0, \quad w_i\in V_h, \end{aligned}$$where \(\{g_{ij}\}_{i,j,\in {\mathcal {G}}}\) forms a symmetric positive semidefinite matrix, and \({\mathcal {G}}\) is an arbitrary index set for the row number and column number.

-

3.

There holds the weak boundedness in \(V_h\times V_h\), namely

$$\begin{aligned} |{\mathcal {H}}(w,v)| \le C |\beta | h^{-1}\left\| {w}\right\| _{L^2(I)}\left\| {v}\right\| _{L^2(I)}, \quad \forall \,w,v\in V_h, \end{aligned}$$where the bounding constant \(C>0\) solely depends on \(\theta \) and \(\mu \).

2.3 Fully-Discrete RKDG Method

The explicit Runge–Kutta algorithm is widely used to solve (2.4); see, e.g., [22,23,24] and the references therein. In this paper this kind of fully-discrete method is named as the RKDG(s, r, k) method, where s and r are the stage number and time order of the Runge–Kutta algorithm, and k is the degree of piecewise polynomials in \(V_h\).

For any \(M\in {\mathbb {Z}}^{+}\), let \(\{t^n=n\tau \}_{0\le n\le M}\) be a uniform partition of the time interval [0, T], where \(\tau \) is the time step. In this paper the time step is taken to be a constant just for simplicity. For the RKDG(s, r, k) method, each time-marching from \(t^n\) to \(t^{n+1}\) is generally given in the Shu-Osher form [34]:

-

Let \(u^{n,0}=u^n\).

-

For \(\ell =0,1,\ldots ,s-1\), successively find the stage solution \(u^{n,\ell +1}\) through the following variational formula

$$\begin{aligned} (u^{n,\ell +1},v) = \sum _{0\le \kappa \le \ell } \Big [ c_{\ell \kappa }(u^{n,\kappa },v) +\tau d_{\ell \kappa }{\mathcal {H}}(u^{n,\kappa },v) \Big ], \quad \forall v\in V_h, \end{aligned}$$(2.6)where the parameters \(c_{\ell \kappa }\) and \(d_{\ell \kappa }\) are given constants, determined by the used Runge–Kutta algorithm; noting that \(d_{\ell \ell }\ne 0\) and \(\sum _{0\le \kappa \le \ell }c_{\ell \kappa }=1\).

-

Let \(u^{n+1}=u^{n,s}\).

The initial solution \(u^0\in V_h\) is given as the suitable approximation of \(U_0\). The detailed definition will be given for different purposes; see Sects. 4 and 5 below.

2.4 Equivalent Representation of the RKDG Method

Following [37, 38, 41], we set up an equivalent representation of the RKDG method by using the temporal differences of the stage solutions.

Sometimes we need to investigate the numerical performance for multiple-step time-marching of RKDG methods. To do that, we introduce for any integers \(n\ge 0\) and \(\kappa \ge 0\) the notations

Let \(m\in {\mathbb {Z}}^{+}\). The m steps time-marching of an RKDG(s, r, k) method with time step \(\tau \) can be looked upon as a single step time-marching of the RKDG(ms, r, k) method with time step \(m\tau \), in which each stage marching can be written in the form

where \(\ell =0,1,\ldots , ms-1\). Here the parameters \(c_{\ell \kappa }(m)\) and \(d_{\ell \kappa }(m)\) are determined by the given parameters \(c_{\ell \kappa }(1)=c_{\ell \kappa }\) and \(d_{\ell \kappa }(1)=d_{\ell \kappa }\).

In this paper, we always denote \({\mathbb {D}}_0(m) u^n=u^n\) for simplicity of notation. For \(1\le \ell \le ms\), the temporal difference of the stage solution

is recursively defined by the variational form

It is easy to see that the combination coefficients satisfy \(\sigma _{\ell \ell }(m)\ne 0\) and \(\sum _{0\le \kappa \le \ell }\sigma _{\ell \kappa }(m)=0\). This process can be implemented by some suitable linear combination of the variational formulas at different time stages.

In the meanwhile, the above definitions lead to the evolution equation

which is an equivalent representation of the RKDG method. Here \(\alpha _0(m), \alpha _1(m),\ldots , \alpha _{ms}(m)\) are constants, and \(\alpha _0(m)>0\) is used only for scaling such that all components are integers. It is only needed for easier computer implementation. In the theoretical analysis, we often take \(\alpha _0(m)=1\). For the convenience of notations, we would like to express (2.11) by the so-called evolution vector

In addition, we define \(\alpha _i(m)=0\) for \(i>ms\).

In the next lemma, we would like to point out that it is not necessary to carry out a tedious manipulations to write down the detailed formulation of (2.8) and/or (2.11) for multiple-step time-marching of RKDG methods. To show that, associated with \({\varvec{\alpha }}(m)\) we define the generating polynomial

and denote the offsets by \(\tilde{\alpha }_i(m)=\alpha _i(m)/\alpha _0(m)-1/i!\) for \(i\ge 0\).

Lemma 2.2

Every evolution vector \({\varvec{\alpha }}(m)\) can be obtained from \({\varvec{\alpha }}(1)\), due to the following identity

Furthermore, we have \(\tilde{\alpha }_i(m)=0\) for \(0\le i\le r\), and \(\tilde{\alpha }_{r+1}(m)=\tilde{\alpha }_{r+1}(1)/m^r\).

This is a trivial extension of the results in [37], which is given for the fourth order RKDG method. The proof is almost the same, so it is omitted here.

3 Stability Analysis

In this section we investigate the \(\hbox {L}^2\)-norm stability of the RKDG methods, following [37, 38]. The main technique is to carry out a matrix transferring process to set up a sufficiently good energy equation, showing explicitly by the termination index, the contribution index, and the sign of the central objective.

3.1 Matrix Transferring Process

Squaring and integrating on both sides of the evolution equation (2.11), we can get the initial energy equation. However, the stability mechanism of the DG spatial discretization (see Lemma 2.1) is not well reflected.

To fully explore the positive contribution of the spatial discretization, we would like to execute the matrix transferring process through a series of energy equations

where \(\ell \ge 1\) is the sequence number of the matrix transferring, and

respectively represent the time discretization information and the spatial discretization information. They are equivalently expressed by two symmetric matrices

The matrix transferring process is defined as follows. Let \(\ell \ge 1\) be the sequence number, and assume both \({\mathbb {A}}^{(\ell -1)}(m)\) and \({\mathbb {B}}^{(\ell -1)}(m)\) have been known. If the central objective \(a_{\ell -1,\ell -1}^{(\ell -1)}(m)\ne 0\), we define the termination index

and stop the process. Otherwise, we make the following manipulation such that the time discretization information, corresponding to the \((\ell -1)\)th row and column of \({\mathbb {A}}^{(\ell -1)}(m)\), is completely transformed into the space discretization information. Owing to the symmetry property of matrices, below we only present the manipulations in the lower triangular matrices. Assuming \(i\ge j\), the formulations are given in the form

where the relationship (2.10) is used. See [38] for more discussions.

Recalling the initial energy equation, we define two matrices \({\mathbb {A}}^{(0)}(m)\) and \({\mathbb {B}}^{(0)}(m)\) with the entries

in addition. Since \(a_{00}^{(0)}(m)\equiv 0\), the transform is done at least once. This implies \(\zeta (m)\ge 1\).

A careful observation on (3.4a) reveals that the kernel information in TM(\(\zeta (m)\)) can be explicitly expressed by the evolution vector \({\varvec{\alpha }}(m)\). The related formulations are collected in the following lemma; see [37, Propositions 3.1 and 3.2] for more details.

Lemma 3.1

For \(0\le j\le \zeta (m)\), we have

Also we have \(a_{00}^{(0)}(m)=0\) and

Furthermore, we have \(a_{ij}^{(\zeta (m))}(m)=0\) provided \(\min (i,j)<\zeta (m)\).

The results given in [37] can be extended from the fourth order RKDG methods into arbitrary order RKDG methods, along the same line.

Proposition 3.1

For \(m\in {\mathbb {Z}}^{+}\), we have \(\zeta (m)\equiv \zeta \ge \lfloor {r/2}\rfloor +1\) and \(a_{\zeta \zeta }^{(\zeta )}(m)a_{\zeta \zeta }^{(\zeta )}(1)>0\). Here \(\lfloor {r/2}\rfloor \) denotes the maximal integer not greater than r/2.

Proof

This can be proved by the generating polynomial. If \(p^{(m)}(z)p^{(m)}(-z)=\sum _{0\le i\le ms} g_{2i}(m) z^{2i}\), then it follows from (3.7) that

For \(1\le j\le \lfloor {r/2}\rfloor \), using (3.7) again we have

since Lemma 2.2 clearly states \(\alpha _{j\pm \kappa }(m)=1/(j\pm \kappa )!\) for \(0\le j\pm \kappa \le r\). Owing to the definition of the termination index \(\zeta (m)\), the central objective \(a_{\zeta (m)\zeta (m)}^{(\zeta (m))}(m)\) is the first nonzero diagonal entry in the matrix transferring process. Hence \(\zeta (m)\ge \lfloor {r/2}\rfloor +1\), and

On the other hand, using Lemma 2.2 and (3.9) with \(m=1\), we have

Comparing with the order and coefficient of (3.9) and (3.10), we have \(\zeta (m)=\zeta (1)=\zeta \) and

That is to say, \(a_{\zeta \zeta }^{(\zeta )}(m)\) and \(a_{\zeta \zeta }^{(\zeta )}(1)\) have the same signs. This completes the proof of this proposition. \(\square \)

By (3.4b) and (3.6) in Lemma 3.1, each entry in the \(\zeta (m)\)th order leading principal symmetric submatrix of \({\mathbb {B}}^{(\zeta (m))}(m)\) can be explicitly expressed by

where \(0\le j\le i\le \zeta (m)-1\).

The contribution index of the spatial DG discretization is defined by

where

This index implies that the \(\rho (m)\)th order leading principal submatrix of \({\mathbb {B}}^{(\zeta (m))}(m)\) is symmetric positive definite. If \(BI(m)=\emptyset \), then \(\rho (m)=\zeta (m)\).

Proposition 3.2

There holds \(\rho (m)\ge \lfloor {(r+1)/2}\rfloor \).

Proof

Let \(0\le i, j\le \lfloor {(r-1)/2}\rfloor \). Since \(i+j+1\le r\), each element in the right-hand side of (3.12) is clearly determined by Lemma 2.2. By the same manipulation as that in [37], we can obtain

Please refer to [37] for more details. The \(\lfloor {(r+1)/2}\rfloor \)th order leading principal submatrix of \({\mathbb {B}}^{(\zeta (m))}(m)\) is symmetric positive definite, since it is congruent to a Hilbert matrix with a diagonal transform matrix made up of \(\sqrt{2}\alpha _0(m)/i!\). This completes the proof of this proposition. \(\square \)

Proposition 3.3

There exists an integer \(m_{\star }\ge 1\) such that \(\rho (m)=\zeta (m)\) for \(m\ge m_{\star }\).

Proof

Along the same line as that for (3.14) we have

for \(0\le j\le i\le \zeta (m)-1\), where

We can announce that \(\widetilde{b}_{ij}^{(\zeta (m))}(m)\) goes to zero as m increases, because the offset satisfies

where the bounding constant \(C>0\) is independent of m. The detailed proof of (3.16) will be given in the appendix.

As a result, the \(\zeta (m)\)th order leading principal submatrix of \({\mathbb {B}}^{(\zeta (m))}(m)\) can be looked upon as a perturbation of a symmetric positive definite matrix, which is also congruent to a \(\zeta (m)\)-th order Hilbert matrix. Hence this lemma is proved. \(\square \)

For many popular RKDG methods, we do not need to make the above discussion in order to find out the above important information for each m. The stability performance can be quickly judged by the following quantity

when it is not equal to zero.

Remark 3.1

Note that \(\tilde{\alpha }_{r+1}(1)\ne 0\) for the rth order RKDG methods. Hence \(\psi _r\ne 0\) always holds for odd r. However, it may happen that \(\psi _r=0\) for even r. If so, the matrix transferring process for \(m=1\) is needed. In the following we will not pay more attention to this case.

Proposition 3.4

Assume \(\psi _r\ne 0\). There holds \(\zeta =\lfloor {(r+2)/2}\rfloor \) and \((-1)^{\zeta }\psi _r\cdot a_{\zeta \zeta }^{(\zeta )}(1)>0\).

Proof

By means of \(e^z=\sum _{i\ge 0}\frac{1}{i!}z^i\), it follows from Lemma 2.2 that

which also implies

Comparing this identity with (3.9), we are able to prove this proposition. \(\square \)

Proposition 3.5

Let \(m_{\star }\in {\mathbb {Z}}^{+}\) be the integer stated in Proposition 3.3, and assume \(\psi _r\ne 0\). Then for odd r we have \(m_{\star }=1\), and for even r we have

Proof

It follows from Propositions 3.2 and 3.4 that

For odd r, this conclusion implies \(m_{\star }=1\) since \(\lfloor {(r+1)/2}\rfloor =\lfloor {(r+2)/2}\rfloor \). For even r, this also implies that \(\rho (m)\ge \zeta -1\).

Hence, to achieve \(\rho (m)=\zeta =r/2+1\) for even r, we only need to ensure \(\det \{b_{ij}^{(\zeta )}(m)\}_{0\le i,j\le \zeta -1}>0\). Note that \(i+j+1>r\) happens only when \(i=j=\zeta -1\). Therefore, in (3.15) there holds \(\tilde{b}_{ij}^{(\zeta )}(m)=0\) almost everywhere, except

Note that Lemma 2.2 is used at the last step. By the formulation for the determinants of Hilbert matrices [11], we have

For more details to get this equality, please refer to [37]. We have now completed the proof of this proposition. \(\square \)

Remark 3.2

There holds \(\tilde{\alpha }_{r+1}(1)=-1/(r+1)!\) for the RKDG(r, r, k) method. Proposition 3.5 shows \(m_{\star }=1\) for \(r\not \equiv 0\pmod 4\), and \(m_{\star }=2\) for \(r\equiv 0\pmod 4\).

3.2 Stability Conclusions

In this subsection we would like to point out three kinds of stability performance for those RKDG methods satisfying \(\psi _r\ne 0\).

Following the line of analysis in [37, 38], we can have the following extending conclusions. Propositions 3.3 and 3.1 imply \(\rho (m)=\zeta (m)=\zeta \) for \(m\ge m_{\star }\). Using the relationship (2.10) among the temporal differences of stage solutions, as well as the weak boundedness of the DG discretization (see Lemma 2.1), we can conclude for any \(0\le i\le j \le ms\) that

where the bounding constant \(C(m)>0\) is independent of \(n, h,\tau \) and u. This, together with the Cauchy-Schwarz inequality, yields

where \(\lambda =|\beta |\tau h^{-1}\) is the CFL number. Here and below \(Q_m(\lambda )\) represents a generic polynomial satisfying \(Q_m(0)=0\).

Because the \(\zeta \)th order leading principal submatrix of \({\mathbb {B}}^{(\zeta )}(m)\) is symmetric positive definite, its smallest eigenvalue, denoted by \(\varepsilon (m)\), is positive. By the previous two conclusions in Lemma 2.1, and the second inverse inequality in (2.3), we get

where the Cauchy-Schwarz inequality and Young’s inequality are also used. Here the first term on the right-hand side of \(\text {SP}(\zeta )\) explicitly shows the stability mechanism of the DG discretization.

Summing up the above conclusions, we have

for any \(m\ge m_{\star }\). For more details, please refer to [37, 38].

Theorem 3.1

If \((-1)^{\lfloor {r/2}\rfloor +1}\psi _r<0\), the RKDG(s, r, k) method has the strong (boundedness) stability. That is, there exists an integer \(m_{\star }\in {\mathbb {Z}}^{+}\), such that

provided the CFL number \(\lambda =|\beta |\tau {h^{-1}}\) is smaller than a certain constant. Moreover, if \(m_{\star }=1\) is admitted, the monotonicity stability is achieved, namely,

Proof

Since \((-1)^{\lfloor {r/2}\rfloor +1}\psi _r<0\), Proposition 3.4 implies \(a_{\zeta \zeta }^{(\zeta )}(m)<0\). It follows from (3.20) that

provided \(\lambda \le \lambda _{\max }(m)\). If \(m_{\star }=1\), this obviously implies the monotonicity stability. If \(m_{\star }>1\), we take \(m=m_{\star }, m_{\star }+1, \ldots , 2m_{\star }-1\), and get the strong (boundedness) stability. We have now completed the proof of this theorem. \(\square \)

Theorem 3.2

If \((-1)^{\lfloor {r/2}\rfloor +1}\psi _r>0\), the RKDG(s, r, k) method has the weak(\(\gamma \)) stability with

That is, there exists a bounding constant \(C>0\) depending on the final time T and independent of u, such that

provided that the time step satisfies a strong restriction \(\tau ={\mathcal {O}} (h^{\gamma /(\gamma -1)})\).

Proof

Since \((-1)^{\lfloor {r/2}\rfloor +1}\psi _r>0\), Proposition 3.4 implies \(a_{\zeta \zeta }^{(\zeta )}(m_\star )>0\). It follows from (3.20) that

where the bounding constant \(C(m_{\star })>0\) is independent of n, h and \(\tau \). An application of Gronwall’s inequality gives us this theorem for \(n\equiv 0\pmod {m_{\star }}\). Using (3.19) and the triangle inequality, we can easily see that

where the bounding constant \(C(m_{\star })>0\) is also independent of \(n,h, \tau \) and u. We can then complete the proof of this theorem by collecting the above conclusions. \(\square \)

In practice, the study on the stability under a suitable CFL condition is extremely important. For the piecewise polynomials of lower degree, we have the following theorem.

Theorem 3.3

For the RKDG(s, r, k) method, some improved stability holds for the piecewise polynomials of lower degree.

-

1.

There holds the monotonicity stability provided \(k\le \lfloor {r/2}\rfloor -1\);

-

2.

There holds the strong (boundedness) stability provided \(k\le \lfloor {r/2}\rfloor \). If \(m_{\star }=1\) is admitted, the strong (boundedness) stability is improved to be the monotonicity stability.

Proof

Recalling the result in [38, Theorem 5.1] that the RKDG(ms, r, k) method has the monotonicity stability for \(k\le \rho (m)-1\), where the jump’s square on element endpoints (the stability mechanism of the semi-discrete DG method) plays an important role. As a corollary of Propositions 3.1 through 3.3, we can complete the proof of this theorem. \(\square \)

Theorem 3.3 implies that the RKDG(s, r, k) method always has the \(\hbox {L}^2\)-norm stability under a suitable CFL condition, if \(r\ge 2k+1\). It is good for the superconvergence study below.

To conclude this section, the \(\hbox {L}^2\)-norm stability results for the RKDG(s, r, k) method [23] are listed in Table 1, where \(s=r\) or \(s=r+1\). They are given by Theorems 3.1 through 3.3.

4 Supraconvergence Analysis

In this section we devote to establishing the supraconvergence property of the RKDG method, starting from the stability result in the previous section. The main tools are the reference functions and the technique of correction functions at different time stages.

For the convenience of notations, in what follows we would like to use the notation C to denote those generic constants independent of \(n, h,\tau , u\), and U.

4.1 Elemental Analysis Process

Following [37] we set up an elemental analysis process on the error estimate for the fully-discrete RKDG method.

4.1.1 A General Stability

The stability results in Theorems 3.1 through 3.3 can be extended to the nonhomogeneous problem. For simplicity of notations, the numerical solution is still denoted by u. Similarly as (2.6), at each time-marching there holds

for \(\ell =0,1,\ldots ,s-1\). Here \(f^{n,\ell }\) is the given source term.

Along the same line as the discussion for the homogeneous problem, we can easily obtain the following lemma. To shorten the length of this paper, the proof is omitted.

Lemma 4.1

Under the temporal-spatial condition as stated in Theorems 3.1 through 3.3, there holds

where the bounding constant \(C>0\) is independent of \(n,h,\tau , f\), and u.

4.1.2 The Reference Functions

We now extend the idea in [37] and define a series of reference functions. Below the arguments x and t may be dropped if it does not cause confusion.

Given an integer \(\sigma \) satisfying \(0\le \sigma \le r\), and let \(U_{[\sigma ]}^{(0)}=U\) be the exact solution of (1.1). The others are inductively defined. Let us assume for the sake of argument that the previous reference functions \(U_{[\sigma ]}^{(\kappa )}\), for \(0\le \kappa \le \ell \), have been defined well and expanded in the form

where the parameter \(\gamma _{i[\sigma ]}^{(\kappa )}\) has been known. Paralleled to the stage marching of the RKDG(s, r, k) method, we define an auxiliary reference function

where the expansion results from the simple substitution of (4.3). The detailed formulations to compute the parameter \(\gamma ^{(\ell +1)}_{i[\sigma ]}\) are omitted here; please refer to [37] for more details. By cutting off the term involving the \((\sigma +1)\)th order time derivative – if it exists – we define the subsequent reference function

Letting \(\ell \) go through the set \(\{0,1,\ldots ,s-2\}\), we can define all reference functions.

Proposition 4.1

For \(0\le \ell \le s-1\), there hold \(\gamma _{0[\sigma ]}^{(\ell )}=1\) and \(\gamma ^{(\ell )}_{i[\sigma ]}=\gamma ^{(\ell )}_{i[r]}\) if \(0\le i \le \min (\sigma ,\ell )\).

For the convenience of notations, we denote \(U_{[\sigma ]}^{(s)}(x,t)=U(x,t+\tau )\) in this paper. Since \(U_0\in H^{r+1}(I)\), the exact solution \(U(x,t)=U_0(x-\beta t)\) is smooth enough such that the above reference functions are all continuous in space, due to the Sobolev embedding theorem [1]. After some manipulations that all Taylor expansions in time are only done up to the \((\sigma +1)\)-th time derivatives, it is easy to see that

where \(\varrho _{[\sigma ]}^{(\ell )}\) are the truncation errors in time, bounded by

Here \(L^\infty (H^{i}(I))\) denotes the space-time Sobolev space in which the \(H^{i}(I)\)-norm at any time \(t\in [0,T]\) is uniformly bounded. Actually, there holds \(\varrho _{[\sigma ]}^{(\ell )}=0\) for \(\ell \le \min (\sigma -1,s-2)\).

4.1.3 The Error Decomposition and Error Estimate

The following compact notations will be used for convenience. Let \(z^{n,\ell }\) form a series of solutions at every time stage, and denote

for any \(\ell =0,1,\ldots , s-1\), and n under consideration. Also we denote \(z^{n,s} = z^{n+1,0}\).

Denote by \(e^{n,\ell }=u^{n,\ell }-U^{n,\ell }\) the stage error, where

is the reference function at each time stage.

Let \(\chi ^{n,\ell }\in V_h\) be arbitrary series of functions defined at time stages. They will be determined for different purposes. As the standard analysis in the finite element method, we define

which implies the error decomposition \(e^{n,\ell }=\xi ^{n,\ell }-\eta ^{n,\ell }\).

Letting \(t=t^n\) in (4.6), we can get a group of variational forms similar as in the RKDG method. Subtracting them from each other and using the error decomposition, we can achieve the following error equation

for \(\ell =0,1,\ldots ,s-1\). Here \((F^{n,\ell },v)\) is the residual functional at every time stage, which is recursively defined by

where \(\varrho _{[r]}^{n,\ell }=\varrho _{[r]}^{(\ell )}(x, t^n)\), and the summation in (4.12) is equal to zero if \(\ell =0\).

By employing Lemma 4.1 on (4.11), we have the starting point of our estimate. The proof is easy, so is omitted here.

Lemma 4.2

Assume that the RKDG(s, r, k) method has the \(\hbox {L}^2\)-norm stability under suitable temporal-spatial condition, as stated in Theorems 3.1 through 3.3. Then we have

where \(\left\| {{\mathcal {Z}}^{\kappa ,\ell }}\right\| =\sup _{0\ne v\in V_h} {\mathcal {Z}}^{\kappa ,\ell }(v)/\left\| {v}\right\| _{L^2(I)}\), and the bounding constant \(C>0\) is independent of \(n, h, \tau , u\) and U.

4.2 The Supraconvergence Property

In this subsection we carefully take \(\chi ^{n,\ell }\) to arrive at the expected order of \(\left\| {\xi ^n}\right\| _{L^2(I)}\) and \(\left\| {\xi _x^n}\right\| _{L^2(I)}\), in the framework of Lemma 4.2.

4.2.1 Two Projections

In this paper we employ the \(\hbox {L}^2\) projection and the generalized Gauss-Radau (GGR) projection [8], respectively denoted by \({\mathbb {P}}_h={\mathbb {P}}_h^k\) and \({\mathbb {G}}_h={\mathbb {G}}_h^k\). The first one is locally defined, and the second one is globally defined except when \(\theta =1\).

If there is no confusion, the superscript k is dropped. For any \(w\in L^2(I)\), the projection \({\mathbb {P}}_hw\in V_h\) satisfies

where \({\mathbb {P}}_h^\perp w=w-{\mathbb {P}}_hw\) is the projection error. It is easy to get that [12]

For any \(w\in H^1({\mathcal {T}}_h)\), the projection \({\mathbb {G}}_hw\in V_h\) is defined by

where \({\mathbb {G}}_h^\perp w=w-{\mathbb {G}}_hw\) is the projection error. Here \(H^1({\mathcal {T}}_h)\) denotes the space including all piecewise \(H^1\)-functions. The main advantage of the GGR projection is the exact collocation of the numerical flux on element boundary points. As a result, we have

Moreover, it is proved in [8] that

4.2.2 The Technique of Correction Functions

Given any integer \(p\ge 0\), the pth correction function [2] for any function \(w\in H^1({\mathcal {T}}_h)\) is defined by

Here \({\mathbb {D}}_h^{-1}\) is the antiderivative in each element, defined by

Below we present some elemental properties about (4.19), similar as those in [2].

Lemma 4.3

Let \(0\le p\le k\). There exists a constant \(C>0\) independent of h and w, such that

As a corollary, \({\mathcal {F}}_p\) is a linear and continuous operator from \(H^1({\mathcal {T}}_h)\) to \(V_h\).

Proof

We only need to prove (4.21) for \(p\ge 1\), since it is trivial for \(p=0\).

Using the triangle inequality, the approximation property (4.18) of the GGR projection, and the first inverse inequality in (2.3), we have for any function \(z\in V_h^{k+1}\) that

This, together with the fact that \({\mathbb {D}}_h^{-1}{\mathcal {F}}_{p-1}w\in V_h^{k+1}\), yields

where the Holder’s inequality is used element by element at the last step. As a result, we can complete the proof of this lemma by induction. \(\square \)

Lemma 4.4

Let \(1\le p\le k\) and \(w\in H^1({\mathcal {T}}_h)\). There holds

-

1.

the exact collocation of the numerical flux, namely, \(\{\!\!\{{\mathcal {F}}_p w\}\!\!\}^{(\theta )}_{j+1/2}=0\) for \(j=1,2,\ldots ,J\);

-

2.

the recurrence relationship, namely, \(({\mathcal {F}}_{p}w, v_x)=({\mathcal {F}}_{p-1} w,v)\) for any \(v\in V_h\);

As a corollary of the above results, we have \({\mathcal {H}}({\mathcal {F}}_{p} w,v)=\beta ({\mathcal {F}}_{p-1} w,v)\) for any \(v\in V_h\).

Proof

By the definition of antiderivative operator, it is trivial to see \(({\mathbb {D}}_h^{-1}{\mathcal {F}}_{p-1} w)_{j+1/2}^+=0\). Then an integration by parts yields

Successively using this identity we have

where the definitions of the two projections are used at the last step since \(p-1\le k-1\).

Now we prove two elemental results by using the above statements \(({\mathbb {D}}_h^{-1}{\mathcal {F}}_{p-1} w)_{j+1/2}^{\pm }=0\). The definition of the GGR projection implies

and reduces for any \(v\in V_h\) that

where an integration by parts in each element is used. Now we have completed the proof of this lemma. \(\square \)

Lemma 4.5

Let \(1\le p\le k\) and \(w\in H^1({\mathcal {T}}_h)\). There holds \(({\mathcal {F}}_{p-1} w, v)= 0\) for any \(v\in V_h^{k-p}\).

Proof

Repeatedly applying (4.23), we have \(({\mathcal {F}}_{p-1}w, v) = ({\mathcal {F}}_{k}w, \partial _x^{k-p+1} v)=0\) for any \(v\in V_h^k\). This completes the proof of this lemma. \(\square \)

Remark 4.1

The antiderivative operator in this paper is slightly different from that in [2, 7]. The multiplier \((h_j/2)^{-1}\) is dropped in (4.20). This minor modification is very important to correctly yield Lemma 4.4 no matter whether the mesh is uniform or not.

4.2.3 The Supraconvergence of the Solution

Let q be an integer satisfying \(0\le q\le k\), to denote the total number of correction manipulations. At each time stage, in (4.10) we take

where both \(U^{n,\ell }=U_{[r]}^{(\ell )}(x,t^n)\) and \(W^{n,\ell }=U_{[\min (q,r)]}^{(\ell )}(x,t^n)\) are the reference functions. The detailed definition in Sect. 4.1.2 clearly shows that \(W^{n,\ell }\) is truncated from \(U^{n,\ell }\). If \(q=0\), the summation in (4.24) is understood to be zero and there is no correction manipulation. It is worthy mentioning that the introduction of \(W^{n,\ell }\) is very important to help us obtain the superconvergence results under a weak smoothness assumption of the exact solution.

Lemma 4.6

Assume \(\tau /h\) is upper bounded by a constant. With the choice (4.24), we have

for \(\ell =0,1,\ldots ,s-1\), where the bounding constant \(C>0\) is independent of \(n,\ell , h,\tau , u\) and U.

Proof

Substituting (4.24) and (4.10) into the definition of \({\mathcal {Z}}^{n,\ell }(v)\), we have \({\mathcal {Z}}^{n,\ell }(v)=\sum _{0\le p\le q}{\mathcal {Z}}_{p}^{n,\ell }(v)\), where

Note that the term \({\mathcal {Z}}_{p}^{n,\ell }(v)\) does not exist if \(q=0\). Owing to (4.17), it follows from \({\mathcal {F}}_0={\mathbb {G}}_h^\perp - {\mathbb {P}}_h^\perp \) and the definitions of the two projections that

Applying the last conclusion in Lemma 4.4 for the second term in \({\mathcal {Z}}_{p}^{n,\ell }(v)\), we get

for \(1\le p\le q\), where the equality (4.6) with \(\sigma =\min (q, r)\) is used.

Denote \(V^{n,\ell }_{\text {c}}=U^{n,\ell }_{\text {c}}-W^{n,\ell }_{\text {c}}\). Summing up the above identities we have

which implies

Below we are going to separately estimate each term on the right-hand side.

Recalling that \(\gamma _{0[\sigma ]}^{(\ell )}=1\) and \(\sum _{0\le \kappa \le \ell }c_{\ell \kappa }=1\) hold for \(\ell =0,1,\ldots , s-1\). As a result, \(W^{n,\ell }_{\text {c}}\) can be split into two kinds of terms, say,

where \(\widetilde{\gamma }_{i}^{(\ell )}\) are some known constants. Note that the term \(W^{n,\ell }_{\text {c},2}\) emerges only for \(\ell =s-1\). Successively applying Lemma 4.3 and the approximation property of the two projections, we get

since \(U(x,t)=U_0(x-\beta t)\) and \(\tau /h\) is bounded. Similarly, we have

Note that \(V^{n,\ell }_{\text {c}}\) comes from the cutting-off manipulation of reference functions. If \(q\ge r\), it is trivial to see that \(V^{n,\ell }_{\text {c}}=0\) and hence \(\left\| {{\mathcal {F}}_0V^{n,\ell }_{\text {c}}}\right\| _{L^2(I)}=0\). Even if \(q<r\), noticing Proposition 4.1, along the same line as for (4.27) we can get

For \(0\le p\le q-1\), we can similarly get

where (4.7) is used at the second step. Also (4.7) implies

Summing up the above inequalities, we have completed the proof of this lemma. \(\square \)

Theorem 4.1

Suppose the time step is taken to ensure the \(\hbox {L}^2\)-norm stability of the RKDG(s, r, k) method, as stated in Theorems 3.1 through 3.3. For any integer q satisfying \(0\le q\le k\), let

be the initial solution of the RKDG method, where \(q_\mathrm{nt}\) is an integer satisfying \(q-1\le q_\mathrm{nt}\le k\). Then we have

where the bounding constant \(C>0\) is independent of \(n,h, \tau ,u\) and U.

Proof

As a corollary of Lemmas 4.2 and 4.6, we have completed the proof of this theorem since

At the second step, we have used Lemma 4.3 and the approximation properties of the two projections. \(\square \)

Remark 4.2

Taking \(q=k\) in (4.33), the highest supraconvergence order \(2k+1\) in space is achieved for the solution. It will be verified by the numerical experiments.

4.2.4 Supraconvergence with Respect to the Derivative

Directly applying the first inverse inequality in (2.3), we can easily obtain from Theorem 4.1 that

It seems that one order of accuracy is lost in both space and time. However, the numerical results do not show this phenomenon. In the following lemma we give a theoretical support.

Theorem 4.2

Suppose the time step is taken to ensure the \(\hbox {L}^2\)-norm stability of the RKDG(s, r, k) method, as stated in Theorems 3.1 through 3.3. For any integer q satisfying \(0\le q\le k\), let (4.32) be the initial solution of RKDG method with \(q\le q_\mathrm{nt}\le k\). Then we have

where the bounding constant \(C>0\) is independent of \(n,h, \tau , u\) and U.

Proof

Let \(\Pi = -\beta U_x\). Obviously, it satisfies the auxiliary problem

which is equipped with the periodic boundary condition and the initial solution \(\Pi (x,0)=\Pi _0(x)\).

For any function \(w\in V_h\), there exists a unique function \(\tilde{w}\in V_h\) such that \((\tilde{w},v)={\mathcal {H}}(w,v)\) holds for any \(v\in V_h\). Define

It is easy to see that \({\mathcal {H}}_h\) is a linear map from \(V_h\) to itself.

Let \(\tilde{u}^{n,\ell }={\mathcal {H}}_h u^{n,\ell }\). It follows from (2.6) that \(u^{n,\ell +1} = \sum _{0\le \kappa \le \ell } [ c_{\ell \kappa }u^{n,\kappa }+\tau d_{\ell \kappa }\tilde{u}^{n,\kappa } ]\). Making a left-multiplication of \({\mathcal {H}}_h\) yields

for any n under consideration. This can be viewed as the RKDG(s, r, k) method to solve (4.36), with the initial solution \(\tilde{u}^0={\mathcal {H}}_h u^0\). Along the same line as that for Theorem 4.1, we have

The main difference comes from the initial solution. Here

is analogously defined as for \(\xi ^{n,\ell }\), and the including reference functions are defined along the same way as that in Sect. 4.1.2.

Due to the initial setting \(\tilde{u}^0={\mathcal {H}}_h u^0\), a tedious manipulation yields

The detailed process will be given in the appendix.

To finish the proof of this theorem, we need to set up the relationship between \(\xi _x^n\) and \(\tilde{\xi }^n\). By the definition of \(\xi ^{n,\ell }\), we know that

By (2.10) there holds \({\mathbb {D}}_1(1) u^n=\tau {\mathcal {H}}_h u^n =\tau \tilde{u}^{n}\). By the definition of the reference functions (see Sect. 4.1.2) we have \({\mathbb {D}}_1(1) U_{[r]}^n=-\tau \beta U^n_x\) and \({\mathbb {D}}_1(1) U_{[\min (q,r)]}^n=-\tau \beta U^n_x\) for \(q\ge 1\). A comparison with (4.41) and (4.39) yields for \(q\ge 1\) that

In fact, this conclusion also holds for \(q=0\), because both summations in (4.41) and (4.39) vanish at this status. Substituting the identity (4.42) into the error equation (4.11) with \(\ell =0\), we have

It follows from [36, Lemma 2.3] that

Together with (4.38), (4.40) and Lemma 4.6, this completes the proof of this theorem. \(\square \)

Remark 4.3

Taking \(q=k\) in (4.35), the highest supraconvergence order \(2k+1\) in space is achieved for the derivative of solutions. It will be verified by the numerical experiments.

5 Superconvergence Analysis at Discrete Points

In this section we devote to establishing the superconvergence results on some discrete points, based on the supraconvergence analysis in Sect. 4.

5.1 Notations and Conclusions

Let \(L_i(\hat{x})\) be the standard Legendre polynomial of degree i on the reference element \([-1,1]\), and thus

is the Legendre polynomial of degree i in \(I_j\), which is scaled from \(L_i(\hat{x})\).

Associated with the mesh and the upwind-biased parameter, we are able to seek a group of parameters \(\{\vartheta _j\}_{1\le j\le J}\) by the following system of linear equations

where \(j=1,2,\ldots ,J\). The existence and uniqueness can be verified since the determinant is not equal to zero, due to \(\theta \ne 1/2\). Similar system has been discussed in [8]. Then the parameter-dependent Radau polynomial of degree \(k+1\) is defined element by element, namely,

Its roots in \(I_j\) are denoted by \(r_{ij}\) for \(1\le i\le n_j^\mathrm{R}\), and its extrema in \(I_j\) are denoted by \(l_{ij}\) for \(1\le i\le n_j^\mathrm{L}\). Almost the same as that in [2, Lemma 3.1], we have \(n_j^\mathrm{R}=k+1\) if \(|\vartheta _j|<1\), and \(n_j^\mathrm{R}=k\) otherwise. By Rolle’s theorem, we know \(n_j^\mathrm{L}\ge n_j^\mathrm{R}-1\).

Remark 5.1

When the purely upwind flux (\(\theta =1\)) is used, there always holds \(\vartheta _j=1\) and hence \(R_{j,k+1}(x)\) is the right Radau polynomial in each element. When the upwind-biased flux (\(\theta \ne 1\)) is used together with the uniform mesh, we have

However, it may happen \(\vartheta _j\le 0\) for the upwind-biased flux coupled with the non-uniform mesh. To show that, we give a numerical example in Table 2, where the non-uniform mesh is obtained by random perturbations of a uniform mesh with J elements.

Four types of discrete point sets are considered in this section. The first two sets are for the element boundary points and the element midpoints, respectively denoted by

where \(x_j=(x_{j-1/2}+x_{j+1/2})/2\) is the central point in \(I_j\). The other two sets are for the roots and extrema of the parameter-dependent Radau polynomials (5.2), namely,

Below the following notations are used. For any given function z, define discrete norms

where \(\bar{z}_j\) is the cell average of z in \(I_j\). Similarly, we define the discrete norm

Similarly for \(\vert \!\vert \!\vert {z}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{L})}\). Now we are ready to present the superconvergence property in the next theorem.

Theorem 5.1

Assume that the RKDG(s, r, k) method has the \(\hbox {L}^2\)-norm stability under suitable temporal-spatial condition, as stated in Theorems 3.1 through 3.3. Let \(e^n=u^n(x)-U(x,t^n)\) be the numerical error at each time level.

-

1.

Let (4.32) be the initial solution with \(k-1\le q_\mathrm{nt}\le k\), then the numerical fluxes and the cell averages are superconvergent, namely,

$$\begin{aligned} \vert \!\vert \!\vert {\{\!\!\{e^n\}\!\!\}^{(\theta )}}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{B})} +\vert \!\vert \!\vert {\bar{e}^n}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{E})} \le C\left\| {U_0}\right\| _{H^{\max (2k+2,r+1)}(I)}(h^{2k+1}+\tau ^r). \end{aligned}$$ -

2.

Let (4.32) be the initial solution with \(0\le q_\mathrm{nt} \le k\), then the solution is superconvergent at the roots of the parameter-dependent Radau polynomial, namely,

$$\begin{aligned} \vert \!\vert \!\vert {e^n}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R})} \le C\left\| {U_0}\right\| _{H^{\max (k+3,r+1)}(I)} (h^{k+2}+\tau ^r) , \end{aligned}$$and the derivative is superconvergent at the extrema of the parameter-dependent Radau polynomial, namely,

$$\begin{aligned} \vert \!\vert \!\vert {e_x^n}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{L})} \le C\left\| {U_0}\right\| _{H^{\max (k+3,r+2)}(I)}(h^{k+1}+\tau ^{r}). \end{aligned}$$

Note that the above bounding constant \(C>0\) is independent of \(n,h,\tau ,u\) and U.

In the next subsections we are going to prove this theorem based on the previous supraconvergence results. Before that, we give here two remarks to conclude this subsection.

Remark 5.2

Theorem 5.1 indicates that the fully-discrete RKDG scheme preserves the superconvergence properties of the semi-discrete DG method. In order to not destroy the superconvergence order in space, we have to use Runge–Kutta time-marching of enough high order. Numerical experiments in Sect. 7 will verify this statement.

Remark 5.3

Theorem 5.1 shows the superconvergence orders in the discrete \(\hbox {L}^2\)-norm. However, numerical experiments indicate the same order in the discrete \(\hbox {L}^{\infty }\)-norm. How to fill in this gap is left for the future work.

5.2 Superconvergence Results on the Average and the Numerical Flux

Now we take \(q=k\) in (4.24), and get

due to the definition of the GGR projection (4.16) and the first property in Lemma 4.4. Applying the second inverse inequality in (2.3) and Theorem 4.1 we have

Analogously we have

due to (4.16) with \(v=1\) and Lemma 4.5. Applying the triangle inequality and the Holder’s inequality, we obtain

At the last step, Theorem 4.1 is used for \(\left\| {\xi ^n}\right\| _{L^2(I)}\), and Lemma 4.3 and the approximation properties of the two projections are used for \(\left\| {{\mathcal {F}}_k (-\partial _x)^k U^n}\right\| _{L^2(I)}\). We have now proved the first superconvergence results stated in Theorem 5.1.

5.3 Superconvergence Results on the Solution and Derivative

To do that, we define a local projection with respect to the parameter-dependent Radau polynomials. This work is almost the same as that in [2], with a minor modification.

Let \(w\in H^{1}({\mathcal {T}}_h)\) be any given function. The projection \({\mathbb {C}}_hw\in V_h\) is defined element by element. It depends on whether \(\vartheta _j\) is equal to zero or not. If \(\vartheta _j\ne 0\), it satisfies [2]

where \(\theta _j=(\vartheta _j+1)/2\) for even k, and \(\theta _j=(\vartheta _j^{-1}+1)/2\) for odd k. If \(\vartheta _j=0\), it is just defined by the standard \(\hbox {L}^2\)-projection \({\mathbb {P}}_hw\) in this element. Here \({\mathbb {C}}_h^\perp w = w - {\mathbb {C}}_hw\) is the projection error.

The projection \({\mathbb {C}}_hw\) is well-defined. By the standard scaling argument, together with the Sobolev embedding theorem and the Bramble-Hilbert lemma, we can easily prove that

no matter whether \(\vartheta _j=0\) or not.

Lemma 5.1

There exists a bounding constant \(C>0\) independent of \(j, h_j\) and w, such that

Proof

Along the same line as that for (5.8), we can prove this lemma by verifying \({\mathbb {C}}_h^\perp w(r_{ij})=0\) and \(({\mathbb {C}}_h^\perp w)_x (l_{ij})=0\) for any \(w\in P^{k+1}(I_j)\). Since \({\mathbb {C}}_h^\perp w=0\) holds for any \(w\in P^k(I_j)\), we only need to show

If \(\vartheta _j=0\), it is obviously true since \({\mathbb {C}}_h={\mathbb {P}}_h\). If \(\vartheta _j\ne 0\), it has been clearly proved in [2]. This completes the proof of this lemma. \(\square \)

Lemma 5.2

There exists a bounding constant \(C>0\) independent of h and w, such that

Proof

The proof is postponed into the appendix. \(\square \)

Lemma 5.3

There exists a bounding constant \(C>0\) independent of h and w, such that

Proof

Since the mesh is quasi-uniform, we have \(J^{-1}={\mathcal {O}}(h)\). Due to the third inverse inequity in (2.3), there is a bounding constant \(C>0\) independent of h and w, such that

This implies \(\vert \!\vert \!\vert {{\mathbb {C}}_hw - {\mathbb {G}}_hw}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R})}\le C\left\| {{\mathbb {C}}_hw - {\mathbb {G}}_hw}\right\| _{L^2(I)}\). An application of the triangle inequity reduces

where Lemmas 5.1 and 5.2 are used for each term at the last step. The remaining part can be proved similarly, the details are omitted here. \(\square \)

Now we turn to prove the second conclusion in Theorem 5.1. Taking \(q=1\) in (4.24), we have

Using the inequality (5.13), together with Theorem 4.1 and Lemma 4.3, we obtain

where the approximation property of the two projections are also used. It follows from Lemma 5.3 that

Collecting up the above conclusions, we prove the estimate for \(\vert \!\vert \!\vert {e^n}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R})}\) in Theorem 5.1.

Along the similar line as before, we can bound \(\vert \!\vert \!\vert {e^n_x}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{L})}\) by using Theorem 4.2 with \(q=0\). We have now completed the proof of Theorem 5.1.

Remark 5.4

Under the stronger smoothness assumption on the exact solution, there holds

and so on; see [2] for more details. These are beneficial for obtaining the superconvergence order in the discrete \(\hbox {L}^{\infty }\)-norm for the semi-discrete DG method. However, it is difficult to get the expected order for the RKDG method. If the third inverse inequality in (2.3) is used, an unsatisfying boundedness like \(h^{-1/2}\tau ^r\) will emerge. We plan to address this difficulty in future work.

6 Byproduct: Accuracy-Enhancement of the Post-processed Solution

In this section, we consider the smoothness-increasing accuracy-conserving (SIAC) filter to obtain superconvergence of a post-processed solution. Following [17], the filter on a uniform mesh is implemented by the convolution of the numerical solution with the kernel function

where \(\varphi ^{(k+1)}\) is the B-spline function of order \(k+1\). The coefficients \(c_\ell ^{2k+1,k+1}\) are taken to ensure that the kernel function reproduces polynomials of degree 2k by convolution.

Theorem 6.1

Assume that the RKDG(s, r, k) method has the \(\hbox {L}^2\)-norm stability under suitable temporal-spatial condition, as stated in Theorems 3.1 through 3.3. Furthermore, the mesh is uniform and \(M\tau =T\). Let (4.32) be the initial solution with \(k-1\le q_\mathrm{nt} \le k\), then

where the bounding constant \(C>0\) is independent of \(h, \tau , u\) and U. Here \(\star \) denotes the convolution.

Proof

For the obtained numerical solution \(u^M\), the post-processed solution satisfies the well-known conclusion [17]

where the bounding constant \(C>0\) solely depends on k. Here the negative norm is defined as

and \(\partial _h^{\ell } e^M\) is the \(\ell \)th order divided difference of the numerical error.

In the following analysis, the divided differences will play important roles. To be more clear, we would like to quickly introduce its definition and recall some essential properties [27] about it. Let w be any piecewise smooth function on \({\mathcal {T}}_h\). The divided difference is recursively defined by

for \(\ell \ge 1\), where \(\partial _h^0w(x)=w(x)\). By Holder’s inequality, one can see that

If w and v are periodic, there holds

Furthermore, it is easy to see that \(\partial _h^{\ell }\) commutes with many operators, like \({\mathbb {G}}_h\), \(\partial _x\), and \({\mathcal {F}}_p\). Finally, it is worthy pointing out that the above manipulations in this section should be understood on the correspondingly shifted meshes.

According to (6.3), it is sufficient to prove this theorem by showing for any \(\Phi \in C_0^\infty (I)\) that

For simplicity of notations, the superscript M is dropped below. This purpose can be implemented with the help of the previous superconvergence results. Recalling the definitions (4.10) and (4.24) with \(q=k\), we have the decomposition \( (\partial _h^\ell e,\Phi ) =(\partial _h^\ell \xi ,\Phi )-(\partial _h^\ell \eta ,\Phi )\). By (6.6) and (6.5) we have \((\partial _h^\ell \xi ,\Phi )=(-1)^\ell (\xi ,\partial _h^\ell \Phi ) \le \left\| {\xi }\right\| _{L^2(I)}\left\| {\Phi }\right\| _{H^{k+1}(I)}\). Hence

due to Theorem 4.1. By the definition of the correction function, we get the decomposition

Below we are going to separately estimate the three terms on the right-hand side.

The GGR projection implies \((\partial _h^\ell {\mathbb {G}}_h^\perp U,\Phi ) =({\mathbb {G}}_h^\perp \partial _h^\ell U,\Phi ) =({\mathbb {G}}_h^\perp \partial _h^\ell U,\Phi - {\mathbb {P}}_h^{k-1}\Phi )\), where \({\mathbb {P}}_h^{k-1}\) is the local \(\hbox {L}^2\)-projection onto \(V_h^{k-1}\). The approximation properties of the two projections lead to

Below we assume \(k\ge 2\) such that the second term exists; otherwise, it vanishes. Depending on \(\ell \), we split the summation index into two sets. There is no harm in assuming \(1\le p\le \min (\ell ,k-1)\). By using (6.6), the commutative property, and Lemma 4.5, we have

Then Lemma 4.3, the approximation property of the two projections, and (6.5) lead to

Along the same line we can get the same boundedness for \(\ell <p \le k-1\). Hence

Similarly, the third term can be bounded in the form

Collecting up the above estimates and noticing that \(U=U_0(x-\beta T)\), we can obtain (6.7) and then prove this theorem. \(\square \)

Remark 6.1

Theorem 6.1 requires a special setting on the initial solution, which is inherited from the supraconvergence study. In practice, the \(\hbox {L}^2\)-projection setting (not included in this theorem) still works well to obtain the accuracy enhancement; see the numerical experiment below.

Remark 6.2

Theorem 6.1 provides a small relaxation on the regularity assumption of the exact solution. In this paper we only demand \(U_0\in H^{2k+2}(I)\cap H^{r+1}(I)\), which is slightly weaker than the usual assumption \(U_0\in H^{2k+3}(I)\) for the semi-discrete method [17].

7 Numerical Experiments

In this section, we provide some numerical verification. To this end, we carry out the RKDG(r, r, 2) method with the upwind-biased parameter \(\theta =0.75\), to solve the model problem (1.1) with \(\beta =1\) and \(T=1\). The non-uniform meshes, obtained by a random perturbation of the equidistance nodes by at most 10%, are used except for the post-processed solutions. The time step is \(\tau =0.2 h_{\min }\), where \(h_{\min }\) is the minimum of all element lengths.

With different degree k, and/or the different upwind-biased parameter \(\theta \), the numerical experiments are very similar. Limited by the length of this paper, we only present the data for the case mentioned here.

Example 7.1

We take \(U_0=\sin (2\pi x)\), which is infinitely differentiable.

In Table 3, we list the superconvergence results on the solution and derivative at the roots and extrema of the parameter-dependent Radau polynomials. The error and convergence order in the discrete \(\hbox {L}^2\)-norms, \(\vert \!\vert \!\vert {e}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{R})}\) and \(\vert \!\vert \!\vert {e_x}\vert \!\vert \!\vert _{L^2(S_h^\mathrm{L})}\), are shown. As a comparison, the error and convergence order in the discrete \(\hbox {L}^\infty \)-norms, \(\vert \!\vert \!\vert {e}\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{R})}\) and \(\vert \!\vert \!\vert {e_x}\vert \!\vert \!\vert _{L^\infty (S_h^\mathrm{L})}\), are also given. The initial solution \(u^0\) is taken to be (4.32) with \(q_\mathrm{nt}=1\) and \(q_\mathrm{nt}=0\), respectively. The \(\min (k+2,r)\)-th order is observed for the solution, and the \(\min (k+1,r)\)-th order is observed for the derivative. This verifies the second conclusion in Theorem 5.1.

In Table 4, we present the superconvergence results on the average and the numerical flux. Similarly, the discrete \(\hbox {L}^2\) norms and the discrete \(\hbox {L}^\infty \) norms are given. The initial solution \(u^0\) is taken to be (4.32) with \(q_\mathrm{nt}=k\) and \(q_\mathrm{nt}=k-1\), respectively. The expected \(\min (2k+1,r)\)-th order is observed for the two initial settings. This verifies the first conclusion in Theorem 5.1.

In Table 5 we investigate the supraconvergence results with different initial settings. To do that, we take \(u^0\) to be (4.32) with three parameters \(q_\mathrm{nt}\).

-

For \(q_\mathrm{nt}=k\), the expected \(\min (2k+1,r)\)-th order is observed for \(\left\| {\xi }\right\| _{L^2(I)}\) and \(\left\| {\xi _x}\right\| _{L^2(I)}\), which verifies Theorems 4.1 and 4.2. Note that \(\left\| {\xi _{xx}}\right\| _{L^2(I)}\) does not keep the same order. When r becomes large enough, the reduction of one order is observed for \(\left\| {\xi _{xx}}\right\| _{L^2(I)}\), which can be explained by the inverse inequality.

-

The expected order is not achieved for \(\left\| {\xi }\right\| _{L^2(I)}\) when \(q_\mathrm{nt}=k-2\), and for \(\left\| {\xi _x}\right\| _{L^2(I)}\) when \(q_\mathrm{nt}\le k-1\). This verifies that it is sharp for the requirement on \(q_\mathrm{nt}\) in Theorems 4.1 and 4.2.

In Table 6, we give the numerical results on the accuracy enhancement of the post-processed solutions, where \(u^0\) is taken to be (4.32) with \(q_\mathrm{nt}=k\). As an example out of the assumption of Theorem 6.1, we also take \(u^0\) to be (4.32) with \(q_\mathrm{nt}=0\) (i.e., the GGR projection) or the \(\hbox {L}^2\) projection of \(U_0\). The convergence order \(\min \{2k+1,r\}\) is observed for all cases, indicating that the result in Theorem 6.1 is correct but not sharp.

Example 7.2

To investigate the sharpness of regularity assumption, we take \(U_0=\sin ^{\epsilon +2/3}(2\pi x)\), where \(\epsilon \) is a positive integer. This function belongs to \(H^{\epsilon +1}(I)\) but not \(H^{\epsilon +2}(I)\).

The superconvergence results are shown in Table 7, the supraconvergence results are shown in Table 8, and the accuracy enhancements of the post-processed solution are shown in Table 9. In each group, the regularity parameter is \(\epsilon -1\) for the left column, and is \(\epsilon \) for the right column. When the regularity parameter satisfies the requirement in the theorems, the expected orders are observed. However, when the regularity parameter drops, the expected orders are lost. These results indicate that the regularity assumptions in the theorems appear to be sharp.

8 Concluding Remarks

In this paper we establish the superconvergence results for the fully-discrete RKDG methods with arbitrary stages, time order and degree of piecewise polynomials, when the upwind-biased flux is used. To complete this task, many analysis techniques are involved. Firstly we are able to avoid the computer-aided manipulation on the matrix transferring process, and set up the relationship between the single-step and multiple-steps time-marching. As a result, the stability results can be directly concluded by an equivalent representation of the RKDG methods. Secondly, we present a uniform strategy on the reference functions and the incomplete correction functions at every time stage. Then many superconvergence results are rigorously given under different regularity assumptions, and the optimal time order is achieved as expected. Thirdly, we obtain two interesting results in addition. With the help of the discrete derivative operator according to the DG spatial discretization, as well as the transform between the spatial derivative and temporal difference, we are able to prove that the first order spatial derivative of the solution has the same supraconvergence order as that for the solution. We also present a new proof for the accuracy enhancement of the post-processed solution, as an application of the obtained supraconvergence result and the properties of the divided differences of the numerical error.

In future work, we shall extend the above techniques and analyses to non-periodic boundary conditions and to nonlinear equations and/or systems. The extensions to other time-marching methods are also on the plan. Furthermore, we plan to explore the proof of piecewise-point superconvergence results that have been shown in the numerical experiments for the RKDG methods.

References

Adams, R.A.: Sobolev spaces. In: Pure and Applied Mathematics, vol. 65, Academic Press, New York (1975)

Cao, W., Li, D., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods based on upwind-biased fluxes for 1D linear hyperbolic equations. ESAIM Math. Model. Numer. Anal. 51, 467–486 (2017)

Cao, W., Shu, C.-W., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods for two-dimensional hyperbolic equations. SIAM J. Numer. Anal. 53, 1651–1671 (2015)

Cao, W., Shu, C.-W., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin method for scalar nonlinear hyperbolic equations. SIAM J. Numer. Anal. 56, 732–765 (2018)

Cao, W., Shu, C.-W., Zhang, Z.: Superconvergence of discontinuous Galerkin methods for 1-D linear hyperbolic equations with degenerate variable coefficients. ESAIM Math. Model. Numer. Anal. 51, 2213–2235 (2017)

Cao, W., Zhang, Z.: Some recent developments in superconvergence of discontinuous Galerkin methods for time-dependent partial differential equations. J. Sci. Comput. 77, 1402–1423 (2018)

Cao, W., Zhang, Z., Zou, Q.: Superconvergence of discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 52, 2555–2573 (2014)

Cheng, Y., Meng, X., Zhang, Q.: Application of generalized Gauss–Radau projections for the local discontinuous Galerkin method for linear convection-diffusion equations. Math. Comput. 86, 1233–1267 (2017)

Cheng, Y., Shu, C.-W.: Superconvergence and time evolution of discontinuous Galerkin finite element solutions. J. Comput. Phys. 227, 9612–9627 (2008)

Cheng, Y., Shu, C.-W.: Superconvergence of discontinuous Galerkin and local discontinuous Galerkin schemes for linear hyperbolic and convection-diffusion equations in one space dimension. SIAM J. Numer. Anal. 47, 4044–4072 (2010)

Choi, M.D.: Tricks or treats with the Hilbert matrix. Am. Math. Mon. 90, 301–312 (1983)

Ciarlet, P.G.: The finite element method for elliptic problems. In: Studies in Mathematics and Its Applications, vol. 4, North-Holland Publishing Co., Amsterdam (1978)

Cockburn, B.: An introduction to the discontinuous Galerkin method for convection-dominated problems, In: Advanced Numerical Approximation of Nonlinear Hyperbolic Equations (Cetraro, 1997), Lecture Notes in Mathematics, vol. 1697, Springer, Berlin, pp. 151–268 (1998)

Cockburn, B.: Discontinuous Galerkin methods for convection-dominated problems, In: High-Order Methods for Computational Physics, Lecture Notes Computer Science Engineering, vol. 9, Springer, Berlin, pp. 69–224 (1999)

Cockburn, B., Hou, S., Shu, C.-W.: The Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. IV. The multidimensional case. Math. Comput. 54, 545–581 (1990)

Cockburn, B., Lin, S.Y., Shu, C.-W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. III. One-dimensional systems. J. Comput. Phys. 84, 90–113 (1989)

Cockburn, B., Luskin, M., Shu, C.-W., Süli, E.: Enhanced accuracy by post-processing for finite element methods for hyperbolic equations. Math. Comput. 72, 577–606 (2003)

Cockburn, B., Shu, C.-W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. II. General framework. Math. Comput. 52, 411–435 (1989)

Cockburn, B., Shu, C.-W.: The Runge–Kutta local projection \(P^1\)-discontinuous-Galerkin finite element method for scalar conservation laws. RAIRO Modél. Math. Anal. Numér. 25, 337–361 (1991)

Cockburn, B., Shu, C.-W.: The Runge–Kutta discontinuous Galerkin method for conservation laws. V. Multidimensional systems. J. Comput. Phys. 141, 199–224 (1998)

Cockburn, B., Shu, C.-W.: Runge–Kutta discontinuous Galerkin methods for convection-dominated problems. J. Sci. Comput. 16, 173–261 (2001)

Gottlieb, S.: Strong stability preserving time discretizations: a review. In: Spectral and High Order Methods for Partial Differential Equations—ICOSAHOM 2014, Lecture Notes Computer Science Engineering, vol. 106, Springer, Cham, pp. 17–30 (2015)

Gottlieb, S., Ketcheson, D.I., Shu, C.-W.: High order strong stability preserving time discretizations. J. Sci. Comput. 38, 251–289 (2009)

Gottlieb, S., Shu, C.-W., Tadmor, E.: Strong stability-preserving high-order time discretization methods. SIAM Rev. 43, 89–112 (2001)

Guo, L., Yang, Y.: Superconvergence of discontinuous Galerkin methods for linear hyperbolic equations with singular initial data. Int. J. Numer. Anal. Model. 14, 342–354 (2017)

King, J., Mirzaee, H., Ryan, J.K., Kirby, R.M.: Smoothness-increasing accuracy-conserving (SIAC) filtering for discontinuous Galerkin solutions: improved errors versus higher-order accuracy. J. Sci. Comput. 53, 129–149 (2012)

Meng, X., Ryan, J.K.: Discontinuous Galerkin methods for nonlinear scalar hyperbolic conservation laws: divided difference estimates and accuracy enhancement. Numer. Math. 136, 27–73 (2017)

Meng, X., Shu, C.-W., Wu, B.: Optimal error estimates for discontinuous Galerkin methods based on upwind-biased fluxes for linear hyperbolic equations. Math. Comput. 85, 1225–1261 (2016)

Meng, X., Shu, C.-W., Zhang, Q., Wu, B.: Superconvergence of discontinuous Galerkin methods for scalar nonlinear conservation laws in one space dimension. SIAM J. Numer. Anal. 50, 2336–2356 (2012)

Qiu, J., Zhang, Q. Stability, error estimate and limiters of discontinuous Galerkin methods. In: Handbook of Numerical Methods for Hyperbolic Problems, Handbook of Numerical Analysis, vol. 17, Elsevier, Amsterdam, pp. 147–171 (2016)

Reed, W.H., Hill, T.R.: Triangular mesh methods for the neutron transport equation. Los Alamos Scientific Laboratory report LA-UR-73-479 (1973)

Ryan, J., Shu, C.-W., Atkins, H.: Extension of a postprocessing technique for the discontinuous Galerkin method for hyperbolic equations with application to an aeroacoustic problem. SIAM J. Sci. Comput. 26, 821–843 (2005)

Shu, C.-W.: Discontinuous Galerkin methods: general approach and stability. In: Numerical Solutions of Partial Differential Equations, Advanced Mathematics Training Course, CRM Barcelona, Birkhäuser, Basel, pp. 149–201 (2009)

Shu, C.-W., Osher, S.: Efficient implementation of essentially nonoscillatory shock-capturing schemes. J. Comput. Phys. 77, 439–471 (1988)

Sun, Z., Shu, C.-W.: Strong stability of explicit Runge–Kutta time discretizations. SIAM J. Numer. Anal. 57, 1158–1182 (2019)

Wang, H., Zhang, Q., Shu, C.-W.: Implicit-explicit local discontinuous Galerkin methods with generalized alternating numerical fluxes for convection-diffusion problems. J. Sci. Comput. 81, 2080–2114 (2019)

Xu, Y., Shu, C.-W., Zhang, Q.: Error estimate of the fourth-order Runge–Kutta discontinuous Galerkin methods for linear hyperbolic equations (submitted). SIAM J. Numer. Anal. (2019)

Xu, Y., Zhang, Q., Shu, C.-W., Wang, H.: The \(\text{ L}^2\)-norm stability analysis of Runge–Kutta discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 57, 1574–1601 (2019)

Yang, Y., Shu, C.-W.: Analysis of optimal superconvergence of discontinuous Galerkin method for linear hyperbolic equations. SIAM J. Numer. Anal. 50, 3110–3133 (2012)

Zhang, Q., Shu, C.-W.: Error estimates to smooth solutions of Runge-Kutta discontinuous Galerkin methods for scalar conservation laws. SIAM J. Numer. Anal. 42, 641–666 (2004)