Abstract

In this paper, we present a unified approach to studying the superconvergence behavior of the local discontinuous Galerkin (LDG) method for high-order time-dependent partial differential equations. We select the third and fourth order equations as our models to demonstrate this approach and the main idea. Superconvergence results for the solution itself and the auxiliary variables are established. To be more precise, we first prove that, for polynomials of any degree k, the errors of numerical fluxes at nodes and for the cell averages are superconvergent under a suitable initial discretization, with an order of \(O(h^{2k+1})\). We then prove that the LDG solution is \((k+2)\)-th order superconvergent towards a particular projection of the exact solution and the auxiliary variables. As byproducts, we obtain \((k+1)\)-th and \((k+2)\)-th order superconvergence rates for the derivative and function value approximations, respectively, at a class of Radau points. Moreover, for the auxiliary variables, we prove for the first time that the convergence rate of the derivative error at the interior Radau points can reach as high as \(k+2\). Numerical experiments demonstrate that most of our error estimates are optimal, i.e., the error bounds are sharp.

1 Introduction

In this paper, we study and analyze superconvergence properties of the local discontinuous Galerkin (LDG) method for partial differential equations containing higher order derivatives. To demonstrate the main idea and the approach, we restrict ourselves to the following representative examples:

- The one dimensional third order wave equation

$$\begin{aligned} u_t+u_{xxx}=0. \end{aligned}$$(1.1)

- The one dimensional fourth order equation

$$\begin{aligned} u_t+u_{xxxx}=0. \end{aligned}$$(1.2)

For equations with high-order derivatives, such as the convection–diffusion equations, the third order wave equation (1.1) or the fourth order equation (1.2), discontinuous Galerkin (DG) methods (see, e.g., [11,12,13,14]) cannot be directly applied, because the piecewise polynomial approximation is discontinuous at the element interfaces and hence not regular enough to handle higher derivatives. The LDG method is an extension of the DG method to partial differential equations with high-order derivatives; it was first developed by Cockburn and Shu [15] for nonlinear convection–diffusion equations. The basic idea of the LDG method is to rewrite an equation with higher order derivatives as a first order system, and then apply the DG method to the system. In the past decades, the LDG method has been successfully applied to various problems involving higher order derivatives such as third order KdV type equations [3, 24, 26, 27], time-dependent biharmonic equations [16], the fully nonlinear K(n, n, n) equations [23] and Cahn–Hilliard type equations [21]. We also refer to the review paper [25] for the LDG method applied to high-order partial differential equations.

There have been many studies of the mathematical theory for superconvergence of DG or LDG methods, see, e.g., [1, 2, 4, 5, 9, 10, 22, 28]. However, most attention has been paid to hyperbolic equations [4,5,6, 17, 19, 28], convection–diffusion equations [8, 10] or parabolic equations [6, 29]. Superconvergence of LDG methods for higher order equations, e.g., third order and fourth order problems, has not been investigated as thoroughly or as satisfactorily as that for lower order equations. Only recently, Meng et al. [20] and Hufford and Xing [18] separately investigated the superconvergence properties of the LDG method for one dimensional linear time dependent fourth order problems and linearized KdV equations, and they both proved that the LDG solution is superconvergent with an order of \(k+\frac{3}{2}\) to a particular projection of the exact solution. However, this rate is not sharp, and it falls well short of the highest superconvergence rate \(2k+1\) obtained in [6, 7] for hyperbolic and parabolic equations.

The main purpose of the current paper is to present a unified approach to studying the superconvergence behavior of the LDG method for high-order time-dependent partial differential equations. We rigorously prove a superconvergence rate of \(2k+1\) for the errors of numerical fluxes at nodes and for the cell averages, and a rate of \(k+2\) for the errors of the function value approximation at a class of Radau points. We also prove that the LDG solution is \((k+2)\)-th order superconvergent towards a particular projection of the exact solution. The superconvergence results are valid not only for the exact solution itself but also for the auxiliary variables. All these superconvergence results coincide with those for the counterpart hyperbolic and parabolic equations in [6, 7]. Furthermore, for the auxiliary variables, we also prove that the convergence rate of the derivative error at the interior Radau points can reach as high as \(k+2\), a new result not established before. The contribution of this paper is threefold. First, we improve the superconvergence rate in [18, 20] to the possibly optimal one for third and fourth order problems. Second, we prove that the highest superconvergence rate \(2k+1\) (of the error of numerical fluxes and for the cell average) is also valid for third and fourth order problems. Third, we establish a new superconvergence result for the derivative value error at the interior Radau points, whose convergence rate is two orders higher than the optimal convergence rate. By doing so, we establish a framework to study superconvergence of LDG methods for general higher order derivative problems and enrich the superconvergence theory of LDG methods. Our current work is also part of an ongoing effort to develop LDG superconvergence results for KdV and Cahn–Hilliard type equations.

The key ingredient in our superconvergence analysis is the construction of a special interpolation function for both the exact solution and the auxiliary variables, which is superclose to the LDG solution. It is this supercloseness that leads to the \(2k+1\)-th order superconvergence rate for the numerical fluxes at all nodes and for the cell averages. To design the special interpolant, we first establish the energy stability for both the exact solution and the auxiliary variables, then use some special projections (Gauss–Radau projections) to eliminate the jump terms appearing in the LDG schemes, and finally use the idea of correction functions (see, e.g., [6, 7]) to correct the error between the LDG solution and the Gauss–Radau projections. For partial differential equations containing higher order derivatives, the correction functions for the exact solution itself and all the auxiliary variables have to be constructed simultaneously. The main difficulty is how to balance the interplay among all the variables. This complicated interplay makes the analysis for higher order problems more elusive than that for the counterpart hyperbolic or parabolic problems.

The rest of the paper is organized as follows. In Sect. 2, we study and analyze the superconvergence properties of the LDG method for one dimensional third order wave equations: we first present the energy stability for the solution itself and the auxiliary variables, then design a special interpolation function superclose to the LDG solution based on the stability inequality, and finally prove that superconvergence results hold not only for the LDG solution but also for the auxiliary variables. Section 3 is dedicated to the superconvergence analysis of the LDG method for one dimensional linear fourth order problems. A \(2k+1\)-th order superconvergence rate of the errors for the numerical flux at nodes and for the cell average is obtained. In Sect. 4, we provide numerical examples to support our theoretical findings. Finally, concluding remarks and possible future works are presented in Sect. 5.

Throughout this paper, we adopt standard notations for Sobolev spaces such as \(W^{m,p}(D)\) on sub-domain \(D\subset \Omega \) equipped with the norm \(\Vert \cdot \Vert _{m,p,D}\) and semi-norm \(|\cdot |_{m,p,D}\). When \(D=\Omega \), we omit the index D; and if \(p=2\), we set \(W^{m,p}(D)=H^m(D), \Vert \cdot \Vert _{m,p,D}=\Vert \cdot \Vert _{m,D}\), and \(|\cdot |_{m,p,D}=|\cdot |_{m,D}\). Notation \(A\lesssim B\) implies that A can be bounded by B multiplied by a constant independent of the mesh size h.

2 One Dimensional Third Order Wave Equations

We consider the following one dimensional third order wave equation

$$\begin{aligned} u_t+u_{xxx}=0 \end{aligned}$$(2.1)

with the periodic boundary condition \(u(0,t)=u(2\pi ,t)\).

2.1 LDG Schemes and Stability

Let \(\Omega =[0,2\pi ]\) and \(0=x_{\frac{1}{2}}<x_{\frac{3}{2}}<\ldots <x_{N+\frac{1}{2}} = 2\pi \) be \(N+1\) distinct points on the interval \({\Omega }\). For any positive integer n, we define \({\mathbb {Z}}_{n}=\{1,\ldots ,n\}\) and denote by

$$\begin{aligned} \tau _j=\left[ x_{j-\frac{1}{2}},x_{j+\frac{1}{2}}\right] ,\quad x_j=\frac{1}{2}\left( x_{j-\frac{1}{2}}+x_{j+\frac{1}{2}}\right) ,\quad j\in {\mathbb {Z}}_N \end{aligned}$$

the cells and cell centers, respectively. Let \(h_j=x_{j+\frac{1}{2}}-x_{j-\frac{1}{2}}\), \({\bar{h}}_j = h_j/2\) and \(h = \displaystyle \max _j\; h_j\). We assume that the mesh is quasi-uniform, i.e., there exists a constant c such that \(h\le c h_j, j\in {\mathbb {Z}}_N\). Define the finite element space

$$\begin{aligned} V_h=\left\{ v:\; v|_{\tau _j}\in {\mathbb {P}}_{k}(\tau _j),\; j\in {\mathbb {Z}}_N\right\} , \end{aligned}$$

where \({\mathbb {P}}_{k}\) denotes the space of polynomials of degree at most k with coefficients as functions of t.
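The mesh quantities introduced above can be computed directly from the node positions. The following is a minimal sketch, not part of the original analysis: the function name `build_mesh` and the returned tuple layout are illustrative assumptions, and the quasi-uniformity constant is taken as the smallest admissible value \(c=h/\min _j h_j\).

```python
import numpy as np

def build_mesh(nodes):
    """Return cell centers x_j, widths h_j, half-widths h_j/2, h = max h_j,
    and the smallest constant c with h <= c * h_j for all j.

    `nodes` holds x_{1/2} < x_{3/2} < ... < x_{N+1/2} (hypothetical input layout).
    """
    nodes = np.asarray(nodes, dtype=float)
    h_j = np.diff(nodes)                       # h_j = x_{j+1/2} - x_{j-1/2}
    if np.any(h_j <= 0):
        raise ValueError("nodes must be strictly increasing")
    centers = 0.5 * (nodes[:-1] + nodes[1:])   # cell centers x_j
    h = h_j.max()                              # mesh size h = max_j h_j
    c = h / h_j.min()                          # quasi-uniformity constant
    return centers, h_j, 0.5 * h_j, h, c

if __name__ == "__main__":
    # A mildly non-uniform mesh on [0, 2*pi], purely for illustration
    x = np.linspace(0.0, 2.0 * np.pi, 9)
    x[1:-1] += 0.05 * np.sin(3.0 * x[1:-1])
    centers, h_j, hbar_j, h, c = build_mesh(x)
    print("h =", h, " quasi-uniformity constant c =", c)
```

A family of meshes is quasi-uniform in the sense used here when this constant stays bounded as the mesh is refined.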

To construct the LDG scheme for (2.1), we rewrite (2.1) as a first order system

$$\begin{aligned} u_t+q_x=0,\quad q-p_x=0,\quad p-u_x=0. \end{aligned}$$(2.2)

The LDG method for (2.2) is to find \(u_h,p_h,q_h\in V_h\) such that for all \(v,\xi ,\eta \in V_h\),

where \((u,v)_j=\int _{\tau _j}uv\, {d}x\), \(v^-|_{j+\frac{1}{2}}\) and \(v^+|_{j+\frac{1}{2}}\) denote the left and right limits of v at the point \(x_{j+\frac{1}{2}}\), respectively, and \({\hat{u}}_h,{\hat{p}}_h,{\hat{q}}_h\) are numerical fluxes. These fluxes, which in general depend on the discontinuous solution from both sides, are the key ingredient to ensure the stability and the local solvability of the intermediate variables \(p_h,q_h\). For stability, \({{\hat{p}}}_h\) should be chosen based on upwinding, while \({{\hat{u}}}_h\) and \({{\hat{q}}}_h\) should be chosen alternately from the left and right. In this paper, we take

In each element \(\tau _{j}, j\in {\mathbb {Z}}_N\), we define

Let

where

Apparently, (2.3) can be rewritten as

Lemma 1

For periodic functions \(v,\xi ,\eta \), there hold

where \((\xi ,v)=\sum _{j=1}^N(\xi ,v)_j\), and \([v]_{j+\frac{1}{2}}=v^+_{j+\frac{1}{2}}-v^-_{j+\frac{1}{2}}\) denotes the jump of v at the point \(x_{j+\frac{1}{2}}, j\in {\mathbb {Z}}_N\).

Here we omit the proof since it can be obtained from a direct calculation. Furthermore, noticing that each identity in (2.3) still holds by taking time derivative, we get the energy stability immediately by letting \((v,\xi ,\eta )=(u_h,p_h,q_h)\) in the above Lemma. Actually, the four equalities in Lemma 1 are consistent with the energy equations in [24] when we choose \((v,\xi ,\eta )=(u_h,p_h,q_h)\).

Remark 1

As a direct consequence of the above lemma,

The above equality plays an important role in our later superconvergence analysis.

2.2 Preliminaries

Define

For any given function \(v\in H_h^1\), we denote by \(P_h^{+}v,P_h^-v\in V_h\) the two special Gauss–Radau projections of v. That is,

Note that the two projections are commonly used in the analysis of DG methods.

Let \(L_{j,m}\) be the standard Legendre polynomial of degree m on the interval \(\tau _j\). Then any function \(v\in H_h^1\) has the following Legendre expansion in \(\tau _j\)

By the definitions of \(P_h^-,P_h^+\) and using the fact that \(L_{j,m}(x_{j+\frac{1}{2}}^-)=1, L_{j,m}(x_{j-\frac{1}{2}}^+)=(-1)^m\) for all \(m\ge 0\), we have

where

Consequently,

where

For all \(v\in H_h^1\), we define the primal function \(D_x^{-1}v\) of v by

Let \(L_m\) be the standard Legendre polynomial of degree m on the interval \([-1,1]\). By the properties of Legendre polynomials and a scaling from \(\tau _j\) to \([-1,1]\), we have

where \( s=\frac{x-x_j}{{{\bar{h}}}_j}\in [-1,1].\)
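The two Legendre facts used in this subsection can be checked numerically on the reference interval. The sketch below is an illustration only: it verifies the endpoint values \(L_m(1)=1\), \(L_m(-1)=(-1)^m\) and the standard identity \(\int _{-1}^{s}L_m(s')\,ds'=\frac{1}{2m+1}\left( L_{m+1}-L_{m-1}\right) (s)\) for \(m\ge 1\). The helper names are hypothetical, and the scaling factor that relates this reference identity to \(D_x^{-1}L_{j,m}\) on \(\tau _j\) depends on the normalization of \(D_x^{-1}\), which is not reproduced here.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def unit(m, size):
    """Coefficient vector of the Legendre polynomial L_m, padded to `size` entries."""
    c = np.zeros(size)
    c[m] = 1.0
    return c

def endpoint_values(m):
    """Return (L_m(1), L_m(-1))."""
    c = unit(m, m + 1)
    return leg.legval(1.0, c), leg.legval(-1.0, c)

def primal_reference(m):
    """Legendre coefficients of s -> int_{-1}^{s} L_m(s') ds'."""
    return leg.legint(unit(m, m + 1), lbnd=-1.0)

if __name__ == "__main__":
    for m in range(1, 5):
        right, left = endpoint_values(m)
        expected = (unit(m + 1, m + 2) - unit(m - 1, m + 2)) / (2 * m + 1)
        ok = np.allclose(primal_reference(m), expected)
        print(m, right, left, ok)
```

Because the antiderivative is a combination of \(L_{m+1}\) and \(L_{m-1}\) only, integrating a function orthogonal to low order polynomials raises the order of orthogonality, which is the mechanism the correction functions rely on.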

2.3 Construction of Special Interpolation Functions

The idea of our superconvergence analysis here is to construct a specially designed interpolation function of the exact solution superclose to the LDG solution. To be more precise, we shall construct special functions \(u_I,p_I,q_I\in V_h\), which depend on the exact solution only, satisfying

for some \(l>0\). Using the supercloseness between \((u_I,p_I,q_I)\) and \((u_h,p_h,q_h)\), we derive the superconvergence of the LDG approximation at some special points as well as for the cell average. As proved in [24], there holds

Therefore, to achieve our superconvergence goal, some extra terms are needed to correct the error between the LDG solution \((u_h,p_h,q_h)\) and the Gauss–Radau projection \((P_h^+u,P_h^+p,P_h^-q)\). This is exactly the idea of correction function (see, e.g., [6, 7]). In other words, we will construct some correction functions \((w_u,w_p,w_q)\) based on the Gauss–Radau projection such that (2.10) holds with \((u_I,p_I,q_I)=(P_h^+u+w_u,P_h^+p+w_p,P_h^-q+w_q)\). Then the construction of the interpolation functions is reduced to the correction functions \(w_v, v=u,p,q\). Consequently, the rest of this subsection is dedicated to the construction of the correction functions \(w_{v}, v=u,p,q\).

We first define a series of functions \(w_{v,i}\in V_h, v=u,p,q, 1\le i\le k\) as follows. In each element \(\tau _j,j\in {\mathbb {Z}}_N\), let \(w_{v,i}, v=u,p,q\) be functions such that for all \(\xi \in {\mathbb {P}}_{k-1}(\tau _j)\),

where we used the notations

Lemma 2

The functions \(w_{v,i},v=u,p,q, 1\le i\le k\) defined in (2.11–2.13) are uniquely determined. Moreover, there hold for all integers n and \(v=u,p,q\)

Proof

We finish our proof by induction. We first suppose in each element \(\tau _j\)

where \(a_{i,m},b_{i,m},c_{i,m}\) are coefficients to be determined. In light of (2.6–2.7), (2.9), we have

where \({{\tilde{p}}}_{j,k}\) is given by (2.8) with \(v=p\). By choosing \(\xi =L_{j,m}, m\le k-1\) in (2.11), we get

Using the fact that \(w_{u,1}\left( x^+_{j-\frac{1}{2}}\right) =0\) yields

Then for any positive integer n,

On the other hand, note that

By denoting \({p}(s,t)=p(x,t),s=(x-x_j)/{{\overline{h}}}_j\in [-1,1]\), we have, from the integration by parts and the properties of Legendre polynomials,

Noticing that \(\partial ^i_s{p}=\left( {{{\bar{h}}}}_j\right) ^i\partial ^i_xp\), we have

Consequently,

which gives

Similarly, we choose \(\xi =L_{j,m}, m\le k-1\) in (2.12–2.13) to obtain

where \({{\bar{q}}}_{j,k}\) and \({{\tilde{u}}}_{j,k}\) are the same as in (2.8) with \(v=q,u_t\) respectively. Therefore,

Noticing that \(p=u_x,q=p_{x}, u_t=-q_{x}\), then (2.14) follows for \(i=1\) and \(v=u,p,q\). Now we suppose (2.14) is valid for all \(i, 1\le i\le k-1\) and prove it also holds for \(i+1\). By induction hypothesis, we have \(w_{p,i}\bot {\mathbb {P}}_{k-i-1}\). Then

By (2.9),

Choosing \(\xi =L_{j,m}, m\le k-1\) in (2.11), we get

Here we use the notation \(b_{i,k-i-2}=b_{i,k-i-1}=0\). Again, using the fact \(w_{u,i+1}\left( x_{j-\frac{1}{2}}^+\right) =0\), we have

Consequently,

and

Following the same arguments, we can prove \(\partial _t^n w_{p,i+1}\bot {\mathbb {P}}_{k-i-2},\ \partial _t^nw_{q,i+1}\bot {\mathbb {P}}_{k-i-2}\), and

Then (2.14) is valid for \(i+1\) and \(v=u,p,q\), and thus (2.14) holds for all \(i\le k\). The proof is complete.\(\square \)

Now we define the correction functions as follows. For any positive integer l, where \(1\le l\le k\), we define in each element \(\tau _j\)

where \(w_{v,i}, v=u,p,q\) are defined by (2.11–2.13). With the correction function, we define

We have the following properties for \((u_I,p_I,q_I)\).

Theorem 1

Let \((u_I,p_I,q_I)\) be defined by (2.17) with \(w_v, v=u,p,q\) given by (2.11–2.13), (2.16). Then

Moreover, there holds for all \(\xi \in V_h\)

Proof

First, by the definition of (2.11–2.13) and (2.16), we easily obtain

Then (2.18) follows from the properties of the Gauss–Radau projections \(P^{+}_h,P_h^-\). Second, by the orthogonality of \(w_{v,i}, 0\le i\le k-1, v=u,p,q\) in (2.14), we have

Then by integration by parts and (2.11),

which yields

Similarly, there holds

Noticing that

then the desired result (2.19) follows.\(\square \)

2.4 Superconvergence

We first prove the supercloseness between the LDG solution and the interpolation function \((u_I,p_I,q_I)\) defined in (2.17). To simplify our analysis, we use the following notation in the rest of this paper

Then the LDG method has the following orthogonal property

Theorem 2

Given any positive l, where \(1\le l\le k\), let u be the solution of (2.1) satisfying \(\partial _t^nu\in W^{k+l+3,\infty }(\Omega )\) for all \( n\le 2\), and \((u_h,p_h,q_h)\) be the solution of (2.3). Suppose \((u_I,p_I,q_I)\in V_h\) is the interpolation function of (u, p, q) defined in (2.17). Then

where \(c(u)=\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu(\tau )\Vert _{k+l+3,\infty }\).

Proof

Choosing \((v,\xi ,\eta )=({{\bar{e}}}_u,{{\bar{e}}}_p, {{\bar{e}}}_q)\) in the formula of Remark 1 and using the Cauchy-Schwarz inequality, we obtain

where

Since the exact solution (u, p, q) also satisfies (2.3) and (2.5), we have, from the orthogonality (2.21) and (2.19)

Similarly, noticing that (2.3) holds by taking time derivative, there holds the orthogonality

Then

To estimate \(I_3\), we take time derivative in the second and third equations of (2.3), and then substitute them into the first equation of (2.3) to obtain

Following the same argument, we derive

Substituting the estimates of \(I_i, i\in {\mathbb {Z}}_4\) into (2.22) and integrating with respect to time between 0 to t yields

Then the desired result follows from (2.14), the Gronwall inequality and the fact that

\(\square \)

As indicated by the above theorem, to achieve our superconvergence goal in (2.10), the initial solution should be chosen such that the initial error is small enough to be compatible with the superconvergence error. To estimate the initial error, we need the following result.

Lemma 3

Given any function \(v\in H_h^1\), suppose \(\xi \in V_h\) satisfies

where \({{\hat{\xi }}}=\xi ^+\) or \(\xi ^-\). Then

Proof

If \({{\hat{\xi }}}=\xi ^+\), then

Choosing \(\eta =\xi _x-cL_{j,k}\) in the above equation, where \(c=\xi _x\left( x^-_{j+\frac{1}{2}}\right) \), we obtain

Here in the last step, we have used the inverse inequality. Then (2.23) follows. Similarly, if \({{\hat{\xi }}}=\xi ^-\), we have

Then (2.23) still holds by taking \(\eta =\xi _x-cL_{j,k}\) in the above identity with \(c=\xi _x\left( x^+_{j-\frac{1}{2}}\right) (-1)^k\). This finishes our proof. \(\square \)
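The reason for the particular test function in the proof of Lemma 3 can be spelled out as a short worked step. The computation below is a sketch under the assumptions already stated in Sect. 2.2, namely \(L_{j,k}(x^-_{j+\frac{1}{2}})=1\), \(L_{j,k}(x^+_{j-\frac{1}{2}})=(-1)^k\), and the orthogonality of \(L_{j,k}\) to \({\mathbb {P}}_{k-1}(\tau _j)\); it only shows that \(\eta \) vanishes at the endpoint where a boundary term would otherwise appear, and that testing against \(\eta \) still recovers the full norm of \(\xi _x\).

```latex
% Case \hat{\xi}=\xi^+ : \eta=\xi_x-cL_{j,k}, with c=\xi_x(x^-_{j+1/2})
\begin{aligned}
\eta\left(x^-_{j+\frac{1}{2}}\right)
  &= \xi_x\left(x^-_{j+\frac{1}{2}}\right)-c\,L_{j,k}\left(x^-_{j+\frac{1}{2}}\right)
   = c-c\cdot 1 = 0,\\
(\xi_x,\eta)_j
  &= \Vert \xi_x\Vert_{0,\tau_j}^2-c\,(\xi_x,L_{j,k})_j
   = \Vert \xi_x\Vert_{0,\tau_j}^2,
  \qquad\text{since } \xi_x\in\mathbb{P}_{k-1}(\tau_j).
\end{aligned}

% Case \hat{\xi}=\xi^- : c=\xi_x(x^+_{j-1/2})(-1)^k
\begin{aligned}
\eta\left(x^+_{j-\frac{1}{2}}\right)
  &= \xi_x\left(x^+_{j-\frac{1}{2}}\right)
     -\xi_x\left(x^+_{j-\frac{1}{2}}\right)(-1)^k(-1)^k = 0 .
\end{aligned}
```

In both cases \(\Vert \eta \Vert _{0,\tau _j}\) is controlled by \(\Vert \xi _x\Vert _{0,\tau _j}\) up to a constant, because \(|c|\) is bounded through the inverse inequality, which is where that inequality enters the proof.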

Lemma 4

Given any positive l, where \(1\le l\le k\), suppose \(\partial _t^nu|_{t=0}\in W^{k+l+3}\) for \(n=0,1\), and the initial solution is taken such that

where \(w^l_{q}\) is defined by (2.16). Then

Proof

By the special initial discretization and (2.19), we have at time \(t=0\)

Then

which yields, together with (2.14),

We next estimate \({{\bar{e}}}_p(x,0)\). On the one hand, we have, from the orthogonality \((e_q,\eta )_j+{{{\mathcal {D}}}}_j(e_p,\eta ,e_p^+)=0, \forall \eta \in V_h\) and (2.19),

Thanks to the special initial discretization \({{\bar{e}}}_q(x,0)=0\) and the conclusion in Lemma 3,

On the other hand, as a direct consequence of the LDG scheme (2.3),

Then

Here in the last step, we have used the orthogonality \(w_{p,i}\bot {\mathbb {P}}_0\) for \(i<l\le k\). Substituting (2.26) and the following formula

into (2.25), we derive

Then

and thus,

Consequently,

Following the same argument, we can show

This finishes our proof. \(\quad \square \)

As a direct consequence of Theorem 2, Lemma 4 and the estimates for \(w_{v,i}, v=u,p,q\) in (2.14), we have the following supercloseness results.

Corollary 1

Given any positive l, where \(1\le l\le k\), let u be the solution of (2.1) satisfying \(\partial _t^nu\in W^{k+l+3,\infty }(\Omega )\) for all \( n\le 2\), and \((u_I,p_I,q_I)\in V_h\) be the interpolation function of (u, p, q) defined in (2.17). Suppose \((u_h,p_h,q_h)\) is the solution of (2.3) with the initial solution satisfying (2.24). Then for all \(t\in [0,T]\)

Especially, by choosing \(l=1\) we get

2.4.1 Superconvergence for the Cell Averages

We first denote by \(\Vert e_v\Vert _c, v=u,p,q\) the cell averages of the function \(e_v\). That is

We have the following superconvergence results for the cell averages.

Theorem 3

Let u be the solution of (2.1) satisfying \(\partial _t^nu\in W^{2k+3,\infty }(\Omega )\) for all \( n\le 2\), and \((u_h,p_h,q_h)\) be the solution of (2.3) with the initial solution satisfying (2.24) for \(l=k\). Then

Proof

Let \((u_I,p_I,q_I)=(u^k_I,p^k_I,q^k_I)\). By the orthogonality of \(w_{v,i},i\le k-1\),

Then we have, from the Cauchy-Schwarz inequality, the first inequality of (2.14) and (2.27),

Then (2.29) follows. \(\square \)

Remark 2

As a direct consequence of the above theorem, we have the following superconvergence results for the domain average of \(e_u\).

Furthermore, if we take \( (v,\xi ,\eta )=(0,1,0),(0,0,1)\) in the equation \(({{{\mathcal {A}}}{-}\mathcal {B}})(e_u,e_p,e_q;v,\xi ,\eta )=0\) and use the periodic boundary condition, then we immediately get

2.4.2 Superconvergence of Numerical Fluxes at Nodes

We denote by \(e_{v,f}\) the maximal error of numerical flux at mesh nodes for the function \(e_v\), where \(v=u,p,q\). To be more precise,

Theorem 4

Let u be the solution of (2.1) satisfying \(\partial _t^nu\in W^{2k+3,\infty }(\Omega )\) for all \( n\le 2\), and \((u_h,p_h,q_h)\) be the solution of (2.3) with the initial solution satisfying (2.24) for \(l=k\). Then

Proof

First, note that (2.25–2.26) hold for any \(t\in [0,T]\). By the same argument as in Lemma 4, we get

Similarly, there hold

Second, by the orthogonality \(({{{\mathcal {A}}}-\mathcal {B}})_j(e_u,e_p,e_q;v,\xi ,\eta )=0, \forall v,\xi ,\eta \in V_h\) and (2.18–2.19),

Then the conclusion in Lemma 3 gives

which yields, together with (2.14) and (2.27)

and thus,

Then the desired result follows by taking \(l=k\). \(\square \)

2.4.3 Superconvergence of Function Value Approximation at Interior Radau Points

Let \({{{\mathcal {L}}}}_{j,m},{{{\mathcal {R}}}}_{j,m}, m\in {\mathbb {Z}}_{k}\) be the k interior left and right Radau points on the interval \(\tau _j, j\in {\mathbb {Z}}_N\), respectively. That is, \({{{\mathcal {L}}}}_{j,m}, m\in {\mathbb {Z}}_k\) are zeros of \(L_{j,k+1}+L_{j,k}\) except the point \(x=x_{j-\frac{1}{2}}\), and \({{{\mathcal {R}}}}_{j,m}, m\in {\mathbb {Z}}_k\) are zeros of \(L_{j,k+1}-L_{j,k}\) except the point \(x=x_{j+\frac{1}{2}}\). We have the following superconvergence result at those interior Radau points.

Theorem 5

Let u be the solution of (2.1) satisfying \(\partial _t^nu\in W^{k+4,\infty }(\Omega )\) for all \( n\le 2\), and \((u_h,p_h,q_h)\) be the solution of (2.3) with the initial solution satisfying (2.24) for \(l=1\). Then

where

Proof

Choosing \(l=1\) in (2.31–2.32), there hold for all \(x\in \tau _j\)

and thus,

By the triangle inequality and (2.14), we have

For any smooth function \(v\in W^{k+2,\infty }\), note that (see, e.g., [6])

Then (2.33) follows. The proof is complete.\(\square \)
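The projection property quoted from [6] in the proof above can be observed numerically on a single cell. The following sketch is an illustration under stated assumptions, not the authors' code: it takes \(P_h^-v\) to be the Gauss–Radau projection that is orthogonal to \({\mathbb {P}}_{k-1}(\tau _j)\) and interpolates v at the right endpoint, computes the interior right Radau points as the zeros of \(L_{k+1}-L_{k}\) other than \(s=1\), and estimates the decay rate of \(v-P_h^-v\) at those points when the cell width is halved. The function names, the test function \(\sin x\), and the cell location are all hypothetical choices.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def right_radau_points(k):
    """Zeros of L_{k+1} - L_k on [-1, 1], with the endpoint s = 1 removed."""
    c = np.zeros(k + 2)
    c[k], c[k + 1] = -1.0, 1.0
    roots = np.sort(np.real(leg.legroots(c)))
    return roots[:-1]

def project_minus(v, xc, h, k, nquad=30):
    """Legendre coefficients (in s) of P_h^- v on the cell [xc - h/2, xc + h/2]."""
    s, w = leg.leggauss(nquad)                 # Gauss-Legendre nodes and weights
    vals = v(xc + 0.5 * h * s)
    coef = np.zeros(k + 1)
    for m in range(k):
        Lm = leg.legval(s, np.eye(k + 1)[m])   # L_m at the quadrature nodes
        coef[m] = 0.5 * (2 * m + 1) * np.sum(w * vals * Lm)
    # L_m(1) = 1 for every m, so matching v at the right endpoint fixes the last coefficient
    coef[k] = v(xc + 0.5 * h) - coef[:k].sum()
    return coef

def radau_error(v, xc, h, k):
    """Maximum of |v - P_h^- v| over the interior right Radau points of the cell."""
    r = right_radau_points(k)
    coef = project_minus(v, xc, h, k)
    return np.max(np.abs(v(xc + 0.5 * h * r) - leg.legval(r, coef)))

if __name__ == "__main__":
    k = 2
    e_coarse = radau_error(np.sin, 1.0, 0.2, k)
    e_fine = radau_error(np.sin, 1.0, 0.1, k)
    print("observed order at right Radau points:", np.log2(e_coarse / e_fine))
```

For smooth v the observed order should be close to \(k+2\), one order higher than the optimal \(k+1\) rate of the projection away from these points. The left Radau points and \(P_h^+\) can be treated in the same way by mirroring \(s\mapsto -s\).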

2.4.4 Superconvergence of Derivative Value Approximation at Interior Radau Points

Before studying the derivative error at interior Radau points, we state the following result, which plays a fundamental role in the forthcoming superconvergence analysis.

Lemma 5

Let \(v, \xi \in V_h\) satisfy

where \({{\hat{\xi }}}=\xi ^+\) or \(\xi ^-\). Then there hold for \({{\hat{\xi }}}=\xi ^-\)

and for \({{\hat{\xi }}}=\xi ^+\)

Proof

In each element \(\tau _j, j\in {\mathbb {Z}}_N\), we suppose \(\xi \in V_h\) has the following Legendre expansion

where \(\{c_{m}\}_{m=1}^k\) are constants. Then

Similarly, since \(v|_{\tau _j}\in {\mathbb {P}}_{k}(\tau _j)\) for all \(v\in V_h\), then there exist a set of coefficients \(\{b_{m}\}_{m=1}^{k+1}\) such that

Then for \({{\hat{\xi }}}=\xi ^-\),

Choosing \(\eta =L_{j,n}+L_{j,n-1}, n\in {\mathbb {Z}}_k\) in the above identity and using the orthogonality

where \(\delta \) denotes the Kronecker symbol, we immediately obtain

and thus

where in the last step, we have used the identity (see, [30])

Then (2.35) follows. Similarly, if \({{\hat{\xi }}}=\xi ^+\), by choosing the left Radau polynomial \(L_{j,m}+L_{j,m-1}\) to replace the right Radau polynomial \(L_{j,m}-L_{j,m-1}\) in the formula of \(v, \xi _x\), and using the orthogonality

we get the desired result (2.36) directly. The proof is complete.\(\square \)

Now we are ready to show the superconvergence result for the derivative approximation of the LDG solution.

Theorem 6

Let u be the solution of (2.1) and \((u_h,p_h,q_h)\) be the solution of (2.3). Then at the interior Radau points \({{{\mathcal {R}}}}_{j,m}, {{{\mathcal {L}}}}_{j,m}, (j,m)\in {\mathbb {Z}}_N\times {\mathbb {Z}}_k\), there holds

Therefore, if the exact solution u satisfies \(\partial _t^nu\in W^{k+4,\infty }(\Omega )\) for all \( n\le 2\), and the initial solution is given by (2.24) with \(l=1\), then

where for any \(v=u,p,q\),

Proof

In light of the numerical scheme (2.3), we have for all \(\eta \in V_h\)

which yields, together with (2.35–2.36),

Then (2.37) follows. Consequently,

By the triangle inequality, (2.14), (2.37) and (2.34), and the inverse inequality

and thus

Then the second inequality of (2.38) follows by choosing \(l=1\). This finishes our proof. \(\square \)

Remark 3

As a direct consequence of (2.39) and (2.27), we can also get the following point-wise derivative error estimate for the variable q at the interior left Radau points:

for all \(1\le l\le k\). Apparently, for \(k\ge 2\), we immediately derive a superconvergence rate of \(k+2\) for the point-wise derivative error \(e_{qx}^{{{{\mathcal {L}}}}}\). Our numerical result demonstrates that the convergence rate \(k+2\) is also valid for \(k=1\), which is \(\frac{1}{2}\) order higher than the theoretical result.

Remark 4

We would like to point out that the methodology we adopt in Sect. 2 for the problem (2.1) can be generalized to other third order linear equations such as the linearized KdV equation

Similarly, superconvergence results are still valid for the numerical fluxes choice

2.5 Initial Discretization

In this subsection, we would like to demonstrate how to implement the initial discretization as it is of great importance in our superconvergence analysis. We divide the process into the following steps:

1. In each element \(\tau _j\), let \(q_0=\partial _x^2u_0\), calculate \(P_h^-q_0\), and compute \(w_{q,i}, i\in {\mathbb {Z}}_l\) by (2.11–2.13);

2. Set \(q_h=P_h^-q_0+\sum _{i=1}^lw_{q,i}\) and compute \(\partial _x(p_h-P_h^+p)\) from the equation

$$\begin{aligned} (q-q_h,v)_j=-{{{\mathcal {D}}}}_j\left( P_h^+p-p_h,v; (P_h^+p-p_h)^+\right) ,\quad v\in V_h; \end{aligned}$$

3. Take \(S_p=(P_h^+p-p_h)(x^+_{j-\frac{1}{2}})=0\) and calculate \(P_h^+p-p_h\) from the expression of \(S_p\) and \(\partial _x(p_h-P_h^+p)\);

4. Compute the value of \(e_p\) and calculate \(\partial _x(u_h-P_h^+u)\) from the identity

$$\begin{aligned} (e_p,v)_j=-{{{\mathcal {D}}}}_j\left( P_h^+u-u_h,v; (P_h^+u-u_h)^+\right) ,\quad v\in V_h; \end{aligned}$$

5. Take \(S_u=\left( P_h^+u-u_h\right) \left( x^+_{j-\frac{1}{2}}\right) =0\) and calculate \(P_h^+u-u_h\) from the expression of \(S_u\) and \(\partial _x\left( u_h-P_h^+u\right) \);

6. Recover \(u_h=P_h^+u-\left( P_h^+u-u_h\right) \).

As pointed out in [29], we can also choose the condition \(\int _{\Omega } (v_h-P_h^+v) dx=0\) to replace the condition \(S_v=0, v=u,p\) in the process of the above initial discretization.
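Steps 3 and 5 above both recover a polynomial on \(\tau _j\) from its x-derivative together with a zero value at the left endpoint \(x^+_{j-\frac{1}{2}}\). A minimal sketch of that single operation follows, assuming the derivative is stored as Legendre coefficients in the reference variable \(s=(x-x_j)/{{\bar{h}}}_j\). The function name and the storage convention are illustrative assumptions and are not taken from the paper, and the sign convention (which of \(P_h^+u-u_h\) or \(u_h-P_h^+u\) is stored) is left to the caller.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def recover_from_derivative(dcoef, h):
    """Recover E on a cell of width h from dE/dx and the condition E(x_{j-1/2}^+) = 0.

    dcoef : Legendre coefficients, in s on [-1, 1], of dE/dx.
    Returns the Legendre coefficients (in s) of E.
    """
    dcoef = np.asarray(dcoef, dtype=float)
    # dx = (h/2) ds, so the antiderivative in x is (h/2) times the one in s;
    # lbnd=-1 selects the antiderivative that vanishes at the left endpoint.
    return leg.legint(dcoef, lbnd=-1.0, scl=0.5 * h)

if __name__ == "__main__":
    h = 0.4
    # Manufactured check: E(s) = (s + 1)^2, hence dE/dx = (2/h) * (2 + 2 s)
    dcoef = (2.0 / h) * np.array([2.0, 2.0])
    E = recover_from_derivative(dcoef, h)
    print("E at left endpoint:", leg.legval(-1.0, E))
    print("E at s = 0.5:", leg.legval(0.5, E))
```

Fixing the integration constant through the left endpoint value matches the condition \(S_v=0\). The alternative integral condition mentioned above would instead determine the constant from a mean value, which changes only the zeroth coefficient.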

3 One Dimensional Linear Fourth Order Equations

In this section, we use the following one dimensional fourth order equation

$$\begin{aligned} u_t+u_{xxxx}=0 \end{aligned}$$(3.1)

to present and analyze the superconvergence of LDG methods for PDEs containing fourth order derivatives. The same technique can also be applied to other fourth order equations such as the linearized Cahn–Hilliard equation

Without loss of generality, we still consider here the periodic boundary condition.

3.1 LDG Schemes and Stability

We first rewrite (3.1) as a first order linear system

$$\begin{aligned} u_t+r_x=0,\quad r-q_x=0,\quad q-p_x=0,\quad p-u_x=0. \end{aligned}$$(3.2)

Then the LDG method for (3.1) is to find \((u_h,p_h,q_h,r_h)\in V_h\) such that for all \(v,\xi ,\eta ,\psi \in V_h\),

Here we take the alternating numerical fluxes

or

We slightly modify \({{{\mathcal {A}}}}_j\) and \({{{\mathcal {B}}}}_j\) as follows:

where the numerical fluxes \(({{\hat{u}}},{{\hat{p}}},{{\hat{q}}},{{\hat{r}}})\) are taken as (3.4) or (3.5). Since the exact solutions (u, p, q, r) also satisfy (3.3), we have the following orthogonality

where we still use the notation (2.20) for all \(v=u,p,q,r\). Furthermore, for periodic functions \(v,\xi ,\eta ,\psi \), a direct calculation yields

Then the stability of the numerical scheme follows by choosing \((v,\xi ,\eta ,\psi )=(u_h,p_h,q_h,r_h)\).

3.2 Analysis

This subsection is dedicated to the construction of the special interpolation function \((u_I,p_I,q_I,r_I)\) superclose to the LDG solution \((u_h,p_h,q_h,r_h)\). For simplicity, we only consider the flux choice (3.4) in the rest of this paper. The same argument can be applied to the flux (3.5).

We first define a series of correction functions \(w_{v,i}, v=u,p,q,r\) as follows.

where \(\xi \in {\mathbb {P}}_{k-1}\), and

Following the same arguments as in Lemma 2, we have for all integers n

For any positive l, where \(1\le l\le k\), we define

and

Apparently, we have from (3.8) and the properties of \(P_h^+,P_h^-\),

Moreover, we follow the same argument as in Theorem 1 to obtain

3.3 Superconvergence

Lemma 6

Given any positive integer l, where \(1\le l\le k\), suppose the initial solution is taken such that

where \(w^l_{q}\) is defined by (3.10). Then

The proof is similar to that of Lemma 4 and is omitted here. The initial discretization is implemented in the same way as in Sect. 2.5.

Theorem 7

Given any positive integer l, where \(1\le l\le k\), let u be the solution of (3.1) satisfying \(\partial _t^nu\in W^{k+l+4,\infty }(\Omega )\) for all \( n\le 2\), and \((u_I,p_I,q_I)\in V_h\) be the interpolation function of (u, p, q) defined in (3.11). Suppose \((u_h,p_h,q_h)\) is the solution of (3.3) with the initial solution satisfying (3.14). Then for all \(t\in (0,T]\)

Proof

First, taking \((v,\xi ,\eta ,\psi )=({{\bar{e}}}_u,{{\bar{e}}}_p,{{\bar{e}}}_q,{{\bar{e}}}_r)\) in (3.7)

where

By the orthogonality (3.6) and (3.13), we have

Since (3.3) still holds by taking time derivative, we have

which yields, together with (3.13),

Similarly, we can prove

Combining the estimates of \(I_i, i\in {\mathbb {Z}}_4\) together and integrating with respect to time between 0 to t yields

By (3.9) and the result in Lemma 6, the desired result follows. This finishes our proof. \(\square \)

Following the same argument as for the third order case, we obtain the following superconvergence results.

Theorem 8

Let u be the solution of (3.1) satisfying \(\partial _t^nu\in W^{2k+4,\infty }(\Omega )\) for all \(n\le 2\), and \((u_h,p_h,q_h,r_h)\) be the solution of (3.3) with the initial solution satisfying (3.14) with \(l=k\). Then for flux choice (3.4),

-

The errors of numerical fluxes at nodes and for the cell averages are superconvergent, with an order of \(2k+1\). That is, there hold for all variables \(v=u,p,q,r\),

$$\begin{aligned} e_{v,f}:= & {} \max _j \left| {{\hat{e}}}_v\left( x_{j+\frac{1}{2}},t\right) \right| \lesssim h^{2k+1}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu\Vert _{2k+4,\infty }(\tau ),\\ \Vert e_v\Vert _c\lesssim & {} h^{2k+1}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu\Vert _{2k+4,\infty }(\tau ). \end{aligned}$$ -

The LDG solution is \((k+2)\)-th order superconvergent to a particular projection of the exact solution, i.e.,

$$\begin{aligned}&\Vert u_h-P_h^-u\Vert _0+ \Vert p_h-P_h^+p\Vert _0+ \Vert q_h-P_h^-q\Vert _0+ \Vert r_h-P_h^+r\Vert _0\\&\quad \lesssim h^{k+2}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu\Vert _{k+5,\infty }(\tau ). \end{aligned}$$

This phenomenon is usually called supercloseness.

-

The function value approximation is \((k+2)\)-th order superconvergent at all interior right Radau points for the solution u and the auxiliary variable q; and at all interior left Radau points for the auxiliary variables p, r, i.e.,

$$\begin{aligned} e_{u}^{{{\mathcal {R}}}}+ e_{p}^{{{\mathcal {L}}}}+e_{q}^{{{\mathcal {R}}}}+e_{r}^{{{\mathcal {L}}}}\lesssim h^{k+2}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu\Vert _{k+5,\infty }(\tau ). \end{aligned}$$

-

At all interior left Radau points, the derivative error for the solution u and for the auxiliary variable q equals the function value error for the auxiliary variables p and r, respectively; and at the interior right Radau points, the derivative error for the auxiliary variables p and r equals the function value error for the auxiliary variable q and for \(-u_t\), respectively. That is,

$$\begin{aligned} e_{ux}^{{{\mathcal {L}}}}=e_{p}^{{{\mathcal {L}}}}, \quad e_{qx}^{{{\mathcal {L}}}}=e_{r}^{{{\mathcal {L}}}}, \quad e_{px}^{{{\mathcal {R}}}}=e_{q}^{{{\mathcal {R}}}},\quad e_{rx}^{{{\mathcal {R}}}}=-e_{u_t}^{{{\mathcal {R}}}}. \end{aligned}$$

Consequently, the derivative value approximation is \((k+2)\)-th order superconvergent at all interior left Radau points for the solution u and the auxiliary variable q; and at all interior right Radau points for the auxiliary variables p, r, i.e.,

$$\begin{aligned} e_{ux}^{{{\mathcal {L}}}}+ e_{px}^{{{\mathcal {R}}}}+e_{qx}^{{{\mathcal {L}}}}+e_{rx}^{{{\mathcal {R}}}}\lesssim h^{k+2}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert \partial _t^nu\Vert _{k+5,\infty }(\tau ). \end{aligned}$$

-

The domain averages of all auxiliary variables p, q, r are exact, and the domain average of the solution u itself is \((2k+1)\)-th order superconvergent, i.e.,

$$\begin{aligned} \Vert e_p\Vert _d=\Vert e_q\Vert _d=\Vert e_r\Vert _d=0,\quad \Vert e_u\Vert _d\lesssim h^{2k+1}\sup _{\tau \in [0,t]}\sum _{n=0}^2\Vert u\Vert _{2k+4,\infty }(\tau ). \end{aligned}$$
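The Radau points appearing in the theorem can be computed numerically. The following is a minimal sketch, assuming the usual convention that the right Radau points on the reference cell \([-1,1]\) are the zeros of \(L_{k+1}-L_k\) and the left Radau points are the zeros of \(L_{k+1}+L_k\), where \(L_k\) is the Legendre polynomial of degree k, and that "interior" means the cell endpoint is excluded. The precise definition is given in Sect. 2.4; the function names below are hypothetical and serve only as illustration.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def radau_points(k, side="right"):
    """Zeros of L_{k+1} - L_k (right) or L_{k+1} + L_k (left) on [-1, 1].

    Assumed convention; the right family contains x = 1 and the left
    family contains x = -1.
    """
    coef = np.zeros(k + 2)          # Legendre-series coefficients
    coef[k + 1] = 1.0               # + L_{k+1}
    coef[k] = -1.0 if side == "right" else 1.0
    return np.sort(np.real(leg.legroots(coef)))


def interior_radau_points(k, side="right"):
    """Radau points with the cell endpoint removed (k points per cell)."""
    pts = radau_points(k, side)
    return pts[:-1] if side == "right" else pts[1:]


def map_to_cell(pts, a, b):
    """Affine map of reference points in [-1, 1] to the cell [a, b]."""
    return 0.5 * (a + b) + 0.5 * (b - a) * np.asarray(pts)


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, interior_radau_points(k, "right"), interior_radau_points(k, "left"))
```

Point-wise errors such as \(e_{u}^{{{\mathcal {R}}}}\) would then be evaluated at the images of these points in each cell, obtained with `map_to_cell`.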

Remark 5

Due to the estimate in (3.15) and the stability inequality (3.16), we have

Following what we have done for the third order equation, we derive

It is this error bound for the \(L^{\infty }\) norm of \(({{\bar{e}}}_u)_t \) that gives us the \(k+2\)-th order superconvergence for the error \(e_{rx}^{{{\mathcal {R}}}}\) (point-wise derivative error at the interior right Radau points for the variable r).

4 Numerical Examples

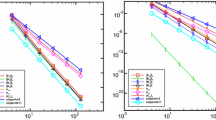

In this section, we present numerical examples to verify our theoretical findings. In our experiments, we measure several errors, including the errors of the numerical fluxes at nodes and of the cell averages, the function value error and the derivative error at Radau points, and the error (supercloseness) between the LDG solution and the Gauss–Radau projection of the exact solution itself and the auxiliary variables. All examples are tested with piecewise polynomials \({\mathbb {P}}_{k}\) with \(k=1,2,3\).

To reduce the time discretization error, we use a fourth-order time discretization, described as follows. For the ordinary differential equation \(u_t+f(u)=0\), let \(t_0=0<t_1<\ldots <t_M=T\) and \(dt=t_{n+1}-t_n\). Let \(u^0=u(0)\) and let \(u^{n+1}\) be defined as

with \(u^{n,1}\) and \(u^{n,2}\) given as solutions of the coupled system of equations

where \(a_{11}=a_{22}=\frac{1}{4}, a_{12}=\frac{1}{4}-\frac{\sqrt{3}}{6}, a_{21}=\frac{1}{4}+\frac{\sqrt{3}}{6}\). Existence of \(\{u^n\}\) can be established by using a variant of the Brouwer Fixed-Point Theorem (see, e.g., [3]).
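The coefficients \(a_{ij}\) above form the Butcher matrix of the two-stage Gauss–Legendre implicit Runge–Kutta method, which is fourth-order accurate. The following sketch illustrates one way to implement such a step for a linear semi-discrete system \(u_t+Lu=0\) with a constant matrix L. It is written in the standard Butcher form with weights \(b_1=b_2=\frac{1}{2}\), which is an assumption, since the displayed update formulas are not reproduced here. The names `gauss2_step` and `integrate` are hypothetical, and the dense solve is chosen for brevity rather than efficiency.

```python
import numpy as np

SQRT3 = np.sqrt(3.0)
# Butcher matrix of the two-stage Gauss-Legendre method
A = np.array([[0.25, 0.25 - SQRT3 / 6.0],
              [0.25 + SQRT3 / 6.0, 0.25]])
B = np.array([0.5, 0.5])  # standard weights (assumed form of the update)


def gauss2_step(L, u, dt):
    """Advance u_t + L u = 0 by one step of size dt (L constant matrix)."""
    m = u.size
    I = np.eye(m)
    # Coupled stage equations: U_i + dt * sum_j a_ij L U_j = u, i = 1, 2
    M = np.block([[I + dt * A[0, 0] * L, dt * A[0, 1] * L],
                  [dt * A[1, 0] * L, I + dt * A[1, 1] * L]])
    U = np.linalg.solve(M, np.concatenate([u, u]))
    U1, U2 = U[:m], U[m:]
    # Update: u^{n+1} = u^n - dt * sum_i b_i L U_i
    return u - dt * (B[0] * (L @ U1) + B[1] * (L @ U2))


def integrate(L, u0, T, steps):
    """Apply `steps` uniform steps of gauss2_step on [0, T]."""
    u = np.array(u0, dtype=float)
    dt = T / steps
    for _ in range(steps):
        u = gauss2_step(L, u, dt)
    return u


if __name__ == "__main__":
    # Harmonic oscillator: exact solution (cos t, sin t)
    L = np.array([[0.0, 1.0], [-1.0, 0.0]])
    exact = np.array([np.cos(1.0), np.sin(1.0)])
    for steps in (10, 20, 40):
        err = np.linalg.norm(integrate(L, [1.0, 0.0], 1.0, steps) - exact)
        print(steps, err)
```

Halving the step size should reduce the error by a factor of about 16, consistent with fourth-order accuracy in time.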

Example 1

We consider the following equation with the periodic boundary condition

The exact solution to this problem is

We take (2.4) as our numerical fluxes, and construct our meshes by equally dividing the interval \([0,2\pi ]\) into N subintervals, where \(N=2^j\) with \(j=3,\ldots , 6\). We compute the numerical solution at time \(T=1\) with time step \(dt=0.0001\,h^2\). The initial discretization is given in the same way as in Sect. 2.5.

We list in Tables 1, 2 and 3 the various errors for the LDG approximation, where \((\xi _u,\xi _p,\xi _q)=(u_h-P_h^+u,p_h-P_h^+p,q_h-P_h^-q)\) and other errors are defined in Sect. 2.4. From Tables 1, 2 and 3 we observe, for the solution u itself, a convergence rate of \(k+2\) for \(\Vert \xi _u\Vert _0\) and \(e_{u}^{{{\mathcal {L}}}}\) (the function value error at interior left Radau points), \(k+1\) for \(e_{ux}^{{{\mathcal {R}}}}\) (the derivative error at interior right Radau points), and \(2k+1\) for the numerical flux at nodes and for the cell averages. We also observe similar superconvergence results for the auxiliary variables p, q. Furthermore, as indicated by Theorem 6, the error data for \(e_{px}^{{{\mathcal {R}}}}\) (the derivative error at interior right Radau points for the auxiliary variable p) are the same as those for \(e_{q}^{{{\mathcal {R}}}}\), and thus we observe a convergence rate of \(k+2\) for \(e_{px}^{{{\mathcal {R}}}}\). Similarly, we also observe that the convergence rate of \(e_{qx}^{{{\mathcal {L}}}}\) is \(k+2\). These superconvergence results are consistent with the theoretical findings in Theorems 3–6, and (2.28) in Corollary 1.
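Convergence rates of this kind are typically read off from errors on successively refined meshes. The sketch below shows the standard computation, assuming each mesh has twice as many cells as the previous one, as with \(N=2^j\). The error values are synthetic, generated from \(Ch^{2k+1}\) purely for illustration, and are not data from the tables; the name `observed_rates` is hypothetical.

```python
import numpy as np


def observed_rates(errors):
    """Observed orders log2(e_N / e_{2N}) for errors on meshes N, 2N, 4N, ..."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])


if __name__ == "__main__":
    k = 2
    N = 2 ** np.arange(3, 7)            # N = 8, 16, 32, 64
    h = 2.0 * np.pi / N                 # uniform mesh size on [0, 2*pi]
    synthetic = 0.5 * h ** (2 * k + 1)  # synthetic errors, NOT measured data
    print(observed_rates(synthetic))
```

For measured errors the observed rates only approach the theoretical order as the mesh is refined, and they can degrade once round-off or the time discretization error dominates.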

Example 2

We consider the following equation with the periodic boundary condition

The exact solution to this problem is

We choose (3.4) as our numerical fluxes, and construct our meshes by equally dividing each of the intervals \([0,\frac{3\pi }{4}]\) and \([\frac{3\pi }{4},2\pi ]\) into N / 2 subintervals, \(N=2^m, m=3,\ldots , 7\). We compute the same errors as in Example 1 at time \(t=0.1\). The initial discretization is obtained in a similar way to that in Sect. 2.5. The computational results for the LDG solution itself and all the auxiliary variables are given in Tables 4, 5 and 6, respectively, where

From Tables 4, 5 and 6, we observe results similar to those in Example 1. In particular, we observe that \(e_{ux}^{{{\mathcal {L}}}}\) and \(e_{qx}^{{{\mathcal {L}}}}\) are exactly the same as \(e_{p}^{{{\mathcal {L}}}}\) and \(e_{r}^{{{\mathcal {L}}}}\), respectively, and that \(e_{px}^{{{\mathcal {R}}}}\) equals \(e_{q}^{{{\mathcal {R}}}}\). All these results confirm the theoretical results in Theorem 8.
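The nonuniform mesh used in Example 2 can be generated as in the following sketch, which assumes only what is stated above: the two intervals \([0,\frac{3\pi }{4}]\) and \([\frac{3\pi }{4},2\pi ]\) are each divided equally into N / 2 cells. The function name `two_zone_mesh` is hypothetical.

```python
import numpy as np


def two_zone_mesh(N, a=0.0, mid=0.75 * np.pi, b=2.0 * np.pi):
    """Cell interfaces of a mesh with N/2 equal cells on [a, mid] and on [mid, b]."""
    if N % 2 != 0:
        raise ValueError("N must be even")
    left = np.linspace(a, mid, N // 2 + 1)
    right = np.linspace(mid, b, N // 2 + 1)
    # Drop the duplicated interface at x = mid
    return np.concatenate([left, right[1:]])


if __name__ == "__main__":
    for m in range(3, 8):
        nodes = two_zone_mesh(2 ** m)
        h = np.diff(nodes)
        print(2 ** m, h.min(), h.max())
```

The ratio of the largest to the smallest cell size stays fixed at 5/3 under refinement, so the mesh is quasi-uniform although it is not uniform.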

5 Concluding Remarks

In this paper, we study the superconvergence behavior of LDG methods for partial differential equations with higher order derivatives. Our model problems are third and fourth order equations. We prove a superconvergence order of \(2k+1\) for the error of the numerical fluxes at nodes as well as for the cell averages, and \(k+2\) for the function value approximation. We also prove that the LDG solution is superclose to a particular projection of the exact solution, with an order of \(k+2\). All results hold for the exact solution u and the auxiliary variables. The key idea in deriving the superconvergence results is to use correction functions to design special interpolation functions which, by the energy inequality, are superclose to the LDG solution. An unexpected and new result is the superconvergence rate of \(k+2\) for the derivative error at a class of interior Radau points, which is two orders higher than the optimal convergence rate. Our ongoing work includes the superconvergence study of the LDG methods for nonlinear KdV and Cahn–Hilliard equations.

References

Adjerid, S., Devine, K.D., Flaherty, J.E., Krivodonova, L.: A posteriori error estimation for discontinuous Galerkin solutions of hyperbolic problems. Comput. Methods Appl. Mech. Eng. 191, 1097–1112 (2002)

Adjerid, S., Massey, T.C.: Superconvergence of discontinuous Galerkin solutions for a nonlinear scalar hyperbolic problem. Comput. Methods Appl. Mech. Eng. 195, 3331–3346 (2006)

Baker, G., Dougalis, V.A., Karakashian, O.A.: Convergence of Galerkin approximations for Korteweg-de Vries equation. Math. Comput. 40, 419–433 (1983)

Cao, W., Li, D., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods based on upwind-biased fluxes for 1D linear hyperbolic equations. ESAIM: Math. Model. Numer. Anal. (2016, accepted). doi:10.1051/m2an/2016026

Cao, W., Shu, C.-W., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin method for 2-D hyperbolic equations. SIAM J. Numer. Anal. 53, 1651–1671 (2015)

Cao, W., Zhang, Z.: Superconvergence of local discontinuous Galerkin method for one-dimensional linear parabolic equations. Math. Comput. 85, 63–84 (2016)

Cao, W., Zhang, Z., Zou, Q.: Superconvergence of discontinuous Galerkin method for linear hyperbolic equations. SIAM J. Numer. Anal. 52, 2555–2573 (2014)

Celiker, F., Cockburn, B.: Superconvergence of the numerical traces of discontinuous Galerkin and hybridized methods for convection-diffusion problems in one space dimension. Math. Comput. 76, 67–96 (2007)

Cheng, Y., Shu, C.-W.: Superconvergence and time evolution of discontinuous Galerkin finite element solutions. J. Comput. Phys. 227, 9612–9627 (2008)

Cheng, Y., Shu, C.-W.: Superconvergence of discontinuous Galerkin and local discontinuous Galerkin schemes for linear hyperbolic and convection-diffusion equations in one space dimension. SIAM J. Numer. Anal. 47, 4044–4072 (2010)

Cockburn, B., Hou, S., Shu, C.-W.: The Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws, IV: the multidimensional case. Math. Comput. 54, 545–581 (1990)

Cockburn, B., Lin, S., Shu, C.-W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws, III: one dimensional systems. J. Comput. Phys. 84, 90–113 (1989)

Cockburn, B., Shu, C.-W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws, II: general framework. Math. Comput. 52, 411–435 (1989)

Cockburn, B., Shu, C.-W.: The Runge–Kutta discontinuous Galerkin method for conservation laws, V: multidimensional systems. J. Comput. Phys. 141, 199–224 (1998)

Cockburn, B., Shu, C.-W.: The local discontinuous Galerkin method for time-dependent convection-diffusion systems. SIAM J. Numer. Anal. 35, 2440–2463 (1998)

Dong, B., Shu, C.-W.: Analysis of a local discontinuous Galerkin method for linear time-dependent fourth-order problems. SIAM J. Numer. Anal. 47, 3240–3268 (2009)

Guo, W., Zhong, X., Qiu, J.: Superconvergence of discontinuous Galerkin and local discontinuous Galerkin methods: Eigen-structure analysis based on Fourier approach. J. Comput. Phys. 235, 458–485 (2013)

Hufford, C., Xing, Y.: Superconvergence of the local discontinuous Galerkin method for the linearized Korteweg–de Vries equation. J. Comput. Appl. Math. 255, 441–455 (2014)

Meng, X., Shu, C.-W., Zhang, Q., Wu, B.: Superconvergence of discontinuous Galerkin method for scalar nonlinear conservation laws in one space dimension. SIAM J. Numer. Anal. 50, 2336–2356 (2012)

Meng, X., Shu, C.-W., Wu, B.: Superconvergence of the local discontinuous Galerkin method for linear fourth-order time-dependent problems in one space dimension. IMA J. Numer. Anal. 32, 1294–1328 (2012)

Xia, Y., Xu, Y., Shu, C.-W.: Local discontinuous Galerkin methods for the Cahn–Hilliard type equations. J. Comput. Phys. 227, 472–491 (2007)

Xie, Z., Zhang, Z.: Uniform superconvergence analysis of the discontinuous Galerkin method for a singularly perturbed problem in 1-D. Math. Comput. 79, 35–45 (2010)

Xu, Y., Shu, C.-W.: Local discontinuous Galerkin methods for three classes of nonlinear wave equations. J. Comput. Math. 22, 250–274 (2004)

Xu, Y., Shu, C.-W.: Optimal error estimates of the semi-discrete local discontinuous Galerkin methods for high order wave equations. SIAM J. Numer. Anal. 50, 79–104 (2012)

Xu, Y., Shu, C.-W.: Local discontinuous Galerkin methods for high-order time-dependent partial differential equations. Commun. Comput. Phys. 7, 1–46 (2010)

Yan, J., Shu, C.-W.: A local discontinuous Galerkin method for KdV type equations. SIAM J. Numer. Anal. 40, 769–791 (2002)

Yan, J., Shu, C.-W.: Local discontinuous Galerkin methods for partial differential equations with higher order derivatives. J. Sci. Comput. 17, 27–47 (2002)

Yang, Y., Shu, C.-W.: Analysis of optimal superconvergence of discontinuous Galerkin method for linear hyperbolic equations. SIAM J. Numer. Anal. 50, 3110–3133 (2012)

Yang, Y., Shu, C.-W.: Analysis of sharp superconvergence of local discontinuous Galerkin method for one-dimensional linear parabolic equations. J. Comput. Math. 33, 323–340 (2015)

Zhang, Z.: Superconvergence points of spectral interpolation. SIAM J. Numer. Anal. 50, 2966–2985 (2012)

Additional information

W. Cao: Research supported in part by NSFC Grant No.11501026, and the China Postdoctoral Science Foundation Grant 2016T90027. Q. Huang: Research supported in part by NSFC (No. 11571027), Beijing Nova Program (No. Z1511000003150140), and the Importation and Development of High-Caliber Talents Project of Beijing Municipal Institutions.

Cite this article

Cao, W., Huang, Q. Superconvergence of Local Discontinuous Galerkin Methods for Partial Differential Equations with Higher Order Derivatives. J Sci Comput 72, 761–791 (2017). https://doi.org/10.1007/s10915-017-0377-z
