Abstract

Rockburst prediction is crucial for safer working conditions in deep hard rock mines and tunnels. Because rockburst prediction involves the complex interaction of many variables and interfering factors, three hybrid support vector machine (SVM) models, optimized by particle swarm optimization (PSO), Harris hawk optimization (HHO), and moth flame optimization (MFO), are proposed to predict the rockburst hazard level (RHL). The RHL is determined from four microseismic characteristic parameters: angular frequency ratio, total energy, apparent stress, and concave and convex radius. Six microseismic characteristic parameters (these four plus energy ratio and moment magnitude) from 343 sets of data are then taken as input variables, and the RHL is taken as the output target of rockburst prediction. The classification performance of the PSO-SVM, HHO-SVM, and MFO-SVM hybrid models is evaluated by accuracy (ACC), precision (PRE), and the kappa coefficient. The results show that the MFO-SVM model performs best, with ACC, PRE, and kappa coefficient reaching 0.9559, 0.9063, and 0.9094, respectively, while PSO-SVM and HHO-SVM perform similarly to each other. All three hybrid models perform better than the unoptimized SVM model. This confirms that the three optimization algorithms significantly enhance the rockburst prediction capacity of the SVM model, which can help mine practitioners apply machine learning methods to rockburst prediction problems appropriately.

1 Introduction

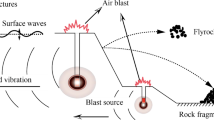

In the construction of deep hard rock mines and tunnels, rockburst is a common type of geological hazard. Rockbursts generally occur when energy accumulated within the rock mass is suddenly released under external disturbance [51]. A rockburst at the Altenberg tin mine in Germany in 1640 caused heavy damage and may be the earliest recorded rockburst disaster [38]. Today, rockbursts occur extensively in deep hard rock mines in many countries and regions, e.g., South Africa [23], Canada [21], Western Australia [15], the USA [7], and China [57]. In addition, there are also rockburst cases in tunnel engineering in Norway [3] and China [53]. In deep hard rock mines and tunneling projects, rockbursts can damage equipment to varying degrees, and serious rockburst disasters even threaten personnel safety [59]. Due to a greater demand for resources, mining and construction activities are going deeper underground than in the past. These increasing geotechnical activities can exacerbate rockbursts [62].

Microseismic (MS) monitoring is a three-dimensional monitoring technology for detecting microseismic events caused by rock cracking, and it has been used in rockburst monitoring for many years [69]. By placing sensors in different directions, the microseismic waves emitted by rock fractures can be monitored. Further analysis of these waves yields the location, time, intensity, and type of rock fracture [12]. Therefore, rockburst hazards can be predicted from microseismic monitoring information. At present, microseismic monitoring has become one of the common technical means in deep hard rock mines [33]. Mendecki et al. [34] pointed out that quantitative seismic analysis has become a crucial part of safety in gold mining in South Africa. In 1990, the AngloGold Mining Company of South Africa developed a system named “robot” to quantify seismic hazards, which was later improved into the Routine Rating of Seismic Hazard (RRoSH). This system was subsequently deployed in more than a dozen gold mines in South Africa. In 1997, Poplawski [40] applied an approach called “departure indexing” to predict rockburst hazards. Becka and Brady [4] proposed a “cell evaluation” approach based on numerical simulation and analysis to quantify seismic risk. Trifu and Suorineni [46] applied a microseismic monitoring system to rockburst prediction, and Li et al. [24] used microseismic monitoring techniques for rockburst hazard assessment in underground engineering. Andrzej and Zbigniew [2] suggested that the characteristics associated with microseismic events could be the basis for rockburst risk assessment and used this to conduct a risk assessment of the Zabrze-Bielszowice coal mine in Poland. However, the traditional interpretation of MS monitoring information depends on manual work, which makes it difficult to achieve sufficient speed and accuracy.

In recent years, there has been an increased focus on AI technology in the field of geotechnology [8, 18, 70, 43, 48, 57, 58, 63, 64, 65, 66, 67]. Especially in the field of rockburst prediction, a variety of prediction techniques have been developed based on different machine learning methods. Zhou and Gu [56] proposed a geographic information system based on the artificial neural network (ANN) to evaluate rockburst propensity. Su et al. [45] and Zhou et al. [59] used the K-nearest neighbor (KNN) algorithm to predict rockburst and achieved good results. Adoko et al. [1] combined an ANN with a fuzzy inference system to analyze 174 rockburst events and predict rockburst. The results show that the fuzzy inference system plays a crucial part in the estimation of rockburst intensity. Zhou et al. [57, 59] applied the SVM algorithm with different kernels to rockburst prediction and achieved good results. Besides these classical machine learning models, other methods such as quadratic discriminant analysis (QDA) [59], Naive Bayes (NB) [59], and Bayesian networks [25] have achieved good results in rockburst prediction. Compared with traditional methods that rely on manual judgment and processing of information, these emerging prediction models can effectively reduce errors caused by human factors and can process, more quickly and effectively, large volumes of data that are difficult to handle with traditional methods. More importantly, models established using AI technology can better handle the complex and fuzzy relationships among the features of a dataset [22].

Although many scholars have studied different rockburst prediction methods [62], few have considered ensemble learning to analyze monitored MS data and provide real-time, effective rockburst hazard warnings for underground engineering.

Support vector machines (SVM) are gaining attention as a well-performing machine learning model in geotechnical engineering as well as in construction engineering [13, 71]. SVM has a simple structure and few controllable variables, and its computational difficulty is independent of the dimension of the sample space. Moreover, compared with an ANN, SVM rests on a more solid theoretical foundation and can better deal with nonlinear, high-dimensional, and small-sample problems [55]. Bi et al. [6] compared several machine learning models, such as KNN, random forest (RF), and SVM, for the classification of microseismic events, and the results showed that SVM had the most outstanding ability to recognize microseismic events. Pu et al. [41] used SVM to predict kimberlite rockbursts and achieved good prediction performance. Zhou et al. [57] proposed SVM hybrid models to classify and assess the long-term rockburst hazard of underground cavities with high prediction precision. This research has demonstrated that SVM is reasonably robust in predicting rockbursts; thus, this paper employs the SVM method to categorize the different classes of rockburst hazards. In addition, the particle swarm optimization (PSO) algorithm simulates the foraging behavior of birds to continuously search for optimal solutions. Compared with traditional algorithms, PSO is easy to implement and has few adjustable parameters, and it also has a strong global search ability for nonlinear and multi-peak problems [32]. Therefore, it is widely used by researchers. The Harris hawk optimization (HHO) algorithm [16] and the moth flame optimization (MFO) algorithm [37] are optimization algorithms that simulate the predation of Harris hawks and the attraction of moths to flames, respectively. Both have few adjustable parameters and converge quickly, and they perform well even on higher-dimensional and more complex problems. Based on these three optimization algorithms, three hybrid classification models, PSO-SVM, HHO-SVM, and MFO-SVM, are constructed for rockburst prediction using MS monitoring information.

In this article, the background of the algorithms is introduced first, and the framework of the model is then described. Next, a dataset containing 343 microseismic monitoring samples from the Dongguashan Copper Mine is established. On this basis, three hybrid models are developed to predict the rockburst hazard level. Finally, the classification capabilities of the three hybrid models (PSO-SVM, HHO-SVM, and MFO-SVM) are comprehensively compared using multiple indicators, and the best hybrid classification model for rockburst prediction is identified.

2 Materials and Methods

2.1 Support Vector Machines

The support vector machine (SVM) is a binary classification algorithm proposed by Vapnik [47]. It maps the features of each instance to a point in space, and the points are then separated by the hyperplane found by the model, thereby classifying the input data, as shown in Fig. 1.

The support vectors in SVM are the sample points lying closest to the hyperplane. The margin is the sum of the distances from the support vectors on each side of the hyperplane to the hyperplane, which can be expressed by the following formula:

$$\mathrm{margin}=\frac{2}{\Vert \omega \Vert }$$(1)

where \(\omega\) is the normal vector of the hyperplane. The larger the margin, the more robust the separating hyperplane; that is, \(\Vert \omega \Vert\) should be minimized to reduce the influence of local disturbances in the samples on the model and to generate the most robust results. The solution of the optimal classification hyperplane differs depending on whether the training set data are linearly separable.

By integrating the constraints into the optimization objective function, the Lagrange formula [20, 28] is established, as shown in formula (2), where \({\alpha }_{i}\) and \({\beta }_{i}\) are the Lagrange coefficients.
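For orientation, a standard soft-margin form of such a Lagrangian can be written as follows. The penalty factor \(C\), the slack variables \({\xi }_{i}\), the class labels \({y}_{i}\), the bias \(b\), and the sample count \(n\) are conventional symbols assumed here rather than taken from the text, so this is a sketch that may differ in detail from formula (2):

$$L(\omega ,b,\xi ,\alpha ,\beta )=\frac{1}{2}{\Vert \omega \Vert }^{2}+C\sum_{i=1}^{n}{\xi }_{i}-\sum_{i=1}^{n}{\alpha }_{i}\left[{y}_{i}\left(\omega \cdot {x}_{i}+b\right)-1+{\xi }_{i}\right]-\sum_{i=1}^{n}{\beta }_{i}{\xi }_{i}$$

Here \({\alpha }_{i}\ge 0\) enforces the margin constraint for sample \(i\), and \({\beta }_{i}\ge 0\) enforces \({\xi }_{i}\ge 0\).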

The SVM uses different solution strategies depending on how the training set data can be divided. For linearly inseparable problems, a kernel function should be established first: \(K({x}_{i},{x}_{j})=\varphi ({x}_{i})\cdot \varphi ({x}_{j})\). To construct the optimal classification hyperplane, the input samples in the original space need to be mapped into the high-dimensional feature space H using a nonlinear mapping \(\varphi :{R}^{d}\to H\) [54]. The optimal decision formula is shown in formula (3) [64, 68]:
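A standard kernel SVM decision function consistent with this description can be written as follows. The labels \({y}_{i}\in \{-1,+1\}\), the bias \(b\), and the sample count \(n\) are conventional notation assumed here, not symbols defined in the text, so this should be read as a sketch of the usual form:

$$f(x)=\mathrm{sgn}\left(\sum_{i=1}^{n}{\alpha }_{i}{y}_{i}K({x}_{i},x)+b\right)$$

Only the support vectors have \({\alpha }_{i}>0\), so the sum effectively runs over the support vectors alone.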

From the linearly separable and linearly inseparable cases above, it is clear that SVM is defined only for binary classification problems. Solving a multi-classification problem requires further improvements. At present, there are two main methods for constructing a multi-classification SVM model: the direct method and the indirect method. The direct method solves a single multi-classification function suited to the problem. The indirect method realizes multi-classification by constructing and combining multiple binary SVM models; the commonly used schemes are the one-versus-one method and the one-versus-rest method.
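The indirect one-versus-one scheme can be illustrated with a short Python sketch. It assumes majority voting over all pairwise binary classifiers, which is the usual convention; the function names and the toy threshold classifiers are hypothetical stand-ins for trained binary SVMs and are not taken from the study.

```python
from collections import Counter
from itertools import combinations


def one_vs_one_predict(x, classes, binary_classifiers):
    """Majority vote over all pairwise binary classifiers.

    binary_classifiers maps a class pair (a, b) to a function that
    returns either a or b for the input x (hypothetical interface).
    """
    votes = Counter()
    for a, b in combinations(classes, 2):
        votes[binary_classifiers[(a, b)](x)] += 1
    # Ties are broken by the order of `classes` (an arbitrary choice).
    return max(classes, key=lambda c: votes[c])


def make_threshold_classifier(a, b):
    """Toy stand-in for a trained binary SVM: split at the midpoint."""
    cut = (a + b) / 2.0
    return lambda x: a if x < cut else b


# Four classes need 4 * 3 / 2 = 6 pairwise classifiers.
classes = [1, 2, 3, 4]
classifiers = {
    (a, b): make_threshold_classifier(a, b)
    for a, b in combinations(classes, 2)
}
```

With k classes, one-versus-one trains k(k-1)/2 binary models, whereas one-versus-rest trains k models, each separating one class from all others.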

In the SVM, each intermediate node represents a support vector, and the output is a linear combination of the intermediate nodes, as shown in Fig. 2. Kernel functions commonly used in SVM include the d-order polynomial kernel function, the linear kernel function, the radial basis function (RBF) kernel, and the Sigmoid kernel function with parameters k and θ. The RBF kernel is used in this study. In RBF-SVM, the combination of the two hyperparameters, the penalty factor c and the kernel parameter g, plays a crucial role in classification capability. Therefore, to obtain better classification performance, PSO, HHO, and MFO are used to optimize the hyperparameters c and g.
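To make the role of g concrete, the following minimal sketch evaluates an RBF kernel in plain Python. It assumes the common parameterization K(x, y) = exp(-g * ||x - y||^2); the function name is illustrative and the exact form used in the study is not stated in the text.

```python
import math


def rbf_kernel(x, y, g):
    """RBF kernel value, assuming K(x, y) = exp(-g * ||x - y||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-g * squared_distance)
```

Under this form, a larger g makes the kernel value fall off faster with distance, so each support vector influences a smaller neighborhood, while c controls how strongly misclassified training samples are penalized.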

2.2 Particle Swarm Optimization

The particle swarm optimization (PSO) algorithm, which is inspired by the foraging behavior of birds, seeks optimal solutions through cooperation and information sharing among the individuals of a group [10]. PSO has few adjustable parameters and is simple to implement, and it is widely used in image processing, function optimization, vehicle route optimization, and other fields [5, 9, 36, 27]. The system structure of the PSO is shown in Fig. 3. The algorithm first initializes a group of random particles, each with two properties: velocity \({v}_{i}\) and position \({x}_{i}\). In each iteration, the particles update themselves according to two “extreme values”, the individual best \({p}_{i}\) and the global best \({p}_{g}\), as follows:

$${v}_{i}(t+1)={v}_{i}(t)+{c}_{1}{r}_{1}\left[{p}_{i}(t)-{x}_{i}(t)\right]+{c}_{2}{r}_{2}\left[{p}_{g}(t)-{x}_{i}(t)\right]$$(4)

$${x}_{i}(t+1)={x}_{i}(t)+{v}_{i}(t+1)$$(5)

where \({x}_{i}(t)\) and \({v}_{i}(t)\) represent the position and velocity of the ith particle at the tth iteration; \({p}_{i}(t)\) represents the best historical position found by the ith particle; \({p}_{g}(t)\) is the best historical position found by all particles; \({c}_{1}\) and \({c}_{2}\) represent learning factors, both set to 2; and \({r}_{1}\) and \({r}_{2}\) represent random numbers in [0, 1].
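The velocity and position update can be sketched in plain Python as below. This is a minimal illustration under stated assumptions: the standard update without an inertia weight, learning factors of 2 as given in the text, and velocity and position clamping added here only to keep particles inside the search bounds. The function name, the seed, and the default sizes are hypothetical.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iter=100,
                 c1=2.0, c2=2.0, seed=0):
    """Minimize `objective` over box `bounds` with a basic PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p_best = [xi[:] for xi in x]              # best position per particle
    p_val = [objective(xi) for xi in x]
    g_idx = min(range(n_particles), key=lambda i: p_val[i])
    g_best, g_val = p_best[g_idx][:], p_val[g_idx]   # best position overall

    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: pull toward personal and global bests.
                v[i][d] = (v[i][d]
                           + c1 * r1 * (p_best[i][d] - x[i][d])
                           + c2 * r2 * (g_best[d] - x[i][d]))
                # Clamping is an assumption, not part of the text.
                v_max = hi - lo
                v[i][d] = max(-v_max, min(v_max, v[i][d]))
                # Position update, kept inside the bounds.
                x[i][d] = max(lo, min(hi, x[i][d] + v[i][d]))
            val = objective(x[i])
            if val < p_val[i]:
                p_best[i], p_val[i] = x[i][:], val
                if val < g_val:
                    g_best, g_val = x[i][:], val
    return g_best, g_val
```

For SVM tuning, each particle position would be a candidate (c, g) pair and the objective would be a classification error; many practical PSO variants also multiply the previous velocity by an inertia weight to improve convergence.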

2.3 Harris Hawk Optimization

The Harris hawk optimization (HHO) algorithm was proposed by Heidari et al. [16], inspired by the hunting behavior of Harris hawks. HHO has a strong global search ability and few adjustable parameters. The process of HHO is mainly composed of three parts: the search stage, the transformation between search and development, and the development stage.

(a) In the search stage, the location of the Harris hawk is randomly selected, and the prey is searched for through two strategies, as shown in Eqs. (6) and (7).

$$X\left(t+1\right)=\left\{\begin{array}{ll}{X}_{rand}\left(t\right)-{r}_{1}\left|{X}_{rand}\left(t\right)-2{r}_{2}X\left(t\right)\right|,& q\ge 0.5\\ \left[{X}_{p}\left(t\right)-{X}_{m}\left(t\right)\right]-{r}_{3}\left[lb+{r}_{4}\left(ub-lb\right)\right],& q<0.5\end{array}\right.$$(6)

$${X}_{m}\left(t\right)=\sum_{k=1}^{M}{X}_{k}\left(t\right)/M$$(7)

where \(X\left(t\right)\) represents the current position of the individual, \(X(t+1)\) represents its position in the next iteration, and t represents the iteration number. \({X}_{rand}\left(t\right)\) is the position of a randomly selected individual; \({X}_{p}\left(t\right)\) represents the location of the prey, that is, the location of the individual with the optimal fitness; \({r}_{1},{r}_{2},{r}_{3},{r}_{4},q\) are random numbers ranging from 0 to 1, where q determines the strategy chosen; lb and ub are the lower and upper bounds of the search space. \({X}_{m}\left(t\right)\) represents the average position of the individuals, \({X}_{k}\left(t\right)\) represents the location of the kth individual in the swarm, and M represents the swarm size.

(b) Transformation between search and development. The HHO algorithm switches between search and development according to the escape energy of the prey, which is expressed in Eq. (8):

$$E=2{E}_{0}\left(1-\frac{t}{T}\right)$$(8)

where \({E}_{0}\) represents the initial escape energy of the prey, t represents the current iteration number, and T represents the maximum number of iterations. When \(|E|\ge 1\), HHO enters the search stage; otherwise, it enters the development stage.

(c) The development stage. Depending on the circumstances, HHO selects between a soft (light) besiege and a hard (severe) besiege to approach the optimal result.

In each iteration, HHO compares the fitness of each individual with the fitness of the prey location. If the individual fitness value is superior to the prey position fitness, the prey location is replaced by the new and better individual position. The process stops when HHO reaches the set number of iterations.
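The search-stage move of Eqs. (6) and (7) and the energy-based switch of Eq. (8) can be sketched as follows. This is a simplified illustration, not the full algorithm: the development-stage besiege strategies are omitted, one set of random numbers is shared across dimensions, and the final clipping to the bounds is an assumption. All function names are hypothetical.

```python
import random


def escape_energy(e0, t, t_max):
    """Eq. (8): E = 2 * E0 * (1 - t / T)."""
    return 2.0 * e0 * (1.0 - t / t_max)


def stage(energy):
    """|E| >= 1 selects the search stage, otherwise the development stage."""
    return "search" if abs(energy) >= 1.0 else "development"


def search_step(x, hawks, x_prey, lb, ub, rng):
    """One search-stage move of a single hawk, following Eqs. (6) and (7)."""
    dim = len(x)
    q, r1, r2, r3, r4 = (rng.random() for _ in range(5))
    if q >= 0.5:
        # Perch relative to a randomly chosen member of the swarm.
        x_rand = rng.choice(hawks)
        new = [x_rand[d] - r1 * abs(x_rand[d] - 2.0 * r2 * x[d])
               for d in range(dim)]
    else:
        # Perch relative to the prey and the mean position of the swarm.
        x_mean = [sum(h[d] for h in hawks) / len(hawks) for d in range(dim)]
        new = [(x_prey[d] - x_mean[d]) - r3 * (lb[d] + r4 * (ub[d] - lb[d]))
               for d in range(dim)]
    # Clipping to the search bounds is an added assumption.
    return [min(max(value, lb[d]), ub[d]) for d, value in enumerate(new)]
```

Because the factor (1 - t/T) shrinks toward zero, |E| can exceed 1 only in the first half of the run, so the swarm gradually shifts from search to development.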

2.4 Moth Flame Optimization

Mirjalili proposed the moth flame optimization (MFO) algorithm in 2015 [37], inspired by the way moths spiral toward an artificial light source at night, as shown in Fig. 4. MFO has a strong parallel optimization ability and good global optimization characteristics [69, 50].

In MFO, the moth is represented by M. If the moth is regarded as a candidate solution to the problem, then the position of the moth in space is the unknown parameter to be solved. Thus, the moth can be made to fly in various spatial dimensions by changing its position vector. Each moth in MFO flies only around the flame that corresponds to it. The change in moth location in response to the flame is modeled quantitatively by the following equation:

$${M}_{i}=S\left({M}_{i},{F}_{j}\right)={D}_{i}\cdot {e}^{bt}\cdot \mathrm{cos}\left(2\pi t\right)+{F}_{j}$$(9)

where \({M}_{i}\) is the ith moth; S is the spiral function; \({F}_{j}\) represents the jth flame; \({D}_{i}=|{F}_{j}-{M}_{i}|\) is the distance from the ith moth to the jth flame; b is the constant defining the shape of the logarithmic spiral; and t is the path coefficient, which takes a random value between −1 and 1.

As shown in Fig. 5, the position of the flame influences the update of the moth’s position. The different values of t represent the different positions of the moths from the flame. In the continuous iterations, the moth updates the position based on the fitness value of the current location and the fitness value of the corresponding sequence of flames, as shown in Fig. 6, and then more accurately approaches the flames in their corresponding sequence.
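The logarithmic spiral move can be sketched in a few lines of Python. The sketch assumes the standard MFO spiral, new position = D * exp(b * t) * cos(2 * pi * t) + F, applied per dimension; the function name and the example values are illustrative only.

```python
import math


def spiral_update(moth, flame, b, t):
    """Move a moth around its flame along a logarithmic spiral.

    Per dimension: D * exp(b * t) * cos(2 * pi * t) + F, with D = |F - M|.
    """
    return [
        abs(f - m) * math.exp(b * t) * math.cos(2.0 * math.pi * t) + f
        for m, f in zip(moth, flame)
    ]
```

The path coefficient t sets where on the spiral the moth lands: values near 0.25 or -0.25 make the cosine term vanish and place the moth almost on the flame, while t = 0 places it one full distance D beyond the flame.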

Because the moths update their positions with respect to many different flame positions, the local search capability of MFO is weakened. A control mechanism for the number of flames, shown in Eq. (10), addresses this problem:

$$\text{flame number}=\mathrm{round}\left(N-l\cdot \frac{N-1}{T}\right)$$(10)

where N represents the maximum number of flames; l represents the current iteration number; and T represents the maximum number of iterations. According to this formula, the number of flames decreases as the iterations proceed, and the moths update their positions according to the fitness values of the remaining flames.
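The flame-reduction schedule is easy to check numerically. The sketch below assumes the standard MFO rule, round(N - l * (N - 1) / T); the function name is hypothetical, and Python's built-in `round` rounds exact halves to the nearest even integer, which may differ from other implementations at those points.

```python
def flame_count(l, n_max, t_max):
    """Number of flames kept at iteration l, assuming the standard MFO rule."""
    return round(n_max - l * (n_max - 1) / t_max)
```

With 50 flames and 100 iterations, the schedule starts at 50 flames and ends at a single flame, so late iterations concentrate the whole swarm on the best solution found.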

3 Data Collection

3.1 Data Sources

This study is based on microseismic monitoring data from the Dongguashan Copper Mine in Tongling City, Anhui Province, China. The Dongguashan Copper Mine, formerly known as the Shizishan Copper Mine, is located 7.5 km east of Tongling City and is an extra-large high-sulfur copper deposit, as shown in Fig. 7. The elevation of the main ore body in the mining area is −680 to −1000 m; the horizontal strike length is 1810 m; the maximum width is 882 m, while the minimum width is 204 m; and the average thickness of the middle section is 40 m. The ore body is a gently inclined layered ore body whose occurrence is basically the same as that of the surrounding rock. As a whole, it is widely distributed in plan but has a limited extent in the vertical direction. The internal structure of the mine is simple, joints and fissures are not developed, and the rock mass has high hardness.

The stress and deformation state of the rock mass and its changes caused by mining activities are important factors in ground pressure activity. The Dongguashan Copper Mine contains a variety of lithologic rock formations, the mining area is large, and the structural distribution of the stopes and the mining area is intricate, resulting in a complex spatial distribution of rock masses prone to rockbursts [11, 49]. A schematic diagram of the stope distribution of the Dongguashan Copper Mine, which is also the main monitoring area of the microseismic monitoring system, is shown in Fig. 8.

A schematic diagram of the network layout of the microseismic monitoring system used at the Dongguashan Copper Mine is shown in Fig. 7. The system comprises a total of 7 embedded sensors, one of which is a three-component sensor. The specific arrangement of the sensors is shown in Table 1.

The database used in this paper contains 343 sets of microseismic monitoring data, including the angular frequency ratio (AFR), which indicates the vibration frequency; the total energy (TE) released by microseismic events in the rock mass; the apparent stress (AS), which measures the stress release at the source; the concave and convex radius (CCR), which reflects the variation of geological plate characteristics; the energy ratio (ER) of P-wave to S-wave; and the moment magnitude (MM), which directly indicates the magnitude of the seismic event, as shown in Table 2.

3.2 Rockburst Hazard Index

The monitoring data used in this study include a large number of parameters such as magnitude, energy, angular frequency, apparent stress, and concave and convex radius. Many of these fields are not strongly correlated with rockburst hazard, so the variables closely related to rockburst hazard need to be screened out. Table 3 gives a brief overview of the microseismic evaluation indicators used in mining engineering.

In the microseismic monitoring of mines, the angular frequency reflects the vibration of the rock mass. Based on the angular frequency ratio of the P-wave and S-wave, the internal vibration of the rock mass can be judged. For microseismic events, the release of microseismic energy is related to the internal fracture mode and speed of the rock mass, and the energy radiated by the P-wave differs from that radiated by the S-wave. In this study, the sum of the energy values of the P- and S-waves is used to reflect the energy released by a microseismic event. The radius of the asperity is a kind of geological plate feature, and the occurrence of earthquakes is related to the rupture of the asperity [31]. The apparent stress is generally expressed as the ratio of the microseismic release energy to the microseismic body change potential. In addition, volumetric potential magnitude [35] and moment magnitude [14] are also intuitive indicators for evaluating microseismic events.

With microseismic monitoring at the Dongguashan Copper Mine, researchers are able to quickly identify anomalous microseismic conditions and notify site staff to observe the actual site conditions and provide feedback. To avoid the ambiguity of visual description, the staff usually classify the site conditions roughly based on empirical formulae, combining the anomalous microseismic monitoring data with the actual site conditions. In mine microseismic monitoring, four microseismic characteristic parameters, namely angular frequency ratio (AFR), total energy (TE), apparent stress (AS), and concave and convex radius (CCR), were used to calculate a preliminary composite rockburst hazard index [19] and then classify the RHL to quantify the rockburst hazard. The steps of the empirical method for determining the RHL are as follows:

(1) First, the rockburst hazard index of a single parameter is determined. The premise of calculating the comprehensive index of rockburst hazard is to determine the rockburst hazard index \({W}_{n}(t)\) of each single microseismic characteristic parameter. The calculation method is as follows:

$${W}_{n}(t)=\frac{||A(t)|-\overline{{A }_{0}}|}{{A}_{\mathrm{max}}-\overline{{A }_{0}}}$$(11)

where \(A(t)\) represents the amplitude of a certain type of characteristic parameter at time t; \(\overline{{A }_{0}}\) is the mean of the amplitude monitored under normal conditions; and \({A}_{\mathrm{max}}\) represents the maximum monitored value of the microseismic characteristic parameter.

(2) The weight factor \({P}_{n}(t)\) of each single index is usually determined manually by the staff based on the site damage. After the weight factors are determined, the comprehensive rockburst hazard index \({W}_{z}(t)\) is obtained from the rockburst hazard index \({W}_{n}(t)\) of each index according to Eq. (12):

$${W}_{z}(t)=\sum_{n=1}^{R}{W}_{n}(t){P}_{n}(t)$$(12)

where R is the number of microseismic characteristic parameters considered.

According to the comprehensive rockburst hazard index, rockburst is divided into four hazard levels, and the corresponding RHL at each moment is evaluated. The classification of weight factors and rockburst hazard grades is shown in Table 4.
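The two-step empirical calculation in Eqs. (11) and (12) can be sketched in Python as below. The function names and any numerical inputs are hypothetical; in particular, the weight factors and the thresholds that map the composite index to a hazard level come from Table 4 and are not reproduced here, so the sketch stops at the composite index.

```python
def single_index(a_t, a0_mean, a_max):
    """Eq. (11): hazard index of one microseismic parameter at time t."""
    return abs(abs(a_t) - a0_mean) / (a_max - a0_mean)


def composite_index(indices, weights):
    """Eq. (12): weighted sum of the single-parameter hazard indices."""
    if len(indices) != len(weights):
        raise ValueError("one weight is required per index")
    return sum(w_n * p_n for w_n, p_n in zip(indices, weights))
```

For example, a parameter with a normal-condition mean of 2, a monitored maximum of 10, and a current amplitude of 6 has a single-parameter index of (6 - 2) / (10 - 2) = 0.5.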

This method, in which the staff roughly discriminate the RHL by empirical formulae, has several drawbacks. Firstly, it relies heavily on the personal work experience and subjective judgment of the staff and may be biased by differences in experience among observers (the data used in this paper were obtained from experienced staff and verified by microseismic monitoring researchers). Secondly, some hazardous areas are hard for the staff to inspect, which makes it difficult to assess the damage at the site. To address these drawbacks, this paper aims to develop a new machine learning model that predicts the RHL and reduces the influence of human subjectivity and environmental limitations. In this study, the influence of the energy ratio (ER) and moment magnitude (MM) on rockburst is also considered, in addition to the four characteristic parameters included in the empirical formula.

The correlations, scatterplots, and histograms obtained from the analysis of the rockburst data, produced with the GGally package [42], are shown in Fig. 9a, and the Pearson correlation coefficients of the characteristic parameters in different RHLs are illustrated in Fig. 9b. Based on the above, the input variables are angular frequency ratio (AFR), total energy (TE), apparent stress (AS), concave and convex radius (CCR), energy ratio (ER), and moment magnitude (MM), and the output variable is the RHL, from which the three hybrid classification models (PSO-SVM, HHO-SVM, and MFO-SVM) are trained and tested. The overall workflow of this study is shown in Fig. 10.

In addition, the parameter distributions of the training and test sets in Fig. 11 are almost identical for each parameter. This consistency supports the credibility of the model evaluation.

3.3 Evaluation Indicators of the Models

To evaluate the performance of the PSO-SVM, HHO-SVM, and MFO-SVM models, this study adopted four kinds of evaluation indicators, namely, accuracy (ACC), precision (PRE), kappa coefficient, and confusion matrix [60, 61, 70], as shown in Fig. 10. ACC is the proportion of all samples for which the predicted value matches the true value. In the classification process, the class of interest is usually treated as positive and the other classes as negative, and each prediction of the classifier is either correct or incorrect. Therefore, there are four situations: TP (true positive), FP (false positive), TN (true negative), and FN (false negative). PRE is the proportion of the samples predicted to be positive that are actually positive. In the evaluation of multi-class problems, the precision is calculated separately for each label, and the unweighted average is taken. The kappa coefficient is a statistic used to evaluate consistency. In practical applications, it generally lies in the range [0, 1], and its magnitude is positively related to the classification accuracy of the model. The calculation methods and principles of ACC, PRE, and the kappa coefficient are shown in Fig. 12, where \({P}_{0}\) represents the accuracy of prediction and \({P}_{e}\) represents the chance agreement: \({P}_{e}=\frac{{\sum }_{i=1}^{n}{a}_{i+}{a}_{+i}}{{N}^{2}}\). Here \({a}_{ij}\) is the number of samples with actual class i and predicted class j; N is the total number of samples; n represents the number of categories; and \({a}_{i+}=\sum_{j}{a}_{ij}\) and \({a}_{+i}=\sum_{j}{a}_{ji}\) are the row and column totals of class i.
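The three scalar indicators can be computed directly from a confusion matrix, as in the following minimal Python sketch. It assumes rows are actual classes and columns are predicted classes, uses the standard definition kappa = (P0 - Pe) / (1 - Pe), and assigns a precision of 0 to any class that is never predicted; that last convention and the function name are assumptions, not details from the study.

```python
def classification_metrics(cm):
    """Return (ACC, macro PRE, kappa) for a square confusion matrix.

    cm[i][j] is the number of samples with actual class i and
    predicted class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sum = [sum(cm[i]) for i in range(n)]                       # a_{i+}
    col_sum = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # a_{+j}

    # ACC (also P0): share of samples on the main diagonal.
    acc = sum(cm[i][i] for i in range(n)) / total

    # Macro precision: unweighted mean of the per-class precisions.
    per_class = [cm[j][j] / col_sum[j] if col_sum[j] else 0.0
                 for j in range(n)]
    pre = sum(per_class) / n

    # Chance agreement Pe and kappa (undefined when Pe equals 1).
    pe = sum(row_sum[i] * col_sum[i] for i in range(n)) / total ** 2
    kappa = (acc - pe) / (1.0 - pe)
    return acc, pre, kappa
```

For a two-class matrix [[5, 1], [2, 2]], this gives ACC = 0.7, PRE = (5/7 + 2/3) / 2, and kappa = (0.7 - 0.54) / 0.46, which shows how kappa discounts the agreement expected by chance.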

4 Results and Discussion

To optimize the target machine learning algorithm, PSO, HHO, and MFO are introduced to determine its optimal internal parameters. In this section, the classification capability of the PSO-SVM, HHO-SVM, and MFO-SVM models is systematically compared and analyzed against that of a single SVM model.

The input dataset consists of a training set and a test set, usually in the ratio of 70:30 [26, 39], 75:25 [52], or 80:20 [17, 29]. In this paper, the dataset obtained from microseismic monitoring is divided into a training set and a test set in the ratio of 80:20 to assess the reliability of the model. Divided in this way, the training set contains 274 samples, while the test set contains 69 samples.
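The 80:20 division can be reproduced with a short Python sketch. It assumes a simple random shuffle of sample indices with a fixed seed; whether the study used a random or a stratified split is not stated, and the function name and seed are hypothetical.

```python
import random


def split_indices(n_samples, train_ratio=0.8, seed=42):
    """Shuffle sample indices and split them into train and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = int(n_samples * train_ratio)  # 343 * 0.8 = 274.4 -> 274
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = split_indices(343)
```

Truncating 343 × 0.8 = 274.4 gives the 274 training samples and 69 test samples quoted in the text.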

As noted above, different values of the two hyperparameters (c and g) of the SVM can have a marked effect on the classification capacity of the model. To obtain better classification results when predicting the RHL, three optimization algorithms (PSO, HHO, and MFO) are applied to search for the best combination of hyperparameters for the SVM. To reduce the influence of randomness on the results, six population sizes (10, 20, 50, 100, 150, and 200) are set in each classification model, corresponding to the number of particles in PSO-SVM, the number of Harris hawks in HHO-SVM, and the number of moths in MFO-SVM, respectively.

4.1 Parameter Setting

Some parameters need to be set in PSO-SVM, HHO-SVM, and MFO-SVM, including those of the three optimization algorithms (PSO, HHO, and MFO). These parameters affect the classification ability and running speed of the model. Some parameter settings are the same for the three hybrid models, including the search ranges of c and g, the number of iterations, and the division ratio of the training set and test set. Within the search ranges of the hyperparameters c and g, the optimization algorithm searches for a better parameter combination. The number of iterations affects the final optimization result. The division ratio of the training set and test set affects the reliability and classification ability of the whole model to some extent. In this article, the parameters of PSO-SVM, HHO-SVM, and MFO-SVM are compared and analyzed, and the final selected parameters are shown in Table 5.
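The coupling between an optimizer and the SVM can be sketched as a fitness function over candidate (c, g) pairs. The sketch below is an assumption-laden illustration: it takes the fitness to be 1 minus a classification accuracy, clips candidates to the search ranges, and relies on a user-supplied `train_and_score(c, g)` callable that would train an RBF-SVM and return an accuracy. None of these names or choices is confirmed by the text, and the actual ranges are those listed in Table 5.

```python
def make_fitness(train_and_score, c_range, g_range):
    """Wrap a scorer so that an optimizer can minimize it.

    train_and_score(c, g) is a hypothetical callable returning an
    accuracy in [0, 1] for the given hyperparameters.
    """
    def fitness(position):
        c, g = position
        # Keep candidates inside the search ranges.
        c = min(max(c, c_range[0]), c_range[1])
        g = min(max(g, g_range[0]), g_range[1])
        return 1.0 - train_and_score(c, g)
    return fitness
```

Any of the three optimizers can then treat each particle, hawk, or moth as a two-dimensional position (c, g) and minimize this fitness over the chosen number of iterations.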

4.2 Discussion and Analysis

In this section, the overall capabilities of PSO-SVM, HHO-SVM, and MFO-SVM are compared and analyzed. The three indexes defined in Section 3.3 (PRE, ACC, and kappa coefficient) are used to evaluate the three hybrid classification models. In addition, all models adopt the same training set and test set.

To compare the comprehensive performance of the hybrid models, this paper scores each evaluation index and uses the total score for the comparison. The overall capacity of each model at each population size is therefore ranked in the testing phase. The ranking results can be seen in Fig. 13.

Based on the results in Fig. 13a, the PSO-SVM models with different populations all reached a stable state within 100 iterations. To determine the optimal population size, the prediction capacity of the model is comprehensively analyzed after the PSO-SVM model training is completed. From Fig. 13a, it can be seen that the optimal population for the PSO-SVM model is 50 (PRE = 0.8198, kappa = 0.8477, ACC = 0.9265).

For the HHO-SVM model, parameters are set as presented in Table 6. It should be noted that HHO differs from PSO in that it does not require many parameters to be set, while the other training and test conditions are the same as for PSO-SVM. Figure 13b shows the fitness changes for the six different populations. Finally, according to the combined score in Fig. 11, the HHO-SVM model has the best classification ability when the population is 200, and thus the optimal combination of parameters is obtained, as shown in Fig. 13b (PRE = 0.8660, kappa = 0.8461, ACC = 0.9265).

In establishing MFO-SVM, parameters are also set according to Table 6. Like HHO, MFO does not require algorithm-specific parameters, and its training and test conditions are the same as for PSO-SVM and HHO-SVM. Finally, the comprehensive performance of the MFO-SVM model is evaluated. As shown in Fig. 13c, the optimal parameter combination and model performance of MFO-SVM are achieved when the population size is 50 (PRE = 0.9063, kappa = 0.9094, ACC = 0.9559).

To further study how well the different models classify and predict RHL, the best-performing configuration of each hybrid model is evaluated further: PSO-SVM with a population of 50, HHO-SVM with a population of 200, and MFO-SVM with a population of 50. As shown in Fig. 13d, the MFO-SVM model with a population of 50 predicts RHL better than the other two models, while PSO-SVM and HHO-SVM are slightly inferior to MFO-SVM and show no significant difference from each other. Compared with the unoptimized SVM model presented in Table 6, however, all three hybrid models have significantly higher prediction capacity, which confirms that the optimization algorithms are effective in improving the rockburst prediction ability of the model (Fig. 14).
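
The score-and-sum comparison can be sketched as follows, using the test-set values reported in this section. The exact scoring rule behind Fig. 13 is not spelled out here, so the rule below is an assumption: on each index a model scores 1 plus the number of models it strictly beats, tied models share a score, and the scores are summed.

```python
# Test-set values reported in this section for the best population of each model.
reported = {
    "PSO-SVM": {"PRE": 0.8198, "kappa": 0.8477, "ACC": 0.9265},
    "HHO-SVM": {"PRE": 0.8660, "kappa": 0.8461, "ACC": 0.9265},
    "MFO-SVM": {"PRE": 0.9063, "kappa": 0.9094, "ACC": 0.9559},
}


def rank_scores(results):
    """Sum per-index rank scores (assumed rule: higher value gives higher score)."""
    totals = {name: 0 for name in results}
    metrics = list(next(iter(results.values())))
    for metric in metrics:
        for name, values in results.items():
            # 1 + number of models that are strictly worse on this index.
            beaten = sum(values[metric] > other[metric] for other in results.values())
            totals[name] += 1 + beaten
    return totals


totals = rank_scores(reported)
```

Under this assumed rule the totals are 9 for MFO-SVM and 4 each for PSO-SVM and HHO-SVM, which matches the qualitative finding that MFO-SVM leads and the other two are not clearly separated.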

When evaluating the results, the confusion matrix shows the agreement between the predicted and actual values of the model [44, 59, 60, 61]. The numbers on the diagonal from the top left to the bottom right of the confusion matrix give the number of samples whose predicted values agree with the actual values, while the other positions give the number of samples whose predicted value does not match the actual value. The confusion matrices of the three hybrid classification models are obtained using the optimal population size and corresponding parameters of each model. As shown in Fig. 15, among the three hybrid models, the data dispersion of MFO-SVM is the smallest, that is, its samples are the most concentrated on the diagonal; PSO-SVM and HHO-SVM are slightly inferior to MFO-SVM but also have good classification prediction ability.
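
A minimal sketch of how such a matrix is built from paired actual and predicted labels is given below. The label names and the seven samples are hypothetical and serve only to show where agreements and disagreements land in the matrix.

```python
def build_confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        cm[index[a]][index[p]] += 1
    return cm


# Hypothetical hazard levels and predictions (not the study's data).
levels = ["low", "medium", "high"]
actual = ["low", "low", "medium", "high", "medium", "high", "low"]
predicted = ["low", "medium", "medium", "high", "medium", "medium", "low"]

cm = build_confusion_matrix(actual, predicted, levels)

# Diagonal entries: predictions that agree with the actual level.
agree = sum(cm[i][i] for i in range(len(levels)))
# Everything off the diagonal is a misclassification.
disagree = len(actual) - agree
```

Here five of the seven samples fall on the diagonal and two fall off it; a model with less dispersion has fewer and smaller off-diagonal entries.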

In conclusion, on the test set, the MFO-SVM model with a population of 50 performs best in RHL prediction among the three hybrid classification models, while PSO-SVM and HHO-SVM are slightly inferior to MFO-SVM. All three hybrid models, however, perform significantly better than the unoptimized SVM model.

5 Conclusions

Rockburst is a common disaster in deep hard rock mines and tunnel engineering and is potentially dangerous to personnel and equipment. This paper studied the high-precision prediction of RHL using SVM classification, which is vital for rockburst hazard prevention and control in mines as well as tunneling projects.

The input parameters of the model in this paper include the total energy, apparent stress, moment magnitude, and other variables from microseismic monitoring. Compared with traditional rockburst prediction methods, which are mostly based on microseismic monitoring, machine learning can better capture the relationship between multiple highly nonlinear variables.

Three optimization strategies were combined with the SVM model to select its best combination of hyperparameters. After optimization by the PSO, HHO, and MFO algorithms, the three hybrid classification models (PSO-SVM, HHO-SVM, and MFO-SVM) and the unoptimized SVM model were comprehensively evaluated, yielding the optimal combination of hyperparameters and the model with the best classification capacity.

Experimental results showed that, on the same dataset, MFO-SVM performs best among the three hybrid classification models proposed in this article, with the highest ACC and PRE (ACC = 0.9559, PRE = 0.9063), and is more suitable for rockburst prediction using microseismic information. With the three optimization algorithms, the prediction performance of the SVM model improved significantly, and the kappa coefficients of the PSO-SVM, HHO-SVM, and MFO-SVM prediction models reached 0.8477, 0.8461, and 0.9094, respectively. Given the complex relationship between each input variable and the rockburst hazard, these results are highly satisfactory.

The results showed that the three optimization methods improve the prediction capacity of the SVM model to different degrees. The comparison shows that MFO-SVM has the best comprehensive prediction performance and can be applied to the prediction of rockburst hazards based on microseismic information. One limitation of the SVM-based rockburst prediction in this paper is the small dataset, with only 343 microseismic monitoring samples in total. In addition, other characteristic parameters not covered in this study may affect the RHL. Therefore, as the sample data expand and more associated characteristic parameters are considered in the model, the prediction capacity of the hybrid classification model is expected to improve further.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Adoko AC, Gokceoglu C, Wu L, Zuo QJ (2013) Knowledge-based and data-driven fuzzy modeling for rockburst prediction. Int J Rock Mech Min Sci 61:86–95

Andrzej L, Zbigniew I (2009) Space-time clustering of seismic events and hazard assessment in the Zabrze-Bielszowice coal mine, Poland. Int J Rock Mech Min Sci 46:918–928

Barton N, Lien R, Lunde J (1974) Engineering classification of rock masses for the design of tunnel support. Rock Mech Rock Eng 6(4):189–236

Beck DA, Brady BHG (2002) Evaluation and application of controlling parameters for seismic events in hard-rock mines. Int J Rock Mech Min Sci 39:633–642

Bhandari AK, Kumar A, Singh GK (2015) Modified artificial bee colony based computationally efficient multilevel thresholding for satellite image segmentation using Kapur’s, Otsu and Tsallis functions. Expert Syst Appl 42(3):1573–1601

Bi L, Xie W, Zhao J (2019) Automatic recognition and classification of multi-channel microseismic waveform based on DCNN and SVM. Comput Geosci 123:111–120

Blake W, Hedley DGF (2003) Rockbursts, case studies from North American hardrock mines. Society for Mining, Metallurgy and Exploration Inc., New York, pp 121

Chen Y, Ma G, Wang H, Li T (2018) Evaluation of geothermal development in fractured hot dry rock based on three dimensional unified pipe-network method. Appl Therm Eng 136:219–228

Civicioglu P (2012) Transforming geocentric cartesian coordinates to geodetic coordinates by using differential search algorithm. Comput Geosci 46:229–247

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: MHS’95. Proceedings of the sixth international symposium on micro machine and human science, 4–6 Oct. 1995, New York, NY, USA, IEEE

Fan J, Dong T, Hu P, Peng C (2013) Failure behavior of deep hard rock with rockburst tendency. Min Eng Res 28(2):10–15

Feng GL, Feng XT, Chen BR, Xiao YX, Yu Y (2015) A microseismic method for dynamic warning of rockburst development processes in tunnels. Rock Mech Rock Eng 48(5):2061–2076

Goh ATC, Goh SH (2007) Support vector machines: their use in geotechnical engineering as illustrated using seismic liquefaction data. Comput Geotech 34:410–421

Hanks TC, Kanamori H (1979) A moment magnitude scale. J Geophys Res: Solid Earth 84(B5):2348–2350

Heal D, Potvin Y, Hudyma M (2006) Evaluating rockburst damage potential in underground mining. In: Yale, D.P. et al. (Eds.), Proceedings of 41st U.S. Symposium on Rock Mechanics (USRMS). USA, Curran Associates, Colorado School of Mines, 322–329

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872

Hoang ND, Bui DT (2018) Predicting earthquake-induced soil liquefaction based on a hybridization of kernel Fisher discriminant analysis and a least squares support vector machine: a multidataset study. Bull Eng Geol Env 77:191–204

Hoang ND, Pham AD (2016) Hybrid artificial intelligence approach based on metaheuristic and machine learning for slope stability assessment: a multinational data analysis. Expert Syst Appl 46:60–68

Huimin L, Fangyuan X, Baoju L, Min D (2021) Time series prediction method of rockburst hazard level based on CNN-LSTM. J Central South Univ (Nat Sci)

Hou KY, Shao GH, Wang HM et al (2018) Research on practical power system stability analysis algorithm based on modified SVM. Prot Control Mod Power Syst 3

Kaiser PK, Tannant DD, McCreath DR (1996) Canadian rockburst support handbook. Geomechanics Research Centre, Laurentian University, Sudbury, Ontario, p 314

Kohestani VR, Hassanlourad M, Ardakani A (2015) Evaluation of liquefaction potential based on CPT data using random forest. Nat Hazards 79:1079–1089

Leger JP (1991) Trends and causes of fatalities in South African mines. Saf Sci 14(3–4):169–185

Li T, Cai MF, Cai M (2007) A review of mining-induced seismicity in China. Int J Rock Mech Min Sci 44:1149–1171

Li T, Ma C, Zhu M, Meng L, Chen G (2017) Geomechanical types and mechanical analyses of rockbursts. Eng Geol 222:72–83

Li C, Zhou J, Armaghani DJ, Cao W, Yagiz S (2021) Stochastic assessment of hard rock pillar stability based on the geological strength index system. Geomech Geophys Geo-Energy GeoResour 7(2):47

Li C, Zhou J, Khandelwal M, Zhang X, Monjezi M, Qiu Y (2022) Six novel hybrid extreme learning machine–swarm intelligence optimization (ELM–SIO) models for predicting backbreak in open-pit blasting. Nat Resour Res 31(5):3017–3039

Li E, Yang F, Ren M, Zhang X, Zhou J, Khandelwal M (2021) Prediction of blasting mean fragment size using support vector regression combined with five optimization algorithms. J Rock Mech Geotech Eng 13(6):1380–1397

Li E, Zhou J, Shi X, Armaghani DJ, Yu Z, Chen X, Huang P (2020) Developing a hybrid model of salp swarm algorithm-based support vector machine to predict the strength of fiber-reinforced cemented paste backfill. Eng Comput 1–22

Lin B, Wei X, Junjie Z (2019) Automatic recognition and classification of multi-channel microseismic waveform based on DCNN and SVM. Comput Geosci 123:111–120

Lizhong T, Jinhui W, Jun Z, Xibing L (2011) Prediction of seismic apparent stress and deformation in large-scale mining mines and regional dangerous earthquakes. Chin J Rock Mech Eng 30(6):1168–1178

Marini F, Walczak B (2015) Particle swarm optimization (PSO). A tutorial. Chemom Intell Lab Syst 149:153–165

Mccreary R, Mcgaughey J, Potvin Y et al (1992) Results from MS monitoring, conventional instrumentation, and tomography surveys in the creation and thinning of a burst-prone sill pillar. Pure Appl Geophys 139:349–373

Mendecki AJ (1993) Keynote address: real time quantitative seismology in mines. In: Proceedings of Third International Symposium on Rockbursts and Seismicity in Mines 16–18 August 1993. Kingston, Ontario, Canada. 287–295

Min Q, Shaohui T, Bigen Xu (2013) Microseismic monitoring and prediction of ground pressure disaster in Ashele Copper Mine. Min Res Dev 3:58–63

Mirghasemi S, Andreae P, Zhang MJ (2019) Domain-independent severely noisy image segmentation via adaptive wavelet shrinkage using particle swarm optimization and fuzzy C-means. Expert Syst Appl 133:126–150

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249

Ortlepp WD (2005) RaSiM comes of age—a review of the contribution to the understanding and control of mine rockbursts. In proceedings of the sixth international symposium on rockburst and seismicity in mines, Perth, Western Australia 9–11

Pandiyan V, Caesarendra W, Tjahjowidodo T et al (2018) In-process tool condition monitoring in compliant abrasive belt grinding process using support vector machine and genetic algorithm. J Manuf Process 31:199–213

Poplawski RF (1997) Seismic parameters and rockburst hazard at Mt Charlotte mine. Int J Rock Mech Min Sci 34(8):1213–1228

Pu Y, Apel DB, Wang C, Wilson B (2018) Evaluation of burst liability in kimberlite using support vector machine. Acta Geophys 66(5):973–982

Schloerke B, Crowley J, Cook D et al (2011) GGally: extension to ggplot2

Shi XZ, Zhou J, Wu BB et al (2012) Support vector machines approach to mean particle size of rock fragmentation due to bench blasting prediction. Trans Nonferrous Met Soc China 22:432–441

Sokolova M, Lapalme G (2009) A systematic analysis of performance measures for classification tasks. Inf Process Manage 45:427–437

Su GS, Zhang XF, Yan LB (2008) Rockburst prediction method based on case reasoning pattern recognition. J Min Saf Eng 25(1):63–67

Trifu CI, Suorineni FT (2009) Use of MS monitoring for rockburst management at VALE INCO mines. In: Proc Seventh Int Symp Rock Burst Seism Mines, 20–23 August 2009, Dalian, China. 1105–1114

Vapnik VN (1995) The nature of statistical learning theory. Springer, New York

Wang S-m, Zhou J, Li C-q et al (2021) Rockburst prediction in hard rock mines developing bagging and boosting tree-based ensemble techniques. J Central South Univ 28:527–542

Wu JL (2014) Deformation and failure mechanism of surrounding rock under frequent blasting mining in Dongguashan Copper Mine, Master’s Thesis, Central South University

Xie C, Nguyen H, Bui XN, Nguyen VT, Zhou J (2021) Predicting roof displacement of roadways in underground coal mines using adaptive neuro-fuzzy inference system optimized by various physics-based optimization algorithms. J Rock Mech Geotech Eng 13(6):1452–1465

Xiting F, Yaxun X, Guangliang F, Zhibin Y, Bingrui C, Chengxiang Y, Guoshao Su (2019) Study on rockburst incubation process. Chin J Rock Mech Eng 38(4):649–673

Young-Su K, Byung-Tak K (2006) Use of artificial neural networks in the prediction of liquefaction resistance of sands. J Geotech Geoenviron Eng 132:1502–1504

Zhang CQ, Zhou H, Feng XT (2011) An index for estimating the stability of brittle surrounding rock mass: FAI and its engineering application. Rock Mech Rock Eng 44:401–414

Zhao HB (2008) Slope reliability analysis using a support vector machine. Comput Geotech 35:459–467

Zhao HB, Ru Z-L, Yin S (2007) Updated support vector machine for seismic liquefaction evaluation based on the penetration tests. Mar Georesour Geotechnol 25:209–220

Zhou KP, Gu DS (2004) Application of GIS-based neural network with fuzzy self-organization to assessment of rockburst tendency. Chin J Rock Mech Eng 23(18):3093–3097

Zhou J, Li XB, Shi XZ (2012) Long-term prediction model of rockburst in underground openings using heuristic algorithms and support vector machines. Saf Sci 50:629–644

Zhou J, Li XB, Mitri HS (2015) Comparative performance of six supervised learning methods for the development of models of hard rock pillar stability prediction. Nat Hazards 79:291–316

Zhou J, Li X, Mitri HS (2016) Classification of rockburst in underground projects: comparison of ten supervised learning methods. J Comput Civ Eng 30:4016003

Zhou J, Shi X, Li X (2016) Utilizing gradient boosted machine for the prediction of damage to residential structures owing to blasting vibrations of open pit mining. J Vib Control 22(19):3986–3997

Zhou J, Shi XZ, Huang RD, Qiu XY, Chen C (2016) Feasibility of stochastic gradient boosting approach for predicting rockburst damage in burst-prone mines. Trans Nonferrous Metals Soc China 26(7):1938–1945

Zhou J, Li X, Mitri HS (2018) Evaluation method of rockburst: state-of-the-art literature review. Tunn Undergr Space Technol 81:632–659

Zhou J, Li EM, Yang S et al (2019) Slope stability prediction for circular mode failure using gradient boosting machine approach based on an updated database of case histories. Saf Sci 118:505–518

Zhou T, Lu HL, Wang WW et al (2019) GA-SVM based feature selection and parameter optimization in hospitalization expense modeling. Appl Soft Comput 75:323–332

Zhou J, Koopialipoor M, Li EM et al (2020) Prediction of rockburst risk in underground projects developing a neuro-bee intelligent system. Bull Eng Geol Env 79:4265–4279

Zhou J, Qiu Y, Zhu S, Armaghani DJ, Khandelwal M, Mohamad ET (2021) Estimation of the TBM advance rate under hard rock conditions using XGBoost and Bayesian optimization. Undergr Space 6(5):506–515

Zhou J, Li C, Arslan CA et al (2021) Performance evaluation of hybrid FFA-ANFIS and GA-ANFIS models to predict particle size distribution of a muck-pile after blasting. Eng Comput 37:265–274

Zhou J, Huang S, Qiu Y (2022) Optimization of random forest through the use of MVO, GWO and MFO in evaluating the stability of underground entry-type excavations. Tunn Undergr Space Technol 124:104494

Zhou J, Shen X, Qiu Y, Shi X, Khandelwal M (2022) Cross-correlation stacking-based microseismic source location using three metaheuristic optimization algorithms. Tunn Undergr Space Technol 126:104570

Zhou J, Qiu Y, Armaghani DJ, Zhang W, Li C, Zhu S, Tarinejad R (2021) Predicting TBM penetration rate in hard rock condition: a comparative study among six XGB-based metaheuristic techniques. Geosci Front 12(3):101091

Zhou J, Huang S, Wang M et al (2022) Performance evaluation of hybrid GA–SVM and GWO–SVM models to predict earthquake-induced liquefaction potential of soil: a multi-dataset investigation. Eng Comput 38:4197–4215. https://doi.org/10.1007/s00366-021-01418-3

Funding

This research was funded by the National Science Foundation of China (42177164 and 41807259), the Distinguished Youth Science Foundation of Hunan Province of China (2022JJ10073), and the Innovation-Driven Project of Central South University (2020CX040).

Ethics declarations

Competing Interests

The authors declare no competing interests.

Highlights

• Support vector machine (SVM) with PSO, HHO, and MFO for rockburst prediction modeling.

• ACC, PRE, kappa coefficients, and confusion matrix are used to compare the effect of hybrid rockburst prediction models.

• MFO-SVM hybrid model has the best effect on rockburst prediction.

About this article

Cite this article

Zhou, J., Yang, P., Peng, P. et al. Performance Evaluation of Rockburst Prediction Based on PSO-SVM, HHO-SVM, and MFO-SVM Hybrid Models. Mining, Metallurgy & Exploration 40, 617–635 (2023). https://doi.org/10.1007/s42461-022-00713-x

DOI: https://doi.org/10.1007/s42461-022-00713-x