Abstract

In this paper, a stochastic gradient method based adaptive version of the radial basis function neural network has proposed to map the pattern features of the control chart patterns in different categories to recognize their belonging class. Adaptiveness has given over the spreadness and centers of Gaussian basis function appeared in the hidden nodes of the radial basis function neural network. Along with normal abnormalities in patterns, the mixture of different abnormal patterns has also considered capturing the worst possible conditions of abnormalities in real time. The advantages of the proposed method have appeared as very high recognition accuracy, minimum error in learning and generalize performance with small training dataset in control chart pattern recognition. Achieved performance has compared with the state of art results available in the literature which has applied feature based recognition using Support vector machine and Genetic algorithm. The proposed method has enhanced the recognition generalization of control chart patterns with simplicity in design and high level of decision confidence. The performances have achieved through the simulation-based experiments over a huge number of patterns containing ten different types of pattern and on average, 99.99% accuracy has achieved.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Control chart pattern (CCP) provides the condition of the process of consideration hence it has been used as a diagnostic tool in maintaining the quality of the process. Generally, a process is considered as out-of-control under two different appearances either sampled data of interest appears beyond the defined control limit or there is unnatural behavior appear in the pattern. It’s easy to detect the defect in the former case while it’s difficult to recognize the latter case condition because of inherent random noise. There are different possibilities of control chart patterns exist, among them there are six basics patterns exist [1] e.g. normal (NOR), cyclic (CYC), increasing trend (UT), decreasing trend (DT), upward shift (US) and downward shift (DS), as shown in Fig. 1 while other possibilities are derived from these six patterns as shown in Fig. 2. Except for the normal pattern, all other patterns are the indication of some kind of problem in the process, hence, it is very necessary to recognize the pattern precisely and efficiently to maintain the desired level of quality as well as reduce the cost. There are some methods like zone test or run rules available to recognize the CCPs but they carry high false alarms. Right detection of patterns not only provides the in-control/out-of-control but also knowledge of abnormality in patterns can help to understand the possible reason of cause. This makes troubleshooting process more efficient. Recently, the focus of research has shifted towards automated recognition of CCPs through the intelligent algorithmic approach. Different possibilities of the solution have been explored in the past like rule-based solution which mainly derived from the statistical characteristics of different patterns or computational intelligent based solution which mainly extract the features from patterns to classify the different types of pattern class. It was observed that statistical overlapping of pattern features may cause poor efficiency in the rule-based solution while computational intelligence-based solution like artificial neural network (ANN), extract and learn the features from the pattern directly. In the past, most of the research has considered the single abnormality in the control chart patterns [1,2,3]. But there may be a number of reasons that appeared abnormalities are the mixture of these single abnormalities [4, 16]. It is obvious that recognition of mix patterns is more challenging.

In this paper, an adaptive form of radial basis function network has applied for directly driven recognition of ten different possibilities of the control chart pattern. Six fundamental categories of patterns as shown in Fig. 1 and four mix categories of patterns as shown in Fig. 2 have considered in the training and test data set. Stochastic gradient descent based adaptiveness of Gaussian kernel function parameters (center position and function spreadness) have applied in each iteration of learning so that available features in input data could extract more easily and appropriate manner. It was observed that with the very small size of the training data set, robust recognition performance has been achieved over a very large test dataset. Obtained performance from the proposed method has shown superior outcome in compared to feature based recognition solution and other results available in the recent literature.

In Sect. 2, related work in the area of CCP recognition has discussed. The modeling of patterns has given in Sect. 3. The proposed work in details has presented in Sect. 4. The detail experimental results and analyses have discussed in Sect. 5 and the conclusion has given at the end.

2 Related work

Researchers have shown increasing interest in the past for solving the problem of automated recognition of control chart patterns. The number of researchers has given the attention to perform the recognition in the two-stage model, first, there were exclusive features extraction from the patterns and later some kind of classification strategy has applied. There are varieties of statistical and shape based features, which have been considered useful for control chart pattern recognition [2]. A comparison between feature based recognition with raw data based recognition through LVQ network has been explored [3]. Wang et al. [4] has applied the numerical fitting method along with the neural network to improve the performance. Based on the hybrid approach of independent component analysis (ICA) and decision tree (DT), Gauri et al. [5] has proposed the concurrent control chart recognition in which two unnatural variations appeared together. For vertical drilling process application, an expert system for control chart pattern based on shape features has been proposed in Ref. [6]. The shape and statistical features based recognition through multilayer perceptron neural network have proposed in Ref. [7]. Sequential forward selection and extreme learning machine have applied in [8] to improve the recognition performance of the control chart. Weighted support vector machine (WSVM) has applied in Ref. [9] for process monitoring and fault diagnosis. Consensus clustering framework has applied in Ref. [10] as an unsupervised method for control chart pattern recognition. A combination of SVM and ICA have applied in Ref. [11] to monitor and recognition of CCP. It is difficult to find the huge dataset from real-time to train the classifier for recognition purpose. De la Torre [12] has proposed the method to generate the patterns synthetically for CCP. Integration of statistical process control (SPC) and engineering process control (EPC) have been proposed in Ref. [13] in which Extreme learning machine (ELM) and random forest (RF) have applied to recognize the disturbance available in CCP. Application of SVM in the area of statistical process control has been explored in detail [14]. Possibilities to improve the mixed control chart pattern recognition performance of SVM by parametrizing through Genetic algorithm have discussed in Refs. [15, 16]. Based on neural architecture the recognition of control chart patterns (CCPs) has proposed in Refs. [17, 18]. Fitted line and fitted cosine curve of samples to recognize and analyze the unnatural patterns have been discussed in Ref. [19]. Feature-based recognition using an adaptive neuro-fuzzy inference system (ANFIS) along with fuzzy c-mean (FCM) has proposed in Ref. [20]. To overcome the degrading performance with large sample size in the conventional control chart, Shi et al. [21] has presented a chart which is based on the continuous ranked probability score. The shape and statistical feature based solution using RBFNN have applied in Ref. [22] and associated rules have applied to select the best set of shape and statistical features.

3 Modeling of data generation in CCP

In order to analyze the CCPs recognition, the Monte Carlo method is used to get the sample data. All patterns except for normal patterns illustrate that the process being monitored is not functioning correctly and requires adjustment. For this study, the patterns of all six basics patterns were generated using equations as shown in Table 1 and four mixture patterns have modeled using equations shown in Table 2. Each pattern was taken as a time series of 60 data points. The modeling equations were used to create the data points for the various patterns carrying \(\eta\) as the nominal mean value of the process variable under observation, \(\sigma\) as the standard deviation of the process variable, \(a\) as the amplitude of cyclic variations in a cyclic pattern (set to < 15), \(g\) as the gradient of an increasing trend pattern or a decreasing trend pattern (set in the range 0.2–0.5), b indicates the shift position in an upward shift pattern and a downward shift pattern (b = 0 before the shift and b = 1 at the shift and thereafter), s is the magnitude of the shift (set between 7.5 and 20), r(:) is a function that generates random numbers normally distributed between − 3 and 3, t is the discrete time at which the monitored process variable is sampled (set within the range 1–60), T is the period of a cycle in a cyclic pattern (set between 4 and 12 sampling intervals) and p(t) is the value of the sampled data point at time t.

4 Proposed work: RBF adaptiveness and its application in CCP

The supervised learning of the neural network is often thought-about because of the curve fitting method. The network is given with training pairs, every consisting of a vector from the associated input data set in conjunction with the desired network response. Through an outlined learning formula, the network performs the changes of its weights so error between the particular and desired response is reduced relative to some optimization criteria. Once trained, the network performs the interpolation within the output vector house. A nonlinear Mapping between the input and also the output vector areas are often achieved with radial basis function. The design of the RBF NN consists of three layers: an input layer, one layer of nonlinear process neurons referred to as hidden layer and also the output layer. The output of RBFNN is calculated consistent with Eq. (1).

where, \(x \in \Re^{n \times 1}\) is an input vector,\(\phi_{k} (.)\) is a function from \(\Re^{ + }\) to \(\Re\), \(\left\| . \right\|_{2}\) denotes the Euclidean norm, \(W_{ik}\) are the weights in the output layer. N is the number of neurons in the hidden layer, and \(c_{k} \in \Re^{n \times 1}\) is the RBF centers in the output space. For each neuron in the hidden layer, the Euclidean distance between its associated centers and the input to the network is computed. The output of the hidden layer is a nonlinear function of the distance. Finally, the output of the network is computed as a weighted sum of the hidden layer outputs. The functional form of \(\phi_{k} (.)\) is assumed to be given and is mostly Gaussian function as given by Eq. (2)

where, \(\sigma\) parameter controls the “width” of RBF and is commonly referred as spread parameter. The centers are defined points that are assumed to perform an adequate sampling of the input vector space. They are usually chosen as a subset of the input data. In the case of the Gaussian RBF, the spread parameter \(\sigma\) is commonly set according to the following heuristic relationship

where dmax is the maximum Euclidean distance between the selected centers and K is the number of centers. Using Eq. 3 the RBF of a neuron in the hidden layer of the network is given by

4.1 Adaptive RBF NN

In the fixed center based mostly RBF NN, there is just one adjustable parameter of network obtainable and it’s weights of the output layer. This approach is easy, but to perform adequate sampling of the input, an oversized variety of centers should be designated from the input data set. This produces a comparatively terribly massive network. In the planned methodology their square measure potentialities to regulate all the three sets of network parameters that weight, the position of the RBF centers and therefore the dimension of the RBF. Therefore, alongside the weights within the output layer, each the position of the centers moreover because the unfold parameter for each process unit within the hidden layer undergoes the method of supervised learning. The primary step in the development is to outline fast error value operate as

When the chosen RBF is Gaussian, Eq. (5) becomes

The equations for updating the network parameters are given by Eqs. (7)–(9)

where \(e(n) = \widehat{y(n)} - y_{d} (n),\;y_{d} (n)\),\(y_{d} \left( n \right)\) is the desired network output, and \(\mu_{w} ,\;\mu_{c} ,\;\mu_{\sigma }\) are appropriate learning parameters.

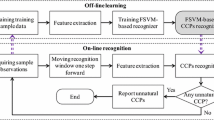

The working flow of the proposed adaptive RBF has shown in Fig. 3. Initially, with the predefined parameters’ values, several sets of different types of patterns have generated through the modeling equations. Pre-processing has applied in terms of scaling down the value of patterns in the range of [0 1]. The different number of the pattern set formed, where each set carries a different variety of patterns. A suitable architecture has applied to learn the pattern classification knowledge by assuming the target as the maximum value (equal to 1) for corresponding pattern class and minimum (equal to 0) for other pattern classes. The mean square error has considered for the error function to decide the quality of learning. Gradient method has applied to change the values of weights, centers, and spreadness. Under an iteration, each set of patterns (B1, B2,….Bn) as shown in Fig. 3 has appeared in the sequential fashion to increase the generalize quality of learning.

5 Experimental result and analysis

In this paper, there are three parts of the experimental module. In the first stage, the advantage of proposed adaptiveness in RBF network has shown in comparison to the static RBF over six basic patterns of CCP. In the second module, ARBF has applied for all 10 patterns (basics + mixture). In the third module, comparative performances have presented between the proposed solution and SVM-GA based approach [16]. Simulation experiments have been done in MATLAB environment.

5.1 Optimal pattern length

The pattern length is very crucial in pattern learning as well as their recognition. The small size of pattern length will not provide enough distinguish features from one pattern category to others while a very long length of the pattern will have more computational cost along with response delay. To get the effect of pattern length over learning and recognition performance, different sizes of pattern length have tested. Six basic forms of CCP have considered for experiment with the pattern length of 20,30,40,50,60 and 70 samples in time and adaptive RBF has applied for learning as well as for performance evolution under 5 independent trial over 600 training and 5400 test patterns and obtained mean performances have shown in Table 3. It was observed that with the pattern length of 20, 30 and 40 there was a large value of mean square error (MSE) in compare to pattern length of 50, 60 and 70. Learning with large pattern length was a comfort because of the availability of more features of the corresponding category. It is observed that as the pattern length is closer to the 60 samples, the MSE is small as well as the performance has achieved the maximum correct recognition and no significant benefit observed further even after increasing the length as shown in Table 3. Hence in this paper, all experiments have conducted with pattern length equal to 60 samples.

5.2 Performance analysis between static vs adaptive RBF

An optimal number of required hidden nodes in the RBF network is a key factor in deciding the quality of final performance. In most of the cases, pre-knowledge of required nodes number does not exist. Hence, the required number of optimal hidden nodes for static and adaptive RBF has been estimated by varying the number of nodes in ten different experimental trials and obtained final mean error obtained to understand the effects as shown in Table 4 and in Table 5. There was total of 6000 (1000 × 6) patterns have been generated by equations as shown in Table 1. Training has applied up to 100 iterations over 600 (100 × 6) patterns and remaining 5400 patterns have considered for test purpose. For SRBF, trials have given with 8, 10, 15 and 20 hidden nodes and observed that mean error has achieved minimum with 10 hidden nodes. For ARBF there were five different values of hidden nodes number, 15,20,25,30 and 40 have applied. All three learning parameters \(\mu_{w} ,\;\mu_{c} ,\;\mu_{\sigma }\) have selected as 0.1. It can observe from Table 4 that error was closer for 25, 30 and 40 nodes. To maintain better generalization, 25 hidden nodes have selected as the final value for further experimental work. The learning convergence for SRBF and ARBF with 10 and 25 hidden nodes have shown in Fig. 4. It can observe that there was better convergence in all trials for ARBF. The comparative mean CCP recognition performance over 10 independent trials for SRBF with 10 hidden nodes and ARBF with 25 nodes have shown in Table 6. It can observe that ADRBF true recognition performance has come around 99.9% while SRBF has given the recognition accuracy around 92.25% which is significantly very low in comparison. Decision confidence, which is a quality parameter of decision quality, defined as category values of a test pattern outcome over the different class. ARBF mean decision confidence overall patterns have shown in Fig. 5 and it is observed that with a very large margin, patterns have placed in their belonging classes. This quality is very useful against, some kind of temporary noise.

5.3 Performance analysis of ARBF over Mix CCP

As it was clear earlier that ARBF has shown the superior performance over six basic patterns recognition, hence ABRF has applied to recognize the mixture patterns. First a data set of 1000 group, where each group has 10 different types of patterns (total 10,000 patterns) have developed using the equations available in Tables 1 and 2. A part of total data set, 100 groups (total 1000 patterns) have considered for training purpose and up to 100 iterations, training has applied, while the remaining 900 groups (contains total 9000 patterns) have applied as test patterns. To find the optimal hidden nodes number, different possibilities of nodes number 20, 25, 30, 35, 40 have explored under 10 independent trials. Network with 35 hidden nodes have shown the better convergence hence has been selected for the further purpose and corresponding mean CCP recognition performances over test patterns for 10 independent trials have presented in Table 7. For the training data set, the obtained mean performance was 99.56% while for the test data set it has shown 99.9911%. Mean decision confidence for all the patterns have shown in Fig. 6 and observe that there is absolute accuracy in the decision for all 10 different pattern categories.

5.4 Comparative performance analysis of ARBF against SVM-GA [16] over Mixed CCP

Support vector machine has shown numerous applicability successfully in various applications. Selection of kernel and associated parameters values decide the outcome quality heavily. In [16], a combination of SVM with the genetic algorithm (GA) has applied to recognize the mixed CCP. A series of eight statistical features (mean, standard deviation, mean-square value, autocorrelation, positive cusum, negative cusum, skewness, kurtosis) and five shape features (slope, the number of mean crossing, the number of least-square line crossing, the area between the pattern and its mean line, the area between the pattern and its least-square line) have applied as features set which have been extracted from patterns. These features have been applied to MSVM and parameters optimization has done through GA. Total 100 groups of the pattern have been generated, in which 50 groups have been considered for training.

To make the comparison performance more meaningful, the same set of data modeling has been done as given in Ref. [16]. Learning has given over training data of 50 groups (each group carried 10 patterns) up to 100 iterations. But instead of taking only 50 new groups (total 500 patterns) of data set for test purpose, 450 new groups (4500 patterns) of patterns have been applied to get the performance of purposed method closer in the long run of a practical situation. The number of hidden nodes have considered as 35. The obtained mean performance by proposed method over test patterns of all five trials has compared with performance obtained in Ref. [16] as shown in Table 8. On average (estimated as the ratio of the sum of accurate % recognition under all different pattern categories to the number of different pattern categories), there was 97.6% accurate recognition reported in [16] while the proposed method has delivered the 99.9937% recognition accuracy over the vast number of test patterns.

6 Conclusion

Automated control chart pattern recognition has shown a remarkable improvement in the quality control for the manufacturing industrial process. In this paper, an adaptive radial basis function has applied to recognize the wide form of patterns variations with very accuracy and precision. The proposed method has freedom from any kind of feature extraction requirement, which cause to improve the speed of recognition. A huge number of test patterns have applied to ensure the generalization characteristics of outcomes. Performance evaluation has been done over fundamental six patterns of CCP as well as the mixture of them. The proposed method has shown much better recognition accuracy over feature based recognition process available in the literature, using Support vector machine and Genetic algorithm. It has also observed that there was a very crisp level of decision strength available in outcomes which make the solution more robust in the presence of noise.

References

Wang C-H, Kuo W (2007) Identification of control chart patterns using wavelet filtering and robust fuzzy clustering. J Intell Manuf 18(3):343–350

Gauri SK, Chakraborty S (2007) A study on the various features for effective control chart pattern recognition. Int J Adv Manuf Technol 34(3–4):385–398

Jiang P, Liu D, Zeng Z (2009) Recognizing control chart patterns with neural network and numerical fitting. J Intell Manuf 20:625

Wang C-H, Dong T-P, Kuo W (2009) A hybrid approach for identification of concurrent control chart patterns. J Intell Manuf 20(4):409–419

Gauri SK (2010) Control chart pattern recognition using feature-based learning vector quantization. Int J Adv Manuf Technol 48(9–12):1061–1073

Bag M (2012) An expert system for control chart pattern recognition. Int J Adv Manuf Technol 62(1–4):291–301

Ranaee V, Ebrahimzadeh A (2013) Control chart pattern recognition using neural networks and efficient features: a comparative study. Pattern Anal Appl 16(3):321–332

Zhang Y, Lin X (2014) Recognition method for control chart patterns based on improved sequential forward selection and extreme learning machine. In: International conference on progress in informatics and computing (PIC), IEEE. https://doi.org/10.1109/pic.2014.6972300

Xanthopoulos P, Razzaghi T (2014) A weighted support vector machine method for control chart pattern recognition. Comput Ind Eng 70:134–149

Haghtalab S, Xanthopoulos P, Madani K (2015) A robust unsupervised consensus control chart pattern recognition framework. Expert Syst Appl 42(19):6767–6776

Kao L-J, Lee T-A, Luhine C-J (2016) A multi-stage control chart pattern recognition scheme based on independent component analysis and support vector machine. J Intell Manuf 27(3):653–664

De la Torre Gutierrez H (2016) Estimation and generation of training patterns for control chart pattern recognition. Comput Ind Eng 95:72–82

Shao YE, Chiu C-C (2016) Applying emerging soft computing approaches to control chart pattern recognition for an SPC–EPC process. Neurocomputing 201:19–28

Cuentas S, Peñabaena-Niebles R, Garcia E (2017) Support vector machine in statistical process monitoring: a methodological and analytical review. Int J Adv Manuf Technol 91:485

Wang C, Zhao C (2017) Recognition of control chart pattern using improved supervised locally linear embedding and support vector machine. Procedia Eng 174:281–288

Zhang M, Cheng W (2015) Recognition of mixture control chart pattern using multiclass support vector machine and genetic algorithm based on statistical and shape features. Math Probl Eng, Article ID 382395

Shaban A, Shalaby MA (2010) A double neural network approach for the automated detection of quality control chart patterns. Int J Rapid Manuf 1(3):278–291

Hassan A (2011) An improved scheme for online recognition of control chart patterns. Int J Comput Aided Eng Technol 3(3–4):309–321

Lesany SA, Fatemi Ghomi SMT, Koochakzadeh A (2018) Development of fitted line and fitted cosine curve for recognition and analysis of unnatural patterns in process control charts. Pattern Anal Appl. https://doi.org/10.1007/s10044-018-0682-7

Zaman M, Hassan A (2018) Improved statistical features-based control chart patterns recognition using ANFIS with fuzzy clustering. Neural Comput Appl. https://doi.org/10.1007/s00521-018-3388-2

Shi L, Gong L, Lin DKJ (2018) CRPS chart: simultaneously monitoring location and scale under data-rich environment. Qual Reliab Eng Int 34(4):681–697. https://doi.org/10.1002/qre.2280

Addeh Abdoljalil, Khormali Aminollah, Golilarz Noorbakhsh Amiri (2018) Control chart pattern recognition using RBF neural network with new training algorithm and practical features. ISA Trans 79:202–216

Acknowledgements

This research has done in Manuro Tech Research Pvt. Ltd., Bangalore, India, under the Innovative solution for Future Technology program.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kadakadiyavar, S., Ramrao, N. & Singh, M.K. Efficient mixture control chart pattern recognition using adaptive RBF neural network. Int. j. inf. tecnol. 12, 1271–1280 (2020). https://doi.org/10.1007/s41870-019-00381-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41870-019-00381-z