Abstract

In this paper, we compare the optimal homotopy asymptotic method (OHAM) and the perturbation-iteration method (PIM) for solving various nonlinear differential equations. Both methods are relatively new and have been reported to be powerful tools for solving differential equations. We present numerical examples to support these claims and use them to examine the convergence of the two methods.


1 Introduction

The mathematical description of many scientific and engineering problems leads to nonlinear differential equations, most of which cannot be solved analytically by traditional methods. Such problems are therefore often handled by approximate methods such as the Adomian decomposition method, homotopy decomposition method, Taylor collocation method, differential transform method, homotopy perturbation method and variational iteration method (Adomian 1988; Atangana and Botha 2012; Atangana and Belhaouari 2013; Bildik et al. 2006; Bulut et al. 2003; He 1999, 2005; Bayram et al. 2012; Bildik and Konuralp 2006; Bildik and Deniz 2015a, b; Öziş and Ağırseven 2008). These methods can handle nonlinear problems, but the regions in which their series solutions converge are often small compared with the domain on which a solution is required. To overcome this limitation, researchers have recently proposed several new methods.

In the present study, we apply the perturbation-iteration method (PIM) and the optimal homotopy asymptotic method (OHAM) to obtain approximate solutions of various nonlinear differential equations. Each method has its own characteristics and merits, which we examine below. PIM was developed recently by Pakdemirli et al., who modified the well-known perturbation method to construct it. It has been applied efficiently to several strongly nonlinear systems and yields accurate approximate results (Aksoy and Pakdemirli 2010; Şenol et al. 2013; Aksoy et al. 2012; Dolapçı et al. 2013). On the other hand, Vasile Marinca et al. developed OHAM for solving many different types of differential equations (Marinca and Herişanu 2008; Marinca et al. 2008, 2009). This method is straightforward and reliable for approximating the solutions of many nonlinear problems (Gupta and Ray 2014a, b, 2015a, b; Iqbal et al. 2010; Ali et al. 2010). OHAM also provides a convenient way to control the convergence of the approximation series and to adjust its convergence region. Our purpose is to solve some nonlinear problems with both methods and to test their convergence on these examples. The results further confirm that both methods are effective for solving nonlinear problems.

2 Perturbation-Iteration Method

In this section, we summarize the perturbation-iteration algorithms. They are classified by the number of correction terms in the perturbation expansion (n) and by the highest order of derivatives retained in the Taylor expansion (m); an algorithm of this type is denoted PIA(n, m) (Aksoy and Pakdemirli 2010; Şenol et al. 2013).

2.1 PIA(1,1)

In order to illustrate the algorithm, consider a second-order differential equation in closed form:

where y = y(x) and ɛ is the perturbation parameter. For PIA(1,1), we take one correction term from the perturbation expansion:

Substituting (2) into (1) and expanding in a Taylor series that retains only first-order derivatives gives

Rearranging Eq. (3) yields a linear second-order differential equation as:

Note that all derivatives are evaluated at ɛ = 0. Starting from an initial guess \(y_0\), we obtain \((y_c)_0\) from Eq. (4) and then \(y_1\) from Eq. (2). Repeating these two steps with (2) and (4) gives an iterative scheme that yields satisfactory approximations for the equation under consideration.
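Because the displayed equations are missing from this text, the block below gives the form that Eqs. (1)–(4) take in the standard PIA(1,1) formulation of Aksoy and Pakdemirli (2010); we assume, but cannot confirm from this text, that the paper uses the same notation. Subscripts on F denote partial derivatives, all evaluated at ɛ = 0 and at the current iterate \(y_n\).

```latex
\begin{align}
& F(y'', y', y, \varepsilon) = 0, \tag{1}\\
& y_{n+1} = y_n + \varepsilon\,(y_c)_n, \tag{2}\\
& F + F_y\,(y_c)_n\,\varepsilon + F_{y'}\,(y_c)_n'\,\varepsilon
  + F_{y''}\,(y_c)_n''\,\varepsilon + F_{\varepsilon}\,\varepsilon = 0, \tag{3}\\
& (y_c)_n'' + \frac{F_{y'}}{F_{y''}}\,(y_c)_n' + \frac{F_y}{F_{y''}}\,(y_c)_n
  = -\frac{F/\varepsilon + F_{\varepsilon}}{F_{y''}}. \tag{4}
\end{align}
```

Equation (4) is obtained by dividing (3) by \(\varepsilon F_{y''}\). It is linear in \((y_c)_n\) with coefficients known from the previous iterate, which is what keeps each iteration tractable.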

2.2 PIA(1,2)

Unlike PIA(1,1), PIA(1,2) takes n = 1 and m = 2; that is, second-order derivatives are also retained in the Taylor expansion:

or by rearranging

Again, all derivatives are evaluated at ɛ = 0. Using (2) and (6), an iterative scheme is constructed for the particular equation under consideration.
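Eqs. (5) and (6) are likewise not reproduced here. As a generic illustration of what retaining second-order derivatives means, write \(u = (y_c)_n\) and \(G(\varepsilon) = F(y_n'' + \varepsilon u'', y_n' + \varepsilon u', y_n + \varepsilon u, \varepsilon)\), so that \(G(0) = F\). The second-order Taylor expansion about ɛ = 0 is shown below; how the terms quadratic in u are treated when this is rearranged into Eq. (6) follows the original PIA papers and is not assumed here.

```latex
\begin{align*}
& G(0) + \varepsilon\,G'(0) + \tfrac{\varepsilon^2}{2}\,G''(0) = 0,\\
& G'(0) = F_y\,u + F_{y'}\,u' + F_{y''}\,u'' + F_{\varepsilon},\\
& G''(0) = F_{yy}\,u^2 + F_{y'y'}\,u'^2 + F_{y''y''}\,u''^2
  + 2F_{yy'}\,u u' + 2F_{yy''}\,u u'' + 2F_{y'y''}\,u' u''\\
& \qquad\qquad + 2F_{y\varepsilon}\,u + 2F_{y'\varepsilon}\,u' + 2F_{y''\varepsilon}\,u'' + F_{\varepsilon\varepsilon}.
\end{align*}
```

Keeping only \(G(0) + \varepsilon G'(0)\) recovers the first-order expansion used by PIA(1,1).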

3 Optimal Homotopy Asymptotic Method

In order to review the basic principles of OHAM, let us consider the nonlinear differential equation:

where g(x) is a source function and L, N and B are the linear, nonlinear and boundary operators, respectively. First, we construct a homotopy \(h(\phi(x, p), p)\) that satisfies

where \(\phi(x, p)\) is an unknown function, \(p \in [0, 1]\) is an embedding parameter, and H(p) is an auxiliary function that is nonzero for p ≠ 0 and satisfies H(0) = 0. Clearly, \(\phi(x, 0) = y_0(x)\) and \(\phi(x, 1) = y(x)\). Hence, as the embedding parameter p increases from 0 to 1, the solution \(\phi(x, p)\) deforms from the initial guess \(y_0(x)\) to the exact solution y(x) of the original nonlinear differential equation. The initial guess \(y_0(x)\) is computed from (8) with p = 0:
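Since Eqs. (7)–(9) are not reproduced in this text, the block below gives them in the form commonly used in the OHAM literature (e.g., Marinca and Herişanu 2008). We assume the paper follows this form, which is consistent with the residual \(R = L(y^{(m)}) + g(x) + N(y^{(m)})\) used later.

```latex
\begin{align}
& L(y(x)) + g(x) + N(y(x)) = 0, \qquad B\!\left(y, \tfrac{dy}{dx}\right) = 0, \tag{7}\\
& (1 - p)\,\big[L(\phi(x,p)) + g(x)\big]
  = H(p)\,\big[L(\phi(x,p)) + g(x) + N(\phi(x,p))\big],
  \qquad B\!\left(\phi, \tfrac{\partial \phi}{\partial x}\right) = 0, \tag{8}\\
& L(y_0(x)) + g(x) = 0, \qquad B\!\left(y_0, \tfrac{dy_0}{dx}\right) = 0. \tag{9}
\end{align}
```

Setting p = 0 in (8) and using H(0) = 0 gives (9); setting p = 1 and using H(1) ≠ 0 recovers (7).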

For this study, we choose the auxiliary function H(p) in the form:

for simplicity. Here \(C_1, C_2, \ldots\) are constants to be determined later. More recently, Herişanu et al. proposed a generalized auxiliary function:

where \(H_i(t, C_i)\), i = 1, 2, …, are auxiliary functions. Examples of such generalized auxiliary functions are given in Herişanu and Marinca (2012) and Herişanu et al. (2015). Expanding the solution of Eq. (8) in a Taylor series in p gives

Substituting (11) and (10) into (8), collecting like powers of p and setting their coefficients to zero, we obtain the linear equations:

and in general form

where \(N_m(y_0, y_1, \ldots, y_m)\) is the coefficient of \(p^m\) in the expansion of \(N(\phi(x, p))\) about the embedding parameter p:
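The standard OHAM formulation (Marinca and Herişanu 2008) gives the following forms for the auxiliary function, the expansion of \(\phi\), the first- and higher-order problems and the expansion of N. We assume these correspond to Eqs. (10)–(14), which are not reproduced here; each \(y_k\), k ≥ 1, is subject to \(B(y_k, dy_k/dx) = 0\).

```latex
\begin{align*}
& H(p) = p\,C_1 + p^2 C_2 + \cdots,\\
& \phi(x, p; C_i) = y_0(x) + \sum_{k \ge 1} y_k(x; C_1, \ldots, C_k)\,p^k,\\
& L(y_1) = C_1\,N_0(y_0),\\
& L(y_k) - L(y_{k-1}) = C_k\,N_0(y_0)
  + \sum_{i=1}^{k-1} C_i\,\big[L(y_{k-i}) + N_{k-i}(y_0, \ldots, y_{k-i})\big],
  \quad k \ge 2,\\
& N(\phi(x, p; C_i)) = N_0(y_0) + \sum_{m \ge 1} N_m(y_0, y_1, \ldots, y_m)\,p^m.
\end{align*}
```

For k = 2 the recursion reads \(L(y_2) - L(y_1) = C_2 N_0(y_0) + C_1[L(y_1) + N_1(y_0, y_1)]\).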

Previous studies have shown that the convergence of series (11) depends on the constants \(C_1, C_2, \ldots\). If series (11) converges at p = 1, then

Generally speaking, the solution of Eq. (7) can be determined approximately in the form:

Substituting (16) into (7) yields the following residual:

If \(R(x, C_i) = 0\), the approximation \(y^{(m)}(x, C_i)\) is the exact solution. To determine \(C_1, C_2, \ldots\), an interval [a, b] is chosen and the optimal values of the constants are obtained by the method of least squares:

where \(R = L(y^{(m)}) + g(x) + N(y^{(m)})\) is the residual and

Once the constants are found, the approximate solution of order m follows. Alternatively, the constants \(C_1, C_2, \ldots\) can be determined from the collocation conditions

where \(k_i \in (a, b)\).
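The least-squares step can be illustrated on a toy problem that is not one of the paper's examples: \(y' + y^2 = 0\), \(y(0) = 1\), with exact solution \(1/(1 + x)\). Choosing (hypothetically) \(L(y) = y'\), \(g(x) = 0\) and \(N(y) = y^2\), the zeroth-, first- and second-order problems give \(y_0 = 1\), \(y_1 = C_1 x\) and \(y_2 = (C_1 + C_2 + C_1^2)x + C_1^2 x^2\), so that \(y^{(2)} = 1 + (2C_1 + C_2 + C_1^2)x + C_1^2 x^2\). The sketch evaluates \(J(C_1, C_2) = \int_0^1 R^2\,dx\) with Simpson's rule and, instead of solving \(\partial J / \partial C_i = 0\) analytically, simply minimizes J over a grid of candidate constants; this brute-force search is our simplification, not the procedure used in the paper.

```python
"""Toy OHAM least-squares step (hypothetical problem, not from the paper).

Problem: y' + y**2 = 0, y(0) = 1, exact solution 1/(1 + x).
Assumed split: L(y) = y', g(x) = 0, N(y) = y**2, so y0 = 1 and
    y2(x) = 1 + (2*C1 + C2 + C1**2) * x + C1**2 * x**2.
"""


def y_approx(x, c1, c2):
    """Second-order OHAM approximation y^(2)(x; C1, C2) for the toy problem."""
    return 1.0 + (2 * c1 + c2 + c1 ** 2) * x + c1 ** 2 * x ** 2


def residual(x, c1, c2):
    """R = L(y) + g + N(y) = y' + y**2 evaluated on the approximation."""
    dy = (2 * c1 + c2 + c1 ** 2) + 2 * c1 ** 2 * x
    return dy + y_approx(x, c1, c2) ** 2


def functional_j(c1, c2, a=0.0, b=1.0, n=100):
    """J(C1, C2) = integral of R**2 over [a, b], composite Simpson's rule (n even)."""
    h = (b - a) / n
    total = residual(a, c1, c2) ** 2 + residual(b, c1, c2) ** 2
    for i in range(1, n):
        weight = 4 if i % 2 else 2
        total += weight * residual(a + i * h, c1, c2) ** 2
    return total * h / 3


def least_squares_grid(c1_range=(-40, 0), c2_range=(-20, 20), scale=20):
    """Minimize J over the grid C = i / scale (a crude stand-in for dJ/dC_i = 0)."""
    best = None
    for i in range(c1_range[0], c1_range[1] + 1):
        for j in range(c2_range[0], c2_range[1] + 1):
            c1, c2 = i / scale, j / scale
            value = functional_j(c1, c2)
            if best is None or value < best[0]:
                best = (value, c1, c2)
    return best


if __name__ == "__main__":
    j_min, c1, c2 = least_squares_grid()
    print(f"C1 = {c1:.2f}, C2 = {c2:.2f}, J = {j_min:.3e}")
    for x in (0.25, 0.5, 1.0):
        err = abs(y_approx(x, c1, c2) - 1.0 / (1.0 + x))
        print(f"x = {x:.2f}: |y2 - exact| = {err:.2e}")
```

A finer grid or a gradient-based minimizer would refine the constants; the grid version is kept only because it is easy to check. The choice \(C_1 = -1\), \(C_2 = 0\) reproduces the Maclaurin terms \(1 - x + x^2\) of the exact solution, but the least-squares constants on [0, 1] need not equal these values.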

4 Numerical Examples

In this section, we examine examples with known analytical or numerical solutions in order to compare the convergence of the two methods. The comparisons are not intended to be definitive; they are meant only to give the reader some insight into the relative efficiency of the two methods.

Example 1 Consider the following nonlinear differential equation (Fu 1989):

which has no known closed-form exact solution.

4.1 PIA(1,1)

For the equation considered, an artificial perturbation parameter is inserted as follows:

Performing the required calculations

for the formula (3) and setting ɛ = 1 yields

We start the iteration by taking a trivial solution which satisfies the given initial conditions:

Substituting (25) into the iteration formula (24), we have

Inserting Eq. (26) into Eq. (2) and applying the initial conditions we get

Note that \(y_1\) is not the first correction term; it is the approximate solution after the first iteration. Repeating the same procedure gives successively more accurate approximations:

4.2 PIA(1,2) Solution

As in the previous case, we construct a perturbation–iteration algorithm by taking one correction term in the perturbation expansion and two derivatives in the Taylor series. Then the algorithm takes the simplified form:

Using the trivial solution \(y_0 = 1\), we have

Substituting the solution of (31) into (2) and applying the initial conditions yields

Following the same procedure using (32), the second iteration is obtained as

We do not give higher iterations here for brevity. Note that PIA(1,2) produces a functional expansion, whereas PIA(1,1) produces a polynomial expansion.

4.3 OHAM Solution

We have

The zeroth-order problem is:

from which we obtain

Substituting Eq. (36) into (12), we get the first-order problem:

and its solution is

The second-order problem is

with the solution

We can obtain the approximate solution of second order from Eqs. (36), (38), (40) and (16) for m = 2:

As mentioned in the previous section, the constants \(C_1\) and \(C_2\) can be found from Eq. (20) and

Substituting \(x = \frac{1}{2},\frac{3}{4}\) into Eq. (42):

and solving (43) we obtain

and correspondingly the approximate solution of the second order takes the following form:

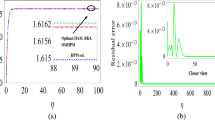

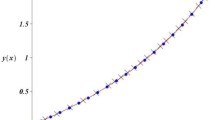

More accurate approximations can be computed by continuing the same procedure with a computer program; we do not give higher iterations because of the large amount of computation involved. Table 1 and Fig. 1 compare the PIM solutions, the OHAM solution and the Runge–Kutta solution. PIA(1,2) gives better results than OHAM, even for m = 2. Table 2 lists the absolute errors for different m.

Example 2 Consider the following nonlinear differential equation:

with the exact solution \(y\left( x \right) = { \ln } \left( {{ \cos } x} \right)\).
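Because the equation itself is not reproduced here, the sketch below checks the stated exact solution against a Runge–Kutta integration under an assumption of ours: that the problem is \(y'' + (y')^2 + 1 = 0\) with \(y(0) = y'(0) = 0\). The function \(\ln(\cos x)\) does satisfy this equation, since \(y' = -\tan x\) and \(y'' = -\sec^2 x = -1 - \tan^2 x = -1 - (y')^2\). The integrator is a plain fixed-step classical RK4, which may differ from the Runge–Kutta scheme used for the tables.

```python
import math


def rhs(x, state):
    """First-order system for the assumed equation y'' = -1 - (y')**2, state = (y, y')."""
    y, dy = state
    return (dy, -1.0 - dy * dy)


def rk4(f, x0, state0, x_end, steps):
    """Classical fourth-order Runge-Kutta with a fixed step size."""
    h = (x_end - x0) / steps
    x, s = x0, tuple(state0)
    for _ in range(steps):
        k1 = f(x, s)
        k2 = f(x + h / 2, tuple(si + h / 2 * ki for si, ki in zip(s, k1)))
        k3 = f(x + h / 2, tuple(si + h / 2 * ki for si, ki in zip(s, k2)))
        k4 = f(x + h, tuple(si + h * ki for si, ki in zip(s, k3)))
        s = tuple(si + h / 6 * (a + 2 * b + 2 * c + d)
                  for si, a, b, c, d in zip(s, k1, k2, k3, k4))
        x += h
    return s


if __name__ == "__main__":
    for x_end in (0.25, 0.5, 1.0):
        y_num, _ = rk4(rhs, 0.0, (0.0, 0.0), x_end, steps=100)
        exact = math.log(math.cos(x_end))
        print(f"x = {x_end:.2f}: RK4 = {y_num:.10f}, ln(cos x) = {exact:.10f}, "
              f"error = {abs(y_num - exact):.2e}")
```

The solution is singular at \(x = \pi/2\), where \(\cos x = 0\), so the comparison is only meaningful on intervals well inside \([0, \pi/2)\).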

4.4 PIA(1,1)

For the equation considered, an artificial perturbation parameter is inserted as follows:

Performing the required calculations for the formula (3) yields

and setting ɛ = 1

We start the iteration by taking a trivial solution which satisfies the given initial conditions:

Substituting (50) into the iteration formula (49), we have

Inserting Eq. (51) into Eq. (2) and applying the initial conditions we get

Note that \(y_1\) is not the first correction term; it is the approximate solution after the first iteration. Repeating the same procedure gives a more accurate approximation:
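The displayed equations of this example are missing from this text, so the sketch below works under two assumptions of ours: that the equation is \(y'' + (y')^2 + 1 = 0\) with \(y(0) = y'(0) = 0\) (which \(\ln(\cos x)\) satisfies), and that the artificial parameter multiplies the nonlinear term, \(F = y'' + \varepsilon (y')^2 + 1\). At ɛ = 0 this gives \(F_{y''} = 1\), \(F_{y'} = F_y = 0\) and \(F_\varepsilon = (y_n')^2\), so Eq. (4) with ɛ = 1 reduces to \(y_{n+1}'' = -1 - (y_n')^2\). The iterates are then polynomials that can be computed exactly with rational arithmetic; they need not coincide with the paper's iterates if its parameter placement differs.

```python
from fractions import Fraction


def deriv(p):
    """Derivative of a polynomial given as coefficients [a0, a1, a2, ...]."""
    return [k * c for k, c in enumerate(p)][1:] or [Fraction(0)]


def integ(p):
    """Antiderivative with zero constant term, so the result vanishes at x = 0."""
    return [Fraction(0)] + [c / (k + 1) for k, c in enumerate(p)]


def mul(p, q):
    """Product of two coefficient lists."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def pia11_step(y):
    """One PIA(1,1) iteration under the assumed F = y'' + eps*(y')**2 + 1.

    Eq. (4) with eps = 1 gives (y_c)'' = -(y_n'' + 1 + (y_n')**2), hence
    y_{n+1}'' = -1 - (y_n')**2 with y_{n+1}(0) = y_{n+1}'(0) = 0.
    """
    dy = deriv(y)
    rhs = [-c for c in mul(dy, dy)]
    rhs[0] -= 1
    return integ(integ(rhs))  # two integrations enforce the zero initial conditions


if __name__ == "__main__":
    y = [Fraction(0)]  # trivial initial guess y_0 = 0
    for n in range(1, 4):
        y = pia11_step(y)
        terms = [f"({c})*x^{k}" for k, c in enumerate(y) if c != 0]
        print(f"y_{n} =", " + ".join(terms))
```

Under these assumptions, three iterations give \(y_3 = -x^2/2 - x^4/12 - x^6/45 - x^8/504\), which agrees with the Maclaurin series \(\ln(\cos x) = -x^2/2 - x^4/12 - x^6/45 - 17x^8/2520 - \cdots\) through the \(x^6\) term.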

4.5 PIA(1,2)

As in the previous case, we construct a perturbation–iteration algorithm by taking one correction term in the perturbation expansion and two derivatives in the Taylor series. Then Eq. (6) takes the simplified form:

Using the trivial solution \(y_0 = 0\) and Eq. (2), we get

Following the same procedure with Mathematica, we get

Note that the function in the parentheses of the second term of Eq. (54) is approximated as 0 for simplicity.

4.6 OHAM

We have

The zeroth-order problem is:

from which we obtain

Substituting Eq. (59) into (12), we get the first-order problem:

with the solution

The second-order problem is

The solution of Problem (62) is given by

Following the procedure as in the previous example, we get

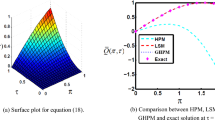

Higher iterations can be obtained with a computer program. Table 3 lists the approximate results of OHAM and PIM for m = 3. For this problem, both PIA(1,1) and PIA(1,2) give better results than OHAM. Figure 2 also illustrates the difference between the OHAM and PIM solutions. Table 4 shows the absolute errors of the methods for m = 3.
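As a consistency check on the OHAM recursion (not a reproduction of the paper's OHAM equations for this example, which are missing here), suppose for illustration that Example 2 is \(y'' + (y')^2 + 1 = 0\), \(y(0) = y'(0) = 0\), which \(y = \ln(\cos x)\) satisfies, and choose the hypothetical split \(L(y) = y''\), \(g(x) = 1\), \(N(y) = (y')^2\). With zero initial conditions for every \(y_k\), k ≥ 1, the first two orders give:

```latex
\begin{align*}
& y_0'' + 1 = 0 \;\Rightarrow\; y_0 = -\tfrac{x^2}{2},
  \qquad N_0(y_0) = (y_0')^2 = x^2,\\
& y_1'' = C_1 x^2 \;\Rightarrow\; y_1 = \tfrac{C_1 x^4}{12},
  \qquad N_1(y_0, y_1) = 2\,y_0'\,y_1' = -\tfrac{2 C_1 x^4}{3},\\
& y_2'' - y_1'' = C_2 x^2 + C_1\big[y_1'' + N_1(y_0, y_1)\big]
  \;\Rightarrow\; y_2 = \tfrac{(C_1 + C_2 + C_1^2)\,x^4}{12} - \tfrac{C_1^2 x^6}{45},\\
& y^{(2)} = y_0 + y_1 + y_2
  = -\tfrac{x^2}{2} + \tfrac{(2C_1 + C_2 + C_1^2)\,x^4}{12} - \tfrac{C_1^2 x^6}{45}.
\end{align*}
```

With \(C_1 = -1\) and \(C_2 = 0\), this reproduces the first three terms of the Maclaurin series \(\ln(\cos x) = -x^2/2 - x^4/12 - x^6/45 - \cdots\); in practice the constants would instead be fixed by Eq. (20) or by least squares.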

5 Conclusions

This paper applied the OHAM and PIM algorithms to solve various nonlinear differential equations. Both methods are effective and accurate for nonlinear problems arising in many fields of science. We considered two examples, selected to illustrate computational accuracy. For these problems, OHAM required substantially more computation and was more cumbersome to apply than PIM, whereas the perturbation-iteration algorithms produced more accurate results with less computational work. Newer developments of OHAM exist, such as the generalized auxiliary functions of Herişanu et al., but for simplicity our comparison uses its original formulation. For this study, we conclude that PIA(1,2) is the more effective and powerful approach for obtaining approximate solutions of the selected problems.

References

Adomian G (1988) A review of the decomposition method in applied mathematics. J Math Anal Appl 135(2):501–544

Aksoy Y, Pakdemirli M (2010) New perturbation–iteration solutions for Bratu-type equations. Comput Math Appl 59(8):2802–2808

Aksoy Y et al (2012) New perturbation-iteration solutions for nonlinear heat transfer equations. Int J Numer Methods Heat Fluid Flow 22(7):814–828

Ali J et al (2010) The solution of multipoint boundary value problems by the optimal homotopy asymptotic method. Comput Math Appl 59(6):2000–2006

Atangana A, Botha JF (2012) Analytical solution of the groundwater flow equation obtained via homotopy decomposition method. J Earth Sci Clim Chang 3:115. doi:10.4172/2157-7617.1000115

Atangana A, Belhaouari SB (2013) Solving partial differential equation with space-and time-fractional derivatives via homotopy decomposition method. Math Probl Eng 2013:9. doi:10.1155/2013/318590

Bayram M et al (2012) Approximate solutions some nonlinear evolutions equations by using the reduced differential transform method. Int J Appl Math Res 1(3):288–302

Bildik N, Deniz S (2015a) Comparison of solutions of systems of delay differential equations using Taylor collocation method, Lambert w function and variational iteration method. Sci Iran Trans D Comput Sci Eng Electr 22(3):1052

Bildik N, Deniz S (2015b) Implementation of Taylor collocation and Adomian decomposition method for systems of ordinary differential equations. AIP Conf Proc 1648:370002

Bildik N, Konuralp A (2006) The use of variational iteration method, differential transform method and Adomian decomposition method for solving different types of nonlinear partial differential equations. Int J Nonlinear Sci Numer Simul 7(1):65–70

Bildik N et al (2006) Solution of different type of the partial differential equation by differential transform method and Adomian’s decomposition method. Appl Math Comput 172(1):551–567

Bulut H, Ergüt M, Evans D (2003) The numerical solution of multidimensional partial differential equations by the decomposition method. Int J Comput Math 80(9):1189–1198

Dolapçı IT, Şenol M, Pakdemirli M (2013) New perturbation iteration solutions for Fredholm and Volterra integral equations. J Appl Math 2013:5. doi:10.1155/2013/682537

Fu WB (1989) A comparison of numerical and analytical methods for the solution of a Riccati equation. Int J Math Educ Sci Technol 20(3):421–427

Gupta AK, Ray SS (2014a) On the solutions of fractional Burgers–Fisher and generalized Fisher’s equations using two reliable methods. Int J Math Math Sci 2014:16. doi:10.1155/2014/682910

Gupta AK, Ray SS (2014b) Comparison between homotopy perturbation method and optimal homotopy asymptotic method for the soliton solutions of Boussinesq-Burger equations. Comput Fluids 103:34–41

Gupta AK, Ray SS (2015a) The comparison of two reliable methods for accurate solution of time‐fractional Kaup–Kupershmidt equation arising in capillary gravity waves. Math Methods Appl Sci 39(3):583–592. doi:10.1002/mma.3503

Gupta AK, Ray SS (2015b) An investigation with Hermite Wavelets for accurate solution of Fractional Jaulent-Miodek equation associated with energy-dependent Schrödinger potential. Appl Math Comput 270:458–471

He JH (1999) Variational iteration method—a kind of non-linear analytical technique: some examples. Int J Non Linear Mech 34(4):699–708

He JH (2005) Homotopy perturbation method for bifurcation of nonlinear problems. Int J Nonlinear Sci Numer Simul 6(2):207–208

Herişanu N, Marinca V, Madescu Gh (2015) An analytical approach to non-linear dynamical model of a permanent magnet synchronous generator. Wind Energy 18(9):1657–1670

Herişanu N, Marinca V (2012) Optimal homotopy perturbation method for a non-conservative dynamical system of a rotating electrical machine. Zeitschrift für Naturforschung A 67(8-9):509–516

Iqbal S et al (2010) Some solutions of the linear and nonlinear Klein-Gordon equations using the optimal homotopy asymptotic method. Appl Math Comput 216(10):2898–2909

Marinca V, Herişanu N (2008) Application of optimal homotopy asymptotic method for solving nonlinear equations arising in heat transfer. Int Commun Heat Mass Transfer 35(6):710–715

Marinca V, Herişanu N, Nemeş I (2008) Optimal homotopy asymptotic method with application to thin film flow. Cent Eur J Phys 6(3):648–653

Marinca V et al (2009) An optimal homotopy asymptotic method applied to the steady flow of a fourth-grade fluid past a porous plate. Appl Math Lett 22(2):245–251

Öziş T, Ağırseven D (2008) He’s homotopy perturbation method for solving heat-like and wave-like equations with variable coefficients. Phys Lett A 372(38):5944–5950

Ray SS, Gupta AK (2015) A numerical investigation of time-fractional modified Fornberg-Whitham equation for analyzing the behavior of water waves. Appl Math Comput 266:135–148

Şenol M et al (2013) Perturbation-iteration method for first-order differential equations and systems. Abstr Appl Anal 2013:6. doi:10.1155/2013/704137


Cite this article

Bildik, N., Deniz, S. Comparative Study between Optimal Homotopy Asymptotic Method and Perturbation-Iteration Technique for Different Types of Nonlinear Equations. Iran J Sci Technol Trans Sci 42, 647–654 (2018). https://doi.org/10.1007/s40995-016-0039-2


DOI: https://doi.org/10.1007/s40995-016-0039-2