Abstract

This paper analyzes the properties of the newly developed flux-difference residual distribution methods. The focus is on how the order of accuracy and the stability vary with grid skewness. Overall, the accuracy loss and the stability range of the new methods are comparable with those of the existing residual distribution methods. It is also shown that the new method has a general mathematical formulation from which the existing residual distribution methods can easily be recovered.

1 Introduction

Although finite volume (FV) methods are widely used in many computational fluid dynamics (CFD) applications, their results degrade severely on skewed grids [9, 10, 13], which are unavoidable in industrial CFD. Residual distribution (RD) methods, on the other hand, are known to be less sensitive to mesh variations [4, 5, 9]. RD methods are also more compact, which allows more efficient parallel computation [15], and they offer a natural platform for incorporating multidimensional fluid physics [7].

However, there is still a lot to be done for RD methods. Most RD methods were developed mainly from steady-state inviscid equations. Even if they are high-order accurate for steady-state problems, most of these methods are at best first-order accurate in space for unsteady calculations [2], unless a costly implicit sub-iterative process is applied at every time step. To the authors’ best knowledge, there is only one type of explicit second-order RD scheme for unsteady calculations [3, 18]. In fact, most RD methods cannot even preserve second-order accuracy when solving steady-state scalar advection-diffusion problems, which is a prerequisite for solving the steady-state Navier–Stokes equations [16]. One way to preserve second-order accuracy when solving advection-diffusion problems is the unified first-order hyperbolic systems approach [17].

Ismail and Chizari [11] have developed a new class of RD methods which ensures automatic conservation of the primary variables without any dependence on cell-averaging for any well-posed equations and preserves the spatial second-order accuracy on unsteady problems using any consistent explicit time integration scheme. The approach has also been extended to solve advection-diffusion problems with much success [19]. Since these flux-difference RD methods are new, very little has been done to understand the inherent properties of the scheme.

In this paper, our main intention is to provide a purely mathematical analysis of the properties of the newly developed flux-difference RD method on triangular grids when solving the two-dimensional linear advection equation. The analysis covers the positivity condition, the stability of the method and the variation of the order of accuracy as a function of grid skewness.

2 Residual Distribution Methods

Consider the two-dimensional scalar advection equation,

\[\frac{\partial u}{\partial t}+\nabla \cdot \mathbf {F}=0, \qquad (1)\]

where u is the unknown quantity in time and two-dimensional space. The flux is \(\mathbf {F}=(au)\hat{i}+(bu)\hat{j}=\mathbf {\lambda }{u}\), where \(\hat{i}\) and \(\hat{j}\) are the unit vectors along the x- and y-directions and \(\mathbf {\lambda }\) is the wavespeed vector that defines the speed and direction of advection of u.

The main concept of the residual distribution method is to find the sub-residuals (or signals) for each node from the total residual of a cell (element), as shown in Fig. 1. By Green’s theorem, the total cell residual (\(\phi _{\mathrm{T}}\)) of Eq. 1 is

In discrete form, the total residual over a triangular element, evaluated with the trapezoidal rule on the three nodes \(p=(i,j,k)\) [11], is

where \(\mathbf {n_p}\) is the inward normal to the side opposite node p and \(\mathbf {F}^*\) is the degree of freedom for the flux-difference RD method. The way \(\phi _\mathrm{T}\) is distributed locally to each node as \(\phi _i \) defines each type of RD method.
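As an illustration of the discrete residual, the following minimal sketch evaluates the trapezoidal-rule flux integral \(\frac{1}{2}\sum _p \mathbf {F}_p\cdot \mathbf {n}_p\) on a single triangle for linear advection. The function names, the counter-clockwise node ordering, the scaling of the normals by the edge length and the sign convention are assumptions made for this example (the returned value approximates the cell integral of \(\nabla \cdot \mathbf {F}\), and the residual defined in the paper may differ from it by a sign).

```python
import numpy as np

def inward_normals(xy):
    """Scaled inward normals of a counter-clockwise triangle.

    xy: (3, 2) array of node coordinates. Row p of the result is the normal
    to the edge opposite node p, with length equal to that edge's length.
    """
    n = np.empty((3, 2))
    for p in range(3):
        q, r = (p + 1) % 3, (p + 2) % 3
        n[p] = (xy[q, 1] - xy[r, 1], xy[r, 0] - xy[q, 0])
    return n

def flux_integral(xy, u, lam):
    """Trapezoidal-rule approximation of the cell integral of div(F), F = lam * u."""
    n = inward_normals(xy)
    F = np.outer(u, lam)          # nodal fluxes F_p = lam * u_p, shape (3, 2)
    return 0.5 * np.sum(F * n)    # 1/2 * sum_p F_p . n_p

xy = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
u = xy[:, 0]                      # u = x, so div(F) = a everywhere
print(flux_integral(xy, u, lam=np.array([1.0, 0.0])))   # area * a = 0.5
```

Because the three scaled normals sum to zero, adding any constant vector to all nodal fluxes leaves this sum unchanged.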

2.1 Flux-Difference RD Methods

This newly developed RD method [11] has two components: isotropic signals and artificial signals.

2.1.1 Isotropic Signals

The isotropic signal (\(\phi ^\text {iso}\)) distribution is a central-type flux-difference scheme in which the total residual is distributed equally to the three nodes of an element. The \(\phi ^\text {iso}\) also depends on \(\mathbf {F}(u)\) rather than u, which is one of the key features that distinguish this alternative RD method from those currently available in the literature. As in an FV approach, conservation of the primary variable (u) is automatic for the isotropic signals integrated over each local element, since the summation of any \(\mathbf {F}^*\) is zero within each element. \(\mathbf {F}^*\) is one of the degrees of freedom on which we could impose certain physical conditions. For the time being, we choose the arithmetic average of the three nodal values for \(\mathbf {F}^*\) within the element. \(\mathbf {F}^*\) only affects the nodes of each element, since the overall update is on the nodes, and each node p receives an equal share of the sub-residuals.
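The conservation argument can be checked numerically. The sketch below is an illustration under stated assumptions, not the scheme of [11]: it takes \(\mathbf {F}^*\) as the arithmetic average of the nodal fluxes, confirms that subtracting it does not change the cell total because the scaled inward normals sum to zero, and then splits the total equally among the three nodes. The variable names and the equal-split form `phi_iso` are assumptions based on the description above.

```python
import numpy as np

xy = np.array([[0.0, 0.0], [2.0, 0.0], [0.5, 1.0]])   # counter-clockwise triangle
lam = np.array([1.0, 0.5])                            # wavespeed (a, b)
u = np.array([0.3, 1.1, -0.4])                        # nodal values

# Scaled inward normal to the edge opposite each node p
n = np.array([[xy[(p + 1) % 3, 1] - xy[(p + 2) % 3, 1],
               xy[(p + 2) % 3, 0] - xy[(p + 1) % 3, 0]] for p in range(3)])

F = np.outer(u, lam)          # nodal fluxes F_p = lam * u_p
F_star = F.mean(axis=0)       # arithmetic average of the three nodal fluxes

total_plain = 0.5 * np.sum(F * n)
total_shifted = 0.5 * np.sum((F - F_star) * n)   # F* drops out of the cell total

# Equal share for each node (assumed form of the isotropic distribution)
phi_iso = np.full(3, total_shifted / 3.0)
```

Any other constant choice of `F_star` gives the same `total_shifted`, which is why it can be treated as a free parameter without affecting conservation.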

2.1.2 Artificial Signals

Since the isotropic signals constitute a purely central approach, they require some form of artificial diffusion to achieve stability [11]. The idea is to add artificial terms to the isotropic signals such that the primary variable u is discretely conserved over a local element (cell) while, at the same time, u is discretely augmented at each node.

Let us focus on a local element which has nodes i, j, k. Define

so for node i, the newly proposed sub-residuals are

Similarly, the sub-residuals to nodes j, k can also be determined as in [11]. \(\alpha ,\beta ,\gamma \) are additional degrees of freedom for the new RD method. It will be shown in the next subsection that the flux-difference RD method can also be made upwind to account for physical wave propagation by controlling the artificial signals.

2.2 Recovery of Classic RD Methods

There will be a unique signal distribution (\(\tilde{\phi _i}\)) which will recover the classic RD methods by solving Eq. 5 for \(\alpha ,\beta \) and \(\gamma \). For each node i, j, k,

Equation 6 is linearly dependent for \(\alpha ,\beta \) and \(\gamma \), because the summation of both sides will be the total cell residual (\(\phi _\mathrm{T}\)). This requires at least one parameter out of \(\alpha ,\beta \) and \(\gamma \) to be specified. From [11], one of the conditions for entropy-stability is that \(\gamma =-\alpha \) and conservation requires that \(\tilde{\phi }_i=-\tilde{\phi }_j-\tilde{\phi }_k\). Thus, the first equation of Eq. (6) is redundant since it can be rewritten in terms of \(\tilde{\phi }_j, \tilde{\phi }_k\). Overall, we will now have two linearly independent equations that can be solved for \(\alpha \) and \(\beta \).

Therefore,

Note that these \(\alpha \) and \(\beta \) are well-posed since the denominator will be always positive except for the trivial case for which the signals are all zero.

For the scalar linear advection, using \(k_i=\frac{1}{2}\mathbf {\lambda }^*\cdot {\mathbf {n}_i}\) will yield

2.2.1 N-Scheme Recovery

The N-scheme is a classic first-order multidimensional upwind RD method. Essentially, it distinguishes two upwind configurations: one-target and two-target cells [1].
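The distinction between one-target and two-target cells can be made from the signs of the inflow parameters \(k_p=\frac{1}{2}\mathbf {\lambda }\cdot \mathbf {n}_p\). The sketch below is a minimal illustration; the function names, the counter-clockwise node ordering and the tolerance are assumptions of this example.

```python
import numpy as np

def inflow_parameters(xy, lam):
    """k_p = 0.5 * lam . n_p for the three nodes of a counter-clockwise triangle."""
    n = np.array([[xy[(p + 1) % 3, 1] - xy[(p + 2) % 3, 1],
                   xy[(p + 2) % 3, 0] - xy[(p + 1) % 3, 0]] for p in range(3)])
    return 0.5 * n @ lam

def classify_cell(k, tol=1e-14):
    """Label a cell by its number of downstream nodes (those with k_p > 0)."""
    targets = int(np.sum(k > tol))
    return {1: "one-target", 2: "two-target"}.get(targets, "degenerate")

xy = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
k_two = inflow_parameters(xy, np.array([1.0, 1.0]))     # [-1.0, 0.5, 0.5]
k_one = inflow_parameters(xy, np.array([-1.0, -1.0]))   # [1.0, -0.5, -0.5]
print(classify_cell(k_two), classify_cell(k_one))
```

Since the scaled normals sum to zero, the three \(k_p\) always sum to zero, so a non-degenerate cell has either one or two positive values.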

Recall that the signals (subresiduals) of classic N scheme are

\(k_i\) is the projection of the wavespeed \(\mathbf {\lambda }\) onto the inward normal of the edge opposite node i within an element, and \(u_i\) is the value at node i, which lies opposite edge i. The upwind conditions are

By choosing the following,

we shall recover the 2-target N-scheme as before.

For one-target cell,

2.2.2 LDA Recovery

For two-target,

Note that the one-target LDA is identical to the one-target N scheme.
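The equivalence of the two schemes on one-target cells can be verified with the textbook forms of the classic signals, \(\phi _i^{N}=k_i^+(u_i-u_\mathrm{in})\) and \(\phi _i^{LDA}=\left( k_i^+/\sum _j k_j^+\right) \phi _\mathrm{T}\). The sketch below assumes the common convention \(\phi _\mathrm{T}=\sum _i k_i u_i\); the function names and sample values are illustrative, and the sign convention may differ from the one used in the equations of this paper.

```python
import numpy as np

def n_scheme(k, u):
    """Classic N-scheme signals: phi_i = k_i^+ * (u_i - u_in)."""
    k_plus, k_minus = np.maximum(k, 0.0), np.minimum(k, 0.0)
    u_in = np.dot(k_minus, u) / np.sum(k_minus)   # inflow-weighted state
    return k_plus * (u - u_in)

def lda_scheme(k, u):
    """Classic LDA signals: phi_i = (k_i^+ / sum_j k_j^+) * phi_T."""
    k_plus = np.maximum(k, 0.0)
    return k_plus / np.sum(k_plus) * np.dot(k, u)

u = np.array([1.0, 0.2, -0.7])
k_one = np.array([1.0, -0.4, -0.6])   # one-target cell: a single positive k_i
k_two = np.array([-1.0, 0.3, 0.7])    # two-target cell: two positive k_i

print(n_scheme(k_one, u), lda_scheme(k_one, u))   # identical on a one-target cell
print(n_scheme(k_two, u), lda_scheme(k_two, u))   # different split, same total
```

Both schemes are conservative (their signals sum to \(\phi _\mathrm{T}\)), and on a one-target cell the entire residual is sent to the single downstream node in either case.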

2.2.3 Lax–Friedrichs Method

The signal distribution for the Lax–Friedrichs method, using \(\alpha \) and \(\beta \), is

2.2.4 Lax–Wendroff Recovery

where A is the cell area.
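For reference, the two central schemes are commonly quoted in the RD literature as \(\phi _i^{LxF}=\frac{1}{3}\left[ \phi _\mathrm{T}+\sigma \sum _j(u_i-u_j)\right] \) with \(\sigma \ge \max _j|k_j|\), and \(\phi _i^{LxW}=\left( \frac{1}{3}+\frac{\Delta t}{2A}k_i\right) \phi _\mathrm{T}\). The sketch below implements these textbook forms, assuming \(\phi _\mathrm{T}=\sum _i k_i u_i\); the name `dissipation` for \(\sigma \) and the sample values are choices of this example, and the forms may differ in notation or sign from the equations of this paper.

```python
import numpy as np

def lax_friedrichs(k, u, dissipation=None):
    """phi_i = (phi_T + sigma * sum_j (u_i - u_j)) / 3, with sigma >= max|k_j|."""
    if dissipation is None:
        dissipation = np.max(np.abs(k))
    phi_T = np.dot(k, u)
    # For three nodes, sum_j (u_i - u_j) = 3 * u_i - sum(u)
    return (phi_T + dissipation * (3.0 * u - np.sum(u))) / 3.0

def lax_wendroff(k, u, dt, area):
    """phi_i = (1/3 + dt * k_i / (2 * area)) * phi_T."""
    return (1.0 / 3.0 + dt * k / (2.0 * area)) * np.dot(k, u)

k = np.array([-1.0, 0.3, 0.7])      # inflow parameters, summing to zero
u = np.array([1.0, 0.2, -0.7])
phi_lxf = lax_friedrichs(k, u)
phi_lxw = lax_wendroff(k, u, dt=0.1, area=0.5)
```

Both distributions sum to \(\phi _\mathrm{T}\): the dissipative part of Lax–Friedrichs cancels over the three nodes, and the Lax–Wendroff weights sum to one because the \(k_i\) sum to zero.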

The specific \(\alpha ,\beta ,\gamma \) formulation for the newly developed flux-difference RD method will be disclosed in the next section.

2.3 Time Integration Step

For each node, the signals from the neighboring cells are summed, as shown in Fig. 2. The time evolution of the solution is then computed as

where j indexes the cells neighboring the main node (point) p.
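A minimal sketch of this update is given below, assuming a forward Euler step of the form \(u_p^{n+1}=u_p^n-\frac{\Delta t}{A_p}\sum _j \phi _p^j\), where \(A_p\) is the median-dual area of node p. The sign convention, the function name and the sample mesh data are assumptions of this example; the signals themselves would come from whichever distribution scheme is in use.

```python
import numpy as np

def euler_step(u, cells, cell_signals, dual_area, dt):
    """One explicit step: u_p <- u_p - dt / A_p * (sum of signals sent to node p).

    cells:        (n_cells, 3) integer array of node indices per triangle
    cell_signals: (n_cells, 3) array, signal sent to each node of each cell
    dual_area:    (n_nodes,) array of median-dual areas
    """
    residual = np.zeros_like(u)
    # np.add.at accumulates correctly when a node appears in several cells
    np.add.at(residual, cells.ravel(), cell_signals.ravel())
    return u - dt / dual_area * residual

# Two triangles sharing the edge between nodes 1 and 2 (illustrative values only)
cells = np.array([[0, 1, 2], [1, 3, 2]])
cell_signals = np.array([[0.0, 0.2, 0.1], [0.3, 0.0, -0.1]])
u0 = np.array([1.0, 1.0, 1.0, 1.0])
dual_area = np.array([0.5, 1.0, 1.0, 0.5])
u1 = euler_step(u0, cells, cell_signals, dual_area, dt=0.1)
print(u1)   # node 1 collects 0.2 + 0.3; node 2 collects 0.1 - 0.1
```

Using a scatter-add over the cell-to-node map keeps the loop over cells implicit and mirrors the gather of signals around each node.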

3 Properties of the Flux-Difference RD Methods

3.1 Positivity Condition

Solutions of hyperbolic PDEs such as the scalar advection equation may contain discontinuities such as shock waves. It is vital to capture a monotone shock profile, a requirement that can be expressed mathematically as positivity. The positivity constraints are defined as

which is identical to the LED (Local Extremum Diminishing) criterion of [12]. Recall that the signal distribution for a flux-difference RD method is

Simplifying the previous equation will reduce to

Achieving the positivity condition (Eq. (18)) requires that

with

and,

Thus, the positivity condition will be

Since \(-\frac{2}{3}k_\text {min}\) is always positive, \(\alpha >\gamma \) is a necessary condition for positivity. Combining \(\alpha >\gamma \) with the entropy-stability condition [11],

the inequalities reduce to

Since \(k_\text {min} \le {0}\) and a larger \(\alpha \) corresponds to more entropy generation (hence more dissipation) [11], the best condition for positivity is

To make the dimensions correct for the artificial signals in Eq. (5), we could select the following form for \(\alpha \) based on the work of [11].

where \(L_\mathrm{r}\) is a reference length and \(\mathbf {\lambda }\) is the cell characteristic vector.
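The notion of positivity used in this subsection can be checked numerically for any explicit scheme written as \(u_i^{n+1}=\sum _j c_j u_j^n\): the update is positive when every coefficient is non-negative and the coefficients sum to one. The sketch below illustrates this check on one-dimensional first-order upwind, which is used purely as a stand-in because the coefficients of the flux-difference scheme are not reproduced here; the function names are assumptions of this example.

```python
import numpy as np

def is_positive(coeffs, tol=1e-12):
    """True if all update coefficients are non-negative and sum to one."""
    c = np.asarray(coeffs, dtype=float)
    return bool(np.all(c >= -tol) and abs(c.sum() - 1.0) <= tol)

def upwind_coeffs(nu):
    """Stand-in scheme: u_i^{n+1} = (1 - nu) * u_i + nu * u_{i-1}."""
    return [1.0 - nu, nu]

print(is_positive(upwind_coeffs(0.5)))   # True: convex combination of old values
print(is_positive(upwind_coeffs(1.2)))   # False: negative weight on u_i
```

A positive update is a convex combination of neighboring values, so it cannot create new local extrema.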

Lemma 1

The local positivity is satisfied if and only if,

Proof

The condition of Eq. (26) will be,

hence,

where \(\hat{d}\) is the unique characteristic vector defined in [2]. Consequently,

which gives an approximation for q that satisfies local positivity. Note that \(\ln \left( \frac{h}{L_\mathrm{r}}\right) \) is negative because \(\frac{h}{L_\mathrm{r}}\) is assumed to be very small; therefore, the inequality is reversed. \(\square \)

3.2 Truncation Error

Following the work of [4], the first step is to determine the general spatial update equation prior to the TE analysis. The equation can be written discretely in the following form.

Note that \(A_i\) is the median-dual cell area. Assume j denotes the neighboring nodes and \(w_j\) is the coefficient distribution of the residual to that node. It is also assumed for this analysis that \((a,b)> 0\) and \( \frac{b}{a} < \frac{h_2}{h}\). The analysis for \( \frac{b}{a} > \frac{h_2}{h}\) follows the same steps and will not be shown here for conciseness.

In the limit of steady state, \(u^{n+1}_i\rightarrow {u^{n}_i}\). Thus, the terms inside the parentheses in Eq. 33 will be the truncation error (TE).

Using the Taylor series expansion of the neighboring points about the main point of interest (node 0) as shown in Fig. 3, the TE will be determined. For a right running (RR) triangular grid, the Taylor series expansion of the neighboring points about the main node 0 is given as the following [4].

where \(l_t^j\) and \(l_n^j\) are the distances tangential and normal to the streamline, respectively, of node j from the main node of interest i. The term \(\frac{\partial ^d u}{\partial t^{d-k}\partial n^k} \) is the \(d^{th}\)-order partial derivative with respect to the direction tangential to the streamline, t, and the normal direction, n.

3.3 Formal Order-of-Accuracy on Structured Triangular Grids

To establish the order-of-accuracy for the “flux-difference” approach, first we need to determine its truncation error (TE) in general form with arbitrary \((\alpha ,\beta ,\gamma )\). For this case, \(\left| \left| \mathbf {\lambda }\right| \right| =\sqrt{a^2+b^2}\).

The structured triangular grids have uniform grid length and height with dimensions \((h_1,h_2)\). For conciseness, we write the grid length as h and the height as the grid length multiplied by a stretching factor (s).

Note that the grid stretching parameter s is related to grid skewness by \(s=2\tan \left( \frac{\pi }{2}Q\right) \) [4] for a right triangle. For \(Q=1\), the stretching parameter is infinite.
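The relation between skewness and stretching is easy to tabulate. The short sketch below implements \(s=2\tan \left( \frac{\pi }{2}Q\right) \) and its inverse; the function names are assumptions of this example.

```python
import math

def stretching_from_skewness(Q):
    """s = 2 * tan(pi * Q / 2), valid for 0 <= Q < 1 (s is unbounded as Q -> 1)."""
    if not 0.0 <= Q < 1.0:
        raise ValueError("Q must satisfy 0 <= Q < 1")
    return 2.0 * math.tan(0.5 * math.pi * Q)

def skewness_from_stretching(s):
    """Inverse relation: Q = (2 / pi) * atan(s / 2)."""
    return 2.0 / math.pi * math.atan(0.5 * s)

print(stretching_from_skewness(0.5))   # approximately 2
print(skewness_from_stretching(1.0))   # approximately 0.295
```

With this relation, \(Q=0.5\) corresponds to \(s=2\), and \(s=1\) corresponds to \(Q\approx 0.295\), which is close to the lower end of the skewness range \(Q=0.3\) considered later.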

Recall that the “flux-difference” signal distribution

As mentioned before, \((\alpha ,\beta ,\gamma )\) contain a length scale from the cell to ensure consistent units as in Eq. 28. Thus, we define the dimensionless parameters (\(\tilde{\alpha }, \tilde{\beta }, \tilde{\gamma }\)) as the following.

The TE analysis would depend on the isotropic and artificial signals about node 0 (Fig. 3).

3.3.1 Isotropic Signals

The isotropic signal truncation error is given as the following.

We could expand the signals coming from each element in terms of u. For instance, the signals from elements 012, 023 and 032 are written as follows.

We could do a similar procedure for other signals coming from other elements, and thus, the TE reduces to the following.

By performing a Taylor series expansion about node 0, the overall truncation error of the isotropic signals can be written as below.

This implies that the isotropic signals are second-order accurate in general (even unsteady problems), unlike most RD methods. For inviscid steady-state conditions, we expect no changes in the derivatives tangential to the streamline, and thus the following is recovered.

The isotropic signals are fourth-order accurate for inviscid steady-state conditions.

3.3.2 Artificial Signals

Based on Eq. (38), the truncation error for the artificial terms of the signals could also be determined.

By expanding each signal coming from the respective element,

The overall truncation error for the artificial signals is given as the following.

From the previous equation, note that the artificial signals are second-order accurate when we select \(\alpha =\gamma \), but this violates the entropy-stable condition of the method [11]. For the inviscid steady state case, the overall truncation error reduces to

It is clear that the obstacle to achieve high-order spatial accuracy in steady-state advection problems is due to the artificial signals. From [11], selecting \(\beta =0\) and imposing \(\alpha =-\gamma \) will yield the truncation error of the artificial signals in steady-state condition to be

The overall truncation error for the flux-difference approach is \(\text {TE}^\text {iso} + \text {TE}^\text {art}\). TE equations for the classic RD methods are included in “Appendix A”.

3.4 First-Order Flux-Difference RD Method

To construct a baseline first-order entropy-stable method, we can simply use the original \(\phi ^{\mathrm{art}}\) with \(\alpha =-\gamma \) and \(\beta =0\). In a more structured form, this can be viewed as choosing \(\alpha \) such that

A positive first-order method can be achieved if we select \(\alpha \) based on Eq. (26), where the q can be determined by Eq. (32). Note that the positive first-order method is less diffusive than the baseline first-order method since it generates less entropy.

3.5 Second Order and Beyond

To develop a compact high-order (beyond first order) method, the main concept still remains the same. By controlling entropy generation produced by the artificial signals, one might be able to construct a high-order approach. Thus, we could achieve second order and third order by choosing

where \(q^\text {high}\rightarrow 1^-\) for second order and \(q^\text {high}\rightarrow 2^-\) for third order. Fourth-order accuracy can be theoretically achieved if \(q^\text {high}\rightarrow 3^-\). The question remains on whether the high order-of-accuracy is preserved on distorted triangular grids. The following section will attempt to address this issue by examining the grid skewness effect on a specific test case in which the normal derivatives can be computed.

3.6 von Neumann Stability Analysis

To assess the feasibility of the new flux-difference RD method, its stability is analyzed following the work of [8]. Only the forward Euler time-stepping scheme is considered in this study, to retain the explicit character of the new scheme and to remain consistent with the time integration step of Sect. 2.3. The analysis is again carried out on the structured (right-running) grids shown in Fig. 4. The von Neumann analysis therefore starts from Eq. (17), the general equation for an RD method with the forward Euler scheme, in which the sum of signals can be split into isotropic and artificial signals. Collecting the isotropic and artificial terms from the neighboring cells and applying the entropy-stability condition [11] yields

where

and

Considering only the numerical errors, \(\zeta ^n_{l,m}\), and casting them into Fourier form, \(\zeta ^n_{l,m} = \delta ^n e^{i \theta (l x + m y)}\) with \(i=\sqrt{-1}\), the numerical error equation is

Dividing by \(\delta ^n e^{i \theta (l x + m y)}\) yields

Using the identity \(e^{i \theta } = \cos \theta + i \sin \theta \), we obtain

where s is the stretching factor defined in Eq. 36. Since \(\delta \) is the ratio of the numerical error at time step n+1 to the error at time step n, its magnitude \(|\delta |\) is the amplification factor that determines the stability of the numerical scheme. The scheme is stable if and only if \(|\delta | \le 1\) \(\forall \theta \), where

A more general quantity is required to represent the stability condition of the schemes, and we employ the non-dimensional Courant–Friedrichs–Lewy (CFL) number for this purpose. According to the CFL condition [6], information travelling along the characteristic vector should not leave the element within one iteration; otherwise, it may conflict with the characteristics in other elements and cause the solution to become unstable. The CFL condition for RD is illustrated in Fig. 5, and the CFL number \(\nu \) is defined as

where d is the distance from the centroid to the edge of the cell along the direction of the characteristic vector. There are only two types of cell (types I and II in Fig. 3) for the right-running grid, and their respective distances (\(d_{\text {I}}\) and \(d_{\text {II}}\)) are denoted in Fig. 4. \(\min d\) is the minimum value of d among all the cells. Substituting \(\Delta t\) into Eq. 59 results in

The stability equations for the classic RD methods are in “Appendix B”.
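The distance d used in the CFL number can be computed by intersecting a ray from the cell centroid, directed along the characteristic vector, with the cell edges. The sketch below does this for a single triangle and then evaluates a CFL number of the assumed form \(\nu =\left| \left| \mathbf {\lambda }\right| \right| \Delta t/\min d\); that form, the function names and the tolerances are assumptions of this example, since the defining equation is not reproduced here.

```python
import numpy as np

def centroid_to_edge_distance(xy, lam):
    """Distance from the centroid to the cell boundary along the direction of lam."""
    c = xy.mean(axis=0)
    d_hat = lam / np.linalg.norm(lam)
    best = np.inf
    for p in range(3):
        a, b = xy[p], xy[(p + 1) % 3]
        e = b - a
        # Solve c + t * d_hat = a + s * e for the ray parameter t and edge parameter s
        M = np.column_stack((d_hat, -e))
        if abs(np.linalg.det(M)) < 1e-14:      # ray parallel to this edge
            continue
        t, s = np.linalg.solve(M, a - c)
        if t > 0.0 and -1e-12 <= s <= 1.0 + 1e-12:
            best = min(best, t)
    return best

def cfl_number(lam, dt, d_min):
    """Assumed form: nu = |lam| * dt / min(d)."""
    return np.linalg.norm(lam) * dt / d_min

xy = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
d = centroid_to_edge_distance(xy, np.array([1.0, 1.0]))
print(d, cfl_number(np.array([1.0, 1.0]), dt=0.1, d_min=d))
```

On a structured right-running grid only the two cell types need to be evaluated, and the smaller of the two distances sets the time step for a given \(\nu \).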

3.6.1 CFL Number Range for Stable Condition

The stability of the flux-difference scheme is assessed based on the range of \(\nu \) over different skewness values. The amplification factor, \(|\delta |\) (z-axis), is plotted against both normalized frequencies \(\theta _x\) (x-axis) and \(\theta _y\) (y-axis) in 3D graphs. Note that the ranges of the frequencies are \(0 \le \theta _x \le \pi \) and \(0 \le \theta _y \le \pi \), as the pattern repeats beyond this range. For conciseness, only selected plots with grid skewness \(Q = 0.3\) are shown. Unstable regions where \(|\delta |> 1\) are shaded in grey, while the stable regions of \(|\delta |\le 1\) are shaded in orange in Fig. 6. The lower limit of stable \(\nu \) is always 0, since then \(\Delta t\) is also 0 and there is no time iteration.
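The same kind of scan can be performed numerically by sampling \(|\delta |\) over \(0 \le \theta _x, \theta _y \le \pi \) and checking whether its maximum exceeds one. The sketch below shows the procedure with a stand-in amplification factor, that of first-order upwind finite differences on a Cartesian grid, because the amplification factor of the flux-difference scheme is not reproduced here. The function names and the sampling resolution are assumptions of this example.

```python
import numpy as np

def max_amplification(delta, n=181):
    """Largest |delta| over 0 <= theta_x, theta_y <= pi on an n-by-n sample grid."""
    theta = np.linspace(0.0, np.pi, n)
    tx, ty = np.meshgrid(theta, theta, indexing="ij")
    return float(np.max(np.abs(delta(tx, ty))))

def is_stable(delta, tol=1e-12):
    """von Neumann criterion: |delta| <= 1 for all sampled frequencies."""
    return max_amplification(delta) <= 1.0 + tol

def upwind_delta(nu_x, nu_y):
    """Stand-in amplification factor (first-order upwind, Cartesian grid)."""
    def delta(tx, ty):
        return (1.0 - nu_x * (1.0 - np.exp(-1j * tx))
                    - nu_y * (1.0 - np.exp(-1j * ty)))
    return delta

print(is_stable(upwind_delta(0.4, 0.4)))   # True: nu_x + nu_y <= 1
print(is_stable(upwind_delta(0.6, 0.6)))   # False: |delta| = 1.4 at theta = (pi, pi)
```

Repeating the check while increasing \(\nu \) in small steps gives the largest stable value for a given scheme and skewness.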

Fig. 6 Amplification factor plots at stable (left) and unstable (right) CFL numbers: (a) N scheme, \(\nu = 3.0\); (b) N scheme, \(\nu = 3.01\); (c) LDA, \(\nu = 3.0\); (d) LDA, \(\nu = 3.01\); (e) baseline 1st order, \(\nu = 0.2\); (f) baseline 1st order, \(\nu = 0.21\); (g) positive 1st order, \(\nu = 2.0\); (h) positive 1st order, \(\nu = 2.05\); (i) 2nd order, \(\nu = 0.3\); (j) 2nd order, \(\nu = 0.35\)

From Fig. 6, both the N scheme and LDA have the highest maximum stable \(\nu \), at 3.0. The positive first-order scheme performs very well in terms of stability \((\nu \le 2.0)\) compared with the baseline (\(q=0\)) first-order scheme \((\nu \le 0.2)\). Hence, from this point onwards, the positive approach will be chosen as the first-order scheme for the newly developed flux-difference method. Another point to note is the frequency regions where \(|\delta |\) exceeds 1. Both classic RD methods, together with the second-order approach, are unstable in low-frequency regions, while the baseline and positive first-order schemes show instability in higher-frequency regions.

A summary of the maximum stable \(\nu \) is shown in Table 1. The stability of higher-order schemes, i.e. third- and fourth-order schemes, was also analyzed, and their extremely limited ranges of \(\nu \) render these schemes too expensive to be used in practice with explicit time stepping. They are therefore treated as unstable and will not be discussed further in the following sections. However, implicit solvers should be explored for these higher-order schemes in the future.

4 Results on Variation of Grid Skewness

4.1 Order-of-Accuracy

The steady-state (linear advection) test case [14] has a square domain with an inlet boundary condition for left and bottom sides, and an outlet for the right and top sides. The inlet boundaries and the steady-state exact solution are determined as

where a and b are the characteristic wave speeds in the x- and y-directions and \(\omega _f\) is the frequency of the wave. For simplicity, all of the calculations below use \(a=b=1\) while \(\omega _f\) is varied, and the square domain has unit side length.

Using the right-running triangular grid shown in Fig. 3 and controlling the length (h) and height (k) of the grid, the analytical and numerical orders of accuracy for different skewness values (Q) can be determined. We denote the positive approach and \((q=1)\) as the first-order and second-order flux-difference RD methods, respectively. The range of skewness for the structured triangular grid is \(0.3 \le {Q}\le {1}\), as in [4]. From the truncation error equations in the previous section, each error term includes a coefficient and the normal derivative of the solution at a particular node. For this test case, the normal derivatives can be determined from the repeating sine-cosine function of Eq. (62). The complete setup details of the truncation error (and consequently order-of-accuracy (OoA)) versus skewness variations can be found in [4]. Only the first six error terms in the TE equations are considered when performing the analysis. Note that the grid stretching parameter s is related to grid skewness by \(s=2\tan \left( \frac{\pi }{2}Q\right) \) [4] for a right triangle. For the RD methods, the order of accuracy is unbounded for \(s=1\) due to perfect grid alignment with the characteristics \((a,b)=(1,1)\). However, the limit \(s\rightarrow 1^+\) still exists for most RD methods.

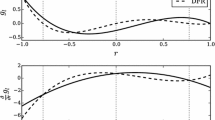

Fig. 7 shows the asymptotic values of the analytical order-of-accuracy (OoA) when the stretching parameter lies in \(1< s <{\infty }\). For all of the RD schemes reported herein, the magnitude of the errors increases rapidly beyond a certain skewness and reaches an asymptotic value when \(Q=1.0\) (or \(s\rightarrow {\infty }\)), as shown in Fig. 9. However, the OoA of a method may increase (e.g. Lax–Wendroff, second-order flux-difference), decrease (LDA, N scheme) or even behave in an oscillatory fashion (highest frequency), depending on the respective TE equations. Numerically, of course, we expect most schemes to show a drop in OoA as the skewness increases (Fig. 8), but RD schemes are usually less affected than FV methods [4]. For brevity, we have only included the numerical OoA for low frequency, since the methods show a similar pattern for higher frequencies but with more rapid deterioration at high skewness, as shown in the analytical part. The \(L_2\) errors for the numerical results are also similar to the truncation errors in Fig. 9 and are hence omitted for conciseness. Note that the Lax–Wendroff \(L_2\) errors drop to round-off level when \(Q\rightarrow {0.3}\) because the numerical solution approaches the exact solution in this configuration; hence, we could not compute its numerical OoA.
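A numerical OoA of this kind is typically obtained from the errors on two grids of different size through \(\text {OoA}=\ln (E_1/E_2)/\ln (h_1/h_2)\). The sketch below is a minimal illustration of that standard formula; the function name is an assumption, and the errors are synthetic values generated from an exact power law \(E=Ch^p\), not results from this study.

```python
import math

def observed_order(h_coarse, err_coarse, h_fine, err_fine):
    """Observed order of accuracy from errors measured on two grid sizes."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)

# Synthetic errors following E = C * h**p exactly (illustration only)
C, p = 0.4, 2.0
h1, h2 = 0.1, 0.05
e1, e2 = C * h1 ** p, C * h2 ** p
print(observed_order(h1, e1, h2, e2))   # recovers p up to round-off
```

When an error falls to round-off level, the ratio of errors no longer reflects the truncation error and the formula stops being meaningful, which is the situation described above for Lax–Wendroff near \(Q=0.3\).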

The first-order scheme generally attains the desired order of accuracy and is comparable to Lax–Friedrichs (LxF), which is also a central scheme. However, the first-order positive scheme always has a slightly lower \(L_2\) error than Lax–Friedrichs, and the difference decreases as the grid is further skewed. The N-scheme is the best first-order method because it has the narrowest stencil, which makes it least susceptible to grid changes.

The second-order (\(q=1\)) version largely maintains its accuracy as the skewness varies, while the order of accuracy of the classic second-order LDA deteriorates at high skewness. The deterioration becomes more rapid as the frequency increases, although LDA achieves third order at \(Q=0.5\). To see why, note that the truncation error for LDA under steady-state conditions is given by

With \(a = b = 1\) and the grid skewness relation \(s=2\tan \left( \frac{\pi }{2}Q\right) \), the TE for LDA at \(Q=0.5\) becomes

It can be seen that the second-order term of the TE for LDA cancels exactly at that specific skewness. The Lax–Wendroff (LxW) scheme is third-order accurate on right-running grids, since the second-order error terms drop out for this particular choice of structured grid. In general, Lax–Wendroff is a second-order method, as demonstrated in [5].

A sample analytical OoA plot for one skewness value is shown in Fig. 10. The numerical OoA plot for a particular skewness has the same hierarchical pattern and is therefore not included, for conciseness. The numerical velocity contours and the residual convergence history are shown in Figs. 11 and 12.

4.2 Shock-Tree Problem

This test case examines the ability of each method to capture a monotone shock profile for discontinuous data. The shock-tree case is based on Burgers’ equation, with inflow boundaries at the bottom, left and right of the domain.

The steady state exact solution is,

Fig. 13 illustrates that both first-order schemes preserve monotonicity in the presence of discontinuities, and the positive approach performs exceptionally well, as its diffusion is minimal. However, the second-order approach is not monotone, and oscillations occur near the shock region.

5 Conclusion

The flux-difference RD methods have a general mathematical form from which the classic central and upwind RD methods, and hence all of their inherent properties, can easily be recovered. In addition, the new RD method can be designed to have its own unique central-type variants with different orders of accuracy by controlling the artificial signals. Overall, the flux-difference methods are about as insensitive to grid skewness as the classic RD methods, which are known to be much less sensitive than finite volume methods.

References

Abgrall, R.: Residual distribution schemes: current status and future trends. Comput. Fluids 35(7), 641–669 (2006)

Abgrall, R.: A review of residual distribution schemes for hyperbolic and parabolic problems: the July 2010 state of the art. Commun. Comput. Phys. 11(4), 1043–1080 (2012)

Abgrall, R., Trefilík, J.: An example of high order residual distribution scheme using non-Lagrange elements. J. Sci. Comput. 45(1–3), 3–25 (2010)

Chizari, H., Ismail, F.: Accuracy variations in residual distribution and finite volume methods on triangular grids. Bull. Malays. Math. Sci. Soc. 22, 1–34 (2015)

Chizari, H., Ismail, F.: A grid-insensitive LDA method on triangular grids solving the system of Euler equations. J. Sci. Comput. 71(2), 839–874 (2017)

Courant, R., Friedrichs, K., Lewy, H.: On the partial difference equations of mathematical physics. IBM J. 11, 215–234 (1967)

Deconinck, H., Roe, P., Struijs, R.: A multidimensional generalization of Roe’s flux difference splitter for the Euler equations. Comput. Fluids 22(2), 215–222 (1993)

Ganesan, M.: Analytical study of residual distribution methods for solving conservation laws, MSc. thesis, Universiti Sains Malaysia (2017)

Guzik, S., Groth, C.: Comparison of solution accuracy of multidimensional residual distribution and Godunov-type finite-volume methods. Int. J. Comput. Fluid Dyn. 22, 61–83 (2008)

Ismail, F., Carrica, P.M., Xing, T., Stern, F.: Evaluation of linear and nonlinear convection schemes on multidimensional non-orthogonal grids with applications to KVLCC2 tanker. Int. J. Numer. Methods Fluids 64(September 2009), 850–886 (2010)

Ismail, F., Chizari, H.: Developments of entropy-stable residual distribution methods for conservation laws I: scalar problems. J. Comput. Phys. 330, 1093–1115 (2017)

Jameson, A.: Artificial diffusion, upwind biasing, limiters and their effect on accuracy and multigrid convergence in transonic and hypersonic flow. In: 93-3359. AIAA Conference, Orlando (1993)

Katz, A., Sankaran, V.: High aspect ratio grid effects on the accuracy of Navier–Stokes solutions on unstructured meshes. Comput. Fluids 65, 66–79 (2012)

Masatsuka, K.: I do like CFD, book, vol. 1 (2009)

Mazaheri, A., Nishikawa, H.: Improved second-order hyperbolic residual-distribution scheme and its extension to third-order on arbitrary triangular grids. J. Comput. Phys. 300(1), 455–491 (2015)

Nishikawa, H.: Fluctuation-Splitting Schemes and Hyp / Ell Decompositions of the Euler Fluctuations and Forms of The Euler Equations. Tech. Rep. August (2004)

Nishikawa, H.: A first-order system approach for diffusion equation. II: unification of advection–diffusion. J. Comput. Phys. 229(11), 3989–4016 (2010)

Ricchiuto, M., Abgrall, R.: Explicit Runge–Kutta residual distribution schemes for time dependent problems: second order case. J. Comput. Phys. 229(16), 5653–5691 (2010)

Singh, V., Chizari, H., Ismail, F.: Non-unified Compact Residual-Distribution Methods for Scalar Advection-Diffusion Problems. Journal of Scientific Computing revision stage (2017)

Acknowledgements

We would like to thank the Ministry of Higher Education of Malaysia for financially supporting this research work under the FRGS grant (NO: 203/PAERO/6071316).

Additional information

Communicated by Ahmad Izani.

Appendices

A Truncation Error (TE) of Classic RD Methods

The TEs for the classic RD methods are determined under the assumption that \(\frac{b}{a}<\frac{k}{h}\).

B Stability Analysis on Classic RD Methods

Using the same formulation as in Sect. 3.6, the amplification factors \(\delta \) for the N and LDA schemes are determined as follows.

Cite this article

Ismail, F., Chang, W.S. & Chizari, H. On Flux-Difference Residual Distribution Methods. Bull. Malays. Math. Sci. Soc. 41, 1629–1655 (2018). https://doi.org/10.1007/s40840-017-0559-8

DOI: https://doi.org/10.1007/s40840-017-0559-8