Abstract

The bivariate Weibull distribution is an important lifetime distribution in survival analysis. In this paper, Farlie–Gumbel–Morgenstern (FGM) copula and Weibull marginal distribution are used for creating bivariate distribution which is called FGM bivariate Weibull (FGMBW) distribution. FGMBW distribution is used for describing bivariate data that have weak correlation between variables in lifetime data. It is a good alternative to bivariate several lifetime distributions for modeling real-valued data in application. Some properties of the FGMBW distribution are obtained such as product moment, skewness, kurtosis, moment generation function, reliability function and hazard function. Three different estimation methods for parameters estimation are discussed for FGMBW distribution namely; maximum likelihood estimation, inference function for margins method and semi-parametric method. To evaluate the performance of the estimators, a Monte Carlo simulations study is conducted to compare the preferences between estimation methods. Also, a real data set is introduced, analyzed to investigate the model and useful results are obtained for illustrative purposes.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The Weibull distribution has been attained more attention in the literature and has inherent flexibility. The univariate Weibull distribution has the following cdf and pdf respectively

and

where \( \beta ,\alpha \) are the scale and shape parameters respectively.

In bivariate Weibull, recent researches have been made for the bivariate Weibull distribution. Galiani [6] concluded that bivariate Weibull are specifically oriented towards applications in economics, finance and risk management. Flores [4] used Weibull marginal to construct bivariate Weibull distributions. Kundu and Gupta [13] introduced the Marshall–Olkin bivariate Weibull distribution.

A copula is a convenient approach for description of a multivariate distribution. Nelsen [16] introduced Copulas as following; copula is function that join multivariate distribution functions with uniform [0, 1] margins. A copula is a convenient approach to describe a multivariate distribution with dependence structure. The n-dimensional copula \( \left( C \right) \) exists for all \( y_{1} , \ldots ,y_{n} \), \( F\left( { y_{1} , \ldots ,y_{n} } \right) = C\left( {F_{1} \left( {y_{1} } \right), \ldots , F_{n} \left( {y_{n} } \right)} \right), \) if F is continuous, then \( C \) is uniquely defined.

Sklar [18] states that, considered the two random variables \( Y_{1} \) and \( Y_{2} \), with distribution functions \( F_{1} \left( {y_{1} } \right) \) and \( F_{2} \left( {y_{2} } \right) \) the following cdf and pdf for copula are respectively

and

Farlie–Gumbel–Morgenstern (FGM) is one of the most popular parametric families of copulas, the family was discussed by Gumbel [8] The joint cdf and joint pdf for FGM copula as following respectively

and

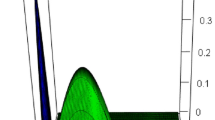

Figure 1 3-dimension for the pdf and cdf of FGM copula with different value of parameter of copula \( \theta \).

Fredricks and Nelsen [5] drives the formula for Spearman’s and Kendall’s correlation coefficient as follows

Such that \( \frac{ - 1}{3} \le \rho_{sperman} \le \frac{1}{3}, \frac{ - 2}{9} \le \rho_{\text{Kendall}} \le \frac{2}{9} \).

In this article, we study the bivariate extension of the Weibull distribution based on FGM copula function (FGMBW) and discuss its statistical properties. FGMBW distribution is used for describing bivariate data that have weak correlation between variables in lifetime data. It is a good alternative to bivariate several lifetime distributions for modeling non-negative real-valued data in application.

The objective of this article is twofold: to study the properties of the FGMBW distribution, and to estimate the parameters of the model by different estimation methods. The attractive feature of the marginal function of FGMBW distribution is the same as the basic distribution (Weibull). Other features of the FGMBW distribution: it contains closed forms for its cdf, product moment, moment generation function, and hazard rate function. The final motivation of the article is to develop a guideline for introducing the best estimation method for the FGMBW distribution, which we think would be of deep interest to statisticians. A simulation study is conducted to compare the preferences between estimation methods. Also, a real data set is introduced and analyzed to investigate the model. The uniqueness of this study comes from the fact that we introduce a comprehensive description of mathematical and statistical properties of FGMBW distribution with the hope that they will attract wider applications in medicine, economics, life testing and other areas of research.

The rest of this paper is organized as follows: FGM bivariate Weibull distribution is obtain in Sect. 2. Some statistical properties of FGMBW distribution in Sect. 3. Parameter estimation methods for the FGMBW distribution based in copula in Sect. 4. In Sect. 5, asymptotic confidence intervals are discussed. In Sect. 6, the potentiality of the new model is illustrated by simulation study. In Sect. 7, Application of real data are discussed. Finally, Conclusion of some remarks for FGMBW model are addressed in Sect. 8.

2 FGM Bivariate Weibull Distribution

According to Sklar theorem the joint pdf of bivariate Weibull distribution for any copula is as follows

The cdf of a FGMBW distribution can be expressed as

The pdf of a FGMBW distribution is defined as

Figure 2 show the plot 3-dimension for the pdf and cdf of FGMBW distribution with different value of \( \alpha_{1} ,\beta_{1} , \alpha_{2} ,\beta_{2} \,{\text{and}}\,\theta \) (Fig. 3).

3 Properties of FGMBW Distribution

In this section, we give some important statistical properties of the FGMBW distribution such as Marginal Distributions, product moments, moment generating function, conditional distribution, generating random variables, reliability function. Establishing algebraic expressions to determine some statistical properties of the FGMBW distribution can be more efficient than computing them directly by numerical simulation.

3.1 The Marginal Distributions

The marginal density functions for \( Y_{1} \) and \( Y_{2} \) respectively,

which are Weibull distributed, where the marginal distribution of \( Y_{1} \) and \( Y_{2} \) can be calculated directly by

3.2 Conditional Distribution

The conditional probability distribution of \( Y_{2} \) given \( Y_{1} \) is given as follows

and the conditional cdf is

where \( u\left( {y_{i} } \right) = 1 - e^{{ - \left( {\frac{{y_{i} }}{{\beta_{i} }}} \right)^{{\alpha_{i} }} }} i = 1,2 \) and \( v\left( {y_{i} } \right) = 1 - e^{{ - 2\left( {\frac{{y_{i} }}{{\beta_{i} }}} \right)^{{\alpha_{i} }} }} i = 1,2 \)

3.3 Generating Random Variables

Nelsen [16] discussed generating a sample from a specified joint distribution. By conditional distribution method, the joint distribution function is as follows

By using the following steps, we can generate a bivariate sample by using the conditional approach:

- 1.

Generate \( U \) and \( V \) independently from a \( {\text{uniform}}\left( {0, 1} \right) \) distribution.

- 2.

Set \( Y_{1} = \beta_{1} \left[ { - \ln \left( {1 - U} \right)} \right]^{{\alpha_{1} }} \).

- 3.

Set \( F\left( {y_{2} \left| {y_{1} } \right.} \right) = V \) to find \( Y_{2} \) by numerical simulation.

- 4.

Repeat Steps 1–3 \( \left( n \right) \) times to obtain \( \left( {y_{1i} ,y_{2i} } \right), i = 1, 2, \ldots , n \).

3.4 Moment Generating Function

Let \( \left( {Y_{1} ,Y_{2} } \right) \) denote a random variable with the probability density function (2.3). Then, the moment generating function of \( \left( {Y_{1} ,Y_{2} } \right) \) is given by,

To prove the moment generating function start with

3.5 Product Moments

If the random variable \( \left( {Y_{1} ,Y_{2} } \right) \) is distributed as FGMBW, then its rth and sth moments around zero can be expressed as follows

To prove that start with

We use Mardia’s [14] measures of multivariate and bivariate skewness (SK) and kurtosis (KU) (Table 1). Mardia defined bivariate SK and KU, respectively, as

where \( \gamma_{rs} = \frac{{\mu_{rs} }}{{\sigma_{1}^{r} \sigma_{2}^{s} }}, \rho = corr\left( {Y_{1} ,Y_{2} } \right) = \frac{{E\left( {\left( {Y_{1} - E\left( {Y_{1} } \right)} \right)^{ } \left( {\left( {Y_{2} - E\left( {Y_{2} } \right)} \right)} \right)^{ } } \right)}}{{\sigma_{1}^{ } \sigma_{2}^{ } }} \) where \( \mu_{rs} \), is the central moment of order \( \left( {r, s} \right) \) of \( \left( {Y_{1} ,Y_{2} } \right) \), \( \sigma_{1}^{ } = \sqrt {E\left( {Y_{1} - E\left( {Y_{1} } \right)} \right)^{2} } \) and \( \sigma_{2} = \sqrt {E\left( {Y_{2} - E\left( {Y_{2} } \right)} \right)^{2} } \)

3.6 Reliability Function

Osmetti and Chiodini [17] discussed that the reliability function is more convenient to express a joint survival function as a copula of its marginal survival functions, where \( Y_{1} \,{\text{and}}\,Y_{2} \) be random variable with survival functions \( \bar{F}\left( {y_{1} } \right) \) and \( \bar{F}\left( {y_{2} } \right) \) as following.

The reliability function of the marginal distributions is defined as

The expression of the joint survival function for copula is as following

Then the reliability function of FGMBW distribution is

Basu [1] defined the bivariate failure rate function for the first time as

Then the hazard rate function of FGMBW distribution is

4 Estimation Based on Copulas

In the section, we introduce different estimation methods that used to estimate the parameters of FGMBW distribution, such as: maximum likelihood estimation (MLE), inference functions for margins (IFM) and semi-parametric method (SP). To more information about these methods see Chen [2], Tsukahara [19] and Weiß [20].

4.1 Maximum Likelihood Estimation (MLE)

Elaal and Jarwan [3], discussed the maximum likelihood estimator to estimate all model parameters jointly, it is a one-step parametric method. Therefore, the log-likelihood is given as

The parameter estimates are obtained by maximizing the log-likelihood function with expect to each parameter separately. Considering Eqs. (2.3) and (1.6), let

The likelihood function of a FGMBW distribution is defined as

and the log-likelihood function can be written as

The estimates of all parameters are obtained by differentiating the log-likelihood function in (4.1) with respect to each parameter separately, as following

and

The MLE \( \hat{\delta } = \left( {\hat{\alpha }_{1} , \hat{\beta }_{1} , \hat{\alpha }_{2} , \hat{\beta }_{2} , \hat{\theta }} \right) \) can be obtained by solving simultaneously the likelihood equations

But the equations has to be performed numerically using a nonlinear optimization algorithm.

4.2 Estimation by Inference Functions for Margins (IFM)

Joe [9] introduced this parametric method with two-step of estimation. In the first step, each marginal distribution is estimated separately.

Then, in the second step the copula parameter is estimated by maximizing the log-likelihood function of the copula density using the ML estimates of the marginal \( \hat{F}_{1} \left( {y_{1j} ,\delta } \right) \) and \( \hat{F}_{2} \left( {y_{2j} ,\delta } \right) \). Considering the Eq. (2.3), the log likelihood function of a Weibull distribution is defined as

The MLEs \( \left( {\hat{\alpha }_{1} , \hat{\beta }_{1} , \hat{\alpha }_{2} , \hat{\beta }_{2} } \right) \) can be obtained by solving simultaneously the likelihood equations

then

and considering the previous step, the IFM estimate of a FGMBW distribution is defined as

The estimates of all parameters are obtained by differentiating the log-likelihood function in (4.4) with respect to each parameter separately. Basing on this, differentiating the log-likelihood function with respect to \( \theta \) is given as

The estimates of parameters are handled numerically simultaneously the likelihood equations

There is no closed-form expression for the MLE \( \hat{\theta } \) and its computation has to be performed numerically using a nonlinear optimization algorithm.

4.3 Estimation by Semi-Parametric Method [SP]

Kim et al. [10] introduced Estimation that carried out in two stages as in IFM, but the difference is that the marginal distributions are estimated non-parametrically by their sample empirical distributions. In this method, the observations are transformed into pseudo-observations using the empirical distribution function of each marginal distribution. The empirical distribution function is defined as

Then, \( \theta \) is estimated by the maximizer of the pseudo loglikelihood,

Considering the Eq. (4.6), the log likelihood function of a FGMBW distribution is defined as

There is no closed-form expression for the MLE \( \hat{\theta } \) by using of Eq. (4.7) and it computation has to be performed numerically using a statistical software.

5 Asymptotic Confidence Intervals

In this section, we propose the asymptotic confidence intervals using methods of estimations. Keeping this in mind, we may propose the asymptotic confidence intervals using ML, IFM, and SP methods can be used to construct the confidence intervals for the parameters. We first obtain \( I\left( {\hat{\alpha }_{1} , \hat{\beta }_{1} , \hat{\alpha }_{2} , \hat{\beta }_{2} , \hat{\theta }} \right) \) which is the observed inverse Fishers information matrix and it is defined as:

An approximate 95% two side confidence intervals for (\( \alpha_{1} , \beta_{1} , \alpha_{2} , \beta_{2} , \theta \)) are respectively

6 Simulation Study

In this section; Monte Carlo simulation is done for comparison between estimation methods based on copula such as: MLE, IFM and SP. For estimating FGMBW distribution parameters by R language.

Simulation Algorithm Monte Carlo experiments were carried out based on the following data- generated form Weibull Distributions, where \( Y_{1} ,Y_{2} \) are distributed as Weibull with \( \beta_{\text{i }} \) shape parameters and \( \alpha_{\text{i}} \) scale parameter, i = 1, 2 the values of the parameters \( \alpha_{1} ,\beta_{1} , \alpha_{2} , \beta_{2} \) and θ is chosen as the following cases for the random variables generating:

For different sample size \( n \) = 30, 50, 70, 100, 125 and 150. All computations are obtained based on the R language. The simulation methods are compared using the criteria of parameters estimation, the comparison is performed by calculate in the Bias, the MSE and the length of confidence interval (L.CI) for each method as following

where \( \hat{\delta } \) is the estimated value of \( \delta \).

and

We restricted the number of repeated-samples to 1000.

Based on Eqs. 1.7 and 1.8 for Spearman’s and Kendall’s correlation coefficient (Table 2).

On the basis of the results summarized in tables and figures, some conclusions can be drawn which are stated as follows: it is observed that as sample size increases and fixed vector value of δ, the Bias, MSE and Length of confidence interval of the estimates decreases in all the considered methods. In large sample size all of them are nearly equivalent, where the difference is less and there are no significant differences in Bias and MSE values for alternative methods and MLE method. The compare between parametric estimation and non-parametrically estimation have done. The parametric estimation methods are better than non-parametrically estimation method, when copula parameter is not high, approximately (− 0.7:0.7). The SP method is discussed where, the marginal distributions are estimated non-parametrically by their sample empirical distributions and it estimated the copula parameter, this compare between parametric estimation and non-parametrically estimation, whenever the value of copula parameter is close to (− 1 or 1), the efficiency will increase for SP method compared with other methods. IFM method is better than another methods, this is clear for copula parameter θ. It is noted that result, IFM method is the best method because it is a two steps of estimation, first, the marginal distribution parameters estimated and second the copula parameter is estimated, taking into consideration of previous parameter estimates of marginal distribution. That get more efficiency (Figs. 4, 5, Tables 3, 4, 5, 6, 7, 8).

On the basis of the results summarized in table, some conclusions can be drawn which are stated as follows: It is observed that as sample size increases for fixed vector values of \( \delta \) the MSE of the estimates decreases in all the considered methods and for large size all of them are nearly equivalent but IFM performs better than another method when increase \( \theta \) (parameter of copula). While θ decreases, the MLE is better than other methods Based on MSE term, but the SP method is discussed where, the marginal distributions are estimated non-parametrically by their sample empirical distributions and it estimated the parameter of copula, this compare between parametric estimation and non-parametrically estimation.

7 Application of Real Data

The data for 30 patients set from McGilchrist and Aisbett in [15]. Let \( Y_{1} \) refers to first recurrence time and \( Y_{2} \) to second recurrence time, as following \( Y_{1} \,is\,(8,23,22,447,30,24,7,511,53,15,7,141,96,149, \)\( 536,17,185,292,22,15,152,402,13,39,12,113,132,34,2,130) \) and \( Y_{2} \,is\,(16,13,28,318,12,245,9,30, \)\( 196,154,333,8,38,70,25,4,117, 114,159,108,362,24,66,46,40,201,156,30,25,26) \). Elaal and Jarwan [3] discussed the estimation of the parameters of bivariate generalized exponential distribution for this data (Table 9).

Genest et al. [7] introduced Multiplier bootstrap-based goodness-of-fit test. We use the concludes of Genest to fit of Farlie–Gumbel–Morgenstern (FGM) by R package then (Table 10)

This by using a parametric bootstrap N = 10,000 time and the empirical copula estimate.

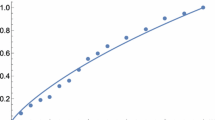

Goodness of fit test one-sample Kolmogorov–Smirnov test (Table 11).

A comparison has been done between FGM bivariate Gamma (FGMBG), which was discussed by Kotz et al. [11], bivariate Marshall–Olkin Weibull (BMOW), which was discussed by Kundu and Dey [12] and FGM Bivariate Generalized Exponential (FGMBGE), which was discussed by Elaal and Jarwan [3].

In Table 12, it is observed that, the FGMBW model provides a better fit than the other tested models (FGMBG FGMBGE BMOW), because it has the smallest value of L, AIC and BIC. The FGMBW distribution is a good alternative to bivariate several lifetime distributions for modeling non-negative real-valued data in application.

In Tables 13, it is observed that, the IFM method provides a better fit than the other tested methods, because it has the smallest value of stander deviation and L.CI for parameters of FGMBW distribution.

8 Conclusion

In this paper, we have proposed a FGMBW distribution based on FGM copula function. Moreover, we have the reliability functions for FGMBW distribution; therefore, it can be used quite effectively in life testing data. Additionally, the new FGMBW model can be used as an alternative to any bivariate Weibull distribution; it might work better, where the marginal function of FGMBW distribution has the same basic distribution and has closed forms for product moment. A comparison between different estimation methods of the FGMBW distribution are concluded. The results show that the best method of estimation is IFM method, whereas real data application show that MLE perform better than their counterparts. Hence, we can argue that IFM estimators and MLE are the best performing estimators for FGMBW distribution.

References

Basu AP (1971) Bivariate failure rate. J Am Stat Assoc 66(333):103–104

Chen X (2007) Large sample sieve estimation of semi-nonparametric models. Handb Econom 6:5549–5632

Elaal MKA, Jarwan RS (2017) Inference of bivariate generalized exponential distribution based on copula functions. Appl Math Sci 11(24):1155–1186

Flores AQ (2009) Testing copula functions as a method to derive bivariate Weibull distributions. Am Polit Sci Assoc (APSA) 2009:3–6

Fredricks GA, Nelsen RB (2007) On the relationship between Spearman’s rho and Kendall’s tau for pairs of continuous random variables. J Stat Plan Inference 137(7):2143–2150

Galiani SS (2003) Copula functions and their application in pricing and risk managing multiname credit derivative products. University of London Master of Science Project

Genest C, Huang W, Dufour J-M (2013) A regularized goodness-of-fit test for copulas. J Soc Fran Stat 154:64–77

Gumbel EJ (1960) Bivariate exponential distributions. J Am Stat Assoc 55:698–707

Joe H (2005) Asymptotic efficiency of the two-stage estimation method for copulabased models. J Multivar Anal 94:401–419

Kim G, Silvapulle MJ, Silvapulle P (2007) Comparison of semiparametric and parametric methods for estimating copulas. Comput Stat Data Anal 51(6):2836–2850

Kotz S, Balakrishnan N, Johnson NL (2004) Continuous multivariate distributions, volume 1: models and applications, vol 1. Wiley, Hoboken

Kundu D, Dey AK (2009) Estimating the parameters of the Marshall–Olkin bivariate Weibull distribution by EM algorithm. Comput Stat Data Anal 53(4):956–965

Kundu D, Gupta AK (2013) Bayes estimation for the Marshall–Olkin bivariate Weibull distribution. Comput Stat Data Anal 57(1):271–281

Mardia KV (1970) Measures of multivariate skewness and kurtosis with applications. Biometrika 57(3):519–530

McGilchrist CA, Aisbett CW (1991) Regression with frailty in survival analysis. Biometrics 47:461–466

Nelsen RB (2006) An introduction to copulas. Springer, New York

Osmetti SA, Chiodini PM (2011) A method of moments to estimate bivariate survival functions: the copula approach. Statistica 71(4):469–488

Sklar A (1973) Random variables, joint distributions, and copulas. Kybernetica 9:449–460

Tsukahara H (2005) Semiparametric estimation in copula models. Can J Stat 33(3):357–375

Weiß G (2011) Copula parameter estimation by maximum-likelihood and minimum-distance estimators: a simulation study. Comput Stat 26(1):31–54

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Almetwally, E.M., Muhammed, H.Z. & El-Sherpieny, ES.A. Bivariate Weibull Distribution: Properties and Different Methods of Estimation. Ann. Data. Sci. 7, 163–193 (2020). https://doi.org/10.1007/s40745-019-00197-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40745-019-00197-5