Abstract

In this paper, we prove a weak convergence theorem for finding a common solution of a combination of equilibrium problems, the fixed point problem of an infinite family of nonexpansive mappings, and modified inclusion problems, using an inertial forward–backward algorithm. We also discuss some applications of the obtained results. Furthermore, we provide numerical results to illustrate the convergence behavior of some of these results and to compare the convergence rate of the existing projection method with that of the proposed inertial forward–backward algorithm.


1 Introduction

Throughout the paper, unless otherwise stated, let H be a real Hilbert space. The inner product and the induced norm are denoted by \(\langle \cdot ,\cdot \rangle \) and \(\Vert \cdot \Vert \), respectively. Weak convergence and strong convergence are denoted by \(\rightharpoonup \) and \(\rightarrow \), respectively. Let C be a nonempty, closed, and convex subset of H. The fixed point problem for a mapping \(T : C\rightarrow H\) is to find \(x\in C\) such that \(x = Tx\). We denote the fixed point set of a mapping T by \(\mathrm{Fix}(T)\).

A mapping \(T:C\rightarrow C\) is called nonexpansive if

$$\begin{aligned} \Vert Tx-Ty\Vert \le \Vert x-y\Vert ,\quad \forall x,y\in C. \end{aligned}$$

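T is called \(\alpha \)-inverse strongly monotone if there exists a constant \(\alpha >0\) such that

$$\begin{aligned} \langle Tx-Ty, x-y\rangle \ge \alpha \Vert Tx-Ty\Vert ^2,\quad \forall x,y\in C. \end{aligned}$$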

Let \(F : C \times C\rightarrow {\mathbb {R}}\) be a bifunction. The classical equilibrium problem (EP, for short) is to find \(x\in C\) such that

$$\begin{aligned} F(x,y)\ge 0,\quad \forall y\in C. \end{aligned}$$ (1.1)

The solution set of \(\mathrm{EP}\) (1.1) is denoted by \(\mathrm{EP}(F)\), that is,

$$\begin{aligned} \mathrm{EP}(F)=\{x\in C: F(x,y)\ge 0,~\forall y\in C\}. \end{aligned}$$ (1.2)

The equilibrium problem \(\mathrm{EP}\) (1.1), introduced by Blum and Oettli (1994), is one of the most intensively studied classes of problems. Its theory has contributed to the development of several areas, including physics, optimization, economics, and transportation. In the recent past, various classes and forms of equilibrium problems and their applications have been studied, and, as a result, various techniques and iterative schemes have been developed over the years to solve them; see (Blum and Oettli 1994; Combettes and Hirstoaga 2005; Farid et al. 2017; Khan and Chen 2015; Suwannaut and Kangtunyakarn 2014) and references therein.

Recently, Suwannaut and Kangtunyakarn (2014) proposed the following combination of equilibrium problems: for each \(i=1,2,\ldots ,N\), let \(F_i:C\times C\rightarrow {\mathbb {R}}\) be a bifunction and let \(a_i\in (0,1)\) with \(\sum \nolimits _ {i=1}^N a_i=1\). The combination of equilibrium problems (CEP, for short) is to find \(x\in C\) such that

$$\begin{aligned} \sum \limits _ {i=1}^N a_iF_i(x,y)\ge 0,\quad \forall y\in C. \end{aligned}$$ (1.3)

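The solution set of \(\mathrm{CEP}\) (1.3) is denoted by \(\mathrm{EP}\big (\sum \nolimits _ {i=1}^N a_iF_i\big )\), that is,

$$\begin{aligned} \mathrm{EP}\Big (\sum \limits _ {i=1}^N a_iF_i\Big )=\Big \{x\in C: \sum \limits _ {i=1}^N a_iF_i(x,y)\ge 0,~\forall y\in C\Big \}. \end{aligned}$$ (1.4)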

If \(F_i=F,~\forall i=1,2,\ldots ,N\), then \(\mathrm{CEP}\) (1.3) reduces to \(\mathrm{EP}\) (1.1).

Let \(A : H\rightarrow H\) be an operator and \(B : H\rightarrow 2^H\) a multi-valued operator. The variational inclusion problem (VIP, for short) is to find \(x\in H\) such that

$$\begin{aligned} 0\in Ax+Bx. \end{aligned}$$ (1.5)

The solution set of VIP (1.5) is denoted by \((A+B)^{-1}(0)\). Variational inclusion problems arise in the study of minimization problems, complementarity problems, optimal control, convex programming, split feasibility problems, and variational inequalities.

An important method for solving VIP (1.5) is the forward–backward splitting method, given by

$$\begin{aligned} x_{n+1}=J^B_r(x_n-rAx_n)=(I+rB)^{-1}(I-rA)x_n,\quad n\ge 1, \end{aligned}$$ (1.6)

where \(J^B_r=(I + rB)^{-1}\) with \(r > 0\). Forward–backward splitting methods are versatile in exploiting the special structure of variational inequality problems. In this algorithm, \(I -rA\) gives a forward step with step size r, whereas \((I +rB)^{-1}\) gives a backward step. The method is useful in practice because the forward step \(I-rA\) and the resolvent \((I + rB)^{-1}\) are often much easier to compute separately than the resolvent of the sum \(A+B\). It provides a range of approaches to solving large-scale optimization problems and variational inequality problems; see (Bauschke and Combettes 2011; Cholamjiak 1994; Combettes and Wajs 2005; Lions and Mercier 1979; Lopez et al. 2012; Passty 1979; Tseng 2000) and references therein. The forward–backward splitting method includes the proximal point algorithm and the gradient method as special cases; see (Alvarez 2004; Douglas and Rachford 1956; Lions and Mercier 1979; Peaceman and Rachford 1955) and references therein.

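If \(A=\nabla h\) and \(B=\partial k\), where \(\nabla h\) is the gradient of a convex differentiable function h and \(\partial k\) is the subdifferential of a proper convex lower semicontinuous function k, then VIP (1.5) reduces to the following minimization problem:

$$\begin{aligned} \min _{x\in H}\{h(x)+k(x)\}, \end{aligned}$$ (1.7)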

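and the iteration (1.6) reduces to

$$\begin{aligned} x_{n+1}=\mathrm{prox}_{rk}(x_n-r\nabla h(x_n)),\quad n\ge 1, \end{aligned}$$ (1.8)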

where \(\mathrm{prox}_{rk}=(I + r\partial k)^{-1}\) is the proximity operator of k.
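As an illustration of the forward–backward iteration (1.8), \(x_{n+1}=\mathrm{prox}_{rk}(x_n-r\nabla h(x_n))\), the following Python sketch takes, as an assumed example not taken from this paper, \(h(x)={1\over 2}\Vert Mx-b\Vert ^2\) and \(k(x)=\mu \Vert x\Vert _1\) on \({\mathbb {R}}^d\). Here the proximity operator of \(r\mu \Vert \cdot \Vert _1\) is componentwise soft thresholding. The helper names `soft_threshold` and `forward_backward` are hypothetical, and the step size is assumed to satisfy \(0<r<2/L\) with \(L=\Vert M\Vert ^2\).

```python
import numpy as np

def soft_threshold(v, t):
    """Proximity operator of t*||.||_1: shrink each entry toward 0 by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def forward_backward(M, b, mu, r, x0, iters=500):
    """Iteration (1.8) for h(x) = 0.5*||Mx - b||^2 and k(x) = mu*||x||_1."""
    x = np.array(x0, dtype=float)
    for _ in range(iters):
        grad = M.T @ (M @ x - b)                   # forward (explicit) step on h
        x = soft_threshold(x - r * grad, r * mu)   # backward step: prox of r*k
    return x

# With M = I the minimizer is soft_threshold(b, mu), here [2, 0, -1].
x = forward_backward(np.eye(3), np.array([3.0, 0.5, -2.0]), mu=1.0, r=0.5, x0=np.zeros(3))
print(np.round(x, 6))
```

The identity case gives a quick check, since the minimizer is then known in closed form; for a general M, one can instead verify the optimality condition \(0\in \nabla h(x)+\mu \partial \Vert x\Vert _1\).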

In 1964, Polyak (1964) introduced a two-step iterative method, known as the heavy-ball method, for minimizing a smooth convex function h:

$$\begin{aligned} x_{n+1}=x_n+\theta _n(x_n-x_{n-1})-r\nabla h(x_n),\quad n\ge 1, \end{aligned}$$ (1.9)

where \(\theta _n\in [0, 1)\) is an extrapolation factor and the step size r has to be chosen sufficiently small. Inspired by the work of Polyak, Alvarez and Attouch (2001) introduced in 2001 an inertial forward–backward algorithm, a modification of the forward–backward splitting algorithm (1.6), given by

They proved its convergence for monotone inclusion problems in a Hilbert space setting under the condition \(\sum \nolimits _ {n=1}^\infty \theta _n\Vert x_n-x_{n-1}\Vert ^2<\infty \) with \(\{\theta _n\}\subset [0, 1)\). The term \(\theta _n(x_n-x_{n-1})\) is called the inertial term, with extrapolation factor \(\theta _n\); it can accelerate convergence while leaving the structure of each iteration essentially unchanged; see (Alvarez 2004; Dang et al. 2017; Dong et al. 2017, 2018; Khan et al. 2018; Lorenz and Pock 2015; Nesterov 1983).
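To make the effect of the inertial term concrete, the following Python sketch runs the heavy-ball iteration \(x_{n+1}=x_n+\theta (x_n-x_{n-1})-r\nabla h(x_n)\) on an assumed quadratic \(h(x)={1\over 2}x^{\top }Qx\) with \(Q=\mathrm{diag}(1,100)\), and compares it with plain gradient descent (\(\theta =0\)). The constant parameters \(r=4/(\sqrt{L}+\sqrt{\mu })^2\) and \(\theta =((\sqrt{L}-\sqrt{\mu })/(\sqrt{L}+\sqrt{\mu }))^2\) are Polyak's classical tuning for strongly convex quadratics with eigenvalues in \([\mu ,L]\). They are an illustrative choice, not the parameters used in this paper, and the helper name is hypothetical.

```python
import math
import numpy as np

def iterations_to_tol(theta, r, Q, x0, tol=1e-8, max_iter=10_000):
    """Heavy-ball iteration x_{n+1} = x_n + theta*(x_n - x_{n-1}) - r*Q x_n
    for h(x) = 0.5 x^T Q x (minimizer 0); theta = 0 is gradient descent.
    Returns the first n with ||x_n|| < tol, or max_iter if never reached."""
    x_prev = np.array(x0, dtype=float)
    x = x_prev.copy()              # the two initial points coincide
    for n in range(1, max_iter + 1):
        x, x_prev = x + theta * (x - x_prev) - r * (Q @ x), x
        if np.linalg.norm(x) < tol:
            return n
    return max_iter

Q = np.diag([1.0, 100.0])
mu, L = 1.0, 100.0
gd = iterations_to_tol(0.0, 1.0 / L, Q, [1.0, 1.0])
r_hb = 4.0 / (math.sqrt(L) + math.sqrt(mu)) ** 2
theta_hb = ((math.sqrt(L) - math.sqrt(mu)) / (math.sqrt(L) + math.sqrt(mu))) ** 2
hb = iterations_to_tol(theta_hb, r_hb, Q, [1.0, 1.0])
print(gd, hb)
```

Gradient descent needs roughly 1,800 iterations here, because its slow mode contracts only by the factor 0.99 per step, while the heavy-ball variant reaches the same tolerance in far fewer iterations.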

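Moudafi and Oliny (2003) proposed the following inertial proximal point algorithm for finding a zero of the sum of two monotone operators:

$$\begin{aligned} \left\{ \begin{array}{l} y_n=x_n+\theta _n(x_n-x_{n-1}),\\ x_{n+1}=(I+r_nB)^{-1}(y_n-r_nAx_n),\quad n\ge 1. \end{array}\right. \end{aligned}$$ (1.11)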

They proved weak convergence under the condition \(\sum \nolimits _ {n=1}^\infty \theta _n\Vert x_n-x_{n-1}\Vert ^2<\infty \); in their scheme, the single-valued operator A is evaluated at \(x_n\) rather than at the inertial extrapolate \(y_n\).
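A minimal Python sketch of an inertial forward–backward step of this kind (extrapolate to \(y_n=x_n+\theta _n(x_n-x_{n-1})\), evaluate the single-valued operator at \(x_n\), then apply the resolvent of B) is given below. As an assumed test problem, A is the gradient of \({1\over 2}\Vert Mx-b\Vert ^2\) and B is the normal cone of the nonnegative orthant, whose resolvent is the projection \(\max (\cdot ,0)\). The rule \(\theta _n=\min \{\theta ,1/(n^2\Vert x_n-x_{n-1}\Vert )\}\) is one hypothetical way to keep \(\sum \theta _n\Vert x_n-x_{n-1}\Vert \) finite, which also keeps the squared series finite when the iterates stay bounded.

```python
import numpy as np

def inertial_forward_backward(M, b, r, theta, x0, iters=5000):
    """Inertial forward-backward sketch for 0 in Ax + Bx, where
    Ax = M^T (M x - b) and B is the normal cone of {x >= 0}
    (its resolvent is the projection max(., 0))."""
    x_prev = np.array(x0, dtype=float)
    x = x_prev.copy()
    for n in range(1, iters + 1):
        diff = x - x_prev
        nd = np.linalg.norm(diff)
        # theta_n * ||x_n - x_{n-1}|| <= 1/n^2, so the series is finite
        theta_n = theta if nd == 0.0 else min(theta, 1.0 / (n * n * nd))
        y = x + theta_n * diff                   # inertial extrapolate y_n
        z = y - r * (M.T @ (M @ x - b))          # forward step, A evaluated at x_n
        x_prev, x = x, np.maximum(z, 0.0)        # backward step (projection)
    return x

rng = np.random.default_rng(1)
M, b = rng.standard_normal((30, 10)), rng.standard_normal(30)
r = 1.0 / np.linalg.norm(M, 2) ** 2              # step size below 2/L
x = inertial_forward_backward(M, b, r, theta=0.3, x0=np.zeros(10))
g = M.T @ (M @ x - b)
print(x.min() >= 0, g.min(), np.abs(x * g).max())  # KKT: x >= 0, g >= 0, x*g = 0
```

For this problem the zeros of \(A+B\) are the nonnegative least-squares solutions, so the printed quantities check the complementarity conditions.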

Very recently, Khan et al. (2018) proposed an inertial forward–backward splitting algorithm for solving inclusion problems as follows:

and proved a strong convergence theorem for the sequence \(\{x_n\}\) under suitable conditions on the parameters \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\) and \(\{\theta _n\}\) in the setting of Hilbert spaces.

In 2014, Khuangsatung and Kangtunyakarn (2014) generalized the variational inclusion problem (1.5) as follows: for \(i =1,2,\ldots ,N\), let \(A_i : H\rightarrow H\) be a single-valued mapping and let \(B : H\rightarrow 2^H\) be a multi-valued mapping. The combination of variational inclusion problems (CVIP, for short) is to find \(x\in H\) such that

$$\begin{aligned} 0\in \sum \limits _ {i=1}^N b_iA_ix+Bx, \end{aligned}$$ (1.13)

where \(b_i\in (0,1)\) for \(i=1,2,\ldots ,N\) and \(\sum \nolimits _ {i=1}^N b_i=1\). The solution set of CVIP (1.13) is denoted by \(\Big (\sum \nolimits _ {i=1}^N b_iA_i+B\Big )^{-1}(0)\). If \(A_i=A\) for all \(i=1,2,\ldots ,N\), then CVIP (1.13) reduces to VIP (1.5).

Motivated by recent research in this direction (Cholamjiak 1994; Dang et al. 2017; Dong et al. 2017, 2018; Khan et al. 2018; Khuangsatung and Kangtunyakarn 2014), we propose a modified forward–backward algorithm with an inertial technique for solving the combination of equilibrium problems, modified inclusion problems, and fixed point problems. We prove a weak convergence theorem for finding a common element of the solution set of a combination of inclusion problems, the fixed point set of an infinite family of nonexpansive mappings, and the solution set of a combination of equilibrium problems in the setting of Hilbert spaces. Furthermore, we use our main theorem to obtain applications to finding a common element of the set of fixed points of a finite family of k-strictly pseudo-contractive mappings and the solution set of equilibrium problems in Hilbert spaces. Finally, we give some numerical examples to support our results; they indicate that the proposed inertial projection method has a better convergence rate than the standard projection method.

2 Preliminaries

To prove our main result, we recall some basic definitions and lemmas, which will be needed in the sequel.

Lemma 2.1

Takahashi (2000) Let H be a real Hilbert space. Then, the following hold:

(i) \(\Vert x+y\Vert ^2\le \Vert x\Vert ^2 + 2\langle y, x+y\rangle \), for all \(x,y\in H\);

(ii) \(\Vert \alpha x+ \beta y+ \gamma z\Vert ^2 =\alpha \Vert x\Vert ^2 + \beta \Vert y\Vert ^2+ \gamma \Vert z\Vert ^2-\alpha \beta \Vert x-y\Vert ^2-\beta \gamma \Vert y-z\Vert ^2-\gamma \alpha \Vert z-x\Vert ^2\), for all \(\alpha , \beta , \gamma \in [0, 1]\) with \(\alpha + \beta + \gamma = 1\) and \(x,y,z\in H\).
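Identity (ii) can be checked numerically. The short Python sketch below, an illustration on random vectors in \({\mathbb {R}}^5\) with a hypothetical helper name, evaluates the difference between the two sides for weights summing to one.

```python
import numpy as np

def three_point_gap(x, y, z, a, b, c):
    """LHS minus RHS of Lemma 2.1(ii); it vanishes whenever a + b + c = 1."""
    lhs = np.linalg.norm(a * x + b * y + c * z) ** 2
    rhs = (a * np.dot(x, x) + b * np.dot(y, y) + c * np.dot(z, z)
           - a * b * np.linalg.norm(x - y) ** 2
           - b * c * np.linalg.norm(y - z) ** 2
           - c * a * np.linalg.norm(z - x) ** 2)
    return lhs - rhs

rng = np.random.default_rng(0)
x, y, z = rng.standard_normal((3, 5))
print(three_point_gap(x, y, z, 0.2, 0.3, 0.5))   # zero up to rounding
```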

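For every point \(x\in H\), there exists a unique nearest point of C to x, denoted by \(P_C(x)\), such that

$$\begin{aligned} \Vert x-P_C(x)\Vert \le \Vert x-y\Vert ,\quad \forall y\in C. \end{aligned}$$

The mapping \(P_C:H\rightarrow C\) is called the metric projection onto C.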

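It is well known that \(P_C\) is nonexpansive and firmly nonexpansive, that is,

$$\begin{aligned} \Vert P_Cx-P_Cy\Vert ^2\le \langle x-y, P_Cx-P_Cy\rangle ,\quad \forall x,y\in H. \end{aligned}$$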
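For a box \(C=\{x\in {\mathbb {R}}^d: l\le x\le u\}\), assumed here only for illustration, the metric projection is componentwise clipping. The Python sketch below uses `numpy.clip` and checks the nearest-point property and firm nonexpansiveness on random points.

```python
import numpy as np

def project_box(x, lo, hi):
    """Metric projection onto C = {x : lo <= x <= hi}: clip each coordinate."""
    return np.clip(x, lo, hi)

rng = np.random.default_rng(0)
lo, hi = -1.0, 1.0
for _ in range(1000):
    x, y = 3 * rng.standard_normal(4), 3 * rng.standard_normal(4)
    px, py = project_box(x, lo, hi), project_box(y, lo, hi)
    c = rng.uniform(lo, hi, 4)                  # an arbitrary point of C
    # nearest-point property: ||x - P_C x|| <= ||x - c|| for c in C
    assert np.linalg.norm(x - px) <= np.linalg.norm(x - c) + 1e-12
    # firm nonexpansiveness: ||P_C x - P_C y||^2 <= <x - y, P_C x - P_C y>
    assert np.dot(px - py, px - py) <= np.dot(x - y, px - py) + 1e-12
```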

We also recall the following basic result in the setting of a real Hilbert space.

Lemma 2.2

Lopez et al. (2012) Let H be a Hilbert space, let \(A :H\rightarrow H\) be an \(\alpha \)-inverse strongly monotone operator, and let \(B : H\rightarrow 2^H\) be a maximal monotone operator. If \(T^{A,B}_r:=J^{B}_r(I-rA)={(I+rB)}^{-1}(I-rA)\) with \(r>0\), then the following hold:

(i) for \(r>0\), \(\mathrm{Fix}(T^{A,B}_r)={(A+B)}^{-1}(0)\). Further, if \(r\in (0,2\alpha ]\), then \({(A+B)}^{-1}(0)\) is a closed convex subset of H;

(ii) for \(0<s\le r\) and \(x\in H\), \(\Vert x-T^{A,B}_sx\Vert \le 2\Vert x-T^{A,B}_rx\Vert \).

Lemma 2.3

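Lopez et al. (2012) Let H be a Hilbert space and let A be an \(\alpha \)-inverse strongly monotone operator. Then, for any \(r > 0\),

$$\begin{aligned} \Vert (I-rA)x-(I-rA)y\Vert ^2\le \Vert x-y\Vert ^2-r(2\alpha -r)\Vert Ax-Ay\Vert ^2 \end{aligned}$$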

for all \(x,y\in H\).
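Lemma 2.3 can be illustrated with the linear operator \(A=Q\) for a symmetric positive semidefinite matrix Q, which is \(\alpha \)-inverse strongly monotone with \(\alpha =1/\lambda _{\max }(Q)\). The Python sketch below, an assumed example with a hypothetical helper, returns the right-hand side minus the left-hand side of the inequality, which should be nonnegative.

```python
import numpy as np

def lemma_2_3_gap(Q, x, y, r):
    """RHS minus LHS of Lemma 2.3 for A = Q (symmetric PSD), which is
    alpha-inverse strongly monotone with alpha = 1/lambda_max(Q)."""
    alpha = 1.0 / np.linalg.eigvalsh(Q).max()
    d = x - y
    Ad = Q @ d                                   # A x - A y for linear A
    lhs = np.linalg.norm(d - r * Ad) ** 2        # ||(I - rA)x - (I - rA)y||^2
    rhs = np.linalg.norm(d) ** 2 - r * (2 * alpha - r) * np.linalg.norm(Ad) ** 2
    return rhs - lhs

rng = np.random.default_rng(0)
G = rng.standard_normal((4, 4))
Q = G.T @ G
alpha = 1.0 / np.linalg.eigvalsh(Q).max()
gaps = [lemma_2_3_gap(Q, rng.standard_normal(4), rng.standard_normal(4), r)
        for r in (0.1 * alpha, alpha, 1.9 * alpha) for _ in range(200)]
print(min(gaps))   # nonnegative up to rounding
```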

Lemma 2.4

Goebel and Kirk (1990) Let C be a nonempty closed convex subset of a uniformly convex space X and T a nonexpansive mapping with \(\mathrm{Fix}(T)\ne \emptyset \). If \(\{x_n\}\) is a sequence in C, such that \(x_n\rightharpoonup x\) and \((I - T)x_n\rightarrow y\), then \((I - T)x = y\). In particular, if \(y = 0\), then \(x\in \mathrm{Fix}(T)\).

Lemma 2.5

Alvarez and Attouch (2001) Let \(\{\psi _n\}, \{\delta _n\}\) and \(\{\alpha _n\}\) be sequences in \([0,+\infty )\) such that \(\psi _{n+1}\le \psi _{n}+\alpha _n(\psi _{n}-\psi _{n-1})+\delta _n\) for all \(n\ge 1\), \(\sum \nolimits _ {n=1}^\infty \delta _n<+\infty \), and there exists a real number \(\alpha \) with \(0\le \alpha _n\le \alpha <1\) for all \(n\ge 1\). Then, the following hold:

(i) \(\sum \nolimits _{n\ge 1}[\psi _{n}-\psi _{n-1}]_+<+\infty \), where \([t]_+=\max \{t,0\}\);

(ii) there exists \(\psi ^*\in [0,+\infty )\) such that \(\lim \nolimits _{n\rightarrow +\infty }\psi _n=\psi ^*\).

Lemma 2.6

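Opial (1967) Every Hilbert space H satisfies Opial's condition; that is, for any sequence \(\{x_n\}\) with \(x_n\rightharpoonup x\), the inequality

$$\begin{aligned} \liminf \limits _{n\rightarrow \infty }\Vert x_n-x\Vert <\liminf \limits _{n\rightarrow \infty }\Vert x_n-y\Vert \end{aligned}$$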

holds for every \(y\in H\) with \(y\ne x\).

Definition 2.1

Kangtunyakarn (2011) Let C be a nonempty convex subset of a real Banach space X. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself, and let \(\lambda _1, \lambda _2, \ldots ,\) be real numbers in [0, 1]. Define the mapping \(K_n : C\rightarrow C\) as follows:

Such a mapping \(K_n\) is called the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\).
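The display defining \(K_n\) is not reproduced above. Assuming the recursion commonly used for K-mappings, namely \(U_{n,0}=I\), \(U_{n,j}=\lambda _jT_jU_{n,j-1}+(1-\lambda _j)U_{n,j-1}\) for \(j=1,\ldots ,n\), and \(K_n=U_{n,n}\), a Python sketch of evaluating \(K_nx\) is given below. Both the recursion and the test family (plane rotations about the origin, which are nonexpansive with the common fixed point 0, together with \(\lambda _i=1/(i+1)^2\)) are assumptions made for illustration.

```python
import numpy as np

def rotation(phi):
    """Plane rotation by angle phi: an isometry, hence nonexpansive, fixing 0."""
    c, s = np.cos(phi), np.sin(phi)
    R = np.array([[c, -s], [s, c]])
    return lambda u: R @ u

def k_mapping(Ts, lambdas, x):
    """Evaluate K_n x, n = len(Ts), via the assumed recursion
    U_0 = I and U_j = lambda_j T_j U_{j-1} + (1 - lambda_j) U_{j-1}."""
    u = np.asarray(x, dtype=float)
    for T, lam in zip(Ts, lambdas):
        u = lam * T(u) + (1.0 - lam) * u
    return u

Ts = [rotation(float(i)) for i in range(1, 201)]
lambdas = [1.0 / (i + 1) ** 2 for i in range(1, 201)]
x = np.array([1.0, 0.0])
k100 = k_mapping(Ts[:100], lambdas[:100], x)
k200 = k_mapping(Ts, lambdas, x)
print(np.linalg.norm(k200 - k100))   # small: successive K_n x settle down
```

Under this recursion, \(K_{n+1}x-K_nx=\lambda _{n+1}(T_{n+1}-I)K_nx\), so summable \(\lambda _i\) make \(\{K_nx\}\) a Cauchy sequence, in line with Lemma 2.7.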

Lemma 2.7

Kangtunyakarn (2011) Let C be a nonempty closed convex subset of a strictly convex Banach space. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \), and let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in N\), let \(K_n\) be the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\). Then, for every \(x\in C\), \(\lim \nolimits _{n\rightarrow \infty }K_nx\) exists.

The mapping \(K:C\rightarrow C\) defined by \(Kx=\lim \nolimits _{n\rightarrow \infty }K_nx\) for every \(x\in C\) is called the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \).

Remark 2.1

Kangtunyakarn (2011) For every \(n\in N\), \(K_n\) is a nonexpansive mapping, and \(\lim \nolimits _{n\rightarrow \infty }\sup \nolimits _{x\in D}\Vert K_nx-Kx\Vert =0\) for every bounded subset D of C.

Lemma 2.8

Kangtunyakarn (2011) Let C be a nonempty closed convex subset of a strictly convex Banach space. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \), and let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in N\), let \(K_n\) be the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \). Then, \(\mathrm{Fix}(K)=\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\).

Assumption 2.1

Blum and Oettli (1994) We assume that \(F : C\times C \rightarrow {\mathbb {R}}\) satisfies the following conditions:

(A1) \(F(x, x) = 0,~\forall x\in C\);

(A2) F is monotone, i.e., \(F(x, y) + F(y, x)\le 0,~\forall x, y\in C\);

(A3) F is upper hemicontinuous, i.e., for each \(x, y, z\in C\),

$$\begin{aligned} \limsup \limits _{t\rightarrow 0}F(tz + (1- t)x, y)\le F(x, y); \end{aligned}$$

(A4) for each fixed \(x\in C\), the function \(y\mapsto F(x, y)\) is convex and lower semicontinuous;

(A5) for fixed \(r > 0\) and \(z\in C\), there exist a nonempty compact convex subset K of H and \(x\in C\cap K\) such that

$$\begin{aligned} F(y,x) + {1\over r}\langle y-x, x-z\rangle < 0, \quad \forall y\in C\backslash K. \end{aligned}$$

Lemma 2.9

Combettes and Hirstoaga (2005) Assume that the bifunction \(F: C\times C \rightarrow {\mathbb {R}}\) satisfies Assumption 2.1. For \(r > 0\), define a mapping \(T_{r}: H\rightarrow C\) by

$$\begin{aligned} T_{r}(x)=\Big \{z\in C: F(z,y)+{1\over r}\langle y-z, z-x\rangle \ge 0,~\forall y\in C\Big \} \end{aligned}$$

for all \(x\in H\). Then, the following hold:

(i) \(T_{r}(x)\ne \emptyset \) for each \(x\in H\), and \(T_{r}\) is single-valued;

(ii) \(T_{r}\) is firmly nonexpansive, i.e., for any \(x,y\in H\),

$$\begin{aligned} \Vert T_{r}x-T_{r}y\Vert ^2\le \langle T_{r}x-T_{r}y, x-y\rangle ; \end{aligned}$$

(iii) \(\mathrm{Fix}(T_{r}) = \mathrm{EP}(F)\);

(iv) \(\mathrm{EP}(F)\) is closed and convex.
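For intuition, take \(C=H={\mathbb {R}}^d\) and the bilinear bifunction \(F(x,y)=\langle Gx,y-x\rangle \), where the matrix G is assumed to have a positive semidefinite symmetric part (this example is ours, not the paper's). Since y ranges over all of \({\mathbb {R}}^d\), the inequality defining \(T_r(x)\) forces \(Gz+{1\over r}(z-x)=0\), so \(T_rx=(I+rG)^{-1}x\) and \(\mathrm{EP}(F)=\ker G\). The Python sketch below checks (ii) and (iii) numerically for one such G.

```python
import numpy as np

def resolvent_bilinear(G, r, x):
    """T_r x for F(x, y) = <G x, y - x> on C = H = R^d: solve (I + r G) z = x."""
    return np.linalg.solve(np.eye(len(x)) + r * G, x)

# Symmetric part diag(1, 1, 0) is positive semidefinite; ker G = span{e_3}.
G = np.array([[1.0, 1.0, 0.0],
              [-1.0, 1.0, 0.0],
              [0.0, 0.0, 0.0]])
r = 0.7
rng = np.random.default_rng(0)
for _ in range(1000):
    x, y = rng.standard_normal(3), rng.standard_normal(3)
    tx, ty = resolvent_bilinear(G, r, x), resolvent_bilinear(G, r, y)
    # (ii) firm nonexpansiveness
    assert np.dot(tx - ty, tx - ty) <= np.dot(tx - ty, x - y) + 1e-12
e3 = np.array([0.0, 0.0, 1.0])
print(resolvent_bilinear(G, r, e3))   # e3 is fixed: it lies in EP(F) = ker G
```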

Lemma 2.10

Suwannaut and Kangtunyakarn (2014) Let C be a nonempty, closed, and convex subset of a real Hilbert space H. For each \(i=1,2,\ldots ,N\), let \(F_i:C\times C\rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.1 with \(\bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)\ne \emptyset \). Then

$$\begin{aligned} \mathrm{EP}\Big (\sum \limits _ {i=1}^N a_iF_i\Big )=\bigcap \limits _ {i=1}^N \mathrm{EP}(F_i), \end{aligned}$$

where \(a_i\in (0,1)\) for \(i=1,2,\ldots ,N\) and \(\sum \nolimits _ {i=1}^N a_i=1\).

Remark 2.2

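Suwannaut and Kangtunyakarn (2014) From Lemma 2.10, it is easy to see that \(\sum \nolimits _ {i=1}^N a_iF_i\) satisfies Assumption 2.1. Using Lemma 2.9, we obtain

$$\begin{aligned} \mathrm{Fix}\big (T^{\sum }_{r}\big )=\mathrm{EP}\Big (\sum \limits _ {i=1}^N a_iF_i\Big )=\bigcap \limits _ {i=1}^N \mathrm{EP}(F_i), \end{aligned}$$

where

$$\begin{aligned} T^{\sum }_{r}(x)=\Big \{z\in C: \sum \limits _ {i=1}^N a_iF_i(z,y)+{1\over r}\langle y-z, z-x\rangle \ge 0,~\forall y\in C\Big \},\quad x\in H, \end{aligned}$$

and \(a_i\in (0,1)\) for each \(i=1,2,\ldots ,N\) with \(\sum \nolimits _ {i=1}^N a_i=1\).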
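Continuing the bilinear illustration (an assumption for intuition only, not part of the paper), if \(F_i(x,y)=\langle G_ix,y-x\rangle \) with symmetric positive semidefinite matrices \(G_i\) and \(C=H={\mathbb {R}}^d\), then \(\sum a_iF_i(x,y)=\langle (\sum a_iG_i)x,y-x\rangle \), so \(T^{\sum }_rx=(I+r\sum a_iG_i)^{-1}x\). The Python sketch below checks, for two diagonal matrices, that the fixed points of \(T^{\sum }_r\) among the coordinate vectors are exactly the common solutions in \(\ker G_1\cap \ker G_2\).

```python
import numpy as np

def combined_resolvent(Gs, a, r, x):
    """T_r^Sigma x for F_i(x, y) = <G_i x, y - x>: solve (I + r sum_i a_i G_i) z = x."""
    G = sum(ai * Gi for ai, Gi in zip(a, Gs))
    return np.linalg.solve(np.eye(len(x)) + r * G, x)

G1 = np.diag([1.0, 0.0, 0.0])   # EP(F_1) = ker G1 = span{e2, e3}
G2 = np.diag([0.0, 2.0, 0.0])   # EP(F_2) = ker G2 = span{e1, e3}
a, r = [0.4, 0.6], 1.5
flags = [np.allclose(combined_resolvent([G1, G2], a, r, v), v) for v in np.eye(3)]
print(flags)   # only e3, the common solution, is a fixed point
```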

Theorem 2.1

Khuangsatung and Kangtunyakarn (2014) Let H be a real Hilbert space and let \(B:H\rightarrow 2^H\) be a maximal monotone mapping. For every \(i=1,2,\ldots ,N\), let \(A_i:H\rightarrow H\) be an \(\alpha _i\)-inverse strongly monotone mapping with \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}\) and \(\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)\ne \emptyset \). Then

$$\begin{aligned} \Big (\sum \limits _ {i=1}^N b_iA_i+B\Big )^{-1}(0)=\bigcap \limits _ {i=1}^N(A_i+B)^{-1}(0), \end{aligned}$$

where \(\sum \nolimits _ {i=1}^N b_i=1\) and \(b_i\in (0,1)\) for every \(i=1,2,\ldots ,N\). Moreover, \(J^{B}_s(I-s\sum \nolimits _ {i=1}^N b_iA_i)\) is a nonexpansive mapping for all \(0<s<2\eta \).

Remark 2.3

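From Lemma 2.2 and Theorem 2.1, we obtain

$$\begin{aligned} \mathrm{Fix}\big (T^{{\sum A},B}_r\big )=\Big (\sum \limits _ {i=1}^N b_iA_i+B\Big )^{-1}(0)=\bigcap \limits _ {i=1}^N(A_i+B)^{-1}(0), \end{aligned}$$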

where \(T^{{\sum A},B}_r:=J^{B}_r(I-r\sum \nolimits _ {i=1}^N b_iA_i)={(I+rB)}^{-1}(I-r\sum \nolimits _ {i=1}^N b_iA_i),~r>0\).
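The operator \(T^{{\sum A},B}_s\) can be sketched for an assumed toy instance: \(A_ix=Q_i(x-p)\) with symmetric positive semidefinite \(Q_i\), so that \(A_i\) is \(1/\lambda _{\max }(Q_i)\)-inverse strongly monotone and \(A_ip=0\), and B the normal cone of a box containing p, whose resolvent is the projection onto the box. Then p is a common zero, and in this instance, where \(\sum b_iQ_i\) is positive definite, iterating \(T^{{\sum A},B}_s\) with \(0<s<2\eta \) converges to p. Names and data below are hypothetical.

```python
import numpy as np

def T_sumA_B(x, s, Qs, bs, p, lo, hi):
    """x -> J_s^B (I - s sum_i b_i A_i) x with A_i x = Q_i (x - p)
    and B = normal cone of the box [lo, hi]^d, so J_s^B is clipping."""
    forward = x - s * sum(b * (Q @ (x - p)) for b, Q in zip(bs, Qs))
    return np.clip(forward, lo, hi)

Qs = [np.diag([1.0, 4.0]), np.array([[2.0, 1.0], [1.0, 2.0]])]
eta = min(1.0 / np.linalg.eigvalsh(Q).max() for Q in Qs)   # = 1/4
bs, s = [0.5, 0.5], 0.4                                   # 0 < s < 2*eta = 0.5
p = np.array([0.2, -0.3])
x = np.array([1.0, 1.0])
for _ in range(200):
    x = T_sumA_B(x, s, Qs, bs, p, -1.0, 1.0)
print(x)   # approaches p, a common zero of the A_i + B
```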

Lemma 2.11

Xu (2003) Assume that \(\{s_n\}\) is a sequence of nonnegative real numbers such that

$$\begin{aligned} s_{n+1}\le (1-\alpha _n)s_n+\delta _n,\quad \forall n\ge 1, \end{aligned}$$

where \(\{\alpha _n\}\) is a sequence in (0, 1) and \(\{\delta _n\}\) is a sequence such that

(i) \(\sum \nolimits _ {n=1}^\infty \alpha _n=\infty \);

(ii) \(\lim \sup \nolimits _ {n\rightarrow \infty }{\delta _n\over \alpha _n}\le 0\) or \(\sum \nolimits _ {n=1}^\infty |\delta _n|<\infty \).

Then, \(\lim \nolimits _ {n\rightarrow \infty }s_n=0\).
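A numerical illustration of Lemma 2.11 in the equality case, with the assumed choices \(\alpha _n=1/(n+1)\) and \(\delta _n=\alpha _n^2\) (so \(\sum \alpha _n=\infty \) and \(\delta _n/\alpha _n\rightarrow 0\)), is sketched below in Python. For this choice one can check by induction that \(ns_n=s_1+\sum _{k=2}^n 1/k\), so with \(s_1=1\) the sequence is \(s_n=H_n/n\), where \(H_n\) is the nth harmonic number; it tends to 0, but slowly.

```python
def xu_sequence(n_max, s1=1.0):
    """Return [s_1, ..., s_{n_max}] for s_{n+1} = (1 - a_n) s_n + d_n,
    with a_n = 1/(n+1) and d_n = a_n**2 (equality case of Lemma 2.11)."""
    s = [s1]
    for n in range(1, n_max):
        a = 1.0 / (n + 1)
        s.append((1.0 - a) * s[-1] + a * a)
    return s

s = xu_sequence(1000)
harmonic = sum(1.0 / k for k in range(1, 1001))
print(s[-1], harmonic / 1000)   # the two values agree: s_n = H_n / n
```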

3 Main result

In this section, we prove a weak convergence theorem for finding a common element of the fixed point set of an infinite family of nonexpansive mappings, the solution set of a combination of equilibrium problems, and the solution set of a combination of inclusion problems.

Theorem 3.1

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. For each \(i=1,2,\ldots ,N\), let \(F_i:C\times C\rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.1. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \), and let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in N\), let \(K_n\) be the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \). For every \(i=1,2,\ldots ,N\), let \(A_i:H\rightarrow H\) be an \(\alpha _i\)-inverse strongly monotone mapping, let \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}\), and let \(B:H\rightarrow 2^H\) be a maximal monotone mapping. Assume that \(\Omega :=\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)\bigcap \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\bigcap \bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)\ne \emptyset \). For given initial points \(x_0, x_1\in H\), let the sequences \(\{x_n\}, \{y_n\}\) and \(\{u_n\}\) be generated by

where \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\subset [0, 1]\) with \(\alpha _n + \beta _n + \gamma _n=1\) for all \(n\ge 1\), \(\{\theta _n\}\subset [0,\theta ]\) with \(\theta \in [0,1]\), \(\liminf \nolimits _ {n\rightarrow \infty }r_n>0\), and \(0<s<2\eta \), where \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}\). Suppose that the following conditions hold:

(i) \(\sum \nolimits _ {n=1}^\infty \theta _n \Vert x_n-x_{n-1}\Vert <\infty \);

(ii) \(\sum \nolimits _ {n=1}^\infty \alpha _n<\infty \) and \(\lim \nolimits _ {n\rightarrow \infty } \alpha _n=0\);

(iii) \(\sum \nolimits _ {n=1}^\infty |r_{n+1}-r_n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\alpha _{n+1}-\alpha _n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\beta _{n+1}-\beta _n|<\infty \), and \(\sum \nolimits _ {n=1}^\infty |\gamma _{n+1}-\gamma _n|<\infty \).

Then, the sequence \(\{x_n\}\) converges weakly to \(q\in \Omega \).
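Conditions (i)–(iii) can be met in several ways. The Python sketch below shows one hypothetical choice, not taken from the paper: \(\alpha _n=1/(n+1)^2\), \(\beta _n=\gamma _n=(1-\alpha _n)/2\), \(r_n=1+1/n\), and \(\theta _n=\min \{\theta ,1/(n^2\Vert x_n-x_{n-1}\Vert )\}\) when \(x_n\ne x_{n-1}\) (with \(\theta _n=\theta \) otherwise). The last rule gives \(\theta _n\Vert x_n-x_{n-1}\Vert \le 1/n^2\) and hence condition (i). The sequences \(\{\alpha _n\},\{\beta _n\},\{\gamma _n\},\{r_n\}\) are monotone and bounded, so their successive differences are summable and (iii) holds; moreover, \(\liminf r_n=1>0\).

```python
def theta_rule(n, theta, diff_norm):
    """theta_n = min(theta, 1/(n^2 ||x_n - x_{n-1}||)), so that
    theta_n * ||x_n - x_{n-1}|| <= 1/n^2 (a summable bound, condition (i))."""
    if diff_norm == 0.0:
        return theta
    return min(theta, 1.0 / (n * n * diff_norm))

def control_sequences(n):
    """Hypothetical choice: alpha_n = 1/(n+1)^2 is summable (condition (ii));
    beta_n = gamma_n = (1 - alpha_n)/2 and r_n = 1 + 1/n are monotone (iii)."""
    alpha = 1.0 / (n + 1) ** 2
    beta = gamma = (1.0 - alpha) / 2.0
    r = 1.0 + 1.0 / n
    return alpha, beta, gamma, r

total_r_variation = sum(abs(control_sequences(n + 1)[3] - control_sequences(n)[3])
                        for n in range(1, 10_000))
print(total_r_variation)   # telescopes to 1 - 1/10000
```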

Proof

We divide the proof into the following steps.

Step 1. First, we show that \(\{x_n\}\) is bounded.

Let \(p\in \Omega \). From Lemma 2.9, we have \(u_n=T^{\sum }_{r_n}y_n\). We estimate

From (3.2) and the nonexpansiveness of \(J_{s}^B(I-s\sum \nolimits _ {i=1}^N b_iA_i)\), we obtain

From Lemma 2.5 and condition (i), we obtain that \(\lim \nolimits _{n\rightarrow \infty }\Vert x_{n}-p\Vert \) exists. Hence, \(\{x_n\}\) is bounded, and so are \(\{y_n\}\) and \(\{u_n\}\).

Step 2. We will show that \(\lim \nolimits _{n\rightarrow \infty }\Vert x_{n+1}-x_n\Vert =0.\)

Let us take \(J^{{\sum A},B}_s=J^{B}_s(I-s\sum \nolimits _ {i=1}^N b_iA_i)\). Then, we have

Since \(u_n=T^{\sum }_{r_n}y_n\), the definition of \(T^{\sum }_{r_n}\) gives

and

From (3.5) and (3.6), it follows that:

and

From (3.7), (3.8), and the monotonicity of \(\sum \nolimits _ {i=1}^N a_iF_i\), we have

which yields

It follows that

It follows that

which implies

which yields

which implies that

Now, from (3.4) and (3.10), we have

Following the lines of Lemma 2.11 in Kangtunyakarn (2011), we have

Since \(\lambda _n\rightarrow 0\) as \(n\rightarrow \infty \), we have

From (3.11), (3.12), Lemma 2.11, and conditions (i), (iii), we have

Step 3. We will show that \(q\in \bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)\).

From Lemma 2.3, we have

Now, from (3.14), we obtain

From condition (i) and (3.13), it follows that

Arguing along the same lines as above and using (3.15), we have

Since \(p\in \Omega \) and \(T^{\sum }_{r}\) is firmly nonexpansive, we have

Hence, it follows that

Now, from (3.1), we have

From (3.17) and (3.2), the above inequality can be written as

From (3.13), (3.18), and condition (i), it follows that

From the definition of \(y_n\) and condition (i), we have

From (3.19), we obtain

as \(n\rightarrow \infty \). From (3.13) and (3.21), it follows that

as \(n\rightarrow \infty \). Since \(\{x_n\}\) is bounded and H is reflexive, \(w_w(x_n) =\{x\in H : x_{n_i}\rightharpoonup x,~ \{x_{n_i}\}\subset \{x_n\}\}\) is nonempty. Let \(q\in w_w(x_n)\) be an arbitrary element. Then, there exists a subsequence \(\{x_{n_i}\}\subset \{x_n\}\) converging weakly to q. Let \(p\in w_w(x_n)\) and \(\{x_{n_m}\}\subset \{x_n\}\) be such that \(x_{n_m}\rightharpoonup p\). From (3.21), we also have \(u_{n_i}\rightharpoonup q\) and \(u_{n_m}\rightharpoonup p\). Since \(J^{{\sum A},B}_s\) is nonexpansive, by Lemma 2.4, we have \(p,q\in \bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)\). Applying Lemma 2.6, we obtain \(p = q\).

Step 4. We will show that \(q\in \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix} (T_i)=\mathrm{Fix}(K)\).

Now, from Lemma 2.1 and (3.18), we have

From (3.13), and conditions (i), (ii), we obtain

From (3.11), we have

In addition, we can estimate

From (3.13), (3.24), (3.25), and condition (ii), we obtain

Now, suppose, to the contrary, that \(q\not \in \mathrm{Fix}(K)\), i.e., \(Kq\ne q\). Then, by Lemma 2.6, we see that

On the other hand, we have

Using Remark 2.1 and (3.26), we obtain that \(\lim \nolimits _{n\rightarrow \infty }\Vert Ku_n-u_n\Vert =0\). From (3.27), we obtain

which is a contradiction, so we have \(q\in \mathrm{Fix}(K) =\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix} (T_i)\).

Step 5. We will show that \(q\in \bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)\).

Since \(u_n=T^{\sum }_{r_n}y_n\), we have

Since \(\sum \nolimits _ {i=1}^N a_iF_i\) satisfies Assumption 2.1, the monotonicity of \(\sum \nolimits _ {i=1}^N a_iF_i\) gives

Since \(\liminf \nolimits _{n\rightarrow \infty }r_n>0\), it follows from (3.19) that

It follows from (3.29), (3.30), and (A4) that

For \(t\in (0,1]\) and \(y\in C\), let \(y_t:=ty+(1-t)q\). Since \(\{u_n\}\subset C\), \(u_{n_i}\rightharpoonup q\), and C is closed and convex, we have \(q\in C\); hence, \(y_t\in C\) and \(\sum \nolimits _ {i=1}^N a_iF_i(y_t,q)\le 0\). Therefore, we have

Dividing by t, we get

Letting \(t\downarrow 0\) and from (A3), we get

Therefore, \(q\in \mathrm{EP}(\sum \nolimits _ {i=1}^N a_iF_i)\). Hence, by Lemma 2.10, we obtain \(q\in \bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i).\) Therefore, \(q\in \Omega \). This completes the proof. \(\square \)

As direct consequences of Theorem 3.1, we have the following corollaries.

Corollary 3.1

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. Let \(F:C\times C\rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.1. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \), and let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in N\), let \(K_n\) be the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \). Let \(A:H\rightarrow H\) be an \(\alpha \)-inverse strongly monotone mapping and \(B:H\rightarrow 2^H\) a maximal monotone mapping. Assume that \(\Omega :=(A+B)^{-1}(0)\bigcap \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\bigcap \mathrm{EP}(F)\ne \emptyset \). For given initial points \(x_0, x_1\in H\), let the sequences \(\{x_n\}, \{y_n\}\) and \(\{u_n\}\) be generated by

where \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\subset [0, 1]\) with \(\alpha _n + \beta _n + \gamma _n=1\) for all \(n\ge 1\), \(\{\theta _n\}\subset [0,\theta ]\) with \(\theta \in [0,1]\), \(\liminf \nolimits _ {n\rightarrow \infty }r_n>0\), and \(0<s<2\alpha \). Suppose that the following conditions hold:

(i) \(\sum \nolimits _ {n=1}^\infty \theta _n \Vert x_n-x_{n-1}\Vert <\infty \);

(ii) \(\sum \nolimits _ {n=1}^\infty \alpha _n<\infty \) and \(\lim \nolimits _ {n\rightarrow \infty } \alpha _n=0\);

(iii) \(\sum \nolimits _ {n=1}^\infty |r_{n+1}-r_n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\alpha _{n+1}-\alpha _n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\beta _{n+1}-\beta _n|<\infty \), and \(\sum \nolimits _ {n=1}^\infty |\gamma _{n+1}-\gamma _n|<\infty \).

Then, the sequence \(\{x_n\}\) converges weakly to \(q\in \Omega \).

Proof

Taking \(F_i=F\) and \(A_i=A\) for all \(i=1,2,\ldots ,N\) in Theorem 3.1, we obtain the conclusion of Corollary 3.1. \(\square \)

Corollary 3.2

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings of C into itself with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \), and let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in N\), let \(K_n\) be the K-mapping generated by \(T_1, T_2, \ldots , T_n\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \). Let \(A:H\rightarrow H\) be an \(\alpha \)-inverse strongly monotone mapping and \(B:H\rightarrow 2^H\) a maximal monotone mapping. Assume that \(\Omega :=(A+B)^{-1}(0)\bigcap \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\ne \emptyset \). For given initial points \(x_0, x_1\in H\), let the sequences \(\{x_n\}\) and \(\{y_n\}\) be generated by

where \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\subset [0, 1]\) with \(\alpha _n + \beta _n + \gamma _n=1\) for all \(n\ge 1\), \(\{\theta _n\}\subset [0,\theta ]\) with \(\theta \in [0,1]\), and \(0<s<2\alpha \). Suppose that the following conditions hold:

(i) \(\sum \nolimits _ {n=1}^\infty \theta _n \Vert x_n-x_{n-1}\Vert <\infty \);

(ii) \(\sum \nolimits _ {n=1}^\infty \alpha _n<\infty \) and \(\lim \nolimits _ {n\rightarrow \infty } \alpha _n=0\);

(iii) \(\sum \nolimits _ {n=1}^\infty |\alpha _{n+1}-\alpha _n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\beta _{n+1}-\beta _n|<\infty \), and \(\sum \nolimits _ {n=1}^\infty |\gamma _{n+1}-\gamma _n|<\infty \).

Then, the sequence \(\{x_n\}\) converges weakly to \(q\in \Omega \).

Proof

Taking \(F_i\equiv 0\) and \(A_i=A\) for all \(i=1,2,\ldots ,N\) in Theorem 3.1, we obtain the conclusion of Corollary 3.2. \(\square \)

4 Applications

In this section, we discuss applications of the inertial forward–backward method, establishing weak convergence results for finding a common element of the fixed point set of an infinite family of nonexpansive mappings, the solution set of a combination of equilibrium problems, and the fixed point sets of k-strict pseudo-contraction mappings in the setting of Hilbert spaces. To prove these results, we need the following results.

Definition 4.1

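A mapping \(T : C\rightarrow C\) is said to be a k-strict pseudo-contraction if there exists \(k\in [0,1)\) such that

$$\begin{aligned} \Vert Tx-Ty\Vert ^2\le \Vert x-y\Vert ^2+k\Vert (I-T)x-(I-T)y\Vert ^2,\quad \forall x,y\in C. \end{aligned}$$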

Lemma 4.1

Zhou (2008) Let C be a nonempty closed convex subset of a real Hilbert space H and \(T : C\rightarrow C\) a k-strict pseudo-contraction. Define \(S : C\rightarrow C\) by \(Sx = ax + (1 - a)Tx\) for each \(x\in C\). Then, for \(a\in [k, 1)\), S is nonexpansive and \(\mathrm{Fix}(S) = \mathrm{Fix}(T)\).
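A one-dimensional example, chosen here for illustration, shows how Lemma 4.1 works. On \(C={\mathbb {R}}\), the map \(Tx=-2x\) is not nonexpansive, but it is a k-strict pseudo-contraction with \(k=1/3\), since \(\vert Tx-Ty\vert ^2=4\vert x-y\vert ^2=\vert x-y\vert ^2+{1\over 3}\cdot 9\vert x-y\vert ^2\). Then \(Sx=ax+(1-a)Tx=(3a-2)x\) is nonexpansive exactly when \(a\in [1/3,1]\), in agreement with the lemma for \(a\in [k,1)\), and \(\mathrm{Fix}(S)=\{0\}=\mathrm{Fix}(T)\). The Python sketch below checks these claims numerically.

```python
import numpy as np

def T(x):
    """T x = -2x: a (1/3)-strict pseudo-contraction on R that is not nonexpansive."""
    return -2.0 * x

def S(x, a):
    """Averaged map S x = a x + (1 - a) T x from Lemma 4.1, which equals (3a - 2) x."""
    return a * x + (1.0 - a) * T(x)

def lipschitz_ratio(f, rng, trials=1000):
    """Largest observed |f(x) - f(y)| / |x - y| over random pairs."""
    x, y = rng.standard_normal(trials), rng.standard_normal(trials)
    return np.max(np.abs(f(x) - f(y)) / np.abs(x - y))

rng = np.random.default_rng(0)
k = 1.0 / 3.0
for a in (k, 0.5, 0.9, 0.2):
    print(a, lipschitz_ratio(lambda x: S(x, a), rng))
# the ratios are |3a - 2|: at most 1 for a in [1/3, 1), but 1.4 for a = 0.2 < k
```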

Theorem 4.1

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. For each \(i=1,2,\ldots ,N\), let \(F_i:C\times C\rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.1. Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of \(k_i\)-strictly pseudo-contractive mappings of C into itself. Define a mapping \(T_{k_i}:C\rightarrow C\) by \(T_{k_i}x=k_ix+(1-k_i)T_ix\) for all \(x\in C\) and \(i\in {\mathbb {N}}\), and suppose that \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_{k_i})\ne \emptyset \). Let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in {\mathbb {N}}\), let \(K_n\) be the K-mapping generated by \(T_{k_1}, T_{k_2}, \ldots , T_{k_n}\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_{k_1}, T_{k_2}, \ldots \) and \(\lambda _1, \lambda _2, \ldots \). For every \(i=1,2,\ldots ,N\), let \(A_i:H\rightarrow H\) be an \(\alpha _i\)-inverse strongly monotone mapping, let \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}\), and let \(B:H\rightarrow 2^H\) be a maximal monotone mapping. Assume that \(\Omega :=\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)\bigcap \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\bigcap \bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)\ne \emptyset \). For given initial points \(x_0, x_1\in H\), let the sequences \(\{x_n\}, \{y_n\}\) and \(\{u_n\}\) be generated by

where \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\subset [0, 1]\) with \(\alpha _n + \beta _n + \gamma _n=1\) for all \(n\ge 1\), \(\{\theta _n\}\subset [0,\theta ]\) with \(\theta \in [0,1]\), \(\liminf \nolimits _ {n\rightarrow \infty }r_n>0\), and \(0<s<2\eta \), where \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}\). Suppose that the following conditions hold:

(i) \(\sum \nolimits _ {n=1}^\infty \theta _n \Vert x_n-x_{n-1}\Vert <\infty \);

(ii) \(\sum \nolimits _ {n=1}^\infty \alpha _n<\infty \) and \(\lim \nolimits _ {n\rightarrow \infty } \alpha _n=0\);

(iii) \(\sum \nolimits _ {n=1}^\infty |r_{n+1}-r_n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\alpha _{n+1}-\alpha _n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\beta _{n+1}-\beta _n|<\infty \), and \(\sum \nolimits _ {n=1}^\infty |\gamma _{n+1}-\gamma _n|<\infty \).

Then, the sequence \(\{x_n\}\) converges weakly to \(q\in \Omega \).

Proof

For every \(i\in {\mathbb {N}}\), by Lemma 4.1, \(T_{k_i}\) is a nonexpansive mapping and \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_{k_i})=\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\). Hence, the conclusion of Theorem 4.1 follows from Theorem 3.1 and Lemma 2.8. \(\square \)

Now, we recall a property of finite families of strictly pseudo-contractive mappings in Hilbert spaces.

Proposition 4.1

(Fan et al. 2009) Let C be a nonempty closed convex subset of a real Hilbert space H.

- (i) For any integer \(N\ge 1\) and each \(1\le i\le N\), let \(S_i:C\rightarrow H\) be a \(k_i\)-strict pseudo-contraction for some \(0\le k_i<1\). Let \(\{b_i\}^ N_{i=1}\) be a positive sequence such that \(\sum \nolimits _ {i=1}^ N b_i=1\). Then \(\sum \nolimits _ {i=1}^ N b_iS_i\) is a k-strict pseudo-contraction with \(k=\max _{i=1,\ldots , N}\{k_i\}\).

- (ii) Let \(\{S_i\}^ N_{i=1}\) and \(\{b_i\}^ N_{i=1}\) be as in (i), and suppose that \(\{S_i\}^ N_{i=1}\) has a common fixed point. Then

$$\begin{aligned} \mathrm{Fix}\left( \sum \limits _ {i=1}^N b_iS_i\right) =\bigcap \limits _ {i=1}^N \mathrm{Fix}(S_i). \end{aligned}$$
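Proposition 4.1, and the averaging \(T_k=kI+(1-k)T\) used in the proof of Theorem 4.1, can be sanity-checked on linear maps \(S(x)=cx\) of \({\mathbb {R}}\). The Python sketch below is illustrative only. The maps, the weights, and the helper name `min_strict_constant` are assumptions and do not come from the paper. For such a map with \(c\le 1\), the inequality \(\Vert Sx-Sy\Vert ^2\le \Vert x-y\Vert ^2+k\Vert (I-S)x-(I-S)y\Vert ^2\) reduces to \(c^2\le 1+k(1-c)^2\). The smallest admissible constant is therefore \(\max \{0,(c+1)/(c-1)\}\) for \(c<1\).

```python
from fractions import Fraction


def min_strict_constant(c):
    """Smallest k >= 0 with c^2 <= 1 + k(1 - c)^2, i.e. the best strict
    pseudo-contraction constant of the linear map S(x) = c*x on R (requires c <= 1)."""
    c = Fraction(c)
    if c > 1:
        raise ValueError("x -> c*x is not strictly pseudo-contractive for c > 1")
    if c == 1:
        return Fraction(0)  # identity map: nonexpansive
    return max(Fraction(0), (c + 1) / (c - 1))


# Illustrative family (assumption): S_1(x) = -2x and S_2(x) = -x, common fixed point 0.
slopes = [Fraction(-2), Fraction(-1)]
weights = [Fraction(1, 2), Fraction(1, 2)]  # positive, summing to 1

ks = [min_strict_constant(c) for c in slopes]
combined_slope = sum(b * c for b, c in zip(weights, slopes))
k_combined = min_strict_constant(combined_slope)

# Proposition 4.1(i): the convex combination is max(k_i)-strictly pseudo-contractive.
assert k_combined <= max(ks)

# Averaging as in Theorem 4.1: T_k = k*I + (1 - k)*S has slope of modulus <= 1, i.e. is nonexpansive.
for c, k in zip(slopes, ks):
    assert abs(k + (1 - k) * c) <= 1

# Proposition 4.1(ii): a linear map with slope != 1 fixes only 0,
# so Fix(sum b_i S_i) = {0} = Fix(S_1) ∩ Fix(S_2).
assert combined_slope != 1
print(ks, combined_slope, k_combined)
```

Here the combined map \(x\mapsto -\tfrac{3}{2}x\) has constant \(1/5\), which is below \(\max \{1/3,0\}\), as Proposition 4.1(i) predicts.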

Theorem 4.2

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. For each \(i=1,2,\ldots ,N\), let \(F_i:C\times C\rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.1. Let \(\{S_i\}^ N_{i=1}\) be a finite family of \(k_i\)-strictly pseudo-contractive mappings of C into itself, and let \(k=\max _{i=1,\ldots ,N}\{k_i\}\). Let \(\{T_i\}^\infty _{i=1}\) be an infinite family of nonexpansive mappings with \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_{i})\ne \emptyset \). Let \(\lambda _1, \lambda _2, \ldots \) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \) and \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). For every \(n\in {\mathbb {N}}\), let \(K_n\) be the K-mapping generated by \(T_{1}, T_{2}, \ldots , T_{n}\) and \(\lambda _1, \lambda _2, \ldots ,\lambda _n\), and let K be the K-mapping generated by \(T_{1}, T_{2}, \ldots \) and \(\lambda _1, \lambda _2, \ldots \) for every \(x\in C\). Assume that \(\Omega :=\bigcap \nolimits _ {i=1}^N \mathrm{Fix}(S_i)\bigcap \bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)\bigcap \bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)\ne \emptyset \). For given initial points \(x_0, x_1\in H\), let the sequences \(\{x_n\}, \{y_n\}\) and \(\{u_n\}\) be generated by

where the sequences \(\{\alpha _n\}, \{\beta _n\}, \{\gamma _n\}\subset [0, 1]\) satisfy \(\alpha _n + \beta _n + \gamma _n=1\) for all \(n\ge 1\). In addition, \(\{\theta _n\}\subset [0,\theta ]\) with \(\theta \in [0,1]\), \(\liminf \nolimits _ {n\rightarrow \infty }r_n>0\), and \(0<s<1-k\). Suppose that the following conditions hold:

- (i) \(\sum \nolimits _ {n=1}^\infty \theta _n \Vert x_n-x_{n-1}\Vert <\infty \);

- (ii) \(\sum \nolimits _ {n=1}^\infty \alpha _n<\infty \) and \(\lim \nolimits _ {n\rightarrow \infty } \alpha _n=0\);

- (iii) \(\sum \nolimits _ {n=1}^\infty |r_{n+1}-r_n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\alpha _{n+1}-\alpha _n|<\infty \), \(\sum \nolimits _ {n=1}^\infty |\beta _{n+1}-\beta _n|<\infty \), and \(\sum \nolimits _ {n=1}^\infty |\gamma _{n+1}-\gamma _n|<\infty \).

Then the sequence \(\{x_n\}\) converges weakly to a point \(q\in \Omega \).

Proof

Let \(A_i = I-S_i\) and \(B = 0\) in Theorem 3.1. Each \(S_i\) is \(k_i\)-strictly pseudo-contractive with \(k_i\le k\), so each \(A_i\) is \({{1-k}\over 2}\)-inverse strongly monotone. Taking \(\alpha _i={{1-k}\over 2}\) gives \(2\eta =1-k\), so the condition \(0<s<1-k\) is exactly \(0<s<2\eta \). We now show that \(\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)=\bigcap \nolimits _ {i=1}^N \mathrm{Fix}(S_i)\). Since \(A_i = I-S_i\) and \(B = 0\), Theorem 2.1 and Proposition 4.1 give

It follows that

We know that \(J_{s}^B(I-s\sum \nolimits _ {i=1}^N b_iA_i)u_n=(I+sB)^{-1}(I-s\sum \nolimits _ {i=1}^N b_iA_i)u_n\).

Since \(B=0\), we have \(J_{s}^B\left( I-s\sum \nolimits _ {i=1}^N b_iA_i\right) u_n=u_n-s\sum \nolimits _ {i=1}^N b_iA_iu_n=(1-s)u_n+s\sum \nolimits _ {i=1}^N b_iS_iu_n\). The last equality uses \(A_i=I-S_i\) and \(\sum \nolimits _ {i=1}^N b_i=1\).

Since \(s\in (0,1-k)\subset (0, 1)\), we have \((1-s)u_n+ s\sum \nolimits _ {i=1}^N b_iS_iu_n\in H\). Therefore, the desired result follows from Theorem 3.1. \(\square \)
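The proof above uses two facts. First, \(A_i=I-S_i\) is \({{1-k}\over 2}\)-inverse strongly monotone. Second, with \(B=0\) the forward–backward step collapses to \((1-s)u+s\sum b_iS_iu\). The Python sketch below checks both on a toy instance. It assumes linear maps \(S_1(x)=-2x\) (a \(1/3\)-strict pseudo-contraction) and \(S_2(x)=-x\) on \({\mathbb {R}}\), with weights and a step size chosen for illustration. None of these choices come from the paper.

```python
import random

# Illustrative assumption: S_1(x) = -2x (1/3-strict) and S_2(x) = -x (nonexpansive) on R.
slopes = [-2.0, -1.0]
k_values = [1.0 / 3.0, 0.0]
weights = [0.5, 0.5]          # b_i > 0 with sum b_i = 1
k = max(k_values)             # k = max k_i as in Theorem 4.2
s = 0.5 * (1.0 - k)           # any step size with 0 < s < 1 - k


def S(i, x):
    return slopes[i] * x


def A(i, x):
    """A_i = I - S_i."""
    return x - S(i, x)


rng = random.Random(0)
for _ in range(1000):
    x, y = rng.uniform(-10, 10), rng.uniform(-10, 10)
    for i in range(len(slopes)):
        d = A(i, x) - A(i, y)
        # ((1 - k)/2)-inverse strong monotonicity of A_i, up to rounding error
        assert d * (x - y) >= (1.0 - k) / 2.0 * d * d - 1e-9

    # With B = 0 the resolvent J_s^B is the identity, so the forward-backward
    # step equals the relaxed map (1 - s)u + s * sum_i b_i S_i u.
    u = x
    fb_step = u - s * sum(b * A(i, u) for i, b in enumerate(weights))
    relaxed = (1 - s) * u + s * sum(b * S(i, u) for i, b in enumerate(weights))
    assert abs(fb_step - relaxed) < 1e-9
```

For \(S_1\), the monotonicity inequality holds with equality, which shows that the constant \({{1-k}\over 2}\) cannot be improved in general.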

5 Example and numerical results

Finally, we give the following numerical examples to illustrate Theorems 3.1 and 4.2.

Example 5.1

Let \({\mathbb {R}}\) be the set of real numbers. For each \(i=1,2,\ldots ,N\), let \(F_i:{\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by

Furthermore, let \(a_i={4\over 5^i}+{1\over N5^N}\) for \(i=1,2,\ldots ,N\), so that \(\sum \nolimits _ {i=1}^N a_i=1\). Then, we have

where \(\Psi =\sum \nolimits _ {i=1}^N \Big ({4\over 5^i}+{1\over N5^N}\Big )i\).
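All the weights in Examples 5.1 and 5.2 have the form \(w_i={{m-1}\over m^i}+{1\over Nm^N}\): \(m=5\) for \(a_i\), \(m=4\) for \(b_i\) in Example 5.1, and \(m=8\) for \(b_i\) in Example 5.2. Since \(\sum \nolimits _ {i=1}^N (m-1)/m^i=1-m^{-N}\), they sum to 1 for every N. The Python sketch below checks this with exact rational arithmetic and evaluates \(\Psi \). The helper names `weights` and `psi` are our own.

```python
from fractions import Fraction


def weights(m, N):
    """w_i = (m - 1)/m^i + 1/(N m^N) for i = 1..N, as exact rationals."""
    return [Fraction(m - 1, m**i) + Fraction(1, N * m**N) for i in range(1, N + 1)]


def psi(N):
    """Psi = sum_i a_i * i with a_i = 4/5^i + 1/(N 5^N), as in Example 5.1."""
    return sum(a * i for i, a in enumerate(weights(5, N), start=1))


for N in (2, 4, 20):
    for m in (4, 5, 8):
        w = weights(m, N)
        assert sum(w) == 1 and all(x > 0 for x in w)

print(float(psi(2)), float(psi(20)))
```

For \(N=2\), this gives \(a_1=41/50\), \(a_2=9/50\) and \(\Psi =59/50\).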

It is easy to check that \(\sum \nolimits _ {i=1}^N a_iF_i\) satisfies all the conditions of Theorem 3.1 and \(\mathrm{EP}\big (\sum \nolimits _ {i=1}^N a_iF_i\big )=\bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)=\{1\}.\)

For each \(i=1,2,\ldots ,N\), let \(A_i:{\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by \(A_i(x)={x-(4i+1)\over i}\), and let \(B:{\mathbb {R}}\rightarrow 2^{\mathbb {R}}\) be defined by \(B(x)=\{4x\}\).

It is easy to see that \(A_i\) is an i-inverse strongly monotone mapping, so that \(\eta =\min _{i=1,\ldots ,N}\{i\}=1\), and that \(\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)=\{ 1\}\).

Further, let \(b_i={3\over 4^i}+{1\over N4^N}\) for \(i=1,2,\ldots ,N\), so that \(\sum \nolimits _ {i=1}^N b_i=1\). It is easy to check that \(A_i\) and B satisfy all the conditions of Theorem 3.1 and that \(\Big (\sum \nolimits _ {i=1}^N b_iA_i+B\Big )^{-1}(0)=\bigcap \nolimits _ {i=1}^N(A_i+B)^{-1}(0)=\{ 1\}\).
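The claims about \(A_i\) and B can be confirmed in closed form. Since \(A_i(x)-A_i(y)=(x-y)/i\), we have \(\langle A_ix-A_iy,x-y\rangle =i\Vert A_ix-A_iy\Vert ^2\). The map \(x\mapsto \sum b_iA_i(x)+4x\) is affine and vanishes at \(x=1\). The Python sketch below checks these facts with exact fractions. It is illustrative, and the function names are our own.

```python
from fractions import Fraction


def A(i, x):
    """A_i(x) = (x - (4i + 1))/i from Example 5.1."""
    return (x - (4 * i + 1)) / Fraction(i)


def B(x):
    """B(x) = {4x}, represented by its single element."""
    return 4 * x


def b_weights(N):
    """b_i = 3/4^i + 1/(N 4^N), i = 1..N."""
    return [Fraction(3, 4**i) + Fraction(1, N * 4**N) for i in range(1, N + 1)]


def zero_of_combination(N):
    """Zero of g(x) = sum_i b_i A_i(x) + B(x); g is affine, so g(0) and g(1) determine it."""
    b = b_weights(N)

    def g(x):
        return sum(bi * A(i, x) for i, bi in enumerate(b, start=1)) + B(x)

    slope = g(Fraction(1)) - g(Fraction(0))
    return -g(Fraction(0)) / slope


N = 20
x, y = Fraction(3), Fraction(-7, 2)
for i in range(1, N + 1):
    d = A(i, x) - A(i, y)
    assert d * (x - y) == i * d * d                  # i-inverse strong monotonicity (equality here)
    assert A(i, Fraction(1)) + B(Fraction(1)) == 0   # 1 is a zero of A_i + B

assert zero_of_combination(2) == 1 and zero_of_combination(N) == 1
```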

Let the mappings \(T_i:{\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by \(T_i(x)={x+i\over i+1}\), \(i=1,2,\ldots \). It is easy to check that \(\{T_i\}^\infty _{i=1}\) is an infinite family of nonexpansive mappings. For each i, let \(\lambda _i={i\over i+1}\) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \), with \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). Since \(K_n\) is the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \), we obtain

Clearly, \(\bigcap \nolimits _ {i=1}^\infty \mathrm{Fix}(T_i)=\{1\}\), and therefore

By Lemma 2.9, \(T^{\sum }_{r_n}x\) is single-valued for each \(x\in {\mathbb {R}}\). Hence, for \(r_n > 0\), there exist sequences \(\{x_n\}\) and \(\{u_n\}\) such that

which is equivalent to

Since \(P(y)=ay^2+by+c\ge 0\) for all \(y\in {\mathbb {R}}\), its discriminant satisfies \(b^2-4ac=(u_n-3\Psi r_n+3\Psi r_nu_n-y_n)^2\le 0\). A square is nonnegative, so this forces \((u_n-3\Psi r_n+3\Psi r_nu_n-y_n)^2= 0\). Therefore, for each \(r_n>0\), we obtain \(u_n={{y_n+3\Psi r_n}\over {1+3\Psi r_n}}\), that is,

We choose \(\alpha _n=r_n={1\over 6n}\), \(\beta _n={18n-3\over 30n}\), \(\gamma _n={12n-2\over 30n}\), \(\theta _n={1\over 12}\), and \(s=0.1\), so that \(0<s<2\eta \), where \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}=1\). Here \(\alpha _i=i\) denotes the inverse strong monotonicity constant of \(A_i\), not a term of the sequence \(\{\alpha _n\}\). It is clear that the sequences \(\{\alpha _n\}, \{\beta _n\}\), \(\{\gamma _n\}\) and \(\{\theta _n\}\) for all \(n\ge 1\) satisfy all the conditions of Theorem 3.1. For each \(n\in {\mathbb {N}}\), using (5.1), algorithm (3.1) can be rewritten as follows:

Taking \(x_0=2\) and \(x_1=0\), with \(N=2\) and \(N=20\), and running algorithm (5.2) for \(n=25\) iterations, we obtain the numerical results shown in Table 1 and Fig. 1.

Table 1 and Fig. 1 show that the sequence \(\{x_n\}\) converges to 1, and that it converges faster for \(N=20\) than for \(N=2\).
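The discriminant argument in this example shows that \(u_n\) satisfies \(u_n-3\Psi r_n+3\Psi r_nu_n-y_n=0\). Solving for \(u_n\) gives the explicit step \(u_n=(y_n+3\Psi r_n)/(1+3\Psi r_n)\). This step fixes 1, the unique element of \(\mathrm{EP}(\sum a_iF_i)\), and strictly moves every other point toward it. The Python sketch below checks this together with \(\mathrm{Fix}(T_i)=\{1\}\). The function names are our own, and \(\Psi =59/50\) is the value for \(N=2\).

```python
from fractions import Fraction


def resolvent_step(y, r, psi):
    """u = (y + 3*psi*r)/(1 + 3*psi*r), the solution of u - 3*psi*r + 3*psi*r*u - y = 0."""
    return (y + 3 * psi * r) / (1 + 3 * psi * r)


def T(i, x):
    """T_i(x) = (x + i)/(i + 1) from Example 5.1."""
    return (x + i) / Fraction(i + 1)


psi = Fraction(59, 50)                 # Psi for N = 2 (a_1 = 41/50, a_2 = 9/50)
y = Fraction(7, 3)                     # arbitrary test point y_n != 1
for n in range(1, 26):
    r = Fraction(1, 6 * n)             # r_n = 1/(6n), as chosen above
    u = resolvent_step(y, r, psi)
    assert u - 3 * psi * r + 3 * psi * r * u - y == 0   # u solves the scalar equation
    assert resolvent_step(Fraction(1), r, psi) == 1     # 1 is fixed by the step
    assert abs(u - 1) < abs(y - 1)                      # other points move toward 1

for i in range(1, 50):
    assert T(i, Fraction(1)) == 1                       # Fix(T_i) = {1}
```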

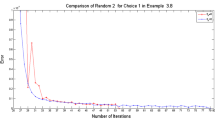

Error plot for Example 5.1

Figure 2 shows that the sequence generated by the inertial forward–backward method of Theorem 3.1 converges faster than the sequence generated by the standard forward–backward method (i.e., with \(\theta _n=0\)).
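The Python sketch below isolates the effect of the inertial term \(\theta _n(x_n-x_{n-1})\). It runs a simplified inertial forward–backward iteration on the inclusion part of Example 5.1 only: \(y_n=x_n+\theta (x_n-x_{n-1})\) and \(x_{n+1}=J_s^B\big (y_n-s\sum b_iA_iy_n\big )\). Because \(B(x)=4x\), the resolvent is \(J_s^B(z)=z/(1+4s)\). This is a sketch under stated assumptions, not algorithm (5.2). The equilibrium and K-mapping steps are omitted, so its errors do not reproduce Table 1 or Fig. 2, and the function names are our own.

```python
def b_weights(N):
    """b_i = 3/4^i + 1/(N 4^N), i = 1..N (floating point)."""
    return [3 / 4**i + 1 / (N * 4**N) for i in range(1, N + 1)]


def forward_backward(x, s, b):
    """One forward-backward step for 0 in sum_i b_i A_i(x) + B(x), with B(x) = 4x."""
    forward = x - s * sum(bi * (x - (4 * i + 1)) / i for i, bi in enumerate(b, start=1))
    return forward / (1 + 4 * s)       # resolvent J_s^B = (I + sB)^{-1}


def run(theta, x0=2.0, x1=0.0, N=2, s=0.1, iters=25):
    """Return the errors |x_n - 1| for n = 1..iters (the unique zero is x = 1)."""
    b = b_weights(N)
    x_prev, x = x0, x1
    errors = [abs(x - 1)]
    for _ in range(iters - 1):
        y = x + theta * (x - x_prev)   # inertial extrapolation
        x_prev, x = x, forward_backward(y, s, b)
        errors.append(abs(x - 1))
    return errors


inertial = run(theta=1 / 12)           # theta_n = 1/12 as in Example 5.1
plain = run(theta=0.0)                 # standard forward-backward (theta_n = 0)
print(f"|x_25 - 1|: inertial {inertial[-1]:.2e}, plain {plain[-1]:.2e}")
```

On this linear toy problem, the inertial iterate ends up several times closer to 1 after 25 steps. This agrees qualitatively with Fig. 2, although the early iterates of the inertial run are not always better.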

Example 5.2

Let \({\mathbb {R}}\) be the set of real numbers. For each \(i=1,2,\ldots ,N\), let \(F_i:{\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by

Furthermore, let \(a_i={4\over 5^i}+{1\over N5^N}\) for \(i=1,2,\ldots ,N\), so that \(\sum \nolimits _ {i=1}^N a_i=1\). Then, it is easy to check that \(\sum \nolimits _ {i=1}^N a_iF_i\) satisfies all the conditions of Theorem 3.1 and that \(\mathrm{EP}\big (\sum \nolimits _ {i=1}^N a_iF_i\big )=\bigcap \nolimits _ {i=1}^N \mathrm{EP}(F_i)=\{0\}.\)

Let the mappings \(T_i:{\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by \(T_i(x)={ix\over i+1}\), \(i=1,2,\ldots \). It is easy to check that \(\{T_i\}^ \infty _{i=1}\) is an infinite family of nonexpansive mappings. For each i, let \(\lambda _i={i\over i+1}\) be real numbers such that \(0<\lambda _i < 1\) for every \(i = 1, 2, \ldots \), with \(\sum \nolimits _ {i=1}^\infty \lambda _i<\infty \). Since \(K_n\) is the K-mapping generated by \(T_1, T_2, \ldots \) and \(\lambda _1, \lambda _2, \ldots \), we obtain

For each \(i=1,2,\ldots ,N\), let the mapping \(S_i:{\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined by

so that \(\{S_i\}^N_{i=1}\) is a finite family of \({i^2-1\over {(i+1)}^2}\)-strictly pseudo-contractive mappings. Furthermore, let \(b_i={7\over 8^i}+{1\over N8^N}\) for \(i=1,2,\ldots ,N\), so that \(\sum \nolimits _ {i=1}^N b_i=1\). Clearly, \(\bigcap \nolimits _ {i=1}^N \mathrm{Fix}(S_i)=\{0\}\), and therefore

By Lemma 2.9, for each \(x\in {\mathbb {R}}\), the single-valued mapping \(T^{\sum }_{r_n}x\) can be computed, as in Example 5.1, as

where \(S_1=\sum \nolimits _ {i=1}^N({4\over 5^i}+{1\over N5^N})i\) is the same constant as \(\Psi \) in Example 5.1. We choose \(\alpha _n=r_n={1\over 6n}\), \(\beta _n={18n-3\over 30n}\), \(\gamma _n={12n-2\over 30n}\), \(\theta _n={1\over 20}\), and \(s=0.1\), so that \(0<s<2\eta \), where \(\eta =\min _{i=1,\ldots ,N}\{\alpha _i\}=1\). It is clear that the sequences \(\{\alpha _n\}, \{\beta _n\}\), \(\{\gamma _n\}\) and \(\{\theta _n\}\) for all \(n\ge 1\) satisfy all the conditions of Theorem 4.2. For each \(n\in {\mathbb {N}}\), using (5.3), algorithm (4.2) can be rewritten as follows:

Taking \(x_0=4\) and \(x_1=4.5\), with \(N=4\) and \(N=20\), and running algorithm (5.4) for \(n=25\) iterations, we obtain the numerical results shown in Table 2 and Fig. 3.

Table 2 and Fig. 3 show that the sequence \(\{x_n\}\) converges to 0, and that it converges faster for \(N=20\) than for \(N=4\).
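Two constants in Example 5.2 can be made explicit. The strict pseudo-contraction constants simplify to \({i^2-1\over (i+1)^2}={i-1\over i+1}\), so \(k=\max _ik_i=(N-1)/(N+1)\) and \(1-k=2/(N+1)\). This \(1-k\) is the quantity that bounds s in Theorem 4.2. In addition, \(T_i(x)=ix/(i+1)\) has slope \(i/(i+1)<1\) and fixes only 0. The Python sketch below checks both facts with exact fractions. The function names are our own.

```python
from fractions import Fraction


def k_i(i):
    """Strict pseudo-contraction constant (i^2 - 1)/(i + 1)^2 of S_i in Example 5.2."""
    return Fraction(i * i - 1, (i + 1) ** 2)


def T(i, x):
    """T_i(x) = i x/(i + 1) from Example 5.2."""
    return Fraction(i, i + 1) * x


for N in (4, 20):
    ks = [k_i(i) for i in range(1, N + 1)]
    assert all(k == Fraction(i - 1, i + 1) for i, k in enumerate(ks, start=1))
    k = max(ks)
    assert k == Fraction(N - 1, N + 1) and 1 - k == Fraction(2, N + 1)
    print(f"N = {N}: k = {k}, 1 - k = {1 - k}")

for i in range(1, 50):
    assert T(i, 0) == 0                # 0 is fixed by every T_i
    assert Fraction(i, i + 1) < 1      # slope < 1, so 0 is the only fixed point
```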

Error plot for Example 5.2

Figure 4 shows that the sequence generated by the inertial forward–backward method of Theorem 4.2 converges faster than the sequence generated by the forward–backward method without inertia (i.e., with \(\theta _n=0\)).

6 Conclusion

In this work, we established a weak convergence result for finding a common element of the fixed point sets of an infinite family of nonexpansive mappings and the solution sets of a combination of equilibrium problems and a combination of inclusion problems. Numerical examples with different parameter choices illustrate that the proposed method with the inertial term converges faster than the usual projection method. Finally, we discussed applications of the modified inclusion problems to finding a common element of the fixed point sets of an infinite family of strictly pseudo-contractive mappings and the solution sets of equilibrium problems, supported by numerical results. The method and results presented in this paper generalize and unify the corresponding known results in this area (see Cholamjiak 1994; Dong et al. 2017; Khan et al. 2018; Khuangsatung and Kangtunyakarn 2014).

References

Alvarez F (2004) Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert spaces. SIAM J Optim 14(3):773–782

Alvarez F, Attouch H (2001) An inertial proximal method for monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal 9:3–11

Bauschke HH, Combettes PL (2011) Convex analysis and monotone operator theory in Hilbert spaces. CMS books in mathematics. Springer, New York

Blum E, Oettli W (1994) From optimization and variational inequalities to equilibrium problems. Math Stud 63:123–145

Cholamjiak P (1994) A generalized forward-backward splitting method for solving quasi inclusion problems in Banach spaces. Numer Algorithms 8:221–239

Combettes PL, Hirstoaga SA (2005) Equilibrium programming in Hilbert spaces. J Nonlinear Convex Anal 6:117–136

Combettes PL, Wajs VR (2005) Signal recovery by proximal forward-backward splitting. Multiscale Model Simul 4:1168–1200

Dang Y, Sun J, Xu H (2017) Inertial accelerated algorithms for solving a split feasibility problem. J Ind Manag Optim 13(3):1383–1394

Dong Q, Jiang D, Cholamjiak P, Shehu Y (2017) A strong convergence result involving an inertial forward-backward algorithm for monotone inclusions. J Fixed Point Theory Appl 19(4):3097–3118

Dong QL, Yuan HB, Cho YJ, Rassias M (2018) Modified inertial Mann algorithm and inertial CQ-algorithm for nonexpansive mappings. Optim Lett 12(1):87–102

Douglas J, Rachford HH (1956) On the numerical solution of the heat conduction problem in 2 and 3 space variables. Trans Am Math Soc 82:421–439

Fan Q, Yan M, Yu Y, Chen R (2009) Weak and strong convergence theorems for \(k\)-strictly pseudo-contractive in Hilbert space. Appl Math Sci 3(58):2855–2866

Farid M, Irfan SS, Khan MF, Khan SA (2017) Strong convergence of gradient projection method for generalized equilibrium problem in a Banach space. J Inequal Appl 2017:297

Goebel K, Kirk WA (1990) Topics in metric fixed point theory, vol 28. Cambridge University Press, Cambridge

Khan SA, Chen JW (2015) Gap functions and error bounds for generalized mixed vector equilibrium problems. J Optim Theory Appl 166(3):767–776

Khan SA, Suantai S, Cholamjiak W (2018) Shrinking projection methods involving inertial forward-backward splitting methods for inclusion problems. Revista Real Acad Ciencias Exact. https://doi.org/10.1007/s13398-018-0504-1

Kangtunyakarn A (2011) Iterative algorithms for finding a common solution of system of the set of variational inclusion problems and the set of fixed point problems. Fixed Point Theory Appl 2011:38

Khuangsatung W, Kangtunyakarn A (2014) Algorithm of a new variational inclusion problem and strictly pseudononspreading mapping with application. Fixed Point Theory Appl 2014:209

Lions PL, Mercier B (1979) Splitting algorithms for the sum of two nonlinear operators. SIAM J Numer Anal 16:964–979

Lopez G, Martin-Marquez V, Wang F, Xu HK (2012) Forward-backward splitting methods for accretive operators in Banach spaces. Abstr Appl Anal 2012:109236

Lorenz D, Pock T (2015) An inertial forward-backward algorithm for monotone inclusions. J Math Imaging Vis 51:311–325

Moudafi A, Oliny M (2003) Convergence of a splitting inertial proximal method for monotone operators. J Comput Appl Math 155:447–454

Nesterov Y (1983) A method for solving the convex programming problem with convergence rate \({\rm O}(1/{\rm k}^2)\). Dokl Akad Nauk SSSR 269:543–547

Opial Z (1967) Weak convergence of successive approximations for nonexpansive mappings. Bull Am Math Soc 73:591–597

Passty GB (1979) Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J Math Anal Appl 72:383–390

Peaceman DH, Rachford HH (1955) The numerical solution of parabolic and elliptic differential equations. J Soc Ind Appl Math 3:28–41

Polyak BT (1964) Some methods of speeding up the convergence of iteration methods. USSR Comput Math Math Phys 4(5):1–17

Suwannaut S, Kangtunyakarn A (2013) The combination of the set of solutions of equilibrium problem for convergence theorem of the set of fixed points of strictly pseudo-contractive mappings and variational inequalities problem. Fixed Point Theory Appl 2013:291

Takahashi W (2000) Nonlinear functional analysis. Yokohama Publishers, Yokohama

Tseng P (2000) A modified forward–backward splitting method for maximal monotone mappings. SIAM J Control Optim 38:431–446

Xu HK (2003) An iterative approach to quadratic optimization. J Optim Theory Appl 116(3):659–678

Zhou H (2008) Convergence theorems of fixed points for k-strict pseudocontractions in Hilbert spaces. Nonlinear Anal 69:456–462


Additional information

Communicated by Carlos Conca.


Cite this article

Khan, S.A., Cholamjiak, W. & Kazmi, K.R. An inertial forward–backward splitting method for solving combination of equilibrium problems and inclusion problems. Comp. Appl. Math. 37, 6283–6307 (2018). https://doi.org/10.1007/s40314-018-0684-5


DOI: https://doi.org/10.1007/s40314-018-0684-5

Keywords

- Equilibrium problem

- Inertial method

- Inclusion problems

- Nonexpansive mapping

- \(\alpha \)-inverse strongly monotone mapping

- Fixed point problem