Abstract

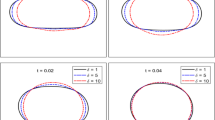

We consider a model initial- and Dirichlet boundary- value problem for a linearized Cahn–Hilliard–Cook equation, in one space dimension, forced by the space derivative of a space–time white noise. First, we introduce a canvas problem, the solution to which is a regular approximation of the mild solution to the problem and depends on a finite number of random variables. Then, fully discrete approximations of the solution to the canvas problem are constructed using, for discretization in space, a Galerkin finite element method based on \(H^2\) piecewise polynomials, and, for time-stepping, an implicit/explicit method. Finally, we derive a strong a priori estimate of the error approximating the mild solution to the problem by the canvas problem solution, and of the numerical approximation error of the solution to the canvas problem.


1 Introduction

Let \(T>0\), \(D:=(0,1)\), and \((\Omega ,{\mathcal {F}},P)\) be a complete probability space. We consider the model initial- and Dirichlet boundary- value problem for a linearized Cahn–Hilliard–Cook equation formulated in Kossioris and Zouraris (2013): find a stochastic function \(u:[0,T]\times {\overline{D}}\rightarrow {\mathbb {R}}\) such that

a.s. in \(\Omega \), where \({\dot{W}}\) denotes a space-time white noise on \([0,T]\times D\) (see, e.g., Walsh 1986; Kallianpur and Xiong 1995) and \(\mu \) is a real constant. We recall that the mild solution to the problem above (cf. Debussche and Zambotti 2007) is given by

where

\(\lambda _k:=k\,\pi \) for \(k\in {\mathbb {N}}\), \(\varepsilon _k(z):=\sqrt{2}\,\sin (\lambda _k\,z)\) and \(\varphi _k(z):=\sqrt{2}\,\cos (\lambda _k\,z)\) for \(z\in {\overline{D}}\) and \(k\in {\mathbb {N}}\), and \(\mathsf{G}_t(x,y)\) is the space-time Green kernel of the solution to the deterministic parabolic problem: find \(w:[0,T]\times {\overline{D}}\rightarrow {\mathbb {R}}\), such that

In the paper at hand, our goal is to propose and analyze a numerical method for the approximation of u that has milder stability requirements and lower complexity than the method proposed in Kossioris and Zouraris (2013).
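For orientation, the Python sketch below evaluates the functions \(\varepsilon_k\) and \(\varphi_k\) and checks their \(L^2(D)\)-orthonormality with a midpoint rule. It also evaluates a truncated eigen-expansion of a Green kernel; this rests on an assumption of ours, namely that (1.4) reads \(\partial_t w+\partial_x^4 w+\mu\,\partial_x^2 w=0\) with \(w=\partial_x^2 w=0\) on \(\partial D\) (consistent with the operators used in Sect. 5), in which case \(\mathsf{G}_t(x,y)=\sum_{k}e^{-\lambda_k^2(\lambda_k^2-\mu)t}\,\varepsilon_k(x)\,\varepsilon_k(y)\) for \(t>0\). The truncation level `K` and all helper names are hypothetical.

```python
import math


def lam(k: int) -> float:
    """lambda_k = k * pi."""
    return k * math.pi


def eps(k: int, z: float) -> float:
    """Sine eigenfunction epsilon_k(z) = sqrt(2) * sin(lambda_k * z)."""
    return math.sqrt(2.0) * math.sin(lam(k) * z)


def phi(k: int, z: float) -> float:
    """Cosine function phi_k(z) = sqrt(2) * cos(lambda_k * z)."""
    return math.sqrt(2.0) * math.cos(lam(k) * z)


def l2_inner(f, g, n: int = 2000) -> float:
    """Midpoint-rule approximation of (f, g)_{0,D} on D = (0, 1)."""
    h = 1.0 / n
    return h * sum(f((j + 0.5) * h) * g((j + 0.5) * h) for j in range(n))


def green_kernel(t: float, x: float, y: float, mu: float, K: int = 50) -> float:
    """Truncated sum_{k<=K} exp(-lambda_k^2 (lambda_k^2 - mu) t) eps_k(x) eps_k(y), t > 0.

    ASSUMPTION: (1.4) is d_t w + d_x^4 w + mu d_x^2 w = 0 with w = d_x^2 w = 0 on the boundary.
    """
    total = 0.0
    for k in range(1, K + 1):
        s = lam(k) ** 2
        total += math.exp(-s * (s - mu) * t) * eps(k, x) * eps(k, y)
    return total


# Orthonormality of the two families on D = (0, 1).
print(l2_inner(lambda z: eps(2, z), lambda z: eps(2, z)))  # approximately 1
print(l2_inner(lambda z: phi(1, z), lambda z: phi(3, z)))  # approximately 0
# Symmetry of the kernel in (x, y).
print(green_kernel(0.01, 0.3, 0.7, mu=5.0), green_kernel(0.01, 0.7, 0.3, mu=5.0))
```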

1.1 A canvas problem

A canvas problem is an initial- and boundary- value problem whose solution (i) depends on a finite number of random variables and (ii) is a regular approximation of the mild solution u to (1.1). Computable approximations of u can then be derived by discretizing the canvas problem with a technique for stochastic partial differential equations with random coefficients. The formulation of the canvas problem depends on the way in which we replace the infinite stochastic dimensionality of problem (1.1) by a finite one.

In our case, the canvas problem is formulated as follows (cf. Allen et al. 1998; Kossioris and Zouraris 2010, 2013): let \(\mathsf{M},\mathsf{N}\in {\mathbb {N}}\), \(\Delta {t}:=\frac{T}{\mathsf{N}}\), \(t_n:=n\,\Delta {t}\) for \(n=0,\dots ,\mathsf{N}\), and \(\mathsf{T}_n:=(t_{n-1},t_n)\) for \(n=1,\dots ,\mathsf{N}\); then, find \(\mathsf{u}:[0,T]\times {\overline{D}}\rightarrow {\mathbb {R}}\) such that

where

and \(B^i(t):=\int _0^t\int _{\scriptscriptstyle D}\varphi _i(x)\;\mathrm{d}W(s,x)\) for \(t\ge 0\) and \(i\in {\mathbb {N}}\). According to Walsh (1986), \((B^i)_{i=1}^{\infty }\) is a family of independent Brownian motions, and thus, the random variables \(\left( \left( R^n_i\right) _{n=1}^{\scriptscriptstyle \mathsf{N}}\right) _{i=1}^{\scriptscriptstyle \mathsf M}\) are independent and satisfy

Thus, the solution \(\mathsf{u}\) to (1.5) depends on \(\mathsf{N}\mathsf{M}\) random variables, and the well-known theory for parabolic problems (see, e.g., Lions and Magenes 1972) yields its regularity along with the following representation formula:

Remark 1.1

In Kossioris and Zouraris (2013), the definition of \({\mathcal {W}}\) is based on a uniform partition of [0, T] into N subintervals and on a uniform partition of D into J subintervals. On every time slab, \({\mathcal {W}}\) is constant with respect to the time variable, while, with respect to the space variable, it is defined as the \(L^2(D)\)-projection of a random, piecewise constant function onto the space of linear splines, the computation of which requires the numerical solution of a \((J+1)\times (J+1)\) tridiagonal linear system of algebraic equations. Consequently, \({\mathcal {W}}\) depends on \(N (J+1)\) random variables and its construction has \(O(N\,(J+1))\) complexity, which must be added to the complexity of the numerical method used for the approximation of \(\mathsf{u}\). By contrast, the stochastic load \({\mathcal {W}}\) of the canvas problem (1.5) proposed here is given explicitly by formula (1.6), and thus no extra computational cost is required for its formation.
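As an illustration of the explicit construction, the sketch below samples the random variables of the canvas load and evaluates it pointwise. It assumes (our reading, since (1.6)–(1.8) are not reproduced here) that \(R^n_i=(B^i(t_n)-B^i(t_{n-1}))/\Delta t\), so that the \(R^n_i\) are independent \(N(0,\Delta t^{-1})\) random variables, and that \({\mathcal W}(t,x)=\sum_{i=1}^{\mathsf M}R^n_i\,\varphi_i(x)\) for \(t\in\mathsf{T}_n\). Under this assumption, forming the load costs only \(\mathsf{N}\mathsf{M}\) Gaussian draws and no linear solve; the values of `N`, `M`, `T`, and `seed` are illustrative.

```python
import math
import random


def sample_canvas_coefficients(N: int, M: int, T: float, seed: int = 0):
    """Return R with R[n][i] standing for R^{n+1}_{i+1}, n < N, i < M.

    ASSUMPTION: R^n_i = (B^i(t_n) - B^i(t_{n-1})) / dt for independent Brownian
    motions B^i, hence the R^n_i are independent N(0, 1/dt) random variables.
    """
    rng = random.Random(seed)
    dt = T / N
    std = 1.0 / math.sqrt(dt)  # sqrt(dt) / dt: std of a Brownian increment divided by dt
    return [[rng.gauss(0.0, std) for _ in range(M)] for _ in range(N)]


def canvas_load(t: float, x: float, R, T: float) -> float:
    """Evaluate the (assumed) load sum_i phi_i(x) R^n_i for t in the open slab T_n."""
    N = len(R)
    dt = T / N
    n = min(int(t / dt), N - 1)  # 0-based index of the slab containing t
    return sum(math.sqrt(2.0) * math.cos((i + 1) * math.pi * x) * R[n][i]
               for i in range(len(R[n])))


R = sample_canvas_coefficients(N=400, M=50, T=1.0, seed=1)
samples = [r for row in R for r in row]
empirical_var = sum(r * r for r in samples) / len(samples)
print(empirical_var)                       # should be near 1/dt = 400
print(canvas_load(0.501, 0.25, R, T=1.0))  # one realization of the load
```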

1.2 An IMEX finite element method

Let \(M\in {\mathbb {N}}\), \(\Delta \tau :=\frac{T}{M}\), and \(\tau _m:=m\,\Delta \tau \) for \(m=0,\dots ,M\), and \(\Delta _m:=(\tau _{m-1},\tau _m)\) for \(m=1,\dots ,M\). In addition, for \(r=2\) or 3, let \(\mathsf{M}_h^r\subset H^2(D)\cap H_0^1(D)\) be a finite element space consisting of functions which are piecewise polynomials of degree at most r over a partition of D in intervals with maximum mesh length h.

The fully discrete method we propose for the numerical approximation of \(\mathsf{u}\) combines an implicit/explicit (IMEX) time discretization of the space differential operator with a finite element variational formulation for space discretization. The algorithm first sets

and then, for \(m=1,\dots ,M\), finds \(\mathsf{U}_h^m\in \mathsf{M}_h^r\), such that

for all \(\chi \in \mathsf{M}_h^r\), where \((\cdot ,\cdot )_{\scriptscriptstyle 0,D}\) is the usual \(L^2(D)\)-inner product.
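The finite element system (1.11) is not reproduced here, but the IMEX splitting itself can be sketched in a simpler setting. The Python code below is a substitution of ours, not the method of Sect. 1.2: it replaces the \(H^2\) finite element space by the span of \(\varepsilon_1,\dots,\varepsilon_K\) and assumes the equation \(\partial_t u+\partial_x^4 u+\mu\,\partial_x^2 u=\) (load). Because \(\partial_x^2\varepsilon_k=-\lambda_k^2\varepsilon_k\) and \(\partial_x^4\varepsilon_k=\lambda_k^4\varepsilon_k\), treating \(\partial_x^4\) implicitly and \(\mu\,\partial_x^2\) explicitly gives, mode by mode, \((1+\Delta\tau\,\lambda_k^4)\,U^m_k=(1+\Delta\tau\,\mu\,\lambda_k^2)\,U^{m-1}_k+\Delta\tau\,F^m_k\), where the load coefficients \(F^m_k\) are a hypothetical input.

```python
import math


def imex_step(U_prev, dtau, mu, F):
    """One IMEX step on sine coefficients (spectral stand-in for the FEM of Sect. 1.2).

    Mode k = idx + 1 solves
        (1 + dtau*lambda_k^4) U_k = (1 + dtau*mu*lambda_k^2) U_prev_k + dtau*F_k,
    i.e. d_x^4 is treated implicitly and mu*d_x^2 explicitly.
    """
    U_new = []
    for idx, (u, f) in enumerate(zip(U_prev, F)):
        lam2 = ((idx + 1) * math.pi) ** 2
        U_new.append(((1.0 + dtau * mu * lam2) * u + dtau * f) / (1.0 + dtau * lam2 * lam2))
    return U_new


def imex_run(U0, dtau, mu, loads):
    """Apply imex_step once per load vector F^m in `loads`."""
    U = list(U0)
    for F in loads:
        U = imex_step(U, dtau, mu, F)
    return U


# Ten steps without load: in this basis every mode is updated independently.
print(imex_run([1.0, 0.5, 0.25], dtau=0.01, mu=3.0, loads=[[0.0, 0.0, 0.0]] * 10))
```

The quantity inverted at each step, \(1+\Delta\tau\,\lambda_k^4\), is positive for every \(\Delta\tau\) regardless of the sign or size of \(\mu\), which is the point of putting only the principal part on the implicit side.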

Remark 1.2

It is easily seen that the numerical method above is unconditionally stable, while the Backward Euler finite element method is stable under the time-step restriction: \(\Delta \tau \,\mu ^2\le 4\) (see Kossioris and Zouraris 2013).
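Remark 1.2 can be made concrete by a Fourier-mode computation, under simplifying assumptions of ours (continuous eigenvalues \(s_k=\lambda_k^2\) in place of discrete ones, and the equation \(\partial_t u+\partial_x^4 u+\mu\,\partial_x^2 u=\) (load)). The IMEX amplification factor is \(g_k=(1+\Delta\tau\,\mu\,s_k)/(1+\Delta\tau\,s_k^2)\); since \(|\mu|\,s\le s^2+\mu^2/4\), one gets \(|g_k|\le 1+\Delta\tau\,\mu^2/4\) for every \(\Delta\tau>0\), hence \(|g_k|^m\le e^{\mu^2\tau_m/4}\). For Backward Euler the factor is \(1/(1+\Delta\tau\,(s_k^2-\mu\,s_k))\), and, for \(\mu>0\), \(\min_{s}(s^2-\mu s)=-\mu^2/4\) shows that the denominator may vanish or change sign unless \(\Delta\tau\,\mu^2\) is small enough, which is the origin of the time-step restriction quoted above. The sketch checks both statements numerically.

```python
import math


def imex_factor(s: float, dtau: float, mu: float) -> float:
    """IMEX amplification factor for the mode with eigenvalue s = lambda_k^2."""
    return (1.0 + dtau * mu * s) / (1.0 + dtau * s * s)


def backward_euler_denominator(s: float, dtau: float, mu: float) -> float:
    """Denominator 1 + dtau*(s^2 - mu*s) of the Backward Euler amplification factor."""
    return 1.0 + dtau * (s * s - mu * s)


def mode_eigenvalues(K: int = 200):
    """s_k = lambda_k^2 = (k*pi)^2 for k = 1..K."""
    return [(k * math.pi) ** 2 for k in range(1, K + 1)]


mu = 2.0 * math.pi ** 2  # chosen so that mu/2 = lambda_1^2, where s^2 - mu*s is smallest
for dtau in (0.5 / math.pi ** 4, 1.5 / math.pi ** 4, 1.0):
    imex_max = max(abs(imex_factor(s, dtau, mu)) for s in mode_eigenvalues())
    be_min = min(backward_euler_denominator(s, dtau, mu) for s in mode_eigenvalues())
    print(f"dtau*mu^2 = {dtau * mu ** 2:8.2f}   IMEX max|g_k| = {imex_max:.4f}"
          f" (bound {1.0 + dtau * mu ** 2 / 4.0:.4f})   BE min denominator = {be_min:.4f}")
```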

1.3 An overview of the paper

In Sect. 2, we introduce notation and recall several results that are used frequently in the rest of the paper. In Sect. 3, we estimate the error incurred by approximating the solution u to (1.1) by the solution \(\mathsf{u}\) to (1.5), arriving at the bound

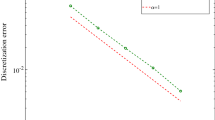

(see Theorem 3.1). Section 4 is dedicated to the definition and the convergence analysis of modified IMEX time-discrete and fully discrete approximations of the solution w to the deterministic problem (1.4). The results obtained are used later in Sect. 5, where we analyze the numerical method for the approximation of \(\mathsf{u}\), given in Sect. 1.2. Its convergence is established by proving the following strong error estimate:

for all \(\epsilon _1\in \left( 0,\frac{1}{8}\right] \) and \(\epsilon _2\in \left( 0,\frac{r}{6}\right] \) (see Theorem 5.3). We obtain the latter error bound by applying a discrete Duhamel principle technique to estimate separately the time-discretization error and the space-discretization error, which are defined using, as an intermediate, the corresponding IMEX time-discrete approximations of \(\mathsf{u}\) specified by (5.1) and (5.2) (cf., e.g., Kossioris and Zouraris 2010, 2013; Yan 2005).

Since we make no assumptions on the sign or the size of \(\mu \), the elliptic operator in (1.5) is, in general, not invertible. This is why the Backward Euler/finite element method is stable and convergent only under a restriction on the time-step size (see Kossioris and Zouraris 2013, Remark 1.2). By contrast, the IMEX/finite element method proposed here is unconditionally stable and convergent, because the principal part of the elliptic operator is treated implicitly and its lower-order part explicitly. Another feature of our method is the construction of the canvas problem from spectral functions, which allows us to avoid the numerical solution of the extra linear system of algebraic equations at every time step that is required in the approach of Kossioris and Zouraris (2013) (see Remark 1.1).

The error analysis of the IMEX finite element method is more technical than that in Kossioris and Zouraris (2013) for the Backward Euler finite element method. The main difference is due to the fact that the representation of the time-discrete and fully discrete approximations of \(\mathsf{u}\) is related to a modified version of the IMEX time-stepping method for the approximation of the solution to the deterministic problem (1.4), the error analysis of which is necessary in obtaining the desired error estimate and is of independent interest (see Sect. 4).

2 Preliminaries

We denote by \(L^2(D)\) the space of the Lebesgue measurable functions which are square integrable on D with respect to the Lebesgue measure \(\mathrm{d}x\). The space \(L^2(D)\) is provided with the standard norm \(\Vert g\Vert _{\scriptscriptstyle 0,D}:= \left( \int _{\scriptscriptstyle D}|g(x)|^2\,\mathrm{d}x\right) ^{\frac{1}{2}}\) for \(g\in L^2(D)\), which is induced by the usual inner product \((g_1,g_2)_{\scriptscriptstyle 0,D}:=\int _{\scriptscriptstyle D}g_1(x)\,g_2(x)\,\mathrm{d}x\) for \(g_1\), \(g_2\in L^2(D)\). In addition, we employ the symbol \({\mathbb {N}}_0\) for the set of all nonnegative integers.

For \(s\in {\mathbb {N}}_0\), we denote by \(H^s(D)\) the Sobolev space of functions having generalized derivatives up to order s in \(L^2(D)\), and by \(\Vert \cdot \Vert _{\scriptscriptstyle s,D}\) its usual norm, i.e., \(\Vert g\Vert _{\scriptscriptstyle s,D}:=\left( \sum _{\ell =0}^s \Vert \partial _x^{\ell }g\Vert _{\scriptscriptstyle 0,D}^2\right) ^{\scriptscriptstyle 1/2}\) for \(g\in H^s(D)\). In addition, by \(H_0^1(D)\), we denote the subspace of \(H^1(D)\) consisting of functions which vanish at the endpoints of D in the sense of trace.

The sequence of pairs \(\left\{ \left( \lambda _i^2,\varepsilon _i\right) \right\} _{i=1}^{\infty }\) is a solution to the eigenvalue/eigenfunction problem: find nonzero \(\varphi \in H^2(D)\cap H_0^1(D)\) and \(\lambda \in {\mathbb {R}}\), such that \(-\varphi ''=\lambda \,\varphi \) in D. Since \((\varepsilon _i)_{i=1}^{\infty }\) is a complete \((\cdot ,\cdot )_{\scriptscriptstyle 0,D}\)-orthonormal system in \(L^2(D)\), for \(s\in {\mathbb {R}}\), we define by

a subspace of \(L^2(D)\) provided with the natural norm \(\Vert v\Vert _{\scriptscriptstyle {\mathcal {V}}^s}:=\big (\,\sum _{i=1}^{\infty } \lambda _{i}^{2s}\,(v,\varepsilon _i)^2_{\scriptscriptstyle 0,D}\,\big )^{\scriptscriptstyle 1/2}\) for \(v\in {\mathcal {V}}^s(D)\). For \(s\ge 0\), the space \(({\mathcal {V}}^s(D),\Vert \cdot \Vert _{\scriptscriptstyle {\mathcal {V}}^s})\) is a complete subspace of \(L^2(D)\) and we define \(({\dot{\mathbf{H}}}^{{s}}(D),\Vert \cdot \Vert _{\scriptscriptstyle {\dot{\mathbf{H}}}^s}) :=({\mathcal {V}}^s(D),\Vert \cdot \Vert _{\scriptscriptstyle {\mathcal {V}}^s})\). For \(s<0\), the space \(({\dot{\mathbf{H}}}^s(D),\Vert \cdot \Vert _{\scriptscriptstyle {\dot{\mathbf{H}}}^s})\) is defined as the completion of \(({\mathcal {V}}^s(D),\Vert \cdot \Vert _{\scriptscriptstyle {\mathcal {V}}^s})\), or, equivalently, as the dual of \(({\dot{\mathbf{H}}}^{-s}(D),\Vert \cdot \Vert _{\scriptscriptstyle {\dot{\mathbf{H}}}^{-s}})\).
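The norm \(\Vert\cdot\Vert_{{\dot{\mathbf H}}^s}\) is computable from sine coefficients. The sketch below truncates the series at a hypothetical level `K` and approximates \((v,\varepsilon_i)_{0,D}\) with a midpoint rule; for \(v=\varepsilon_3\) it should return \((3\pi)^s\).

```python
import math


def sine_coefficients(v, K: int, n: int = 2000):
    """Midpoint-rule approximations of (v, eps_i)_{0,D} for i = 1..K."""
    h = 1.0 / n
    xs = [(j + 0.5) * h for j in range(n)]
    vals = [v(x) for x in xs]
    return [h * sum(math.sqrt(2.0) * math.sin(i * math.pi * x) * fx for x, fx in zip(xs, vals))
            for i in range(1, K + 1)]


def hdot_norm(v, s: float, K: int = 60, n: int = 2000) -> float:
    """Truncated norm (sum_{i<=K} lambda_i^{2s} (v, eps_i)^2)^{1/2}."""
    coeffs = sine_coefficients(v, K, n)
    return math.sqrt(sum(((i + 1) * math.pi) ** (2 * s) * c * c for i, c in enumerate(coeffs)))


def eps3(x: float) -> float:
    """The eigenfunction epsilon_3."""
    return math.sqrt(2.0) * math.sin(3 * math.pi * x)


print(hdot_norm(eps3, 1.0), 3 * math.pi)       # the two values should agree
print(hdot_norm(lambda x: x * (1.0 - x), 0.0))  # close to ||x(1-x)||_{0,D} = (1/30)^{1/2}
```

For \(v(x)=x(1-x)\), whose sine coefficients decay like \(i^{-3}\), the truncated norms stabilize as `K` grows when \(s<5/2\) and keep growing when \(s\ge 5/2\), in line with \(v\in{\dot{\mathbf H}}^s(D)\) exactly for \(s<5/2\).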

Let \(m\in {\mathbb {N}}_0\). It is well known (see Thomée 1997) that

and that there exist constants \(C_{m,{\scriptscriptstyle A}}\) and \(C_{m,{\scriptscriptstyle B}}\), such that

In addition, we define on \(L^2(D)\) the negative norm \(\Vert \cdot \Vert _{\scriptscriptstyle -m, D}\) by

for which, using (2.1), it follows that there exists a constant \(C_{-m}>0\) such that:

Let \({\mathbb {L}}_2=(L^2(D),(\cdot ,\cdot )_{\scriptscriptstyle 0,D})\) and \({\mathcal {L}}({\mathbb {L}}_2)\) be the space of linear, bounded operators from \({\mathbb {L}}_2\) to \({\mathbb {L}}_2\). An operator \(\Gamma \in {\mathcal {L}}({\mathbb {L}}_2)\) is Hilbert–Schmidt if \(\Vert \Gamma \Vert _{\scriptscriptstyle \mathrm HS}:=\left( \sum _{i=1}^{\infty } \Vert \Gamma \varepsilon _i\Vert ^2_{\scriptscriptstyle 0,D}\right) ^{\frac{1}{2}}<+\infty \), where \(\Vert \Gamma \Vert _{\scriptscriptstyle \mathrm HS}\) is the so-called Hilbert–Schmidt norm of \(\Gamma \). We note that the quantity \(\Vert \Gamma \Vert _{\scriptscriptstyle \mathrm HS}\) does not change when \((\varepsilon _i)_{i=1}^{\infty }\) is replaced by another complete orthonormal system of \({\mathbb {L}}_2\). It is well known (see, e.g., Dunford and Schwartz 1988; Lord et al. 2014) that an operator \(\Gamma \in {\mathcal {L}}({\mathbb {L}}_2)\) is Hilbert–Schmidt if and only if there exists a function \(\gamma \in L^2(D\times D)\) such that \(\Gamma [v](\cdot )=\int _{\scriptscriptstyle D}\gamma (\cdot ,y)\,v(y)\,\mathrm{d}y\) for \(v\in L^2(D)\), and then it holds that

Let \({\mathcal {L}}_{\scriptscriptstyle \mathrm HS}({\mathbb {L}}_2)\) be the set of Hilbert–Schmidt operators of \({\mathcal {L}}({\mathbb {L}}_2)\) and \(\Phi :[0,T]\rightarrow {\mathcal {L}}_{\scriptscriptstyle \mathrm HS}({\mathbb {L}}_2)\). In addition, for a random variable X, let \({\mathbb {E}}[X]\) be its expected value, i.e., \({\mathbb {E}}[X]:=\int _{\scriptscriptstyle \Omega }X\,\mathrm{d}P\). Then, the Itô isometry property for stochastic integrals reads

For later use, we recall that if \(({\mathcal {H}},(\cdot ,\cdot )_{\scriptscriptstyle {\mathcal {H}}})\) is a real inner product space with induced norm \(|\cdot |_{\scriptscriptstyle {\mathcal {H}}}\), then

Finally, for any nonempty set A, we denote by \({\mathcal {X}}_{\scriptscriptstyle A}\) the indicator function of A.
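The kernel characterization of the Hilbert–Schmidt norm can be checked on a truncated Green-type kernel \(\gamma(x,y)=\sum_{k\le K}c_k\,\varepsilon_k(x)\,\varepsilon_k(y)\) with \(c_k=e^{-\lambda_k^2(\lambda_k^2-\mu)t}\), a hypothetical test case of ours. By orthonormality, \(\Gamma\varepsilon_i=c_i\varepsilon_i\) for \(i\le K\) and \(\Gamma\varepsilon_i=0\) otherwise, so \(\Vert\Gamma\Vert_{\mathrm HS}^2=\sum_k c_k^2\); the sketch compares this with a midpoint-rule value of \(\int_D\int_D\gamma^2\,\mathrm{d}x\,\mathrm{d}y\).

```python
import math


def hs_norm_squared_two_ways(t: float, mu: float, K: int = 10, n: int = 200):
    """Return (sum_i ||Gamma eps_i||^2, ||gamma||^2_{L^2(D x D)}) for a truncated kernel.

    gamma(x, y) = sum_{k<=K} c_k eps_k(x) eps_k(y), c_k = exp(-lambda_k^2 (lambda_k^2 - mu) t).
    """
    c = [math.exp(-((k * math.pi) ** 2) * ((k * math.pi) ** 2 - mu) * t) for k in range(1, K + 1)]
    # Gamma eps_i = c_i eps_i for i <= K and 0 otherwise, so the HS sum is sum_i c_i^2.
    hs_sum = sum(ck * ck for ck in c)
    # Midpoint-rule double integral of gamma^2 over D x D.
    h = 1.0 / n
    xs = [(j + 0.5) * h for j in range(n)]
    table = [[math.sqrt(2.0) * math.sin(k * math.pi * x) for x in xs] for k in range(1, K + 1)]
    kernel_sq = 0.0
    for a in range(n):
        for b in range(n):
            g = sum(c[k] * table[k][a] * table[k][b] for k in range(K))
            kernel_sq += g * g
    return hs_sum, kernel_sq * h * h


print(hs_norm_squared_two_ways(t=0.01, mu=5.0))  # the two numbers should agree
```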

2.1 A projection operator

Let \({\mathcal {O}}:=(0,T)\times D\), \({\mathfrak S}_{\scriptscriptstyle \mathsf{M}}:={\mathrm{span}}(\varphi _i)_{i=1}^{\scriptscriptstyle \mathsf{M}}\), \({\mathfrak S}_{\scriptscriptstyle \mathsf{N}}:=\mathrm{span}({\mathcal {X}}_{\scriptscriptstyle \mathsf{T}_n})_{n=1}^{\scriptscriptstyle \mathsf{N}}\), and let \(\mathsf{\Pi }:L^2({\mathcal {O}})\rightarrow {\mathfrak S}_{\scriptscriptstyle \mathsf{N}}\otimes {\mathfrak S}_{\scriptscriptstyle \mathsf{M}}\) be the usual \(L^2({\mathcal {O}})\)-projection operator, which is given by the formula:

Then, the following representation of the stochastic integral of \(\mathsf{\Pi }\) holds [cf. Lemma 2.1 in Kossioris and Zouraris (2010)].

Lemma 2.1

For \(g\in L^2({\mathcal {O}})\), it holds that

Proof

Using (2.6) and (1.7), we have

which, along with (1.6), yields (2.7). \(\square \)
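Because the indicator functions \({\mathcal X}_{\mathsf{T}_n}\) are mutually orthogonal in \(L^2(0,T)\) with squared norm \(\Delta t\), and \((\varphi_i)_{i=1}^{\mathsf M}\) is \(L^2(D)\)-orthonormal, the coefficients of \(\mathsf{\Pi} g\) in the basis \({\mathcal X}_{\mathsf{T}_n}\otimes\varphi_i\) are \(\frac{1}{\Delta t}\int_{\mathsf{T}_n}\int_D g\,\varphi_i\,\mathrm{d}y\,\mathrm{d}s\). The sketch below approximates them by midpoint quadrature; the quadrature sizes `nt` and `nx` and the test function are illustrative choices.

```python
import math


def projection_coefficients(g, T: float, N: int, M: int, nt: int = 8, nx: int = 400):
    """Coefficients c[n][i] of Pi g = sum c[n][i] X_{T_{n+1}}(t) phi_{i+1}(x) (0-based indices).

    c[n][i] = (1/dt) * int_{T_{n+1}} int_D g(s, y) phi_{i+1}(y) dy ds, approximated with
    nt midpoints per time slab and nx midpoints in D = (0, 1).
    """
    dt = T / N
    hs, hx = dt / nt, 1.0 / nx
    ys = [(j + 0.5) * hx for j in range(nx)]
    phis = [[math.sqrt(2.0) * math.cos(i * math.pi * y) for y in ys] for i in range(1, M + 1)]
    coeffs = []
    for n in range(N):
        row = [0.0] * M
        for q in range(nt):
            s = n * dt + (q + 0.5) * hs  # midpoint of the q-th sub-interval of the slab
            gvals = [g(s, y) for y in ys]
            for i in range(M):
                row[i] += hs * hx * sum(gv * p for gv, p in zip(gvals, phis[i]))
        coeffs.append([r / dt for r in row])
    return coeffs


def g_example(s: float, y: float) -> float:
    """3 * X_{T_2}(s) * phi_2(y) for T = 1, N = 4, so that T_2 = (0.25, 0.5)."""
    return 3.0 * math.sqrt(2.0) * math.cos(2 * math.pi * y) if 0.25 < s < 0.5 else 0.0


c = projection_coefficients(g_example, T=1.0, N=4, M=5)
print(c[1][1])  # coefficient for (n, i) = (2, 2); all other entries vanish up to rounding
```

A function that already lies in the range of \(\mathsf{\Pi}\) is reproduced: its only nonzero coefficient is the one it was built from.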

2.2 Linear elliptic and parabolic operators

Let \(T_{\scriptscriptstyle E}:L^2(D)\rightarrow {\dot{\mathbf{H}}}^2(D)\) be the solution operator of the Dirichlet two-point boundary-value problem: for given \(f\in L^2(D)\) find \(v_{\scriptscriptstyle E}\in {\dot{\mathbf{H}}}^2(D)\), such that \(v_{\scriptscriptstyle E}''=f\) in D, i.e., \(T_{\scriptscriptstyle E}f:=v_{\scriptscriptstyle E}\). It is well known that

and, for \(m\in {\mathbb {N}}_0\), there exists a constant \(C_{\scriptscriptstyle E}^m>0\), such that

Let, also, \(T_{\scriptscriptstyle B}:L^2(D)\rightarrow {\dot{\mathbf{H}}}^4(D)\) be the solution operator of the following Dirichlet biharmonic two-point boundary-value problem: for given \(f\in L^2(D)\) find \(v_{\scriptscriptstyle B}\in {\dot{\mathbf{H}}}^4(D)\), such that

i.e., \(T_{\scriptscriptstyle B}f:=v_{\scriptscriptstyle B}\). It is well known that, for \(m\in {\mathbb {N}}_0\), there exists a constant \(C^{m}_{\scriptscriptstyle B}>0\), such that

Due to the type of boundary conditions of (2.10), we have

which, after using (2.8), yields

Let \(({\mathcal {S}}(t)w_0)_{\scriptscriptstyle t\in [0,T]}\) be the standard semigroup notation for the solution w to (1.4). Then [see Appendix A in Kossioris and Zouraris (2013)], for \(\ell \in {\mathbb {N}}_0\), \(\beta \ge 0\) and \(p\ge 0\), there exists a constant \({\mathcal {C}}_{\beta ,\ell ,\mu ,\mu ^2 T}>0\), such that

for all \(w_0\in {\dot{\mathbf{H}}}^{p+4\ell -2\beta -2}(D)\) and \(t_a\), \(t_b\in [0,T]\) with \(t_b>t_a\).
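In the eigenbasis \((\varepsilon_k)\) the operators \(T_{\scriptscriptstyle E}\) and \(T_{\scriptscriptstyle B}\) are diagonal: \(T_{\scriptscriptstyle E}\) divides the \(k\)-th sine coefficient by \(-\lambda_k^2\) and, assuming (2.10) is \(\partial_x^4 v_{\scriptscriptstyle B}=f\) in \(D\) with \(v_{\scriptscriptstyle B}\in{\dot{\mathbf H}}^4(D)\), \(T_{\scriptscriptstyle B}\) divides it by \(\lambda_k^4\), so that \(T_{\scriptscriptstyle B}=T_{\scriptscriptstyle E}\circ T_{\scriptscriptstyle E}\). The sketch checks this for \(f\equiv 1\), for which the exact solutions are \(x(x-1)/2\) and \((x^4-2x^3+x)/24\), with values \(-1/8\) and \(5/384\) at \(x=1/2\); the truncation level is a hypothetical choice.

```python
import math


def sine_coeffs(f, K: int, n: int = 4000):
    """Midpoint-rule approximations of (f, eps_k)_{0,D}, k = 1..K."""
    h = 1.0 / n
    xs = [(j + 0.5) * h for j in range(n)]
    fx = [f(x) for x in xs]
    return [h * sum(math.sqrt(2.0) * math.sin(k * math.pi * x) * v for x, v in zip(xs, fx))
            for k in range(1, K + 1)]


def apply_TE(c):
    """T_E: v'' = f, v(0) = v(1) = 0  =>  (v, eps_k) = -(f, eps_k) / lambda_k^2."""
    return [-ck / ((k + 1) * math.pi) ** 2 for k, ck in enumerate(c)]


def apply_TB(c):
    """T_B (assumed d^4 v/dx^4 = f, v = v'' = 0 at x = 0, 1): (v, eps_k) = (f, eps_k) / lambda_k^4."""
    return [ck / ((k + 1) * math.pi) ** 4 for k, ck in enumerate(c)]


def evaluate(c, x: float) -> float:
    """Sum of the sine series sum_k c_k eps_k(x)."""
    return sum(ck * math.sqrt(2.0) * math.sin((k + 1) * math.pi * x) for k, ck in enumerate(c))


f_coeffs = sine_coeffs(lambda x: 1.0, K=200)
print(evaluate(apply_TE(f_coeffs), 0.5))            # exact solution value: -1/8
print(evaluate(apply_TB(f_coeffs), 0.5))            # exact solution value: 5/384
print(evaluate(apply_TE(apply_TE(f_coeffs)), 0.5))  # agrees with the line above
```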

2.3 Discrete operators

Let \(r=2\) or 3, and \(\mathsf{M}_h^r\subset H_0^1(D)\cap H^2(D)\) be a finite element space consisting of functions which are piecewise polynomials of degree at most r over a partition of D in intervals with maximum length h. It is well known (cf., e.g., Bramble and Hilbert 1970) that

where \(C_{r}\) is a positive constant that depends on r and D, and is independent of h and v. Then, we define the discrete biharmonic operator \(B_h:\mathsf{M}_h^r\rightarrow \mathsf{M}_h^r\) by \((B_h\varphi ,\chi )_{\scriptscriptstyle 0,D}=(\partial _x^2\varphi ,\partial _x^2\chi )_{\scriptscriptstyle 0,D}\) for \(\varphi ,\chi \in \mathsf{M}_h^r\), the \(L^2(D)\)-projection operator \(P_h:L^2(D)\rightarrow \mathsf{M}_h^r\) by \((P_hf,\chi )_{\scriptscriptstyle 0,D}=(f,\chi )_{\scriptscriptstyle 0,D}\) for \(\chi \in \mathsf{M}_h^r \) and \(f\in L^2(D)\), and the standard Galerkin finite element approximation \(v_{{\scriptscriptstyle B},h}\in \mathsf{M}_h^r\) of the solution \(v_{\scriptscriptstyle B}\) to (2.10) by requiring

Let \(T_{{\scriptscriptstyle B},h}:L^2(D)\rightarrow {\mathsf{M}^r_h}\) be the solution operator of the finite element method (2.16), i.e., \(T_{{\scriptscriptstyle B},h}f:=v_{{\scriptscriptstyle B},h}=B_h^{-1}P_hf\) for all \(f\in L^2(D)\). Then, we can easily conclude that

and

Finally, the approximation property (2.15) of the finite element space \(\mathsf{M}_h^r\) yields (see, e.g., Proposition 2.2 in Kossioris and Zouraris 2010) the following error estimate:

3 An approximation estimate for the canvas problem solution

Here, we establish the convergence of \(\mathsf{u}\) towards u with respect to the \(L^{\infty }_t(L^2_{\scriptscriptstyle P}(L^2_x))\) norm, when \(\Delta {t}\rightarrow 0\) and \(\mathsf{M}\rightarrow \infty \) (cf. Kossioris and Zouraris 2010, 2013).

Theorem 3.1

Let u be the solution to (1.1), \(\mathsf{u}\) be the solution to (1.5), and \(\kappa \in {\mathbb {N}}\), such that \(\kappa ^2\,\pi ^2>\mu \). Then, there exists a constant \({\widehat{c}}_{\scriptscriptstyle \mathrm{CER}}>0\), independent of \(\Delta {t}\) and \(\mathsf{M}\), such that

where \({\mathsf{\Theta }}(t):=\left( {\mathbb {E}}\left[ \Vert u(t,\cdot )-\mathsf{u}(t,\cdot )\Vert _{\scriptscriptstyle 0,D}^2 \right] \right) ^{\frac{1}{2}}\) for \(t\in [0,T]\).

Proof

In the sequel, we will use the symbol C to denote a generic constant that is independent of \(\Delta {t}\) and \(\mathsf{M}\) and may change value from one line to the other.

Using (1.2), (1.9), and Lemma 2.1, we conclude that

for \((t,x)\in [0,T]\times {\overline{D}}\), where \({\widetilde{\mathsf{\Psi }}}: (0,T)\times {D}\rightarrow L^2({\mathcal {O}})\) is given by

for \((s,y)\in \mathsf{T}_n\times {D}\), \(n=1,\dots ,\mathsf{N}\), and for \((t,x)\in (0,T]\times D\). Now, we use (1.3) and the \(L^2(D)\)-orthogonality of \((\varphi _k)_{k=1}^{\infty }\) to obtain

for \((s,y)\in \mathsf{T}_n\times {D}\), \(n=1,\dots ,\mathsf{N}\), and for \((t,x)\in (0,T]\times D\). In addition, we use (3.2), (2.4), and (2.3) to get

where

and

Proceeding as in the proof of Theorem 3.1 in Kossioris and Zouraris (2013), we arrive at

Combining (3) and (3.3) and using the \(L^2(D)\)-orthogonality of \((\varepsilon _k)_{k=1}^{\infty }\) and \((\varphi _k)_{k=1}^{\infty }\), we have

For \(\mathsf{M}\ge \kappa \), using the Cauchy–Schwarz inequality, we obtain

The error bound (3.1) follows by observing that \(\Theta (0)=0\) and by combining the bounds (3.4), (3.5) and (3.6). \(\square \)

4 Deterministic time-discrete and fully discrete approximations

In this section, we define and analyze auxiliary time-discrete and fully discrete approximations of the solution to the deterministic problem (1.4). The results of the convergence analysis will be used in Sect. 5 for the derivation of an error estimate for the numerical approximations of \(\mathsf{u}\) introduced in Sect. 1.2.

4.1 Time-discrete approximations

We define an auxiliary modified-IMEX time-discrete method to approximate the solution w to (1.4), which proceeds as follows: it first sets

and determines \(W^1\in {\dot{\mathbf{H}}}^4(D)\) by

Then, for \(m=2,\dots ,M\), finds \(W^m\in {\dot{\mathbf{H}}}^4(D)\), such that

In the proposition below, we derive a low-regularity a priori error estimate in a discrete in time \(L^2_t(L^2_x)\)-norm.

Proposition 4.1

Let \((W^m)_{m=0}^{\scriptscriptstyle M}\) be the time-discrete approximations defined in (4.1)–(4.3), and w be the solution to the problem (1.4). Then, there exists a constant \(C>0\), independent of \(\Delta \tau \), such that

where \(w^{\ell }(\cdot ):=w(\tau _{\ell },\cdot )\) for \(\ell =0,\dots ,M\).

Proof

In the sequel, we will use the symbol C to denote a generic constant that is independent of \(\Delta \tau \) and may change value from one line to the other.

Let \(\mathsf{E}^m:=w^m-W^m\) for \(m=0,\dots ,M\), and

for \(m=1,\dots ,M\). Thus, combining (1.4), (4.2) and (4.3), we conclude that

First, take the \(L^2(D)\)-inner product of both sides of (4.5) with \(\mathsf{E}^1\) and of (4.6) with \(\mathsf{E}^m\), and then use (2.13) to obtain

for \(m=2,\dots ,M\). Then, using that \(\mathsf{E}^0=0\) and applying (2.5) along with the arithmetic mean inequality, we get

Observing that (4.8) yields

we use a standard discrete Gronwall argument to arrive at

Summing both sides of (4.8) with respect to m, from 2 up to M, we obtain

which, along with (4.9), yields

Using (4.7), (2.8), the Cauchy–Schwarz inequality and the arithmetic mean inequality, we have

which, finally, yields

Next, we use the Cauchy–Schwarz inequality and (2.9) to get

Finally, we use (4.10), (4.11), (4.12), and (2.14) (with \(\beta =0\), \(\ell =1\), and \(p=0\)) to obtain

which establishes (4.4) for \(\theta =1\).

From (4.2), (4.3), and (2.12), it follows that:

Taking the \(L^2(D)\)-inner product of both sides of the first equation above with \(W^1\) and of the second one with \(W^m\), and then applying (2.13), (2.5) and the arithmetic mean inequality, we obtain

The inequalities (4.13) and (4.14) easily yield

from which, after the use of a standard discrete Gronwall argument, we arrive at

We sum both sides of (4.14) with respect to m, from 2 up to M, and then use (4.15), to have

Thus, using (4.16), (4.13), (4.1), (2.9), and (2.2), we obtain

In addition, we have

which, along with (2.14) (with \((\beta ,\ell ,p)=(0,0,0)\) and \((\beta ,\ell ,p)=(2,1,0)\)), yields

Thus, (4.17) and (4.18) establish (4.4) for \(\theta =0\).

Finally, the estimate (4.4) follows by interpolation, since it is valid for \(\theta =1\) and \(\theta =0\). \(\square \)

We close this section by deriving, for later use, the following a priori bound.

Lemma 4.1

Let \((W^m)_{m=0}^{\scriptscriptstyle M}\) be the time-discrete approximations defined by (4.1)–(4.3). Then, there exists a constant \(C>0\), independent of \(\Delta \tau \), such that

Proof

In the sequel, we will use the symbol C to denote a generic constant that is independent of \(\Delta \tau \) and may change value from one line to the other.

Taking the \((\cdot ,\cdot )_{\scriptscriptstyle 0,D}\)-inner product of (4.3) with \(\partial _x^2W^m\) and of (4.2) with \(\partial _x^2W^1\), and then integrating by parts, we obtain

for \(m=2,\dots ,M\). Using (2.5) and the arithmetic mean inequality, from (4.20) and (4.21), it follows that:

Now, (4.23) and (4.22) easily yield

which, after a standard induction argument, leads to

After summing both sides of (4.23) with respect to m, from 2 up to M, we obtain

which, after using (4.24), yields

Finally, we combine (4.25), (4.22), and (2.1) to get

which easily yields (4.19). \(\square \)

4.2 Fully discrete approximations

The modified-IMEX time-stepping method along with a finite element space discretization yields a fully discrete method for the approximation of the solution to the deterministic problem (1.4). The method begins by setting

and specifying \(W_h^1\in \mathsf{M}_h^r\), such that

Then, for \(m=2,\dots ,M\), it finds \(W_h^m\in \mathsf{M}_h^r\), such that

Adopting the viewpoint that the fully discrete approximations defined above are approximations of the time-discrete ones defined in the previous section, we estimate below the corresponding approximation error in a discrete in time \(L^2_t(L^2_x)\)-norm.

Proposition 4.2

Let \(r=2\) or 3, \((W^m)_{m=0}^{\scriptscriptstyle M}\) be the time-discrete approximations defined by (4.1)–(4.3), and \((W_h^m)_{m=0}^{\scriptscriptstyle M}\subset \mathsf{M}_h^r\) be the fully discrete approximations specified in (4.26)–(4.28). Then, there exists a constant \(C>0\), independent of \(\Delta \tau \) and h, such that

Proof

In the sequel, we will use the symbol C to denote a generic constant which is independent of \(\Delta \tau \) and h, and may change value from one line to the other.

Let \(\mathsf{Z}^m:=W^m-W_h^m\) for \(m=0,\dots ,M\). Then, from (4.2), (4.3), (4.27), and (4.28), we obtain the following error equations:

where

Taking the \(L^2(D)\)-inner product of both sides of (4.31) with \(\mathsf{Z}^m\), we obtain

which, along with (2.17) and (2.5), yields

for \(m=2,\dots ,M\), where

Using (2.17), integration by parts, the Cauchy–Schwarz inequality, and the arithmetic mean inequality, we have

and

Now, we combine (4.33), (4.34) and (4.35) to get

for \(m=2,\dots ,M\). Let \(\Upsilon ^{\ell }:=\Vert \partial _x^2(T_{\scriptscriptstyle B,h}\mathsf{Z}^{\ell })\Vert _{\scriptscriptstyle 0,D}^2+\Delta \tau \,\Vert \mathsf{Z}^{\ell }\Vert _{\scriptscriptstyle 0,D}^2\) for \(\ell =1,\dots ,M\). Then, (4.36) yields

from which, after applying a standard discrete Gronwall argument, we conclude that

Since \(T_{\scriptscriptstyle B,h}\mathsf{Z}^0=0\), after taking the \(L^2(D)\)-inner product of both sides of (4.30) with \(\mathsf{Z}^1\), and then, using (2.17) and the arithmetic mean inequality, we obtain

which, along with (4.37), yields

Now, summing both sides of (4.36) with respect to m, from 2 up to M, we obtain

which, along with (4.39), yields

Combining (4.40), (4.32), (2.19), and (4.19), we obtain

Thus, (4.41) yields (4.29) for \(\theta =1\).

From (4.27) and (4.28), we conclude that

Taking the \(L^2(D)\)-inner product of both sides of the first equation above with \(W_h^1\) and of the second one with \(W_h^m\), and then, applying (2.17) and (2.5), we obtain

where

Using (2.17), integration by parts, the Cauchy–Schwarz inequality, and the arithmetic mean inequality, we have

Combining (4.43) and (4.44), we arrive at

Let \(\Upsilon _h^{\ell }:=\Vert \partial _x^2(T_{\scriptscriptstyle B,h}W_h^{\ell })\Vert _{\scriptscriptstyle 0,D}^2 +\Delta \tau \,\Vert W_h^{\ell }\Vert _{\scriptscriptstyle 0,D}^2\) for \(\ell =1,\dots ,M\). Then, we use (4.42), (4.26), (2.18), (2.2), and (4.45) to obtain

and

From (4.47), after the application of a standard discrete Gronwall argument and the use of (4.46), we conclude that

Summing both sides of (4.45) with respect to m, from 2 up to M, we have

which, along with (4.48), yields

Thus, (4.49) and (4.17) yield (4.29) for \(\theta =0\).

Finally, the error estimate (4.29) follows by interpolation, since it holds for \(\theta =1\) and \(\theta =0\). \(\square \)

5 Convergence analysis of the IMEX finite element method

To estimate the approximation error of the IMEX finite element method given in Sect. 1.2, we use, as a tool, the corresponding IMEX time-discrete approximations of \(\mathsf{u}\), which are defined first by setting

and then, for \(m=1,\dots ,M\), by seeking \(\mathsf{U}^m\in {\dot{\mathbf{H}}}^4(D)\), such that

Thus, we split the total error of the IMEX finite element method as follows:

where \(\mathsf{u}^m:=\mathsf{u}(\tau _m,\cdot )\), \({\mathcal {E}}^m_{{\scriptscriptstyle \mathrm TDR}}:= \left( {\mathbb {E}}\left[ \Vert \mathsf{u}^m-\mathsf{U}^m\Vert _{\scriptscriptstyle 0,D}^2 \right] \right) ^{\scriptscriptstyle 1/2}\) is the time-discretization error at \(\tau _m\), and \({\mathcal {E}}_{{\scriptscriptstyle \mathrm SDR}}^m:= \left( {\mathbb {E}}\left[ \Vert \mathsf{U}^m-\mathsf{U}^m_h\Vert _{\scriptscriptstyle 0,D}^2 \right] \right) ^{\scriptscriptstyle 1/2}\) is the space-discretization error at \(\tau _m\).

5.1 Estimating the time-discretization error

The convergence estimate of Proposition 4.1 is the main tool in providing a discrete in time \(L^{\infty }_t(L^2_{\scriptscriptstyle P}(L^2_x))\) error estimate of the time-discretization error (cf. Yan 2005; Kossioris and Zouraris 2010, 2013).

Proposition 5.1

Let \(\mathsf{u}\) be the solution to (1.5) and \((\mathsf{U}^m)_{m=0}^{\scriptscriptstyle M}\) be the time-discrete approximations of \(\mathsf{u}\) defined by (5.1)–(5.2). Then, there exists a constant \({\widehat{c}}_{{\scriptscriptstyle \mathrm TDR}}\), independent of \(\Delta {t}\), \(\mathsf{M}\) and \(\Delta \tau \), such that

Proof

In the sequel, we will use the symbol C to denote a generic constant that is independent of \(\Delta {t}\), \(\mathsf{M}\), and \(\Delta \tau \), and may change value from one line to the other.

First, we introduce some notation by letting \(\mathsf{I}:L^2(D)\rightarrow L^2(D)\) be the identity operator, \(\mathsf{Y}:H^2(D)\rightarrow L^2(D)\) be the differential operator \(\mathsf{Y}:=\mathsf{I}-\Delta \tau \,\mu \,\partial _x^2\), and \(\mathsf{\Lambda }:L^2(D)\rightarrow {\dot{\mathbf{H}}}^4(D)\) be the inverse elliptic operator \(\mathsf{\Lambda }:=(\mathsf{I}+\Delta \tau \,\partial _x^4)^{-1}\). Then, for \(m=1,\dots ,M\), we define the operator \(\mathsf{Q}^m:L^2(D)\rightarrow {\dot{\mathbf{H}}}^4(D)\) by \(\mathsf{Q}^m:=(\mathsf{\Lambda }\circ \mathsf{Y})^{m-1}\circ \mathsf{\Lambda }\). In addition, for given \(w_0\in {\dot{\mathbf{H}}}^2(D)\), let \(({\mathcal {S}}_{\scriptscriptstyle {\Delta \tau }}^m(w_0))_{m=0}^{\scriptscriptstyle M}\) be time-discrete approximations of the solution to the deterministic problem (1.4), defined by (4.1)–(4.3). Then, using a simple induction argument, we conclude that

Let \(m\in \{1,\dots ,M\}\). Applying a simple induction argument on (5.2), we conclude that

which, along with (1.6) and (5.5), yields

In addition, using (1.9) and (1.6), and proceeding in similar manner, we arrive at

Thus, using (5.6) and (5.7) along with (1.8), we obtain

which easily yields

with

Proceeding as in the proof of Theorem 4.1 in Kossioris and Zouraris (2013), we get

In addition, using the error estimate (4.4), it follows that:

Setting \(\theta =\tfrac{1}{8}-\epsilon \) with \(\epsilon \in \left( 0,\tfrac{1}{8}\right] \), we have

Thus, the estimate (5.4) follows easily from (5.8), (5.9), and (5.10). \(\square \)
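The simple induction argument applied to (5.2) is a discrete Duhamel principle. Assuming (our reading, since (5.2) is not reproduced here) that one step has the form \(\mathsf{U}^m=\mathsf{\Lambda}\mathsf{Y}\,\mathsf{U}^{m-1}+\Delta\tau\,\mathsf{\Lambda}F^m\) for a load \(F^m\), induction gives \(\mathsf{U}^m=(\mathsf{\Lambda}\circ\mathsf{Y})^m\mathsf{U}^0+\Delta\tau\sum_{\ell=1}^m\mathsf{Q}^{m-\ell+1}F^\ell\). On the mode \(\varepsilon_k\), \(\mathsf{\Lambda}\) and \(\mathsf{Y}\) act as the scalars \((1+\Delta\tau\,\lambda_k^4)^{-1}\) and \(1+\Delta\tau\,\mu\,\lambda_k^2\), so the identity can be checked numerically with hypothetical load values.

```python
import math
import random


def mode_symbols(k: int, dtau: float, mu: float):
    """Scalars by which Lambda and Y act on eps_k."""
    s = (k * math.pi) ** 2
    lam_sym = 1.0 / (1.0 + dtau * s * s)  # Lambda = (I + dtau d_x^4)^{-1}
    y_sym = 1.0 + dtau * mu * s           # Y = I - dtau mu d_x^2
    return lam_sym, y_sym


def by_recursion(k, dtau, mu, u0, loads):
    """Iterate U^m = Lambda Y U^{m-1} + dtau Lambda F^m (assumed form of (5.2)) on mode k."""
    lam_sym, y_sym = mode_symbols(k, dtau, mu)
    u = u0
    for f in loads:
        u = lam_sym * y_sym * u + dtau * lam_sym * f
    return u


def by_duhamel(k, dtau, mu, u0, loads):
    """(Lambda Y)^m U^0 + dtau sum_{ell=1}^m Q^{m-ell+1} F^ell, Q^j = (Lambda Y)^{j-1} Lambda."""
    lam_sym, y_sym = mode_symbols(k, dtau, mu)
    m = len(loads)

    def q(j):
        return (lam_sym * y_sym) ** (j - 1) * lam_sym

    return (lam_sym * y_sym) ** m * u0 + dtau * sum(
        q(m - ell + 1) * loads[ell - 1] for ell in range(1, m + 1))


rng = random.Random(7)
loads = [rng.gauss(0.0, 1.0) for _ in range(50)]  # hypothetical mode-k load values F^1..F^50
for k in (1, 2, 5):
    print(k, by_recursion(k, 0.02, 3.0, 0.4, loads), by_duhamel(k, 0.02, 3.0, 0.4, loads))
```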

5.2 Estimating the space-discretization error

The outcome of Proposition 4.2 will be used below in the derivation of a discrete in time \(L^{\infty }_t(L^2_{\scriptscriptstyle P}(L^2_x))\) error estimate of the space-discretization error (cf. Yan 2005; Kossioris and Zouraris 2010, 2013).

Proposition 5.2

Let \(r=2\) or 3, \((\mathsf{U}_h^m)_{m=0}^{\scriptscriptstyle M}\) be the fully discrete approximations defined by (1.10)–(1.11) and \((\mathsf{U}^m)_{m=0}^{\scriptscriptstyle M}\) be the time-discrete approximations defined by (5.1)–(5.2). Then, there exists a constant \({\widehat{c}}_{{\scriptscriptstyle \mathrm SDR}}>0\), independent of \(\mathsf{M}\), \(\Delta {t}\), \(\Delta \tau \) and h, such that

Proof

In the sequel, we will use the symbol C to denote a generic constant that is independent of \(\Delta {t}\), \(\mathsf{M}\), \(\Delta \tau \), and h, and may change value from one line to the other.

Let us denote by \(\mathsf{I}:L^2(D)\rightarrow L^2(D)\) the identity operator, by \(\mathsf{Y}_h:\mathsf{M}_h^r\rightarrow \mathsf{M}_h^r\) the discrete differential operator \(\mathsf{Y}_h:=\mathsf{I}-\mu \,\Delta \tau \,(P_h\circ \partial _x^2)\), and by \(\mathsf{\Lambda }_h:L^2(D)\rightarrow \mathsf{M}^r_h\) the inverse discrete elliptic operator \(\mathsf{\Lambda }_h:=(\mathsf{I}+\Delta \tau \,B_h)^{-1}\circ P_h\). Then, for \(m=1,\dots ,M\), we define the auxiliary operator \(\mathsf{Q}_h^m:L^2(D)\rightarrow \mathsf{M}_h^r\) by \(\mathsf{Q}^m_h:=(\mathsf{\Lambda }_h\circ \mathsf{Y}_h)^{m-1}\circ \mathsf{\Lambda }_h\). In addition, for given \(w_0\in {\dot{\mathbf{H}}}^2(D)\), let \(({\mathcal {S}}_{h}^m(w_0))_{m=0}^{\scriptscriptstyle M}\) be fully discrete approximations of the solution to the deterministic problem (1.4), defined by (4.26)–(4.28). Then, using a simple induction argument, we conclude that

Let \(m\in \{1,\dots ,M\}\). Applying a simple induction argument to (1.11), (1.6), and (5.12), we conclude that

Next, using (5.13), (5.6), and Remark 1.8, and proceeding as in the proof of Proposition 5.1, we arrive at

which, along with (4.29), yields

Setting \(\theta =\tfrac{1}{6}-\delta \) with \(\delta \in \left( 0,\tfrac{1}{6}\right] \), we have

which obviously yields (5.11) with \(\epsilon =r\delta \). \(\square \)
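The operators \(\mathsf{Y}_h\), \(\mathsf{\Lambda }_h\), and \(\mathsf{Q}_h^m\) enter the proof through an induction argument. Unrolling a one-step recursion of the form \(U^m=\mathsf{\Lambda }_h(\mathsf{Y}_h U^{m-1}+F^m)\) gives \(U^m=(\mathsf{\Lambda }_h\circ \mathsf{Y}_h)^m U^0+\sum _{\ell =1}^m \mathsf{Q}_h^{m-\ell +1}F^\ell \). The following minimal sketch checks this identity numerically. It rests on several assumptions. The step form with a generic forcing \(F^m\) is hypothetical and is not the scheme (1.10)–(1.11) itself. The finite element operators are replaced by finite-difference stand-ins: a second-difference matrix plays the role of \(P_h\circ \partial _x^2\), and its square plays the role of \(B_h\). All function names are illustrative.

```python
import numpy as np


def second_difference(n: int, dx: float) -> np.ndarray:
    """Finite-difference stand-in for d^2/dx^2 with homogeneous Dirichlet data."""
    main = np.diag(-2.0 * np.ones(n))
    off = np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)
    return (main + off) / dx ** 2


def imex_operators(n: int, mu: float, dtau: float):
    """Stand-ins for Y_h := I - mu*dtau*(P_h o d_x^2) and Lambda_h := (I + dtau*B_h)^{-1}."""
    dx = 1.0 / (n + 1)
    L = second_difference(n, dx)        # plays the role of P_h o d_x^2
    B = L @ L                           # plays the role of the discrete operator B_h
    I = np.eye(n)
    Y = I - mu * dtau * L
    Lam = np.linalg.inv(I + dtau * B)
    return Y, Lam


def Q(m: int, Y: np.ndarray, Lam: np.ndarray) -> np.ndarray:
    """Q^m := (Lambda o Y)^{m-1} o Lambda."""
    return np.linalg.matrix_power(Lam @ Y, m - 1) @ Lam


def iterate(U0, F, Y, Lam):
    """Apply U^m = Lambda (Y U^{m-1} + F^m); row F[k] plays the role of F^{k+1}."""
    U = U0.copy()
    for Fm in F:
        U = Lam @ (Y @ U + Fm)
    return U


def closed_form(U0, F, Y, Lam):
    """Unrolled form: (Lambda Y)^m U^0 + sum_{l=1}^{m} Q^{m-l+1} F^l."""
    m = len(F)
    U = np.linalg.matrix_power(Lam @ Y, m) @ U0
    for ell in range(1, m + 1):
        U = U + Q(m - ell + 1, Y, Lam) @ F[ell - 1]
    return U


rng = np.random.default_rng(0)
Y, Lam = imex_operators(n=20, mu=1.0, dtau=1e-3)
U0 = rng.standard_normal(20)
F = rng.standard_normal((5, 20))
print(np.allclose(iterate(U0, F, Y, Lam), closed_form(U0, F, Y, Lam)))
```

Only the fourth-order part is inverted in each step, while the second-order part acts explicitly through \(\mathsf{Y}_h\). This reflects the implicit/explicit splitting described in the abstract.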

5.3 Estimating the total error

Theorem 5.3

Let \(r=2\) or 3, let \(\mathsf{u}\) be the solution to the problem (1.5), and let \((\mathsf{U}_h^m)_{m=0}^{\scriptscriptstyle M}\) be the finite element approximations of \(\mathsf{u}\) constructed by (1.10)–(1.11). Then, there exists a constant \({\widehat{c}}_{\scriptscriptstyle \mathrm{TTL}}>0\), independent of h, \(\Delta \tau \), \(\Delta {t}\), and \(\mathsf{M}\), such that

for all \(\epsilon _1\in \left( 0,\tfrac{1}{8}\right] \) and \(\epsilon _2\in \left( 0,\frac{r}{6}\right] \).

Proof

The error bound (5.15) follows easily from (5.4), (5.11), and (5.3). \(\square \)
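A common way to compare an a priori bound such as (5.15) with numerical experiments is to compute observed convergence orders from errors measured at successive refinement levels. The following minimal sketch uses a hypothetical helper, `observed_orders`. It does not depend on the precise form of (5.15). It only assumes that, with the other discretization parameters held fixed, the measured error behaves like \(C\,h^p\) over the tested range of \(h\). The resulting \(p\) can then be compared with the exponents appearing in (5.15).

```python
import math


def observed_orders(hs, errors):
    """Observed orders log(e_k / e_{k+1}) / log(h_k / h_{k+1}) between consecutive levels."""
    if len(hs) != len(errors) or len(hs) < 2:
        raise ValueError("need at least two (h, error) pairs of equal length")
    return [
        math.log(errors[k] / errors[k + 1]) / math.log(hs[k] / hs[k + 1])
        for k in range(len(hs) - 1)
    ]


# Synthetic errors behaving exactly like h**0.5, used only to illustrate the call.
hs = [0.1, 0.05, 0.025]
print(observed_orders(hs, [h ** 0.5 for h in hs]))
```

For stochastic problems the errors are themselves Monte Carlo estimates, so the observed orders carry sampling noise and should be read with that in mind.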

References

Allen EJ, Novosel SJ, Zhang Z (1998) Finite element and difference approximation of some linear stochastic partial differential equations. Stoch Stoch Rep 64:117–142

Bramble JH, Hilbert SR (1970) Estimation of linear functionals on Sobolev spaces with application to Fourier transforms and spline interpolation. SIAM J Numer Anal 7:112–124

Debussche A, Zambotti L (2007) Conservative stochastic Cahn–Hilliard equation with reflection. Ann Probab 35:1706–1739

Dunford N, Schwartz JT (1988) Linear operators. Part II. Spectral theory. Self adjoint operators in Hilbert space. Wiley, New York (Reprint of the 1963 original, Wiley Classics Library, A Wiley-Interscience Publication)

Kallianpur G, Xiong J (1995) Stochastic differential equations in infinite dimensional spaces. Lecture notes-monograph series, vol 26. Institute of Mathematical Statistics, Hayward

Kossioris GT, Zouraris GE (2010) Fully-discrete finite element approximations for a fourth-order linear stochastic parabolic equation with additive space-time white noise. Math Model Numer Anal 44:289–322

Kossioris GT, Zouraris GE (2013) Finite element approximations for a linear Cahn–Hilliard–Cook equation driven by the space derivative of a space-time white noise. Discrete Contin Dyn Syst Ser B 18:1845–1872

Lions JL, Magenes E (1972) Non-homogeneous boundary value problems and applications, vol I. Springer, Berlin

Lord GJ, Powell CE, Shardlow T (2014) An introduction to computational stochastic PDEs, Cambridge texts in applied mathematics. Cambridge University Press, New York

Thomée V (1997) Galerkin finite element methods for parabolic problems, Springer series in computational mathematics, vol 25. Springer, Berlin

Walsh JB (1986) An introduction to stochastic partial differential equations. Lecture notes in mathematics, vol 1180. Springer, Berlin, pp 265–439

Yan Y (2005) Galerkin finite element methods for stochastic parabolic partial differential equations. SIAM J Numer Anal 43:1363–1384

Acknowledgements

Work partially supported by The Research Committee of The University of Crete under Research Grant #4339: ‘Numerical solution of stochastic partial differential equations’ funded by The Research Account of the University of Crete (2015–2016).

Additional information

Communicated by Jorge Zubelli.

About this article

Cite this article

Zouraris, G.E. An IMEX finite element method for a linearized Cahn–Hilliard–Cook equation driven by the space derivative of a space–time white noise. Comp. Appl. Math. 37, 5555–5575 (2018). https://doi.org/10.1007/s40314-018-0650-2

DOI: https://doi.org/10.1007/s40314-018-0650-2

Keywords

- Finite element method

- Space derivative of a space–time white noise

- Spectral representation of the noise

- Implicit/explicit time-stepping

- Fully discrete approximations

- A priori error estimates