Abstract

In this paper, we present an approximate approach based on the Galerkin method for solving a class of nonlinear fractional differential algebraic equations. The fractional derivative operator is taken in the Caputo sense, and the generalized Jacobi functions are employed as trial functions. An existence and uniqueness theorem, as well as the asymptotic behavior of the exact solution, is provided. It is shown that some derivatives of the solutions typically have a singularity at the origin that depends on the order of the fractional derivative. The influence of perturbed data on the exact solutions and the convergence analysis of the proposed scheme are also established. Some illustrative examples are provided to demonstrate that this novel scheme is computationally efficient and accurate.


1 Introduction

Fractional differential algebraic equations have recently proved to be a useful tool for modeling various physical problems such as electrochemical processes, non-integer order optimal controller design and complex biochemical processes (Damarla and Kundu 2015). Numerical methods for solving functional differential equations of fractional order have recently received considerable attention from many authors (Babaei and Banihashemi 2017; Bhrawy and Zaky 2016a, b; Dabiri and Butcher 2016, 2017a, b; Dabiri et al. 2016, 2018; Ghoreishi and Mokhtary 2014; Keshi et al. 2018; Mokhtary 2016a; Mokhtary and Ghoreishi 2014a; Mokhtary 2015, 2016b, 2017; Mokhtary and Ghoreishi 2011, 2014b; Mokhtary et al. 2016; Moghaddam and Aghili 2012; Moghaddam and Machado 2017a, b; Moghaddam et al. 2017a, b; Pedas et al. 2016; Taghavi et al. 2017; Zaky 2017). Significantly less attention has been paid to fractional differential algebraic equations (Damarla and Kundu 2015; Ding and Jiang 2014; İbis et al. 2011; İbis and Bayram 2011; Jaradat et al. 2014; Zurigat et al. 2010). In particular, very little work has addressed crucial issues such as the asymptotic behavior and smoothness of the exact solutions, or the design of an easily implemented numerical technique with strong convergence properties. These gaps motivate us to develop and analyze an effective numerical method for solving the fractional differential algebraic equations

where \(f, g: I \times \mathbb {R}\times \mathbb {R} \rightarrow \mathbb {R}\) are given continuous functions and x(t), y(t) are the exact solutions of the problem. The fractional derivative operator \(^C_0D_t^\alpha \) is understood in the left Caputo sense and is defined by (Diethelm 2010; Podlubny 1999)

\[ ^C_0D_t^{\alpha }x(t)={}_0I_t^{1-\alpha }x'(t), \]

where

\[ _0I_t^{1-\alpha }x(t)=\frac{1}{\Gamma (1-\alpha )}\int _0^t (t-s)^{-\alpha }x(s)\,\mathrm {d}s \]

is the left fractional integral operator of order \(1-\alpha \). Here, \(\mathbb R\) and \(\Gamma {(.)}\) are the set of all real numbers and the Gamma function, respectively. Properties of the operators \(^C_0D_t^\alpha \) and \(_0I_t^\alpha \) can be found in Diethelm (2010) and Podlubny (1999). We also recall the following relations

In this paper, we design our methodology based on the Galerkin method, which represents the approximate solutions of (1) by a truncated series expansion such that the residual function is minimized in a certain way (Canuto et al. 2006; Hesthaven et al. 2007; Shen et al. 2006). Moreover, we discuss the existence, uniqueness, smoothness and well-posedness of the solutions of (1). We prove that some derivatives of the exact solutions typically suffer from a discontinuity at the origin, so that representing the Galerkin solution of (1) as a linear combination of smooth basis functions leads to a numerical method with poor convergence. To avoid this drawback, we employ as trial functions the generalized Jacobi functions introduced by Chen et al. (2016). These functions are orthogonal with respect to a suitable weight function and enable us to produce a Galerkin approximation with the same asymptotic behavior as the exact solution, which is essential for achieving high accuracy.

The article is organized as follows. The next section is devoted to some preliminaries and definitions that are used in the sequel. In Sect. 3, the existence and uniqueness theorem for (1) as well as its regularity and well-posedness properties are discussed. In Sect. 4, we explain the numerical treatment of the problem. The convergence analysis of the proposed scheme is established in Sect. 5. In Sect. 6, we present numerical results that confirm the effectiveness of the proposed scheme, and finally, in Sect. 7, we give our concluding remarks.

2 Preliminaries

In this section, we review the basic definitions and properties that are required in the rest of the paper. Throughout the paper C and \(C_i\) are generic positive constants independent of the approximation degree N. First we define the shifted generalized Jacobi functions on I that will be used as the basis functions in the Galerkin solution of (1). To this end, we denote the shifted generalized Jacobi functions on I by \(P^{\delta ,-\beta }_{n}(t),~n \ge 0\) and define

where \(J_{n}^{\delta ,\beta }(t)\) is the shifted Jacobi polynomial on I with real parameters (Chen et al. 2016). It can be verified that \(\{P_n^{\delta ,-\beta }(t)\}_{n \ge 0}\) are orthogonal for \(\delta , \beta >-1\) in the following sense

where \(w^{\delta ,-\beta }(t)=2^{\delta -\beta }(1-t)^{\delta } t^{-\beta }\) is the shifted weight function on I (Chen et al. 2016).
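The orthogonality relation can be checked numerically. The sketch below assumes, as our reading of Chen et al. (2016), that \(P_n^{\delta ,-\beta }(t)=t^{\beta }J_n^{\delta ,\beta }(t)\) up to a constant factor, with \(J_n^{\delta ,\beta }(t)\) the classical Jacobi polynomial evaluated at \(2t-1\). The constant \(2^{\delta -\beta }\) in the weight is dropped because it does not affect orthogonality. The helper names (`gbinom`, `beta_fn`, `jacobi_terms`, `gjf_inner`) are ours. Each inner product is reduced to Euler Beta integrals, so no quadrature is needed.

```python
from math import gamma


def gbinom(z, k):
    """Generalized binomial coefficient C(z, k) for real z and integer k >= 0."""
    return gamma(z + 1.0) / (gamma(k + 1.0) * gamma(z - k + 1.0))


def beta_fn(p, q):
    """Euler Beta function B(p, q) = int_0^1 t^(p-1) (1-t)^(q-1) dt."""
    return gamma(p) * gamma(q) / gamma(p + q)


def jacobi_terms(n, delta, beta):
    """Coefficients c_s such that J_n(t) = sum_s c_s (t-1)^s t^(n-s), 0 <= s <= n."""
    return [gbinom(n + delta, n - s) * gbinom(n + beta, s) for s in range(n + 1)]


def gjf_inner(n, m, delta, beta):
    """Inner product of t^beta J_n and t^beta J_m with weight (1-t)^delta t^(-beta).

    The integrand reduces to t^beta (1-t)^delta J_n(t) J_m(t), which is
    integrated term by term with Beta functions.
    """
    cn = jacobi_terms(n, delta, beta)
    cm = jacobi_terms(m, delta, beta)
    total = 0.0
    for s, a in enumerate(cn):
        for r, b in enumerate(cm):
            # (t-1)^(s+r) = (-1)^(s+r) (1-t)^(s+r)
            sign = -1.0 if (s + r) % 2 else 1.0
            p = beta + (n - s) + (m - r) + 1.0   # exponent of t, plus one
            q = delta + s + r + 1.0              # exponent of (1-t), plus one
            total += sign * a * b * beta_fn(p, q)
    return total
```

For instance, `gjf_inner(0, 1, 0.0, 0.5)` vanishes up to rounding, while `gjf_inner(0, 0, 0.0, 0.5)` equals \(\int _0^1 t^{1/2}\,dt=2/3\).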

Now, let \(\mathcal {F}_{N}^{\delta ,-\beta }(I)\) be the finite-dimensional space

and define the orthogonal projection \(\Pi _N^{\delta ,-\beta }u \in \mathcal {F}_N^{\delta ,-\beta }(I)\) for \(\beta>0,~\delta >-1\) as

which can be represented by

with \(\Vert P_n^{\delta ,-\beta }\Vert _{\delta ,-\beta }^2:=\big (P_n^{\delta ,-\beta },P_n^{\delta ,-\beta }\big )_{\delta ,-\beta }\) as the weighted \(L_{\delta ,-\beta }^2\)-norm of \(P_n^{\delta ,-\beta }(t)\) on I. For simplicity, we use the symbol \((L^2(I),\Vert .\Vert )\) when \(\delta =\beta =0\). To characterize the truncation error bound of \(\Pi _N^{\delta ,-\beta }u\), we introduce the weighted space (Chen et al. 2016)

and give the following lemma.

Lemma 2.1

(Chen et al. 2016) Assume that for \(\delta>-1,~\beta >0\) and a fixed number \(m \in \mathbb N_0\) we have \(u \in B_{\delta ,\beta }^{m}(I)\). Then

Note that in view of \(P_n^{\delta ,-\beta }(0)=0\) for \(\delta>-1,~\beta >0\), the functions \(\{P_n^{\delta , -\beta }(t);~~n \ge 0\}\) are suitable basis functions for the Galerkin solution of functional equations with homogeneous initial conditions.

We also recall the Legendre Gauss interpolation operator \(I_N^t\) for any function u(t), defined on I as

where \(\{L_n(t)\}_{n \ge 0}\) are the shifted Legendre polynomials on I and \(\big (u,L_n\big )_N\) is the discrete Legendre Gauss inner product defined by

where \(\{t_i,w_i\}_{i=0}^{N}\) are the shifted Legendre Gauss nodal points and corresponding weights over I, respectively (Canuto et al. 2006; Hesthaven et al. 2007; Shen et al. 2006). To provide an error estimate for the interpolation approximation of the function u(t), we define the non-uniformly weighted Sobolev space \(W^m(I)\) by (Mokhtary 2015; Shen et al. 2006)

and give the following lemma.

Lemma 2.2

(Mokhtary 2015; Shen et al. 2006) Assume that \(I_N^t(u)\) is the Legendre Gauss interpolation approximation of the function u(t). Then for any \(u(t) \in W^{m}(I)\) with \(m \ge 1\) we have

3 Existence and uniqueness theorem and influence of perturbed data

In this section, we provide an existence and uniqueness theorem for the exact solution of (1) and give its regularity properties. We also discuss the behavior of the solution under perturbations in the given data. First we give two theorems which will be used in our analysis.

Theorem 3.1

(Zhang and Ge 2011) Assume that \(H:I \times \mathbb {R} \times \mathbb {R} \rightarrow \mathbb {R}\) is continuously differentiable and there exists a positive constant d such that \(\big |\frac{\partial }{\partial y}H(t,x,y)\big |>d>0\) for all \((t,x,y) \in I \times \mathbb R \times \mathbb R\). Then there exists a unique continuously differentiable function \(h:I \times \mathbb R \rightarrow \mathbb R\) such that \(H(t,x,h(t,x))=0\).
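In practice, the function h of Theorem 3.1 is rarely available in closed form, but its values can be computed pointwise. The following minimal sketch uses a plain Newton iteration in y at fixed (t, x). The constraint `g` below is a hypothetical example of ours, chosen so that \(\partial g/\partial y=2+\cos y\ge 1\), and `solve_constraint` is an illustrative helper, not part of the cited theorem.

```python
from math import sin, cos


def g(t, x, y):
    """Hypothetical constraint; dg/dy = 2 + cos(y) >= 1 > 0 for all (t, x, y)."""
    return 2.0 * y + sin(y) - x - t


def dg_dy(t, x, y):
    """Partial derivative of g with respect to y."""
    return 2.0 + cos(y)


def solve_constraint(t, x, y0=0.0, tol=1e-13, max_iter=50):
    """Newton iteration for the value y = h(t, x) satisfying g(t, x, y) = 0."""
    y = y0
    for _ in range(max_iter):
        step = g(t, x, y) / dg_dy(t, x, y)
        y -= step
        if abs(step) < tol:
            return y
    raise RuntimeError("Newton iteration did not converge")
```

Because the derivative is bounded away from zero, each Newton step is well defined; a damped or bracketed iteration could be substituted if the starting guess is poor.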

Theorem 3.2

(Diethelm 2010) Let the function \(F:I \times \mathbb {R} \rightarrow \mathbb {R}\) be continuous and satisfy a Lipschitz condition with respect to its second variable, i.e., we have

for all real-valued functions u(t) and v(t) on I. Then, the fractional differential equation

has a unique continuous solution. Moreover, if \(F \in C^v(I\times \mathbb {R})\) for \(v\ge 0\), we have \(u(t) \in C^{v}(0,1]\cap C(I)\) with \(u'(t)=O(t^{\alpha -1})\) as \(t\rightarrow 0^+\). Here, C(I) is the space of all continuous functions on I and \(C^v(I):=\{u(t)|~u^{(v)} \in C(I),~~v \ge 0\}\).
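A simple worked instance, given here as our own illustration for \(0<\alpha <1\), makes the endpoint behavior concrete. Taking \(F\equiv 1\) with a homogeneous initial value and applying the fractional integral of order \(\alpha \) gives a solution that is continuous on I but whose first derivative blows up at the origin:

```latex
\[
{}^C_0D_t^{\alpha}u(t)=1,\quad u(0)=0
\;\Longrightarrow\;
u(t)={}_0I_t^{\alpha}1=\frac{t^{\alpha}}{\Gamma(\alpha+1)},
\qquad
u'(t)=\frac{t^{\alpha-1}}{\Gamma(\alpha)}=O(t^{\alpha-1}),\quad t\rightarrow 0^+ .
\]
```

Even for this smooth right-hand side, \(u \notin C^1(I)\), which is the behavior described in the theorem.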

Now, we are ready to prove the existence and uniqueness theorem of the solution of (1).

Theorem 3.3

Assume that the functions \(f, g:I \times \mathbb {R} \times \mathbb {R} \rightarrow \mathbb {R}\) are given such that the function f is continuous and satisfies a Lipschitz condition with respect to its second and third variables, i.e., we have

and g is a continuously differentiable function and there exists a positive constant d such that \(\big |\frac{\partial }{\partial y}g(t,x,y)\big |>d>0\) for all \((t,x,y) \in I \times \mathbb R \times \mathbb R\). Then the fractional differential algebraic equation (1) has a unique continuous solution. In addition, if \(f,g \in C^v(I\times \mathbb {R}\times \mathbb {R})\) for \(v\ge 0\), we have

where the value of \(\tilde{v}\) depends on the smoothness of the function g and \(C_\alpha \) is a generic positive constant dependent on \(\alpha \).

Proof

By Theorem 3.1, there exists a smooth function \(G:I \times \mathbb R \rightarrow \mathbb R\) such that \(y(t)=G(t,x(t))\); substituting this into (1) yields

with \(x(0)=0\) as the initial condition. Now we show that the function F(t, x(t)) defined in (7) has a Lipschitz property with respect to its second variable. To this end, we can write

in view of the Lipschitz assumption on f(t, x(t), y(t)). Moreover, since G(t, x(t)) is continuously differentiable, it also satisfies a Lipschitz condition with respect to its second variable, and this shows that F(t, x(t)) satisfies a Lipschitz condition with respect to its second component. Consequently, the desired result is obtained by applying Theorem 3.2 to (7). \(\square \)

Having established the existence and uniqueness of solutions of (1) as well as their regularity properties, we now investigate the dependence of the exact solution on small perturbations in the given data. This is clearly an important factor in the numerical solution of (1), because the influence of perturbations in the discretized equation is of fundamental importance in the convergence analysis and in estimating the roundoff errors.

Theorem 3.4

Consider Eq. (1) and suppose all conditions of Theorem 3.3 are satisfied. Let us now consider the perturbed equation

with small perturbations \(\delta _1, \delta _2, \varepsilon _0, \varepsilon _1\) and the perturbed solutions \(\tilde{x}, \tilde{y}\). Then we have

Proof

Using Theorem 3.1, y and \(\tilde{y}\) can be extracted from the algebraic constraints of (1) and (9) respectively as \(y=G(t,x)\) and \(\tilde{y}=G(t,\tilde{x})+\delta _2(t)\). Then we can write

Since G is a continuously differentiable function, it satisfies a Lipschitz condition with respect to its second variable, and this implies the following inequality

which implies (11). Now, we subtract the first equation of (1) from the first equation of (9) and obtain

Applying the fractional integral operator \(_0I_t^\alpha \) to both sides of (13) and using the Lipschitz assumption for f yields

in view of (2) and (12). Gronwall’s inequality (Mokhtary and Ghoreishi 2014a; Mokhtary 2016b) then gives

due to the boundedness of the operator \(_0I_t^\alpha \) (Mokhtary 2016a), which is the desired result (10). \(\square \)

Theorem 3.4 indicates that Eq. (1) has perturbation index one along the solutions x(t) and y(t); the perturbation index measures the effect of small perturbations and classifies the difficulties in the numerical solution of (1) (Gear 1990; Hairer et al. 1989). Moreover, Theorem 3.4 establishes the well-posedness of problem (1) in the sense that small perturbations in the input data lead to small changes in the exact solution. More precisely, small perturbations on the right-hand sides of the inequalities (10) and (11) appear in the numerical solution as small discrete perturbations due to roundoff errors, and the well-posedness property ensures that they have no significant effect on the accuracy of the approximate solution.

4 Numerical approach

In this section, we introduce the generalized Jacobi Galerkin method for the numerical solution of (1). As Theorem 3.3 shows, some derivatives of the exact solution of (1) have a singularity at \(t=0^+\). Consequently, representing the Galerkin solution of (1) as a linear combination of classical orthogonal polynomials leads to a loss in the global convergence order. To overcome this difficulty, we should employ suitable basis functions that produce an approximate solution with the same asymptotic behavior as the exact solution. From the definition of the shifted generalized Jacobi functions on I, it can easily be seen that by representing the Galerkin solution of (1) as

we can produce a numerical solution for (1) that is matched with the singularity of the exact solution. Here \(\underline{a}=[a_0, a_1,\ldots , a_N],~\underline{b}=[b_0, b_1,\ldots , b_N]\) are the unknown vectors and \(\overline{P}=[P_{0}^{0,-\alpha }(t), P_1^{0,-\alpha }(t),\ldots ,P_N^{0,-\alpha }(t)]^{T}\) is the shifted generalized Jacobi function basis in I. P is a non-singular lower triangular coefficient matrix of order \(N+1\) and \(\underline{T}=[t^{\alpha },t^{\alpha +1},t^{\alpha +2},\ldots ,t^{\alpha +N}]^T\).
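The lower triangular structure of the coefficient matrix can be made explicit. The sketch below assumes \(P_n^{0,-\alpha }(t)=t^{\alpha }J_n^{0,\alpha }(t)\) up to a constant normalization factor, with \(J_n^{0,\alpha }(t)\) the Jacobi polynomial of parameters \((0,\alpha )\) evaluated at \(2t-1\), and uses the standard power-series representation of Jacobi polynomials. The function name `gjf_coefficient_matrix` is ours.

```python
from math import gamma, comb, factorial


def gjf_coefficient_matrix(N, alpha):
    """Return the (N+1) x (N+1) matrix whose row n holds the coefficients of
    t^(alpha+m), m = 0..N, in t^alpha * J_n^{0,alpha}(t)."""
    a, b = 0.0, alpha                       # Jacobi parameters (delta, beta)
    P = [[0.0] * (N + 1) for _ in range(N + 1)]
    for n in range(N + 1):
        front = gamma(b + n + 1.0) / (factorial(n) * gamma(a + b + n + 1.0))
        for m in range(n + 1):              # entries with m > n stay zero
            sign = -1.0 if (n + m) % 2 else 1.0
            P[n][m] = (sign * front * comb(n, m)
                       * gamma(a + b + n + m + 1.0) / gamma(b + m + 1.0))
    return P
```

For \(\alpha =0.5\), the second row is \([-1.5,\ 2.5,\ 0,\ldots ]\), that is, \(t^{1/2}(2.5\,t-1.5)\). The diagonal entries are nonzero, so the matrix is non-singular, and each row sums to \(J_n^{0,\alpha }(1)=1\).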

Inserting (15) into (1) we obtain

Applying (3) we can write

Substituting (17) into (16) we have

In the Galerkin method, the unknown vectors \(\underline{a}, \underline{b}\) are computed in such a way that (18) is orthogonal to the finite dimensional space \(\mathcal F_N^{0,-\alpha }(I)\). Thus the unknown vectors \(\underline{a}, \underline{b}\) must satisfy the following algebraic system

for \(0 \le j \le N\). Using the relations (4), (6) and (17), we have

in view of the exactness of Legendre Gauss quadrature for all polynomials with degree at most \(2N+1\) (Canuto et al. 2006; Hesthaven et al. 2007; Shen et al. 2006).
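The exactness argument rests on the fact that the Caputo derivative maps the trial space onto polynomials. The sketch below assumes the standard power rule \(^C_0D_t^{\alpha }t^{\alpha +k}=\frac{\Gamma (\alpha +k+1)}{\Gamma (k+1)}t^{k}\), which we take to be the relation applied above, and checks it against a direct evaluation of the Caputo integral. The function names are ours, and the midpoint rule with the substitution \(s=t-v^{1/(1-\alpha )}\) is only one convenient way to remove the kernel singularity.

```python
from math import gamma


def caputo_of_trial(coeffs, alpha):
    """Map coefficients c_k of sum_k c_k t^(alpha+k) to the coefficients d_k
    of its Caputo derivative sum_k d_k t^k (a polynomial of degree N)."""
    return [c * gamma(alpha + k + 1.0) / gamma(k + 1.0)
            for k, c in enumerate(coeffs)]


def caputo_numeric(du, t, alpha, n=20000):
    """Approximate (1/Gamma(1-alpha)) * int_0^t (t-s)^(-alpha) u'(s) ds.

    With s = t - v^p and p = 1/(1-alpha), the integral becomes
    p * int_0^{t^(1-alpha)} u'(t - v^p) dv, which has no singular kernel
    and is evaluated here by the composite midpoint rule.
    """
    p = 1.0 / (1.0 - alpha)
    upper = t ** (1.0 - alpha)
    h = upper / n
    total = 0.0
    for i in range(n):
        v = (i + 0.5) * h
        total += du(t - v ** p)
    return p * h * total / gamma(1.0 - alpha)
```

For \(u(t)=t^{3/2}\) and \(\alpha =1/2\), `caputo_of_trial([0.0, 1.0], 0.5)` returns the coefficient \(\Gamma (2.5)\) of t, and `caputo_numeric(lambda s: 1.5 * s ** 0.5, 1.0, 0.5)` agrees with it to several digits. Since the weight \(t^{-\alpha }\) cancels the factor \(t^{\alpha }\) of the test function, the weighted inner product of this polynomial with a basis function is an integral of a polynomial of degree at most 2N.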

In the implementation process, we approximate the integrals of the right hand side of (19) using the shifted Legendre Gauss quadrature formula over I as follows

Substituting (20), (21) and (22) into (19), we have the following \(2N+2\) nonlinear algebraic system of equations

which when solved gives us the unknown vectors \(\underline{a}\) and \(\underline{b}\).
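The whole procedure can be sketched in a few dozen lines under simplifying assumptions of ours. The trial functions are written directly in the power basis \(t^{\alpha +k}\), which spans the same space as the generalized Jacobi functions, so the Galerkin conditions reduce to testing the residuals against \(t^{j}\). The nonlinear system is solved by Newton's method with a finite-difference Jacobian rather than the quasi-Newton solver used for the reported results. The test problem is manufactured so that its exact solution \(x=t^{3/2},\ y=2t^{3/2}\) lies in the trial space, and all function names are illustrative.

```python
from math import gamma, cos, pi


def gauss_legendre_01(n):
    """n-point Gauss-Legendre nodes and weights shifted from [-1, 1] to [0, 1]."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        x = cos(pi * (i - 0.25) / (n + 0.5))            # initial guess for a root of P_n
        for _ in range(100):
            p0, p1 = 1.0, x
            for k in range(2, n + 1):                   # three-term recurrence up to P_n
                p0, p1 = p1, ((2 * k - 1) * x * p1 - (k - 1) * p0) / k
            dp = n * (x * p1 - p0) / (x * x - 1.0)      # derivative of P_n at x
            step = p1 / dp
            x -= step
            if abs(step) < 1e-15:
                break
        nodes.append(0.5 * (x + 1.0))
        weights.append(1.0 / ((1.0 - x * x) * dp * dp))  # half of the [-1, 1] weight
    return nodes, weights


def residual(c, N, alpha, f, g, nodes, weights):
    """Discrete Galerkin equations: both residuals tested against t^j, j = 0..N."""
    a, b = c[:N + 1], c[N + 1:]
    out = [0.0] * (2 * N + 2)
    for t, w in zip(nodes, weights):
        x = sum(a[k] * t ** (alpha + k) for k in range(N + 1))
        y = sum(b[k] * t ** (alpha + k) for k in range(N + 1))
        dax = sum(a[k] * gamma(alpha + k + 1) / gamma(k + 1) * t ** k
                  for k in range(N + 1))                # Caputo derivative of x_N
        r1, r2 = dax - f(t, x, y), g(t, x, y)
        for j in range(N + 1):
            out[j] += w * r1 * t ** j
            out[N + 1 + j] += w * r2 * t ** j
    return out


def solve_linear(A, rhs):
    """Gaussian elimination with partial pivoting on a copy of the system."""
    n = len(rhs)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    sol = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = M[i][n] - sum(M[i][k] * sol[k] for k in range(i + 1, n))
        sol[i] = s / M[i][i]
    return sol


def galerkin_solve(N, alpha, f, g, iters=20, h=1e-7):
    """Newton iteration with a forward-difference Jacobian, started from zero."""
    nodes, weights = gauss_legendre_01(N + 1)
    c = [0.0] * (2 * N + 2)
    for _ in range(iters):
        F0 = residual(c, N, alpha, f, g, nodes, weights)
        if max(abs(v) for v in F0) < 1e-13:
            break
        J = [[0.0] * len(c) for _ in c]
        for k in range(len(c)):
            cp = c[:]
            cp[k] += h
            Fk = residual(cp, N, alpha, f, g, nodes, weights)
            for i in range(len(c)):
                J[i][k] = (Fk[i] - F0[i]) / h
        step = solve_linear(J, [-v for v in F0])
        c = [ci + si for ci, si in zip(c, step)]
    return c[:N + 1], c[N + 1:]


def f_demo(t, x, y):
    """Manufactured right-hand side (our choice); nonlinear in x and y."""
    return gamma(2.5) * t + x * x - 0.25 * y * y


def g_demo(t, x, y):
    """Manufactured constraint with dg/dy = 1."""
    return y - 2.0 * x


a_demo, b_demo = galerkin_solve(1, 0.5, f_demo, g_demo)
```

With \(N=1\), the computed coefficient vectors are close to \([0, 1]\) and \([0, 2]\), recovering the manufactured solution. With \(N+1\) quadrature nodes the discrete Galerkin conditions coincide with collocation at the Gauss points, which keeps the sketch short.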

5 Convergence analysis

In this section, we provide an error analysis to justify the convergence of the generalized Jacobi Galerkin approximation of (1).

Theorem 5.1

Assume that the conditions of Theorem 3.3 are satisfied and \(x_N(t), y_N(t)\) are the generalized Jacobi Galerkin approximations of (1) with the exact solutions x(t), y(t). If the following conditions are satisfied

- \(f \in W^{m_1}(I) \cap B_{0,-\alpha }^{m_3}(I),\quad m_1 \ge 1,~ m_3 \ge 0,\)

- \(g \in W^{m_2}(I) \cap B_{0,-\alpha }^{m_4}(I),\quad m_2 \ge 1,~ m_4 \ge 0,\)

- \(^C_0D_t^{\alpha }x \in B_{0,-\alpha }^{m_5}(I),\quad m_5 \ge 0\),

then for sufficiently large N we have

where \(e_N(t)=x(t)-x_N(t)\) and \(\varepsilon _N(t)=y(t)-y_N(t)\) are the error functions.

Proof

According to the proposed numerical scheme in the previous section, we have

for \(0 \le j \le N\). Furthermore, we can write

and similarly we have

in view of the exactness of the Legendre Gauss quadrature for all polynomials with degree at most \(2N+1\). Inserting (27) and (28) into (26) we conclude

Considering (5), multiplying both sides of (29) by \(\frac{P_{i}^{0,-\alpha }(t)}{\Vert P_{i}^{0,-\alpha }\Vert _{0,-\alpha }^2}\) and summing up from 0 to N we obtain

where \(\tilde{f}=f(t,x_N(t),y_N(t))\) and \(\tilde{g}=g(t,x_N(t),y_N(t))\). Now, we subtract (1) from (30) and achieve

which can be rewritten as

with

Applying Taylor expansion formula for g(t, x, y) in some open neighborhood around \((x_N,y_N)\), we can write

where \(\xi =(t,\xi _1,\xi _2)\), in which \(\xi _1\) lies between x(t) and \(x_N(t)\) and \(\xi _2\) lies between y(t) and \(y_N(t)\).

Thus using (33) and the second relation of (32) we obtain

in view of \(\frac{\partial g}{\partial y}\ne 0\). Now, applying the left fractional Riemann–Liouville integral operator \(_0I_t^{\alpha }\) to both sides of the first equation of (32) and using (2) gives

which can be rewritten as

in view of the Lipschitz assumption on f. Inserting (34) into the above inequality, we obtain the following inequality

Applying Gronwall’s inequality (Mokhtary and Ghoreishi 2014a; Mokhtary 2016b) to (35), we get

Due to the boundedness of the operator \(_0I_t^\alpha \) (Mokhtary 2016a) and the orthogonal projection operator norm \(\Vert \Pi _N^{0,-\alpha }\Vert _{0,-\alpha }=1\) (Atkinson and Han 2009), the inequality above can be written as follows

From Lemma 2.2, we have

and from Lemma 2.1, the following inequalities hold

Substituting the relations (37)–(40) into (36) the desired error estimation (24) can be obtained by ignoring some unnecessary terms for sufficiently large values of N. In addition, from (34) we can write

Trivially, the second desired estimation (25) can be concluded by applying the relations (37) and (39) into the equation above and ignoring some unnecessary terms for sufficiently large values of N. \(\square \)

6 Numerical results

In this section, we apply the generalized Jacobi Galerkin method to Eq. (1) for some test problems in order to confirm the computational efficiency of the scheme. All calculations were carried out in the Mathematica\(^\circledR \) software, and all resulting nonlinear algebraic systems were solved by the well-known iterative quasi-Newton method (Fletcher 1987). The numerical errors reported in the tables are the \(L^2\)-norms of the error functions.
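For readers who wish to reproduce such error values, a minimal sketch of the \(L^2\)-norm of an error function on \(I=[0,1]\) is given below. The quadrature actually used for the reported tables is not stated, so the composite Simpson rule and the name `l2_error` are assumptions of ours.

```python
from math import sqrt


def l2_error(u, u_approx, n=2000):
    """Composite Simpson approximation of the L^2(0, 1) norm of u - u_approx.

    n is the number of subintervals and must be even.
    """
    if n % 2:
        raise ValueError("n must be even")
    h = 1.0 / n
    total = 0.0
    for i in range(n + 1):
        t = i * h
        weight = 1.0 if i in (0, n) else (4.0 if i % 2 else 2.0)
        total += weight * (u(t) - u_approx(t)) ** 2
    return sqrt(total * h / 3.0)
```

For error functions that behave like \(t^{\alpha }\) near the origin, Simpson's rule converges more slowly than for smooth integrands, so a larger n or a Gauss-type rule may be preferable.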

Example 6.1

Consider the fractional differential algebraic equation

with the initial conditions \(x(0)=y(0)=0\). The exact solution is

To show the efficiency of the proposed scheme in approximating (1), we implement the generalized Jacobi Galerkin method for the numerical solution of (41) with approximation degree \(N=1\) and consider

as approximate solutions of (41). Substituting (42) into (41) and using the technique described in Sect. 4, we obtain the following nonlinear algebraic system

with the following solution

Substituting (43) into (42) we obtain

with the errors

which demonstrates the high accuracy of the approximate solutions (42).

Example 6.2

Consider the following fractional differential algebraic equation

with the initial conditions \(x(0)=y(0)=z(0)=0\). The functions \(q_1(t), q_2(t) , q_3(t)\) are chosen such that the exact solution of the problem is \(x(t)=\sin {(\sqrt{t})}, y(t)=e^{t \sqrt{t}}-1, z(t)=t \tan (\sqrt{t})\).

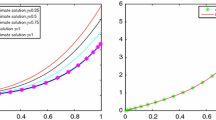

In this example, we make a comparison between our scheme and the variational iteration method (VIM) proposed in İbis and Bayram (2011) to show the efficiency of our described approach. To this end, we first implement the proposed method and the following VIM’s convergent iterations

and give the obtained results in Table 1 and Fig. 1. The reported \(L^2\)-norm errors show a significant superiority of our scheme over VIM, in that it produces lower errors with smaller values of N than VIM.

Comparison of the obtained errors between our method (solid lines) and VIM (dashed lines) with different values of N for Example 6.2

Example 6.3

(Ding and Jiang 2014) Consider the following fractional differential algebraic equation

with the initial conditions \(x(0)=y(0)=0, z(0)=1\). The functions \(q_1(t), q_2(t) , q_3(t)\) are chosen such that the exact solution of the problem is \(x(t)=t^3,~~ y(t)=2t +t^4,~~z(t)=e^t+t \sin {(t)}\).

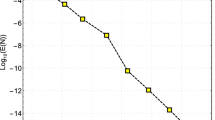

In order to obtain homogeneous initial conditions, we apply the transformation \(w(t)=z(t)-1\) and solve the new equation using the proposed approach. The obtained numerical results are listed in Table 2 and Fig. 2. This example was also solved in Ding and Jiang (2014) by the waveform relaxation method, where the following numerical errors were reported

with 16 iterations. Comparing our reported results with those obtained in (45) confirms the superiority and reliability of the proposed scheme over the method presented in Ding and Jiang (2014).

The numerical errors with different values of N for Example 6.3

In the next example, we consider a problem whose exact solution is not available.

Example 6.4

(İbis and Bayram 2011) Consider the following fractional differential algebraic equation

with the initial conditions \(x(0)=1, y(0)=0\).

Since a generally applicable method to determine the analytical solutions of (46) is not readily available, we have to resort to convergent numerically computed solutions. To this end, we can use the Adomian decomposition method (İbis and Bayram 2011), which represents the exact solutions x(t) and y(t) by the following convergent infinite series

where the iterates \(x_k(t)\) and \(y_k(t)\) are determined in the following recursive way

such that \(\tilde{g}_1(t), \tilde{g}_2(t)\) are the Taylor series of \(e^{-t}+\sin {t}\) and \(\sin {t}\), respectively. In view of (47), we may be assured that the following reference solutions

for sufficiently large values of L give a qualitatively correct picture of the exact solutions x(t) and y(t) for evaluating the precision of the proposed generalized Jacobi Galerkin method. Here we have chosen \(L=100\).

We solve (46) using the method described in Sect. 4 with \(N=15, \alpha =0.5\) and compare the obtained results with the reference solutions (48) in Table 3 and Fig. 3. The reported results confirm that our approach produces approximate solutions which are in good agreement with the reference ones.

Plots of the generalized Jacobi Galerkin approximations (dashed lines) and the reference solutions (rectangle markers) of x(t) (left hand side) and y(t) (right hand side) for Example 6.4

Example 6.5

In this example, we consider a practical application of differential algebraic equations in modeling the following simple RLC circuit with a voltage source V(t), an inductor with inductance L, a resistor with resistance R and a capacitor with capacitance \(C>0\) (Fig. 4):

A simple RLC circuit for Example 6.5

To this end, applying Kirchhoff’s voltage and current laws yields

- Conservation of current: \(i_E = i_R,~ i_R = i_C,~ i_C = i_L,\)

- Conservation of energy: \(V_R + V_L + V_C + V_E = 0,\)

- Ohm’s Laws: \(C V'_C = i_C; ~ L V'_L = i_L; ~ V_R = R i_R.\)

After replacing \(i_R\) with \(i_E\) and \(i_C\) with \(i_L\), we get the following differential algebraic equation

where \(\alpha =1, X=\big (V_C(t),V_L(t),V_R(t),i_L(t),i_E(t)\big )^T\) and

Clearly, for homogeneous initial conditions, \(\alpha =1\) and current \(i_L=\sin {t}\), we have the exact solutions \(V_C= \frac{1}{C}(1-\cos {t}),~V_L= \frac{1}{L}(1-\cos {t}),~V_R=-R \sin {t}, i_L=\sin {t}\) and \(V_E=\Big (\frac{1}{C}+\frac{1}{L}\Big ) (\cos {t}-1)+R \sin {t}\).
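The stated closed-form solutions can be checked directly against the circuit relations. The sketch below uses the input data \(R=4, L=0.4, C=2\) of this example and a central finite difference for the derivatives; the function names are ours. It tests the capacitor and inductor relations and the voltage balance. The relation for \(V_R\) is not tested, since its sign depends on the orientation convention adopted in (49).

```python
from math import sin, cos

R, L, C = 4.0, 0.4, 2.0   # input data of Example 6.5


def V_C(t):
    return (1.0 - cos(t)) / C


def V_L(t):
    return (1.0 - cos(t)) / L


def V_R(t):
    return -R * sin(t)


def V_E(t):
    return (1.0 / C + 1.0 / L) * (cos(t) - 1.0) + R * sin(t)


def i_L(t):
    return sin(t)


def ddt(fn, t, h=1e-5):
    """Central finite-difference approximation of fn'(t)."""
    return (fn(t + h) - fn(t - h)) / (2.0 * h)


def check(t):
    """Residuals of C V_C' = i_L, L V_L' = i_L and V_R + V_L + V_C + V_E = 0."""
    return (C * ddt(V_C, t) - i_L(t),
            L * ddt(V_L, t) - i_L(t),
            V_R(t) + V_L(t) + V_C(t) + V_E(t))
```

Calling `check(t)` at a few points of I returns three residuals that vanish up to the finite-difference and rounding errors.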

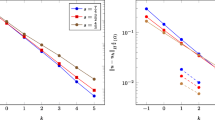

The approximate solutions with different values of \(\alpha \) and \(N=10\) for Example 6.5

Now we consider (49) with input data \(R=4, L=0.4, C=2\), implement the proposed scheme for various \(\alpha \) and report the obtained numerical results in Table 4 and Fig. 5. In Table 4, we list the numerical errors obtained for various values of N and \(\alpha =1\). In Fig. 5, we plot the approximate solutions for different values of \(\alpha \). Overall, the reported results indicate that as \(\alpha \) tends to 1, the approximate solutions tend to the exact solution of (49) with \(\alpha =1\). This confirms the effectiveness and reliability of the proposed generalized Jacobi Galerkin method in approximating practical models of fractional differential algebraic equations.

7 Conclusion

In this article, we developed and analyzed the generalized Jacobi Galerkin method for the numerical solution of a class of nonlinear fractional differential algebraic equations. First, we established an existence and uniqueness theorem along with the regularity and well-posedness properties of the exact solution, and proved that some derivatives of the exact solution have a singularity at the origin. To obtain a numerical solution with good convergence properties, we represented the Galerkin solution of the problem as a linear combination of the recently defined generalized Jacobi functions, which match the singularity of the exact solution. We also estimated the numerical errors arising from the implementation of the presented method. Finally, we confirmed the effectiveness of the numerical scheme through several numerical examples.

References

Atkinson KE, Han W (2009) Theoretical numerical analysis, a functional analysis framework, 3rd edn, Texts in Applied Mathematics, vol 39. Springer, Dordrecht

Babaei A, Banihashemi S (2017) A stable numerical approach to solve a time-fractional inverse heat conduction problem. Iran J Sci Technol Trans A Sci. https://doi.org/10.1007/s40995-017-0360-4

Bhrawy AH, Zaky MA (2016a) Shifted fractional-order Jacobi orthogonal functions: application to a system of fractional differential equations. Appl Math Model 40(2):832–845

Bhrawy AH, Zaky MA (2016b) A fractional-order Jacobi–Tau method for a class of time-fractional PDEs with variable coefficients. Math Methods Appl Sci 39(7):1765–1779

Canuto C, Hussaini MY, Quarteroni A, Zang TA (2006) Spectral methods. Fundamentals in single domains. Springer, Berlin

Chen S, Shen J, Wang LL (2016) Generalized Jacobi functions and their applications to fractional differential equations. Math Comput 85:1603–1638

Dabiri A, Butcher EA (2016) Numerical solution of multi-order fractional differential equations with multiple delays via spectral collocation methods. Appl Math Model 56:424–448

Dabiri A, Butcher EA (2017a) Efficient modified Chebyshev differentiation matrices for fractional differential equations. Commun Nonlinear Sci Numer Simul 50:284–310

Dabiri A, Butcher EA (2017b) Stable fractional Chebyshev differentiation matrix for the numerical solution of multi-order fractional differential equations. Nonlinear Dyn 90(1):185–201

Dabiri A, Nazari M, Butcher EA (2016) Optimal fractional state feedback control for linear fractional periodic time-delayed systems. In: 2016 American control conference (ACC). https://doi.org/10.1109/acc.2016.7525339

Dabiri A, Moghaddam BP, Tenreiro Machado JA (2018) Optimal variable-order fractional PID controllers for dynamical systems. J Comput Appl Math 339:40–48

Damarla SK, Kundu M (2015) Numerical solution of fractional order differential algebraic equations using generalized triangular function operational matrices. J Fract Calc Appl 6(2):31–52

Diethelm K (2010) The analysis of fractional differential equations. Springer, Berlin

Ding XL, Jiang YL (2014) Waveform relaxation method for fractional differential algebraic equations. Fract Calc Appl Anal 17(3):585–604

Fletcher R (1987) Practical methods of optimization, 2nd edn. Wiley, New York

Ghoreishi F, Mokhtary P (2014) Spectral collocation method for multi-order fractional differential equations. Int J Comput Methods 11:23. https://doi.org/10.1142/S0219876213500722

Gear CW (1990) Differential algebraic equations, indices, and integral algebraic equations. SIAM J Numer Anal 27(6):1527–1534

Hairer E, Lubich C, Roche M (1989) The numerical solution of differential-algebraic systems by Runge–Kutta methods. Springer, Berlin

Hesthaven JS, Gottlieb S, Gottlieb D (2007) Spectral methods for time-dependent problems, Cambridge Monographs on Applied and Computational Mathematics, vol 21. Cambridge University Press, Cambridge

İbis B, Bayram M (2011) Numerical comparison of methods for solving fractional differential-algebraic equations (FDAEs). Comput Math Appl 62(8):3270–3278

İbis B, Bayram M, Göksel Ağargün A (2011) Applications of fractional differential transform method to fractional differential-algebraic equations. Eur J Pure Appl Math 4(2):129–141

Jaradat HM, Zurigat M, Al-Sharan S (2014) Toward a new algorithm for systems of fractional differential algebraic equations. Ital J Pure Appl Math 32:579–594

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

Keshi FK, Moghaddam BP, Aghili A (2018) A numerical approach for solving a class of variable-order fractional functional integral equations. Comput Appl Math. https://doi.org/10.1007/s40314-018-0604-8

Moghaddam BP, Aghili A (2012) A numerical method for solving linear non-homogeneous fractional ordinary differential equations. Appl Math Inf Sci 6(3):441–445

Moghaddam BP, Machado JAT (2017a) A computational approach for the solution of a class of variable-order fractional integro-differential equations with weakly singular kernels. Fract Calc Appl Anal 20(4):1305–1312. https://doi.org/10.1515/fca-2017-0053

Moghaddam BP, Machado JAT (2017b) SM-Algorithms for approximating the variable-order fractional derivative of high order. Fundam Inform 151(1–4):293–311

Moghaddam BP, Machado JAT, Behforooz HB (2017a) An integro quadratic spline approach for a class of variable order fractional initial value problems. Chaos Solitons Fractals 102:354–360

Moghaddam BP, Machado JAT, Babaei A (2017b) A computationally efficient method for tempered fractional differential equations with application. Appl Math Comput. https://doi.org/10.1007/s40314-017-0522-1

Mokhtary P (2015) Reconstruction of exponentially rate of convergence to Legendre collocation solution of a class of fractional integro-differential equations. J Comput Appl Math 279:145–158

Mokhtary P (2016a) Discrete Galerkin method for fractional integro-differential equations. Acta Math Sci 36B(2):560–578

Mokhtary P (2016b) Numerical treatment of a well-posed Chebyshev Tau method for Bagley–Torvik equation with high-order of accuracy. Numer Algorithms 72:875–891

Mokhtary P (2017) Numerical analysis of an operational Jacobi Tau method for fractional weakly singular integro-differential equations. Appl Numer Math 121:52–67

Mokhtary P, Ghoreishi F (2011) The \(L^2\)-convergence of the Legendre-spectral Tau matrix formulation for nonlinear fractional integro-differential equations. Numer Algorithms 58:475–496

Mokhtary P, Ghoreishi F (2014a) Convergence analysis of the operational Tau method for Abel-type Volterra integral equations. Electron Trans Numer Anal 41:289–305

Mokhtary P, Ghoreishi F (2014b) Convergence analysis of spectral Tau method for fractional Riccati differential equations. Bull Iran Math Soc 40(5):1275–1296

Mokhtary P, Ghoreishi F, Srivastava HM (2016) The Müntz–Legendre Tau method for fractional differential equations. Appl Math Model 40(2):671–684

Pedas A, Tamme E, Vikerpuur M (2016) Spline collocation for fractional weakly singular integro-differential equations. Appl Numer Math 110:204–214

Podlubny I (1999) Fractional differential equations. Academic Press, New York

Shen J, Tang T, Wang LL (2006) Spectral methods algorithms, analysis and applications. J Math Anal Appl 313:251–261

Taghavi A, Babaei A, Mohammadpour A (2017) A stable numerical scheme for a time fractional inverse parabolic equations. Inverse Probl Sci Eng 25(10):1474–1491

Zaky MA (2017) A Legendre spectral quadrature Tau method for the multi-term time-fractional diffusion equations. Appl Math Comput. https://doi.org/10.1007/s40314-017-0530-1

Zhang W, Ge SS (2011) A global implicit function theorem without initial point and its applications to control of non-affine systems of high dimensions. Springer, Berlin

Zurigat M, Momani S, Alawneh A (2010) Analytical approximate solutions of systems of fractional algebraic differential equations by homotopy analysis method. Comput Math Appl 59(3):1227–1235

Acknowledgements

The authors cordially thank anonymous referees for their valuable comments that improved the quality of this paper.


Additional information

Communicated by Vasily E. Tarasov.


Cite this article

Ghanbari, F., Ghanbari, K. & Mokhtary, P. Generalized Jacobi–Galerkin method for nonlinear fractional differential algebraic equations. Comp. Appl. Math. 37, 5456–5475 (2018). https://doi.org/10.1007/s40314-018-0645-z


DOI: https://doi.org/10.1007/s40314-018-0645-z

Keywords

- Fractional differential algebraic equation

- Generalized Jacobi–Galerkin method

- Regularity

- Convergence analysis