Abstract

This paper tackles the \(H_{\infty }\) filtering problem for 2-D discrete systems described by the Roesser model. The objective is to propose a new filter design based on a sufficient condition formulated as linear matrix inequalities (LMIs). Less conservative results are obtained by introducing additional free parameters through Finsler's Lemma, which provides extra degrees of freedom when optimizing the \(H_{\infty }\) performance. The efficiency of the proposed approach is shown by a numerical example.

1 Introduction

Two-dimensional (2-D) system theory has attracted considerable attention due to its extensive applications in many physical systems, such as state-space digital filters, image data processing and transmission, thermal processes, biomedical imaging, gas absorption and water stream heating. Consequently, such systems have been studied extensively. To mention a few of the results obtained so far, modeling has been studied in Fornasini and Marchesini (1976, 1978), Roesser (1975) and Takagi (1985); stability has been investigated in Xia and Jia (2002), Hmamed et al. (2008), Dey et al. (2012) and Kokil et al. (2012); \(H_{\infty }\) stabilization and control were solved in Du et al. (2001, 2002), Benhayoun et al. (2013), Hmamed et al. (2010), Xu et al. (2008), Wang et al. (2015), Qiu et al. (2015a, 2015b, 2015c); \(H_{\infty }\) filtering for 2-D linear, delayed and Takagi–Sugeno systems has been studied, respectively, in Gao and Li (2014), Ying and Rui (2011), Gao et al. (2008), El-Kasri et al. (2012, 2013a, 2013b), Du et al. (2000), Xu et al. (2005), Wu et al. (2008), Boukili et al. (2014b), Qiu et al. (2013), Hmamed et al. (2013), Gao and Wang (2004), Chen and Fong (2006), Boukili et al. (2013, 2014a) and Meng and Chen (2014); finally, Li and Gao (2012), Gao and Li (2011) and Li et al. (2012) have addressed finite frequency \(H_{\infty }\) filtering for 2-D systems.

This paper concentrates on filtering, as it is an important problem in signal processing, and more precisely on \(H_{\infty }\) filtering. \(H_{\infty }\) filtering for 2-D systems with parameter uncertainties has been studied in Xu et al. (2005), Hmamed et al. (2013), El-Kasri et al. (2013a, 2013b), Boukili et al. (2013), Chen and Fong (2006) and Wu et al. (2008). These previous results on robust \(H_{\infty }\) filtering are mostly based on quadratic stability conditions and are hence inevitably conservative, as the same Lyapunov function is used for the entire uncertainty domain.

To overcome this conservatism, this paper considers parameter-dependent Lyapunov functions, which reduce the overdesign inherent to a quadratic framework (Gao and Li 2014; Ying and Rui 2011; Gao et al. 2008; El-Kasri et al. 2012). In addition, slack matrices are introduced in order to decouple the product terms between the Lyapunov matrix and the system matrices and to provide extra degrees of freedom. The key to our approach is the use of four independent slack matrices together with homogeneous polynomially parameter-dependent matrices of arbitrary degree: as the degree grows, increasing precision is obtained, providing less conservative filter designs. The filters are designed for systems with parameter uncertainties that belong to a polytope, of which only the vertices are known. The proposed condition includes as special cases the previous quadratic formulations and the linearly parameter-dependent approaches (which use linear convex combinations of matrices).

It must be emphasized that the theoretical results are given in the form of linear matrix inequalities (LMIs), which can be solved by standard numerical software, thus providing a simple methodology. An example shows the effectiveness of the proposed approach.

The organization of this paper is as follows: Sect. 2 states the problem to be solved and presents some preliminary results. The analysis of robust asymptotic stability with \(H_{\infty }\) performance is given in Sect. 3. The \(H_{\infty }\) filter design scheme is then developed in Sect. 4, followed by an example in Sect. 5 that illustrates the effectiveness of the proposed approach. Finally, some conclusions are given.

Notations: The notation used throughout the paper is standard. The superscript T stands for matrix transposition. \(P>0\) means that the matrix P is real symmetric and positive definite. I is the identity matrix with appropriate dimension. In symmetric block matrices or long matrix expressions, we use an asterisk \(*\) to represent terms induced by symmetry. \(\hbox {diag}\{\ldots \}\) stands for a block-diagonal matrix. The \(l_{2}\) norm for a 2-D signal w(i, j) is given by

\[\parallel w\parallel _{2}=\sqrt{\sum _{i=0}^{\infty }\sum _{j=0}^{\infty }w^\mathrm{T}(i,j)\,w(i,j)},\]

where w(i, j) is said to be in the space \(l_{2}\{[0,\infty ),[0,\infty )\}\) or \(l_{2}\), for simplicity, if \(\parallel w\parallel _{2}<\infty \). A 2-D signal w(i, j) in the \(l_{2}\) space is an energy-bounded signal.

2 Problem Description

Consider a 2-D discrete system described by the following Roesser model:

where \(x^{h}(i,j)\in R^{n_{1}}\) is the state vector in the horizontal direction, \(x^{v}(i,j)\in R^{n_{2}}\) is the state vector in the vertical direction, \(y(i,j)\in R^{m}\) is the measured signal vector, \(z(i,j)\in R^{v}\) is the signal to be estimated, and \(w(i,j)\in R^{q}\) is the disturbance signal vector. It is assumed that w(i, j) belongs to \(l_{2}\{[0,\infty ),[0,\infty )\}\). The system matrices are decomposed in blocks as follows:

where the dimensions of each block are compatible with the vectors.

The system matrices are assumed to be uncertain and bounded in a polyhedral domain

where \(\mathcal {R}\) denotes a polytope defined as

with \(\varOmega _{i}\triangleq (A_{i}, B_{i}, C_{i}, D_{i}, H_{i})\) denoting the vertices of \(\mathcal {R}\) and

is the unit simplex.

The boundary condition of the system fulfills

In this paper, we consider a 2-D filter represented by the following Roesser model:

where \(\hat{x}^{h}(i,j)\in R^{n_{1}}\) is the filter state vector in the horizontal direction, \(\hat{x}^{v}(i,j)\in R^{n_{2}}\) is the filter state vector in the vertical direction and \(\hat{z}(i,j)\in R^{p}\) is the estimation of z(i, j). The matrices are real valued and are decomposed in the following block form

Defining the augmented state vectors

and the estimation error

gives the following filtering error system:

where

The transfer function of the filtering error system is then

Thus, the robust \(H_{\infty }\) filtering error problem can be stated as follows:

\(\mathbf {Problem\,description}{:}\) Given the Roesser system (1) with parameter uncertainty (3), find a filter (7), such that the filter error system (11) is robustly asymptotically stable for all \(\tau \in \varGamma \) and satisfies the following robust \(H_{\infty }\) performance:

where \(\gamma \) is a given positive scalar.
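As a rough numerical illustration of the performance measure, the sketch below estimates the \(H_{\infty }\) norm of a Roesser-type system by gridding the unit bicircle. It assumes the usual Roesser transfer function \(T(z_{1},z_{2})=C\,(\hbox {diag}\{z_{1}I_{n_{1}}, z_{2}I_{n_{2}}\}-A)^{-1}B+D\); the function name `roesser_hinf_grid` and the toy matrices are hypothetical and are not taken from the paper. A grid only yields a lower bound on the true norm, so it can check a design but cannot certify it.

```python
import numpy as np

def roesser_hinf_grid(A, B, C, D, n1, n2, n_grid=64):
    """Lower-bound estimate of sup sigma_max(T(e^{jw1}, e^{jw2})) over a grid.

    Assumes T(z1, z2) = C (diag(z1*I_n1, z2*I_n2) - A)^{-1} B + D.
    """
    omegas = np.linspace(0.0, 2.0 * np.pi, n_grid, endpoint=False)
    worst = 0.0
    for w1 in omegas:
        for w2 in omegas:
            # Block-diagonal shift matrix diag(z1*I_n1, z2*I_n2)
            Z = np.diag(np.concatenate([
                np.full(n1, np.exp(1j * w1)),
                np.full(n2, np.exp(1j * w2)),
            ]))
            T = C @ np.linalg.solve(Z - A, B) + D
            worst = max(worst, np.linalg.svd(T, compute_uv=False)[0])
    return float(worst)

# Hypothetical toy system (n1 = n2 = 1), chosen only for illustration.
A = np.array([[0.5, 0.0], [0.0, 0.5]])
B = np.array([[1.0], [1.0]])
C = np.array([[1.0, 1.0]])
D = np.array([[0.0]])

gamma_est = roesser_hinf_grid(A, B, C, D, n1=1, n2=1)
print(gamma_est)
```

For this toy system \(T(z_{1},z_{2})=1/(z_{1}-0.5)+1/(z_{2}-0.5)\), whose modulus peaks at \(z_{1}=z_{2}=1\), a point that belongs to the grid.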

Remark 2.1

The parameter uncertainties considered in this paper are assumed to be of polytopic type, entering into all the matrices of the system model. This description has been widely used for robust control and filtering (see Gao and Wang 2004; Xia and Jia 2002), as the parameter uncertainties of many practical systems can be modeled exactly, or at least bounded, by a polytope.

To derive our main results, we use Finsler’s Lemma:

Lemma 2.2

(Lacerda et al. 2011) Let \(\zeta \in \mathbb {R}^{n}, \mathcal {Q}\in \mathbb {R}^{n\times n}\) and \(\mathcal {B}\in \mathbb {R}^{m\times n}\) with rank \((\mathcal {B})=r<n\), and let \(\mathcal {B}^{\perp }\in \mathbb {R}^{n\times (n-r)}\) be a full-column-rank matrix satisfying \(\mathcal {B}\mathcal {B}^{\perp }=0\). Then, the following conditions are equivalent:

1. \(\zeta ^\mathrm{T}\mathcal {Q}\zeta <0, \forall \zeta \ne 0:\mathcal {B}\zeta =0\)

2. \(\mathcal {B}^{\perp T}\mathcal {Q}\mathcal {B}^{\perp }<0\)

3. \(\exists \mu \in \mathbb {R}: \mathcal {Q}-\mu \mathcal {B}^\mathrm{T}\mathcal {B}<0\)

4. \(\exists \mathcal {X}\in \mathbb {R}^{n\times m}: \mathcal {Q}+\mathcal {X}\mathcal {B}+\mathcal {B}^\mathrm{T}\mathcal {X}^\mathrm{T}<0\)
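The equivalences in Lemma 2.2 can be checked numerically on a small instance. The sketch below uses a hypothetical \(2\times 2\) example (the matrices `Q`, `B`, the scalar `mu` and the multiplier `X` are illustrative choices, not data from the paper): \(\mathcal {Q}\) is indefinite on its own, yet conditions 2, 3 and 4 all hold.

```python
import numpy as np

def null_space_basis(B, tol=1e-10):
    """Columns span the null space of B, so that B @ basis = 0."""
    _, s, vt = np.linalg.svd(B)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

def is_negative_definite(M, tol=1e-9):
    """True if the symmetric part of M has only negative eigenvalues."""
    return bool(np.max(np.linalg.eigvalsh((M + M.T) / 2.0)) < -tol)

# Hypothetical data: Q is indefinite, B has rank 1 < n = 2.
Q = np.array([[1.0, 0.0], [0.0, -1.0]])
B = np.array([[1.0, 0.0]])

B_perp = null_space_basis(B)

# Condition 2: Q restricted to the null space of B is negative definite.
cond2 = is_negative_definite(B_perp.T @ Q @ B_perp)

# Condition 3: a scalar multiplier mu exists (mu = 2 works here).
mu = 2.0
cond3 = is_negative_definite(Q - mu * B.T @ B)

# Condition 4: a matrix multiplier X exists (one valid choice shown).
X = np.array([[-1.0], [0.0]])
cond4 = is_negative_definite(Q + X @ B + B.T @ X.T)

print(is_negative_definite(Q), cond2, cond3, cond4)
```

Condition 4 is the form exploited in the sequel: the multiplier \(\mathcal {X}\) is a free variable, which is where the slack matrices of the design conditions come from.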

3 \(H_{\infty }\) Filtering Analysis

In this section, the filtering analysis problem is considered. More specifically, we assume that the filter matrices in (8) are known, and we study the condition under which the filtering error system (11) is asymptotically stable with an \(H_{\infty }\)-norm bounded by \(\gamma \). To solve the robust \(H_{\infty }\) filtering problem, we first recall the following result (Gao et al. 2008; Du et al. 2002).

Lemma 3.1

Given a positive scalar \(\gamma \), if \((A_{\tau }, B_{\tau }, C_{\tau }, D_{\tau }, H_{\tau }) \in \varOmega \) are arbitrary but fixed, then the filtering error system (11) is asymptotically stable and satisfies the \(H_{\infty }\) performance \(\gamma \) for any fixed \(\tau \in \varGamma \), if there exists a block-diagonal matrix \(P_{\tau }=\hbox {diag}\{P^{h}_{\tau }\;,\; P^{v}_{\tau }\}>0\), where \(P^{h}_{\tau }\in \mathbb {R}^{(2\times n_{1})\times (2\times n_{1})}\) and \(P^{v}_{\tau }\in \mathbb {R}^{(2\times n_{2})\times (2\times n_{2})}\), such that

Proposition 3.2

Given a positive scalar \(\gamma \), if \((A_{\tau }, B_{\tau }, C_{\tau }, D_{\tau }, H_{\tau }) \in \varOmega \) are arbitrary but fixed, the filtering error system (11) is asymptotically stable with an \(H_{\infty }\)-norm bounded by \(\gamma \) if there exist parameter-dependent symmetric positive definite matrices \(P_{\tau }=\hbox {diag}\{P^{h}_{\tau }\;,\; P^{v}_{\tau }\}\), and parameter-dependent matrices \(M_{\tau }, S_{\tau }, R_{\tau }\) and \(F_{\tau }\) such that:

where

Proof

To prove the proposition above, we consider the following matrices

Therefore, the condition (2) of Lemma 2.2 is equivalent to

By a Schur complement argument, it can be seen that the inequality (17) is equivalent to condition (15), which completes the proof.

Remark 3.3

\(M_{\tau }, S_{\tau }, R_{\tau }\) and \(F_{\tau }\) act as slack variables to provide extra degrees of freedom in the solution space of the robust \(H_{\infty }\) filtering problem. By setting \(R_{\tau }=0\) and \(F_{\tau }=0\), Proposition 3.2 coincides with Theorem 1 in Ying and Rui (2011). Thanks to the slack variable matrices, we obtain an LMI in which the Lyapunov matrix \(P_{\tau }\) is not involved in any product with the system matrices. This enables us to derive a robust \(H_{\infty }\) filtering condition that is less conservative than previous results due to the extra degrees of freedom (see the numerical example at the end of the paper).

4 \(H_{\infty }\) Filter Design

In this section, a methodology is established for designing the \(H_{\infty }\) filter (7), that is, to determine the filter matrices (8) such that the filtering error system (11) is asymptotically stable with an \(H_{\infty }\)-norm bounded by \(\gamma \).

Based on Proposition 3.2, we select for variables \(P_{\tau }\) and \(M_{\tau }\) the following structures (Gao et al. 2008):

Then, let the slack variables \(S_{\tau }\), \(F_{\tau }\) and \(R_{\tau }\) take the following structure (Lacerda et al. 2011)

where \(P_{\tau }, M_{1\tau }^{h}, M_{2\tau }^{h}, M_{1\tau }^{v}, M_{2\tau }^{v}, S_{1\tau }^{h}, S_{2\tau }^{h}, S_{1\tau }^{v}, S_{2\tau }^{v}, R^{h}_{\tau }, R^{v}_{\tau }, F^{h}_{\tau }, F^{v}_{\tau }\) depend on the parameter \(\tau \), while \(M_{3}^{h}, M_{4}^{h}, M_{3}^{v}\), and \(M_{4}^{v}\) are fixed for the entire uncertainty domain and, without loss of generality, invertible; the scalar parameters \(\lambda _{1}, \lambda _{2}, \lambda _{3}\) and \(\lambda _{4}\) will be used as optimization parameters.

Remark 4.1

The structure of \(S_{\tau }\) in (19) is different from the one proposed in Ying and Rui (2011), in which \(S = \delta M\), so that S depended on M. It is important to note that \(S_{1\tau }^{h}, S_{2\tau }^{h}, S_{1\tau }^{v}\) and \(S_{2\tau }^{v}\) of S in the new structure (19) are free slack variables completely independent of M. This provides extra degrees of freedom in the solution space for the LMI optimization problems derived from Theorem 4.3.

Define matrices

Applying congruence transformations to (16) by \(\hbox {diag}\{\varPi , \varPi , I, I\}\) we get

where

We define

With a new change of variables in inequality (16) by the above matrices, we obtain the following result.

Proposition 4.2

Given the 2-D system in (1), for the filter in (7) and any fixed \(\tau \in \varGamma \), there exist a matrix \(P_{\tau }=\hbox {diag}\{P^{h}_{\tau }, P^{v}_{\tau }\}\) and filter matrices \(A_{f}, B_{f}, C_{f}\) satisfying (15) if there exist matrices

\(\bar{P}_{\tau }=\hbox {diag}\left\{ \begin{array}{lr} \bar{P}^{h}_{\tau } \;&{}\; \bar{P}^{v}_{\tau } \\ \end{array} \right\} >0, M_{\tau }=\hbox {diag}\left\{ \begin{array}{lr} M^{h}_{\tau } \;&{}\; M^{v}_{\tau } \\ \end{array} \right\} \),

\(S_{\tau }=\hbox {diag}\left\{ \begin{array}{lr} S^{h}_{\tau } \;&{}\; S^{v}_{\tau } \\ \end{array} \right\} , N_{\tau }=\hbox {diag}\left\{ \begin{array}{lr} N^{h}_{\tau } \;&{}\; N^{v}_{\tau } \\ \end{array} \right\} \),

\(U=\hbox {diag}\left\{ \begin{array}{lr} U^{h} \;&{}\; U^{v} \\ \end{array} \right\} , Q_{\tau }=\hbox {diag}\left\{ \begin{array}{lr} Q^{h}_{\tau } \;&{}\; Q^{v}_{\tau } \\ \end{array} \right\} \),

\(R_{\tau }=\left[ \begin{array}{cc} (R^{h}_{\tau })^\mathrm{T}\;&\; (R^{v}_{\tau })^\mathrm{T} \end{array} \right] ^\mathrm{T}, F_{\tau }=\left[ \begin{array}{cc} (F^{h}_{\tau })^\mathrm{T}\;&\; (F^{v}_{\tau })^\mathrm{T} \end{array} \right] ^\mathrm{T}\),

\(\bar{A}_{f}\), \(\bar{B}_{f}\), \(\bar{C}_{f}, \varLambda _{1}=\hbox {diag}\{\lambda _{1},\; \lambda _{3}\}, \varLambda _{2}=\hbox {diag}\{\lambda _{2},\; \lambda _{4}\}\) with \(\lambda _{1}, \lambda _{2}, \lambda _{3}\) and \(\lambda _{4}\) real scalars satisfying:

where

Moreover, under the above condition, the matrices for an admissible robust \(H_{\infty }\) filter are given by

Proof

The transfer function of the filter (7) from y(i, j) to \(\hat{z}(i,j)\) is given by

Substituting (21) into this transfer function and considering

we get

Therefore, the desired filter is given by (23) and the proof is completed.

Before presenting the formulation of Proposition 4.2 using homogeneous polynomially parameter-dependent matrices, some definitions and preliminaries are needed to represent and handle products and sums of homogeneous polynomials. First, we define the homogeneous polynomially parameter-dependent matrices of degree g by

Similarly, matrices \(M_{\tau }, N_{\tau }, R_{\tau }, Q_{\tau }, S_{\tau }\) and \(F_{\tau }\) take the same form.

The notation above is explained as follows. Define K(g) as the set of s-tuples obtained as all possible combinations of \([k_{1},k_{2},\ldots ,k_{s}]\), with \(k_{i}\) being nonnegative integers, such that \(k_{1}+k_{2}+\cdots +k_{s}=g\). \(K_{j}(g)\) is the j-th s-tuple of K(g), which is lexically ordered, \(j=1,\ldots ,J(g)\). Since the number of vertices in the polytope \(\varGamma \) is equal to s, the number of elements in K(g) is given by \(J(g)=\frac{(s +g-1)!}{g!(s -1)!}\). These elements define the subscripts \(k_{1},k_{2},\ldots ,k_{s}\) of the constant matrices

\(\bar{P}_{k_{1},k_{2},\ldots k_{s}}\triangleq \bar{P}_{k_{j}(g)}, \quad M_{k_{1},k_{2},\ldots k_{s}}\triangleq M_{k_{j}(g)}\),

\(N_{k_{1},k_{2},\ldots k_{s}}\triangleq N_{k_{j}(g)}, \quad R_{k_{1},k_{2},\ldots k_{s}}\triangleq R_{k_{j}(g)}\),

\(Q_{k_{1},k_{2},\ldots k_{s}}\triangleq Q_{k_{j}(g)}, \quad S_{k_{1},k_{2},\ldots k_{s}}\triangleq S_{k_{j}(g)}\),

\(F_{k_{1},k_{2},\ldots k_{s}}\triangleq F_{k_{j}(g)}\), which are used to construct the homogeneous polynomially parameter-dependent matrices \(\bar{P}_{\tau }, M_{\tau }, N_{\tau }, R_{\tau }, Q_{\tau }, S_{\tau }, F_{\tau }\) in (27).

For each set K(g), define also the set I(g) with elements \(I_{j}(g)\) given by subsets of \(i,\; i\in \{1,2,\ldots ,s\}\), associated with s-tuples \(K_{j}(g)\) whose \(k_{i}\)’s are nonzero. For each \(i, i=1,\ldots ,s\), define the s-tuples \(K_{j}^{i}(g)\) as being equal to \(K_{j}(g)\) but with \(k_{i}>0\) replaced by \(k_{i}-1\). Note that the s-tuples \(K_{j}^{i}(g)\) are defined only in the cases where the corresponding \(k_{i}\) is positive. Note also that, when applied to the elements of \(K(g+1)\), the s-tuples \(K_{j}^{i}(g+1)\) define subscripts \(k_{1},k_{2},\ldots ,k_{s}\) of matrices \(\bar{P}_{k_{1},k_{2},\ldots ,k_{s}}, M_{k_{1},k_{2},\ldots ,k_{s}}, N_{k_{1},k_{2},\ldots ,k_{s}},\) \(Q_{k_{1},k_{2},\ldots ,k_{s}}, S_{k_{1},k_{2},\ldots ,k_{s}},\) \(F_{k_{1},k_{2},\ldots ,k_{s}}, R_{k_{1},k_{2},\ldots ,k_{s}},\) associated with homogeneous polynomially parameter-dependent matrices of degree g. Finally, define the scalar constant coefficients \(\beta _{j}^{i}(g+1)=\frac{g!}{(k_{1}!k_{2}!\ldots k_{s}!)},\) with \([k_{1},k_{2},\dots ,k_{s}]\in K_{j}^{i}(g+1)\).
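The index bookkeeping above can be made concrete with a short sketch. The helper names `K`, `J`, `K_minus` and `beta` are hypothetical, indices are 0-based, and the lexical ordering is taken to be plain ascending tuple order, which is an assumption about the convention used.

```python
from itertools import product
from math import factorial

def K(g, s):
    """All s-tuples of nonnegative integers summing to g, lexically ordered."""
    return sorted(k for k in product(range(g + 1), repeat=s) if sum(k) == g)

def J(g, s):
    """Number of elements of K(g): (s+g-1)! / (g! (s-1)!)."""
    return factorial(s + g - 1) // (factorial(g) * factorial(s - 1))

def K_minus(k, i):
    """K_j^i: the tuple k with k_i replaced by k_i - 1 (needs k_i > 0)."""
    if k[i] == 0:
        raise ValueError("K_j^i is only defined when k_i is positive")
    return k[:i] + (k[i] - 1,) + k[i + 1:]

def beta(k, i):
    """Coefficient g!/(k_1! ... k_s!) evaluated on K_j^i(g+1), g = sum(k)-1."""
    reduced = K_minus(k, i)
    denom = 1
    for kj in reduced:
        denom *= factorial(kj)
    return factorial(sum(reduced)) // denom

# Two vertices (s = 2), degree g = 2.
print(K(2, 2), J(2, 2))
# Element (2, 1) of K(3) with s = 2: reduced tuples and coefficients.
print(K_minus((2, 1), 0), beta((2, 1), 0))
print(K_minus((2, 1), 1), beta((2, 1), 1))
```

For a polytope with four vertices, as in the numerical example of this paper, the same helpers give the number of coefficient matrices per polynomial variable at each degree.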

The main result in this section is given in the following Theorem 4.3.

Theorem 4.3

Given a stable 2-D system (1) and a scalar \(\gamma > 0\), a filter (7) exists such that the filtering error system (11) is robustly asymptotically stable and satisfies (14) if there exist matrices

\(\bar{A}_{f},\) \(\bar{B}_{f},\) \(\bar{C}_{f}, \varLambda _{1}=\mathrm{diag}\{\lambda _{1},\;\; \lambda _{3}\}, \varLambda _{2}=\mathrm{diag}\{\lambda _{2},\;\; \lambda _{4}\}\) with \(\lambda _{1}, \lambda _{2}, \lambda _{3}\) and \(\lambda _{4}\) real scalars such that the following LMIs hold for all \(K_{l}(g+1)\in K(g+1)\), \(l=1,\ldots ,J(g+1)\):

where

Then, the homogeneous polynomial matrices \(\bar{P}_{\tau }, M_{\tau }, N_{\tau }, R_{\tau }, Q_{\tau }, S_{\tau }\) and \(F_{\tau }\) assure (22) for all \(\tau \in \varGamma \).

Moreover, if the LMIs of (28) are fulfilled for a given degree \(\bar{g}\), then the LMIs corresponding to any degree \(g>\bar{g}\) are also satisfied.

Proof

\(\mathbf {First\,part}\): Since \(\bar{P}_{K_{j}(g)}>0, K_{j}(g)\in K(g),\; j=1,\ldots ,J(g)\), we know that \(\bar{P}_{\tau }\) defined in (27) is positive definite for all \(\tau \in \varGamma \). Now, note that \(\Xi _{\tau }\) in (22), for \((A_{\tau }, B_{\tau }, C_{\tau }, D_{\tau }, H_{\tau })\in \varOmega \) and \(\bar{P}_{\tau }, M_{\tau }, N_{\tau }, R_{\tau }, Q_{\tau }, S_{\tau }\) and \(F_{\tau }\) given by (27), is a homogeneous polynomial matrix of degree \(g+1\) that can be written as

Condition (28), imposed for all \(l, l=1,\ldots ,J(g+1)\), ensures that \(\Xi _{\tau }<0\) for all \(\tau \in \varGamma \), and thus the first part is proved.

\(\mathbf {Second\,part}\): Suppose that (28) is fulfilled for a certain degree \(\hat{g}\), that is, there exist \(J(\hat{g})\) symmetric positive definite matrices \(\bar{P}_{K_{j}(\hat{g})}\) and matrices \(M_{K_{j}(\hat{g})}, N_{K_{j}(\hat{g})}, Q_{K_{j}(\hat{g})}, S_{K_{j}(\hat{g})}, R_{K_{j}(\hat{g})}, F_{K_{j}(\hat{g})}, j=1,\ldots ,J(\hat{g})\) such that \(\bar{P}_{\tau }\), \(M_{\tau }, N_{\tau }\), \(Q_{\tau }, S_{\tau }, F_{\tau }\) and \(R_{\tau }\) defined in (27) are homogeneous polynomially parameter-dependent matrices assuring \(\Xi _{\tau }<0\). Then, since \(\tau _{1}+\tau _{2}+\cdots +\tau _{s}=1\) on the unit simplex, the terms of the polynomial matrices \(\tilde{\bar{P}}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})\bar{P}_{\tau }, \tilde{M}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})M_{\tau }, \tilde{N}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})N_{\tau }, \tilde{Q}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})Q_{\tau }, \tilde{S}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})S_{\tau }, \tilde{F}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})F_{\tau }\) and \(\tilde{R}_{\tau }=(\tau _{1}+\tau _{2}+\cdots +\tau _{s})R_{\tau }\) also satisfy the inequalities of Theorem 4.3 corresponding to the degree \(\hat{g}+1\), which can be obtained in this case by linear combination of the inequalities of Theorem 4.3 for \(\hat{g}\).
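The degree-elevation step used in the second part can be sketched as follows, assuming the homogeneous form \(\sum _{k\in K(g)}\tau _{1}^{k_{1}}\cdots \tau _{s}^{k_{s}}P_{k}\) for the polynomial matrices and using scalar coefficients for brevity (matrix coefficients are handled identically). The functions `elevate` and `evaluate` are hypothetical helpers. Multiplying by \(\tau _{1}+\cdots +\tau _{s}\), which equals 1 on the unit simplex, raises the degree by one without changing the value of the polynomial there.

```python
def elevate(coeffs, s):
    """Coefficients of (tau_1 + ... + tau_s) * P(tau), one degree higher.

    coeffs maps each s-tuple k in K(g) to its coefficient P_k.
    """
    out = {}
    for k, c in coeffs.items():
        for i in range(s):
            k_up = k[:i] + (k[i] + 1,) + k[i + 1:]
            out[k_up] = out.get(k_up, 0.0) + c
    return out

def evaluate(coeffs, tau):
    """Value of sum_k tau^k * P_k at a given point tau."""
    total = 0.0
    for k, c in coeffs.items():
        term = c
        for t, ki in zip(tau, k):
            term *= t ** ki
        total += term
    return total

# Hypothetical degree-1 polynomial with s = 2: 3*tau_1 - tau_2.
p1 = {(1, 0): 3.0, (0, 1): -1.0}
p2 = elevate(p1, s=2)

tau = (0.3, 0.7)  # a point of the unit simplex
print(p2)
print(evaluate(p1, tau), evaluate(p2, tau))
```

Each elevated coefficient is a sum of lower-degree coefficients, which is why a feasible solution at degree \(\hat{g}\) yields a feasible solution at degree \(\hat{g}+1\).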

Remark 4.4

When the scalars \(\lambda _{1}, \lambda _{2}, \lambda _{3}\) and \(\lambda _{4}\) of Theorem 4.3 are fixed to be constants, then (28) is an LMI which is effectively linear in the variables. To select values for these scalars, optimization can be used (for example fminsearch in MATLAB) to optimize some performance measure (for example \(\gamma \), the disturbance attenuation level).

Remark 4.5

As the degree g of the polynomial increases, the conditions become less conservative since new free variables are added to the LMIs. Although the number of LMIs also increases, each LMI becomes easier to satisfy due to the extra degrees of freedom provided by the new free variables; as a consequence, better \(H_{\infty }\) guaranteed costs can be obtained.

5 Numerical Example

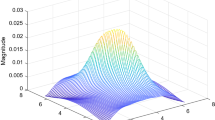

Consider the following 2-D static field model described by differential equation (El-Kasri et al. 2012):

where \(\eta (i,j)\) is the state of the random field at spatial coordinate \((i,j), \omega _{1}(i,j)\) is a noise input, and \(\tau _{1}\), \(\tau _{2}\) are the vertical and horizontal correlative coefficients of the random field, respectively, satisfying \(\tau _{1}^{2}<1\) and \(\tau _{2}^{2}<1\). The output is then:

where \(\omega _{2}(i,j)\) is the measurement noise. The signal to be estimated is

As in Du et al. (2002), define \(x^{h}(i,j)=\eta (i,j+1)-\tau _{2}\eta (i,j), x^{v}(i,j)=\eta (i,j)\) and \(\omega (i,j)=[\omega ^\mathrm{T}_{1}(i,j) \;\; \omega ^\mathrm{T}_{2}(i,j)]^\mathrm{T}\). It is easy to see that (30)–(32) can be converted into a 2-D Roesser model as follows:

where \(0.15\le \tau _{1}\le 0.45, 0.35\le \tau _{2}\le 0.85\). The uncertain 2-D system corresponds to a four vertex polytopic system.
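The four vertices follow directly from the two parameter intervals. The sketch below enumerates them and computes, for an interior point, convex weights on the unit simplex that reproduce it; the function name `simplex_weights` is hypothetical, and the product-form (bilinear) weights are one convenient choice among many, not necessarily the one used by the authors.

```python
from itertools import product

tau1_range = (0.15, 0.45)
tau2_range = (0.35, 0.85)

# Vertices of the parameter box, ordered (lo,lo), (lo,hi), (hi,lo), (hi,hi).
vertices = list(product(tau1_range, tau2_range))

def simplex_weights(tau1, tau2):
    """Nonnegative weights summing to 1 that reproduce (tau1, tau2)."""
    a = (tau1 - tau1_range[0]) / (tau1_range[1] - tau1_range[0])
    b = (tau2 - tau2_range[0]) / (tau2_range[1] - tau2_range[0])
    return [(1 - a) * (1 - b), (1 - a) * b, a * (1 - b), a * b]

weights = simplex_weights(0.30, 0.60)
point = tuple(
    sum(w * v[d] for w, v in zip(weights, vertices)) for d in range(2)
)
print(vertices)
print(weights, point)
```

With these weights, any system matrix whose entries are affine in \(\tau _{1}\) and in \(\tau _{2}\) separately is the same convex combination of its four vertex values.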

The LMIs (28) were solved using Yalmip and SeDuMi in MATLAB 7.6, for increasing values of the degree g:

For degree \(g=0\), the proposed optimization gives \(\lambda _{1}=-0.1064, \lambda _{2}=0.0228, \lambda _{3}=0.0027\) and \(\lambda _{4}=-0.0002\). For these scalars, \(\gamma =2.4342\) and the corresponding filter matrices are:

The \(H_{\infty }\) norms obtained with this filter at the vertices of the uncertainties are given in Table 1.

On the other hand, for \(g=1\), with \(\lambda _{1}=-20.3646, \lambda _{2}=0.0662,\) \(\lambda _{3}=0.6369\) and \(\lambda _{4}=-0.1645\), the disturbance attenuation obtained is \(\gamma =1.8043\) and the corresponding filter matrices are:

The \(H_{\infty }\) norms at the vertices are now given in Table 2.

For degree \(g=2\), with \(\lambda _{1}=-3.3940, \lambda _{2}=0.0696, \lambda _{3}=0.6291\) and \(\lambda _{4}=-0.1640\), the disturbance attenuation obtained is \(\gamma =1.8042\) and the corresponding filter matrices are:

For the filter designed with \(g=2\), the actual \(H_{\infty }\) norms calculated at the four extreme plants are presented in Table 3: it can be seen that all of them are below the guaranteed bound 1.8042.

In summary, it has been shown that less conservative filter designs are achieved as g grows by applying the polynomially parameter-dependent method proposed here.

A comparison with the results obtained with the techniques proposed in Gao and Li (2014), Ying and Rui (2011) and Gao et al. (2008) is presented in Table 4, showing the improvement obtained with the methodology proposed in this paper.

The number of LMIs, the number of scalar variables and the CPU time needed to solve the LMIs are compared in Table 5. It must be pointed out that, for this example, increasing the polynomial degree to \(g > 2\) does not improve the noise attenuation.

6 Conclusion

This article has investigated the \(H_{\infty }\) filtering problem for 2-D discrete systems described by uncertain Roesser models. A new condition for \(H_{\infty }\) performance analysis has been proposed in the LMI framework, by using a Lyapunov function approach and adding slack matrix variables with specific structures that make it possible to reduce the conservatism of previous works. A numerical example illustrates the effectiveness of the proposed method. As future research, we will apply this technique to nonlinear systems based on fuzzy dynamic models, using the sum of squares (SOS) technique for these systems.

References

Benhayoun, M., Mesquine, F., & Benzaouia, A. (2013). Delay-dependent stabilizability of 2D delayed continuous systems with saturating control. Circuits Systems and Signal Processing, 32(6), 2723–2743.

Boukili, B., Hmamed, A., Benzaouia, A., & El Hajjaji, A. (2013). \(H_{\infty }\) filtering of two-dimensional T–S fuzzy systems. Circuits Systems and Signal Processing, 33(6), 1737–1761.

Boukili, B., Hmamed, A., Benzaouia, A., & El Hajjaji, A. (2014a). \(H_{\infty }\) state control for 2D fuzzy FM systems with stochastic perturbation. Circuits Systems and Signal Processing, 34(3), 779–796.

Boukili, B., Hmamed, A., & Tadeo, F. (2014b). Robust \(H_{\infty }\) filtering of 2D discrete Fornasini-Marchesini systems. International Journal on Sciences and Techniques of Automatic Control & Computer Engineering (IJ-STA), 8(1), 1998–2011.

Chen, S. F., & Fong, I. K. (2006). Robust \(H_{\infty }\) filtering for 2D state-delayed systems with NFT uncertainties. IEEE Transactions on Signal Processing, 54(1), 274–285.

Dey, A., Kokil, P., & Kar, H. (2012). Stability of two-dimensional digital filters described by the Fornasini–Marchesini second model with quantisation and overflow. IET Signal Processing, 6(7), 641–647. doi:10.1049/iet-spr.2011.0265.

Du, C., Xie, L., & Soh, Y. C. (2000). \(H_{\infty }\) filtering of 2D discrete systems. IEEE Transactions on Signal Processing, 48(6), 1760–1768.

Du, C., Xie, L., & Zhang, C. (2001). \(H_{\infty }\) control and robust stabilization of two-dimensional systems in Roesser models. Automatica, 37, 205–211.

Du, C., Xie, L., & Zhang, C. (2002). \(H_{\infty }\) control and robust stabilization of two-dimensional systems in Roesser models. Berlin: Springer.

El-Kasri, C., Hmamed, A., Alvarez, T., & Tadeo, F. (2012). Robust \(H_{\infty }\) filtering of 2D Roesser discrete systems: A polynomial approach. Mathematical Problems in Engineering, 2012(2012), 521675. doi:10.1155/2012/521675.

El-Kasri, C., Hmamed, A., & Tadeo, F. (2013a). Reduced-order \(H_{\infty }\) filters for uncertain 2D continuous systems, via LMIs and polynomial matrices. Circuits Systems and Signal Processing, 33(4), 1189–1214.

El-Kasri, C., Hmamed, A., Tissir, E. H., & Tadeo, F. (2013b). Robust \(H_{\infty }\) filtering for uncertain two-dimensional continuous systems with time-varying delays. Multidimensional Systems and Signal Processing, 24(4), 685–706.

Fornasini, E., & Marchesini, G. (1976). State-space realization theory of two-dimensional filters. IEEE Transactions on Automatic Control, 21(4), 484–492.

Fornasini, E., & Marchesini, G. (1978). Doubly-indexed dynamical systems: State-space models and structural properties. Mathematical Systems Theory, 12(1), 59–72.

Gao, C. Y., Duan, G. R., & Meng, X. Y. (2008). Robust \(H_{\infty }\) filter design for 2D discrete systems in Roesser model. International Journal of Automation and Computing, 5(4), 413–418.

Gao, H., & Li, X. (2011). \(H_{\infty }\) filtering for discrete-time state-delayed systems with finite frequency specifications. IEEE Transactions on Automatic Control, 56(12), 2935–2941.

Gao, H., & Li, X. (2014). Robust filtering for uncertain systems: A parameter-dependent approach. Switzerland: Springer International Publishing. doi:10.1007/978-3-319-05903-7.

Gao, H., & Wang, C. (2004). A delay-dependent approach to robust \(H_{\infty }\) filtering for uncertain discrete-time state-delayed systems. IEEE Transactions on Signal Processing, 52(6), 1631–1640.

Hmamed, A., Alfidi, M., Benzaouia, A., & Tadeo, F. (2008). LMI conditions for robust stability of 2D linear discrete-time systems. Mathematical Problems in Engineering, 2008, 356124.

Hmamed, A., Kasri, C. E., Tissir, E. H., Alvarez, T., & Tadeo, F. (2013). Robust \(H_{\infty }\) filtering for uncertain 2D continuous systems with delays. International Journal of Innovative Computing Information and Control, 9(5), 2167–2183.

Hmamed, A., Mesquine, F., Tadeo, F., Benhayoun, M., & Benzaouia, A. (2010). Stabilization of 2D saturated systems by state feedback control. Multidimensional Systems and Signal Processing, 21, 277–292.

Kokil, P., Dey, A., & Kar, H. (2012). Stability of 2-D digital filters described by the Roesser model using any combination of quantization and overflow nonlinearities. Signal Processing. doi:10.1016/j.sigpro.2012.05.016.

Lacerda, M. J., Oliveira, R. C. L. F., & Peres, P. L. D. (2011). Robust \(H_{2}\) and \(H_{\infty }\) filter design for uncertain linear systems via LMIs and polynomial matrices. Signal Processing, 91, 1115–1122.

Li, X., & Gao, H. (2012). Robust finite frequency \(H_{\infty }\) filtering for 2D Roesser systems. Automatica, 48, 1163–1170.

Li, X., Gao, H., & Wang, C. (2012). Generalized Kalman–Yakubovich–Popov lemma for 2D FM LSS model. IEEE Transactions on Automatic Control, 57(12), 3090–3103.

Meng, X., & Chen, T. (2014). Event triggered robust filter design for discrete-time systems. IET Control Theory Applications, 8(2), 104–113.

Qiu, J., Ding, S., Gao, H., & Yin, S. (2015a). Fuzzy-model-based reliable static output feedback \(H_{\infty }\) control of nonlinear hyperbolic PDE systems. IEEE Transactions on Fuzzy Systems. doi:10.1109/TFUZZ.2015.2457934.

Qiu, J., Gao, H., & Ding, S. X. (2015b). Recent advances on fuzzy-model-based nonlinear networked control systems: A survey. IEEE Transactions on Industrial Electronics. doi:10.1109/TIE.2015.2504351.

Qiu, J., Hui, H., Lu, Q., & Gao, H. (2013). Nonsynchronized robust filtering design for continuous-time T–S fuzzy affine dynamic systems based on piecewise Lyapunov functions. IEEE Transactions on Cybernetics, 43(6), 1755–1766.

Qiu, J., Wei, Y., & Karimi, H. R. (2015c). New approach to delay-dependent \(H_{\infty }\) control for continuous-time Markovian jump systems with time-varying delay and deficient transition descriptions. Journal of the Franklin Institute, 352(1), 189–215.

Roesser, R. (1975). A discrete state-space model for linear image processing. IEEE Transactions on Automatic Control, 20(1), 1–10.

Takagi, T. (1985). Fuzzy identification of systems and its applications to modeling and control. IEEE Transactions on Systems, Man and Cybernetics, SMC–15(1), 116–132.

Wang, T., Gao, H., & Qiu, J. (2015). A combined adaptive neural network and nonlinear model predictive control for multirate networked industrial process control. IEEE Transactions on Neural Networks and Learning Systems. doi:10.1109/TNNLS.2015.2411671.

Wu, L., Shi, P., Gao, H., & Wang, C. (2008). \(H_{\infty }\) filtering for 2D Markovian jump systems. Automatica, 44(7), 1849–1858.

Xia, Y., & Jia, Y. (2002). Robust stability functionals of state delayed systems with polytopic type uncertainties via parameter-dependent Lyapunov functions. International Journal of Control, 75, 1427–1434.

Xu, S., Lam, J., Zou, Y., Lin, Z., & Paszke, W. (2005). Robust \(H_{\infty }\) filtering for uncertain 2D continuous systems. IEEE Transactions on Signal Processing, 53(5), 1731–1738.

Xu, H., Zou, Y., Xu, S., & Guo, L. (2008). Robust \(H_{\infty }\) control for uncertain two-dimensional discrete systems described by the general model via output feedback controllers. International Journal of Control Automation, and Systems, 6(5), 785–791.

Ying, Z., & Rui, Z. (2011). A new approach to robust \(H_{\infty }\) filtering for 2D systems in Roesser model. In Proceedings of the 30th Chinese control conference, Yantai, China (pp. 22–24).

Boukili, B., Hmamed, A. & Tadeo, F. Robust \(H_{\infty }\) Filtering for 2-D Discrete Roesser Systems. J Control Autom Electr Syst 27, 497–505 (2016). https://doi.org/10.1007/s40313-016-0251-5

DOI: https://doi.org/10.1007/s40313-016-0251-5