Abstract

Epilepsy is a well-known nervous system disorder characterized by seizures. Electroencephalograms (EEGs), which capture the brain's neural activity, can be used to detect epilepsy. Traditional methods for analyzing an EEG signal for epileptic seizure detection are time-consuming. Recently, several automated seizure detection frameworks using machine learning techniques have been proposed to replace these traditional methods. The two basic steps involved in machine learning are feature extraction and classification. Feature extraction reduces the input pattern space by keeping informative features, and the classifier assigns the appropriate class label. In this paper, we propose two effective approaches involving subpattern-based PCA (SpPCA) and cross-subpattern correlation-based PCA (SubXPCA) with a Support Vector Machine (SVM) for automated seizure detection in EEG signals. Feature extraction was performed using SpPCA and SubXPCA. Both techniques explore the subpattern correlation of EEG signals, which helps in the decision-making process. The SVM is used to classify seizure and non-seizure EEG signals and was trained with a radial basis function kernel. All experiments were carried out on the benchmark epilepsy EEG dataset, which consists of 500 EEG signals recorded under different scenarios. Seven different experimental cases for classification were conducted. The classification accuracy was evaluated using tenfold cross validation. The classification results of the proposed approaches were compared with those of some existing techniques from the literature to establish the claim.

Introduction

Epilepsy is a neurological disorder characterized by seizures that can affect people of all ages. An estimated 40–50 million people worldwide have this disorder, as reported by the World Health Organization [1]. The electroencephalogram (EEG) was introduced by Berger [2] and is used to measure the brain's electrical activity. One of the major applications of EEG in clinical diagnosis is the detection of epileptic seizures [3, 4].

Analysis of an EEG signal is a challenging task. Visual inspection of an EEG signal for seizure detection is time-consuming and error-prone. Hence, an automated seizure detection framework with high accuracy is needed. The two basic steps in the seizure detection methods proposed in the literature are feature extraction and classification. In the feature extraction step, important attributes of the signal are collected, and these extracted features are then given as input to the classifier. Some of the methods proposed in the literature are discussed below.

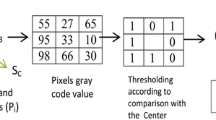

A combined approach with time–frequency (t–f) domain features and an Elman neural network was proposed by Srinivasan et al. [5]. In this approach, various t–f features such as frequency, dominant frequency and spike rhythmicity were extracted. The system was tested with different combinations of these extracted features for seizure detection. Adeli and colleagues [6] used a wavelet-analysis-based feature extraction technique and a wavelet-chaos-neural network. Polat et al. [7] introduced a hybrid model for seizure detection in which the fast Fourier transform and a decision tree were used for feature extraction and classification, respectively. Wavelet transforms with different classifiers and the use of entropies with extreme learning machines have been reported by many researchers [8,9,10,11,12,13,14]. Computation of prediction error and power spectral density has been suggested for seizure detection [15, 16]. Principal component analysis (PCA) is used for dimensionality reduction by projecting data in the directions of maximum variation. For seizure detection, PCA with a neural network has been proposed [14]. Wavelet transform, PCA, independent component analysis (ICA) and linear discriminant analysis (LDA) with SVM have been reported for EEG signal classification [18]. Local binary pattern (LBP) is a feature extraction technique mostly used in the field of texture classification. Recently, it has been applied with different classifiers for EEG signal classification [19]. Features extracted through fractional linear prediction (FLP) and the Hilbert–Huang transform (HHT) have been fed to SVM for classification of EEG signals. FLP has been used to compute the prediction error energy; this error energy, along with the signal energy, formed the feature vectors for classification [20]. Features such as mean and skewness were extracted from EEG signals through HHT and then used for classification [11].

Even though a number of methods have been proposed for seizure detection in recent years, the subpattern correlation between EEG signals has not been explored broadly. Subpattern correlation plays an important role in capturing informative features in a local subpattern set, which can then be used in the decision-making process. In a recorded EEG signal, each action or abnormality possesses some unique pattern. SpPCA and SubXPCA can be used to extract these hidden patterns for signal classification. The effectiveness of subpattern-based feature reduction techniques for seizure detection in EEG signals has not been investigated so far. In this study, two effective approaches called SpPCA and SubXPCA are applied for feature extraction [21, 22]. Both methods explore the subpattern correlation between EEG signals in each subpattern set. Once the feature extraction step is over, the feature vectors are fed to an SVM and the classification is performed. SVM has been widely used for classification of non-stationary signals, including EEG signals [18, 23]. The experiments have been carried out with the benchmark epilepsy dataset. To evaluate the performance of the proposed approaches, tenfold cross validation is used and the classification accuracy is recorded.

The remainder of the paper is organized as follows. The methodology and materials used are described in “Methodology and materials”. Experimental results are shown in “Experimental results and discussion”. Finally, “Conclusions and future work” concludes the article and outlines future directions.

Methodology and materials

Since its invention, PCA has been used in many applications, including EEG signal processing. The abnormality or disorder recorded in an EEG signal possesses certain unique patterns, and capturing these hidden patterns is crucial for correct diagnosis. PCA focuses on the extraction of global features, and hence its capability for detecting these unique patterns is limited. SpPCA and SubXPCA, on the other hand, divide the input pattern set into subpattern sets and extract features from each of the subsets locally. As a result, both techniques capture the hidden unique patterns, and the chances of a correct diagnosis are improved. In addition, time and space complexities play an important role in evaluating effectiveness in real-time applications. PCA is well known to have high time and space complexities, whereas the time and space complexities of these partition-based PCA techniques are lower than those of PCA.

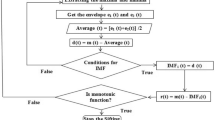

In this study, we have applied these two techniques with SVM for classification of seizure and non-seizure EEG signals. In both techniques, the input patterns are divided into subpattern sets. An example of a subpattern is shown in Fig. 1.

In SpPCA, features are extracted by applying PCA to each of the subpattern sets. Once feature extraction from these subpattern sets is over, the extracted features are combined in accordance with the partition sequence of the patterns to form the final feature vectors. SubXPCA is a two-step process. Its first step is identical to SpPCA (Fig. 2) and focuses on extracting the local variation of the subpattern sets [24]. In the second step, PCA is performed on the features extracted in the first step to further reduce the dimensionality and to extract the global features (Fig. 3).

SubXPCA

The steps involved in SubXPCA are as follows:

1. The mean-corrected input EEG signals \(X_{N*d}\) are divided into L (\(L\ge 2\)) non-overlapping subpattern sets of equal size, so the dimension of each subpattern reduces to \(r=d/L.\) For each subpattern set \(X_{i},\) where \(i=1\ldots{L},\) repeat the following operations:

(a) Find the covariance matrix, \((C_{i})_{r*r}.\)

(b) Calculate the eigenvalues (\(\lambda ^{i}_{j}\)) and corresponding eigenvectors (\(e^{i}_{j}\)), for \(j=1\ldots{r}.\)

(c) Select the k (\(k\le r\)) largest eigenvalues and find the corresponding eigenvectors. Let \(E_{i}\) denote the set of these k selected eigenvectors.

(d) The local PCs for the subpattern set \(X_{i}\) are obtained by projecting it onto \(E_{i}.\) The set of local PCs (\(Y_{i}\)) is given by

$$\begin{aligned} (Y_{i})_{N*k}=(X_{i})_{N*r}(E_{i})_{r*k} \end{aligned} \tag{1}$$

(e) Concatenate \(Y_{i},\) \(\forall i=1\ldots{L},\) in accordance with the partition sequence followed in step 1. Let Y be the set obtained after concatenation.

$$\begin{aligned} Y_{N*Lk}= \mathrm{concatenate}((Y_{i})_{N*k}) \end{aligned} \tag{2}$$

2. This step consists of applying PCA to the data obtained in step 1.

(a) Find the covariance matrix, \((C^{F})_{Lk*Lk},\) for the data Y.

(b) Calculate the eigenvalues (\(\lambda ^{F}_{j}\)) and corresponding eigenvectors (\(e^{F}_{j}\)), for \(j=1\ldots{Lk}.\)

(c) Select the w (\(w < Lk\)) largest eigenvalues and find the corresponding eigenvectors. Let \(E^{F}\) denote the set of these w selected eigenvectors.

(d) The final projection Z is obtained by projecting Y onto \(E^{F},\) i.e.

$$\begin{aligned} (Z)_{N*w}=Y_{N*Lk}(E^{F})_{Lk*w} \end{aligned} \tag{3}$$
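The two steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' MATLAB implementation: the function names `pca_project`, `sppca` and `subxpca` are hypothetical, the partition is assumed to be contiguous, and d is assumed to be divisible by L.

```python
import numpy as np

def pca_project(X, n_components):
    """Project mean-corrected rows of X onto the top eigenvectors of its covariance."""
    Xc = X - X.mean(axis=0)                       # mean correction
    C = np.atleast_2d(np.cov(Xc, rowvar=False))   # covariance matrix (dim x dim)
    eigvals, eigvecs = np.linalg.eigh(C)          # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    E = eigvecs[:, order]                         # selected eigenvectors as columns
    return Xc @ E                                 # Eq. (1) / Eq. (3) style projection

def sppca(X, L, k):
    """Step 1: PCA on each of L contiguous subpattern sets, then concatenate (Eq. 2)."""
    N, d = X.shape
    if d % L != 0:
        raise ValueError("d must be divisible by L for equal-size subpatterns")
    parts = np.split(X, L, axis=1)                # L blocks of shape (N, r), r = d / L
    return np.hstack([pca_project(P, k) for P in parts])   # shape (N, L * k)

def subxpca(X, L, k, w):
    """Step 2: PCA on the concatenated local PCs, keeping w global components."""
    Y = sppca(X, L, k)
    return pca_project(Y, w)                      # shape (N, w)
```

With N signals of length d stacked as rows of `X`, `sppca(X, L=8, k=5)` would give the feature set Y and `subxpca(X, L=8, k=5, w=20)` the feature set Z; the values of k and w here are placeholders, not the settings reported in the paper.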

Subpattern formation

The partition of patterns into equal-size subpattern sets must be carried out such that loss of pattern information is avoided or minimized. The subpattern formation can be done contiguously or randomly. In this research, a contiguous partitioning approach has been followed (Fig. 1).
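The difference between the two partitioning options can be illustrated with feature indices. The helper `partition_indices` below is a hypothetical name, and the random variant (a seeded permutation of the d positions) is only one possible reading of "randomly".

```python
import numpy as np

def partition_indices(d, L, contiguous=True, seed=0):
    """Return L equal-size index groups over the d positions of a pattern."""
    if d % L != 0:
        raise ValueError("d must be divisible by L")
    idx = np.arange(d)
    if not contiguous:
        # Assumed interpretation of random partitioning: shuffle positions first.
        idx = np.random.default_rng(seed).permutation(d)
    return np.split(idx, L)

# Contiguous partition of an 8-sample pattern into 4 subpatterns:
# positions (0, 1), (2, 3), (4, 5), (6, 7)
groups = partition_indices(8, 4)
```

Either way, every position belongs to exactly one subpattern, so no part of the signal is dropped.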

Selection of projection vectors (k, w)

In both approaches, i.e., SpPCA and SubXPCA, the number of eigenvectors of the covariance matrix to retain must be selected. The two basic approaches for selecting the number of projection vectors (PVs) are as follows: (1) selecting a fixed number of eigenvectors for projection; (2) setting a threshold (\(\delta\)) on the total variation.
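The threshold-based option can be sketched as follows, assuming that \(\delta\) is interpreted as the fraction of total variance (sum of eigenvalues) to retain; the paper does not spell out this convention, and `n_components_for_threshold` is a hypothetical helper name.

```python
import numpy as np

def n_components_for_threshold(eigvals, delta):
    """Smallest number of leading eigenvalues whose cumulative share reaches delta."""
    vals = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # descending order
    ratio = np.cumsum(vals) / vals.sum()                     # cumulative variance share
    n = int(np.searchsorted(ratio, delta)) + 1               # first index reaching delta
    return min(n, len(vals))

# Eigenvalues 4, 3, 2, 1 have cumulative shares 0.4, 0.7, 0.9, 1.0,
# so a threshold of 0.8 keeps three projection vectors.
print(n_components_for_threshold([4, 3, 2, 1], 0.8))
```

A fixed-number selection simply skips this computation and uses the same k for every subpattern set.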

SpPCA

As mentioned earlier, SpPCA consists of all the operations performed in step 1 of SubXPCA. After applying SpPCA, the feature set Y obtained (Fig. 2) is used for classification. In the case of SubXPCA, however, the feature set Z (Fig. 3) is used for classification.

Time complexity of PCA, SpPCA and SubXPCA

Let \(X_{1},\) \(X_{2},\) …, \(X_{N}\) be the N input patterns, each having dimension d. For PCA, the time complexity of determining the covariance matrix is given by:

In the case of SpPCA and SubXPCA, the input patterns are divided into L subpattern sets, so the dimension of each subpattern set is \(N*r,\) where \(r=d/L.\) The time complexity of computing the covariance matrices using SpPCA is:

The second step of SubXPCA involves the computation of an additional covariance matrix of dimension \(Lk*Lk.\) So the time complexity of SubXPCA is:

From the above three equations it can be proved that \(T(SpPCA)\le T(PCA)\) and \(T(SubXPCA)\le T(PCA).\)
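A rough sketch of these costs, assuming that forming a covariance matrix from N patterns of dimension m takes \(O(Nm^{2})\) operations and ignoring the eigendecomposition, is given below. These expressions are a standard estimate under that assumption and may differ in detail from the analysis in [24].

$$\begin{aligned} T(PCA)&=O(Nd^{2}) \\ T(SpPCA)&=L\cdot O(Nr^{2})=O\left( \frac{Nd^{2}}{L}\right) \\ T(SubXPCA)&=O\left( \frac{Nd^{2}}{L}\right) +O\left( N(Lk)^{2}\right) \end{aligned}$$

Under this estimate, \(T(SpPCA)\le T(PCA)\) holds for any \(L\ge 1,\) while \(T(SubXPCA)\le T(PCA)\) holds when \(L^{2}k^{2}\le d^{2}(1-1/L),\) which is satisfied when the number of retained local components \(Lk\) is small relative to d.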

Space complexity of PCA, SpPCA and SubXPCA

As \(X_{i},\) where \(i=1\dots{N},\) represents the set of N input patterns, each having dimension d, the space complexity of PCA, accounting for the input pattern set (\(N*d\)), covariance matrix (\(d*d\)), eigenvalues and eigenvectors (\(d*d\)) and principal components (\(N*p\)), is given as:

where p is the number of principal components.

In the case of SpPCA, the input pattern set is divided into L subpattern sets, reducing the dimension of each pattern in a subpattern set to r, where \(r=d/L.\) The space complexity of SpPCA is:

The first step of SubXPCA is identical to SpPCA. The second step, however, involves the computation of an additional covariance matrix of the feature set obtained in step one. The dimension of the feature set obtained after step one is \(N*Lk,\) and the size of its covariance matrix is \(Lk*Lk.\) If w eigenvectors are chosen in the second step for projection, then the dimension of the final feature set obtained by SubXPCA is \(N*w.\) The space complexity of SubXPCA is given by,

From the above three equations, it can be proved that \(S(SubXPCA)\le S(PCA)\) and \(S(SpPCA) \le S(PCA)\).
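One plausible accounting of these storage costs, built from the terms itemized above, is sketched below. It assumes that the covariance and eigenvector matrices of all L subpattern sets are kept in memory at once; processing the subpattern sets one at a time would reduce the \(d^{2}/L\) terms further, and the exact expressions in [24] may differ.

$$\begin{aligned} S(PCA)&=O(Nd+d^{2}+d^{2}+Np) \\ S(SpPCA)&=O\left( Nd+\frac{d^{2}}{L}+\frac{d^{2}}{L}+NLk\right) \\ S(SubXPCA)&=S(SpPCA)+O\left( (Lk)^{2}+(Lk)^{2}+Nw\right) \end{aligned}$$

Under this accounting, the covariance and eigenvector terms of SpPCA shrink by a factor of L relative to PCA, which is the dominant saving when d is large compared with \(Lk.\)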

Support Vector Machine (SVM)

SVM is a supervised classification method used for binary classification [25].

Let S be the set of training data having dimension d,

Here n represents the number of samples, \(c_{i}\) is the class label of input feature vector \(x_{i} \in R^{d}\) with \(c_i\in \left\{ 1,-1 \right\}.\) The decision boundary satisfies the following equation,

The optimal hyperplane can be obtained by solving the following equation:

The decision function can be expressed as follows [21]:

where \(\alpha _{i}\) is the Lagrange multiplier and \(F(x, x_{i})\) is the kernel function.

To achieve linear separation between classes, the kernel function transforms the input feature vector into a high-dimensional feature space. Different kernel functions can be used with SVM. We have used the radial basis function (RBF) kernel for classification. For the RBF kernel,

where \(\sigma\) is a free parameter that controls the width of the kernel.
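In standard soft-margin notation, which is assumed here rather than taken from the paper's own typesetting, the quantities described in this section can be written as follows. The weight vector \(w,\) bias \(b,\) slack variables \(\xi _{i}\) and penalty parameter \(C\) are the usual textbook symbols.

$$\begin{aligned} S&=\left\{ (x_{i},c_{i})\mid x_{i}\in R^{d},\ c_{i}\in \{1,-1\}\right\} _{i=1}^{n} \\ w\cdot x+b&=0 \\ \min _{w,b,\xi }\ &\frac{1}{2}\Vert w\Vert ^{2}+C\sum _{i=1}^{n}\xi _{i}\quad \text {subject to}\quad c_{i}(w\cdot x_{i}+b)\ge 1-\xi _{i},\ \xi _{i}\ge 0 \\ f(x)&=\mathrm {sign}\left( \sum _{i=1}^{n}\alpha _{i}c_{i}F(x,x_{i})+b\right) \\ F(x,x_{i})&=\exp \left( -\frac{\Vert x-x_{i}\Vert ^{2}}{2\sigma ^{2}}\right) \end{aligned}$$

The first line is the training set, the second the decision boundary, the third the optimization problem whose solution gives the optimal hyperplane, the fourth the kernelized decision function, and the last the RBF kernel with width \(\sigma.\)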

Dataset

This research is carried out with the publicly available EEG dataset [27] provided by the Department of Epileptology at Bonn University, Germany (see Notes). The dataset comprises five groups, named A to E. The standard 10–20 electrode placement system was followed for signal capture. Each group contains 100 single-channel EEG signals. Each signal was recorded for 23.6 s with a 128-channel amplifier system using a common average reference. All signals were digitized with a 12-bit A/D converter at a sampling frequency of 173.6 Hz. Groups A and B were taken from surface EEG recordings of five healthy volunteers while their eyes were open and closed, respectively. The signals in groups C and D were recorded from patients before an epileptic attack, from the hippocampal formation of the opposite hemisphere and from the epileptogenic zone, respectively. The EEG signals in group E were recorded from patients during seizure activity. We have used all five groups for classification of seizure and non-seizure EEG signals. An EEG signal from each group is shown in Fig. 4.

Experimental results and discussion

This section includes the experimental outcomes and analysis of results after applying PCA, SpPCA, and SubXPCA.

Results

The subpattern sets are formed by dividing the EEG signals into L non-overlapping parts of equal size. Once the subpattern sets are obtained, SpPCA and SubXPCA are applied for feature extraction. The extracted feature vectors are then fed to the SVM for classification of seizure and non-seizure signals. In SpPCA and in the first step of SubXPCA, k projection vectors are selected from each subpattern set. The second step of SubXPCA is performed by applying PCA to the feature set obtained in its first step. The projection vectors in the second step of SubXPCA are selected by setting a threshold \(\delta\) on the variation of the feature set obtained from step one. In this study, we have used the epilepsy time series EEG dataset, which has five groups (A–E). The experimental classification is performed for ten different cases.

k-fold cross validation: This is a well-known technique for evaluating model performance. It is performed by partitioning the entire dataset into k equal subparts. One of the k subparts is taken as the testing set and the remaining \(k-1\) subparts as the training set. In the next iteration, another subpart is taken as the testing set and the remaining subparts as the training set. In this way, training and testing are repeated k times [28]. For each experimental case, we have performed tenfold cross validation.

In this research, we have used the built-in MATLAB functions svmtrain and svmclassify for training and classifying the feature vectors of EEG signals, respectively. The SVM is trained with the RBF kernel. The best classification accuracy is obtained when the SVM parameters (C and \(\sigma\)) are set to 1. The crossvalind function has been used for random selection of the training and testing sets for cross validation. We have tested the proposed approaches with different numbers of subpattern sets, including 4, 8, 16, etc. The highest classification accuracy is obtained when the number of subpattern sets is equal to 8.
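The fold rotation described above can be sketched with the Python standard library alone. This is an illustration of the splitting logic only, not of MATLAB's crossvalind; the generator name `k_fold_indices` and the fixed seed are assumptions.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists; each fold serves as the test set exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # random assignment of samples to folds
    folds = [indices[i::k] for i in range(k)]     # k nearly equal subparts
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test

# For a two-group case such as A-E (200 signals), each of the ten iterations
# trains on 180 signals and tests on the remaining 20.
splits = list(k_fold_indices(200, k=10))
```

The accuracy for an experimental case would then be averaged over the k test folds.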

The classification accuracies achieved by SpPCA and SubXPCA with different numbers of projection vectors (PVs) are presented in Tables 1, 2, 3, 4, 5, 6, 7.

The classification accuracy of SubXPCA, SpPCA, and PCA for different experimental cases is shown in Fig. 5.

Statistical parameters such as sensitivity (Sen) and specificity (Spe) for the highest classification accuracy (Acc) achieved with SpPCA and SubXPCA for the different experimental cases are shown in Table 8.

where Tp (True Positive): correctly identified seizure signals, Tn (True Negative): correctly identified non-seizure signals, Fp (False Positive): incorrectly marked as seizure signals and Fn (False Negative): incorrectly marked as non-seizure signals.
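Using the standard definitions of these measures in terms of Tp, Tn, Fp and Fn, the computation can be sketched as below. The function name `seizure_metrics` and the counts in the usage line are hypothetical and are not results from the paper.

```python
def seizure_metrics(tp, tn, fp, fn):
    """Return sensitivity, specificity and accuracy as percentages."""
    sen = 100.0 * tp / (tp + fn)                    # share of seizure signals detected
    spe = 100.0 * tn / (tn + fp)                    # share of non-seizure signals kept
    acc = 100.0 * (tp + tn) / (tp + tn + fp + fn)   # share of all signals classified correctly
    return sen, spe, acc

# Hypothetical counts for a 200-signal case: one seizure signal is missed.
sen, spe, acc = seizure_metrics(tp=99, tn=100, fp=0, fn=1)
```

Reporting all three measures matters for imbalanced cases such as ABCD–E, where a high accuracy alone could hide poor sensitivity on the smaller seizure class.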

Performance comparison between different classifiers

Nearest Neighbor (NN), Decision Tree (DT), SVM, and Naive Bayes (NB) are some of the popular classifiers in machine learning and data mining [29]. The mean classification accuracy over all seven experimental cases (A–E, B–E, C–E, D–E, AB–E, CD–E, and ABCD–E) is computed for each of these classifiers (NN with the Euclidean distance measure, SVM with the RBF kernel, DT, and NB). The experimental results are shown in Fig. 6. It can be seen in Fig. 6 that SpPCA and SubXPCA achieved higher classification accuracy with SVM than with any other classifier.

SpPCA and SubXPCA for multi-class classification

As mentioned earlier, epileptic seizure detection is a binary classification problem in which the task is to classify the input EEG signal as either a seizure or a non-seizure signal. In addition to the seven experimental cases considered above, another set of experiments involving multiple classes has been conducted to assess the effectiveness of the proposed methods. The classification accuracies obtained are shown in Tables 9, 10, 11. The classification accuracy of SubXPCA, SpPCA, and PCA for these experimental cases is shown in Fig. 7.

Discussion

The following observations were made from the experimental results. For classification of seizure and non-seizure EEG signals, SpPCA and SubXPCA have shown better classification accuracy than PCA. A comparison between PCA and SpPCA (Fig. 5) shows that, in most cases, SpPCA achieved better classification accuracy than PCA with the same number of projection vectors. It can also be observed that, as the number of projection vectors varies, the classification accuracy achieved by SpPCA is more consistent than that of PCA. SubXPCA has shown superiority over PCA and SpPCA by achieving better classification accuracy with fewer projection vectors. Even though setting a single threshold (\(\delta\)) for the selection of projection vectors is a challenging task, it was found that a small variation of the threshold (\(\delta\)) in the second step of SubXPCA could result in better accuracy than SpPCA. In both techniques, partitioning the input patterns into subpattern sets has the advantage of reduced time and space complexities when calculating the covariance matrix. In most of the experimental cases, SpPCA achieved its best classification accuracy with 40–80 features, whereas SubXPCA achieved its best accuracy with 18–40 features. Several methods have been suggested in the literature for epileptic seizure detection in EEG signals. A comparison of the highest classification accuracy of the proposed approaches with the accuracy of different methods from the literature is presented in Table 12.

For A–E, the highest classification accuracy achieved by SpPCA and SubXPCA are both 100%. Srinivasan et al. [33] achieved 100% classification accuracy for this case with the combination of entropy and neural network. Similarly, Iscan et al. [36] achieved the same classification accuracy through different time and frequency domain features. Recently, Kumar et al. [39] achieved 100% classification accuracy with fuzzy entropy and SVM.

For B–E, C–E, and D–E, the best classification accuracies (%) achieved by SpPCA are 99.50, 99.50, and 95.50, respectively, and SubXPCA achieved the same values of 99.50, 99.50, and 95.50. Nicolaou and Georgiou [38] reported classification accuracies of 82.88, 88.00, and 78.98% for these experimental cases with permutation entropy and SVM. Kumar et al. [39] achieved classification accuracies of 100, 99.6, and 95.85%, respectively, for these experimental cases.

For AB–E, CD–E, and ABCD–E, SpPCA achieved the best accuracy (%) of 99.66, 96.66, and 97.40 respectively. Similarly, with SubXPCA the best accuracy (%) is found to be 99.66, 96.66, and 97.60 respectively.

For cases 8–10, SpPCA achieved highest classification accuracies (%) of 96.25, 96.33, and 94.25, respectively. The classification accuracies achieved by SubXPCA for these experimental cases are 97.20, 97.43, and 94.60, respectively. For case 8 (A–D–E) and case 9 (AB–CD–E), Hassan and Subasi [44] reported high classification accuracies of 99.00 and 97.40, respectively, using a linear programming boosting technique. For case 10, Tawfik et al. [41] achieved 93.75% classification accuracy with the combination of weighted permutation entropy and SVM.

Even though the classification accuracy achieved by the proposed approaches is not 100% for all cases, SpPCA and SubXPCA have achieved better accuracy than some of the existing methods proposed in the literature. Furthermore, it can be seen from Table 12 that, although a number of methods have been proposed in the literature, none of them addresses the issue of subpattern correlation between EEG signals. Exploiting subpattern correlation extracts informative features from each subpattern set, and these features can be used to uniquely identify the activity and abnormality recorded in the EEG signals. This paper aims to strengthen research on exploring subpattern correlation in EEG signals and to show its potential for processing other biomedical signals as well.

Conclusions and future work

This study proposed two effective approaches, namely SpPCA and SubXPCA with SVM, for automated seizure detection in EEG signals. In both approaches, EEG signals were divided into subpattern sets. In SpPCA, feature extraction was performed by applying PCA to each subpattern set. SubXPCA includes an additional step of applying PCA to the features extracted in the previous step. Once the feature extraction step was over, the extracted feature vectors were given as input to the SVM for classification. Both approaches achieved 100% accuracy for the classification of normal and epileptic EEG signals. In addition, seven different experimental cases for classification have been conducted. The experimental results indicate that the proposed schemes achieved better classification accuracy than some of the existing techniques proposed in the literature. Hence, both techniques could be considered for epileptic seizure detection in EEG signals.

Notes

EEG time series dataset http://epileptologie-bonn.de/cms/front_content.php?idcat=193&lang=3&changelang=3

References

WHO (2016) Epilepsy. http://www.who.int/mediacentre/factsheets/fs999/en/. Accessed Aug 2016

Berger H (1929) Über das elektrenkephalogramm des menschen. Eur Archiv Psychiatr Clin Neurosci 87(1):527–570

Ray GC (1994) An algorithm to separate nonstationary part of a signal using mid-prediction filter. IEEE Trans Signal Process 42(9):2276–2279

Iasemidis LD, Shiau DS, Chaovalitwongse W, Sackellares JC, Pardalos PM, Principe JC, Carney PR, Prasad A, Veeramani B, Tsakalis K (2003) Adaptive epileptic seizure prediction system. IEEE Trans Biomed Eng 50(5):616–627

Srinivasan V, Eswaran C, Sriraam N (2005) Artificial neural network based epileptic detection using time-domain and frequency-domain features. J Med Syst 29(6):647–660

Ghosh-Dastidar S, Adeli H, Dadmehr N (2007) Mixed-band wavelet-chaos-neural network methodology for epilepsy and epileptic seizure detection. IEEE Trans Biomed Eng 54(9):1545

Polat K, Güneş S (2007) Classification of epileptiform EEG using a hybrid system based on decision tree classifier and fast fourier transform. Appl Math Comput 187(2):1017–1026

Subasi A (2007) EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst Appl 32(4):1084–1093

Ocak H (2009) Automatic detection of epileptic seizures in EEG using discrete wavelet transform and approximate entropy. Expert Syst Appl 36(2):2027–2036

Li D, Xie Q, Jin Q, Hirasawa K (2016) A sequential method using multiplicative extreme learning machine for epileptic seizure detection. Neurocomputing 214:692–707

Satapathy SK, Dehuri S, Jagadev AK (2017) ABC optimized RBF network for classification of EEG signal for epileptic seizure identification. Egypt Inform J 18:55–66

Guo L, Rivero D, Pazos A (2010) Epileptic seizure detection using multiwavelet transform based approximate entropy and artificial neural networks. J Neurosci Methods 193(1):156–163

Chen L-L, Zhang J, Zou J-Z, Zhao C-J, Wang G-S (2014) A framework on wavelet-based nonlinear features and extreme learning machine for epileptic seizure detection. Biomed Signal Process Control 10:1–10

Swami P, Gandhi TK, Panigrahi BK, Bhatia M, Santhosh J, Anand S (2016) A comparative account of modelling seizure detection system using wavelet techniques. Int J Syst Sci Oper Logist 4:1–12

Tzallas AT, Tsipouras MG, Fotiadis DI (2009) Epileptic seizure detection in EEGs using time–frequency analysis. IEEE Trans Inform Technol Biomed 13(5):703–710

Altunay S, Telatar Z, Erogul O (2010) Epileptic EEG detection using the linear prediction error energy. Expert Syst Appl 37(8):5661–5665

Ghosh-Dastidar S, Adeli H, Dadmehr N (2008) Principal component analysis-enhanced cosine radial basis function neural network for robust epilepsy and seizure detection. IEEE Trans Biomed Eng 55(2):512–518

Subasi A, Gursoy MI (2010) EEG signal classification using PCA, ICA, LDA and support vector machines. Expert Syst Appl 37(12):8659–8666

Kaya Y, Uyar M, Tekin R, Yıldırım S (2014) 1D-local binary pattern based feature extraction for classification of epileptic EEG signals. Appl Math Comput 243:209–219

Joshi V, Pachori RB, Vijesh A (2014) Classification of ictal and seizure-free EEG signals using fractional linear prediction. Biomed Signal Process Control 9:1–5

Chen S, Zhu Y (2004) Subpattern-based principle component analysis. Pattern Recognit 37(5):1081–1083

Kadappagari VK, Atul N (2008) SubXPCA and a generalized feature partitioning approach to principal component analysis. Pattern Recognit 41(4):1398–1409

Kai F, Jianfeng Q, Chai Y, Dong Y (2014) Classification of seizure based on the time–frequency image of EEG signals using HHT and SVM. Biomed Signal Process Control 13:15–22

Kadappa VK, Negi A (2013) Computational and space complexity analysis of subXPCA. Pattern Recognit 46(8):2169–2174

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov 2(2):121–167

Cheng J, Dejie Y, Yang Y (2008) A fault diagnosis approach for gears based on IMF AR model and SVM. EURASIP J Adv Signal Process 2008(1):1–7

Andrzejak RG, Lehnertz K, Mormann F, Rieke C, David P, Elger CE (2001) Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: dependence on recording region and brain state. Phys Rev E 64(6):061907

Kohavi R et al (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. Ijcai 14:1137–1145

Xindong W, Vipin Kumar J, Quinlan R, Ghosh J, Yang Q, Motoda H, McLachlan GJ, Ng A, Liu B, Philip SY (2008) Top 10 algorithms in data mining. Knowl Inform Syst 14(1):1–37

Nigam VP, Graupe D (2004) A neural-network-based detection of epilepsy. Neurol Res 26(1):55–60

Kannathal N, Choo Min Lim, Acharya U Rajendra, Sadasivan PK (2005) Entropies for detection of epilepsy in EEG. Comput Methods Progr Biomed 80(3):187–194

Tzallas AT, Tsipouras MG, Fotiadis DI (2007) Automatic seizure detection based on time–frequency analysis and artificial neural networks. Comput Intell Neurosci. http://doi.org/10.1155/2007/80510

Srinivasan V, Eswaran C, Sriraam N (2007) Approximate entropy-based epileptic EEG detection using artificial neural networks. IEEE Trans Inform Technol Biomed 11(3):288–295

Guo L, Rivero D, Seoane JA, Pazos A (2009) Classification of EEG signals using relative wavelet energy and artificial neural networks. In: Proceedings of the first ACM/SIGEVO summit on genetic and evolutionary computation, pp 177–184

Chandaka S, Chatterjee A, Munshi S (2009) Cross-correlation aided support vector machine classifier for classification of EEG signals. Expert Syst Appl 36(2):1329–1336

Iscan Z, Dokur Z, Demiralp T (2011) Classification of electroencephalogram signals with combined time and frequency features. Expert Syst Appl 38(8):10499–10505

Wang D, Miao D, Xie C (2011) Best basis-based wavelet packet entropy feature extraction and hierarchical EEG classification for epileptic detection. Expert Syst Appl 38(11):14314–14320

Nicolaou N, Georgiou J (2012) Detection of epileptic electroencephalogram based on permutation entropy and support vector machines. Expert Syst Appl 39(1):202–209

Kumar Y, Dewal ML, Anand RS (2014) Epileptic seizure detection using DWT based fuzzy approximate entropy and support vector machine. Neurocomputing 133:271–279

Lee S-H, Lim JS, Kim J-K, Yang J, Lee Y (2014) Classification of normal and epileptic seizure eeg signals using wavelet transform, phase-space reconstruction, and euclidean distance. Comput Methods Progr Biomed 116(1):10–25

Tawfik NS, Youssef SM, Kholief M (2016) A hybrid automated detection of epileptic seizures in EEG records. Comput Electr Eng 53:177–190

Sharma R, Pachori RB (2015) Classification of epileptic seizures in EEG signals based on phase space representation of intrinsic mode functions. Expert Syst Appl 42(3):1106–1117

Tiwari A et al (2016) Automated diagnosis of epilepsy using key-point based local binary pattern of EEG signals. IEEE J Biomed Health Inform 21(4):888–896

Hassan AR, Subasi A (2016) Automatic identification of epileptic seizures from EEG signals using linear programming boosting. Comput Methods Progr Biomed 136:65–77

Acknowledgements

The authors would like to thank Dr. R.G. Andrzejak of the University of Bonn for providing the EEG time series dataset.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

The used dataset is publicly available.

Cite this article

Jaiswal, A.K., Banka, H. Epileptic seizure detection in EEG signal using machine learning techniques. Australas Phys Eng Sci Med 41, 81–94 (2018). https://doi.org/10.1007/s13246-017-0610-y

DOI: https://doi.org/10.1007/s13246-017-0610-y