Abstract

Epilepsy is a well known neurological disorder characterized by the presence of recurrent seizures. Electroencephalograms (EEGs) record electrical activity in the brain and are used to detect epilepsy. Traditional EEG analysis methods for epileptic seizure detection are time-consuming, which has led to the recent proposal of several automated seizure detection frameworks. Feature extraction and classification are two important steps in this procedure. Feature extraction focuses on finding the informative features that could be used in the classification step for correct decision making; therefore, proposing some effective feature extraction techniques for seizure detection is of great significance. This paper introduces two novel feature extraction techniques: local centroid pattern (LCP) and one-dimensional local ternary pattern (1D-LTP) for seizure detection in EEG signal. Both the techniques are computationally simple and easy to implement. In both the techniques, the histograms are formed in the first step using the transformation code and then these histogram-based feature vectors are fed into a classifier in the second step. The performance of the proposed techniques was evaluated through 10-fold cross-validation tested on the benchmark dataset. Different machine learning classifiers were used for the classification. The experimental results show that LCP and 1D-LTP achieved the highest accuracy of 100% for the classification between normal and seizure EEG signals with the artificial neural network classifier. Nine different experimental cases have been tested. The results achieved for different experimental cases were higher than the results of some existing techniques in the literature. The experimental results indicate that LCP and 1D-LTP could be effective feature extraction techniques for seizure detection.

1 Introduction

Epilepsy is a central nervous system disorder caused by abnormal changes in the neural activity inside the brain. According to the World Health Organization (WHO), epilepsy affects 45–55 million people worldwide [1]. Electroencephalography (EEG) captures the brain's electrical activity and is used as a clinical tool for epileptic seizure detection [2, 3]. Traditional methods for seizure detection usually require a long EEG recording of several hours. Seizure detection in EEG signals by visual examination not only requires high expertise, but is also time-consuming and prone to error. With the development of technology, building an automated seizure detection framework is now of great interest. Epileptic seizure detection in EEG signals can be treated as a classification problem in which the task is to classify an EEG signal as either a seizure or a non-seizure signal. Feature extraction and classification are the two important steps in this process, and their combination enables an automated system to run faster. A number of methods have been proposed in the literature for epileptic seizure detection in EEG signals.

In recent years, feature extraction techniques based on time series signal analysis with linear prediction error energy [4], correlation [5], fractional linear prediction [6], frequency domain signal analysis with fast Fourier transform [7], time–frequency domain signal analysis techniques based on short-time Fourier transform [8], and wavelets [9,10,11,12,13,14,15,16,17] have been proposed for seizure detection.

Feature extraction based on different entropy schemes has shown effectiveness for seizure detection in EEG signals [18,19,20]. Dimensionality reduction techniques like PCA, ICA, and LDA have been successfully applied for epileptic EEG signal classification with a high accuracy [21]. The combination PCA and wavelet has been reported for automated classification of epileptic activity with a higher accuracy [22]. Techniques like recurrence quantification analysis [23, 24], higher order spectra features [25], Continuous wavelet transform [26], high order cumulants features [27], empirical mode decomposition [28,29,30,31], and Hilbert–Huang transformation [32, 33] are well known in the field of epileptic seizure detection.

The efficiency of a feature extraction technique depends not only on how informative the extracted features are, but also on the computational complexity of the extraction process. The feature extraction technique should therefore be computationally efficient. Local binary pattern (LBP) has gained popularity in the field of face recognition [34]. LBP focuses on preserving the structural property of the pattern. Because of the effectiveness of the LBP technique in different pattern recognition applications, a one-dimensional LBP (1D-LBP) scheme was proposed for signal processing [35]. Recently, the 1D-LBP technique has been used for epileptic EEG signal classification [36, 37]. 1D-LBP focuses on the local pattern of a signal to extract quantitative features for classification. However, 1D-LBP is sensitive to local variation, where local variation refers to any structural change in the local pattern of a signal.

In this study, we propose two effective feature extraction techniques, namely local centroid pattern (LCP) and one-dimensional local ternary pattern (1D-LTP), for classification of epileptic EEG signals. Both techniques are computationally simple, easy to implement, and insensitive to local and global variations. This insensitivity overcomes the limitation of 1D-LBP. Both techniques work in two phases. In the first phase, the local patterns are transformed to form the histogram. The histogram contains the structural description of the signal and represents the feature vector of the corresponding EEG signal. In the second phase, the histograms are classified. The classification has been carried out with four different machine learning classifiers. The classification performance is evaluated with 10-fold cross validation in terms of sensitivity, specificity, and accuracy.

The remaining content of this paper is organized as follows: Methodology and materials used are discussed in Sect. 2. Experimental results are shown in Sect. 3 and discussed in Sect. 4. Finally, Sect. 5 concludes the article with future direction.

2 Methodology and Materials

This section briefly discusses the LBP, 1D-LBP, LCP, and 1D-LTP feature extraction techniques, the dataset, and the classifiers used.

2.1 Local Binary Pattern (LBP)

LBP is a well-known technique used for face recognition and two-dimensional (2D) image processing [35]. For each pixel in an image, it produces a binary code by comparing the pixel with its surrounding (neighbor) pixels in a 3 × 3 neighborhood. Each binary code captures the structural distribution of a small section of the image. This binary code is converted into its decimal equivalent (the LBP code) to uniquely represent the pattern structure. Once all the LBP codes have been computed, a histogram is formed from these codes. The histogram summarizes the structural distribution of patterns across the image: the horizontal axis contains the LBP codes in increasing order, and the vertical axis represents the frequency (number of occurrences) of each LBP code. Usually, in images, the 8 surrounding pixels of each pixel are considered while computing the LBP code. The LBP code of a pixel \( S_{c} \) from its \( m \) surrounding pixels \( P_{i} \) (\( i = 0 \ldots m - 1 \)) is computed as given below:

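$$ S_{c}^{LBP} = \sum\limits_{i = 0}^{m - 1} s(P_{i} - S_{c} )2^{i} $$ (1)

where

$$ s(x) = \left\{ {\begin{array}{*{20}l} {1,} & {{\text{if }}x \ge 0} \\ {0,} & {\text{otherwise}} \\ \end{array} } \right. $$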

One example of the LBP code is shown in Fig. 1.
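The LBP computation described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `lbp_code_3x3`, the clockwise neighbor ordering starting at the top-left pixel, and the tie rule (a neighbor equal to the center yields 1, as in the step function used for LCP in Eq. 3) are assumptions made for illustration.

```python
def lbp_code_3x3(patch):
    """Return the LBP code of the center pixel of a 3x3 patch (list of 3 rows)."""
    center = patch[1][1]
    # Assumed neighbor order: clockwise, starting at the top-left pixel.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for i, (row, col) in enumerate(offsets):
        # Binary comparison of neighbor and center, weighted by 2**i.
        bit = 1 if patch[row][col] - center >= 0 else 0
        code += bit * (2 ** i)
    return code


# Hypothetical gray levels, chosen only to exercise the function.
patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code_3x3(patch))
```

With eight neighbors the code always falls between 0 and 255, so the histogram of LBP codes over an image has 256 bins.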

2.2 One-Dimensional Local Binary Pattern (1D-LBP)

1D-LBP is a variant of LBP that was introduced for signal processing [35]. The working principle of 1D-LBP is similar to that of LBP, but it is applied to one-dimensional signals. In 1D-LBP, the transformation code for the signal point \( S_{c} \), considering \( m \) surrounding points \( P_{i} \) (\( i = 0 \ldots m - 1 \)), is computed as:

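$$ S_{c}^{1D\text{-}LBP} = \sum\limits_{i = 0}^{m - 1} s(P_{i} - S_{c} )2^{i} $$ (2)

where

$$ s(x) = \left\{ {\begin{array}{*{20}l} {1,} & {{\text{if }}x \ge 0} \\ {0,} & {\text{otherwise}} \\ \end{array} } \right. $$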

In the above technique, different weights (\( 2^{i} \), \( i = 0 \ldots m - 1 \)) are assigned to different points in order to convert the binary code into a unique 1D-LBP code [35]. The 1D-LBP code of a signal point \( S_{c} \) is shown in Fig. 2.
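The 1D-LBP transformation can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the neighbors are taken as m/2 points before and m/2 points after the center, ordered left to right; points without a full neighborhood at the signal boundaries are skipped; and the name `one_d_lbp_codes` is hypothetical.

```python
def one_d_lbp_codes(signal, m=8):
    """Return the 1D-LBP code of every point that has m/2 neighbors on each side."""
    half = m // 2
    codes = []
    for c in range(half, len(signal) - half):
        # Neighbors: m/2 points before and m/2 points after the center point.
        neighbors = list(signal[c - half:c]) + list(signal[c + 1:c + half + 1])
        # Each comparison gives one bit; bit i is weighted by 2**i.
        code = sum((1 if p - signal[c] >= 0 else 0) * 2 ** i
                   for i, p in enumerate(neighbors))
        codes.append(code)
    return codes


# Hypothetical sample values; m = 4 keeps the example easy to check by hand.
signal = [3, 7, 2, 5, 4, 8, 1, 6, 9]
print(one_d_lbp_codes(signal, m=4))
```

Because every neighbor is compared directly with the center value, changing the center or any single neighbor by a small amount can flip a bit and change the code.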

2.3 Proposed Techniques Based on Local Transformed Features

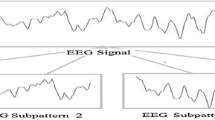

As mentioned before, 1D-LBP compares each point in the raw signal with its neighboring points and extracts features from the signal by focusing on the local pattern. Recently, 1D-LBP has gained popularity in the field of EEG signal classification [36, 37]. Usually, while recording an EEG signal, each action or abnormality possesses some unique local patterns, and 1D-LBP can detect these patterns. However, 1D-LBP is sensitive to noise, and hence its capability of detecting the hidden patterns is limited. In order to overcome this limitation, the LCP and 1D-LTP techniques are proposed. The LCP technique computes the centroid (mean value) of the neighboring points and compares each neighboring point with this centroid. The binary code obtained from these comparisons is converted into the transformation code. The centroid is less sensitive to noise and also represents the local pattern structure. 1D-LTP is a generalization of 1D-LBP. It operates with a user-defined threshold value and can detect unique patterns. Whereas in 1D-LBP and LCP each comparison results in a binary value (0 or 1), 1D-LTP produces a ternary value (+1, 0, or −1) for each comparison, depending on the threshold. Once the comparisons between the center point and its neighboring points are finished, the transformation code is computed from the ternary code. Compared with 1D-LBP, 1D-LTP is more descriptive. In this section, the LCP and 1D-LTP feature extraction techniques are discussed in detail. Figure 3 depicts the flowchart of the proposed methods.

2.3.1 Local Centroid Pattern (LCP)

LCP is a novel feature extraction technique based on the centroid of the surrounding points. Centroid or mean often captures the structure of a pattern. The various steps of the LCP feature extraction technique are as follows:

1. Set the number of neighboring points \( m \).

2. For each signal point \( S_{c} \), select \( m/2 \) neighbor points in each of the forward and backward directions.

3. Compute the centroid \( c \) of the neighboring points: \( c = \frac{1}{m}\sum\nolimits_{i = 0}^{m - 1} P_{i} \).

4. Compute the difference between each neighboring point and the centroid:

   $$ d_{i} = P_{i} - c,{\text{ for}}\;i = 0 \ldots m - 1. $$

5. Compute the LCP code:

   $$ S_{c}^{LCP} = \sum\limits_{i = 0}^{m - 1} s(d_{i} )2^{i} $$ (3)

   where

   $$ s(x) = \left\{ {\begin{array}{*{20}l} {1,} & {{\text{if }}x \ge 0} \\ {0,} & {\text{otherwise}} \\ \end{array} } \right. $$

The various steps involved in LCP are shown in Fig. 4.
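The LCP steps listed above can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: the function name `lcp_codes`, the left-to-right neighbor ordering, and the choice to skip boundary points that lack m/2 neighbors on either side are assumptions.

```python
def lcp_codes(signal, m=8):
    """Return the LCP code of every point that has m/2 neighbors on each side."""
    half = m // 2
    codes = []
    for c in range(half, len(signal) - half):
        # Step 2: m/2 neighbors on each side of the center (center itself excluded).
        neighbors = list(signal[c - half:c]) + list(signal[c + 1:c + half + 1])
        # Step 3: centroid (mean) of the m neighboring points.
        centroid = sum(neighbors) / m
        # Steps 4 and 5: threshold each difference d_i = P_i - c and weight by 2**i.
        code = sum((1 if p - centroid >= 0 else 0) * 2 ** i
                   for i, p in enumerate(neighbors))
        codes.append(code)
    return codes


# Hypothetical sample values; m = 4 keeps the example easy to check by hand.
signal = [3, 7, 2, 5, 4, 8, 1, 6, 9]
print(lcp_codes(signal, m=4))
```

Note that the center value itself never enters the comparison in this sketch; only the neighbors and their mean do, which is what distinguishes LCP from 1D-LBP.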

2.3.2 One-Dimensional Local Ternary Pattern (1D-LTP)

Like LBP, the LTP feature extraction technique was proposed for face recognition in two-dimensional (2D) face images [34, 38]. Recently, LTP has gained popularity in different pattern recognition applications [39, 40]. Even though the 1D-LBP feature extraction technique has been proposed for signal processing [35] and successfully applied to epileptic EEG signal classification [36, 37], an LTP-based technique is yet to be proposed for the same purpose. In this section, a 1D-LTP based feature extraction technique is introduced for epileptic EEG signal classification. The 1D-LTP technique works in a similar way to 1D-LBP, but it produces a ternary code. As in 1D-LBP, the difference is computed between the neighbor points and the center point. However, a user-defined threshold (\( t \)) is set in order to suppress small variations. The steps of the proposed 1D-LTP feature extraction technique are as follows:

1. Set the number of neighboring points \( m \) and a threshold \( t \).

2. For each signal point \( S_{c} \), select \( m/2 \) neighbor points in each of the forward and backward directions.

3. Compute the difference between each neighboring point \( P_{i} \) and the center point \( S_{c} \):

   $$ d_{i} = P_{i} - S_{c} ,{\text{ for}}\;i = 0 \ldots m - 1. $$

4. Compute the LTP code:

   $$ S_{c}^{LTP} = \sum\limits_{i = 0}^{m - 1} s(d_{i} )3^{i} $$ (4)

   where

   $$ s(x) = \left\{ {\begin{array}{*{20}l} {1,} & {{\text{if }}x \ge t} \\ {0,} & {{\text{if }} - t < x < t} \\ { - 1,} & {{\text{if }}x \le - t} \\ \end{array} } \right. $$

In order to reduce the LTP code range, the technique suggested in [35] is followed, where the ternary pattern is partitioned into positive (\( LTP_{pos} \)) and negative (\( LTP_{neg} \)) binary patterns and then concatenated as given below.

where,

and

One LTP pattern along with the positive and negative parts is shown in Fig. 5.

Once the transformation codes (1D-LBP, LCP or 1D-LTP) have been computed for all the signal points, the histogram of these codes forms the feature vector of the EEG signal, which is then fed to the classifier to carry out the classification. In all three methods, the transformation code lies between 0 and \( 2^{m} - 1 \) (inclusive).
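The 1D-LTP steps and the histogram-based feature vector can be sketched in Python as follows. This is a hedged illustration, not the authors' implementation. It assumes the common LTP convention in which a ternary value of +1 sets a bit in the positive pattern, a value of −1 sets a bit in the negative pattern, both patterns are weighted by powers of two, and the two histograms are concatenated. The function names, the boundary handling, and the sample values are hypothetical.

```python
def one_d_ltp_codes(signal, m=8, t=10.0):
    """Return (positive_codes, negative_codes) for points with m/2 neighbors per side."""
    half = m // 2
    pos_codes, neg_codes = [], []
    for c in range(half, len(signal) - half):
        neighbors = list(signal[c - half:c]) + list(signal[c + 1:c + half + 1])
        pos = neg = 0
        for i, p in enumerate(neighbors):
            d = p - signal[c]
            if d >= t:          # ternary value +1: bit i of the positive pattern
                pos += 2 ** i
            elif d <= -t:       # ternary value -1: bit i of the negative pattern
                neg += 2 ** i
            # otherwise the ternary value is 0 and neither pattern gets a bit
        pos_codes.append(pos)
        neg_codes.append(neg)
    return pos_codes, neg_codes


def histogram(codes, m):
    """Count occurrences of each code in 0 .. 2**m - 1."""
    bins = [0] * (2 ** m)
    for code in codes:
        bins[code] += 1
    return bins


def ltp_feature_vector(signal, m=8, t=10.0):
    """Concatenate the histograms of the positive and negative patterns (assumed)."""
    pos_codes, neg_codes = one_d_ltp_codes(signal, m, t)
    return histogram(pos_codes, m) + histogram(neg_codes, m)


# Hypothetical amplitudes; m = 4 and t = 25 keep the example easy to check by hand.
signal = [30, 70, 20, 50, 40, 80, 10, 60, 90]
print(one_d_ltp_codes(signal, m=4, t=25))
print(len(ltp_feature_vector(signal, m=4, t=25)))
```

Splitting the ternary pattern this way keeps each code in the range 0 to \( 2^{m} - 1 \) instead of the much larger range that powers of three would produce.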

2.4 Time Complexity of LCP and 1D-LTP

Let \( X_{N \times d} \) represent a set of \( N \) signals, where \( d \) is the number of points in each signal. If \( m \) (\( m < d \)) neighboring points are considered when computing the transformation codes, then the time complexity of both techniques (LCP and 1D-LTP) for processing one signal is \( O(md) \). Since the set \( X \) contains \( N \) signals, the time complexity of both techniques for processing \( X \) is \( O(Nmd) \).
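As a rough worked instance of these bounds, take the figures of the dataset used in this paper: \( d = 4097 \) points per segment, \( N = 500 \) segments (five subsets of 100 segments each), and \( m = 8 \) neighbors. Counting one comparison per neighbor per signal point, which is an assumption that ignores constant factors and boundary points, gives:

$$ m \cdot d = 8 \times 4097 = 32{,}776, \qquad N \cdot m \cdot d = 500 \times 32{,}776 = 16{,}388{,}000. $$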

For the same set \( X_{N \times d} \), the time complexities of some well-known techniques, namely PCA, LDA, the discrete Fourier transform (DFT), the fast Fourier transform (FFT), and the wavelet transform (WT), are as follows.

where t = min(N, d)

It can be observed that the time complexity of the proposed techniques is lower than that of some of the existing techniques (PCA, LDA, DFT).

2.5 1D-LBP, LCP, and 1D-LTP in Case of Noise

Tolerance to noise is one of the important properties of a feature extraction technique. Noise may cause a local variation or a global variation, and these variations can affect the pattern structure of a signal. Since the original pattern and its noisy versions all belong to the same underlying pattern, the transformation technique should produce the same transformation code for all of them. 1D-LBP generates the transformation code by comparing the center point with its surrounding points directly, so a small variation in the pattern structure can affect the transformation code. As a result, the transformation codes differ for the original pattern and the noisy pattern. Hence, 1D-LBP is sensitive to noise. In the LCP technique, on the other hand, the transformation code is generated by computing the mean of the surrounding points and then comparing the mean with each of the surrounding points. If a subpart of a pattern sequence is affected by noise, the mean changes only slightly relative to the surrounding points, and this property makes LCP insensitive to the noise: it produces the same transformation code for both the original and noisy patterns. Similarly, the code computation in 1D-LTP depends not only on the center point and surrounding points, but also on the user-defined threshold. This threshold is set in order to absorb the variation caused by noise, so that the same transformation code is produced. The behavior of the 1D-LBP, LCP, and 1D-LTP (with threshold t = 10 μV) techniques under local and global variations for different patterns is shown in Fig. 6. It should be noted that Fig. 6 is only an example.

In the case of noise-free signals, patterns with similar structures should be represented by the same transformation code. It can be seen in Fig. 6 that LCP and 1D-LTP assign the same transformation code under local and global variations, whereas 1D-LBP is sensitive to local variation. This insensitivity of LCP and 1D-LTP overcomes the limitation of 1D-LBP.
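The following Python sketch gives one hand-picked illustration of this behavior for a single neighborhood. It is only an example, in the same spirit as Fig. 6, and does not show that LCP or 1D-LTP are invariant to every perturbation. The amplitudes, the helper names, and the representation of the 1D-LTP code as a (positive, negative) pair of binary codes are assumptions.

```python
def lbp_code(neighbors, center):
    """1D-LBP: compare each neighbor with the center point."""
    return sum((1 if p - center >= 0 else 0) * 2 ** i
               for i, p in enumerate(neighbors))


def lcp_code(neighbors):
    """LCP: compare each neighbor with the centroid of the neighbors."""
    centroid = sum(neighbors) / len(neighbors)
    return sum((1 if p - centroid >= 0 else 0) * 2 ** i
               for i, p in enumerate(neighbors))


def ltp_code(neighbors, center, t):
    """1D-LTP as a (positive, negative) pair of binary codes (assumed convention)."""
    pos = sum(2 ** i for i, p in enumerate(neighbors) if p - center >= t)
    neg = sum(2 ** i for i, p in enumerate(neighbors) if p - center <= -t)
    return pos, neg


# Hypothetical amplitudes in microvolts. The noisy pattern differs from the
# clean one only in the second neighbor, which is shifted by -4.
center = 50
clean = [38, 52, 90, 70]
noisy = [38, 48, 90, 70]

print(lbp_code(clean, center), lbp_code(noisy, center))              # codes differ
print(lcp_code(clean), lcp_code(noisy))                              # codes agree
print(ltp_code(clean, center, t=10), ltp_code(noisy, center, t=10))  # codes agree
```

In this example the perturbed neighbor crosses the center value, which flips one 1D-LBP bit, but it stays on the same side of the centroid and inside the ±10 μV band around the center, so the LCP and 1D-LTP codes are unchanged.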

2.6 Classification

Nearest neighbor (NN), decision tree (DT), support vector machine (SVM) and artificial neural network (ANN) are some of the well known classifiers of machine learning and data mining [41]. In this study, all the above four classifiers have been used and the classification results are shown.

2.7 Cross Validation

10-fold cross validation has been used to evaluate the performance of the proposed techniques. In 10-fold cross validation the data set is divided into ten parts. Out of these ten parts, nine parts are used as training sets and the remaining part is used as a testing set. This process is repeated ten times with different training and testing sets. Usually, the mean accuracy of all the iterations represents the final accuracy [42].
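The fold construction described above can be sketched in Python as follows. The study itself used MATLAB's cvpartition() function; this standard-library version is only an illustration, and the function names, the fixed random seed, and the `evaluate` callback (which is expected to train on one index set and return the accuracy on the other) are assumptions.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Split range(n_samples) into k shuffled, nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(n_samples, evaluate, k=10, seed=0):
    """Average evaluate(train_idx, test_idx) over the k folds."""
    folds = k_fold_indices(n_samples, k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        # All folds except the i-th form the training set.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        scores.append(evaluate(train_idx, test_idx))
    return sum(scores) / k


# Example: 200 feature vectors (e.g. two subsets of 100 segments each).
folds = k_fold_indices(200)
print([len(fold) for fold in folds])
```

With 200 signals, each iteration trains on 180 feature vectors and tests on the remaining 20, and every signal is used for testing exactly once.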

2.8 Statistical Parameters

The statistical parameters used for evaluating the performance of the proposed method are sensitivity (Sen), specificity (Spe), and accuracy (Acc). These are calculated as follows:

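$$ Sen = \frac{Tp}{Tp + Fn} \times 100 $$

$$ Spe = \frac{Tn}{Tn + Fp} \times 100 $$

$$ Acc = \frac{Tp + Tn}{Tp + Tn + Fp + Fn} \times 100 $$

where true positive (Tp) is the number of correctly identified seizure signals, true negative (Tn) is the number of correctly identified non-seizure signals, false positive (Fp) is the number of signals incorrectly marked as seizure signals, and false negative (Fn) is the number of signals incorrectly marked as non-seizure signals.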
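These three measures can be computed from predicted and true labels with a short Python sketch. The function name `seizure_metrics`, the label encoding (1 for seizure, 0 for non-seizure), and the example labels are assumptions. The sketch also assumes that both classes occur in `y_true`; otherwise a division by zero would arise.

```python
def seizure_metrics(y_true, y_pred, positive=1):
    """Return (sensitivity, specificity, accuracy) in percent."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    accuracy = 100.0 * (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, specificity, accuracy


# Hypothetical labels: 4 seizure and 6 non-seizure signals.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(seizure_metrics(y_true, y_pred))
```

Here one seizure signal is missed and one non-seizure signal is flagged, giving Tp = 3, Fn = 1, Tn = 5, and Fp = 1.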

2.9 Dataset

In this research, the publicly available benchmark epilepsy EEG dataset provided by the University of Bonn, Germany (see Notes), is used [43]. The dataset consists of five subsets (A, B, C, D, E). The standard 10–20 electrode placement system was followed for signal capture. Each subset contains 100 single-channel EEG segments of 23.6 s duration with 4097 data points. A 128-channel amplifier system was used to record these EEG signals using a common average reference, and the sampling rate was 173.61 Hz. Subsets A and B contain the EEG recordings of five healthy volunteers with their eyes open and closed, respectively. The signals in subsets C and D were recorded from patients before an epileptic attack, from the hippocampal formation of the opposite hemisphere and from the epileptogenic zone, respectively. The EEG signals in subset E were recorded from patients during seizure activity. The description of the dataset is provided in Table 1.

In this study, in order to verify the effectiveness of the proposed approaches all the five subsets have been used and different experimental cases have been tested. The EEG signal of each subset is shown in Fig. 7.

3 Results

All the five subsets (A, B, C, D, E) have been used in this study. In both the techniques, the first step is the computation of the transformation code for each signal point. Once the code computation for all the signal points is over, these codes are arranged in the form of a histogram. The histogram represents the feature vector of the corresponding EEG signal and is subsequently used for the classification using different machine learning classifiers.

The length of the feature vector (l) depends on the number of neighboring points (m) considered in evaluating the transformation code.

For the LCP feature extraction technique, the length of the feature vector is computed as follows:

$$ l = 2^{m} $$

For the 1D-LTP feature extraction technique, the length of the feature vector is computed as follows:

$$ l = 2 \times 2^{m} $$

The small segments of histogram based feature vector (with m = 8) obtained with LCP and 1D-LTP techniques for different subsets are shown in Fig. 8.
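As a worked instance for \( m = 8 \), assuming one histogram bin per possible code value (0 to \( 2^{m} - 1 \)) and, for 1D-LTP, one such histogram for each of the positive and negative patterns, the feature vector lengths would be:

$$ l_{LCP} = 2^{8} = 256, \qquad l_{1D\text{-}LTP} = 2 \times 2^{8} = 512. $$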

The four machine learning classifiers used in this study are NN, SVM, ANN, and DT. For the NN classifier, the built-in MATLAB functions ClassificationKNN.fit() and predict() were used for training and testing on the feature vectors, respectively. The svmtrain() and svmclassify() functions were used for training and classifying the feature vectors with a linear-kernel SVM classifier. The kernel parameter was set to 1. For the DT and ANN classifiers, the fitctree() and patternnet() functions were used. The ANN is a multilayer perceptron consisting of three layers: an input layer, a hidden layer, and an output layer. The input layer nodes represent the extracted features of a signal. After several experiments, it was found that the highest accuracy was achieved when the number of neurons in the hidden layer was set between 30 and 70. The maximum number of iterations and the minimum gradient were set to 1000 and \( 10^{-6} \), respectively. The scaled conjugate gradient method was used for training, with the hyperbolic tangent sigmoid transfer function. The cvpartition() function was used to randomly partition the input dataset into training and testing sets. The various experimental cases considered in this research are shown in Table 2.

A number of experiments have been carried out with different numbers of neighboring points (m). The best results were obtained when the number of neighboring points was set to 8. With m = 8, the mean classification accuracies (Acc) obtained after 10-fold cross validation for the different experimental cases, by applying the LCP and 1D-LTP feature extraction techniques with different machine learning classifiers, are shown in Tables 3 and 4 respectively. For the 1D-LTP technique, the threshold (t) was set empirically to 10 μV.

Among the four machine learning classifiers used in this research, it is found that ANN achieved the highest classification accuracy. The sensitivity, specificity, and classification accuracy achieved for some experimental cases by both the techniques (LCP and 1D-LTP) with ANN classifier have been shown in Tables 5 and 6 respectively.

The classification accuracy achieved by 1D-LBP, LCP, and 1D-LTP feature extraction techniques with different classifiers is shown in Fig. 9.

4 Discussion

The benchmark dataset has been used to carry out a fair comparison between the proposed techniques and different methods in the literature. After conducting several experiments, it was found that the LCP and 1D-LTP feature extraction techniques achieved a high classification accuracy with the ANN classifier (Tables 5, 6). The experimental results of the proposed techniques and of different methods reported in the literature are presented in Table 7.

For case 1 (A–E), Srinivasan et al. [45] reported a 100% classification accuracy with entropy and neural network. In the same way, Kumar et al. [50] achieved the highest classification accuracy with approximate entropy and SVM. Recently, Lee et al. [52] and Tawfik et al. [55] reported the classification accuracy of 98.17 and 99.5% respectively. In this study, both the proposed techniques (LCP and 1D-LTP) achieved 100% classification accuracy with ANN classifier.

The classification accuracy achieved by LCP with ANN classifier for cases 2–4 is 99.00, 97.50, and 99.00% respectively. Similarly, 1D-LTP with ANN achieved the classification accuracy of 99.50, 99.50, and 98.00% for these cases. Nicolaou and Georgiou [47] reported the classification accuracy of 82.88, 88.00, and 78.98 for these experimental cases with the combination of permutation entropy and SVM. Recently, Kumar et al. [50] conducted a number of experiments and achieved a maximum classification accuracy (%) of 100, 99.60, and 95.85 for these experimental cases respectively.

For cases 5–7, LCP and 1D-LTP achieved the classification accuracy (%) of 99.50, 99.33, 98.67 and 100, 99.00, 99.00 respectively. Recently, Pachori and Patidar [53] reported a classification accuracy of 97.67 for case 7 (CD–E) with the combination of intrinsic mode function and ANN classifier. For the same case, Kumar et al. [37] reported a classification accuracy of 98.33% with the application of Gabor filter, LBP and NN classifier. The classification accuracy achieved by LCP and 1D-LTP for case 8 (ABCD–E) is 98.60 and 98.20 respectively. For case 8 (ABCD–E), Kumar et al. [50] achieved the accuracy of 97.38% with the application of approximate entropy and SVM. Recently, for cases 6–8, Tiwari et al. [56] reported a high classification accuracy of 100, 99.45 and 99.31% respectively with the combination of key point based LBP and SVM. For case 9 (A–D–E), the classification accuracy achieved by LCP and 1D-LTP is 98.00 and 98.33% respectively. For case 9, Acharya et al. [22] reported a classification accuracy of 99.00% with the combination of PCA and GMM model, Kaya et al. [36] reported a classification accuracy of 95.67% with 1D-LBP, and Orhan et al. [46] reported the classification accuracy of 96.67% with k-mean clustering and ANN classifier.

These results show that both LCP and 1D-LTP achieve a better classification accuracy than some of the existing techniques proposed in the literature (Table 7). In addition, the proposed techniques are computationally simple and easy to implement. Like 1D-LBP [35], both techniques can be used for processing other one-dimensional signals. The time complexity of the proposed techniques is lower than that of some well-known techniques such as PCA, LDA, and DFT. Both techniques also extract features directly from the raw EEG signal.

5 Conclusions

A number of feature extraction techniques have been proposed in the past for epileptic EEG signal classification. Recently, 1D-LBP has gained popularity in this field. However, 1D-LBP is sensitive to local variation. In order to overcome this issue, we have proposed two effective feature extraction techniques called LCP and 1D-LTP. Nine different experimental cases have been tested to validate the effectiveness of the proposed approaches. For the experimental cases A–E, B–E, C–E, D–E, A–D, AB–E, CD–E, ABCD–E, and A–D–E, the highest classification accuracies (%) achieved are 100, 99.50, 97.50, 99.00, 99.50, 99.33, 98.67, 98.60, 98.00 with LCP and 100, 99.50, 99.50, 98.00, 100, 99.00, 99.00, 98.20, 98.33 with 1D-LTP, respectively. Given this promising performance on the benchmark dataset, it can be concluded that LCP and 1D-LTP are effective feature extraction techniques for EEG signal processing. The proposed techniques are easy to implement and computationally simple, and their time complexity is lower than that of some well-known techniques. This research strengthens the direction of developing local transformed feature based techniques for epileptic EEG signal classification. In future, the effectiveness of these feature extraction techniques may also be verified with a larger dataset. It is also observed that the histogram-based feature vector is long, so different feature reduction techniques could be applied in future to reduce its length. The future direction of research also includes the processing of other biomedical signals, such as the electrocardiogram (ECG) and electromyogram (EMG), for classification of normal and abnormal states using local transformed features.

Notes

EEG time series dataset: http://epileptologie-bonn.de/cms/front_content.php?idcat=193&lang=3&changelang=3.

References

World Health Organization, Fact Sheet. (2016). Epilepsy. Retrieved June, 2016 from http://www.who.int/mediacentre/factsheets/fs999/en/.

Ray, G. C. (1994). An algorithm to separate nonstationary part of a signal using mid-prediction filter. IEEE Transactions on Signal Processing, 42(9), 2276–2279.

Iasemidis, L. D., Shiau, D. S., Chaovalitwongse, W., Sackellares, J. C., Pardalos, P. M., Principe, J. C., et al. (2003). Adaptive epileptic seizure prediction system. IEEE Transactions on Biomedical Engineering, 50(5), 616–627.

Altunay, S., Telatar, Z., & Erogul, O. (2010). Epileptic EEG detection using the linear prediction error energy. Expert Systems with Applications, 37(8), 5661–5665.

Chandaka, S., Chatterjee, A., & Munshi, S. (2009). Cross-correlation aided support vector machine classifier for classification of EEG signals. Expert Systems with Applications, 36(2), 1329–1336.

Joshi, V., Pachori, R. B., & Vijesh, A. (2014). Classification of ictal and seizure-free EEG signals using fractional linear prediction. Biomedical Signal Processing and Control, 9, 1–5.

Polat, K., & Güneş, S. (2007). Classification of epileptiform EEG using a hybrid system based on decision tree classifier and fast Fourier transform. Applied Mathematics and Computation, 187(2), 1017–1026.

Duque-Muñoz, L., Espinosa-Oviedo, J. J., & Castellanos-Dominguez, C. G. (2014). Identification and monitoring of brain activity based on stochastic relevance analysis of short-time EEG rhythms. Biomedical Engineering Online, 13(1), 1.

Faust, O., Acharya, U. R., Adeli, H., & Adeli, A. (2015). Wavelet-based EEG processing for computer-aided seizure detection and epilepsy diagnosis. Seizure, 26, 56–64.

Acharya, U. R., Sree, S. V., Swapna, G., Martis, R. J., & Suri, J. S. (2013). Automated EEG analysis of epilepsy: A review. Knowledge-Based Systems, 45, 147–165.

Swami, P., Gandhi, T. K., Panigrahi, B. K., Bhatia, M., Santhosh, J., & Anand, S. (2016). A comparative account of modelling seizure detection system using wavelet techniques. International Journal of Systems Science: Operations & Logistics. doi:10.1080/23302674.2015.1116637.

Subasi, A. (2007). EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Systems with Applications, 32(4), 1084–1093.

Chen, L. L., Zhang, J., Zou, J. Z., Zhao, C. J., & Wang, G. S. (2014). A framework on wavelet-based nonlinear features and extreme learning machine for epileptic seizure detection. Biomedical Signal Processing and Control, 10, 1–10.

Ocak, H. (2009). Automatic detection of epileptic seizures in EEG using discrete wavelet transform and approximate entropy. Expert Systems with Applications, 36(2), 2027–2036.

Swami, P., Gandhi, T. K., Panigrahi, B. K., Tripathi, M., & Anand, S. (2016). A novel robust diagnostic model to detect seizures in electroencephalography. Expert Systems with Applications, 56, 116–130.

Li, D., Xie, Q., Jin, Q., & Hirasawa, K. (2016). A sequential method using multiplicative extreme learning machine for epileptic seizure detection. Neurocomputing, 214, 692–707.

Satapathy, S. K., Dehuri, S., & Jagadev, A. K. (2016). ABC optimized RBF network for classification of EEG signal for epileptic seizure identification. Egyptian Informatics Journal. doi:10.1016/j.eij.2016.05.001.

Kannathal, N., Choo, M. L., Acharya, U. R., & Sadasivan, P. K. (2005). Entropies for detection of epilepsy in EEG. Computer Methods and Programs in Biomedicine, 80(3), 187–194.

Guo, L., Rivero, D., & Pazos, A. (2010). Epileptic seizure detection using multiwavelet transform based approximate entropy and artificial neural networks. Journal of Neuroscience Methods, 193(1), 156–163.

Acharya, U. R., Fujita, H., Sudarshan, V. K., Bhat, S., & Koh, J. E. (2015). Application of entropies for automated diagnosis of epilepsy using EEG signals: A review. Knowledge-Based Systems, 88, 85–96.

Subasi, A., & Gursoy, M. I. (2010). EEG signal classification using PCA, ICA, LDA and support vector machines. Expert Systems with Applications, 37(12), 8659–8666.

Acharya, U. R., Sree, S. V., Alvin, A. P. C., & Suri, J. S. (2012). Use of principal component analysis for automatic classification of epileptic EEG activities in wavelet framework. Expert Systems with Applications, 39(10), 9072–9078.

Acharya, U. R., Sree, S. V., Chattopadhyay, S., Yu, W., & Ang, P. C. A. (2011). Application of recurrence quantification analysis for the automated identification of epileptic EEG signals. International Journal of Neural Systems, 21(03), 199–211.

Niknazar, M., Mousavi, S. R., Vahdat, B. V., & Sayyah, M. (2013). A new framework based on recurrence quantification analysis for epileptic seizure detection. IEEE Journal of Biomedical and Health Informatics, 17(3), 572–578.

Du, X., Dua, S., Acharya, R. U., & Chua, C. K. (2012). Classification of epilepsy using high-order spectra features and principle component analysis. Journal of Medical Systems, 36(3), 1731–1743.

Acharya, U. R., Yanti, R., Zheng, J. W., Krishnan, M. M. R., Tan, J. H., Martis, R. J., et al. (2013). Automated diagnosis of epilepsy using CWT, HOS and texture parameters. International Journal of Neural Systems, 23(3), 1350009.

Acharya, U. R., Sree, S. V., & Suri, J. S. (2011). Automatic detection of epileptic EEG signals using higher order cumulant features. International Journal of Neural Systems, 21(5), 403–414.

Pachori, R. B., & Bajaj, V. (2011). Analysis of normal and epileptic seizure EEG signals using empirical mode decomposition. Computer Methods and Programs in Biomedicine, 104(3), 373–381.

Bajaj, V., & Pachori, R. B. (2012). Classification of seizure and nonseizure EEG signals using empirical mode decomposition. IEEE Transactions on Information Technology in Biomedicine, 16(6), 1135–1142.

Martis, R. J., Acharya, U. R., Tan, J. H., Petznick, A., Yanti, R., Chua, C. K., et al. (2012). Application of empirical mode decomposition (EMD) for automated detection of epilepsy using EEG signals. International Journal of Neural Systems, 22(6), 1250027.

Pachori, R. B., Sharma, R., & Patidar, S. (2015). Classification of normal and epileptic seizure EEG signals based on empirical mode decomposition. In Q. Zhu & A. T. Azar (Eds.), Complex system modelling and control through intelligent soft computations (pp. 367–388). Cham: Springer.

Fu, K., Qu, J., Chai, Y., & Dong, Y. (2014). Classification of seizure based on the time-frequency image of EEG signals using HHT and SVM. Biomedical Signal Processing and Control, 13, 15–22.

Bajaj, V., & Pachori, R. B. (2012). Separation of rhythms of EEG signals based on Hilbert-Huang transformation with application to seizure detection. In G. Lee, D. Howard, J. J. Kang, & D. Ślęzak (Eds.), Convergence and hybrid information technology. ICHIT 2012. Lecture Notes in Computer Science (Vol. 7425). Berlin: Springer.

Ahonen, T., Hadid, A., & Pietikainen, M. (2006). Face description with local binary patterns: Application to face recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(12), 2037–2041.

Chatlani, N., & Soraghan, J. J. (2010). Local binary patterns for 1-D signal processing. In 18th European Signal Processing Conference (pp. 95–99). Aalborg.

Kaya, Y., Uyar, M., Tekin, R., & Yıldırım, S. (2014). 1D-local binary pattern based feature extraction for classification of epileptic EEG signals. Applied Mathematics and Computation, 243, 209–219.

Kumar, T. S., Kanhangad, V., & Pachori, R. B. (2015). Classification of seizure and seizure-free EEG signals using local binary patterns. Biomedical Signal Processing and Control, 15, 33–40.

Tan, X., & Triggs, B. (2010). Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Transactions on Image Processing, 19(6), 1635–1650.

Nanni, L., Brahnam, S., & Lumini, A. (2011). Local ternary patterns from three orthogonal planes for human action classification. Expert Systems with Applications, 38(5), 5125–5128.

Altınçay, H., & Erenel, Z. (2014). Ternary encoding based feature extraction for binary text classification. Applied Intelligence, 41(1), 310–326.

Wu, X., Kumar, V., Quinlan, J. R., Ghosh, J., Yang, Q., Motoda, H., et al. (2008). Top 10 algorithms in data mining. Knowledge and Information Systems, 14(1), 1–37.

Kohavi, R. (1995). A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI’95), Volume 2 (pp. 1137–1143). San Francisco, CA: Morgan Kaufmann Publishers Inc.

Andrzejak, R. G., Lehnertz, K., Mormann, F., Rieke, C., David, P., & Elger, C. E. (2001). Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: Dependence on recording region and brain state. Physical Review E, 64(6), 061907.

Nigam, V. P., & Graupe, D. (2013). A neural-network-based detection of epilepsy. Neurological Research, 26(1), 55–60.

Srinivasan, V., Eswaran, C., & Sriraam, N. (2007). Approximate entropy-based epileptic EEG detection using artificial neural networks. IEEE Transactions on Information Technology in Biomedicine, 11(3), 288–295.

Orhan, U., Hekim, M., & Ozer, M. (2011). EEG signals classification using the K-means clustering and a multilayer perceptron neural network model. Expert Systems with Applications, 38(10), 13475–13481.

Nicolaou, N., & Georgiou, J. (2012). Detection of epileptic electroencephalogram based on permutation entropy and support vector machines. Expert Systems with Applications, 39(1), 202–209.

Işik, H., & Sezer, E. (2012). Diagnosis of epilepsy from electroencephalography signals using multilayer perceptron and Elman artificial neural networks and wavelet transform. Journal of Medical Systems, 36(1), 1–13.

Zhu, G., Li, Y., & Wen, P. P. (2014). Epileptic seizure detection in EEGs signals using a fast weighted horizontal visibility algorithm. Computer Methods and Programs in Biomedicine, 115(2), 64–75.

Kumar, Y., Dewal, M. L., & Anand, R. S. (2014). Epileptic seizure detection using DWT based fuzzy approximate entropy and support vector machine. Neurocomputing, 133, 271–279.

Joshi, V., Pachori, R. B., & Vijesh, A. (2014). Classification of ictal and seizure-free EEG signals using fractional linear prediction. Biomedical Signal Processing and Control, 9, 1–5.

Lee, S. H., Lim, J. S., Kim, J. K., Yang, J., & Lee, Y. (2014). Classification of normal and epileptic seizure EEG signals using wavelet transform, phase-space reconstruction, and Euclidean distance. Computer Methods and Programs in Biomedicine, 116(1), 10–25.

Pachori, R. B., & Patidar, S. (2014). Epileptic seizure classification in EEG signals using second-order difference plot of intrinsic mode functions. Computer Methods and Programs in Biomedicine, 113(2), 494–502.

Sharma, R., & Pachori, R. B. (2015). Classification of epileptic seizures in EEG signals based on phase space representation of intrinsic mode functions. Expert Systems with Applications, 42(3), 1106–1117.

Tawfik, N. S., Youssef, S. M., & Kholief, M. (2015). A hybrid automated detection of epileptic seizures in EEG records. Computers & Electrical Engineering, 53, 177–193.

Tiwari, A., Pachori, R. B., Kanhangad, V., & Panigrahi, B. (2016). Automated diagnosis of epilepsy using key-point based local binary pattern of EEG signals. IEEE Journal of Biomedical and Health Informatics. doi:10.1109/JBHI.2016.2589971.

Acknowledgements

The authors would like to thank Dr. R.G. Andrzejak of the University of Bonn, Germany, for providing permission to use the EEG dataset available online.

Ethics declarations

Conflict of interest

All authors declare that they do not have any real or perceived conflicts of interest pertaining to the present study.

About this article

Cite this article

Jaiswal, A.K., Banka, H. Local Transformed Features for Epileptic Seizure Detection in EEG Signal. J. Med. Biol. Eng. 38, 222–235 (2018). https://doi.org/10.1007/s40846-017-0286-5

DOI: https://doi.org/10.1007/s40846-017-0286-5