Abstract

In recent years, algorithms to recovery low-rank matrix have become one of the research hotspots, and more corresponding optimization models with nuclear norm have also been proposed. However, nuclear norm is not a good approximation to the rank function. This paper proposes a matrix completion model and a low-rank sparse decomposition model based on truncated Schatten p-norm, respectively, which combine Schatten p-norm with truncated nuclear norm, so that the models are more flexible. To solve these models, the function expansion method is first used to transform the non-convex optimization models into the convex optimization ones. Then, the two-step iterative algorithm based on alternating direction multiplier method (ADMM) is employed to solve the models. Further, the convergence of the proposed algorithm is proved mathematically. The superiority of the proposed method is further verified by comparing the existing methods in synthetic data and actual images.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

As a special case of matrix recovery, matrix completion addresses to complete the incomplete matrix according to the existing information, which has been widely applied in image restoration [1,2,3,4], video denoising [5], and recommendation system [6, 7]. A typical example is Netflix prize problem [8], which can infer the user’s favorite degree for other films according to the user’s evaluation of some films, and then the movie recommendation is made for other users.

Assuming that \(M\in {\mathbf {R}}^{m \times n}\) is a given low rank or approximate low rank matrix and \(X\in {\mathbf {R}}^{m \times n}\) is a low rank matrix to be recovered, then the completion problem of X can be represented as the following optimization problem:

where \(\text {rank}{(\cdot )}\) denotes the rank of matrix, \(\Omega \subset \{1,\ldots ,m\}\times \{1,\ldots ,n\}\) is a set of location coordinates for known data. If the sampling operator, \(P_{\Omega }(X)\),

is introduced, then the problem (1.1) can be denoted as

Another important problem of matrix recovery is low rank sparse decomposition, also known as robust principal component analysis (RPCA), which has been widely applied in medical image processing [9, 10], video surveillance [11, 12], pattern recognition [13,14,15], and so on. The main purpose of RPCA is to decompose M into the sum of a low rank matrix X and a sparse matrix E, that is, \(M=X+E\), where X and E are unknown matrices. In other words, the optimization problem

needs to be solved, where \(\Vert \cdot \Vert _{0}\) denotes the \(\ell _{0}\) norm of matrix, and \(\lambda \) (\(>0\)) is regularization parameter.

Since the rank function is non-convex and discontinuous, the optimization problems (1.3) and (1.4) are both NP hard problems, which cannot be solved directly by the commonly used optimization algorithms. Fazel [16] proposed a method to replace the rank function in the optimization problems (1.3) and (1.4) by using the nuclear norm (NN) \(\Vert \cdot \Vert _{ *}\), and the \(\ell _{1}\) norm instead of the \(\ell _{0}\) norm. Therefore, Eqs. (1.3) and (1.4) are converted to

and

respectively. Here \(\Vert X\Vert _{*}=\sum _{i=1}^{\min {(m,n)}}\sigma _{i}(X)\) (\(\sigma _1(X)\ge \sigma _2(X)\ge \cdots \ge \sigma _{\min {(m,n)}}(X)\)) is NN of matrix X, that is, the sum of all singular values of matrix X, and \(\Vert E\Vert _{1}=\sum _{i=1}^{m}\sum _{j=1}^{n}|e_{ij}|\) is the \(\ell _1\) norm of matrix E, that is, the sum of all the absolute values of elements of matrix E.

Up to now, a series of studies [16,17,18,19] have shown that NN can be used as an approximate substitute for rank function. At the same time, some algorithms for solving convex optimization with NN [20,21,22,23] have been proposed one after another. This is because in the rank function, all the non-zero singular values have the same effect, and NN adds all the non-zero singular values and minimizes them at the same time, so that the different singular values have different contributions as much as possible. However, NN is not the best approximate substitute for rank function. Although the algorithms based on NN have strong theoretical guarantee, these algorithms can only get the suboptimal solution in practical application.

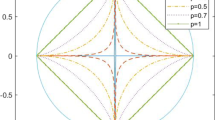

In 2012, a non-convex minimum optimal substitution for NN is proposed, which is named as Schatten p-norm [6], and defined by \(\Vert X\Vert _{p}= \left( \sum _{i=1}^{\min {(m,n)}}\sigma _{i}^{p}(X) \right) ^{1/p}\) (\(0<p\le 1\)). Obviously, when \(p=1\), it is equivalent to the NN. And when p approaches 0, the Schatten p-norm approximates the rank function. In 2013, Hu et al. [1] proposed a new norm called as truncated nuclear norm (TNN): \(\Vert X\Vert _{r}=\sum _{i=r+1}^{\min {(m,n)}}\sigma _{i}(X)\). Its main idea is to remove the larger singular values of r and add the remaining singular values of \((\min (m, n)-r)\) to reduce the influence of large singular values on low rank. Recently, Gu et al. [24] proposed to replace NN with the weighted nuclear norm (WNN) defined by \(\Vert X\Vert _{\omega ,*}=\sum _{i=1}^{\min {(m,n)}}\omega _{i}\sigma _{i}(X)\) in order to change the influence of singular value on rank function with different weights, and showed that the proposed WNN has a good approximate effect. In [25] and [26], a truncated Schatten p-norm (TSPN) is proposed, which sums only the p power of singular values of \((\min (m,n)-r)\), that is, \(\Vert X\Vert _{r}^{p}=\sum _{i=r+1}^{\min {(m,n)}}\sigma _{i}^{p}(X)\). In [25], a compression sensing model based on truncated Schatten p-norm is proposed, and the alternating direction multiple method (ADMM) was used to solve the model. The proposed method in [25] has a good performance in image restoration. In [26], Chen et al. used truncated Schatten p-norm and proposed an optimization model of human motion recovery, and further showed that the truncated Schatten p-norm has a better effect of approximate rank function than other norms by using a series of experiments.

The motivations of the paper are as follows.

-

In the rank function, all the non-zero singular values have the same effect. However, the different singular values should have different contributions as much as possible. So NN is not the best approximate substitute for rank function.

-

The main idea of TNN is to remove the larger singular values of r and add the remaining singular values of \((\min (m, n)-r)\) to reduce the influence of large singular values on low rank. It was shown that TNN has better approximate effect than NN.

-

When \(0<p<1\), Schatten p-norm is a non-convex minimum optimal substitution for NN, and when \(p=1\), it is equivalent to the nuclear norm. Specially, when p approximates 0, the Schatten p-norm approximates the rank function.

-

Truncated Schatten p-norm sums only the p power of singular values of \((\min (m,n)-r)\), which combines TNN and Schatten p-norm advantages. It has been shown in [25, 26] that Schatten p-norm has a good performance in image restoration and a better effect of approximate rank function than other norms by using a series of experiments.

The main contributions of this paper can be summarized as follows.

-

The truncated Schatten p-norm based non-convex regularization models, which are directed against matrix completion and RPCA problems, respectively, are proposed;

-

The function expansion method is used to transform the non-convex optimization model into the convex optimization model, and the two-step iterative algorithm of ADMM [27] is utilized to solve the model;

-

The convergence of the proposed algorithm is proved mathematically;

-

The advantages of the proposed methods are further illustrated by synthetic data, actual image restoration, and background separation experiments.

The contents of this paper are arranged as follows. In Sect. 2, we establish regularization models of matrix completion based on truncated Schatten p-norm, corresponding algorithms, and convergence theorem. The corresponding RPCA regularization model and solution method based on truncated Schatten p-norm are described in Sect. 3. Section 4 describes and analyses the experimental results, and through the comparison of experimental results, the advantages of the proposed method are further explained. Section 5 makes a summarization and reduces the conclusion of the whole article. Finally, the details of the proof of the convergence theorem are attached in “Appendix”.

2 Regularization of matrix completion based on truncated Schatten p-norm (TSPN-MC)

2.1 TSPN-MC model

From [25] and [26], it follows that truncated Schatten p-norm can be written as

where \(A\in {\mathbf {R}}^{r\times m}\), and \(B\in {\mathbf {R}}^{r\times n}\). Further, we need the following lemma.

Lemma 2.1

( see[25]) Suppose that the rank of matrix \(X\in {\mathbf {R}}^{m\times n}\) is \(r (r\le \min (m,n))\), and its singular value decomposition is \(X=U\Delta V^{\top }\), where \(U=(\mu _{1}, \ldots , \mu _{m})\in {\mathbf {R}}^{m\times m}\), \(\Delta \in {\mathbf {R}}^{m \times n}\), \(V=(\nu _{1}, \ldots , \nu _{n})\in {\mathbf {R}}^{n\times n}\). Then when \(A=(\mu _{1}, \ldots , \mu _{r})^{\top }\in {\mathbf {R}}^{r\times m}\), \(B=(\nu _{1}, \ldots , \nu _{r})^{\top }\in {\mathbf {R}}^{r\times n}\), and \(0<p\le 1\), the optimization problem:

has optimal solution.

From Eq. (2.1) and Lemma 2.1, we improve the model of matrix completion (1.3) and obtain the new model based on the truncated Schatten p-norm:

where \(A\in {\mathbf {R}}^{r\times m}\), \(B\in {\mathbf {R}}^{r\times n}\), \(AA^{\top }=I_{r\times r}\), \(BB^{\top }=I_{r\times r}\), and \(0<p\le 1\).

2.2 Solving TSPN-MC

Since (2.3) is a non-convex optimization problem, it cannot be solved directly by the usual method. To this end, we first put our hand to transform the model (2.3).

Let \(F(\sigma (X))=\sum _{i=1}^{\min (m,n)}(1-\sigma _{i}(B^{\top }A))(\sigma _{i}(X))^{p}\), then its derivative with respect to \(\sigma (X)\) is

Therefore, we can obtain that the first-order Taylor expansion of \(F(\sigma (X))\):

Let \(\omega _{i}=p(1-\sigma _{i}(B^{\top }A))(\sigma _{i}(X_{k}))^{p-1}\), then \(F(\sigma (X))=\sum _{i=1}^{\min (m,n)}\omega _{i}\sigma _{i}(X)\), where \(W:=\{\omega _{i}\}_{i=1}^{\min (m,n)}\) is a no decreasing weight sequence. As a result, the model (2.3) becomes a model based on weighted nuclear norm:

Consequently, we can use ADMM and divide into two steps to solve the convex optimization model (2.6):

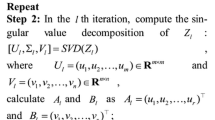

-

Step 1: Initialize \(X_{1}=M\), and calculate \(X_{s} = U_{s} \Delta _{s}V_{s}^{\top }\) in the \((s+1)\)-th iteration. Then, \(A_{s}\) and \(B_{s}\) are calculated from \(U_{s}\) and \(V_{s}\);

-

Step 2: Fix \(A_{s}\) and \(B_{s}\), and calculate the weight \(W=\{\omega _{i}\}_{i=1}^{r}\) of K-th iteration. Then, the ADMM algorithm is used to solve (2.6).

We sort out the specific solution process, and obtain the following Algorithm 1.

The process of using ADMM to solve (2.6) is described in detail below. We first introduce the variable N and rewrite the model (2.6) as

Then, we construct augmented Lagrangian function of (2.7) as

where Y is a Lagrangian multiplier, and \(\mu > 0\) is a penalty factor.

Fixing X, and updating N follows

and

Then, fixing N and updating X follows

Let \(Q_{X}=M-N_{k+1}+\frac{1}{\mu _{k}}Y_{k}\), then (2.11) can be solved by the following Lemma 2.2.

Lemma 2.2

(see [24]) Suppose that the rank of matrix Q is r, and its singular value decomposition is \(Q=U\Delta V^{\top }\), \(\Delta =\text {Diag}(\sigma _{i}(Q))\), \(1\le i\le r\), then for any \(\tau >0\), the optimization problem

has optimum solution:

where \(S_{\omega ,\tau }(\cdot )\) is weighted singular value shrink operator, \((\cdot )_{+}:=\max (\cdot ,0)\), and \(W=\{\omega _{i}\}_{i=1}^{r}\).

Therefore, from Lemma 2.2 it follows the solution of (2.11):

So updating Y and \(\mu \) achieves

where \(\rho (>1)\) is a constant.

Sorting out the above details obtains the following matrix completion regularization (TSPN-MC) algorithm based on truncated Schatten p-norm 2.

As the end of this section, we give the convergence theorem of Algorithm 2. The details of its proof will be given in “Appendix”.

Theorem 2.1

\(\{X_{k}\}\) obtained by Algorithm 2 is convergent.

3 Low rank sparse decomposition based on truncated Schatten p-norm (TSPN-RPCA)

In the previous section, we introduced the TSPN-MC model and presented solution algorithms. In this section, we build a matrix low-rank sparse decomposition model based on truncated Schatten p-norm and its solution algorithm.

We can rewrite the model (1.4) as

Similarly, the TSPN-RPCA model can be solved by two-steps iterative method. Because the first step solution process is the same as the first step solution process of model TSPN-MC, we here only introduces the second step solving process with ADMM. Clearly, the Lagrangian functions of (3.1) can be represented as

Applying ADMM obtains

Since the method of solving \(X_{k+1}\), \(Y_{k+1}\), and \(\mu _{k+1}\) is the same as that of TSPN-MC model, it is not introduced here. For \(E_{k 1} \), it can be solved by the following Lemma 3.1.

Lemma 3.1

(see [28]) For a given matrix \(C\in {\mathbf {R}}^{m\times n}\), and any \(\tau >0\), the solution of optimization problem

is \(E^{*}=\Theta _{\tau }[c_{ij}] \), where \(c_{ij}\) are the elements of matrixC, \(\Theta _{\tau }[\cdot ]\) is shrink operator defined by

Furthermore, the algorithm of TSPN-RPCA solved by ADMM can be summarized in following Algorithm 3.

4 Experiments

In this section, the effectiveness of the proposed model and algorithm are verified by a series of experiments on synthetic data and actual images. The simulations of all experiments are carried out in a MATLAB R2014a environment running on an Intel(R), Core(TM) i3-4150, CPU @ 3.5GHz with 4 GB main memory.

4.1 Application of TSPN-MC in image restoration

Firstly, the experiments results of the methods NN-MC [17], TNN-MC [1], WNN-MC [29], and TSPN-MC on synthetic data are compared, and the feasibility of the proposed algorithm is illustrated. Then, the effectiveness of the four methods is compared by the experimental results on actual image restoration.

4.1.1 Experimental results on synthetic data

Following the generated method of synthetic data in [29], the generated data obeys the Gaussian distribution \({\mathcal {N}}(0,1)\). We set the matrix size as \(m=n=400\), and take \(ps=0.1\), 0.2, and 0.3, respectively. So the number of missing data in the matrix is \((m\times n\times ps)\). Then, we select the value of \(pr\in [0.05, 0.3]\) for each ps at an interval of 0.05, and calculate the rank \(r=pr\times m\) of the data matrix. In order to illustrate the performance of the model, the matrix recovery error is used as an evaluation index, which is defined by

For the data generated by each group of (ps, pr) values, we conduct 10 tests, and then take the average as the final result for that group. For the parameter p in TSPN-MC, depending on the different synthetic data, its specific value will be indicated in the results of the experiment.

From the error results shown in Tables 1, 2, and 3, we can see that TSPN-MC and WNN-MC are better than the other two methods in recovery. However, the recovery error of TSPN-MC is the smallest, and it has better performance than the other three methods. On the other hand, with the increase of missing data and the rank of synthetic data matrix, the error fluctuation of TSPN-MC is small and has certain stability. Table 4 shows the average time of four methods to run on synthetic data. Because the singular value decomposition was used three times in the computation weight W, so the running time of TSPN-MC is longer than the other three methods.

4.1.2 Experimental results on image restoration

We select four images with the size of \(300\times 300\). By comparing the peak signal-to-noise ratio (PSNR), we verify the image restoration effect of TSPN-MC under the condition of missing rate of \(20\%, 40\%, 60\%, 80\%\), and text masking, respectively, to illustrate the performance advantages of TSPN-MC. As usual, the PSNR is defined by

where \(X\in {\mathbf {R}}^{m \times n}, M\in {\mathbf {R}}^{m \times n}\) represents the original image and the restored image, respectively. Since the parameter settings of different images are different, we will mark the parameter p and truncated rank r of each image in the experimental results. The test images used in this article are shown in Fig. 1.

From PSNR shown in Tables 5, 6, 7 and 8, it can be concluded that the restoration effect of TSPN-MC is better than that of the other three methods, and even with the increase of the missing rate, the PSNR value is still decreasing. On the whole, TSPN-MC’s PSNR is higher than other methods, and the recovery effect is better. In order to further illustrate the advantages of the proposed method, Figs. 2, 3, and 4 show the restoration visual effects under different missing or masking conditions, respectively. From these Figures, we can clearly see that the image restored by TSPN-MC is closer to the original image and clearer than that of other methods. To sum up, TSPN-MC model has better recovery performance.

4.2 Application of TSPN-RPCA in background subtraction

4.2.1 Experimental results on synthetic data

In this section, the recovery effects of TSPN-RPCA and LRSD-TNN [30], IALM [21], ALM [21] and APG [31] are compared by generating synthetic data, and the effectiveness of the proposed algorithm is illustrated. First of all, we are generating a synthetic matrix with rank r and Sparsity degree spr: \(M_{0}=X_{0}+E_{0}\). Here the low rank matrix can be written as \(X_{0}=HG^{\top }\), where \(H\in {\mathbf {R}}^{m\times r}\), and \(G\in {\mathbf {R}}^{n\times r}\) satisfies Gaussian distribution \({\mathcal {N}}(0,1)\). And the sparse matrix \(E_{0}\) is a pulse sparse matrix with uniformly distribution on \([- t, t]\), where \(t=\max {(|X_{0}|)}\), and the number of elements in the matrix is \((spr\times m\times n)\). In the experiment, p is set as 0.2.

In order to illustrate the accuracy of synthetic data recovery, we compare the errors of different methods, including recovery total error Totalerr, low rank matrix error LRerr, and sparse matrix error SPerr. These errors are defined by

respectively, where M, X, and E are restored matrices.

We first fix the rank of matrix as \(r=0.1n\), sparsity degree as \(spr=0.05\), and three sets of data of \(m=n=100, 500, 900\) are generated respectively. The matrix recovery experiments are then carried out in five methods, and the results are shown in Tables 9, 10, and 11. By analyzing the results in the Tables, we know that the error of TSPN-RPCA is the smallest, the accuracy is higher than the other four methods, and the original matrix can be recovered more accurately, and the rank and sparsity of the restored matrix are the same as those of the initial matrix. Even with the increase of matrix dimension, the recovery error of TSPN-RPCA can be maintained at a stable level. Because TSPN-RPCA involves matrix singular value decomposition many times, the time consumption is larger than other methods, especially when the matrix dimension is larger.

On the other hand, we fix matrix size as \(m=n=500\). When setting \(r=0.1n, spr=0.1\) and \(r=0.05n, spr=0.05\), we respectively illustrate the advantages of the proposed method. The results are shown in Tables 12 and 13. By comparing the results of Tables 10 and 12, we find that the recovery accuracy of TSPN-RPCA decreases when the sparsity of matrix is reduced, but it is still superior to other methods. In addition, when the rank of matrix decreases, comparison Tables 10 and 13 finds that the recovery accuracy of TSPN-RPCA does not fluctuate much, and time and the number of iterations are obviously reduced. In a word, TSPN-RPCA has better recovery effect and better performance than other methods.

4.2.2 Experimental results on background subtraction

In this subsection, the background subtraction experiment is carried out through the actual video, and the superior performance of the proposed method is further verified by the comparative experiment of LRSD-TNN, IALM, and TSPN-RPCA.

For a given video, each frame can be regarded as a column of elements of the matrix. We chose three different videos [30]: Bootstrap, Hall, and Lobby, respectively. We select 16 frames of each video and convert it into a matrix with \((mn \times 16)\) dimension, where m, n is the dimension size of each video. Due to the limited space of the article, this paper only shows the effect diagram of the separation prospect. It can be seen from the visual diagram Fig. 5 that the moving target in the video can be clearly separated by the proposed method. The separation effect of the other two methods is relatively poor, and the light in the background and the “ghost shadow” formed by the moving target appear. In this experiment, the parameter value of TSPN-RPCA is set as \(p=0.2\), and the truncation rank is taken as \(r=3\).

5 Conclusion

This paper studied matrix completion (TSPN-MC) and matrix low rank sparse decomposition (TSPNR-PCA) based on truncated Schatten p-norm. Combined with the characteristics and advantages of truncated norm and Schatten p-norm, the flexibility of the model and its effectiveness in practical problems are enhanced by adjusting the value of \(p (0 < p\le 1)\). We first used Taylor expansion to transform the proposed TSPN-MC and TSPN-RPCA non-convex models into convex optimization models. Then, the popular alternating direction multiplier method (ADMM) was used to solve the models. In particular, the convergence of the proposed algorithm is proved. A series of comparative experiments further verified the effectiveness and superiority of the proposed method.

It should be pointed out that in the solution of the models, the singular value of the matrix needs to be decomposed and calculated, which is bound to increase the time cost of the experiment. Therefore, in the future research, we should pay attention to how to find new methods to reduce the time consumption caused by singular value decomposition (SVD). In addition, for the selection of truncated rank, we will consider using adaptive method to determine its value in order to reduce the repetition times of the experiment.

References

Hu Y, Zhang D, Ye J, Li X, He X (2013) Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans Pattern Anal Mach Intell 35(9):2117–2130

Fan J, Chow TW (2017) Matrix completion by least-square, low-rank, and sparse self-representations. Pattern Recognit 71:290–305

Yu Y, Peng J, Yue S (2018) A new nonconvex approach to low-rank matrix completion with application to image inpainting. Multidimens Syst Signal Process 1:1–30

Qian W, Cao F (2019) Adaptive algorithms for low-rank and sparse matrix recovery with truncated nuclear norm. Int J Mach Learn Cybern 10(6):1341–1355

Ji H, Liu C, Shen Z, Xu Y (2010) Robust video denoising using low rank matrix completion. In: Proc IEEE Conf Comp Vis Pattern Recognit (CVPR), pp 1791–1798

Nie F, Wang H, Cai X, Huang H, Ding C (2012). Robust matrix completion via joint Schatten \(p\)-norm and \(l_{p}\)-norm minimization. In: Proc Int Comp Data Mining (ICDM), pp 566–574

Lu J, Liang G, Sun J, Bi J (2016) A sparse interactive model for matrix completion with side information. In: Proc Adv Neural Inf Proc Syst (NIPS), pp 4071–4079

Bennett J, Lanning S (2007) The netflix prize. Proc KDD Cup Workshop 2007:35–38

Otazo R, Candès E, Sodickson DK (2015) Low-rank plus sparse matrix decomposition for accelerated dynamic MRI with separation of background and dynamic components. Magn Res Med 73(3):1125–1136

Ravishankar S, Moore BE, Nadakuditi RR, Fessler JA (2017) Low-rank and adaptive sparse signal (LASSI) models for highly accelerated dynamic imaging. IEEE Trans Med Imaging 36(5):1116–1128

Javed S, Bouwmans T, Shah M, Jung SK (2017) Moving object detection on RGB-D videos using graph regularized spatiotemporal RPCA. In: Proc Int Conf Image Anal Proc (ICIAP), pp 230–241

Dang C, Radha H (2015) RPCA-KFE: key frame extraction for video using robust principal component analysis. IEEE Trans Image Process 24(11):3742–3753

Jin M, Li R, Jiang J, Qin B (2017) Extracting contrast-filled vessels in X-ray angiography by graduated RPCA with motion coherency constraint. Pattern Recognit 63:653–666

Werner R, Wilmsy M, Cheng B, Forkert ND (2016) Beyond cost function masking: RPCA-based non-linear registration in the context of VLSM. In: Proc Int Workshop Pattern Recognit Neuroimaging (PRNI), pp 1–4

Song Z, Cui K, Cheng G (2019) Image set face recognition based on extended low rank recovery and collaborative representation. Int J Mach Learn Cybern. https://doi.org/10.1007/s13042-019-00941-6

Fazel M (2002) Matrix rank minimization with applications. Doctoral dissertation, PhD thesis, Stanford University

Candès EJ, Recht B (2009) Exact matrix completion via convex optimization. Found Comput Math 9(6):717–772

Oymak S, Mohan K, Fazel M, Hassibi B (2011) A simplified approach to recovery conditions for low rank matrices. In: Proc Int Symp Inf Theory (ISIT), pp 2318–2322

Li G, Yan Z (2019) Reconstruction of sparse signals via neurodynamic optimization. Int J Mach Learn Cybern 10(1):15–26

Lin Z, Ganesh A, Wright J, Wu L, Chen M, Ma Y (2009) Fast convex optimization algorithms for exact recovery of a corrupted low-rank matrix. Comput Adv Multisens Adapt Process 61(6):1–18

Lin Z, Chen M, Ma Y (2010) The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv preprint arXiv: 1009.5055

Toh KC, Yun S (2010) An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac J Optim 6(3):615–640

Yang AY, Sastry SS, Ganesh A, Ma Y (2010) Fast \(\ell _{1}\)-minimization algorithms and an application in robust face recognition: a review. In: Proc 17th Int Conf Image Process, pp 1849–1852

Gu S, Zhang L, Zuo W, Feng X (2014) Weighted nuclear norm minimization with application to image denoising. In: Proc Conf Comp Vis Pattern Recognit, pp 2862–2869

Feng L, Sun H, Sun Q, Xia G (2016) Image compressive sensing via Truncated Schatten-\(p\) Norm regularization. Signal Proc Image Commun 47:28–41

Chen B, Sun H, Xia G, Feng L, Li B (2018) Human motion recovery utilizing truncated Schatten \(p\)-norm and kinematic constraints. Inf Sci 450:89–108

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn 3(1):1–122

Candès EJ, Li X, Ma Y, Wright J (2011) Robust principal component analysis? J ACM 58(3):1–37

Gu S, Xie Q, Meng D, Zuo W, Feng X, Zhang L (2017) Weighted nuclear norm minimization and its applications to low level vision. Int J Comput Vis 121(2):183–208

Cao F, Chen J, Ye H, Zhao J, Zhou Z (2017) Recovering low-rank and sparse matrix based on the truncated nuclear norm. Neural Netw 85:10–20

Beck A, Teboulle M (2009) A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imaging Sci 2(1):183–202

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 61933013.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

The proof of Theorem 2.1

Let \(Q_{X}=M-N_{k+1}+\frac{1}{\mu _{k}}Y_{k}\), then in \((k+1)\)-th iteration, its singular value decomposition is \([U_{k}, \Delta _{k}, V_{k}]=SVD(Q_{X})\). From Lemma 2.2, it follows that \(X_{k+1}=U_{k}\Lambda _{k}V_{k}^{\top }\), where \(\Lambda _{k}=\{(\Delta _{k}-\frac{1}{\mu _{k}}W)_{+}\}\). So from (2.14) it follows that

which shows that \(\{Y_{k}\}\) is bounded. Recalling the definition of augmented Lagrangian function (2.8), for the solution of \((k+1)\)-th iteration \(\{X_{k+1}, N_{k+1}\}\), there is

Noticing \(Y_{k+1}=Y_{k}+\mu _{k}(M-X_{k+1}-N_{k+1})\), we have

Therefore,

Since \(\{Y_{k}\}\) is bound, and there is \(\mu _{k+1}=\min (\rho \mu _{k}, \mu _{\text {max}})\), \(\Gamma (X_{k+1}, N_{k+1}, Y_{k}, \mu _{k})\) is bounded.

And because

\(\{X_{k}\}\) is bounded. From \(N_{k+1}=M-X_{k}+\frac{1}{\mu _{k}}Y_{k}\), it can be deduced that \(\{N_{k}\}\) is also bounded. So \(\{X_{k}, N_{k}, Y_{k}\}\) has at least one point of accumulation, and

We prove the convergence of \(X_{k}\) below. Because

we have

This completes the proof of Theorem 2.1. \(\square \)

Rights and permissions

About this article

Cite this article

Wen, C., Qian, W., Zhang, Q. et al. Algorithms of matrix recovery based on truncated Schatten p-norm. Int. J. Mach. Learn. & Cyber. 12, 1557–1570 (2021). https://doi.org/10.1007/s13042-020-01256-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-020-01256-7