Abstract

Low-rank prior knowledge has indicated great superiority in the field of image processing. However, how to solve the NP-hard problem containing rank norm is crucial to the recovery results. In this paper, truncated weighted schatten-p norm, which is employed to approximate the rank function by taking advantages of both weighted nuclear norm and truncated schatten-p norm, has been proposed toward better exploiting low-rank property in image CS recovery. At last, we have developed an efficient iterative scheme based on alternating direction method of multipliers to accurately solve the nonconvex optimization model. Experimental results demonstrate that our proposed algorithm is exceeding the existing state-of-the-art methods, both visually and quantitatively.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Compressive Sensing (CS) [1,2,3,4,5] draws recently much more attention in the field of image processing. Compared with the traditional scheme of sampling followed by compressing, CS carries out the above two steps at the same time. From fewer measurements than required by Nyquist theorem, CS can efficiently reconstruct images under the condition that they satisfy the sparsity.

Traditional CS algorithms often exploit the sparsity in some transform domains [6,7,8,9,10,11]. Lately, the intrinsic low-rank property exploited by self-similarity and nonlocal operation, has widely used in many research fields, such as face recognition [12,13,14], image inpainting [15] and compressive sensing [16, 17]. These methods based on low-rank property have shown competitive performances in each area.

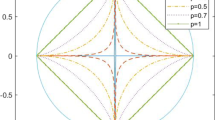

The success of these low-rank regularization based CS methods depends on the solution of the low-rank regularization problem. Unfortunately, the low-rank regularization problem is NP-hard and there is no method to solve it directly. Many methods use different substitution functions to approximate the rank function [18,19,20]. [18, 19] selected convex nuclear norm and employed singular value thresholding [21] to efficiently resolve the rank regularization problem. Compared with the original rank definition has the fact that all nonzero singular values play the same important role, the nuclear norm based approaches minimized the summarization of all the singular values. [16] chose logdet function (the logarithm sum of all the singular values) and can obtain better results than nuclear norm. However, logdet function is fixed and essentially deviates from the rank function. In order to obtain a competitive solution of low-rank regularization problem, many methods adopt distinct schemes and treat each singular value differently [22,23,24,25]. Weighted nuclear norm [22] assigned larger weights to larger singular values and smaller weights to smaller ones. So smaller singular values are penalized more than larger ones. Since the largest r (the rank) singular values will not impact the rank, in our previous work, truncated schatten-p norm [24] abandoned them and only minimized the summation of surplus singular values to the power of p.

In this paper, truncated weighted schatten-p norm (TWSP), which is taking advantages of both weighted nuclear norm and truncated schatten-p norm, has been firstly proposed toward better exploiting low-rank property in image CS recovery. At last, we further propose an efficient iterative scheme based on alternating direction method of multipliers to accurately solve the nonconvex optimization model.

The reminder is organized as follows. Section 2 simply reviews the weighted nuclear norm based image CS model and truncated schatten-p norm based image CS model. In Sect. 3, our proposed TWSP and an efficient iterative scheme for the optimization model are given in details. In Sect. 4, the effectiveness of our method is proved by several experiments. Finally, we summarize our proposed model in Sect. 5.

2 Related Work

In this section, we will review low-rank regularization based image CS recovery model. Suppose an image is defined as \(x \in {R^n}\) and its sampling matrix is \(\varPhi \in {R^{m \times n}}(m \ll n)\), the purpose of CS is to reconstruct the image x from the measurement \(y \in {R^m}\) (\(y = \varPhi x\)) than suggested by traditional Nyquist sampling theorem.

Usually, natural images have a large number of repetitive structures and these blocks with repetitive structures are located in the global scope of the images. So the rank of each block matrix grouped by corresponding nonlocal similar blocks is low. The intrinsic low-rank property exploited by self-similarity and nonlocal operation, has widely used in face recognition, compressive sensing and image denoising. These methods based on low-rank property have shown competitive performances in each area.

Suppose \({x_j} \in {R^d}\) is a block, we query for h most similar blocks from all image blocks. \({R_j}x = \left[ {{R_{{j_1}}}x,{R_{{j_2}}}x, \cdots {R_{{j_h}}}x} \right] \in {R^{d \times h}}\) (\({R_{{j_k}}}\) is the corresponding extraction matrix) is the j-th block matrix formed by these similar blocks. Then the CS recovery problem is formulated as follows:

where \(\left\| {\; \cdot \;} \right\| _F^2\) is the square sum of all elements.

Weighted nuclear norm [22] assigned larger weights to larger singular values and smaller weights to smaller ones. So smaller singular values are penalized more than larger ones. So the weighted nuclear norm based image CS model is represented as follows:

where \({w_i} = \rho \sqrt{h} /\left( {{\delta _i}\left( {A_j^s} \right) \mathrm{{ + }}\varepsilon } \right) \), \(\rho \) is a normal number and \(\varepsilon \mathrm{{ = }}{10^{\mathrm{{ - }}16}}\).

Since the largest r (the rank) singular values will not impact the rank, truncated schatten-p norm [24] abandoned them and only minimized the summation of surplus singular values to the power of p. Then the truncated schatten-p norm based image CS model is defined as

Assume \(U\varDelta {V^T}\) is the singular value decomposition of \({L_j}\), where \(\varDelta \in {R^{d \times h}}\), \(U = ({u_1}, \ldots {u_d}) \in {R^{d \times d}}\) and \(V = ({v_1}, \ldots {v_h}) \in {R^{h \times h}}\), then \(A \in {R^{r \times d}}\) and \(B \in {R^{r \times h}}\) are the corresponding transposition of former r columns from U and V respectively.

3 Truncated Weighted Schatten-p Norm for Image Compressive Sensing Recovery

In this paper, to obtain better results of low-rank regularization problem, truncated weighted schatten-p norm regularization, which is taking advantages of both weighted nuclear norm and truncated schatten-p norm, is presented toward better exploiting low-rank property. Specially, we only minimize the summation of few smallest singular values to the power of p multiplied by the certain weights.

From the above analysis, our method can be modeled as

Except for the compressive measurements, all the other information cannot be known. We introduce auxiliary variables and address the above problem by alternating direction method of multipliers, where the image x, the block group \({R_j}x\) and the corresponding auxiliary variable \({L_j}\) are computed in turn. Then Eq. (4) can be reformulated as

Equation (5) contains the following four subproblems.

3.1 \(\left\{ {A_j},{B_j} \right\} \) Subproblem

According to the definition of truncated schatten-p norm [24], when \({A^{t + 1}}\) and \({B^{t + 1}}\) are calculated based on singular value decomposition of \(L_j^t\), Eq. (6) gets the maximal value. Given intermediate estimated value \(L_j^t\), we first calculate the singular value decomposition of \(L_j^t\) (\(\left[ {U_j^t,\varDelta _j^t,V_j^t} \right] = svd(L_j^t)\)), and then estimate \({A^{t + 1}}\) and \({B^{t + 1}}\) by the following formula.

3.2 \({w_j}\) Subproblem

Since the larger singular values are corresponding to the energy of the major components, they are more important than the smaller ones. According to the definition of weighted nuclear norm [22], we set

3.3 \({L_j}\) subproblem

Although \({L_j}\) subproblem is nonconvex, we can obtain a suboptimal solution through a local minimization method referred to [4, 16, 24]. \(g(\delta ) = w_i^{t + 1}\delta _i^p\left( {{L_j}} \right) \) \(\left( {1 - {\delta _i}\left( {{{\left( {B_j^{t + 1}} \right) }^T}A_j^{t + 1}} \right) } \right) \) can be approximated using first-order Taylor expansion.

Suppose \({\delta ^{t + 1,k}} = \delta \left( {L_j^{t + 1,k}} \right) \) is the k-th iteration solution. Therefore, neglecting the constant term, Eq. (12) can be solved with by iteratively computing

Equation (13) denotes the weighted nuclear norm and has an analytical solution [4, 16, 24].

3.4 x Subproblem

Equation (9) is a quadratic problem and has a closed-form solution.

When an estimated image x is obtained, the variables \({w_j}\), \({A_j}\), \({B_j}\) and \({L_j}\) can be updated. Then \({L_j}\) is used to obtain a better estimated image. This process continues to iterate until convergence. The algorithm is summarized as bellows.

4 Experimental Results

In order to prove the effectiveness of our model, TWSP is compared with total variation based method (TV) [25], low-rank regularization based method (NLR) [16], weighted nuclear norm based method (WNN) [22] and truncated schatten-p norm based method (TSPN) [24]. Note that TV exploits the gradient sparsity; NLR, WNN and TSPN all employ nonconvex substitution function to exploit nonlocal low-rank property; TSPN shows the current state-of-the-art performance.

The parameters are set as follows: random sampling rate is \(\frac{{100m}}{n}\% \); the size of block is \(6 \times 6\) and the number of similar blocks is 45; we divide the image into overlapping blocks into reference blocks for every five pixels; the regularization parameter p is 0.6, r is 4, \(\lambda \) and \(\beta \) are tuned separately for each sampling rate. Eight test natural images are used which are shown in Fig. 1. The Peak Signal-to-Noise Ratio (PSNR) is employed to evaluate the different recovery results.

4.1 Experiments on Noiseless Measurements

The PSNR comparison when the sampling rates are 2.5%, 5%, 10%, 15% and 20% is provided in Table 1. From Table 1, we can see that (1) WNN, TSPN and TWSP outperform NLR for almost all the situations; (2) on average, WNN and TSPN get similar recovery results; (3) TWSP obtains the highest PSNRs in all the cases; (4) The average PSNR gains of TWSP over TV, NLR, WNN and TSPN are 3.66 dB, 1.57 dB, 0.39 dB and 0.36 dB respectively with 2.5% sampling rate. The visual results of three images are provided as Figs. 2, 3, and 4. We can see that TWSP can better exploit the nonlocal low-rank property and shows better performance than NLR, WNN and TSPN.

4.2 Experiments on Noisy Measurements

In this subsection, experiments with noisy measurements are carried out to verify the robustness of TWSP approach to Gaussian White noise. The sampling rate is 20%. The signal-to-noise ratio (\(SNR = 20*\log \frac{{\bar{A}}}{{\bar{D}}}\)), where \({\bar{A}}\) is the average value and \({\bar{D}}\) is the standard derivation of noise, varies from 15dB to 35 dB. The PSNR comparisons when SNR is 15 dB, 20 dB, 25 dB, 30 dB and 35 dB are provided in Table 2. TWSP achieves the highest PSNR results among all the methods. The average PSNR gains of TWSP over TV, NLR, WNN and TSPN can be as much as 5.07 dB, 2.80 dB, 1.80 dB and 1.82 dB respectively when SNR=15dB. Some visual results are shown in Figs. 5, 6 and 7, which verify the superiority of our proposed TWSP approach.

5 Conclusion

In this paper, we have presented a new approach toward image recovery via truncated weighted schatten-p norm which is taking advantages of both weighted nuclear norm and truncated schatten-p norm. Truncated weighted schatten-p norm can better exploit the nonlocal low-rank property than current CS methods based on low-rank regularization. In addition, we also propose an efficient iterative scheme based on alternating direction method of multipliers to accurately solve the nonconvex optimization model. Experimental results demonstrate that our proposed algorithm is exceeding the existing state-of-the-art methods, in terms of subjective and objective qualities.

References

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Cand, E.J., Wakin, M.B.: “people hearing without listening:” an introduction to compressive sampling. IEEE Signal Process. Mag. 25, 21–30 (2008)

Feng, L., Sun, H.: Blind compressive sensing method via local sparsity and nonlocal similarity. J. Nanjing Univ. Sci. Technol. 41, 399–404 (2017)

Feng, L., Sun, H., Sun, Q., Xia, G.: Compressive sensing via nonlocal low-rank tensor regularization. Neurocomputing 216, 45–60 (2016)

Zou, X., Feng, L., Sun, H.: Robust compressive sensing of multichannel eeg signals in the presence of impulsive noise. Inf. Sci. 429, 120–129 (2018)

Zhang, J., Zhao, D., Zhao, C., Xiong, R., Ma, S., Gao, W.: Compressed sensing recovery via collaborative sparsity. In: Data Compression Conference, pp. 287–296 (2012)

Yao, X., Han, J., Zhang, D., Nie, F.: Adaptively determining regularization parameters in non-local total variation regularization for image denoising. Electron. Lett. 51, 144–145 (2015)

Bioucas-Dias, J.M., Figueiredo, M.A.T.: A new twist: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16, 2992 (2007)

Becker, S., Bobin, J., Cands, E.J.: Nesta: A fast and accurate first-ordermethod for sparse recovery. SIAM J. Imaging Sci. 4, 1–39 (2009)

Javaherian, A., Soleimani, M., Moeller, K., Movafeghi, A., Faghihi, R.: An accelerated version of alternating direction method of multipliers for tv minimization in eit. Appl. Math. Model. 40, 8985–9000 (2016)

Bertalmio, M., Caselles, V., Roug, B., Sol, A.: Tv based image restoration with local constraints. J. Sci. Comput. 19, 95–122 (2003)

Wang, Y.C.F., Wei, C.P., Chen, C.F.: Low-rank matrix recovery with structural incoherence for robust face recognition. In: Computer Vision and Pattern Recognition, pp. 2618–2625 (2012)

Wei, C.P., Chen, C.F., Wang, Y.C.F.: Robust face recognition with structurally incoherent low-rank matrix decomposition. IEEE Trans. Image Process. 23, 3294–3307 (2014)

Nguyen, H., Yang, W., Shen, F., Sun, C.: Kernel low-rank representation for face recognition. Neurocomputing 155, 32–42 (2015)

Han, J., Quan, R., Zhang, D., Nie, F.: Robust Object Co-Segmentation Using Background Prior. IEEE Trans. Image Process. 27, 1639–1651 (2018)

Dong, W., Shi, G., Li, X., Ma, Y.: Compressive sensing via nonlocal low-rank regularization. IEEE Trans. Image Process. 23, 3618–3632 (2014)

Wang, H., Zhao, R., Cen, Y.: Rank adaptive atomic decomposition for low-rank matrix completion and its application on image recovery. Neurocomputing 145, 374–380 (2014)

Cabral, R., Torre, F.D.L., Costeira, J.P., Bernardino, A.: Unifying nuclear norm and bilinear factorization approaches for low-rank matrix decomposition. In: IEEE International Conference on Computer Vision, pp. 2488–2495 (2014)

Toh, K.C., Yun, S.: An accelerated proximal gradient algorithm for nuclear norm regularized least squares problems. Pac. J. Optim. 6, 615–640 (2009)

Wang, J., Wang, M., Hu, X., Yan, S.: Visual data denoising with a unified schatten-p norm and lq norm regularized principal component pursuit. Pattern Recognit. 48, 3135–3144 (2015)

Cai, J.F., Candes, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20, 1956–1982 (2008)

Gu, S., Zhang, L., Zuo, W., Feng, X.: Weighted nuclear norm minimization with application to image denoising. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2862–2869 (2014)

Xie, Y., Gu, S., Liu, Y., Zuo, W., Zhang, W., Zhang, L.: Weighted schatten p-norm minimization for image denoising and background subtraction. IEEE Trans. Image Process. 25, 4842–4857 (2015)

Feng, L., Sun, H., Sun, Q., Xia, G.: Image compressive sensing via truncated schatten-p norm regularization. Signal Process. Image Commun. 47, 28–41 (2016)

Zhang, J., Liu, S., Xiong, R., Ma, S., Zhao, D.: Improved total variation based image compressive sensing recovery by nonlocal regularization. In: IEEE International Symposium on Circuits and Systems, pp. 2836–2839 (2013)

Acknowledgments

The authors would like to express their gratitude to the anonymous referees as well as the Editor and Associate Editor for their valuable comments which lead to substantial improvements of the paper. This work was supported by High-level Talent Scientific Research Foundation of Jinling Institute of Technology (No. jit-b-201801), National Natural Science Foundation of China (No. 61772272), Doctor Initial Captional of Jinling Institute of Technology Nanjing (No. jit-b-201508), Jiangsu Key Laboratory of Image and Video Understanding for Social Safety (Nanjing University of Science and Technology) (No. 30916014107).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Feng, L., Zhu, J. (2018). Image Recovery via Truncated Weighted Schatten-p Norm Regularization. In: Sun, X., Pan, Z., Bertino, E. (eds) Cloud Computing and Security. ICCCS 2018. Lecture Notes in Computer Science(), vol 11068. Springer, Cham. https://doi.org/10.1007/978-3-030-00021-9_50

Download citation

DOI: https://doi.org/10.1007/978-3-030-00021-9_50

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00020-2

Online ISBN: 978-3-030-00021-9

eBook Packages: Computer ScienceComputer Science (R0)