Abstract

Extended grey numbers (EGNs), which integrate discrete grey numbers and continuous grey numbers, have a powerful capacity to express uncertainty and thus have been widely studied and applied to solve multi-criteria decision-making (MCDM) problems that involve uncertainty. Considering stochastic MCDM problems with interval probabilities, we propose a grey stochastic MCDM approach based on regret theory and the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS), in which the criteria values are expressed as EGNs. We also construct the utility value function, regret value function, and perceived utility value function of EGNs, and we rank the alternatives in accordance with the classical TOPSIS method. Finally, we provide two examples to illustrate the method and conduct comparative analyses between the proposed approach and existing methods. The comparisons suggest that the proposed approach is feasible and usable, and it provides a new method to solve stochastic MCDM problems. It not only fully considers decision-makers’ bounded rationality, but also enriches and expands the application of regret theory.

1 Introduction

Decision-making is the process of identifying and choosing alternatives based on the values and preferences of the decision-makers. Because of the uncertainty and imprecision of real-life decision problems, a variety of methods have been proposed in the literature, such as multi-criteria decision-making (MCDM) [11, 16, 27, 46] and uncertainty decision-making [49–52]. MCDM is an important component of decision theory and method research, which has been studied and applied to many fields, including agriculture [11], medical care [9], economics [41], human resources [30], and investment [60].

In traditional MCDM problems, a decision-maker uses precise numbers to express his or her preferences. However, due to the increasing complexity and uncertainty of MCDM problems, decision-makers are no longer able to reduce decisions to finite, precise numbers. Instead, they can provide a range of numbers, also called interval grey numbers [7], to evaluate alternative decisions based on uncertain information. For example, a decision-maker can use the age range 18–25 to classify a person as a “youth”. In this instance, the interval age range may provide a better representation than would a single year. Nevertheless, in some cases interval grey numbers cannot fully depict the information available in a discrete grey number. For instance, if a decision-maker wants to choose a single value from the choices 18, 20, and 25, the discrete grey number \(\{ 18,20,25\}\) would be appropriate, whereas the interval grey number \([18,25]\) cannot accurately represent this situation.

Liu [25] explained that discrete grey numbers can coexist with interval grey numbers, and Yang [56] proposed the use of extended grey numbers (EGNs), which combine both intervals and discrete sets of numbers and can therefore express uncertainty in a more powerful way. Suppose a company has two channels through which to add investments: in one channel, stockholders add 1000 or 2000 dollars, and in the other channel the employees collect 3000 to 4000 dollars. In this case, the EGN \(\{ 1000,2000\} \cup [3000,4000]\) expresses the total investment, which neither the discrete grey number nor the interval grey number can do on its own. Essentially, grey numbers, including discrete grey numbers, interval grey numbers, and EGNs, are the most basic concepts of grey systems theory, and they are more flexible and practical in dealing with problems involving uncertain information.

Since Deng [7, 8] first proposed grey systems theory, it has been an excellent tool in researching grey MCDM problems. At present, grey MCDM problems have been widely studied and applied to various fields, such as engineering design, economics, supply chain management, and water source protection. Stanujkic [34] proposed an extended multi-objective optimization on the basis of ratio analysis (MOORA) method, in which the criteria values are interval grey numbers. Wang et al. [47] defined the expected probability degree and proposed a grey stochastic MCDM method, in which criteria weights are incompletely certain and alternatives’ criteria values take the form of interval grey numbers. Su et al. [35] proposed a novel hierarchical grey decision-making trial and evaluation laboratory method to identify and analyze criteria values in incomplete information. Chithambaranathan et al. [6] integrated interval grey numbers with ELimination and Choice Translating REality (ELECTRE) and VIsekriterijumska optimizacija i KOmpromisno Resenje (VIKOR) to evaluate the environmental performance of service supply chains. Kuang et al. [17] integrated continuous grey numbers with linguistic expressions and proposed an approach based on Preference Ranking Organization Method for Enrichment Evaluation II (PROMETHEE II) for handling uncertainty in MCDM. The aforementioned grey MCDM problems generally express criteria values as interval grey numbers. However, limited research has been conducted on grey MCDM problems where criteria values are expressed as EGNs.

In some practical MCDM problems, decision-makers may find that the criteria values are random variables with unknown probability density functions or probability mass functions. These problems are called stochastic MCDM problems, and they have extensive application backgrounds [23, 27, 37, 48, 55]. Liu et al. [23] proposed a method based on prospect theory to solve stochastic MCDM problems with interval probability, in which criteria values take the form of uncertain linguistic variables. Okul et al. [27] proposed a method for addressing stochastic MCDM problems by combining the Stochastic Multi-criteria Acceptability Analysis (SMAA) and the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) method. Tan et al. [37] proposed another method based on prospect stochastic dominance for solving discrete stochastic MCDM problems with aspiration levels. Most existing studies that solve stochastic MCDM problems use real numbers as the criteria values, while only a few studies use grey numbers. Owing to the increasing complexity and uncertainty of decision problems, MCDM methods in which criteria values are simultaneously random and grey are increasingly used in the practical decision-making process.

Currently available methods for solving MCDM problems can be classified into two categories: methods based on complete rationality and methods based on bounded rationality. Within these two categories, more specific decision-making models and methods are based on the rational choice model using Expected Utility Theory (EUT). These can be further subdivided into three types as follows. (1) The first subtype includes methods based on distance measures, including TOPSIS [13] and VIKOR [28]. The TOPSIS method determines the solution with the shortest distance from the ideal solution and the farthest distance from the negative-ideal solution; it possesses the merits of robust logical structure and simple computation procedure as well as the ability to consider the ideal and negative-ideal solution simultaneously [16]. The VIKOR method provides the maximum group utility of the majority and the minimum of the individual regret of the opponent; its calculations are simple and straightforward. (2) The second subtype of methods includes those based on aggregation operators, such as the arithmetic aggregation operator [22], the geometric aggregation operator [22], the Heronian mean (HM) operator [24, 57], the Prioritized Average (PA) operator [45, 53], the Bonferroni mean operator [3, 38, 39], and the Choquet integral operator [36, 44]. These aggregation operators, which provide a comprehensive evaluation value for each alternative, are widely used in solving MCDM problems. However, the calculations involved are complex, and different operators consistently produce different ranking results. (3) The third subtype of methods is based on outranking relation models such as ELECTRE [32, 42] and PROMETHEE [4]. The ELECTRE method considers the minimum individual regret, while the PROMETHEE method focuses on the maximum group utility.

In the aforementioned MCDM methods based on complete rationality, the decision-makers are fully rational when evaluating the given alternatives. That is to say, their information is complete, their cognition is infinite, and they have sufficient time. However, during the real-life decision-making process, decision-makers’ rationality is limited by the information they are given, their personal cognitive limitations, and the amount of time provided for them to make the decision. As a result, their real-life behaviors usually depart from the predictions of EUT. Furthermore, various contradictions to EUT have been identified, such as the Allais paradox [1] and the Ellsberg paradox [10]. Responding to the fact that people only have limited rationality in a decision-making process, Simon [33] proposed the bounded rationality principle. Ever since Simon’s [33] pioneering work in the field, the concept of bounded rationality has been increasingly emphasized in MCDM methods [14, 43]. The most commonly used decision-making methods that are based on bounded rationality are prospect theory [15, 40] and regret theory [2, 26]. Both of these theories are designed to preserve the basic structure of EUT, but they also account for behaviors not predicted by EUT [18]. For example, in prospect theory, researchers use value and probability weight, respectively, to replace utility and probability in EUT. In regret theory, researchers compare the obtained outcome with the outcome that could have been obtained had the decision-maker chosen a different option, and use this comparison to correct the utility assessment.

In prospect theory, alternatives are evaluated by different functions in terms of gains and losses with respect to one or more reference points. The calculation functions contain five parameters, \(\alpha\), \(\beta\), \(\theta\), \(\gamma\), and \(\delta\), which depend on the psychological behavior of the decision-makers and are therefore difficult to determine. \(\alpha\) and \(\beta\) are the concave-convex degree of the region power function of the gains and losses, \(\theta\) shows that the region value power function is steeper for losses than for gains, \(\gamma\) is the risk gain attitude coefficient, and \(\delta\) is the risk loss attitude coefficient. Unlike prospect theory, regret theory does not need to specify a reference point; furthermore, the calculation functions only contain two parameters, the risk aversion coefficient and the regret aversion coefficient, which makes the theory more widely applicable. Regret reflects the difference in decision-makers’ positions resulting from choosing one of the unselected alternatives instead of the selected alternative. Rejoice reflects the additional pleasure gained from knowing that the best alternative was selected.

Many studies have focused on regret theory because of its descriptive power. Özerol et al. [29] investigated the findings of regret theory and explored the parallel between regret theory and the PROMETHEE II method. Zhang et al. [58] proposed a decision analysis method based on regret theory to solve stochastic MCDM problems in which both the criteria values and probabilities of the states take the form of interval numbers. Zhang et al. [59] considered the regret aversion characteristic of decision-makers’ psychological behavior and proposed a method for dealing with stochastic MCDM problems. However, few attempts have been made to apply regret theory to solving grey stochastic MCDM problems.

As a result, this paper proposes a stochastic MCDM method using regret theory, in which criteria values take the form of EGNs. For simplicity, the classical TOPSIS method is combined with the aforementioned grey stochastic MCDM method. Other methods may be substituted and studied in the future. In the proposed method, the utility value function and regret value function of EGNs are constructed, and then the perceived utility value is obtained by calculating the sum of the utility value and regret value for the given criteria. By using the function of interval probability, the overall perceived utility of alternatives is calculated in the form of interval grey numbers. Based on the TOPSIS method, all alternatives are ranked according to their relative closeness. The contributions and benefits of the proposed method include (1) applying EGNs to evaluate the incomplete information, (2) modeling the decision problem while considering the decision-makers’ regret and rejoice values, and (3) combining regret theory with the TOPSIS method to solve grey stochastic MCDM problems.

This paper is organized as follows: Sect. 2 reviews key concepts, including interval probability, grey numbers and EGNs, grey random variables, and regret theory. Section 3 focuses on the grey stochastic MCDM approach based on regret theory and TOPSIS, with criteria values taking the form of EGNs. Section 4 provides two specific examples and compares our experimental results with results from the existing methods. Finally, Sect. 5 discusses conclusions.

2 Preliminaries

This section reviews the concepts of interval probability, grey numbers and EGNs, grey random variables, and regret theory.

2.1 Interval probability

Consider a variable \(x\) taking its values in a finite set \(X = \{ x_{1} ,x_{2} , \ldots ,x_{n} \}\) and a set of intervals \([L_{i} ,U_{i} ] \, (i = 1,2, \ldots ,n)\) satisfying \(0 \le L_{i} \le U_{i} \le 1 \, (i = 1,2, \ldots ,n)\). We can interpret these intervals as interval probability.

Definition 1 [12]:

A set of intervals \([L_{i} ,U_{i} ] \, (i = 1,2, \ldots ,n)\) with \(0 \le L_{i} \le U_{i} \le 1 \, (i = 1,2, \ldots ,n)\) that describe the probability of a fundamental event is called an \(n\)-dimensional probability interval \((n - PRI)\). Let \(L = (L_{1} ,L_{2} , \ldots ,L_{n} )\) and \(U = (U_{1} ,U_{2} , \ldots ,U_{n} )\), then \(n - PRI\) can be denoted as \(n - PRI(L,U)\).

Definition 2 [12]:

Let \(p_{1} ,p_{2} , \ldots ,p_{n}\) be a group of positive real numbers. If \(L_{i} \le p_{i} \le U_{i} \, (i = 1,2, \ldots ,n)\) and \(\sum\nolimits_{i = 1}^{n} {p_{i} = 1}\), then \(n - PRI(L,U)\) is reasonable. Otherwise, \(n - PRI(L,U)\) is unreasonable.

Lemma 1 [54]:

An \(n - PRI(L,U)\) is reasonable iff \(\sum\nolimits_{i = 1}^{n} {L_{i} \le 1 \le } \sum\nolimits_{i = 1}^{n} {U_{i} }\).

Definition 3 [54]:

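If an \(n - PRI(L,U)\) is reasonable, the probability intervals \([L_{i} ,U_{i} ] \, (i = 1,2, \ldots ,n)\) can be transformed into more precise probability intervals \([\overline{L}_{i} ,\overline{U}_{i} ] \, (i = 1,2, \ldots ,n)\). The formulae, which have the same form as Eqs. (19) and (20) in Sect. 3.2, are given as follows:

$$\overline{L}_{i} = \hbox{max} \left( {L_{i} ,1 - \sum\limits_{j = 1,j \ne i}^{n} {U_{j} } } \right),\quad \overline{U}_{i} = \hbox{min} \left( {U_{i} ,1 - \sum\limits_{j = 1,j \ne i}^{n} {L_{j} } } \right).$$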
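As a minimal sketch of Lemma 1 and Definition 3, the following Python code checks whether a set of probability intervals is reasonable and then tightens it with the max/min rule that the paper also applies in Eqs. (19) and (20). The function names `is_reasonable` and `refine` and the sample intervals are illustrative assumptions, not part of the original method description.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (lower bound L_i, upper bound U_i)


def is_reasonable(intervals: List[Interval]) -> bool:
    """Lemma 1: reasonable iff sum of lower bounds <= 1 <= sum of upper bounds."""
    lower_sum = sum(lo for lo, _ in intervals)
    upper_sum = sum(hi for _, hi in intervals)
    return lower_sum <= 1.0 <= upper_sum


def refine(intervals: List[Interval]) -> List[Interval]:
    """Definition 3: tighten each interval using the bounds of all the others."""
    if not is_reasonable(intervals):
        raise ValueError("probability intervals are not reasonable")
    lower_sum = sum(lo for lo, _ in intervals)
    upper_sum = sum(hi for _, hi in intervals)
    refined = []
    for lo, hi in intervals:
        # upper_sum - hi is the sum of the other upper bounds; likewise for lower.
        new_lo = max(lo, 1.0 - (upper_sum - hi))
        new_hi = min(hi, 1.0 - (lower_sum - lo))
        refined.append((new_lo, new_hi))
    return refined


if __name__ == "__main__":
    # Hypothetical interval probabilities for three statuses.
    print(refine([(0.2, 0.5), (0.3, 0.4), (0.1, 0.6)]))
```

In this hypothetical input only the third interval changes: its upper bound is capped because the other two lower bounds already take up half of the probability mass.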

2.2 Grey numbers and extended grey numbers

A grey number is an uncertain number that is usually represented as a closed interval and is used to describe insufficient and incomplete information.

Definition 4 [25]:

A grey number is a number with clear upper and lower boundaries but an unknown position within these boundaries, the mathematical expression of which is

$${\tilde{ \otimes }} \in [a,b].$$

Here, \({\tilde{ \otimes }}\) is a grey number, and \(a\) and \(b\) are the lower and upper limits of the given information, \(a,b \in R\). A grey number represents the range of possible values of the underlying number, which is the same as an interval value with the same lower and upper limits. Thus, it can also be called an interval grey number.

Definition 5 [19]:

Let \({\tilde{ \otimes }}_{1} \in [a,b]\) and \({\tilde{ \otimes }}_{2} \in [c,d]\) be two interval grey numbers, and let \(l({\tilde{ \otimes }}_{1} ) = b - a\) and \(l({\tilde{ \otimes }}_{2} ) = d - c\) be the lengths of the interval grey numbers, respectively. In this case, the expected probability degree of \({\tilde{ \otimes }}_{1}\) against \({\tilde{ \otimes }}_{2}\) is defined as follows:

Definition 6 [19]:

The relationship between \({\tilde{ \otimes }}_{1}\) and \({\tilde{ \otimes }}_{2}\) can be determined as follows:

(1) If \(P({\tilde{ \otimes }}_{1} \ge {\tilde{ \otimes }}_{2} ) < 0.5\), then \({\tilde{ \otimes }}_{1}\) is less than \({\tilde{ \otimes }}_{2}\), which can be denoted as \({\tilde{ \otimes }}_{1} < {\tilde{ \otimes }}_{2}\).

(2) If \(P({\tilde{ \otimes }}_{1} \ge {\tilde{ \otimes }}_{2} ) = 0.5\), then \({\tilde{ \otimes }}_{1}\) is equal to \({\tilde{ \otimes }}_{2}\), which can be denoted as \({\tilde{ \otimes }}_{1} = {\tilde{ \otimes }}_{2}\).

(3) If \(P({\tilde{ \otimes }}_{1} \ge {\tilde{ \otimes }}_{2} ) > 0.5\), then \({\tilde{ \otimes }}_{1}\) is larger than \({\tilde{ \otimes }}_{2}\), which can be denoted as \({\tilde{ \otimes }}_{1} > {\tilde{ \otimes }}_{2}\).

Definition 7 [21]:

Let \({\tilde{ \otimes }}_{1} \in [a,b]\) and \({\tilde{ \otimes }}_{2} \in [c,d]\) be two interval grey numbers. The Euclidean distance between \({\tilde{ \otimes }}_{1}\) and \({\tilde{ \otimes }}_{2}\) can be calculated as follows:

Grey numbers are usually represented as closed intervals, but continuous intervals cannot fully reveal the uncertainty of the given information. In light of this, Yang [56] provided a new definition of grey numbers that considers both continuous and discrete grey numbers.

Definition 8 [56]:

Let \(\otimes\) be a union set of closed or open intervals. An EGN can be represented as follows:

$$\otimes = \bigcup\limits_{i = 1}^{n} {[a_{i} ,b_{i} ]} .$$ (4)

Here, \(i = 1,2, \ldots ,n\), \(n\) is an integer, and \(0 < n < \infty\), \(a_{i} ,b_{i} \in R\) and \(b_{i - 1} < a_{i} \le b_{i} < a_{i + 1}\). The set of all EGNs that meet Eq. (4) is represented as \(R( \otimes )\).

Theorem 1:

Let \(\otimes\) be an EGN. The following properties are true:

(1) \(\otimes\) is a continuous EGN \(\otimes = [a_{1} ,b_{n} ]\) iff \(a_{i} \le b_{i - 1}\) (\(\forall i > 1\)) or \(n = 1\);

(2) \(\otimes\) is a discrete EGN \(\otimes = \{ a_{1} ,a_{2} , \cdots ,a_{n} \}\) iff \(a_{i} = b_{i}\);

(3) \(\otimes\) is a mixed EGN iff only some of its intervals shrink to crisp numbers and the others remain as intervals.

Definition 9 [56]:

Let \(\otimes_{1} = \bigcup\nolimits_{i = 1}^{n} {[a_{i} ,b_{i} ]}\) and \(\otimes_{2} = \bigcup\nolimits_{j = 1}^{m} {[c_{j} ,d_{j} ]}\) be two EGNs, \(a_{i} \le b_{i} (i = 1,2, \ldots ,n)\), \(c_{j} \le d_{j} (j = 1,2, \ldots ,m)\), \(\lambda \in R,\lambda \ge 0\), then:

(1) \(\otimes_{1} + \otimes_{2} = \bigcup\nolimits_{i = 1}^{n} {\bigcup\nolimits_{j = 1}^{m} {[a_{i} + c_{j} ,b_{i} + d_{j} ]} } ;\)

(2) \(- \otimes_{1} = \bigcup\nolimits_{i = 1}^{n} {[ - b_{i} , - a_{i} ]} ;\)

(3) \(\otimes_{1} - \otimes_{2} = \bigcup\nolimits_{i = 1}^{n} {\bigcup\nolimits_{j = 1}^{m} {[a_{i} - d_{j} ,b_{i} - c_{j} ]} } ;\)

(4) \(\otimes_{1} \times \otimes_{2} = \bigcup\nolimits_{i = 1}^{n} {\bigcup\nolimits_{j = 1}^{m} {[\hbox{min} \{ a_{i} c_{j} ,a_{i} d_{j} ,b_{i} c_{j} ,b_{i} d_{j} \} ,\hbox{max} \{ a_{i} c_{j} ,a_{i} d_{j} ,b_{i} c_{j} ,b_{i} d_{j} \} ]} } ;\)

(5) \(\frac{{ \otimes_{1} }}{{ \otimes_{2} }} = \bigcup\nolimits_{i = 1}^{n} {\bigcup\nolimits_{j = 1}^{m} {[\hbox{min} \{ \frac{{a_{i} }}{{c_{j} }},\frac{{a_{i} }}{{d_{j} }},\frac{{b_{i} }}{{c_{j} }},\frac{{b_{i} }}{{d_{j} }}\} ,\hbox{max} \{ \frac{{a_{i} }}{{c_{j} }},\frac{{a_{i} }}{{d_{j} }},\frac{{b_{i} }}{{c_{j} }},\frac{{b_{i} }}{{d_{j} }}\} ]} } (c_{j} \ne 0,d_{j} \ne 0(j = 1,2, \ldots ,m));\)

(6) \(\lambda \otimes_{1} = \bigcup\nolimits_{i = 1}^{n} {[\lambda a_{i} ,\lambda b_{i} ]} ;\)

(7) \(\otimes_{1}^{\lambda } = \bigcup\nolimits_{i = 1}^{n} {[min(a_{i}^{\lambda } ,b_{i}^{\lambda } ),max(a_{i}^{\lambda } ,b_{i}^{\lambda } )]} .\)
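The operations in Definition 9 can be sketched in Python by storing an EGN as a sorted list of `(lower, upper)` pairs, with a crisp value written as a degenerate pair. The helper `merge`, which fuses overlapping result intervals so that the output again satisfies the ordering condition of Definition 8, is an assumption added for this illustration; Definition 9 itself only states the unions.

```python
from typing import List, Tuple

Interval = Tuple[float, float]
EGN = List[Interval]  # sorted, non-overlapping intervals; (a, a) is a crisp value


def merge(parts: EGN) -> EGN:
    """Sort component intervals and fuse any that overlap (illustrative helper)."""
    merged: EGN = []
    for lo, hi in sorted(parts):
        if merged and lo <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return merged


def egn_add(x: EGN, y: EGN) -> EGN:
    return merge([(a + c, b + d) for a, b in x for c, d in y])


def egn_neg(x: EGN) -> EGN:
    return merge([(-b, -a) for a, b in x])


def egn_sub(x: EGN, y: EGN) -> EGN:
    return merge([(a - d, b - c) for a, b in x for c, d in y])


def egn_mul(x: EGN, y: EGN) -> EGN:
    out = []
    for a, b in x:
        for c, d in y:
            products = (a * c, a * d, b * c, b * d)
            out.append((min(products), max(products)))
    return merge(out)


def egn_scale(lam: float, x: EGN) -> EGN:
    if lam < 0:
        raise ValueError("Definition 9 (6) assumes lambda >= 0")
    return merge([(lam * a, lam * b) for a, b in x])


if __name__ == "__main__":
    # The investment example from the Introduction: {1000, 2000} U [3000, 4000].
    investment = [(1000, 1000), (2000, 2000), (3000, 4000)]
    print(egn_add(investment, [(0, 500)]))
```

Adding the hypothetical extra contribution `[0, 500]` shifts each component separately, so the discrete values 1000 and 2000 become the intervals `[1000, 1500]` and `[2000, 2500]`.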

The expected probability degree of EGNs is provided, inspired by the expected probability degree of the grey numbers.

Definition 10:

Let \(\otimes_{1} = \bigcup\nolimits_{i = 1}^{n} {[a_{i} ,b_{i} ]}\) and \(\otimes_{2} = \bigcup\nolimits_{j = 1}^{m} {[c_{j} ,d_{j} ]}\) be two EGNs, \(\otimes_{1} , \otimes_{2} \in R( \otimes )\). The expected probability degree of \(\otimes_{1}\) against \(\otimes_{2}\) is defined as follows:

Definition 11:

Let \(\otimes_{1} = \bigcup\nolimits_{i = 1}^{n} {[a_{i} ,b_{i} ]}\) and \(\otimes_{2} = \bigcup\nolimits_{j = 1}^{m} {[c_{j} ,d_{j} ]}\) be any two EGNs, \(\otimes_{1} , \otimes_{2} \in R( \otimes )\). The order relationship between these EGNs is defined as follows:

(1) If \(P( \otimes_{1} \ge \otimes_{2} ) < 0.5\), then \(\otimes_{1}\) is less than \(\otimes_{2}\), which can be denoted as \(\otimes_{1} < \otimes_{2}\).

(2) If \(P( \otimes_{1} \ge \otimes_{2} ) = 0.5\), then \(\otimes_{1}\) is equal to \(\otimes_{2}\), which can be denoted as \(\otimes_{1} = \otimes_{2}\).

(3) If \(P( \otimes_{1} \ge \otimes_{2} ) > 0.5\), then \(\otimes_{1}\) is larger than \(\otimes_{2}\), which can be denoted as \(\otimes_{1} > \otimes_{2}\).

2.3 Grey random variables

Discrete grey random variables, called grey random variables in this paper, are a group of random variables made up of a finite set of EGNs. A grey random variable is denoted as \(\xi ( \otimes )\), and the probability distribution of \(\xi ( \otimes )\) is shown in Table 1.

In Table 1, \(\otimes_{i}\) is the ith value that would be taken by \(\xi ( \otimes )\), while \(n\) is the number of values that a grey random variable can have. \(p_{i}\) is the probability with respect to \(\otimes_{i}\), and \(\sum\nolimits_{i = 1}^{n} {p_{i} } = 1\). The probability distribution function can be denoted as \(f(\xi ( \otimes ) = \otimes_{i} ) = p_{i}\).

2.4 Regret theory

Loomes and Sugden [26] and Bell [2] introduced regret theory separately. They defined regret as a reflection of the difference in a decision-maker’s position resulting from choosing one of the unselected alternatives instead of the selected alternative. They defined rejoice as a reflection of the additional pleasure gained from knowing that the best alternative was selected.

In regret theory, these authors developed a modified utility function to measure the expected value of satisfaction derived from choosing alternative \(A\) and rejecting alternative \(B\). Let \(x\) and \(y\) be the consequences of choosing alternatives \(A\) and \(B\), respectively; then the modified utility function of achieving \(x\) can be denoted as

$$u(x,y) = v(x) + R\left( {v(x) - v(y)} \right).$$

Here, \(v(x)\) represents the utility value that the decision-maker would derive from consequence \(x\) if he or she experienced it without having to choose. \(R\left( {v(x) - v(y)} \right)\) indicates the regret-rejoice value, and the difference \(v(x) - v(y)\) is the loss/gain value of having chosen \(A\) rather than the forgone choice \(B\). The regret-rejoice function \(R( \cdot )\) is monotonically increasing and concave, with \(R^{\prime}( \cdot ) > 0\), \(R^{\prime\prime}( \cdot ) < 0\) and \(R(0) = 0\).

Regret theory was initially derived for pair-wise choices, and it has since been extended to general choice sets [31]. Let \(x_{1} ,x_{2} , \ldots ,x_{m}\) be the consequences of choosing alternatives \(A_{1} ,A_{2} , \ldots ,A_{m}\), respectively. \(A_{i}\) is the ith alternative, and \(x_{i}\) is the consequence of \(A_{i}\). The modified utility function of achieving \(x_{i}\) can be denoted as

$$u_{i} = v(x_{i} ) + R\left( {v(x_{i} ) - v(x^{*} )} \right).$$

Here, \(x^{*} = \hbox{max} \{ x_{i} \left| {i = 1,2, \ldots ,m} \right.\}\). \(R\left( {v(x_{i} ) - v(x^{*} )} \right)\) indicates the regret value, which is always non-positive. Rational decision-makers choose the optimal alternative by maximizing their expected modified utility of all possible alternatives.
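The choice-set form of the modified utility can be illustrated with a short Python sketch. It assumes the exponential regret-rejoice function \(R(\Delta v) = 1 - \exp ( - \delta \Delta v)\) that the paper adopts later in Eq. (15); the default \(\delta = 0.3\), the square-root utility, and the sample consequences are hypothetical values chosen only for the demonstration.

```python
import math
from typing import Callable, List, Sequence


def regret_rejoice(delta_v: float, delta: float = 0.3) -> float:
    """Exponential regret-rejoice function; delta = 0.3 is an illustrative choice."""
    return 1.0 - math.exp(-delta * delta_v)


def modified_utilities(
    consequences: Sequence[float],
    v: Callable[[float], float],
    regret: Callable[[float], float] = regret_rejoice,
) -> List[float]:
    """Return v(x_i) + R(v(x_i) - v(x*)) for every consequence x_i."""
    utilities = [v(x) for x in consequences]
    # v is increasing, so the utility of the best consequence is the largest one.
    best = max(utilities)
    return [u + regret(u - best) for u in utilities]


if __name__ == "__main__":
    values = modified_utilities([4.0, 9.0, 16.0], v=lambda x: x ** 0.5)
    for x, u in zip([4.0, 9.0, 16.0], values):
        print(f"x = {x:5.1f}  modified utility = {u:.4f}")
```

The best consequence keeps its plain utility because its regret term is zero, while every other alternative is penalized by a negative regret value.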

3 Grey stochastic MCDM approach based on regret theory and TOPSIS

This section introduces the grey stochastic MCDM approach based on regret theory and TOPSIS.

3.1 Description of the decision-making problem

Consider the following grey stochastic MCDM problem: assume that \(A = \{ a_{1} ,a_{2} , \ldots ,a_{m} \}\) is a discrete alternative set of \(m\) possible alternatives, and that \(C = \{ c_{1} ,c_{2} , \ldots ,c_{n} \}\) is a set of \(n\) criteria. The weighted vector of the criteria is \(W = (w_{1} ,w_{2} , \ldots ,w_{n} )\), where \(w_{j} \in [ 0 , 1 ] { }\) and \(\sum\nolimits_{j = 1}^{n} {w_{j} } = 1\).

Due to the uncertainty of the decision-making environment, each criterion may take one of several possible statuses. Let \(\varTheta_{j} = (\theta_{1} ,\theta_{2} , \ldots ,\theta_{{l_{j} }} )\) be the possible statuses of the criterion \(c_{j}\), and let \(p_{j}^{t} = [p_{j}^{Lt} ,p_{j}^{Ut} ]\) be the interval probability of the status \(\theta_{t} \, (1 \le t \le l_{j} )\) of the criterion \(c_{j}\), where \(0 \le p_{j}^{Lt} \le p_{j}^{Ut} \le 1\), \(\sum\nolimits_{t = 1}^{{l_{j} }} {p_{j}^{Lt} } \le 1\) and \(\sum\nolimits_{t = 1}^{{l_{j} }} {p_{j}^{Ut} } \ge 1\). Suppose that the characteristics of the alternative \(a_{i}\) with respect to \(c_{j}\) are represented by the EGN \(\otimes u_{ij}\), the value of which under the t-th status is denoted as \(\otimes u_{ij}^{t} = \bigcup\nolimits_{k = 1}^{l} {[a_{ijk}^{t} ,b_{ijk}^{t} ]}\), where \(a_{ij1}^{t} \le b_{ij1}^{t} < a_{ij2}^{t} \le b_{ij2}^{t} < \cdots < a_{ijl}^{t} \le b_{ijl}^{t}\). The grey stochastic decision matrix can be represented as \(R^{t} = \left( { \otimes u_{ij}^{t} } \right)_{m \times n}\). The task is to rank the alternatives according to this information.

3.2 Decision-making procedure

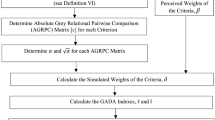

For the aforementioned grey stochastic MCDM problem, the solving procedure can be summarized as follows.

Step 1: Normalize the decision matrix.

In order to eliminate the influence of different dimensions of physical quantity, the decision matrix needs to be normalized so as to transform the various criteria values into comparable values. The criteria are normally classified into two types: criteria of the maximizing type and criteria of the minimizing type. If the criteria are of the maximizing type, the transforming formula is as follows:

$$r_{ij}^{t} = \frac{{ \otimes u_{ij}^{t} }}{{b_{ijk}^{t(max)} }} = \bigcup\limits_{k = 1}^{l} {\left[ {\frac{{a_{ijk}^{t} }}{{b_{ijk}^{t(max)} }},\frac{{b_{ijk}^{t} }}{{b_{ijk}^{t(max)} }}} \right]} .$$ (8)

Here, \(b_{ijk}^{t(max)} = \mathop {\hbox{max} }\nolimits_{1 \le k \le l,1 \le i \le m} b_{ijk}^{t}\).

If the criteria are of the minimizing type, the formula is as follows:

$$r_{ij}^{t} = \frac{{a_{ijk}^{t(\hbox{min} )} }}{{ \otimes u_{ij}^{t} }} = \bigcup\limits_{k = 1}^{l} {\left[ {\frac{{a_{ijk}^{t(\hbox{min} )} }}{{b_{ijk}^{t} }},\frac{{a_{ijk}^{t(\hbox{min} )} }}{{a_{ijk}^{t} }}} \right]} .$$ (9)

Here, \(a_{ijk}^{t(\hbox{min} )} = \mathop {\hbox{min} }\nolimits_{1 \le k \le l,1 \le i \le m} a_{ijk}^{t}\).

The normalized decision matrix is denoted by \(N^{t} = \left( {r_{ij}^{t} } \right)_{m \times n}\), where \(r_{ij}^{t} = \bigcup\nolimits_{k = 1}^{l} {[\underline{r}_{ijk}^{t} ,\overline{r}_{ijk}^{t} ]}\).
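A minimal Python sketch of the normalization in Eqs. (8) and (9) follows. It handles one criterion under one status, that is, a single column of the decision matrix, and assumes all criteria values are strictly positive. The names `normalize_benefit` and `normalize_cost` and the sample column are illustrative assumptions.

```python
from typing import List, Tuple

Interval = Tuple[float, float]
EGN = List[Interval]  # component intervals [a_k, b_k]; (a, a) is a crisp value


def normalize_benefit(column: List[EGN]) -> List[EGN]:
    """Eq. (8): divide every bound by the largest upper bound in the column."""
    b_max = max(b for egn in column for _, b in egn)
    return [[(a / b_max, b / b_max) for a, b in egn] for egn in column]


def normalize_cost(column: List[EGN]) -> List[EGN]:
    """Eq. (9): divide the smallest lower bound in the column by every bound.

    Taking reciprocals reverses the order of the component intervals, so each
    result is sorted to keep the components in ascending order.
    """
    a_min = min(a for egn in column for a, _ in egn)
    return [sorted((a_min / b, a_min / a) for a, b in egn) for egn in column]


if __name__ == "__main__":
    # Hypothetical column: three alternatives evaluated on one criterion.
    column = [[(2.0, 2.0), (4.0, 6.0)], [(3.0, 5.0)], [(8.0, 8.0)]]
    print(normalize_benefit(column))
    print(normalize_cost(column))
```

After either transformation all bounds lie in \((0,1]\), with the value 1 attained by the best bound in the column.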

Step 2: Identify the ideal point.

Identify the ideal point \(I = (I_{1}^{ + } ,I_{2}^{ + } , \ldots ,I_{n}^{ + } )\). Let \(I_{j}^{ + } = (r_{j}^{1 + } ,r_{j}^{2 + } , \ldots ,r_{j}^{{l_{j} + }} )\) be the ideal point related to criterion \(c_{j}\), and \(1 \le t \le l_{j}\); then \(r_{j}^{t + } = [\underline{r}_{j}^{t + } ,\overline{r}_{j}^{t + } ]\) can be calculated according to the following formula:

$$\underline{r}_{j}^{t + } = \overline{r}_{j}^{t + } = \hbox{max} \{ \overline{r}_{ijk}^{t} \left| {1 \le i \le m,1 \le k \le l} \right.\} ,\quad 1 \le j \le n,1 \le t \le l_{j} .$$ (10)

Step 3: Calculate the utility values and regret values concerning the criteria.

(a) The utility function should be built before calculating the criteria’s utility values. Due to the decision-maker’s risk aversion, the utility function \(v(x)\) is monotonically increasing and concave, with \(v^{\prime}(x) > 0\) and \(v^{\prime\prime}(x) < 0\). Here, a power function is used as the utility function of the criteria values:

$$v(x) = x^{\alpha } ,$$ (11)

where \(\alpha\) is the coefficient of risk aversion and \(0 < \alpha < 1\). The smaller the parameter \(\alpha\), the greater the decision-maker’s risk aversion.

For the criteria value \(r_{ij}^{t} = \bigcup\nolimits_{k = 1}^{l} {[\underline{r}_{ijk}^{t} ,\overline{r}_{ijk}^{t} ]}\), the actual criteria value \(x\) lies in the range \(\bigcup\nolimits_{k = 1}^{l} {[\underline{r}_{ijk}^{t} ,\overline{r}_{ijk}^{t} ]}\) and may follow various kinds of distribution [58]. Let \(f_{ijk}^{t} (x)\) be the probability density function on the k-th interval; then the utility value \(v_{ij}^{t}\) can be calculated with the following formula:

$$v_{ij}^{t} = \frac{1}{l}\sum\limits_{k = 1}^{l} {\int_{{\underline{r}_{ijk}^{t} }}^{{\overline{r}_{ijk}^{t} }} {v(x)f_{ijk}^{t} (x)dx} } , \, 1 \le i \le m,1 \le j \le n,1 \le t \le l_{j} ,1 \le k \le l.$$ (12)

Here, two kinds of distribution are taken into consideration.

(1) Uniform distribution

Uniform distribution is one of the most common distributions. For a grey random variable \(x\) following uniform distribution, the probability density function is

$$f_{ijk}^{t} (x) = \left\{ \begin{array}{ll} \frac{1}{{\overline{r}_{ijk}^{t} - \underline{r}_{ijk}^{t} }},&\quad \underline{r}_{ijk}^{t} \le x \le \overline{r}_{ijk}^{t} \\ 0,&\quad {\text{otherwise}} \end{array} \right.\quad 1 \le i \le m,1 \le j \le n,1 \le t \le l_{j} ,1 \le k \le l.$$ (13)

(2) Normal distribution

Normal distribution is extremely important in statistics and is often used in the natural and social sciences for real-valued random variables with unknown distributions [5]. For a grey random variable \(x\) following normal distribution, the probability density function is:

$$f_{ijk}^{t} (x) = \left\{ \begin{array}{ll} \frac{1}{{\sqrt {2\pi } \sigma_{ijk}^{t} }}\exp \left[ { - \frac{{(x - \mu_{ijk}^{t} )^{2} }}{{2(\sigma_{ijk}^{t} )^{2} }}} \right],&\quad \underline{r}_{ijk}^{t} \le x \le \overline{r}_{ijk}^{t} \\ 0,&\quad {\text{otherwise}} \end{array} \right.\quad 1 \le i \le m,1 \le j \le n,1 \le t \le l_{j} ,1 \le k \le l.$$ (14)

Here, the mean value is \(\mu_{ijk}^{t} = ( {\underline{r}_{ijk}^{t} + \overline{r}_{ijk}^{t} } ) / 2\), and the standard deviation is \(\sigma_{ijk}^{t} = ( {\overline{r}_{ijk}^{t} - \underline{r}_{ijk}^{t} } ) / 6\).
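The utility value in Eq. (12) can be evaluated as in the following Python sketch, which uses the closed-form integral of \(x^{\alpha }\) for the uniform density of Eq. (13) and Simpson's rule for the normal density of Eq. (14). Two points are assumptions of this sketch rather than statements from the paper: a degenerate component with equal bounds is treated as a point mass with utility \(a^{\alpha }\), since the densities are undefined for zero-length intervals, and the number of Simpson steps is arbitrary. Criteria values are assumed to be normalized and non-negative.

```python
import math
from typing import List, Tuple

EGN = List[Tuple[float, float]]


def utility_uniform(egn: EGN, alpha: float) -> float:
    """Eq. (12) with the uniform density of Eq. (13), in closed form."""
    total = 0.0
    for lo, hi in egn:
        if hi == lo:
            total += lo ** alpha  # assumption: crisp value treated as a point mass
        else:
            total += (hi ** (alpha + 1) - lo ** (alpha + 1)) / ((alpha + 1) * (hi - lo))
    return total / len(egn)


def utility_normal(egn: EGN, alpha: float, steps: int = 200) -> float:
    """Eq. (12) with the normal density of Eq. (14), via Simpson's rule.

    The density is cut off at three standard deviations and not renormalized,
    so it integrates to about 0.9973 on each interval.
    """
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    total = 0.0
    for lo, hi in egn:
        if hi == lo:
            total += lo ** alpha  # assumption: crisp value treated as a point mass
            continue
        mu = (lo + hi) / 2.0
        sigma = (hi - lo) / 6.0

        def integrand(x: float) -> float:
            pdf = math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / (math.sqrt(2.0 * math.pi) * sigma)
            return (x ** alpha) * pdf

        h = (hi - lo) / steps
        acc = integrand(lo) + integrand(hi)
        for i in range(1, steps):
            acc += (4 if i % 2 else 2) * integrand(lo + i * h)
        total += acc * h / 3.0
    return total / len(egn)


if __name__ == "__main__":
    r = [(0.25, 0.25), (0.4, 0.6)]  # hypothetical normalized criteria value
    print(utility_uniform(r, alpha=0.5), utility_normal(r, alpha=0.5))
```

Because the truncated normal density carries slightly less than unit mass, the normal-based utility of an interval is a little lower than the utility of its midpoint.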

(b) The regret-rejoice function \(R(\Delta v)\) is constructed to determine the regret values concerning the criteria; it is monotonically increasing and concave with \(R^{\prime}( \cdot ) > 0\), \(R^{\prime\prime}( \cdot ) < 0\) and \(R(0) = 0\). \(R(\Delta v)\) can be represented as follows:

$$R(\Delta v) = 1 - \exp ( - \delta \Delta v).$$ (15)

Here, \(\delta\) is the regret aversion coefficient, and \(\delta > 0\). The larger the value of \(\delta\), the stronger the decision-maker’s regret aversion. \(\Delta v\) represents the difference between the utility values of two alternatives. When \(R(\Delta v) > 0\), \(R(\Delta v)\) represents the rejoice value; conversely, \(R(\Delta v)\) represents the regret value when \(R(\Delta v) < 0\).

Compared with the ideal point, the regret value of alternative \(a_{i}\) with respect to \(c_{j}\) under the t-th status can be calculated as follows:

$$R_{ij}^{t} = 1 - \exp \left[ { - \delta \left( {v_{ij}^{t} - v_{j}^{t + } } \right)} \right], \, 1 \le i \le m,1 \le j \le n,1 \le t \le l_{j} .$$ (16)

Here, \(v_{j}^{t + } = \int_{{\underline{r}_{j}^{t + } }}^{{\overline{r}_{j}^{t + } }} {v(x)f_{j}^{t + } (x)dx} = \left( {\overline{r}_{j}^{t + } } \right)^{\alpha }\) and \(v_{ij}^{t} \le v_{j}^{t + }\); thus \(R_{ij}^{t} \le 0\), and \(R_{ij}^{t}\) represents the regret value.

-

(a)

-

Step 4:

Calculate the overall perceived utility values of alternatives.

The perceived utility values of the alternatives can be calculated on the basis of Step 3. Each perceived utility value is the sum of the corresponding utility value and regret value. Let \(u_{ij}^{t}\) be the perceived utility value of alternative \(a_{i}\) with respect to \(c_{j}\) under the t-th status; then

$$u_{ij}^{t} = v_{ij}^{t} + R_{ij}^{t} , \quad 1 \le i \le m,\ 1 \le j \le n,\ 1 \le t \le l_{j} .$$ (17)

The overall perceived utility value of alternative \(a_{i}\) with respect to \(c_{j}\) can be calculated as follows:

$$u_{ij} = \sum\limits_{t = 1}^{l_{j}} u_{ij}^{t} \overline{p}_{j}^{t} .$$ (18)

Here, \(\overline{p}_{j}^{t} = [\overline{p}_{j}^{Lt} ,\overline{p}_{j}^{Ut} ]\) is the more precise interval probability, whose bounds are calculated by

$$\overline{p}_{j}^{Lt} = \max \left( p_{j}^{Lt} ,\ 1 - \sum\limits_{t^{\prime} = 1,\, t^{\prime} \ne t}^{l_{j}} p_{j}^{Ut^{\prime}} \right),$$ (19)

$$\overline{p}_{j}^{Ut} = \min \left( p_{j}^{Ut} ,\ 1 - \sum\limits_{t^{\prime} = 1,\, t^{\prime} \ne t}^{l_{j}} p_{j}^{Lt^{\prime}} \right).$$ (20)

The overall perceived utility matrix is denoted by \(U = (u_{ij} )_{m \times n}\), where \(u_{ij} = [\underline{u}_{ij} ,\overline{u}_{ij} ]\).
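The refinement in Eqs. (19) and (20) and the aggregation in Eq. (18) can be sketched in a few lines. The function names and the sample utilities are hypothetical, and the interval product uses ordinary interval arithmetic (a scalar times an interval), which is an assumption about how Eq. (18) is evaluated. The probability intervals are those given in Example 1.

```python
def refine_interval_probabilities(intervals):
    """Apply Eqs. (19) and (20) to a list of (lower, upper) probability intervals."""
    total_low = sum(low for low, _ in intervals)
    total_up = sum(up for _, up in intervals)
    refined = []
    for low, up in intervals:
        # Sum of the other statuses' upper (resp. lower) bounds.
        others_up = total_up - up
        others_low = total_low - low
        refined.append((max(low, 1.0 - others_up), min(up, 1.0 - others_low)))
    return refined

def overall_perceived_utility(utilities, refined):
    """Eq. (18) with interval probabilities; returns (lower, upper)."""
    lower = sum(min(u * lo, u * up) for u, (lo, up) in zip(utilities, refined))
    upper = sum(max(u * lo, u * up) for u, (lo, up) in zip(utilities, refined))
    return lower, upper

probabilities = [(0.3, 0.5), (0.4, 0.9), (0.1, 0.5)]  # P1, P2, P3 from Example 1
refined = refine_interval_probabilities(probabilities)
print([(round(lo, 3), round(up, 3)) for lo, up in refined])

sample_utilities = [0.8, 0.7, 0.6]  # hypothetical u_ij^t values, one per status
print(overall_perceived_utility(sample_utilities, refined))
```

With these inputs the refined intervals agree with the values \([0.3,0.5]\), \([0.4,0.6]\), and \([0.1,0.3]\) reported in the illustrative examples.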

-

Step 5:

Prioritize the alternatives according to the overall perceived utility intervals.

Identify the positive ideal solution \(U^{ + }\) and the negative ideal solution \(U^{ - }\) of the overall perceived utility intervals as follows:

$$U^{ + } = (u_{1}^{ + } ,u_{2}^{ + } , \ldots ,u_{n}^{ + } ),\; U^{ - } = (u_{1}^{ - } ,u_{2}^{ - } , \ldots ,u_{n}^{ - } ).$$

The ideal solutions are chosen according to the following formulas:

$$u_{j}^{ + } = \max\limits_{1 \le i \le m} u_{ij} , \quad 1 \le j \le n,$$ (21)

$$u_{j}^{ - } = \min\limits_{1 \le i \le m} u_{ij} , \quad 1 \le j \le n.$$ (22)

Using Eq. (3), calculate the distances \(d_{i}^{ + }\) and \(d_{i}^{ - }\) from each overall perceived utility interval to the positive ideal solution and the negative ideal solution, respectively:

$$d_{i}^{ + } = \sum\limits_{j = 1}^{n} w_{j} d(u_{ij} ,u_{j}^{ + } ) ,$$ (23)

$$d_{i}^{ - } = \sum\limits_{j = 1}^{n} w_{j} d(u_{ij} ,u_{j}^{ - } ) .$$ (24)

Then, estimate the relative closeness with the following formula:

$$C_{i} = \frac{d_{i}^{ - }}{d_{i}^{ - } + d_{i}^{ + }} .$$ (25)

The larger \(C_{i}\) is, the better alternative \(a_{i}\) is.
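The ranking step can be sketched as below. Two ingredients are assumptions: Eq. (3) is not reproduced in this part of the paper, so `interval_distance` is only a placeholder distance between interval endpoints, and intervals are ordered by their midpoints when taking the maximum and minimum in Eqs. (21) and (22). All names and the sample data are hypothetical.

```python
import math

def interval_distance(a, b):
    # Placeholder for Eq. (3) (assumption): root-mean-square endpoint difference.
    return math.sqrt(((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) / 2.0)

def midpoint(interval):
    return (interval[0] + interval[1]) / 2.0

def topsis_closeness(U, weights, distance=interval_distance):
    """U[i][j] is the interval u_ij; returns the relative closeness C_i of Eq. (25)."""
    n = len(weights)
    # Eqs. (21) and (22): per-criterion ideal intervals, ordered by midpoint (assumption).
    best = [max((row[j] for row in U), key=midpoint) for j in range(n)]
    worst = [min((row[j] for row in U), key=midpoint) for j in range(n)]
    closeness = []
    for row in U:
        d_plus = sum(w * distance(u, b) for w, u, b in zip(weights, row, best))    # Eq. (23)
        d_minus = sum(w * distance(u, b) for w, u, b in zip(weights, row, worst))  # Eq. (24)
        closeness.append(d_minus / (d_minus + d_plus))
    return closeness

# Hypothetical overall perceived utility intervals: 2 alternatives, 2 criteria.
U = [[(0.6, 0.9), (0.5, 0.8)],
     [(0.3, 0.5), (0.2, 0.4)]]
print(topsis_closeness(U, weights=[0.4, 0.6]))
```

If all alternatives coincide on every criterion, both distances are zero and Eq. (25) is undefined; the sketch does not handle that case.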

4 Illustrative examples

This section uses two examples to perform a detailed and precise demonstration of the proposed approach. The first example shows the use of the proposed approach with interval grey numbers, which can be considered a special case of EGNs. The second example demonstrates the application of the proposed approach with general EGNs. In addition to validating the effectiveness of the proposed method, we also perform comparison analyses.

4.1 Illustration of the proposed approach

To demonstrate the use of the proposed approach with interval grey numbers, consider the problem of evaluating companies for investment decisions [20].

Example 1

An investment bank is planning to invest in three companies denoted as \(A = \{ a_{1} ,a_{2} ,a_{3} \}\). Three criteria are taken into account: \(c_{1}\), annual product income; \(c_{2}\), social benefit; and \(c_{3}\), environmental pollution degree. The criteria weight vector is \(W = (0.1,0.2,0.7)\). All three companies have three possible statuses: good, \(\theta_{1}\); fair, \(\theta_{2}\); and poor, \(\theta_{3}\). The probability of each status is expressed as an interval probability, with values \(P_{1} = [0.3,0.5]\), \(P_{2} = [0.4,0.9]\), and \(P_{3} = [0.1,0.5]\). The criteria values of each alternative take the form of interval grey numbers, and the grey random variable follows a normal distribution. Table 2 lists the associated assessment values. The task is to choose the best alternative according to the information provided.

The following procedure yields the most desirable alternative.

-

Step 1:

Normalize the decision matrix.

In Example 1, \(c_{1}\) and \(c_{2}\) are of the maximizing type, while \(c_{3}\) is of the minimizing type. Applying Eqs. (8) and (9) yields the normalized decision matrix, as shown in Table 3.

Table 3 The normalized decision matrix

-

Step 2:

Identify the ideal point.

Applying Eq. (10) to the data shown in Table 3 yields the absolute ideal point \(I = (I_{1}^{ + } ,I_{2}^{ + } , \ldots ,I_{n}^{ + } )\). Here, \(I_{j}^{ + } = (r_{j}^{1 + } ,r_{j}^{2 + } , \ldots ,r_{j}^{{l_{j} + }} )\), and \(r_{j}^{t + } = [\underline{r}_{j}^{t + } ,\overline{r}_{j}^{t + } ] = [1,1] \, (1 \le j \le n,1 \le t \le l_{j} )\).

-

Step 3:

Calculate the utility values and regret values concerning the criteria.

Calculating the utility values and regret values concerning the criteria requires consideration of two parameters, namely the risk aversion coefficient and the regret aversion coefficient. According to Tversky and Kahneman [40], the coefficient of risk aversion is \(\alpha = 0.88\). Assume the regret aversion coefficient is \(\delta = 0.3\); other values of \(\delta\) will be discussed later. The utility values and regret values concerning the criteria can be determined using Eqs. (11), (12), (14), and (16). For example, \(v_{11}^{1} = 0.860\), \(R_{11}^{1} = - 0.043\), \(v_{21}^{1} = 0.982\), and \(R_{21}^{1} = - 0.005\). All criteria values are compared with the absolute ideal point, so the regret values concerning the criteria are non-positive.
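As a quick arithmetic check of Eq. (16) against the values quoted above, take \(\delta = 0.3\) and the ideal utility \(v_{1}^{1 + } = 1^{0.88} = 1\):

$$R_{11}^{1} = 1 - \exp \left[ - 0.3(0.860 - 1) \right] = 1 - e^{0.042} \approx - 0.043, \qquad R_{21}^{1} = 1 - \exp \left[ - 0.3(0.982 - 1) \right] = 1 - e^{0.0054} \approx - 0.005.$$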

-

Step 4:

Calculate the overall perceived utility values of alternatives.

By applying Eq. (17), the utility values and regret values calculated above can be added to get the perceived utility values for the alternatives, which are shown in Table 4.

Table 4 The perceived utility values matrix

More precise interval probabilities can be found using Eqs. (19) and (20), with the results \(\overline{p}_{1} = [0.3,0.5]\), \(\overline{p}_{2} = [0.4,0.6]\), and \(\overline{p}_{3} = [0.1,0.3]\). Table 5 shows the overall perceived utility values calculated using Eq. (18).

Table 5 The overall perceived utility values matrix

-

Step 5:

Prioritize the alternatives according to the overall perceived utility intervals.

First, identify the positive ideal solution \(U^{ + }\) and the negative ideal solution \(U^{ - }\) of the overall perceived utility intervals:

$$u_{1}^{ + } = [0.751,1.312],\;u_{2}^{ + } = [0.719,1.267],\; u_{3}^{ + } = [0.577,0.989],$$

$$u_{1}^{ - } = [0.659,1.154],\;u_{2}^{ - } = [0.584,1.024],\; u_{3}^{ - } = [0.336,0.647].$$

Next, calculate the distances \(d_{i}^{ + }\) and \(d_{i}^{ - }\) from each overall perceived utility interval to the positive ideal solution and the negative ideal solution, respectively, using Eqs. (23) and (24):

$$d_{1}^{ + } = 0.218,\;d_{2}^{ + } = 0.113,\;d_{3}^{ + } = 0.052,$$

$$d_{1}^{ - } = 0.042,\;d_{2}^{ - } = 0.148,\;d_{3}^{ - } = 0.207.$$

Finally, apply Eq. (25) to obtain the relative closeness:

$$C_{1} = 0.160,\;C_{2} = 0.567,\;C_{3} = 0.799.$$

Since \(C_{3} > C_{2} > C_{1}\), the ranking is \(a_{3} > a_{2} > a_{1}\), and the best alternative is \(a_{3}\).

The value of coefficient \(\delta\) reflects the degree of the decision-makers’ regret aversion. Table 6 shows the relative closeness and ranking results using different \(\delta\) values.

Table 6 The relative closeness and ranking results using different \(\delta\) values

Table 6 shows that the ranking results remain the same when different \(\delta\) values are used. However, in some cases the ranking results may change as the \(\delta\) value changes.

Example 2

Assume that the assessment values of the alternatives under each criterion in Example 1 take the form of general EGNs, and that the grey random variable follows a uniform distribution. The associated assessment values are shown in Table 7.

The following procedure will yield the most desirable alternative.

-

Step 1:

Normalize the decision matrix.

In Example 2, \(c_{1}\) and \(c_{2}\) are of the maximizing type, while \(c_{3}\) is of the minimizing type. Applying Eqs. (8) and (9) yields the normalized decision matrix, as shown in Table 8.

Table 8 The normalized decision matrix

-

Step 2:

Identify the ideal point.

The absolute ideal point \(I = (I_{1}^{ + } ,I_{2}^{ + } , \ldots ,I_{n}^{ + } )\) can be obtained from the data shown in Table 8 by applying Eq. (10). Here, \(I_{j}^{ + } = (r_{j}^{1 + } ,r_{j}^{2 + } , \ldots ,r_{j}^{{l_{j} + }} )\) and \(r_{j}^{t + } = [\underline{r}_{j}^{t + } ,\overline{r}_{j}^{t + } ] = [1,1] \, (1 \le j \le n,1 \le t \le l_{j} )\).

-

Step 3:

Calculate the utility values and regret values concerning the criteria.

The parameters used in this example are again \(\alpha = 0.88\) and \(\delta = 0.3\). The utility values and regret values concerning the criteria can be obtained using Eqs. (11), (12), (13), and (16). For example, \(v_{11}^{1} = 0.807\), \(R_{11}^{1} = - 0.060\), \(v_{21}^{1} = 0.752\), and \(R_{21}^{1} = - 0.078\). All the criteria values are compared with the absolute ideal point, so the regret values concerning the criteria are non-positive.

-

Step 4:

Calculate the overall perceived utility values of alternatives.

By applying Eq. (17), the utility values and regret values calculated above are added to obtain the perceived utility values of the alternatives, which are shown in Table 9.

Table 9 The perceived utility values matrix

As in Example 1, the more precise interval probabilities are \(\overline{p}_{1} = [0.3,0.5]\), \(\overline{p}_{2} = [0.4,0.6]\), and \(\overline{p}_{3} = [0.1,0.3]\). The overall perceived utility values are calculated using Eq. (18), as shown in Table 10.

Table 10 The overall perceived utility values matrix

-

Step 5:

Prioritize the alternatives according to the overall perceived utility intervals.

First, identify the positive ideal solution \(U^{ + }\) and the negative ideal solution \(U^{ - }\) of the overall perceived utility intervals as follows:

$$u_{1}^{ + } = [0.716,1.251],\;u_{2}^{ + } = [0.737,1.288],\; u_{3}^{ + } = [0.238,0.450],$$

$$u_{1}^{ - } = [0.566,1.005],\;u_{2}^{ - } = [0.530,0.953],\; u_{3}^{ - } = [0.118,0.253].$$

Next, calculate the distances \(d_{i}^{ + }\) and \(d_{i}^{ - }\) from each overall perceived utility interval to the positive ideal solution and the negative ideal solution, respectively, using Eqs. (23) and (24):

$$d_{1}^{ + } = 0.057,\;d_{2}^{ + } = 0.135,\;d_{3}^{ + } = 0.056,$$

$$d_{1}^{ - } = 0.138,\;d_{2}^{ - } = 0.056,\;d_{3}^{ - } = 0.135.$$

Finally, apply Eq. (25) to obtain the relative closeness:

$$C^{\prime}_{1} = 0.708,\;C^{\prime}_{2} = 0.293,\;C^{\prime}_{3} = 0.707.$$

Since \(C^{\prime}_{1} > C^{\prime}_{3} > C^{\prime}_{2}\), the best alternative is \(a^{\prime}_{1}\).
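The closeness values of Example 2 can be reproduced from the reported distances with a few lines of Python. This is only a check of Eq. (25) on the rounded distances quoted above; the variable names are illustrative.

```python
# Reported distances for Example 2 (rounded to three decimals in the text).
d_plus = [0.057, 0.135, 0.056]
d_minus = [0.138, 0.056, 0.135]

# Eq. (25): relative closeness of each alternative.
closeness = [dm / (dm + dp) for dp, dm in zip(d_plus, d_minus)]

# Rank alternatives from largest to smallest closeness.
order = sorted(range(len(closeness)), key=lambda i: closeness[i], reverse=True)
ranking = ["a'_%d" % (i + 1) for i in order]

print([round(c, 3) for c in closeness])
print(" > ".join(ranking))
```

The first and third alternatives differ only in the third decimal, which suggests that the ranking between them may be sensitive to the parameter choice.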

Table 11 shows the relative closeness and ranking results using different \(\delta\) values.

Table 11 Relative closeness and ranking results using different \(\delta\) values

Table 11 shows that the ranking of \(a^{\prime}_{2}\) is certain, but whether \(a^{\prime}_{1}\) or \(a^{\prime}_{3}\) is the better alternative depends on the \(\delta\) value. When \(\delta = 0.1\), \(a^{\prime}_{3}\) is superior to \(a^{\prime}_{1}\), but the situation reverses when \(\delta \ge 0.2\). This shows that the decision-makers’ regret aversion exerts an impact on the ranking of the alternatives.

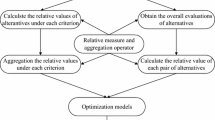

4.2 Comparison analysis and discussion

A comparison analysis with two existing methods is performed to verify the validity and feasibility of the proposed decision-making method. The first method combines prospect theory and VIKOR and, like the proposed approach, is based on bounded rationality. The second method uses the expected probability degree and is based on complete rationality.

-

1.

Comparison of the proposed approach with the method based on prospect theory and VIKOR

Li and Zhao [20] proposed a VIKOR method based on prospect theory to achieve a comprehensive look at the alternatives. After normalization, the prospect value function of the interval grey numbers was defined, and the prospect value of each alternative was calculated using all other alternatives as a reference point. After this step the positive ideal solution and the negative ideal solution can be easily identified. Finally, the \(S_{i} ( \otimes )\), \(R_{i} ( \otimes )\), and \(Q_{i} ( \otimes )\) values can be obtained as shown in Table 12.

Table 12 \(S_{i} ( \otimes )\), \(R_{i} ( \otimes )\), and \(Q_{i} ( \otimes )\) values

Since \(S_{3} ( \otimes ) < S_{ 2} ( \otimes ) < S_{ 1} ( \otimes )\), \(R_{3} ( \otimes ) < R_{ 2} ( \otimes ) < R_{ 1} ( \otimes )\), and \(Q_{3} ( \otimes ) < Q_{ 2} ( \otimes ) < Q_{ 1} ( \otimes )\), the definitive ranking is \(a_{3} > a_{2} > a_{1}\), and the best alternative is \(a_{3}\).

For the same example, Li and Zhao’s method [20] produces the same ranking of alternatives as our proposed method, which supports the validity of the method proposed in this paper. Although the two methods differ, both the method based on prospect theory and the proposed method based on regret theory take the decision-makers’ psychological behavior into account. One main difference is that decision-makers utilizing prospect theory need to give one or more reference points, which are not necessary when utilizing regret theory. In addition, the five parameters used in the prospect theory calculation formula are difficult to determine, whereas the regret theory calculation formula involves only two parameters, which makes it easier to use. Furthermore, the EGNs used in this paper are more powerful in expressing evaluation information than interval grey numbers. Thus, to some extent, the method proposed in this paper is more practical than the method proposed by Li and Zhao [20].

-

2.

Comparison of the proposed approach with the method based on expected probability degree

Wang et al. [47] proposed a grey stochastic MCDM method based on expected probability degree. For comparison, the interval probability should be transformed to the known probability by substituting each interval value with the mean value of its upper and lower limits and normalizing the values. After transformation, the probability values of the statuses in Example 1 are \(p_{1} = 0.364\), \(p_{2} = 0.455\), and \(p_{3} = 0.181\).
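The transformation described above (midpoint of each interval, then normalization) can be sketched as follows. The function name is illustrative. Running it on both the original intervals of Example 1 and the refined intervals from Eqs. (19) and (20) suggests that the reported values correspond to the refined intervals; the third value comes out as 0.182 rather than 0.181, presumably because the reported figures were adjusted to sum to 1.

```python
def midpoint_probabilities(intervals):
    """Replace each (lower, upper) interval by its midpoint and normalize to sum to 1."""
    mids = [(lo + up) / 2.0 for lo, up in intervals]
    total = sum(mids)
    return [m / total for m in mids]

original = [(0.3, 0.5), (0.4, 0.9), (0.1, 0.5)]  # P1, P2, P3 as given in Example 1
refined = [(0.3, 0.5), (0.4, 0.6), (0.1, 0.3)]   # after Eqs. (19) and (20)

from_original = [round(p, 3) for p in midpoint_probabilities(original)]
from_refined = [round(p, 3) for p in midpoint_probabilities(refined)]
print(from_original)
print(from_refined)
```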

In this method, the expected probability degrees of the alternatives under each criterion were calculated to form the expected probability degree judgment matrix. With respect to each criterion, the comprehensive judgment matrix of expected probability degrees was then established. Next, the comprehensive sorting value was determined with the calculation formula

$$\omega_{i} = \frac{\left( \sum\nolimits_{l = 1}^{m} \sum\nolimits_{k = 1}^{n} w_{k} \times E\left( P\left( x_{ik} > x_{lk} \right) \right) \right) + \frac{m}{2} - 1}{m \times \left( m - 1 \right)},$$

where \(E\left( {P\left( {x_{ik} > x_{lk} } \right)} \right)\) is the expected probability degree of the grey stochastic variable \(x_{ik}\) against \(x_{lk}\). The values are \(\omega_{1} = 0.291\), \(\omega_{2} = 0.360\), and \(\omega_{3} = 0.255\). Since \(\omega_{2} > \omega_{1} > \omega_{3}\), the ranking is \(a_{2} > a_{1} > a_{3}\), and the best alternative is \(a_{2}\).

The ranking obtained by the method based on the expected probability degree is clearly different from that obtained by the method based on regret theory proposed in this paper. The difference lies in the position of \(a_{3}\). When the method based on the expected probability degree is used, \(a_{3}\) is inferior to \(a_{2}\) and \(a_{1}\), but with the proposed method, the situation is reversed. Wang et al.’s method [47] is based on complete rationality using the expected probability degree, whereas the proposed method is based on bounded rationality using regret theory. The proposed method therefore takes decision-makers’ bounded rationality into account, which is an advantage over traditional decision-making methods based on complete rationality.

Compared with the existing methods, the proposed grey stochastic MCDM method based on regret theory and TOPSIS has the following advantages.

-

1.

The EGNs, combining both intervals and discrete sets of numbers, can express the evaluation information with more flexibility. They have a more powerful capability to express uncertainty due to missing information, and thus have a wider range of applications.

-

2.

The method proposed in this paper is based on bounded rationality, which fully considers the decision-makers’ psychological behavior. Compared with traditional decision-making methods based on complete rationality, the proposed method can address decision-making problems more reasonably and effectively.

-

3.

The proposed method is based on regret theory, which takes the decision-maker’s attitude of regret into account. Compared with prospect theory, regret theory does not use reference points, and the calculation formula includes fewer parameters. Therefore, the proposed method is more advantageous than prospect theory in practical application.

-

4.

The proposed method based on regret theory employs a new form of criteria values, EGNs, thus expanding the application of regret theory.

5 Conclusions

Stochastic MCDM methods are widely used in real-life decision-making processes, and the decision-making method based on regret theory is more congruent with people’s actual decision-making behavior. In this paper, we proposed a grey stochastic MCDM approach based on regret theory using EGNs to express criteria values. Regret theory was first used to obtain the utility value and regret value concerning the criteria, and then the perceived utility values of the alternatives were calculated. Next, the TOPSIS method was used to prioritize the alternatives according to the overall perceived utility intervals. Finally, two examples and comparison analyses were presented to demonstrate the feasibility and usability of the proposed method.

The proposed method makes several important contributions. First, dealing with stochastic MCDM problems with EGNs expands the research scope of MCDM. The proposed method also incorporates the decision-makers’ regrets into the decision-making model, which makes the method more applicable to real-world choices. Additionally, the proposed method expands the application of regret theory and provides a new method through which to solve stochastic MCDM problems.

It is worth noting that there are a considerable number of MCDM problems with criteria values that are expressed as grey and show randomness. Grey stochastic MCDM problems have a wide range of applications, such as investment, new product development project selection, medical care evaluation, supplier selection, and project risk assessment. Therefore, the study of grey stochastic MCDM problems has important practical applications.

In the future, we expect to investigate the generalized distance measures and aggregation operators of EGNs. Furthermore, we may apply our distance measures and aggregation operators to other grey stochastic MCDM methods based on bounded rationality. Meanwhile, we will also consider expanding the scope of application of our method.

References

Allais M (1953) Le comportement de l’homme rationnel devant le risque: critique des postulats et axiomes de l’ecole Americaine. Econometrica 21(4):503–546

Bell DE (1982) Regret in decision making under uncertainty. Oper Res 30(5):961–981

Bonferroni C (1950) Sulle medie multiple di potenze. Bollettino dell’Unione Matematica Italiana 5(3–4):267–270

Brans JP, Vincke PH, Mareschal B (1986) How to select and how to rank projects: the PROMETHEE method. Eur J Oper Res 24(2):228–238

Casella G, Berger RL (2001) Statistical inference, 2nd edn. Duxbury Press, Belmont

Chithambaranathan P, Subramanian N, Gunasekaran A, Palaniappan PLK (2015) Service supply chain environmental performance evaluation using grey based hybrid MCDM approach. Int J Prod Econ 166:163–176

Deng JL (1982) Control problems of grey systems. Syst Control Lett 1(5):288–294

Deng JL (1989) Introduction to grey system theory. J Grey Syst 1(1):1–24

Dolan JG (2010) Multi-criteria clinical decision support: a primer on the use of multiple criteria decision making methods to promote evidence-based, patient-centered healthcare. Patient Patient Centered Outcomes Res 3(4):229–248

Ellsberg D (1961) Risk, ambiguity, and the savage axioms. Q J Econ 75(4):643–669

Gómez-Limón JA, Arriaza M, Riesgo L (2003) An MCDM analysis of agricultural risk aversion. Eur J Oper Res 151(3):569–585

He DY (2007) Decision-making under the condition of probability interval by maximum entropy principle. Oper Res Manag Sci 16:74–78

Hwang CL, Yoon K (1981) Multiple attribute decision making: methods and applications. Springer, Berlin

Jiang ZZ, Fang SC, Fan ZP, Wang DW (2013) Selecting optimal selling format of a product in B2C online auctions with boundedly rational customers. Eur J Oper Res 226(1):139–153

Kahneman D, Tversky A (1979) Prospect theory: an analysis of decision under risk. Econometrica 47(2):263–292

Karsak E (2002) Distance-based fuzzy MCDM approach for evaluating flexible manufacturing system alternatives. Int J Prod Res 40(13):3167–3181

Kuang H, Kilgour DM, Hipel KW (2015) Grey-based PROMETHEE II with application to evaluation of source water protection strategies. Inf Sci 294:376–389

Laciana CE, Weber EU (2008) Correcting expected utility for comparisons between alternative outcomes: a unified parameterization of regret and disappointment. J Risk Uncertain 36(1):1–17

Li GD, Yamaguchi D, Nagai M (2007) A grey-based decision-making approach to the supplier selection problem. Math Comput Model 46(3–4):573–581

Li QS, Zhao N (2015) Stochastic interval-grey number VIKOR method based on prospect theory. Grey Syst Theory Appl 5(1):105–116

Lin YH, Lee PC, Ting HI (2008) Dynamic multi-attribute decision making model with grey number evaluations. Expert Syst Appl 35(4):1638–1644

Liu PD, Jin F (2012) Methods for aggregating intuitionistic uncertain linguistic variables and their application to group decision making. Inf Sci 205:58–71

Liu PD, Jin F, Zhang X, Su Y, Wang MH (2011) Research on the multi-attribute decision-making under risk with interval probability based on prospect theory and the uncertain linguistic variables. Knowl Based Syst 24:554–561

Liu PD, Liu ZM, Zhang X (2014) Some intuitionistic uncertain linguistic Heronian mean operators and their application to group decision making. Appl Math Comput 230:570–586

Liu SF, Dang YG, Fang ZG, Xie NM (2010) Grey systems theory and its applications. Science Press, Beijing

Loomes G, Sugden R (1982) Regret theory: an alternative theory of rational choice under uncertainty. Econ J 92(368):805–824

Okul D, Gencer C, Aydogan EK (2014) A method based on SMAA-Topsis for stochastic multi-criteria decision making and a real-world application. Int J Inf Technol Decis Mak 13(5):957–978

Opricovic S, Tzeng GH (2002) Multicriteria planning of post-earthquake sustainable reconstruction. Comput Aided Civil Infrastruct Eng 17(3):211–220

Özerol G, Karasakal E (2008) A parallel between regret theory and outranking methods for multicriteria decision making under imprecise information. Theor Decis 65(1):45–70

Peng JJ, Wang JQ, Wang J, Yang LJ, Chen XH (2015) An extension of ELECTRE to multi-criteria decision-making problems with multi-hesitant fuzzy sets. Inf Sci 307:113–126

Quiggin J (1994) Regret theory with general choice sets. J Risk Uncertain 8(2):153–165

Roy B (1991) The outranking approach and the foundations of ELECTRE methods. Theor Decis 31(1):49–73

Simon HA (1971) Administrative behavior: a study of decision-making process in administrative organization. Macmillan publishing co Inc, New York

Stanujkic D, Magdalinovic N, Jovanovic R, Stojanovic S (2012) An objective multi-criteria approach to optimization using MOORA method and interval grey numbers. Technol Econ Dev Econ 18(2):331–363

Su CM, Horng DJ, Tseng ML, Chiu ASF, Wu KJ, Chen HP (2015) Improving sustainable supply chain management using a novel hierarchical grey-DEMATEL approach. J Clean Prod. doi:10.1016/j.jclepro.2015.05.080

Tan CQ, Chen XH (2010) Intuitionistic fuzzy Choquet integral operator for multi-criteria decision making. Expert Syst Appl 37(1):149–157

Tan CQ, Ip WH, Chen XH (2014) Stochastic multiple criteria decision making with aspiration level based on prospect stochastic dominance. Knowl Based Syst 70:231–241

Tian ZP, Wang J, Wang JQ, Chen XH (2015) Multi-criteria decision-making approach based on grey linguistic weighted Bonferroni mean operator. Int Trans Oper Res. doi:10.1111/itor.12220

Tian ZP, Wang J, Zhang HY, Chen XH, Wang JQ (2015) Simplified neutrosophic linguistic normalized weighted Bonferroni mean operator and its application to multi-criteria decision-making problems. FILOMAT (In Press)

Tversky A, Kahneman D (1992) Advances in prospect theory: cumulative representation of uncertainty. J Risk Uncertain 5(4):297–323

Vaidogas ER, Sakenaite J (2011) Multi-attribute decision-making in economics of fire protection. Eng Econ 22(3):262–270

Wang J, Wang JQ, Zhang HY, Chen XH (2015) Multi-criteria decision-making based on hesitant fuzzy linguistic term sets: an outranking approach. Knowl Based Syst 86:224–236

Wang JQ, Li KJ, Zhang HY (2012) Interval-valued intuitionistic fuzzy multi-criteria decision-making approach based on prospect score function. Knowl Based Syst 27(1):119–125

Wang JQ, Wang DD, Zhang HY, Chen XH (2015) Multi-criteria group decision making method based on interval 2-tuple linguistic and Choquet integral aggregation operators. Soft Comput 19(2):389–405

Wang JQ, Wu JT, Wang J, Zhang HY, Chen XH (2014) Interval-valued hesitant fuzzy linguistic sets and their applications in multi-criteria decision-making problems. Inf Sci 288:55–72

Wang JQ, Wu JT, Wang J, Zhang HY, Chen XH (2015) Multi-criteria decision-making methods based on the Hausdorff distance of hesitant fuzzy linguistic numbers. Soft Comput. doi:10.1007/s00500-015-1609-5

Wang JQ, Zhang HY, Ren SC (2013) Grey stochastic multi-criteria decision-making approach based on expected probability degree. Scientia Iranica 20(3):873–878

Wang XF, Wang JQ, Deng SY (2014) Some geometric aggregation operators based on log-normally distributed random variables. Int J Comput Intell Syst 7(6):1096–1108

Wang XZ, Dong CR (2009) Improving generalization of fuzzy if-then rules by maximizing fuzzy entropy. IEEE Trans Fuzzy Syst 17(3):556–567

Wang XZ, Dong LC, Yan JH (2012) Maximum ambiguity based sample selection in fuzzy decision tree induction. IEEE Trans Knowl Data Eng 24(8):1491–1505

Wang XZ, He YL, Wang DD (2014) Non-naive bayesian classifiers for classification problems with continuous attributes. IEEE Trans Cybern 44(1):21–39

Wang XZ, Xing HJ, Li Y et al (2015) A study on relationship between generalization abilities and fuzziness of base classifiers in ensemble learning. IEEE Trans Fuzzy Syst 23(5):1638–1654

Yager RR (2008) Prioritized aggregation operators. Int J Approx Reason 48(1):263–274

Yager RR, Kreinovich V (1999) Decision making under interval probabilities. Int J Approx Reason 22(3):195–215

Yang F, Zhao FG, Liang L, Huang ZM (2014) SMAA-AD model in multicriteria decision-making problems with stochastic values and uncertain weights. Ann Data Sci 1(1):95–108

Yang YJ (2007) Extended grey numbers and their operations. In: Proceeding of 2007 IEEE international conference on fuzzy systems and intelligent services, man and cybernetics, Montreal, pp 2181–2186

Yu SM, Zhou H, Chen XH, Wang JQ (2015) A multi-criteria decision-making method based on Heronian mean operators under linguistic hesitant fuzzy environment. Asia Pac J Oper Res 32(5):1550035 (35 pages)

Zhang X, Fan ZP, Chen FD (2013) Method for risky multiple attribute decision making based on regret theory. Syst Eng Theory Pract 33(9):2313–2320

Zhang X, Fan ZP, Chen FD (2014) Risky multiple attribute decision making with regret aversion. J Syst Manag 23(1):111–117

Zhou H, Wang JQ, Zhang HY, Chen XH (2016) Linguistic hesitant fuzzy multi-criteria decision-making method based on evidential reasoning. Int J Syst Sci 47(2):314–327

Acknowledgments

The authors thank the editors and anonymous reviewers for their very helpful comments and suggestions. This work was supported by the National Natural Science Foundation of China (Nos. 71271218 and 71571193).

Cite this article

Zhou, H., Wang, Jq. & Zhang, Hy. Grey stochastic multi-criteria decision-making based on regret theory and TOPSIS. Int. J. Mach. Learn. & Cyber. 8, 651–664 (2017). https://doi.org/10.1007/s13042-015-0459-x

DOI: https://doi.org/10.1007/s13042-015-0459-x