Abstract

A degree-constrained minimum spanning tree (DCMST) problem involving any network aims to find the least weighted spanning tree of that network, subject to constraints on node degrees. In this paper, we first define a DCMST problem in an uncertain random network, where some weights are uncertain variables and others are random variables. We also introduce the concept of an ideal chance distribution for DCMST problem. In order to seek out the degree-constrained spanning tree (DCST) closest to the ideal chance distribution, an uncertain random programming model is formulated. An algorithm is presented to solve the DCMST problem. The effectiveness of our method and algorithm are exhibited by solving a numerical example.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The minimum spanning tree (MST) problem, aiming to find the least weight in a deterministic network, was first introduced by Borüvka (1926). Extending Borüvka’s work Narula and Ho (1980) aimed to find the MST of a deterministic network subject to certain constraints on each node. In a deterministic network, all the weights are crisp numbers. In the past decades, the DCMST problem involving deterministic networks has drawn great interest researchers among the world over and it has been applied in many fields such as transportation, communications and logistics, etc. Many efficient classical algorithms have been used to solve the DCMST problem, such as heuristic algorithm (Narula and Ho 1980; Volgenant 1989), ant colony optimization algorithm (Bau et al. 2008), evolutionary algorithm (Raidl 2000), and genetic algorithm (Zhou and Gen 1997; Mu and Zhou 2008), etc.

However in case of real network the weights of the DCMST are analyzed in a state of indeterminacy. In order to model indeterminacy in a network, the concept of a random network was first proposed by Frank and Hakimi (1965) for modeling communication network with random capacities. Knowles and Corne (2000) first investigated the DCMST problem with random weights. Subsequently Torkestani (2012, 2013) proposed an algorithm based on learning automata to solve DCMST problems and min-degree constrained MST problems in random network. Although random network has been well applied in working on non-deterministic DCMST problems, in reality indeterminacy present in networks cannot always be explained by randomness in all cases. Since in practice the network is inevitably affected by collisions, congestions and interferences. For the lack of statistical data about the weights when making decisions, the concept of random network can not be adopted fruitfully. In this case, we have to rely on the experts in order to obtain their belief degrees on the possible outcomes. As a breakthrough, for dealing with the lack of historical data on the weights, uncertain networks were first investigated by Liu (2010a) for modeling project scheduling problem where the duration of activities were uncertain variables. Later, Gao et al. (2015) investigated the distribution of the diameter and the edge-connectivity was discussed by Gao and Qin (2016) of uncertain graph. Besides, the shortest path problem with uncertain arcs was investigated by Gao (2011), the maximum flow problem of uncertain network was investigated by Han et al. (2014), the uncertain minimum cost flow problem was investigated by Ding (2014), Gao et al. (2016) proposed some uncertain models on railway transportation planning problem, and the related uncertain MST problems have been solved, for example, inverse MST problem was investigated by Zhang et al. (2013), quadratic MST problem was investigated by Zhou et al. (2014), path optimality conditions for MST problem was investigated by Zhou et al. (2015), and the DCMST problem was first introduced by Gao and Jia (2017) and proposed uncertain programming models.

In our daily life, uncertainty and randomness may coexist in a complex network. For example, some weights have no historical data while others may have enough statistical data to obtain their probability distributions. In order to deal with this phenomenon, the concept of uncertain random network was first proposed by Liu (2014) and many network optimization problems subsequently studied by many researchers. Using these concepts many uncertain random network optimization problems have been solved, for example, the MST problem studied by Sheng et al. (2017), the maximum flow problem studied by Sheng and Gao (2014), and the shortest path problem studied by Sheng and Gao (2016) etc. In this paper, we consider the DCMST problem for an uncertain random network in which some weights are uncertain variables and others are random variables. We will propose an ideal chance distribution and formulate an uncertain random programming model to find the DCMST.

The remainder of this paper is organized as follows. In Sect. 2, we review some preliminaries of uncertainty theory, chance theory and uncertain random network. In Sect. 3, we introduce a concept of ideal chance distribution of DCMST. In Sect. 4, an uncertain random programming model is formulated here to find the DCMST. In Sect. 5, a numerical example is given to illustrate its effectiveness. In Sect. 6, we give a brief summary of this paper.

2 Preliminary

In this section, we revise some basic concepts and properties of uncertainty theory, chance theory and uncertain random network.

2.1 Uncertain theory

For modelling belief degrees, uncertainty theory was founded by Liu (2007) and perfected by Liu (2009a) based on normality, duality, subadditivity, and product axioms. Uncertainty theory has become a branch of mathematics. In theory, uncertain process (Liu 2008), uncertain differential equation (Chen and Liu 2010; Yang and Yao 2016) and uncertain set theory (Liu 2010b) have been established. In practice, uncertain programming (Liu 2009b), uncertain inference (Gao et al. 2010), uncertain statistics (Liu 2010a), uncertain optimal control (Zhu 2010), and uncertain differential game (Gao 2013; Yang and Gao 2013, 2016), etc., have also developed quickly. Nowadays, uncertain theory has been applied to many areas such as economics (Yang and Gao 2017, 2016), Management (Gao et al. 2017; Gao and Yao 2015) and Finance (Chen and Gao 2013; Guo and Gao 2017).

Let \(\varGamma\) be a nonempty set and \(\mathcal {L}\) be a \(\sigma\)-algebra over \(\Gamma.\) Each element \(\Lambda \in \mathcal {L}\) is called an event. Liu (2007) defined an uncertain measure by the following axioms:

Axiom 1

(Normality Axiom) \(\mathcal {M}\{\Gamma \} = 1\) for the universal set \(\Gamma\).

Axiom 2

(Duality Axiom) \(\mathcal {M}\{\Lambda \} + \mathcal {M}\{\Lambda ^{c}\} = 1\) for any event \(\Lambda\).

Axiom 3

(Subadditivity Axiom) For every countable sequence of events \(\Lambda _{1}, \Lambda _{2}, \ldots\), we have

The triplet \((\Gamma ,\mathcal {L},\mathcal {M})\) is called an uncertainty space. Furthermore, Liu (2009a) defined a product uncertain measure by the fourth axiom:

Axiom 4

(Product Axiom) Let \((\Gamma _{k},\mathcal{L}_{k},\mathcal {M}_{k})\) be uncertainty spaces for \(k=1,2,\ldots\) The product uncertain measure \(\mathcal {M}\) is an uncertain measure satisfying

where \(\Lambda _{k}\) are arbitrarily chosen events form \(\mathcal {L} _{k}\) for \(k=1,2,\ldots ,\) respectively.

Definition 1

(Liu 2007) An uncertain variable is a measurable function \(\xi\) from an uncertainty space \((\Gamma ,\mathcal {L},\mathcal {M})\) to the set of real numbers, i.e., for any Borel set B of real numbers, the set

is an event.

The uncertainty distribution of an uncertain variable \(\xi\) is defined by \(\Phi (x)=\mathcal {M}\{\xi \le x\}\) for any real number x. For example, linear uncertain variables L(a, b) has the following uncertainty distribution as shown in Fig. 1,

and the zigzag uncertain variable Z(a, b, c) has the following uncertainty distribution as shown in Fig. 2,

An uncertainty distribution \(\Phi\) is said to be regular if its inverse function \(\Phi ^{-1}(\alpha )\) exists and is unique for each \(\alpha \in (0,1)\). It is clear that the linear uncertain variable and zigzag uncertain variable are regular, and their inverse uncertainty distributions are as follows,

Definition 2

(Liu 2009a) The uncertain variables \(\xi _1,\) \(\xi _2,\ldots , \xi _n\) are said to be independent if

for any Borel sets \(B_1, B_2,\ldots , B_n\) of real numbers.

It is usually assume that all uncertainty distributions in practical applications are regular. In the following, we will see the inverse uncertainty distribution \(\Phi ^{-1}(\alpha )\) has some good operational properties, which makes easier to obtain the solution of uncertain programming problems.

Theorem 1

(Liu 2010a) Let \(\xi _1,\xi _2,\ldots ,\xi _n\) be independent uncertain variables with regular uncertainty distributions \(\Phi _1, \Phi _2,\ldots , \Phi _n,\) respectively. If \(f(\xi _1,\xi _2,\ldots ,\xi _n)\) is strictly increasing with respect to \(\xi _1,\xi _2,\ldots ,\xi _m\) and strictly decreasing with respect to \(\xi _{m+1}, \xi _{m+2}, \ldots , \xi _n,\) then

has an inverse uncertainty distribution

Example 1

Let \(\xi _1,\xi _2,\ldots ,\xi _n\) be independent uncertain variables with regular uncertainty distributions \(\Phi _1, \Phi _2,\ldots , \Phi _n,\) respectively. Then

has an inverse uncertainty distribution

Definition 3

(Liu 2007) Let \(\xi\) be an uncertain variable. Then the expected value of \(\xi\) is defined by

provided that at least one of the two integrals is finite.

Theorem 2

(Liu 2007) Let \(\xi\) be an uncertain variable with uncertainty distribution \(\Phi\). Then

According to Theorem 2, the expected value of a linear uncertain variable L(a, b) is

and the expected value of zigzag uncertain variable Z(a, b, c) is

2.2 Chance theory

Chance theory was pioneered by Liu (2013a) for modelling complex systems with not only uncertainty but also randomness. In this theory, the concepts of uncertain random variable, chance measure and chance distribution were proposed. In addition, the concepts of expected value and variance of uncertain random variables were also proposed. The operational law of uncertain random variables was presented by Liu (2013b). As an extension, a law of large numbers for uncertain random variables was proved by Yao and Gao (2016). Uncertain random process was investigated by Gao and Yao (2015). In practice, uncertain random programming (Liu 2013b), uncertain random risk analysis (Liu and Ralescu 2014) have been established.

Let \((\Gamma , \mathcal {L} , \mathcal {M})\) be an uncertainty space and let \((\Omega , \mathcal {A},\Pr )\) be a probability space. Then the product \((\Gamma , \mathcal {L} , \mathcal {M})\times (\Omega , \mathcal {A}, \Pr )\) is called a chance space.

Definition 4

(Liu 2013a) Let \((\Gamma , \mathcal {L} , \mathcal {M})\times (\Omega , \mathcal {A}, \Pr )\) be a chance space, and \(\Theta \in \mathcal {L} \times \mathcal {A}\) be an event. Then the chance measure of \(\Theta\) is defined as

Theorem 3

(Liu 2013a) Let \((\Gamma , \mathcal {L} , \mathcal {M})\times (\Omega , \mathcal {A}, \Pr )\) be a chance space. Then

for any \(\Lambda \in \mathcal {L}\) and any \(A \in \mathcal {A}\). Especially, we have

Theorem 4

(Liu 2013a, Monotonicity Theorem) The chance measure is a monotone increasing set function. That is, for any events \(\Theta _1\) and \(\Theta _{2}\) with \(\Theta _1\le \Theta _{2}\), we have

Theorem 5

(Liu 2013a, Duality Theorem) The chance measure is self-dual. That is, for any event \(\Theta\), we have

Theorem 6

(Hou 2014, Subadditivity Theorem) The chance measure is subadditive. That is, for any countable sequence of events \(\Theta _1, \Theta _2, \ldots ,\) we have

Definition 5

(Liu 2013a) An uncertain random variable is a function \(\xi\) from a chance space \((\Gamma , \mathcal {L} , \mathcal {M})\times (\Omega , \mathcal {A}, \Pr )\) to the set of real numbers such that \(\{\xi \in B\}\) is an event in \(\mathcal{L} \times \mathcal {A}\) for any Borel set B of real numbers.

Definition 6

(Liu 2013a) Let \(\xi\) be an uncertain random variable. Then its chance distribution is defined by

for any \(x \in \mathfrak {R}.\)

Theorem 7

(Liu 2013b) Let \(\eta _{1}, \eta _{2}, \ldots , \eta _{m}\) be independent random variables with probability distributions \(\Psi _{1}, \Psi _{2}, \ldots \Psi _{m},\) and let \(\tau _{1}, \tau _{2}, \ldots , \tau _{n}\) be independent uncertain variables with regular uncertainty distributions \(\Upsilon _{1}, \Upsilon _{2}, \ldots , \Upsilon _{n}\), respectively. Assume \(f(\eta _{1}, \eta _{2}, \ldots , \eta _{m},\tau _{1},\) \(\tau _{2}, \ldots , \tau _{n})\) is strictly increasing with respect to \(\tau _{1}, \tau _{2}, \ldots , \tau _{k}\) and strictly decreasing with respect to \(\tau _{k+1}, \tau _{k+2}, \ldots , \tau _{k+n}\). Then

has a chance distribution

where \(F(x;y_{1}, y_{2}, \ldots , y_{m})\) is the root of \(\alpha\) of the equation

Example 2

Let \(\eta\) be a random variable with probability distribution \(\Psi\), and let \(\tau\) be an uncertain variable with regular uncertainty distribution \(\Upsilon\). Then

is an uncertain random variable with chance distribution

Theorem 8

(Liu 2013b) Let \(\eta _{1}, \eta _{2}, \ldots , \eta _{m}\) be independent random variables with probability distributions \(\Psi _{1}, \Psi _{2}, \ldots \Psi _{m},\) respectively, let \(\tau _{1}, \tau _{2}, \ldots , \tau _{n}\) be uncertain variables, and let f be a measurable function. Then

has an expected value

where \(E[f(y_{1}, y_{2}, \ldots , y_{m},\tau _{1}, \tau _{2}, \ldots , \tau _{n})\) is the expected value of the uncertain variable \(f(y_{1}, y_{2}, \ldots , y_{m},\tau _{1},\) \(\tau _{2}, \ldots , \tau _{n})\) for any real numbers \(y_1, y_2, \ldots , y_m\).

2.3 Uncertain random network

The term network is a synonym for a weighted graph, where the weights may be understood as cost, distance, time and others. For modelling the network in which some weights are random variables and others are uncertain variables, Liu (2014) first proposed the concept of uncertain random network.

Definition 7

(Liu 2014) Assume that \(\mathcal {N}\) is the collection of nodes, \(\mathcal {U}\) is the collection of uncertain arcs, \(\mathfrak {R}\) is the collection of random arcs, and \(\mathcal {W}\) is the collection of uncertain and random weights. Then the quartette \((\mathcal {N}, \mathcal {U}, \mathfrak {R}, \mathcal {W})\) is said to be an uncertain random network.

The uncertain random network becomes a random network (Frank and Hakimi 1965) if all weights are random variables; and becomes an uncertain network (Liu 2010a) if all weights are uncertain variables. Figure 3 shows an uncertain random network \((\mathcal {N}, \mathcal {U}, \mathfrak {R}, \mathcal {W})\) of order 6 in which

3 Ideal chance distribution

In a deterministic undirected network, all the weights of edges are deterministic and finite. Let \((\mathcal {N},\mathrm{E},\mathcal {W})\) be a deterministic network, where \(\mathcal {N}\) is the set of vertices, \(\mathrm{E}\) is the edges set and \(\mathcal {W}\) is the set of deterministic weights. Let \(d_i~(i\in \mathcal {N})\) be the degree value of node i, and let \(b_i~(i\in \mathcal {N})\) be the degree constraint of node i. We recall at this point that a spanning tree is a subnetwork that contains all the nodes. The MST is a spanning tree with the least weight. The DCMST problem is to find the least weight spanning tree of a given graph, subject to constraints on the degree of the nodes. Many classical algorithms such as evolutionary algorithms (Raidl 2000), and genetic algorithm (Zhou and Gen 1997; Mu and Zhou 2008) can be used to solve the DCMST problem for the given network. Let \(w_{ij}\) be the weight of the edge (i, j) for \((i,j)\in \mathrm{E}\), then the weight of the DCMST is a function of the weight \(w_{ij}\). We denote the function by \(f(\{w_{ij}|(i,j)\in \mathrm{E}\})\) or \(f(\mathcal {W})\). Assume that \(\mathcal {W}_1=\{{w^{1}_{ij}|(i,j)\in \mathrm{E}}\}\) and \(\mathcal {W}_2=\{{w^{2}_{ij}|(i,j)\in \mathrm{E}}\}\) are two sets of deterministic weights. If \(w^{1}_{ij}\le w^{2}_{ij}\) for each \((i,j)\in \mathrm{E}\), it is easy to prove that \(f(\mathcal {W}_1)\le f(\mathcal {W}_2)\). In other words, the function f is an increasing function about weights \(w_{ij}\).

In real life, lots of networks are indeterministic, which means uncertainty and randomness often simultaneously appear in a complex network. For example, some edge weights are considered as random variables when we obtain history data, and the others are considered as uncertain variables when data are not available and they are subjectively provided. Next we try to discuss the DCMST problem in a connected and undirected uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\). Some assumptions are listed as follows:

-

(i)

The uncertain random network is connected and undirected;

-

(ii)

The weight of each edge \((i,j)\in \mathcal {U}\cup \mathfrak {R}\) is positive and finite;

-

(iii)

The weight of each edge \((i,j)\in \mathcal {U}\cup \mathfrak {R}\) is either an uncertain variable or a random variable;

-

(iv)

All the uncertain variables and the random variables are independent;

-

(v)

All the degree values of DCMST are less than the given degree constraints.

Given a connected and undirected uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\), where \(\mathcal {N}=\{1,2,\ldots ,n\}\) is the set of vertices, and \(\mathcal {W}=\{\tau _{ij}, ~(i,j)\in \mathcal {U}; \xi _{ij}, ~(i,j)\in \mathfrak {R}\}\) is the set of edges. We assume there is a constraint on each node, and the degree value \(d_i~(i\in \mathcal {N})\) is less than a given value \(b_i~(i\in \mathcal {N})\) of the node i. We assume that the uncertain weights \(\tau _{ij}\) for \((i,j)\in \mathcal {U}\) are defined on an uncertainty space \((\Gamma , \mathcal {L} , \mathcal {M})\) and random weights \(\xi _{ij}\) for \((i,j)\in \mathfrak {R}\) are defined on probability space \((\Omega , \mathcal {A}, \Pr )\). Then for any given \(\gamma \in \Gamma\) and \(\omega \in \Omega\), \(\tau _{ij}(\gamma ), (i,j)\in \mathcal {U}\) and \(\xi _{ij}(\omega ), (i,j)\in \mathfrak {R}\) are all crisp numbers. In other words, if we know the realization of random and uncertain edge weights, then in fact, the network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) is a certain network and its DCMST can be obtained by classical algorithms. For a given network, the degree-constrained spanning tree (DCST) is not unique, however the DCMST have the same weight. We still denote the weight of DCMST by \(f(\tau _{ij}, (i,j)\in \mathcal {U}; \xi _{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\), which suggests that f is a function of \(\gamma\) and \(\omega\). With this method, for each uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) corresponding to a related f which is an uncertain random weight. According to the operational law that \(f(\tau _{ij}, (i,j)\in \mathcal {U}; \xi _{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\) is also an uncertain random variable. The chance distribution of \(f(\tau _{ij}, (i,j)\in \mathcal {U}; \xi _{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\) is called an chance distribution of the DCMST with respect to the uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\). Owing to the fact that ideal chance distribution of DCMST is unique for a given uncertain random network, we give the following theorem to illustrate how to calculate ideal chance distribution of DCMST for an uncertain random network.

Theorem 9

Let \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) be an uncertain random network. Assume that the uncertain weights are uncertain variables \(\tau _{ij}\) with regular uncertainty distributions \(\Upsilon _{ij}\) for \((i,j)\in \mathcal {U}\), and the random weights are random variables \(\xi _{ij}\) with probability distributions \(\Psi _{ij}\) for \((i,j)\in \mathfrak {R}\), respectively. Then the ideal chance distribution of DCMST with respect to the uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) is

where \(F(x;y_{ij},(i,j)\in \mathfrak {R}, d_i \le b_i)\) is the uncertainty distribution of uncertain variable \(f(\tau _{ij}, (i,j)\in \mathcal {U}; y_{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\).

Proof

Let \(f(\tau _{ij}, (i,j)\in \mathcal {U}; y_{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\) be the weight of ideal chance distribution of DCMST. From Definition 6 and Theorem 7, we can obtain the ideal chance distribution of DCMST as follows,

where \(F(x;y_{ij},(i,j)\in \mathfrak {R}, d_i \le b_i)\) is the uncertainty distribution of \(f(\tau _{ij}, (i,j)\in \mathcal {U}; y_{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\) for any real numbers \(y_{ij}, ~(i,j)\in \mathfrak {R}\). \(\square\)

Remark 1

The uncertainty distribution \(F(x;y_{ij},(i,j)\in \mathfrak {R}, d_i \le b_i)\) is determined by its inverse uncertainty distribution \(F^{-1}(\alpha ; y_{ij}, (i,j)\in \mathfrak {R}, d_i \le b_i)\). By Theorem 7, for given \(\alpha \in (0,1)\), we have

which is just the weight of the DCMST in a deterministic network and can be calculated by using the genetic algorithm or other evolutionary algorithms.

Remark 2

Let \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) be an uncertain random network, and let \(\Phi (x)\) be the ideal chance distribution of DCMST. Assume \(\Phi _{T^{'}}(x)\) is the chance distribution of a DCST \(T^{'}\). Then for each DCST \(T^{'}\), we have

Remark 3

An uncertain random network degenerates to a random network if all the weights are independent random variables. Thus the ideal chance distribution of DCMST is actually the following probability distribution

Remark 4

An uncertain random network degenerates to an uncertain network if all the weights are independent uncertain variables. Thus the ideal chance distribution of DCMST is actually an uncertainty distribution with the following inverse function

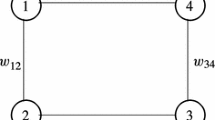

Example 3

Let us consider an uncertain random network with 4 nodes and 4 edges with degree constraint of node 1 is 2 is shown in Fig. 4. Assume that uncertain weights \(\tau _{12},~\tau _{14}\) have regular uncertainty distributions \(\Upsilon _{12}, \Upsilon _{14}\), and random weights \(\xi _{13},~ \xi _{23}\) have probability distributions \(\Psi _{13}, \Psi _{23}\), respectively. Then from Theorem 9, the ideal chance distribution of DCMST is

where \(F(x;y_{13},y_{23},d_1\le 2)\) is determined by its inverse uncertainty distribution

Theoretically, it is difficult to obtain an analytical expression of ideal chance distribution of DCMST for an uncertain random network. Practically, we can calculate it by using numerical techniques.

4 Model of uncertain random DCMST problem

In real life, lots of networks are indeterministic, which means some of the edges weights are uncertain variables and others are random variables. Let us consider connected and undirected uncertain random networks, which are finite and loopless. In this work, we will concentrate on an uncertain random network \((\mathcal {N}, \mathcal {U}, \mathfrak {R}, \mathcal {W})\), where \(\mathcal {N}=\{1,2,\ldots ,n\}\) is the collection of vertices, \(\mathcal {U}\) is the collection of uncertain weights, \(\mathfrak {R}\) is the collection of random weights, and \(\mathcal {W}=\{\tau _{ij},(i,j)\in \mathcal {U}; \xi _{ij},(i,j)\in \mathfrak {R}\}\) is the collection of edges. Assume \(\tau _{ij},(i,j)\in \mathcal {U}\) are independent uncertain variables, and \(\xi _{ij},(i,j)\in \mathfrak {R}\) are independent random variables. Assume further that there is a constraint on each node, and the degree value \(d_{i}\) is less than a given value \(b_{i}\). Let \(\{x_{ij},(i,j)\in \mathcal {W}\}\) be decision vectors, where \(x_{ij}=1\) means that edge (i, j) is chosen in DCST \(T^{'}\), otherwise \(x_{ij}=0\). Then \(T^{'}=\{x_{ij},(i,j)\in \mathcal {U}\cup \mathfrak {R}, d_{i}\le b_{i}\}\) is a DCST if and only if

where \(\mathcal {U}\cup \mathfrak {R}(S)\) represents the edges set of S, and S is a subset of \(\mathcal {N}\). The weight of a DCST \(T^{'}=\{x_{ij},(i,j)\in \mathcal {U}\cup \mathfrak {R}, d_{i}\le b_{i}\}\) is

Obviously it is an uncertain random variable. Its chance distribution is \(\Phi _{T^{'}}(z|x_{ij}, (i,j)\in \mathcal {U}\cup \mathfrak {R}, d_{i}\le b_{i})\),

Under the chance theory, each \((\gamma , \omega )\) corresponds to a DCMST and different \((\gamma , \omega )\) may correspond to different DCMSTs. If there exists a DCMST for all \((\gamma , \omega )\), then we call it the ideal chance distribution of DCMST. However, it may happen that such an ideal chance distribution of the DCMST perhaps may not exists or else we may not know what \((\gamma , \omega )\) can be reached. Perhaps a better way is to find a real DCST, in which the chance distribution is closest to the ideal chance distribution.

Definition 8

Let \((\mathcal {N}, \mathcal {U}, \mathfrak {R}, \mathcal {W})\) be an uncertain random network, and let \(\Phi (z)\) be its ideal chance distribution of DCMST problem. Assume that \(T^{'}= \{x_{ij}, (i,j)\in \mathcal {U}\cup \mathfrak {R}, d_i \le b_i\}\) is a DCST with chance distribution \(\Phi _{T^{'}}(z|x_{ij}, (i,j)\in \mathcal {U}\cup \mathfrak {R}, d_i \le b_i)\). If \(\Phi _{T^{'}}(z|x_{ij}, (i,j)\in \mathcal {U}\cup \mathfrak {R}, d_i \le b_i)\) is closest to the \(\Phi (z)\), i.e.,

is minimum, then the DCST \(T^{'}\) is defined DCMST for the given uncertain random network.

Based on Definition 8, we formulate the following DCMST model for an uncertain random network,

Theorem 10

Let \((\mathcal {N}, \mathcal {U}, \mathfrak {R}, \mathcal {W})\) be an uncertain random network, and let \(\Phi (z)\) be its ideal chance distribution of DCMST problem. Assume that the edge uncertain weights \(\tau _{ij}\) are uncertain variables with regular uncertainty distributions \(\Upsilon _{ij}\) for \((i,j)\in \mathcal {U}\), and random weights \(\xi _{ij}\) are random variables with probability distributions \(\Psi _{ij}\) for \((i,j)\in \mathfrak {R}\), respectively. Then model (3) is equivalent to the following model:

where

Proof

Let \(T^{'}= \{x_{ij}, (i,j)\in \mathcal {U}\cup \mathfrak {R}, d_i \le b_i\}\) be a DCST, and let \(\Psi (y)\) be the probability distribution of \(\sum \limits _{(i,j)\in \mathcal {R}}x_{ij}\xi _{ij}.\) According to assumption (iv), we can obtain

From Theorem 7, we have

Further, from Theorem 1,

Combining the above two formulas into the objective function of model (3), the theorem is completed.

Next we propose the following algorithm to solve the model above. \(\square\)

Algorithm:

Step 1: For each random edge \((i,j)\in \mathfrak {R}\), give a partition \(\Pi _{ij}\) on the interval \([a_{ij},b_{ij}]\) with the step length is 0.01, random weight values only in \(\Pi _{ij}\), denote by \(y_{ij}\).

Step 2: For any \((i,j)\in \mathfrak {R}\), given \(y_{ij} \in \Pi _{ij}\) and \(\alpha \in \{0.01,0.02,\ldots ,0.99\}\), calculate \(F^{-1}(\alpha ;y_{ij},(i,j)\in \mathfrak {R},d_i\le b_i)\).

Step 3: The uncertain distribution function \(F(x;y_{ij},(i,j)\in \mathfrak {R},d_i \le b_i)\) can be obtained by using linear interpolation from the discrete form of inverse uncertainty distribution.

Step 4: Submit \(F(x;y_{ij},(i,j)\in \mathfrak {R},d_i \le b_i)\) into objective function (1), calculate the ideal chance distribution.

Step 5: Find all the DCSTs for the given uncertain random network by using the genetic algorithm, and label each DCST.

Step 6: Calculate chance distribution of each DCST.

Step 7: Calculate the objective function of model (4). Choose the DCST corresponds to the minimum value, and that is the DCMST.

5 Numerical experiment

In this section, we will present a numerical example to illustrate the effectiveness of the proposed model. Given an uncertain random network \((\mathcal {N},\mathcal {U},\mathfrak {R},\mathcal {W})\) with 5 nodes and 8 edges as shown in Fig. 5, assume that the degree constraint of each node is 3. Assume that the uncertain weights \(\tau _{12},\tau _{24},\tau _{25},\tau _{34},\tau _{45}\) are independent uncertain variables with regular uncertainty distributions \(\Upsilon _{12},\Upsilon _{24},\Upsilon _{25},\Upsilon _{34},\Upsilon _{45},\) and the random weights \(\xi _{15},\xi _{23},\xi _{35}\) are independent random variables with probability distributions \(\Psi _{15},\Psi _{23},\Psi _{35},\) respectively. These parameters of weights are listed in Table 1, where U(a, b) represent a uniformly random variable, L(a, b) and Z(a,b,c) are linear and zigzag uncertain variables respectively.

According to Theorems 7 and 9, the ideal chance distribution of DCMST problem with respect to the given uncertain random network can be easily obtained as follows,

where \(F(x;y_{15},y_{23},y_{35},d_i\le 3)\) is determined by its inverse uncertainty distribution

where f can be obtained by the genetic algorithm for each given \(\alpha\).

Base on the genetic algorithm, we can easily find all the DCSTs and label each DCST. Since we are able to fully obtain 38 DCSTs, we just listed part of them from tree 31 to tree 36 in Fig. 6. By the proposed algorithm above, we first calculate the ideal chance distribution of the DCMST and the chance distribution of all DCSTs as shown in Fig. 7. From Fig. 7, we can easily observe Tree 36 is closest to the ideal chance distribution. According to model (4), we come out to calculate the difference between the area of each DCST and the ideal chance distribution of the DCMST and the results are listed in Table 2. From Table 2, we once again confirm that Tree 36 is closest to the ideal chance distribution. So we conclude that Tree 36 can be regarded as DCMST.

6 Conclusion

This paper first introduced an uncertain random network into a DCMST problem in which uncertainty coexists with randomness. That is, in an uncertain random network, some weights are uncertain variables and others are random variables. In order to solve the DCMST problem, we applied chance theory and uncertain random variables to deal with this situation. We have also introduced the concept of ideal chance distribution and a new model of DCMST problem for uncertain random network, and solved the problem by finding the closest DCST to the ideal one. The performance of our method, that is in terms of its relevance and efficacy, is validated through a numerical illustration.

Future directions may be as follows:

-

1.

Since the DCMST is an uncertain random variable, some other uncertain random programming models can be found to solve the problem.

-

2.

The study can be extended to other constraint on MST problem in uncertain random network.

-

3.

The proposed model and algorithm can be used in uncertain random traveling salesman problem.

References

Borüvka O (1926) O jistém problému minimálním. Práce Mor. Přírodovéd. Spol. v Brně 3:37–58

Bau Y, Ho C, Eve H (2008) Ant colony optimization approaches to the degree-constrained minimum spanning tree problem. J Assoc Inf Sci Tech 24(4):1081–1094

Chen X, Liu B (2010) Existence and uniqueness theorem for uncertain differential equations. Fuzzy Optim Decis Mak 9(1):69–81

Chen X, Gao J (2013) Uncertain term structure model of interest rate. Soft Comput 17(4):597–604

Ding S (2014) Uncertain minimum cost flow problem. Soft Comput 18(11):2201–2207

Frank H, Hakimi S (1965) Probabilistic flows through a communication network. IEEE Trans Circuit Theory 12:413–414

Gao J, Yang X, Liu D (2017) Uncertain Shapley value of coalitional game with application to supply chain alliance. Appl Soft Comput. doi:10.1016/j.asoc.2016.06.018

Gao J (2013) Uncertain bimatrix game with applications. Fuzzy Optim Decis Mak 12(1):65–78

Gao J, Yao K (2015) Some concepts and theorems of uncertain random process. Int J Intell Syst 30(1):52–65

Gao X, Jia L (2017) Degree-constrained minimum spanning tree problem with uncertain edge weights. Appl Soft Comput. doi:10.1016/j.asoc.2016.07.054

Gao X, Gao Y, Ralescu D (2010) On Liu’s inference rule for uncertain systems. Int J Uncertain Fuzziness Knowl Syst 18(1):1–11

Gao Y (2011) Shortest path problem with uncertain arc lengths. Comput Math Appl 62(6):2591–2600

Gao Y, Yang L, Li S, Kar S (2015) On distribution function of the diameter in uncertain graph. Inf Sci 296:61–74

Gao Y, Qin Z (2016) On computing the edge-connectivity of an uncertain graph. IEEE Trans Fuzzy Syst 24(4):981–991

Gao Y, Yang L, Li S (2016) Uncertain models on railway transportation planning problem. Appl Math Model 40:4921–4934

Guo C, Gao J (2017) Optimal dealer pricing under transaction uncertainty. J Intell Manuf 28(3):657–665

Han S, Peng Z, Wang S (2014) The maximum flow problem of uncertain network. Inf Sci 265:167–175

Hou Y (2014) Subadditivity of chance measure. J Uncertainty Anal Appl 2(Article 14)

Knowles J, Corne D (2000) A new evolutionary approach to the degree-constrained minimum spanning tree problem. IEEE T Evol Comput 4:125–134

Liu B (2007) Uncertainty theory, 2nd edn. Springer-Verlag, Berlin

Liu B (2008) Fuzzy process, hybrid process and uncertain process. J Uncertain Syst 2(1):3–16

Liu B (2009a) Some research problems in uncertainty theory. J Uncertain Syst 3(1):3–10

Liu B (2009b) Theory and practice of uncertain programming, 2nd edn. Springer-Verlag, Berlin

Liu B (2010a) Uncertainty theory: a branch of mathematics for modeling human uncertainty. Springer-Verlag, Berlin

Liu B (2010b) Uncertain set theory and uncertain inference rule with application to uncertain control. J Uncertain Syst 4(2):83–98

Liu B (2014) Uncertain random graph and uncertain random network. J Uncertain Syst 8(1):3–12

Liu Y (2013a) Uncertain random variables: A mixture of uncertainty and randomness. Soft comput 17(4):625–634

Liu Y (2013b) Uncertain random programming with applications. Fuzzy Optim Decis Mak 12(2):153–169

Liu Y, Ralescu D (2014) Risk index in uncertain random risk analysis. Int J Uncertain Fuzziness Knowl Syst 22(4):491–504

Mu Y, Zhou G (2008) Genetic algorithm based on prufer number for solving degree-constrained minimum spanning tree problem. Com Eng App 44(12):53–56

Narula S, Ho C (1980) Degree-constrained minimum spanning tree. Comput Oper Res 7:239–249

Raidl G (2000) An efficient evolutionary algorithm for the degree-constrained minimum spanning tree problem. IEEE Trans Evol Comput 1(104–111):2000

Sheng Y, Qin Z, Shi G (2017) Minimum spanning tree problem of uncertain random network. J Intell Manuf 28(3):565–574

Sheng Y, Gao J (2014) Chance distribution of the maximum flow of uncertain random network. J Uncertain Anal Appl 2(Article 14)

Sheng Y, Gao Y (2016) Shortest path problem of uncertain random network. Comput Ind Eng 99:97–105

Torkestani J (2012) Degree-constrained minimum spanning tree problem in stochastic graph. Cybernet Syst 43(1):1–21

Torkestani J (2013) A learning automata-based algorithm to the stochastic min-degree constrained minimum spanning tree problem. Int J Found Comput 24(3):329–348

Volgenant A (1989) A lagrangean approach to the degree-constrained minimum spanning tree problem. Eur J Oper Res 39(3):325–331

Yang X, Gao J (2017) Bayesian equilibria for uncertain bimatrix game with asymmetric information. J Intell Manuf 28(3):515–525

Yang X, Gao J (2013) Uncertain differential games with applications to capitalism. J Uncertainty Anal Appl 1(Article 17)

Yang X, Gao J (2016) Linear-quadratic uncertain differential games with applicatians to resource extraction problem. IEEE T Fuzzy Syst 24(4):819–826

Yang X, Yao K (2016) Uncertain partial differential equation with application to heat conduction. Fuzzy Optim Decis Mak. doi:10.1007/s10700-016-9253-9

Yao K, Gao J (2016) Law of large numbers for uncertain random variables. IEEE T Fuzzy Syst 24(3):615–621

Zhang X, Wang Q, Zhou J (2013) Two uncertain programming models for inverse minimum spanning tree problem. Ind Eng Manag Syst 12(1):9–15

Zhou G, Gen M (1997) Approach to the degree-constrained minimum spanning tree problem using genetic algorithm. Eng Des Autom 3:157–165

Zhou J, He X, Wang K (2014) Uncertain quadratic minimum spanning tree problem. J Commum 9(5):385–390

Zhou J, Chen L, Wang K (2015) Path optimality conditions for minimum spanning tree problem with uncertain edge weights. Int J Unc Fuzz Knowl Based Syst 23(1):49–71

Zhu Y (2010) Uncertain optimal control with application to a portfolio selection model. Cybernet Syst 41(7):535–547

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities No.2016MS65 and National Natural Science Foundation of China Grant No.71671064.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Gao, X., Jia, L. & Kar, S. Degree-constrained minimum spanning tree problem of uncertain random network. J Ambient Intell Human Comput 8, 747–757 (2017). https://doi.org/10.1007/s12652-017-0493-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12652-017-0493-5