Abstract

This paper addresses the high detection cost and low detection efficiency of geometric errors identification in five-axis welding equipment by proposing a new method for the rotating axes, based on screw theory and monocular vision. First, a low-cost, high-precision pose measurement method is proposed, and screw theory is used to establish a kinematics model of the five-axis welding equipment. Then, a method for identifying the kinematics parameters and the geometric errors of the rotating axes is proposed. To verify the validity and feasibility of the proposed identification scheme, the detection accuracy of the pose measurement system is first verified. Subsequently, sampling within the working range of the five-axis welding equipment verifies that the motion accuracy improves significantly after geometric errors identification. The experimental results show that the average relative direction error of the five-axis welding equipment is reduced from 0.5427° before identification to 0.0478° after identification, while the average relative position error is reduced from 0.1472 mm to 0.0174 mm. These results show that the identification scheme effectively identifies the geometric errors of the rotating axes and significantly improves the motion accuracy of the five-axis welding equipment.


1 Introduction

Compared to a three-axis motion platform, a five-axis platform offers higher processing flexibility and efficiency [1]. However, the two additional rotating axes introduce more geometric errors, which result in larger processing errors. Geometric errors in the rotating axes affect accuracy in a more complicated way than those in the linear axes: they jointly affect the position and orientation of the actuator relative to the workpiece and produce a highly nonlinear kinematic relationship. Identifying the rotating-axis errors can therefore significantly improve the motion accuracy of five-axis welding equipment. Geometric errors are usually divided into position-dependent geometric errors (PDGEs) and position-independent geometric errors (PIGEs) [2, 3]. PDGEs are mainly caused by manufacturing defects of machine-tool components, while PIGEs are mainly caused by assembly defects [4,5,6]. Geometric error measurement methods for five-axis platforms are generally divided into direct and indirect methods [7, 8]. The direct method uses instruments to measure a single geometric error item directly; it is simple, but its measurement efficiency is low. The indirect method solves for the geometric errors by establishing a mathematical error model [9] and offers high detection efficiency. Many researchers have proposed geometric errors identification methods based on different measuring instruments. M. Tsutsumi et al. [10] used a ballbar to identify the PIGEs of a five-axis platform with an indirect method and verified its feasibility experimentally. High-precision instruments such as the Capball [11, 12], laser tracker [13, 14] and R-test [15, 16] can also be used to detect geometric errors indirectly.
In recent years, advances in computer vision have gradually been applied to the parameter identification of industrial automation equipment. Compared with traditional expensive, high-precision instruments, computer vision can greatly reduce the cost and complexity of detection [17,18,19,20]. Wang et al. [21] proposed a robot identification method based on machine vision; Yusuke et al. [18] used a camera to measure the two-dimensional position error of a machine tool; and Liu W et al. [22] used binocular vision to identify the PIGEs of a five-axis platform. As mentioned above, traditional high-precision measurement equipment is complex and demands a high degree of operational expertise. In addition, traditional measurement methods are time-consuming and struggle to meet the needs of high-volume error detection in industrial automation, whereas vision methods are convenient to automate and program. The main contribution of this paper is a low-cost, high-precision method for identifying the geometric errors of rotating axes, based on an improved pose measurement method. The goal is efficient, automated calibration of a five-axis motion stage to meet specific needs such as five-axis welding.

The article is organized as follows: Sect. 2 proposes an optimized pose measurement algorithm and establishes the kinematics model of the five-axis welding equipment. Section 3 proposes a geometric errors identification method for the rotating axes. In Sect. 4, experiments are carried out on the five-axis welding equipment to verify the feasibility and effectiveness of the proposed modeling and identification schemes. Finally, Sect. 5 presents the conclusions.

2 Pose Measurement System and Kinematics Modeling of Five-Axis Welding Equipment

2.1 An Optimized Direct Linear Transform (DLT) Algorithm for Pose Measurement

As shown in Fig. 1, the monocular camera is fixed at the end of the Z-axis actuator, and the checkerboard target (hereafter, the target) is fixed on the C-axis table. The spatial pose of the camera is determined by the displacements of the X, Y and Z axes, while the spatial pose of the target is determined by the rotations of the A and C axes.

Figure 2 shows the imaging of the target under the ideal camera imaging model, which involves four coordinate systems: the camera coordinate system \(O_{c} { - }X_{c} Y_{c} Z_{c}\), the target coordinate system \(O_{b} { - }X_{b} Y_{b} Z_{b}\), the image physical coordinate system \(o{ - }xy\) and the image pixel coordinate system \(o{ - }uv\). The PnP (Perspective-n-Point) problem is: given known 3D points \(\left( {\begin{array}{*{20}c} {X_{b} } \\ {Y_{b} } \\ {Z_{b} } \\ 1 \\ \end{array} } \right)\) on the target and the corresponding 2D positions \(\left( {\begin{array}{*{20}c} u \\ v \\ 1 \\ \end{array} } \right)\) of their projections, solve for the camera pose matrix \(M_{2}\), as shown in Eq. (1):

\[ \lambda \left( {\begin{array}{*{20}c} u \\ v \\ 1 \\ \end{array} } \right) = M_{1} M_{2} \left( {\begin{array}{*{20}c} {X_{b} } \\ {Y_{b} } \\ {Z_{b} } \\ 1 \\ \end{array} } \right) \qquad (1) \]

where \(M_{1} = \left[ {\begin{array}{*{20}c} {f_{x} } & 0 & {u_{0} } & 0 \\ 0 & {f_{y} } & {v_{0} } & 0 \\ 0 & 0 & 1 & 0 \\ \end{array} } \right]\) is the internal parameter matrix and \(M_{2} = \left[ {\begin{array}{*{20}c} {r_{3 \times 3} } & {t_{3 \times 1} } \\ {0^{T} } & 1 \\ \end{array} } \right]\) is the external parameter matrix; \(r_{3 \times 3} = \left[ {\begin{array}{*{20}c} {r_{11} } & {r_{12} } & {r_{13} } \\ {r_{21} } & {r_{22} } & {r_{23} } \\ {r_{31} } & {r_{32} } & {r_{33} } \\ \end{array} } \right]\) is the rotation matrix and \(t_{3 \times 1} = \left[ {\begin{array}{*{20}c} {t_{1} } \\ {t_{2} } \\ {t_{3} } \\ \end{array} } \right]\) is the translation vector in the matrix \(M_{2}\). The calibration method of Zhang Zhengyou [23] was used to calibrate the camera's internal parameter matrix \(M_{1}\).

Expanding Eq. (1) and eliminating \(\lambda\) gives the following equation:
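Eq. (2) does not survive in this text. Writing \((X, Y, Z)\) for the known target coordinates of one point, the third row of Eq. (1) gives \(\lambda = r_{31}X + r_{32}Y + r_{33}Z + t_{3}\); substituting this into the first two rows yields, per correspondence, two equations that are linear in the 12 entries of \([r_{3 \times 3} \mid t_{3 \times 1}]\). This is the standard DLT form, offered as a reconstruction rather than the paper's exact layout:

```latex
f_{x}\,(r_{11}X + r_{12}Y + r_{13}Z + t_{1}) + (u_{0} - u)\,(r_{31}X + r_{32}Y + r_{33}Z + t_{3}) = 0 \\
f_{y}\,(r_{21}X + r_{22}Y + r_{23}Z + t_{2}) + (v_{0} - v)\,(r_{31}X + r_{32}Y + r_{33}Z + t_{3}) = 0 \\[4pt]
\mathbf{A}\,\mathbf{m} = \mathbf{0}, \qquad
\mathbf{m} = [\,r_{11}\ r_{12}\ r_{13}\ t_{1}\ r_{21}\ r_{22}\ r_{23}\ t_{2}\ r_{31}\ r_{32}\ r_{33}\ t_{3}\,]^{T}
```

Stacking the two rows for every correspondence gives the homogeneous system \(\mathbf{A}\mathbf{m} = \mathbf{0}\), whose least-squares solution (up to scale) is the right singular vector of \(\mathbf{A}\) with the smallest singular value.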

Since more than six 3D-2D correspondences are available, the pose matrix \(M_{2}\) can be obtained as the least-squares solution of the above overdetermined system. When this traditional DLT method is used to solve for the external parameter matrix \(M_{2}\), the matrix is treated as 12 independent unknowns, and the constraints among them are ignored. In fact, although the rotation matrix \(r_{3 \times 3}\) in \(M_{2}\) has nine entries, it has only three degrees of freedom. Therefore, this paper proposes an optimized DLT algorithm to solve for the pose matrix \(M_{2}\). To account for noisy data, the rotation matrix \(r_{3 \times 3}\) is estimated as follows:

The estimated rotation matrix \(\tilde{r}_{3 \times 3}\) is substituted into Eq. (2), and the translation vector \(t_{3 \times 1}\) is then solved using Singular Value Decomposition (SVD).
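The rotation-estimation formula referred to above is not reproduced in this text. A widely used way to impose the three-degree-of-freedom constraint is to replace the raw 3×3 DLT block by its nearest rotation matrix in the Frobenius sense, \(U\,\mathrm{diag}(1, 1, \det(UV^{T}))\,V^{T}\) from an SVD, and then re-solve \(t\) with \(r\) held fixed. The sketch below follows that recipe; the orthogonalization step is an assumption, not a transcription of the paper's formula, and the function names are hypothetical. Note that the 12-unknown DLT needs non-coplanar target points: for a planar checkerboard (\(Z = 0\)) the third column of \(r\) drops out and a homography-based variant would be required.

```python
import numpy as np

def dlt_system(K, obj, img):
    """Stack two linear equations per 3D-2D pair in m = [r11 r12 r13 t1 r21 ... r33 t3]."""
    fx, fy, u0, v0 = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    rows = []
    for (X, Y, Z), (u, v) in zip(obj, img):
        h, z = np.array([X, Y, Z, 1.0]), np.zeros(4)
        rows.append(np.concatenate([fx * h, z, (u0 - u) * h]))
        rows.append(np.concatenate([z, fy * h, (v0 - v) * h]))
    return np.array(rows)

def nearest_rotation(r):
    """Project a noisy 3x3 matrix onto SO(3) (Frobenius-nearest rotation)."""
    U, _, Vt = np.linalg.svd(r)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt

def optimized_dlt(K, obj, img):
    """Traditional DLT for [r | t], then enforce r in SO(3) and re-solve t."""
    m = np.linalg.svd(dlt_system(K, obj, img))[2][-1]   # least-squares null vector
    P = m.reshape(3, 4)
    P = P / np.cbrt(np.linalg.det(P[:, :3]))            # fix scale and sign so det(r) = +1
    r = nearest_rotation(P[:, :3])
    fx, fy, u0, v0 = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    A, b = [], []
    for X, (u, v) in zip(obj, img):
        p = r @ X                                       # rotated point; t still unknown
        A += [[fx, 0.0, u0 - u], [0.0, fy, v0 - v]]
        b += [-(fx * p[0] + (u0 - u) * p[2]), -(fy * p[1] + (v0 - v) * p[2])]
    t = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)[0]  # SVD-based solver
    return r, t
```

Here `K` is the left 3×3 block of \(M_{1}\), `obj` holds N×3 target points and `img` their N×2 pixel positions.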

2.2 Kinematics Model of Five-Axis Welding Equipment

According to the principle of the pose measurement system, the camera coordinate system on the Z axis is defined as the actuator coordinate system {A}, and the target coordinate system on the C axis is defined as the workbench coordinate system {W}. Obtaining the kinematics model from {A} to {W} requires the transformation relationships between these coordinate systems. The term \(^{a} g_{w}\) denotes the homogeneous pose transformation matrix of the workbench coordinate system {W} relative to the actuator coordinate system {A}. Based on the product of exponentials formula [1], the forward kinematics model from the workbench coordinate system {W} to the actuator coordinate system {A} is:

where the term \(^{a} g_{w} (0)\) represents the initial pose matrix of the workbench coordinate system {W} relative to the actuator coordinate system {A}; \({{\varvec{\upxi}}}_{x}\), \({{\varvec{\upxi}}}_{y}\), \({{\varvec{\upxi}}}_{{\text{z}}}\), \({{\varvec{\upxi}}}_{a}\) and \({{\varvec{\upxi}}}_{c}\) represent the screw coordinates of the X, Y, Z, A and C axes, respectively; and \(x\), \(y\), \(z\), \(\theta_{a}\) and \(\theta_{c}\) represent the motion components of the X, Y, Z, A and C axes, respectively.

In this section, the kinematics model of the five-axis welding equipment is developed from screw theory (see Appendix 1 for the derivation). The matrix form of the forward kinematics model is:

where

The ideal kinematics parameters and screw coordinates are expressed as follows:

\({{\varvec{\upxi}}}_{x} = \left[ {\begin{array}{*{20}c} {{\mathbf{v}}_{x} } \\ {0_{3 \times 1} } \\ \end{array} } \right]\),\({\mathbf{v}}_{x} = \left[ {\begin{array}{*{20}c} 1 & 0 & 0 \\ \end{array} } \right]^{T}\),\({{\varvec{\upxi}}}_{y} = \left[ {\begin{array}{*{20}c} {{\mathbf{v}}_{y} } \\ {0_{3 \times 1} } \\ \end{array} } \right]\),\({\mathbf{v}}_{y} = \left[ {\begin{array}{*{20}c} 0 & 1 & 0 \\ \end{array} } \right]^{T}\),\({{\varvec{\upxi}}}_{z} = \left[ {\begin{array}{*{20}c} {{\mathbf{v}}_{z} } \\ {0_{3 \times 1} } \\ \end{array} } \right]\),\({\mathbf{v}}_{z} = \left[ {\begin{array}{*{20}c} 0 & 0 & 1 \\ \end{array} } \right]^{T}\), \({{\varvec{\upxi}}}_{a} = \left[ {\begin{array}{*{20}c} { - {{\varvec{\upomega}}}_{a} \times {\mathbf{q}}_{a} } \\ {{{\varvec{\upomega}}}_{a} } \\ \end{array} } \right]\),\({{\varvec{\upomega}}}_{a} { = }\left[ {\begin{array}{*{20}c} 1 & 0 & 0 \\ \end{array} } \right]^{T}\),\({\mathbf{q}}_{a} { = }\left[ {\begin{array}{*{20}c} 0 & 0 & 0 \\ \end{array} } \right]^{T}\),\({{\varvec{\upxi}}}_{c} = \left[ {\begin{array}{*{20}c} { - {{\varvec{\upomega}}}_{c} \times {\mathbf{q}}_{c} } \\ {{{\varvec{\upomega}}}_{c} } \\ \end{array} } \right]\),\({{\varvec{\upomega}}}_{c} { = }\left[ {\begin{array}{*{20}c} 0 & 0 & 1 \\ \end{array} } \right]^{T}\),\({\mathbf{q}}_{c} { = }\left[ {\begin{array}{*{20}c} 0 & 0 & 0 \\ \end{array} } \right]^{T}\).
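To make the product-of-exponentials model concrete, the sketch below implements the twist exponential for screw coordinates \(\boldsymbol{\xi} = [\mathbf{v}; \boldsymbol{\omega}]\), as listed above, and chains the five axes. Because the matrix form of the forward kinematics model is not reproduced in this text, the factor order (X, Y, Z, A, C, then \(^{a} g_{w} (0)\)) is an assumption, as are the helper names `twist_exp`, `revolute_twist` and `poe`.

```python
import numpy as np

def skew(w):
    """3x3 skew-symmetric matrix with skew(w) @ p == np.cross(w, p)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def twist_exp(xi, theta):
    """Homogeneous matrix exp(xi^ * theta) for a twist xi = [v; w] (w unit or zero)."""
    xi = np.asarray(xi, dtype=float)
    v, w = xi[:3], xi[3:]
    g = np.eye(4)
    if np.allclose(w, 0.0):                      # prismatic axis: pure translation
        g[:3, 3] = v * theta
        return g
    W = skew(w)
    R = np.eye(3) + np.sin(theta) * W + (1.0 - np.cos(theta)) * (W @ W)  # Rodrigues
    g[:3, :3] = R
    g[:3, 3] = (np.eye(3) - R) @ np.cross(w, v) + np.outer(w, w) @ v * theta
    return g

def revolute_twist(w, q):
    """Screw coordinates [-w x q; w] of a rotation axis with direction w through point q."""
    w, q = np.asarray(w, dtype=float), np.asarray(q, dtype=float)
    return np.concatenate([-np.cross(w, q), w])

def poe(twists, joints, g0):
    """Product of exponentials exp(xi_1 th_1) ... exp(xi_n th_n) @ g0 (assumed order)."""
    g = np.eye(4)
    for xi, th in zip(twists, joints):
        g = g @ twist_exp(xi, th)
    return g @ g0

# Ideal screw coordinates from the text: X, Y, Z prismatic; A about x and C about z, through the origin.
XI_IDEAL = [np.r_[1, 0, 0, 0, 0, 0], np.r_[0, 1, 0, 0, 0, 0], np.r_[0, 0, 1, 0, 0, 0],
            revolute_twist([1, 0, 0], [0, 0, 0]), revolute_twist([0, 0, 1], [0, 0, 0])]
```

For example, `poe(XI_IDEAL, [5.0, 0, 0, 0, np.pi / 2], np.eye(4))` translates 5 units along X and rotates 90° about Z; an axis offset \(\mathbf{q}\) enters only through `revolute_twist`.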

3 A Geometric Errors Identification Method of Rotating Axis

In this section, a geometric errors identification scheme for the five-axis welding equipment is designed based on the monocular-camera pose measurement system. Because it is difficult to identify the kinematics parameters directly under five-axis linkage, this paper proposes an analytical method based on least squares to identify them. The geometric errors of the A and C axes are then identified. The flowchart of the geometric errors identification method is shown in Fig. 3.

3.1 Kinematics Parameters Identification Method for Kinematic Chain

Following the principle of monocular-camera measurement, the target is fixed on the platform, and the monocular camera captures images of the target, from which the pose matrix \(^{a} g_{w}\) is calculated. The kinematic chain of the five-axis welding equipment is shown in Fig. 4.

Equation (5) is simplified as follows:

where

where \({{\varvec{\upomega}}}_{{\mathbf{1}}}\), \({{\varvec{\upomega}}}_{{\mathbf{2}}}\), \(\theta_{1}\), \(\theta_{2}\), \({\mathbf{q}}_{{\mathbf{1}}}\), \({\mathbf{q}}_{{\mathbf{2}}}\), \({\mathbf{v}}_{{\mathbf{1}}}\), \({\mathbf{v}}_{{\mathbf{2}}}\) and \({\mathbf{v}}_{{\mathbf{3}}}\) are as listed in Table 1.

According to Eq. (8), the matrix \(R_{3 \times 3}\) in Eq. (9) can be obtained as follows:

The values of \(W_{1}, W_{2}, \ldots, W_{9}\) are listed in Table 2.

According to Eq. (8), the following expression can be obtained:

where \(^{a} {\text{g}}_{w}\) denotes the pose matrix when the axes of the welding equipment are at \((x,y,z,\theta_{1} ,\theta_{2} )\), and \(^{a} g_{w} (0)\) denotes the pose matrix in the initial state, when the motion components of all axes are \(\left( {0,0,0,0,0} \right)\).

Let \(^{a} G_{w} = \left( {^{a} {\text{g}}_{w} } \right)\left( {^{a} g_{w} (0)} \right)^{ - 1}\), then the following expression can be obtained:

where both pose matrices \(^{a} {\text{g}}_{w}\) and \(^{a} g_{w} (0)\) can be obtained by processing camera images, so \(^{a} G_{w}\) can be measured with the monocular camera.

The matrix \(R_{3 \times 3}\) in \(^{a} G_{w}\) is expressed by Eq. (10). For any \(i \in \left\{ {1,2,3} \right\}\) and \(j \in \left\{ {1,2,3} \right\}\), \(^{a} G_{w}^{(i,j)} = R_{3 \times 3}^{(i,j)}\), so each element of \(R_{3 \times 3}\) can be matched to a corresponding expression. Taking the element \(^{a} G_{w}^{(1,1)}\) (that is, \(R_{3 \times 3}^{(1,1)}\)) in the first row and first column of \(^{a} G_{w}\) as an example, set:

Next, the following expression can be obtained:

In general, for any \(i \in \left\{ {1,2,3} \right\}\) and \(j \in \left\{ {1,2,3} \right\}\), the following expressions are obtained:

When the five-axis welding equipment is at the initial position, the initial pose matrix \(^{a} g_{w} (0)\) is obtained. As the equipment moves to different positions, the corresponding pose matrices \(^{a} g_{w}\) are obtained, and from them the corresponding \(^{a} G_{w}^{(i,j)}\) and \(\Theta _{1}\). Since Eq. (16) is linear in \(W^{(i,j)}\), the matrix \(W^{(i,j)}\) can be obtained by least squares from multiple sets of \(^{a} G_{w}^{(i,j)}\) and \(\Theta _{1}\). Then, according to Eqs. (17), (18), (19) and (20), \({{\varvec{\upomega}}}_{1}\) and \({{\varvec{\upomega}}}_{2}\) are readily derived from the matrices \(W_{2}\) and \(W_{4}\), as shown in Table 2.
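Table 2 and the regressor \(\Theta_{1}\) are not reproduced in this text, so the sketch below illustrates the idea under one consistent assumption: the rotation part of \(^{a} G_{w}\) is \(e^{\hat{\omega}_{1}\theta_{1}} e^{\hat{\omega}_{2}\theta_{2}}\). Expanding each factor with Rodrigues' formula gives exactly nine trigonometric terms, each multiplying a constant 3×3 matrix, so every element of \(R_{3 \times 3}\) is linear in those matrices; the skew-symmetric coefficients of \(\sin\theta_{1}\) and \(\sin\theta_{2}\) are \(\hat{\omega}_{1}\) and \(\hat{\omega}_{2}\). The term ordering and all function names are hypothetical and need not match Table 2.

```python
import numpy as np

def skew(w):
    """3x3 skew-symmetric matrix with skew(w) @ p == np.cross(w, p)."""
    return np.array([[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]])

def rot(w, theta):
    """Rodrigues' formula: exp(skew(w) * theta) for a unit axis w."""
    W = skew(w)
    return np.eye(3) + np.sin(theta) * W + (1.0 - np.cos(theta)) * (W @ W)

def basis(t1, t2):
    """Nine terms of (I + s1 W1 + c1 W1^2)(I + s2 W2 + c2 W2^2), with c = 1 - cos.

    Order: [1, s2, c2, s1, s1*s2, s1*c2, c1, c1*s2, c1*c2] (an assumed ordering).
    """
    a = np.array([1.0, np.sin(t1), 1.0 - np.cos(t1)])
    b = np.array([1.0, np.sin(t2), 1.0 - np.cos(t2)])
    return np.outer(a, b).ravel()

def identify_axes(theta1, theta2, R_meas):
    """Fit every element of R linearly in the basis, then read w1, w2 off the
    skew-symmetric coefficient matrices of sin(theta1) and sin(theta2)."""
    Phi = np.array([basis(a, c) for a, c in zip(theta1, theta2)])   # N x 9 regressor
    Y = np.array([R.ravel() for R in R_meas])                         # N x 9 matrix elements
    C, *_ = np.linalg.lstsq(Phi, Y, rcond=None)                      # 9 x 9 coefficients

    def axis(W):
        w = 0.5 * np.array([W[2, 1] - W[1, 2], W[0, 2] - W[2, 0], W[1, 0] - W[0, 1]])
        return w / np.linalg.norm(w)

    return axis(C[3].reshape(3, 3)), axis(C[1].reshape(3, 3))
```

With noisy measurements the same least-squares fit applies; the antisymmetric averaging in `axis` discards the symmetric part of the estimated coefficient.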

The vector \({\mathbf{P}}_{3 \times 1}\) in Eq. (12) is simplified into the following expression:

where \({\mathbf{q}}_{1} = \left[ {\begin{array}{*{20}c} {{\mathbf{q}}_{1}^{(1,1)} } \\ {{\mathbf{q}}_{1}^{(2,1)} } \\ {{\mathbf{q}}_{1}^{(3,1)} } \\ \end{array} } \right]\) and \({\mathbf{q}}_{2} = \left[ {\begin{array}{*{20}c} {{\mathbf{q}}_{2}^{(1,1)} } \\ {{\mathbf{q}}_{2}^{(2,1)} } \\ {{\mathbf{q}}_{2}^{(3,1)} } \\ \end{array} } \right]\); the values of \(\Omega_{1}\), \(\Omega_{2}\) and \(W_{10}\) are listed in Table 3.

Each element of the vector \({\mathbf{P}}_{3 \times 1}\) can be matched to a corresponding expression. Taking the first element \({\mathbf{P}}^{(1)}_{3 \times 1}\) of \({\mathbf{P}}_{3 \times 1}\) as an example, the following expression is obtained:

where,

For \({\mathbf{P}}^{(2)}_{3 \times 1}\) and \({\mathbf{P}}^{(3)}_{3 \times 1}\), the same expressions can be used:

The values of \({{\varvec{\upomega}}}_{1}\) and \({{\varvec{\upomega}}}_{2}\) have already been calculated as described above. Substituting them into \(\Omega_{1}\) and \(\Omega_{2}\), and taking \(\theta_{1}\), \(\theta_{2}\), \(x\), \(y\) and \(z\) as the recorded components of each motion axis, gives the expressions of \(\Psi_{1}\), \(\Psi_{2}\) and \(\Psi_{3}\). When the five-axis welding equipment moves to different positions, \(^{a} G_{w}\) is obtained, followed by the corresponding \({\mathbf{P}}^{(1)}_{3 \times 1}\) and \(\Psi_{1}\). Since Eq. (22) is linear, \(\Phi^{(1)}\) can be obtained by least squares from multiple sets of \({\mathbf{P}}^{(1)}_{3 \times 1}\) and \(\Psi_{1}\); \(\Phi^{(2)}\) and \(\Phi^{(3)}\) are obtained in the same way. The vectors \({\mathbf{q}}_{1}\) and \({\mathbf{q}}_{2}\) can then be derived from \(\Phi^{(1)}\), \(\Phi^{(2)}\) and \(\Phi^{(3)}\).
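The \(\Psi\) and \(\Phi\) terms are not reproduced in this text, so the following is a simplified, hypothetical illustration of why the axis points become a linear least-squares problem once the axis directions are known. It treats a single rotary axis moving alone: for a twist \([-\boldsymbol{\omega} \times \mathbf{q}; \boldsymbol{\omega}]\) the translation of \(e^{\hat{\xi}\theta}\) is \((I - R(\theta))\,\mathbf{q}\), which is linear in \(\mathbf{q}\). The component of \(\mathbf{q}\) along \(\boldsymbol{\omega}\) is unobservable (any point on the axis is valid), so the sketch returns the axis point closest to the origin. Function names are assumptions.

```python
import numpy as np

def rodrigues(w, theta):
    """Rotation exp(skew(w) * theta) for a unit axis w."""
    W = np.array([[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]])
    return np.eye(3) + np.sin(theta) * W + (1.0 - np.cos(theta)) * (W @ W)

def identify_axis_point(w, thetas, translations):
    """Least-squares point q on a rotation axis with known unit direction w.

    Each measured translation satisfies p_k = (I - R(theta_k)) q, so stacking
    the 3x3 blocks gives an overdetermined linear system in q.
    """
    A = np.vstack([np.eye(3) - rodrigues(w, th) for th in thetas])   # (3N x 3)
    b = np.concatenate(translations)                                   # (3N,)
    q, *_ = np.linalg.lstsq(A, b, rcond=None)
    return q - w * (w @ q)          # drop the unobservable component along the axis
```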

3.2 Identification of Geometric Errors for Rotating Axis

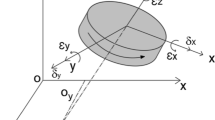

Taking the C-axis as an example, a single rotating axis has six geometric errors, as shown in Fig. 5, one in each of the six spatial degrees of freedom: one axial position error \(\delta_{zc}\); two radial position errors \(\delta_{xc}\) and \(\delta_{yc}\); and three angular errors \(\varepsilon_{xc}\), \(\varepsilon_{yc}\) and \(\varepsilon_{zc}\) about the X-, Y- and Z-axes of the coordinate system, respectively.

The composite error matrix \(T_{ec}^{D}\), formed by the 6 geometric errors on the C-axis, is as follows:

where \(T^{x} (*)\), \(T^{y} (*)\) and \(T^{z} (*)\), represent the \(4 \times 4\) homogeneous transformation matrix of translational motion along the x-axis, y-axis and z-axis, respectively; \(R^{x} (*)\),\(R^{y} (*)\) and \(R^{z} (*)\) represent the \(4 \times 4\) homogeneous transformation matrix of the rotational motion around the X-axis, Y-axis and Z-axis, respectively. Since the geometric errors are very small quantities, expanding the expression \(T_{ec}^{D}\) and ignoring the higher-order terms in \(T_{ec}^{D}\), provides a simplified expression of the error matrix \(T_{ec}^{D}\) as follows:
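The simplified matrix itself is not reproduced in this text. For reference, with \(\cos\varepsilon \approx 1\), \(\sin\varepsilon \approx \varepsilon\) and all products of error terms dropped, the composite of the three translations and three rotations reduces to the standard first-order form below (at this order the result does not depend on the order of the six factors). It is a reconstruction under that small-error assumption, not a copy of the paper's equation:

```latex
T_{ec}^{D} \approx
\begin{bmatrix}
1 & -\varepsilon_{zc} & \varepsilon_{yc} & \delta_{xc} \\
\varepsilon_{zc} & 1 & -\varepsilon_{xc} & \delta_{yc} \\
-\varepsilon_{yc} & \varepsilon_{xc} & 1 & \delta_{zc} \\
0 & 0 & 0 & 1
\end{bmatrix}
```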

When identifying the geometric errors of a single axis, the other axes are kept at their initial positions and only the axis under investigation is moved, so that the other axes do not affect the measurement. For the C-axis, the corresponding kinematics model can be established as:

Therefore, Eq. (29) can be obtained as:

where,

The terms \({{\varvec{\upomega}}}_{c}\) and \({\mathbf{q}}_{c}\) were identified in Sect. 3.1, and \(^{a} {\text{g}}_{w}\) and \(^{a} {\text{g}}_{w(0)}\) can be obtained with the monocular camera. Hence, for any \(\theta_{c}\), the corresponding \(T_{ec}^{D}\) can be obtained from Eq. (30), and the six geometric errors of the C-axis at that angle then follow from Eq. (28).
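Eq. (30) and the simplified error matrix are not reproduced in this text. Assuming the six errors compose as \(T^{x} T^{y} T^{z} R^{x} R^{y} R^{z}\) (the factors named above) and are small, they can be read off a measured 4×4 matrix \(T_{ec}^{D}\) to first order: the translations from the last column and the angles from the antisymmetric part of the rotation block. The sketch checks that reading against the exact product; all helper names are hypothetical.

```python
import numpy as np

def translation(d):
    """4x4 homogeneous translation by the vector d."""
    T = np.eye(4)
    T[:3, 3] = d
    return T

def rotation(axis, a):
    """4x4 homogeneous rotation by angle a about x (axis=0), y (1) or z (2)."""
    c, s = np.cos(a), np.sin(a)
    i, j = [(1, 2), (2, 0), (0, 1)][axis]
    T = np.eye(4)
    T[i, i], T[i, j], T[j, i], T[j, j] = c, -s, s, c
    return T

def composite_error(dx, dy, dz, ex, ey, ez):
    """Exact product T^x T^y T^z R^x R^y R^z of the six error motions."""
    return translation([dx, dy, dz]) @ rotation(0, ex) @ rotation(1, ey) @ rotation(2, ez)

def extract_errors(T):
    """First-order inverse: [dx, dy, dz, ex, ey, ez] read from a small-error matrix."""
    ex = 0.5 * (T[2, 1] - T[1, 2])
    ey = 0.5 * (T[0, 2] - T[2, 0])
    ez = 0.5 * (T[1, 0] - T[0, 1])
    return np.array([T[0, 3], T[1, 3], T[2, 3], ex, ey, ez])
```

The residual of the first-order reading is of the order of products of the angular errors (about \(10^{-8}\) rad for errors near \(10^{-4}\) rad), which is far below typical measurement noise.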

After the geometric errors of the A and C axes have been measured at different positions, each error term can be smoothed and fitted to obtain an expression for geometric errors compensation. For example, the C-axis can rotate through 360°, so each error term can be treated as a periodic function of \(\theta_{c}\) with period 2π. The following finite Fourier series can be used to fit each error term:
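Eq. (32) is not reproduced in this text; the sketch assumes the standard finite Fourier series of order \(n\), \(e(\theta) = a_{0} + \sum_{k=1}^{n} (a_{k}\cos k\theta + b_{k}\sin k\theta)\), fitted by linear least squares. The coefficient layout \([a_{0}, a_{1}, b_{1}, \ldots, a_{n}, b_{n}]\) and the function names are assumptions.

```python
import numpy as np

def fourier_design(theta, n):
    """Columns [1, cos(t), sin(t), ..., cos(n t), sin(n t)] for angles theta (rad)."""
    cols = [np.ones_like(theta)]
    for k in range(1, n + 1):
        cols += [np.cos(k * theta), np.sin(k * theta)]
    return np.column_stack(cols)

def fit_fourier(theta, err, n):
    """Least-squares coefficients [a0, a1, b1, ..., an, bn] of one error term."""
    coef, *_ = np.linalg.lstsq(fourier_design(theta, n), err, rcond=None)
    return coef

def eval_fourier(coef, theta):
    """Evaluate the fitted series, e.g. to compensate the error at a commanded angle."""
    n = (len(coef) - 1) // 2
    return fourier_design(theta, n) @ coef
```

Each of the six error terms of an axis is fitted independently; a higher \(n\) captures more harmonics but needs enough samples across the stroke to stay well conditioned.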

4 Experiment

4.1 Inspection of Monocular Camera Measurement Accuracy

The vision measurement system in this work must measure pose with high accuracy. Its measurement accuracy is therefore analyzed by moving individual axes through specified displacements within the workspace of the five-axis welding equipment. The value fed back by the motor encoder is taken as the actual value \(d_{a}\), and the value from visual pose measurement is denoted \(d_{m}\). The accuracy of the vision measurement system is assessed from the difference \(\Delta d\) between \(d_{a}\) and \(d_{m}\).

A single-axis motion experiment was performed on the C-axis: the axis rotates by 2° per step, continuously in one direction through one full revolution, and the angular error \(\Delta d\) is calculated at each sampling point. The actual value \(d_{a}\) is obtained from the change between the encoder readings before and after each step. The value \(d_{m}\) measured by monocular vision is obtained from the change between the camera external parameter matrices before and after each step, i.e., the pose matrix \(M_{2} = \left[ {\begin{array}{*{20}c} {r_{3 \times 3} } & {t_{3 \times 1} } \\ {0_{1 \times 3} } & 1 \\ \end{array} } \right]\) in Eq. (1), and is solved by Eq. (33):

where \(r_{{0_{3 \times 3} }}\) and \(t_{{0_{3 \times 1} }}\) denote the rotation matrix and translation vector of the pose matrix \(M_{2}\) before the step, and \(r_{{1_{3 \times 3} }}\) and \(t_{{1_{3 \times 1} }}\) denote those of the pose matrix \(M_{2}\) after the step; \(\Delta \left( t \right)\) denotes the displacement and \(\Delta \left( r \right)\) the rotation of this movement.
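Eq. (33) itself is missing from this text. A common way to obtain \(\Delta(r)\) and \(\Delta(t)\) from two consecutive extrinsic matrices is to form the relative transform \(M_{2,\text{after}} M_{2,\text{before}}^{-1}\) and take the rotation angle from its trace; the sketch below does exactly that, and the paper's Eq. (33) may be written differently. The function name is hypothetical.

```python
import numpy as np

def relative_motion(r0, t0, r1, t1):
    """Relative rigid motion between two extrinsic poses [r0 | t0] -> [r1 | t1].

    Returns (dr, dt, angle_deg) with [dr | dt] = M2_after @ inv(M2_before).
    """
    dr = r1 @ r0.T                      # rotation between the two target poses
    dt = t1 - dr @ t0                   # accompanying translation
    cos_angle = np.clip((np.trace(dr) - 1.0) / 2.0, -1.0, 1.0)  # guard against rounding
    return dr, dt, np.degrees(np.arccos(cos_angle))
```

The returned angle plays the role of \(d_{m}\) for one 2° step and is compared with the encoder-based \(d_{a}\) to give \(\Delta d\).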

As shown in Fig. 6, the proposed optimized DLT algorithm achieves higher pose measurement accuracy than the traditional DLT algorithm: during single-axis rotation of the C-axis, the angular error of the optimized algorithm stays within 0.0025°, while that of the traditional DLT algorithm is slightly larger. Overall, the optimized DLT algorithm measures pose more accurately than the traditional algorithm and meets the requirements of the parameter identification process for five-axis welding equipment presented in this paper.

4.2 Identification Experiment of Geometric Errors for Rotating Axis

To identify the kinematics parameters accurately, the following experiment was carried out. The five-axis welding equipment is shown in Fig. 7. A Basler monocular camera with a 5-megapixel resolution, a CMOS sensor and a frame rate of 14 fps is used; the target is a \(15 \times 15\) black-and-white checkerboard with \(2 \times 2\,{\text{mm}}\) squares. Each axis is under closed-loop control by a motion controller, servo drive and motor, communicating over an EtherCAT bus, and each axis feeds back its motor position in real time through an incremental photoelectric encoder. The X, Y and Z axes are driven by Panasonic AC servo motors and precision ball screws with a 5 mm pitch, with travel ranges of 425 mm, 375 mm and 220 mm, respectively. The A-axis is driven by a Panasonic AC servo motor through a speed reducer with a 20:1 reduction ratio, and the C-axis is driven by an Akribis direct-drive motor.

In actual operation, the working stroke of the A-axis is ± 25° and that of the C-axis is ± 180°. The A-axis is sampled every 0.5° over its full stroke, and the C-axis every 1° over its full stroke. Because the target must lie within the camera's field of view, the translation axes X, Y and Z are roughly positioned according to the A- and C-axis positions so that the target is imaged clearly. Three items of data are recorded during sampling: the initial pose matrix \(^{a} g_{w} (0)\) at the initial position, the pose matrix \(^{a} g_{w}\) at each sampling point, and the axis components \((x,y,z,\theta_{1} ,\theta_{2} )\) at each sampling point. According to Eq. (8), the initial pose matrix \(^{a} g_{w} (0)\) is a key quantity in the model, so it is computed as the average of multiple measurements to reduce random errors. The identified (actual) kinematics parameters are listed in Table 4, and the ideal and actual screw coordinates are shown in Table 5.
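The text does not say how repeated measurements of \(^{a} g_{w} (0)\) are averaged. Averaging translations is straightforward, but the element-wise mean of rotation matrices is generally not a rotation. One common choice, assumed here, is the chordal mean: average the matrices, then project the result back onto SO(3) with an SVD. Function names are hypothetical.

```python
import numpy as np

def average_pose(rotations, translations):
    """Chordal mean of rotations (projected back onto SO(3)) and mean translation."""
    M = np.mean(np.asarray(rotations, dtype=float), axis=0)   # arithmetic mean leaves SO(3)
    U, _, Vt = np.linalg.svd(M)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])   # keep det = +1
    return U @ D @ Vt, np.mean(np.asarray(translations, dtype=float), axis=0)

def pose_matrix(R, t):
    """Assemble a 4x4 homogeneous pose matrix from R and t."""
    g = np.eye(4)
    g[:3, :3], g[:3, 3] = R, t
    return g
```

For small scatter between repeated measurements, the chordal mean is very close to the geodesic (Karcher) mean and is much cheaper to compute.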

4.2.1 Identification of C-Axis Geometric Errors

During identification of the C-axis geometric errors, the other axes remain at their initial positions, while the C-axis is sampled every 1° over ± 180°. Following the rotating-axis identification method of Sect. 3.2, the pose matrix of the target is measured at each sampling point, and the geometric errors of the C-axis are calculated at each point. Finally, each geometric error of the C-axis is fitted according to Eq. (32) with n = 4. Figure 8 shows the identified values of the C-axis geometric errors at each sampled angle (points) and the fitted curves.

4.2.2 Identification of A-Axis Geometric Errors

The A-axis geometric errors are identified in the same way as those of the C-axis. On this experimental platform, however, if only the A-axis rotates while the X, Y and Z axes stay still, the target leaves the camera's field of view beyond a certain A-axis angle, and its pose can no longer be measured. The A-axis geometric errors are therefore identified only over a small range: a sampling point is recorded every 0.1° within ± 7°, and the geometric errors are calculated at each point. With n = 1, the A-axis geometric errors are fitted according to Eq. (32). Figure 9 shows the identified values of the A-axis geometric errors and their fitted curves.

4.3 Verification of the Identification Accuracy of Geometric Errors

To evaluate the motion accuracy of the five-axis welding equipment before and after geometric errors identification, the relative position error and the relative direction error are defined and used to assess spatial pose accuracy. Figure 10 shows a common spiral-machining trajectory of the five-axis welding equipment in its actual workspace. The trajectory maintains the same pose at every position, and sampling points are selected uniformly along it to compare the accuracy before and after error identification.

For any sampling point \(S_{i}\), the actual pose of the workbench coordinate system {W} relative to the actuator coordinate system {A}, measured by monocular camera imaging, is \((\tilde{R}_{wa}^{i} ,\tilde{T}_{wa}^{i} )\), where \(\tilde{R}_{wa}^{i}\) is the rotation matrix and \(\tilde{T}_{wa}^{i}\) is the position vector. Before geometric errors identification, the theoretical pose of {W} relative to {A} given by the ideal model is \((\overline{R}_{wa}^{i} ,\overline{T}_{wa}^{i} )\). After geometric errors identification, the theoretical pose of {W} relative to {A} given by the actual (identified) model is \((\breve{R}_{wa}^{i} ,\breve{T}_{wa}^{i} )\).

The relative direction errors before and after geometric errors identification are defined as \(\delta \overline{R}_{wa}^{i}\) and \(\delta \breve{R}_{wa}^{i}\), respectively:

where \({\text{v}}\) denotes the mapping of a rotation matrix to its rotation vector, according to the relationship between the Lie group and the Lie algebra.

The relative position errors before and after geometric errors identification are defined as \(\delta \overline{T}\) and \(\delta \breve{T}\), respectively:
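The defining equations of the two error measures are not reproduced in this text. Assuming the common definitions, the direction error at \(S_{i}\) is the magnitude of the rotation vector \(\text{v}(\cdot)\) of the rotation between the model and measured orientations (reported in degrees), and the position error is the Euclidean distance between the measured and model position vectors. The sketch implements the SO(3) logarithm directly; it is valid away from 180°, which is never approached by small errors. Function names are hypothetical.

```python
import numpy as np

def so3_log(R):
    """Rotation vector v(R) = axis * angle of a rotation matrix (valid away from 180 deg)."""
    cos_a = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    angle = np.arccos(cos_a)
    vee = 0.5 * np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])  # sin(angle) * axis
    if angle < 1e-12:
        return vee                       # small-angle limit
    return angle / np.sin(angle) * vee

def relative_errors(R_meas, T_meas, R_model, T_model):
    """Direction error (deg) and position error (units of T) at one sampling point."""
    direction = np.degrees(np.linalg.norm(so3_log(R_model.T @ R_meas)))
    position = np.linalg.norm(np.asarray(T_meas, dtype=float) - np.asarray(T_model, dtype=float))
    return direction, position
```

Evaluating `relative_errors` with the ideal-model poses and then with the identified-model poses at every \(S_{i}\), and taking the mean and maximum, gives the kind of before/after comparison summarized in Table 6.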

Figure 11 shows the relative position error and relative direction error at each sampling point \(S_{i}\) before and after geometric errors identification, and Table 6 lists their average and maximum values. As Table 6 shows, both errors of the five-axis welding equipment are significantly reduced after identification, which indicates that the proposed identification method substantially improves the accuracy of the five-axis welding equipment.

5 Conclusions

In order to deal with the problems of high cost and low efficiency of geometric errors identification of five-axis welding equipment, this paper proposes a new method for rotating axes, based on screw theory and monocular vision.

(1) Based on the structural characteristics of the five-axis welding equipment, this paper proposes an optimized DLT algorithm for monocular-camera pose measurement. The proposed method offers low cost and high accuracy and contributes, to a certain extent, to the automated, efficient calibration of five-axis welding equipment.

(2) Based on screw theory and the above pose measurement system, this paper proposes a geometric errors identification method for the rotating axes of five-axis welding equipment. The sampled experimental results show that, before identification, the average relative position error of the five-axis welding equipment is 0.1472 mm and the average relative direction error is 0.5427°. After identification, the average relative position error falls to 0.0174 mm (a reduction of 88.18%), and the average relative direction error falls to 0.0478° (a reduction of 91.19%). These results verify the accuracy and effectiveness of the identification scheme.

References

Wang, J., Cheng, C., & Li, H. (2020). A novel approach to separate geometric error of the rotary axis of multi-axis machine tool using laser tracker. International Journal of Precision Engineering and Manufacturing, 21, 983–993. https://doi.org/10.1007/s12541-020-00329-5

Qiao, Y., Chen, Y., Yang, J., & Chen, B. (2017). A five-axis geometric errors calibration model based on the common perpendicular line (CPL) transformation using the product of exponentials (POE) formula. International Journal of Machine Tools & Manufacture, 118–119, 49–60. https://doi.org/10.1016/j.ijmachtools.2017.04.003

Yang, S. H., & Lee, K. I. (2022). A dual difference method for identification of the inherent spindle axis parallelism errors of machine tools. International Journal of Precision Engineering and Manufacturing, 23, 701–710. https://doi.org/10.1007/s12541-022-00653-y

Maeng, S., & Min, S. (2020). Simultaneous geometric error identification of rotary axis and tool setting in an ultra-precision 5-axis machine tool using on-machine measurement. Precision Engineering. https://doi.org/10.1016/j.precisioneng.2020.01.007

Liu, W. H. (2018). Generalized actual inverse kinematic model for compensating geometric errors in five-axis machine tools. International Journal of Mechanical Sciences. https://doi.org/10.1016/j.ijmecsci.2018.07.022

Lee, K. I., Jeon, H. K., Lee, J. C., et al. (2022). Use of a virtual polyhedron for interim checking of the volumetric and geometric errors of machine tools. International Journal of Precision Engineering and Manufacturing, 23, 1133–1141. https://doi.org/10.1007/s12541-022-00666-7

Yang, J., & Ding, H. (2016). A new position independent geometric errors identification model of five-axis serial machine tools based on differential motion matrices. International Journal of Machine Tools and Manufacture. https://doi.org/10.1016/j.ijmachtools.2016.02.001

Schwenke, H., Knapp, W., Haitjema, H., Weckenmann, A., Schmitt, R., & Delbressine, F. (2008). Geometric error measurement and compensation of machines—An update. CIRP Annals - Manufacturing Technology, 57(2), 660–675. https://doi.org/10.1016/j.cirp.2008.09.008

Ibaraki, S., & Knapp, W. (2013). Indirect measurement of volumetric accuracy for three-axis and five-axis machine tools: A review. International Journal of Automation Technology, 6(2), 110–124. https://doi.org/10.3929/ethz-a-007593181

Tsutsumi, M., & Saito, A. (2003). Identification and compensation of systematic deviations particular to 5-axis machining centers. International Journal of Machine Tools & Manufacture, 43(8), 771–780. https://doi.org/10.1016/S0890-6955(03)00053-1

Zargarbashi, S., & Mayer, J. (2009). Single setup estimation of a five-axis machine tool eight link errors by programmed end point constraint and on the fly measurement with capball sensor. International Journal of Machine Tools and Manufacture, 49(10), 759–766. https://doi.org/10.1016/j.ijmachtools.2009.05.001

Hong, C., Ibaraki, S., & Oyama, C. (2012). Graphical presentation of error motions of rotary axes on a five-axis machine tool by static R-test with separating the influence of squareness errors of linear axes. International Journal of Machine Tools & Manufacture, 59, 24–33. https://doi.org/10.1016/j.ijmachtools.2012.03.004

Nubiola, A., Slamani, M., Joubair, A., & Bonev, I. A. (2014). Comparison of two calibration methods for a small industrial robot based on an optical CMM and a laser tracker. Robotica, 32(3), 447–466. https://doi.org/10.1017/S0263574713000714

Wang, J. (2019). The identification method of the relative position relationship between the rotary and linear axis of multi-axis numerical control machine tool by laser tracker. Measurement. https://doi.org/10.1016/j.measurement.2018.09.062

Hong, C., & Ibaraki, S. (2013). Non-contact R-test with laser displacement sensors for error calibration of five-axis machine tools. Precision Engineering, 37(1), 159–171. https://doi.org/10.1016/j.precisioneng.2012.07.012

Ibaraki, S., Oyama, C., & Otsubo, H. (2011). Construction of an error map of rotary axes on a five-axis machining center by static R-test. International Journal of Machine Tools and Manufacture, 51(3), 190–200. https://doi.org/10.1016/j.ijmachtools.2010.11.011

Zhou, K., Huang, X., Li, S., Li, H., & Kong, S. (2021). 6-D pose estimation method for large gear structure assembly using monocular vision. Measurement, 183, 109854. https://doi.org/10.1016/j.measurement.2021.109854

Ibaraki, S., & Tanizawa, Y. (2011). Vision-based measurement of two-dimensional positioning errors of machine tools. Journal of Advanced Mechanical Design, Systems, and Manufacturing, 5(4), 315–328. https://doi.org/10.1299/jamdsm.5.315

Liu, W., Li, X., Jia, Z., Yan, H., & Ma, X. (2017). A three-dimensional triangular vision-based contouring error detection system and method for machine tools. Precision Engineering. https://doi.org/10.1016/j.precisioneng.2017.04.016

Gao, H., Shen, F., Zhang, F., et al. (2022). A high precision and fast alignment method based on binocular vision. International Journal of Precision Engineering and Manufacturing, 23, 969–984. https://doi.org/10.1007/s12541-022-00674-7

Wang, H., et al. (2015). A vision-based fully-automatic calibration method for hand-eye serial robot. Industrial Robot, 42(1), 64–73. https://doi.org/10.1108/IR-06-2014-0352

Liu, W., Li, X., Jia, Z., Li, H., Ma, X., Yan, H., et al. (2017). Binocular-vision-based error detection system and identification method for PIGEs of rotary axis in five-axis machine tool. Precision Engineering. https://doi.org/10.1016/j.precisioneng.2017.08.013

Zhang, Z. (1999). Flexible camera calibration by viewing a plane from unknown orientations. In Seventh IEEE International Conference on Computer Vision. IEEE.

Funding

This work is financially supported by the National Natural Science Foundation of China (No. 51975590).

Author information

Contributions

XT established the kinematic model, designed the identification algorithm, and drafted the manuscript. TX carried out the experiments and data processing. HZ provided suggestions and reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Appendix 1. Derivation of Screw Theory

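In the case of a rotary axis, the screw coordinate \({{\varvec{\upxi}}}\) can be expressed as:

\[ {{\varvec{\upxi}}} = \begin{bmatrix} {\mathbf{v}} \\ {{\varvec{\upomega}}} \end{bmatrix}, \quad {\mathbf{v}} = - {{\varvec{\upomega}}} \times {\mathbf{p}} \]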

where \({{\varvec{\upomega}}}\) is the unit direction vector of the rotation axis and \({\mathbf{p}}\) is a point on the rotation axis.
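The following Python/NumPy sketch makes the rotary-axis screw coordinate concrete by building \({{\varvec{\upxi}}}\) from a direction and a point on the axis. It assumes the common ordering \({{\varvec{\upxi}}} = [{\mathbf{v}};\,{{\varvec{\upomega}}}]\) with \({\mathbf{v}} = -{{\varvec{\upomega}}} \times {\mathbf{p}}\). Under this convention, \({\mathbf{q}} = {{\varvec{\upomega}}} \times {\mathbf{v}}\) (introduced later in this appendix) is the foot of the perpendicular. The function name `rotary_screw` and the axis location are hypothetical, illustrative choices.

```python
import numpy as np

def rotary_screw(omega, p):
    """Screw coordinate xi = [v; omega] of a rotary axis (ordering is an assumption).

    omega: direction of the axis (normalised here); p: any point on the axis.
    v = -omega x p, so v is orthogonal to omega by construction.
    """
    omega = np.asarray(omega, dtype=float)
    omega = omega / np.linalg.norm(omega)
    p = np.asarray(p, dtype=float)
    v = -np.cross(omega, p)
    return np.concatenate([v, omega])

# Illustrative C-axis parallel to z, passing through (100, 50, 0) mm
xi = rotary_screw([0.0, 0.0, 1.0], [100.0, 50.0, 0.0])
print(xi)  # first three entries are v, last three are omega
```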

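The exponential \(e^{{\hat{\xi }\theta }}\) of the screw coordinate of a rotary axis is expressed as follows:

\[ e^{\hat{\varvec{\upxi}}\theta} = \begin{bmatrix} e^{\hat{\varvec{\upomega}}\theta} & \left( {\mathbf{I}} - e^{\hat{\varvec{\upomega}}\theta} \right)\left( {{\varvec{\upomega}}} \times {\mathbf{v}} \right) + {{\varvec{\upomega}}}{{\varvec{\upomega}}}^{T} {\mathbf{v}}\theta \\ {\mathbf{0}}^{T} & 1 \end{bmatrix} \]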

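where \(e^{\hat{\varvec{\upomega}}\theta} = {\mathbf{I}} + \hat{\varvec{\upomega}}\sin\theta + \hat{\varvec{\upomega}}^{2}(1 - \cos\theta)\) is given by Rodrigues' formula, \(\hat{\varvec{\upomega}}\) is the skew-symmetric matrix of \({{\varvec{\upomega}}}\), and \(\theta\) is the rotation angle of the axis.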
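The rotary-axis exponential can be checked numerically. The sketch below assumes the standard closed form from screw theory, \(e^{\hat{\varvec{\upxi}}\theta} = \begin{bmatrix} e^{\hat{\varvec{\upomega}}\theta} & ({\mathbf{I}} - e^{\hat{\varvec{\upomega}}\theta})({\varvec{\upomega}} \times {\mathbf{v}}) + {\varvec{\upomega}}{\varvec{\upomega}}^{T}{\mathbf{v}}\theta \\ {\mathbf{0}}^{T} & 1 \end{bmatrix}\). It takes \(e^{\hat{\varvec{\upomega}}\theta}\) from Rodrigues' formula and assumes the ordering \({{\varvec{\upxi}}} = [{\mathbf{v}};\,{{\varvec{\upomega}}}]\). The names `hat`, `rodrigues` and `exp_rotary_screw` are hypothetical helpers, and the axis values are illustrative.

```python
import numpy as np

def hat(w):
    """3x3 skew-symmetric matrix such that hat(w) @ x == np.cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def rodrigues(omega, theta):
    """e^{hat(omega) theta} = I + hat(omega) sin(theta) + hat(omega)^2 (1 - cos(theta)), |omega| = 1."""
    W = hat(omega)
    return np.eye(3) + W * np.sin(theta) + W @ W * (1.0 - np.cos(theta))

def exp_rotary_screw(v, omega, theta):
    """4x4 exponential of a rotary screw [v; omega] (general form, omega^T v term kept)."""
    v = np.asarray(v, dtype=float)
    omega = np.asarray(omega, dtype=float)
    R = rodrigues(omega, theta)
    t = (np.eye(3) - R) @ np.cross(omega, v) + np.outer(omega, omega) @ v * theta
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

# z-axis through (100, 50, 0): v = -omega x p = (50, -100, 0); rotate by 90 degrees
T = exp_rotary_screw([50.0, -100.0, 0.0], [0.0, 0.0, 1.0], np.pi / 2)
```

A rotation leaves every point of its own axis in place. Mapping a point on the axis, here (100, 50, 0), through `T` and getting the same point back is therefore a quick sanity check on the sign convention of \({\mathbf{v}}\).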

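In the case of purely rotational motion, when \(\left\| {\mathbf{v}} \right\| \ne 0\), \({{\varvec{\upomega}}}\) is orthogonal to \({\mathbf{v}}\) (since \({\mathbf{v}} = - {{\varvec{\upomega}}} \times {\mathbf{p}}\)), so \({{\varvec{\upomega}}}^{T} {\mathbf{v}} = 0\); when \(\left\| {\mathbf{v}} \right\| = 0\), \({{\varvec{\upomega}}}^{T} {\mathbf{v}} = 0\) still holds trivially. Therefore, the term containing \({{\varvec{\upomega}}}^{T} {\mathbf{v}}\) vanishes, and the following relation is obtained:

\[ e^{\hat{\varvec{\upxi}}\theta} = \begin{bmatrix} e^{\hat{\varvec{\upomega}}\theta} & \left( {\mathbf{I}} - e^{\hat{\varvec{\upomega}}\theta} \right)\left( {{\varvec{\upomega}}} \times {\mathbf{v}} \right) \\ {\mathbf{0}}^{T} & 1 \end{bmatrix} \]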

Let \({\mathbf{q}} = {{\varvec{\upomega}}} \times {\mathbf{v}}\). Geometrically, \({\mathbf{q}}\) is the foot of the perpendicular from the origin to the rotation axis. Thus, \({\mathbf{q}}\) represents the position of the rotation axis, while \({{\varvec{\upomega}}}\) represents its direction.
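The geometric meaning of \({\mathbf{q}}\) can be verified numerically. With \({\mathbf{v}} = -{{\varvec{\upomega}}} \times {\mathbf{p}}\) (an assumed convention), \({{\varvec{\upomega}}} \times {\mathbf{v}}\) reduces to \({\mathbf{p}} - ({{\varvec{\upomega}}}^{T}{\mathbf{p}}){{\varvec{\upomega}}}\). This is the point of the axis closest to the origin, and it does not depend on which point \({\mathbf{p}}\) of the axis is used. The short NumPy check below uses an illustrative tilted axis; `foot_of_perpendicular` is a hypothetical name.

```python
import numpy as np

def foot_of_perpendicular(omega, p):
    """q = omega x v with v = -omega x p (assumed convention)."""
    omega = np.asarray(omega, dtype=float)
    omega = omega / np.linalg.norm(omega)
    v = -np.cross(omega, np.asarray(p, dtype=float))
    return np.cross(omega, v)

omega = np.array([1.0, 1.0, 0.0]) / np.sqrt(2.0)  # illustrative tilted axis direction
p1 = np.array([3.0, 1.0, 2.0])                     # one point on the axis
p2 = p1 + 7.5 * omega                              # another point on the same axis

q1 = foot_of_perpendicular(omega, p1)
q2 = foot_of_perpendicular(omega, p2)
# Closest point of the axis to the origin: p - (omega . p) omega
expected = p1 - np.dot(omega, p1) * omega
```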

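The exponential \(e^{{{\hat{\mathbf{\xi }}}\theta }}\) of a rotary axis can therefore be simplified as follows:

\[ e^{\hat{\varvec{\upxi}}\theta} = \begin{bmatrix} e^{\hat{\varvec{\upomega}}\theta} & \left( {\mathbf{I}} - e^{\hat{\varvec{\upomega}}\theta} \right){\mathbf{q}} \\ {\mathbf{0}}^{T} & 1 \end{bmatrix} \]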
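The following NumPy sketch implements the simplified rotary-axis transform \(\begin{bmatrix} e^{\hat{\varvec{\upomega}}\theta} & ({\mathbf{I}} - e^{\hat{\varvec{\upomega}}\theta}){\mathbf{q}} \\ {\mathbf{0}}^{T} & 1 \end{bmatrix}\), assuming a unit \({{\varvec{\upomega}}}\) and \({\mathbf{q}}\) on the axis. `exp_rotary_q` and the numbers are illustrative. The check confirms that points on the axis stay fixed, while an off-axis point rotates about the axis at a constant radius.

```python
import numpy as np

def hat(w):
    """3x3 skew-symmetric matrix such that hat(w) @ x == np.cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def exp_rotary_q(omega, q, theta):
    """[[R, (I - R) q], [0, 1]] with R from Rodrigues' formula; omega unit, q on the axis."""
    W = hat(omega)
    R = np.eye(3) + W * np.sin(theta) + W @ W * (1.0 - np.cos(theta))
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = (np.eye(3) - R) @ q
    return T

omega = np.array([0.0, 0.0, 1.0])
q = np.array([100.0, 50.0, 0.0])                 # illustrative axis position
T = exp_rotary_q(omega, q, np.deg2rad(30.0))

on_axis = np.array([100.0, 50.0, 25.0, 1.0])     # any point on the axis
off_axis = np.array([110.0, 50.0, 0.0, 1.0])     # 10 mm away from the axis
moved = T @ off_axis                             # rotated 30 degrees about the axis
```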

Regarding the translational axis, the screw coordinate \({{\varvec{\upxi}}}\) can be expressed as:

\[ {{\varvec{\upxi}}} = \begin{bmatrix} {\mathbf{v}} \\ {\mathbf{0}} \end{bmatrix} \]

where \({\mathbf{v}}\) is the unit direction vector of the translational axis.

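The exponential \(e^{{{\hat{\mathbf{\xi }}}\theta }}\) of a translational axis is expressed as:

\[ e^{\hat{\varvec{\upxi}}\theta} = \begin{bmatrix} {\mathbf{I}} & {\mathbf{v}}\theta \\ {\mathbf{0}}^{T} & 1 \end{bmatrix} \]

where \(\theta\) is the displacement along the translational axis.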
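For completeness, the translational exponential \(\begin{bmatrix} {\mathbf{I}} & {\mathbf{v}}\theta \\ {\mathbf{0}}^{T} & 1 \end{bmatrix}\) is a pure shift by \(\theta\) along \({\mathbf{v}}\). The minimal NumPy sketch below uses a hypothetical helper `exp_translational` and an illustrative 12.5 mm move. It normalises \({\mathbf{v}}\) defensively, although the text already takes it to be a unit vector.

```python
import numpy as np

def exp_translational(v, theta):
    """[[I, v*theta], [0, 1]]: translation by theta along the (normalised) direction v."""
    v = np.asarray(v, dtype=float)
    T = np.eye(4)
    T[:3, 3] = v / np.linalg.norm(v) * theta
    return T

T_x = exp_translational([1.0, 0.0, 0.0], 12.5)      # e.g. X axis moved by 12.5 mm
point = T_x @ np.array([0.0, 2.0, 3.0, 1.0])         # homogeneous point
```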

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, X., Zhou, H. & Xu, T. A Geometric Errors Identification Method for the Rotating Axis of Five-Axis Welding Equipment. Int. J. Precis. Eng. Manuf. 24, 1355–1367 (2023). https://doi.org/10.1007/s12541-023-00829-0

DOI: https://doi.org/10.1007/s12541-023-00829-0