Abstract

Grinding process modeling represents a great challenge due to its stochastic nature. The uncertainty factor of grinding technology is mainly attributable to the undefined grain morphology, with the influence of this aspect becoming more pronounced in a dry configuration. Even though grinding has always used lubricants, nowadays the reduction or complete elimination of this element could mean a significant reduction in environmental pollution. Many modeling approaches have been used in literature to investigate phenomena related to grinding but each exhibits some disadvantages. In this paper a hybrid FEM—ML approach is proposed to forecast forces generated by the action of a single grain in dry conditions, overcoming the main modeling limitations observed to date. Experiments and force measurements were performed on a CNC surface grinding machine using sintered aluminum oxide grains of size 60. FEM simulations were developed in DEFORM 3D to predict grinding forces and increase the data set. ML algorithms were proposed to increase model prediction productivity and optimize the control of process parameters.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Amongst the various finishing technologies, grinding is one of the most widely used processes, usually chosen to achieve high surface quality characteristics on hard materials. Obtaining such finishing levels requires grinding processes that are characterized by a very low removal rate. For this reason, grinding provides the highest specific energy of all cutting processes. Grinding is not only characterized by very low cutting depths, but also by the prevalence of negative rake angles, which amplifies this phenomenon. Heat generated by grinding often leads to thermal burns and product rejection, especially in a dry configuration. Dry processes, however, could lead to a cleaner manufacturing route with substantial reductions in environmental pollution and production costs. Therefore, more in-depth investigation is needed to predict material behavior under dry abrasive process configurations. Grinding forces and temperatures are generally considered to exhibit a threshold value below which the process can be performed with nominal operating values without generating thermal defects [1]. Heat-related problems require the ability to predict the process energy while varying materials and process parameters. Many researchers have dealt with this challenge using different modeling approaches. To date, models can be grouped into two different categories:

-

Physical models (fundamental analytical, finite element, kinematic, molecular dynamics, and regression models)

-

Empirical and heuristic models (regression, artificial neural net, and rule-based models)

At the simplest level, physical models focus on microscopic grinding phenomena and are generally implemented to design the process and avoid manufacturing defects. Empirical and heuristic models instead analyze the macroscopic behavior of the grinding system and are much more likely to be used to control the process [2]. Empirical and semi-empirical models have seen widespread uptake, providing a lot of information in relation to a given application; however, they generally only work for a specific parameter and material set. Experiments are therefore usually required to determine calibration coefficients, which are often difficult to obtain [3,4,5]. Meanwhile, physical models require previous knowledge for analysis of results, as well as long computational times, but allow microscopic phenomena behind the process to be identified. Amongst the various physical approaches, grinding FEM models have been implemented following two different approaches, which can be classified as micro- and macro-scale models [6]. Macro-scale models consider the interaction between the whole grinding wheel and the material from a thermal point of view [7,8,9]. They are focused on forecasting thermal burns by considering a threshold value of temperature starting from process power measurements and assuming a certain value of energy partition, which represents the heat absorbed by the workpiece. Micro-scale approaches instead focus on the action of a single grain on the workpiece, analyzing mechanical behavior during ploughing and cutting mechanism to provide information about the forces generated during the process due to the material and kinematics [10,11,12]. To deal with the stochastic nature of abrasive grains in micro-scale approaches, grinding force and power prediction modeling are often based on the probabilistic distribution of the undeformed chip thickness as a function of the kinematic conditions, material properties and wheel microstructure [13, 14]. Force modeling at each grain is then developed, deducing the dynamic grain density from the static grain density, and considering kinematic effects such as shadows generated by active grains and dynamic effects due to local grain deflection. In the kinematic modeling approach, statistical analysis of the grain shape is generally performed considering the apex, rake, wedge and opening angles, followed by the creation of a database of abrasive grains that is mathematically designed by considering the grains, bond and pore volumetric fraction [15, 16].

Apart from the nondeterministic nature of the grinding wheel geometry, other aspects such as machine tool vibration, abrasive tool wear, chip formation and undefined contacts make theoretical modeling of abrasive processes difficult [17]. Hence, finishing processes often involve a large gap between process conditions and human understanding, for which it is worth investigating grinding through machine learning (ML) and deep learning (DL), together with FEM analysis. In this case, modeling is assisted by real-time monitoring of the process through specific and accurate sensors capable of consistently revealing the physical phenomena taking place. There are, however, some key technologies to be improved. Restricted data volume and difficulties in data unification and collection in grinding, with a lack of advanced integrated multi-sensor online monitoring equipment, still limit ML application to grinding [18]. In order to overcome the limits of each approach and thus combine the reliability of FEM analysis with the productivity of ML techniques, a hybrid model was developed in which the advantages of FEM and ML were combined within cutting [19, 20] and grinding [21] force prediction models. In some cases, the ML database made up of experimental data can be enlarged by introducing data calculated through FEM simulations, with the possibility of forecasting process outcomes that are difficult to measure experimentally [22]. The hybrid method can overcome the limits of single approaches adopted for modeling grinding, handling problems relating to lack of experimental data by introducing FEM model outcomes into the data set, making the strategy faster by avoiding time-consuming simulations and taking advantage of validation based on experimental data. Once the manufacturing process is optimized, it is also possible to develop digital twin models for grinding that include the machine tool and cutting process [23].

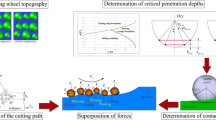

In this paper, a FEM simulation was developed in DEFORM 3D to forecast grinding forces generated by the interaction between a single grain and workpiece material in dry conditions. FEM simulations were carried out while varying the cut depth, feed rate and cutting speed, with statistical analysis performed based on the geometry of real sintered aluminum oxide grains to improve model precision. A combined database deriving from experimental outcomes and FEM simulations was processed with Artificial Neural Networks (ANN) and Decision Trees (a Random Forest in this specific case) to increase prediction productivity and verify the accuracy and applicability of different algorithms to the cutting operation. Moreover, the present work applied the Synthetic Minority Over-sampling Techniques (SMOTE) function, which can repopulate database balancing data with inhomogeneous entities and fill in gaps due to missing values. Observed data were compared with predicted data and the accuracy of grinding force forecasting was evaluated through performance index calculation. A concise explanation of the concept and the procedure is shown schematically in Fig. 1.

2 Materials and Methods

2.1 Experimental and Numerical Data Sources

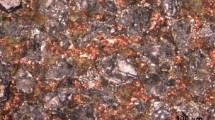

Experiments were performed in dry conditions on a CNC tangential grinding machine, applying the grain on a support as shown in Fig. 2. Cutting force components were measured using a Kistler 9255C dynamometer. Sintered aluminum oxide abrasive grains with a FEPA size of 60 were used for experiments. Case-hardened 27MnCr5 steel was employed as the workpiece material, characterized by a hardness of 62 HRC to a depth of at least 1 mm after heat treatment. Single grain grinding tests were performed using the process parameters reported in Table 1. Force signals acquired by the dynamometer were then processed in MATLAB® to extract the maximum cutting load during the interaction between grain and material.

Real grain geometries were acquired through computed tomography, with a group of reference grains imported into STL editor software Magics Materialize to measure and statistically analyze their geometric characteristics (Fig. 3a, b). A defined equivalent geometry, representative of the class of grain material and size, was designed with a rake angle of 68°, tip radius of 0.1 mm and total length and width of 0.55 mm and 0.6 mm, respectively. The defined equivalent grain geometry was then imported as a tool into a thermomechanical FEM simulation implemented in DEFORM 3D, adopting a Lagrangian incremental formulation. The grain was modeled as a rigid body, while the workpiece was instead represented as a deformable body due to the very high difference in hardness between the grain and workpiece. The workpiece was discretized with tetrahedral elements distributed with smallest dimensions in the interaction zone to model the depth of cut with at least three elements. The workpiece mesh was set as an absolute mesh, while the grain mesh was set as a relative mesh with a size ratio of 20. Dry contact conditions were considered with a constant Coulomb friction coefficient of 0.2. Movement was assigned to the grain. Zero velocity boundary conditions were applied to the lower workpiece surface to maintain its position fixed in space.

The Johnson–Cook (J&C) model was employed to describe material flow according to Eq. (1).

A hardened steel based on split Hopkinson pressure bar (SHPB) technology [24], with the same hardness as case-hardened 27MnCr5 (62 HRC), was employed as the reference material. The Cockroft-Latham model was used to predict the fracture criterion for chip formation with the material critical value set to 0.22.

Simulations were implemented using the process parameters shown in Table 2.

2.2 ML Structure for Prediction of Grinding Forces

2.2.1 Theoretical Background of ML Algorithms

Artificial neural networks (ANNs) are popular statistical methods that can explore the relationships between variables with high accuracy. ANNs are one of the most famous groups of ML algorithms and are the basis of the main Deep Learning architectures. Essentially, the structure of an ANN is computer-based and consists of several simple processing elements operating in parallel. ANNs are formed by layer nodes, each node connecting an initial layer called the input layer, one or more hidden layers and a final layer called the output layer (see Fig. 4).

The fundamental component of ANNs is the node or neuron. Many neurons, arranged in an interconnected structure, form a neural network; each neuron is connected to the inputs and outputs of the others. The connections are represented by a matrix of weights w. Neurons receive weighted values as input, add them together and use an activation function to process the result. The data summed up in this way, to which a bias b has also been added, exceeds a certain threshold and, as a result, the activation function transforms by increasing the values stored in the node; otherwise, it extinguishes the signal by reducing it or even cancelling it. The temporary result is ready to be sent to the next connection, until each level is completed, and a result called output is obtained. This process is called feedforward network. Equations (2) and (3) define the mathematical model for the regression formula in each node:

In the equation below, Eq. 4 defines the error function J (cost or loss function, or more commonly, mean square error MSE function), where:

-

i represents the index of the sample

-

θ0, θ1 are the parameters

-

hθ(x) is the hypothesis or predicted output, with \({h}_{\theta }\left(x\right)={\theta }_{0}+{\theta }_{1}\left(x\right)\)

-

y(x) is the actual value

-

m is the number of the sample

$$J\left({\theta }_{0},{\theta }_{1}\right)=\frac{1}{2m}\sum_{i=1}^{m}{\left({h}_{\theta }\left({x}^{\left(i\right)}\right)-{y}^{\left(i\right)}\right)}^{2}$$(4)

Using this equation, the accuracy of the neural network's prediction process can be determined. The aim of the computational architecture of the feedforward neural network is to minimise the MSE by ensuring that the function J, through gradient descent, reaches the convergence point or a local minimum. In this paper, the application of a feedforward neural network with backpropagation algorithm was considered. Hence, the neural network will be applied here to forecast the grinding process. In this study, the experimental and simulation data will be utilized to train the neural network. Then, the implemented neural network algorithm of the force model will be adopted to predict tangential and normal components of the grinding force [20, 25, 26].

Decision Trees are a very common set of supervised ML algorithms. They are very famous because they can: handle mixed types of features and predictors, with very little pre-processing of the former; ignore redundant features and select only the relevant ones; operate without having to make complex changes to hyper-parameters; visualise the predictive process as a set of recursive rules arranged in a tree with branches and leaves (see Fig. 5a), thus offering ease of interpretation. Using a sample of observations as a starting point, the algorithm goes back to the rules that generated the output classes (or numerical values, in the case of a regression problem) by dividing the input matrix into smaller and smaller partitions, until the process triggers a stopping rule. To determine how to perform the splits in a decision tree, various statistical measurements are used: Gini heterogeneity index, information gain and variance reduction.

Along with Decision Trees, one has to consider their extended evolution: the Random Forest RF (see Fig. 5b). These ML algorithms are an ensemble of decision trees and allow numerous calculations to be replicated between the various decision trees. The availability of this ensemble of decision trees, trained individually and then merged together, allows for more stable, accurate and robust predictions than the single decision tree.

Random Forests have been applied to experimental and simulation data to forecast grinding forces [25]. One of the most significant advantages of ANN and RF models is that they can capture the non-linear interaction between the features and target in the forecasting of grinding processes. The results obtained by the two models have been assessed and compared.

The ML model architecture considered in this paper involves the following steps:

-

1.

Data pre-processing.

-

2.

Data oversampling using the Synthetic Minority Over-sampling Technique (SMOTE).

-

3.

Train-test splitting of dataset.

Application of ML models for prediction of grinding forces was performed with

-

4.

The Neural Network and Bayesian – Regularization algorithm to find the best and most robust solution.

-

5.

The Random Forest and Least Squares Boosting methods to fit the regression ensemble.

In data science, the performance of an algorithm is affected by data pre-processing and handling. The performance of an algorithm can be increased and made more robust through the use of feature engineering. This process provides global analysis of a dataset, feature selection, handling missing values, handling outliers and feature scaling. Table 3 summarizes the main statistical values for the considered dataset:

Analysis of the main statistical values is useful to correctly select the features for training of the ML algorithms and to ensure that oversampling of the data through SMOTE does not lead to the presence of values outside the range of application of process parameters, considering both parts relating to experiments and FEM simulation. Amongst the various methods for feature selection, use of the correlation matrix was considered with Heatmap in the present work. This gives the relationship between dependent and independent features by Spearman's rank correlation coefficient, as shown in Eq. 5:

where \({r}_{s}\) denotes the usual Pearson correlation coefficient, but applied to the rank variables, \(cov\left({rg}_{X},{rg}_{Y}\right)\) is the covariance of the rank variables, \({\sigma }_{{rg}_{X}}{\sigma }_{{rg}_{Y}}\) are the standard deviations of the rank variables.

The heatmap of the correlation matrix is shown in the Fig. 6. Amongst the various process parameters, the depth of cut was the variable most closely related to the cutting forces (Spearman index in both cases greater than 0.5) and, consequently, the one with the greatest influence on the development of forces.

Within the considered dataset, outliers were considered as points more than three standard deviations from the mean. Within the samples considered in the dataset, it was possible to identify only one outlier amongst the given values of tangential force, as highlighted in Fig. 7.

Feature scaling is a technique used to resize and standardise the field of features of data. This method through standardization or mean-normalization can be an important pre-processing step for many machine learning algorithms. This can be useful to ensure that the MSE function is able to reduce prediction errors more effectively and that the algorithm converges correctly and quickly. In this work the mean – normalization method was proposed for data features (depth of cut, cutting speed, and feed rate) scaling because the distribution of data does not follow a Gaussian distribution and the reference Eq. 6 was reported below, where x is an original value, x’ is the normalized value:

2.2.2 Novel Approach Using the Synthetic Minority Over-Sampling Technique

The Synthetic Minority Over-Sampling Technique (SMOTE) and its function developed in MATLAB® are based on [27, 28]. This function provides new samples based on input data and a k-nearest neighbor (KNN) approach. If multiple classes are given as input, only neighbours within the same class are considered. This function can be used to over-sample minority classes in a dataset to create a more balanced dataset, as was done for the dataset considered in this paper. SMOTE is an oversampling method where the synthetic observations are created for the minority class. The below-given diagram represents the SMOTE procedure (see Fig. 8):

For this paper, the implementation of the SMOTE technique for all the dataset was carried out, after an optimisation process, considering an amount of oversampling N equal to 3 and a number of nearest neighbours k to consider equal to 8. An example of the application of the SMOTE technique to oversample tangential force values is shown in the Fig. 9. The possibility of locating outliers was also considered for the oversampled dataset.

The train-test dataset separation is a method for assess the goodness of a ML algorithm. The technique initially considers a dataset and splitting it into two subsets. The first set is used to train the model and is referred to as the training dataset. The second set is used to validate the training ML model. The objective is to estimate the performance of the machine learning model on new data, which was not used to train the model. To avoid a result with an over-fitting prediction, we can perform something called cross-validation. In this paper, a K-Fold Cross Validation was used. In K-Folds Cross Validation data were divide into k folds. K−1 folds were applied to fit the data and leave the last fold as a partition only for test data. Finally, the operations are concluded by averaging the model over the various subsets (see Fig. 10).

The Test Dataset part is necessary to validate the accuracy and robustness of the ML algorithms considered.

2.3 Application of ML Models

The following section introduces the Neural Network with Bayesian – Regularization algorithm and Random Forest by the application of Least Square Boosting method, which were used as a technique to perform machine learning experiments.

2.3.1 Neural Network and Bayesian: Regularization Algorithm

In general, a backpropagation algorithm trains a feedforward network. In ANNs, in order to optimise the MSE function and, consequently, achieve a low error and avoid also to overfit the forecasting, some regularisation procedures are used with the backpropagation training algorithm. In this article, among the various regularisation methods, Bayesian Regularisation BR has been chosen. [29]. The BR framework for neural networks is based on the probabilistic interpretation of network parameters. The network with trainbr function was trained in MATLAB®. In addition, an automated procedure was implemented which, by proceeding iteratively and evaluating the root-mean-square error in the training set and the test set, was able to identify the optimal number of neurons and hidden layers for the chosen neural network configuration. Using the proposed ML architecture, an ANN with five hidden layers and forwards neurons 23/23/21/21/19 for each layer learned with the hybrid data sources inclusive of train—test splitting with five k-folds was built. Instead of using the original data, the data was pre-processed with a mean-normalization feature scaling in the input layer. The ML model was trained with 1500 iterations for each fold (Table 4).

2.3.2 Random Forest and Least Square Boosting

Random Forest (RF) regression uses an ensemble of unpruned decision trees, each grown using a bootstrap sample of the training data, and randomly selected subsets of predictor variables as candidates for splitting tree nodes. The motivation is to combine several weak models to produce a powerful ensemble to optimise accuracy over a single tree. In MATLAB®, Least-squares boosting (LSBoost) fits regression ensembles to minimize mean-squared error. The development of the relationship between the grinding process parameters and cutting forces RF model was carried out using MATLAB®. Some parameters such as method, maximal number of decision splits and minimum number of leaf node observations were optimised in the random forest model upon the minimisation of the mean square error (MSE). In this paper, after optimisation, the tuning parameters used for developing the RF regression model is listed in Table 5:

3 Results and Discussion

The configuration parameters presented in Table 4 were used to determine the best network structure of the ANN prediction model. The ANN algorithm used all input data for model training and validation via the k-fold technique using Bayesian regularization backpropagation. The performance of an ANN model depends on the number of hidden layers in the ANN network structure. An increase in the number of hidden layers has a direct impact on modeling time requirements. Determining the number of hidden layers and nodes during training was based on a trial-and-error approach using the minimum number of iterations required to achieve the necessary performance goal, which in the present case was set to the minimum mean square error. Training was stopped once the error was reduced to below the performance goal. Upon completion of the training stage, the network was tested with the validation set.

Figure 11 shows a comparison between the actual grinding force values y and the predicted results yhat based on neural network analysis of the entire dataset. The black line represents perfect prediction, while the red dots indicate the observed error between the predicted and actual values. The smaller the error, the smaller the observations deviated from the black line. For both tangential and normal forces, predicted values tended to be very close to the actual values. The low deviation between actual and predicted values obtained with the ANN architecture is highlighted in Fig. 11 a, where the residuals are plotted (y − yhat). Most observations were close to the perfect prediction line (i.e., the black dotted line with a residual value of zero) or were within an acceptable prediction range (bounds with at least 95% accuracy). The predicted values calculated with the neural network regression model were found to be close to the measured values. The calculated value of R, the correlation between the predicted and observed values, was 0.97064, suggested a satisfactory fit of the model, as shown Fig. 12.b where the black dotted line represents perfect prediction, the blue line indicates the regression equation fitting the predicted values to the true values and the black dots represent the considered observations.

The RF model could rank the predictors (wheel speed, feed rate, and cut depth in the present case) based on their importance. Figure 13 shows the importance of each predictor, where it can be seen that the cut depth had a higher correlation with material removal than the others, in line with the correlation matrix heatmap in Fig. 6.

The robustness of the developed random forest model was evaluated by identifying the deviation of observed values in the validation dataset from those predicted by the model. Figure 14 shows a comparison of the actual grinding force values y and the predicted results yhat from RF analysis of the entire dataset. The low deviation between actual and predicted values obtained with the RF architecture is highlighted in Fig. 15a, where the residuals are plotted. Most observations were again close to the perfect prediction line (i.e., the black dotted line with a residual value of zero) or were within an acceptable prediction range (bounds with at least 95% accuracy). The calculated value of R based on the fitted regression line was 0.9671, as shown in Fig. 15b, which also showed that the fit of the RF model was good.

Further improvements will focus on limiting overfitting and improving the performance and robustness of ML regression models for abrasive processes. A larger reference dataset, including different process parameters and configurations, will be developed. The possibility of evaluating other features available through FEM simulations, such as heat generation, will also be further pursued. Both the neural network and random forest algorithm can predict a new dataset with updated values of grinding forces. This would require a new input dataset, which would then be applied to the previously trained forecasting procedures. Only in this way can new performance values be achieved.

4 Conclusions

This paper illustrates a comprehensive procedure that merges data from experiments and simulations by applying two ML regression techniques to verify the applicability of different ML algorithms in predicting grinding forces. The achieved outcomes demonstrate the practicality of this approach in developing a model for the prediction of grinding forces in dry conditions. Based on the developed regression models, the following generalized conclusions can be drawn:

-

The predictions of the two ML models were in agreement with the data collected in experimental and FEM simulation phases with an accuracy of about 0.97.

-

Compared to experiments and FEM simulation, the two models proved to be significantly less costly and time-consuming.

-

A novel global approach for forecasting grinding forces was implemented by combining experimental and simulation data, a SMOTE function for resampling the original dataset and a comparative procedure with two ML techniques.

-

In order to reduce overfitting, it was important to take care during data pre-processing and oversampling phases, focusing attention on finding the optimal initial conditions for modeling the algorithm.

-

The two models achieved similar accuracies. In particular, the model using ANNs was easy to interpret and provided the possibility of automatically obtaining a wide variety of results, which were easy to manipulate. On the other hand, this model involved a very elaborate phase to find the optimal conditions in order to implement a robust and accurate model. The RF model proved to be faster than the neural networks and involved a less elaborate optimization phase. The programming interface did not, however, allow as wide and immediate access to the results as that of ANNs.

-

Both models proved to be accurate and robust, and therefore suitable for optimizing process parameters to predict cutting forces in a highly non-linear process such as grinding. They were flexible to variations in process and grinding wheel parameters and allowed excellent generalization and extension to a wide range of processes involving cutting and material removal.

References

Yuan Zhang, F., Zheng Duan, C., Jie Wang, M., & Sun, W. (2018). White and dark layer formation mechanism in hard cutting of AISI52100 steel. Journal of Manufacturing Processing, 32, 878–887. https://doi.org/10.1016/j.jmapro.2018.04.011

Brinksmeier, E., et al. (2006). Advances in modeling and simulation of grinding processes. CIRP Annals—Manufacturing Technology, 55(2), 667–696. https://doi.org/10.1016/j.cirp.2006.10.003

Mishra, V. K., & Salonitis, K. (2013). Empirical estimation of grinding specific forces and energy based on a modified werner grinding model. Procedia CIRP, 8, 287–292. https://doi.org/10.1016/j.procir.2013.06.104

Patnaik Durgumahanti, U. S., Singh, V., & Venkateswara Rao, P. (2010). A New Model for Grinding Force Prediction and Analysis. International Journal of Machine Tools and Manufacture, 50, 231–240. https://doi.org/10.1016/j.ijmachtools.2009.12.004

Aslan, D., & Budak, E. (2014). Semi-analytical force model for grinding operations. Procedia CIRP, 14, 7–12. https://doi.org/10.1016/j.procir.2014.03.073

Doman, D. A., Warkentin, A., & Bauer, R. (2009). Finite element modeling approaches in grinding. International Journal of Machine Tools and Manufacture, 49(2), 109–116. https://doi.org/10.1016/j.ijmachtools.2008.10.002

Mamalis, A. G., Kundrák, J., Manolakos, D. E., Gyáni, K., & Markopoulos, A. (2003). Thermal modelling of surface grinding using implicit finite element techniques. International Journal of Advanced Manufacturing Technology, 21(12), 929–934. https://doi.org/10.1007/s00170-002-1410-3

Chryssolouris, G., Tsirbas, K., Salonitis, K., & Systems, M. (2005). An analytical, numerical, and experimental. Brockhoff, 1999, 9.

Foeckerer, T., Zaeh, M. F., & Zhang, O. B. (2013). A three-dimensional analytical model to predict the thermo-metallurgical effects within the surface layer during grinding and grind-hardening. International Journal of Heat and Mass Transfer, 56(1–2), 223–237. https://doi.org/10.1016/j.ijheatmasstransfer.2012.09.029

Doman, D. A., Bauer, R., & Warkentin, A. (2009). Experimentally validated finite element model of the rubbing and ploughing phases in scratch tests. Proceedings of the Institution of Mechanical Engineers, Part B, 223(12), 1519–1527.

Chena, X., & Öpözb, T. T. (2010). Simulation of grinding surface creation - A single grit approach. Advances in Materials Research, 126–128, 23–28.

Lei Zhang, X., Yao, B., Feng, W., Huang Shen, Z., & Meng Wang, M. (2015). Modeling of a virtual grinding wheel based on random distribution of multi-grains and simulation of machine-process interaction. Journal of Zhejiang University Science A, 16(11), 874–884. https://doi.org/10.1631/jzus.A1400316

Hecker, R. L., Liang, S. Y., & Jian, X. (2007). Grinding force and power modeling based on chip thickness analysis (pp 449–459). https://doi.org/10.1007/s00170-006-0473-y.

Hecker, R. L., Ramoneda, I. M., & Liang, S. Y. (2003). Analysis of wheel topography and grit force for grinding process modeling. Transactions of the North American Manufacturing Research Institution SME, 31, 281–288.

Klocke, F., et al. (2016). Modelling of the grinding wheel structure depending on the volumetric composition. Procedia CIRP, 46, 276–280. https://doi.org/10.1016/j.procir.2016.04.066

Klocke, F., Wrobel, C., Rasim, M., & Mattfeld, P. (2016). Approach of characterization of the grinding wheel topography as a contribution to the energy modelling of grinding processes. Procedia CIRP, 46, 631–635. https://doi.org/10.1016/j.procir.2016.04.011

Pandiyan, V., Shevchik, S., Wasmer, K., Castagne, S., & Tjahjowidodo, T. (2020). Modelling and monitoring of abrasive finishing processes using artificial intelligence techniques: A review. Journal of Manufacturing Processes, 57(June), 114–135. https://doi.org/10.1016/j.jmapro.2020.06.013

Lv, L., Deng, Z., Liu, T., Li, Z., & Liu, W. (2002). Intelligent technology in grinding process driven by data: A review. The Journal of Artificial Intelligence Research, 16(January), 321–357. https://doi.org/10.1016/j.jmapro.2020.09.018

Hashemitaheri, M., Mekarthy, S. M. R., & Cherukuri, H. (2020). Prediction of specific cutting forces and maximum tool temperatures in orthogonal machining by Support Vector and Gaussian Process Regression Methods. Procedia Manuf., 48, 1000–1008. https://doi.org/10.1016/j.promfg.2020.05.139

Peng, B., Bergs, T., Schraknepper, D., Klocke, F., & Döbbeler, B. (2019). A hybrid approach using machine learning to predict the cutting forces under consideration of the tool wear. Procedia CIRP, 82, 302–307. https://doi.org/10.1016/j.procir.2019.04.031

Markopoulos, A. P., & Kundrák, J. (2016). FEM/AI models for the simulation of precision grinding. Manufacturing Technology, 16(2), 384–390. https://doi.org/10.21062/ujep/x.2016/a/1213-2489/mt/16/2/384

Finkeldey, F., Saadallah, A., Wiederkehr, P., & Morik, K. (2020). Real-time prediction of process forces in milling operations using synchronized data fusion of simulation and sensor data. Engineering Applications of Artificial Intelligence, 94, 103753. https://doi.org/10.1016/j.engappai.2020.103753

Fortunato, A., & Ascari, A. (2013). The virtual design of machining centers for HSM: Towards new integrated tools. Mechatronics, 23(3), 264–278. https://doi.org/10.1016/j.mechatronics.2012.12.004

Wang, C., Ding, F., Tang, D., Zheng, L., Li, S., & Xie, Y. (2016). Modeling and simulation of the high-speed milling of hardened steel SKD11 (62 HRC) based on SHPB technology. International Journal of Machine Tools and Manufacture, 108, 13–26. https://doi.org/10.1016/j.ijmachtools.2016.05.005

Pandiyan, V., Caesarendra, W., Glowacz, A., & Tjahjowidodo, T. (2020). Modelling of material removal in abrasive belt grinding process: A regression approach. Symmetry (Basel), 12(1), 1. https://doi.org/10.3390/SYM12010099

Bin Wang, S., & Wu, C. F. (2006). Selections of working conditions for creep feed grinding: Part(III): Avoidance of the workpiece burning by using improved BP neural network. International Journal of Advanced Manufacturing Technology, 28(1–2), 31–37. https://doi.org/10.1007/s00170-004-2343-9

Nitesh, W. P. K., Chawla, V., Bowyer, K. W., & Hall, L. O. (2020). SMOTE: Synthetic Minority Over-sampling Technique. The Journal of Artificial Intelligence Research, 16, 321–357.

Skogstad Larsen, B. (2021). Synthetic Minority Over-sampling Technique (SMOTE). (https://github.com/dkbsl/matlab_smote/releases/tag/1.0), GitHub. Retrieved September 7, 2021.

Kayri, M. (2016). Predictive abilities of Bayesian regularization and levenberg-marquardt algorithms in artificial neural networks: A comparative empirical study on social data. Mathematical Computer Applications, 21(2), 1. https://doi.org/10.3390/mca21020020

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Lerra, F., Candido, A., Liverani, E. et al. Prediction of Micro-scale Forces in Dry Grinding Process Through a FEM—ML Hybrid Approach. Int. J. Precis. Eng. Manuf. 23, 15–29 (2022). https://doi.org/10.1007/s12541-021-00601-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12541-021-00601-2