Abstract

This paper deals with the variable blank holder force in sheet metal forming in order to reduce springback effects after forming. A structural risk minimization principle-based metamodeling technique, least square support vector regression (LSSVR) method is applied to optimization. In order to improve the efficiency, an intelligent sampling strategy proposed by Wang et al. (Mater Des 30:1468–1479, 2009a) is integrated with the LSSVR. Therefore, the proposed strategies establish an adaptive metamodeling optimization system. The optimization procedure can be carried out automatically. To valid the flexibility of this system, the presented method is used to optimize the variable blank force parameters of the models from NUMISHEET’96 and torsion rail model. Compared with other popular metamodel-based optimization methods, the test results demonstrate the potential capability for nonlinear engineering problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Springback is mainly an elastic deformation which occurs upon the removal of tool [8, 14]. Springback of the part during unloading largely determines whether the part conforms to the design dimensions and tolerances. An accurate prediction of the springback is very importance issue for the design of tools in automotive and aircraft industries. It is desired to predict and reduce springback so that the final blank dimensions can be controlled as much as possible. Several analytical methods have been proposed to predict the change in radius of curvature and included angle due to springback for plane-strain conditions and simple axisymmetric shapes. These methods are approximate and associate the source of springback to non-uniform distribution of strain and bending moment upon unloading.

The finite element method (FEM) is used widely to predict springback in research and industry. However, the accuracy of FEM is not yet always sufficiently accurate and efficient. Commonly corrections to compensate for springback are made by modifying the shape of stamping tools. It is very important to predict springback and correct it at the tool design stage, since the optimization procedure for controlling springback [3, 9, 17] commonly leads to tremendous expensive function evaluations. This is particularly the case when objective and constraint functions are obtained by complete FE simulations involving fine meshes, many degrees of freedom (DOFs), strongly nonlinear geometrical and material behaviors. The gradient-based optimization, necessary for common minimization algorithms is not always available neither accurate, especially when commercial codes are used. Some heuristic methods, such as genetic algorithm (GA), particle swarm optimization (PSO), etc., can’t guarantee the efficiency and convergence ratio. Therefore, metamodeling technique is used as an alternative method to develop the optimization efficiency. A metamodel can be used as a surrogate for calculating fitness values, which are normally based on time-consuming simulations. Such a metamodel can be effectively integrated into the search process to gradually substitute the large portion of simulation which model runs require. The metamodeling technique was first proposed by Blanning [4]. Recently, some efforts have been focused on the springback reduction. Daniel [7] used FE simulation and polynomial regression (PR)-based metamodeling technique to optimize springback in bending processes. Naceur et al. [16] combined DKTRF (discrete Kirchhoff triangular element rotation free element) shell element and response surface method (RSM) involving diffuse approximation technique and pattern search optimization to control springback effects. Bahloul et al. [1] predicted springback by experiments and RSM-based optimization. Liu et al. developed [13] an automatic optimization method integrated with for compensating springback of stamping parts. Ingarao et al. [11] suggested a multi-objective approach to reduce springback and thinning failure of dual phase steels.

Above mentioned metamodel-based optimization methods are based on empire risk minimization (ERM) principle, such as PR, moving least square (MLS) and Kriging (KG) methods, etc. The major bottleneck of the ERM-based metamodeling technique is the reliability of optimization results. Compared with the ERM principle, structural risk minimization (SRM) suggested by Vapnik and Chervonenkis [19] is an inductive principle for model selection used for learning from finite training data sets. It describes a general model of capacity control and provides a tradeoff between hypothesis space complexity and quality of fitting the training data (empirical error).

In order to enforce the reliability of optimization results, a structural risk minimization (SRM) method, support vector regression (SVR) proposed by Vapnik [20] is used for constructing metamodel-based optimization method and is applied to springback compensation. Unlike traditional methods which minimize the empirical training error, SVR aims to minimize the upper bound of generalization error through maximizing the margin between separating hyperplane and data. In the past few years, several studies have successfully applied SVR to function estimation.

According to the conclusion summarized by Clarke et al. [6], the SVR-based metamodeling technique achieves more accurate and robust function approximations than RSM, KG, Radial basis function (RBF), and multivariate adaptive regression splines (MARS). Clarke et al. [6] also claimed that SVR was a feasible technique for approximating complex engineering analyses. Recently, a least square (LS) version of SVR (least square SVR, LSSVR) technique proposed by Suykens and Vandewalle [18] has received attention for function estimation. In LSSVR, Vapnik’s ε-insensitive loss function has been replaced by a sum-squared error cost function. According to the theory of LSSVR, LSSVR is reformulation to the standard SVRs which leads to solving a linear KKT (Karush–Kuhn–Tucker) system. This reformulation greatly simplifies a problem such that the LSSVR solution follows directly from solving a set of linear equations.

The rest of this paper is organized as follows. “Related theories” describes the fundamentals of LSSVR and intelligent sampling strategy. In “Statement of problem”, the proposed method is used to reduce springback of sheet forming design. Conclusions are given in “Concluding Remarks”.

Related theories

In this section, the basic theories of LSSVR are briefly introduced. According to the comparison tests performed by Clarke et al. [6], the LSSVR is a feasible alternative for nonlinear metamodeling technique. In this study, the LSSVR is integrated with an intelligent design of experiment method (DOE), boundary and best neighbor sampling (BBNS).

Theories of LSSVR

Considering a regression problem with a training set \( \left\{ {{x_i},{y_i}} \right\}_{{i = 1}}^N \) with N input data x i and output data y i as presented in Eq. 1

Using a kernel function, we can obtain a nonlinear predictor by solving an optimization problem in the primal weight space:

where \( \phi ( \cdot ) = {R^n} \Rightarrow {R^{{{n_h}}}} \) is a nonlinear mapping which maps the input data into a high dimensional feature space whose dimension can be infinite; this feature makes SVR solve high dimensional problems. In Eq. 2, \( w \in {R^{{{n_h}}}} \) denotes the weight vector in the primal space; \( {\varepsilon_i} \in R \) is an error variable and b is the bias term. The cost function J consists of a sum-squared error fitting error and regulation term. The γ is a constant coefficient to determine the relative importance of the ERM and SRM terms. A linear SVR has been taken with γ = 1. In the case of noisy data one avoids over fitting by taking a smaller value of γ. But, if the γ is smaller, the reliability of approximation can’t be promised. Therefore, it is recommended that the value of γ can be determined by considering the kernel function coefficient together.

The model of primal space can be presented as follows

Then, the Lagrangian multiplier expression applied to Eq. 2 is obtained as

where α i are the Lagrangian multipliers, which can be either positive or negative.

The conditions for optimality

After elimination of w and ε, we can obtain the following linear expression

where

Based on the Mercer’s condition, there exists a mapping φ(·) and an expression

If and only if, for any Ψ(x) such that \( \int {\psi {{(x)}^2}dx} \) is finite, one has

which is motivated by the Mercer’s Theorem [15]. Note that for specific cases, it may not be easy to check whether Mercer’s condition is met. Equation 9 must hold for every Ψ(x) with a finite L 2 norm. It is known, however, that the condition is satisfied for positive integral powers of the dot product K(x i , x j )

The final LSSVR model for the function estimation is then obtained as

where a l and b are the solutions to Eq. 5, and

Among all kinds of kernel functions, Gaussian kernel function as shown in Eq. 12 is the most popular choice and also chosen in this study. The radius r of the Gaussian kernel function is manually set in this study after a few trials for each function; x i and x j are input vectors. Automatically optimizing kernel function during training could potentially further improve modeling the efficiency. The optimal choice for the kernel function is still an area of active research and will be investigated in future work. In this study, we substitute different combinations of (γ, r) into Eq. 2, and select the optimal one according to the results. In order to improve the efficiency, this procedure is implemented by the ANN method in this work.

Boundary and best neighbor sampling strategy

DOE is very important issue for metamodeling techniques. Traditional DOEs are “offline” strategy; DOE and metamodeling techniques are independent models in optimization procedure. For the LSSVR method, it is necessary to collect enough sample points to construct a reliable model. Due to the difficulty of knowing the “appropriate” size of sample points in advance, intelligent or sequential sampling has gained popularity recently [12]. Intelligent or sequential sampling strategies actually are “online” style, sampling and metamodeling procedure are integrated, sampling direction is determined according the response value of objective functions. The sample points generated by such strategy commonly concentrated in optimization region.

The boundary and best neighbor searching (BBNS) strategy is such a way to generate sample points and reduce design space as well. The distinctive characteristic of the BBNS strategy suggested by Wang et al. [22–24] is to create the new sample points according to generated sample points distributed in boundaries and neighbor domain. The fuzzy clustering scheme suggested by Wang et al. [23] is also integrated and applied to sheet forming optimization successfully. In order to further enhance the efficiency, a parallel sampling strategy developed by Wang et al. [24] was also developed and engaged in sheet forming design. The details of the BBNS are presented as follows.

Theoretically, any space filling sampling methods, such as Latin hypercube design (LHD) can be used for generating initial sample points at the first stage of the BBNS. Considering the uniformity and orthogonality of sample points, LHD is employed in this method. Additionally, to improve the efficiency of sampling procedure and control the size of sample points, the initial samples should be sparsely distributed in design space. Furthermore, fractional factorial design (FFD) is used for locating the boundary sample points. Although the initial sample points are generated by traditional space filling strategies, the latter algorithm is quite different due to its “intelligent” characteristic;

-

1.

The inside initial sample points generated LHD should be evaluated. Conversely the evaluations with boundary samples derived from FFD don’t need to be performed till the corresponding criterion is satisfied;

-

2.

The several better sample points (the number of sample points can be specified by the user, called better sample set) are collected and the new sample points are generated by Eqs. 13–14;

-

3.

The position of the new sample points is determined by Eq. 13

$$ X_j^i = \left( {\frac{{X_j^{{Current}} + X_j^{{Boundary(Nearest)}}}}{{{m_1}}}} \right){c_1} + \left( {\frac{{X_j^{{Current}} + X_j^{{Best(Nearest)}}}}{{{m_2}}}} \right){c_2} $$(13)where X is a vector of value, \( X_j^i \) denotes the ith new sample in the jth iteration. The distance between the current and nearest boundary sample points should be divided into m 1 segments, the distance between the current and best neighbor influence domain is divided into m 2 segments. The values of m 1 and m 2 are also assigned by the user. Both m 1 and m 2 are set to the number of design variables N as shown in Fig. 1; c 1, c 2 denote the acceleration weight coefficients, they are determined by Eq. 14 according to the objective values.

$$ \left\{ \begin{gathered} {c_1} = \frac{{R\left( {X_j^{{Current}}} \right) + R\left( {X_j^{{Boundary(Nearest)}}} \right)}}{{\left( {R\left( {X_j^{{Current}}} \right) + R\left( {X_j^{{Boundary(Nearest)}}} \right)} \right) + \left( {R\left( {X_j^{{Current}}{ }} \right) + R\left( {X_j^{{Best(Nearest)}}} \right)} \right)}} \hfill \\ {c_1} = 1 - {c_2} \hfill \\ \end{gathered} \right. $$(14)where R(·) denotes the response value obtained from function evaluation* with corresponding sample points, such as \( X_j^{{Best}} \), \( X_j^{{Boundary(Nearest)}} \) and \( X_j^{{Best(Nearest)}} \).

Definitions of the superscripts are presented as:Footnote 1

Current

the current sample point in the better sample set

Nearest

the nearest sample point from the current sample point

Boundary

boundary of the design space

Best

the sample point which has the best value of objective function

Boundary (nearest)

the nearest boundary sample from the current sample

Best (nearest)

the nearest sample of the better sample set

-

3.1.

If the location of a new sample point is duplicated or is outside of the design space, the best sample point should be substituted by the current one, and the procedure goes back to regenerate new sample points;

-

3.1.

The function should be evaluated at the new generated sample points;

-

3.2.

The better sample set is updated.

If

$$ \frac{{\left| {R\left( {X_j^{{Best}}} \right) - R\left( {X_{{j - 1}}^{{Best}}} \right)} \right|}}{{\left| {R\left( {X_j^{{Best}}} \right)} \right|}} \leqslant \delta, \delta \in (0,1), $$(15)then procedure ends, else it goes to Step 3, where δ is the threshold which can be given by the user and the default value is 0.1.

Integrating LSSVR and BBNS for metamodeling-based optimization

In this section, we suggest an adaptive metamodel-based optimization method called as BBNS-based LSSVR optimization. The BBNS is used for generating sample points and metamodel is constructed by the LSSVR. The proposed algorithm is a close-loop one and can run for optimization automatically. The flowchart of this strategy is presented in Fig. 2.

As shown in Fig. 2, the proposed method is composed of three modules: sampling, modeling and optimization. In the sampling procedure, the response value is evaluated by actual simulation evaluation. When the convergence described in Eq. 15 of the BBNS is satisfied, the modeling procedure proceeds. The key issue in of the proposed method is how to objectively decide when to switch to the metamodel instead of using the simulation during optimization.

Compared with traditional convergence conditions, test sample points are used for predicting the accuracy of metamodel. In this work, leave-one-out cross-validation (LOOCV) is used for obtaining \( {\text{R}}_{{avg}}^2 \) for each sample point.

where y i is the real value of objective function, \( {{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{y}}_i} \) and \( {\bar{y}_i} \) denote the predicted function value at the test sample point and average predicted value, m is the number of sample points.

For a mathematical problem, the convergence condition should be set strictly, such as\( {\text{R}}_{{avg}}^2 > 0.8 \). For engineering problems, the convergence condition can be slack according to the complexity of cases. This step is used for the expensive sample points whose responses are obtained from the computational intensive simulations.

Statement of problem

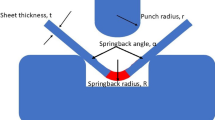

Blank holder force (BHF) is often used for controlling springback as springback can be decreased with the increase of BHF, while other processing and material parameters are held constant. However, the BHF also causes a subsequent increase of the maximum strain in material, and it commonly makes blank cracked. To solve such problem, a stepped variable BHF strategy was proposed to control both of the springback and strain by Hishida and Wagoner [10]) However, they had not given an effective approach to determine the stepped BHF parameters. In this work, the proposed method is used for predicting the corresponding parameters to control the springback of sheet forming.

The basic idea of the stepped variable BHF is demonstrated as Fig. 3. Compared with a constant BHF (CBHF), a stepped variable BHF (SVBHF) is composed of two stages. A low BHF (LBHF) is first acted on blank to facilitate the flow of material. At one specifies percentage of the total punch displacement (PTPD), a higher BHF (HBHF) is instead of the LBHF to cause plastic strains in the sidewall. Therefore, the SVBHF is determined by three parameters: LBHF, PTPD and HBHF.

Numisheet’96 S-rail

Problem description

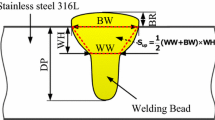

The tools and sheet-metal blank of the Numisheet’96 springback benchmark problem are shown in Fig. 4, applied on an aluminum alloy whose properties are presented in Table 1. A drawn height of 37 mm is taken into account. The die fillet radius is equal to 3 mm while clearance between die and punch is equal to 1.2 mm.

The process is numerically simulated using the explicit code LS-DYNA, and a subsequent springback analysis is carried out with implicit solver. A full integrated quadrilateral shell element with nine integration points along thickness is utilized. A Coulomb model is considered for frictional actions. In order to take into account material anisotropy Barlat and Lian [2] constitutive model with an isotropic work hardening is utilized.

The anisotropic yield criterion Φ for plane stress is defined as

where \( \sigma_Y^m \)is yield stress and \( {K_{{i = 1,2}}} \) are given by

The anisotropic material constants a, c, h and p are obtained through R00, R45 and R90, which are the material Lankford parameters in 0°, 45° and 90° relative to rolling direction. For body centered cubic (BCC) materials, m = 6 is recommended. The relationship between stress and strain for the materials used in this study is given by

where, K and n are the strength coefficient and exponent for swift exponential hardening, ε 0 and \( {\bar{\varepsilon }^P} \) are strain and effective plastic strain, respectively.

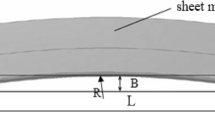

In order to evaluate springback entity, a comparison with a reference target shape is performed at the end of implicit simulation. In particular, the total deviation error between the target shape and obtained one is calculated (i.e., the maximum normal distance between the two overlapped surfaces d (mm)). In the present application the distance d (mm) is considered a direct and quick indicator of the geometrical distortions due to springback (see Fig. 5 which shows the comparison of one of the obtained transverse cross section and reference shape).

To stay objectivity, we select four transverse cross sections for construct objective function. In each transverse cross section, 20 uniform sample points along the transverse cross section are selected to calculate difference between the target and obtained shape. The distance of each section can be presented as follows.

where

- k :

-

The transverse cross section number

- n :

-

The number of sample points along the transverse cross section line

- yi :

-

The position of sample point i in target shape

- \( {\hat{y}_i} \) :

-

The position of sample point i in current optimum shape

- \( \overline y \) :

-

\( \frac{1}{n}\sum\limits_{{i = 1}}^n {{{\hat{y}}_i}} \)

Equation 20 is the prediction of future outcomes on the basis of other related information. It is the proportion of variability in a data set that is accounted for by the statistical model. Therefore, d k can be regarded as R2; R2 is often interpreted as the proportion of response variation “explained” by the repressors in the model. Thus, R2 = 1 indicates that the fitted model explains all variability in y, while R2 = 0 indicates no ‘linear’ relationship (for straight line regression, this means that the straight line model is a constant line between the response variable and repressors. Finally, all transverse cross sections are considered for the objective function as

where m denotes the number of transverse cross sections.

In this case, mathematical equations of optimization can be formulated as

where X denotes the design variables related with the SVBHF composed of the HBHF, LBHF, PTPD.

Optimization procedure and results

According to the proposed method described in Fig. 2, we use the LHS generate 15 sample points. Then the BBNS start generating new sample points and the LSSVR is used for constructing metamodel, the corresponding optimization procedure demonstrated in Fig. 6. The ranges of objective function and \( {\text{R}}_{{avg}}^2 \) are from 0 to 1, so we can observe the convergence of LSSVR and optimization method. Finally, we use 47 sample points to complete optimization and corresponding optimum results presented in Table 2.

To validate the proposed optimization method, the performance of optimization procedure is also considered. Some widely used metamodel-based optimization methods, such as particle swarm optimization-based intelligent sampling (PSOIS), successive response surface approximations (SRSM) proposed by Breitkopf et al. [5] and mode pursuing sampling (MPS) method proposed by [21]. For give a fair comparison, we use the same DOE method to generate the same number of sample points. The optimization procedure of each method is also presented in Fig. 6. It is observed that the proposed BBNS-based LSSVR optimization method obtained better results with the fewest sample points according to Table 2 and Fig. 6. The PSOIS and MPS also archive the acceptable optimum results. Although the SRSM is widely used and implemented in some commercial software, the performance is far from satisfactory for engineering applications, especially for nonlinear problems.

Torsion rail

In this case, we use the proposed method to investigate a torsion rail as shown in Fig. 7 We select 6 transverse cross sections to measure the springback as shown in Fig. 8. The SVBHF strategy is also used for control the torsion springback in this case. The optimization problem can be described as

The material constitutive model introduced by Barlat and Lian [2] with anisotropic hardening is also adopted. The material parameters of DP800 summarized in Table 3 and the CAE model of the stamping system is shown as Fig. 9.

We also use the proposed method, PSOIS, MPS and SRSM for optimizing the torsion rail component. Design variables used in the case are engaged. The number of initial sample points of each method is the same. The procedures of each metamodel assisted optimization are demonstrated in Fig. 10. The SRSM costs the most number of sample points, the proposed method uses the fewest sample points. According to Table 4, the proposed method also achieves the best optimum design. In order to verify the simulation results, we also establish the stamping system shown in Fig. 11. Compared with optimum and real shape as shown in Fig. 12, it is easy to prove that the proposed method achieves the accepted design.

Concluding remarks

In this paper, a metamodel-based optimization method is proposed. An intelligent sampling strategy, BBNS, is used for generating sample points in optimization procedure. The advantage of the BBNS is that the new samples concentrate near the current local minima and yet still statistically cover the entire design space. Therefore, the corresponding metamodel is constructed based on more attractive sample points for the purpose of optimization. To obtain more reliable optimum results, the recently developed LSSVR is employed, which can also help filter noises in data. To assess the performance of the BBNS-based LSSVR optimization method, the performance of metamodeling and optimization is tested. Compared with other popular metamodeling techniques, the LSSVR is proved to be an attractive method for integration with the BBNS. Furthermore, to verify the accuracy and efficiency of the proposed method, other metamodel-based optimization methods including the PSOIS, SRSM and MPS are also tested.

Our purpose is to develop a feasible optimization approach for controlling springback. Thus, we apply the proposed method to two different springback problems. These problems are successfully optimized by the proposed method. The tests demonstrate that the proposed method shows potential for sheet forming related problems.

Notes

For practical problems, the function evaluation should be simulation.

References

Bahloul R, Ben-Elechi S, Potiron A (2006) Optimisation of springback predicted by experimental and numerical approach by using response surface methodology. J Mater Process Tech 173:101–110

Barlat F, Lian FJ (1989) Plastic behaviour and stretchability of sheet metal Part 1. A yield function for orthotropic sheets under plane stress conditions. Int J Plast 5:51–66

Barlet O, Batoz JL, Guo YQ, Mercier F, Naceur H, Knopf-Lenoir C (1996) The inverse approach and mathematical programming techniques for optimum design of sheet forming parts. Eng Syst Des Anal 3:227–232

Blanning RW (1975) The construction and implementation of metamodels. Simul 24:177–184

Breitkopf P, Naceur H, Rassineux A, Villon P (2005) Moving least squares response surface approximation: formulation and metal forming applications. Comput Struct 83:1411–1428

Clarke SM, Griebsch JH, Simpson TW (2005) Analysis of support vector regression for approximation of complex engineering analyses. ASME Trans J Mech Des 127:1077–1087

Lepadatu D, Hambli R, Kobi A, Barreau A (2005) Optimisation of springback in bending processes using FEM simulation and response surface method. Int J Adv Manuf Technol 27:40–47

Gelin JC, Picart P (1999) In: 4th international conference on numerical simulation of 3D sheet metal forming processes. Numisheet’99, Besanc¸on, France

Guo YQ, Batoz JL, Naceur H, Bouabdallah S, Mercier F, Barlet O (2000) Recent developments on the analysis and optimum design of sheet metal forming parts using a simplified inverse approach. Comput Struct 78:133–148

Hishida Y, Wagoner R (1993) Experimental analysis of blank holding force control in sheet forming. J Mater Manuf 2:409–415

Ingarao G, Lorenzo R, Di MF (2009) Analysis of stamping performances of dual phase steels: a multi-objective approach to reduce springback and thinning failure. Mater Des 30:4421–4433

Kleijnen JPC (2008) Design and analysis of simulation experiments. Springer, New York

Liu W, Yang YY, Xing ZW, Zao LH (2009) Springback control of sheet metal forming based on the response-surface method and multi-objective genetic algorithm. Mater Sci Eng A 499:325–328

Makinouchi A, Nakamichi E, Onate E, Wagoner RH (1995) Prediction of spring-back and side-wall curl in 2-D draw bending. J Mater Process Technol 50:361–374

Mercer J (1909) Functions of positive and negative type and their connection with the theory of integral equations. Philos Trans Roy Soc London 209:415–446

Naceur H, Guo YQ, Ben-Elechi S (2006) Response surface methodology for design of sheet forming parameters to control springback effects. Comput Struct 84:1651–1663

Shu J-S, Hung C (1996) Finite element analysis and optimization of springback reduction: the “Double-Bend” technique. Int J Mach Tool Manufact 36:423–434

Suykens JAK, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9:293–300

Vapnik VN, Chervonenkis AY (1974) Teoriya Raspoznavaniya Obrazov: Statisticheskie Problemy Obucheniya, (Russian) [Theory of Pattern Recognition: Statistical Problems of Learning]. Nauka, Moscow

Vapnik V (1999) Three remarks on the support vector method of function estimation. In: Schőlkopf B, Burges CJC, Smola AJ (eds) Advances in Kernel methods—support vector learning. MIT Press, Cambridge, pp 25–42

Wang L, Shan S, Wang GG, (2004) Mode-pursuing sampling method for global optimization on expensive black-box functions. J Eng Optim 36(4):419–438

Wang H, Li GY, Enying L, Zhong ZH (2008) Development of metamodeling based optimization system for high nonlinear engineering problems. Adv Eng Software 2008:629–645

Wang H, Li E, Li GY (2009) The least square support vector regression coupled with parallel sampling scheme metamodeling technique and application in sheet forming optimization. Mater Des 30:1468–1479

Wang H, Li E, Li GY (2009) Optimization of drawbead design in sheet metal forming based on intelligent sampling by using response surface methodology. J Mater Process Tech 206:45–55

Acknowledgements

This work is supported by Project of National Science Foundation of China (NSFC) under the grant number 10902037; Youth Scientific Research Foundation of Central South University of Forestry & Technology; Hunan Provincial Natural Science Foundation of China under the grant number 11JJA001.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Li, E. Reduction of springback by intelligent sampling-based LSSVR metamodel-based optimization. Int J Mater Form 6, 103–114 (2013). https://doi.org/10.1007/s12289-011-1076-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12289-011-1076-1