Abstract

To remain competitive, manufacturing companies have to develop superior-quality products at minimum cost. Tolerance design is the most critical part of concurrent engineering: optimal tolerances have to be determined for all components of an assembly with due consideration of both cost and quality. In this paper, tolerance design optimization of two products, a piston-cylinder assembly and a punch-die assembly, is considered. To solve these constrained optimization problems, which are nonlinear and multi-objective in nature, non-traditional techniques, namely Particle Swarm Optimization (PSO) and the Non-dominated Sorting Genetic Algorithm II (NSGA-II), have been used. The results for the piston-cylinder assembly have been compared with those of the complex method and of techniques such as Simulated Annealing (SA) and Genetic Algorithms (GA), and the performance of the techniques has been examined.


1 Introduction

To be competitive in the global market, manufacturing companies must develop superior-quality products at minimal cost. Producing superior-quality goods at low cost requires simultaneous consideration of the design and production processes, and tolerance design is critical in this respect. Tolerance design is the process of allocating tolerances to individual components or subassemblies so that the required final assembly tolerance is achieved. To produce a high-quality product at low cost, tolerances must be set so that the desired function is performed with the least machining effort. In practice, tolerances are typically set as an informal compromise between functionality and production cost. This study addresses the tolerance design optimization problems of a piston-cylinder assembly and a punch-die assembly.

Tolerance requirements on the dimensions of manufactured items affect machining cost, product quality, and quality-loss cost. Tolerance specification based on qualitative estimates of tolerance cost allows the component tolerances of a mechanical assembly to be specified for the lowest practical production cost. Assembly tolerances are frequently dictated by performance requirements, whereas component tolerances are determined by the capabilities of the manufacturing processes. The most common problem engineering designers face when specifying tolerances is distributing a specified assembly tolerance among the assembly components.

The assembly tolerance may be distributed uniformly across all constituent parts. However, depending on the conditions and on the complexity of the product or the production process, each component tolerance may carry a different manufacturing cost. Component tolerances can therefore be allocated to reduce manufacturing cost by defining a cost-tolerance function for every component dimension. Improper tolerance specifications can also lead to poor product performance and loss of market share: tight tolerances lead to higher process costs, whereas loose tolerances may result in more scrap and assembly problems. Several cost-tolerance models have been published in peer-reviewed journals, and a good machining cost function can be used to allocate the optimal tolerances so that cost is kept as low as feasible. Tolerance allocation has traditionally been based on the designer's expertise, handbooks, and guidelines; as a result, assembly quality cannot be assured, and the production cost may be higher than necessary. Optimization is therefore essential to attain these goals. In this paper, Particle Swarm Optimization (PSO) and the Non-dominated Sorting Genetic Algorithm II (NSGA-II) are applied to single- and multi-objective, constrained, nonlinear programming problems, providing a systematic optimization strategy for the tolerance allocation problem.

2 Literature review

A review of current studies reveals many tolerance synthesis methods. They can be divided into classical optimization approaches, such as statistical methods, the complex method, and stochastic integer programming, and non-traditional strategies, such as GA, SA, and fuzzy neural learning.

The great majority of publications on optimization-based tolerance synthesis use cost-tolerance models published in peer-reviewed journals. Dong et al. [1] established new tolerance synthesis models based on manufacturing cost-tolerance relationships. Siddall [2] extended the basic design optimization problem, which employs the nominal values of the design variables, to include the optimal allocation of manufacturing tolerances. Lee and Woo [3] defined tolerance synthesis as a probabilistic optimization problem in which a random variable is associated with each dimension and its tolerance. As the complexity of mechanical components increases, these optimization-based tolerance synthesis procedures become impractical. Gadallah and ElMaraghy [1] were among the first to apply quality engineering parameter design methods to tolerance optimization. Another application of quality engineering is the quality loss function: several studies, including Bhoi et al. [4, 5], Bho et al. [6], and Beng et al. [7], use the loss function concept to solve tolerance allocation problems.

Attempts to overcome the impracticality of optimization-based tolerance synthesis led to a variety of solutions based on comparatively recent techniques such as genetic algorithms, neural networks, evolutionary algorithms, and fuzzy logic. Kopardekar [7] developed a neural network for tolerance allocation; the network, trained by backpropagation, produces part tolerances and was tested on how well it handles machine capability and production issues such as mean shift. Ji et al. [8], Ta-Cheng Chen et al. [9], and Hupinet et al. [10] employ fuzzy logic and simulated annealing. Ji et al. [11] and Noorul Haq et al. [6] applied the genetic approach along with PSO and NSGA-II.

3 Materials and functional methods

3.1 Assembly of the piston and cylinder

The piston-cylinder bore assembly was proposed by Al-Ansary and Deiab [12]. Its dimensions are presented in Fig. 1.

Fig. 1 Piston-cylinder bore assembly [8]

The piston diameter (dp) is 50.8 mm, the cylinder bore diameter (dc) is 50.856 mm, and the clearance is 0.056 ± 0.025 mm. To complete the piston-cylinder bore assembly, the following eight machining operations are planned: rough turning, finish turning, rough grinding, and finish grinding on the piston; and drilling, boring, semi-finish boring, and grinding on the cylinder bore. The machining tolerance limits for the piston (mm) are 0.005 ≤ t1 ≤ 0.02, 0.002 ≤ t2 ≤ 0.012, 0.0005 ≤ t3 ≤ 0.003, and 0.0002 ≤ t4 ≤ 0.001, and those for the cylinder bore (mm) are 0.007 ≤ t5 ≤ 0.02, 0.003 ≤ t6 ≤ 0.012, 0.0006 ≤ t7 ≤ 0.005, and 0.0003 ≤ t8 ≤ 0.002.

This work determines the optimal allocation of machining tolerances to the piston and the cylinder bore with respect to the clearance between them. The piston has a design tolerance t1d, and the cylinder bore has a design tolerance t2d. The machining tolerances are t1i for the piston (i = 1, 2, 3, 4) and t2i for the cylinder bore (i = 1, 2, 3, 4), one for each of the four machining operations on each part.

3.2 Objective function

The objective in this work is to minimize the machining cost. The total machining cost (Cm) is expressed as the sum of the costs of the eight machining operations,

$$C_m = \sum_{i=1}^{8} F_i(t_i)$$

where F_i(t_i) is the cost of the ith operation. The exponential cost-tolerance model F(t) is used to find the machining cost of the piston-cylinder bore assembly.

Subject to:

(i) the design constraint t1d + t2d ≤ 0.001, where t1d = t4 (piston) and t2d = t8 (cylinder bore);

(ii) the constraints on the piston machining tolerances, t1 + t2 ≤ 0.02, t2 + t3 ≤ 0.005, and t3 + t4 ≤ 0.0018;

(iii) the constraints on the cylinder-bore machining tolerances, t5 + t6 ≤ 0.02, t6 + t7 ≤ 0.005, and t7 + t8 ≤ 0.0018.

where a0 through a3 are the parameters of each cost-tolerance equation; they were computed from the assessment data and are given in Table 1.
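To make the piston-cylinder formulation concrete, the Python sketch below encodes the tolerance bounds of Section 3.1 and constraints (i) to (iii) exactly as stated, and combines them with a total cost Cm = sum of F_i(t_i). The exponential form F(t) = a0*exp(-a1*(t - a2)) + a3, the quadratic penalty and its weight, and the function names are assumptions made for illustration; the paper's exact F(t) and the Table 1 parameters are not reproduced here.

```python
import math

# Machining tolerance bounds in mm from Section 3.1.
# t1..t4: piston (rough turn, finish turn, rough grind, finish grind)
# t5..t8: cylinder bore (drill, bore, semi-finish bore, grind)
BOUNDS = [
    (0.005, 0.02), (0.002, 0.012), (0.0005, 0.003), (0.0002, 0.001),
    (0.007, 0.02), (0.003, 0.012), (0.0006, 0.005), (0.0003, 0.002),
]


def constraint_values(t):
    """Return g_k(t) for constraints (i)-(iii); the design is feasible when all are <= 0."""
    t1, t2, t3, t4, t5, t6, t7, t8 = t
    return [
        (t4 + t8) - 0.001,   # (i) t1d + t2d <= 0.001 with t1d = t4, t2d = t8
        (t1 + t2) - 0.02,    # (ii) piston
        (t2 + t3) - 0.005,
        (t3 + t4) - 0.0018,
        (t5 + t6) - 0.02,    # (iii) cylinder bore
        (t6 + t7) - 0.005,
        (t7 + t8) - 0.0018,
    ]


def in_bounds(t):
    """True when every tolerance lies inside its machining range."""
    return all(lo <= x <= hi for x, (lo, hi) in zip(t, BOUNDS))


def exp_cost(t, a0, a1, a2, a3):
    """ASSUMED exponential cost-tolerance form F(t) = a0 * exp(-a1 * (t - a2)) + a3."""
    return a0 * math.exp(-a1 * (t - a2)) + a3


def penalized_cost(t, params, penalty=1e9):
    """Cm = sum_i F_i(t_i) plus a quadratic penalty on constraint and bound violations.

    `params` holds one (a0, a1, a2, a3) tuple per operation (Table 1 values not
    reproduced). The penalty weight is an assumption and must suit the cost scale.
    """
    cost = sum(exp_cost(ti, *p) for ti, p in zip(t, params))
    violation = sum(max(0.0, g) ** 2 for g in constraint_values(t))
    violation += sum(max(0.0, lo - x) ** 2 + max(0.0, x - hi) ** 2
                     for x, (lo, hi) in zip(t, BOUNDS))
    return cost + penalty * violation
```

Because PSO as described in Section 5 has no built-in constraint handling, a penalty term of this kind is one common way to hand it the constrained problem; the weight would need tuning, since squared violations of tolerances in millimetres are very small numbers.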

3.3 Punch and die assembly

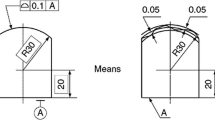

The problem considered is an assembly consisting of an upper punch, a lower punch, and a die. These components are presented in Fig. 2.

The punch diameter is 7.5 mm and the die diameter is 7.55 mm. The clearance between punch and die is 0.05 ± 0.025 mm. Three machining processes are involved in punch manufacturing and two in die manufacturing. The machining process plan is turning, profile grinding, and finally polishing for the punch, and wire cutting and polishing for the die.

- The ranges of the machining tolerances for the punch (mm) are 0.0075 ≤ t1 < 0.045, 0.003 ≤ t2 < 0.0075, and 0.002 ≤ t3 < 0.0045.
- The ranges of the machining tolerances for the die (mm) are 0.001 ≤ t4 ≤ 0.0075 and 0.002 ≤ t5 ≤ 0.045.

3.4 Decision variables and constraints

In this case there is only one resultant dimension, the clearance between punch and die, and the punch and die dimensions make up the dimensional chain. The machining tolerances of the punch and die are allocated with respect to this clearance. Seven design variables are taken into account for the optimal machining tolerance allocation of the punch-die assembly.

The design tolerance parameter is tp for the punch and td for the die. The machining tolerance parameters are t1, t2, and t3 for the punch and t4 and t5 for the die.

(i) The designer establishes the tolerances of the resultant dimension and the machining tolerances, which are determined by the overall punch and die diameter design. The sum of the design tolerances must not exceed the clearance tolerance specified by the manufacturer:

tp + td ≤ 0.005

(ii) The design tolerance of a component feature equals the final machining tolerance of that feature:

tp = t3 for the punch and td = t5 for the die

(iii) The constraints on the machining tolerances for the punch are t1 + t2 ≤ 0.04 and t2 + t3 ≤ 0.007.

(iv) The constraint on the machining tolerances for the die is t4 + t5 ≤ 0.008.
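The tolerance ranges of Section 3.3 and constraints (i) to (iv) can be checked mechanically. The Python sketch below (the names `RANGES` and `violations` are hypothetical) substitutes tp = t3 and td = t5 as in constraint (ii) and reports every violated condition; the punch upper limits, stated with a strict "<", are kept strict.

```python
# Machining tolerance ranges in mm from Section 3.3: (lower, upper, upper_inclusive).
# Punch: t1 turning, t2 profile grinding, t3 polishing (upper limits stated as strict "<").
# Die: t4 wire cutting, t5 polishing (upper limits stated as "<=").
RANGES = {
    "t1": (0.0075, 0.045, False),
    "t2": (0.003, 0.0075, False),
    "t3": (0.002, 0.0045, False),
    "t4": (0.001, 0.0075, True),
    "t5": (0.002, 0.045, True),
}


def violations(t1, t2, t3, t4, t5):
    """Return a list naming every violated range or constraint (empty list = feasible)."""
    values = {"t1": t1, "t2": t2, "t3": t3, "t4": t4, "t5": t5}
    found = []
    for name, (lo, hi, hi_inclusive) in RANGES.items():
        x = values[name]
        upper_ok = x <= hi if hi_inclusive else x < hi
        if not (lo <= x and upper_ok):
            found.append(f"range {name}")
    tp, td = t3, t5  # constraint (ii): design tolerance = final machining tolerance
    checks = {
        "(i) tp + td <= 0.005": tp + td <= 0.005,
        "(iii) t1 + t2 <= 0.04": t1 + t2 <= 0.04,
        "(iii) t2 + t3 <= 0.007": t2 + t3 <= 0.007,
        "(iv) t4 + t5 <= 0.008": t4 + t5 <= 0.008,
    }
    found.extend(name for name, ok in checks.items() if not ok)
    return found
```

Because tp and td are fixed by t3 and t5, only t1 to t5 remain free, which is consistent with the particle dimension of 5 used for this assembly in Section 5.5.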

4 Material composition

The material composition (in percent) used by the manufacturer for the punch is as follows:

4.1 Objective function

The main goal is to minimize the overall cost of machining the upper/lower punch and die while satisfying the product's functional requirements. The total cost is the sum of the machining cost and the quality-loss cost.

Three machining processes are used for the punch and two for the die. A combined reciprocal-power and exponential model was used to relate tolerance and cost for each machining operation. Earlier studies evaluated only the machining cost when seeking the best tolerance allocation. However, scrap or rework costs are incurred when manufactured components fail to meet specifications, so the total cost must include both machining and rework/scrap costs. The rework/scrap cost is determined by a quality loss function, which states that the greater the deviation from the nominal, the greater the quality loss incurred by the customer.

4.2 Development of tolerance allocation model

The machining cost M(t) is added to the quality loss cost (QLC) to create the tolerance allocation model. Using the combined reciprocal-power and exponential model, a nonlinear, constrained, multi-objective tolerance allocation model was built; the machining cost is calculated with this combined model, and its parameters were found using SPSS version 8. According to Wu et al. [13], the combined reciprocal-power and exponential model has smaller modelling errors than other models when compared with empirical production data. Because the empirical production data have unequal curvature, two distinct basis functions are needed to obtain a good fit in both the flat and the rapidly rising regions: in this model, the exponential function accounts for the flat region, whereas the reciprocal-power function describes the rapidly rising region. The Taguchi loss function is used to calculate the quality loss. A quality loss function defines the rework or scrap cost, stating that the greater the deviation from the nominal, the greater the quality loss suffered by the customer. The tolerance design of each component can then be optimized for the least machining cost and quality loss. The manufacturing constraints are specified by the multi-objective tolerance allocation model,

The machining cost for the upper punch and die assembly is:

where

Machining cost of turning for punch is:

A: consumer quality loss; ti: component tolerance; T: one-sided tolerance stack-up limit; Ai, Bi, Ci, Di, Ei: model parameters (i = 1, 2, ...), shown in Tables 2 and 3; l: number of component tolerances.
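The equations for M(t) and QLC are not reproduced in this text. As a hedged illustration only, the Python sketch below assumes a combined reciprocal-power and exponential form M_i(t) = A_i + B_i*t^(-C_i) + D_i*exp(-E_i*t), using the five parameters Ai to Ei listed above, and a Taguchi-type quality loss QLC = (A/T^2) * sum over the l component tolerances of (t_i/3)^2, which treats each tolerance as a 3-sigma spread. Both forms are assumptions rather than the paper's exact equations, and the function names are hypothetical.

```python
import math


def machining_cost(t, A, B, C, D, E):
    """ASSUMED combined reciprocal-power + exponential cost-tolerance model.

    M(t) = A + B * t**(-C) + D * exp(-E * t); the paper's exact form may differ.
    """
    return A + B * t ** (-C) + D * math.exp(-E * t)


def quality_loss(component_tols, loss_a, T):
    """ASSUMED Taguchi-type quality loss (A / T**2) * sum((t_i / 3)**2).

    Each component tolerance t_i is treated as a 3-sigma spread, and the
    variances are summed over the l component tolerances.
    """
    variance = sum((t / 3.0) ** 2 for t in component_tols)
    return loss_a / T ** 2 * variance


def total_cost(machining_tols, params, component_tols, loss_a, T):
    """Total cost = sum of per-operation machining costs + quality loss cost."""
    machining = sum(machining_cost(t, *p) for t, p in zip(machining_tols, params))
    return machining + quality_loss(component_tols, loss_a, T)
```

For the punch-die case, one would pass t1 to t5 with their Table 2 and 3 parameters as the machining tolerances and, under the same assumption, [t3, t5] (that is, [tp, td]) as the component tolerances, with A set to the scenario value, for example 10 or 20.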

5 Optimization process

5.1 Particle swarm optimization method

Particle Swarm Optimization (PSO) is a stochastic optimization technique developed by Eberhart and Kennedy [14], inspired by the social behaviour of bird flocking and fish schooling. The particle swarm model was originally devised as a simulation of a simple social system, with the initial intention of rendering graphically the graceful movement of a flock of birds or a school of fish. The model was then found to be an excellent optimizer.

PSO is analogous to the flocking behaviour of birds. Consider the following scenario: a swarm of birds is searching for food in an area. There is only one piece of food in the area being searched. The birds do not know where the food is, but at each iteration they know how far away it is. So what is the best strategy to find the food? The most effective one is to follow the bird that is nearest to the food. PSO learns from this scenario and applies it to optimization problems.

In PSO, each solution is a "bird" in the search space, which we call a "particle". All particles have fitness values, evaluated by the fitness function to be optimized, and velocities that direct their flight. The particles fly through the problem space by following the current optimum particles. PSO is initialized with a group of random particles (solutions) and then searches for an optimum by updating them over successive generations. In every iteration, each particle is updated using two "best" values. The first is the best solution the particle itself has achieved so far, called "pbest". The other "best" value tracked by the particle swarm optimizer is the best value obtained so far by any particle in the population; this is the global best, called "gbest".

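The velocity and position of each particle are updated as

$$v[\,] = \omega\, v[\,] + c_1\,\mathrm{rand}()\,\big(pbest[\,] - present[\,]\big) + c_2\,\mathrm{rand}()\,\big(gbest[\,] - present[\,]\big) \qquad (1)$$

$$present[\,] = present[\,] + v[\,] \qquad (2)$$

where v[] is the particle velocity, present[] is the current particle (solution), pbest[] is the best solution found so far by each particle, gbest[] is the best solution found so far by the whole swarm, as defined above, ω is the inertia weight (commonly 0.8 or 0.9), rand() is a random number between 0 and 1, and c1 and c2 are learning factors, usually c1 = c2 = 2.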
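The update rules in Eqs. 1 and 2 can be sketched in Python as follows. This is a minimal, unconstrained illustration: the defaults mirror Section 5.5 (N = 100, 100 iterations, ω = 0.9, C1 = C2 = 2), while clamping positions to the tolerance bounds, capping velocities at 20% of each range, and updating gbest asynchronously are assumptions not stated in the paper. Constraints such as those of Sections 3.2 and 3.4 would have to be folded into `objective`, for example with a penalty term.

```python
import random


def pso(objective, bounds, n_particles=100, n_iters=100, w=0.9, c1=2.0, c2=2.0, seed=0):
    """Minimize `objective` over the box `bounds` with the PSO updates of Eqs. 1 and 2.

    Assumptions (not from the paper): positions are clamped to `bounds`,
    velocities are capped at 20% of each variable's range, and gbest is
    refreshed as soon as any particle improves on it (asynchronous update).
    """
    rng = random.Random(seed)
    dim = len(bounds)
    v_max = [0.2 * (hi - lo) for lo, hi in bounds]
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]                  # best position found by each particle
    pbest_f = [objective(p) for p in x]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]   # best position found by the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Eq. 1: inertia + pull towards pbest + pull towards gbest
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])
                           + c2 * r2 * (gbest[d] - x[i][d]))
                v[i][d] = max(-v_max[d], min(v_max[d], v[i][d]))
                # Eq. 2: move the particle, then keep it inside its bounds
                lo, hi = bounds[d]
                x[i][d] = max(lo, min(hi, x[i][d] + v[i][d]))
            f = objective(x[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = x[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = x[i][:], f
    return gbest, gbest_f
```

With ω = 0.9 and C1 = C2 = 2, particles tend to oscillate widely, so the velocity cap does real work here; smaller settings such as ω ≈ 0.7 and C1 = C2 ≈ 1.5 are often used when tighter convergence is wanted.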

5.2 Particle swarm optimization algorithm

The following is the process used in most evolutionary techniques:

i. Random generation of an initial population.
ii. Computation of a fitness value for each subject, which depends directly on its distance to the optimum.
iii. Reproduction of the population based on fitness values.
iv. If the requirements are met, stop; otherwise, go back to step ii.

This procedure shows that PSO and GA have much in common. Both start with a randomly generated population that is evaluated using fitness values, and both update the population and search for the optimum with random techniques. Neither method is guaranteed to succeed.

However, PSO has no genetic operators such as crossover and mutation. Particles update themselves with their internal velocity. They also have memory, which is essential to the algorithm.

5.3 Implementation of particle swarm optimization

The implementation follows the general procedure of Section 5.2: random formation of the initial population, fitness evaluation of each subject, fitness-based reproduction, and repetition until the stopping requirements are met. The best previous position of the ith particle, that is, the position giving its best function value, is stored as pbest(pi) = (pi1, pi2, ..., pi8) for the piston-cylinder assembly and pbest(pi) = (pi1, pi2, ..., pi5) for the punch-die assembly. The position change (velocity) of the ith particle is vi = (vi1, vi2, ..., vi8) for the piston-cylinder assembly and vi = (vi1, vi2, ..., vi5) for the punch-die assembly. The particles are updated using Eqs. 1 and 2, where i = 1, 2, ..., N and N is the population size. At each iteration, Eq. 1 computes the new velocity of the ith particle, and Eq. 2 adds this velocity to its current position. Each particle's performance is evaluated using a fitness (objective) function [15,16,17,18,19,20,21,22,23].

5.4 Parameters used for piston-cylinder assembly

- Number of particles (N) = 100 to 500
- Number of iterations = 50 to 500
- Dimension of particles = 8
- Learning factors: C1 = 2, C2 = 2
- Inertia weight factor (ω) = 0.9

5.5 Parameters used for punch and die assembly

- Number of particles (N) = 100
- Number of iterations = 100
- Dimension of particles = 5
- Learning factors: C1 = 2, C2 = 2
- Inertia weight factor (ω) = 0.9

5.6 NSGA-II: elitist non-dominated sorting genetic algorithm

NSGA-II was developed by Kalyanmoy Deb [24]. It differs from the original Non-dominated Sorting Genetic Algorithm (NSGA) in several respects:

NSGA-II uses an elite-preserving strategy that ensures previously found good solutions are retained. Its non-dominated sorting procedure is fast. It does not require user-adjustable niching parameters, which makes it less dependent on the user. The algorithm starts with a random parent population P0, which is sorted according to non-domination. A fast bookkeeping procedure reduces the computational complexity of this sorting to O(MN^2), where M is the number of objectives and N the population size. Each solution is assigned a fitness equal to its non-domination level (1 is the best level), so fitness is to be minimized. Binary tournament selection, recombination, and mutation are used to create a child population Q0 of size N. Thereafter, the following procedure is used in each generation. First, a combined population Ri = Pi ∪ Qi of size 2N is formed. This ensures elitism, because parent solutions are compared with the entire child population. Next, Ri is sorted according to non-domination. The new parent population Pi+1 is formed by adding solutions front by front, starting from the first front, until the population size reaches or exceeds N. The solutions of the last accepted front are then sorted using the crowded comparison criterion, and the first N points are chosen. Because a diverse set of solutions is desired, the partial order relation ≺n (the crowded comparison operator) is used, as explained below.

In other words, of two solutions with different non-domination ranks, the one with the lower rank is preferred. If both solutions belong to the same front, the one located in a less crowded region (with a larger crowding distance) is preferred. Thus, less dense regions of the search space are favoured when solutions are chosen from Ri, and this yields the population Pi+1. This population of size N is then used for selection, crossover, and mutation to create a new population Qi+1 of size N. Binary tournament selection is still used, but the criterion is now the crowded comparison operator ≺n. The procedure is repeated for a specified number of generations [20–23].

As the preceding explanation shows, NSGA-II uses (i) a faster non-dominated sorting procedure, (ii) an elitist strategy, and (iii) no niching parameter. The use of the crowded comparison criterion in tournament selection and in population reduction promotes diversity among the solutions. NSGA-II has been shown to outperform other contemporary elitist multi-objective EAs on a variety of difficult test problems. Figure 3 depicts the proposed NSGA-II procedure for finding the optimum solution.
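The survivor-selection step described in this section, fast non-dominated sorting of Ri, crowding distance on the last accepted front, and the crowded comparison operator ≺n, can be sketched in Python as follows. This is a minimal sketch for minimization problems; it omits the genetic operators (tournament selection, crossover, mutation), and the function names are illustrative rather than taken from the paper or any library.

```python
def dominates(a, b):
    """True if objective vector a dominates b (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs):
    """Split solution indices into fronts; fronts[0] holds the rank-1 (non-dominated) set."""
    n = len(objs)
    dominated = [[] for _ in range(n)]   # S_p: indices that p dominates
    count = [0] * n                      # n_p: how many solutions dominate p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(objs[p], objs[q]):
                dominated[p].append(q)
            elif dominates(objs[q], objs[p]):
                count[p] += 1
        if count[p] == 0:
            fronts[0].append(p)
    k = 0
    while fronts[k]:
        nxt = []
        for p in fronts[k]:
            for q in dominated[p]:
                count[q] -= 1
                if count[q] == 0:
                    nxt.append(q)
        fronts.append(nxt)
        k += 1
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(objs, front):
    """Crowding distance of each index in `front`; boundary points get infinity."""
    dist = {i: 0.0 for i in front}
    for m in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][m])
        lo, hi = objs[ordered[0]][m], objs[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue
        for j in range(1, len(ordered) - 1):
            gap = objs[ordered[j + 1]][m] - objs[ordered[j - 1]][m]
            dist[ordered[j]] += gap / (hi - lo)
    return dist


def crowded_better(i, j, rank, dist):
    """Crowded comparison: lower rank wins; within a front, larger crowding distance wins."""
    return rank[i] < rank[j] or (rank[i] == rank[j] and dist[i] > dist[j])


def select_next_parents(objs, n):
    """Choose indices of P_{i+1} (size n) from the combined population R_i = P_i U Q_i."""
    chosen = []
    for front in fast_non_dominated_sort(objs):
        if len(chosen) + len(front) <= n:
            chosen.extend(front)
        else:
            dist = crowding_distance(objs, front)
            front.sort(key=lambda i: dist[i], reverse=True)
            chosen.extend(front[: n - len(chosen)])
            break
    return chosen
```

For example, with objective vectors (1, 5), (2, 3), (3, 1), (2, 4), (4, 4), the first front is the first three points, and selecting two survivors keeps the two boundary points (1, 5) and (3, 1), whose crowding distance is infinite.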

6 Results and discussion

6.1 Piston cylinder assembly

The PSO algorithm was run with 100 to 500 particles, 50 to 500 iterations, an inertia weight factor of 0.9, and learning factors c1 = c2 = 2. The results of PSO for various combinations of particles and iterations are shown in Table 4. According to Al-Ansary and Deiab [12], the GA procedure requires 160-bit binary strings and a total of 10,000 evaluations (100 samples and 100 generations), giving a machining cost of $66.91. With PSO and the same total of 10,000 evaluations (50 iterations and 200 particles), the cost obtained is $65.33 (Table 4).

In addition, the GA technique needs three operators (reproduction, crossover, and mutation), whereas PSO needs only one (velocity update). The NSGA-II algorithm was run with a population size of 100, a crossover probability of 0.7, a mutation probability of 0.2155, a mutation parameter of 10, and 100 generations. NSGA-II results for various random seed values were tested, and the optimal value is shown in Table 5. NSGA-II outperforms GA, which can be attributed to its faster non-dominated sorting procedure, its elitist strategy, and the absence of a niching parameter.

As Table 4 shows, PSO achieves a lower machining cost than the complex method, SA, GA, and even NSGA-II, whose best result is $66.756866. The best PSO result, given in Table 4, is $64.872 (400 iterations and 400 particles). The optimal machining tolerances of the piston-cylinder bore assembly for all techniques are given in Table 5; they lie within the specified limits and satisfy the constraints. Figures 4 and 5 show the solution histories of the PSO and NSGA-II techniques; PSO converges earlier than NSGA-II. Although data on computational time are not available, PSO is expected to require less computation time than GA and NSGA-II.
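The cost figures quoted in this section can be compared directly. The short Python computation below uses only the reported values; the constant and function names are illustrative. It also makes explicit that the best PSO result of $64.872 came from 400 × 400 = 160,000 evaluations, 16 times the GA budget, so only the $65.33 figure is an equal-budget comparison.

```python
# Machining costs ($) reported in Section 6.1 for the piston-cylinder assembly.
GA_COST = 66.91            # GA [12]: 100 samples x 100 generations = 10,000 evaluations
PSO_EQUAL_BUDGET = 65.33   # PSO: 200 particles x 50 iterations = 10,000 evaluations
PSO_BEST = 64.872          # PSO: 400 particles x 400 iterations
NSGA2_BEST = 66.756866     # best NSGA-II result


def reduction_pct(baseline, new):
    """Percentage cost reduction of `new` relative to `baseline`."""
    return 100.0 * (baseline - new) / baseline


print(f"PSO (equal budget) vs GA: {reduction_pct(GA_COST, PSO_EQUAL_BUDGET):.2f}%")
print(f"Best PSO vs GA:           {reduction_pct(GA_COST, PSO_BEST):.2f}%")
print(f"Best PSO vs NSGA-II:      {reduction_pct(NSGA2_BEST, PSO_BEST):.2f}%")
print(f"Evaluations in the best PSO run: {400 * 400}")
```

Run as is, it prints reductions of 2.36%, 3.05%, and 2.82%, followed by 160000 evaluations.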

6.2 Punch and die assembly

The results of PSO and NSGA-II are tabulated for analysis. The machining costs obtained with PSO and NSGA-II are Rs. 371.15 and Rs. 367.874115, respectively. Tables 6 and 7 show the tolerances obtained with PSO and NSGA-II, respectively, together with the results for the remaining two scenarios (A = 10 and 20). In all three scenarios, NSGA-II outperforms PSO, yielding the lowest machining cost with the best machining tolerances. Figures 6 and 7 show the solution histories of PSO and NSGA-II, respectively.

7 Conclusions

Tolerance allocation is the most crucial and difficult task in assembly design, because the tolerance design determines the component's functionality. Tighter tolerances give higher product quality but raise manufacturing cost, whereas wider tolerances lower production cost but reduce product quality. In this work, tolerance allocation was optimized to improve product performance while reducing machining cost. NSGA-II uses (i) a faster non-dominated sorting procedure, (ii) an elitist strategy, and (iii) no niching parameter, and its use of the crowded comparison criterion in tournament selection and population reduction promotes diversity among the solutions; it has been shown to perform better than other elitist multi-objective EAs on a variety of challenging test problems. Figure 3 shows the proposed NSGA-II procedure for identifying the best solution. For the piston-cylinder assembly, PSO gave the lowest machining cost, whereas for the punch-die assembly NSGA-II did. The proposed approach using PSO and NSGA-II reduces machining cost and is expected to reduce computing time.

References

Wang, Y., Huang, A., Quigley, C.A., Li, L., Sutherland, J.W.: Tolerance allocation: Balancing quality, cost, and waste through production rate optimization. J. Clean. Prod. 285, 124837 (2021)

Goetz, S., Roth, M., Schleich, B.: Early Robust Design—Its Effect on Parameter and Tolerance Optimization. Appl. Sci. 11, 9407 (2021)

Kong, X., Yang, J., Hao, S.: Reliability modeling-based tolerance design and process parameter analysis considering performance degradation. Reliab. Eng. Syst. Saf. 207, 107343 (2021)

Ali, A., Iqbal, M.M., Jamil, H., Qayyum, F., Jabbar, S., Cheikhrouhou, O., Baz, M., Jamil, F.: An efficient dynamic-decision based task scheduler for task offloading optimization and energy management in mobile cloud computing. Sensors. 21, 4527 (2021)

Chakraborty, S., Kumar, V.: Development of an intelligent decision model for non-traditional machining processes. Decis. Mak. Appl. Manag. Eng. 4, 194–214 (2021)

Roth, M., Schaechtl, P., Giesert, A., Schleich, B., Wartzack, S.: Toward cost-efficient tolerancing of 3D-printed parts: a novel methodology for the development of tolerance-cost models for fused layer modeling. Int. J. Adv. Manuf. Technol. 1–18 (2021)

Kumari, A., Acherjee, B.: Selection of non-conventional machining process using CRITIC-CODAS method. Mater. Today Proc. 56, 66–71 (2022)

Armillotta, A.: An extended form of the reciprocal-power function for tolerance allocation. Int. J. Adv. Manuf. Technol. 1–14 (2022)

Jana, T.K.: Role of Non-Traditional Machining Equipment in Industry 4.0. In: Machine Learning Applications in Non-Conventional Machining Processes. pp. 203–214. IGI Global (2021)

Goyal, A., Gautam, N., Pathak, V.K.: An adaptive neuro-fuzzy and NSGA-II-based hybrid approach for modelling and multi-objective optimization of WEDM quality characteristics during machining titanium alloy. Neural Comput. Appl. 33, 16659–16674 (2021)

Rao, B.C.: Frugal manufacturing in smart factories for widespread sustainable development. R. Soc. open Sci. 8, 210375 (2021)

Roy, M.K., Das, P.P., Mahto, P.K., Singh, A.K., Oraon, M.: Non-Traditional Machining Process Selection: A Holistic Approach From a Customer Standpoint. In: Data-Driven Optimization of Manufacturing Processes. pp. 165–178. IGI Global (2021)

Geng, Z., Bidanda, B.: Tolerance estimation and metrology for reverse engineering based remanufacturing systems. Int. J. Prod. Res. 60, 2802–2815 (2022)

Ajith, S.V., Nair, N.N., Sathik, M.B., Meenakumari, T.: Breeding for low-temperature stress tolerance in Hevea brasiliensis: screening of newly developed clones using latex biochemical parameters. J. Rubber Res. 1–10 (2022)

Zadafiya, K., Kumari, S., Chatterjee, S., Abhishek, K., et al.: Recent trends in non-traditional machining of shape memory alloys (SMAs): A review. CIRP J. Manuf. Sci. Technol. 32, 217–227 (2021)

Pandey, A., Singh, G., Singh, S., Jha, K., Prakash, C.: 3D printed biodegradable functional temperature-stimuli shape memory polymer for customized scaffoldings. J. Mech. Behav. Biomed. Mater. 108, 103781 (2020)

Basak, A.K., Pramanik, A., Prakash, C.: Deformation and strengthening of SiC reinforced Al-MMCs during in-situ micro-pillar compression. Mater. Sci. Eng. A. 763, 138141 (2019)

Prakash, C., Singh, G., Singh, S., Linda, W.L., Zheng, H.Y., Ramakrishna, S., Narayan, R.: Mechanical reliability and in vitro bioactivity of 3D-printed porous polylactic acid-hydroxyapatite scaffold. J. Mater. Eng. Perform. 30, 4946–4956 (2021)

Prakash, C., Singh, S., Gupta, M.K., Mia, M., Królczyk, G., Khanna, N.: Synthesis, characterization, corrosion resistance and in-vitro bioactivity behavior of biodegradable Mg-Zn-Mn-(Si-HA) composite for orthopaedic applications. Materials (Basel). 11, 1602 (2018)

LetaTesfaye, J., Krishnaraj, R., Nagaprasad, N., Vigneshwaran, S., Vignesh, V.: Design and analysis of serial drilled hole in composite material. Mater. Today Proc. 45(6), 5759–5763 (2021)

LetaTesfaye, J., Krishnaraj, R., Bulcha, B., Abel, S., Nagaprasad, N.: Experimental investigation on the impacts of annealing temperatures on titanium dioxide nanoparticles structure, size and optical properties synthesized through sol-gel methods. Mater. Today Proc. 45(6), 5752–5758 (2021)

Jule, L.T., Krishnaraj, R., Nagaprasad, N., Stalin, B., Vignesh, V., Amuthan, T.: Evaluate the structural and thermal analysis of solid and cross drilled rotor by using finite element analysis. Mater. Today Proc. 47, 4686–4691 (2021)

Amuthan, T., Nagaprasad, N., Krishnaraj, R., Narasimharaj, V., Stalin, B., Vignesh, V.: Experimental study of mechanical properties of AA6061 and AA7075 alloy joints using friction stir welding. Mater. Today Proc. 47, 4330–4335 (2021)

Prakash, C., Singh, S., Basak, A., Królczyk, G., Pramanik, A., Lamberti, L., Pruncu, C.I.: Processing of Ti50Nb50-xHAx composites by rapid microwave sintering technique for biomedical applications. J. Mater. Res. Technol. 9, 242–252 (2020)

Acknowledgements

The Mechanical Engineering department at Saranathan College of Engineering in Trichy, Tamil Nadu, India, and SRM TRP Engineering College in Trichy, Tamil Nadu, India, both funded this project. We'd also like to express our gratitude to the administration, professors, and technicians who assisted us with this research.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information


Contributions

Conceptualisation: TM, JG, PG, and BHB. Data curation: TM, JG, PG, and BHB. Formal analysis: NN, DB, MG, LTJ, and KR. Investigation: TM, JG, PG, BHB, LTJ, NN, and KR. Methodology: TM, JG, PG, BHB, LTJ, NN, and KR. Project administration: KR and LTJ. Resources: TM, JG, PG, BHB, LTJ, NN, and KR. Software: KR. Supervision: KR and LTJ. Validation: TM, JG, PG, BHB, LTJ, NN, and KR. Visualization: TM, JG, PG, BHB. Writing—original draft: TM, JG, PG, BHB, LTJ, NN, and KR. Data visualization, editing and rewriting: TM, JG, PG, BHB, LTJ, NN, and KR


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Thilak, M., Jayaprakash, G., Paulraj, G. et al. Non-traditional tolerance design techniques for low machining cost. Int J Interact Des Manuf 17, 2349–2359 (2023). https://doi.org/10.1007/s12008-022-00992-0
